It’s halfway through spring, and the delivery teams at WonderTek are just starting to realize how much of their work is falling between the cracks.

That’s not saying there haven’t been some encouraging signs of progress. By setting up and enforcing blame-free postmortems, they’ve made significant headway in encouraging a culture that is more focused on learning from mistakes instead of punishment, blame, and evasion. They’re cutting down the time they spend on dead ends and red herrings by treating their development stories and features as guesses – experiments – bets that they can either double down on or abandon if they don’t hit predetermined marks.

By far, the best changes are in the fundamental way that Operations and Development are learning to work together. Ben and Emily are starting to show a commitment in the things valued by their other partner – well coordinated and thought out release plans by Ops and delivery retrospectives and planning by Dev. Having displays everywhere that focus on business-centric KPIs like response time, availability, and defects is having a subtle but penetrating effect; developers and operations are starting to see problems from a common, global point of view.

As positive as these developments are, there’s still so much work left undone. Engineers are still drowning in tickets turfed to them by Operations, who are using a completely separate ticket queue. Security is still an afterthought. Most seriously, a seemingly positive and long-overdue development – a true value stream map – threatens to break up that fragile truce that he and Emily have established.

It’s clear that testing and quality, which used to be the limiting factor in their delivery life cycle, has become much less of a problem. Taking its place as a constraint is infrastructure – the time it takes to spin up environments and configure them properly. WonderTek is still very much a political swamp in many ways, and old habits die hard. Will that tenuous working relationship between dev and IT fall back into the old game of finger pointing and blame?

Leaner and Meaner (Small Cross-Functional Teams)

Karen’s audit and the aftermath hit us like a bomb. It was like somebody had poked a stick into an anthill.

Emily and I had talked with Karen shortly after her presentation, and we started to form a plan. Over the next few weeks, I was very busy talking to security and our architects, often folding Karen in over the phone. I also stepped up my discussions with Tabrez. I felt confident that once the audit report appeared, we’d be ready.

A few weeks later, the audit report came in. Thankfully, it wasn’t a tree-killing dictionary of “best practices” – just a few dozen pages summarizing Karen’s assessment of WonderTek’s delivery cycle and some simple steps she recommended to resolve our current delivery bottleneck. Most of this was around infrastructure.

In the old days, not so many months ago, getting ammunition like this to use against my rival would have been cause for rejoicing. But Emily and I have been having lunch or coffee together a few times a week, and she and Kevin have been incredibly busy trying to wrap his arms around how to best create immutable infrastructure on demand. Somehow, I can’t find it in myself to start putting in the dagger on a problem that both of us share.

Not that there aren’t temptations. During my weekly 1x1 with Douglas, he brought up the audit again, and you couldn’t have asked for a better opportunity to throw IT under the bus. He begins by complaining, “You know, I told you a year ago that WonderTek just isn’t ready for this DevOps thing. I think we’ve gone a bridge too far here, Ben. It’s time to scale this back, we shouldn’t have to deal with this much stress and friction.”

I have to walk cautiously here. Douglas has a point that there’s a strong undercurrent of suspicion that “this DevOps thing” is a fad, and we’ve gotten a lot of resistance. I’ve been very careful to narrow my focus to specific workstreams, not even using that loaded (and imperfect!) word “DevOps” in any meetings. Instead, I put things in terms like “delivering value,” “driving efficiency,” or “eliminating waste.” We’ve found that if we keep a laser focus on delivering business value and streamlining our release pipeline, and back it up with monitor displays of our current and desired future state, we win. But if it comes across as a dev-centered movement around better tooling or process refactoring, it falls flat.

I tell Douglas that we aren’t trying to do DevOps – just straightening out the kinks in the hose that are preventing us from delivering value. This particular morning, that isn’t working for me. He stares at the ceiling, eyelids half closed, jaw jutting out. “Don’t piss on me and tell me it’s raining, Ben. I’m telling you, every organization has a turning radius. WonderTek isn’t a sportscar, it’s a supertanker. You’re risking the ship by trying too much change, too fast.”

I get the message, and I’m concerned about where this is coming from; we’ve been very careful with our bets and have tried to keep a low profile. He’s evasive about where he’s getting this information, and ultimately it doesn’t matter; I have to bring our attention back to the future we’re trying to create and leave the office scuttlebutt alone. I reply, “You’ve read the audit – the numbers speak for themselves. We don’t have an Ops problem, or a Dev problem, or a PM/BSA problem – regardless of how people try to frame things. The issue is the blinks – the spaces in between these different delivery groups. If you want faster and better delivery of value, we need to seal those gaps.”

I just get a groan in response; his eyes are still on the ceiling. Time to try a different tack. “Douglas, you’re a military guy. What do you think about Grant’s campaign at Vicksburg during the Civil War?”

This gets his attention, and he arrows a sour look in my direction. It’s an irresistible gambit; Douglas loves military history and especially the Civil War. He slowly says, “Vicksburg? Ah, that was kind of genius. You know, people think General Lee was the dominant military genius of the war, but I always say that if those two men had switched places, Grant would have acted exactly as Lee had. Both could take great risks when they needed to.”

Bait taken! “If I remember right, Grant didn’t succeed the first time, did he?”

“Ha! No, far from it. He failed so many times. He had to cross the Mississippi river against a prepared enemy that outnumbered him. First he tried approaching from two sides; that failed, twice – then he tried digging canals to bypass the city’s forts, which failed… shoot, had to be a half dozen different things he tried. All losers, and it cost him months.” He’s starting to warm up and then catches himself. “Why?”

“What ended up being the winning formula for Grant?”

“Ah… well, he did the bold and unexpected thing. He risked crossing the river, completely away from his supply lines and any hope of retreat, and fought a series of battles away from the city that forced the enemy commander to make guesses. His opponent guessed wrong, and the North ended up defeating them in detail. It was the biggest turning point of the war, in my opinion.”

I’m not Douglas’ match when it comes to history, but I have read enough to try to make my point. “So, let me put this to you – Lee said Grant would ‘hang on like a bulldog and never let go.’ Grant’s greatest quality was persistence, even when things looked grim. Grant tried, what, six different approaches at Vicksburg and they all failed miserably – everyone but the last. Any other general would have quit months earlier. Can we take a page from his book and show some persistence and courage here? To try to do something different?”

The sour look is gone; Douglas looks intrigued. “You mean, Karen’s SWAT team recommendation?”

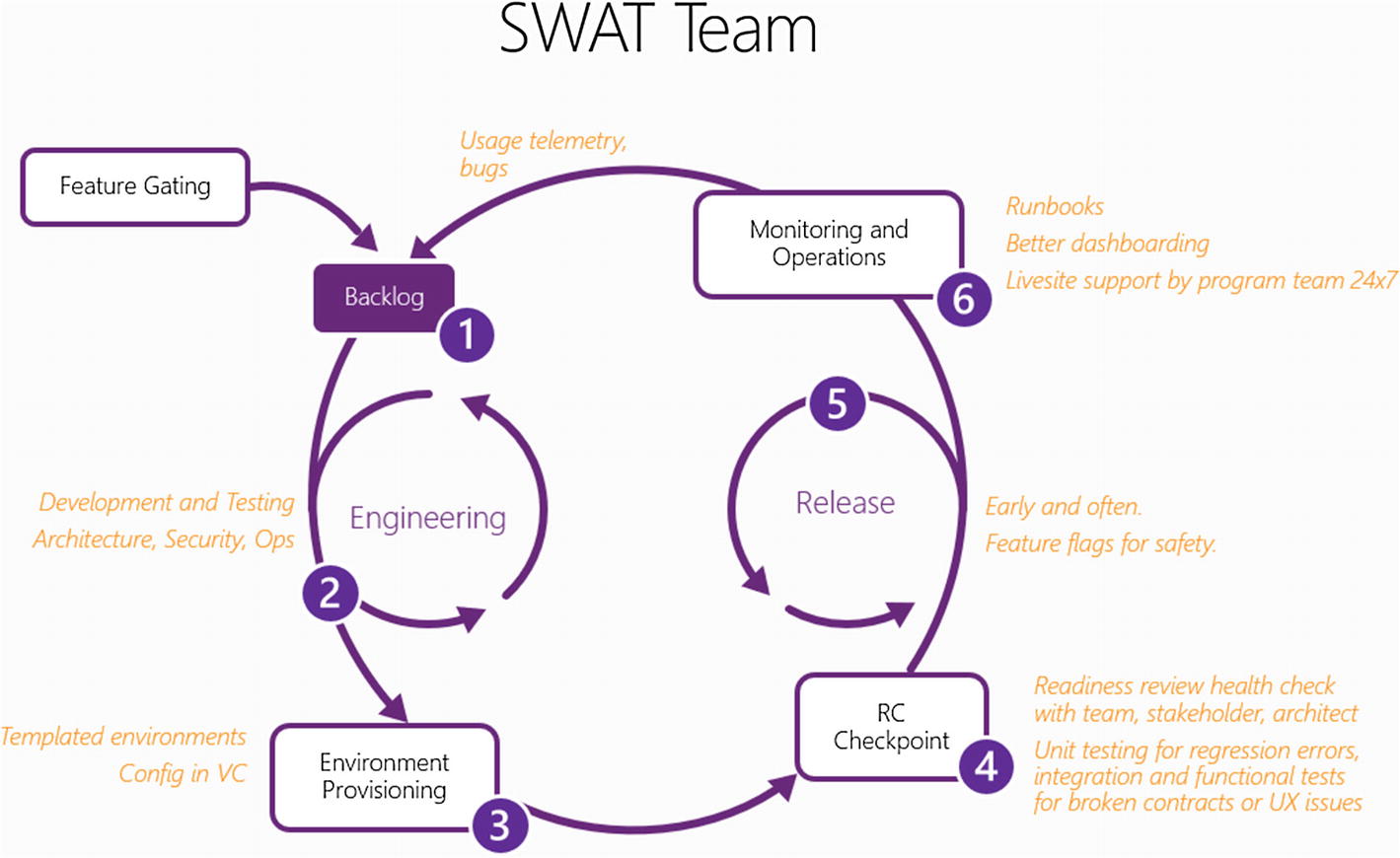

“That’s exactly what I’m talking about.” I open my tablet to that page of the report:

“Karen is proposing that we try something new,” I continue. “She’s asking us if we’re ready to cross the river. The report proposes that for our future releases with Footwear, we try using a very small team – like under 10 people – that can deliver a single feature end to end in two weeks. With this, we close the ‘blinks’ – we should have none of the delays or lost information and rework that we’ve suffered in every release in the past.”

I build up some steam as I continue talking about some of the benefits; it’s something Emily and I are starting to get excited about. We already have a lot of the building blocks in place with better version control and continuous integration.

Better yet, I might have some very powerful allies in the wings. I know that our architects – who always complain about being brought in late or not at all – are very enthusiastic about this as a concept. We’re still working out how this might look, but it could be in the form of part time involvement – maybe a day each sprint, more if needed – by an architect embedded with the product team directly. Security is also onboard; they’re thinking about a similar engagement model, where they give us some best practices training on folding security in as part of our development life cycle. Music to my ears, they’re even floating ideas around about making this as painless as possible to implement by creating a community-curated security framework.

As I expected, this resonates with Douglas as we’re talking better-integrated teams – and reducing the hassles and static he gets from both of these very vocal groups. Still, the core problem remains. “Ben, what worries Emily and I the most is how this report is sandbagging on her IT team; it’s divisive and doesn’t take into account how hard her people are working. And Karen’s recommending that we make the jump to the cloud, which is off the table. It’s a massive security risk, not to mention throwing away the millions of dollars we’ve invested in our own datacenters. Yet this is one of the key recommendations in the audit – saying moving to the cloud is the only way we’ll be able to deliver truly scalable and resilient infrastructure in the timeline that Footwear is wanting. That’s just not based on reality, at all.”

I’m going to need to sidestep a little; any mention of the cloud provokes a strong allergic reaction from anyone at IT and Operations. But I also know how long it takes us to spin up a single VM and get it configured correctly, and the beating our availability takes due to untested patches and configuration changes that are impossible to roll back. I’m convinced that Karen is right; we are going to have to step away from our supply lines and try something new. My guess is, if we empower our teams to handle end-to-end support and deployments and not mandate one specific platform, exactly where our services are hosted will cease to become such a contentious question.

“Karen recommended that we have at least one and preferably two Operations specialists on each team,” I reply. “That’s like a quarter of the team, Douglas – so it’s not like Emily’s people aren’t being involved enough. Just the fact that we’re going to be in the same room and talking to each other is a huge win. When it comes to the cloud – I agree with you, whether the apps run there or on our own datacenters is not the question to be asking. I think we should have as a goal that faster end to end delivery cycle that Tabrez is asking for. Anything that gets in the way of that turnaround needs to be eliminated, without remorse.

“Right now our scoreboard is saying we’re taking 9 days for each release, but that’s just out the door to QA. That’s not the actual finish line – when our bits make it out the door to production. And you know what that number actually is – we’re talking in months, not days. 23 days of work – and 53 days of delays due to handoffs. I think we can cut that delivery time in half, or better, if we adopt some of Karen’s proposals.”

There’s a pause for a few seconds as Douglas scans me. “Have you talked to Tabrez about this?”

“Yes. He’s totally onboard. He has a space available – a large conference room, really comfortable, big enough to fit 20, right next to his designers there in Footwear. He loves the thought of having the team close by and accessible.”

“I’ll bet he does.” Douglas groans again, “You realize you’re opening up your team to all kinds of problems, don’t you? They’re going to be interrupted ninety times a day now with Tabrez and his people stopping by with their little requests.”

“Maybe.” That’s something else I haven’t thought about; I like our little cocoon of silence. Was that mystery voice right – am I introducing more chaos in our work patterns than we can handle? I decide to brazen it out. “Our thinking is, the closer we can get to the customer, the quicker we can understand what they really want. I’m confident they’ll play by the rules, and personally I’m excited about seeing them more at our retrospectives as stakeholders. I’m getting pretty tired of presenting our work to an empty room, Douglas.”

“Mmmmm.” Douglas is looking back at his monitor display. “You know, I don’t agree with everything Karen said, and I agree with Emily that she should have talked more to senior management ahead of launching this little bombshell. But, I like the thoughts in here about first responder training. I know for a fact that our customers are complaining about their tickets waiting around for days or weeks for a developer to get to them; we’d be much better off if we could knock down the easy stuff in a few minutes. You know, I’ve said to Ivan a hundred times, having his team act as some kind of glorified turnstile that churns out bugs isn’t helping us at all. Lack of good triage has always been a pain point.”

I propose a weekly review where the team can present progress transparently. “Let’s be up front and tell people that this is an experiment, an open lab. We’re going to be trying some new things and making some mistakes, and we want to share our wins and losses openly with everyone. For example, how do we use feature flags? We think it’ll really help us be able to safely toggle new functionality on and off so our releases will be less disruptive – but that’s just a guess right now. And what about monitoring – do we go with a vendor or roll our own? How do we set up dashboarding so everyone is looking at work the same way? How do we fold usage data back in to our backlog prioritization before each sprint? What about – well, you get the idea. We’re going to try a different approach, because we’re wanting a different result.”

It takes another hour of back-and-forth negotiations, but I walk out with what I needed. We’re going to give the SWAT team a 3-month try. Our judge will be Footwear; if after 3 months the needle doesn’t budge or if there is excessive thrash, Douglas will pull the plug.

McEnvironments (Configuration Management and Infrastructure As Code)

Like I said, Kevin and Emily had been talking about immutable infrastructure. But talk it seems was still a long way from action, and for the next few weeks we suffered through incident after incident caused by environmental instability.

First, we had a charming outage caused by a distributed denial-of-service attack against our DNS nameservers; it took some scrambling to figure out what was going on, and a few days more to figure out a fix with our caching and a failover strategy for what had become a sneaky single point of failure for our network. Then, we had one of our production services not restart after the host servers were up-patched; our monitoring missed that completely. In an embarrassing repeat of the Footwear services abend a few months earlier, it took some customers calling in to realize that the service was unresponsive and force a restart. And we kept having to spend valuable time determining if an issue was code related or environmental related. As none of our environments really matched each other as yet, piecing together what had happened and when took much longer than it should. If I was to take George and Alex at their word – easily 75% of our ticket resolution time was spent isolating problems and turfing server or environmental-related problems back to Operations.

This made for a somewhat chilling impact on my lunches with Emily; no doubt, she was getting an earful from her people as well. After our postmortem on the DDoS attack, Emily agreed quickly to a powwow on our next step with infrastructure as code.

I got in a little late, just in time for Kevin to hit his concluding remarks. “Alex, Ivan and I have been looking over our production-down issues from the last three months. It’s very common for networking elements like routers, DNS, and directory services to break our services and apps in prod, as well as causing ripple effects with our network availability and performance. Our recommendation is that we beef up our network monitoring software to catch these issues while they’re still in a yellow state, and make sure that every time a connection times out or is closed our apps need to be logging warnings. And our test environment topology needs to be beefed up so that we’ve got more assurance that what works in QA will perform similarly in production.”

Alex nodded. It was a good sign that he and Kevin were sitting next to each other and both relatively calm. “One other aspect that Kevin and Emily are in favor of is making sure every part of our networking infrastructure is in version control. That means that we need to make sure that our switches, routers, everything can be externally configured, and that we are able to roll out changes to these elements using code. That’s true of a lot of our infrastructure but not everything; it’s going to be a gradual process.”

If this is like our move to version control, I’m confident that what seems to be an overwhelming task will end up being triage. We’ll take one batch of networking elements at a time, starting with the most troublesome, and gradually isolate out and shoot the parts that can’t be configured and updated externally. Just the fact that we’re talking about automation and infrastructure as code is a positive step. Still, this isn’t a postmortem about one isolated incident; I’m hoping that we can begin a larger discussion around enabling self-service environments. After saying that, I offer up some tech candy: “Do we have the tools we need to recover faster from failure?”

Ivan snorts. “You’re talking about configuration management, right Ben? Well, we’ve had Puppet here for about three years. We spend a fortune on it with licensing every year, and frankly it fails more often than it works. If we can’t get it to work reliably outside of ad hoc script commands, we’re going to scrap it in favor of something cheaper.” His tone is flat and emphatic.

A few more ideas get batted around of different software solutions. As people talk animatedly about their own pet favorites – containers, Packer, Terraform, Ansible – I stay silent and absent-mindedly swirl my coffee cup around. I keep thinking back to that value stream map that’s taunting me from the conference room; 53 days of delay caused by handoffs and delays in provisioning infrastructure. The problem isn’t that Emily’s team is lazy or incompetent; I know from my visits over there that they’re constantly under the gun and putting in long hours. The problem is that gap, the blink between the developers needing new environments and the way software is built out at WonderTek. We need to figure out a better way of closing the gap, something that can please both sides. I’m not convinced that new software would actually help, but my guess is that it’s our use of that software – our process – that needs to get tightened up.

I look up from my coffee cup just in time to catch Emily giving me a significant look. We’ve been talking about better ways of configuring our servers over the past few weeks. She reaches out and pats Ivan on the hand; it’s not a condescending gesture, but it does get his attention and stops him mid-rant. “I’m not sold on the idea of Puppet being a loser yet, Ivan. Right now, we’re running Puppet on an ad hoc basis, for a few workstreams in our portfolio. And we use it to maintain an asset library and catch licensing compliance issues. That’s a good start, but I think we need to trust the tool more, and use it like it’s designed to be used.”

Ivan is incredulous. “Emily, we’ve never been able to get it to work. It seems to break down after a few weeks on any of our servers, and it’s a nightmare trying to find out what the proper template is for our environments. Sorry, but configuration management given our working constraints – and the constellation of servers and environments we have to manage – is a dead end.”

Alex’s eyebrows go up at this conversational hand grenade, but before he can get a word out Kevin speaks up: “Agreed, it’s going to be challenging finding the proper source of truth with our environments. But we all know that configuration drift is a problem that’s not going away, and it seems to be getting worse. Puppet – really any configuration management tool, but that’s the one we’ve got – is purpose built to fix this problem.”

Alex chimes in, “We had to force ourselves to use version control as the single point of truth for all our application builds. Yeah, it wasn’t easy, but we started with the most fragile, important workstreams – and one at a time, we put up some guardrails. We didn’t allow any manual config changes and used our CI/CD release pipeline for any and all changes, including hotfixes. No change, no matter how small or how urgent, was allowed to be rolled out manually. Could we use the same approach in managing our infrastructure?”

Ivan puffs out his cheeks in disbelief and says slowly, “Once again, we can’t get it to work. It breaks. What works on one app server, doesn’t run green on another – the Puppet run fails, and we even had a few servers go down. And we’re too busy to invest more time in this. Now, maybe if we had the people I’ve been asking for…”

Emily is drumming her fingers on the table. “Ivan, we gave you more people, three months ago. Is anyone here seriously arguing that setting up and keeping our machines configured using automated scripts a bad idea?” Ivan starts to speak, then snaps his jaw shut as Emily gives him a hard look. “On this subject, Ben and I have been in agreement for some time. If we tighten things up so we run our environments through the same release process we do code – with a pipeline, one we can roll back or inspect – I’m betting we’ll knock out most of the trouble tickets your team is loaded down with, Ivan.”

Kevin says, “What would happen if we let Puppet run unattended across our entire domain?” There’s now a few people shaking their heads no; there was just too much variance for this kind of a switch-flip to work. He squinches up his face a little, in thought. “OK, then we run this in segments. Let’s take a single set of servers handling one workstream – make sure that our configuration definitions are set and work properly, and then we let Puppet run unattended on them. If that works, after two weeks, we start rolling this out to other batches.”

Ivan is fighting a rearguard action now; he allows that, if Kevin is willing to try this experiment first with the apps he’s managing, including Footwear, then perhaps it wouldn’t be a complete disaster. I suppress an irritated twitch to my mouth; Ivan’s fear of automation is almost pathological. I keep telling myself that servers and environments are complex and unsafe, with lots of moving breakable parts; his caution is a learned behavior from years of dodging hammers.

If this gets us to where our environments are more consistent, it’s a big win. Perhaps, I should be satisfied with that, but there’s still that vision of true end-to-end responsibility, where the SWAT teams handle both production support and maintain their own set of environments. There’s so much left to be done for us to get to where we are self-service. It’s clearly the elephant in the room; of those 53 days of delay, most is wait time on provisioned environments. I could make my delivery promises with room to spare if we had more direct control and responsibility.

The thought of being able to create a new set of environments with a pushbutton script, without waiting weeks on the infrastructure CAB process and coming up with business case justification arguments, is just too appealing to pass up. Best of all, it’d give Emily’s infrastructure team a higher purpose and more rewarding work, overseeing and strengthening the framework instead of doing low-level work like spinning up VM’s manually.

However, Emily and I are not there yet, and I have no desire to continue pressing the point if it’ll cost us our gains. Last week, she told me point-blank that she didn’t think our developers can or should handle infrastructure support directly; she still clearly views provisioning as being the exclusive domain of her team.

I have to give her credit though – in a conciliatory move, Emily has been working with our cloud provider to allow us to select from one of three possible templates on demand. As a first effort, it’s a start, but these approved images are so plain-vanilla and locked down that Alex told me that they were functionally useless as true application servers. In a rare burst of humor, he called them “McEnvironments” – fast, mass-produced, and junky.

So, half a loaf it is. I’ll keep the pain points we have around provisioning environments visible. I’m convinced that over time those self-service options will become less rigid, and that core question – who has responsibility over creating and maintaining environments? – will be resolved in a way that will help free up the infrastructure team from tedious grunt work and help the coders take more ownership over the systems their applications and services run on.

An Insecurity Complex (Security As Part of the Life Cycle)

Brian, a balding middle-aged man who always reminds me a little of Elmer Fudd, sighs deeply. “Like I told you, this software can’t be released. Your version of Bouncy Castle has a weak hash-based messaging authentication code that’s susceptible to cracking.” He sees my blank look and tries again, his voice having just a tinge of a whine – “Your application uses a cryptographic API called Bouncy Castle; the version you chose is not on our list of approved versions, for good reason – we’ve known for several months now that it can be brute forced in just a few seconds by a hacker using a hash collision attack. It’s beyond me why you guys chose it.”

Try as I might, whenever I talk with Brian, I can’t help but think of Looney Tunes and “Wabbit Season”; it makes it nearly impossible to take what he says seriously. I swivel to Alex and ask: “I have no idea really what’s going on here, but Brian seems to think that we have a problem with our authentication library. How widespread is this?”

Alex is slouching in his chair, slumped in frustration. “For this application, updating this out of date library to a more current version is just a few keystrokes. Then we have to kick off a whole new round of testing – not that big of a deal, thanks to our shiny new release pipeline. But it’s not just this application – we have dozens to support, and we’ll have to check each one. Most of them don’t have any kind of a release pipeline other than walking over with a thumbdrive to Ops, and there’s no test layer to protect us. Honestly, it could take us weeks, more likely months. This is going to kill our velocity, Ben.”

The teeth-grating whine again, this time a little louder: “That’s hardly MY fault! We’ve asked you guys for months to do a little better checking on your deployments using the known Top 10 threats published by OWASP. That’s a basic level of competence, Alex. If you would have thought ahead even a little, this update would have been easy!”

I cut him off. “Brian, I’m convinced you have our best interests – and the safety of our users and our data – at heart. You don’t have to worry about us shooting the messenger; we’re better off knowing about this than living in ignorance.”

George interrupts, “I’m not so worried about this particular attack vector. I know this will get fixed, now that we’re aware. I’m worried about the next one. It seems like these vulnerabilities keep popping up more and more often. It’s causing us a lot of headaches, usually right before a release. And it seems like our dependencies are usually the vulnerable spot – stuff like this Bouncy Castle API. Brian, do you have any ideas on how we can get a little ahead of this?”

Brian says slowly and patiently, “Just the things you already know about. Your team needs to make security a first-priority concern. Right now it’s last, and that’s why this release is going to miss its dates.”

Brian may know security, but he doesn’t understand the repercussions of missing dates and how that’s viewed here. I counter, “Brian, I don’t miss dates. We can scale back on the features we deliver, but two things don’t change on my teams – quality and the delivery date. The business cares very much about getting what they need out on time. To me, something like this security bug is a quality issue. So, we’ll fix it – and we’ll make our date.”

George breaks in again. “Brian, help us out a little here. This isn’t something written in COBOL back in the 70’s. We’ve got a lot of cutting edge stuff packed into this site and it’s rolling out fully automated. We can make changes on the fly, safely – nothing is fixed in stone. So how can we get a better picture of these security gaps early on?”

Brian waves a pudgy hand and says dismissively, “Well, not knowing your code or anything about the design, I really can’t say. Just stick to what the checklist pointed out in my latest security scan.”

“Brian, that security scan is three weeks old,” Alex says, grating his teeth. “That’s a lifetime ago when we’re talking about software – how do I know that when you run your audit again, that we won’t have other holes pop up?”

Brian looks like he has Bugs Bunny staring right down the barrel of his 12-gauge shotgun. “You don’t. But I don’t think you want to get your name on the front page of the paper, do you? Or hear from management after I submit my report?” He gives us a self-satisfied, smug, almost sleepy grin. Classic Elmer.

Once we pledge fealty and promise to address this new vulnerability as our “top priority,” the meeting peters out and Brian shuffles off to his next victim. Alex throws his notepad at the whiteboard. “Dammit! We were almost there! Now, I have to waste days satisfying this bureaucratic nightmare of a checklist! And I guarantee you, that’s not the end of the story. He’ll find something else.”

I say, “You know, I’m not so sure. I’ve been talking to Erik about this – he’s a lot more helpful than Brian is, and it helps he’s an actual decisionmaker with InfoSec. They’re onboard with the idea of having someone from their team bundled up with our new SWAT teams, a half day a week.”

I get a suspicious sideways glance from Alex. “It’s not Brian, is it?”

“I don’t know. For God’s sake, let’s not make Elmer – I mean Brian, sorry – the problem here. He’s not telling us anything we didn’t need to know. We just didn’t hear it soon enough. Now, how are we going to get ahead of this next time?”

George looks thoughtful. “You know, we’ve been getting pretty slack lately with some of our basic hygiene. There’s no reason why we can’t gate our check-ins so that it looks for those top 10 vulnerabilities. That would give us early feedback – and it won’t allow checking in code we know is insecure. And it’s a lot more objective than Brian coming in at the 11th hour with a red octagon sign based on his vulnerability du jour.”

“Will this lengthen our build times?” Alex wonders, his brow furrowed. Alex watches check-in times like a hawk and is merciless about forcing developers to rewrite slow-running tests. He was the originator of the now-infamous “Nine Minutes Or Doom” rule at WonderTek – throwing a royal hissy fit and hunting down culprits if it took longer to check in code than it took to grab a cup of coffee from the cafeteria.

Both George and I chuckle, remembering the last particularly epic tantrum with great fondness. “No, it takes almost no time at all to run some basic security checks,” George says. “If we’re smart, we’ll need to augment this with some penetration testing. We can’t keep on trying to harden our apps when they’re 90% baked like this.”

I ask, “Isn’t this overkill? I mean, is there any information here that someone would actually WANT to steal?”

“On this app, no.” Alex smirks wearily. “This is what I mean – those security guys have a hammer, every single frigging thing is a nail. There’s absolutely no proprietary information here at all. No sensitive records, no socials, no credit card info, nada. We’re clean.”

“OK, so there’s no PI data. But we still have information in there we need to keep private,” George muses. “Otherwise it’d be an open system and you wouldn’t need to authenticate at all. At the very least, we need to make sure the user information we use for logins is protected and that the site itself doesn’t get hacked and users get directed elsewhere.”

I’m convinced this is a gap that we need to fill – and that it’ll bring a powerful advocate to my side. “I’m convinced this is a gap we need to fill. Security reviews need to be a part of every feature design – Erik will totally be behind that. But we can’t do it ourselves, and static scans aren’t enough. We need a better framework that’s preauthorized – make it easy on our guys to do the right thing.”

Alex says, “These guys see themselves as gatekeepers, Ben. They’re not going to want to sink time into being at our design sessions and looking over our deployment code.”

If we were talking about Brian, Alex’s assumptions would be dead on. Thankfully, Erik’s made of different stuff; since our talk he’s been five steps ahead of me, championing the creation of a curated framework that was community-owned and blessed by our security gods. But I just smile and say, “Give them some credit. I think they’re ready for a more cooperative model. I’ll meet with Erik later today and see what his thoughts are. I’ll tell him we want to do the right thing – and that we need his help. He’ll love that – especially if I make him think it’s his idea!”

Like magic, a few weeks later Brian starts appearing at our biweekly design sessions. The hated checklist stops making such frequent appearances as we begin folding in security scans into our check-in gating.

We’re still a ways away from a comprehensive security framework – but I trust Erik and I know it’ll come, soon. And now we’re getting regular feedback on security as part of our development life cycle.

Effective First Response (Automated Jobs and Dev Production Support)

Ivan takes a long slurp on his coffee and lets the silence build a little. As usual, his hackles are up. “Folks, I’m a little surprised to see this meeting fall on my calendar this week. We weren’t given any time to prepare and I don’t like the agenda, to be honest. Seems kind of dev-driven to me. Is this another pin-the-tail-on-the-Ops guy session? If so, I’m walking.”

Try as I might, our relationship with Ivan and his support team hasn’t thawed like it has with Emily; my charm offensive (such as it was) was stopped in its tracks. I’m not giving up yet on the campaign, but it does look like we’ve got at least one very vocal detractor who’s dug in.

I lean forward and say, “I appreciate your concern, Ivan – I’ve been in a few meetings like that here myself. This isn’t a blame session; we’re trying to figure out ways we can work together better in handling support.”

“Yeah, that seems like the same thing.” Ivan takes another long sip, drawing it out to show his contempt. “My people are already working very hard, round the clock, to try to support your crap. Forgive me for being blunt here, Ben, but it seems to me like you’re angling to shuffle more support work on my guys. That’s not going to fly with me, and I think Emily will back me up on that one.”

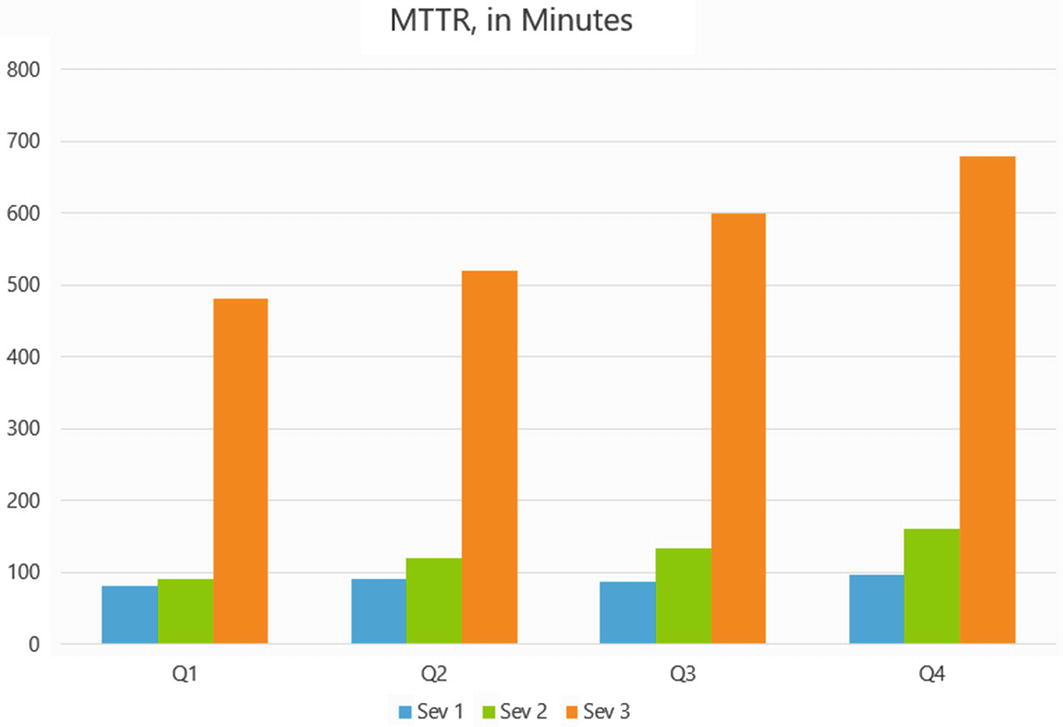

Tabrez, our Footwear stakeholder, now steps in. “I’m sure we’d all love another round of Ops and Devs playing tag, but that’s not the point. I called this meeting for one purpose. We’re seeing a lot of tickets lately with our new app; our customers are getting angry that these tickets just seem to sit there in queue, awaiting developer attention. And we’ve had a few high-profile outages that seemed to drag on and on. What can we do collectively to turn this situation around with our support system?”

The charts Tabrez displays tell the story, and it’s a grim one. “As you can see, we seem to be treading water. Our response time on our high severity tickets are getting worse, and those third tier tickets – things like customers getting authentication errors or timeout issues – they’re totally circling the drain. It’s taking us on average more than 10 hours to knock down these local issues, by which time the customer has often moved on to other, more reliable apps.

“I don’t even want to read to you the comments we’re getting in the app store for both Google and Apple – they could remove paint. Our ratings are in the toilet. We’re hemorrhaging customers. This just can’t go on.”

Ivan snorts derisively. “See, that’s exactly what I’m talking about. For this app, we received zero training. Ben’s team just dropped it on us, out of nowhere. And you’ll notice, the changes I instituted about three months ago are working. We’re processing more tickets than ever, and it’s automated – meaning less time per ticket by the Ops team. That’s nearly doubling our efficiency. We’re a multibillion dollar company with a global scope, and I have a lot more apps to support than just Footwear. I don’t think anyone here has any idea of how difficult it is to be in the firing line like we are, 7 days a week.”

I’m wearing my best poker face but have to fight not to roll my eyes. Ivan’s claims of weary serfdom and powerless victimhood are starting to wear a little thin. We did training, exhaustively, and Alex tells me that we’ve provided a list of “Top 10” issues that we expected Ivan’s team to help knock down.

“As you can see, our ticket numbers are escalating,” Tabrez says, continuing with the next slide. “If we view success narrowly as being ‘efficiency in intake of tickets,’ then maybe this is a win. But from the customer’s point of view, this is a disaster. We do need to provide true global support, 24x7, and if the ticket has to wait for the next available dev – that’s just too long for most of our customers.”

Ivan smirks derisively, looking thoroughly unimpressed. “Again, sounds like a problem for the coders to figure out. Reliability is all about architecture; maybe a better thought out design would give you the numbers you need.”

I say flatly, “We aren’t breaking out separately here the number of noncode related problems; we can do that if you want. I don’t think it’d be productive personally. But a large number of these escalations were caused by patches to the infrastructure. And Ivan, a win for us here has to be global. The tickets are spending too long in queue, and our first touches aren’t helping knock down easily triaged bugs – look at that flag green line there on the bottom. We need a better definition of success here, one that’s more customer-driven.”

“Well then, put your money where your mouth is, Ben. Start sharing the load. If you want better first-touch resolution rates, put your people in the call center.”

Tabrez and I start to laugh. After gathering myself, I say sunnily, “Great minds think alike! That’s exactly what we’re talking about, Ivan – sharing the load. What if we made support and reliability more of a commitment for everyone, not just your Operations team?”

Finally, the cat-with-a-mouse smirk was gone. “Still seems like an end-run to me. What are we talking about here exactly?”

- 1.

Noncode-related issues (OS patches, networking or config issues, or scheduled downtimes)

- 2.

Code changes or deployment-related issues

- 3.

Interrupts

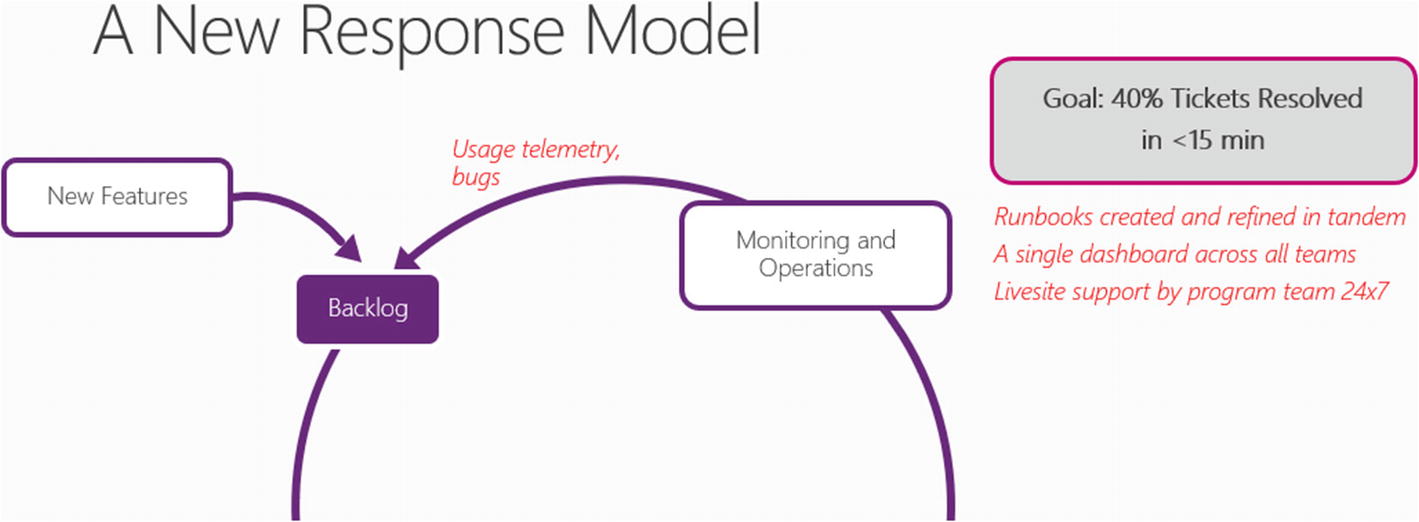

I say, “That last one is important. These are the bulk of the service requests we’re seeing for the app. These are the Sev 3 tickets Tabrez mentioned earlier – user login issues, timeouts, caching problems. Most of these problems are small, and they’re repeatable – they could be fixed with a simple service restart or a job that updates login credentials for example. In fact, if we had those documented ‘Top 10’ issues available as automated jobs for your support team to remediate, almost 75% of these incoming tickets would have been resolved within 15 minutes. That’s very low-hanging fruit, Ivan. It’s a win for you, as we can close tickets faster. We’ll have happier customers – and my team spends less time playing Sherlock Holmes with long-dead tickets.”

Tabrez is smiling in agreement. “Ivan, up to now we’ve been defining success in narrow, localized terms, like lines of code delivered for devs; or availability and server metrics for Ops. We need to change our incentives and our point of view. Success for our customers means reliability, pure and simple. How fast can a user authenticate and get to the second screen of the app? That’s something they actually care about. And if there’s a problem – how long does it take for a ticket to get fixed? Not just put in a queue, but actually addressed and done? If we can fix this – we’ll get the app back on track and our user base will start growing instead of cratering.”

Ivan is smirking again; the well of sarcasm runs deep with him. “Yeah, like I said – an end-run. I love how you put noncode related problems up top. What about all those problems with deployments? What are you going to be doing to stop these bugs early on, Ben?”

I exhale in frustration; I’ve given it my best. “Ivan, cut it out. We’re trying to partner with you here so we can better meet our customer’s needs. So let’s drop the ‘tude. Here’s what we’re thinking – and this is a rough sketch at the moment, so everyone in the room here can come in with their thoughts:”

I continue: “You say we – meaning the devs – haven’t been stepping it up when it comes to production support; I’ll meet you halfway and agree with you. Right now everything you’re seeing in red doesn’t exist at the moment. We aren’t getting any usage information, bugs are flowing through untouched, and we have completely different metrics and dashboarding than your support teams. Even our ticket queues are separate.

“So let’s start over with a fresh approach. Let’s say I put some skin in the game. I dedicate two people on each team to handle livesite support. They work with your team, in the same room, and for 4 hours a day they take incoming calls. I think, with a little time spent in the foxholes, they’d have a much better appreciation of what it takes to create and support highly available software.”

I’ve screwed up. Emily, George and I have been going over this, but I forgot to include Alex – and now his mouth drops in shock. “Ben, you can’t ask us to do this. We’ll be stuck in the mud. Our people are very expensive, highly trained specialists. You’re yanking them into a call center? They’ll quit, Ben. And I shouldn’t have to tell you, it’ll take us months to find a replacement. A call center person takes a single phone call, and we can get them the next day – 16 bucks an hour.”

I tell him as best I can – we can’t continue begging for more coders and complaining about the tickets we’re drowning in and refusing to make any tradeoffs. “Ivan is right when he says we’re not doing enough. I want to try this – as an experiment.”

Alex says desperately, “Ben, once again, we’ll lose these people. No one wants to work in a call center. No one. For us to do our jobs effectively, we need to not be interrupt driven. Come on, you know how context switching works – it’s slow death for any programmer. I can’t imagine anyone on our team being willing to swallow this.”

I reply firmly, “We can talk about this later if you want – nothing’s been decided. But if we want something to change, we’re going to have to stop thinking of support as being some other person’s job. And you can’t tell me this isn’t costing us anyway. Last sprint, we lost half the team to firefighting on the third day of the sprint. What would you rather have – a 25% reduction in firepower, or 50%?”

Gears are starting to turn, so I press my advantage. I explain that we’ll rotate support on the team so no one is stuck with a pager full time. And the people embedded with the support teams will be spending at least half their time doing what they’ve always done and are best at – coding. This means automating those manual tasks – creating runbooks and jobs that can be executed single-click. I make some headway with Alex, but you can tell he’s still convinced we’ll see a mass exodus of talent once we saddle them with any amount of livesite support.

Ivan, for once, is also thinking. “So you’re to help with support. What’s this going to cost me in trade?”

“What’s it going to cost you? Information. We need information, Ivan, because we’re working blind right now. We need to know what’s working and what isn’t for our customers, and your team is closer to that source than anyone.

We need your teams to commit to using those runbooks as they’re created – and then help us make them better. We’re not trying to automate everything here – just the most common, manual tasks that crop up repeatedly. The kind of stuff that’s better done by a machine than a human being.”

Ivan isn’t smirking anymore, but he’s still deeply suspicious. “Really. Hmm. So if we go ahead with this – attempt – this means you’ll meet, every week, for an hour with us? We’ll go over all the bugs opened in the past week and work together on these automated jobs? Come on, Ben, some new crisis will pop up and you’ll flit off elsewhere. I doubt you have the attention span for this kind of effort. Really, don’t you think it’s smarter not to start?”

I tell him to put me to the test – for 3 months. We’ll experiment with creating these runbooks and a single queue displaying open support tickets that’s the same as the work queue for the devs. I even offer to pay out of my budget for new monitors in their war room. “And yes, the entire support team – that includes you and I – will meet every Monday for one hour and go over the tickets, just like you said. I’ll bet you a steak dinner Ivan – in 3 months, we’ll hit our target. That means 40% of our tickets resolved in less than 15 minutes.”

The only thing that kept Ivan in the room long enough to broker a deal was the fact that Tabrez was there. Even then, it takes a few more haggling sessions with Ivan – and finally a Come-To-Jesus moment with Emily – to kick this off as an experiment. I’d love to say it took off like a rocket, but Alex’s concerns about developer grumbling were well founded. Our first few rounds of support were a little ragged, and there was still too much cross-team sniping.

But in the end, no one walked out the door. There would come a time when it was hard to believe that we’d ever worked any differently; it made a fundamental difference in getting us a better connection to the customer and knocking down our firefighting costs. And best of all, I got to enjoy an enormous T-bone steak, medium rare, courtesy of Emily and Ivan.

Behind the Story

During this chapter, Ben introduces the concept of working in smaller-sized teams that have true end-to-end-responsibility, in an effort to reduce the waste they are seeing because of handoffs between siloed groups. The role of security as part of their “Shift Left” movement makes an appearance, as does improving their initial support and remediation capabilities through the use of runbooks and automated jobs. Let’s go into a little more detail on each of these topics.

Small Cross-Functional Teams

My own version of the networked system is small, multidisciplinary teams focused on solving a particular business challenge. As the requirements of the company shifts, the initial teams might be joined by more small teams, each tackling a different problem. …Simple physics tells us that change requires energy, and I think there is far more momentum created when people are minded to want to go in a particular direction rather than being told to. It’s heart, and not just head, supported by a big opportunity that people can believe in. – Neil Perkin 1

You know, I’m all for progress. It’s change I object to. – Mark Twain

The team’s first foray into a value stream map was way back in Chapter 2, in the section “A Focus on Flow, Not on Quality.” Now, months later, they’re doing a second pass. This actually isn’t unusual; in fact, it’s healthy to do a reassessment from time to time. It never ceases to amaze us what an immediate impact a simple sticky-note flowchart can have, especially on numbers- and results-oriented executives. Most of us have never seen what it actually takes in effort to move an idea from concept through to delivery. Value stream maps expose friction and gaps in our processes and can become a powerful catalyst for global-scope improvements.

We’re indebted to the books Making Work Visible and The DevOps Handbook for their much more detailed discussions on creating actionable and useful value stream maps. Making Work Visible in particular outlines an alternative approach that seeks to engage that vital upper stratosphere we’ll need to create lasting change. Instead of an outside agent proscribing a course as in our story, which can backfire badly, the committed leadership members are “locked into a room” and build the flow map themselves over several days. Having an enterprise diagnose itself using nonbiased elapsed time metrics – vs. a report coming from a disengaged outside party – creates a much better environment of buy-in and cooperation. Often, the coordinators will have to put on the brakes, forcing participants to finish mapping out the problem before driving to potential solutions – an excellent problem to have!2

Finding the Blinks

Ben’s proposal to Douglas in the section “Leaner and Meaner” gets the winning nod for a few reasons. First, this is a timeboxed trial period of 3 months only. After 3 months, if there’s not improvement, rolling the attempt back won’t come with a huge cost in terms of rep. Secondly, he did his prework. Soliciting and getting the buy-in from the architects and security auditors made for some nice trump cards in his discussion, and it will set the groundwork for a much better holistic solution down the road.

But the biggest single reason why the SWAT team proposal won out was that it had the strong backing of the business, Footwear. They’re clearly not happy with the cost, lack of transparency, and the effectiveness of the siloized process as it currently stands. Each group has been trying to do their work in a vacuum with a local mandate and criteria for success; with Tabrez’s backing, Ben can try as an experiment delivering value with very small cross-functional teams – and get feedback from Tabrez on a regular basis so they can make adjustments on the fly.

Ben is betting that if they break down feature requests to very small pieces of functionality – there’s only so much work that can be done with a 6–12 person team after all – and focus all their efforts on a successful release to production in 2 weeks, Tabrez and his Footwear customer base will start to see some improvements, and they’ll start to pick up speed in a meaningful, sustainable way.

We commonly see executives drilling into deep-level detail about the individual artifacts and steps themselves, even on the first few passes. However, the primary value of the value stream map is to expose, not what’s working, but what isn’t working – waste. This can be in the form of manual information flow, but more than likely – as we’ve mentioned before – this waste is happening as work is handed off to a separate team’s work queue – what was called “blinks” in the book Team of Teams.

WonderTek is no exception. Most of their waste has come in the form of delays due to handoffs. Ben is proposing a change where delivery is happening across a single cross-functional team. It comes with a cost; the delivery teams will have to accept directions from the stakeholder much more frequently, and the stakeholders will need to invest time into providing accurate and timely feedback, so the work stays on course.

This is comparable to a restaurant breaking down the walls between the kitchen and the seating area and having all the meal preparation happen in the open. This can be undesirable; it exposes a lot more mess and noise and can be limiting. However, many top-end restaurants have found an open and transparent kitchen to be a deciding factor in having a clean, safe, and productive working area; the lack of physical walls leads to a stronger connection between the people preparing the food and those enjoying it.

Expectations are being set up front that this is a learning opportunity and that mistakes will be made. There’s simply too much unknown at the starting line; they don’t know yet how to set up robust monitoring, or feed usage back to set priorities in their work. Runbooks and better job automation are still a blank page. Using feature flags is still an unknown space, as is templated environments for the most part.

Still, this is a huge turning point. A smaller team will be much tighter knit and lose less due to communication overhead than a larger bloated team. They’re getting early feedback from security and architects – meaning fewer heart attack-inducing surprises late in the game as the application is halted just before release for refactoring. Karen is proposing a readiness review as part of an ongoing effort – where the team, stakeholders, and architects jointly review self-graded scorecards and flag a few priorities to address over the next few sprints – meaning quality and addressing technical debt won’t be victimized by a single-minded focus on flow.

The SWAT Team Model

A separate offsite location. In this case, Tabrez is hooking the team up with a common work area very close to their end user base. Despite Douglas’ objections, we think a closer tie to Footwear will in the end be a net positive – there’ll be less delays in communication and the developers will feel and be seen as part of the business flow, vs. an isolated, remote group.

Work is visible, and there’s a single definition of value. WonderTek has already made some advances in this area; Karen’s proposal takes this even further. We can’t overstate the importance of displaying the flow of work and finding ways to continually make those displays powerful and omnipresent. Toyota made information radiators and omnipresent displays a key part of their Lean transformation. In the fight against Al Qaeda, General McChrystal broke down calcified organizational siloes with a constant barrage of information and huge war room displays in one common location.3 This new SWAT team will have large displays showing their key business-facing metrics everywhere as well. Operations might have special modules or subdisplays showing numbers like availability or CPU metrics, or page response time. Developers might have their own minidashboards showing bug counts and delivery velocity. But the real coin of the realm, the common language and focus, will be the numbers that matter to the business – in WonderTek’s case, cycle time, global availability, response time, and # of unique logins by month.

A common work queue. Bugs, feature stories, and tasks, and environment and configuration requests are all the same thing – work. Having a single queue showing work for an entire project across every delivery team will be key for them to expose cross-team blockers quickly and maintain that open, honest, transparent behavior in working with their customers. And as we discussed earlier in Chapter 4, there’s going to be a strong need to gate the work the team is acting on. BSAs and PMs will still very much be needed to help shape requirements into actionable work and ask the hard questions:

What business problem are we trying to solve?

How will we know we’re on the right track?

What monitoring do we have in place to gather this information, and what numbers do we need to track to confirm our assumptions and validate the design? 4

Hopefully, this gating will turn away a significant amount of work before a line of code is ever written. Studies have found that lead time – not the number of releases a day – is one of the greatest predictors of both customer satisfaction and employee happiness. A large backlog of work waiting in queue, which we used to think was a comforting sign of job security, is as damaging to a software delivery team as a huge inventory sitting on shelves are to a manufacturer.

A learning friendly environment. Besides a blame-free postmortem ethos that favors learning from errors, the team is committing to regular weekly demos that are open to all in the company. Some argue that having a single “DevOps team” is an antipattern; we do not agree. Past experience shows that having several pilot teams experimenting with DevOps is a much less disruptive and necessary part of the “pioneers/settlers” early on growth phase. One definite antipattern we’d want to avoid is exclusiveness; if this new team is showered with all kinds of attention and shiny new gadgets while the rest of the organization languishes as “legacy,” resentment will build. Treating this work as an open experiment can help spread enthusiasm and defuse potential friction.

Destructible, resilient environments. What form this will take is still unknown. Will the team use Chaos Monkey or Simian Army type approach to force high availability on their production systems? Will they explore Docker or Kubernetes? Will their architecture use worker roles to help with scalability? Will they end up using Chef, Puppet, or Ansible to help configure and script their environments and enforce consistency? All of this is still in the gray areas of the map still; but early on, with global availability being a very visible indicator, the entire team will be thinking of ways of taming their ecosystem problems.

Self-organizing, self-disciplining teams. They didn’t propose blowing up the organization – an idea completely above their grasp. Instead, each SWAT team is both small and virtual, with each team member having the same direct report they did previously. Ben for example might have two to three engineers on each delivery team that still reports to him, and he handles resourcing and administration. Architecture might have one architect spread out among five or six of these virtual teams, spending perhaps one day every 2 weeks embedded with them. And Emily’s IT team may have two or more people writing environment and configuration recipes alongside the developers; perhaps, over time as the need for new environments drops, their involvement could shrink to a single halftime IT admin on the team.

Are Shared Services Models a Dead End?

The point earlier about a virtual self-organizing team is important. The proposal doesn’t depend on breaking up the organization into horizontal small delivery groups – a reshuffle that isn’t always possible with many organizations. We’ve seen several examples of “virtual” cross-functional teams that have different direct reports – but still function as a single unit, with the same incentives and goals. This is imperfect, but workable, and far from a serious limitation.

As tempting as it seems, one cannot reorganize your way to continuous improvement and adaptiveness. What is decisive is not the form of the organization, but how people act and react. The roots of Toyota’s success lie not in its organizational structures, but in developing capability and habits in people. It surprises many people, in fact, to find that Toyota is largely organized in a traditional, functional-department style. 5

We do feel that having the physical org match the way software is being delivered is a powerful asset and works in line with Conway’s Law – as powerfully demonstrated by Amazon. Reshuffling the delivery teams, removing the middle layer to make the org more horizontal, and creating a new standard common title were identified by the Azure DevOps management team as key contributors to instituting real change and improving velocity. But we won’t be dogmatic on the subject; there’s too many winning examples of teams that made their delivery teams work without a complete reshuffle.

Every Azure DevOps program team has a consistent size and shape – about 8-12 people, working across the stack all the way to production support. This helps not just with delivering value faster in incremental sizes but gives us a common taxonomy so we can work across teams at scale. Whenever we break that rule – teams that are smaller than that, or bloat out to 20 people for example – we start to see antipatterns crop up; resource horse-trading and things like that. I love the ‘two pizza rule’ at Amazon; there’s no reason not to use that approach, ever. 6

You could argue that the decisions Ben made in this section should have happened much earlier. And perhaps, Ben missed an opportunity by not folding in the business earlier and taking a more holistic view of things. But, no one gets it perfect right out of the gate – and the pieces they put in place around source control, release management, and CI/CD were all necessary leaps in maturity they had to make to get to where global problems with delivery could be addressed. It’s hard to dispute with the careful, conservative approach of taking it one step at a time and choosing to focus first on what Ben’s team was bringing to the table.

You’ll notice, at no point did the proposal mention the word “microservices” or even “DevOps.” The SWAT team proposal is really a Trojan horse, introducing not just those concepts but also enabling a better way of handling legacy heartburn with the strangler fig or scaffolding pattern. Reasonably sized teams using services-oriented interfaces and responsible for smaller capsules of functionality are not far off from our ideal of Continuous Delivery and microservices, with little or no delays due to handoffs, unstable environments, or outside dependencies.

Configuration Management and Infrastructure as Code

Your greatest danger is letting the urgent things crowd out the important.

– Charles E. Hummel

Provisioning new servers is manual, repetitive, resource-intensive, and error-prone process – exactly the kind of problem that can be solved with automation.

– Continuous Delivery

Consistency is more important than perfection. As an admin, I’d rather have a bad thing done 100 times the same terrible way, than the right thing done once – and who the hell knows what the other 99 configs look like?

– Michael Stahnke

This book is written primarily from the perspective of a group of developers moving toward the DevOps model. We’ve discussed at length topics like peer review, Kanban, continuous integration, MVP, and hypothesis-driven development – all are coding-centric attempts to try to catch defects earlier and deploy software in smaller, safer batches.

Putting the onus on the development team was a deliberate choice. There’s a common misperception among the development community that DevOps means “NoOps,” and they can finally throw off the shackles of IT and manage their own environments independently. It’s important for development teams to realize that for DevOps to work, producing quality code becomes much more important. It’s a massive shift in thinking for most development teams to have to worry about customer satisfaction, feature acceptance, and how their apps are actually performing in the real world; ultimately, that’s what true end-to-end responsibility means. Far from “NoOps” or “ShadowOps,” coders will need to find ways to engage more frequently with their compadres that are handling infrastructure and operations support.

We’re only able to spend a few pages skimming the basics of configuration management software and infrastructure as code, a vast and fast-moving landscape. But it was important that we give it some consideration, as arguably this is the best bang-for-the-buck value that the DevOps movement offers. As our software has gotten better and containerization and cloud platforms have matured, there are fewer and fewer obstacles to having servers and environments maintained with code just as we would software.

…Even with the latest and best new tools and platforms, IT operations teams still find that they can’t keep up with their daily workload. They don’t have the time to fix longstanding problems with their systems, much less revamp them to make the best use of new tools. In fact, cloud and automation often makes things worse. The ease of provisioning new infrastructure leads to an ever-growing portfolio of systems, and it takes an ever-increasing amount of time just to keep everything from collapsing. Adopting cloud and automation tools immediately lowers barriers for making changes to infrastructure. But managing changes in a way that improves consistency and reliability doesn’t come out of the box with the software. It takes people to think through how they will use the tools and put in place the systems, processes, and habits to use them effectively. 7

And that’s the problem – we rarely give these people the bandwidth to think about anything beyond the short term. Most IT teams are suffering from the tyranny of the urgent, where important things that bring long-term benefits are constantly being deferred or shuffled to the back of the deck. As with the problems we’ve experienced with software delivery teams, the issue is rarely the people – it’s the processes and tools we give them to work with. Poor performing IT and Operations teams rarely have the capacity to think beyond the demands of the day.

As with software delivery teams, delivering infrastructure more reliably and repeatably comes down to better automation and better process. Having infrastructure teams track their work and make it visible in Kanban promotes transparency and helps drive tasks through to completion – and limits work in progress so people are not overwhelmed with attempting to multitask multiple critical tasks at once. Managers and orgs that truly care about their people will take care to track the amount of time they’re spending on urgent, nonvalue added tasks – what Google’s SRE movement appropriately labels toil – and make sure it’s capped so that at least 20%, and preferably 50%, of their time is spent on more rewarding work that looks to the future. Better process and tools in the form of infrastructure as code – especially the use of configuration management and scripted environments, containers, and orchestration – promote portability and lessen the problems caused by unique, invaluable, and brittle environments.

Either You Own Your Environments or Your Environments Own You

At his first day at one former assignment, Dave was shown his desk workspace. Oddly, there was an antique desktop computer humming away below his desk, but it didn’t appear to have any monitor display hooked up. When he asked what the desktop was doing under his desk, he was told, “Don’t turn that off! The last time we powered that desktop down, it shut down parts of our order processing system. We’re not sure what it does, and the guy that set it up left a long time ago. Just leave it alone.”

In the old way of doing things, we treat our servers like pets, for example Bob the mail server. If Bob goes down, it’s all hands on deck. The CEO can’t get his email and it’s the end of the world. In the new way, servers are numbered, like cattle in a herd. For example, www001 to www100. When one server goes down, it’s taken out back, shot, and replaced on the line. 8

Mainframes, servers, networking components, or database systems that are unique, indispensable, and can never be down are “pets” – they are manually built, lovingly named, and keeping these static and increasingly creaky environments well fed and happy becomes an increasingly important part of our worklife. Gene Kim called these precious, impossible to replace artifacts “works of art” in the classic Visible Ops Handbook; Martin Fowler called them “snowflake environments,” with each server boasting a unique and special configuration.

The rise of modern cloud-based environments and the challenges of maintaining datacenters at scale has led to the realization that servers and environments are best treated as cattle – replaceable, mass-produced, modular, predictable, and disaster tolerant. The core tenet here is that it should always be easier and cheaper to create a new environment than to patch or repair an old one. Those of us who grew up on farms knew why our parents told us not to name new baby lambs, goats, or cows; these animals were here to serve a purpose, and they wouldn’t be here forever – so don’t get attached!

The Netflix operations team, for example, knows that a certain percentage of their AWS instances will perform much worse than average. Instead of trying to isolate and track down the exact cause, which might be a unique or temporary condition, they treat these instances as “cattle.” They have their provisioning scripts test each new instance’s performance, and if they don’t meet a predetermined standard, the script destroys the instance and creates a new one.

The situation has gotten much better in the past 10 years with the rise of configuration management. Still, in too many organizations we find manually configured environments and a significant amount of drift that comes with making one-off scripting changes and ad hoc adjustments in flight. As one IT manager we talked with put it, “Pets?! WE are the pets! Our servers own us!”

What Is Infrastructure As Code?

Infrastructure as Code (IAC) is a movement that aims to bring the same benefits to infrastructure that the software development world has experienced with automation. Modern configuration management tools can treat infrastructure as if it was software and data; servers and systems can be maintained and governed through code kept on a version control system, tested and validated, and deployed alongside code as part of a delivery pipeline. Instead of manual or ad-hoc custom scripts, it emphasizes consistent, repeatable routines for provisioning systems and governing their configuration. Applying the tools we found so useful in the “first wave” of Agile development: version control, continuous integration, code review, and automated testing to the Operations space allow us to make infrastructure changes much more safe, reliable, and easy.

IAC is best described by the end results: your application and all the environmental infrastructure it depends on – OS, patches, the app stack and its config, data, and all config – can be deployed without human intervention. When IAC is set up properly, operators should never need to log onto a machine to complete setup. Instead, code is written to describe the desired state, which is run on a regular cadence and ensures the systems are brought back to convergence. And once running, no changes should ever be made separate from the build pipeline. (Some organizations have actually disabled SSH to prevent ad hoc changes!) John Willis once infamously described this as the “Mojito Test” – can I deploy my application to a completely new environment while holding a Mojito?

It took some bold steps to get our infrastructure under control. Each application had its own unique and beautiful configuration, and no two environments were alike – dev, QA, test, production, they were all different. Trying to figure out where these artifacts were and what the proper source of truth was required a lot of weekends playing “Where’s Waldo”! Introducing practices like configuration transforms gave us confidence we could deploy repeatedly and get the same behavior and it really helped us enforce some consistency. The movement toward a standardized infrastructure – no snowflakes, everything the same, infrastructure as code – has been a key enabler for fighting the config drift monster. 9

We keep beating the configuration problem drum, but we truly believe it is the root cause of many organizations’ software problems. Instead of treating configuration like a software development afterthought, treat it like the first-class citizen that it is. By solving the configuration problem, you ensure a consistent process which will inevitably lead you towards software that is easy to operate. 10

While in general we are not a fan of locking things down and establishing approval processes, when it comes to your production infrastructure it is essential. And since you should treat your testing environments the same way you treat production – this impacts both. Otherwise it is just too tempting, when things go wrong, to log onto an environment and poke around to resolve problems. …The best way to enforce auditability is to have all changes made by automated scripts which can be referenced later (we favor automation over documentation for this reason). Written documentation is never a guarantee that the documented change was performed correctly. 11

This was what Gene Kim was referring to when he spoke about “stabilizing the patient.” Keeping all the changes and patches the Operations team is rolling out as part of a script in VC and deployed as part of a pipeline means there’s a reliable record of what is being done and when, which dramatically simplifies reproducing problems. Having all your configuration information in version control is a giant step forward in preventing inadvertent changes and allowing easier rollbacks. It’s the only way to ensure our end goal of easier to operate software; this depends on a consistent process and stable, well-functioning systems.

It also keeps your Ops teams from going crazy, spending all their time firefighting issues caused by unplanned and destructive changes. Changes to systems change from becoming high-stress, traumatic events to routine. Users can provision and manage the resources they need without involving IT staff; in turn, IT staff are freed up to work on more valuable and creative tasks, enabling change and more robust infrastructure frameworks.

There is another very important, and often overlooked, reason for why you should use IAC: happiness. Deploying code and managing infrastructure manually is repetitive and tedious. Developers and sysadmins resent this type of work, as it involves no creativity, no challenge, and no recognition. You could deploy code perfectly for months, and no one will take notice – until that one day when you mess it up. That creates a stressful and unpleasant environment. IAC offers a better alternative that allows computers to do what they do best (automation) and developers to do what they do best (coding). 12

One often overlooked benefit is the way it changes the Development and Operations dynamic to a virtuous cycle. Before, when there was problems, it was hard to tell if the problem was caused by server variability or an environmental change, which wastes valuable time and causes finger pointing. And in “throwing it over the fence,” developers often miss or provide incomplete setup information to the release engineers in charge of getting new features to work in production. Moving to coding environments and IAC forces these two separate groups to work together on a common set of scripts that are tracked and released from version control. There’s a tighter alignment and communication cycle with this model, so changes will work at every stage of the delivery plan.

Infrastructure as code leads to a more robust infrastructure, where systems can withstand failure, external threats, and abnormal loading. Contrary to what we used to believe, gating changes for long periods or preventing them (“don’t touch that server!”) does not make our servers and network more robust; instead, it weakens them and makes them more vulnerable, increasing the risk of disruption when long-delayed changes are rolled out in a massive push. Just as software becomes more resilient and robust as teams make smaller changes more often, servers and environments that are constantly being improved upon and replaced are more ready to handle disaster. Combined with another principle we learned from software development – the art of the blameless postmortem – IT teams that use IAC tools properly focus on improving and hardening systems after incidents, instead of patching and praying.

For us, automating everything is the #1 principle. It reduces security concerns, drops the human error factor; increases our ability to experiment faster with infrastructure our codebase. Being able to spin up environments and roll out POC’s is so much easier with automation. It all comes down to speed. The more automation you have in place, the faster you can get things done. It does take effort to set up initially; the payoff is more than worth it. Getting your stuff out the door as fast as possible with small, iterative changes is the only really safe way; that’s only possible with automation.

You would think everyone would be onboard with the idea of automation over manually logging on and poking around on VM’s when there’s trouble, but – believe it or not – that’s not always the case. And sometimes our strongest resistance to this comes from the director/CTO level! 13

IAC and Configuration Management Tools

There’s some subtle (and not-so-subtle) differences in the commercially available products around IAC in the market today. Server provisioning tools (such as Terraform, CloudFormation, or OpenStack Heat) that can create servers and networking environments – databases, caches, load balancers, subnet and firewall configs, routing rules, etc. Server templating tools like Docker, Packer, and Vagrant all work by uploading an image or machine snapshot, which can then be installed as a template using another IAC tool. Configuration management tools – among which are included Chef, Ansible, Puppet, and SaltStack – install and manage software on existing servers. They can scan your network continuously, discover and track details about what software and configurations are in place, and create a complete server inventory, flagging out of date software and inaccurate user accounts.