4

SUBSPACE TRACKING FOR SIGNAL PROCESSING

TELECOM SudParis, Evry, France

4.1 INTRODUCTION

Research in subspace and component-based techniques originated in statistics in the middle of the last century through the problem of linear feature extraction solved by the Karhunen–Loève transform (KLT). It began to be applied to signal processing 30 years ago, and considerable progress has since been made. Thorough studies have shown that the estimation and detection tasks in many signal processing and communications applications, such as data compression, data filtering, parameter estimation, pattern recognition, and neural analysis, can be significantly improved by using the subspace and component-based methodology. Over the past few years new potential applications have emerged, and subspace and component methods have been adopted in several diverse new fields such as smart antennas, sensor arrays, multiuser detection, time delay estimation, image segmentation, speech enhancement, learning systems, magnetic resonance spectroscopy, and radar systems, to mention only a few examples. The interest in subspace and component-based methods stems from the fact that they split the observations into a set of desired components and a set of disturbing components. They not only provide new insights into many such problems, but also offer a good tradeoff between achieved performance and computational complexity. In most cases they can be considered low-cost alternatives to computationally intensive maximum-likelihood approaches.

In general, subspace and component-based methods are obtained by using batch methods, such as the eigenvalue decomposition (EVD) of the sample covariance matrix or the singular value decomposition (SVD) of the data matrix. However, these two approaches are not suitable for adaptive applications that track nonstationary signal parameters, where the required repeated estimation of the subspace or of the eigenvectors can be a real computational burden, because their iterative implementation needs O(n³) operations at each update, where n is the dimension of the vector-valued data sequence. Before proceeding with a brief literature review of the main contributions to the adaptive estimation of subspaces or eigenvectors, let us first classify these algorithms with respect to their computational complexity. If r denotes the dimension of the principal (or dominant) or minor subspace we would like to estimate, since usually r ≪ n, it is traditional to refer to the following classification. Algorithms requiring O(n²r) or O(n²) operations per update are classified as high complexity, algorithms with O(nr²) operations as medium complexity, and algorithms with O(nr) operations as low complexity. This last category is the most important one from a real-time implementation point of view, and schemes belonging to this class are also known in the literature as fast subspace tracking algorithms. It should be mentioned that methods belonging to the high complexity class usually present faster convergence rates than those of the other two classes. Since the paper by Owsley [56], which first introduced an adaptive procedure for the estimation of the signal subspace with O(n²r) operations, the literature on subspace or eigenvector tracking from a signal processing point of view has become extremely rich.

The survey paper [20] constitutes an excellent review of results up to 1990, treating the first two classes, since the last class was not available at the time it was written. The most popular algorithm of the medium class was proposed by Karasalo in [40]. In [20], it is stated that this dominant subspace algorithm offers the best performance-to-cost ratio and thus serves as a point of reference for subsequent algorithms by many authors. The merger of signal processing and neural networks in the early 1990s [39] brought much attention to a method originated by Oja [50] and applied by many others. The Oja method requires only O(nr) operations at each update. It is clearly the continuous interest in the subject and significant recent developments that gave rise to this third class. Rather than giving a comprehensive survey of all the contributions, which is outside the scope of this chapter, we focus on some of them. The interested reader may refer to [29, pp. 30–43] for an exhaustive literature review and to [8] for tables containing exact computational complexities and a ranking, with respect to convergence, of recent subspace tracking algorithms. In the present work, we mainly emphasize the low complexity class for both dominant and minor subspace tracking, and for dominant and minor eigenvector tracking, while we briefly address the most important schemes of the other two classes. For these algorithms, we focus on their derivation from different iterative procedures coming from linear algebra, and on their theoretical convergence and performance in stationary environments. Many important issues, such as the finite precision effects on their behavior (e.g., possible numerical instabilities due to roundoff error accumulation), the different adaptive stepsize strategies, and the tracking capabilities of these algorithms in nonstationary environments, will be left aside. The interested reader may refer to the simulation sections of the different papers that deal with these issues.

The derivation and analysis of algorithms for subspace tracking require a minimum background in linear algebra and matrix analysis. This is why the standard linear algebra material necessary for this chapter is recalled in Section 4.2. This is followed in Section 4.3 by the general observation model studied, which fixes the main notations, and by the statement of the problem of adaptively estimating and tracking principal or minor subspaces (or eigenvectors). Then, Oja's neuron is introduced in Section 4.4 as a preliminary example to show that subspace or component adaptive algorithms are derived empirically from different adaptations of standard iterative computational techniques issued from numerical methods. In Sections 4.5 and 4.6, different adaptive algorithms for principal (or minor) subspace analysis and component analysis, respectively, are introduced. As for Oja's neuron, the majority of these algorithms can be viewed as heuristic variations of the power method. These heuristic approaches need to be validated by convergence and performance analysis. Several tools for addressing these points in a stationary environment, such as the stability of the ordinary differential equation (ODE) associated with a stochastic approximation algorithm and the Gaussian approximation, are given in Section 4.7. Some illustrative applications of principal and minor subspace tracking in signal processing are given in Section 4.8. Section 4.9 contains some concluding remarks. Finally, some exercises are proposed in Section 4.10, essentially to prove some properties and relations introduced in the other sections.

4.2 LINEAR ALGEBRA REVIEW

In this section, several useful notions from linear algebra, such as the EVD, the QR decomposition, and the variational characterization of the eigenvalues/eigenvectors of real symmetric matrices, and from matrix analysis, such as a class of standard subspace iterative computational techniques, are recalled. Finally, a characterization of the principal subspace of a covariance matrix derived from the minimization of a mean square error completes this section.

4.2.1 Eigenvalue Decomposition

Let C be an n × n real symmetric [resp. complex Hermitian] matrix, which is also nonnegative definite because C will represent a covariance matrix throughout this chapter. Then there exist (see e.g. [37, Sec. 2.5]) an orthogonal [resp. unitary] matrix U = [u1, …, un] and a real diagonal matrix Δ = Diag(λ1, …, λn) such that C can be decomposed as follows:

$$C = U\Delta U^T = \sum_{i=1}^{n} \lambda_i u_i u_i^T \quad [\text{resp. } C = U\Delta U^H]. \qquad (4.1)$$

The diagonal elements of Δ are called eigenvalues; arranged in decreasing order, they satisfy λ1 ≥ … ≥ λn ≥ 0, while the orthonormal columns (ui)i=1,…,n of U are the corresponding unit 2-norm eigenvectors of C.

For the sake of simplicity, only real-valued data will be considered from the next subsection onward and throughout this chapter. The extension to complex-valued data is often straightforward, by replacing the transposition operator with the conjugate transposition operator. But we note two difficulties. First, for simple eigenvalues, the associated eigenvectors are unique up to a multiplicative sign in the real case, but only up to a unit-modulus constant in the complex case; consequently, a constraint ought to be added to fix them, in order to avoid any discrepancies between the statistics observed in numerical simulations and the theoretical formulas. The reader interested in the consequences of this nonuniqueness on the derivation of the asymptotic variance of eigenvectors estimated from sample covariance matrices (SCMs) can refer to [34] (see also Exercise 4.1). Second, in the complex case, the second-order properties of multidimensional zero-mean random variables x are not characterized by the complex Hermitian covariance matrix $E(xx^H)$ only, but also by the complex symmetric complementary covariance matrix $E(xx^T)$ [58].

The computational complexity of the most efficient existing iterative algorithms that perform the EVD of real symmetric matrices is cubic per iteration with respect to the matrix dimension (more details can be found in [35, Chap. 8]).
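To make (4.1) concrete, here is a minimal sketch assuming NumPy (the chapter itself prescribes no software). `numpy.linalg.eigh` returns the eigenvalues of a symmetric matrix in ascending order, so the illustrative helper `sorted_evd` reorders them into the decreasing convention used in this chapter; the dense solver behind `eigh` has the cubic cost mentioned above.

```python
import numpy as np


def sorted_evd(C):
    """EVD C = U Delta U^T of a real symmetric matrix, eigenvalues in decreasing order."""
    eigvals, eigvecs = np.linalg.eigh(C)   # eigh returns eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]      # reorder so that lambda_1 >= ... >= lambda_n
    return eigvals[order], eigvecs[:, order]


rng = np.random.default_rng(0)
X = rng.standard_normal((5, 200))          # 200 snapshots of a 5-dimensional vector
C = X @ X.T / X.shape[1]                   # sample covariance: symmetric, nonnegative definite
lam, U = sorted_evd(C)

print(lam)                                        # decreasing, nonnegative eigenvalues
print(np.allclose(U @ np.diag(lam) @ U.T, C))     # reconstruction C = U Delta U^T
print(np.allclose(U.T @ U, np.eye(5)))            # orthonormal eigenvectors
```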

4.2.2 QR Factorization

The QR factorization of an n × r real-valued matrix W, with n ≥ r is defined as (see e.g. [37, Sec. 2.6])

$$W = QR = [Q_1, Q_2]\begin{bmatrix} R_1 \\ O \end{bmatrix} = Q_1R_1, \qquad (4.2)$$

where Q is an n × n orthogonal matrix, R is an n × r upper triangular matrix, Q1 denotes the first r columns of Q, and R1 is the r × r matrix constituted by the first r rows of R. If W has full column rank, the columns of Q1 form an orthonormal basis for the range of W. Furthermore, in this case, the skinny factorization Q1R1 of W is unique if R1 is constrained to have positive diagonal entries. The QR decomposition can be computed in several ways. Existing methods are based on Householder, block Householder, Givens, or fast Givens transformations. Alternatively, the Gram–Schmidt orthonormalization process or a more numerically stable variant called modified Gram–Schmidt can be used. The interested reader can find details of the aforementioned QR implementations in [35, pp. 224–233], where the complexity is of the order of O(nr²) operations.
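The uniqueness statement can be checked numerically. The sketch below, assuming NumPy, post-processes `numpy.linalg.qr` (reduced mode) so that R1 has a positive diagonal, then compares the result with the factors obtained by a different route, a Cholesky factorization of $W^TW$ (which yields $R_1^T$). The helper name `skinny_qr_positive` is ours.

```python
import numpy as np


def skinny_qr_positive(W):
    """Skinny QR factorization W = Q1 R1 with a positive diagonal in R1."""
    Q1, R1 = np.linalg.qr(W, mode="reduced")     # Q1: n x r with orthonormal columns, R1: r x r
    signs = np.sign(np.diag(R1))
    signs[signs == 0] = 1.0                      # leave columns with a zero pivot unchanged
    # Flipping column j of Q1 and row j of R1 together leaves the product Q1 R1 unchanged.
    return Q1 * signs, signs[:, None] * R1


rng = np.random.default_rng(1)
W = rng.standard_normal((6, 3))                  # full column rank with probability one
Q1, R1 = skinny_qr_positive(W)

# Independent route: W^T W = L L^T (L lower triangular, positive diagonal), so R1 = L^T.
L = np.linalg.cholesky(W.T @ W)
R1_chol = L.T
Q1_chol = np.linalg.solve(L, W.T).T              # Q1 = W R1^{-1} = W (L^T)^{-1}
```

Both routes give the same factors up to rounding, as expected from uniqueness; the Cholesky route is shown only as a check, since it is numerically less robust than Householder QR for ill-conditioned W.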

4.2.3 Variational Characterization of Eigenvalues/Eigenvectors of Real Symmetric Matrices

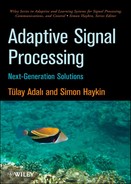

The eigenvalues of a general n × n matrix C are only characterized as the roots of the associated characteristic equation. But for real symmetric matrices, they can be characterized as the solutions to a series of optimization problems. In particular, the largest λ1 and the smallest λn eigenvalues of C are solutions to the following constrained maximum and minimum problem (see e.g. [37, Sec. 4.2]).

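$$\lambda_1 = \max_{\|w\|=1} w^TCw \quad \text{and} \quad \lambda_n = \min_{\|w\|=1} w^TCw. \qquad (4.3)$$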

Furthermore, the maximum and minimum are attained by the unit 2-norm eigenvectors u1 and un associated with λ1 and λn respectively, which are unique up to a sign for simple eigenvalues λ1 and λn. For nonzero vectors $w \in \mathbb{R}^n$, the expression $\frac{w^TCw}{w^Tw}$ is known as the Rayleigh quotient, and the constrained maximization and minimization (4.3) can be replaced by the following unconstrained maximization and minimization

$$\lambda_1 = \max_{w \neq 0} \frac{w^TCw}{w^Tw} \quad \text{and} \quad \lambda_n = \min_{w \neq 0} \frac{w^TCw}{w^Tw}. \qquad (4.4)$$

For simple eigenvalues λ1, λ2, …, λr or λn, λn−1, …, λn−r+1, (4.3) extends to the following iterative constrained maximizations and minimizations (see e.g. [37, Sec. 4.2])

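$$\lambda_k = \max_{\|w\|=1,\ w \perp u_1, \dots, u_{k-1}} w^TCw, \quad k = 2, \dots, r, \qquad (4.5)$$

$$\lambda_k = \min_{\|w\|=1,\ w \perp u_{k+1}, \dots, u_n} w^TCw, \quad k = n-1, \dots, n-r+1, \qquad (4.6)$$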

and the constrained maximum and minimum are attained by the unit 2-norm eigenvectors uk associated with λk which are unique up to a sign.

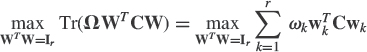

Note that when λr > λr+1 or λn−r > λn−r+1, the following global constrained maximizations or minimizations (denoted subspace criterion)

$$\max_{W^TW = I_r} \operatorname{Tr}(W^TCW) = \sum_{i=1}^{r} \lambda_i \quad \text{or} \quad \min_{W^TW = I_r} \operatorname{Tr}(W^TCW) = \sum_{i=n-r+1}^{n} \lambda_i, \qquad (4.7)$$

where W = [w1, …, wr] is an arbitrary n × r matrix, have as solutions (see e.g. [70] and Exercise 4.6) W = [u1, …, ur]Q or W = [un−r+1, …, un]Q, respectively, where Q is an arbitrary r × r orthogonal matrix. Thus, subspace criterion (4.7) determines the subspace spanned by {u1, …, ur} or {un−r+1, …, un}, but does not specify the basis of this subspace at all.

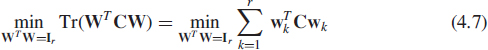

Finally, when λ1 > λ2 > … > λr > λr+1 or λn−r > λn−r+1 > … > λn−1 > λn, and (ωk)k=1,…,r denote r arbitrary distinct positive real numbers such that ω1 > ω2 > … > ωr > 0, the following modification of subspace criterion (4.7), denoted weighted subspace criterion,

$$\max_{W^TW = I_r} \operatorname{Tr}(\Omega W^TCW) \quad \text{or} \quad \min_{W^TW = I_r} \operatorname{Tr}(\Omega W^TCW), \qquad (4.8)$$

with Ω = Diag(ω1, …, ωr), has, as shown in [54], the unique solution {±u1, …, ±ur} or {±un−r+1, …, ±un}, respectively.
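A small numerical check of the subspace criterion (4.7) and of its weighted version, assuming NumPy and a randomly generated test matrix C: a rotation Q of the dominant eigenvectors leaves $\operatorname{Tr}(W^TCW)$ unchanged, whereas the weighted criterion with $\Omega = \operatorname{Diag}(2, 1)$ (an arbitrary choice of weights) drops as soon as the basis is rotated away from the eigenvectors.

```python
import numpy as np

rng = np.random.default_rng(2)
n, r = 6, 2
A = rng.standard_normal((n, n))
C = A @ A.T                                   # assumed symmetric nonnegative definite test matrix
lam, U = np.linalg.eigh(C)
lam, U = lam[::-1], U[:, ::-1]                # decreasing order
Ur = U[:, :r]

theta = 0.7                                   # an arbitrary rotation angle
Q = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
W = Ur @ Q                                    # another orthonormal basis of span{u_1, u_2}

# Subspace criterion: the value depends only on the subspace, not on Q.
subspace_value = np.trace(W.T @ C @ W)        # equals lambda_1 + lambda_2

# Weighted criterion with omega_1 > omega_2 > 0: rotating the basis lowers the value.
Omega = np.diag([2.0, 1.0])
weighted_eigvecs = np.trace(Omega @ Ur.T @ C @ Ur)   # 2 lambda_1 + lambda_2
weighted_rotated = np.trace(Omega @ W.T @ C @ W)     # smaller, since theta != 0

# A random orthonormal W does not exceed the maximum of the subspace criterion.
W_rand, _ = np.linalg.qr(rng.standard_normal((n, r)))
random_value = np.trace(W_rand.T @ C @ W_rand)
```

For this rotation one can check by hand that the weighted value falls by exactly $\sin^2(\theta)(\lambda_1 - \lambda_2)$.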

4.2.4 Standard Subspace Iterative Computational Techniques

The first subspace problem consists in computing the eigenvector associated with the largest eigenvalue. The power method presented in the sequel is the simplest iterative technique for this task. Under the condition that λ1 is the unique dominant eigenvalue, associated with u1, of the real symmetric matrix C, and starting from an arbitrary unit 2-norm vector w0 not orthogonal to u1, the following iterations produce a sequence (αi, wi) that converges to the largest eigenvalue λ1 and its corresponding unit 2-norm eigenvector ±u1:

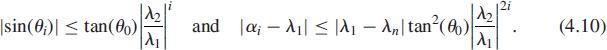

The proof can be found in [35, p. 406], where the definition and the speed of this convergence are specified as follows. Define θi ∈ [0, π/2] by $\cos(\theta_i) = |u_1^Tw_i|$, satisfying cos(θ0) ≠ 0; then

$$|\sin(\theta_i)| \le \tan(\theta_0)\left|\frac{\lambda_2}{\lambda_1}\right|^{i} \quad \text{and} \quad |\alpha_i - \lambda_1| \le |\lambda_1 - \lambda_n| \tan^2(\theta_0)\left|\frac{\lambda_2}{\lambda_1}\right|^{2i}. \qquad (4.10)$$

Consequently, the convergence rate of the power method is exponential and proportional to the ratio $|\lambda_2/\lambda_1|^i$ for the eigenvector and to $|\lambda_2/\lambda_1|^{2i}$ for the associated eigenvalue. If w0 is selected randomly, the probability that this vector is orthogonal to u1 is equal to zero. Furthermore, if w0 is deliberately chosen orthogonal to u1, the effect of finite precision in arithmetic computations will introduce errors that will finally provoke the loss of this orthogonality and therefore convergence to ±u1.
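The power method (4.9) is short enough to write out in full. This NumPy sketch (the function name `power_method` and the diagonal test matrix are illustrative choices) also records the iterates, so that $|\sin\theta_i|$ can be compared with the bound $\tan(\theta_0)|\lambda_2/\lambda_1|^i$ from [35].

```python
import numpy as np


def power_method(C, w0, num_iter):
    """Power method (4.9); also returns the iterates w_0, ..., w_num_iter."""
    w = w0 / np.linalg.norm(w0)
    alpha = w @ C @ w
    history = [w]
    for _ in range(num_iter):
        z = C @ w                     # w'_{i+1} = C w_i
        w = z / np.linalg.norm(z)     # w_{i+1} = w'_{i+1} / ||w'_{i+1}||
        alpha = w @ C @ w             # alpha_{i+1} = w_{i+1}^T C w_{i+1}
        history.append(w)
    return alpha, w, history


C = np.diag([4.0, 2.0, 1.0])          # lambda_2 / lambda_1 = 0.5 and u_1 = e_1
u1 = np.array([1.0, 0.0, 0.0])
alpha, w, history = power_method(C, np.ones(3), num_iter=30)

# Compare |sin(theta_i)| with tan(theta_0) |lambda_2 / lambda_1|^i.
cos0 = abs(u1 @ history[0])
tan0 = np.sqrt(1.0 - cos0**2) / cos0
sin_theta = [np.linalg.norm(wi - (u1 @ wi) * u1) for wi in history]
bound = [tan0 * 0.5**i for i in range(len(history))]
print(alpha)                          # close to lambda_1 = 4
```

The sine is computed as the norm of the component orthogonal to u1 rather than as $\sqrt{1-\cos^2}$, which would lose all accuracy once θi is tiny.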

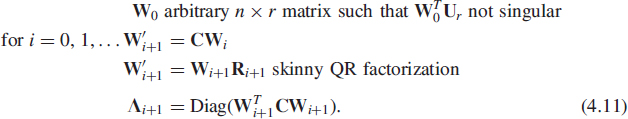

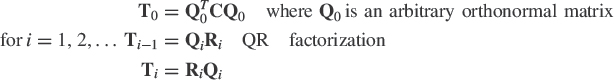

Suppose now that C is nonnegative definite. A straightforward generalization of the power method allows for the computation of the r eigenvectors associated with the r largest eigenvalues of C when its first r + 1 eigenvalues are distinct, or of the subspace corresponding to the r largest eigenvalues of C when only λr > λr+1. This method can be found in the literature under the name of orthogonal iteration (e.g., in [35]), subspace iteration (e.g., in [57]), or simultaneous iteration method (e.g., in [64]). First, consider the case where the r + 1 largest eigenvalues of C are distinct. With W0 an arbitrary n × r matrix with orthonormal columns such that $W_0^T[u_1, \dots, u_r]$ is nonsingular, and Δr = Diag(λ1, …, λr), the following iterations produce a sequence (Λi, Wi) that converges to (Δr, [±u1, …, ±ur]):

$$\text{for } i = 0, 1, \dots: \quad W'_{i+1} = CW_i, \quad W'_{i+1} = W_{i+1}R_{i+1} \ \text{(QR factorization)}, \quad \Lambda_{i+1} = \operatorname{Diag}\left(W_{i+1}^TCW_{i+1}\right). \qquad (4.11)$$

The proof can be found in [35, p. 411]. The definition and the speed of this convergence are similar to those of the power method: it is exponential and proportional to $\left(\max_{1 \le k \le r} \lambda_{k+1}/\lambda_k\right)^i$ for the eigenvectors and to $\left(\max_{1 \le k \le r} \lambda_{k+1}/\lambda_k\right)^{2i}$ for the eigenvalues. Note that if r = 1, then this is just the power method. Moreover, for arbitrary r, the sequence formed by the first column of Wi is precisely the sequence of vectors produced by the power method with the first column of W0 as starting vector.
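A sketch of the orthogonal iteration with QR orthonormalization, assuming NumPy and a diagonal test matrix whose first three eigenvalues are distinct, so that the individual columns converge to $\pm u_1, \pm u_2$ and the diagonal of $W_i^TCW_i$ converges to $\lambda_1, \lambda_2$. The function name is illustrative.

```python
import numpy as np


def orthogonal_iteration(C, W0, num_iter=200):
    """Orthogonal iteration with QR: returns (Lambda_i, W_i) after num_iter steps."""
    W, _ = np.linalg.qr(W0)                       # orthonormal starting matrix
    for _ in range(num_iter):
        W, _ = np.linalg.qr(C @ W)                # C W_i = W_{i+1} R_{i+1}
    Lam = np.diag(np.diag(W.T @ C @ W))           # Lambda = Diag(W^T C W)
    return Lam, W


C = np.diag([5.0, 3.0, 2.0, 1.0])                 # lambda_1 > lambda_2 > lambda_3: distinct
W0 = np.random.default_rng(3).standard_normal((4, 2))
Lam, W = orthogonal_iteration(C, W0)
print(np.diag(Lam))                               # close to [5, 3]
print(np.round(np.abs(W), 6))                     # columns close to +-e_1, +-e_2
```

Here the slowest ratio is max(3/5, 2/3) = 2/3, so 200 iterations are far more than needed.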

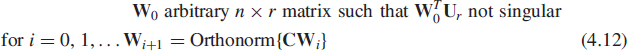

Consider now the case where λr > λr+1. Then the following iteration method

where the orthonormalization (Orthonorm) procedure not necessarily given by the QR factorization, generates a sequence Wi that converges to the dominant subspace generated by {u1, …, ur} only. This means precisely that the sequence ![]() (which here is a projection matrix because

(which here is a projection matrix because ![]() ) converges to the projection matrix

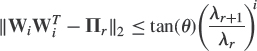

) converges to the projection matrix ![]() . In the particular case where the QR factorization is used in the orthonormalization step, the speed of this convergence is exponential and proportional to

. In the particular case where the QR factorization is used in the orthonormalization step, the speed of this convergence is exponential and proportional to ![]() , that is, more precisely [35, p. 411]

, that is, more precisely [35, p. 411]

where ![]() is specified by

is specified by ![]() . This type of convergence is very specific. The r orthonormal columns of Wi do not necessary converge to a particular orthonormal basis of the dominant subspace generated by u1, …, ur, but may eventually rotate in this dominant subspace as i increases. Note that the orthonormalization step (4.12) can be realized by other means than the QR decomposition. For example, extending the r = 1 case

. This type of convergence is very specific. The r orthonormal columns of Wi do not necessary converge to a particular orthonormal basis of the dominant subspace generated by u1, …, ur, but may eventually rotate in this dominant subspace as i increases. Note that the orthonormalization step (4.12) can be realized by other means than the QR decomposition. For example, extending the r = 1 case

$$w_{i+1} = \frac{Cw_i}{\|Cw_i\|} = Cw_i\left(w_i^TC^2w_i\right)^{-1/2}$$

to arbitrary r yields

$$W_{i+1} = CW_i\left(W_i^TC^2W_i\right)^{-1/2},$$

where the square root inverse of the matrix $W_i^TC^2W_i$ is defined by the EVD of this matrix with its eigenvalues replaced by their square root inverses. The speed of convergence of the associated algorithm is exponential and proportional to $|\lambda_{r+1}/\lambda_r|^i$ as well [38].
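The QR-free orthonormalization $W_{i+1} = CW_i(W_i^TC^2W_i)^{-1/2}$ only needs the square root inverse of an r × r matrix. Below is a NumPy sketch under that reading, with illustrative helper names; the check is on the projector $W_iW_i^T$, since only the subspace, not the individual columns, is guaranteed to converge.

```python
import numpy as np


def inv_sqrt_sym(M):
    """Square root inverse of a symmetric positive definite matrix, via its EVD."""
    vals, vecs = np.linalg.eigh(M)
    return vecs @ np.diag(vals ** -0.5) @ vecs.T


def symmetric_subspace_iteration(C, W0, num_iter=100):
    """Iterate W_{i+1} = C W_i (W_i^T C^2 W_i)^{-1/2}, an orthonormalization that avoids QR."""
    W = W0 @ inv_sqrt_sym(W0.T @ W0)           # orthonormalize the starting matrix the same way
    for _ in range(num_iter):
        CW = C @ W
        W = CW @ inv_sqrt_sym(CW.T @ CW)       # (C W)^T (C W) = W^T C^2 W because C is symmetric
    return W


C = np.diag([5.0, 3.0, 2.0, 1.0])              # lambda_3 / lambda_2 = 2/3
W0 = np.random.default_rng(9).standard_normal((4, 2))
W = symmetric_subspace_iteration(C, W0)
Pi_r = np.diag([1.0, 1.0, 0.0, 0.0])           # projector onto span{u_1, u_2} = span{e_1, e_2}
print(np.linalg.norm(W @ W.T - Pi_r, 2))       # small: the subspace has converged
```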

Finally, note that the power and orthogonal iteration methods can be extended to obtain the minor subspace or eigenvectors by replacing the matrix C by In − μC, where 0 < μ < 1/λ1, so that the eigenvalues 1 − μλn ≥ … ≥ 1 − μλ1 > 0 of In − μC are strictly positive and arranged in the reverse order of those of C.
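Replacing C by $I_n - \mu C$ in the power method yields the eigenvector of the smallest eigenvalue. The sketch below assumes NumPy, a diagonal test matrix, and the arbitrary choice $\mu = 0.9/\lambda_1$; `minor_eigvec_power` is an illustrative name.

```python
import numpy as np


def minor_eigvec_power(C, w0, mu, num_iter=200):
    """Power method applied to I_n - mu C (0 < mu < 1/lambda_1): converges to +-u_n."""
    B = np.eye(C.shape[0]) - mu * C     # same eigenvectors as C, eigenvalues 1 - mu*lambda_i reversed
    w = w0 / np.linalg.norm(w0)
    for _ in range(num_iter):
        z = B @ w
        w = z / np.linalg.norm(z)
    return w, w @ C @ w                  # eigenvector estimate and Rayleigh quotient of C


C = np.diag([4.0, 3.0, 2.0, 0.5])        # lambda_n = 0.5 with eigenvector e_4
lam1 = np.linalg.eigvalsh(C).max()
w, lam_n = minor_eigvec_power(C, np.ones(4), mu=0.9 / lam1)
print(lam_n)                             # close to 0.5
```

With these values the relevant ratio is (1 − 0.225·2)/(1 − 0.225·0.5) ≈ 0.62; a μ closer to 1/λ1 widens the gap between the two largest eigenvalues of $I_n - \mu C$ only when the large eigenvalues of C are close to λ1.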

4.2.5 Characterization of the Principal Subspace of a Covariance Matrix from the Minimization of a Mean Square Error

In the particular case where the matrix C is the covariance of the zero-mean random variable x, consider the scalar function J(W) where W denotes an arbitrary n × r matrix

$$J(W) = E\left(\|x - WW^Tx\|^2\right). \qquad (4.14)$$

The following two properties are proved (e.g. see [71] and Exercises 4.7 and 4.8).

First, the stationary points W of J(W) [i.e., the points W that cancel the gradient of J(W)] are given by W = UrQ, where the r columns of Ur denote here r arbitrary distinct unit 2-norm eigenvectors among u1, …, un of C, and Q is an arbitrary r × r orthogonal matrix. Furthermore, at each stationary point, J(W) equals the sum of the eigenvalues whose eigenvectors are not included in Ur.

Second, in the particular case where λr > λr+1, all stationary points of J(W) are saddle points except the points W whose associated matrix Ur contains the r dominant eigenvectors u1, …, ur of C. In this case J(W) attains the global minimum $\sum_{i=r+1}^{n} \lambda_i$. It is important to note that at this global minimum, W does not necessarily contain the r dominant eigenvectors u1, …, ur of C, but rather an arbitrary orthonormal basis of the associated dominant subspace. This is not surprising because

$$J(W) = \operatorname{Tr}(C) - 2\operatorname{Tr}(W^TCW) + \operatorname{Tr}(W^TCWW^TW)$$

with $\operatorname{Tr}(W^TCW) = \operatorname{Tr}(CWW^T)$ and $\operatorname{Tr}(W^TCWW^TW) = \operatorname{Tr}(CWW^TWW^T)$; thus J(W) depends on W only through $WW^T$, which is invariant under the rotation W → WQ. Finally, note that when r = 1 and λ1 > λ2, the minimization of J(w) (4.14) is solved by the unit 2-norm dominant eigenvector ±u1.
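The two properties of J(W) can be checked with the trace expression $J(W) = \operatorname{Tr}(C) - 2\operatorname{Tr}(W^TCW) + \operatorname{Tr}(W^TCWW^TW)$. This sketch assumes NumPy and a random covariance matrix; it also verifies the trace expression against a direct sample average, where it holds exactly when C is replaced by the sample covariance. The helper name `mse_criterion` is ours.

```python
import numpy as np


def mse_criterion(C, W):
    """J(W) = E||x - W W^T x||^2 written in terms of C = E(x x^T)."""
    WtCW = W.T @ C @ W
    return np.trace(C) - 2.0 * np.trace(WtCW) + np.trace(WtCW @ W.T @ W)


rng = np.random.default_rng(4)
n, r = 5, 2
A = rng.standard_normal((n, n))
C = A @ A.T                                           # assumed covariance matrix
lam, U = np.linalg.eigh(C)
lam, U = lam[::-1], U[:, ::-1]                        # decreasing order
Q, _ = np.linalg.qr(rng.standard_normal((r, r)))      # arbitrary r x r orthogonal matrix
J_min = mse_criterion(C, U[:, :r] @ Q)                # global minimum: lambda_3 + lambda_4 + lambda_5
J_saddle = mse_criterion(C, U[:, [0, 2]])             # stationary point built from u_1 and u_3

# Sanity check of the trace expression against a direct sample average (exact identity)
X = rng.standard_normal((n, 1000))
C_hat = X @ X.T / X.shape[1]
W_any = rng.standard_normal((n, r))                   # W need not have orthonormal columns here
residual = X - W_any @ (W_any.T @ X)
empirical = np.mean(np.sum(residual**2, axis=0))
```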

4.3 OBSERVATION MODEL AND PROBLEM STATEMENT

4.3.1 Observation Model

The general iterative subspace determination problem described in the previous section will now be specialized to a class of matrices C computed from observation data. In typical applications of subspace-based signal processing, a sequence of data vectors $x(k) \in \mathbb{R}^n$ is observed, satisfying the following very common observation signal model

$$x(k) = s(k) + n(k),$$

where s(k) is a vector containing the information signal lying on an r-dimensional linear subspace of $\mathbb{R}^n$ with r < n, while n(k) is a zero-mean additive white noise (AWN) random vector, uncorrelated with s(k). Note that s(k) is often given by s(k) = A(k)r(k), where the full-rank n × r matrix A(k) is deterministically parameterized and r(k) is an r-dimensional zero-mean full random vector (i.e., with $E[r(k)r^T(k)]$ nonsingular). The signal part s(k) may also randomly select among r deterministic vectors. This random selection does not necessarily result in a zero-mean signal vector s(k).

In these assumptions, the covariance matrix Cs(k) of s(k) is r-rank deficient and

![]()

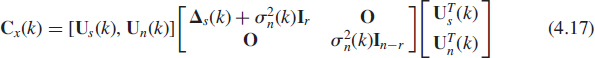

where ![]() denotes the AWN power. Taking into account that Cs(k) is of rank r and applying the EVD (4.1) on Cx(k) yields

denotes the AWN power. Taking into account that Cs(k) is of rank r and applying the EVD (4.1) on Cx(k) yields

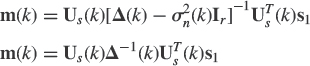

where the n × r and n × (n − r) matrices Us(k) and Un(k) are orthonormal bases of the so-called signal (or dominant) and noise (or minor) subspaces of Cx(k), and Δs(k) is an r × r diagonal matrix constituted by the r nonzero eigenvalues of Cs(k). We note that the column vectors of Us(k) are generally unique up to a sign, in contrast to the column vectors of Un(k): Un(k) is defined only up to a right multiplication by an (n − r) × (n − r) orthogonal matrix Q. However, the associated orthogonal projection matrices $\Pi_s(k) = U_s(k)U_s^T(k)$ and $\Pi_n(k) = U_n(k)U_n^T(k)$, respectively called signal (or dominant) and noise (or minor) projection matrices, which will be introduced in the next sections, are both unique.
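A synthetic instance of this observation model, assuming NumPy, a random full-rank A, $E[r(k)r^T(k)] = \operatorname{Diag}(2, 1)$ and $\sigma_n^2 = 0.1$ (all arbitrary choices, with time dependence dropped): the n − r smallest eigenvalues of Cx equal σn², the signal projection matrix coincides with the orthogonal projector onto range(A), and the noise projection matrix is unaffected by an arbitrary rotation of Un.

```python
import numpy as np

rng = np.random.default_rng(5)
n, r, sigma2 = 6, 2, 0.1
A = rng.standard_normal((n, r))                  # assumed full-rank n x r matrix A
Cr = np.diag([2.0, 1.0])                         # assumed E[r r^T] (nonsingular)
Cs = A @ Cr @ A.T                                # rank-r signal covariance
Cx = Cs + sigma2 * np.eye(n)                     # C_x = C_s + sigma_n^2 I_n

lam, U = np.linalg.eigh(Cx)
lam, U = lam[::-1], U[:, ::-1]                   # decreasing order
Us, Un = U[:, :r], U[:, r:]
Pi_s = Us @ Us.T                                 # signal projection matrix
Pi_n = Un @ Un.T                                 # noise projection matrix
Pi_A = A @ np.linalg.solve(A.T @ A, A.T)         # orthogonal projector onto range(A)

# Un is only defined up to an orthogonal factor, but Pi_n is not affected by it.
Q, _ = np.linalg.qr(rng.standard_normal((n - r, n - r)))
Pi_n_rotated = (Un @ Q) @ (Un @ Q).T
```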

4.3.2 Statement of the Problem

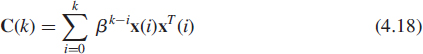

A very important problem in signal processing consists in continuously updating the estimates of Us(k), Un(k), Πs(k), or Πn(k), and sometimes of Δs(k) and $\sigma_n^2(k)$, assuming that consecutive observation vectors x(i), i = …, k − 1, k, … are available and that the signal or noise subspace is slowly time-varying compared to x(k). The dimension r of the signal subspace may be known a priori or estimated from the observation vectors. A straightforward way to solve these problems is to provide efficient adaptive estimates C(k) of Cx(k) and simply apply an EVD at each time step k. Candidates for this estimate C(k) are generally given by sliding windowed sample covariance matrices when the sequence of Cx(k) undergoes relatively slow changes. With an exponential window, the estimated covariance matrix is defined as

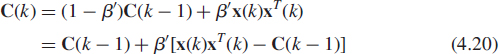

where 0 < β < 1 is the forgetting factor. Its use is intended to ensure that data in the distant past are downweighted, in order to provide tracking capability when operating in a nonstationary environment. C(k) can be recursively updated according to the following scheme

$$C(k) = \beta C(k-1) + x(k)x^T(k).$$

Note that

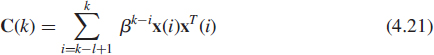

is also used. These estimates C(k) tend to smooth the variations of the signal parameters and so are only suitable for slowly changing signal parameters. For sudden signal parameter changes, the use of a truncated window may offer faster tracking. In this case, the estimated covariance matrix is derived from a window of length l

$$C(k) = \sum_{i=k-l+1}^{k} \beta^{k-i}x(i)x^T(i),$$

where 0 < β ≤ 1. The case β = 1 corresponds to a rectangular window. This matrix can be recursively updated according to the following scheme

$$C(k) = \beta C(k-1) + x(k)x^T(k) - \beta^{l}x(k-l)x^T(k-l).$$

Both versions require O(n²) operations, the first having smaller computational complexity and memory needs. Note that for β = 0, (4.22) gives the coarse estimate $x(k)x^T(k)$ of Cx(k), as used in the least mean square (LMS) algorithms for adaptive filtering (see e.g. [36]).
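The exponential-window and truncated-window recursions can be checked against their direct definitions. This NumPy sketch uses 0-based time indices and the arbitrary values β = 0.9 and l = 10; the truncated recursion used here, $C(k) = \beta C(k-1) + x(k)x^T(k) - \beta^l x(k-l)x^T(k-l)$, is the exponential recursion minus the contribution of the sample that leaves the window, and costs O(n²) per update.

```python
import numpy as np

rng = np.random.default_rng(6)
n, K, beta, l = 3, 50, 0.9, 10
X = rng.standard_normal((K, n))                  # rows are the snapshots x(0), ..., x(K-1)

C_exp = np.zeros((n, n))
C_trunc = np.zeros((n, n))
for k in range(K):
    xk = X[k]
    C_exp = beta * C_exp + np.outer(xk, xk)      # exponential window: O(n^2) per update
    C_trunc = beta * C_trunc + np.outer(xk, xk)  # truncated window, step 1: same update
    if k >= l:                                   # step 2: remove the sample leaving the window
        C_trunc -= beta**l * np.outer(X[k - l], X[k - l])

# Direct (non-recursive) definitions evaluated at the last time index k = K - 1
k = K - 1
C_exp_direct = sum(beta ** (k - i) * np.outer(X[i], X[i]) for i in range(k + 1))
C_trunc_direct = sum(beta ** (k - i) * np.outer(X[i], X[i]) for i in range(k - l + 1, k + 1))
```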

Applying an EVD to C(k) at each time k is of course the best possible way to estimate the eigenvectors or subspaces we are looking for. This approach is known as direct EVD and has a high complexity of O(n³) operations per update. This method usually serves as a point of reference when dealing with the different, less computationally demanding approaches described in the next sections. These computationally efficient algorithms compute the signal or noise eigenvectors (or the signal or noise projection matrices) at time instant k + 1 from the associated estimate at time k and the newly arriving sample vector x(k).

4.4 PRELIMINARY EXAMPLE: OJA'S NEURON

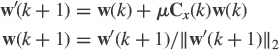

Let us introduce these adaptive procedures with a simple example: the following Oja's neuron, originated by Oja [50] and then applied by many others, estimates the eigenvector associated with the unique largest eigenvalue of the covariance matrix of the stationary vector x(k):

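$$w(k+1) = w(k) + \mu\left[x(k)x^T(k)w(k) - \left(w^T(k)x(k)x^T(k)w(k)\right)w(k)\right]. \qquad (4.23)$$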

The first term on the right side is the previous estimate of ±u1, which is kept as a memory of the iteration. The whole term in brackets is the new information. This term is scaled by the stepsize μ and then added to the previous estimate w(k) to obtain the current estimate w(k + 1). We note that this new information is formed by two terms. The first one, $x(k)x^T(k)w(k)$, contains the first step of the power method (4.9), and the second one is simply the previous estimate w(k) adjusted by the scalar $w^T(k)x(k)x^T(k)w(k)$ so that these two terms are on the same scale. Finally, we note that if the previous estimate w(k) is already the desired eigenvector ±u1, the expectation of this new information is zero, and hence w(k + 1) will be hovering around ±u1. The stepsize μ controls the balance between the past and the new information. This algorithm was introduced in the neural networks literature [50] within the framework of a new synaptic modification law; interestingly, it can also be derived from different heuristic variations of the numerical methods introduced in Section 4.2.
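A minimal simulation of Oja's neuron (4.23), assuming NumPy, zero-mean Gaussian data with covariance Diag(5, 2, 1, 0.5) (so that u1 = e1), and a constant stepsize μ = 0.002 chosen by hand. With a constant stepsize the estimate hovers around ±u1 rather than converging exactly; `oja_neuron` is an illustrative name.

```python
import numpy as np


def oja_neuron(X, w0, mu):
    """Oja's neuron (4.23): one pass over the rows x(k) of X with a constant stepsize mu."""
    w = w0 / np.linalg.norm(w0)                # unit-norm start keeps mu * y^2 small initially
    for x in X:
        y = w @ x                              # y(k) = w^T(k) x(k)
        w = w + mu * (y * x - (y * y) * w)     # x x^T w - (w^T x x^T w) w, scaled by mu
    return w


rng = np.random.default_rng(7)
lams = np.array([5.0, 2.0, 1.0, 0.5])          # eigenvalues of the assumed covariance Diag(lams)
K = 20000
X = rng.standard_normal((K, 4)) * np.sqrt(lams)  # zero-mean Gaussian snapshots, covariance Diag(lams)
w = oja_neuron(X, rng.standard_normal(4), mu=0.002)
u1 = np.array([1.0, 0.0, 0.0, 0.0])            # dominant eigenvector of Diag(lams)
print(abs(w @ u1), np.linalg.norm(w))          # both close to 1
```

Each update costs O(n) operations, which is why this rule sits in the low complexity class.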

First, consider the variational characterization recalled in Subsection 4.2.3. Because $\nabla_w(w^TC_xw) = 2C_xw$, the constrained maximization (4.3) or (4.7) can be solved using the following constrained gradient-search procedure (the factor 2 being absorbed into μ)

$$w(k+1) = \frac{w(k) + \mu C_xw(k)}{\|w(k) + \mu C_xw(k)\|},$$

in which the stepsize μ is sufficiently small. Since ‖w(k)‖ = 1, using the approximation μ² ≪ μ yields

$$w(k+1) = w(k) + \mu\left[C_xw(k) - \left(w^T(k)C_xw(k)\right)w(k)\right].$$

Then, using the instantaneous estimate $x(k)x^T(k)$ of Cx(k), Oja's neuron (4.23) is derived.

Consider now the power method recalled in Subsection 4.2.4. Noticing that Cx and In + μCx have the same eigenvectors, the step $w'_{i+1} = C_xw_i$ of (4.9) can be replaced by $w'_{i+1} = (I_n + \mu C_x)w_i$, and using the previous approximations yields Oja's neuron (4.23) anew.

Finally, consider the characterization of the eigenvector associated with the unique largest eigenvalue of a covariance matrix derived from the mean square error $E(\|x - ww^Tx\|^2)$ recalled in Subsection 4.2.5. Because

$$\nabla_w E\left(\|x - ww^Tx\|^2\right) = -2\left[2C_x - C_xww^T - ww^TC_x\right]w,$$

an unconstrained gradient-search procedure yields

$$w(k+1) = w(k) + \mu\left[2C_x - C_xw(k)w^T(k) - w(k)w^T(k)C_x\right]w(k).$$

Then, using the instantaneous estimate $x(k)x^T(k)$ of Cx(k) and the approximation $w^T(k)w(k) = 1$, justified by the convergence of the deterministic gradient-search procedure to ±u1 when μ → 0, Oja's neuron (4.23) is derived again.
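The closed-form gradient $-2[2C_x - C_xww^T - ww^TC_x]w$ of $E(\|x - ww^Tx\|^2) = \operatorname{Tr}(C_x) - 2w^TC_xw + (w^TC_xw)(w^Tw)$ can be checked by central finite differences. In this NumPy sketch, C is a random symmetric nonnegative definite matrix and the helper names are illustrative; the gradient also vanishes at any unit-norm eigenvector, in line with the stationary points described in Subsection 4.2.5.

```python
import numpy as np


def J(w, C):
    """E||x - w w^T x||^2 = Tr(C) - 2 w^T C w + (w^T C w)(w^T w) for E(x x^T) = C."""
    q = w @ C @ w
    return np.trace(C) - 2.0 * q + q * (w @ w)


def grad_J(w, C):
    """Closed-form gradient -2 [2C - C w w^T - w w^T C] w."""
    return -2.0 * (2.0 * C @ w - (C @ w) * (w @ w) - w * (w @ C @ w))


def numerical_grad(f, w, h=1e-6):
    """Central finite-difference gradient of a scalar function f at w."""
    g = np.zeros_like(w)
    for j in range(w.size):
        e = np.zeros_like(w)
        e[j] = h
        g[j] = (f(w + e) - f(w - e)) / (2.0 * h)
    return g


rng = np.random.default_rng(10)
A = rng.standard_normal((4, 4))
C = A @ A.T                                   # assumed covariance matrix
w = rng.standard_normal(4)
g_closed = grad_J(w, C)
g_numeric = numerical_grad(lambda v: J(v, C), w)

_, U = np.linalg.eigh(C)
u1 = U[:, -1]                                 # unit-norm dominant eigenvector
print(np.linalg.norm(grad_J(u1, C)))          # essentially zero
```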

Furthermore, if we are interested in adaptively estimating the associated single eigenvalue λ1, the minimization of the scalar function $J(\lambda) = (\lambda - u_1^TC_xu_1)^2$ by a gradient-search procedure can be used. With the instantaneous estimate $x(k)x^T(k)$ of Cx(k) and with the estimate w(k) of u1 given by (4.23), the following stochastic gradient algorithm is obtained:

$$\lambda(k+1) = \lambda(k) + \mu\left[w^T(k)x(k)x^T(k)w(k) - \lambda(k)\right]. \qquad (4.24)$$
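Here (4.23) and (4.24) are coupled in one loop, both driven by $w^T(k)x(k)$ computed with the current w(k). This sketch assumes NumPy and Gaussian data with covariance Diag(5, 2, 1, 0.5), and uses constant stepsizes for simplicity, whereas the convergence result stated for this pair of algorithms assumes a decreasing stepsize; the estimate of λ1 therefore fluctuates around its target. The function name and stepsize values are illustrative.

```python
import numpy as np


def oja_with_eigenvalue(X, w0, mu_w, mu_lam, lam0=0.0):
    """Coupled updates: Oja's neuron (4.23) for u_1 and the stochastic gradient (4.24) for lambda_1."""
    w = w0 / np.linalg.norm(w0)
    lam = lam0
    for x in X:
        y = w @ x                                  # w^T(k) x(k), computed with the current w(k)
        lam = lam + mu_lam * (y * y - lam)         # (4.24)
        w = w + mu_w * (y * x - (y * y) * w)       # (4.23)
    return w, lam


rng = np.random.default_rng(11)
lams = np.array([5.0, 2.0, 1.0, 0.5])              # assumed covariance Diag(lams)
X = rng.standard_normal((20000, 4)) * np.sqrt(lams)
w, lam1_hat = oja_with_eigenvalue(X, rng.standard_normal(4), mu_w=0.002, mu_lam=0.001)
print(lam1_hat)                                    # fluctuates around lambda_1 = 5
```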

We note that the previous two heuristic derivations could be extended to the adaptive estimation of the eigenvector associated with the unique smallest eigenvalue of Cx(k). Solving the constrained minimization (4.3) or (4.7) by a constrained gradient-search procedure, or using the power method (4.9) where the step $w'_{i+1} = C_xw_i$ is replaced by $w'_{i+1} = (I_n - \mu C_x)w_i$ (where 0 < μ < 1/λ1), yields (4.23) after the same derivation, but with the sign of the stepsize μ reversed:

$$w(k+1) = w(k) - \mu\left[x(k)x^T(k)w(k) - \left(w^T(k)x(k)x^T(k)w(k)\right)w(k)\right]. \qquad (4.25)$$

The associated eigenvalue λn could also be derived from the minimization of $J(\lambda) = (\lambda - u_n^TC_xu_n)^2$ and consequently obtained by (4.24) as well, where w(k) is given by (4.25).

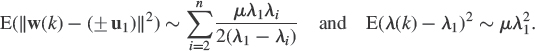

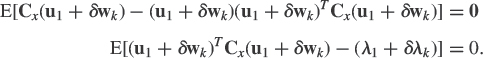

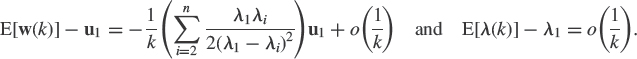

These heuristic approaches are derived from iterative computational techniques issued from the numerical methods recalled in Section 4.2, and need to be validated by convergence and performance analysis for stationary data x(k). These issues will be considered in Section 4.7. In particular, it will be proved that the coupled stochastic approximation algorithms (4.23) and (4.24), in which the stepsize μ is decreasing, converge to the pair (±u1, λ1), in contrast to the stochastic approximation algorithm (4.25), which diverges. Then, due to the possible accumulation of rounding errors, the algorithms that converge theoretically must be tested through numerical experiments to check their numerical stability in stationary environments. Finally, extensive Monte Carlo simulations must be carried out with various stepsizes, initialization conditions, SNRs, and parameter configurations in nonstationary environments.

4.5 SUBSPACE TRACKING

In this section, we consider the adaptive estimation of dominant (signal) and minor (noise) subspaces. To derive such algorithms from the linear algebra material recalled in Subsections 4.2.3, 4.2.4, and 4.2.5, as was done for Oja's neuron, we first note that the general orthogonal iterative step (4.12), $W_{i+1} = \operatorname{Orthonorm}\{CW_i\}$, allows for the following variant for adaptive implementation

$$W_{i+1} = \mathrm{Orthonorm}\{(I_n + \mu C)W_i\}$$

where μ > 0 is a small parameter known as the stepsize. This variant converges to the same dominant subspace as (4.12) because $I_n + \mu C$ has the same eigenvectors as C, with associated eigenvalues $(1 + \mu\lambda_i)_{i=1,\dots,n}$. Likewise, $I_n - \mu C$ has the same eigenvectors as C, with associated eigenvalues $(1 - \mu\lambda_i)_{i=1,\dots,n}$. For μ sufficiently small ($\mu < 1/\lambda_1$), these are positive and arranged in exactly the opposite order to $(\lambda_i)_{i=1,\dots,n}$. Consequently, the general orthogonal iterative step (4.12) allows for the following second variant, which converges to the r-dimensional minor subspace of C if $\lambda_{n-r} > \lambda_{n-r+1}$:

$$W_{i+1} = \mathrm{Orthonorm}\{(I_n - \mu C)W_i\}$$

When the matrix C is unknown and we instead observe the data sequence x(k) sequentially, we can replace C by an adaptive estimate C(k) (see Section 4.3.2). This leads to the adaptive orthogonal iteration algorithm

$$W(k+1) = \mathrm{Orthonorm}\{W(k) \pm \mu_k\, C(k)W(k)\} \tag{4.26}$$

where the + sign generates estimates for the signal subspace (if λr > λr+1) and the − sign for the noise subspace (if λn−r > λn−r+1). Depending on the choice of the estimate C(k) and of the orthonormalization (or approximate orthonormalization), we can obtain alternative subspace tracking algorithms.
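When C is known, the two deterministic variants $W_{i+1} = \mathrm{Orthonorm}\{(I_n \pm \mu C)W_i\}$ introduced above can be checked directly. The sketch below is a minimal NumPy illustration under two assumptions: a hypothetical covariance with a known spectrum, and NumPy's QR factorization standing in for the Orthonorm{·} operator. The `sign` argument selects $I_n + \mu C$ (dominant subspace) or $I_n - \mu C$ (minor subspace).

```python
import numpy as np

def orthogonal_iteration(C, r, mu, sign, n_iter=300, seed=1):
    """W_{i+1} = Orthonorm{(I_n + sign*mu*C) W_i}.

    sign=+1 targets the r-dimensional dominant subspace (lambda_r > lambda_{r+1});
    sign=-1 targets the minor subspace (lambda_{n-r} > lambda_{n-r+1}, mu < 1/lambda_1).
    """
    n = C.shape[0]
    W, _ = np.linalg.qr(np.random.default_rng(seed).standard_normal((n, r)))
    M = np.eye(n) + sign * mu * C
    for _ in range(n_iter):
        W, _ = np.linalg.qr(M @ W)   # QR plays the role of Orthonorm{.}
    return W

def projector_distance(W, U_r):
    """Frobenius distance between the orthogonal projectors W W^T and U_r U_r^T."""
    return np.linalg.norm(W @ W.T - U_r @ U_r.T)

rng = np.random.default_rng(0)
eigvals = np.array([5.0, 4.0, 2.0, 1.5, 0.5, 0.25])   # hypothetical spectrum
U, _ = np.linalg.qr(rng.standard_normal((6, 6)))
C = U @ np.diag(eigvals) @ U.T

r, mu = 2, 0.15                                       # mu < 1/lambda_1 = 0.2
err_dom = projector_distance(orthogonal_iteration(C, r, mu, +1), U[:, :r])
err_min = projector_distance(orthogonal_iteration(C, r, mu, -1), U[:, -r:])
print(f"dominant: {err_dom:.2e}, minor: {err_min:.2e}")
```

The sign flips produced by QR do not matter here, because only the projector onto the estimated subspace is compared.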

We note that the maximization or minimization in (4.7) of $\mathrm{Tr}(W^TCW)$ subject to the constraint $W^TW = I_r$ can be solved by a constrained gradient technique. Because $\nabla_W \mathrm{Tr}(W^TCW) = 2CW$, we obtain the following Rayleigh quotient-based algorithm

$$W(k+1) = \mathrm{Orthonorm}\{W(k) \pm \mu_k\, C(k)W(k)\} \tag{4.27}$$

whose general expression is the same as that of (4.26), derived from the orthogonal iteration approach (the factor 2 of the gradient being absorbed into the stepsize). We will refer to this family of algorithms as power-based methods. Interestingly, a simple sign change switches the algorithm from the dominant to the minor subspace. Unfortunately, as with Oja's neuron, many minor subspace algorithms are either unstable or stable but nonrobust, in contrast to the associated dominant subspace algorithms. Nonrobust here means numerically unstable, with a tendency to accumulate round-off errors until the estimates become meaningless. Consequently, the literature on minor subspace tracking is very limited compared with the wide variety of methods that exist for tracking dominant subspaces.

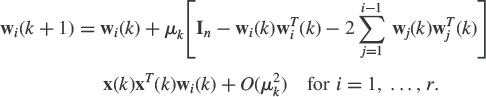

4.5.1 Subspace Power-based Methods

Clearly the simplest selection for C(k) is the instantaneous estimate x(k)xT(k), which gives rise to the Data Projection Method (DPM) first introduced in [70] where the orthonormalization is performed using the Gram–Schmidt procedure.

$$W(k+1) = \mathrm{GS\ Orthonorm}\{W(k) \pm \mu_k\, x(k)x^T(k)W(k)\} \tag{4.28}$$

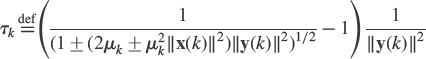

In nonstationary situations, the estimates (4.19) or (4.20) of the covariance Cx(k) of x(k) at time k have been tested in [70]. For this algorithm to converge, the stepsize μ must be selected such that μ « 1/λ1 (see e.g. [29]). To satisfy this requirement, including in nonstationary situations, and because Tr[Cx(k)] » λ1(k) most of the time, the following two normalized stepsizes have been proposed in [70]

![]()

where μ may be close to unity and the choice of ν ∈ (0, 1) depends on how rapidly the parameters of the observation signal model (4.15) change. Note that better numerical stability can be achieved [5] if μk is chosen, similarly to the normalized LMS algorithm [36], as $\mu_k = \mu/(\alpha + \|x(k)\|^2)$, where α is a very small positive constant. Algorithm (4.28) has a relatively high computational complexity, O(nr²) operations per update, because of the Gram–Schmidt orthonormalization step.
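A minimal NumPy sketch of the DPM recursion (4.28), using the NLMS-style stepsize $\mu_k = \mu/(\alpha + \|x(k)\|^2)$ given above, follows. It rests on these assumptions:

* The data are synthetic zero-mean Gaussian samples with a hypothetical spectrum.
* The parameters are α = 1e-6 and μ = 0.05.
* NumPy's QR factorization replaces an explicit Gram–Schmidt loop. Both give the same orthonormal basis up to column signs.

The rank-one update costs O(nr); the QR step is the O(nr²) part.

```python
import numpy as np

def make_data(eigvals, n_samples, seed=0):
    """Zero-mean Gaussian samples x(k) (rows of X) with covariance U diag(eigvals) U^T."""
    rng = np.random.default_rng(seed)
    n = len(eigvals)
    U, _ = np.linalg.qr(rng.standard_normal((n, n)))
    X = rng.standard_normal((n_samples, n)) * np.sqrt(eigvals) @ U.T
    return U, X

def subspace_error(W, U_r):
    """Frobenius distance between the projectors onto span(W) and span(U_r)."""
    Q, _ = np.linalg.qr(W)
    return np.linalg.norm(Q @ Q.T - U_r @ U_r.T)

def dpm(X, r, mu=0.05, alpha=1e-6, sign=+1, seed=1):
    """DPM sketch: W <- Orthonorm{W + sign*mu_k x x^T W}, mu_k = mu/(alpha + ||x||^2)."""
    n = X.shape[1]
    W, _ = np.linalg.qr(np.random.default_rng(seed).standard_normal((n, r)))
    for x in X:
        mu_k = mu / (alpha + x @ x)                      # normalized stepsize
        W_prime = W + sign * mu_k * np.outer(x, x @ W)   # rank-one update, O(nr)
        W, _ = np.linalg.qr(W_prime)                     # orthonormalization, O(nr^2)
    return W

eigvals = np.array([10.0, 8.0, 1.0, 1.0, 0.2, 0.1])      # hypothetical spectrum
U, X = make_data(eigvals, 6000)
r = 2
err_dom = subspace_error(dpm(X, r, sign=+1), U[:, :r])    # signal subspace
err_min = subspace_error(dpm(X, r, sign=-1), U[:, -r:])   # noise subspace
print(f"dominant: {err_dom:.3f}, minor: {err_min:.3f}")
```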

To reduce this computational complexity, many algorithms have been proposed. Going back to the DPM algorithm (4.28), we observe that we can write

$$W'(k+1) = W(k) \pm \mu_k\, x(k)x^T(k)W(k), \qquad W(k+1) = W'(k+1)\,G(k+1) \tag{4.29}$$

where the matrix G(k + 1) performs an exact or approximate orthonormalization while preserving the space spanned by the columns of W′(k + 1). The different choices of G(k + 1) pave the way for alternative, less computationally demanding algorithms. Depending on whether this orthonormalization is exact or approximate, two families of algorithms have been proposed in the literature.

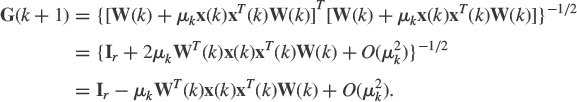

The Approximate Symmetric Orthonormalization Family The columns of W′(k + 1) can be approximately orthonormalized in a symmetrical way. Since W(k) has orthonormal columns, for sufficiently small μk the columns of W′(k + 1) will be linearly independent, although not orthonormal. Then $W'^T(k+1)W'(k+1)$ is positive definite, and W(k + 1) will have orthonormal columns if $G(k+1) = [W'^T(k+1)W'(k+1)]^{-1/2}$. This square root inverse is unique if G(k + 1) is constrained to be symmetric positive definite. A stochastic algorithm called Subspace Network Learning (SNL), later known as Oja's algorithm, was derived in [53] to estimate the dominant subspace. Assuming μk is sufficiently small, G(k + 1) can be expanded in μk as follows (with the + sign, as for dominant subspace estimation):

$$G(k+1) = I_r - \mu_k\, W^T(k)x(k)x^T(k)W(k) + O(\mu_k^2)$$

Omitting second-order terms, the resulting algorithm reads5

$$W(k+1) = W(k) + \mu_k\,[I_n - W(k)W^T(k)]\,x(k)x^T(k)\,W(k) \tag{4.30}$$

The convergence of this algorithm was first studied in [78] and then in [69]. These works showed that the solution W(t) of its associated ODE (see Subsection 4.7.1) need not tend to the eigenvectors {v1, …, vr}, but only to a rotated basis W* of the subspace spanned by them. More precisely, it has been proved in [16] that such a rotated basis exists under the following assumption: W(0) has full column rank and its projection onto the signal subspace of Cx is linearly independent. Then there exists a rotated basis W* of this signal subspace such that $\lim_{t\to\infty} W(t) = W_*$. A performance analysis has been given in [24, 25]. This algorithm will serve as an example of convergence and performance analysis in Subsection 4.7.3. Note that replacing x(k)xT(k) by βIn ± x(k)xT(k) (with β > 0) in (4.30) leads to a modified Oja's algorithm [15]. This algorithm retains its ability to track the signal subspace with the + sign and can track the noise subspace with the − sign (if β > λ1). Of course, these modified Oja's algorithms enjoy the same convergence properties as Oja's algorithm (4.30).
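The following minimal NumPy sketch runs Oja's algorithm (4.30) for the signal subspace. It also runs the modified version in which x(k)xT(k) is replaced by βIn − x(k)xT(k) to track the noise subspace. The following settings are assumptions, not taken from the text:

* synthetic Gaussian data with a hypothetical spectrum;
* a constant stepsize μ = 0.002;
* β = 12 > λ1 = 10.

No orthonormalization step is performed, so W(k) has only approximately orthonormal columns.

```python
import numpy as np

def make_data(eigvals, n_samples, seed=0):
    """Zero-mean Gaussian samples x(k) (rows of X) with covariance U diag(eigvals) U^T."""
    rng = np.random.default_rng(seed)
    n = len(eigvals)
    U, _ = np.linalg.qr(rng.standard_normal((n, n)))
    X = rng.standard_normal((n_samples, n)) * np.sqrt(eigvals) @ U.T
    return U, X

def subspace_error(W, U_r):
    """Frobenius distance between the projectors onto span(W) and span(U_r)."""
    Q, _ = np.linalg.qr(W)
    return np.linalg.norm(Q @ Q.T - U_r @ U_r.T)

def oja(X, r, mu=0.002, beta=None, seed=1):
    """Oja's algorithm (4.30); with beta, x x^T is replaced by beta*I_n - x x^T."""
    n = X.shape[1]
    W, _ = np.linalg.qr(np.random.default_rng(seed).standard_normal((n, r)))
    for x in X:
        y = x @ W                                # row vector x^T W
        if beta is None:
            AW = np.outer(x, y)                  # x x^T W
        else:
            AW = beta * W - np.outer(x, y)       # (beta I_n - x x^T) W
        W = W + mu * (AW - W @ (W.T @ AW))       # W + mu [I_n - W W^T] A W
    return W

eigvals = np.array([10.0, 8.0, 1.0, 1.0, 0.2, 0.1])   # hypothetical spectrum
U, X = make_data(eigvals, 8000)
r = 2
W_dom = oja(X, r)                  # signal subspace
W_min = oja(X, r, beta=12.0)       # noise subspace, beta > lambda_1
err_dom = subspace_error(W_dom, U[:, :r])
err_min = subspace_error(W_min, U[:, -r:])
orth_dom = np.linalg.norm(W_dom.T @ W_dom - np.eye(r))
print(f"dominant: {err_dom:.3f}, minor: {err_min:.3f}, ||W^T W - I||: {orth_dom:.2e}")
```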

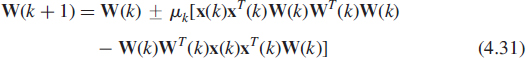

Many other modifications of Oja's algorithm have appeared in the literature, particularly to adapt it to noise subspace tracking. To obtain such algorithms, it is interesting to point out that, in general, it is not possible to obtain noise subspace tracking algorithms by simply changing the sign of the stepsize of a signal subspace tracking algorithm. For example, changing the sign in (4.30) or (4.85) leads to an unstable algorithm (divergence) as will be explained in Subsection 4.7.3 for r = 1. Among these modified Oja's algorithms, Chen et al. [16] have proposed the following unified algorithm

where the signs + and − are respectively associated with signal and noise tracking algorithms. While the associated ODE maintains WT(t)W(t) = Ir if WT(0)W(0) = Ir and enjoys [16] the same stability properties as Oja's algorithm, the stochastic approximation to algorithm (4.31) suffers from numerical instabilities (see e.g. the numerical simulations in [28]). Thus, its practical use requires periodic column reorthonormalization. To avoid these numerical instabilities, this algorithm has been modified [17] by adding the penalty term W(k)[In − W(k)WT(k)] to the field of (4.31). As far as noise subspace tracking is concerned, Douglas et al. [28] have proposed modifying the algorithm (4.31) by multiplying the first term of its field by WT(k)W(k) whose associated term in the ODE tends to Ir, viz

It is proved in [28] that the locally asymptotically stable points W of the ODE associated with this algorithm satisfy WTW = Ir and Span(W) = Span(Un). But the solution W(t) of the associated ODE does not converge to a particular basis W* of the noise subspace but rather, it is proved that Span[W(t)] tends to Span(Un) (in the sense that the projection matrix associated with the subspace Span[W(t)] tends to Πn). Numerical simulations presented in [28] show that this algorithm is numerically more stable than the minor subspace version of algorithm (4.31).

A noise tracking algorithm can be derived from Oja's algorithm (4.30) by changing the sign of the stepsize, but it is unstable. To eliminate this instability, Abed Meraim et al. [2] have proposed forcing the estimate W(k) to be orthonormal at each time step k (see Exercise 4.10). The resulting algorithm can also be used for signal subspace tracking by reversing the sign of the stepsize. However, it converges at the same rate as Oja's algorithm (4.30). To accelerate its convergence, two normalized versions of this algorithm have been proposed in [4]: the Normalized Oja's algorithm (NOja) and the Normalized Orthogonal Oja's algorithm (NOOja). They can perform both signal and noise subspace tracking by switching the sign of the stepsize, for which an approximate closed-form expression has been derived. A convergence analysis of the NOja algorithm has been presented in [7] using the ODE approach. The ODE associated with the field of this stochastic approximation algorithm is the same as that associated with the projection approximation-based algorithm (4.43). Consequently, NOja enjoys the same convergence properties.

The Exact Orthonormalization Family The orthonormalization (4.29) of the columns of W′(k + 1) can be performed exactly at each iteration by the symmetric square root inverse of $W'^T(k+1)W'(k+1)$, since the latter is a rank-one modification of the identity matrix

![]()

with ![]() and ![](). Using the identity

![]()

we obtain

![]()

with  . Substituting (4.35) into (4.29) leads to

![]()

where ![](). All these steps lead to the Fast Rayleigh quotient-based Adaptive Noise Subspace algorithm (FRANS) introduced by Attallah et al. in [5]. As stated in [5], this algorithm is stable and robust for signal subspace tracking (associated with the + sign), including when it is initialized with a nonorthonormal matrix W(0). By contrast, for noise subspace tracking (associated with the − sign), it is numerically unstable because of round-off error accumulation. Even when initialized with an orthonormal matrix, it requires periodic reorthonormalization of W(k) to maintain the orthonormality of its columns. To remedy this instability, another implementation based on the numerically well-behaved Householder transform has been proposed [6]. This Householder FRANS algorithm (HFRANS) comes from (4.36), which can be rewritten after cumbersome manipulations as

![]()

with ![](). Without any increase in computational complexity, this Householder transform stabilizes the noise subspace version of the FRANS algorithm.6 The interested reader may refer to [75], which analyzes the orthonormal error propagation in the FRANS and HFRANS algorithms, i.e., a recursion of the distance to orthonormality $\|W^T(k)W(k) - I_r\|^2$ starting from a nonorthogonal matrix W(0).
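The rank-one structure exploited by FRANS rests on an elementary fact. Suppose W(k) has orthonormal columns, $y = W^T(k)x(k)$ and $W'(k+1) = W(k) \pm \mu\, x(k)y^T$. Then $W'^T(k+1)W'(k+1) = I_r + c\,yy^T$ with $c = \pm 2\mu + \mu^2\|x(k)\|^2$. Its inverse square root has the closed form $(I_r + c\,yy^T)^{-1/2} = I_r + \big((1 + c\|y\|^2)^{-1/2} - 1\big)\,yy^T/\|y\|^2$. The NumPy sketch below checks this closed form against an eigendecomposition and applies it in O(nr) operations. Under these assumptions it illustrates exact symmetric orthonormalization. The function names are hypothetical, and the sketch is not the exact FRANS recursion (4.36).

```python
import numpy as np

def inv_sqrt_rank_one(c, y):
    """Closed form of (I_r + c y y^T)^{-1/2}, valid when 1 + c ||y||^2 > 0."""
    s = y @ y
    t = 1.0 / np.sqrt(1.0 + c * s) - 1.0
    return np.eye(len(y)) + t * np.outer(y, y) / s

def rank_one_orthonormal_update(W, x, mu, sign=+1):
    """W' = W + sign*mu*x y^T (y = W^T x), followed by W' (W'^T W')^{-1/2}.

    Assumes W has orthonormal columns, so that W'^T W' = I_r + c y y^T with
    c = 2*sign*mu + mu^2 ||x||^2; the product W' G is formed in O(nr) operations.
    """
    y = W.T @ x
    W_prime = W + sign * mu * np.outer(x, y)
    c = 2.0 * sign * mu + mu**2 * (x @ x)
    t = 1.0 / np.sqrt(1.0 + c * (y @ y)) - 1.0
    return W_prime + (t / (y @ y)) * np.outer(W_prime @ y, y)

rng = np.random.default_rng(0)
n, r, mu = 8, 3, 0.1
W, _ = np.linalg.qr(rng.standard_normal((n, r)))
x = rng.standard_normal(n)

# Compare the closed form with an eigendecomposition-based inverse square root.
y = W.T @ x
c = 2.0 * mu + mu**2 * (x @ x)
evals, evecs = np.linalg.eigh(np.eye(r) + c * np.outer(y, y))
G_ref = evecs @ np.diag(evals ** -0.5) @ evecs.T
g_err = np.max(np.abs(G_ref - inv_sqrt_rank_one(c, y)))

# One update keeps the columns exactly orthonormal, for both signs.
orth_err = {}
for s in (+1, -1):
    W_next = rank_one_orthonormal_update(W, x, mu, s)
    orth_err[s] = np.linalg.norm(W_next.T @ W_next - np.eye(r))
print(f"closed-form error: {g_err:.1e}, orthonormality errors: {orth_err}")
```

For the − sign, $1 + c\|y\|^2 = (1 - \mu\|y\|^2)^2 + \mu^2\|y\|^2(\|x\|^2 - \|y\|^2) \ge 0$, so the square root is well defined.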

Another solution to orthonormalize the columns of W′(k + 1) has been proposed in [29, 30]. It consists of two steps. The first step orthogonalizes these columns using a matrix G(k + 1) to give W″(k + 1) = W′(k + 1)G(k + 1). The second step normalizes the columns of W″(k + 1). Such a matrix G(k + 1) is of course not unique. To find one, take G(k + 1) to be an orthogonal matrix whose first column is the vector ![](), with the remaining r − 1 columns completing an orthonormal basis. Then, using (4.33), the product $W''^T(k+1)W''(k+1) = G^T(k+1)W'^T(k+1)W'(k+1)G(k+1)$ becomes the following diagonal matrix

where ![]() and ![](). Fortunately, there exists such an orthogonal matrix G(k + 1) with the desired properties, known as a Householder reflector [35, Chap. 5]. It can be generated very easily since it is of the form

![]()

This gives the Fast Data Projection Method (FDPM)

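$$\begin{aligned}
W'(k+1) &= W(k) \pm \mu_k\, x(k)x^T(k)W(k)\\
W''(k+1) &= W'(k+1)\,G(k+1)\\
W(k+1) &= \mathrm{Normalize}\{W''(k+1)\}
\end{aligned}$$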

where Normalize{W″(k + 1)} stands for the normalization of the columns of W″(k + 1), and G(k + 1) is the Householder transform given by (4.37). Using the independence assumption [36, Chap. 9.4] and the approximation μk « 1, a simplified theoretical analysis has been presented in [31] for both signal and noise subspace tracking. It shows that the FDPM algorithm is locally stable and that the distance to orthonormality $\mathrm{E}(\|W^T(k)W(k) - I_r\|^2)$ tends to zero as $O(e^{-ck})$, where c > 0 does not depend on μ. Furthermore, numerical simulations presented in [29–31] with ![]() demonstrate that this algorithm is numerically stable for both signal and noise subspace tracking. They also show that the algorithm converges extremely fast to an orthonormal matrix if, for some reason, orthonormality is lost or the algorithm is initialized with a matrix that is not orthonormal. To the best of our knowledge, FDPM is the only power-based minor subspace tracking method of complexity O(nr) that is truly numerically stable, since it does not accumulate rounding errors.
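A minimal NumPy sketch of FDPM follows. In this sketch the Householder reflector is built from $y = W^T(k)x(k)$ so that its first column is $y/\|y\|$. This choice diagonalizes $W'^TW' = I_r + c\,yy^T$, as described above. It rests on these assumptions:

* The stepsize is the normalized $\mu_k = \mu/\|x(k)\|^2$ with μ = 0.05. The stepsize used in [29–31] is not reproduced here.
* The data are synthetic Gaussian samples with a hypothetical spectrum.
* W is initialized with orthonormal columns.

```python
import numpy as np

def make_data(eigvals, n_samples, seed=0):
    """Zero-mean Gaussian samples x(k) (rows of X) with covariance U diag(eigvals) U^T."""
    rng = np.random.default_rng(seed)
    n = len(eigvals)
    U, _ = np.linalg.qr(rng.standard_normal((n, n)))
    X = rng.standard_normal((n_samples, n)) * np.sqrt(eigvals) @ U.T
    return U, X

def subspace_error(W, U_r):
    """Frobenius distance between the projectors onto span(W) and span(U_r)."""
    Q, _ = np.linalg.qr(W)
    return np.linalg.norm(Q @ Q.T - U_r @ U_r.T)

def fdpm(X, r, mu=0.05, sign=+1, seed=1):
    """FDPM sketch: rank-one update, Householder orthogonalization, column normalization."""
    n = X.shape[1]
    W, _ = np.linalg.qr(np.random.default_rng(seed).standard_normal((n, r)))
    e1 = np.zeros(r)
    e1[0] = 1.0
    for x in X:
        mu_k = mu / (x @ x)                          # normalized stepsize (assumption)
        y = W.T @ x                                  # y = W^T x
        W_p = W + sign * mu_k * np.outer(x, y)       # W'(k+1)
        a = y - np.linalg.norm(y) * e1               # Householder vector: H y = ||y|| e1
        aa = a @ a
        if aa > 1e-30:                               # H = I if y is already along e1
            W_p = W_p - (2.0 / aa) * np.outer(W_p @ a, a)   # W''(k+1) = W'(k+1) H
        W = W_p / np.linalg.norm(W_p, axis=0)        # Normalize{W''(k+1)}
    return W

eigvals = np.array([10.0, 8.0, 1.0, 1.0, 0.2, 0.1])  # hypothetical spectrum
U, X = make_data(eigvals, 6000)
r = 2
W_dom = fdpm(X, r, sign=+1)
W_min = fdpm(X, r, sign=-1)
err_dom = subspace_error(W_dom, U[:, :r])
err_min = subspace_error(W_min, U[:, -r:])
orth_dom = np.linalg.norm(W_dom.T @ W_dom - np.eye(r))
orth_min = np.linalg.norm(W_min.T @ W_min - np.eye(r))
print(f"errors: {err_dom:.3f}, {err_min:.3f}; orthonormality: {orth_dom:.1e}, {orth_min:.1e}")
```

Because $Hy = \|y\|e_1$ and H is an involution, the first column of H is $y/\|y\|$. The reflector is therefore applied to W′ as a single rank-one correction in O(nr) operations.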

Power-based Methods Issued from Exponential or Sliding Windows Of course, all of the above algorithms that do not use the rank one property of the instantaneous estimate x(k)xT(k) of Cx(k) can be extended to the exponential (4.19) or sliding windowed (4.22) estimates C(k), but with an important increase in complexity. To keep the O(nr) complexity, the orthogonal iteration method (4.12) must be adapted to the following iterations

where the matrix G(k + 1) is a square root inverse of $W'^T(k+1)W'(k+1)$ responsible for performing the orthonormalization of W′(k + 1). The choice of G(k + 1) paves the way for different adaptive algorithms.
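For reference, the exponentially windowed orthogonal iteration can be run literally, at O(n²r) cost per update. The following NumPy sketch assumes the form W′(k + 1) = C(k + 1)W(k), with C(k + 1) from the exponential window (4.19), and W(k + 1) = W′(k + 1)G(k + 1) with the symmetric choice $G(k+1) = [W'^T(k+1)W'(k+1)]^{-1/2}$. The initialization C(0) = In, β = 0.99 and the function name are hypothetical. The sketch only illustrates what the O(nr) schemes (NP3, FAPI) approximate; it is neither of those algorithms.

```python
import numpy as np

def make_data(eigvals, n_samples, seed=0):
    """Zero-mean Gaussian samples x(k) (rows of X) with covariance U diag(eigvals) U^T."""
    rng = np.random.default_rng(seed)
    n = len(eigvals)
    U, _ = np.linalg.qr(rng.standard_normal((n, n)))
    X = rng.standard_normal((n_samples, n)) * np.sqrt(eigvals) @ U.T
    return U, X

def subspace_error(W, U_r):
    """Frobenius distance between the projectors onto span(W) and span(U_r)."""
    Q, _ = np.linalg.qr(W)
    return np.linalg.norm(Q @ Q.T - U_r @ U_r.T)

def exp_window_orthogonal_iteration(X, r, beta=0.99, seed=1):
    """Naive O(n^2 r) sketch: C <- beta C + x x^T, W' = C W, W = W' (W'^T W')^{-1/2}."""
    n = X.shape[1]
    C = np.eye(n)                                   # assumed initialization C(0) = I_n
    W, _ = np.linalg.qr(np.random.default_rng(seed).standard_normal((n, r)))
    for x in X:
        C = beta * C + np.outer(x, x)               # exponential window, as in (4.19)
        W_prime = C @ W                             # O(n^2 r) matrix product
        evals, evecs = np.linalg.eigh(W_prime.T @ W_prime)
        G = evecs @ np.diag(evals ** -0.5) @ evecs.T   # symmetric square root inverse
        W = W_prime @ G                             # orthonormal, same span as W'
    return W

eigvals = np.array([10.0, 8.0, 1.0, 1.0, 0.2, 0.1])  # hypothetical spectrum
U, X = make_data(eigvals, 2000)
r = 2
W = exp_window_orthogonal_iteration(X, r)
err_dom = subspace_error(W, U[:, :r])
orth_err = np.linalg.norm(W.T @ W - np.eye(r))
print(f"dominant: {err_dom:.3f}, ||W^T W - I||: {orth_err:.1e}")
```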

Based on the approximation

![]()

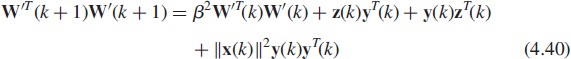

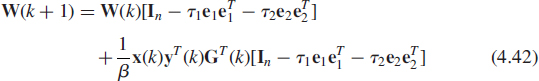

which is clearly valid if W(k) varies slowly with k, an adaptation of the power method called natural power method 3 (NP3) has been proposed in [38] for the exponentially windowed estimate (4.19), C(k) = βC(k − 1) + x(k)xT(k). Using (4.19) and (4.39), we obtain

![]()

with ![](). It then follows that

with ![](), which implies (see Exercise 4.9) the following recursions

![]()

where τ1, τ2 and e1, e2 are defined in Exercise 4.9.

Note that the square root inverse matrix G(k + 1) of $W'^T(k+1)W'(k+1)$ is asymmetric even if G(0) is symmetric. Expressions (4.41) and (4.42) provide an algorithm that involves no matrix–matrix multiplications and in fact requires only O(nr) operations.

Based on the approximation that W(k) and W(k + 1) span the same r-dimensional subspace, another power-based algorithm referred to as the approximated power iteration (API) algorithm and its fast implementation (FAPI) have been proposed in [8]. Compared to the NP3 algorithm, this scheme has the advantage that it can handle the exponential (4.19) or the sliding windowed (4.22) estimates of Cx(k) in the same framework (and with the same complexity of O(nr) operations) by writing (4.19) and (4.22) in the form

$$C(k) = \beta\, C(k-1) + x'(k)\, J\, x'^T(k)$$

with J = 1 and x′(k) = x(k) for the exponential window, and ![]() and x′(k) = [x(k), x(k − l)] for the sliding window [see (4.22)]. Among the power-based subspace tracking methods issued from the exponential or sliding window, the FAPI algorithm has been considered by many practitioners (e.g. [11]) to outperform the other algorithms of the same computational complexity.

4.5.2 Projection Approximation-based Methods

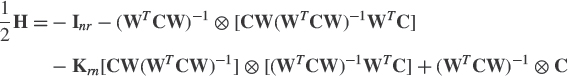

Since (4.14) describes an unconstrained cost function to be minimized, it is straightforward to apply the gradient-descent technique for dominant subspace tracking. Using expression (4.90) of the gradient given in Exercise 4.7 with the estimate x(k)xT(k) of Cx(k) gives

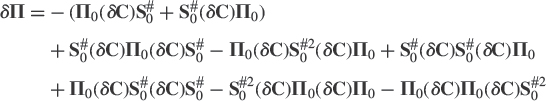

We note that this algorithm can be linked to Oja's algorithm (4.30). First, the term between brackets is the symmetrization of the term −x(k)xT(k) + W(k)WT(k)x(k)xT(k) of Oja's algorithm (4.30). Second, when WT(k)W(k) is approximated by Ir (which is justified by the stability property below), algorithm (4.43) reduces to Oja's algorithm (4.30). Moreover, the field of the stochastic approximation algorithm (4.43) is proportional to the negative of the gradient of the positive function (4.14). Consequently, the orthonormal bases of the dominant subspace are globally asymptotically stable for its associated ODE (see Subsection 4.7.1). For Oja's algorithm (4.30), by contrast, they are only locally asymptotically stable. A complete performance analysis of the stochastic approximation algorithm (4.43) has been presented in [24]. It gives and discusses closed-form expressions of the asymptotic covariance of the estimated projection matrix W(k)WT(k) for independent Gaussian data x(k) and a constant stepsize μ.
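A minimal NumPy sketch of stochastic gradient descent on (4.14) with the instantaneous estimate x(k)xT(k) follows. For symmetric C, the gradient of (4.14) is $-2[2C - CWW^T - WW^TC]W$. The update below is derived directly from this expression, with the factor 2 absorbed into the stepsize. Up to how constants are absorbed into μ, it should correspond to (4.43). The stepsize μ = 0.002 and the data spectrum are assumptions.

```python
import numpy as np

def make_data(eigvals, n_samples, seed=0):
    """Zero-mean Gaussian samples x(k) (rows of X) with covariance U diag(eigvals) U^T."""
    rng = np.random.default_rng(seed)
    n = len(eigvals)
    U, _ = np.linalg.qr(rng.standard_normal((n, n)))
    X = rng.standard_normal((n_samples, n)) * np.sqrt(eigvals) @ U.T
    return U, X

def subspace_error(W, U_r):
    """Frobenius distance between the projectors onto span(W) and span(U_r)."""
    Q, _ = np.linalg.qr(W)
    return np.linalg.norm(Q @ Q.T - U_r @ U_r.T)

def sgd_subspace(X, r, mu=0.002, seed=1):
    """W <- W + mu [2 x x^T - x x^T W W^T - W W^T x x^T] W, using rank-one products only."""
    n = X.shape[1]
    W, _ = np.linalg.qr(np.random.default_rng(seed).standard_normal((n, r)))
    for x in X:
        y = W.T @ x                         # W^T x
        Wy = W @ y                          # W W^T x
        W = W + mu * (2.0 * np.outer(x, y)          # 2 x x^T W
                      - np.outer(x, W.T @ Wy)       # x x^T W W^T W
                      - np.outer(Wy, y))            # W W^T x x^T W
    return W

eigvals = np.array([10.0, 8.0, 1.0, 1.0, 0.2, 0.1])  # hypothetical spectrum
U, X = make_data(eigvals, 8000)
r = 2
W = sgd_subspace(X, r)
err_dom = subspace_error(W, U[:, :r])
orth_err = np.linalg.norm(W.T @ W - np.eye(r))
print(f"dominant: {err_dom:.3f}, ||W^T W - I||: {orth_err:.2e}")
```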

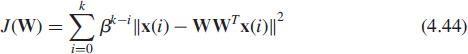

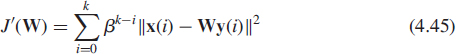

If Cx(k) is now estimated by the exponentially weighted sample covariance matrix $C(k) = \sum_{i=0}^{k}\beta^{k-i}x(i)x^T(i)$ (4.18) instead of x(k)xT(k), the scalar function J(W) becomes

$$J(W) = \sum_{i=0}^{k}\beta^{k-i}\,\|x(i) - WW^Tx(i)\|^2 \tag{4.44}$$

All data x(i) available in the time interval {0, …, k} are then involved in estimating the dominant subspace at time k + 1, assuming that the estimate at time k is known. The projection approximation subspace tracking algorithm (PAST) was proposed by Yang in [71]. Its key idea is to approximate WT(k)x(i) in (4.44), the unknown projection of x(i) onto the columns of W(k), by the expression y(i) = WT(i)x(i), which can be calculated for all 0 ≤ i ≤ k at time k. This results in the following modified cost function

$$J'(W) = \sum_{i=0}^{k}\beta^{k-i}\,\|x(i) - W\,y(i)\|^2 \tag{4.45}$$

which is now quadratic in the elements of W. This projection approximation, hence the name PAST, changes the error performance surface of J(W). For stationary or slowly varying Cx(k), the difference between WT(k)x(i) and WT(i)x(i) is small, in particular when i is close to k. This difference may be larger in the distant past (i « k), but the contribution of past data to the cost function (4.45) decreases as k grows, owing to the exponential windowing. It is therefore expected that J′(W) will be a good approximation to J(W) and that the matrix W(k) minimizing J′(W) will be a good estimate of the dominant subspace of Cx(k). In case of sudden parameter changes of the model (4.15), the numerical experiments presented in [71] show that the algorithms derived from the PAST approach still converge. The main advantage of this scheme lies in the least squares minimization of (4.45). Its solution is given by $C_{xy}(k)\,C_y^{-1}(k)$, where $C_{xy}(k) = \sum_{i=0}^{k}\beta^{k-i}x(i)y^T(i)$ and $C_y(k) = \sum_{i=0}^{k}\beta^{k-i}y(i)y^T(i)$. This problem has been extensively studied in adaptive filtering (see e.g. [36, Chap. 13] and [68, Chap. 12]), where various recursive least squares (RLS) algorithms based on the matrix inversion lemma have been proposed.7 We note that, because of the approximation of J(W) by J′(W), the columns of W(k) are not exactly orthonormal. However, this lack of orthonormality does not mean that W(k) must be reorthonormalized after each update. For this algorithm, the need for orthonormalization depends solely on the post-processing method that uses this signal subspace estimate to extract the desired signal information (see e.g. Section 4.8). The numerical experiments presented in [71] show that the deviation of W(k) from orthonormality is very small. They also show that, for a growing window (β = 1), W(k) converges to a matrix with exactly orthonormal columns for stationary signals. Finally, note that a theoretical study of convergence and a derivation of the asymptotic distribution of the recursive subspace estimators have been presented in [73] and [74], respectively. Using the ODE associated with this algorithm (see Section 4.7.1), which is here a pair of coupled matrix differential equations, it is proved that, under signal stationarity and other weak conditions, the PAST algorithm converges to the desired signal subspace with probability one.
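A minimal NumPy sketch of an RLS recursion that minimizes (4.45) follows. It uses the widely used real-valued form of the PAST recursion: gain g, inverse correlation matrix P and projection-approximation error e. It rests on these assumptions:

* β = 0.995 and P(0) = Ir;
* W(0) is a random matrix with orthonormal columns;
* the data are synthetic Gaussian samples with a hypothetical spectrum.

As noted above, W(k) is not forced to be orthonormal, and the script reports how far WTW is from Ir.

```python
import numpy as np

def make_data(eigvals, n_samples, seed=0):
    """Zero-mean Gaussian samples x(k) (rows of X) with covariance U diag(eigvals) U^T."""
    rng = np.random.default_rng(seed)
    n = len(eigvals)
    U, _ = np.linalg.qr(rng.standard_normal((n, n)))
    X = rng.standard_normal((n_samples, n)) * np.sqrt(eigvals) @ U.T
    return U, X

def subspace_error(W, U_r):
    """Frobenius distance between the projectors onto span(W) and span(U_r)."""
    Q, _ = np.linalg.qr(W)
    return np.linalg.norm(Q @ Q.T - U_r @ U_r.T)

def past(X, r, beta=0.995, seed=1):
    """RLS minimization of the projection-approximation cost with forgetting factor beta."""
    n = X.shape[1]
    W, _ = np.linalg.qr(np.random.default_rng(seed).standard_normal((n, r)))
    P = np.eye(r)                        # running inverse of C_y (initial guess)
    for x in X:
        y = W.T @ x                      # projection with the current estimate
        h = P @ y
        g = h / (beta + y @ h)           # RLS gain vector
        P = (P - np.outer(g, h)) / beta  # matrix inversion lemma update
        P = 0.5 * (P + P.T)              # symmetrize to limit round-off drift
        e = x - W @ y                    # projection-approximation error
        W = W + np.outer(e, g)           # rank-one update of W
    return W

eigvals = np.array([10.0, 8.0, 1.0, 1.0, 0.2, 0.1])  # hypothetical spectrum
U, X = make_data(eigvals, 6000)
r = 2
W = past(X, r)
err_dom = subspace_error(W, U[:, :r])
orth_dev = np.linalg.norm(W.T @ W - np.eye(r))
print(f"dominant: {err_dom:.3f}, ||W^T W - I||: {orth_dev:.3f}")
```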

To speed up the convergence of the PAST algorithm and to guarantee the orthonormality of W(k) at each iteration, an orthonormal version of the PAST algorithm, dubbed OPAST, has been proposed in [1]. In this algorithm, the PAST algorithm relates W(k + 1) to W(k) through the rank-one update W(k + 1) = W(k) + p(k)qT(k). This update is followed by an orthonormalization step based on the same approach as in the FRANS algorithm (see Subsection 4.5.1), which leads to the update W(k + 1) = W(k) + p′(k)qT(k).

Note that the PAST algorithm cannot be used to estimate the noise subspace by simply changing the sign of the stepsize because the associated ODE is unstable. Efforts to eliminate this instability were attempted in [4] by forcing the orthonormality of W(k) at each time step. Although there was a definite improvement in the stability characteristics, the resulting algorithm remains numerically unstable.

4.5.3 Additional Methodologies

Various generalizations of criteria (4.7) and (4.14) have been proposed (e.g. in [41]), which generally yield robust estimates of principal subspaces or eigenvectors that are totally different from the standard ones. Among them, the following novel information criterion (NIC) [48] results in a fast algorithm to estimate the principal subspace with a number of attractive properties

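$$J(W) = \tfrac{1}{2}\,\mathrm{Tr}\left[\log\left(W^TCW\right)\right] - \tfrac{1}{2}\,\mathrm{Tr}\left(W^TW\right) \tag{4.46}$$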

given that W lies in the domain {W such that WTCW > 0}, where the matrix logarithm is defined, for example, in [35, Chap. 11]. It is proved in [48] (see also Exercises 4.11 and 4.12) that the above criterion has a global maximum that is attained when and only when W = UrQ where Ur = [u1, …, ur] and Q is an arbitrary r × r orthogonal matrix and all the other stationary points are saddle points. Taking the gradient of (4.46) [which is given explicitly by (4.92)], the following gradient ascent algorithm has been proposed in [48] for updating the estimate W(k)

$$W(k+1) = W(k) + \mu_k\left[C\,W(k)\left(W^T(k)\,C\,W(k)\right)^{-1} - W(k)\right] \tag{4.47}$$

The update (4.47) is then modified as follows. C is replaced by the recursive estimate $C(k) = \sum_{i=0}^{k}\beta^{k-i}x(i)x^T(i)$ (4.18), and the projection approximation introduced in [71], WT(k)x(i) ≈ WT(i)x(i) for all 0 ≤ i ≤ k, is applied. The update (4.47) then becomes

with ![](). Consequently, as for the PAST algorithm, standard RLS techniques used in adaptive filtering can be applied. According to the numerical experiments presented in [38], this algorithm performs very similarly to the PAST algorithm and has the same complexity. Finally, it has been proved in [48] that the points W = UrQ are the only asymptotically stable points of the ODE (see Subsection 4.7.1) associated with the gradient ascent algorithm (4.47). The same work proved that the attraction set of these points is the domain {W such that WTCW > 0}. However, to the best of our knowledge, no complete theoretical performance analysis of algorithm (4.48) has been carried out so far.
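The gradient ascent (4.47) can be illustrated with a known covariance C. The gradient of (4.46) is $CW(W^TCW)^{-1} - W$, so a step of size η gives $W \leftarrow (1 - \eta)W + \eta\, CW(W^TCW)^{-1}$. The NumPy sketch below checks that the iterate reaches an orthonormal basis UrQ of the dominant subspace, using η = 0.5, a hypothetical spectrum and a random full-rank start. It uses the exact C rather than the projection-approximation update (4.48).

```python
import numpy as np

def nic_gradient_ascent(C, r, eta=0.5, n_iter=300, seed=1):
    """W <- W + eta * [C W (W^T C W)^{-1} - W], i.e. gradient ascent on (4.46)."""
    n = C.shape[0]
    W = np.random.default_rng(seed).standard_normal((n, r))   # full rank, so W^T C W > 0
    for _ in range(n_iter):
        CW = C @ W
        W = W + eta * (CW @ np.linalg.inv(W.T @ CW) - W)
    return W

rng = np.random.default_rng(0)
eigvals = np.array([10.0, 8.0, 1.0, 1.0, 0.2, 0.1])   # hypothetical spectrum
U, _ = np.linalg.qr(rng.standard_normal((6, 6)))
C = U @ np.diag(eigvals) @ U.T

r = 2
W = nic_gradient_ascent(C, r)
err_dom = np.linalg.norm(W @ W.T - U[:, :r] @ U[:, :r].T)  # W should be orthonormal here
orth_err = np.linalg.norm(W.T @ W - np.eye(r))
print(f"dominant: {err_dom:.2e}, ||W^T W - I||: {orth_err:.2e}")
```

Within the dominant subspace, each singular value σ of W evolves as $\sigma \leftarrow (1-\eta)\sigma + \eta/\sigma$, whose fixed point is σ = 1. This explains why the limit has orthonormal columns without any explicit orthonormalization.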

4.6 EIGENVECTORS TRACKING

The adaptive estimation of the dominant or minor subspace through the estimate W(k)WT(k) of the associated projector is of primary importance for subspace-based algorithms. There are, however, situations where the associated eigenvalues are simple (λ1 > … > λr > λr+1 or λn < … < λn−r+1 < λn−r) and the estimated orthonormal basis of this space must form an eigenbasis. This is the case for the following applications, to mention only a few:

* principal component analysis in data compression and coding;
* optimal feature extraction in pattern recognition;
* optimal fitting in the total least squares sense;
* the Karhunen–Loève transform of signals.

In these applications, W = [w1(k), …, wr(k)] or W = [wn(k), …, wn−r+1(k)], and $y_i(k) = w_i^T(k)x(k)$. Then {y1(k), …, yr(k)} or {yn(k), …, yn−r+1(k)} are the estimated r first principal or r last minor components of the data x(k). The stochastic approximation algorithms proposed to derive such adaptive estimates stem from adaptations of four techniques:

* the iterative constrained maximizations (4.5) and minimizations (4.6) of Rayleigh quotients;
* the weighted subspace criterion (4.8);
* the orthogonal iterations (4.11);
* the gradient-descent technique applied to the minimization of (4.14).

4.6.1 Rayleigh Quotient-based Methods

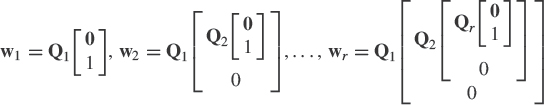

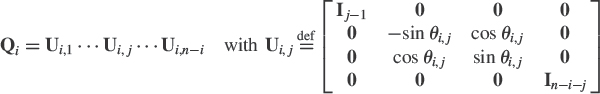

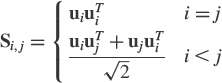

A method for implementing the maximization (4.5) and minimization (4.6) of Rayleigh quotients adaptively has been proposed in [61]. It is derived from a Givens parametrization of the constraint WTW = Ir and from a gradient-like procedure. The Givens rotations approach introduced by Regalia [61] is based on the following properties. Any n × 1 vector of unit 2-norm can be written as the last column of an n × n orthogonal matrix. Any vector orthogonal to it can be written as a linear combination of the first n − 1 columns of this orthogonal matrix, that is,

where Qi is the following orthogonal matrix of order n − i + 1

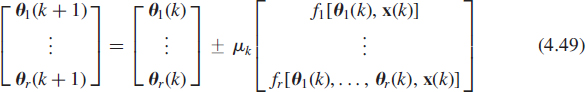

and θi,j belongs to ![](). The existence of such a parametrization8 for all orthonormal sets {w1, …, wr} is proved in [61]. It involves r(2n − r − 1)/2 real parameters. Furthermore, this parametrization is unique if some constraints are added on θi,j. A deflation procedure inspired by the maximization (4.5) and minimization (4.6) has been proposed [61]. First, the maximization or minimization (4.3) is performed with the classical stochastic gradient algorithm, whose parameters are θ1,1, …, θ1,n−1. The maximizations (4.5) or minimizations (4.6) are then carried out by stochastic gradient algorithms with respect to the parameters θi,1, …, θi,n−i. The preceding parameters θl,1(k), …, θl,n−l(k), for l = 1, …, i − 1, are injected into these algorithms from the i − 1 previous ones. The deflation procedure is achieved by coupled stochastic gradient algorithms

with ![]() and

and ![]() . This rather intuitive computational process was confirmed by simulation results [61]. Later a formal analysis of the convergence and performance was performed in [23] where it has been proved that the stationary points of the associated ODE are globally asymptotically stable (see Subsection 4.7.1) and that the stochastic algorithm (4.49) converges almost surely to these points for stationary data x(k) when μk is decreasing with

. This rather intuitive computational process was confirmed by simulation results [61]. Later a formal analysis of the convergence and performance was performed in [23] where it has been proved that the stationary points of the associated ODE are globally asymptotically stable (see Subsection 4.7.1) and that the stochastic algorithm (4.49) converges almost surely to these points for stationary data x(k) when μk is decreasing with ![]() = and

= and ![]() . We note that this algorithm yields exactly orthonormal r dominant or minor estimated eigenvectors by a simple change of sign in its stepsize, and requires O(nr) operations at each iteration but without accounting for the trigonometric functions.

. We note that this algorithm yields exactly orthonormal r dominant or minor estimated eigenvectors by a simple change of sign in its stepsize, and requires O(nr) operations at each iteration but without accounting for the trigonometric functions.
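The parameter count and the nested structure described above can be checked numerically. The following Python/NumPy sketch shows one possible construction. It is assumed here for illustration and is not necessarily the exact parametrization of [61]:

- each factor $Q_i$ is taken as a product of $n-i$ plane rotations in adjacent coordinate planes;
- $w_1$ is the last column of $Q_1$;
- each later $w_i$ is read off the running product $Q_1\,\mathrm{Diag}(Q_2, 1)\cdots$, so it is a combination of the columns not yet used.

The helper names (`givens`, `rotation_product`, `orthonormal_set_from_angles`, `n_angles`) are hypothetical.

```python
import numpy as np


def givens(m, p, theta):
    """m x m plane rotation acting on coordinates p and p + 1."""
    G = np.eye(m)
    c, s = np.cos(theta), np.sin(theta)
    G[p, p], G[p, p + 1] = c, -s
    G[p + 1, p], G[p + 1, p + 1] = s, c
    return G


def rotation_product(thetas):
    """Orthogonal matrix of order len(thetas) + 1, product of len(thetas) Givens rotations."""
    m = len(thetas) + 1
    Q = np.eye(m)
    for p, theta in enumerate(thetas):
        Q = Q @ givens(m, p, theta)
    return Q


def orthonormal_set_from_angles(n, angles):
    """Build w_1, ..., w_r from r groups of angles (group i holds n - i angles, i = 1..r)."""
    r = len(angles)
    P = np.eye(n)
    W = np.zeros((n, r))
    for i, thetas in enumerate(angles):          # i is 0-based here
        m = n - i                                # order of the factor Q_{i+1}
        if len(thetas) != m - 1:
            raise ValueError(f"group {i + 1} needs {m - 1} angles")
        block = np.eye(n)
        block[:m, :m] = rotation_product(thetas)
        P = P @ block                            # columns m..n-1 of P are left unchanged
        W[:, i] = P[:, m - 1]                    # last of the leading m columns
    return W


def n_angles(n, r):
    """Total number of angles: (n - 1) + (n - 2) + ... + (n - r)."""
    return sum(n - i for i in range(1, r + 1))


rng = np.random.default_rng(0)
n, r = 6, 3
angles = [rng.uniform(-np.pi, np.pi, n - i - 1) for i in range(r)]
W = orthonormal_set_from_angles(n, angles)
print(np.allclose(W.T @ W, np.eye(r)))                  # True
print(n_angles(n, r) == r * (2 * n - r - 1) // 2)       # True
```

Each new factor acts only on the leading $n-i+1$ columns of the running product, so previously extracted vectors are never modified. This is why orthonormality holds exactly for any choice of angles.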

Alternatively, a stochastic gradient-like algorithm called direct adaptive subspace estimation (DASE) has been proposed in [62]. It parametrizes the eigenvectors directly by their coefficients. The maximization or minimization (4.3) is performed by a modification of the classical stochastic gradient algorithm that keeps the first estimated eigenvector $w_1(k)$ at approximately unit norm [in fact a rewriting of Oja's neuron (4.23)]. Then a modification of the classical stochastic gradient algorithm, using a deflation procedure inspired by the constraint $W^TW = I_r$, gives the estimates $[w_i(k)]_{i=2,\ldots,r}$

This totally empirical procedure has been studied in [63]. It has been proved that the stationary points of the associated ODE are all the eigenvector bases $\{\pm u_{i_1}, \ldots, \pm u_{i_r}\}$. Using the eigenvalues of the derivative of the mean field (see Subsection 4.7.1), it is shown that all these eigenvector bases are unstable, except $\{\pm u_1\}$ for $r = 1$ associated with the sign + [where algorithm (4.50) is Oja's neuron (4.23)].

But a closer examination of these eigenvalues, which are all real-valued, singles out two eigenbases: $\{\pm u_1, \ldots, \pm u_r\}$ with the sign + and $\{\pm u_n, \ldots, \pm u_{n-r+1}\}$ with the sign −. For these two bases only, all the eigenvalues of the derivative of the mean field are strictly negative. The exceptions are the eigenvalues associated with variations of these eigenvectors along their own directions. Consequently, it is claimed in [63] that the algorithm is stable if the norm of each estimated eigenvector is reset to one at each iteration. The simulations presented in [62] confirm this intuition.
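The renormalization argument of [63] can be illustrated in the simplest case, $r = 1$ with the sign −, where Oja's neuron (4.23) targets the minor eigenvector. The sketch below is not the DASE recursion (4.50) itself. It is a hypothetical helper, `oja_neuron_step`, implementing Oja's neuron with an optional step that resets $\|w(k)\|$ to one. It is run on assumed synthetic Gaussian data with a known eigenbasis `U`.

```python
import numpy as np


def oja_neuron_step(w, x, mu, sign=+1, renormalize=False):
    """Oja's neuron: w <- w +/- mu [x x^T w - (w^T x x^T w) w], optionally renormalized."""
    y = x @ w                                   # y = w^T x
    w = w + sign * mu * (y * x - y * y * w)
    if renormalize:
        w = w / np.linalg.norm(w)               # reset the norm to one at each iteration
    return w


# Assumed synthetic setup: stationary zero-mean Gaussian data with known eigenvectors U.
rng = np.random.default_rng(1)
n = 5
lam = np.array([4.0, 3.0, 2.0, 1.0, 0.5])
U, _ = np.linalg.qr(rng.standard_normal((n, n)))
X = (rng.standard_normal((10000, n)) * np.sqrt(lam)) @ U.T   # covariance U diag(lam) U^T

w = rng.standard_normal(n)
w /= np.linalg.norm(w)
for x in X:
    w = oja_neuron_step(w, x, mu=0.01, sign=-1, renormalize=True)

alignment = abs(w @ U[:, -1])   # |cos| of the angle between w and the minor eigenvector u_n
```

Once the norm is reset, the term $(w^T x x^T w)\,w$ only rescales $w(k)$. Each iteration then amounts to normalizing $(I_n - \mu' x x^T)\,w(k)$ with the slightly modified stepsize $\mu' = \mu/(1 + \mu y^2)$, which makes the minor eigenvector attractive.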

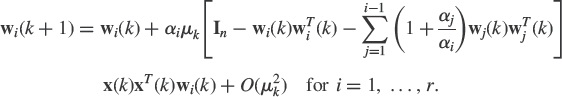

4.6.2 Eigenvector Power-based Methods

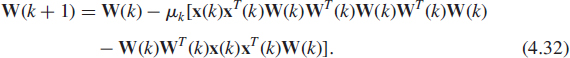

Note that, as for the subspace criterion (4.7), the maximization or minimization of the weighted subspace criterion (4.8) ![]() subject to the constraint $W^TW = I_r$ can be solved by a constrained gradient-descent technique. Clearly, the simplest choice for $C(k)$ is the instantaneous estimate $x(k)x^T(k)$. Because in this case $\nabla_W J = 2x(k)x^T(k)W\Omega$, we obtain the following stochastic approximation algorithm. It is the starting point for a family of algorithms derived to adaptively estimate major or minor eigenvectors

$$W(k+1) = \left[W(k) \pm \mu_k\, x(k)x^T(k)W(k)\Omega\right] G(k+1) \qquad (4.51)$$

in which $W(k) = [w_1(k), \ldots, w_r(k)]$ and $\Omega$ is the diagonal matrix $\mathrm{Diag}(\omega_1, \ldots, \omega_r)$ with $\omega_1 > \cdots > \omega_r > 0$. The matrix $G(k+1)$ depends on $W'(k+1) = W(k) \pm \mu_k\, x(k)x^T(k)W(k)\Omega$ and orthonormalizes, or approximately orthonormalizes, the columns of $W'(k+1)$. Thus, $W(k)$ has orthonormal or approximately orthonormal columns for all $k$. Different forms of the matrix $G(k+1)$ give different variants of the basic stochastic algorithm. Going back to the general expression (4.29) of the subspace power-based algorithm, we note that (4.51) can also be derived from (4.29). It suffices to introduce different stepsizes $\mu_k\omega_1, \ldots, \mu_k\omega_r$ for the columns of $W(k)$.
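As an illustration of (4.51), the following sketch takes $G(k+1)$ to be the Gram–Schmidt orthonormalization of the columns of $W'(k+1)$. It computes it with NumPy's reduced QR factorization, a numerically convenient choice assumed for this example. The sign correction makes the diagonal of $R$ positive, which is what classical Gram–Schmidt produces. The helper `weighted_sg_step`, the data model, the weights $\omega_i$ and the stepsize are all illustrative assumptions.

```python
import numpy as np


def weighted_sg_step(W, x, mu, omega, sign=+1):
    """Step of the form (4.51), with G(k+1) chosen as Gram-Schmidt (QR) orthonormalization."""
    y = W.T @ x                                            # y = W^T x
    W_prime = W + sign * mu * np.outer(x, y) * omega       # W +/- mu x x^T W Omega
    Q, R = np.linalg.qr(W_prime)                           # reduced QR: Q is n x r
    return Q * np.sign(np.diag(R))                         # positive diag(R), as in Gram-Schmidt


rng = np.random.default_rng(2)
n, r = 6, 2
lam = np.array([5.0, 3.0, 1.0, 0.5, 0.3, 0.1])
U, _ = np.linalg.qr(rng.standard_normal((n, n)))
X = (rng.standard_normal((20000, n)) * np.sqrt(lam)) @ U.T
omega = np.array([1.0, 0.8])                               # omega_1 > omega_2 > 0 (arbitrary)

W, _ = np.linalg.qr(rng.standard_normal((n, r)))
for x in X:
    W = weighted_sg_step(W, x, mu=0.002, omega=omega)

cosines = np.abs(np.sum(W * U[:, :r], axis=0))             # |cos| between w_i and u_i
```

Passing `sign=-1` gives the corresponding minor-eigenvector variant. In this form the column norms cannot drift, because the columns are re-orthonormalized exactly at every step.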

Using the same approach as for deriving (4.30), that is, taking $G(k+1)$ as the symmetric square root inverse of $W'^T(k+1)W'(k+1)$, we obtain the following stochastic approximation algorithm
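A short first-order expansion indicates the form this choice of $G(k+1)$ produces. It is given here as a sketch that assumes $W^T(k)W(k) = I_r$ and drops the $O(\mu_k^2)$ terms. The auxiliary symbol $A(k)$ is introduced only for this derivation.

$$
\begin{aligned}
W'(k+1) &= W(k) \pm \mu_k A(k), \qquad A(k) = x(k)x^T(k)W(k)\Omega,\\
W'^T(k+1)W'(k+1) &= I_r \pm \mu_k\left[W^T(k)A(k) + A^T(k)W(k)\right] + O(\mu_k^2),\\
G(k+1) &= I_r \mp \tfrac{\mu_k}{2}\left[W^T(k)A(k) + A^T(k)W(k)\right] + O(\mu_k^2),\\
W(k+1) &= W(k) \pm \mu_k\Big[x(k)x^T(k)W(k)\Omega - \tfrac{1}{2}W(k)\big(W^T(k)x(k)x^T(k)W(k)\Omega + \Omega W^T(k)x(k)x^T(k)W(k)\big)\Big] + O(\mu_k^2).
\end{aligned}
$$

For $\Omega = I_r$ the bracket reduces to $\left[I_n - W(k)W^T(k)\right]x(k)x^T(k)W(k)$, the form of Oja's algorithm (4.30).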

Note that, unlike Oja's algorithm (4.30), this algorithm differs from the algorithm obtained by optimizing the cost function ![]() over the set of $n \times r$ orthogonal matrices $W$ with continuous-time matrix algorithms (see e.g. [21, Ch. 7.2], [19, Ch. 4] or (4.91) in Exercise 4.15).

![]()

We note that these two algorithms reduce to Oja's algorithm (4.30) for $\Omega = I_r$ and to Oja's neuron (4.23) for $r = 1$. The latter is, of course, unstable for tracking the minor eigenvectors with the sign −. Techniques used for stabilizing Oja's algorithm (4.30) for minor subspace tracking have been transposed to stabilize the weighted Oja's algorithm for tracking the minor eigenvectors. For example, in [9], $W(k)$ is forced to be orthonormal at each time step $k$ as in [2] (see Exercise 4.10). This gives the MCA-OOja algorithm and the MCA-OOjaH algorithm, the latter using Householder transforms. It has been shown in [10] that the latter algorithm is numerically stable, in contrast to the former. The proof derives a recursion for the distance to orthonormality ![]() starting from a nonorthogonal matrix $W(0)$.

Instead of deriving a stochastic approximation algorithm from a specific orthonormalization matrix $G(k+1)$, an analogy with Oja's algorithm (4.30) has been used in [54] to derive the following algorithm

It has been proved in [55] that, for tracking the dominant eigenvectors (i.e. with the sign +), the eigenvectors $\{\pm u_1, \ldots, \pm u_r\}$ are the only locally asymptotically stable points of the ODE associated with (4.54). But to the best of our knowledge, no complete theoretical performance analysis of these three algorithms (4.52), (4.53) and (4.54) has been carried out until now. The exception is [27], which gives the asymptotic distribution of the estimated principal eigenvectors.

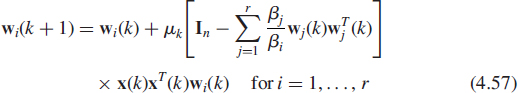

Suppose now that the matrix $G(k+1)$ performs the Gram–Schmidt orthonormalization of the columns of $W'(k+1)$. Expanding the successive columns of the matrix $W(k+1)$, assuming $\mu_k$ is sufficiently small, yields the stochastic gradient ascent (SGA) algorithm. By omitting the $O(\mu_k^2)$ term in this expansion [51], we obtain the following algorithm

where $\Omega = \mathrm{Diag}(\alpha_1, \alpha_2, \ldots, \alpha_r)$, with $\alpha_i$ arbitrary strictly positive numbers.

The so-called generalized Hebbian algorithm (GHA) is derived from Oja's algorithm (4.30) by keeping only the diagonal and superdiagonal part of the matrix $W^T(k)x(k)x^T(k)W(k)$ in Oja's algorithm

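$$W(k+1) = W(k) + \mu_k\left[x(k)x^T(k)W(k) - W(k)\,\mathrm{upper}\!\left(W^T(k)x(k)x^T(k)W(k)\right)\right]$$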

in which the operator upper sets all subdiagonal elements of a matrix to zero. When written columnwise, this algorithm is similar to the SGA algorithm (4.57) with $\alpha_i = 1$, $i = 1, \ldots, r$, except that there is no coefficient 2 in the sum

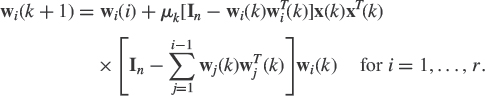
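The GHA update is short enough to state directly in code. The following NumPy sketch uses a hypothetical helper, `gha_step`, with assumed synthetic data and an arbitrary stepsize. `np.triu` keeps the diagonal and the elements above it, so it plays the role of the operator upper.

```python
import numpy as np


def gha_step(W, x, mu):
    """GHA: W <- W + mu [x x^T W - W upper(W^T x x^T W)]."""
    y = W.T @ x                                                       # y = W^T x
    return W + mu * (np.outer(x, y) - W @ np.triu(np.outer(y, y)))    # triu zeroes the subdiagonal


rng = np.random.default_rng(3)
n, r = 6, 2
lam = np.array([5.0, 3.0, 1.0, 0.5, 0.3, 0.1])
U, _ = np.linalg.qr(rng.standard_normal((n, n)))
X = (rng.standard_normal((20000, n)) * np.sqrt(lam)) @ U.T

W, _ = np.linalg.qr(rng.standard_normal((n, r)))
for x in X:
    W = gha_step(W, x, mu=0.002)

norms = np.linalg.norm(W, axis=0)
cosines = np.abs(np.sum(W * U[:, :r], axis=0)) / norms    # |cos| between w_i and u_i
```

Replacing `np.triu(np.outer(y, y))` by the full matrix `np.outer(y, y)` turns the update into Oja's algorithm (4.30) with the sign +. That version only recovers an orthonormal basis of the dominant subspace, not the eigenvectors themselves.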

Oja et al. [54] proposed an algorithm called the weighted subspace algorithm (WSA). It is similar to Oja's algorithm except for the scalar parameters $\beta_1, \ldots, \beta_r$

with $\beta_1 > \cdots > \beta_r > 0$. If $\beta_i = 1$ for all $i$, this algorithm reduces to Oja's algorithm.

Finally, note that Oja's neuron can be directly adapted to estimate the $r$ principal eigenvectors, following the deflation technique introduced in the adaptive principal component extraction (APEX) algorithm [42]. The instantaneous estimate $x(k)x^T(k)$ of $C_x(k)$ is replaced by ![]() to successively estimate $w_i(k)$, $i = 2, \ldots, r$
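The deflation idea can be sketched as follows. The exact replacement of $x(k)x^T(k)$ is not reproduced here, so this example makes an assumption in the spirit of APEX and PASTd. Oja's neuron for $w_i$ is driven by a deflated vector, with $x_1(k) = x(k)$ and $x_{i+1}(k) = x_i(k) - [w_i^T(k+1)x_i(k)]\,w_i(k+1)$. The helper name `deflated_oja_step` is hypothetical.

```python
import numpy as np


def deflated_oja_step(W, x, mu):
    """Oja's neuron applied to w_1, ..., w_r on successively deflated data (assumed form)."""
    W = W.copy()
    xi = x.copy()                                   # x_1 = x
    for i in range(W.shape[1]):
        w = W[:, i]
        y = w @ xi
        w = w + mu * y * (xi - y * w)               # Oja's neuron driven by x_i
        W[:, i] = w
        xi = xi - (w @ xi) * w                      # x_{i+1}: remove the projection onto w_i
    return W


rng = np.random.default_rng(7)
n, r = 6, 2
lam = np.array([5.0, 3.0, 1.0, 0.5, 0.3, 0.1])
U, _ = np.linalg.qr(rng.standard_normal((n, n)))
X = (rng.standard_normal((20000, n)) * np.sqrt(lam)) @ U.T

W, _ = np.linalg.qr(rng.standard_normal((n, r)))
for x in X:
    W = deflated_oja_step(W, x, mu=0.002)

cosines = np.abs(np.sum(W * U[:, :r], axis=0)) / np.linalg.norm(W, axis=0)
```

Once $w_1$ has settled near $u_1$, the deflated vector $x_2(k)$ has $u_2$ as its dominant direction, so the same neuron extracts the next eigenvector.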

Minor component analysis has also been considered in neural networks to solve the problem of optimal fitting in the total least squares sense. Xu et al. [79] introduced the optimal fitting analyzer (OFA) algorithm by modifying the SGA algorithm. For the estimate $w_n(k)$ of the eigenvector associated with the smallest eigenvalue, this algorithm is derived from Oja's neuron (4.23) by replacing $x(k)x^T(k)$ by $I_n - x(k)x^T(k)$, viz.

$$w_n(k+1) = w_n(k) + \mu_k\left[I_n - w_n(k)w_n^T(k)\right]\left[I_n - x(k)x^T(k)\right]w_n(k)$$

and for $i = n, \ldots, n-r+1$, this algorithm reads

Oja [53] showed that, under the conditions that the eigenvalues are distinct, that $\lambda_{n-r+1} < 1$ and that ![]() , the only asymptotically stable points of the associated ODE are the eigenvectors $\{\pm v_n, \ldots, \pm v_{n-r+1}\}$. Note that in practice the magnitude of the eigenvalues must be controlled by normalizing $x(k)$, so that the expression between brackets in (4.58) becomes homogeneous.
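Below is a minimal sketch of the OFA update for $w_n(k)$ alone ($r = 1$). It assumes synthetic Gaussian data whose covariance eigenvalues are already below one, which stands in for the normalization of $x(k)$ mentioned above. `ofa_minor_step` is a hypothetical helper name.

```python
import numpy as np


def ofa_minor_step(w, x, mu):
    """Oja's neuron (sign +) with x x^T replaced by I_n - x x^T."""
    y = x @ w
    Aw = w - y * x                                  # (I_n - x x^T) w
    return w + mu * (Aw - (w @ Aw) * w)             # [I_n - w w^T] (I_n - x x^T) w


# Assumed setup: eigenvalues already below one, standing in for the normalization of x(k).
rng = np.random.default_rng(4)
n = 4
lam = np.array([0.8, 0.6, 0.4, 0.1])
U, _ = np.linalg.qr(rng.standard_normal((n, n)))
X = (rng.standard_normal((20000, n)) * np.sqrt(lam)) @ U.T

w = rng.standard_normal(n)
w /= np.linalg.norm(w)
for x in X:
    w = ofa_minor_step(w, x, mu=0.01)

alignment = abs(w @ U[:, -1]) / np.linalg.norm(w)   # |cos| to the minor eigenvector
```

The mean of $I_n - x(k)x^T(k)$ is $I_n - C_x$, whose dominant eigenvector is the minor eigenvector of $C_x$. This is why the sign + can be kept.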

The derivation of these algorithms seems empirical. In fact, they have been derived from slight modifications of the ODE (4.75) associated with Oja's neuron, in order to keep adequate stability conditions (see e.g. [53]). It was established by Oja [52], Sanger [67] and Oja et al. [55] for the SGA, GHA and WSA algorithms, respectively, that the only asymptotically stable points of their associated ODEs are the eigenvectors $\{\pm v_1, \ldots, \pm v_r\}$. We note two special cases that give the constrained Hebbian learning rule of the basic PCA neuron (4.23) introduced by Oja [50]. These are the first vector (k = 1) estimated by the SGA and GHA algorithms, and the vector (r = k = 1) estimated by the SNL and WSA algorithms.

A performance analysis of different eigenvector power-based algorithms has been presented in [22]. It derives the asymptotic distribution of the eigenvector estimates, and of the associated projection matrices, given by these stochastic algorithms with constant stepsize $\mu$ for stationary data. Closed-form expressions of the covariance of these distributions have been given and analyzed for independent Gaussian distributed data $x(k)$. Closed-form expressions of the mean square error of these estimators have also been deduced and analyzed. These expressions specify the influence of the parameters $(\alpha_2, \ldots, \alpha_r)$, $(\beta_1, \ldots, \beta_r)$ and $\beta$ of these algorithms on their performance. They also account for the tradeoff between misadjustment and speed of convergence. An example of such a derivation and analysis is given for Oja's neuron in Subsection 4.7.3.
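The tradeoff between speed of convergence and misadjustment can also be observed empirically, without the closed-form expressions of [22]. The following sketch uses assumed synthetic Gaussian data. It runs Oja's neuron (4.23) with the sign + and two constant stepsizes from the same starting point, recording $\sin^2$ of the angle to $u_1$. The helper `oja_sin2_trajectory` and all numerical values are illustrative choices.

```python
import numpy as np


def oja_sin2_trajectory(X, u1, mu, w0):
    """Run Oja's neuron (sign +) and record sin^2 of the angle between w(k) and u1."""
    w = w0.copy()
    sin2 = np.empty(len(X))
    for k, x in enumerate(X):
        y = x @ w
        w = w + mu * (y * x - y * y * w)
        sin2[k] = 1.0 - (w @ u1) ** 2 / (w @ w)
    return sin2


rng = np.random.default_rng(5)
n = 6
lam = np.array([5.0, 3.0, 1.0, 0.5, 0.3, 0.1])
U, _ = np.linalg.qr(rng.standard_normal((n, n)))
X = (rng.standard_normal((20000, n)) * np.sqrt(lam)) @ U.T
w0 = 0.1 * U[:, 0] + U[:, 1]                     # start far from u_1 (sin^2 close to 1)
w0 /= np.linalg.norm(w0)

results = {}
for mu in (0.0005, 0.005):
    sin2 = oja_sin2_trajectory(X, U[:, 0], mu, w0)
    first_hit = int(np.argmax(sin2 < 0.1))       # first k with sin^2 < 0.1 (speed)
    steady = float(sin2[-5000:].mean())          # mean error at the end (misadjustment)
    results[mu] = (first_hit, steady)
```

On such data the larger stepsize typically reaches the neighborhood of $u_1$ much sooner but fluctuates more around it. This is consistent with a steady-state error roughly proportional to $\mu$ for small stepsizes.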

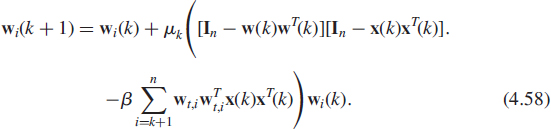

Eigenvector Power-based Methods Issued from Exponential Windows

Using the exponential windowed estimates (4.19) of $C_x(k)$, and following the concept of the power method (4.9) and the subspace deflation technique introduced in [42], the following algorithm has been proposed in [38]

![]()

![]()

where ![]() for $i = 1, \ldots, r$. Applying the approximation ![]() in (4.59) to reduce the complexity, (4.59) becomes

![]()

with ![]() and ![]() . Equations (4.61) and (4.59) should be run successively for $i = 1, \ldots, r$ at each iteration $k$.
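The ingredients named above can be combined naively, as in the following sketch: an exponentially windowed covariance estimate, one power-method step per sample, and deflation. It is not the algorithm of [38]. It assumes the window has the form $C(k) = \beta C(k-1) + x(k)x^T(k)$ and keeps the full $n \times n$ matrix. It therefore costs $O(n^2 r)$ operations per update, which is what approximations such as the one leading to (4.61) aim to reduce. `ew_power_deflation` is a hypothetical name.

```python
import numpy as np


def ew_power_deflation(X, r, beta, rng):
    """Exponentially windowed covariance + one deflated power step per sample (O(n^2 r))."""
    n = X.shape[1]
    C = np.zeros((n, n))
    W, _ = np.linalg.qr(rng.standard_normal((n, r)))
    lam = np.zeros(r)
    for x in X:
        C = beta * C + np.outer(x, x)               # assumed form of the windowed estimate
        Ci = C.copy()
        for i in range(r):
            v = Ci @ W[:, i]                        # one power-method step on the deflated matrix
            norm_v = np.linalg.norm(v)
            if norm_v > 0.0:
                lam[i] = norm_v                     # ||C_i w_i|| -> eigenvalue of C(k) at convergence
                W[:, i] = v / norm_v
            Ci -= lam[i] * np.outer(W[:, i], W[:, i])   # Hotelling-type deflation
    return W, lam


rng = np.random.default_rng(6)
n, r, beta = 6, 2, 0.995
lam_true = np.array([5.0, 3.0, 1.0, 0.5, 0.3, 0.1])
U, _ = np.linalg.qr(rng.standard_normal((n, n)))
X = (rng.standard_normal((3000, n)) * np.sqrt(lam_true)) @ U.T

W, lam_hat = ew_power_deflation(X, r, beta, rng)
cosines = np.abs(np.sum(W * U[:, :r], axis=0))
scaled = lam_hat * (1.0 - beta)     # C(k) is about C_x / (1 - beta) for large k
```

Because $C(k)$ accumulates samples without the factor $1-\beta$, the returned estimates are rescaled before being compared with the eigenvalues of $C_x$.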

Note that, up to a common factor, estimates of the eigenvalues $\lambda_i(k+1)$ of $C_x(k)$ can be updated as follows. From (4.59), one can write

![]()

Using (4.61) and applying the approximations ![]() and $c_i(k) \approx 0$, one can replace (4.62) by

![]()

which can be used to track the rank $r$ and the signal eigenvectors, as in [72].

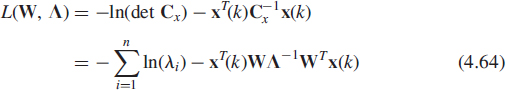

4.6.3 Projection Approximation-based Methods

A variant of the PAST algorithm, named PASTd and presented in [71], allows one to estimate the $r$ dominant eigenvectors. This algorithm is based on a deflation technique that estimates the eigenvectors sequentially. First, the most dominant estimated eigenvector $w_1(k)$ is updated by applying the PAST algorithm with $r = 1$. Then the projection of the current data $x(k)$ onto this estimated eigenvector is removed from $x(k)$ itself. The second dominant eigenvector now becomes the most dominant one in the updated data vector ![]() , so it can be extracted in the same way as before. Repeating this procedure estimates all of the $r$ dominant eigenvectors and the associated eigenvalues sequentially. These estimated eigenvalues may be used to estimate the rank $r$ if it is not known a priori [72]. It is interesting to note that for $r = 1$ the PAST and PASTd algorithms, which are then identical, simplify as

![]()

where ![]() with ![]() and