Chapter 2

Blinded by Bias

Mistakes and Markets

Artificial intelligence and machine learning are suddenly all the rage, and for good reason. They are the future of this industry and almost every other. If you've been paying attention to the evolution of technology over the past 2.6 million years, you knew this was coming. Wherever human beings have shouldered the bulk of the effort, we have always sought to replace ourselves with technology that could do the job better, faster, more efficiently and, since the invention of capital, more cheaply. It began with the most basic, brute‐force physical tasks and has progressively moved on to more nuanced, cognitive processes. Along the way, progress has been exponential, not linear. Through AI and machine learning, technology is now attempting to improve on how we make decisions, and the truth is, it won't require much effort. Not because technology represents some sort of miracle, but because we do such a poor job of it.

More than 60 years of research in the cognitive sciences provides abundant evidence that humans are prone to mistakes at every stage of the decision‐making process. From defining the problem to be solved and the objectives we hope to achieve, to researching and predicting the external factors likely to affect our ability to achieve them, to assessing and implementing the actions that will improve our chances of realizing them, we are vulnerable to systematic errors in judgment. Luckily, technology can help us overcome our shortcomings, but not without a price. Like the hunters, gatherers, cotton pickers, and factory workers who came before us, those of us who make a living in finance will be called on to pay it, and likely sooner than we think.

To conceptualize how this will work, let's simplify all financial markets down to the game of poker. As with any competitive game, two elements are at work: skill and luck. No player can purposefully capitalize on luck, which leaves skill to separate the good players from the bad. In the good old days of the game, skilled players extracted value by bluffing and reading the actions of the other players. Experienced players identified basic behavior patterns (“tells”) exhibited by less accomplished opponents and sought to take advantage of them. In other words, they were capitalizing on the mistakes of their opponents.

Then the “geeks” got involved, playing poker for hours after work before convening at local bars to dissect the action until dawn. Over time, they distilled the game down to what it really is: a game of chance in which the odds can be calculated and updated at every step. Because the probability of every possible outcome could be calculated, every decision could be scripted according to a set of predetermined rules, copies of which are available free on many Internet sites. When the number crunchers began appearing at the big tournaments, they paid less attention to the other players than to the probabilities. Very quickly, the old style of play, and those who practiced it, were confined to the opening rounds of the major poker tournaments, essentially serving to bankroll an ever‐increasing purse for the number crunchers.
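To make “scripted according to predetermined rules” concrete, here is a minimal sketch of one such rule, the pot‐odds check: call a bet only when your probability of winning is at least the share of the final pot you would have to put in. The function names and the example numbers (a 100‐chip pot that already includes the opponent's bet, a 20‐chip call, 9 outs among 46 unseen cards) are hypothetical, and the sketch assumes that completing the flush wins the hand. It deliberately ignores implied odds, bluffing, and everything else a full strategy would cover.

```python
def required_equity(pot: float, to_call: float) -> float:
    """Share of the final pot that our call represents: the break-even win probability."""
    return to_call / (pot + to_call)


def should_call(win_probability: float, pot: float, to_call: float) -> bool:
    """Scripted rule: call only if our chance of winning covers the price of calling."""
    return win_probability >= required_equity(pot, to_call)


# Hypothetical spot: a flush draw on the turn in Texas hold'em.
# 9 cards complete the flush out of 46 we cannot see; we assume a flush wins.
outs, unseen = 9, 46
p_win = outs / unseen  # about 0.196

print(round(required_equity(100, 20), 3))        # 0.167
print(should_call(p_win, pot=100, to_call=20))   # True
print(should_call(p_win, pot=100, to_call=50))   # False, since 50 / 150 is about 0.333
```

The same check applies at every decision point, which is why such rules can be written down once and followed mechanically.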

As good as they are, however, the new breed of poker players is still human. No matter how much they value the power of probabilities, occasionally they succumb to ego, emotion, fear, greed, and other mistake‐inducing issues, not to mention the limited processing power of the human brain. In other words, as much as this new breed has improved on the old approach by reducing the number of mistakes they make, in the end they remain human. And by human, I mean flawed.

To remove the potential for these flaws, one of the six players at a poker table decides to bankroll a Cray supercomputer to play in his place. He programs it to make decisions based on the probabilities of the game, while also incorporating information it gathers in real time about the other players' facial tics, posture, body heat, heart rate, chemical levels, and other physical signs of stress and excitement, and analyzing how those signals correlate with their betting behavior and with the cards they hold relative to everyone else. You can imagine the advantage the computer has over the rest of the players, but let's take a moment to understand what that advantage really is.

The computer has a competitive advantage, not because it does things better than the other players, but because it does them with fewer mistakes. Its edge depends on other players at the table making decisions differently from how they should be made while the Cray makes them as they should be. After watching the pot gravitate toward the Cray and away from the humans, another player decides to bankroll a supercomputer of his own, leaving two computers and four humans at the table. The computers' gains are limited to what the humans have left, so the potential for Cray 1 has just shrunk: its future earnings will be split with Cray 2.

Eventually, all the players choose to step out of the game, and instead replace themselves with Cray supercomputers of their own. At that moment, every player (computer) is making decisions based solely on the probabilities. No more “reads” of the players are possible. The outcome is now exclusively a function of chance. Skill plays no role whatsoever. Think about that for a moment. When the humans were removed from the game, so too were mistakes. Without the mistakes, skill no longer plays a role. The game is reduced to purely one of chance or luck, like betting on the roll of fair dice. In other words, in order for one player to have a competitive advantage attributable to skill, at least one of the other players must be making mistakes. That mistake is what makes skill possible. Take away the mistake, and all that is left is luck.
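The claim that skill exists only when someone errs can be checked with a deliberately crude toy model; it is an illustration, not a model of real poker. Assume (hypothetically) that two players each receive a random hand, that the better hand wins the pot when both play correctly, and that each player has a “mistake rate,” the probability of folding a hand that would have won. Player A wins when A holds the better hand and keeps it, or when B holds the better hand and folds it. Working that through, A's expected result per unit of pot equals B's mistake rate minus A's. When both rates are zero, the edge is zero and only luck decides each hand. The names `expected_edge` and `simulate` are illustrative.

```python
import random


def expected_edge(mistakes_a: float, mistakes_b: float) -> float:
    """Player A's expected result per hand (pot of 1) in the toy model."""
    p_a_wins = 0.5 * (1 - mistakes_a) + 0.5 * mistakes_b
    return p_a_wins - (1 - p_a_wins)  # algebraically, mistakes_b - mistakes_a


def simulate(mistakes_a: float, mistakes_b: float, hands: int, seed: int = 0) -> float:
    """Average result per hand for player A over many simulated hands."""
    rng = random.Random(seed)
    total = 0
    for _ in range(hands):
        a_has_better_hand = rng.random() < 0.5
        if a_has_better_hand:
            a_wins = rng.random() >= mistakes_a  # A keeps the winner unless A errs
        else:
            a_wins = rng.random() < mistakes_b   # A wins only if B folds the winner
        total += 1 if a_wins else -1
    return total / hands


# Two "Crays" making no mistakes: no edge, only luck.
print(expected_edge(0.0, 0.0))                        # 0.0
# A Cray against a human who folds winners 10% of the time.
print(round(expected_edge(0.0, 0.10), 3))             # 0.1
print(round(simulate(0.0, 0.10, hands=100_000), 2))   # close to 0.1
```

Any single hand still swings by a full pot either way; the mistake rates only shift the long‐run average, which is exactly the distinction between luck and skill drawn above.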

Imagine for a moment that one of the players who bankrolls a Cray decides he can improve on the computer's performance by overriding it when he senses an opportunity the computer isn't programmed to capitalize on, or perhaps by turning it off for a while when the machine is in the midst of a bad run. Although either decision may appeal to our intuition (because we have been taught to take action like this), both are weaknesses. We might rationalize the action by calling it a combination of “art” and science, but when we do, what we are really saying is that some of our decisions are supported by evidence while others are driven by unfounded beliefs.

This applies to markets as much as it does to a poker game. What skilled managers are capitalizing on are the mistakes of others. Remove humans from the markets and you remove the ability to create a competitive edge. Skill ceases to exist. If our goal is to make fewer mistakes, the objective is to make human intelligence behave more like artificial intelligence. To do so, we must focus on process.

Blind to Our Blindness

A wealthy woman was walking through an upscale part of town carrying a purse containing a great deal of cash when suddenly a man came up behind her, grabbed the purse, and took off with it. Luckily, she was able to get a good look at him and provided the police with a detailed description. The perpetrator was a man between 20 and 30 years old, just over 6 feet tall, with red hair and a pronounced limp. A man standing across the street witnessed the crime and provided the exact same description to another police officer, thereby corroborating the evidence.

A few hours later, police officers spotted a man matching all these characteristics coming out of an electronics store carrying a large television. When they discovered that he had paid cash for the TV, they arrested him. During the trial, the prosecution called a statistician to the stand and asked, “Sir, can you tell us what the probability is of someone matching all the characteristics of the perpetrator?” The expert witness responded, “Yes, it's rather simple. You take the probability of someone in this city matching each of the characteristics, and then multiply them all together. In this case, it results in a vanishingly small probability of 0.0002%.”
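The statistician's arithmetic is the product rule for independent events. The chapter does not give the individual frequencies, so the four values below are hypothetical, chosen only because they multiply to the 0.0002% quoted. The rule also assumes the traits occur independently; if any of them tend to occur together, multiplying would understate how common the combination really is.

```python
from fractions import Fraction
from math import prod

# Hypothetical share of the city's population with each trait (not from the case).
trait_frequencies = {
    "man aged 20 to 30": Fraction(1, 5),
    "just over 6 feet tall": Fraction(1, 10),
    "red hair": Fraction(1, 50),
    "pronounced limp": Fraction(1, 200),
}

# Product rule: valid only if the traits are independent of one another.
p_match = prod(trait_frequencies.values())

print(p_match)               # 1/500000
print(float(p_match * 100))  # 0.0002 (percent)
```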

Given that this was all the evidence available in this case, it was understandable that jurors found the defendant guilty. Unfortunately, given the evidence provided, there is a 95% chance that an innocent man was imprisoned as a result.

The mistake made by these jurors has been made hundreds, and possibly thousands, of times over the past three decades in cases where DNA was the only evidence presented. The phenomenon is now known as the “prosecutor's fallacy,” and it occurs when jurors, and even defense attorneys, replace the question they are meant to answer with one framed by the prosecutor. Here is how it works.

As it relates to the case of the stolen purse, the jury is tasked with answering, “What is the probability the defendant is innocent, given that he matches all the characteristics?” Instead, the prosecutor asks the statistician, “What is the probability of matching all the characteristics, given that a person is innocent?” The two questions sound, if not identical, then so close in meaning that arguing otherwise would seem pedantic. And that is exactly what the prosecution intends.

How could the appropriate question be answered correctly? It requires asking just a few more questions before drawing a conclusion. How many people live in this city? 10 million. How many of them match all the characteristics? 10,000,000 × 0.0002% = 20. Of those 20, how many are guilty? Just one. How many of them are innocent? 19 of the 20, or 95%. Therefore, the answer to the question posed to this and all other juries, “Given the evidence, what is the probability the defendant is innocent?” is 95%.
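The head count above fits in a few lines. This sketch follows the chapter's own simplification: 20 people in the city match every trait, exactly one of them is the thief, and the defendant is equally likely to be any of the 20. Exact fractions keep 0.0002% free of floating‐point noise, and printing both quantities shows how far apart the prosecutor's question and the jury's question really are. The function name is illustrative.

```python
from fractions import Fraction

POPULATION = 10_000_000
MATCH_RATE = Fraction(2, 1_000_000)  # 0.0002% = 0.000002


def probability_innocent(population: int, match_rate: Fraction, guilty: int = 1) -> Fraction:
    """Jury's question, P(innocent | matches), answered by counting who matches."""
    matching = population * match_rate  # number of people who match every trait
    return (matching - guilty) / matching


# Prosecutor's question: P(matches | innocent), roughly the match rate itself.
print(float(MATCH_RATE))                                    # 2e-06
# Jury's question: P(innocent | matches).
print(float(probability_innocent(POPULATION, MATCH_RATE)))  # 0.95
```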

It's a simple mistake, but one that has resulted in people actually being put to death. Unfortunately, it isn't the only mistake on display in this case.

Knowing that there is just a 5% chance that the defendant is guilty given the evidence provided, most people would render a verdict of innocence. But what if the probability of guilt weren't nearly as low? At what point would you decide to send the man to prison? At a 50% probability that he is guilty? 80%? 90%? In other words, how certain of his guilt would you need to be before rendering a guilty verdict?

Now let's suppose that instead of being on trial for purse snatching, he is on trial for murder, and if he is found guilty, he will be put to death. Knowing that you are deciding whether this man lives or dies, would you perhaps raise the threshold? What if the murder occurred in the small town where both you and the defendant live? Would the possibility of letting a murderer go free to kill again, with the next victim potentially being one of your family members or friends, lead you to lower the threshold? What if the victim's family were sitting in the courtroom, sobbing and pleading for closure? Would you lower the threshold again? What if the defendant were your mother, your child, or you? Would you want to raise the threshold for judging guilt to perhaps 100% certainty? In each of these scenarios, the probability of the defendant's guilt is unchanged. Indeed, your opinion about whether he is guilty is also unaltered. The only thing that changes is the level at which you decide that a defendant should be judged “guilty” and pay for the crime, rather than “innocent” and return home (Figure 2.1).

Figure 2.1 Guilt threshold influence on verdict.

SOURCE: Bija Advisors.

For a trial to be fair, the jury must not only calculate the odds of guilt properly based on the evidence provided, but also apply the same threshold to all of its verdicts. As you've seen, unless that threshold is set before the facts of the case are known, there is an excellent chance it will be adjusted as they come to light. This means jurors are vulnerable to two separate and distinct types of mistakes: one relates to properly calculating the odds of guilt, while the other has to do with consistently setting the bar for a guilty verdict.

What if, instead of presenting the evidence to jurors, we were to enter it into a computer that would then run a simple algorithm to accurately ascertain the probability of guilt? That probability could then be compared to a threshold agreed upon through a popular vote and applied consistently in all cases. Where the defendant is judged guilty, a standard sentence would be applied according to the rule of law. In other words, we would remove the potential for human error and bias from the system by removing humans from the process. No more jurors. No more judges.
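The system described above reduces to two steps: estimate the probability of guilt, then compare it with a threshold fixed before any case is heard. In this minimal sketch the 90% threshold is a hypothetical placeholder for whatever a popular vote would set, and the probability is taken as an input because the chapter leaves the estimating algorithm unspecified. The design point is that the threshold is a constant: nothing about the defendant, the victim's family, or the juror's hometown can move it.

```python
from fractions import Fraction

# Hypothetical threshold, agreed before any case is heard and applied to every case.
GUILT_THRESHOLD = Fraction(9, 10)


def verdict(p_guilty: Fraction, threshold: Fraction = GUILT_THRESHOLD) -> str:
    """Return the verdict for a given probability of guilt under a fixed threshold."""
    if not 0 <= p_guilty <= 1:
        raise ValueError("p_guilty must be a probability between 0 and 1")
    return "guilty" if p_guilty >= threshold else "innocent"


# The purse-snatching defendant: 1 chance in 20 of guilt.
print(verdict(Fraction(1, 20)))   # innocent
# Stronger evidence clears the bar, whoever is on trial.
print(verdict(Fraction(19, 20)))  # guilty
```

Passing a different `threshold` for a particular case would reintroduce exactly the inconsistency illustrated in Figure 2.1, which is why the sketch makes the agreed value the default rather than something decided case by case.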

Would you be in favor of such a change? If not, why not?

An overwhelming majority of the people to whom I have posed this question over the years have answered with an emphatic “No.” The question is, why would you not want to remove the potential for bias to creep into such an important decision? Could it be that you feel the bias would likely work in your favor if you were to find yourself on trial? Perhaps you believe that if you simply had an opportunity to explain yourself, to appeal to a human being's compassion, they would see that you aren't a bad person and make concessions in your case. Fair enough (pun intended).

It's only natural that you would feel this way. We humans are optimists by nature. That is why we tend to fall short of our expectations, why we launch businesses at such an extraordinary rate in spite of the overwhelming odds against success, and why projects typically come in late and over budget. It's this optimism bias, and an unrealistic belief in our decision‐making prowess, that makes so many people distrustful of self‐driving cars and hesitant to turn investment decisions over to machines, or even to a systematic decision‐making process. The fear is that the machine could break down or make a mistake. Instead, we want the ability to override the machine, to step in and make an adjustment if we feel it isn't properly assessing the situation. Perhaps it is misinterpreting or even ignoring some nuanced information. Because the machine can't make a snap judgment on the fly, it's thought to be inferior to the more flexible, creative human brain.

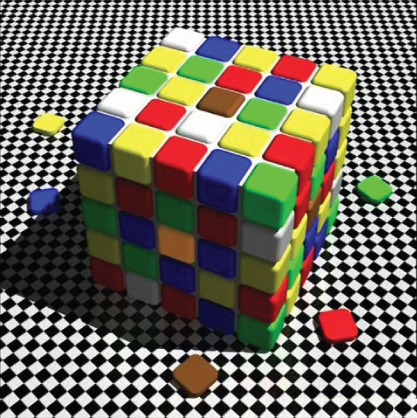

In the Rubik's Cube in Figure 2.2, we are interested in accurately assessing the color of the center pieces. If you are like most people, you see the top center piece as a solid brown, while the center piece on the side facing you is a rather bright orange. No deliberation is required; no uncertainty exists. After all, we can see it with our own eyes, the ultimate arbiter of reality. In court cases, nothing trumps the power of an unbiased eyewitness account. Unless we can see something for ourselves, a seed of doubt always remains. Unfortunately, even if our eyes see things correctly, our brain doesn't always do a great job of processing the information they deliver. What's worse, we are often blind to our own blindness. Such is the case with this Rubik's Cube.

Figure 2.2 Rubik's Cube.

SOURCE: Image by Dr. R. Beau Lotto.

The reality is, those two squares are exactly the same color, represented by the exact same hexadecimal code. When I remove everything else from the image, your brain is able to “see” the squares as the brown squares they truly are (Figure 2.3). Yet even now that you know about this shortcoming in how your brain processes the information, when you look back at the original image, you still cannot see the squares as they truly are. Go ahead and try. You can't make the adjustment. You can't reprogram your brain to process the information correctly. However smart and educated we are, we remain flawed.

Figure 2.3 The brown and orange squares.

SOURCE: Image by Dr. R. Beau Lotto.

However, if I were to use a simple tool like Photoshop's eyedropper, I would know with great certainty that those two squares are the exact same color, as evidenced by the hexadecimal code #905822 (Figure 2.4).
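An eyedropper does nothing more mysterious than read the stored value of a pixel and report it in hexadecimal. The sketch below models an image as a tiny grid of RGB tuples; the grid, its coordinates, and the function names are hypothetical stand‐ins for the real Rubik's Cube image, with both sampled squares set to the #905822 value reported above.

```python
Pixel = tuple[int, int, int]


def to_hex(pixel: Pixel) -> str:
    """Format an (R, G, B) pixel the way an eyedropper tool reports it."""
    r, g, b = pixel
    return f"#{r:02X}{g:02X}{b:02X}"


def eyedropper(image: list[list[Pixel]], x: int, y: int) -> str:
    """Read the pixel at column x, row y and return its hex code."""
    return to_hex(image[y][x])


BROWN = (0x90, 0x58, 0x22)  # #905822
# Hypothetical 2x2 "image": two squares share one stored value, while the other
# pixels stand in for the surrounding context that makes one of them look orange.
image = [
    [BROWN, (255, 255, 255)],
    [(40, 40, 40), BROWN],
]

top, side = eyedropper(image, 0, 0), eyedropper(image, 1, 1)
print(top, side, top == side)  # #905822 #905822 True
```

The measurement ignores the surrounding pixels entirely, which is precisely why it is immune to the context that fools the eye.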

Figure 2.4 Hexadecimal code of the two squares.

This is an excellent example of how technology can help us improve our decision‐making. Unfortunately, we rarely let it. You see, in order to decide to ignore what our own eyes tell us, to override our own intuition and assessment of the world, we must first recognize that we are doing it wrong. We must believe that we are making a mistake that requires fixing.

In the case of the Rubik's Cube, you are probably so certain that you are correctly identifying the tile colors as brown and orange that even now you are questioning whether I am playing a trick on you. Perhaps I replaced the orange tile with a brown one when I dimmed the rest of the image. Even after seeing the Photoshop demonstration, a seed of doubt remains.

To most of us, visual illusions are little more than parlor tricks. They are interesting; we snicker at them and share them, but we rarely accept that the same kinds of errors happen to us dozens of times a day, even when we face decisions of great consequence. The only true way to avoid mistakes like these is to accept that we are as vulnerable as everyone else, to identify the moments when we are particularly likely to falter, and to prepare ahead of time either to overcome them or, at a minimum, to reduce their impact. Only when we accept our blindness can we truly see.

Minimizing Effort

At Radboud University in the Netherlands, researchers discovered something interesting about the human brain as it relates to visual information. It turns out that we see not just with our eyes, but with the brain as well. In Figure 2.5, no triangle exists, but one is implied by the placement of the three Pac‐Man shapes relative to each other. Before these findings, it was believed that the brain simply filtered the information that came in through the eyes, but now we've come to realize that it actually interprets it. That was the big news, but it was the ancillary finding that I think is far more fascinating, and more relevant to our job of interpreting what we see in markets.

Figure 2.5 What the brain sees.

It turns out that the group of three Pac‐Man shapes triggers less brain activity than the one all by itself, because triangles and circles are far more prevalent in the world than Pac‐Man shapes. The single Pac‐Man shape is unexpected and therefore requires more processing by our brain. In other words, if something appears easy to explain, even if it's simply due to familiarity, less brain activity is needed to process that information, compared to when something is unexpected or difficult to account for. That is the cognitive ease our brains seek to achieve, and as we will discover, it is what leads to so many of the mistakes that we make in our decisions and keeps us from achieving so many of our goals.