CHAPTER TEN: Using Libraries and Their APIs: Speech Recognition and Maps

Chapter opener image: © Fon_nongkran/Shutterstock

Introduction

The Android framework provides some powerful libraries for app developers. In this chapter, we learn how to use some of these libraries. We explore libraries for speech recognition and maps. We build a simple app including two activities. The first activity asks the user to say a location and compares it to a list of locations. If there is a match, the second activity displays a map with annotations for that location.

10.1 Voice Recognition

In order to test an app that involves speech recognition, we need an actual device: the emulator cannot recognize speech. Furthermore, some devices may not have speech recognition capabilities, so we should test for that. When the user says a word, the speech recognizer returns a list of possible matches for that word, which we can then process. Our code can shape that list; for example, we can specify the maximum number of returned items, and we can retrieve a confidence level for each of them. FIGURE 10.1 shows Version 0 of our app, using the standard user interface for speech recognition, running on a tablet.

FIGURE 10.1 Show A Map app, Version 0, running on a tablet, expecting the user to speak

TABLE 10.1 Selected constants of the RecognizerIntent class

| Constant | Description |
|---|---|
| ACTION_WEB_SEARCH | Use with an activity that searches the web with the spoken words. |
| ACTION_RECOGNIZE_SPEECH | Use with an activity that asks the user to speak. |
| EXTRA_MAX_RESULTS | Key to specify the maximum number of results to return. |
| EXTRA_PROMPT | Key to add text next to the microphone. |
| EXTRA_LANGUAGE_MODEL | Key to specify the language model. |
| LANGUAGE_MODEL_FREE_FORM | Value that specifies regular speech. |
| LANGUAGE_MODEL_WEB_SEARCH | Value that specifies that spoken words are used as web search terms. |
| EXTRA_RESULTS | Key to retrieve an ArrayList of results. |
| EXTRA_CONFIDENCE_SCORES | Key to retrieve a confidence scores array for results. |

The RecognizerIntent class includes constants to support speech recognition when starting an intent. TABLE 10.1 lists some of these constants. Most of them are Strings.

To create a speech recognition intent, we use the ACTION_RECOGNIZE_SPEECH constant as the argument of the Intent constructor, as in the following statement:

```java
Intent intent = new Intent( RecognizerIntent.ACTION_RECOGNIZE_SPEECH );
```

To create a web search intent that uses speech input, we use the ACTION_WEB_SEARCH constant of the RecognizerIntent class as the argument of the Intent constructor, as in the following statement:

```java
Intent searchIntent = new Intent( RecognizerIntent.ACTION_WEB_SEARCH );
```

Once we have created the intent, we can refine it by placing data in it using the putExtra method, using constants of the RecognizerIntent class as keys for these values. For example, if we want to display a prompt, we use the key EXTRA_PROMPT and provide a String value for it as in the following statement:

```java
intent.putExtra( RecognizerIntent.EXTRA_PROMPT, "What city?" );
```

We can use the EXTRA_LANGUAGE_MODEL constant as a key, with the value LANGUAGE_MODEL_FREE_FORM to tell the system to interpret the spoken words as regular speech, or with the value LANGUAGE_MODEL_WEB_SEARCH to interpret them as a string of web search terms.

TABLE 10.2 Useful classes and methods to assess if a device recognizes speech

| Class | Method | Description |
|---|---|---|
| Context | PackageManager getPackageManager( ) | Returns a PackageManager instance. |
| PackageManager | List queryIntentActivities( Intent intent, int flags ) | Returns a List of ResolveInfo objects representing the activities that can handle intent. The flags parameter can be specified to restrict the results returned. |
| ResolveInfo | | Contains information describing how well an intent is matched against an intent filter. |

Not all devices support speech recognition. When building an app that uses speech recognition, it is a good idea to check if the Android device supports it.

We can use the PackageManager class, from the android.content.pm package, to retrieve information related to application packages installed on a device, in this case related to speech recognition. The getPackageManager method, from the Context class, which Activity inherits from, returns a PackageManager instance. Inside an activity, we can use it as follows:

```java
PackageManager manager = getPackageManager( );
```

Using a PackageManager reference, we can call the queryIntentActivities method, shown in TABLE 10.2 along with the getPackageManager method and the ResolveInfo class, to test if a type of intent is supported by the current device. We specify the type of intent as the first argument to that method, and it returns a list of ResolveInfo objects, one object per activity supported by the device that matches the intent. If that list is not empty, the type of intent is supported. If that list is empty, it is not.

We can use the ACTION_RECOGNIZE_SPEECH constant of the RecognizerIntent class to build an Intent object and pass that Intent as the first argument of the queryIntentActivities method. We pass 0 as the second argument, which does not place any restriction on what the method returns. Thus, we can use the following pattern to test if the current device supports speech recognition:

```java
Intent intent = new Intent( RecognizerIntent.ACTION_RECOGNIZE_SPEECH );
List<ResolveInfo> list = manager.queryIntentActivities( intent, 0 );
if( list.size( ) > 0 ) { // speech recognition is supported
  // ask the user to say something and process it
} else {
  // provide an alternative way for user input
}
```

10.2 Voice Recognition Part A, App Version 0

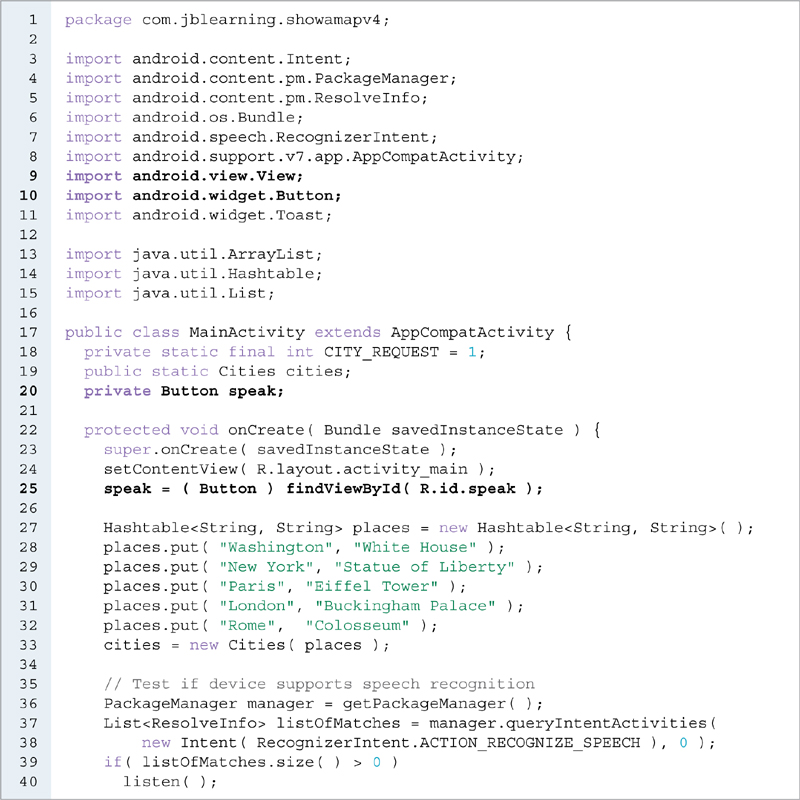

In Version 0 of our app, we have only one activity: we ask the user to say a word and output a list of possible matches for that word to Logcat. Once again, we choose the Empty Activity template; this will change in Version 1, when we add a map to our app. EXAMPLE 10.1 shows the MainActivity class. It is divided into three methods:

▸ In the onCreate method (lines 17–32), we set the content View and check if speech recognition is supported. If it is, we call the listen method. If it is not, we display a message to that effect.

▸ In the listen method (lines 34–42), we set up the speech recognition activity.

▸ In the onActivityResult method (lines 44–63), we process the list of words understood by the device after the user speaks.

At line 22, we get a PackageManager reference. With it, we call queryIntentActivities at lines 23–24, passing an Intent of type RecognizerIntent.ACTION_RECOGNIZE_SPEECH, and assign the resulting list to listOfMatches. At line 25, we test whether listOfMatches contains at least one element, which means that the current device supports speech recognition, and if so, we call listen at line 26. If not, we show a temporary message at lines 28–30. We use the Toast class, shown in TABLE 10.3, a convenient class for showing a quick message on the screen for a short period of time while still letting the user interact with the current activity.

EXAMPLE 10.1 The MainActivity class, capturing a word from the user, Version 0

TABLE 10.3 Constants and selected methods of the Toast class

| Constant | Description |
|---|---|
| LENGTH_LONG | Use this constant for a Toast lasting 3.5 seconds. |
| LENGTH_SHORT | Use this constant for a Toast lasting 2 seconds. |

| Method | Description |
|---|---|
| static Toast makeText( Context context, CharSequence message, int duration ) | Static method that creates a Toast in context displaying message; duration is either LENGTH_SHORT or LENGTH_LONG. |
| void show( ) | Shows this Toast. |

TABLE 10.4 The putExtra and get...Extra methods of the Intent class

| Method | Description |
|---|---|
| Intent putExtra( String key, DataType value ) | Stores value in this Intent, mapping it to key. |
| Intent putExtra( String key, DataType [ ] values ) | Stores the array values in this Intent, mapping it to key. |
| DataType getDataTypeExtra( String key, DataType defaultValue ) | Retrieves the value that was mapped to key. If there is none, defaultValue is returned. DataType is a primitive data type, as in getIntExtra; getStringExtra( String key ) takes no default value and returns null if there is no mapping. |
| DataType [ ] getDataTypeArrayExtra( String key ) | Retrieves the array that was mapped to key, or null if there is none. |

Inside the listen method, at lines 35–36, we create and instantiate listenIntent, a speech recognition intent. Before starting an activity for it at line 41, we set a few of its attributes at lines 37–40 by calling the putExtra methods of the Intent class, shown in TABLE 10.4. At line 37, we assign the value What city? to the EXTRA_PROMPT key so that it shows on the screen next to the microphone. At lines 38–39, we specify that the expected speech is regular human speech by setting the value of the key EXTRA_LANGUAGE_MODEL to LANGUAGE_MODEL_FREE_FORM. At line 40, we use the EXTRA_MAX_RESULTS key to set the maximum number of results in the returned list to 5. We start the activity for listenIntent at line 41 and associate it with the request code 1, the value of the constant CITY_REQUEST (line 15). When that activity finishes, the onActivityResult method is called automatically, and the value of its first parameter should be that request code, 1.

After the listen method executes, the user is presented with a user interface showing a microphone and the extra prompt, in this example What city?, and has a few seconds to say something. After that, execution continues inside the onActivityResult method, inherited from the Activity class. At line 46, we call the superclass's onActivityResult method; it does nothing at the time of this writing, but that could change in the future. At line 47, we test whether this method call corresponds to our earlier activity request and whether the request was successful: we check the request code, stored in the requestCode parameter, and the result code, stored in the resultCode parameter. If they are equal to 1 (the value of CITY_REQUEST) and RESULT_OK, respectively, then we know that the call is for the correct activity and that it was successful. RESULT_OK is a constant of the Activity class whose value is -1; it can be used to test whether an activity finished successfully. The third parameter of the method is an Intent reference, through which we retrieve the results of the speech recognition process. At lines 48–50, we retrieve the list of possible words understood by the speech recognizer and assign it to the ArrayList returnedWords: we call the getStringArrayListExtra method with data, the Intent parameter of the method, using the EXTRA_RESULTS key, shown in Table 10.1, to retrieve its corresponding value, an ArrayList of Strings. At lines 51–53, we retrieve the array of corresponding confidence scores assessed by the speech recognizer and assign it to the array scores.

In this version, we output the elements of returnedWords along with their scores to Logcat at lines 55–61.
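To make the logic at lines 47 and 55–61 concrete outside of Android, here is a minimal plain-Java sketch. The class RecognitionResults and its two methods are hypothetical helpers, not part of Example 10.1; its constants mirror the values given in the text (CITY_REQUEST is 1 and RESULT_OK is -1). Because a recognizer may not supply confidence scores, in which case getFloatArrayExtra returns null, the sketch tolerates a null or short scores array.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper mirroring the checks and output of onActivityResult in Version 0.
class RecognitionResults {
    static final int CITY_REQUEST = 1; // request code used when starting the activity
    static final int RESULT_OK = -1;   // same value as Activity.RESULT_OK

    // True only when the callback answers our own request and that request succeeded.
    static boolean isSuccessfulCityRequest(int requestCode, int resultCode) {
        return requestCode == CITY_REQUEST && resultCode == RESULT_OK;
    }

    // Builds one "word: score" line per returned word, the kind of text we log to Logcat.
    // Missing scores (null array or fewer scores than words) are shown as "n/a".
    static List<String> formatResults(List<String> words, float[] scores) {
        List<String> lines = new ArrayList<>();
        for (int i = 0; i < words.size(); i++) {
            String score = (scores != null && i < scores.length)
                    ? String.valueOf(scores[i])
                    : "n/a";
            lines.add(words.get(i) + ": " + score);
        }
        return lines;
    }
}
```

Inside onActivityResult, we would call isSuccessfulCityRequest( requestCode, resultCode ) first and then log each string returned by formatResults.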

We do not need the Hello world! label. Thus, we edit the activity_main.xml file and remove the TextView element.

We should not expect the device's speech recognition to be perfect. Sometimes the speech recognizer understands correctly, and sometimes it does not. The same word, said slightly differently or with a different accent, can also be understood differently. FIGURE 10.2 shows a possible Logcat output when running the app and saying Washington, while FIGURE 10.3 shows a possible Logcat output when running the app and saying Paris.

So when we process the user’s speech, it is important to take these possibilities into account. There are many ways to do this. One is to provide feedback to the user by writing the word or words on the screen, or even having the device repeat them, to make sure the device understood correctly. However, this process takes extra time and can annoy the user. Another is to compare all the elements of the returned list to our own list of expected words and process the first matching word; we do that in Version 3. A third strategy is to try to process the elements of the list one at a time until we succeed in processing one.
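The third strategy can be sketched in plain Java as follows, using a hypothetical CandidateProcessor class. We walk through the recognized words in order, assuming they arrive in order of decreasing confidence, and hand each one to a processing step, represented here by a Predicate that returns true on success. The second strategy is the special case in which the predicate simply checks membership in our own list of expected words.

```java
import java.util.List;
import java.util.Optional;
import java.util.function.Predicate;

// Hypothetical helper: tries recognized words one at a time until one is processed successfully.
class CandidateProcessor {
    // Returns the first candidate accepted by tryProcess, or Optional.empty() if none is.
    static Optional<String> processFirstSuccessful(List<String> candidates,
                                                   Predicate<String> tryProcess) {
        for (String candidate : candidates) {   // most likely words are tried first
            if (tryProcess.test(candidate)) {
                return Optional.of(candidate);  // stop at the first success
            }
        }
        return Optional.empty();
    }
}
```

Passing a predicate keeps the loop independent of what "processing" means, whether that is a lookup in a list of cities or an attempt to display a map.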

FIGURE 10.2 Possible Logcat output from Example 10.1 when the user says Washington

FIGURE 10.3 Possible Logcat output from Example 10.1 when the user says Paris

Note that if the user does not speak within a few seconds, the user interface showing the microphone goes away, and it is then too late to speak. To give the user more control, we can include a button in the user interface that, when clicked, calls the listen method and brings up the microphone again.

10.3 Using the Google Maps Activity Template, App Version 1

The com.google.android.gms.maps and com.google.android.gms.maps.model packages include classes to display a map and place annotations on it. Depending on which version of Android Studio we use, we may need to update Google Play services and Google APIs using the SDK manager. Appendix C shows these steps.

The Google Maps Activity template already includes the skeleton code to display a map. Furthermore, it makes the necessary Google Play services libraries available to the project in the build.gradle (Module: app) file, as shown in Appendix C. When creating the project, instead of choosing the Empty Activity template as we usually do, we choose the Google Maps Activity template (see FIGURE 10.4). As FIGURE 10.5 shows, the default names for the Activity class, the layout XML file, and the app title are MapsActivity, activity_maps.xml, and Map, respectively.

In addition to the usual files, the google_maps_api.xml file is also automatically generated, and is located in the values directory. EXAMPLE 10.2 shows it.

FIGURE 10.4 Choosing the Google Maps Activity template

FIGURE 10.5 The names of the main files created by the template

EXAMPLE 10.2 The google_maps_api.xml file, Show A Map app, Version 1

FIGURE 10.6 Lines 18–19 of the google_maps_api.xml file, with the key partially hidden

At line 18, we replace YOUR_KEY_HERE with an actual key obtained from Google by following the instructions in Appendix C. After editing, the author’s lines 18–19 look like those shown in FIGURE 10.6.

The layout file automatically generated by the template is called activity_maps.xml, as shown in EXAMPLE 10.3. It is typical to place a map inside a fragment (line 1). The type of fragment used here is a SupportMapFragment (line 2), a subclass of the Fragment class and the simplest way to include a map in an app. It occupies its whole parent View (lines 6–7) and has an id (line 1). Thus, we can retrieve it programmatically and change the characteristics of the map, for example, move the center of the map, or add annotations to it.

EXAMPLE 10.3 The activity_maps.xml file, Show A Map app, Version 1

The AndroidManifest.xml file already includes two additional elements, uses-permission and meta-data, to retrieve maps from Google Play services, as shown in EXAMPLE 10.4. The main additions as compared to the typical skeleton AndroidManifest.xml file are as follows:

▸ The activity element is for MapsActivity.

▸ There is a uses-permission element specifying that the app can access the current device location.

▸ There is a meta-data element that specifies the Google Maps API key.

To request a permission in the AndroidManifest.xml file, we use the following syntax:

```xml
<uses-permission android:name="permission_name"/>
```

TABLE 10.5 lists some String constants of the Manifest.permission class. In the manifest, we use their values, such as android.permission.INTERNET or android.permission.ACCESS_FINE_LOCATION, in lieu of permission_name.

EXAMPLE 10.4 The AndroidManifest.xml file, Show A Map app, Version 1

TABLE 10.5 Selected constants from the Manifest.permission class

| Constant | Description |
|---|---|
| INTERNET | Allows an app to access the Internet. |
| ACCESS_NETWORK_STATE | Allows an app to access information about networks. |
| WRITE_EXTERNAL_STORAGE | Allows an app to write to external storage. |
| ACCESS_COARSE_LOCATION | Allows an app to access an approximate location for the device derived from cell towers and Wi-Fi. |
| ACCESS_FINE_LOCATION | Allows an app to access a precise location for the device derived from GPS, cell towers, and Wi-Fi. |
| RECORD_AUDIO | Allows an app to record audio. |

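Each app must declare the permissions it requires. On versions of Android before 6.0 (API level 23), when a user installs an app, the user is told about all the permissions that the app requires and has the opportunity to cancel the install if he or she does not feel comfortable granting them. For apps that target Android 6.0 or higher and run on such devices, dangerous permissions, such as ACCESS_FINE_LOCATION and RECORD_AUDIO, must also be requested at run time, and the user can grant or deny each one when the app asks for it.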

At lines 9–10, the automatically generated code includes a uses-permission element so that the app can access the device location using the GPS. At lines 29–31, it includes a meta-data element that specifies the Google Maps API key. At lines 33–35, it defines an activity element for our MapsActivity activity, and at lines 36–40, it specifies that MapsActivity is the first activity to be launched when the app starts.

The com.google.android.gms.maps package provides us with classes to show maps and modify them. TABLE 10.6 shows some of its selected classes. The LatLng class is part of the com.google.android.gms.maps.model package.

TABLE 10.6 Selected classes from the com.google.android.gms.maps and the com.google.android.gms.maps.model packages

| Class | Description |
|---|---|
| SupportMapFragment | A fragment that contains a map. |
| GoogleMap | The main class for managing maps. |
| CameraUpdateFactory | Contains static methods to create CameraUpdate objects. |
| CameraUpdate | Encapsulates a camera move, such as a position change, a zoom level change, or both. |
| LatLng | Encapsulates a location on Earth using its latitude and longitude. |

TABLE 10.7 The getMapAsync method of the SupportMapFragment class and the onMapReady method of the OnMapReadyCallback interface

| Class or Interface | Method | Description |
|---|---|---|
| SupportMapFragment | void getMapAsync( OnMapReadyCallback callback ) | Sets up callback so that its onMapReady method will be called when the map is ready. |
| OnMapReadyCallback | void onMapReady( GoogleMap map ) | Called when the map is instantiated and ready to be used. Classes implementing OnMapReadyCallback must implement this method. |

The GoogleMap class is the central class for modifying the characteristics of a map and handling map events. Our starting point is a SupportMapFragment object, which we can either retrieve through its id from a layout or construct programmatically. In order to modify the map contained in that SupportMapFragment, we need a GoogleMap reference to it. We obtain one by calling the getMapAsync method of the SupportMapFragment class, shown in TABLE 10.7, which passes the GoogleMap to a callback once the map is ready.

The OnMapReadyCallback interface contains only one method, onMapReady, also shown in Table 10.7. The onMapReady method accepts one parameter, a GoogleMap. When the method is called, its GoogleMap parameter is instantiated and guaranteed not to be null.

The following code sequence will get a GoogleMap reference from a SupportMapFragment named fragment inside a class implementing the OnMapReadyCallback interface.

```java
// Assuming fragment is a reference to a SupportMapFragment
// and that this class "is a" OnMapReadyCallback
private GoogleMap map;

// for example, inside onCreate:
fragment.getMapAsync( this );

@Override
public void onMapReady( GoogleMap googleMap ) {
  map = googleMap; // guaranteed not to be null
}
```

In order to modify a map, for example, its center point and its zoom level, we use the animateCamera and moveCamera methods of the GoogleMap class, both of which take a CameraUpdate parameter. The CameraUpdate class encapsulates a camera move. The camera determines the part of the map we are looking at: if we move the camera, the map moves. We can also add annotations to a map using addMarker, addCircle, and other methods of the GoogleMap class. TABLE 10.8 shows some of these methods.

In order to create a CameraUpdate, we use static methods of the CameraUpdateFactory class. TABLE 10.9 shows some of these methods. A CameraUpdate can change the position, the zoom level, or both. Zoom levels typically range from 2.0 to 21.0, although the exact limits depend on the location and the map type. Zoom level 21.0 is the closest to the ground and shows the greatest level of detail.
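The relationship between zoom levels and scale can be made concrete with a small calculation. According to the Google Maps documentation, the whole world is roughly 256 dp wide at zoom level 0, and each additional level doubles that width, so at level N it is about 256 * 2^N dp. The hypothetical ZoomMath class below computes that width and clamps a requested level to the 2.0 to 21.0 range quoted above; in a real app, the getMinZoomLevel and getMaxZoomLevel methods of GoogleMap report the actual limits.

```java
// Hypothetical helpers illustrating Google Maps zoom levels.
class ZoomMath {
    // Bounds quoted in the text; real limits vary with location and map type.
    static final float MIN_ZOOM = 2.0f;
    static final float MAX_ZOOM = 21.0f;

    // Keeps a requested zoom level inside [MIN_ZOOM, MAX_ZOOM].
    static float clampZoom(float level) {
        return Math.max(MIN_ZOOM, Math.min(MAX_ZOOM, level));
    }

    // Approximate width of the whole world, in dp, at a given zoom level:
    // 256 dp at level 0, doubling with each additional level.
    static double worldWidthDp(float level) {
        return 256.0 * Math.pow(2.0, level);
    }
}
```

For example, at zoom level 10 the world is about 256 * 1024 = 262,144 dp wide, which is why a single zoomIn step visibly doubles the size of everything on the map.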

TABLE 10.8 Selected methods of the GoogleMap class

| Method | Description |
|---|---|
| void animateCamera( CameraUpdate update ) | Animates the camera position (and modifies the map) from the current position to update. |
| void animateCamera( CameraUpdate update, int ms, GoogleMap.CancelableCallback callback ) | Animates the camera position (and modifies the map) from the current position to update over ms milliseconds. If the animation is cancelled, the onCancel method of callback is called; if it completes, the onFinish method of callback is called. |
| void moveCamera( CameraUpdate update ) | Moves, without animation, the camera position (and modifies the map) from the current position to update. |
| void setMapType( int type ) | Sets the type of map to be displayed: the constants MAP_TYPE_NORMAL, MAP_TYPE_SATELLITE, MAP_TYPE_TERRAIN, MAP_TYPE_NONE, and MAP_TYPE_HYBRID of the GoogleMap class can be used for type. |
| Marker addMarker( MarkerOptions options ) | Adds a marker to this map and returns it; options defines the marker. |
| Circle addCircle( CircleOptions options ) | Adds a circle to this map and returns it; options defines the circle. |
| Polygon addPolygon( PolygonOptions options ) | Adds a polygon to this map and returns it; options defines the polygon. |
| void setOnMapClickListener( GoogleMap.OnMapClickListener listener ) | Sets a callback method that is automatically called when the user taps on the map; listener must be an instance of a class implementing the GoogleMap.OnMapClickListener interface and overriding its onMapClick( LatLng ) method. |

Often, we want to change the position of the center of the map. If we know the latitude and longitude coordinates of that point, we can use the LatLng class to create one using this constructor:

```java
LatLng( double latitude, double longitude )
```

Thus, if we have a GoogleMap reference named map, for example the one passed to onMapReady, we can change the position of the center of the map using the moveCamera method of the GoogleMap class as follows:

```java
// map is a GoogleMap reference, for example the one received in onMapReady;
// someLatitude and someLongitude represent some location on Earth
LatLng newCenter = new LatLng( someLatitude, someLongitude );
CameraUpdate update1 = CameraUpdateFactory.newLatLng( newCenter );
map.moveCamera( update1 );
```
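The LatLng constructor does not reject out-of-range values. Its documentation states that latitude is clamped to between -90 and +90 degrees and that longitude is normalized to between -180 degrees inclusive and +180 degrees exclusive. The following hypothetical SimpleLatLng class is a plain-Java stand-in, not the real LatLng, that reproduces only that input handling so we can see its effect.

```java
// Hypothetical stand-in for com.google.android.gms.maps.model.LatLng that mimics only
// its documented input handling: clamp latitude, wrap longitude into [-180, 180).
final class SimpleLatLng {
    final double latitude;
    final double longitude;

    SimpleLatLng(double latitude, double longitude) {
        // Latitude is clamped: values beyond the poles become +90 or -90.
        this.latitude = Math.max(-90.0, Math.min(90.0, latitude));
        // Longitude wraps around: shift into [0, 360), then back into [-180, 180).
        this.longitude = ((longitude + 180.0) % 360.0 + 360.0) % 360.0 - 180.0;
    }
}
```

With this behavior, a typo such as 190 instead of -170 for a longitude silently produces a valid but possibly unexpected location, so it is worth double-checking coordinates before building a LatLng.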

TABLE 10.9 Selected methods of the CameraUpdateFactory class

| Method | Description |
|---|---|
| static CameraUpdate newLatLng( LatLng location ) | Returns a CameraUpdate that moves a map to location. |
| static CameraUpdate newLatLngZoom( LatLng location, float zoom ) | Returns a CameraUpdate that moves a map to location with zoom as the zoom level. |
| static CameraUpdate zoomIn( ) | Returns a CameraUpdate that zooms in. |
| static CameraUpdate zoomOut( ) | Returns a CameraUpdate that zooms out. |
| static CameraUpdate zoomTo( float level ) | Returns a CameraUpdate that zooms to the zoom level level. |

We can then zoom in, using the zoomIn method of the CameraUpdateFactory class, as follows:

```java
CameraUpdate update2 = CameraUpdateFactory.zoomIn( );
map.animateCamera( update2 );
```

If we want to zoom directly to level 10, we can use the zoomTo method of the CameraUpdateFactory class as follows:

```java
CameraUpdate update3 = CameraUpdateFactory.zoomTo( 10.0f );
map.animateCamera( update3 );
```

EXAMPLE 10.5 shows the MapsActivity class. It extends FragmentActivity (line 13) and implements OnMapReadyCallback (line 14). The map-related classes are imported at lines 6–11. This class inflates the activity_maps.xml layout file and then places a marker at Sydney, Australia.

A GoogleMap instance variable, mMap, is declared at line 16. The onCreate method (lines 19–27) inflates the XML layout file at line 21, retrieves the map fragment at lines 24–25, and calls the getMapAsync method with it at line 26.

The onMapReady method (lines 29–48) is automatically called when the map is ready. We assign its GoogleMap parameter to our mMap instance variable at line 41. At line 44, we create a LatLng reference with latitude and longitude values for Sydney, Australia. At lines 45–46, we add a marker to the map whose title is “Marker in Sydney” at that location using chained method calls and an anonymous MarkerOptions object. TABLE 10.10 shows the constructor and various methods of the MarkerOptions class. This is equivalent to the following code sequence:

```java
MarkerOptions options = new MarkerOptions( );
options.position( sydney );
options.title( "Marker in Sydney" );
mMap.addMarker( options );
```

EXAMPLE 10.5 The MapsActivity class, Show A Map app, Version 1

TABLE 10.10 Selected methods of the MarkerOptions class

| Constructor | Description |
|---|---|
| MarkerOptions( ) | Constructs a MarkerOptions object. |

| Method | Description |
|---|---|
| MarkerOptions position( LatLng latLng ) | Sets the (latitude, longitude) position for this MarkerOptions. |
| MarkerOptions title( String title ) | Sets the title for this MarkerOptions. |
| MarkerOptions snippet( String snippet ) | Sets the snippet for this MarkerOptions. |
| MarkerOptions icon( BitmapDescriptor icon ) | Sets the icon for this MarkerOptions. |

FIGURE 10.7 Show A Map app, Version 1, running on a tablet

When the user touches the marker, the title is revealed in a callout. Finally, at line 47, we move the camera to the location on the map defined by the LatLng reference sydney, so that a map centered on Sydney, Australia, shows.

FIGURE 10.7 shows the app running on a tablet. The app can also run inside the emulator, but we may have to update Google Play services in order to do so.

10.4 Adding Annotations to the Map, App Version 2

In Version 2, we center the map on the White House in Washington, DC, set the zoom level, add a marker, and draw a circle around the center of the map. The only part of the project affected is the onMapReady method of the MapsActivity class. To do this, we use the addMarker and addCircle methods shown in Table 10.8. These two methods are similar: addMarker takes a MarkerOptions parameter, and addCircle takes a CircleOptions parameter. TABLE 10.11 shows selected methods of the CircleOptions class.

For both, we first use the default constructor to instantiate an object and then call the appropriate methods to define the marker or the circle. We must specify a position; otherwise, an exception is thrown at run time and the app stops. All the methods shown in both tables return a reference to the object calling the method, so method calls can be chained.
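Chaining works because each setter returns this. The following plain-Java sketch uses a hypothetical MarkerSpec class, with two doubles in place of a LatLng, to show the pattern. Its describe method stands in for addMarker and, like the real call described above, fails when no position has been set; the exception type, IllegalArgumentException, is a choice of this sketch.

```java
// Hypothetical builder in the style of MarkerOptions: every setter returns this,
// so calls can be chained, and a missing position is rejected when the options are used.
final class MarkerSpec {
    private Double latitude;   // null until position(...) is called
    private Double longitude;
    private String title = "";
    private String snippet = "";

    MarkerSpec position(double lat, double lng) {
        this.latitude = lat;
        this.longitude = lng;
        return this;           // returning this is what enables chaining
    }

    MarkerSpec title(String title) {
        this.title = title;
        return this;
    }

    MarkerSpec snippet(String snippet) {
        this.snippet = snippet;
        return this;
    }

    // Stand-in for GoogleMap.addMarker: fails fast when no position was set.
    String describe() {
        if (latitude == null || longitude == null) {
            throw new IllegalArgumentException("no position is specified");
        }
        return title + " (" + snippet + ") at " + latitude + ", " + longitude;
    }
}
```

A chained call such as new MarkerSpec( ).position( 38.8977, -77.0366 ).title( "White House" ) works only because position returns the same MarkerSpec on which title is then called.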

TABLE 10.11 Selected methods of the CircleOptions class

| Constructor | Description |
|---|---|
| CircleOptions( ) | Constructs a CircleOptions object. |

| Method | Description |
|---|---|
| CircleOptions center( LatLng latLng ) | Sets the (latitude, longitude) position of the center for this CircleOptions. |
| CircleOptions radius( double meters ) | Sets the radius, in meters, for this CircleOptions. |
| CircleOptions strokeColor( int color ) | Sets the stroke color for this CircleOptions. |
| CircleOptions strokeWidth( float width ) | Sets the stroke width, in pixels, for this CircleOptions. |

To add a circle to a GoogleMap named mMap, we can use the following code sequence:

```java
LatLng whiteHouse = new LatLng( 38.8977, -77.0366 );
CircleOptions options = new CircleOptions( );
options.center( whiteHouse );
options.radius( 500 );             // in meters
options.strokeWidth( 10.0f );      // in pixels
options.strokeColor( 0xFFFF0000 ); // opaque red, as an ARGB int
mMap.addCircle( options );
```

Because all the CircleOptions methods used previously return the CircleOptions reference that calls them, we can chain all these statements into one as follows:

```java
mMap.addCircle( new CircleOptions( ).center( new LatLng( 38.8977, -77.0366 ) )
    .radius( 500 ).strokeWidth( 10.0f ).strokeColor( 0xFFFF0000 ) );
```

The first format has the advantage of clarity. The second format is more compact. Many examples found on the web use the second format.

EXAMPLE 10.6 The MapsActivity class, Show A Map app, Version 2

FIGURE 10.8 The map of the White House with annotations, Show A Map app, Version 2

EXAMPLE 10.6 shows the updated MapsActivity class. At lines 40–44, we place a marker on the map, specifying its location, title, and snippet. We instantiate the MarkerOptions object options at line 40, set its attributes at lines 41–43, and add it to the map at line 44.

At lines 46–49, we place a circle on the map, specifying its center, its radius, its stroke width, and its color. We instantiate a CircleOptions object and chain calls to the center, radius, strokeWidth, and strokeColor methods at lines 46–48. The center of the circle is at the White House location, its radius is 500 meters, its stroke is 10 pixels wide, and its color is red. We add it to the map at line 49. Although we prefer the first coding style (the one we use for the marker), many developers use the second coding style, chaining method calls in one statement, so we should be familiar with both.
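The color value 0xFFFF0000 is a 32-bit ARGB integer: the top byte is the alpha (opacity) component, and the next three bytes are red, green, and blue. The hypothetical ArgbColor helpers below extract and pack those components with shifts and masks; on Android, the android.graphics.Color class offers equivalent static methods (alpha, red, green, blue, and argb), and Color.RED has this same value.

```java
// Hypothetical helpers showing the layout of a 32-bit ARGB color int such as 0xFFFF0000:
// bits 24-31 alpha, 16-23 red, 8-15 green, 0-7 blue.
final class ArgbColor {
    static int alpha(int color) { return (color >>> 24) & 0xFF; }
    static int red(int color)   { return (color >> 16) & 0xFF; }
    static int green(int color) { return (color >> 8) & 0xFF; }
    static int blue(int color)  { return color & 0xFF; }

    // Packs four components, each in 0-255, back into one int.
    static int argb(int a, int r, int g, int b) {
        return (a << 24) | (r << 16) | (g << 8) | b;
    }
}
```

Changing only the alpha byte, for example to 0x80FF0000, would draw the same red at roughly half opacity.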

FIGURE 10.8 shows the app running on a tablet after the user touches the marker. The title and snippet appear only when the user touches the marker.

10.5 The Model

In Version 3, we place a speech recognition activity in front of the map activity. We expect the user to say one of five predefined cities (Washington, Paris, New York, Rome, and London). We analyze the words understood by the device and compare them to these five cities. If we have a match, we display a map of the matching city. We also place markers on the map with a description of a well-known attraction for each city. We still use the Google Maps Activity template. We need to modify the AndroidManifest.xml file to declare the speech recognition activity and make it the starting activity of the app, so that the map activity is no longer the starting activity.

We keep our Model simple: we store (city, attraction) pairs in a hash table. A hash table is a data structure that maps keys to values. For example, we can map the integers 1 to 12 to the months of the year (January, February, March, etc.) and store them in a hash table. We can also store US states in a hash table: the keys are MD, CA, NJ, etc., and the values are Maryland, California, New Jersey, etc. In this app, cities (the keys) are New York, Washington, London, Rome, and Paris. The attractions (the values) are the Statue of Liberty, the White House, Buckingham Palace, the Colosseum, and the Eiffel Tower. We want to match a word said by the user to a city in our Model. In case we do not have a match, we use Washington as the default city.
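The US states example translates directly into java.util.Hashtable, the same class our Model uses. In this small sketch, StatesTable is a hypothetical name; looking up a key that is not in the table returns null.

```java
import java.util.Hashtable;

// The US states example: two-letter codes (keys) mapped to state names (values).
final class StatesTable {
    static Hashtable<String, String> build() {
        Hashtable<String, String> states = new Hashtable<>();
        states.put("MD", "Maryland");
        states.put("CA", "California");
        states.put("NJ", "New Jersey");
        return states;
    }
}
```

Calling StatesTable.build( ).get( "MD" ) returns "Maryland", while get( "TX" ) returns null because TX was never added; our Model relies on the same null result to detect a city it does not know.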

EXAMPLE 10.7 shows the Cities class, which encapsulates our Model. At lines 11–13, we declare three constants to store the String Washington, its latitude and its longitude. At line 8, we declare a constant, CITY_KEY, to store the String city. When we go from the first to the second activity, we use that constant as the key for the name of the city understood by the speech recognizer in the intent that we use to start the activity. At line 9, we declare the constant MIN_CONFIDENCE and give it the value 0.5. It defines a threshold of confidence level that any word understood by the speech recognizer needs to have for us to process it as a valid city. At line 14, we declare the constant MESSAGE. We use it when we add a marker to the map.

We declare our only instance variable, places, a Hashtable, at line 16. Hashtable is a generic type whose actual data types are specified at the time of declaration. The first String data type in <String, String> is the data type of the keys. The second String is the data type of the values. We code a constructor that accepts a Hashtable as a parameter and assign it to places at lines 18–20. At lines 43–45, the getAttraction method returns the attraction mapped to a given city in places. If there is no such city in places, it returns null.

At lines 22–41, the firstMatchWithMinConfidence method takes an ArrayList of Strings and an array of floats as parameters. The ArrayList represents a list of words provided by the speech recognizer. The array of floats stores the confidence levels for these words. We process the parameters with nested loops: the outer loop (line 29) goes through the words, and the inner loop (line 34) checks whether the current word matches a key in the hash table places. We expect the confidence levels to be in descending order. Thus, if the current one falls below MIN_CONFIDENCE (line 30), the remaining ones will as well, so we exit the outer loop and return the value of DEFAULT_CITY at line 40.

Inside the inner loop, we test if the current word matches a city listed as a key in the hash table places at line 36. If it does, we return it at line 37.

If we finish processing the outer loop and no match is found with an acceptable confidence level, we return the value of DEFAULT_CITY at line 40. Note that we process the parameter words in the outer loop (line 29) and the keys of places in the inner loop (line 34), and not the other way around, because we expect words to be ordered by decreasing confidence levels. This way, the first match we find is the one with the highest confidence, and we can stop as soon as a confidence level falls below MIN_CONFIDENCE.
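Since the listing for EXAMPLE 10.7 is not reproduced here, the following is a minimal sketch of a Cities class that follows the description above; it is not the book's listing. It keeps only the constants needed for matching (DEFAULT_LATITUDE, DEFAULT_LONGITUDE, and MESSAGE are omitted), uses confidLevels as a hypothetical parameter name, and assumes a case-insensitive comparison between words and city names, which the text does not specify.

```java
import java.util.ArrayList;
import java.util.Hashtable;

// Sketch of the Cities Model described in the text (not the book's Example 10.7).
class Cities {
    public static final String CITY_KEY = "city";        // intent extra key
    public static final float MIN_CONFIDENCE = 0.5f;     // minimum acceptable score
    public static final String DEFAULT_CITY = "Washington";

    private Hashtable<String, String> places;            // city -> attraction

    public Cities( Hashtable<String, String> places ) {
        this.places = places;
    }

    // Returns the first city key matched by a word whose confidence is at least
    // MIN_CONFIDENCE, or DEFAULT_CITY if there is none. Assumes words and
    // confidLevels are parallel and sorted by decreasing confidence.
    // Matching ignores case (an assumption; the text does not say).
    public String firstMatchWithMinConfidence( ArrayList<String> words,
                                               float [ ] confidLevels ) {
        if( words == null || confidLevels == null )
            return DEFAULT_CITY;
        int count = Math.min( words.size( ), confidLevels.length );
        for( int i = 0; i < count; i++ ) {           // outer loop: the words
            if( confidLevels[i] < MIN_CONFIDENCE )
                break;                               // the rest score even lower
            for( String city : places.keySet( ) ) {  // inner loop: the keys
                if( city.equalsIgnoreCase( words.get( i ) ) )
                    return city;                     // return the key's spelling
            }
        }
        return DEFAULT_CITY;
    }

    // Returns the attraction mapped to city, or null if city is not a key.
    public String getAttraction( String city ) {
        return places.get( city );
    }
}
```

Returning the key rather than the spoken word means that a later call such as getAttraction( match ) finds the entry even if the recognizer returned "paris" instead of "Paris".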

EXAMPLE 10.7 The Cities class, Show A Map app, Version 2

10.6 Displaying a Map Based on Speech Input, App Version 3

In the first activity, we ask the user to say the name of a city and we use the Cities class to convert the list of words returned by the speech recognizer into a city name that we feel confident the user said. If there is no match, we use the default city stored in the DEFAULT_CITY constant of the Cities class. Then, we store that information in the intent that we create before launching the map activity. Inside the MapsActivity class, we can then access that intent, retrieve the city name and display the correct map.

We need to do the following:

▸ Create an activity that asks the user to speak.

▸ Add an XML layout file for that activity.

▸ After the user speaks, go to the map activity.

▸ Based on what the user says, display the correct map.

▸ Edit the AndroidManifest.xml.

We still use the Google Maps Activity template to automatically generate code, in particular the Gradle file, the AndroidManifest.xml file, and the MapsActivity class. Because we add a MainActivity class that we intend to be the first activity, we modify the AndroidManifest.xml file so that MainActivity is the first activity and MapsActivity is the second activity.

We start with the MainActivity class of Version 0 and its activity_main.xml layout file, in which we delete the TextView element and the padding attributes.

EXAMPLE 10.8 shows the updated MainActivity class. After retrieving the city said by the user, we start a second activity to show a map centered on that city. In order to analyze the city said by the user, we use the Cities class. At line 16, we declare cities, a Cities variable that represents our Model. For convenience, because we need to access it from the MapsActivity class, we declare it as public and static, so it is a class variable rather than an instance variable. We instantiate it at lines 22–28 inside the onCreate method, using a Hashtable of five (city, attraction) pairs. At lines 63–65 of the onActivityResult method, we call the firstMatchWithMinConfidence method with cities in order to retrieve the name of the city said by the user, and we assign it to the String variable firstMatch. At lines 68–69, we place firstMatch in an intent to start a MapsActivity using the putExtra method (shown in TABLE 10.12), mapping it to the key city. We start the activity at lines 70–71.

In the MapsActivity class, we need to do the following:

▸ Access the original intent for this activity and retrieve the name of the city stored in the intent.

▸ Convert that city name to latitude and longitude coordinates.

▸ Set the center of the map to that point.

To retrieve the name of the city stored in the original intent, we use the getStringExtra method shown in TABLE 10.12.

We need to convert a city name to a point on Earth (latitude, longitude) in order to display a map centered on that point. More generally, the process of converting an address to a pair of latitude and longitude coordinates is known as geocoding. The inverse, going from a pair of latitude and longitude coordinates to an address, is known as reverse geocoding, as shown in TABLE 10.13.

EXAMPLE 10.8 The MainActivity class, Show A Map app, Version 3

TABLE 10.12 The putExtra and getStringExtra methods of the Intent class

| Method | Description |
|---|---|
| Intent putExtra( String key, String value ) | Puts value in this Intent, associating it with key; returns this Intent. |
| String getStringExtra( String key ) | Retrieves and returns the value that was placed in this Intent using key. |

The Geocoder class provides methods for both geocoding and reverse geocoding. TABLE 10.14 lists two of its methods: getFromLocationName, a geocoding method, and getFromLocation, a reverse geocoding method. Both return a List of Address objects, ordered by matching confidence level, in descending order. For this app, we keep it simple and process the first element in that list. The Address class, part of the android.location package, stores an address, including street address, city, state, zip code, country, etc., as well as latitude and longitude. TABLE 10.15 shows its getLatitude and getLongitude methods.

TABLE 10.13 Geocoding versus reverse geocoding

| Geocoding | Reverse Geocoding |
|---|---|
| Address → (latitude, longitude) | (latitude, longitude) → street address |
| Example: 1600 Pennsylvania Avenue, Washington, DC 20500 → (38.8977, -77.0366) | Example: (38.8977, -77.0366) → 1600 Pennsylvania Avenue, Washington, DC 20500 |

TABLE 10.14 Selected methods of the Geocoder class

| Constructor | Description |
|---|---|
| Geocoder( Context context ) | Constructs a Geocoder object. |
| **Method** | **Description** |
| List <Address> getFromLocationName( String address, int maxResults ) | Returns a list of Address objects given an address. The list has at most maxResults elements, ordered from best to worst match. Throws an IllegalArgumentException if address is null, and throws an IOException if the network is not available or if there is any other I/O problem. |
| List <Address> getFromLocation( double latitude, double longitude, int maxResults ) | Returns a list of Address objects given a point on Earth defined by its latitude and longitude. The list has at most maxResults elements, ordered from best to worst match. Throws an IllegalArgumentException if latitude or longitude is out of range, and throws an IOException if the network is not available or if there is any other I/O problem. |

TABLE 10.15 Selected methods of the Address class

| Method | Description |
|---|---|
| double getLatitude( ) | Returns the latitude of this Address, if it is known at that time. |
| double getLongitude( ) | Returns the longitude of this Address, if it is known at that time. |

EXAMPLE 10.9 shows the MapsActivity class, updated from Version 2. We declare city, a String instance variable storing the city name, at line 25. At lines 32–36, we retrieve the city name that was stored in the intent used to start this map activity. We get a reference to the intent at line 33, call getStringExtra to retrieve the city name, and assign it to city at line 34. If city is null (line 35), we assign to it the default city defined in the Cities class at line 36.

EXAMPLE 10.9 The MapsActivity class, Show A Map app, Version 3

Inside the onMapReady method, we geocode the address formed by the city chosen by the user and its attraction, converting it to latitude and longitude coordinates. Then, we set up the map based on these coordinates, including a circle and a marker as in Version 2.

At lines 49–51, we declare latitude and longitude, two double variables that we use to store the latitude and longitude coordinates of city. We initialize them with the default values stored in the DEFAULT_LATITUDE and DEFAULT_LONGITUDE constants of the Cities class. At lines 53–55, we retrieve the attraction for city and assign it to the String attraction.

We declare and instantiate coder, a Geocoder object, at line 58. The getFromLocationName method throws an IOException, a checked exception, if an I/O problem occurs, such as a network-related problem. Thus, we need to use try (lines 59–67) and catch (lines 68–71) blocks when calling this method. We define the address to geocode as the concatenation of the attraction and the city at line 61. We call the getFromLocationName method at line 62, passing address and 5 to specify that we want at most 5 results, and assign the return value to addresses. We test if addresses is null at line 63 before assigning the latitude and longitude data of its first element to latitude and longitude at lines 64 and 65. If we execute inside the catch block, that means that we failed to get latitude and longitude data for city, so we reset city to the default value.
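The try/catch logic above amounts to a fallback: start from the default coordinates, keep them if geocoding fails, and otherwise use the first (best) result. The sketch below expresses that fallback in plain Java so it can run outside Android. The LocationLookup interface is a hypothetical stand-in for Geocoder.getFromLocationName, and coordinates are returned as {latitude, longitude} arrays instead of Address objects. Because Android's documentation states that getFromLocationName may return null or an empty list when there is no match, the sketch also checks isEmpty( ) before reading the first element.

```java
import java.io.IOException;
import java.util.List;

// Hypothetical stand-in for Geocoder.getFromLocationName: returns candidate
// {latitude, longitude} pairs, best match first, or throws IOException
// (for example, when the network is unavailable).
interface LocationLookup {
    List<double[]> lookup( String address, int maxResults ) throws IOException;
}

class GeocodeFallback {
    static final int MAX_RESULTS = 5;

    // Returns {latitude, longitude} for address, keeping the defaults when the
    // lookup throws, returns null, or returns an empty list.
    static double [ ] coordinatesOrDefault( LocationLookup lookup, String address,
                                            double defaultLatitude,
                                            double defaultLongitude ) {
        double [ ] coordinates = { defaultLatitude, defaultLongitude };
        try {
            List<double[]> found = lookup.lookup( address, MAX_RESULTS );
            if( found != null && !found.isEmpty( ) ) {
                double [ ] best = found.get( 0 );   // first element = best match
                coordinates[0] = best[0];
                coordinates[1] = best[1];
            }
        } catch( IOException ioe ) {
            // geocoding failed: keep the default coordinates
        }
        return coordinates;
    }
}
```

In an actual MapsActivity, one way to adapt this would be a lambda that calls coder.getFromLocationName( address, maxResults ) and converts each Address with getLatitude( ) and getLongitude( ).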

Afterward, we update the center of the map to the point with coordinates (latitude, longitude) and set the zoom level at lines 73–78. We add the marker at lines 80–84 and add the circle at lines 86–89.

We need to update the AndroidManifest.xml file so that the app starts with the voice recognition activity and includes the map activity. EXAMPLE 10.10 shows the updated AndroidManifest.xml file. At line 34, we specify MainActivity as the launcher activity. At lines 43–45, we add a MapsActivity activity element.

EXAMPLE 10.10 The AndroidManifest.xml file, Show A Map app, Version 3

Finally, EXAMPLE 10.11 shows the activity_main.xml file, a skeleton RelativeLayout for the first activity.

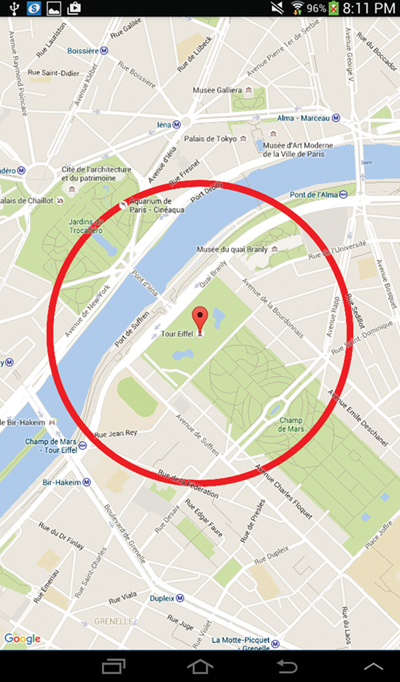

FIGURE 10.9 shows the second screen of our app Version 3, running inside the tablet. It shows a map of Paris, France, after the user said Paris. Note that the map is centered on the Eiffel Tower rather than on the center of Paris, because we geocode the attraction name combined with the city name.

EXAMPLE 10.11 The activity_main.xml file, Show A Map app, Version 3

FIGURE 10.9 The Show A Map app, Version 3, running inside the tablet

10.7 Controlling Speech Input, App Version 4

Note that if we run the app and we do not say anything, the microphone goes away and we are stuck on the first activity, which is now a blank screen. Furthermore, if we go to the second screen and come back to the first screen, we also have a blank screen. One way to fix this issue is to start the map activity no matter what. If the user does not say a word, the microphone goes away but onActivityResult still executes. We can place the code to start the map activity outside the if block of the onActivityResult method of Example 10.8. In that case, the map shown is the map of the default city.

Another way to fix this issue is to add a button that, when clicked, triggers a call to listen and brings back the microphone. This puts the user in control, although it requires the extra step of clicking a button. We implement that feature in Version 4. We edit activity_main.xml to add a button to the first screen, and we add a method to the MainActivity class to process that click.

EXAMPLE 10.12 shows the edited activity_main.xml file. At lines 8–14, we define a button that shows at the top of the screen. We give it the id speak at line 13 and specify the startSpeaking method to execute when the user clicks on the button at line 14.

EXAMPLE 10.12 The activity_main.xml file, including a button, Show A Map app Version 4

EXAMPLE 10.13 shows the edited strings.xml file. The click_to_speak string is defined at line 4.

EXAMPLE 10.13 The strings.xml file, Show A Map app, Version 4

EXAMPLE 10.14 shows the edited MainActivity class. It includes the instance variable speak, a Button, at line 20. It is assigned the button defined in activity_main.xml at line 25. If speech recognition is not supported, we disable the button at line 42. The startSpeaking method is defined at lines 49–51. It calls listen at line 50. Note that if we wanted to set up the button to call listen directly, we would need to add a View parameter to listen. When listen executes, we disable the button at line 54, and re-enable it at line 84 when onActivityResult finishes executing, so that it is enabled if we stay on this screen or when we come back to this screen from the map activity after hitting the back button.

EXAMPLE 10.14 The MainActivity Class, Show A Map app, Version 4

If we run the app again, whenever the first screen goes blank, we can click on the button and bring back the microphone.

10.8 Voice Recognition Part B, Moving the Map with Voice Once, App Version 5

We have learned how to use speech recognition with an intent. Some users may like to interact with an app by voice, but they may find the user interface that comes with it annoying. In particular, if we allow the users to repeatedly provide input by voice, it may be desirable to do it without showing the microphone.

The SpeechRecognizer class, along with the RecognitionListener interface, both part of the android.speech package, allow us to listen to and process user speech without using a GUI. However, we still hear the beep that alerts the user that the app expects him or her to say something. In Version 5 of the app, we enable the user to move the map based on speech input: if the user says North, South, West, or East, we move the center of the map North, South, West, or East, respectively. There is no change in MainActivity and activity_main.xml.

To implement this, we do the following:

▸ Edit the AndroidManifest.xml file so that we ask for audio recording permission.

▸ Add the functionality to compare a word (said by the user) to a list of possible directions.

▸ Capture speech input and process it inside the MapsActivity class.

In order to add a permission to record audio, we edit the AndroidManifest.xml file as shown at lines 12–13 of EXAMPLE 10.15.

EXAMPLE 10.15 The AndroidManifest.xml file, Show A Map app, Version 5

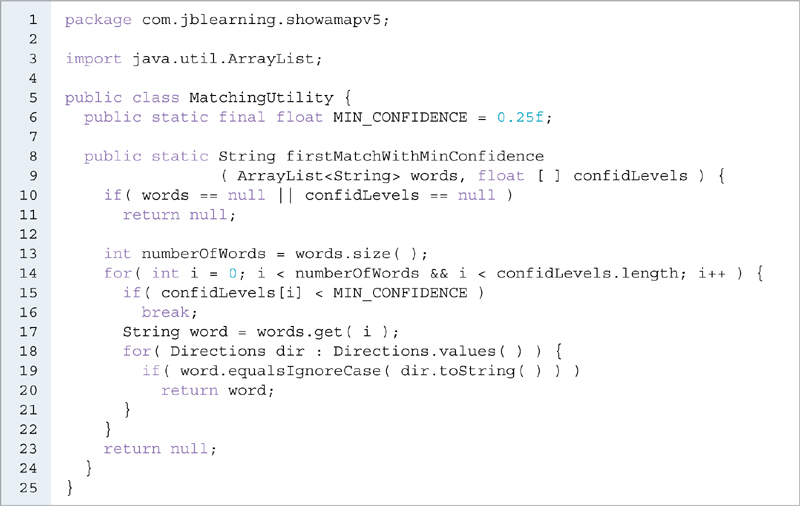

For convenience, we code the Directions class, which includes the Directions enum. We use it to compare user input to the various constants in Directions. Although we could use String constants, using an enum gives us better self-documentation for our code. EXAMPLE 10.16 shows the Directions class.

EXAMPLE 10.16 The Directions class, Show A Map app, Version 5

As before when capturing speech input, we get a list of possible matching words along with a parallel array of confidence scores. In this version of the app, we compare that list to the constants in the enum Directions in order to assess if the user says a word that defines a valid direction. In order to do that, we code another class, MatchingUtility, shown in EXAMPLE 10.17. It includes a constant, MIN_CONFIDENCE, that defines the minimum acceptable score for a matching word, and a method, firstMatchWithMinConfidence, that tests if a list of words includes a String matching one of the constants defined in the enum Directions. It returns that String if it does. The firstMatchWithMinConfidence method is very similar to the method of the Cities class shown previously in Example 10.7, except that we compare a list of words to an enum list rather than a list of keys in a hash table. The main difference in the code is how we loop through the enum Directions at line 18. In general, to loop through an enum named EnumName, we use the following for loop header:

```java
for( EnumName current: EnumName.values( ) ) {
  // process current here
}
```

EXAMPLE 10.17 The MatchingUtility class, Show A Map app, Version 5
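Since the listings for EXAMPLE 10.16 and EXAMPLE 10.17 are not reproduced here, this is a minimal sketch of a Directions enum and a MatchingUtility class consistent with the description above; it is not the book's code. The MIN_CONFIDENCE value of 0.5 is an assumption (borrowed from the Cities class), as are the case-insensitive comparison and the parameter name confidLevels. Unlike the Cities version, the method returns null when there is no acceptable match, which is why match may be null in MapsActivity.

```java
import java.util.ArrayList;

// Sketch of the Directions enum (not the book's Example 10.16).
enum Directions { NORTH, SOUTH, WEST, EAST }

// Sketch of MatchingUtility (not the book's Example 10.17).
class MatchingUtility {
    public static final float MIN_CONFIDENCE = 0.5f;   // assumed threshold

    // Returns the String value of the Directions constant matched by the first
    // word whose confidence is at least MIN_CONFIDENCE, or null if none matches.
    // Matching ignores case (an assumption).
    public static String firstMatchWithMinConfidence( ArrayList<String> words,
                                                      float [ ] confidLevels ) {
        if( words == null || confidLevels == null )
            return null;
        int count = Math.min( words.size( ), confidLevels.length );
        for( int i = 0; i < count; i++ ) {
            if( confidLevels[i] < MIN_CONFIDENCE )
                break;                                  // remaining scores are lower
            for( Directions current : Directions.values( ) ) {
                if( current.toString( ).equalsIgnoreCase( words.get( i ) ) )
                    return current.toString( );
            }
        }
        return null;
    }
}
```

The inner loop uses the for loop header pattern shown above, with Directions in place of EnumName.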

TABLE 10.16 Methods of the RecognitionListener interface

| Method | Called when |
|---|---|
| void onReadyForSpeech( Bundle bundle ) | The speech recognizer is ready for the user to start speaking. |
| void onBeginningOfSpeech( ) | The user started to speak. |
| void onEndOfSpeech( ) | The user stopped speaking. |
| void onPartialResults( Bundle bundle ) | Partial results are ready. This method may be called 0, 1, or more times. |
| void onResults( Bundle bundle ) | Results are ready. |
| void onBufferReceived( byte [ ] buffer ) | More sound has just been received. This method may not be called. |
| void onEvent( int eventType, Bundle bundle ) | This method is not currently used. |
| void onRmsChanged( float rmsdB ) | The sound level of the incoming sound has changed. This method is called very often. |
| void onError( int error ) | A recognition or network error has occurred. |

In order to capture voice input without a user interface, we implement the RecognitionListener interface. It has nine methods that all need to be overridden, as listed in TABLE 10.16.

When using the RecognitionListener interface to handle a speech event, we need to perform the following steps:

▸ Code a speech handler class that implements the RecognitionListener interface and override all of its methods.

▸ Declare and instantiate an object of that speech handler class.

▸ Declare and instantiate a SpeechRecognizer object.

▸ Register the speech handler object on the SpeechRecognizer object.

▸ Tell the SpeechRecognizer object to start listening.

TABLE 10.17 Selected methods of the Bundle class

| Method | Description |
|---|---|
| ArrayList getStringArrayList( String key ) | Returns the ArrayList of Strings associated with key, or null if there is none. |
| float [] getFloatArray( String key ) | Returns the float array associated with key, or null if there is none. |

When testing on the tablet, the order of the calls of the various methods of the RecognitionListener interface, not including the calls to the onRmsChanged method, is as follows:

onReadyForSpeech, onBeginningOfSpeech, onEndOfSpeech, onResults

We process user input inside the onResults method. Its Bundle parameter enables us to access the list of possible matching words and their corresponding confidence scores. TABLE 10.17 lists the two methods of the Bundle class that we use to retrieve the list of possible matching words and their associated confidence scores. We use the following pattern:

```java
// Needs: android.os.Bundle, android.speech.SpeechRecognizer, java.util.ArrayList
public void onResults( Bundle results ) {
  ArrayList<String> words =
    results.getStringArrayList( SpeechRecognizer.RESULTS_RECOGNITION );
  float [ ] scores =
    results.getFloatArray( SpeechRecognizer.CONFIDENCE_SCORES );
  // process words and scores
}
```

EXAMPLE 10.18 shows the edits in the MapsActivity class. At line 27, we declare DELTA, a constant that we use to change the latitude or longitude of the center of the map based on user input. At line 31, we declare a SpeechRecognizer instance variable, recognizer.

At lines 118–168, we code the private SpeechListener class, which implements RecognitionListener. In this version of the app, we only care about processing the results, so we override all the methods as do-nothing methods except onResults, which processes the speech results. We retrieve the list of possible matching words and assign it to the ArrayList words at lines 132–133. We retrieve the array of corresponding confidence scores and assign it to the array scores at lines 134–135. At lines 137–138, we call the firstMatchWithMinConfidence method of the MatchingUtility class to retrieve the first element of words that matches the String value of one of the constants of the enum Directions. We assign it to the String match. Note that match could be null at this point.

EXAMPLE 10.18 The MapsActivity class, Show A Map app, Version 5

Based on the value of match, we update the center of the map and refresh it. At line 139, we retrieve the current center of the map and assign it to the LatLng variable pos. At line 140, we retrieve the current zoom level of the map and assign it to the float variable zoomLevel. We do not change the zoom level programmatically in this app, but our updateMap method takes the zoom level as its second parameter. If match is not null (line 142), we update the pos variable at lines 143–150, depending on the value of match. If match matches the enum constant NORTH (line 143), we increase the latitude component of pos by DELTA at line 144, and so on. Note that in order to change the latitude or longitude of pos, we instantiate a new LatLng object every time and assign it to pos. This is because the latitude and longitude instance variables of the LatLng class, although public, are final. Thus, the following code does not compile:

```java
pos.latitude = pos.latitude + DELTA;  // compile error: latitude is final
```
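With the real LatLng class, the NORTH case would instead read pos = new LatLng( pos.latitude + DELTA, pos.longitude ), using LatLng's (latitude, longitude) constructor. The plain-Java sketch below shows the same pattern without the Maps library: Position is a hypothetical stand-in with public final fields like LatLng's, MapMover is a hypothetical helper, and the DELTA value of 0.5 degree is an assumption, since the text does not give the value used in EXAMPLE 10.18.

```java
// Hypothetical stand-in for LatLng: like LatLng, its fields are public but final.
final class Position {
    public final double latitude;
    public final double longitude;

    Position( double latitude, double longitude ) {
        this.latitude = latitude;
        this.longitude = longitude;
    }
}

class MapMover {
    static final double DELTA = 0.5;   // assumed step, in degrees

    // Returns a new Position one step away from pos in the direction named by
    // match ("NORTH", "SOUTH", "WEST", or "EAST"). Because the fields are final,
    // we cannot modify pos; we build a new object instead.
    static Position move( Position pos, String match ) {
        if( match == null )
            return pos;                        // no valid direction: stay put
        switch( match ) {
            case "NORTH": return new Position( pos.latitude + DELTA, pos.longitude );
            case "SOUTH": return new Position( pos.latitude - DELTA, pos.longitude );
            case "WEST":  return new Position( pos.latitude, pos.longitude - DELTA );
            case "EAST":  return new Position( pos.latitude, pos.longitude + DELTA );
            default:      return pos;
        }
    }
}
```

The original object is never modified; the caller keeps the returned reference, much as MapsActivity reassigns pos before calling updateMap.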

Once pos is updated, we call updateMap at line 152 to redraw the map. We call listen at line 154 to enable the user to enter more voice input; listen is originally called at lines 97–98, after the map is displayed.

The listen method, coded at lines 108–116, sets up speech recognition. It uses various methods of the SpeechRecognizer class listed in TABLE 10.18. It initializes recognizer at line 111, instantiates a SpeechListener object at line 112, and registers it on recognizer at line 113. At lines 114–115, we call the startListening method with recognizer, passing to it an anonymous intent of the speech recognition type.

TABLE 10.18 Selected methods of the SpeechRecognizer class

| Method | Description |
|---|---|
| static SpeechRecognizer createSpeechRecognizer( Context context ) | Static method that creates a SpeechRecognizer object and returns a reference to it. |
| void setRecognitionListener( RecognitionListener listener ) | Sets listener as the listener that will receive all the callback method calls. |
| void startListening( Intent intent ) | Starts listening to speech. |
| void stopListening( ) | Stops listening to speech. |
| void destroy( ) | Destroys this SpeechRecognizer object. |

FIGURE 10.10 The Show A Map app, Version 5, running inside the tablet, after the user says North

FIGURE 10.10 shows the app running in the tablet after the user says Paris and then North. Note that again, if we do not speak within a few seconds, the onError method is called with a speech timeout error, and speech is no longer processed. If we want to prevent that situation from happening, we can add some logic to detect that situation and restart a new speech recognizer if it occurs. We do that in Version 6.

10.9 Voice Recognition Part C, Moving the Map with Voice Continuously, App Version 6

In Version 6 of the app, we want to keep listening to the user and keep updating the map whenever the user tells us to, even if the user does not talk for a period of time. If that happens, the onError method is called and the speech recognizer stops listening, so we need to restart it. To do that, we call the listen method (line 129 of EXAMPLE 10.19) inside the onError method. In this way, we can keep talking to update the map.

EXAMPLE 10.19 The onError method of the MapsActivity class, Show A Map app, Version 6

Chapter Summary

▸ The RecognizerIntent class includes constants to support speech recognition that can be used to create an intent.

▸ To create a speech recognition intent, we use the ACTION_RECOGNIZE_SPEECH constant as the argument of the Intent constructor.

▸ We can use the PackageManager class, from the android.content.pm package, to retrieve information related to application packages installed on a device.

▸ The getPackageManager method, from the Context class, returns a PackageManager instance.

▸ We can use the getStringArrayListExtra method from the Intent class to retrieve a list of possible words that the speech recognizer may have understood.

▸ We can use the getFloatArrayExtra method from the Intent class to retrieve an array of confidence scores for the list of possible words that the speech recognizer may have understood.

▸ Map classes are not part of the standard Android Development Kit. If Android Studio is not up to date, they may need to be downloaded and imported. Furthermore, we need a key from Google to be able to use maps.

▸ Because maps, which are made of map tiles, are downloaded from Google, we need to specify an Internet access permission in the AndroidManifest.xml file.

▸ A map is typically displayed inside a fragment.

▸ The com.google.android.gms.maps package and its com.google.android.gms.maps.model subpackage include many useful map-related classes, such as GoogleMap, CameraUpdate, CameraUpdateFactory, LatLng, CircleOptions, MarkerOptions, etc.

▸ The LatLng class encapsulates a point on the Earth, defined by its latitude and its longitude.

▸ The SpeechRecognizer class, along with the RecognitionListener interface, both part of the android.speech package, allow us to listen to and process user speech without using a GUI.

Exercises, Problems, and Projects

Multiple-Choice Exercises

1. What constant do we use to create an intent for speech recognition?

a. ACTION_SPEECH

b. RECOGNIZE_SPEECH

c. ACTION_RECOGNIZE_SPEECH

d. ACTION_RECOGNITION_SPEECH

2. What class includes constants for speech recognition?

a. Activity

b. RecognizerIntent

c. Intent

d. Recognizer

3. What constant of the class in question 2 do we pass to the getStringArrayListExtra method of the Intent class in order to retrieve the possible words recognized from our speech?

a. RESULTS

b. EXTRA_RESULTS

c. RECOGNIZED_WORDS

d. WORDS

4. What constant of the class in question 2 do we pass to the getFloatArrayExtra method of the Intent class in order to retrieve the confidence scores for the words in question 3?

a. SCORES

b. EXTRA_CONFIDENCE_SCORES

c. CONFIDENCE_SCORES

d. CONFIDENCE_WORDS

5. What class can we use to find out if speech recognition is supported by the current device?

a. Manager

b. PackageManager

c. SpeechRecognition

d. Intent

6. Inside the AndroidManifest.xml file, what element do we use to express that this app needs some permission, for example, to access the Internet?

a. need-permission

b. uses-permission

c. has-permission

d. permission-needed

7. What class can we use to retrieve the latitude and longitude of a point based on the address of that point?

a. Geocoder

b. Address

c. GetLatitudeLongitude

d. LatitudeLongitude

8. In which class are the moveCamera and animateCamera methods?

a. Camera

b. CameraFactory

c. CameraUpdate

d. GoogleMap

9. What class encapsulates a point on Earth defined by its latitude and longitude?

a. Point

b. EarthPoint

c. LatitudeLongitude

d. LatLng

10. What tools can we use to listen to speech without any user interface?

a. The SpeechRecognizer class and the RecognitionListener interface

b. The SpeechRecognizer interface and the RecognitionListener class

c. The SpeechRecognizer class and the RecognitionListener class

d. The SpeechRecognizer interface and the RecognitionListener interface

Fill in the Code

Write one statement to create an intent to recognize speech.

Assuming that you have created an Intent reference named myIntent to recognize speech, write one statement to start an activity for it.

You are executing inside the onActivityResult method. Write the code to retrieve how many possible matching words there are for the captured speech.

```java
protected void onActivityResult( int requestCode, int resultCode, Intent data ) {
  // your code goes here
}
```

You are executing inside the onActivityResult method. Write the code to count how many possible matching words have a confidence level of at least 25%.

```java
protected void onActivityResult( int requestCode, int resultCode, Intent data ) {
  // your code goes here
}
```

Inside the AndroidManifest.xml file, write one line so that this app asks for permission to access location.

A map is displayed on the screen and we have a GoogleMap reference to it named myMap. Write the code to set its zoom level to 7.

A map is displayed on the screen and we have a GoogleMap reference to it named myMap. Write the code to move the map so that it is centered on the point with latitude 75.6 and longitude -34.9.

A map is displayed on the screen and we have a GoogleMap reference to it named myMap. Write the code to move the map so that it is centered on the point at 1600 Amphitheatre Parkway, Mountain View, CA 94043.

A map is displayed on the screen and we have a GoogleMap reference to it named myMap. Write the code to display a blue circle of radius 100 meters and centered at the center of the map.

A map is displayed on the screen and we have a GoogleMap reference to it named myMap. Write the code to display an annotation located at the center of the map. If the user clicks on it, it says WELCOME.

A map is displayed on the screen and we have a GoogleMap reference to it named myMap. Make the map move so that the latitude of its center increases by 0.2 and the longitude of its center increases by 0.3.

You are overriding the onResults method of the RecognitionListener interface. If one of the matching words is NEW YORK, output USA to Logcat.

```java
public void onResults( Bundle results ) {
  // your code goes here
}
```

You are overriding the onResults method of the RecognitionListener interface. If there is at least one matching word with at least a 25% confidence level, output YES to Logcat.

```java
public void onResults( Bundle results ) {
  // your code goes here
}
```

Write an App

Write an app that displays the city where you live with zoom level 14 and add two circles and an annotation to highlight things of interest.

Write an app that displays the city where you live and highlight, using something different from a circle or a pin (for example, use a polygon), something of interest (a monument, a stadium, etc.).

Write an app that asks the user to enter an address in several EditTexts. When the user clicks on a button, the app displays a map centered on that address.

Write an app that asks the user to enter an address in several EditTexts. When the user clicks on a button, the app displays a map centered on that address. When the user says a number, the map zooms in or out to that zoom level. If the number is not a valid zoom level, the map does not change.

Write an app that is a game that works as follows: The app generates a random number between 1 and 10 and the user tries to guess it using voice input. The user has three tries to guess it and the app gives some feedback for each guess. If the user wins or loses (after three tries), do not allow the user to speak again.

Write an app that draws circles at some random location on the screen in a color of your choice. The radius of the circle should be captured from speech input.

Write an app that displays a 3 × 3 tic-tac-toe grid. If the user says X, place an X at a random location on the grid. If the user says O, place an O at a random location on the grid.

Write an app that animates something (a shape or an image) based on speech input. If the user says left, right, up, or down, move that object to the left, right, up, or down by 10 pixels.

Write an app that is a mini-translator from English to another language. Include at least 10 words that are recognized by the translator. When the user says a word in English, the app shows the translated word.

Write an app that simulates the hangman game. The user is trying to guess a word one letter at a time by saying that letter. The user will lose after using seven incorrect letters (for the head, body, two arms, two legs, and one foot).

Write an app that is a math game. The app generates a simple addition equation using two digits between 1 and 9. The user has to submit the answer by voice, and the app checks if the answer is correct.

Write an app that is a math game. First, we ask the user (via speech) what type of operation should be used for the game: addition or multiplication. Then the app generates a simple equation using two digits between 1 and 9. The user has to submit the answer by voice, and the app checks if the answer is correct.

Write an app that displays a chessboard. When the app starts, the squares alternate between black and white. When the user says C8 (or another square), that square is colored in red.

Write an app that enables a user to play the game of tic-tac-toe by voice. Include a Model.