CHAPTER TWELVE: Using Another App within the App: Taking a Photo, Graying It, and Sending an Email

Chapter opener image: © Fon_nongkran/Shutterstock

Introduction

An app can start another app and process its results. It is increasingly common for apps to communicate with and use other apps on a device. For example, an app might want to access the phone's contacts, or look for photos or videos. Photo apps are very popular among smartphone users. They include features to turn a color picture into a black-and-white picture, add a frame around a picture, post a picture on a social media website, etc. In this chapter, we learn how to use another app from within an app: in particular, we learn how to use the camera and email apps inside another app. We build an app that uses the camera app (or at least one of them) of a device to take a picture, processes that picture, and uses an email app to send it to a friend. The Android Studio environment does not include a camera, so we need an actual device, phone or tablet, to test the apps in this chapter.

12.1 Accessing the Camera App and Taking a Picture, Photo App, Version 0

If we want to take a picture within an app, we have two options:

▸ Access the camera app and process its results.

▸ Use the Camera API and access the camera itself.

In this chapter, we use a camera app. In Version 0 of the app, we do three things:

▸ Enable the user to take a picture using a camera app.

▸ Capture the picture taken.

▸ Display the picture.

We can use the PackageManager class to gather information about application packages installed on the device: we can use a PackageManager reference to check if the device has a camera, a front-facing camera, a microphone, a GPS, a compass, a gyroscope, supports Bluetooth, supports Wi-Fi, etc.

We can test if the device has a given feature by calling the hasSystemFeature method of the PackageManager class, passing the PackageManager constant that corresponds to that feature. The method returns true if the feature described by the constant is present on the device, false if it is not. The hasSystemFeature method has the following API:

public boolean hasSystemFeature( String feature )

We typically use a String constant of the PackageManager class in place of the feature argument. TABLE 12.1 shows some of these String constants.

TABLE 12.1 Selected constants of the PackageManager class

| Constant | Description |
|---|---|
| FEATURE_BLUETOOTH | Use to check if device supports Bluetooth. |
| FEATURE_CAMERA | Use to check if device has a back-facing camera (facing away from the screen). |
| FEATURE_CAMERA_FRONT | Use to check if device has a front camera. |
| FEATURE_LOCATION_GPS | Use to check if device has a GPS. |
| FEATURE_MICROPHONE | Use to check if device has a microphone. |
| FEATURE_SENSOR_COMPASS | Use to check if device has a compass. |
| FEATURE_SENSOR_GYROSCOPE | Use to check if device has a gyroscope. |
| FEATURE_WIFI | Use to check if device supports Wi-Fi. |

We use the getPackageManager method of the Context class, inherited by the Activity class, to get a PackageManager reference. Assuming that we are inside an Activity class, the following pseudo-code sequence illustrates the preceding:

// inside Activity class
PackageManager manager = getPackageManager( );
if( manager.hasSystemFeature( PackageManager.DESIRED_FEATURE_CONSTANT ) ) {
  // Desired feature is present; use it
} else {
  // Notify the user that this app will not work
}

In order to run the camera app, or more exactly “a” camera app, we first check that the device has a camera. Table 12.1 shows two constants that we can use for that purpose. We can test if the device has a camera by calling the hasSystemFeature method of the PackageManager class, passing the FEATURE_CAMERA constant of the PackageManager class, as shown by the code sequence that follows:

// inside Activity class
PackageManager manager = getPackageManager( );
if( manager.hasSystemFeature( PackageManager.FEATURE_CAMERA ) ) {
  // Device has a camera; use a camera app
} else {
  // Notify the user that this app will not work
}

TABLE 12.2 The startActivityForResult and onActivityResult methods of the Activity class

| Method | Description |
|---|---|
| void startActivityForResult( Intent intent, int requestCode ) | Starts an Activity for intent. If requestCode is >= 0, it will be returned as the first parameter of the onActivityResult method. |
| void onActivityResult( int requestCode, int resultCode, Intent data ) | requestCode is the request code supplied to startActivityForResult; resultCode is the result code returned by the activity (for example, RESULT_OK or RESULT_CANCELED); data is the Intent returned to the caller by the activity. |

Once we know that the device has a feature we want to use, we can launch an activity to use that feature. In this case, we are interested in capturing the picture and in displaying it on the screen. Generally, if we want to start an activity and we are interested in accessing the results of that activity, we can use the startActivityForResult and the onActivityResult methods of the Activity class, both shown in TABLE 12.2. The onActivityResult method executes after the activity defined by the Intent parameter of startActivityForResult finishes executing.

In order to create an Intent to use a feature of the device, we can use the following Intent constructor:

public Intent( String action )

The String action describes the device feature we want to use. Once we have created the Intent, we can start an activity for it by calling the startActivityForResult method of the Activity class. The code sequence that follows illustrates how we can start another app within an app:

// create the Intent
Intent otherAppIntent = new Intent( otherAppAppropriateArgument );
// actionCode is some integer
startActivityForResult( otherAppIntent, actionCode );

To create an Intent to use a camera app, we pass the ACTION_IMAGE_CAPTURE constant of the MediaStore class to the Intent constructor. The following code sequence starts a camera app activity:

// inside Activity class
Intent takePictureIntent = new Intent( MediaStore.ACTION_IMAGE_CAPTURE );
// actionCode is some integer
startActivityForResult( takePictureIntent, actionCode );

Once the camera activity finishes, execution continues inside the onActivityResult method of the Activity class. Its Intent parameter contains the results of the activity that just executed, in this case a Bitmap object that stores the picture that the user just took (a small, thumbnail-size version of it, since we do not ask the camera app to save a full-size image to a file). The code that follows shows how we can access the picture taken as a Bitmap inside the onActivityResult method. As indicated in the first comment, we should check that the value of requestCode is identical to the request code passed to the startActivityForResult method, and that the resultCode value reflects that the activity executed normally.

TABLE 12.3 The getExtras and get methods of the Intent and BaseBundle classes

| Class | Method | Description |
|---|---|---|
| Intent | Bundle getExtras( ) | Returns a Bundle object, which encapsulates a map (key/value pairs) of what was placed in this Intent. |
| BaseBundle | Object get( String key ) | Returns the Object that was mapped to key. |

We retrieve the Bundle object of the Intent using the getExtras method shown in TABLE 12.3. That Bundle object stores the key/value pairs that were placed in the Intent. With that Bundle object, we call the get method, which Bundle inherits from the BaseBundle class and which is also shown in Table 12.3, passing the key "data" in order to retrieve the Bitmap. Since the get method returns an Object and we expect a Bitmap, we need to typecast the returned value to a Bitmap.

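@Override
protected void onActivityResult( int requestCode, int resultCode, Intent data ) {
  super.onActivityResult( requestCode, resultCode, data );
  // Check that the request code is correct and the result code is good
  if( requestCode == actionCode && resultCode == RESULT_OK && data != null ) {
    Bundle extras = data.getExtras( );
    Bitmap bitmap = ( Bitmap ) extras.get( "data" );
    // process bitmap here
  }
}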

The ImageView class, part of the android.widget package, can be used to display a picture and scale it. TABLE 12.4 shows some of its methods.

TABLE 12.4 Selected methods of the ImageView class

| Method | Description |
|---|---|
| ImageView( Context context ) | Constructs an ImageView object. |
| void setImageBitmap( Bitmap bitmap ) | Sets bitmap as the content of this ImageView. |
| void setImageDrawable( Drawable drawable ) | Sets drawable as the content of this ImageView. |
| void setImageResource( int resource ) | Sets resource as the content of this ImageView. |
| void setScaleType( ImageView.ScaleType scaleType ) | Sets scaleType as the scaling mode of this ImageView. |

In this app, we are getting the image dynamically, as the app is running, when the user takes a picture with the camera app. When the picture is taken, we retrieve the extras and then the corresponding Bitmap object using the get method of the BaseBundle class. Thus, we use the setImageBitmap method in this app to fill an ImageView with a picture that we take with the camera app. If we already have a Bitmap reference named bitmap, we can place it inside an ImageView named imageView using the following statement:

imageView.setImageBitmap( bitmap );

We want to notify potential users that this app uses the camera; app stores such as Google Play also use this information to filter out devices that do not have one. Thus, we define a uses-feature element inside the manifest element of the AndroidManifest.xml file as follows:

<uses-feature android:name="android.hardware.camera" />

To keep things simple, we only allow this app to run in portrait (vertical) orientation, so we add this attribute to the activity element:

android:screenOrientation="portrait"

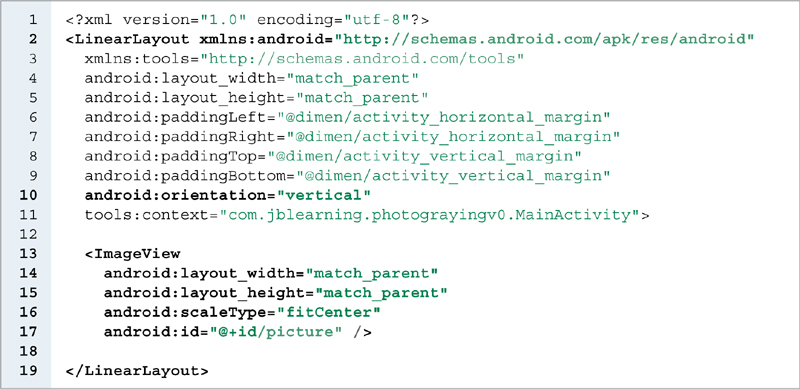

EXAMPLE 12.1 shows the activity_main.xml file. We use a LinearLayout with a vertical orientation (line 10) because we intend to add elements at the bottom of the screen in later versions of the app. At lines 13–17, we add an ImageView element to display the picture. We give it the id picture at line 17 so we can retrieve it from the MainActivity class using the findViewById method.

EXAMPLE 12.1 The activity_main.xml file, Photo app, Version 0

TABLE 12.5 Selected enum values and corresponding XML attribute values of the ImageView.ScaleType enum

| Enum Value | XML Attribute Value | Description |
|---|---|---|
| CENTER | center | Centers the image inside the ImageView without doing any scaling. |
| CENTER_INSIDE | centerInside | Scales down the image as necessary so that it fits inside the ImageView and is centered, keeping the image’s aspect ratio. |
| FIT_CENTER | fitCenter | Scales down or up the image as necessary so that it fits exactly (at least along one axis) inside the ImageView and is centered, keeping the image’s aspect ratio. |

The android:scaleType XML attribute enables us to specify how we want a picture to be scaled and positioned inside an ImageView. The ScaleType enum, nested in the ImageView class, contains values that we can use to scale and position a picture inside an ImageView. TABLE 12.5 lists some of its possible values. At line 16, we use the value fitCenter so that the picture is scaled to fit inside the ImageView, keeping its aspect ratio, and is centered. At lines 6–9, we provide some padding as defined in the dimens.xml file.

EXAMPLE 12.2 shows the MainActivity class. At lines 14–15, we declare two instance variables, bitmap and imageView, a Bitmap and an ImageView. We need to access them both whenever we want to change the picture, so it is convenient to have direct references to them. Inside the onCreate method (lines 17–32), we initialize imageView at line 20. We get a PackageManager reference at line 22 and test if a camera app is present at line 23. If it is, we create an Intent to use a camera app at lines 24–25 and start an activity for it at line 26, passing the value stored in the PHOTO_REQUEST constant. If no camera app is present (line 27), we give quick feedback to the user via a Toast at lines 28–30.

EXAMPLE 12.2 The MainActivity Class, Photo app, Version 0

When the user runs the app and takes a picture, we execute inside the onActivityResult method, coded at lines 34–42. We retrieve the Bundle from the Intent parameter data at line 38. Since the Intent was created to take a picture with a camera app, we expect that the Bundle associated with that Intent contains a Bitmap storing that picture. The get method, which Bundle inherits from BaseBundle, returns that Bitmap when passed the String "data". We retrieve it at line 39. At line 40, we set the image inside imageView to that Bitmap.

When we run the app, the camera app starts. FIGURE 12.1 shows our app after the user takes a picture. FIGURE 12.2 shows our app after the user clicks on the Save button. The picture shows up on the screen.

FIGURE 12.1 The Photo app, Version 0, after taking the picture

FIGURE 12.2 The Photo app, Version 0, after clicking on Save

12.2 The Model: Graying the Picture, Photo App, Version 1

In Version 1 of our app, we gray the picture with a hard-coded formula. Our Model for this app is the BitmapGrayer class. It encapsulates a Bitmap and a graying scheme that we can apply to that Bitmap.

With 8 bits per color component, the RGB color system has 256 shades of gray; a gray color has the same amount of red, green, and blue. Thus, its RGB representation looks like (x, x, x), where x is an integer between 0 and 255: (0, 0, 0) is black and (255, 255, 255) is white.

TABLE 12.6 lists some static methods of the Color class of the android.graphics package. We should not confuse that class with the Color class of the java.awt package.

TABLE 12.6 Selected methods of the Color class of the android.graphics package

| Method | Description |
|---|---|
| static int argb( int alpha, int red, int green, int blue ) | Returns a color integer value defined by the alpha, red, green, and blue parameters. |
| static int alpha( int color ) | Returns the alpha component of color as an integer between 0 and 255. |
| static int red( int color ) | Returns the red component of color as an integer between 0 and 255. |
| static int green( int color ) | Returns the green component of color as an integer between 0 and 255. |
| static int blue( int color ) | Returns the blue component of color as an integer between 0 and 255. |

Generally, if we start from a color picture and we want to transform it into a black-and-white picture, we need to convert the color of every pixel in the picture to a gray color. There are several formulas that we can use to gray a picture, and we use the following strategy:

▸ Keep the alpha component of every pixel the same.

▸ Use the red, green, and blue components of the pixel in order to generate a gray shade for it.

▸ Use the same transformation formula to generate the gray shade for every pixel.

For example, if red, green, and blue are the amounts of red, green, and blue for a pixel, we could apply the following weighted average formula so that the new color for that pixel is (x, x, x):

x = red * redCoeff + green * greenCoeff + blue * blueCoeff

where

redCoeff, greenCoeff, and blueCoeff are between 0 and 1

and

redCoeff + greenCoeff + blueCoeff <= 1

This guarantees that x is between 0 and 255, that the color of each pixel is a shade of gray, and that the color transformation process is consistent for all the pixels in the picture.
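The guarantee follows in one step. Writing c_r, c_g, and c_b for redCoeff, greenCoeff, and blueCoeff, and using the facts that red, green, and blue are each between 0 and 255 and that the coefficients are nonnegative:

$$0 \le x = \mathit{red}\,c_r + \mathit{green}\,c_g + \mathit{blue}\,c_b \le 255\,(c_r + c_g + c_b) \le 255$$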

Thus, if color is an int representing the color of a pixel and we want to generate a gray shade for that pixel using weights 0.5, 0.3, and 0.2 for red, green, and blue, respectively, we can use the following statement:

int shade = ( int ) ( 0.5 * Color.red( color ) + 0.3 * Color.green( color )
  + 0.2 * Color.blue( color ) );
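To try the formula outside Android, here is a minimal plain-Java sketch. It assumes the packed ARGB layout of an Android color int (alpha in the highest 8 bits, then red, green, and blue), so its bit operations mirror what Color.alpha, Color.red, Color.green, Color.blue, and Color.argb return; the class name GrayShade and its methods are hypothetical, not part of Android.

```java
// Hypothetical helper mirroring android.graphics.Color with plain bit operations,
// so it runs on any JVM. Colors are packed ARGB ints: 0xAARRGGBB.
final class GrayShade {

    static int alpha( int color ) { return ( color >>> 24 ) & 0xFF; }
    static int red( int color )   { return ( color >> 16 ) & 0xFF; }
    static int green( int color ) { return ( color >> 8 ) & 0xFF; }
    static int blue( int color )  { return color & 0xFF; }

    // Packs four components (each 0..255) into one int, like Color.argb
    static int argb( int a, int r, int g, int b ) {
        return ( a << 24 ) | ( r << 16 ) | ( g << 8 ) | b;
    }

    // Weighted average of the three components, truncated to an int as in the text
    static int shade( int color, double redCoeff, double greenCoeff, double blueCoeff ) {
        return ( int ) ( redCoeff * red( color ) + greenCoeff * green( color )
                + blueCoeff * blue( color ) );
    }

    // Gray version of color: same alpha, and (shade, shade, shade) for red, green, blue
    static int toGray( int color, double redCoeff, double greenCoeff, double blueCoeff ) {
        int x = shade( color, redCoeff, greenCoeff, blueCoeff );
        return argb( alpha( color ), x, x, x );
    }

    public static void main( String[ ] args ) {
        int color = 0xFFC86432; // opaque; red 200, green 100, blue 50
        System.out.println( shade( color, 0.5, 0.3, 0.2 ) );                          // prints 140
        System.out.println( Integer.toHexString( toGray( color, 0.5, 0.3, 0.2 ) ) ); // prints ff8c8c8c
    }
}
```

Because the cast truncates, a weighted sum that floating-point rounding leaves just below a whole number drops to the integer below it; Math.round is an alternative if that matters.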

A picture can be stored in a Bitmap. TABLE 12.7 shows some methods of the Bitmap class. They enable us to loop through all the pixels of a Bitmap object, access the color of each pixel, and change it. In this app, we start with an image, get the corresponding Bitmap, change the colors to gray shades, and then put the Bitmap back into the image, which becomes black-and-white.

TABLE 12.7 Selected methods of the Bitmap class

| Method | Description |
|---|---|
| int getWidth( ) | Returns the width of this Bitmap. |
| int getHeight( ) | Returns the height of this Bitmap. |
| int getPixel( int x, int y ) | Returns the integer representation of the color of the pixel at coordinates ( x, y ) in this Bitmap. |
| void setPixel( int x, int y, int color ) | Sets the color of the pixel at coordinates ( x, y ) in this Bitmap to color. This Bitmap must be mutable. |
| Bitmap.Config getConfig( ) | Returns the configuration of this Bitmap. The Bitmap.Config enum describes how pixels are stored in a Bitmap. |
| static Bitmap createBitmap( int w, int h, Bitmap.Config config ) | Returns a mutable Bitmap of width w, height h, and using config to create the Bitmap. |

In order to access each pixel of a Bitmap named bitmap, we use the following double loop:

for( int i = 0; i < bitmap.getWidth( ); i++ ) {
  for( int j = 0; j < bitmap.getHeight( ); j++ ) {
    int color = bitmap.getPixel( i, j );
    // process color here
  }
}

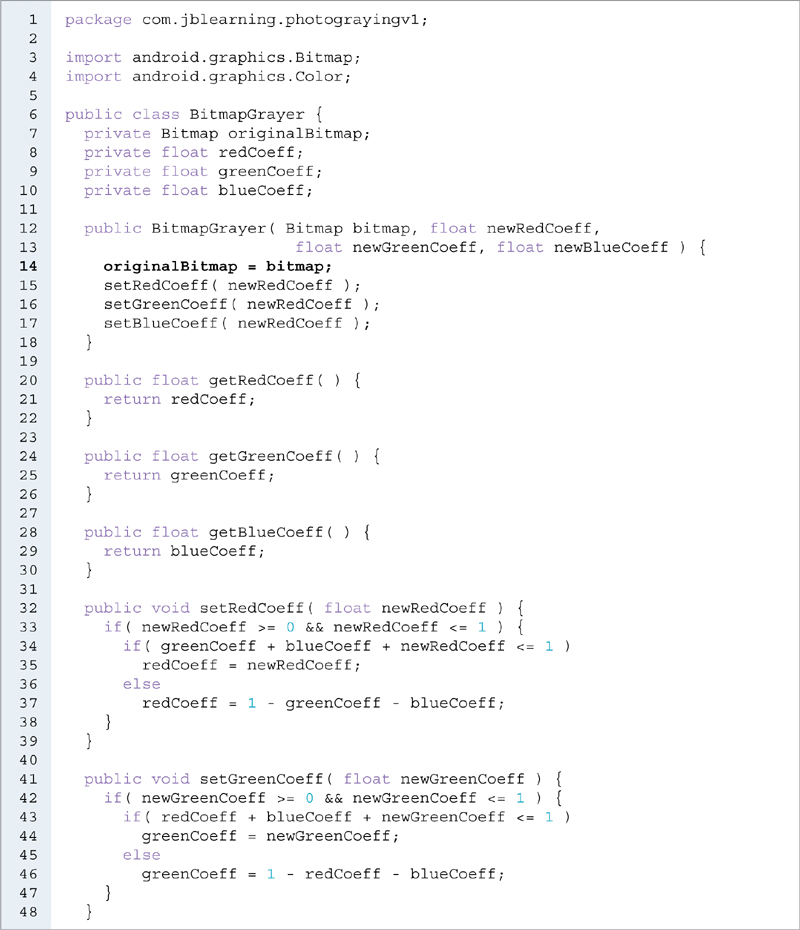

EXAMPLE 12.3 shows the BitmapGrayer class. It has four instance variables (lines 7–10): originalBitmap, a Bitmap, and redCoeff, greenCoeff, blueCoeff, three floats with values between 0 and 1. They represent the amounts of red, green, and blue that we take into account in order to generate a gray shade for each pixel of originalBitmap.

The three mutators, coded at lines 32–39, 41–48, and 50–57, enforce the following constraints:

▸ redCoeff, greenCoeff, and blueCoeff are between 0 and 1.

▸ redCoeff + greenCoeff + blueCoeff <= 1

The setRedCoeff mutator, at lines 32–39, only updates redCoeff if the newRedCoeff parameter is between 0.0 and 1.0 (line 33). If the value of newRedCoeff is such that the sum of greenCoeff, blueCoeff, and newRedCoeff is 1 or less (line 34), it assigns newRedCoeff to redCoeff (line 35). If not, it sets the value of redCoeff so that the sum of greenCoeff, blueCoeff, and redCoeff is equal to 1 (line 37). The two other mutators follow the same logic.
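The constraint logic shared by the three mutators can be condensed into one helper. The plain-Java sketch below is a hypothetical reconstruction from the description above (the class CoeffSketch and the helper constrain are our names), not the book's Example 12.3; the Math.max guard against a tiny negative result from floating-point rounding is our addition.

```java
// Hypothetical sketch of the BitmapGrayer coefficient mutators described above,
// without the Bitmap, so it runs on any JVM.
final class CoeffSketch {
    private float redCoeff, greenCoeff, blueCoeff;   // all start at 0

    CoeffSketch( float red, float green, float blue ) {
        setRedCoeff( red );
        setGreenCoeff( green );
        setBlueCoeff( blue );
    }

    float getRedCoeff( )   { return redCoeff; }
    float getGreenCoeff( ) { return greenCoeff; }
    float getBlueCoeff( )  { return blueCoeff; }

    // Value a coefficient should take when newValue is requested
    // while the two other coefficients are other1 and other2
    private static float constrain( float current, float newValue, float other1, float other2 ) {
        if( newValue < 0.0f || newValue > 1.0f )
            return current;                                // out of range: keep the old value
        if( newValue + other1 + other2 <= 1.0f )
            return newValue;                               // constraint satisfied
        return Math.max( 0.0f, 1.0f - other1 - other2 );   // clamp so that the sum is 1
    }

    void setRedCoeff( float v )   { redCoeff = constrain( redCoeff, v, greenCoeff, blueCoeff ); }
    void setGreenCoeff( float v ) { greenCoeff = constrain( greenCoeff, v, redCoeff, blueCoeff ); }
    void setBlueCoeff( float v )  { blueCoeff = constrain( blueCoeff, v, redCoeff, greenCoeff ); }
}
```

For example, with red and green coefficients of 0.5 and 0.3, asking for a blue coefficient of 0.6 stores roughly 0.2, so the sum stays at 1.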

The grayScale method, coded at lines 59–75, returns a Bitmap with the following characteristics:

▸ It has the same width and height as originalBitmap.

▸ Each of its pixels is gray.

▸ If red, green, and blue are the amounts of red, green, and blue of a given pixel in originalBitmap, the color of the pixel in the returned Bitmap at the same x- and y-coordinates is gray, and the value of the gray shade is red * redCoeff + green * greenCoeff + blue * blueCoeff.

EXAMPLE 12.3 The BitmapGrayer class, Photo app, Version 1

We retrieve the width and height of originalBitmap at lines 60–61. We retrieve the configuration of originalBitmap at line 62 by calling the getConfig method (see Table 12.7). At line 63, we call createBitmap (see Table 12.7) to create bitmap, a new, mutable Bitmap with the same width, height, and configuration as originalBitmap.

At lines 64–73, we use a double loop to define the color of each pixel in bitmap. We retrieve the color of the current pixel in originalBitmap at line 66. We calculate the shade of gray for the corresponding pixel in bitmap at lines 67–69, create a color with it at line 70, and assign that color to that pixel at line 71. We return bitmap at line 74.
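The grayScale method described above can be sketched in plain Java, without Android, by representing the picture as an int array of packed ARGB colors. This is a hypothetical stand-in (class GrayScaleSketch, with the coefficients passed as parameters), not the book's Example 12.3; the array layout, with pixel ( x, y ) at index y * width + x, is the one that Bitmap.getPixels produces on Android when the stride equals the width.

```java
// Hypothetical plain-Java version of grayScale: the picture is an int[] of
// packed ARGB colors in row-major order (pixel (x, y) at index y * width + x).
final class GrayScaleSketch {

    static int[ ] grayScale( int[ ] pixels, int width, int height,
                             float redCoeff, float greenCoeff, float blueCoeff ) {
        if( pixels.length != width * height )
            throw new IllegalArgumentException( "pixels.length must be width * height" );
        int[ ] result = new int[ pixels.length ];   // new array; the original is untouched
        for( int x = 0; x < width; x++ ) {
            for( int y = 0; y < height; y++ ) {
                int color = pixels[ y * width + x ];
                int alpha = ( color >>> 24 ) & 0xFF;
                int red = ( color >> 16 ) & 0xFF;
                int green = ( color >> 8 ) & 0xFF;
                int blue = color & 0xFF;
                int shade = ( int ) ( redCoeff * red + greenCoeff * green + blueCoeff * blue );
                // keep alpha, use the same shade for red, green, and blue
                result[ y * width + x ] = ( alpha << 24 ) | ( shade << 16 ) | ( shade << 8 ) | shade;
            }
        }
        return result;
    }
}
```

On Android, reading all pixels with one call to getPixels and writing the result with setPixels on a mutable Bitmap is usually much faster than calling getPixel and setPixel once per pixel. Making y the outer loop would also visit the array in memory order.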

EXAMPLE 12.4 shows the MainActivity class, Version 1. The only change from Version 0 is that we gray the picture that is inside imageView. We declare a BitmapGrayer instance variable, grayer, at line 14. Inside onActivityResult, we instantiate grayer at line 41 with bitmap and three default values for the red, green, and blue coefficients for the graying transformation process. Note that it would be too early to instantiate grayer inside the onCreate method because we need the picture to be taken so that we have a Bitmap object for that picture. At line 42, we call the grayScale method on grayer and assign the resulting Bitmap to bitmap.

EXAMPLE 12.4 The MainActivity class, Photo app, Version 1

FIGURE 12.3 The Photo app, Version 1, after clicking on save, showing a grayed picture

FIGURE 12.3 shows our app running on a tablet after the user clicks on the Save button. The picture is now black-and-white.

12.3 Defining Shades of Gray Using SeekBars, Photo App, Version 2

In Version 1, the formula to generate the shade of gray for each pixel in the Bitmap is hard-coded with coefficients 0.34, 0.33, and 0.33. In Version 2, we allow the user to specify the coefficients in the formula. We include three seek bars, also often called sliders, in the user interface. They allow the user to define the red, green, and blue coefficients that are used to calculate the shade of gray for each pixel.

FIGURE 12.4 Inheritance hierarchy of SeekBar

The SeekBar class, part of the android.widget package, encapsulates a seek bar. FIGURE 12.4 shows its inheritance hierarchy.

Visually, a seek bar includes a progress bar and a thumb. Its default representation includes a line (for the progress bar) and a circle (for the thumb). We can define a seek bar in a layout XML file using the element SeekBar. TABLE 12.8 shows some of the XML attributes that we can use to define a seek bar using XML, along with their corresponding methods if we want to control a seek bar by code. We can set the current value of the seek bar, called progress, by using the android:progress attribute or by code using the setProgress method. Its minimum value is 0, and we can define its maximum value using the android:max attribute or the setMax method.

The progress bar and the thumb are light blue by default. We can customize a seek bar by replacing the progress bar or the thumb by a drawable resource, for example an image from a file or a shape. We can do that inside an XML layout file or programmatically. In this app, since each seek bar represents the coefficient of a particular color, we customize the thumb of each of the three seek bars to reflect the three colors that they represent: red, green, and blue. We define three drawable resources, one per color, and use them in the activity_main.xml file.

TABLE 12.8 Selected XML attributes and methods of ProgressBar and AbsSeekBar

| Class | XML Attribute | Corresponding Method |
|---|---|---|
| ProgressBar | android:progressDrawable | void setProgressDrawable( Drawable drawable ) |
| ProgressBar | android:progress | void setProgress( int progress ) |
| ProgressBar | android:max | void setMax( int max ) |
| AbsSeekBar | android:thumb | void setThumb( Drawable drawable ) |

Inside the drawable directory, we create three files: red_thumb.xml, green_thumb.xml, and blue_thumb.xml.

EXAMPLE 12.5 shows the red_thumb.xml file. It defines a shape that we use for the thumb of the red seek bar. It defines an oval (line 4) of equal width and height (lines 5–7), that is, a circle. At lines 8–9, we define the color inside the circle, a full red. At lines 10–12, we define the thickness (line 11), and the color of the outline of the circle (line 12), also red but not completely opaque.

EXAMPLE 12.5 The red_thumb.xml file, Photo app, Version 2

The green_thumb.xml and blue_thumb.xml files are similar, using the colors #F0F0 and #F00F (in the #ARGB shorthand format), respectively, with the same opacity values as in the red_thumb.xml file.
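Android color resources accept the #RGB, #ARGB, #RRGGBB, and #AARRGGBB formats; in the short forms, each hex digit stands for that digit written twice, so #F0F0 is opaque, full green. The hypothetical helper below (class ShortArgb, plain Java, not an Android API) expands a 4-digit #ARGB value into the 0xAARRGGBB integer layout that Color methods such as Color.alpha and Color.green work with.

```java
// Hypothetical helper: expands the 4-digit #ARGB shorthand used in the
// thumb files into a full 0xAARRGGBB int by doubling each hex digit.
final class ShortArgb {

    static int expand( String spec ) {
        if( spec.length( ) != 5 || spec.charAt( 0 ) != '#' )
            throw new IllegalArgumentException( "expected #ARGB, got " + spec );
        int result = 0;
        for( int i = 1; i < 5; i++ ) {
            int digit = Character.digit( spec.charAt( i ), 16 );
            if( digit < 0 )
                throw new IllegalArgumentException( "not a hex digit: " + spec.charAt( i ) );
            result = ( result << 8 ) | ( digit * 17 );   // 0xF -> 0xFF, 0x8 -> 0x88
        }
        return result;
    }
}
```

With this helper, "#F0F0" expands to 0xFF00FF00 and "#F00F" to 0xFF0000FF.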

To access a drawable resource named abc.xml, we use the value @drawable/abc in an XML layout context, or the expression R.drawable.abc if we access the resource programmatically.

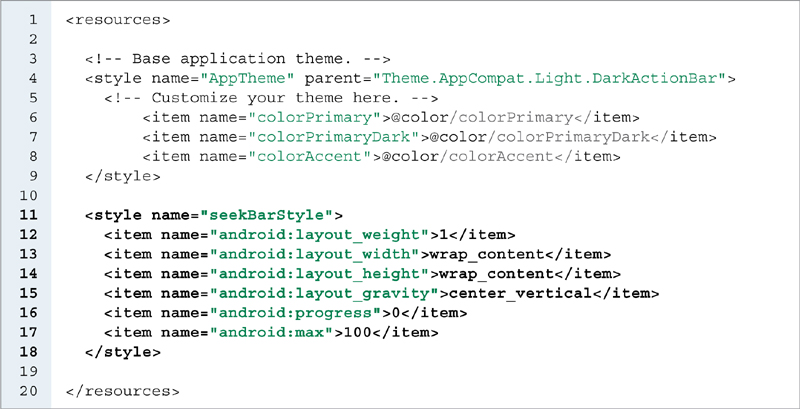

We need to add three SeekBar elements to our XML layout file. Other than their id and their thumb, these SeekBar elements are identical, so we use a style to define some of their attributes. We edit the styles.xml file and add a style element for the SeekBar elements. EXAMPLE 12.6 shows the styles.xml file. The seekBarStyle, defined at lines 11–18, is used in activity_main.xml to style the seek bars. It defines all the attributes that the seek bars have in common.

At line 12, we allocate the same amount of horizontal space to all the elements using seekBarStyle and we center them vertically at line 15. We initialize any seek bar using seekBarStyle with a progress value of 0 at line 16 and give it a max value of 100 at line 17.

EXAMPLE 12.7 shows the activity_main.xml file. It organizes the screen into two parts: an ImageView (lines 13–18) at the top that occupies four fifths of the screen (line 14), and a LinearLayout (lines 20–39) at the bottom that occupies one fifth of the screen (line 22), organizes its components horizontally (line 21), and contains three seek bars (lines 26–29, 30–33, 34–37).

We give each seek bar an id (lines 27, 31, 35) so we can retrieve it using the findViewById method. At lines 28, 32, and 36, we customize the three seek bars by setting their thumb to the shapes defined in red_thumb.xml, green_thumb.xml, and blue_thumb.xml. We style them with seekBarStyle at lines 29, 33, and 37.

EXAMPLE 12.6 The styles.xml file, Photo app, Version 2

EXAMPLE 12.7 The activity_main.xml file, Photo app, Version 2

We expect the user to interact with the seek bars, and we need to process those events. In order to do that, we need to implement the OnSeekBarChangeListener interface, a public interface nested inside the SeekBar class. It has three methods, shown in TABLE 12.9. In this app, we only care about the value of each slider as the user interacts with it. Thus, we override the onStartTrackingTouch and onStopTrackingTouch methods as do-nothing methods and place our event handling code inside the onProgressChanged method. Its first parameter, seekBar, is a reference to the SeekBar that the user is interacting with, and enables us to determine if the user is changing the red, green, or blue coefficient of our graying formula. Its second parameter, progress, stores the progress value of seekBar: we use it to set the coefficient of the corresponding color. Its third parameter, fromUser, is true if the method was called due to user interaction, or false if it was called because the progress was changed programmatically. We want the thumb position of each slider to reflect the actual value of its corresponding coefficient. Because of the constraint on the three coefficients of the graying formula, we cannot allow the user to manipulate the sliders freely: the sum of the three coefficients must be 1 or less at all times. For example, we do not want the red and green sliders to show a value of 75% each, because that would mean that the sum of the red, green, and blue coefficients would be at least 1.5, a constraint violation. Thus, we sometimes need to reset the thumb position of a slider by code. That in turn triggers a call to the onProgressChanged method, a call that we do not want to process; the fromUser parameter lets us recognize and ignore it.

TABLE 12.9 Methods of the SeekBar.OnSeekBarChangeListener interface

| Method | Description |
|---|---|
| void onStartTrackingTouch( SeekBar seekBar ) | Called when the user starts touching the slider. |
| void onStopTrackingTouch( SeekBar seekBar ) | Called when the user stops touching the slider. |
| void onProgressChanged( SeekBar seekBar, int progress, boolean fromUser ) | Called whenever the progress value changes: continuously as the user moves the slider (fromUser is true), and also when the progress is changed by code (fromUser is false). |

In order to set up event handling for a SeekBar, we do the following:

▸ Code a private handler class that implements the SeekBar.OnSeekBarChangeListener interface.

▸ Declare and instantiate a handler object of that class.

▸ Register that handler on a SeekBar component.

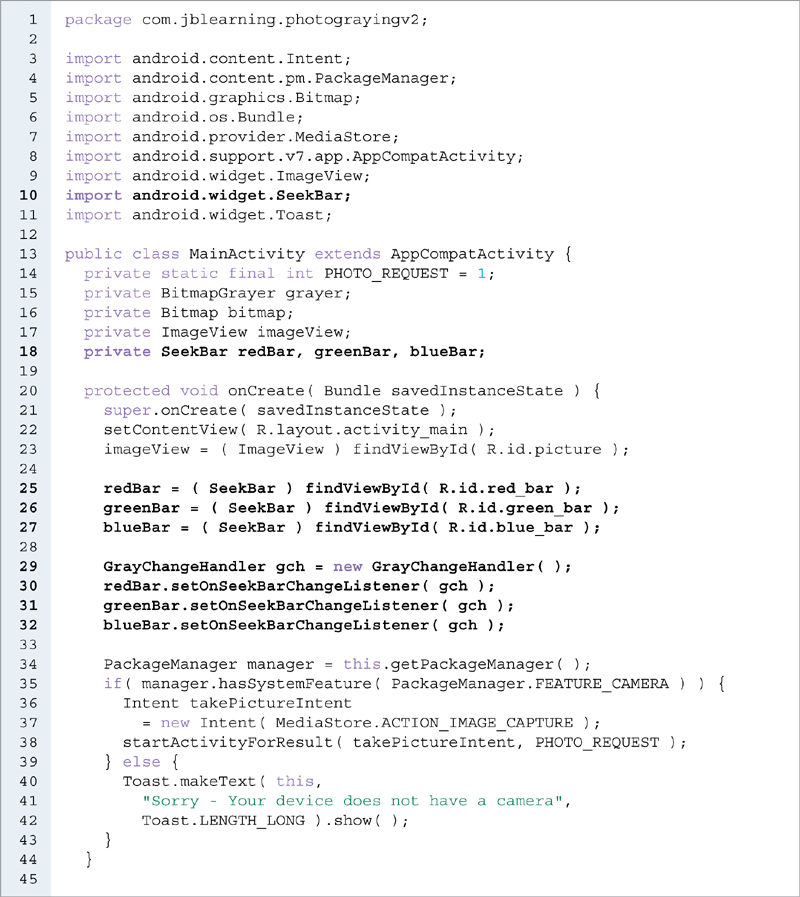

EXAMPLE 12.8 shows the MainActivity class, Version 2. At lines 57–82, we code the GrayChangeHandler class, which implements the SeekBar.OnSeekBarChangeListener interface. Three SeekBar references, redBar, greenBar, and blueBar, are defined as instance variables at line 18. Inside the onCreate method, at lines 25–27, we assign the three SeekBars defined in the activity_main.xml layout file to these three instance variables. At line 29, we declare and instantiate a GrayChangeHandler object. We register it on redBar, greenBar, and blueBar, at lines 30–32 as their listener.

Inside the onActivityResult method (lines 46–55), we instantiate grayer with default values 0, 0, 0 at line 52. In Version 1, we grayed the picture with a hard coded formula. In Version 2, we leave the picture as is, with its colors, and wait for the user to interact with the three sliders in order to gray the picture.

The onProgressChanged method is coded at lines 59–75. We test if the call to that method was triggered by user interaction at line 61. If it was not (i.e., fromUser is false), we do nothing. If it was (i.e., fromUser is true), we test which slider triggered the event. If it was the red slider (line 62), we call setRedCoeff with the value of progress scaled back to between 0 and 1 at line 63. It is possible that the requested value would make the sum of the three coefficients greater than 1. In this case, setRedCoeff stores a smaller value in redCoeff, and we want to prevent the thumb of the seek bar from going past the corresponding position (i.e., 100 times the value of redCoeff). Either way, we position the thumb based on the value of redCoeff by calling the setProgress method at line 64. We do the same if the seek bar is the green or blue seek bar at lines 65–67 and 68–70, respectively. Finally, we retrieve the Bitmap generated by the new coefficients at line 72 and reset the picture to that Bitmap at line 73.
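The interplay between the progress value, the constrained coefficient, and the thumb reset can be simulated in plain Java. The sketch below is hypothetical (class SliderSketch and method onRedProgressChanged are not from Example 12.8) and handles only the red slider; it assumes a max value of 100, as set in seekBarStyle.

```java
// Hypothetical plain-Java simulation of one onProgressChanged step for the red
// slider: progress (0..100) becomes a coefficient, the coefficient is constrained,
// and the method returns the position the thumb should be reset to.
final class SliderSketch {
    static final int MAX = 100;          // matches the max value of 100 in seekBarStyle

    float red, green, blue;

    SliderSketch( float green, float blue ) {
        this.green = green;
        this.blue = blue;
    }

    int onRedProgressChanged( int progress ) {
        float requested = progress / ( float ) MAX;        // scale back to [0, 1]
        if( requested + green + blue <= 1.0f )
            red = requested;
        else
            red = Math.max( 0.0f, 1.0f - green - blue );   // clamp so that the sum is 1
        // Math.round rather than an (int) cast: see the note below
        return Math.round( red * MAX );
    }
}
```

On Android, passing the returned value to setProgress would trigger onProgressChanged again with fromUser set to false, which the handler ignores. Rounding is our choice: with float arithmetic, 1.0f - 0.5f - 0.3f is slightly less than 0.2, so an (int) cast would place the thumb at 19 instead of 20.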

EXAMPLE 12.8 The MainActivity class, Photo app, Version 2

FIGURE 12.5 shows our app running after the user clicks on the Save button and then interacts with the seek bars.

FIGURE 12.5 The Photo app, Version 2, after clicking on Save and user interaction

12.4 Improving the User Interface, Photo App, Version 3

Version 2 provides the user with the ability to customize the formula used to gray the picture, but the seek bars do not give precise feedback regarding the value of each color coefficient. In Version 3, we add three TextViews below the three SeekBar components to display the color coefficients.

Displaying numbers with many digits after the decimal point would be annoying for the user, so we limit the number of digits after the decimal point to two. In order to implement this, we build a utility class, MathRounding, with a static method, keepTwoDigits, that takes a float parameter and returns that float truncated to two digits after the decimal point.

EXAMPLE 12.9 shows the MathRounding class. The keepTwoDigits method, coded at lines 4–7, multiplies the parameter f by 100 and casts the result to an int at line 5. It then casts that int to a float, divides it by 100, and returns the result at line 6. We cast the int to a float in order to perform floating-point division.
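Here is a minimal sketch of keepTwoDigits reconstructed from the description above; it is not the book's Example 12.9 verbatim.

```java
// Sketch of keepTwoDigits as described above: truncates (does not round)
// to two digits after the decimal point.
final class MathRounding {

    static float keepTwoDigits( float f ) {
        int hundredths = ( int ) ( f * 100 );   // e.g., 0.456f * 100 -> 45.6 -> 45
        return ( float ) hundredths / 100;      // floating-point division: 45 -> 0.45f
    }
}
```

Because the method truncates, keepTwoDigits( 0.456f ) gives 0.45f rather than 0.46f; Math.round( f * 100 ) / 100.0f would round instead. The returned float is only the closest float to 0.45, not exactly 0.45, but Float.toString displays it as 0.45.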

We could use this method inside the BitmapGrayer class or inside the MainActivity class. Because we think this feature relates to how we display things rather than the functionality of the app, we use that method inside the Controller for the app, the MainActivity class, rather than the Model, the BitmapGrayer class. Thus, the BitmapGrayer class does not change.

EXAMPLE 12.9 The MathRounding class, Photo app, Version 3

We need to add three TextView elements to our XML layout file. Other than their id, these TextView elements are identical, so we use a style for them. We edit the styles.xml file and add a style element for the TextView. EXAMPLE 12.10 shows the styles.xml file.

EXAMPLE 12.10 The styles.xml file, Photo app, Version 3

The textStyle style, at lines 20–28, defines the style we use for the three TextView elements. We specify a font size of 30 at line 26, which should work well for most devices, and specify that the text should be centered at line 24, make it bold at line 25, and initialize it to 0.0 at line 27.

EXAMPLE 12.11 shows the activity_main.xml file. The screen is still organized with a vertical LinearLayout. It now has three parts: the ImageView (lines 13–18) that occupies eight tenths of the screen (line 14), a LinearLayout (lines 20–39) that occupies one tenth of the screen (line 22), and another LinearLayout (lines 41–57) that also occupies one-tenth of the screen (line 43). The three SeekBar elements, defined at lines 26–37, now only take one-tenth of the screen.

EXAMPLE 12.11 The activity_main.xml file, Photo app, Version 3

The three TextView elements are defined at lines 47–55. We give each of them an id at lines 48, 51, and 54 so that we can access them using the findViewById method in the MainActivity class. They are styled with textStyle at lines 49, 52, and 55.

EXAMPLE 12.12 shows the changes in the MainActivity class, Version 3. The TextView class is imported at line 11 and we declare three TextView instance variables redText, greenText, and blueText at line 20. They are initialized inside the onCreate method at lines 31–33.

Whenever the user interacts with one of the seek bars, we need to update the corresponding TextView. We do that at lines 71–72, 76–77, and 81–82, after we update grayer based on the progress value of the seek bar that the user is interacting with.

FIGURE 12.6 shows our app running after the user clicks on the Save button and then interacts with the seek bars.

EXAMPLE 12.12 The MainActivity class, Photo app, Version 3

FIGURE 12.6 The Photo app, Version 3, after clicking on Save and user interaction

12.5 Storing the Picture, Photo App, Version 4

In Versions 0, 1, 2, and 3, our app uses a camera app to take a picture. By default, any picture taken with the camera is stored in the Gallery. However, the grayed picture is not stored in Versions 0, 1, 2, and 3. In Version 4, our app stores the grayed picture on the device. In general, we can save persistent data in files located either in internal storage or in external storage. In the early days of Android, internal storage referred to nonvolatile memory and external storage referred to a removable storage medium. On recent Android devices, external storage is often part of the built-in memory, but the storage space is still divided into these two areas.

Internal storage is always available, and files saved in an internal storage directory are, by default, accessible only by the app that wrote them. When the user uninstalls an app, the app's files located in internal storage are automatically deleted. External storage is typically available, but that depends on the device and is not guaranteed; files located in external storage are accessible by any app. When the user uninstalls an app, the app's files located in external storage are automatically deleted only if they are located in a directory returned by the getExternalFilesDir method of the Context class. TABLE 12.10 summarizes some of the differences between internal and external storage, including methods of the Context class.

TABLE 12.10 Internal storage, external storage, and methods of the Context class

| | Internal Storage | External Storage |
|---|---|---|
| Default File Access | Accessible only by the app. | World readable. |
| When to Use | If we want to restrict file access. | If we are not concerned about restricting file access. |
| Obtain a Directory | getFilesDir( ) or getCacheDir( ) | getExternalFilesDir( String type ) |
| Permission in Manifest | None needed. | Needed: WRITE_EXTERNAL_STORAGE |
| When the user uninstalls the app | App-related files are deleted. | App-related files are deleted only if they are located in a directory obtained using getExternalFilesDir. |

In Version 4, we save our grayed picture in external storage. In order to implement this feature, we do the following:

▸ We add a button in the activity_main.xml layout file.

▸ We edit the MainActivity class so that we save the picture when the user clicks on the button.

▸ We create a utility class, StorageUtility, which includes a method to write a Bitmap to an external storage file.

EXAMPLE 12.13 shows the activity_main.xml file, Version 4. At lines 59–72, we add a button to the user interface. At line 70, we specify that the savePicture method is called when the user clicks on that button. We slightly change the percentage of the screen taken by the picture. At line 14, we set the android:layout_weight attribute value of the ImageView element to 7 so that it takes 70% of the screen. The SeekBar elements still take 10% (line 22), the TextView elements still take 10% (line 43), and the button takes 10% of the screen (line 61).
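To see where these percentages come from, here is a small plain-Java sketch that computes each child's share from its weight. The LayoutWeights class and its percentages method are hypothetical. The sketch assumes, as is usual with weights, that each child of the vertical LinearLayout sets android:layout_height to 0dp, so that the whole height is distributed in proportion to the weights.

```java
import java.util.Arrays;

// Hypothetical helper: share of a vertical LinearLayout's height per child,
// assuming every child uses android:layout_height="0dp".
class LayoutWeights {
  static int[ ] percentages( float... weights ) {
    float total = 0;
    for( float w : weights )
      total += w;                       // sum of all layout_weight values
    int[ ] result = new int[ weights.length ];
    for( int i = 0; i < weights.length; i++ )
      result[ i ] = Math.round( 100 * weights[ i ] / total );
    return result;
  }

  public static void main( String[ ] args ) {
    // Version 4: ImageView, SeekBar row, TextView row, Button
    System.out.println( Arrays.toString( percentages( 7, 1, 1, 1 ) ) ); // [70, 10, 10, 10]
  }
}
```

With the Version 3 weights (8, 1, 1), the same method returns [80, 10, 10].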

EXAMPLE 12.14 shows the StorageUtility class. It includes only one static method, writeToExternalStorage, at lines 13–62: that method writes a Bitmap to a file in external storage and returns a reference to that file. If a problem happens during that process, it throws an IOException. We return a File reference rather than true or false because we need to access that file in Version 5, when we send the picture to a friend via email, adding that file as an attachment. In addition to a Bitmap parameter, this method also takes an Activity parameter, which we use to access a directory for external storage.

The Environment class is a utility class that provides access to environment variables. Its getExternalStorageState static method returns a String representing the state of the external storage on a device. The API of getExternalStorageState is:

public static String getExternalStorageState( )

EXAMPLE 12.13 The activity_main.xml file, Photo app, Version 4

EXAMPLE 12.14 The StorageUtility class

TABLE 12.11 Selected constants of the Environment class

| Constant | Description |
|---|---|
| MEDIA_MOUNTED | Value returned by the getExternalStorageState method if external storage is present with read and write access. |
| DIRECTORY_PICTURES | Name of directory is Pictures. |
| DIRECTORY_DOWNLOADS | Name of directory is Download. |
| DIRECTORY_MUSIC | Name of directory is Music. |
| DIRECTORY_MOVIES | Name of directory is Movies. |

If that String is equal to the value of the MEDIA_MOUNTED constant of the Environment class (shown in TABLE 12.11), that means that there is external storage on the device and it has read and write access. We can test if there is external storage with the following code sequence:

String storageState = Environment.getExternalStorageState( );
if( storageState.equals( Environment.MEDIA_MOUNTED ) ) {
  // there is external storage
}

We get the storage state at lines 22–23 and test if external storage is available on the device at line 26. If it is not, we throw an IOException at line 61. If it is, we obtain the path of a directory for the external storage at lines 27–29 by calling the getExternalFilesDir method of the Context class, inherited by the MainActivity class via the Activity class. TABLE 12.12 shows the getExternalFilesDir method. On the author’s tablet, the directory path returned if the argument is null is:

/storage/emulated/0/Android/data/com.jblearning.photograyingv4/files

If the argument is MyGrayedPictures, the directory path returned is:

/storage/emulated/0/Android/data/com.jblearning.photograyingv4/files/MyGrayedPictures

TABLE 12.12 The getExternalFilesDir method of the Context class

| Method | Description |
|---|---|
| File getExternalFilesDir( String type ) | Returns the absolute path of a directory on external storage where the app can place its own files; the directory is specific to the app. If type is not null, a subdirectory named with the value of type is created. |

The Environment class includes some constants we can use for standard names for directories. Table 12.11 lists some of them. Note that we are not required to use a directory named Music to store music files, but it is good practice to do so. At line 29, we use the DIRECTORY_PICTURES constant, whose value is Pictures.

Because the writeToExternalStorage method can be called many times, we want to create a new file every time it is called rather than overwrite an existing file. We also do not want to ask the user for a file name, because that can be annoying. Thus, we generate the file name dynamically, using a naming mechanism that produces a different name every time. One way to do this is to use today’s date and the system clock. The elapsedRealtime static method of the SystemClock class, shown in TABLE 12.13, returns the number of milliseconds since the last boot. We combine it with today’s date in order to generate the file name. It is virtually impossible for the user to modify a seek bar and click on the SAVE PICTURE button within the same millisecond as the previous click, so in practice this strategy produces a unique file name every time. We generate that file name at lines 30–33. At lines 35–36, we create a file with that name, located in the external storage directory.
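A plain-Java sketch of this naming idea follows. It is not the book's code at lines 30–33: the grayed_ prefix, the yyyyMMdd date layout, and the .png extension are assumptions, and the elapsed milliseconds are passed in as a parameter so the method runs outside Android, where that value would come from SystemClock.elapsedRealtime( ). The java.time classes used here require API level 26 on Android.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

// Hypothetical file-name generator combining today's date and a millisecond count.
class FileNames {
  static String uniqueName( LocalDate today, long elapsedMillis ) {
    String date = today.format( DateTimeFormatter.BASIC_ISO_DATE ); // e.g., 20240315
    return "grayed_" + date + "_" + elapsedMillis + ".png";
  }

  public static void main( String[ ] args ) {
    LocalDate day = LocalDate.of( 2024, 3, 15 );
    // Two saves one millisecond apart produce two different names.
    System.out.println( uniqueName( day, 5000000L ) ); // grayed_20240315_5000000.png
    System.out.println( uniqueName( day, 5000001L ) ); // grayed_20240315_5000001.png
  }
}
```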

We retrieve the amount of free space in the directory where that file will be at line 37 and the number of bytes needed to write the bitmap into that file at line 38, using the getByteCount method of the Bitmap class, shown in TABLE 12.14. We test if we have enough space in the directory for the number of bytes we need (times 1.5, to be safe) at line 39. If we do not, we throw an IOException at lines 57–58. If we do, we attempt to open that file for writing at line 42 and try to write bitmap to it at lines 43–45. If for some reason we are unsuccessful, we execute inside the catch block and we throw an IOException at line 53. Because the FileOutputStream constructor can throw several exceptions, we catch a generic Exception at line 52 to keep things simple.
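The space check and the write can be sketched in plain Java as follows. This is an illustration under assumptions, not the StorageUtility listing: a byte array stands in for the Bitmap, writeWithSpaceCheck is a hypothetical name, and we assume the free space is obtained with the getFreeSpace method of java.io.File, which Android also provides. The sketch uses try-with-resources so that the stream is closed even when writing fails, and catches IOException, which also covers the FileNotFoundException that the FileOutputStream constructor can throw.

```java
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.nio.file.Files;

class SafeWriter {
  // Hypothetical helper: writes data to dir/fileName only if the directory's
  // partition has at least 1.5 times as many free bytes as data needs.
  static File writeWithSpaceCheck( File dir, String fileName, byte[ ] data )
      throws IOException {
    File file = new File( dir, fileName );
    long freeBytes = dir.getFreeSpace( );   // unallocated bytes on dir's partition, or 0
    if( freeBytes < data.length * 1.5 )     // same 1.5 safety factor as the text
      throw new IOException( "Not enough space in " + dir );
    // try-with-resources closes the stream even if write throws
    try( FileOutputStream fos = new FileOutputStream( file ) ) {
      fos.write( data );
    } catch( IOException e ) {               // includes FileNotFoundException
      throw new IOException( "Could not write " + file, e );
    }
    return file;
  }

  public static void main( String[ ] args ) throws IOException {
    File dir = Files.createTempDirectory( "grayed" ).toFile( );
    File saved = writeWithSpaceCheck( dir, "test.bin", new byte[ ] { 1, 2, 3 } );
    System.out.println( saved.getName( ) + " " + saved.length( ) ); // test.bin 3
  }
}
```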

In order to write the Bitmap bitmap to our file, we use the compress method of the Bitmap class, shown in Table 12.14. The first argument of the compress method is the file compression format. The Bitmap.CompressFormat enum contains constants that we can use to specify such a format, as listed in TABLE 12.15. The second argument is the quality of the compression. Some compression algorithms, such as the one for the PNG format, are lossless and ignore the value of that parameter. The third argument is the output stream. If we want to write to a file, we can use a FileOutputStream reference, since FileOutputStream is a subclass of OutputStream. At line 45, we specify the PNG format and a compression quality level of 100, although, since PNG is lossless, that value is ignored.

TABLE 12.13 The elapsedRealtime method of the SystemClock class

| Method | Description |
|---|---|
| static long elapsedRealtime( ) | Returns the time since the last boot in milliseconds, including sleep time. |

TABLE 12.14 The getByteCount and compress methods of the Bitmap class

| Method | Description |
|---|---|
| public final int getByteCount( ) | Returns the minimum number of bytes needed to store this Bitmap. |
| public boolean compress( Bitmap.CompressFormat format, int quality, OutputStream stream ) | Writes a compressed version of this Bitmap to stream, using the specified format and the compression quality specified by quality, from 0 (smallest size) to 100 (highest quality). Some formats, such as PNG, which is lossless, ignore the value of quality. Returns true if the Bitmap was successfully written to stream. |

TABLE 12.15 The constants of the Bitmap.CompressFormat enum

| Constant | Description |
|---|---|
| JPEG | JPEG format |
| PNG | PNG format |
| WEBP | WebP format |

After closing the file at line 46, we test at line 47 whether the compress method returned true (i.e., whether the Bitmap was correctly written to the file). If it did, we return file at line 48; otherwise, we throw an IOException at lines 50–51.
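Bitmap.compress only exists on Android. To experiment with the same pattern (encode an image into an OutputStream, then check a boolean result) in desktop Java, the javax.imageio.ImageIO.write method is a close analog; it is a different API, used here only as an illustration, and PngWriter is a hypothetical class name. ImageIO.write returns false when no writer is available for the requested format, much as compress returns false when it cannot write the Bitmap.

```java
import java.awt.image.BufferedImage;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import javax.imageio.ImageIO;

// Desktop-Java analog of Bitmap.compress( Bitmap.CompressFormat.PNG, 100, stream ).
class PngWriter {
  static byte[ ] toPng( BufferedImage image ) throws IOException {
    ByteArrayOutputStream out = new ByteArrayOutputStream( );
    // Like compress, ImageIO.write reports success with a boolean.
    if( !ImageIO.write( image, "png", out ) )
      throw new IOException( "No PNG writer available" );
    return out.toByteArray( );
  }

  public static void main( String[ ] args ) throws IOException {
    BufferedImage gray = new BufferedImage( 2, 2, BufferedImage.TYPE_BYTE_GRAY );
    byte[ ] png = toPng( gray );
    // Every PNG file starts with the bytes 0x89 'P' 'N' 'G'.
    System.out.println( png[ 1 ] == 'P' && png[ 2 ] == 'N' && png[ 3 ] == 'G' ); // true
  }
}
```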

EXAMPLE 12.15 shows the MainActivity class, Version 4. The savePicture method (lines 65–74) is called when the user clicks on the SAVE PICTURE button. At line 68, we call the writeToExternalStorage method, passing this and bitmap. We do not use the File reference returned so we do not capture it. The user can change the graying parameters many times and save many grayed pictures. Since we name our file using a unique name, we can save as many pictures as we want.

Since the app writes to external storage, it is mandatory to notify potential users and to include a uses-permission element inside the manifest element of the AndroidManifest.xml file as follows:

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

EXAMPLE 12.15 The MainActivity class, Photo app, Version 4

FIGURE 12.7 shows our app running after the user interacts with the seek bars. The SAVE PICTURE button enables the user to save the grayed picture.

When we run the app and save a picture, the app gives feedback with a Toast. We can also open the directory in the device’s external storage where the app stores its pictures and look for our files. FIGURE 12.8 shows a screenshot of the tablet after we navigate to the data directory by successively clicking on the My Files native app, then storage, emulated, 0, Android, and data in the left pane. Next, we click on the com.jblearning.photograyingv4 directory (at the top on the right), then files, and Pictures. Our pictures are now visible.

If we change lines 28–29 in Example 12.14 to

File dir = activity.getExternalFilesDir("MyPix");

and we run the app again, we find the new grayed pictures in a MyPix directory, located next to the Pictures directory.

FIGURE 12.7 The Photo app, Version 4, after clicking on Save and user interaction

FIGURE 12.8 Screenshot of the tablet as we navigate to find our stored grayed pictures

12.6 Using the Email App: Sending the Grayed Picture to a Friend, Photo App, Version 5

Now that we know how to save the picture to a file, we can use that file as an attachment to an email. In Version 5, we replace the SAVE PICTURE text inside the button with SEND PICTURE, and we enable the user to share the picture or send it to a friend as an attachment to an email.

We modify the code for the button in the activity_main.xml file as shown in EXAMPLE 12.16.

EXAMPLE 12.17 shows the sendEmail method (lines 67–87) and the new import statements (lines 6 and 15) of the MainActivity class. The sendEmail method replaces the savePicture method of Example 12.15, Photo app, Version 4.

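At line 70, we write bitmap to external storage, returning the File reference file. At line 71, we call the fromFile method of the Uri class, shown in TABLE 12.16, and create uri, a Uri reference for file. We need a Uri reference in order to attach a file to an email. The Uri class, from the android.net package, not to be confused with the URI class from the java.net package, encapsulates a Uniform Resource Identifier (URI). Note that, starting with Android 7.0 (API level 24), an app that targets API level 24 or higher and passes a file:// Uri such as this one to another app triggers a FileUriExposedException; such an app should share the file through a content:// Uri obtained from a FileProvider instead.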

EXAMPLE 12.16 The activity_main.xml file, Photo app, Version 5

EXAMPLE 12.17 The MainActivity class, Photo app, Version 5

TABLE 12.16 The fromFile static method of the Uri class

| Method | Description |
|---|---|
| static Uri fromFile( File file ) | Creates and returns a Uri from file; encodes characters except /. |

At lines 72–79, we create and define an Intent to send some data, using constants of the Intent class shown in TABLE 12.17. At line 72, we use the ACTION_SEND constant to create an Intent to send data. We set the Multipurpose Internet Mail Extensions (MIME) data type of the Intent at line 73 using the setType method shown in TABLE 12.18. We define a subject line at lines 76–77, mapping it to the value of EXTRA_SUBJECT. We add uri as an extra to the Intent at line 79, mapping it to the value of EXTRA_STREAM: as a result, the file represented by file becomes the attachment when we send the data. Lines 74–75 and 78, which are commented out, show how we can add a list of recipients and email body text, using the EXTRA_EMAIL and EXTRA_TEXT constants. For this app, it is better to let the user fill in the blanks when the email app opens.

TABLE 12.17 Selected constants of the Intent class

| Constant | Description |
|---|---|
| ACTION_SEND | Used for an Intent to send some data to somebody. |
| EXTRA_EMAIL | Used to specify a list of email addresses. |
| EXTRA_STREAM | Used with ACTION_SEND to specify a URI holding a stream of data associated with an Intent. |
| EXTRA_SUBJECT | Used to specify a subject line in a message. |
| EXTRA_TEXT | Used with ACTION_SEND to define the text to be sent. |

TABLE 12.18 The setType, putExtra, and createChooser methods of the Intent class

| Method | Description |
|---|---|
| Intent setType( String type ) | Sets the MIME data type of this Intent; returns this Intent so that method calls can be chained. |
| Intent putExtra( String name, dataType value ) | Adds data to this Intent; name is the key for the data, and value is the data. There are many putExtra methods with various data types. Returns this Intent so that method calls can be chained. |
| static Intent createChooser( Intent target, CharSequence title ) | Creates an action chooser Intent so that the user can choose among several activities to perform via a user interface; title is the title of that user interface. |

At lines 81–82, we call the createChooser method of the Intent class, shown in Table 12.18, and call startActivity, passing the returned Intent reference. The createChooser method returns an Intent reference such that when we start an activity with it, the user is presented with a choice of apps that match the Intent. Since we use the ACTION_SEND constant to create the original Intent, apps that send data, such as Email, Gmail, and other apps, are presented to the user.

FIGURE 12.9 shows our app running after the user interacted with the seek bars. The SEND PICTURE button enables the user to save and send the grayed picture.

FIGURE 12.10 shows our app running after the user clicks on the SEND PICTURE button. All the available apps matching the intent to send data are shown.

FIGURE 12.9 The Photo app, Version 5, after the user interacted with the seek bars

FIGURE 12.10 The Photo app, Version 5, after the user clicks on SEND PICTURE

FIGURE 12.11 The Photo app, Version 5, after selecting the email app

FIGURE 12.11 shows our app running after the user selects the email app. We are now inside the email app, and we can edit the message as we would edit any email.

Chapter Summary

▸ An app can use another app.

▸ In order to use a feature or another app, we include a uses-feature element in the AndroidManifest.xml file.

▸ The hasSystemFeature method of the PackageManager class enables us to check if a feature or an app is installed on an Android device.

▸ We can use the ACTION_IMAGE_CAPTURE constant of the MediaStore class to open a camera app.

▸ We can use the ImageView class as a container to display a picture.

▸ The Bitmap class encapsulates an image stored in a format like PNG or JPEG.

▸ If a picture is stored in a Bitmap, we can access the color of every pixel of the picture.

▸ The SeekBar class, from the android.widget package, encapsulates a slider.

▸ We can capture and process SeekBar events by implementing the SeekBar.OnSeekBarChangeListener interface.

▸ To store persistent data, an Android device always has internal storage and typically, but not always, has external storage.

▸ The Context class provides methods that we can use to retrieve a directory path for external storage.

▸ In order to write to external storage, it is mandatory to include a uses-permission element in the AndroidManifest.xml file.

▸ We can use the compress method of the Bitmap class to write a Bitmap to a file.

▸ The fromFile method of the Uri class converts a File to a Uri.

▸ We can use the ACTION_SEND constant of the Intent class to create an Intent to send an email using an existing email app.

▸ We can use the createChooser static method of the Intent class to display several possible apps matching a given Intent.

Exercises, Problems, and Projects

Multiple-Choice Exercises

1. What method of the PackageManager class can we use to check if an app is installed on an Android device?

isInstalled

isAppInstalled

hasSystemFeature

hasFeature

2. What constant of the MediaStore class can we use to create an Intent to use a camera app?

ACTION_CAMERA

IMAGE_CAPTURE

ACTION_CAPTURE

ACTION_IMAGE_CAPTURE

3. What class can we use as a container for a picture?

PictureView

ImageView

BitmapView

CameraView

4. What method of the class in question 3 is used to place a Bitmap object inside the container?

setBitmap

setImage

setImageBitmap

putBitmap

5. What key do we use to retrieve the Bitmap stored within the extras Bundle of a camera app Intent?

camera

picture

data

bitmap

6. What method of the Bitmap class do we use to retrieve the color of a pixel?

getPixel

getColor

getPixelColor

getPoint

7. What method of the Bitmap class do we use to modify the color of a pixel?

setPixel

setColor

setPixelColor

setPoint

8. How many parameters does the method in question 7 take?

0

1

2

3

9. What is the name of the class that encapsulates a slider?

Slider

Bar

SeekBar

SliderView

10. What are the types of storage on an Android device?

Internal and external

Internal only

External only

There is no storage on an Android device

11. What method of the Context class returns the absolute path of an external storage directory?

getExternalPath

getInternalPath

getExternalFiles

getExternalFilesDir

12. What method of the Bitmap class can we use to write a Bitmap to a file?

writeToFile

compress

write

format

13. What static method of the Uri class do we use to create a Uri from a file?

fromFile

a constructor

createFromFile

createUriFromFile

14. What constant of the Intent class do we use to create an Intent for sending data?

ACTION_SEND

DATA_SEND

EMAIL_SEND

SEND

Fill in the Code

15. Write a code sequence to output to Logcat whether or not a camera app is available on a device.

16. Write the code to place a Bitmap named myBitmap inside an ImageView named myImageView.

17. Write the code to change all the pixels in a Bitmap named myBitmap to red.

18. Write the code to change all the pixels in a Bitmap named myBitmap to gray. The gray scale is defined with the following coefficients for red, green, and blue respectively: 0.5, 0.2, 0.3.

19. Write the code to output to Logcat EXTERNAL STORAGE or NO EXTERNAL STORAGE depending on whether there is external storage available on the current device.

20. Write the code to output to Logcat the number of bytes in a Bitmap named myBitmap.

21. Write the code to write a Bitmap named myBitmap to a file using the JPEG format, and a compression quality level of 50.

// assume that file is a File object reference
// previously and properly defined
try {
  FileOutputStream fos = new FileOutputStream( file );
  // your code goes here
} catch( Exception e ) {
}

22. Write the code to create an Intent to send an email to [email protected] with the subject line HELLO and the message SEE PICTURE ATTACHED. Attach to the message a file that is stored in a File object named myFile.

Write an App

23. Write an app that enables the user to take a picture, display it, and has a button to eliminate the red color component in all the pixels of the picture. Include a Model.

24. Write an app that enables the user to take a picture, display it, and has three radio buttons to eliminate either the red, green, or blue color component in all the pixels of the picture. Include a Model.

25. Write an app that enables the user to take a picture, display it, and has a button to enable the user to change the picture to a mirror image of itself (the left and right sides of the picture are switched). If the user clicks on the button two times, the app shows the original picture again. Include a Model.

26. Write an app that enables the user to take a picture, display it, and has a button to enable the user to display a frame (of your choice) inside the picture. You can define a frame with a color and a number of pixels for its thickness. Include a Model.

27. Same as 26, but add a button to send an email with the framed picture. Include a Model.

28. Write an app that enables the user to take a picture, display it, and has a button to enable the user to display a frame around the picture of several possible colors (you can have at least three radio buttons for these colors). Include a Model.

29. Write an app that enables the user to take two pictures, and display them in the same ImageView container like an animated GIF: picture 1 shows for a second, then picture 2 shows for a second, then picture 1 shows for a second, and so on.

30. Same as 29, but add a button to send the two pictures via email.

31. Write an app that enables the user to take a picture, display it, and add some text of your choice on the picture. You can use the Canvas and Paint classes, in particular the Canvas constructor that takes a Bitmap as a parameter.

32. Same as 31, but add a bubble (you can hard code the drawing of the bubble). The text goes inside the bubble.

33. Write an app that enables the user to take a picture, display it, and add a vignette effect. A vignette effect is a reduction of the image’s saturation at the periphery of the image. Include a Model.