In the city of Pasadena, I went to CaliBurger for lunch and noticed a crowd of people next to the area where the food was being cooked—which was behind glass. The people were taking photos with their smartphones!

Why? The reason was Flippy, an AI-powered robot that can cook burgers.

I was there at the restaurant with David Zito, the CEO and co-founder of the company Miso Robotics that built the system. “Flippy helps improve the quality of the food because of the consistency and reduces production costs,” he said. “We also built the robot to be in strict compliance with regulatory standards.”1

After lunch, I walked over to the lab for Miso Robotics, which included a testing center with sample robots. It was here that I saw the convergence of software AI systems and physical robots. The engineers were building Flippy’s brain, which was uploaded to the cloud. Just some of the capabilities included washing down utensils and the grill, learning to adapt to problems with cooking, switching between a spatula for raw meat and one for cooked meat, and placing baskets in the fryer. All this was being done in real-time.

But the food service industry is just one of the many areas that will be greatly impacted by robotics and AI.

According to International Data Corporation (IDC), the spending on robotics and drones is forecasted to go from $115.7 billion in 2019 to $210.3 billion by 2022.2 This represents a compound annual growth rate of 20.2%. About two thirds of the spending will be for hardware systems.

In this chapter, we’ll take a look at physical robots and how AI will transform the industry.

What Is a Robot?

The origins of the word “robot” go back to 1921 in a play by Karel Čapek called Rossum’s Universal Robots. It’s about a factory that created robots from organic matter, and yes, they were hostile! They would eventually join together to rebel against their human masters (consider that “robot” comes from the Czech word robota, for forced labor).

But as of today, what is a good definition for this type of system? Keep in mind that there are many variations, as robots can have a myriad of forms and functions.

Physical: A robot can range in size, from tiny machines that can explore our body to massive industrial systems to flying machines to underwater vessels. There also needs to be some type of energy source, like a battery, electricity, or solar.

Act: Simply enough, a robot must be able to take certain actions. This could include moving an item or even talking.

Sense: In order to act, a robot must understand its environment. This is possible with sensors and feedback systems.

Intelligence: This does not mean full-on AI capabilities. Yet a robot needs to be able to be programmed to take actions.

Nowadays it’s not too difficult to create a robot from scratch. For example, RobotShop.com has hundreds of kits that range from under $10 to as much as $35,750.00 (this is the Dr. Robot Jaguar V6 Tracked Mobile Platform).

A heart-warming story of the ingenuity of building robots concerns a 2-year-old, Cillian Jackson. He was born with a rare genetic condition that rendered him immobile. His parents tried to get reimbursement for a special electric wheelchair but were denied.

Well, the students at Farmington High School took action and built a system for Cillian.3 Essentially, it was a robot wheelchair, and it took only a month to finish. Because of this, Cillian can now chase his two corgis around the house!

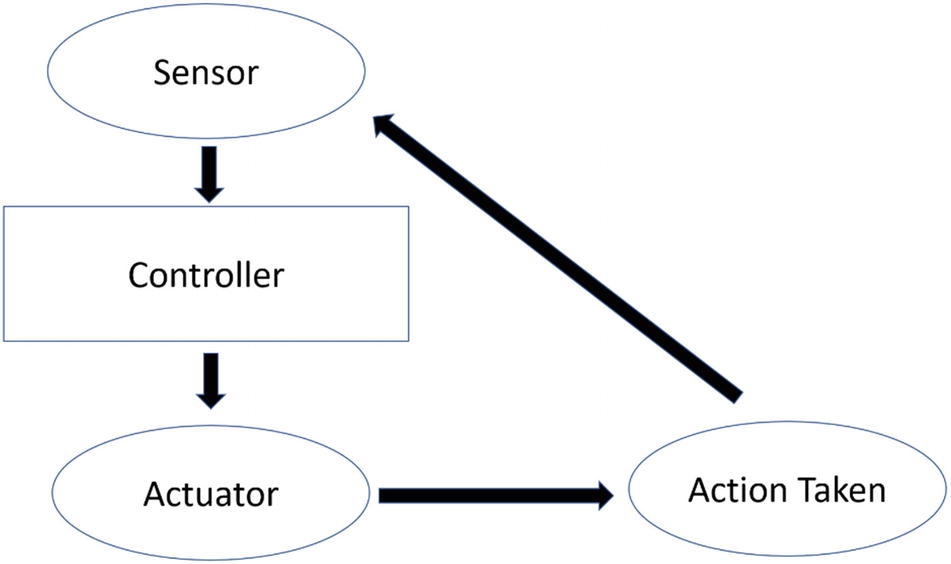

Sensors: The typical sensor is a camera or a Lidar (light detection and ranging), which uses a laser scanner to create 3D images. But robots might also have systems for sound, touch, taste, and even smell. In fact, they could also include sensors that go beyond human capabilities, such as night vision or detecting chemicals. The information from the sensors is sent to a controller that can activate an arm or other parts of the robot.

Actuators: These are electro-mechanical devices like motors. For the most part, they help with the movement of the arms, legs, head, and any other movable part.

Computer: There are memory storage and processors to help with the inputs from the sensors. In advanced robots, there may also be AI chips or Internet connections to AI cloud platforms.

The general system for a physical robot
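To make the relationship between the three parts concrete, here is a minimal sketch of a sense, decide, act loop. Everything in it is an assumption for illustration: the robot is a simulated point moving toward a wall, the range reading, the 0.5-meter stopping distance, and the 0.25-meter step are hypothetical, and no real robot library is used.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One sensor sample; distance_m is a hypothetical range reading in meters."""
    distance_m: float


def controller(reading: Reading, stop_distance_m: float = 0.5) -> str:
    """The 'computer' step: decide on an action from a sensor reading."""
    if reading.distance_m <= stop_distance_m:
        return "stop"
    return "forward"


def actuate(command: str, position_m: float, step_m: float = 0.25) -> float:
    """The 'actuator' step: apply the command to a simulated motor."""
    if command == "forward":
        return position_m + step_m
    return position_m


def run(wall_at_m: float, max_steps: int = 100) -> float:
    """Repeat sense -> decide -> act until the controller says stop."""
    position_m = 0.0
    for _ in range(max_steps):
        reading = Reading(distance_m=wall_at_m - position_m)  # sense
        command = controller(reading)                          # decide
        if command == "stop":
            break
        position_m = actuate(command, position_m)              # act
    return position_m


final_position = run(wall_at_m=2.0)
print(final_position)
```

In a real machine the sensor reading would come from hardware such as a camera or Lidar, and the decision step could be anything from a fixed rule like this one to an AI model.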

There are also two main ways to operate a robot. First, there is remote control by a human operator. In this case, the robot is called a telerobot. Then there is the autonomous robot, which uses its own abilities to navigate, such as with AI.

So what was the first mobile, thinking robot? It was Shakey. The name was apt, as the project manager of the system, Charles Rosen, noted: “We worked for a month trying to find a good name for it, ranging from Greek names to whatnot, and then one of us said, ‘Hey, it shakes like hell and moves around, let’s just call it Shakey.’”4

The Stanford Research Institute (SRI), with funding from DARPA, worked on Shakey from 1966 to 1972. And it was quite sophisticated for the era. Shakey was large, at over five feet tall, and had wheels to move, plus cameras and touch sensors to perceive its surroundings. It was also wirelessly connected to DEC PDP-10 and PDP-15 computers. From there, a person could enter commands via teletype, although Shakey used its own algorithms to navigate its environment, even closing doors.

The development of the robot was the result of a myriad of AI breakthroughs. For example, Nils Nilsson and Richard Fikes created STRIPS (Stanford Research Institute Problem Solver), which allowed for automated planning. The project also produced the A* algorithm for finding the shortest path with the least amount of computer resources.5
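For a sense of how A* path finding works, here is a small sketch on a grid map. It is not Shakey's original code. The grid layout, the `a_star` function name, the four-direction movement, and the Manhattan-distance heuristic are all assumptions chosen to keep the example short.

```python
import heapq


def a_star(grid, start, goal):
    """Return the shortest 4-connected path from start to goal, or None.

    grid is a list of strings; '#' marks a blocked cell.
    """
    def h(cell):
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (g + h, g, cell)
    came_from = {}
    best_g = {start: 0}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale queue entry; a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < len(grid) and 0 <= nc < len(grid[0])):
                continue
            if grid[nr][nc] == "#":
                continue
            new_g = g + 1
            if new_g < best_g.get((nr, nc), float("inf")):
                best_g[(nr, nc)] = new_g
                came_from[(nr, nc)] = cell
                heapq.heappush(open_heap, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None


room = [
    "....",
    ".##.",
    "....",
]
path = a_star(room, (0, 0), (2, 3))
print(path)
```

The heuristic is what saves computer resources: cells that look closer to the goal are explored first, so the search usually visits far fewer cells than checking every possible route.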

By the late 1960s, as America was focused on the space program, Shakey got quite a bit of buzz. A flattering piece in Life declared that the robot was the “first electronic person.”6

But unfortunately, in 1972, as the AI winter took hold, DARPA pulled the funding on Shakey. Yet the robot would still remain a key part of tech history and was inducted into the Robot Hall of Fame in 2004.7

Industrial and Commercial Robots

The first real-world use of robots was in manufacturing. But these systems took quite a while to gain adoption.

The story begins with George Devol, an inventor who did not finish high school. But this was not a problem. Devol had a knack for engineering and creativity, as he would go on to create some of the core systems for microwave ovens, barcodes, and automatic doors (during his life, he would obtain over 40 patents).

It was during the early 1950s that he also received a patent on a programmable robot called “Unimate.” He struggled to get interest in his idea as every investor turned him down.

However, in 1957, his life would change forever when he met Joseph Engelberger at a cocktail party. Think of it like when Steve Jobs met Steve Wozniak to create the Apple computer.

Engelberger was an engineer but also a savvy businessman. He even had a love for reading science fiction, such as Isaac Asimov’s stories. Because of this, Engelberger wanted the Unimate to benefit society.

Yet there was still resistance—as many people thought the idea was unrealistic and, well, science fiction—and it took a year to get funding. But once Engelberger did, he wasted little time in building the robot and was able to sell it to General Motors (GM) in 1961. Unimate was bulky (weighing 2,700 pounds) and had one 7-foot arm, but it was still quite useful and also meant that people would not have to do inherently dangerous activities. Some of its core functions included welding, spraying, and gripping—all done accurately and on a 24/7 basis.

Engelberger looked for creative ways to evangelize his robot. To this end, he appeared on Johnny Carson’s The Tonight Show in 1966, in which Unimate putted a golf ball perfectly and even poured beer. Johnny quipped that the machine could “replace someone’s job.”8

But industrial robots did have their nagging issues. Interestingly enough, GM learned this the hard way during the 1980s. At the time, CEO Roger Smith promoted the vision of a “lights out” factory—that is, where robots could build cars in the dark!

He went on to shell out a whopping $90 billion on the program and even created a joint venture, with Fujitsu-Fanuc, called GMF Robotics. The organization would become the world’s largest manufacturer of robots.

But unfortunately, the venture turned out to be a disaster. Besides aggravating unions, the robots often failed to live up to expectations. Just some of the fiascos included robots that welded doors shut or painted themselves—not the cars!

However, the situation of GMF is nothing really new, and it’s not necessarily about misguided senior managers. Take a look at Tesla, which is one of the world’s most innovative companies. Even so, CEO Elon Musk suffered major issues with robots on his factory floors. The problems got so bad that Tesla’s existence was jeopardized.

In an interview on CBS This Morning in April 2018, Musk said he used too many robots when manufacturing the Model 3 and this actually slowed down the process.9 He noted that he should have had more people involved.

All this points to what Hans Moravec once wrote: “It is comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility.”10 This is often called the Moravec paradox.

Regardless of all this, industrial robots have become a massive industry, expanding across diverse segments like consumer goods, biotechnology/healthcare, and plastics. In 2018, there were 35,880 industrial and commercial robots shipped in North America, according to data from the Robotic Industries Association (RIA).11 The auto industry accounted for about 53% of these shipments, but its share has been declining.

And as we’ve heard from our members and at shows such as Automate, these sales and shipments aren’t just to large, multinational companies anymore. Small and medium-sized companies are using robots to solve real-world challenges, which is helping them be more competitive on a global scale.12

At the same time, the costs of manufacturing industrial robots continue to drop. Based on research from ARK, there will be a 65% reduction by 2025—with devices averaging less than $11,000 each.13 The analysis is based on Wright’s Law, which states that for every cumulative doubling in the number of units produced, there is a consistent decline in costs in percentage terms.
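Wright’s Law can be written as a short formula: if costs fall by a fixed fraction with every doubling of cumulative output, the cost of the n-th unit is the first unit’s cost times n raised to log2(1 minus that fraction). The sketch below works through it. The $100 starting cost and the 20% decline per doubling are hypothetical inputs picked for easy arithmetic; they are not ARK’s figures.

```python
import math


def wrights_law_cost(first_unit_cost, cumulative_units, decline_per_doubling):
    """Unit cost after cumulative_units, falling by a fixed fraction per doubling."""
    exponent = math.log2(1.0 - decline_per_doubling)  # negative when costs decline
    return first_unit_cost * cumulative_units ** exponent


def doublings_for_reduction(total_reduction, decline_per_doubling):
    """How many doublings of cumulative output give the stated total cost reduction."""
    return math.log(1.0 - total_reduction) / math.log(1.0 - decline_per_doubling)


# Hypothetical inputs: a $100 first unit and a 20% cost drop per doubling.
for units in (1, 2, 4, 8):
    print(units, round(wrights_law_cost(100.0, units, 0.20), 2))

# Doublings needed for a 65% total reduction at that assumed rate.
print(round(doublings_for_reduction(0.65, 0.20), 2))
```

Under these assumed numbers, a 65% drop would take between four and five doublings of cumulative production, which shows why forecasts of this kind depend heavily on how fast shipments grow.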

OK then, what about AI and robots? What is the status of the technology? Even with the breakthroughs in deep learning, there has generally been slow progress in using AI with robots. Part of this is due to the fact that much of the research has been focused on software-based models, such as for image recognition. But another reason is that physical robots require sophisticated technologies to understand the environment, which is often noisy and distracting, in real-time. This involves simultaneous localization and mapping (SLAM): building a map of an unknown environment while tracking the robot’s location within it. To do this effectively, there may even need to be new technologies created, such as better neural network algorithms and quantum computers.

Osaro: The company develops systems that allow robots to learn quickly. Osaro describes this as “the ability to mimic behavior that requires learned sensor fusion as well as high level planning and object manipulation. It will also enable the ability to learn from one machine to another and improve beyond a human programmer’s insights.”14 For example, one of its robots was able to learn, within only five seconds, how to lift and place a chicken (the system is expected to be used in poultry factories).15 But the technology could have many applications, such as for drones, autonomous vehicles, and IoT (Internet of Things).

OpenAI: The company has created Dactyl, a robot hand with human-like dexterity. This is based on sophisticated training in simulations, not real-world interactions. OpenAI calls this “domain randomization,” which presents the robot with many scenarios, even those that have a very low probability of happening. With Dactyl, the simulations were able to involve about 100 years of problem solving.16 One of the surprising results was that the system learned human hand actions that were not preprogrammed, such as sliding of the finger. Dactyl also has been trained to deal with imperfect information, say when the sensors have delayed readings, or when there is a need to handle multiple objects.
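The idea behind domain randomization can be sketched in a few lines: every training episode gets a simulator whose physical settings are drawn at random, so the learned behavior cannot rely on any single setting. This is not OpenAI’s code. The parameter names and ranges below (mass, friction, sensor delay, lighting) are hypothetical, and the training step itself is left out.

```python
import random


def sample_sim_params(rng):
    """Draw one randomized simulator configuration (all ranges are hypothetical)."""
    return {
        "object_mass_kg": rng.uniform(0.03, 0.30),
        "friction": rng.uniform(0.5, 1.5),
        "sensor_delay_ms": rng.uniform(0.0, 40.0),
        "light_level": rng.uniform(0.2, 1.0),
    }


def training_configs(num_episodes, seed=0):
    """Yield a fresh random configuration for every training episode."""
    rng = random.Random(seed)  # seeded so a run can be reproduced
    for _ in range(num_episodes):
        yield sample_sim_params(rng)


configs = list(training_configs(num_episodes=1000))
print(configs[0])
```

A training loop would build one simulated world per configuration and run the robot’s policy in it. The variety is the point: a policy that copes with all of these worlds has a better chance of coping with the real one.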

MIT: It can easily take thousands of data samples for a robot to understand its environment, such as to detect something as simple as a mug. But according to a research paper from professors at MIT, there may be a way to reduce this. They used a neural network that focused on only a few key features.17 The research is still in the early stages, but it could prove very impactful for robots.

Google: Beginning in 2013, the company went on an M&A (mergers and acquisitions) binge for robotics companies. But the results were disappointing. Despite this, it has not given up on the business. Over the past few years, Google has focused on pursuing simpler robots that are driven by AI, and the company has created a new division, called Robotics at Google. For example, one of the robots can look at a bin of items and identify the one that is requested (picking it up with a three-fingered hand) about 85% of the time. A typical person, on the other hand, was able to do this about 80% of the time.18

So does all this point to complete automation? Probably not—at least for the foreseeable future. Keep in mind that a major trend is the development of cobots. These are robots that work along with people. All in all, it is turning into a much more powerful approach, as there can be leveraging of the advantages of both machines and humans.

Amazon Robotics automates fulfilment center operations using various methods of robotic technology including autonomous mobile robots, sophisticated control software, language perception, power management, computer vision, depth sensing, machine learning, object recognition, and semantic understanding of commands.20

Within the warehouses, robots quickly move across the floor helping to locate and lift storage pods. But people are also critical as they are better able to identify and pick individual products.

Yet the setup is very complicated. For example, warehouse employees wear Robotic Tech Vests so as not to be run down by robots!21 This technology makes it possible for a robot to identify a person.

But there are other issues with cobots. For example, there is the real fear that employees will ultimately be replaced by the machines. What’s more, it’s natural for people to feel like a proverbial cog in the wheel, which could mean lower morale. Can people really bond with robots? Probably not, especially industrial robots, which really do not have human qualities.

Robots in the Real World

OK then, let’s now take a look at some of the other interesting use cases with industrial and commercial robots.

Use Case: Security

Both Erik Schluntz and Travis Deyle have extensive backgrounds in the robotics industry, with stints at companies like Google and SpaceX. In 2016, they wanted to start their own venture but first spent considerable time trying to find a real-world application for the technology, which involved talking to numerous companies. Schluntz and Deyle found one common theme: the need for physical security of facilities. How could robots provide protection after 5 pm—without having to spend large amounts on security guards?

This resulted in the launch of Cobalt Robotics. The timing was spot-on because of the convergence of technologies like computer vision, machine learning, and, of course, the strides in robotics.

Traditional security technology, such as cameras and sensors, is effective, but it is static and not necessarily good for real-time response. With a robot, it’s possible to be much more proactive because of the mobility and the underlying intelligence.

However, people are still in the loop. Robots can then do what they are good at, such as 24/7 data processing and sensing, and people can focus on thinking critically and weighing the alternatives.

Besides its technology, Cobalt has been innovative with its business model, which it calls Robotics as a Service (RaaS). By charging a subscription, these devices are much more affordable for customers.

Use Case: Floor-Scrubbing Robots

We are likely to see some of the most interesting applications for robots in categories that are fairly mundane. Then again, these machines are really good at handling repetitive processes.

Take a look at Brain Corp, which was founded in 2009 by Dr. Eugene Izhikevich and Dr. Allen Gruber. They initially developed their technology for Qualcomm and DARPA. But Brain has since gone on to leverage machine learning and computer vision for self-driving robots. In all, the company has raised $125 million from investors like Qualcomm and SoftBank.

Brain’s flagship robot is Auto-C, which efficiently scrubs floors. Because of the AI system, called BrainOS (which is connected to the cloud), the machine is able to autonomously navigate complex environments. This is done by pressing a button, and then Auto-C quickly maps the route.

In late 2018, Brain struck an agreement with Walmart to roll out 1,500 Auto-C robots across hundreds of store locations.22 The company has also deployed robots at airports and malls.

But this is not the only robot in the works for Walmart. The company is also installing machines that can scan shelves to help with inventory management. With about 4,600 stores across the United States, robots will likely have a major impact on the retailer.23

Use Case: Online Pharmacy

As a second-generation pharmacist, TJ Parker had first-hand experience with the frustrations people felt when managing their prescriptions. So he wondered: Might the solution be to create a digital pharmacy?

He was convinced that the answer was yes. But while he had a strong background in the industry, he needed a solid tech co-founder, which he found in Elliot Cohen, an MIT engineer. They would go on to create PillPack in 2013.

The focus was to reimagine the customer experience. By using an app or going to the PillPack website, a user could easily sign up, input insurance information, enter prescription needs, and schedule deliveries. When the user received the package, it would have detailed information about dose instructions and even images of each pill. Furthermore, the pills were labeled and presorted into containers.

To make all this a reality required a sophisticated technology infrastructure, called PharmacyOS. It also was based on a network of robots, which were located in an 80,000-square-foot warehouse. Through this, the system could efficiently sort and package the prescriptions. But the facility also had licensed pharmacists to manage the process and make sure everything was in compliance.

In June 2018, Amazon.com shelled out about $1 billion for PillPack. On the news, the shares of companies like CVS and Walgreens dropped on the fears that the e-commerce giant was preparing to make a big play for the healthcare market.

Use Case: Robot Scientists

Developing prescription drugs is enormously expensive. Based on research from the Tufts Center for the Study of Drug Development, the average comes to about $2.6 billion per approved compound.24 In addition, it can easily take over a decade to get a new drug to market because of the onerous regulations.

But the use of sophisticated robots and deep learning could help. To see how, look at what researchers at the Universities of Aberystwyth and Cambridge have done. In 2009, they launched Adam, which was essentially a robot scientist that helped with the drug discovery process. Then a few years later, they launched Eve, which was the next-generation robot.

The system can come up with hypotheses and test them as well as run experiments. But the process is not just about brute-force calculations (the system can screen more than 10,000 compounds per day).25 With deep learning, Eve is able to use intelligence to better identify those compounds with the most potential. For example, it was able to show that triclosan, a common ingredient in toothpaste that prevents the buildup of plaque, could be effective against parasite growth in malaria. This is especially important since the disease has been becoming more resistant to existing therapies.

Humanoid and Consumer Robots

The popular cartoon, The Jetsons, came out in the early 1960s and had a great cast of characters. One was Rosie, which was a robot maid that always had a vacuum cleaner in hand.

Who wouldn’t want something like this? I would. But don’t expect something like Rosie coming to a home anytime soon. When it comes to consumer robots, we are still in the early days. In other words, we are instead seeing robots that have only some human features.

Sophia: Developed by the Hong Kong–based company Hanson Robotics, this is perhaps the most famous. In fact, in late 2017 Saudi Arabia granted her citizenship! Sophia, which has the likeness of Audrey Hepburn, can walk and talk. But there are also subtleties with her actions, such as sustaining eye contact.

Atlas: The developer is Boston Dynamics, which launched this in the summer of 2013. No doubt, Atlas has gotten much better over the years. It can, for example, perform backflips and pick itself up when it falls down.

Pepper: This is a humanoid robot, created by SoftBank Robotics, that is focused on providing customer service, such as at retail locations. The machine can use gestures—to help improve communication—and can also speak multiple languages.

As humanoid technologies get more realistic and advanced, there will inevitably be changes in society. Social norms about love and friendship will evolve. After all, as seen with the pervasiveness of smartphones, we are already seeing how technology can change the way we relate to people, say with texting and engaging in social media. According to a survey of Millennials from Tappable, close to 10% would rather sacrifice their pinky finger than forgo their smartphone!26

As for robots, we may see something similar. It’s about social robots. Such a machine—which is life-like with realistic features and AI—could ultimately become like, well, a friend or…even a lover.

Granted, this is likely far in the future. But as of now, there are certainly some interesting innovations with social robots. One example is ElliQ, which involves a tablet and a small robot head. For the most part, it is for those who live alone, such as the elderly. ElliQ can talk but also provide invaluable assistance like giving reminders for taking medicine. The system can allow for video chats with family members as well.27

Yet there are certainly downsides to social robots. Just look at the awful situation of Jibo. The company, which had raised $72.7 million in venture funding, created the first social robot for the home. But there were many problems, such as product delays and the onslaught of knock-offs. Because of all this, Jibo filed for bankruptcy in 2018, and by April the following year, the servers were shut down.28

Needless to say, there were many disheartened owners of Jibo, evidenced by the many posts on Reddit.

The Three Laws of Robotics

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Note

Asimov would later add another one, the zeroth law, which stated: “A robot may not harm humanity, or, by inaction, allow humanity to come to harm.” He considered this law to be the most important.

Asimov would write more short stories that reflected how the laws would play out in complex situations, and they would be collected in a book called I, Robot. All these took place in the world of the 21st century.

The Three Laws represented Asimov’s reaction to how science fiction portrayed robots as malevolent. He thought this was unrealistic. Asimov had the foresight that there would emerge ethical rules to control the power of robots.

As of now, Asimov’s vision is starting to become more real—in other words, it is a good idea to explore ethical principles. Granted, this may not necessarily mean that his approach is the right way. But it is a good start, especially as robots get smarter and more personal because of the power of AI.

Cybersecurity and Robots

Cybersecurity has not been much of a problem with robots. But unfortunately, this is unlikely to be the case for long. The main reason is that it is becoming much more common for robots to be connected to the cloud. The same goes for other systems, such as the Internet of Things (IoT) and autonomous cars. For example, many of these systems are updated wirelessly, which exposes them to malware, viruses, and even ransomware. Furthermore, when it comes to electric vehicles, there is also a vulnerability to attacks from the charging network.

In fact, your data could linger within a vehicle! So if it is wrecked or you sell it, the information—say video, navigation details, and contacts from paired smartphone connections—may become available to other people. A white hat hacker, called GreenTheOnly, has been able to extract this data from a variety of Tesla models at junkyards, according to CNBC.com.29 But it’s important to note that the company does provide options to wipe the data and you can opt out of data collection (but this means not having certain advantages, like over-the-air (OTA) updates).

Now if there is a cybersecurity breach with a robot, the implications can certainly be devastating. Just imagine if a hacker infiltrated a manufacturing line or a supply chain or even a robotic surgery system. Lives could be in jeopardy.

Regardless, there has not been much investment in cybersecurity for robots. So far, there are just a handful of companies, like Karamba Security and Cybereason, that are focused on this. But as the problems get worse, there will inevitably be a ramp-up of investments from VCs and new initiatives from legacy cybersecurity firms.

Programming Robots for AI

It is getting easier to create intelligent robots, as systems get cheaper and there are new software platforms emerging. A big part of this has been due to the Robot Operating System (ROS), which is becoming a standard in the industry. The origins go back to 2007 when the platform began as an open source project at the Stanford Artificial Intelligence Laboratory.

Despite its name, ROS is really not a true operating system. Instead, it is middleware that helps to manage many of the critical parts of a robot: planning, simulations, mapping, localization, perception, and prototypes. ROS is also modular, as you can easily pick and choose the functions you need. The result is that the system can easily cut down on development time.

Another advantage: ROS has a global community of users. Consider that there are over 3,000 packages for the platform.30
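To illustrate what modular middleware means in practice, here is a toy publish/subscribe message bus written in plain Python. It is not the ROS API and does not require ROS. The `MessageBus` class, the topic names, and the 0.5-meter threshold are all hypothetical. The sketch only mirrors the general pattern that ROS uses, in which independent modules exchange messages over named topics.

```python
from collections import defaultdict


class MessageBus:
    """A toy in-process publish/subscribe bus (not the ROS API)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        """Register a function to be called for every message on a topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Deliver a message to every subscriber of the topic."""
        for callback in list(self._subscribers[topic]):
            callback(message)


bus = MessageBus()
commands = []


def perception(scan):
    """Perception module: reduce a raw range scan to the nearest obstacle distance."""
    bus.publish("obstacle_distance", min(scan))


def planner(distance_m):
    """Planning module: choose a motion command from the obstacle distance."""
    bus.publish("cmd", "stop" if distance_m < 0.5 else "forward")


# Wire the modules together; each one only knows about topic names.
bus.subscribe("scan", perception)
bus.subscribe("obstacle_distance", planner)
bus.subscribe("cmd", commands.append)

bus.publish("scan", [2.1, 1.4, 0.9])
bus.publish("scan", [0.8, 0.3, 1.2])
print(commands)
```

Because the perception and planning modules never call each other directly, either one could be swapped for a different implementation without touching the other, which is the kind of pick-and-choose flexibility that cuts development time.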

As a testament to the prowess of ROS, Microsoft announced in late 2018 that it would release a version for the Windows operating system. According to the blog post from Lou Amadio, the principal software engineer of Windows IoT, “As robots have advanced, so have the development tools. We see robotics with artificial intelligence as universally accessible technology to augment human abilities.”31

The upshot is that ROS can be used with Visual Studio and there will be connections to the Azure cloud, which includes AI Tools.

OK then, when it comes to developing intelligent robots, there is often a different process than with the typical approach with software-based AI. That is, there not only needs to be a physical device but also a way to test it. Often this is done by using a simulation. Some developers will even start with creating cardboard models, which can be a great way to get a sense of the physical requirements.

But of course, there are also useful virtual simulators, such as MuJoCo, Gazebo, MORSE, and V-REP. These systems use sophisticated 3D graphics to deal with movements and the physics of the real world.

Then how do you create the AI models for robots? Actually, it is little different from the approach with software-based algorithms (as we covered in Chapter 2). But with a robot, there is the advantage that it will continue to collect data from its sensors, which can help evolve the AI.

The cloud is also becoming a critical factor in the development of intelligent robots, as seen with Amazon.com. The company has leveraged its hugely popular AWS platform with a new offering, called AWS RoboMaker. By using this, you can build, test, and deploy robots without much configuration. AWS RoboMaker operates on ROS and also allows the use of services for machine learning, analytics, and monitoring. There are even prebuilt virtual 3D worlds for retail stores, indoor rooms, and race tracks! Then once you are finished with the robot, you can use AWS to develop an over-the-air (OTA) system for secure deployment and periodic updates.

And as should be no surprise, Google is planning on releasing its own robot cloud platform (it’s expected to launch in 2019).32

The Future of Robots

Rodney Brooks is one of the giants of the robotics industry. In 1990, he co-founded iRobot to find ways to commercialize the technology. But it was not easy. It was not until 2002 that the company launched its Roomba vacuuming robot, which was a big hit with consumers. As of this writing, iRobot has a market value of $3.2 billion and posted more than $1 billion in revenues for 2018.

Our robots will be intuitive to use, intelligent and highly flexible. They’ll be easy to buy, train, and deploy and will be unbelievably inexpensive. [Rethink Robotics] will change the definition of how and where robots can be used, dramatically expanding the robot marketplace.33

But unfortunately, as with iRobot, there were many challenges. Even though Brooks’s idea for cobots was pioneering (and would ultimately prove to be a lucrative market), he had to struggle with the complications of building an effective system. The focus on safety meant that precision and accuracy were not up to the standards of industrial customers. Because of this, the demand for Rethink’s robots was tepid.

By October 2018, the company ran out of cash and had to close its doors. In all, Rethink had raised close to $150 million from VCs and strategic investors like Goldman Sachs, Sigma Partners, GE, and Bezos Expeditions. The company’s intellectual property was sold off to a German automation firm, HAHN Group.

True, this is just one example. But then again, it does show that even the smartest tech people can get things wrong. And more importantly, the robotics market has unique complexities. When it comes to the evolution of this category, progress may be choppy and volatile.

While the industry has made progress in the last decade, robotics hasn’t yet realized its full potential. Any new technology will create a wave of numerous new companies, but only a few will survive and turn into lasting businesses. The Dot-Com bust killed the majority of internet companies, but Google, Amazon, and Netflix all survived. What robotics companies need to do is to be upfront about what their robots can do for customers today, overcome Hollywood stereotypes of robots as the bad guys, and demonstrate a clear ROI (Return On Investment) to customers.34

Conclusion

Until the past few years, robots were mostly for high-end manufacturing, such as for autos. But with the growth in AI and the lower costs for building devices, robots are becoming more widespread across a range of industries. As seen in this chapter, there are interesting use cases with robots that do things like clean floors or provide security for facilities.

But the use of AI with robotics is still in the nascent stages. Programming hardware systems is far from easy, and there is the need for sophisticated systems to navigate environments. However, with AI approaches like reinforcement learning, there has been accelerated progress.

But when thinking of using robots, it’s important to understand the limitations. There also must be a clear-cut purpose. If not, a deployment can easily lead to a costly failure. Even some of the world’s most innovative companies, like Google and Tesla, have had challenges in working with robots.

Key Takeaways

A robot can take actions, sense its environment, and have some level of intelligence. There are also key functions like sensors, actuators (such as motors), and computers.

There are two main ways to operate a robot: the telerobot (this is controlled by a human) and autonomous robot (based on AI systems).

Developing robots is incredibly complicated. Even some of the world’s best technologists, like Tesla’s Elon Musk, have had major troubles with the technology. A key reason is the Moravec paradox. Basically, what’s easy for humans is often difficult for robots and vice versa.

While AI is making an impact on robots, the process has been slow. One reason is that there has been more emphasis on software-based technologies. But also robots are extremely complicated when it comes to moving and understanding the environment.

Cobots are machines that work alongside humans. The idea is that this will allow for the leveraging of the advantages of both machines and people.

The costs of robots are a major reason for lack of adoption. But innovative companies, like Cobalt Robotics, are using new business models to help out, such as with subscriptions.

Consumer robots are still in the initial stages, especially compared to industrial robots. But there are some interesting use cases, such as with machines that can be companions for people.

During the 1940s, science fiction writer Isaac Asimov created the Three Laws of Robotics. For the most part, they focused on making sure that the machines would not harm people or society. Even though there are criticisms of Asimov’s approach, the laws are still widely accepted.

Security has not generally been a problem with robots. But this will likely change—and fast. After all, more robots are connected to the cloud, which allows for the intrusion of viruses and malware.

The Robot Operating System (ROS) has become a standard for the robotics industry. This middleware helps with planning, simulations, mapping, localization, perception, and prototypes.

Developing intelligent robots has many challenges because of the need to create physical systems. However, there are tools to help out, such as sophisticated simulators.