Active Inference in Multiagent Systems: Context-Driven Collaboration and Decentralized Purpose-Driven Team Adaptation

Georgiy Levchuk; Krishna Pattipati; Daniel Serfaty; Adam Fouse; Robert McCormack Aptima Inc., Woburn, MA, United States

Abstract

The Internet of things (IoT), from heart monitoring implants to home-heating control systems, is becoming integral to our daily lives. We expect the technologies that comprise IoT to become smarter; to autonomously reason, act, and communicate with other entities in the environment; and to achieve shared goals. To realize the full potential of these systems, we must understand the mechanisms that allow multiple intelligent entities to effectively operate, collaborate, and learn in changing and uncertain environments. Future IoT devices must not only maintain enough intelligence to perceive and act locally, but also possess team-level collaboration and adaptation processes. We posit that such processes embody energy-minimizing mechanisms found in all biological and physical systems, and operate over objectives and constraints that can be defined and analyzed locally by individual devices without the need for global centralized control. In this chapter, we represent multiple IoT devices as a team of intelligent agents, and postulate that multiagent systems achieve adaptive behaviors by minimizing a team’s free energy, which decomposes into distributed iterative perception (inference) and control (action) processes. First, we discuss the instantiation of this mechanism for a joint distributed decision-making problem. Next, we present experimental evidence that energy-based teams outperform utility-based teams. Finally, we discuss different learning processes that support team-level adaptation.

Keywords

Internet of Things (IoT); Constraints; Multiple intelligent entities; Variational inference; Perceptual control; Free energy; Organizational structure; Human–machine teams; Team adaptation

4.1 Introduction

Autonomous intelligent systems are no longer the stuff of science fiction; instead, they are quickly becoming part of our everyday lives. These devices, from heart monitoring implants to home-heating control systems, make our lives easier. Commercial technology developers make these devices “smarter” every day. While most of the currently deployed Internet of Things (IoT) systems perform simple tasks, like environment monitoring and human-guided control for smart homes, hospitals, or assembly plants, it is not difficult to envision a near future in which the intelligence and authority of these devices expand well beyond their current applications.

Most research in the area of IoT intelligence has been focused on the devices, including hardware–software interoperability (Al-Fuqaha, Guizani, Mohammadi, Aledhari, & Ayyash, 2015), standards and architectures (Perera, Zaslavsky, Christen, & Georgakopoulos, 2014), and operational challenges for individual devices or networks of homogeneous IoT components (Whitmore, Agarwal, & Da Xu, 2015). However, increasing interdependencies between component devices, data, physical systems, and human users prompted researchers and practitioners to explore the implications of emergent device intelligence on broader aspects of our everyday lives (Evans, 2012), forming the field of the Internet of Everything (IoE). Such studies allow the development of models to extract the highest potential from multiple autonomous and heterogeneous intelligent systems, including human–machine teaming recently identified as the defense technology of the future (Pellerin, 2015).

In this chapter we address two fundamental issues in IoE. First, we describe a general framework of adaptive multiagent behavior based on minimizing a team’s free energy. This framework explains how multiple autonomous agents can produce team-optimal, context-aware behaviors by performing collaborative perception and control. Second, we present a mechanism for IoE agents to instantiate adaptive behaviors by intelligently sampling their environment and changing their organizational structure. This structure adaptation modifies the agents’ roles and relations, which encode and constrain their decision responsibilities and interactions, and is computed in a distributed manner without a central authority. Energy optimization formally enables locally computed but globally optimal decisions by using approximate variational inference. The agents make local decisions and communicate by passing belief messages in a peer-to-peer manner. By providing a formal mapping between adaptive decisions, goal-driven actions, and perception, this model prescribes foundational functional requirements for developing IoE entities and networks that can efficiently operate in the complex, dynamic, and uncertain environments of the future.

4.2 Energy-Based Adaptive Agent Behaviors

4.2.1 Free Energy Principle

Recently, Friston proposed a theory, called the free energy principle, that describes how agents and biological systems (such as a cell or a brain) adapt to uncertain environments by reducing an information-theoretic quantity known as “variational free energy” (Friston, 2010; Friston, Thornton, & Clark, 2012). This theory brings Bayesian, information-theoretic, neuroscientific, and machine-learning approaches into a single formal framework. The framework prescribes that agents reduce their free energy in three ways: (1) by changing sensory inputs (control); (2) by changing predictions of the hidden variables and future sensory inputs (perception); and (3) by changing the model of the agent, such as its form, representation of the environment, and structure of relations with other agents (learning and reorganization).

Variational free energy is defined as a function of sensory outcomes and a probability density over their (hidden) causes. This function is an upper bound on surprise, the negative log of the model evidence, which measures the mismatch between an agent’s predictions about its sensory inputs and the observations it actually encounters. Since, under ergodic assumptions, the long-term average of surprise is entropy, an agent acting to minimize free energy implicitly places an upper bound on the entropy of the outcomes (the sensory states) it samples. Consequently, the free energy principle provides a mathematical foundation for explaining how agents maintain order by restricting themselves to a limited number of states. This framework gives a formal mechanism for designing decentralized purpose-driven behaviors, in which multiple agents operate autonomously to resist disorder without supervisory control by external agents, while retaining the potential for peer-to-peer collaboration and competition.
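The claim that long-run average surprise equals entropy can be checked numerically. The Python sketch below assumes a hypothetical three-outcome distribution p and draws outcomes i.i.d. from it, which is a simplification of the ergodic setting; the time-averaged surprise −ln p(o) should approach the entropy H[p].

```python
import math
import random


def surprise(p, o):
    """Surprise (self-information) of outcome o under distribution p: -ln p(o)."""
    return -math.log(p[o])


def entropy(p):
    """Shannon entropy H[p] = -sum_o p(o) ln p(o), in nats."""
    return -sum(po * math.log(po) for po in p.values() if po > 0)


# Hypothetical outcome distribution, chosen only for illustration.
p = {"low": 0.7, "mid": 0.2, "high": 0.1}

rng = random.Random(0)
outcomes = rng.choices(list(p), weights=list(p.values()), k=200_000)
avg_surprise = sum(surprise(p, o) for o in outcomes) / len(outcomes)

print(f"time-averaged surprise: {avg_surprise:.4f}")
print(f"entropy H[p]:           {entropy(p):.4f}")
```

An agent that keeps its average surprise low is therefore, in the long run, keeping the entropy of its sensory outcomes low.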

4.2.2 Adaptive Behavior and Context

Free energy generalizes to learning and cognition, prescribing the acquisition of any form of knowledge as an attempt to reduce surprise. Moreover, a fundamental property of this formulation, as can be seen below with its mathematical derivations, is that both free energy and the surprise it bounds are highly contextual. First, surprise is a function of sensations and the agent predicting them, existing only in relation to model-based expectations. Surprise-minimizing agents attempt to adapt to the context contained in their observations. Second, the free energy principle suggests that agents harvest the sensory signals they can predict, keeping to consistent subspaces of the physical and physiological variables that define their existence (Friston, Thornton, & Clark, 2012). When the perceptions about the world are constant, minimization of the energy makes the agents change their actions to maximize the entropy of the sensations (and, accordingly, self-information) they receive. In other words, the adaptive behaviors prescribed by the free energy principle tightly couple the environment and the agents that populate it and conform to the expectations of those behaviors.

Further, the minimization of surprise suggests that the selected adaptive actions cannot be deterministic. These reflections provide a key differentiation between the behaviors based on the free-energy principle and the classical control formulations where utility or cost functions are optimized. Essentially, the contextual information encoded by free energy produces stochastic actions to achieve a boundedly rational behavior.

The concept of surprise minimization is the basis for many modern estimation theories, system identification, anomaly detection, and adaptive control (e.g., Bar-Shalom, Li, & Kirubarajan, 2004; Ljung & Glad, 1994). Ideas similar to the free-energy principle have also been pursued in manual control and normative-descriptive models of human decision making. For example, the internal model control (IMC) principles of control theory state that every good regulator/controller of a system must be a model of that system (Conant & Ross Ashby, 1970; Smith, 1959). The basic assumption underlying human decision making in dynamic contexts is that well-trained and motivated humans behave in a normative, rational manner subject to their sensory and neuro-motor limitations, as well as perceived task objectives (e.g., Kleinman, Baron, & Levison, 1971; Pattipati, Kleinman, & Ephrath, 1983).

4.2.3 Formal Definitions

Given an agent and its generative model of environment m, we formally assert that a purpose-driven adaptive system “behaves rationally” if it maximizes the model evidence, a probability distribution p(o | m) over observations o conditioned on the model of the environment m, or equivalently minimizes a measure of surprise:

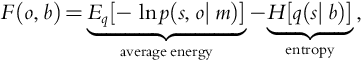

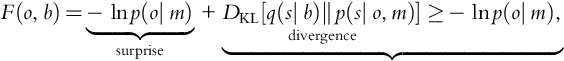

A direct optimization of model evidence or surprise is intractable due to marginalization over all possible hidden states of the world (Friston, 2012). Recently, researchers conjectured that the only tractable way to optimize surprise is to minimize the variational free energy F(o, b), an information-theoretic function of outcomes o and an internal state of the agent defined as a probability density b over (hidden) causes of these outcomes (Friston, Thornton, & Clark, 2012):

$$F(o, b) = E_q[-\ln p(s, o \mid m)] - H[q(s \mid b)]$$

where:

- • p(s, o | m) is a generative density representing the joint probability of world states s and observations o based on an agent model m;

- • q(s | b) is a recognition density that defines an agent’s beliefs about the hidden states s given internal state of agent b;

- • E_q[∙] is the expected value over the recognition density, i.e., E_q[− ln p(s, o | m)] = − ∑_s q(s | b) ln p(s, o | m); and

- • H[∙] is the entropy of the recognition density, i.e., H[q(s | b)] = − ∑_s q(s | b) ln q(s | b).

By rewriting the free energy function, we can obtain several interpretations of how adaptive agents “behave.” First, free energy equals surprise plus a divergence term; because the divergence is nonnegative, free energy is an upper bound on surprise:

$$F(o, b) = -\ln p(o \mid m) + D_{KL}[q(s \mid b) \,\|\, p(s \mid o, m)] \geq -\ln p(o \mid m)$$

where DKL[q(s | b)‖ p(s | o, m)] is the Kullback-Leibler divergence between the recognition density q(s | b) and the true posterior of the world states p(s | o, m) = p(s | o). Consequently, minimizing free energy with respect to the internal state b tightens this bound, which becomes exact when the perceptions q(s | b) equal the posterior density p(s | o, m); free energy can then serve as a tractable proxy for surprise.

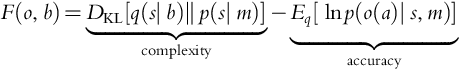

Second, we can rewrite the free energy as the difference between complexity and accuracy:

$$F(o, b) = D_{KL}[q(s \mid b) \,\|\, p(s \mid m)] - E_q[\ln p(o \mid s, m)]$$

Here, DKL[q(s | b)‖ p(s | m)] is a measure of divergence between the recognition density q(s | b) and prior beliefs about the world p(s | m), interpretable as a measure of complexity; the second component is the expected log-likelihood of the observations o = o(a) received after performing an action a, which represents accuracy. This result means that the agent modifies its sensory inputs o = o(a) through action a to achieve the most accurate explanation of the data under fixed complexity costs. Accordingly, we can now define free energy minimization using two sequential phases that separate estimation and control:
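Both decompositions follow from the definition F(o, b) = E_q[−ln p(s, o | m)] − H[q(s | b)], and they are easy to check on a toy discrete model. In the sketch below, the two hidden states, two observations, prior, likelihood, and recognition density are all hypothetical values chosen for illustration.

```python
import math

# Hypothetical generative model m: prior p(s) and likelihood p(o | s).
prior = {"s0": 0.6, "s1": 0.4}
likelihood = {"s0": {"o0": 0.9, "o1": 0.1},
              "s1": {"o0": 0.2, "o1": 0.8}}


def joint(s, o):
    """Generative density p(s, o | m) = p(s | m) p(o | s, m)."""
    return prior[s] * likelihood[s][o]


def free_energy(q, o):
    """F(o, b) = E_q[-ln p(s, o | m)] - H[q(s | b)]."""
    energy = sum(q[s] * -math.log(joint(s, o)) for s in q)
    entropy = -sum(q[s] * math.log(q[s]) for s in q if q[s] > 0)
    return energy - entropy


def kl(q, p):
    """Kullback-Leibler divergence D_KL[q || p] for discrete distributions."""
    return sum(q[s] * math.log(q[s] / p[s]) for s in q if q[s] > 0)


o = "o1"
evidence = sum(joint(s, o) for s in prior)              # p(o | m)
surprise = -math.log(evidence)
posterior = {s: joint(s, o) / evidence for s in prior}  # p(s | o, m)

q = {"s0": 0.5, "s1": 0.5}  # an arbitrary (hypothetical) recognition density

F = free_energy(q, o)
F1 = surprise + kl(q, posterior)            # surprise + divergence
complexity = kl(q, prior)
accuracy = sum(q[s] * math.log(likelihood[s][o]) for s in q)
F2 = complexity - accuracy                  # complexity - accuracy

print(F, F1, F2, surprise)
print(free_energy(posterior, o))  # the bound is tight when q is the posterior
```

The three numbers on the first line agree, and all exceed the surprise unless q equals the posterior.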

- • Perception phase finds beliefs b∗ = arg min_b F(o, b); and

- • Control phase finds actions a∗ = arg min_a F(o(a), b∗).

The control phase produces a policy for an agent to generate observations that entail, on average, the smallest free energy. This result ensures that the individual actions produced over time are not deterministic, and that the control phase can be converted into a sampling process a∗ ~ Q(a, b, o) as a function of exploration bonus plus expected utility (Friston et al., 2013) or average free energy (Friston, Samothrakis, & Montague, 2012). Further, the free energy depends on the agent’s model m, which can be adapted to minimize its free energy via evolutionary or neurodevelopmental optimization. This process is distinct from perception; it entails changing the form and architecture of the agent (Friston, Thornton, & Clark, 2012). Consequently, the free energy function can be used to compare two or more agents (models) with each other (a better agent is one with smaller free energy), and thus serves as an ultimate measure of fitness, congruence, or match between the agent and its environment.
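The two-phase scheme can be illustrated on a toy model. This is a sketch under stated assumptions, not the authors’ implementation: perception is a brute-force grid search over q(s | b), each action a is assumed to produce a deterministic observation o(a) through a hypothetical `outcome_of` map, and the stochastic control rule P(a) ∝ exp(−F(o(a), b∗)/T) is one simple choice for the sampling process Q, with the temperature T as an assumed parameter. The sketch omits any explicit exploration bonus.

```python
import math
import random

# Hypothetical generative model m: prior p(s) and likelihood p(o | s).
prior = {"s0": 0.6, "s1": 0.4}
likelihood = {"s0": {"o0": 0.9, "o1": 0.1},
              "s1": {"o0": 0.2, "o1": 0.8}}
# Hypothetical deterministic action-to-observation map o = o(a).
outcome_of = {"look_left": "o0", "look_right": "o1"}


def free_energy(q, o):
    """F(o, b) = E_q[-ln p(s, o | m)] - H[q(s | b)]."""
    energy = sum(q[s] * -math.log(prior[s] * likelihood[s][o]) for s in q)
    entropy = -sum(q[s] * math.log(q[s]) for s in q if q[s] > 0)
    return energy - entropy


def perceive(o, grid=1000):
    """Perception phase: b* = arg min_b F(o, b), by grid search over q(s0)."""
    candidates = ({"s0": k / grid, "s1": 1 - k / grid} for k in range(1, grid))
    return min(candidates, key=lambda q: free_energy(q, o))


def act(q, temperature=1.0, rng=random):
    """Control phase: sample a with P(a) proportional to exp(-F(o(a), b*) / T)."""
    actions = list(outcome_of)
    f = [free_energy(q, outcome_of[a]) for a in actions]
    weights = [math.exp(-(fa - min(f)) / temperature) for fa in f]
    choice = rng.choices(actions, weights=weights, k=1)[0]
    return choice, dict(zip(actions, f))


q_star = perceive("o0")
action, F_by_action = act(q_star, temperature=0.01, rng=random.Random(1))
print(q_star, action, F_by_action)
```

With a low temperature the rule approaches the deterministic arg min; raising T makes alternative actions more likely, which is one way to obtain the non-deterministic behavior described above.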

4.2.4 Behavior Workflow and Computational Considerations

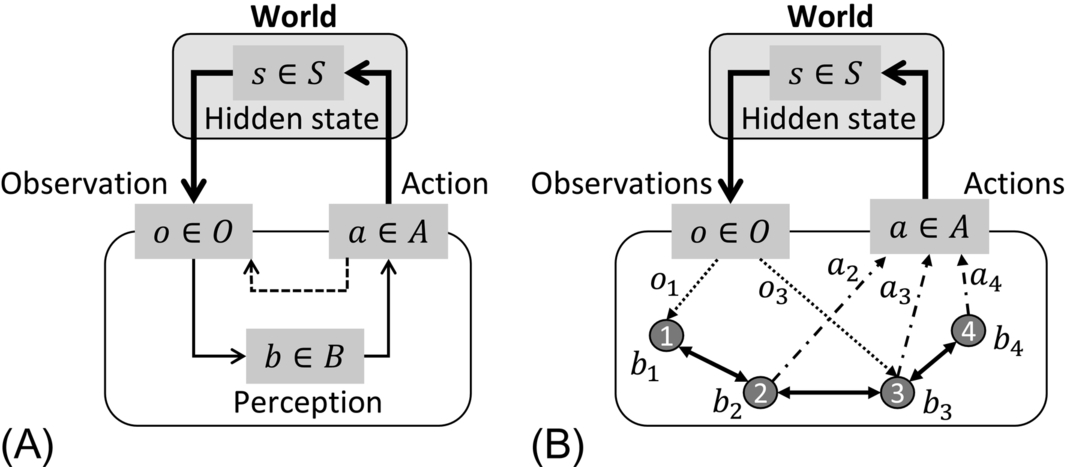

Fig. 4.1 depicts a simplified schematic of the resulting cycle of sensing, control, and perception in adaptive agents, where posterior expectations (about the hidden causes of observation inputs) minimize free energy and prescribe actions. A team of agents differs from a single agent model by distributing the observations, perceptions, and actions among multiple agents, while allowing the agents to communicate to achieve team-level goals.

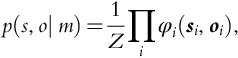

The key benefit of using the information-theoretic free-energy principle for modeling dynamical systems is that the function − ln p(s, o | m) has a simple mathematical structure when the generative density p(s, o | m) factorizes:

$$p(s, o \mid m) = \frac{1}{Z} \prod_i \phi_i(s_i, o_i), \qquad -\ln p(s, o \mid m) = -\sum_i \ln \phi_i(s_i, o_i) + \ln Z$$

where {si, oi} represent the subsets of state and observation variables, φi are factor functions encoding dependency relations among the corresponding variables, and Z is the normalization constant. The functions φi(∙) are usually simple, typically describing relations among one to four variables at a time. The agent, or a team of agents, can then minimize free energy with respect to its internal state (recognition density q(s | b)) using a generalized belief propagation (BP) algorithm (Friston et al., 2013), an iterative procedure based on message passing. Moreover, using a standard BP algorithm, derived from a Bethe approximation to the variational free energy (Yedidia, Freeman, & Weiss, 2005), the agents can obtain the approximating density in a peer-to-peer manner with low computational complexity. On factor graphs with cycles, standard BP does not guarantee convergence, but it often performs well in practice.
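To make the message-passing idea concrete, the sketch below runs sum-product belief propagation on a small, hypothetical tree-structured factor graph with three binary variables and compares the resulting beliefs against marginals obtained by brute-force enumeration of p ∝ ∏ φi. On a tree, BP is exact; on graphs with cycles the same updates give only the loopy-BP approximation. The factor tables and the fixed iteration count are illustrative assumptions.

```python
import itertools
import math

# Hypothetical tree-structured factor graph over binary variables x0, x1, x2:
# phi_a(x0, x1), phi_b(x1, x2), phi_c(x2). Each factor is (scope, table).
factors = {
    "a": ((0, 1), {(0, 0): 2.0, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 3.0}),
    "b": ((1, 2), {(0, 0): 1.0, (0, 1): 4.0, (1, 0): 2.0, (1, 1): 1.0}),
    "c": ((2,), {(0,): 1.0, (1,): 2.0}),
}
variables = [0, 1, 2]


def normalize(v):
    z = sum(v)
    return [x / z for x in v]


def sum_product(factors, variables, iters=20):
    """Sum-product BP; returns normalized beliefs b_i(x_i) for each variable."""
    nbrs = {i: [f for f, (scope, _) in factors.items() if i in scope] for i in variables}
    m_vf = {(i, f): [1.0, 1.0] for i in variables for f in nbrs[i]}
    m_fv = {(f, i): [1.0, 1.0] for i in variables for f in nbrs[i]}
    for _ in range(iters):
        # Variable-to-factor: product of messages from all other neighboring factors.
        for i, f in m_vf:
            msg = [1.0, 1.0]
            for g in nbrs[i]:
                if g != f:
                    msg = [msg[x] * m_fv[(g, i)][x] for x in (0, 1)]
            m_vf[(i, f)] = normalize(msg)
        # Factor-to-variable: sum factor times incoming messages over the other variables.
        for f, i in m_fv:
            scope, table = factors[f]
            msg = [0.0, 0.0]
            for vals in itertools.product((0, 1), repeat=len(scope)):
                w = table[vals]
                for k, var in enumerate(scope):
                    if var != i:
                        w *= m_vf[(var, f)][vals[k]]
                msg[vals[scope.index(i)]] += w
            m_fv[(f, i)] = normalize(msg)
    beliefs = {}
    for i in variables:
        b = [1.0, 1.0]
        for f in nbrs[i]:
            b = [b[x] * m_fv[(f, i)][x] for x in (0, 1)]
        beliefs[i] = normalize(b)
    return beliefs


def brute_force_marginals(factors, variables):
    """Exact marginals of p(x) = (1/Z) prod_f phi_f(x_f) by full enumeration."""
    marg = {i: [0.0, 0.0] for i in variables}
    for x in itertools.product((0, 1), repeat=len(variables)):
        w = math.prod(table[tuple(x[v] for v in scope)] for scope, table in factors.values())
        for i in variables:
            marg[i][x[i]] += w
    return {i: normalize(m) for i, m in marg.items()}


bp = sum_product(factors, variables)
exact = brute_force_marginals(factors, variables)
for i in variables:
    print(i, [round(v, 4) for v in bp[i]], [round(v, 4) for v in exact[i]])
```

Each update uses only the factors and messages adjacent to a node, which is what allows the computation to be split among peers.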

The free energy principle does not dictate the specifics of a generative process, i.e., the structure/components of the generative density p(s, o | m) required to define the free-energy function. Nor does it prescribe the algorithms that need to be employed to minimize free energy. However, it provides a unifying framework that can be tailored to specific environments and systems. Next, we apply the free-energy formalisms to the design of adaptive multiagent teams, defining appropriate abstractions, and discussing their implications for the IoE functional requirements.

4.3 Application of Energy Formalism to Multiagent Teams

4.3.1 Motivation

A team consisting of human and machine agents is a decentralized purpose-driven system. One of the main challenges in defining adaptive team behavior is decomposing global team-level perception and control, and the corresponding optimization processes, into local inferences and decisions produced by individual agents without external control.

In our previous work we showed how free-energy minimization can be applied to define adaptive behavior in teams that execute given multitask missions (Levchuk, Pattipati, Fouse, & Serfaty, 2017). Examples of teams include military organizations, manufacturing teams, and many other project-based organizations. These teams interact with their environment by jointly assigning and executing tasks; optimal teams have the highest task execution accuracy and/or the fastest execution times. In the domain of project-based teams, we considered team members (agents) to possess high levels of intelligence, and thus assumed that agent-to-task assignment decision-making processes should not be externally controlled. We defined observations as the outcomes of task execution, and treated task assignments as a world state variable hidden from the agent. Consequently, the perception phase estimated the probability of agent-to-task assignments, while the control phase defined a team’s organization structure, including the roles and relationships that constrain the tasks that agents could assign and execute, specifying a formal process for the team to resist its disorder.

In this chapter we review an instantiation of energy-minimizing adaptive behavior in a distributed decision-making setting. This entails a more general setup than project-based teams, motivated by the following. First, we wanted to understand how intelligent adaptive behaviors are related to the formal dynamics and structures among agents. We studied how organizational structure influences the processes of searching for sets of good decisions and stabilizing around good decisions once they are discovered (Rivkin & Siggelkow, 2003). The search and stability issues are conceptually identical to the exploration-exploitation tradeoffs afforded by free-energy minimization, allowing us to examine the alignments between energy-based computational mechanisms and discrete human decision-making processes analyzed by Rivkin and Siggelkow.

Second, we posit that the free-energy principle explains the empirically observed behaviors of business organizations. Unlike network-based theories of cities (e.g., Schläpfer et al., 2014), which are developed and tested using extensive quantitative data about social and economic transactions, the development of behavior models for business organizations has lagged behind, because data on the operations of business enterprises (e.g., communication channels, personnel assignments, and task outcomes) are often proprietary and unavailable. However, it has been empirically observed that as companies mature and grow, they attempt to maximize profits (utility) at the expense of innovation (entropy, disruption, or disorder), placing increasing emphasis on rules, regulations, and other forms of bureaucratic control over their members, impeding market adjustments and leading to their eventual demise (West, 2017). The free-energy principle explains how placing more emphasis on utility than on entropy makes the system brittle and unable to adapt to a changing environment.

Finally, we wanted to identify what implications the free-energy minimization principle had on the design of agents that constitute the effective members of a high-performance team.

4.3.2 Problem Definition

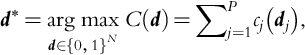

The formal definition of a distributed decision-making problem (Rivkin & Siggelkow, 2003) is as follows. Assume that a team of M agents seeks an N-dimensional binary decision vector d = [d1, …, dN], where di ∈ {0, 1}, to maximize its additive objective function, i.e.,

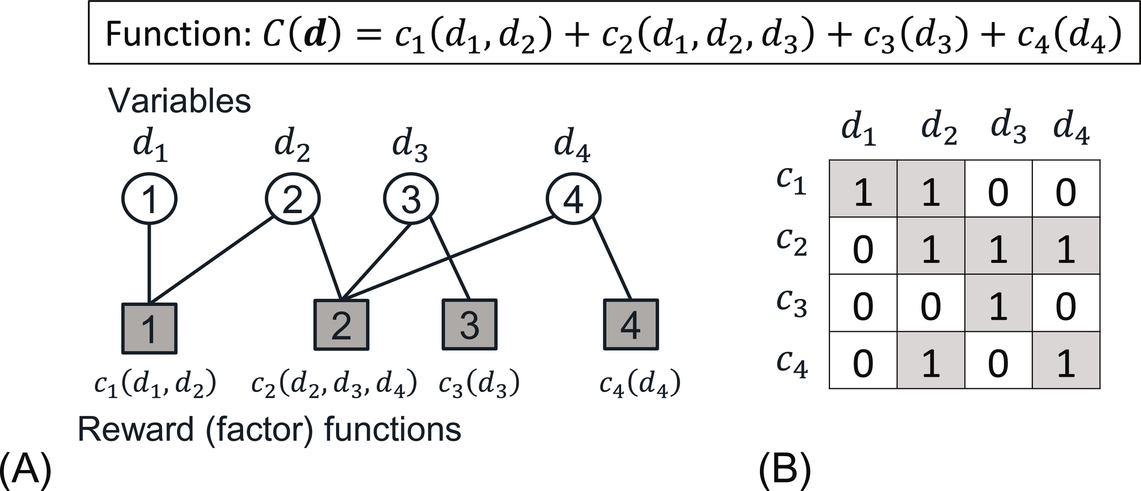

where dj is a subset of decision variables, and P is the number of these subsets. Component reward functions cj(dj) encode dependencies between decisions in subset dj (such as local and team-level rewards). The general additive form of the objective function C(d) represents many real-world search and inference problems. Relationships among the reward functions and decision variables can be expressed using a factor graph or a function-to-decision adjacency matrix (Fig. 4.2).
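A minimal Python sketch of this objective, assuming a hypothetical random instance in which each of the P reward tables cj depends on K randomly chosen binary decisions (similar in spirit to the K parameter used in Section 4.4). The exhaustive search enumerates all 2^N decision vectors, which is exactly the approach that becomes infeasible as N grows.

```python
import itertools
import random


def random_problem(n, p, k, seed=0):
    """Hypothetical generator: P reward tables c_j, each over K random decision variables."""
    rng = random.Random(seed)
    problem = []
    for _ in range(p):
        scope = tuple(sorted(rng.sample(range(n), k)))
        table = {vals: rng.random() for vals in itertools.product((0, 1), repeat=k)}
        problem.append((scope, table))
    return problem


def team_reward(problem, d):
    """Additive objective C(d) = sum_j c_j(d_j), where d_j is d restricted to scope j."""
    return sum(table[tuple(d[i] for i in scope)] for scope, table in problem)


def exhaustive_search(problem, n):
    """Enumerates all 2**n decision vectors; feasible only for small n."""
    return max(itertools.product((0, 1), repeat=n), key=lambda d: team_reward(problem, d))


problem = random_problem(n=10, p=10, k=3)
best = exhaustive_search(problem, 10)
print(best, round(team_reward(problem, best), 4))
```

Doubling N from 10 to 20 raises the number of evaluated vectors from 1,024 to 1,048,576, which motivates the distributed search that follows.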

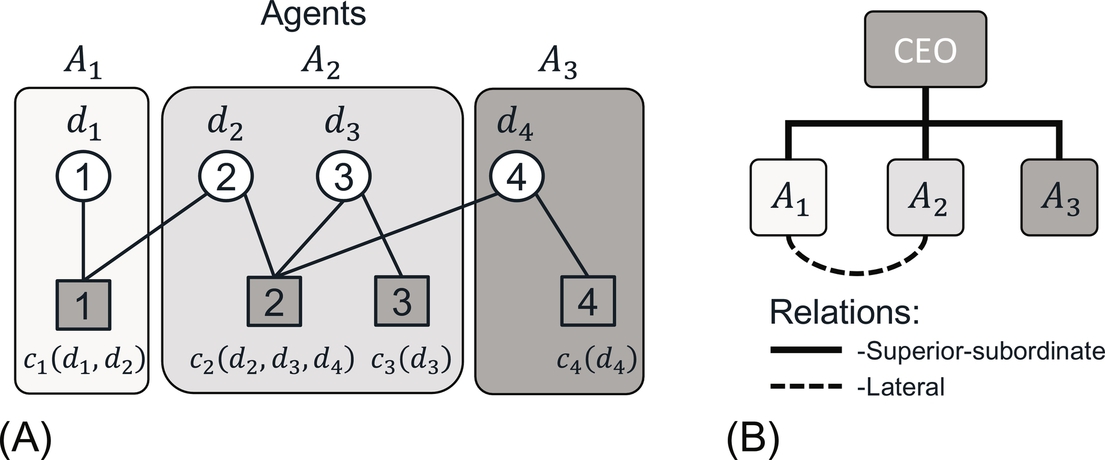

As the number N of decision variables increases, the space of possible decision outcomes d grows exponentially.1 As a result, the optimal solution to the above maximization problem cannot be achieved by an exhaustive search of the values of joint reward function C(d). Instead, an intelligent search in the space of all possible decisions needs to be conducted by the team of agents. To constrain the actions and enable agent collaboration, the organizational structure m among the agents is defined using two variables (Fig. 4.3):

- • Decision decomposition, prescribing subsets of decisions and cost functions assigned to each agent; and

- • An agent network, prescribing superior-subordinate relations among the agents.

Each agent is locally aware of and controls only a subset of decisions and reward functions, giving rise to potential local-global decision inconsistencies. Accordingly, to produce team optimal decisions (maximizing the team objective function C(d)), agents have to collaborate.

Rivkin and Siggelkow (2003) defined a version of the decision-making and collaboration processes that mimicked the human decision making that occurs in business organizations. Subordinate agents generate a set of discrete decision vectors, rank these vectors by local payoffs, and communicate the ranked vectors to a superior agent (indicated as CEO in Fig. 4.3B). The superior agent combines the vectors of subordinates into a set of candidate team-level decision vectors, evaluates them against the team’s objective function, and communicates the best vector to the subordinates for implementation. The number of decision vector values the agents can evaluate during a specified time period is constrained by internal capacity. Lateral relationships can also be defined among the agents, allowing one agent to inform another about its local decision vectors.

The decision-making process (Rivkin & Siggelkow, 2003) presents a heuristic solution to a distributed optimization problem, but provides no guarantees of convergence or efficacy even with the introduction of global incentives for agents. This heuristic also does not explain the causes of an underlying behavior, nor does it prescribe an adaptive behavior for the team or its members. We can still evaluate and compare the behaviors of teams of agents using these heuristics in terms of the time it takes to produce their solution, its proximity to the true maximum, and the amount of exploration versus exploitation in the state space of all decision vectors the agents jointly generate. In the following, we offer a formal optimization process that solves this problem in a distributed manner using free energy–minimization principles, along with the concomitant adaptive team behaviors exhibited by the team members during a search process. We show that an energy-based search provides a mechanism to adapt the multiagent behaviors and significantly outperforms the discrete optimization heuristics by Rivkin and Siggelkow described above.

4.3.3 Distributed Collaborative Search Via Free Energy Minimization

We recast the problem described above as joint inference over a factorized objective function of the kind described in Section 4.2.4. In the distributed search problem of Section 4.3.2, the environment is fully observable; hence we omit the observation notation from the rest of the exposition. We define the adaptive behavior for a team of agents based on the free-energy principle using three processes:

- • Perception will find the beliefs b representing the probability distribution q(d | b);

- • Control will produce the next decision d by sampling the state space to minimize surprise; and

- • Reorganization will adapt the structure m among agents in terms of decision decompositions and the agent network.

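Formally, we define a generative probability distribution over the decision vector d as:

$$p(d \mid m) = \frac{1}{Z} \prod_{j=1}^{P} \phi_j(d_j) = \frac{1}{Z} \exp\big(C(d)\big)$$

where φj(dj) = exp(cj(dj)). We can then write the variational free energy as a function of beliefs b and of the team structure m:

$$F(b, m) = E_q[-\ln p(d \mid m)] - H[q(d \mid b)] = -\sum_{j=1}^{P} E_q[c_j(d_j)] - H[q(d \mid b)] + \ln Z$$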

Then minimizing the variational free energy F(b, m) with respect to probability functions q(d | b) becomes an exact procedure for bounding surprise and recovering p(d | m). Exact minimization, however, is intractable for general forms of q(d | b) due to the curse of dimensionality.
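For a problem small enough to enumerate, the free energy can be computed exactly, taking p(d | m) = exp(C(d))/Z as implied by φj(dj) = exp(cj(dj)). The sketch below uses hypothetical reward tables over three binary decisions. It illustrates two points: F(b, m) reduces to KL(q ‖ p) and vanishes at q = p, and a deterministic belief concentrated on the single best decision vector has strictly positive free energy.

```python
import itertools
import math
import random

# Hypothetical tiny problem: N = 3 binary decisions, two reward tables c_j over (scope, table).
rewards = [((0, 1), {(0, 0): 1.0, (0, 1): 0.2, (1, 0): 0.5, (1, 1): 1.5}),
           ((1, 2), {(0, 0): 0.3, (0, 1): 1.2, (1, 0): 0.8, (1, 1): 0.1})]
space = list(itertools.product((0, 1), repeat=3))


def C(d):
    """Additive team objective C(d) = sum_j c_j(d_j)."""
    return sum(t[tuple(d[i] for i in scope)] for scope, t in rewards)


log_Z = math.log(sum(math.exp(C(d)) for d in space))
p = {d: math.exp(C(d) - log_Z) for d in space}  # p(d | m) = exp(C(d)) / Z


def free_energy(q):
    """F(b, m) = -E_q[C(d)] - H[q(d | b)] + ln Z, which equals KL(q || p)."""
    expected_reward = sum(q[d] * C(d) for d in space)
    entropy = -sum(q[d] * math.log(q[d]) for d in space if q[d] > 0)
    return -expected_reward - entropy + log_Z


rng = random.Random(0)
w = [rng.random() for _ in space]
q_random = {d: wi / sum(w) for d, wi in zip(space, w)}
best = max(space, key=C)
q_map = {d: float(d == best) for d in space}  # point mass on the best decision vector
print(free_energy(p), free_energy(q_random), free_energy(q_map))
```

Because ln Z exceeds the largest reward C(d∗), the point-mass belief always pays a free-energy penalty, consistent with the earlier observation that free-energy-minimizing behavior is not deterministic.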

When the generative probability is factorizable, as we described in Section 4.2.4, generalized belief propagation is used to find the marginal probability distributions (Yedidia et al., 2005) that form the basis for generating decision points stochastically. This method, however, requires a difficult decision decomposition step and incurs high computational cost in an optimal message aggregation step.

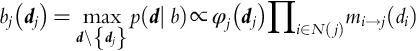

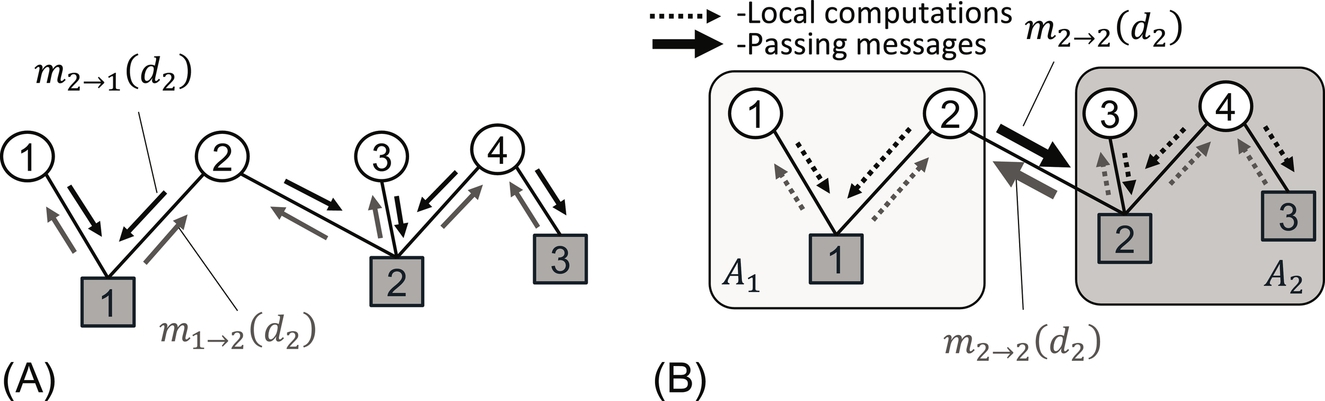

Two features can help us address these challenges. First, because p(d | m) ∝ exp(C(d)), maximizing the global team objective function is equivalent to maximizing the joint decision probability. This process, known as maximum a posteriori (MAP) estimation, requires max-marginal probability values rather than marginal probabilities. Second, instead of computing the belief distributions exactly, we use the approximate solution produced by the standard belief propagation algorithm (Yedidia et al., 2005), based on the Bethe approximation of the free-energy function. We use the max-product algorithm to reduce the space of distributions that must be analyzed, lowering the computational complexity. The max-product belief propagation algorithm computes max-marginal distributions by iteratively passing belief messages between variable and factor nodes in the factor graph (Fig. 4.4A) using the following update equations:

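- • Variable-to-factor message updates multiply all incoming messages except the one from the recipient factor:

$$\mu_{i \to j}(d_i) = \prod_{k \in N(i) \setminus \{j\}} \mu_{k \to i}(d_i)$$

- • Factor-to-variable message updates maximize the component function combined with the other incoming messages:

$$\mu_{j \to i}(d_i) = \max_{d_j \setminus d_i} \Big[ \phi_j(d_j) \prod_{k \in N(j) \setminus \{i\}} \mu_{k \to j}(d_k) \Big]$$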

In the above, N(i) denotes the neighbor nodes of node i in a factor graph. For a team of agents, some of the computations above are conducted locally by a single agent, while the message passing across the links between variables and factors that are assigned to different agents need to be physically passed among the agents (Fig. 4.4B). These messages define formal local-global decision making, while the collaborative process is formally specified as the belief messages sent between connected agents.
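The sketch below implements max-product BP in the log domain (max-sum), which is equivalent to the max-product updates with φj = exp(cj) after taking logarithms: products of messages become sums, and the factor update maximizes the reward plus incoming messages. The tree-structured problem over four binary decisions is hypothetical. On a tree, the resulting variable beliefs equal the exact log max-marginals (the maximum of C(d) over all d with di fixed); on loopy graphs, messages would typically also need normalization (e.g., subtracting their maximum) and the result is approximate.

```python
import itertools

# Hypothetical tree-structured problem over binary decisions d0..d3: (scope, reward table).
rewards = {
    "c_a": ((0, 1), {(0, 0): 1.0, (0, 1): 0.0, (1, 0): 0.4, (1, 1): 2.0}),
    "c_b": ((1, 2), {(0, 0): 0.5, (0, 1): 1.5, (1, 0): 1.0, (1, 1): 0.2}),
    "c_c": ((1, 3), {(0, 0): 0.3, (0, 1): 0.9, (1, 0): 1.1, (1, 1): 0.0}),
}
variables = [0, 1, 2, 3]
nbrs = {i: [j for j, (scope, _) in rewards.items() if i in scope] for i in variables}


def max_sum(rewards, variables, iters=10):
    """Max-product BP in the log domain (phi_j = exp(c_j)); returns log max-marginals."""
    m_vf = {(i, j): [0.0, 0.0] for i in variables for j in nbrs[i]}
    m_fv = {(j, i): [0.0, 0.0] for i in variables for j in nbrs[i]}
    for _ in range(iters):
        # Variable-to-factor: combine (add, in logs) all other incoming factor messages.
        for i, j in m_vf:
            m_vf[(i, j)] = [sum(m_fv[(k, i)][x] for k in nbrs[i] if k != j) for x in (0, 1)]
        # Factor-to-variable: maximize reward plus incoming messages over the other variables.
        for j, i in m_fv:
            scope, table = rewards[j]
            best = [float("-inf")] * 2
            for vals in itertools.product((0, 1), repeat=len(scope)):
                score = table[vals] + sum(
                    m_vf[(v, j)][vals[k]] for k, v in enumerate(scope) if v != i)
                x = vals[scope.index(i)]
                best[x] = max(best[x], score)
            m_fv[(j, i)] = best
    return {i: [sum(m_fv[(j, i)][x] for j in nbrs[i]) for x in (0, 1)] for i in variables}


def C(d):
    return sum(t[tuple(d[v] for v in scope)] for scope, t in rewards.values())


log_max_marginals = max_sum(rewards, variables)
d_hat = [max((0, 1), key=lambda x: log_max_marginals[i][x]) for i in variables]
print(log_max_marginals, d_hat, C(d_hat))
```

Here d_hat is read off by taking the arg max of each variable’s max-marginal. In a team, each agent would run the updates for the variable and factor nodes it owns and exchange only the messages on links that cross agent boundaries.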

Variable-to-factor messages contain beliefs about the variable and are interpreted as experience messages. Factor-to-variable messages are probability distributions over decision values at the corresponding variable node and are interpreted as influence messages. The free energy principle and approximate inference with the max-product algorithm require the agents only to understand how their local decisions depend on the decisions of other agents, and to be capable of creating, sharing, and interpreting experience and influence messages.

After the max-product algorithm converges, the agents obtain the max-marginal probability distributions and use them to sample the space of decision vectors locally (i.e., each agent samples its own subset of decision vector variables). Max-marginal estimates include variable marginals, used by agents to sample the decision space:

$$b_i(d_i) \propto \prod_{j \in N(i)} \mu_{j \to i}(d_i)$$

as well as factor marginals, used to adapt a team’s structure:

$$b_j(d_j) \propto \phi_j(d_j) \prod_{i \in N(j)} \mu_{i \to j}(d_i)$$

With these quantities, we compute a Bethe approximation to the free-energy function (Yedidia et al., 2005):

$$F_{Bethe}(b, m) = U_{Bethe}(b, m) - H_{Bethe}(b, m)$$

where the first component is the negative expected utility

$$U_{Bethe}(b, m) = -\sum_j \sum_{d_j} b_j(d_j)\, c_j(d_j)$$

and the second component is the Bethe entropy

$$H_{Bethe}(b, m) = -\sum_j \sum_{d_j} b_j(d_j) \ln b_j(d_j) + \sum_i (n_i - 1) \sum_{d_i} b_i(d_i) \ln b_i(d_i)$$

where ni = |N(i)| is the number of factors dj that variable i is involved in. Minimizing this free energy therefore favors marginals that achieve the highest expected utility while remaining as varied (high-entropy) as possible, rather than collapsing prematurely onto a single decision.
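The Bethe free energy can be checked on a chain, where the Bethe entropy is exact. The sketch below, with hypothetical reward tables, computes exact variable and factor marginals by enumeration (standing in for converged sum-product beliefs) and evaluates U_Bethe − H_Bethe; for a tree-structured factor graph this should equal −ln Z, the minimum of the exact variational free energy −E_q[C(d)] − H[q]. With max-marginals, as used in the chapter, this identity does not hold in general.

```python
import itertools
import math

# Hypothetical chain-structured rewards over binary decisions d0 - d1 - d2: (scope, table).
rewards = [((0, 1), {(0, 0): 1.0, (0, 1): 0.2, (1, 0): 0.5, (1, 1): 1.5}),
           ((1, 2), {(0, 0): 0.3, (0, 1): 1.2, (1, 0): 0.8, (1, 1): 0.1})]
n_vars = 3
space = list(itertools.product((0, 1), repeat=n_vars))


def C(d):
    return sum(t[tuple(d[i] for i in scope)] for scope, t in rewards)


Z = sum(math.exp(C(d)) for d in space)
p = {d: math.exp(C(d)) / Z for d in space}

# Exact variable marginals b_i(d_i) and factor marginals b_j(d_j), by enumeration.
b_var = [[sum(p[d] for d in space if d[i] == x) for x in (0, 1)] for i in range(n_vars)]
b_fac = [
    {vals: sum(p[d] for d in space if tuple(d[v] for v in scope) == vals) for vals in table}
    for scope, table in rewards
]
n_i = [sum(i in scope for scope, _ in rewards) for i in range(n_vars)]  # factors per variable


def bethe_free_energy(b_var, b_fac):
    """F_Bethe = U_Bethe - H_Bethe with the Bethe entropy of Yedidia et al."""
    U = -sum(b[vals] * t[vals] for (_, t), b in zip(rewards, b_fac) for vals in t)
    H = -sum(b[vals] * math.log(b[vals]) for b in b_fac for vals in b if b[vals] > 0)
    H += sum((n_i[i] - 1) * sum(bx * math.log(bx) for bx in b_var[i] if bx > 0)
             for i in range(n_vars))
    return U - H, U, H


F, U, H = bethe_free_energy(b_var, b_fac)
print(F, -math.log(Z))
```

The (ni − 1) correction removes the double counting of variable entropies that appear in more than one factor marginal.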

4.3.4 Adapting Team Structure

The team structure is represented by a model variable m, which affects the perceptions and decisions the team jointly produces. In addition, this structure also constrains how information flows and is incorporated in the organization, including where belief messages can be sent, what communication delays and transmission errors are incurred, which messages are used to update decisions, and the concomitant computational workload incurred by team agents. This problem of team structure design can be formulated (and solved) as a network design problem (Feremans, Labbé, & Laporte, 2003).

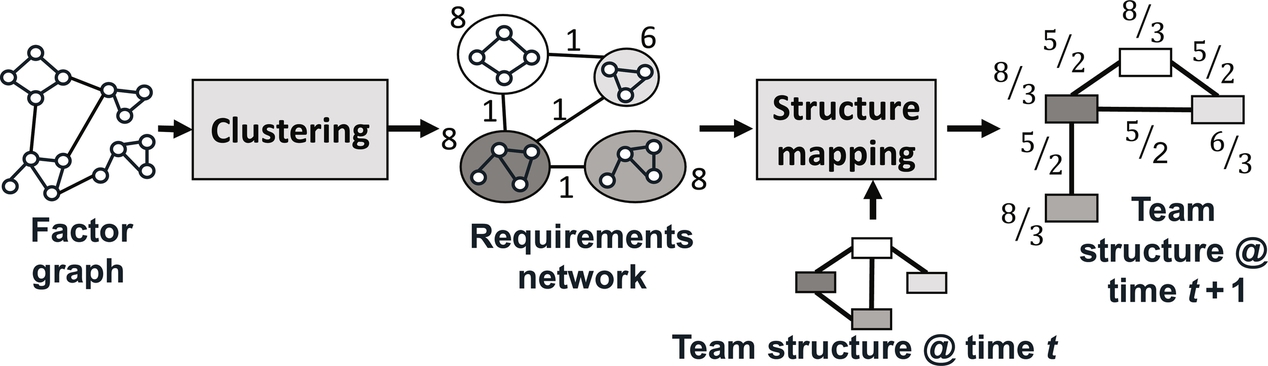

Decision decomposition and the corresponding BP message calculations represent the internal computational workload incurred by agents, that is, the collaboration process required to solve a decision problem. The specification of the messages passed between decision and factor nodes in a factor graph defines the external communication workload of the agents. The problem of structuring a team can be formulated as the alignment of decision decomposition (“the task network”) and the agent network (“team structure”) to properly balance internal and external workloads. We model the impact of internal and external workloads on a team’s perception and action processes using delay models and/or message transmission errors (the classic “delay-accuracy” tradeoff in team performance). Schematically, the team structuring problem can be decomposed into two steps (Fig. 4.5). First, we aggregate the decision and factor nodes via cluster analysis to balance the internal and external workloads, minimizing the inter-cluster links subject to constraints on the workload capacities of agents. Second, we use this network as a set of requirements and map it onto the current agent network, re-aligning agent network parameters (i.e., capacities) as the factor graph evolves (e.g., as its decision reward parameters change).
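The first structuring step can be sketched as a small constrained graph-partitioning problem. In this hypothetical sketch, internal workload is approximated by the number of factor-graph nodes assigned to an agent, external workload by the number of factor-graph links whose endpoints belong to different agents, and the partition is found by exhaustive search, which is feasible only for tiny graphs; practical instances would need a heuristic or a dedicated network design solver.

```python
import itertools

# Hypothetical factor graph: reward factors c* linked to the decision variables d* in their scope.
edges = [("c1", "d1"), ("c1", "d2"), ("c2", "d2"), ("c2", "d3"),
         ("c3", "d3"), ("c3", "d4"), ("c4", "d4"), ("c4", "d1")]
nodes = sorted({n for e in edges for n in e})


def external_workload(assign):
    """Number of factor-graph links whose messages must cross between agents."""
    return sum(assign[u] != assign[v] for u, v in edges)


def decompose(n_agents, capacity):
    """Assign nodes to agents, minimizing inter-agent links subject to each agent
    handling at most `capacity` nodes (a proxy for internal workload)."""
    best, best_cost = None, float("inf")
    for labels in itertools.product(range(n_agents), repeat=len(nodes)):
        if max(labels.count(a) for a in range(n_agents)) > capacity:
            continue
        assign = dict(zip(nodes, labels))
        cost = external_workload(assign)
        if cost < best_cost:
            best, best_cost = assign, cost
    return best, best_cost


assignment, cost = decompose(n_agents=2, capacity=4)
print(assignment, cost)
```

Tightening the capacity forces the work apart and raises the external (communication) workload; loosening it lets one agent absorb everything, trading communication for internal load.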

4.4 Validation Experiments

4.4.1 Experiment Setup

We conducted several studies to validate our proposed adaptive team behavior model. First, we generated a collection of synthetic distributed decision-making problems by randomly generating the values for the joint reward function. We manipulated the density of dependencies between variables using a parameter K defined as the average number of variables influencing a factor node. We computed several assessment metrics, including the percent of trials where a team converged to an optimal solution (determined as producing a vector with a reward value within a small threshold of the optimal value), and a normalized payoff (computed as the fraction of the discovered decision payoff compared to the optimal one). We evaluated team performance over time, as well as using a time-averaged payoff. Second, we introduced random periodic variations of the parameters in the joint reward function to evaluate how quickly the teams can recover from these changes. The latter analysis enables us to quantify the attributes of resilience in a team: (1) capacity to absorb changes, (2) recoverability, and (3) adaptive capacity (Francis & Bekera, 2014).
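The assessment metrics can be computed from per-trial payoff trajectories. The sketch below assumes positive rewards (so that the normalized payoff is a meaningful fraction), a hypothetical tolerance `tol` for declaring a trial optimal, and hypothetical trajectory data in the usage lines.

```python
def normalized_payoff(found, optimal):
    """Fraction of the optimal payoff achieved by a team's decision (assumes optimal > 0)."""
    return found / optimal


def converged(found, optimal, tol=1e-3):
    """A trial counts as optimal if its reward is within a small (hypothetical) tolerance."""
    return abs(optimal - found) <= tol


def summarize(trials, tol=1e-3):
    """trials: list of (payoff_trajectory, optimal_payoff) pairs, one per run.

    Returns (percent of trials converged to the optimum, mean final normalized payoff,
    mean time-averaged normalized payoff)."""
    pct_optimal = 100.0 * sum(converged(traj[-1], opt, tol) for traj, opt in trials) / len(trials)
    final = sum(normalized_payoff(traj[-1], opt) for traj, opt in trials) / len(trials)
    time_avg = sum(
        sum(normalized_payoff(x, opt) for x in traj) / len(traj) for traj, opt in trials
    ) / len(trials)
    return pct_optimal, final, time_avg


# Hypothetical trajectories of the best payoff found per iteration, with the known optimum.
trials = [([0.5, 0.9, 1.0], 1.0), ([1.0, 1.5, 1.8], 2.0)]
pct, final, time_avg = summarize(trials)
print(pct, final, time_avg)
```

The time-averaged payoff rewards teams that reach good solutions early, which the final payoff alone does not capture.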

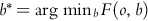

Using these generated datasets, we conducted several evaluations, comparing the discrete decision-making model (Rivkin & Siggelkow, 2003) with two alternative decision processes: perception-maximizing decisions (selecting decisions as a maximum of the max-marginal probabilities), and energy minimization (selecting a decision by sampling max-marginals to minimize surprise). We also analyzed the impact a specific team structure (models) had on the ability of the team to find correct solutions.
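The two max-marginal-based decision rules differ only in how each decision is read off. The sketch below assumes log-domain max-marginals (as produced by max-sum) and treats their normalized exponentials as a sampling distribution; that normalization, the `agent_of` ownership map, and the example values are hypothetical choices for illustration.

```python
import math
import random


def to_probs(log_b):
    """Normalize a log max-marginal [b(0), b(1)] into sampling probabilities."""
    m = max(log_b)
    w = [math.exp(v - m) for v in log_b]
    return [x / sum(w) for x in w]


def perception_maximizing(log_max_marginals):
    """Select each decision as the arg max of its max-marginal."""
    return {i: max((0, 1), key=lambda x: b[x]) for i, b in log_max_marginals.items()}


def energy_minimizing(log_max_marginals, agent_of, rng):
    """Each agent samples only the decisions it owns, from the normalized max-marginals."""
    d = {}
    for agent in sorted(set(agent_of.values())):
        for i, b in log_max_marginals.items():
            if agent_of[i] == agent:
                d[i] = rng.choices((0, 1), weights=to_probs(b), k=1)[0]
    return d


# Hypothetical log max-marginals and decision ownership.
log_b = {0: [3.4, 4.1], 1: [3.4, 4.1], 2: [4.1, 3.6], 3: [4.1, 3.9]}
agent_of = {0: "A", 1: "A", 2: "B", 3: "B"}
print(perception_maximizing(log_b))
print(energy_minimizing(log_b, agent_of, random.Random(0)))
```

The first rule always returns the same vector, whereas the second keeps proposing nearby alternatives in proportion to their max-marginals, which is the exploration behavior the energy-based teams rely on.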

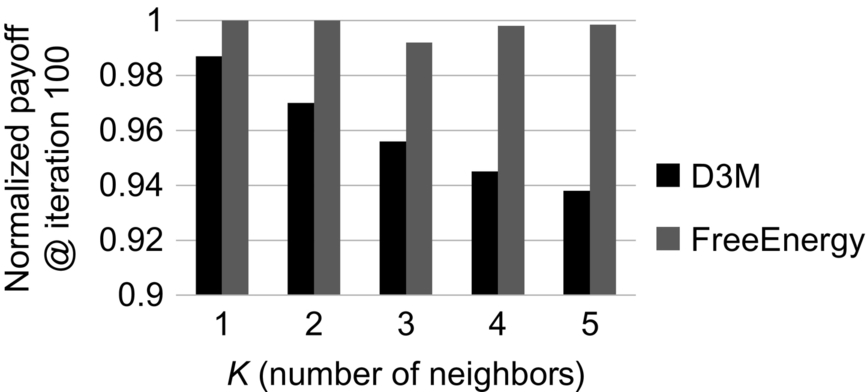

4.4.2 Discrete Decision Making Versus Free Energy

In our first evaluation, we compared the performance of distributed discrete decision making (D3M) heuristics with our model, where the decision vector minimizes a free-energy function. Fig. 4.6 shows the normalized payoff achieved at the 100th iteration by the best of D3M policies (Rivkin & Siggelkow, 2003) versus payoffs of one of the teams defined by our energy-optimizing model. While the free-energy solution provides only a marginal improvement compared to the D3M model (2%–10%), our model achieves convergence much faster than the D3M heuristics (usually at 15 iterations versus 50–80 iterations for D3M), and maintains high convergence for increasing objective function complexity (parameter K), while the performance of D3M consistently decreases with K. As a result, we continued to compare only energy-based adaptation processes.

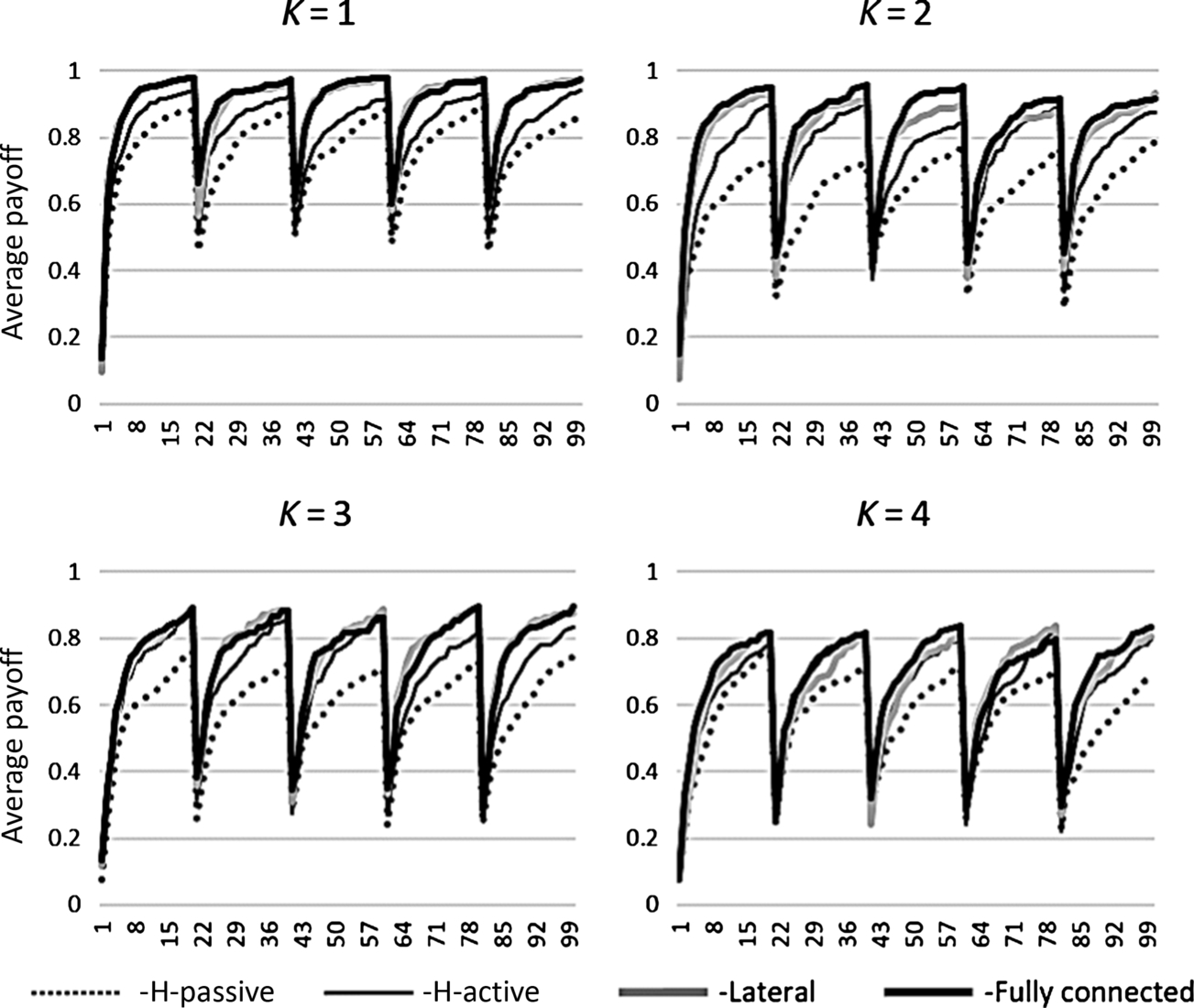

In the next set of experiments, we analyzed the ability of a team to adapt to changes in the environment. We defined these changes as random regeneration of the objective function's parameters, without changing the topology of the factor graph, every 20 decision iterations.
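A minimal sketch of this change schedule follows. It assumes factors stored as `(scope, table)` pairs and an additive joint reward. Every `period` iterations it redraws all table values but leaves each scope untouched, so the factor-graph topology is preserved. The `decide(factors, t)` interface and the placeholder rule are hypothetical stand-ins for a team's decision process.

```python
import itertools
import random

def regenerate_tables(factors, rng):
    """Redraw every factor's reward values while keeping its scope, so the
    factor-graph topology is unchanged."""
    return [(scope, {cfg: rng.random() for cfg in table}) for scope, table in factors]

def run_with_changes(factors, decide, n_iters, period, rng):
    """Run a decision rule decide(factors, t) (hypothetical interface) and
    regenerate the parameters every `period` iterations.
    Returns the joint reward obtained at each iteration."""
    rewards = []
    for t in range(n_iters):
        if t > 0 and t % period == 0:
            factors = regenerate_tables(factors, rng)
        x = decide(factors, t)
        rewards.append(sum(table[tuple(x[i] for i in scope)] for scope, table in factors))
    return rewards

def always_zero(factors, t):
    """Placeholder decision rule; a real team would run its inference here."""
    return [0, 0, 0]

rng = random.Random(0)
scopes = [(0, 1), (1, 2), (0, 2)]
factors = [(s, {cfg: rng.random() for cfg in itertools.product((0, 1), repeat=len(s))})
           for s in scopes]
rewards = run_with_changes(factors, always_zero, n_iters=60, period=20, rng=rng)
time_averaged = sum(rewards) / len(rewards)   # time-averaged payoff over the run
```

With a static placeholder rule, the reward is flat within each 20-iteration window and jumps at each regeneration. An adaptive team would instead show a drop followed by recovery, and the speed of that recovery is what the resilience metrics are meant to capture.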

4.4.3 Impact of Agent Network Structure

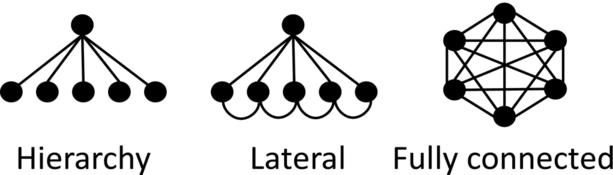

We compared the effects of three agent network topologies (Fig. 4.7) on the quality of the search process and on a team's ability to adapt. We also analyzed two behaviors of the root node (CEO agent) in the hierarchy: an “active” CEO passes indirect messages among subordinate agents, whereas a “passive” CEO ignores those messages completely.
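To make the active/passive distinction concrete, the sketch below encodes three illustrative topologies over a CEO (agent 0) and `n_sub` subordinates. It also encodes a delivery rule in which a message between unlinked subordinates arrives only if an active CEO relays it. The exact networks in Fig. 4.7 are not reproduced in the text, so the edge sets here are assumptions. "Hierarchy" is a star around the CEO, "lateral" adds a ring among subordinates, and "fully connected" links every pair of subordinates.

```python
from itertools import combinations

CEO = 0

def build_topology(kind, n_sub):
    """Return an undirected edge set over agents 0..n_sub (0 is the CEO).
    The specific edge patterns are illustrative assumptions."""
    subs = range(1, n_sub + 1)
    edges = {frozenset((CEO, s)) for s in subs}          # every topology keeps the hierarchy
    if kind == "lateral":
        edges |= {frozenset((s, s % n_sub + 1)) for s in subs}   # ring among subordinates
    elif kind == "fully_connected":
        edges |= {frozenset(p) for p in combinations(subs, 2)}
    elif kind != "hierarchy":
        raise ValueError(f"unknown topology: {kind}")
    return edges

def delivers(edges, sender, receiver, ceo_active):
    """Direct links always deliver; otherwise a message reaches the receiver
    only if an active CEO relays it (one hop through the root)."""
    if frozenset((sender, receiver)) in edges:
        return True
    return (ceo_active
            and frozenset((sender, CEO)) in edges
            and frozenset((CEO, receiver)) in edges)

hier = build_topology("hierarchy", 4)
lat = build_topology("lateral", 4)
full = build_topology("fully_connected", 4)
print(delivers(hier, 1, 3, ceo_active=False))  # False: no direct link, passive CEO drops it
print(delivers(hier, 1, 3, ceo_active=True))   # True: relayed through the CEO
```

Under these assumptions, lateral and fully connected teams depend less on the CEO's relaying, at the cost of more communication links per agent.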

From the average payoff values in Fig. 4.8, we concluded that organizations with stronger links among subordinates (“lateral” and “fully connected”) performed better. The relative benefit of such teams is highest for medium dependencies between decisions (K = 2–3). In these situations, the benefit of lateral coordination appears to outweigh the cost of managing multiple communications. The relative benefit of fully connected networks decreases as the dependencies become more complex (K > 3). We attribute this mainly to the suboptimality of a distributed solution when there are many dependencies (i.e., large K) among the decision variables.

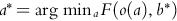

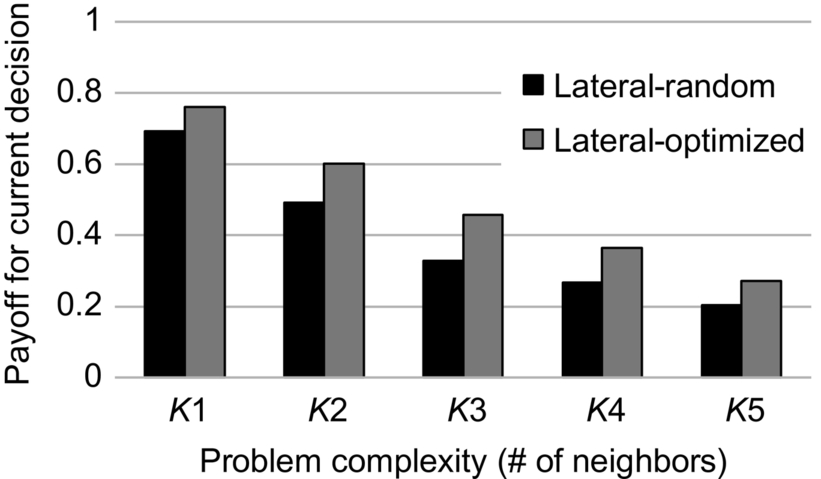

4.4.4 Impact of Decision Decomposition

Finally, we studied the effect of decision decomposition (the assignment of decision and factor nodes to agents) on team performance. We scored the quality of the current decision as the value of the objective function at the max-marginal vector. This is the vector obtained by setting each decision variable to the value that maximizes its max-marginal.
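Written out, and using notation introduced here only for illustration ($\mu_i$ for the max-marginal of decision variable $x_i$, $N$ for the number of decision variables, $R$ for the joint reward function, and $\mathbf{x}^{*}$ for an optimal decision vector), the score $S$ and its normalized form $\tilde{S}$ are:

```latex
\hat{x}_i = \arg\max_{x_i} \mu_i(x_i), \quad i = 1, \dots, N,
\qquad
S = R(\hat{\mathbf{x}}),
\qquad
\tilde{S} = \frac{R(\hat{\mathbf{x}})}{R(\mathbf{x}^{*})}
```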

We found that optimized decomposition, compared with random decomposition, improved the solutions achieved by a team, with a larger effect for lateral structures (Fig. 4.9).
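The chapter does not describe how decompositions were optimized. The sketch below uses one plausible criterion, stated here as an assumption: minimize the number of factor-variable edges that cross agent boundaries, because each such edge requires an inter-agent message. To keep it simple, decision variables stay fixed to agents and only factor nodes are placed. In that case, assigning each factor to the agent that owns most of its variables is optimal for this criterion. This is an illustrative stand-in, not the authors' procedure.

```python
import random
from collections import Counter

def cross_agent_links(scopes, var_owner, factor_owner):
    """Count (factor, variable) edges whose endpoints belong to different
    agents; each such edge implies an inter-agent message."""
    return sum(var_owner[v] != factor_owner[f]
               for f, scope in enumerate(scopes) for v in scope)

def place_factors(scopes, var_owner):
    """Assign each factor to the agent owning most of its variables. With the
    variable assignment held fixed, this minimizes cross_agent_links."""
    return [Counter(var_owner[v] for v in scope).most_common(1)[0][0]
            for scope in scopes]

rng = random.Random(0)
n_vars, n_agents, k = 12, 3, 3
scopes = [tuple(rng.sample(range(n_vars), k)) for _ in range(n_vars)]
var_owner = [i % n_agents for i in range(n_vars)]          # each agent owns 4 decisions
random_owner = [rng.randrange(n_agents) for _ in scopes]   # random factor placement
placed_owner = place_factors(scopes, var_owner)
print(cross_agent_links(scopes, var_owner, random_owner),
      cross_agent_links(scopes, var_owner, placed_owner))
```

Fewer cross-agent links means less message traffic between agents. A full optimizer would also reassign decision variables, subject to a workload-balance constraint. Without that constraint, the trivial answer is to give everything to one agent.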

Due to space limitations, we omitted analyses of (a) how team structures affect performance; (b) the correlation between free energy and improvement in the reward function; (c) internal and external workload metrics and their impact on decision quality; and (d) measures of resilience. These will be included in a future manuscript.

4.5 Conclusions

In this chapter, we studied the problem of generating adaptive behaviors for cooperating agents. We presented an application of the free energy–minimization principle to generate decentralized purpose-driven teams of agents. Experiments with synthetic data show that energy-based behavior achieves higher performance on a distributed search task than discrete decision-making heuristics. The minimum free-energy formalism provides a mathematically sound mechanism for coupling perception and action selection processes. Finally, three kinds of decisions can all be executed in a distributed, collaborative manner without external controlling agents: affecting the environment through actions, adapting by modifying perception, and adjusting the team's architecture in terms of the organizational structure among the agents.

One of the key innovations of our work is that it prescribes two general interfaces that intelligent adaptive agents must possess: generating, communicating, and incorporating experience messages and influence messages. Neither interface alone allows multiple agents to achieve the required team-optimal decisions using distributed local computations. Our current work focuses on four goals. The first is defining a precise free-energy function that encodes the effects of team structure on decisions and communications. The second is studying the convergence properties of distributed perception and control processes. The third is obtaining collaborative adaptation mechanisms for project-based teams. The fourth is deriving high-level corollaries with general trends from lower-level free energy–minimizing processes.