CHAPTER 5

The Great Deception of the 2016 RFI

IN AUGUST OF 2018, the Trump administration directed the Select Committee on AI to refresh the previously developed 2016 National AI R&D Strategic Plan. A Request for Information (RFI) was issued to solicit public input. The result was the identification of eight strategic priorities, seven of which were continuations of the 2016 plan. Because seven of the eight strategies are anchored in the 2016 plan, today's American AI plan is essentially the 2016 plan, which was developed during the Obama administration.

Apparently the OSTP was not just pushing President Trump to support its agenda; it was also making sure that President Trump did not find out about the AI-related activities led by his predecessor. Without that important information, President Trump could make many “first-ever” statements about his AI accomplishments. For example, the OSTP's February 2020 report titled “American Artificial Intelligence Initiative: Year One Annual Report” says the following about President Trump being the first:

President Trump made history when he became the first president to name artificial intelligence as an Administration R&D priority in 2017—and since then, America has never looked back. (Trump 2020)

However, in 2016 the same office stated the following about the Obama White House:

On May 3, 2016, the White House announced a series of actions to spur public dialogue on AI, to identify challenges and opportunities related to this emerging technology, to aid in the use of AI for more effective government, and prepare for the potential benefits and risks of AI. As part of these actions, the White House directed the creation of a national strategy for research and development in artificial intelligence.

This resulting AI R&D Strategic Plan defines a high-level framework that can be used to identify scientific and technological needs in AI, and to track the progress and maximize the impact of R&D investments to fill those needs. It also establishes priorities for Federally-funded R&D in AI, looking beyond near-term AI capabilities toward long-term transformational impacts of AI on society and the world. (Obama 2016a)

Based on the above, the claim that President Trump was the first president to “name artificial intelligence as an administration R&D priority in 2017” is a stretch of the truth. If the sentence instead means that he was the first president to do so in the year 2017, that is also somewhat misleading, since both he and Obama served as president that year.

In fact, AI planning under the Obama administration seems to have followed a more logical and evolutionary path than under the Trump administration. The Obama administration also examined the economic aspects of AI and issued a separate report on them. The progression from big data to AI was a natural evolution, and it mattered because it kept the focus on the critical link between data and AI.

The 2016 plan became the foundation on which all future plans were developed. It is therefore worth examining the process that led to its formulation, which is why our analysis begins with the 2016 plan.

THE OBAMA-ERA AI

President Obama's administration had already begun preparing for AI. The administration published a report on big data, then took a systematic approach and started developing the AI plan. At least four conferences were organized to discuss AI. These included:

- May 24, 2016: “Legal and Governance Implications of Artificial Intelligence,” in Seattle, Washington;

- June 7, 2016: “Artificial Intelligence for Social Good,” in Washington, DC;

- June 28, 2016: “Safety and Control for Artificial Intelligence,” in Pittsburgh, Pennsylvania;

- July 7, 2016: “The Social and Economic Implications of Artificial Intelligence Technologies in the Near Term,” in New York City.

The administration's report on big data was followed by its report on AI. This shows that AI had become a national priority in 2016 under the Obama administration. However, as the conference titles above demonstrate, much of the AI-related narrative remained focused on governance, safety, control, and social good. AI was being introduced to America through the prism of caution.

THE PROCESS FOR FORMULATING THE 2016 PLAN

In a 2018 article, Lynne Parker, the director of the National AI Initiative, describes how the 2016 plan was created and for whom it was intended (Parker 2018). While American companies were investing heavily in consumer-centered AI for short-term profitability, the plan was meant to direct investment toward building long-term capabilities. The audience for the plan was US policymakers and federal funding agencies. The plan did not issue specific guidelines about funding programs for individual agencies; it gave only a high-level perspective on high-priority funding areas. The plan was developed by the AI Task Force, a US interagency working group tasked by the Subcommittee on Networking and Information Technology Research and Development (NITRD). NITRD includes representatives from 20 agencies and coordinates funding for networking and information technology across them. The process used to develop the plan had four steps. In step 1, national priorities were analyzed and determined through a thought experiment on what the future with AI would look like, which was then linked back to the Declaration of Independence. This was a visioning exercise to paint a picture of an ideal future world with AI. In step 2, open areas of research were identified by classifying research needs as purely basic, a mix of basic and applied, or purely applied. In step 3, an RFI was used to solicit feedback. In step 4, the specific R&D priorities were determined. On the content of the plan, Lynne Parker writes:

One additional point about the content of the plan is important to understand. Because R&D in AI primarily occurs within the discipline of information technology, the charge for the creation of the plan was directed to NITRD. Due to the fact that NITRD oversees (specifically) IT-related R&D coordination across the federal government, the content of the plan is exclusively focused on open IT-relevant issues for AI. Of course, the Artificial Intelligence Task Force recognized that AI benefits from a variety of perspectives across many other disciplines, including neuroscience, psychology, social and behavioral sciences, ethics, law, economics, as well as expertise from across the broad spectrum of application domains, including agriculture, transportation, and so forth. Research and development in these other domains is not included in the strategic plan, however, due to the IT-centric tasking of the task force. Nevertheless, a focus on IT-relevant issues still provides a useful foundation for considering priorities in AI R&D investments, and their potential benefits across a wide range of application domains. (Parker 2018)

Anyone with even a little background in strategy development can see the dramatic mistakes made in both the design and the development process of the plan. The plan started from a pie-in-the-sky, Hollywood sci-fi story of what America's future with AI would look like, then issued an emotional, virtue-signaling call to tie that vision back to the Declaration of Independence, as if AI's Terminator robots were ready to take over America. This exceedingly naïve vision of the future was then somehow used to determine R&D priorities through an overly simplistic split into applied, basic, and mixed applied/basic research, and after all that, the authors declared, Oh well, the plan is only about IT-related R&D. So much for developing a strategic plan!

Note that the industrial needs, broad national priorities, competitive and comparative advantages of the nation, industrialization maps, constraints, the current state of the US government's technology, change management, the narrative for inspiring and mobilizing the American people, and hundreds of other such factors were not considered. Even if the plan was meant only for government IT R&D, it was grossly deficient by the standards of how strategies are developed. To call something like this a national strategy would be like calling a calculator a supercomputer. More care and analysis go into planning the opening of a corner bakery than went into developing what would eventually become the national AI strategy of the United States of America, and then we wonder why China is leaving us behind. The most dangerous later development was that this plan somehow became the face of the national strategy, then shaped the American AI Initiative, and continues to influence national strategies. This grossly inadequate plan, which did not follow any strategic development process, would drive America's AI policy, legislative activity, funding, and presidential executive orders for years to come. It would become the anchor on which all future plans would be architected. America had just made its worst mistake. Every subsequent activity would now be shaped by anchoring bias.

In 2016, the OSTP had already developed a general framework for what it wanted to do. The RFI is a mechanism the government uses to solicit feedback and guidance from various stakeholders. Unlike the rigorous feedback processes the FDA and CMS use when approving drugs or evaluating their efficacy and reimbursement, the White House process for soliciting feedback was largely flawed and hugely biased (as our discussion below will show). It was a feel-good mechanism, a mere formality of the kind typically used to ratify one's own initial assumptions and ignore any dissent or criticism.

We do not believe that any serious thought went into analyzing the feedback, or that a sincere effort was made even to bring in a large group of stakeholders, for what was the most important and critical strategy ever developed in the history of the nation.

The future of AI in America was to be built on RFI responses that the OSTP never really analyzed and most likely never even read (as we will show below).

THE RFI GIMMICK

To develop a policy response to concerns about the benefits and risks of artificial intelligence technology, the Office of Science and Technology Policy (OSTP) took a series of steps. These included organizing conferences and issuing a Request for Information (RFI) to obtain public opinion. The RFI stated:

Like any transformative technology, however, AI carries risks and presents complex policy challenges along a number of different fronts. The Office of Science and Technology Policy (OSTP) is interested in developing a view of AI across all sectors for the purpose of recommending directions for research and determining challenges and opportunities in this field. The views of the American people, including stakeholders such as consumers, academic and industry researchers, private companies, and charitable foundations, are important to inform an understanding of current and future needs for AI in diverse fields. (Obama 2016b; Obama 2016c).

The OSTP claimed that “many intellectuals, organizations, and academics had voiced concerns about the emergence of artificial intelligence technology and its impact on the social, economic, and political processes of human life.”

The following questions were posed in the RFI:

OSTP is particularly interested in responses related to the following topics: (1) The legal and governance implications of AI; (2) the use of AI for public good; (3) the safety and control issues for AI; (4) the social and economic implications of AI; (5) the most pressing, fundamental questions in AI research, common to most or all scientific fields; (6) the most important research gaps in AI that must be addressed to advance this field and benefit the public; (7) the scientific and technical training that will be needed to take advantage of harnessing the potential of AI technology, and the challenges faced by institutions of higher education in retaining faculty and responding to explosive growth in student enrollment in AI-related courses and courses of study; (8) the specific steps that could be taken by the federal government, research institutes, universities, and philanthropies to encourage multi-disciplinary AI research; (9) specific training data sets that can accelerate the development of AI and its application; (10) the role that “market shaping” approaches such as incentive prizes and Advanced Market Commitments can play in accelerating the development of applications of AI to address societal needs, such as accelerated training for low and moderate income workers (see https://www.usaid.gov/cii/market-shaping-primer); and (11) any additional information related to AI research or policymaking, not requested above, that you believe OSTP should consider. (Obama 2016c)

Even a cursory look at the above questions shows that the OSTP was least concerned with the industrialization of AI. The overwhelming focus was on governance, control, safety, public good, and so forth. It is rather strange that, before even considering the diffusion, adoption, development, and industrialization potential of the technology, the strategic focus was placed on risks and threats. As if the technology would somehow acquire a mind of its own, rise out of a computer, and take over America, a fanatical adherence to safety and control was being pushed and projected as the primary concern. This is not to discount the attention one must pay to the ethical and governance issues of AI, but only to point out that public perception was being shaped by the negative dimensions of the technology rather than by its positive benefits. The reason for doing that was simple: Americans already carried a Hollywood-induced fear of killer robots, and the people formulating the AI agenda knew they could tap into that fear to appear as humanity's saviors against the Terminator. Their actions show that these heroes and so-called protagonists had no sincere desire to truly solve the governance and ethics problems. But in their wisdom, this was the easiest way to engage people in the AI debate.

Second, the questions mostly pertained to the supply side of AI, as if the demand side of the technology would somehow appear automatically, through magic. A strategy based solely on the supply side lacks any link to the demand side and hence can neither shape nor respond to it. The OSTP was not concerned about the demand side of AI. It couldn't care less about how the technology would be applied, who would use it, how it would be used, what the national needs were, which industries would use it first, and which industries needed to be informed or guided. All it cared about was building an AI field of dreams that was oblivious to demand conditions.

After capturing the responses from the RFI, the White House issued its report in October 2016. As previously stated, this 2016 plan would determine America's future, competitiveness, economic potential, political stability, social performance, and everything else that gives nations their competitive edge. One would think that such a plan would have been designed and developed with the highest level of care, utmost responsibility, uncompromising meticulousness, and integrity. To determine whether such a process was put in place, we analyzed all the responses to the RFI and the plan itself.

RFI COMMENTS

We analyzed all the RFI comments available in the public domain. Then we analyzed the report issued by the OSTP. Specifically, we wanted to determine the following:

- Who responded to the RFI? Who didn't respond?

- What does the composition of respondents tell us about their state of affairs?

- Were the responses sufficient to truly formulate and propose a strategy and policy position?

- Were the perspectives, viewpoints, and concerns of the respondents taken into account when developing the report?

- What were the key themes that emerged?

- Was there a conflict of interest, and did the government go far enough to ensure that the viewpoints were balanced and any conflict of interest was properly disclosed?

- Did the comments represent inherent bias of entities, and was that bias reflected in the responses?

WHO RESPONDED?

Assuming that the RFI captured the requisite questions to achieve the goal of developing a broader perspective, our first task was to synthesize the data.

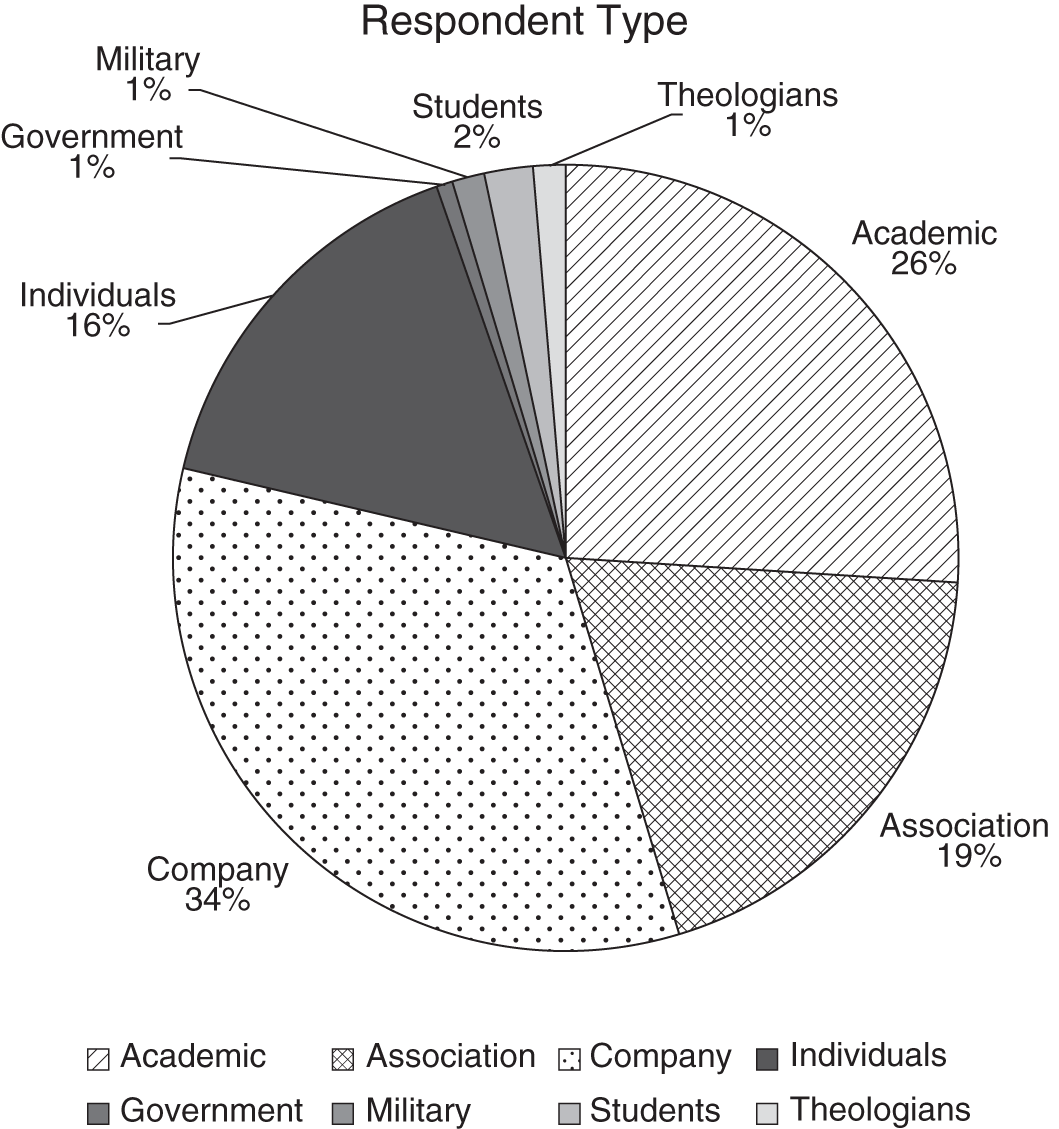

FIGURE 5.1 Respondent Type

While the OSTP's final report stated that it received 161 responses, when we cleaned the data we found 11 duplicates (the same person submitting two or more successive comments). After removing them, we were left with 150 comments. So while 161 entries were made, only 150 distinct comments were actually received. The fact that the OSTP never noticed the duplicates shows that no one bothered to even read the responses.

We segmented the 150 comments into academic institutions, companies, associations and nonprofits, students, military, theologians, US government, and individuals (Figure 5.1). The largest share of comments (34%) came from companies, followed by universities (26%), associations (19%, including nonprofits and non-university-affiliated institutions), and individuals (16%). Students accounted for 2%, and the military, theologians, and government each accounted for 1%.

We estimated that 8 comments were from Europe, 1 from Australia, and 1 from Asia, while 140 were from the United States (Figure 5.2).

FIGURE 5.2 Respondent Origination

Clearly, the fact that only 150 responses (only 140 of them from the US) formed the basis for the most significant and powerful strategic revolution in the history of humankind shows that the OSTP was not really concerned with receiving any real data. It wanted to put something out without developing any deeper analysis or getting feedback from a large segment of society. It was more of a formality than a sincere effort to obtain strategic help. Most people likely did not even know that an RFI was out.

HOW MANY QUESTIONS WERE ANSWERED?

Since the OSTP claims that it received significant feedback and based the national strategy on it, it is important to see what type of feedback the OSTP really received. Understanding this data lets us determine whether the comments actually captured the depth and breadth of the information request and whether the information received truly represents the social concerns and positions of the American population.

The White House posed 11 questions in the RFI. Roughly 55% of the respondents specifically referred to the questions, while 45% simply left general comments without clearly referencing any question. Question 11 did allow respondents to leave general comments, so these free responses (FR) could be viewed as responses to question 11. For our purposes, however, we classified them as a separate category: because the RFI asked respondents to identify the question they were answering, any response that did not refer to a question was placed in the free response category.

This shows that only a little more than half wanted to talk about what was being asked in the RFI. If you are doing a survey and only half of your respondents want to talk about what you asked them and the rest want to raise other issues, you must at least consider what those issues are.

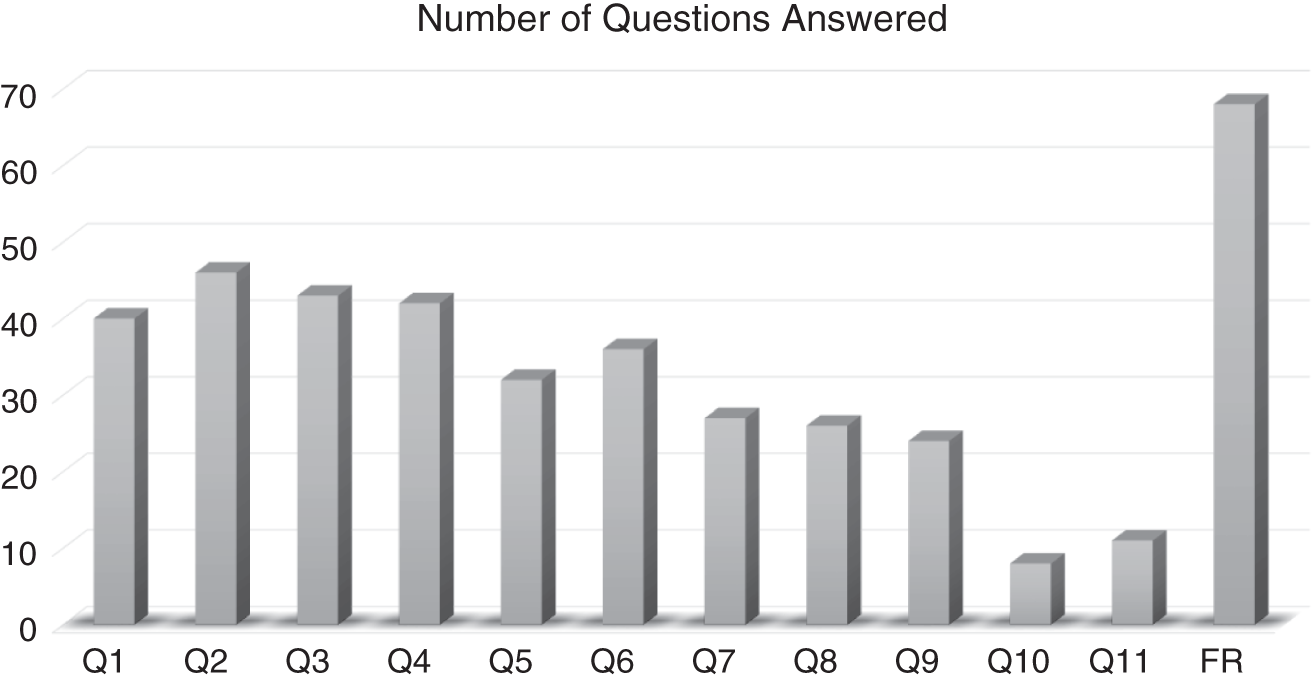

The frequency of input received is shown in Figure 5.3. Free responses (68) were followed by “Public Good” (46), “Safety/Control issues” (43), “Economic Impact” (42), “Legal & Governance” (40), “Research Gaps” (36), “Pressing Questions” (32), “Training Needs” (27), “Steps to Encourage Research” (26), “Training Datasets” (24), “Additional Input” (11), and “Market Shaping” (8). Even at this level, market shaping received the least input. Yet the market shaping question was important: it was about “accelerating the development of applications of AI to address societal needs, such as accelerated training for low- and moderate-income workers.” Clearly, almost no one seemed to be concerned about low- and moderate-income workers. The respondents had their eyes on the only thing they cared about: more funding.

FIGURE 5.3 Total Responses by Question

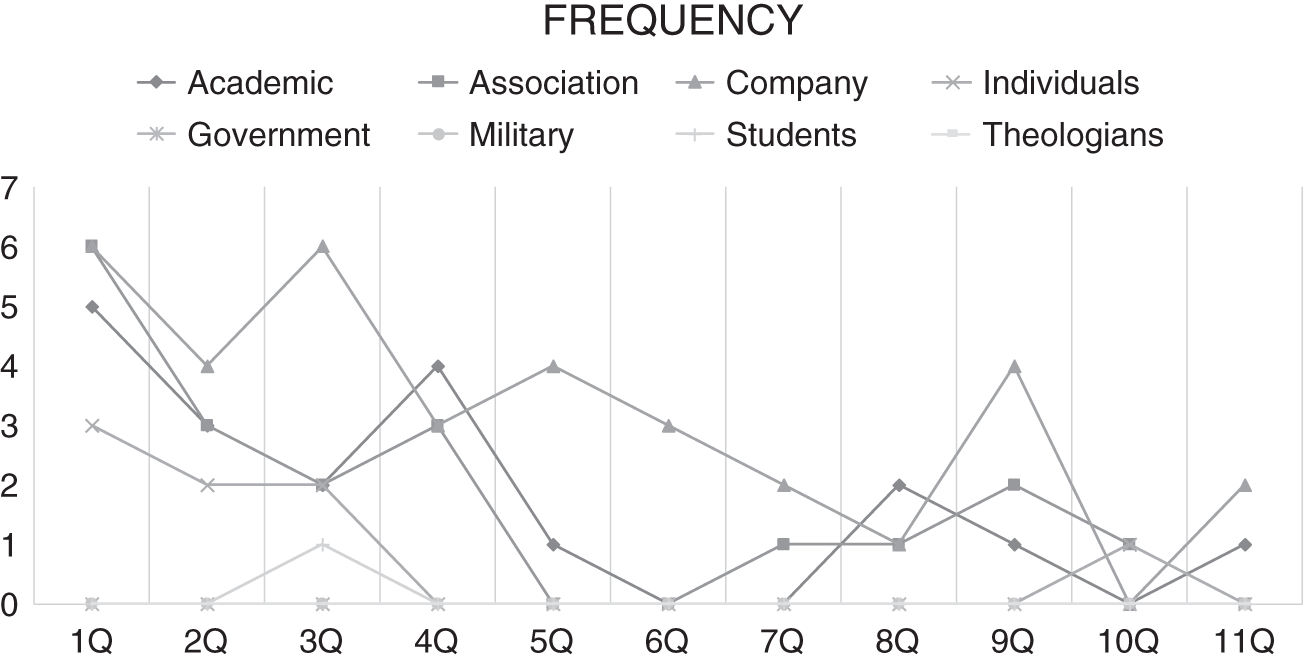

The frequency measures the number of responses to each question from each respondent category. This indicates whether the sample is large enough for the government to make an informed decision, and whether enough information was collected on each topic. For example, academics, whose job is to educate and train people, were the least interested in answering question 10, which asked about ideas for “accelerated training for low- and moderate-income workers” (Figure 5.4). Only one school, the University of Memphis, addressed that question. This showed that all the universities cared about was getting more money for their research, with absolutely no regard for national performance or for training low- and moderate-income workers. This should have been a concern for the OSTP. The OSTP's job is not to wine and dine and attend Big Tech–sponsored conferences in luxury hotels; it is to serve the American people and to advise the president. Based on the above, the OSTP should have concluded that only a tiny fraction of the limited number of stakeholders who responded showed any concern for training “low- and moderate-income workers.” This was a red flag.

Apart from free responses, research gaps were the most popular topic among academic researchers, followed by safety/control and legal/governance.

Companies focused on safety/control issues, followed by social good/benefits, economic impact, and then governance issues.

Associations mostly focused on social good and governance issues.

FIGURE 5.4 How Did Respondents Answer Questions?

FREQUENCY OF QUESTIONS ANSWERED

This section shows how many respondents answered only one question, only two questions, and so on. This is a “coverage” measure and shows the comprehensiveness of the discussion that took place. While it is understandable that certain specialists (for example, a law firm commenting on the legal/governance issue) would comment only on their area of expertise, it is reasonable to expect that universities, associations, and companies would provide more comprehensive and broad coverage in their comments: universities because they are multidisciplinary, companies because business strategies are typically based on multidimensional research, and associations because they typically represent a broad member base and hence have the ability and the responsibility to collect multidimensional information.

Twenty respondents answered only one question, 12 answered two, 13 answered three, and only 3 answered all 11 questions (Figure 5.5). Surprisingly, although 39 universities and university-based institutions participated, only one university (the University of Memphis) answered all 11 questions (Figure 5.6).

Besides the University of Memphis, IBM and West Desert Enterprises were the only other respondents who answered all the RFI questions. Only 22 of the 150 respondents answered more than five questions.

Clearly, most universities chose either not to respond to any specific question or to focus on a small number of questions.

FIGURE 5.5 Frequency Total

FIGURE 5.6 Frequency

ACADEMIC INSTITUTIONS

- Universities submitted 39 replies.

- Future of Life Institute made three entries, and two of them were duplicates. MIT made three entries.

- George Mason University made two entries. Multiple researchers made entries from Johns Hopkins. The University of Virginia's entry also referred to another source.

- There were 19 comments that specifically referred to the questions in the RFI, and 20 came in the form of free responses.

- In total, 7 academic respondents attempted to market or sell their services, books, consulting, or reports.

- Only one comment specifically disclosed a potential conflict of interest, and even that was done not as a disclosure but to establish the respondent's credentials.

- Only one university, the University of Memphis, provided a comprehensive answer to every question.

- It is important to note that many universities, including some of the top programs, were missing. Of the 18 top artificial intelligence programs in the United States, only one-third participated and two-thirds did not.

- Of the top 100 programs in the world, only 20% participated.

- Of the ones who did participate, only five universities responded to more than four questions.

American universities and research centers form the foundation of our nation's development and progress. Obtaining deep and thorough feedback from universities should have been the top priority. But what happened was extremely disappointing. Of the nearly 4,000 universities and colleges in the US (although US News included only 1,452 schools in its 2021 rankings), only 39 responded to the RFI. Of those that responded, only one, the University of Memphis, answered all the questions. So much for the feedback.

If the OSTP had really wanted real feedback, it would have gone to all schools. It should have knocked on the doors of community colleges and mid-tier universities, not just the elite schools. If the goal was to build American AI capability, a college in a small town, a university in a rural area, and a social sciences school should all have been included in the planning process. But the OSTP process was not designed that way. It was designed to represent top schools that received large funds from companies and professors who were building software companies to sell to Big Tech. National interest and national strategy were not placed at the forefront.

It became obvious that, in general, the responses came from university computer science departments, and other departments were either not included or did not respond. Thus, even though the RFI sought information on social, economic, and governance/regulatory matters in addition to technical ones, the vast majority of universities chose to focus only on funding.

Unsurprisingly, the RFI questions focused largely on governance, safety, control, and regulation, and the responses also concentrated on those aspects, with an angle toward funding. As if America were digging a dormant nuclear facility in the middle of a thriving city, the overly cautious tone belied the real intention: get more money. It would be analogous to launching the auto industry with a narrative about the perils of drunk driving instead of one about building automobiles, roads, and infrastructure; the economic impact of automobiles; how manufacturing and distribution would change; how retail and the service industry would develop; and how people would travel and commute. What if the first question asked by the auto industry had been about the ramifications of drunk driving?

George Mason University and Hunter College CUNY took the position of minimizing regulatory oversight and governance. Both strongly argued against any type of oversight, governance, and regulation unless clear evidence of harm exists.

A comment from the MIT Minsky Institute said, “Myself and a group met with Marvin Minsky at his house on 111 Ivy Street, Brookline, Mass., one night a week last fall… . We discussed what a ‘Minsky Institute’ might consist of and what its mission statement might be. Unfortunately, Marvin passed away before anything fully converged.” Mr. Greenblatt offered to serve the Minsky Group as a central clearinghouse. Although the response was irrelevant and nonsensical, it was MIT that later gained the central position in helping the OSTP shape the strategy. Three years later, when the czars of the OSTP appeared at conferences, they were escorted by an MIT professor. This shows that the RFI feedback was either ignored or not even used as a criterion for selecting advisors. In another comment from MIT, Mr. Lieberman said that the warnings were “overblown”; however, he acknowledged the need for safety research. The third comment from MIT (Brains, Minds, and Machines) did not refer to the governance issue at all; however, it requested a major federal funding program.

Nick Bostrom of the University of Oxford, author of the 2014 book Superintelligence: Paths, Dangers, Strategies (a book recommended by Bill Gates and Elon Musk, both heavily invested in AI), chose not to respond to the governance question directly; however, while answering questions 3 and 6, he did touch on the governance issue. He wrote, “We believe that regulation of AI due to these concerns would be extremely premature and undesirable. Current AI systems are not nearly powerful enough to pose a threat to society on such a scale, and may not be for decades to come.” In other words, on one hand he was increasing global anxiety about the dangers of AI, while on the other he was calling regulation premature and undesirable. He did, however, offer his group's services for involvement in the process.

On the governance issues, many universities did offer balanced and neutral analysis, and some provided several valuable ideas. Our analysis showed that the following institutions provided a balanced and valuable perspective on governance/regulation issues: Santa Clara University, Florida International University, University of Memphis, Hinckley Institute of Politics, Stanford University, University of Wisconsin, HKUST, University of Virginia, Texas A&M University, University of Michigan, University of Wisconsin–Madison, Washington State University, Brown University, University of Cambridge, University of Maryland, and UC Berkeley School of Information.

COMPANIES

Of the nearly 10 million active companies in the United States, we estimate that only 50 companies responded to the survey.

Four of the submissions were from Alphabet/Google-related companies including Google, DeepMind, and Moonshot. Of the tech giants, Google, IBM, Facebook, and Microsoft participated while Amazon and Apple didn't (at least not directly). Oracle made an irrelevant comment. Nvidia made two comments.

Many other large software companies, such as SAS and Salesforce, did not comment.

Note: It is possible that software companies were represented by associations and hence decided not to comment directly. However, we find it hard to believe that associations could have collected, analyzed, and synthesized feedback from all of their member firms.

Among the answers provided by large companies, we found Microsoft's comments to be balanced, examining both sides of the problems. Google-affiliated firms mostly addressed their own capabilities and benefits. IBM answered all the questions. Facebook also focused more on its own capabilities and research.

In addressing the governance and regulation question, Skymind focused on the use of artificial intelligence to improve corporate governance vs. governance and regulation of AI technology.

Not only were dozens of sectors and potentially thousands of companies missing and unrepresented, but those who did answer the RFI provided little strategic insight. As with the academic institutions, the underlying message from companies was, “Give us more money.” Factors such as the industrialization of AI were not considered. The term “AI industrialization” means the adoption of AI in business and industry such that companies, industries, sectors, and government agencies are built around AI. Whether it is the automobile sector or the power sector, consumer goods or pharmaceuticals, defense agencies or civilian ones, AI industrialization implies transforming business models, operations, and products and services to make them compatible with AI.

Companies, particularly large ones, have powerful strategy departments, and the questions asked by the RFI should have been addressed by those departments. But again, America was not involved in the OSTP's so-called strategy development process; only a handful of tech firms (the sponsors of conferences) and professors (the seekers of research funding) were. This should have been another red flag for the OSTP. Why were P&G, Kraft, Goldman Sachs, or Walmart not offering recommendations? Clearly, either they did not know about the RFI or, if they did, they did not believe commenting would make any difference. It was the OSTP's responsibility to reach out to these firms and get them to participate.

ASSOCIATIONS AND NONPROFITS

- Twenty-nine associations (nonprofits, trade, scientific, etc.) provided responses.

- Eight associations specifically addressed question 1, while five others touched on the issue in their comments.

- Of the ones that responded or commented on the governance/regulation issue, seven took the position that there should be no regulation/governance, six asked for light governance/regulation, and none asked for strong governance and regulation.

- The seven associations that took the same position as George Mason University and Hunter College CUNY (no regulation) represented trade interests of the technology sector. Several clearly disclosed that position. It is not clear to us whether the feedback provided by associations was vetted by their members before being submitted.

The anti-governance and anti-regulation sentiment is not surprising. Many of the associations are trade associations and serve the role of promoting the interests of their members.

Some of the associations that favored little or no governance and regulation include the US Chamber of Commerce, the Software & Information Industry Association (SIIA), the Niskanen Center, the Internet Association, and the Computing Community Consortium.

For example, the US Chamber of Commerce responded (Obama 2016b, p. 153):

With these and many other questions in mind, companies like Google have established AI ethics boards, which go beyond legal compliance to examine the deeper implications and potential complications of emerging AI technologies. Regulatory questions also arise with the rise of any transformational technology. The misconceptions around AI increase the likelihood of reckless regulatory decisions. It is vital to recognize that AI is well covered by existing laws and regulators with respect to privacy, security, safety, and ethics. Placing additional undue burdens would suppress the ability for this technology to continue growing. The policy questions that AI and machine learning raise are not so radically different than questions raised by technology that has preceded it. It is important to remember that artificial intelligence is still nascent, and it would be a mistake to attempt to address the issue with broad, overarching regulation.

The reference to Google's ethics board is interesting, as the company has been unable to keep an ethics board intact.

The Software & Information Industry Association (SIIA) commented:

Automation has historically produced long-term growth and full employment. But the next generation of really smart AI-based machines could create sustained technological unemployment. Two Oxford economists estimated that 47% of occupations are susceptible to automation. An OECD study found that “9% of jobs are automatable.”

The Council of Economic Advisors (CEA) recently warned that AI could exacerbate wage inequality, estimating that 83% of jobs making less than $20 per hour would come under pressure from automation, as compared to only 4% of jobs making above $40 per hour. The CEA also documented a long-term decline in prime-age male labor force participation—from 97% in 1954 to 88% today—that could be exacerbated by AI.

Despite these concerns, there is no real evidence that the ultimate impact of AI on the labor market will be any different from that of earlier productivity-enhancing technologies. Studies have shown that labor market developments that some are blaming on computer technology and the Internet—like job polarization—have been a feature of the US economy since the 1950s. (Obama 2016b, p. 180)

The beauty of this comment is that, after providing significant empirical information about AI's impact on the labor market in the first two paragraphs, the association argues in the third paragraph that “there is no real evidence.”

The Niskanen Center, a DC-based think tank, commented:

Some find the flowering of the technology alarming, and wonder aloud whether AI may lead to a Terminator-style future in which incomprehensibly intelligent computers destroy human civilization. Even moderate critics of AI warn that we now stand on the verge of a mass labor dislocation in which up to half of all jobs may be taken by machines. For now, however, these worries are extremely speculative, and the alarm they cause can be counterproductive.

In order to maximize the benefits associated with ongoing developments in AI, we recommend that policymakers and regulators:

- Avoid speaking of hyperbolic hypothetical doomsday scenarios, and

- Embrace a policy of regulatory restraint, intervening in the development and use of AI technology only when and if the prospect of harm becomes realistic enough to merit government intervention. (Obama 2016b, p. 183)

The Internet Association, which represented 40 of the world's leading organizations, argued that existing policy frameworks can adapt to AI, a position proven incorrect by the plethora of policy frameworks developed later in America:

Before delving into policy specifics, the IA submits that it is advisable for policymakers to draw parameters around what artificial intelligence is and is not since this is an open debate that may trigger fearful policy responses where they are not needed. (Obama 2016b, p. 192)

Openeth.org talked about the products it is developing. The Leadership Council on Civil and Human Rights, ACM Special Interest Group on AI, the Center for Data Innovation, the Global Information Infrastructure Commission, the Application Development Alliance, and the Machine Intelligence Research Institute also touched directly or indirectly on the light governance issue. A comment claiming to represent truckers in America talked about the impact of AI on truckers and raised concerns about the economic well-being of the drivers. MITRE focused on the topic of safety and control and proposed to use its risk evaluation model.

The feedback from the associations should have been analyzed from several perspectives: first, which associations and scientific societies participated, which did not, and why; second, which parts of their feedback truly represented national interests and which merely lobbied for their members' causes; and third, why no association answered all the questions and most responded only to those about governance and regulation.

THE MIRACULOUS REPORT

One would assume that, after receiving such a small number of largely irrelevant and mostly self-centered “just open up the bank” responses, the OSTP would have gone back to the drawing board and figured out how to truly engage the public and obtain relevant, actionable information, both qualitative and quantitative. That did not happen. The OSTP did not even consider the recommendations or the gaps pointed out in the 150 responses. Most likely it did not even read them; as already explained, had it done so, the OSTP would have known there were 150 responses, not 161. Once again, let us consider the following:

- Out of nearly 10 million American firms, only about 50 participated directly. Sectors other than tech were mostly unrepresented. Only a handful of Fortune 500 companies took part and, again, only a few tech firms.

- Most of the associations, too, represented only the tech sector; other sectors had little or no representation.

- Only 39 universities participated. The majority of American universities and schools did not respond.

- Only two companies and one university responded to all questions.

- The most common response was to increase funding (open the bank), a completely expected answer from university research departments or from tech firms whose investments can be subsidized by the government.

Clearly, what was lacking was attention to industrialization, diffusion, AI demand development, AI adoption, and AI communication: the factors that drive true national passion and energy to do great things. The OSTP plan was really a few professors and government employees, all apparently directed by or representing the Big Tech agenda, reaching the conclusion that more money needed to be thrown into the AI pit. That was the extent of the plan, and this narrow thinking was going to cost America its leadership in AI.

REFERENCES

- Obama, Barack. 2016a. “Obama White House.” [Online]. Available at: https://obamawhitehouse.archives.gov/sites/default/files/whitehouse_files/microsites/ostp/NSTC/national_ai_rd_strategic_plan.pdf.

- Obama, Barack. 2016b. “Obama RFI.” [Online]. Available at: https://cra.org/ccc/wp-content/uploads/sites/2/2016/04/OSTP-AI-RFI-Responses.pdf.

- Obama, Barack. 2016c. “Obama RFI 2.” [Online]. Available at: https://obamawhitehouse.archives.gov/sites/default/files/whitehouse_files/microsites/ostp/NSTC/preparing_for_the_future_of_ai.pdf.

- Parker, Lynne E. 2018. “Creation of the National Artificial Intelligence Research and Development Strategic Plan.” AI Magazine 39(2), 25–32. Available at: https://doi.org/10.1609/aimag.v39i2.2803.

- Trump, Donald. 2020. “AI One Year Report.” [Online]. Available at: https://www.nitrd.gov/nitrdgroups/images/c/c1/American-AI-Initiative-One-Year-Annual-Report.pdf.