12

HOW PRACTITIONERS ADAPT TO CLUMSY TECHNOLOGY

In developing new information technology and automation, the conventional view seems to be that new technology makes for better ways of doing the same tasks. We often act as if domain practitioners were passive recipients of the “operator aids” that the technologist provides for them. However, this view overlooks the fact that the introduction of new technology represents a change from one way of doing things to another.

The design of new technology is always an intervention into an ongoing world of activity. It alters what is already going on – the everyday practices and concerns of a community of people – and leads to a resettling into new practices. (Flores et al., 1988, p. 154)

For example, Cordesman and Wagner summarize lessons from the use of advanced technology in the Gulf War:

Much of the equipment deployed in US, other Western, and Saudi forces was designed to ease the burden on the operator, reduce fatigue, and simplify the tasks involved in combat. Instead, these advances were used to demand more from the operator. Almost without exception, technology did not meet the goal of unencumbering the military personnel operating the equipment, due to the burden placed on them by combat. As a result … systems often required exceptional human expertise, commitment, and endurance. … leaders will exploit every new advance to the limit. As a result, virtually every advance in ergonomics was exploited to ask military personnel to do more, do it faster and do it in more complex ways. … One very real lesson of the Gulf War is that new tactics and technology simply result in altering the pattern of human stress to achieve a new intensity and tempo of combat. (Cordesman and Wagner, 1996, p. 25)

Practitioners are not passive in this process of accommodation to change. Rather, they are an active adaptive element in the person-machine ensemble, usually the critical adaptive portion. Multiple studies have shown that practitioners adapt information technology provided for them to the immediate tasks at hand in a locally pragmatic way, usually in ways not anticipated by the designers of the information technology (Roth et al., 1987; Flores et al., 1988; Hutchins, 1990; Cook and Woods, 1996b; Obradovich and Woods, 1996). Tools are shaped by their users. Or, to state the point more completely, artifacts are shaped into tools through skilled use in a field of activity. This process, in which an artifact is shaped by its use, is a fundamental characteristic of the relationship between design and use.

There is always … a substantial gap between the design or concept of a machine, a building, an organizational plan or whatever, and their operation in practice, and people are usually well able to effect this translation. Without these routine informal capacities most organizations would cease to function. (Hughes, Randall, and Shapiro, 1991)

TAILORING TASKS AND SYSTEMS

Studies have revealed several types of practitioner adaptation to the impact of new information technology. In system tailoring, practitioners adapt the device and context of activity to preserve existing strategies used to carry out tasks (e.g., adaptation focuses on the set-up of the device, device configuration, and how the device is situated in the larger context). In task tailoring, practitioners adapt their strategies, especially cognitive and collaborative strategies, for carrying out tasks to accommodate constraints imposed by the new technology.

When practitioners tailor systems, they adapt the device itself to fit their strategies and the demands of the field of activity. For example, in one study (summarized in Chapter 7), practitioners set up the new device in a particular way to minimize their need to interact with the new technology during high-criticality and high-tempo periods. This occurred despite the fact that the practitioners’ configurations neutralized many of the putative advantages of the new system (e.g., the flexibility to perform greater numbers and kinds of data manipulation). Note that system tailoring frequently results in only a small portion of the “in principle” device functionality actually being used operationally. We often observe operators throw away or alter functionality in order to achieve simplicity and ease of use.

Task tailoring adaptations tend to focus on how practitioners adjust their activities and strategies given constraints imposed by characteristics of the device. For example, information systems which force operators to access related data serially through a narrow keyhole instead of in parallel result in new display and window management tasks (e.g., calling up and searching across displays for related data, decluttering displays as windows accumulate, and so on). Practitioners may tailor the device itself, for example, by trying to configure windows so that related data is available in parallel. However, they may still need to tailor their activities. For example, they may need to learn when to schedule the new decluttering task (e.g., by devising external reminders) to avoid being caught in a high-criticality situation where they must reconfigure the display before they can “see” what is going on in the monitored process. A great many user adaptations can be found in observing how people cope with large numbers of displays hidden behind a narrow keyhole. See Woods and Watts (1997) for a review.

PATTERNS IN USER TAILORING

Task and system tailoring represent coping strategies for dealing with clumsy aspects of new technology. A variety of coping strategies employed by practitioners to tailor the system or their tasks have been observed (Roth et al., 1987; Cook et al., 1991; Moll van Charante et al., 1993; Sarter and Woods, 1993; Sarter and Woods, 1995; Cook and Woods, 1996b; Obradovich and Woods, 1996). One class of coping behaviors relates to workload management to prevent bottlenecks from occurring at high-tempo periods. For example, we have observed practitioners force device interaction to occur in low-workload periods to minimize the need for interaction at high-workload or high-criticality periods. We have observed practitioners abandon cooperative strategies and switch to single-agent strategies when the demands for communication with the machine agent are high, as often occurs during high-criticality and high-tempo operations.

Another class of coping strategies relates to spatial organization. We consistently observe users constrain “soft,” serial forms of interaction and display into a spatially dedicated default organization.

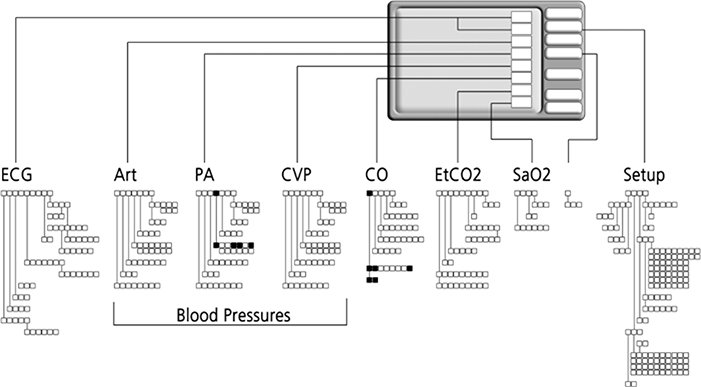

Another consistent observation is that, rather than exploit device flexibility, we see practitioners externally constrain devices via ad hoc standards. Individuals and groups develop and stick with stereotypical routes or methods to avoid getting lost in large networks of displays, complex menu structures, or complex sets of alternative methods. For example, Figure 12.1 shows about 50 percent of the menu space for a computerized patient-monitoring information system used in operating rooms. We sampled physician interaction with the system for the first three months of its use during cardiac surgery. The highlighted sections of the menu space indicate the options that were actually used by physicians during this time period. This kind of data is typical – to cope with complexity, users throw away functionality to achieve simplicity of use tailored to their perceptions of their needs.

Studies of practitioner adaptation to clumsy technology consistently observe users invent “escapes” – ways to abandon high-complexity modes of operation and to retreat to simpler modes of operation when workload gets too high.

Finally, observations indicate that practitioners sometimes learn ways to “trick” automation, for example to silence nuisance alarms. Practitioners appear to do this in an attempt to exercise control over the technology (rather than let the technology control them) and to get the technology to function as a resource or tool for their ends.

The patterns of adaptation noted above represent examples of practitioners’ adaptive coping strategies for dealing with clumsy aspects of new technology, usually in response to criteria such as workload, cognitive effort, and robustness. Note that these forms of tailoring are as much a group as an individual dynamic. Understanding how practitioners adaptively respond to the introduction of new technology, and understanding the limits of their adaptations, are critical for understanding how new automation creates the potential for new forms of error and system breakdown.

BRITTLE TAILORING

Practitioners (commercial pilots, anesthesiologists, nuclear power operators, operators in space control centers, and so on) are responsible not just for device operation but also for the larger system and the performance goals of the overall system. Practitioners tailor their activities to insulate the larger system from device deficiencies and peculiarities of the technology. This occurs, in part, because practitioners inevitably are held accountable for failure to correctly operate equipment, diagnose faults, or respond to anomalies even if the device setup, operation, and performance are ill-suited to the demands of the environment.

However, there are limits to a practitioner’s range of adaptability, and there are costs associated with practitioners’ coping strategies, especially in non-routine situations when a variety of complicating factors occur. Tailoring can be clever, or it can be brittle. In extreme cases, user adaptations to cope with an everyday glitch can bypass or erode defenses against failure. Reason, working backwards from actual disasters, has noted that such poor adaptations are often a latent condition contributing to disaster (e.g., the capsizing of the ferry The Herald of Free Enterprise). Here, we are focusing on the gaps and conflicts in the work environment that lead practitioners to tailor strategies and tasks to be effective in their everyday practice. Adaptations to cope with one glitch can create vulnerabilities with respect to other work demands or situations. Therefore, to be successful in a global sense, adaptation at local levels must be guided by the provision of appropriate criteria, informational and material resources, and feedback. One function of people in more supervisory roles is to coordinate adaptation: to recognize and avoid brittle tailoring and to propagate clever tailoring.

These costs or limits of adaptation represent a kind of ever-present problem whose effects are visible only when other events and circumstances show up to produce critical incidents. At one point in time, practitioners adapt in effective ways based on the prevailing conditions. However, later, these conditions change in a way that makes the practitioners’ tailoring ineffective, brittle, or maladaptive.

Clumsy new systems introduce new burdens or complexities for practitioners who adapt their tasks and strategies to cope with the difficulties while still achieving their multiple goals. But incidents can occur that challenge the limits of those adaptations.

Ironically, when incidents occur where those adaptations are brittle, break down, or are maladaptive, investigation stops with the label “human error” (the First Story). When investigations stop at this First Story, the human skills required to cope with the effects of the complexities of the technology remain unappreciated except by other beleaguered practitioners. Paradoxically, practitioners’ normally adaptive, coping responses help to hide the corrosive effects of clumsy technology from designers and reviewers. Note the paradox: because practitioners are responsible, they work to smoothly accommodate new technology. As a result, practitioners’ work to tailor the technology can make it appear smooth, hiding the clumsiness from designers.

ADAPTATION AND ERROR

There is a fundamental sense in which the adaptive processes which lead to highly skilled, highly robust operator performance are precisely the same as those which lead to failure. Adaptation is basically a process of exploring the space of possible behaviors in search of stable and efficient modes of performance given the demands of the field of practice.

“Error” in this context represents information about the limits of successful adaptation. Adaptation thus relies on the feedback or learning about the system which can be derived from failures, near misses and incidents. Change and the potential for surprise (variability in the world) drive the need for individuals, groups and organizations to adapt (Ashby’s law of requisite variety). If the result is a positive outcome, we tend to call it cleverness, skill or foresight and the people or organizations reap rewards. If the result is a negative outcome, we tend to call it “human error” and begin remedial action.

Attempts to eradicate “error” often take the form of policing strict adherence to policies and procedures. This tactic tries to eliminate the consequences of poor adaptations by attempting to drive out all adaptations. Given the inherent variability of real fields of practice, systems must have equivalent adaptive resources. Pressures from the organization to stick to standard procedures when external circumstances require adaptation to achieve goals create a double bind for practitioners: fail to adapt and goals will not be met, but adaptation, if unsuccessful, will result in sanctions.

To find the Second Story after incidents, we need to understand more about how practitioners adapt tools to their needs and to the constraints of their field of activity. These adaptations may be inadequate or successful, misguided or inventive, brittle or robust, but they are locally rational responses of practitioners to match resources to demands in the pursuit of multiple goals.

HOW DESIGNERS CAN ADAPT

Computer technology offers enormous opportunities. And enormous pitfalls. In the final analysis, the enemy of safety is complexity. In medicine, nuclear power and aviation, among other safety-critical fields, we have learned at great cost that often it is the underlying complexity of operations, and the technology with which to conduct them, that contributes to the human performance problems. Simplifying the operation of the system can do wonders to improve its reliability, by making it possible for the humans in the system to operate effectively and more easily detect breakdowns. Often, we have found that proposals to improve systems founder when they increase the complexity of practice (e.g., Xiao et al., 1996). Adding new complexity to already complex systems rarely helps and can often make things worse.

The search for operational simplicity, however, has a severe catch. The very nature of improvements and efficiency (e.g., in health care delivery) includes, creates, or exacerbates many forms of complexity. Ultimately, success and progress occur through monitoring, managing, taming, and coping with the changing forms of complexity, and not by mandating simple “one size fits all” policies. This has proven true particularly with respect to efforts to introduce new forms and levels of computerization. Improper computerization can simply exacerbate or create new forms of complexity to plague operations.

ADOPT METHODS FOR USE-CENTERED DESIGN OF INFORMATION TECHNOLOGY

Calls for more use of integrated computerized information systems to reduce error could introduce new and predictable forms of error unless there is a significant investment in use-centered design. The concepts and methods for use-centered design are available and are being used every day in software houses (Carroll and Rosson, 1992; Flach and Dominguez, 1995). Focus groups, cognitive walkthroughs, and interviews are conducted to generate a cognitive task analysis which details the nature of work to be supported by a product (e.g., Garner and Mann, 2003). Iterative usability testing of a system prior to use with a handful of representative users has become a standard, not an exceptional, part of most product development practices. “Out of the box” testing is conducted to elicit feedback on how to improve the initial installation and use of a fielded product.

Building partnerships, creating demonstration projects, and disseminating the techniques for organizations are significant and rewarding investments to ensure we receive the benefits of computer technology while avoiding designs that induce new errors (Kling, 1996). But there is much more to human-computer interaction than adopting basic techniques like usability testing (Karsh, 2004). Much of the work in human factors concerns how to use the potential of computers to enhance expertise and performance. The key to skillful as opposed to clumsy use of technological possibilities lies in understanding both the factors that lead to expert performance and the factors that challenge expert performance (Feltovich, Ford, and Hoffman, 1997). Once we understand the factors that contribute to expertise and to breakdown, we then will understand how to use the powers of the computer to enhance expertise. This is an example of a more general rule – to understand failure and success, begin by understanding what makes some problems difficult. We can achieve substantial gains by understanding the factors that lead to expert performance and the factors that challenge expert performance. This provides the basis to change the system, for example, through new computer support systems and other ways to enhance expertise in practice.