Every IT organization has its own delivery framework. Even in a tiny company, certain tools and actions are needed to deliver an application or service successfully. In this context, a framework is a predefined routine or set of tools that makes delivery easier. It normally consists of installation tools for application delivery, deployment tools for operating systems (OSes), and configuration tools for infrastructure. Together, they form your delivery framework.

It is important to understand which function each tool covers. Sometimes there are tools that already cover part of a process or an entire process. In that case, it is important to understand how to interact with those tools and at which point the automation has to hand over the task to them. A very popular example is ticket management systems. In bigger companies, they are typically part of the delivery process, even though they play a rather passive role. However, they normally cover a large part of other processes, such as change management, release planning, and the tracking of service deployments.

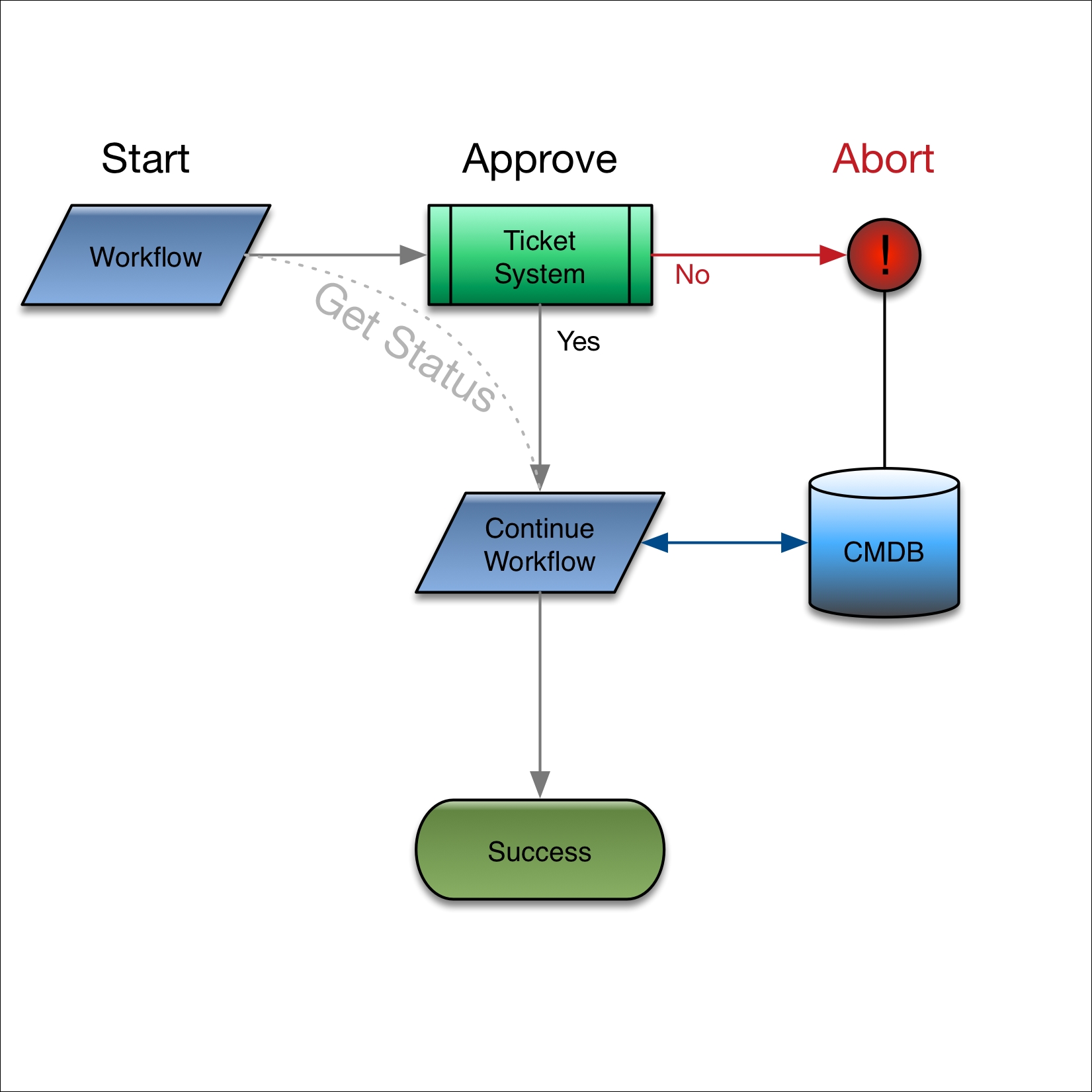

There is a misconception that ITIL plays no role in a modern SDDC, but that is not true. ITIL is still valid; the difference is that the integration can now be done completely automatically. This makes the data more complete than manual entry would and relieves administrators of some tedious tasks. A typical example is an IT delivery process that takes care of all the technical orchestration, hands over all necessary information to the ticketing system, and then, once it receives a successful response, continues the task and closes the ticket.
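The hand-over between orchestration and ticketing can be illustrated with a minimal Python sketch. It is not a real integration: `FakeTicketingSystem`, `deploy_vm`, and `delivery_workflow` are hypothetical names, and the in-memory class only stands in for whatever API your ticketing system actually exposes.

```python
from dataclasses import dataclass, field


@dataclass
class Ticket:
    ticket_id: int
    summary: str
    status: str = "open"
    notes: list = field(default_factory=list)


class FakeTicketingSystem:
    """Hypothetical in-memory stand-in for a real ticketing system API."""

    def __init__(self) -> None:
        self.tickets = {}
        self._next_id = 1

    def open_ticket(self, summary: str) -> Ticket:
        ticket = Ticket(self._next_id, summary)
        self.tickets[ticket.ticket_id] = ticket
        self._next_id += 1
        return ticket

    def add_note(self, ticket_id: int, note: str) -> bool:
        # Returns True to mimic a successful API response.
        self.tickets[ticket_id].notes.append(note)
        return True

    def close_ticket(self, ticket_id: int) -> None:
        self.tickets[ticket_id].status = "closed"


def deploy_vm(name: str) -> dict:
    """Hypothetical orchestration step; the IP address is a placeholder."""
    return {"name": name, "ip": "10.0.0.10", "state": "running"}


def delivery_workflow(itsm: FakeTicketingSystem, vm_name: str) -> Ticket:
    ticket = itsm.open_ticket(f"Deploy VM {vm_name}")
    result = deploy_vm(vm_name)  # technical orchestration
    # Hand all deployment details over to the ticketing system.
    if itsm.add_note(ticket.ticket_id, f"Deployed: {result}"):
        # Only continue and close the ticket after a successful return.
        itsm.close_ticket(ticket.ticket_id)
    return ticket


itsm = FakeTicketingSystem()
ticket = delivery_workflow(itsm, "web01")
print(ticket.status)  # closed
```

In a real environment, the three methods of the fake class would be replaced by calls to your ticketing system's API; the workflow logic (hand over, check the response, then close) stays the same.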

The same holds true for the configuration management database (CMDB). A CMDB is a typical ITIL requirement; it holds and maintains all software and hardware configurations within a data center. Its purpose is to keep track of changes and to know what is deployed and running in the data center. You might not find one in smaller data centers, but in bigger ones, with thousands of servers and hundreds of applications, maintaining a CMDB might become necessary. Keeping these CMDBs accurate is often one of an administrator's least popular tasks. Sometimes a CMDB already draws its data from the ticketing system. In other cases, a complete configuration dataset has to be provided, and the ticketing system is additionally required to file a change, support, or deployment request.

With automation, this data entry can also be handled by the technical deployment workflow. All we need to know is which data has to go into the CMDB and whether we can use an API to hand the data over. Also, each time somebody requests a change, the workflow can update the record to keep the data accurate. Finally, once a user decides to remove a workload or application, automation can mark the record in the CMDB as "application deleted".
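The CMDB lifecycle described above (create on deployment, update on change, flag on removal) can be sketched as follows. `FakeCMDB` and its methods are hypothetical; a real CMDB exposes its own API, and the attribute names used here are only examples.

```python
from typing import Any


class FakeCMDB:
    """Hypothetical in-memory stand-in for a CMDB reachable through an API."""

    def __init__(self) -> None:
        self.records: dict[str, dict[str, Any]] = {}

    def create(self, ci_name: str, attributes: dict[str, Any]) -> None:
        # Called by the deployment workflow once the workload is running.
        self.records[ci_name] = {**attributes, "status": "deployed"}

    def update(self, ci_name: str, changes: dict[str, Any]) -> None:
        # Called by the change workflow so the record stays accurate.
        self.records[ci_name].update(changes)

    def mark_deleted(self, ci_name: str) -> None:
        # Keep the record for traceability; only flag it as deleted.
        self.records[ci_name]["status"] = "application deleted"


cmdb = FakeCMDB()
cmdb.create("app01", {"os": "Linux", "vcpu": 2, "ram_gb": 4})  # deployment
cmdb.update("app01", {"ram_gb": 8})                           # change request
cmdb.mark_deleted("app01")                                    # removal
print(cmdb.records["app01"])
# {'os': 'Linux', 'vcpu': 2, 'ram_gb': 8, 'status': 'application deleted'}
```

Marking the record instead of deleting it keeps the history of the workload available, which is usually what change tracking requires.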

These steps of the IT delivery framework typically form part of a bigger picture, since all departments have to add their data to a CMDB or use the ticket management system. Automation within the data center therefore makes it easier for the teams to keep this kind of information accurate. However, it is important to know when and where these tools are used and what data goes into them.

On the other hand, if your organization is not using a CMDB or ticket management system yet, the good news is that many SDDC functions and features are quite similar to these frameworks. Therefore, you do not necessarily need to introduce these concepts together with the SDDC. You could simply declare the way the SDDC management handles deployments as your change and configuration management standard. Since introducing a proper ticket management system might be as complex as introducing an SDDC, you might consider using the SDDC's options first and then decide whether they fulfill your requirements. However, some regulations might still require a CMDB or ticket system to ensure compliance.

All this is part of your framework. By identifying your internal data center processes, you can also identify what your delivery framework looks like. Always keep in mind that this is relevant for all involved parties and departments. It does not make sense to have the framework fully documented for the server department while the processes and tools of every other department remain largely unknown. The SDDC will touch every part of your data center; even if the server unit has a big share in it, the SDDC cannot work without the participation of every other department in the data center.

Standardization is perhaps the biggest topic when it comes to the SDDC or to automation itself. For scripts and workflows, it is paramount to follow a standard way of doing things. If every deployment contains exceptions, it might be impossible to automate it. Normally, a few tasks in a data center have already been standardized. The following indicators suggest that something already follows a standard:

- There is a form to request the service

- The service is deployed according to preset choices

- These choices can be combined modularly to fit most requirements

- There may be runbooks for creating any configuration or deploying any service

- There is a catalog of services

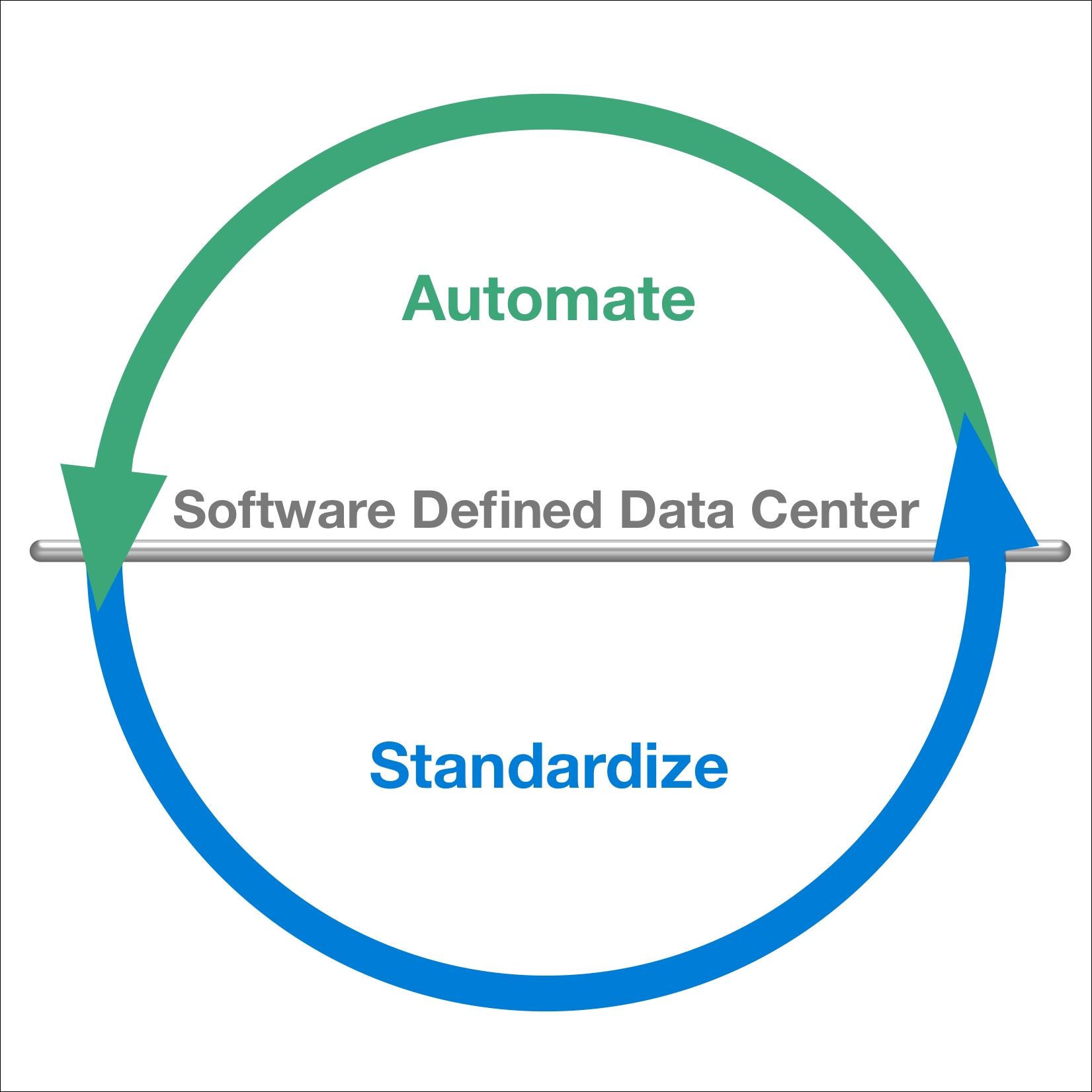

Each of these typically indicates a standardized service setup. Standardization essentially means easily repeatable actions based on predefined data entry forms. This is why standardization goes hand in hand with automation. If every deployment is different, every OS is custom, every network setting is unique, and every storage requirement is different, it will be impossible to automate in a straightforward manner. Workflows are perfect for applying standards, but of only limited use for exceptions and customized installations.

Therefore, one of the most important things to do before creating an SDDC is to ensure that standardization is in place. The good news is that many organizations already have some kind of standardization in place.
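A request form with preset choices is easy to check automatically, which is one reason standardization and automation fit together. The following minimal sketch assumes a hypothetical service catalog; the service names, OS versions, and sizes are illustrative placeholders, not a recommended portfolio.

```python
# Hypothetical service catalog: each service offers a fixed set of preset choices.
CATALOG = {
    "linux-vm": {"os": {"RHEL 9", "Ubuntu 22.04"}, "size": {"small", "medium", "large"}},
    "windows-vm": {"os": {"Windows Server 2022"}, "size": {"medium", "large"}},
}


def validate_request(service: str, form: dict) -> list:
    """Return a list of problems; an empty list means the request is standard."""
    if service not in CATALOG:
        return [f"unknown service: {service}"]
    options = CATALOG[service]
    errors = []
    # Every catalog field must be filled with one of its preset values.
    for field_name, allowed in options.items():
        value = form.get(field_name)
        if value not in allowed:
            errors.append(f"{field_name}={value!r} is not a preset choice")
    # Fields the catalog does not know about indicate a custom request.
    for field_name in sorted(set(form) - set(options)):
        errors.append(f"unexpected field: {field_name}")
    return errors


print(validate_request("linux-vm", {"os": "Ubuntu 22.04", "size": "small"}))  # []
print(validate_request("linux-vm", {"os": "Ubuntu 22.04", "size": "custom"}))
# ["size='custom' is not a preset choice"]
```

Requests that pass this check can go straight to an automated workflow; anything that fails is an exception that needs to be handled separately.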

In some areas, standardization is transparent to the end user:

- In the storage team, the pool size, logical device (LDEV) size or logical unit number (LUN) size can be set in chunks (for example, 100 GB steps)

- In the network team, IPs/networks may be requested at a pool or range level (for example, 20 addresses)

- In the server department, VMs can be requested using predefined compute and memory values (for example, 1 vCPU with 2 GB RAM, 2 vCPU with 4 GB RAM, and so on)
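The standard sizes listed above can be enforced with a few lines of code. This minimal sketch uses the 100 GB storage step, the 20-address IP range, and the first two VM sizes from the examples; the larger VM sizes and all function names are assumptions for illustration.

```python
import math

# Step sizes from the examples above; the larger VM sizes are assumptions.
STORAGE_STEP_GB = 100
IP_BLOCK_SIZE = 20
VM_SIZES = [(1, 2), (2, 4), (4, 8), (8, 16)]  # (vCPU, RAM in GB), ascending


def storage_allocation_gb(requested_gb: int) -> int:
    """Round a storage request up to the next 100 GB chunk."""
    if requested_gb <= 0:
        raise ValueError("requested size must be positive")
    return math.ceil(requested_gb / STORAGE_STEP_GB) * STORAGE_STEP_GB


def ip_blocks_needed(addresses: int) -> int:
    """Number of 20-address ranges needed to cover a request."""
    return math.ceil(addresses / IP_BLOCK_SIZE)


def pick_vm_size(vcpu: int, ram_gb: int) -> tuple:
    """Return the smallest predefined size that satisfies both values."""
    for size in VM_SIZES:
        if size[0] >= vcpu and size[1] >= ram_gb:
            return size
    raise ValueError("no standard size fits; this is an exception request")


print(storage_allocation_gb(250))  # 300
print(ip_blocks_needed(45))        # 3
print(pick_vm_size(1, 6))          # (4, 8)
```

The user still asks for what they need, but the infrastructure always hands out one of the predefined chunks, which keeps the result predictable for automation.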

However, some standards affect the user more than the infrastructure standards do. Mostly, these concern OS-to-application combinations, or the restriction that only certain OS types are supported for deployment. Typically, organizations try to keep the zoo of OSes and applications as small as possible and as big as necessary. Therefore, they mostly support some versions of Windows as well as some specific Linux distributions.

These are often set by the IT group itself. Just keep in mind that for every OS/application you want to support, you need to have somebody who can help you troubleshoot and fix problems which may arise on these platforms.

Standardization can also lead to the introduction of so-called runbooks, which describe how to install an OS or an application on top of it. These runbooks need to be kept as up to date as possible to stay relevant, so somebody has to verify all the steps over and over and update them as the OSes and applications evolve. This is often a full-time job. Therefore, some IT departments try to keep the number of runbooks low, to prevent their staff from constantly having to update them.

A runbook is typically a detailed step-by-step guide that is easy for an administrator to follow. Normally, runbooks are written so that even a new employee can follow their instructions. Bigger organizations can have runbooks for tens or hundreds of use cases. However, since following a runbook is a read-and-copy exercise, the work can be quite error-prone for administrators doing it the first few times.

The good news is that with automation, this work is taken over by the orchestrator running the workflows. The workflow replaces the runbook and completes the steps much more quickly than a human. It also has no problem repeating the same steps over and over again. This is why standardization and automation go so well together.

Instead of maintaining the runbooks, administrators or service designers now keep the workflow up to date.

By following a modular approach, this should be quite simple to do. Once the workflow is updated, it can be run to recheck its functionality. No one has to sit through all the steps and type on the screen what is written in a book.
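A modular workflow that replaces a runbook can be sketched as an ordered list of small step functions. This is a minimal illustration, not an orchestrator: the step names, the placeholder IP address, and `run_workflow` are hypothetical. Updating the workflow means replacing one step and rerunning the list to recheck it.

```python
from typing import Callable, Dict, List, Tuple

Context = Dict[str, str]
Step = Callable[[Context], None]


def install_os(ctx: Context) -> None:
    ctx["os"] = "installed"


def configure_network(ctx: Context) -> None:
    ctx["ip"] = "10.0.0.20"  # placeholder address


def install_app(ctx: Context) -> None:
    # Each module checks its own preconditions instead of relying on a reader.
    if ctx.get("os") != "installed":
        raise RuntimeError("OS not installed")
    ctx["app"] = "installed"


def run_workflow(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run each step in order, stop at the first failure, and log every result."""
    ctx: Context = {}
    log: List[str] = []
    for step in steps:
        try:
            step(ctx)
        except Exception as exc:
            log.append(f"FAILED {step.__name__}: {exc}")
            return False, log
        log.append(f"OK {step.__name__}")
    return True, log


WORKFLOW = [install_os, configure_network, install_app]
ok, log = run_workflow(WORKFLOW)
print(ok, log)  # True ['OK install_os', 'OK configure_network', 'OK install_app']
```

Because each step is a separate module, a new OS version only requires swapping `install_os` for an updated function; the rerun log then shows immediately whether the rest of the workflow still works.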

Before automation, standardization limited your service portfolio but enhanced your efficiency. With the SDDC, you can broaden your portfolio while keeping standardization, thanks to the power of automation. Indeed, you will be able to accomplish more tasks than before, with greater efficiency and diversity.