3

Enterprise Storage

CERTIFICATION OBJECTIVES

3.01 Explain the Security Implications of Enterprise Storage

3.11 Secure Storage Management

Some people love to say “storage is cheap”—and when you’re talking about consumer-grade storage, such as the hard drive you may have in your laptop or PC right now, it is cheap. Consumers can easily obtain terabyte-plus drives for less than $100. Enterprise storage is designed for large-scale, multiuser environments. When compared to consumer storage, enterprise storage is typically more reliable, more scalable, has better fault tolerance, and is quite a bit more expensive on a byte-for-byte basis. An enterprise storage solution for a medium to large enterprise will typically consist of one or more online storage arrays, offline storage for backups, and an archiving solution for long-term or permanent storage. Some enterprise architects will also include disaster recovery solutions to address data recovery from localized disasters that only affect part of the enterprise storage solution.

As organizations migrated away from local storage toward centralized, network storage solutions, they began to understand that this migration carried its own set of concerns. Putting all the data “eggs” into one basket made protecting that basket more important than ever before, and IT organizations soon began to understand that “storage security” was just as important as perimeter security, remote access security, and so on. Each type of enterprise storage carries its own set of risks and potential vulnerabilities. We’ll examine some of them in this chapter.

CERTIFICATION OBJECTIVE 3.01

Explain the Security Implications of Enterprise Storage

Enterprise storage solutions are attractive for a number of reasons, such as moving data off user PCs, efficiency in storage, better backup and data retention, easier recovery, and so on. Unfortunately, some of the very things that make enterprise storage so attractive are some of the biggest potential vulnerabilities. Consolidating data on network storage can be great, but what happens when an attacker compromises that storage? If a single PC is compromised, an attacker likely only has access to the data on that PC. If an attacker gains access to network storage, they may gain access to a great deal of data. If the attacker is able to escalate privileges on the storage device or compromise a backup-level account with read access to everything, they may be able to read any piece of data on that storage device no matter how sensitive it is. This consolidation of data creates a virtual vault that, like a bank vault, contains many treasures to rob once the main security systems are defeated.

The very definition of enterprise storage is evolving at a rapid pace. Over the past several years, enterprise storage has gone from a relatively static and controlled environment (such as a company-owned datacenter) to distributed storage centers, cloud storage, and mobile networks. Data is valuable, and users are insisting on more and more access. Whether they’re using desktops, laptops, or mobile devices, users are demanding the ability to access data any time and from anywhere. This fluid storage environment introduces a significant number of input paths and access methods that may be great for business processes but also introduce new vulnerabilities and threats against enterprise storage environments. For example, multiple access paths and multiple access methods increase the likelihood that an infected file will find its way into the network storage. Once there, the infection may spread rapidly, thus making cleanup and eradication efforts very challenging. If the infection isn’t discovered quickly enough, infected files may be backed up and reintroduced inadvertently during recovery efforts.

Collection of data in one or more central storage networks creates a target of opportunity for potential attackers. If access to the storage network is denied or slowed, the impact may be felt by the entire organization. If the storage network is damaged, corrupted, or destroyed beyond immediate repair, the organization may be forced to implement disaster recovery protocols and restore from backup—a lengthy and often costly process.

Fortunately, many of the traditional best practices can be deployed to help secure network storage solutions:

Deploying a firewall and IPS between the network storage and the Internet

Filtering and monitoring traffic accessing the network storage (although this must be done carefully to ensure it does not adversely impact storage performance)

Restricting direct access to storage devices

Limiting access to logical units (LUNs) as needed

Regular vulnerability scanning of storage environments with immediate remediation of discovered vulnerabilities

Persistent and diligent patching and maintenance of firmware and software within the network storage environment

Requiring strong authentication and access control measures

Using encryption to secure sensitive data within the storage environment (at a minimum; for greater security, all data and files could be encrypted)

Using encrypted communications when accessing and transporting data

Routine virus, Trojan, and malware scanning of all files

Use of SNMP v3 with authentication and encryption when SNMP must be used

Logging access to storage networks

The threats to storage area networks and the methods used to secure them are a rapidly growing field, so much so that the storage industry and concerned security professionals have created a security-specific forum and working groups within the Storage Networking Industry Association (www.snia.org). The security working group has divided the storage security field into four main areas:

Storage System Security (SSS): Securing the systems and applications of the storage network, along with integration into the IT and security infrastructure

Storage Resource Management (SRM): Secure provisioning, tuning, monitoring, and control of storage resources

Data In Flight (DIF): Protecting the integrity, confidentiality, and availability of the data as it’s transferred

Data At Rest (DAR): Protecting the integrity, confidentiality, and availability of the data as it resides on disk, tape, or any other type of media

SNIA has some very good resources for securing and protecting storage networks, which are available at www.snia.org.

Does it sound like enterprise storage is ultimately a risky concept to be avoided? It shouldn’t. Yes, removing data from endpoints and placing it into centralized storage does introduce new risks, but it can also help you control other risks. Yes, centralized storage creates fewer locations for data storage, but that also means there are fewer locations to secure, audit, and monitor. Removing data from endpoints can reduce the risk of compromise and data loss because your security staff is now able to focus on protecting a smaller subset of resources. With data in centralized locations, it’s also easier to enforce policies related to data classification, encryption, data retention, and so on.

CERTIFICATION OBJECTIVE 3.02

Virtual Storage

If entire servers can be virtualized, why not virtualize storage? The truth is, virtualizing storage is not a new concept. IBM used the term virtual storage when using two types of physical storage (auxiliary and central) to create storage space in their z/OS operating system. But recently the term is being applied to the process of separating logical storage from the physical storage mechanisms used to provide that storage. As a simple example, consider a shared drive employed by a group of users within a company. The users don’t really care that their files are stored on a Dell R710 with three 1TB drives in a RAID 5 configuration—they only care that when they go looking for their files, the files are still there and are not corrupted. The physical implementation of the storage is irrelevant to the user base; in many cases, the less end users are aware of the physical implementation of storage, the better it is for administrators.

The most relevant implementation of virtual storage for most users today is that of cloud storage. To the end user, cloud storage is completely abstract—you have no idea where your data is actually being stored, how it is moved, or how it is replicated. You simply use your designated (or preferred) interface to push data into and pull data from the cloud environment. Cloud storage claims to have some significant benefits, such as automatic replication to multiple datacenters, which reduces the chance of catastrophic data loss. Although this may be great from a business-continuity perspective, from a security perspective you have to wonder, where is that data really being stored? In what country? Who has access to it? What else is being stored on the same physical storage mechanisms where your data resides? Consider iCloud or Dropbox—both are virtual storage examples used by millions of people every day. As an end user, you have no idea where the data you are uploading is stored, how it is stored, how it is maintained, how it is secured, and so on. You simply access the data across the network and trust that the rest is being taken care of on your behalf.

Despite the potential security implications, virtual storage is catching on. Companies such as EMC are looking to virtual storage to help them create federated storage solutions that combine local and global resource pooling to combat “data distance.” Data distance is essentially how far away the data is from the user or application that needs that data. The farther away the data is, the more issues arise resulting from latency, bandwidth, connection reliability, and so on. If an organization can really address the performance and security issues of virtual storage, it will become an even more attractive storage option for organizations looking to reduce cost and increase accessibility and survivability.

CERTIFICATION OBJECTIVE 3.03

NAS—Network Attached Storage

Network attached storage (NAS) is a storage device connected to a local network usually via Ethernet (as shown in Figure 3-1). Users typically access the NAS, and the data residing on it, via TCP/IP connections. A NAS is typically used by single users and small businesses and can range up to several terabytes in size. NAS devices are designed to provide file-based storage; they are not designed to run applications or function as servers in any other traditional role a server might fulfill. A NAS often provides better performance and simpler configuration than a file server.

FIGURE 3-1 Network attached storage

Most NAS systems contain one or more disks that are usually configured into a RAID array (0, 1, or 5). Some NAS support the ability to divide the available disk space into logical units of storage. NAS uses file-based protocols such as SMB/CIFS, NFS, FTP, SFTP, and AFP to communicate with clients.
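To make the RAID levels mentioned above concrete, here is a minimal sketch of how usable capacity differs between RAID 0, 1, and 5. It assumes identical disks and treats RAID 1 as a simple mirror in which every disk holds the same data; the function name `usable_capacity_tb` is made up for this illustration, and real arrays lose some additional space to metadata and spares.

```python
def usable_capacity_tb(level: int, disks: int, disk_tb: float) -> float:
    """Return approximate usable capacity in TB for identical disks."""
    if level == 0:
        # Striping only: all space is usable, but there is no redundancy.
        if disks < 2:
            raise ValueError("RAID 0 is modeled here with at least 2 disks")
        return disks * disk_tb
    if level == 1:
        # Mirroring: every disk holds a full copy, so only one disk's worth is usable.
        if disks < 2:
            raise ValueError("RAID 1 needs at least 2 disks")
        return disk_tb
    if level == 5:
        # Striping with distributed parity: one disk's worth of space holds parity.
        if disks < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return (disks - 1) * disk_tb
    raise ValueError("only RAID 0, 1, and 5 are modeled in this sketch")


if __name__ == "__main__":
    for level, disks in ((0, 3), (1, 2), (5, 3)):
        print(f"RAID {level} with {disks} x 1 TB disks:",
              usable_capacity_tb(level, disks, 1.0), "TB usable")
```

Under these assumptions, three 1TB drives in RAID 5 yield roughly 2TB of usable space, with the remaining capacity spent on parity.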

Security options on a NAS can be rather limited. Some NAS provide the ability to limit the IP addresses allowed to connect to the NAS or can restrict the users allowed to access the management interface (which is often web based). Most NAS support simple user ID/password controls for access, and some even integrate with external authentication services such as RADIUS and Active Directory. A NAS is not really a “hardened” device and should not be exposed to a public Internet connection. For optimal performance and security, a NAS would be located on a restricted VLAN where only authorized user traffic is permitted.
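The IP address restriction described above can be sketched in a few lines. This is an illustration only, not any vendor's implementation: the subnet `10.20.30.0/24` stands in for a hypothetical restricted storage VLAN, and `is_client_allowed` is a made-up helper name.

```python
import ipaddress

# Hypothetical restricted storage VLAN; substitute your own address plan.
ALLOWED_NETWORKS = [ipaddress.ip_network("10.20.30.0/24")]


def is_client_allowed(client_ip: str, allowed=ALLOWED_NETWORKS) -> bool:
    """Return True only if the client address falls inside an allowed network."""
    addr = ipaddress.ip_address(client_ip)
    # Default deny: anything outside the listed networks is refused.
    return any(addr in net for net in allowed)


if __name__ == "__main__":
    for ip in ("10.20.30.15", "192.168.1.5"):
        print(ip, "allowed" if is_client_allowed(ip) else "denied")
```

An allowlist of this kind is only one layer. Source addresses can be spoofed, so it complements user authentication rather than replacing it.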

CERTIFICATION OBJECTIVE 3.04

SAN—Storage Area Network

A storage area network (SAN) is a dedicated, high-performance network that allows storage systems to communicate with computer systems for block-level data storage. The key point about a SAN is that it connects storage devices to computers (sometimes both workstations and servers). A SAN attempts to correct the data isolation that occurs when data is stored, accessed, and manipulated on individual servers. By consolidating assets into a SAN, an organization may be able to save time and money while potentially increasing security. SANs are typically expensive in terms of hardware, implementation, and management, but if you are consolidating the storage needs of 100 individual servers into a SAN, it could still be a very cost-effective solution.

A SAN is typically on a dedicated network used only for data access. Certain protocols and technologies (such as Fiber Channel or 10 GbE) are popular for implementing SANs because they have the speed and capacity to handle data transfers. Although allowing some administrative traffic is acceptable, ensuring the SAN is a network dedicated to data storage and data access is important for both performance and security reasons:

Allowing “regular” user traffic such as e-mail, web browsing, streaming audio, and so on, on the same network as the SAN clogs up the network and will reduce the performance of the SAN.

Allowing any external traffic increases the possibility of malware infecting the SAN.

Dedicating the network to data storage allows for better performance, scaling, and planning as your organization’s data needs change.

Restricting the traffic and protocols allowed on the SAN helps reduce the risk of compromise, attacks, and “accidents.”

Consolidating data storage into a SAN can also help with backups, disaster recovery, and business continuity. By centralizing data storage, administrators can focus backup and recovery processes on critical assets and reduce data losses from those “we didn’t know that data was only stored on that server” scenarios. Because data is stored “in bulk,” recovery becomes a matter of replacing data in the proper location on a limited number of storage devices rather than attempting to replace data on many individual servers.

If your organization uses a SAN, take the time to audit the logical controls. Who has access to the SAN? Is traffic restricted as it should be? Do you perform any monitoring on your SAN? Many organizations make the mistake of considering networks dedicated for storage access to be “secure” because they are internal networks. The larger your organization is, the more likely it is that some server, workstation, or development platform has access to the SAN when it really should not.
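One way to approach such an audit is to compare the systems that are supposed to reach the SAN with the systems actually observed connecting to it. The sketch below assumes you can export both lists from your own inventory and from the SAN's logs; the host names and the function `audit_san_access` are hypothetical.

```python
def audit_san_access(authorized, observed):
    """Compare who should reach the SAN with who actually does."""
    authorized = {host.lower() for host in authorized}
    observed = {host.lower() for host in observed}
    return {
        # Systems seen on the SAN that nobody approved: investigate these first.
        "unexpected": sorted(observed - authorized),
        # Approved systems that never connect: candidates for having access removed.
        "unused": sorted(authorized - observed),
    }


if __name__ == "__main__":
    report = audit_san_access(
        authorized={"db01", "mail01"},
        observed={"db01", "dev-test07"},
    )
    print(report)
```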

SANs must be carefully secured and monitored. By their very nature, they are very attractive targets. The “all eggs in one basket” concept could mean that an attacker gaining access to the SAN could be gaining access to all of your organization’s critical data. Here are some tips for securing a SAN:

Use a dedicated network for the SAN, with no other user traffic.

Never expose the SAN to the Internet.

Mask the logical units (LUNs) so that users can only see the storage areas they are supposed to see (this can sometimes be accomplished at the Host Bus Adapter level).

Use encryption to further protect sensitive data.

Restrict the protocols running on the SAN. (Do you really need SNMP?)

Limit access to the SAN.

Use zoning to group storage ports and hosts (this helps to limit the LUNs a server can access).

Secure the LAN you use to connect any management consoles to the SAN, and ensure an attacker can’t make the leap from any of your LANs over to the SAN.

Monitor the SAN traffic for performance and security issues.

CERTIFICATION OBJECTIVE 3.05

VSAN

The abstraction of logical from physical storage has become even more popular with the increased use of virtual storage area networks (VSANs). Much like a VLAN, a VSAN allows storage traffic to be isolated within specific parts of the larger storage area network. Cisco, which developed VSAN technology, describes it as the division of a storage area network into multiple, isolated subnetworks; this is related to, but distinct from, zoning, which restricts which devices within a fabric can communicate with one another. Much like a VLAN separates broadcasts and traffic from other networks, a VSAN separates storage traffic. VSANs have a number of benefits that make them attractive when implemented correctly:

Storage can be relocated without requiring a physical layout change.

Using VSANs can make expanding and modifying storage easier.

Problems in a single VSAN are typically isolated to that VSAN and don’t affect the rest of the storage.

Redundancy between VSANs can minimize the risk of catastrophic data loss.

VSANs are silos of storage; if one is compromised the others can stay secure.

Just because storage may have been “virtualized” does not mean you should ignore any of the capacity planning or I/O performance metrics you would worry about with a physical storage solution. That virtual solution has to eventually be implemented in hardware somewhere. Remember first and foremost that if you virtualize storage and end up pooling and consolidating resources, you will likely increase utilization of the back-end physical resources. This can lead to contention and poor performance. Aggregate your storage requirements, especially any application I/O performance requirements, and then spec out your storage solution to ensure it measures up. If you are procuring new resources, be sure to consider your workload, required redundancy protection, needed storage, protocol support needed, and so on, before making any purchase. To be safe, track your performance and I/O needs over a period of several days to get a more accurate baseline before compensating for burst usage and expansion.
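The aggregation step described above is simple arithmetic, sketched below under stated assumptions. The workload figures, the 30 percent headroom, and the names `Workload`, `required_resources`, and `backend_is_sufficient` are all hypothetical. Summing peak IOPS assumes every workload peaks at the same moment, which is deliberately conservative.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    capacity_tb: float  # storage the workload needs
    peak_iops: int      # peak I/O rate taken from your measured baseline


def required_resources(workloads, headroom=0.30):
    """Sum capacity and peak IOPS, then add headroom for bursts and growth."""
    total_tb = sum(w.capacity_tb for w in workloads)
    total_iops = sum(w.peak_iops for w in workloads)
    return total_tb * (1 + headroom), total_iops * (1 + headroom)


def backend_is_sufficient(workloads, backend_tb, backend_iops, headroom=0.30):
    """Check whether the physical back end covers the aggregated demand."""
    need_tb, need_iops = required_resources(workloads, headroom)
    return backend_tb >= need_tb and backend_iops >= need_iops


if __name__ == "__main__":
    demand = [Workload("database", 4.0, 8000), Workload("mail", 2.0, 2000)]
    print("needed (TB, IOPS):", required_resources(demand))
    print("10 TB / 12,000 IOPS back end sufficient?",
          backend_is_sufficient(demand, backend_tb=10, backend_iops=12000))
```

In this example the back end has enough raw capacity but falls short on IOPS once headroom is included, which is exactly the kind of contention the paragraph above warns about.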

Do you have excess storage capacity in your virtual servers? Consider using a virtual SAN appliance to create a storage area network accessible to all your virtual machines. A virtual SAN appliance is typically implemented in software inside a virtual machine, but some storage vendors are starting to build this type of capability into their products as part of the firmware.

CERTIFICATION OBJECTIVE 3.06

iSCSI

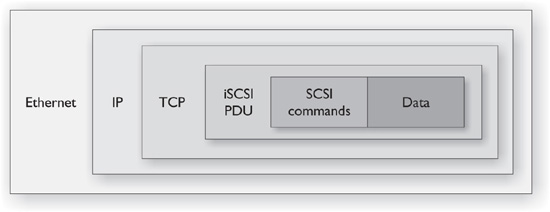

Internet Small Computer System Interface (iSCSI) is an IP-based standard for linking data storage and servers (or hosts) and has been key in increasing both the prevalence and popularity of SANs. Essentially, iSCSI encapsulates data requests from the server and the return data (iSCSI is a bidirectional protocol); the traffic can also be encrypted, typically with IPsec, if that option is enabled. iSCSI runs over TCP/IP, usually on Ethernet, and can be used to transfer data across LANs or even the Internet. With the proliferation of gigabit networks and the advent of 10 GbE, iSCSI is a very viable alternative to more expensive Fiber Channel solutions. iSCSI works by generating SCSI commands based on user, application, or operating system requests. Those commands are encapsulated (and encrypted, if enabled), a packet header is added, and the resulting packets are transmitted out an Ethernet connection. On arrival, the process is reversed, and the SCSI commands are sent on to the control and storage device(s). Figure 3-2 shows an example of an iSCSI packet.

FIGURE 3-2 Logical depiction of an iSCSI packet
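To illustrate the encapsulation step, the following sketch wraps a SCSI READ(10) command in a 48-byte iSCSI header. The field layout reflects a simplified reading of the iSCSI specification (RFC 7143), and the helper names are made up for this example. It handles only small, single-level LUN numbers and performs no login, negotiation, or encryption. In a real stack the operating system then adds the TCP, IP, and Ethernet headers.

```python
import struct

ISCSI_OP_SCSI_COMMAND = 0x01  # opcode for a SCSI Command PDU
FLAG_FINAL = 0x80             # F bit: last PDU of this command
FLAG_READ = 0x40              # R bit: data flows from target to initiator


def build_read10_cdb(lba: int, blocks: int) -> bytes:
    """Build a 10-byte SCSI READ(10) command descriptor block."""
    # opcode 0x28, flags, 4-byte LBA, group number, 2-byte block count, control
    return struct.pack(">BBIBHB", 0x28, 0, lba, 0, blocks, 0)


def build_scsi_command_header(lun: int, task_tag: int, cmd_sn: int,
                              exp_stat_sn: int, cdb: bytes,
                              expected_len: int) -> bytes:
    """Wrap a SCSI CDB in a 48-byte iSCSI header (simplified)."""
    if not 0 <= lun < 256:
        raise ValueError("this sketch only encodes LUNs 0-255")
    if len(cdb) > 16:
        raise ValueError("CDBs longer than 16 bytes are not handled here")
    lun_field = bytes([0, lun]) + bytes(6)   # simplified single-level LUN encoding
    return struct.pack(
        ">BB2xB3s8sIIII16s",
        ISCSI_OP_SCSI_COMMAND,
        FLAG_FINAL | FLAG_READ,
        0,                        # no additional header segments
        (0).to_bytes(3, "big"),   # no data segment accompanies a read request
        lun_field,
        task_tag,
        expected_len,             # bytes the initiator expects back
        cmd_sn,
        exp_stat_sn,
        cdb,                      # padded with zero bytes to 16 by struct
    )


if __name__ == "__main__":
    cdb = build_read10_cdb(lba=2048, blocks=8)
    header = build_scsi_command_header(lun=0, task_tag=1, cmd_sn=1,
                                       exp_stat_sn=1, cdb=cdb,
                                       expected_len=8 * 512)
    print(len(header), "byte header:", header.hex())
```

The SCSI command itself travels unchanged inside the header, which is why confidentiality has to come from a separate mechanism on the path.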

Because iSCSI is a network protocol, administrators must take precautions such as the following to secure iSCSI implementations:

Using a separate network or VLAN for SAN traffic: One of the most obvious means to secure iSCSI traffic is to isolate it and protect it from external traffic and any internal system that does not need direct access.

Using access control lists: ACLs should be used to limit the users and systems that can connect to the iSCSI devices. Most ACLs support IP address and/or MAC address filtering. The initiator name of the iSCSI client can also be used to filter connections.

Implementing strong authentication: Protocols such as CHAP and RADIUS should be used to more securely identify client connections.

Locking down management interfaces: Restrict access to the management interface/capabilities of any iSCSI device. Restrict accounts, change any vendor-supplied accounts/passwords, and ensure auditing is enabled where supported.

Encrypting network traffic where necessary: IPsec can be used to encrypt just the data portion of the traffic (transport mode) or the entire transmission (tunnel mode). Use transport mode on internal networks and tunnel mode for any iSCSI traffic leaving an internal network or any network you consider to be secure.

Encrypting data at rest: This is essentially a best practice for any SAN. Use an encrypting file system, encryption application, or encryption capabilities that may exist on the iSCSI device itself.
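As an illustration of the CHAP authentication mentioned in the list above, the sketch below computes and checks a CHAP response as defined in RFC 1994: an MD5 hash over the identifier, the shared secret, and the challenge. The secrets and function names are invented for the example, and real iSCSI initiators and targets handle this exchange during login rather than in application code.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """Compute the CHAP response: MD5(identifier || secret || challenge)."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def verify_chap(identifier: int, secret: bytes, challenge: bytes,
                response: bytes) -> bool:
    """Target-side check: recompute the response and compare in constant time."""
    expected = chap_response(identifier, secret, challenge)
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    shared_secret = b"example-secret-change-me"  # hypothetical shared secret
    challenge = os.urandom(16)                   # the target picks a fresh random challenge
    answer = chap_response(1, shared_secret, challenge)
    print("correct secret accepted:", verify_chap(1, shared_secret, challenge, answer))
    print("wrong secret accepted:",
          verify_chap(1, b"wrong", challenge, answer))
```

The secret itself never crosses the network, but anyone who captures a challenge and response can attempt to guess it offline, so long random secrets matter.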

CERTIFICATION OBJECTIVE 3.07

FCoE

Fiber Channel over Ethernet (FCoE) is a protocol developed to allow Fiber Channel traffic to be encapsulated within Ethernet frames (much as iSCSI allows SCSI traffic to be sent over IP networks). FCoE allows Fiber Channel to take advantage of the speeds of 10 GbE networks. FCoE is primarily used in datacenters because it has the potential to reduce the number of connections required to support both network and data traffic. A Converged Network Adapter is a device that combines both the network interface functionality and the Host Bus Adapter functionality into a single connection, which is very useful in datacenter environments. Although some vendors suggest a single network using FCoE and Ethernet can support both the data and network traffic for a datacenter, most administrators continue to operate separate networks for data and network traffic for both performance and security reasons.

Because FCoE is Fiber Channel traffic encapsulated in Ethernet frames, securing it involves protecting it against network sniffing, spoofing, authentication attacks, and so on, as you would with any SAN. One area in particular where security personnel and administrators may disagree is the separation of FCoE traffic onto dedicated networks and VLANs. In most SAN solutions, a separate network dedicated to carrying data traffic is the “norm,” but one of the specific advantages of FCoE is the ability to carry data and network traffic across the same connection. This reduces cost, heat, the number of connections required, management overhead, and so on. If your FCoE traffic must be carried on a common physical network, ensure the traffic is maintained on a dedicated and secured VLAN with sufficient capacity to support the high bandwidth demand of large-scale data transfer. You certainly don’t want your storage traffic being slowed down by users watching YouTube or listening to Internet radio.

CERTIFICATION OBJECTIVE 3.08

LUN Masking

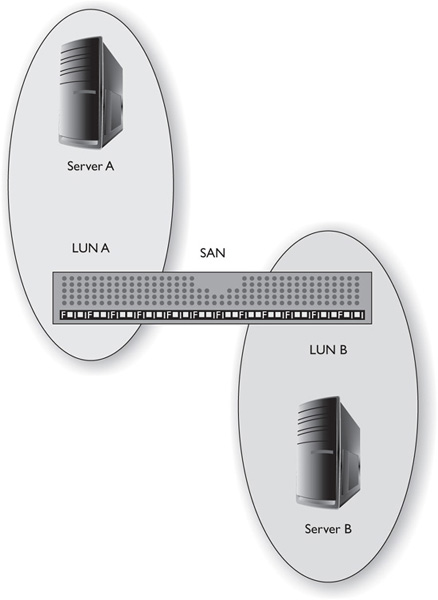

A LUN (logical unit number) within a SAN is a logical disk: a section of storage that is identified by a unique number. The LUN is used to address the storage space by protocols such as SCSI, Fiber Channel, or iSCSI. For example, imagine you have a RAID array divided into separate volumes with a SCSI target assigned to each volume. That SCSI target can provide one or more LUNs. Computers accessing the RAID must identify which LUN they are connecting to for read/write operations. LUN masking is the authorization process that makes a LUN available to some systems but not others (see Figure 3-3). LUN masking is typically performed at the adapter level (Host Bus Adapter, or HBA) but in some cases is implemented on the storage controller.

FIGURE 3-3 LUN masking

LUN masking can sometimes be defeated at the HBA level because it is possible to forge source addresses (IP address, MAC address, WWNs) and trick the HBA into allowing an unauthorized system access to the LUN. The more “secure” method is to perform LUN masking at the storage controller. Although LUN masking does have some security benefits, it is most often done to isolate storage volumes from servers to prevent corruption issues. For example, servers running Windows operating systems will sometimes corrupt non-Windows volumes by writing a Windows volume label to any LUN the Windows-based server has access to. This can corrupt the LUN and prevent non-Windows operating systems such as Linux and NetWare from using the LUN. LUN masking helps prevent this issue by making some LUNs “invisible” to the Windows server.
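Controller-side LUN masking can be pictured as a lookup table that maps each initiator to the LUNs it is allowed to see. The sketch below is illustrative only: the WWPNs, LUN numbers, and function names are hypothetical, and a real storage controller enforces this in firmware.

```python
# Hypothetical masking table: initiator WWPN -> LUNs that initiator may see.
MASKING_TABLE = {
    "10:00:00:00:c9:aa:bb:01": {0, 1},  # for example, a Windows file server
    "10:00:00:00:c9:aa:bb:02": {2},     # for example, a Linux database server
}


def visible_luns(initiator_wwpn: str, table=MASKING_TABLE) -> frozenset:
    """Return the LUNs reported to this initiator; unknown initiators see none."""
    return frozenset(table.get(initiator_wwpn.lower(), ()))


def may_access(initiator_wwpn: str, lun: int, table=MASKING_TABLE) -> bool:
    """Default deny: a LUN is accessible only if it is explicitly mapped."""
    return lun in visible_luns(initiator_wwpn, table)


if __name__ == "__main__":
    windows_host = "10:00:00:00:C9:AA:BB:01"
    print("Windows host sees:", sorted(visible_luns(windows_host)))
    print("Windows host may touch LUN 2:", may_access(windows_host, 2))
```

Because the table is keyed on an address the initiator supplies, a forged WWPN would pass this check. Masking limits visibility; it does not authenticate the host.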

Periodically audit each LUN on your storage devices. Which systems have access? Which should have access? Should any be added/removed? Are you restricting access as much as you should be? Are you encrypting sensitive data? If you are not, why aren’t you?

CERTIFICATION OBJECTIVE 3.09

HBA Allocation

The Host Bus Adapter (HBA) is often referred to as a Fiber Channel interface card because that is the protocol HBAs are most often associated with. However, the term HBA is increasingly being used with iSCSI implementations as well. The HBA connects a computer system to the storage network and handles the traffic associated with the data transfers. Each HBA has a unique World Wide Name (WWN), similar to a MAC address on a NIC. WWNs are 8 bytes; like MAC addresses, the WWN of each HBA contains the Organizationally Unique Identifier (OUI) of the manufacturer. HBAs have two types of WWNs: a node WWN (WWNN) that identifies the HBA and is shared by all the ports on the HBA, and port WWNs (WWPNs) that each identify an individual port.
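The sketch below shows one way to pull the manufacturer's OUI out of an 8-byte WWN. It assumes the common layouts in which the first hex digit (the NAA field) determines where the OUI sits, and it handles only NAA values 1, 2, and 5; the sample WWNs and the function name `parse_wwn` are illustrative.

```python
import string


def parse_wwn(wwn: str) -> dict:
    """Split an 8-byte WWN into its NAA type and manufacturer OUI (if known)."""
    digits = wwn.replace(":", "").replace("-", "").lower()
    if len(digits) != 16 or any(c not in string.hexdigits for c in digits):
        raise ValueError("expected 16 hex digits (8 bytes)")
    naa = int(digits[0], 16)
    if naa in (1, 2):
        oui = digits[4:10]   # OUI occupies bytes 2-4 of the name
    elif naa == 5:
        oui = digits[1:7]    # OUI follows the NAA digit directly
    else:
        oui = None           # other formats are not decoded in this sketch
    if oui is not None:
        oui = ":".join(oui[i:i + 2] for i in range(0, 6, 2))
    return {"naa": naa, "oui": oui}


if __name__ == "__main__":
    for sample in ("10:00:00:00:c9:12:34:56", "50:06:01:60:3b:a0:12:34"):
        print(sample, "->", parse_wwn(sample))
```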

Much like ports in a switch can be assigned to a specific VLAN, HBAs can be allocated to specific devices, connections, storage zones, and so on. For performance and security reasons, most storage networks are divided into zones—different logical or physical storage areas based on sensitivity of the data, use of the data, who has access to the data, or any other criteria administrators need to implement. HBAs typically have one or more ports on them; these ports can be assigned (or allocated) to specific storage targets by administrators. HBAs can be configured to talk to multiple storage zones, and multiple HBAs on the same system can be configured to talk to the same zone. From a security perspective, most administrators will limit the zones any HBA or group of HBAs can access. Similarly, within the storage devices, administrators will limit the HBAs that can communicate with the storage device and access a given zone based on the WWNs of the HBA attempting to access it.
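Zoning as described above can be modeled as named groups of port WWNs, where two ports may communicate only if they share at least one zone. The zone names and WWPNs below are hypothetical, and a real fabric enforces zoning in the switches rather than in a script.

```python
# Hypothetical zone definitions: zone name -> member port WWNs.
ZONES = {
    "zone_finance": {"10:00:00:00:c9:00:00:0a", "50:06:01:60:00:00:00:01"},
    "zone_web":     {"10:00:00:00:c9:00:00:0b", "50:06:01:60:00:00:00:02"},
}


def zones_for(wwpn: str, zones=ZONES) -> set:
    """Return the names of every zone this port belongs to."""
    return {name for name, members in zones.items() if wwpn in members}


def can_communicate(port_a: str, port_b: str, zones=ZONES) -> bool:
    """Two ports can talk only if they share at least one zone."""
    return bool(zones_for(port_a, zones) & zones_for(port_b, zones))


if __name__ == "__main__":
    finance_hba = "10:00:00:00:c9:00:00:0a"
    print("finance HBA -> finance array port:",
          can_communicate(finance_hba, "50:06:01:60:00:00:00:01"))
    print("finance HBA -> web array port:",
          can_communicate(finance_hba, "50:06:01:60:00:00:00:02"))
```

Keeping each HBA in as few zones as possible mirrors the least-privilege approach most administrators take.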

CERTIFICATION OBJECTIVE 3.10

Redundancy (Location)

One of the key tenets in business continuity and disaster recovery is redundancy. Most administrators are familiar with redundancy in components such as power supplies in a server or redundancy in networks such as firewalls configured with failover options to use alternate paths. When it comes to storage, backups are critically important. Whereas equipment can be replaced, data oftentimes cannot. Backups are a great way of protecting against the absolute loss of data, but can be extremely slow from a recovery perspective (consider restoring 50 terabytes of enterprise storage from tape). As the price of storage media and bandwidth continues to drop, many organizations are increasingly turning toward distributed, redundant storage solutions to protect their data and reduce downtime.

Using redundant storage locations is a simple concept: you keep copies of data in more than one location (see Figure 3-4). On the simplest level, this could involve a RAID 1 (mirror) setup where everything written to drive A is automatically written to drive B as well. Although this may work from a simple and immediate recovery aspect, it’s not very effective for business continuity because any outage, failure, or disaster that affects drive A will likely affect drive B as well. The next logical step up would be to store copies of data on separate storage devices (that is, mirroring storage device A to storage device B). Some organizations do this with mission-critical data, essentially creating a large RAID 1 implementation using separate storage devices. This works well for data redundancy and can make for fast recovery times, but it still leaves the organization susceptible to data loss because any major event or disaster that affects storage device A will likely affect storage device B as well if both are housed in the same facility.

FIGURE 3-4 Redundant storage location

The next logical evolution in data redundancy is geographical replication—storing copies of data in different physical locations. This approach can be very bandwidth intensive because you will be replicating data from one location to another. Synchronous replication attempts to keep two data stores synchronized in real time, but this requires very high bandwidth and requires that the data stores be located somewhat close together to eliminate potential issues associated with latency. Asynchronous replication requires slightly less bandwidth and allows the sites to be farther apart because changes are streamed and committed before full acknowledgments can be received. Point-in-time or snapshot replication updates the backup data store periodically and is the most bandwidth efficient because it only sends changes and new files to the backup data store.
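The distance limit on synchronous replication follows from simple arithmetic: every write must wait for a round trip to the remote site. The sketch below assumes light travels through optical fiber at roughly 200 km per millisecond and ignores switching and equipment delay, so it gives a lower bound only. The function names are hypothetical.

```python
FIBER_KM_PER_MS = 200.0  # light in optical fiber covers roughly 200 km per millisecond


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time between two sites, ignoring equipment delay."""
    return 2 * distance_km / FIBER_KM_PER_MS


def sync_write_ms(local_write_ms: float, distance_km: float) -> float:
    """A synchronous write is acknowledged only after the remote copy confirms."""
    return local_write_ms + min_round_trip_ms(distance_km)


def async_write_ms(local_write_ms: float, distance_km: float) -> float:
    """An asynchronous write is acknowledged locally; distance adds no wait."""
    return local_write_ms
```

With an assumed 0.5 ms local write, sites 1,000 km apart add at least 10 ms to every synchronous write, while the asynchronous write still completes in 0.5 ms. The cost of the asynchronous approach is that the remote copy can lag behind the primary.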

Although some SAN solutions include geographical replication capabilities that allow administrators to set up their own data redundancy solutions, organizations are increasingly turning toward cloud-based storage solutions to solve the geographical replication issue. Cloud storage is essentially a virtualized storage environment accessed across the network. From a location redundancy perspective, cloud storage seems almost ideal. Data is accessible from anywhere, and cloud environments are (by default) supposed to be failure resistant, replicated across multiple nodes in different locations, and extremely fault tolerant. Unfortunately, for cloud services, the brochure is far more attractive than the reality. Just ask any of the customers affected by the great “Amazon Cloud Collapse” that occurred in April of 2011. When Amazon lost one of its five major datacenters, thousands of organizations, including Foursquare and Zynga, lost the web services they were relying on the cloud to provide. The lesson learned by many organizations was that having your own backup plan is crucial—you simply can’t rely on any one solution when it comes to preserving and protecting your data.

CERTIFICATION OBJECTIVE 3.11

Secure Storage Management

Everyone has a junk drawer—it might not be a physical drawer, but everyone has some location that has devolved into a collection of assorted odds and ends that don’t seem to fit in anywhere else. Allowing this sort of behavior in an enterprise storage environment quickly leads to higher costs and loss of available space. Storage must be managed. Storage management is an all-encompassing term for the tools, policies, and processes used to manage storage devices, networks, and services. In this section, we’ll examine three aspects of storage management: multipath, snapshots, and deduplication.

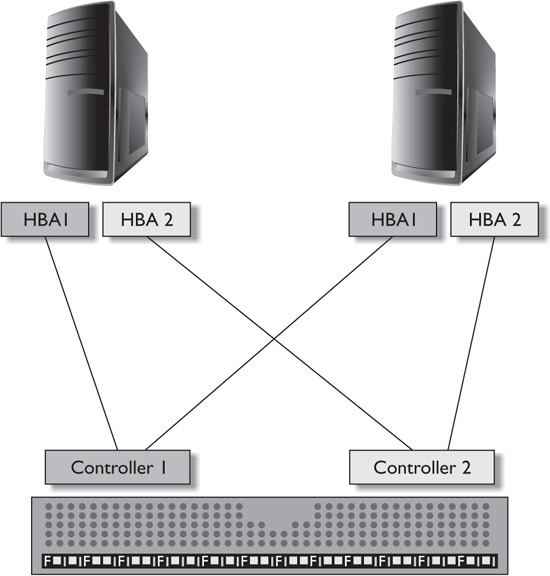

Multipath

In storage, multipath is the use of two or more physical paths to access a storage device or system, for example, using two HBAs that connect to two controllers on the same SAN, as shown in Figure 3-5, or using two NICs to connect to two separate Ethernet networks of an iSCSI-based SAN. Multipath solutions can provide greater reliability and availability through the fault tolerance of having more than one way to reach the SAN. Multipath solutions can also be configured to load balance I/O across paths to improve data access performance. From a security standpoint, multipath supports availability: if one pathway is unavailable, the other continues to provide access to the data (hopefully).
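The two benefits of multipath, load balancing and failover, can be illustrated with a toy path selector. This is a simplified sketch, not the behavior of any real multipath driver. The class name, method names, and path labels such as `hba0` are hypothetical.

```python
from itertools import cycle


class MultipathDevice:
    """Toy model of a storage device reachable over several paths."""

    def __init__(self, paths):
        self.healthy = {p: True for p in paths}
        self._order = cycle(paths)  # round-robin iterator over all paths
        self._count = len(paths)

    def mark(self, path, up):
        """Record a path as up (True) or failed (False)."""
        self.healthy[path] = up

    def next_path(self):
        """Return the next healthy path in round-robin order."""
        for _ in range(self._count):
            path = next(self._order)
            if self.healthy[path]:
                return path
        raise RuntimeError("all paths to the storage device are down")
```

While both paths are healthy, I/O alternates between them. When one is marked as failed, every request goes to the survivor, and only the loss of all paths makes the data unavailable.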

FIGURE 3-5 Multipath access

Snapshots

A snapshot is a point-in-time picture of the state of a storage system. A snapshot can be a full copy of a data store taken on a given date/time or it can be a set of differential data, similar to an incremental backup, taken from a full data store copy at a specific date/time. The use of snapshots is quite popular—full backups can take a long time to complete and consume a great deal of storage space. Some full backup systems require disabling write access during the backup process—something most enterprise environments cannot support due to loss of productivity. Snapshots typically take less time and resources to create when compared to full backups. In many cases, once the initial snapshot is taken, subsequent snapshots only need to store the changed data, which makes for a quicker, more efficient backup, provided the original snapshot is still accessible and the sequence of changes is preserved in the snapshots. Because most snapshot methods use a pointer system that refers back to the original snapshot when recording changes, subsequent snapshots are much smaller than full backups because only the changes are recorded.

Snapshots are usually created as either read-only or read-write copies of the data being backed up. Read-only snapshots are often used in mission-critical environments because the application can continue to operate in read-write mode on the original data while backup operations are carried out on the read-only snapshot. Read-write snapshots, sometimes called branching snapshots, create different versions of stored data. This type of snapshot is very useful in hosting and virtualization environments because you can make changes to a system, create a snapshot, and then continue to make changes. This method is very useful when testing new software or patches, updating and testing standard images, or any other task where the ability to back changes out of a file system quickly is useful.
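One common way to build pointer-based snapshots is copy-on-write: taking a snapshot copies nothing, and a block's old contents are preserved only when that block is first overwritten. The sketch below is a toy model of that idea and is not a description of any vendor's implementation. The `Volume` class and its methods are hypothetical.

```python
class Volume:
    """Toy block store with pointer-based (copy-on-write) snapshots."""

    def __init__(self, blocks):
        self.blocks = dict(blocks)   # block number -> current data
        self.snapshots = []          # one dict of preserved blocks per snapshot

    def snapshot(self):
        """Start a snapshot; nothing is copied until a block changes."""
        self.snapshots.append({})
        return len(self.snapshots) - 1

    def write(self, block, data):
        """Save the old contents into the newest snapshot, then overwrite."""
        if self.snapshots and block not in self.snapshots[-1]:
            self.snapshots[-1][block] = self.blocks.get(block)
        self.blocks[block] = data

    def read_snapshot(self, snap_id, block):
        """Read a block as it was when snapshot snap_id was taken."""
        # The oldest preserved copy at or after snap_id holds the original data.
        for saved in self.snapshots[snap_id:]:
            if block in saved:
                return saved[block]
        return self.blocks.get(block)  # unchanged since the snapshot
```

Note that reading an older snapshot may have to walk through later ones, which is why the sequence of snapshots must be preserved for the earlier ones to remain usable.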

Deduplication

Deduplication is, quite simply, the elimination of duplicate copies of information. Users have a natural tendency to store copies of data files. Examine the storage resources of any decent size working group and you’ll find redundant copies of e-mails, memos, drawings, and so on, in shared folders as well as personal folders for every member of that group. From a storage perspective, redundant data on the same data store is a bad thing. It increases the disk space being used, which increases the backup storage needs, which increases the time required to perform backups, which eventually ends up costing the organization more money. Performing deduplication can be a daunting task for administrators. Most deduplication methods involve file name searches, but how can administrators be certain what appears to be a duplicate file is indeed an exact duplicate? How can administrators find files that are complete duplicates of other files in all respects except for the filename? Fortunately, there are a number of utilities that can help in your deduplication efforts. These tools can help you identify duplicate files based on filename, size, checksums, file dates, and so on. For example, Microsoft’s File Server Resource Manager can run searches for duplicate files and provide you with customized reports based on owner, file size, and a number of other sorting criteria.
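Checksums answer the question of whether two files are exact duplicates regardless of filename. The following minimal sketch uses Python's standard library to group files by size first and then by SHA-256 digest. It is an illustration of the checksum approach, not a replacement for a dedicated tool, and the function names are our own.

```python
import hashlib
import os
from collections import defaultdict


def file_digest(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(root):
    """Group files under root by content; return only groups with 2+ files."""
    by_size = defaultdict(list)
    for folder, _dirs, names in os.walk(root):
        for name in names:
            path = os.path.join(folder, name)
            if os.path.isfile(path) and not os.path.islink(path):
                by_size[os.path.getsize(path)].append(path)

    by_digest = defaultdict(list)
    for paths in by_size.values():
        if len(paths) > 1:  # a unique size cannot have a duplicate
            for path in paths:
                by_digest[file_digest(path)].append(path)
    return [sorted(group) for group in by_digest.values() if len(group) > 1]
```

Comparing sizes first avoids hashing files that cannot possibly match, which matters on large data stores. The sketch only reports duplicates; deciding which copy to keep remains an administrative decision.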

From a security standpoint, deduplication can be critically important when it comes to sensitive or mission-critical data. Imagine responding to a subpoena that requires your company to list all files containing references to a specific project. Now imagine responding to that subpoena when multiple end users all have copies of original data files “backed up” in their own personal directories. The same could happen with credit card numbers, social security numbers, patent applications, or any other sensitive data. If users make copies (or are allowed to make copies) of files, ensuring the data is not lost, stolen, or compromised becomes far more difficult. With mission-critical or sensitive data, deduplication becomes much more than a “storage recovery” effort—it can significantly reduce the risk of data loss if you can prevent rampant or unauthorized data replication.

CERTIFICATION SUMMARY

In this chapter, we discussed the security implications of enterprise storage—specifically how consolidating storage can reduce costs but can also create very inviting targets for attackers and data thieves. We also covered some of the methods that can be used to help secure enterprise storage and particularly the storage networks used to support and operate enterprise storage. We discussed virtual storage and the need to understand the vulnerabilities associated with such a solution.

The next objectives covered were network attached storage and storage area networks, as well as methods for securing storage devices and the networks that connect them to the systems accessing them. Next, we covered VSAN, a virtual storage area network that is essentially the same as a VLAN but is dedicated for use with data storage. We also discussed two protocols commonly used to access storage across networks: iSCSI and FCOE. iSCSI, which is essentially SCSI commands encapsulated and transmitted via Ethernet, is quite popular and has become more widely adopted because it is able to take advantage of 10Gbps network speeds. FCOE, which is essentially Fiber Channel commands encapsulated and transmitted via Ethernet, has the potential to reduce the number of network connections through the use of Host Bus Adapters that can service both network traffic and storage traffic.

Then we discussed LUN masking as a means of partitioning off storage devices and restricting access to different areas of the storage device. We also discussed HBA allocation—a means to restrict access to storage through the use of World Wide Names found on all HBA devices. The next objective was redundancy, which is simply the use of redundant storage locations to protect against data loss.

Finally, we discussed secure storage management and the use of multipath access, snapshots, and deduplication.

TWO-MINUTE DRILL

Explain the Security Implications of Enterprise Storage

Enterprise storage solutions are attractive for a number of reasons, such as moving data off user PCs, efficiency in storage, better backup and data retention, easier recovery, and so on. Unfortunately, some of the very things that make enterprise storage so attractive are some of the biggest potential vulnerabilities.

Consolidation of data creates a virtual vault that, like a bank vault, contains many treasures to rob once the main security systems are defeated.

Many of the best practices used to secure networks can be applied to secure storage networks.

Virtual Storage

The idea of “virtual storage” is not new and can be applied to most situations where the end users are completely unaware of the physical implementation of the data storage they are using.

Cloud storage is a good example of virtual storage.

Data distance is essentially how far away the data is from the user or application that needs that data. The farther away the data is, the more issues arise resulting from latency, bandwidth, connection reliability, and so on.

NAS—Network Attached Storage

Network attached storage (NAS) is a storage device connected to a local network usually via Ethernet.

Security options on a NAS are typically limited to a simple user ID/password or the NAS may integrate with an external authentication source such as LDAP or RADIUS.

A NAS is typically not a hardened device and access to this device should be restricted where possible.

SAN—Storage Area Network

A storage area network (SAN) is a dedicated, high-performance network that allows storage systems to communicate with computer systems for block-level data storage.

A SAN should be a dedicated network where access is restricted to authorized users and systems.

Consolidating data storage into a SAN can also help with backups, disaster recovery, and business continuity.

SANs can be very attractive targets because they store a great deal of data. Fortunately, many of the techniques used to secure LANs can be applied to SANs, such as restricting traffic, preventing external access, monitoring, and so on.

VSAN

A VSAN separates storage traffic much like a VLAN separates network traffic.

A VSAN allows storage traffic to be isolated within specific parts of the larger storage area network.

Proper use of VSANs can help increase performance and security.

iSCSI

iSCSI provides for the encapsulation and encryption, if enabled, of data requests and the return data (iSCSI is a bidirectional protocol). iSCSI runs over Ethernet and can be used to transfer data across LANs or even the Internet.

iSCSI is typically cheaper to implement than other block transfer protocols such as Fiber Channel.

iSCSI should be secured through the use of ACLs, dedicated networks, strong authentication, and encryption for storage and transmission.

FCOE

Fiber Channel Over Ethernet (FCOE) is a protocol developed to allow Fiber Channel traffic to be encapsulated within Ethernet frames (much like iSCSI).

A Host Bus Adapter is a device used to implement FCOE that can carry both network and data traffic over a single connection.

LUN Masking

A LUN (logical unit number) within a SAN is a logical disk: a section of storage that is identified by a unique number.

LUN masking is the authorization process that makes a LUN available to some systems but not others.

Windows systems write Windows Volume labels to LUNs they access, which can cause those LUNs to appear corrupted to other operating systems such as Linux.

HBA Allocation

Host Bus Adapters (HBAs) can be dedicated for access to specific storage resources.

Each HBA has a unique World Wide Name (WWN), similar to a MAC address on a NIC. WWNs are 8 bytes; like MAC addresses, the WWN of each HBA contains the Organizationally Unique Identifier (OUI) of the manufacturer.

HBAs have two types of WWNs: a node WWN (WWNN) that identifies the HBA and is shared by all the ports on the HBA, and port WWNs (WWPNs) that each identify a single storage port.

Redundancy (Location)

Redundant storage locations is a simple concept: you are simply keeping copies of data in more than one location.

Geographical replication is the storing of copies of data in different physical locations, such as different datacenters in different states.

Synchronous replication attempts to keep two data stores synchronized in real time, but this requires very high bandwidth and requires that the data stores be located somewhat close together to eliminate potential issues associated with latency.

Asynchronous replication requires slightly less bandwidth and allows the sites to be farther apart because changes are streamed and committed before full acknowledgments can be received.

Point-in-time or snapshot replication updates the backup data store periodically and is the most bandwidth efficient because it only sends changes and new files to the backup data store.

Secure Storage Management

Storage management is an all-encompassing term for the tools, policies, and processes that are used to manage storage devices, networks, and services.

Multipath is the use of two or more physical paths to access a storage device or system, for example, using two HBAs that connect to two controllers on the same SAN or using two NICs to connect to two separate Ethernet networks of an iSCSI-based SAN.

A snapshot is a point-in-time picture of the state of a storage system.

Read-only snapshots are often used in mission-critical environments because the application can continue to operate in read-write mode on the original data while backup operations are carried out on the read-only snapshot.

Read-write snapshots, sometimes called branching snapshots, create different versions of stored data.

Deduplication is the elimination of duplicate copies of information.

SELF TEST

The following questions will help you measure your understanding of the material presented in this chapter. Read all the choices carefully because there might be more than one correct answer. Choose all correct answers for each question.

1. Which of the following is a risk associated with enterprise storage?

A. Eradicating infected files within the storage environment is difficult.

B. Attacks on storage network affect the availability of data.

C. Allowing access to centralized storage from servers, desktops, and mobile devices increases the threat vectors and avenues for attack.

D. All of the above.

2. Your organization is looking to add three new Windows servers to the pool of systems accessing your enterprise storage. Each will be processing sensitive data. Which of the following best practices should you ensure are enforced during this process?

A. Traffic flow between the Windows servers and the storage network should be rate limited.

B. Creation of a new LUN for these servers and encryption of all data at rest on the new LUN.

C. The use of SNMP v2 to monitor the health of the Windows servers and granting access to all critical LUNs.

D. Scanning of all network traffic passing between the Windows servers.

3. Your organization is looking to migrate 1,500 users from a physical file server to a virtual storage server. Each user has a 2GB quota, but the average usage is less than 50 percent. Assuming a 70-percent maximum utilization rate, how much space does the virtual storage need to provide to accommodate the storage needs?

A. 1.5TB

B. 1.8TB

C. 2.05TB

D. 2.24TB

4. Which of the following is an example of virtual storage?

A. VSAN

B. Cloud storage

C. RAID 10 storage

D. iSCSI

5. Your marketing department has requested you set up a new NAS for their group to store large files and multimedia projects on. The NAS will be accessed by Windows-based PCs, iPads, MacBook Pros, and Android-based tablets. The NAS will only be accessible from inside the corporate network, and security of data during transmission is not a concern. What protocols (at a minimum) should the deployed NAS support?

A. SMB/CIFS

B. SMB/CIFS, AFP

C. SMB/CIFS, AFP, SFTP

D. SMB/CIFS, AFP, SFTP, NFS

6. Which of the following is a primary difference between a SAN and a NAS?

A. A NAS is usually larger and provides access to more storage.

B. A NAS uses Ethernet for connectivity whereas a SAN does not.

C. A NAS is a device whereas a SAN is a dedicated network.

D. A SAN only provides file-based access.

7. Double-tagging takes advantage of the backward-compatibility features of what protocol?

A. 802.1x

B. 802.1q

C. 802.1e

D. 802.1w

8. Which of the following is a best practice for configuring LUNs on a SAN?

A. Allowing direct Internet access to the SAN

B. Masking LUNs so users can only see the LUNs they are supposed to see

C. Allowing authenticated users access to all LUNs in your organization

D. Routing traffic to the SAN across the network used for VoIP traffic

9. Which of the following are benefits of using a VSAN?

A. Storage can be relocated without requiring a physical layout change.

B. Problems in a single VSAN are typically isolated to that VSAN and don’t affect the rest of the storage.

C. Redundancy between VSANs can minimize the risk of catastrophic data loss.

D. All of the above.

10. When looking at a fully encapsulated iSCSI packet, what is the correct order of headers reading from outside to inside?

A. TCP, IP, iSCSI PDU, SCSI Commands, Data

B. Ethernet, TCP, IP, iSCSI PDU, SCSI Commands, Data

C. Ethernet, IP, TCP, iSCSI PDU, SCSI Commands, Data

D. Ethernet, iSCSI PDU, IP, TCP, SCSI Commands, Data

11. Which of the following are not techniques that will help you secure an iSCSI-based SAN?

A. Use separate VLANs for storage traffic.

B. Encrypt storage traffic between clients and storage devices.

C. Limit the systems that can connect to the SAN using IP addresses, MAC addresses, and/or initiator names.

D. Using only GbE or 10 GbE network devices

12. A converged network adapter combines the functionality of what two devices?

A. A NIC and an HBA

B. An HBA and a SAN

C. A NIC and a VLAN

D. A WWN and a NIC

13. When compared to iSCSI, one of the specific advantages of FCOE is the ability to:

A. Support multiple users

B. Encapsulate storage traffic in Ethernet frames for transport

C. Carry data and network traffic across the same connection

D. Use dedicated VLANs for storage traffic

14. In reference to a logical disk within a SAN, the term LUN stands for:

A. Local unit network

B. Logical unit network

C. Logical unit number

D. Local unit number

15. Which of the following can potentially be used at the Host Bus Adapter level to perform LUN masking?

A. WWNs, MAC address, and IP address

B. MAC address, VLAN, and target

C. LUN ID, IP address, and DNS

D. WWNs, iSCSI target, and PGP key

16. How many bytes are in a valid WWN?

A. 4

B. 8

C. 16

D. 32

17. Which of the following is the main differentiator between redundant storage locations and geographical replication?

A. Geographical replication stores copies of data on different devices.

B. Geographical replication requires data to be stored in different physical locations.

C. Redundant storage locations use asynchronous replication.

D. Redundant storage locations require the use of RAID devices.

18. The two main benefits of multipath are:

A. Minimal hardware requirements and increased capacity

B. Load balancing and custom traffic filtering

C. Availability and security

D. Reliability through redundancy and increased performance

19. For mission-critical environments, which type of snapshot method is most commonly used?

A. Read-write snapshots

B. Read-only snapshots

C. Branching snapshots

D. Differential snapshots

20. You are examining 100GB worth of user data and discover 10 percent of the data contains files with a single exact duplicate in another location on the same data store. If you delete all the exact duplicates, how much space will you regain?

A. 1GB

B. 2GB

C. 5GB

D. 10GB

LAB QUESTION

Your organization is creating a new iSCSI-based SAN to process and store data for developers and beta testers, and you’ve been asked to come up with a design that factors in performance and security. Prepare a draft system design that encompasses the types of devices you’ll need and the required best practices for securing an iSCSI network.

SELF TEST ANSWERS

1. D. All of the above. Although an enterprise storage solution can be very effective from a consolidation and cost-reduction standpoint, it does carry some additional risk. Infected files, particularly self-replicating infections, can be difficult to clean from network storage and associated backups. Any activity that affects the storage network itself, including DDoS attacks, broadcast storms, and misconfigurations, also affects the availability of the data. If most or all of the organization’s data is centralized, users and business processes will need the ability to access that storage from multiple locations and platforms, including servers, PCs, laptops, and mobile devices. These additional access paths increase the avenues of attack and can increase the risk to your organization’s data if not managed and secured properly.

2. B. If the new Windows servers are processing sensitive or critical data, creating a new LUN to service their storage needs and ensuring all data at rest is encrypted on those LUNs will help to guard against data corruption and unintentional access. Encrypting the data at rest helps protect it against direct theft or loss.

A, C, and D are incorrect. Rate limiting the traffic between the servers and the storage network will definitely impact performance, which is not desirable with storage networks. SNMP v2 is considered to be an insecure protocol, and granting access to multiple LUNs could lead to data corruption if those other LUNs are used by UNIX- or Solaris-based systems. Scanning of the traffic passing between the Windows servers may help secure the servers themselves, but provides little direct benefit in securing the storage area network.

3. C. 1,500 users times 2GB each equals 3,000GB. 70 percent of 3,000 is 2,100. 2,100 divided by 1024 is 2.05. Therefore, your organization will need 2.05TB of virtual storage to accommodate your users.

A, B, and D are all incorrect.
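The arithmetic behind answer 3 can be written out as a short calculation. This sketch simply reproduces the steps given in the answer, and the function name is hypothetical.

```python
def required_storage_tb(users: int, quota_gb: float, max_utilization: float) -> float:
    """Total quota scaled by the planning utilization rate, in terabytes (1024 GB)."""
    total_quota_gb = users * quota_gb               # 1,500 * 2 = 3,000 GB
    planned_gb = total_quota_gb * max_utilization   # 3,000 * 0.70 = 2,100 GB
    return planned_gb / 1024                        # 2,100 / 1024, about 2.05 TB


needed_tb = round(required_storage_tb(1500, 2, 0.70), 2)
```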

4. ![]() B. Cloud storage is a classic example of virtual storage. Users of cloud storage have no idea how the storage is physically implemented, where it is located, and so on.

B. Cloud storage is a classic example of virtual storage. Users of cloud storage have no idea how the storage is physically implemented, where it is located, and so on.

A, C, and D are incorrect. VSAN stands for virtual storage area network, which is a virtual implementation of a storage network, not the virtual storage itself. RAID 10 storage requires a minimum of four disks and is often called a stripe of mirrors because disks are both striped and mirrored, which leads to good performance and redundancy. iSCSI is a storage protocol that encapsulates SCSI commands inside TCP/IP packets, which are typically carried over Ethernet.

5. C. At a minimum, your NAS should support SMB/CIFS. This protocol can be used by each of the client platforms listed.

A, B, and D are incorrect. They all contain more protocols than are really needed. AFP could be used by the Apple products, but each of the Apple products can already use SMB. SFTP is not needed because there is no concern for secure transmission. NFS is not needed because no servers or Solaris systems are connecting to the NAS.

6. C. A NAS is an actual storage device. SAN stands for storage area network; it’s a dedicated network for access to data storage.

A, B, and D are incorrect. A NAS does not usually provide access to more storage than a SAN, both a NAS and a SAN use Ethernet, and a SAN typically provides access to block-level storage.

7. B. Double-tagging takes advantage of the backward-compatibility features of the 802.1Q protocol.

A, C, and D are incorrect. A is the standard for Port-based Network Access Control (PNAC). C is the System Load Protocol. D is the Rapid Spanning Tree Protocol.

8. B. As a best practice, you should mask the logical units (LUNs) so that users can only see the storage areas they are supposed to see.

A, C, and D are incorrect. A is incorrect because you should not allow direct Internet access to your SAN. C is incorrect because authenticated users should only have access to the LUNs they need, not every LUN on the SAN. D is incorrect because you should use a dedicated network for storage traffic whenever possible.
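The idea behind LUN masking in answer 8 can be shown as a minimal sketch: each initiator is mapped to the set of LUNs it is allowed to see, and everything else stays hidden. The table contents, the WWN values, and the function name are hypothetical; real storage arrays configure masking through their own management tools.

```python
# Hypothetical masking table: initiator WWN -> LUNs that host may see.
MASKING_TABLE = {
    "10:00:00:00:c9:aa:bb:01": {0, 1},
    "10:00:00:00:c9:aa:bb:02": {2},
}


def visible_luns(initiator_wwn, all_luns):
    """Return only the LUNs this initiator is permitted to see."""
    allowed = MASKING_TABLE.get(initiator_wwn, set())  # unknown hosts see nothing
    return sorted(set(all_luns) & allowed)


print(visible_luns("10:00:00:00:c9:aa:bb:01", range(4)))
print(visible_luns("10:00:00:00:c9:ff:ff:ff", range(4)))
```

Defaulting unknown initiators to an empty set reflects the point made for option C: access is granted per LUN as needed, not to every LUN on the SAN.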

9. D. All these are benefits of using a VSAN.

10. C. When looking at a fully encapsulated iSCSI packet, the correct order of headers reading from outside to inside is Ethernet, IP, TCP, iSCSI PDU, SCSI Commands, Data.

A, B, and D are all incorrect.
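The nesting order in answer 10 can be visualized with a small sketch that wraps the payload in each header, starting from the innermost layer. The bracket notation and the function name are illustrative only; this does not build real packets.

```python
# Outside-to-inside order, as listed in answer 10.
LAYERS = ["Ethernet", "IP", "TCP", "iSCSI PDU", "SCSI Commands", "Data"]


def encapsulate(layers):
    """Wrap the innermost item in each outer header, working outward."""
    packet = layers[-1]                      # start with the data itself
    for header in reversed(layers[:-1]):     # SCSI Commands first, Ethernet last
        packet = f"{header}[{packet}]"
    return packet


print(encapsulate(LAYERS))
# Ethernet[IP[TCP[iSCSI PDU[SCSI Commands[Data]]]]]
```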

11. D. Using GbE or 10 GbE devices will increase the speed and transfer rate of your SAN, but on its own will do nothing to help you secure an iSCSI-based SAN.

A, B, and C are incorrect. They are all best practices for securing iSCSI-based SANs.

12. A. A Converged Network Adapter is a device that combines both the network interface functionality and the Host Bus Adapter functionality in a single connection.

B, C, and D are all incorrect.

13. C. FCoE was specifically designed to carry data and network traffic across the same connection (if so desired), although it is not often implemented in this manner.

A, B, and D are incorrect. A is incorrect because both iSCSI and FCoE support multiple users. B is incorrect because both iSCSI and FCoE encapsulate storage traffic in Ethernet frames for transport. D is incorrect because iSCSI and FCoE can make use of dedicated VLANs.

14. C. In reference to a logical disk within a SAN, the term LUN stands for logical unit number.

A, B, and D are all incorrect.

15. A. WWNs, a MAC address, and an IP address can all potentially be used at the Host Bus Adapter level to perform LUN masking.

B, C, and D are all incorrect.

16. B. A valid WWN is 8 bytes long.

A, C, and D are all incorrect.
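As a rough illustration of the 8-byte length in answer 16, the sketch below checks a WWN written in the common colon-separated hexadecimal notation (eight pairs of hex digits). The sample WWN is made up, and the function names are assumptions for this example.

```python
import re

# Eight colon-separated pairs of hex digits = 8 bytes.
WWN_PATTERN = re.compile(r"[0-9a-fA-F]{2}(:[0-9a-fA-F]{2}){7}")


def is_valid_wwn(text):
    """Return True if text is eight colon-separated hex byte pairs."""
    return WWN_PATTERN.fullmatch(text) is not None


def wwn_to_bytes(text):
    """Convert a colon-separated WWN string to its raw bytes."""
    return bytes.fromhex(text.replace(":", ""))


sample = "10:00:00:00:c9:12:34:56"  # made-up WWN for illustration
print(is_valid_wwn(sample), len(wwn_to_bytes(sample)))
```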

17. B. Geographical replication requires data to be stored in different physical locations such as in different datacenters in different cities.

A, C, and D are incorrect. A is incorrect because both geographical replication and redundant storage locations can store copies of data on different devices. C is incorrect because both can use asynchronous replication. D is incorrect because neither absolutely requires the use of RAID devices (even though the use of RAID devices is highly encouraged with either method).

18. D. The two main benefits of multipath are reliability through redundancy and increased performance.

A, B, and C are all incorrect.

19. B. Read-only snapshots are often used in mission-critical environments because the application can continue to operate in read-write mode on the original data while backup operations are carried out on the read-only snapshot.

A, C, and D are all incorrect.

20. B. If 10GB of the data consists of a file with a single exact duplicate, then deleting the duplicate should free up 5GB of disk space (half of the 10GB).

A, C, and D are all incorrect.
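The reasoning in answer 20 generalizes to any set of files: keep one copy of each unique piece of content and count the rest as reclaimable. The sketch below assumes each file is described by a content identifier (for example, a hash) and a size in GB; the data and names are hypothetical.

```python
from collections import defaultdict


def reclaimable_gb(files):
    """Sum the sizes of all copies beyond the first for each content ID."""
    copies = defaultdict(list)
    for content_id, size_gb in files:
        copies[content_id].append(size_gb)
    # Keep the first copy of each item; every later copy is a duplicate.
    return sum(sum(sizes[1:]) for sizes in copies.values())


# A 5GB file plus one exact duplicate (10GB total), and one unrelated file.
files = [("report", 5), ("report", 5), ("other", 3)]
print(reclaimable_gb(files))
```

For the 5GB file and its single duplicate, the reclaimable space is 5GB, which is half of the 10GB they occupy together.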

LAB ANSWER

The design of an iSCSI SAN will vary widely and will likely include some of the designer’s preferences with regard to specific equipment. However, any design should incorporate the following elements and address these questions:

Network connectivity: an iSCSI SAN runs over Ethernet, so any design document should address how the system will be connected to the network. Gigabit Ethernet is the minimum, and the design should discuss the use of VLANs to separate and protect storage traffic as well as techniques such as NIC teaming to eliminate bottlenecks.

Capacity and storage: the design should also address the amount of storage to be provided, any redundancy/data protection techniques such as RAID 5/10, and the use of storage quotas (if any).

Security: most of the design should focus on how the SAN will be secured and protected. A complete design should mention limiting connections/protocols to the SAN, creation of different LUNs as needed to ensure data separation, use of VLANs, CHAP authentication, encryption of data at rest (if needed), changing of default passwords, limiting access to the SAN’s management interface/capabilities, and so on.