Chapter 2. Enterprise Layer 2 and Layer 3 Design

In network design, it is common that a certain design goal can be achieved “technically” using different approaches. Although, from a technical deployment point of view this can be seen as an advantage, from networks’ design perspective on the other hand, almost always one of the most challenging parts is, which design option should be selected? (To achieve a business driven design that takes into considerations technical and non-technical design requirements.) Practically, to achieve this, network designers must be aware of the different design options and protocols as well as the advantages and limitations of each. Therefore, this chapter will concentrate specifically on highlighting, analyzing, and comparing the various design options, principles, and considerations with regard to Layer 2 and Layer 3 control plane protocols from different design aspects, focusing on enterprise grade networks.

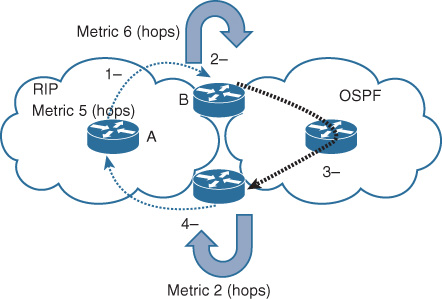

Enterprise Layer 2 LAN Design Considerations

To achieve a reliable and highly available Layer 2 network design, network designers need to have various technologies in place that protect the Layer 2 domain and facilities having redundant paths to ensure continuous connectivity in the event of a node or link failure. The following are the primary technologies that should be considered when building the design of Layer 2 networks:

![]() Layer 2 control protocols, such as Spanning Tree Protocol

Layer 2 control protocols, such as Spanning Tree Protocol

![]() VLANs and trunking

VLANs and trunking

![]() Link aggregation

Link aggregation

![]() Switch fabric (discussed in Chapter 8, “Data Center Networks Design”)

Switch fabric (discussed in Chapter 8, “Data Center Networks Design”)

Spanning Tree Protocol

As a Layer 2 network control protocol, the Spanning Tree Protocol (STP) is considered the most proven and commonly used control protocol in classical Layer 2 switched network environments, which include multiple redundant Layer 2 links that can generate loops. The basic function of STP is to prevent Layer 2 bridge loops by blocking the redundant L2 interface to a level that can provide a loop-free topology. There are multiple flavors or versions of STP. The following are the most commonly deployed versions:

![]() 802.1D: The traditional STP implementation

802.1D: The traditional STP implementation

![]() 802.1w: Rapid STP (RSTP) supports large-scale implementations with enhanced convergence time

802.1w: Rapid STP (RSTP) supports large-scale implementations with enhanced convergence time

![]() 802.1s: Multiple STP (MST) permits very large-scale STP implementations

802.1s: Multiple STP (MST) permits very large-scale STP implementations

In addition, there are some features and enhancements to STP that can optimize the operation and design of STP behavior in a classical Layer 2 environment. The following are the primary STP features:

![]() Loop Guard: Prevents the alternate or root port from being elected unless bridge protocol data units (BPDUs) are present

Loop Guard: Prevents the alternate or root port from being elected unless bridge protocol data units (BPDUs) are present

![]() Root Guard: Prevents external or downstream switches from becoming the root

Root Guard: Prevents external or downstream switches from becoming the root

![]() BPDU Guard: Disables a PortFast-enabled port if a BPDU is received

BPDU Guard: Disables a PortFast-enabled port if a BPDU is received

![]() BPDU Filter: Prevents sending or receiving BPDUs on PortFast-enabled ports

BPDU Filter: Prevents sending or receiving BPDUs on PortFast-enabled ports

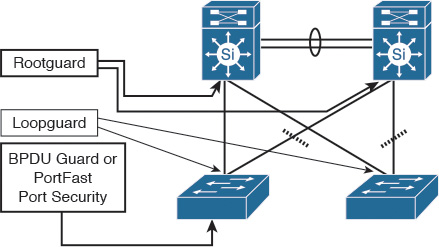

Figure 2-1 briefly highlights the most appropriate place where these features should to be applied in a Layer 2 STP-based environment.

Note

Cisco has developed enhanced versions of the STP. It has incorporated a number of these features into it using different versions of STP that provide faster convergence and increased scalability, such as Per-VLAN Spanning Tree Plus (PVST+) and Rapid PVST+.

VLANs and Trunking

A Layer 2 virtual local-area network (VLAN) is considered as a type of network virtualization technique that provides logical separation with broadcast domains and policy control implementation. In addition, VLANs offer a degree of fault isolation at Layer 2 that can contribute to the optimization of network performance, stability, and manageability. Trunking, however, refers to the protocols that enable the network to extend VLANs across Layer 2 uplinks between different nodes by providing the ability to carry multiple VLANs over a single physical link.

From a design best practices perspective, VLANs should not span multiple access switches; however, this is only a general recommendation. For example, some designs dictate that VLANs must span multiple access switches to meet certain application requirements. Consequently, understanding the different Layer 2 topologies and the impact of spanning VLANs across multiple switches is a key aspect for Layer 2 design. This aspect (applicability and its implications) is covered in more detail later in this chapter (in the section “Enterprise Layer 2 LAN Common Design Options”).

Link Aggregation

The concept of link aggregation refers to the industry standard IEEE 802.3ad, in which multiple physical links can be grouped together to form a single logical link. This concept offers a cost-effective solution by increasing cumulative bandwidth without requiring any hardware upgrades. The IEEE 802.3ad Link Aggregation Control Protocol (LACP) offers several other benefits, including the following:

![]() An industry standard protocol that enables interoperability of multivendor network devices

An industry standard protocol that enables interoperability of multivendor network devices

![]() The optimization of network performance in a cost-effective manner by increasing link capacity without changing any physical connections or requiring hardware upgrades

The optimization of network performance in a cost-effective manner by increasing link capacity without changing any physical connections or requiring hardware upgrades

![]() Eliminate single points of failure and enhance link-level reliability and resiliency

Eliminate single points of failure and enhance link-level reliability and resiliency

Although link aggregation is a simple and reasonable mechanism to increase bandwidth capacity between network nodes, each individual flow will be limited to the speed of the utilized member link by that flow, based on the load-balancing hashing algorithm used unless the flowlet concept is considered1.

1. “Dynamic Load Balancing Without Packet Reordering,” IETF Draft, chen-nvo3-load-banlancing, http://www.ietf.org

Note

In addition to LACP (the industry standard link aggregation control protocol), Cisco has developed a proprietary link aggregation protocol called Port Aggregation Protocol (PAgP). Both protocols have different operational modes, which the network designer must be aware.

There are two primary types of link aggregation connectivity models:

![]() Single-chassis link aggregation: The typical link aggregation type of connectivity that connects two network nodes in a point-to-point manner.

Single-chassis link aggregation: The typical link aggregation type of connectivity that connects two network nodes in a point-to-point manner.

![]() Multichassis link aggregation: Also referred to as mLAG, this type of link aggregation connectivity is most commonly used when the upstream switches (typically two) are deployed in “switch clustering” mode. This connectivity model offers a higher level of link and path resiliency than the single-chassis link aggregation.

Multichassis link aggregation: Also referred to as mLAG, this type of link aggregation connectivity is most commonly used when the upstream switches (typically two) are deployed in “switch clustering” mode. This connectivity model offers a higher level of link and path resiliency than the single-chassis link aggregation.

Figure 2-2 illustrates these two link aggregation connectivity models.

First Hop Redundancy Protocol and Spanning Tree

First-hop Layer 3 routing redundancy is designed to offer transparent failover capabilities at the first-hop Layer 3 IP gateways, where two or more Layer 3 devices work together in a group to represent one virtual Layer 3 gateway. Hot Standby Router Protocol (HSRP), Virtual Router Redundancy Protocol (VRRP), and Gateway Load Balancing Protocol (GLBP) are the primary and most commonly used protocols to provide a resilient default gateway service for endpoints and hosts.

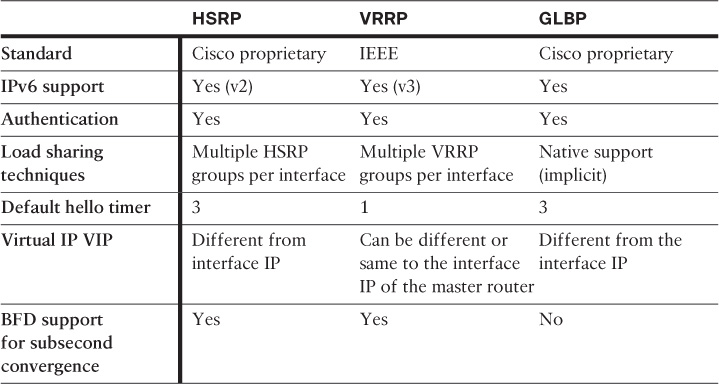

Table 2-1 summarizes and compares the main capabilities and functions of these different FHRP protocols.

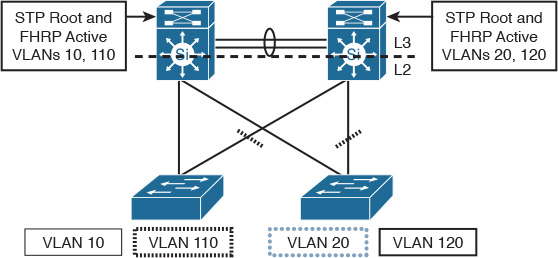

One of the typical scenarios in classical hierarchal networks is when FHRP works in conjunction with STP to provide a redundant Layer 3 gateway services. However, some Layer 2 design models require special attention in terms of VLANs design (extending Layer 2 VLANs across access switches or not) and the placement of the demarcation point between Layer 2 and Layer 3. (For example, if the interswitch link between the distribution layer switches is configured as Layer 2 or Layer 3 link.) The aforementioned factors can affect the overall reliability and convergence time of the Layer 2 LAN design. The following design model (depicted in Figure 2-3) is considered one of the common design models that has a proven ability to provide the most resilient design when FHRP is applied to an STP-based Layer 2 network (such as VRRP or HSRP). This design model has the following characteristics:

![]() The interswitch link between the distribution switches is configured as Layer 3 link.

The interswitch link between the distribution switches is configured as Layer 3 link.

![]() No VLAN spanning across switches.

No VLAN spanning across switches.

![]() The STP root bridge is aligned with active FHRP instance for each VLAN.

The STP root bridge is aligned with active FHRP instance for each VLAN.

![]() Uplinks from access to distribution are both forwarding from STP point of view.

Uplinks from access to distribution are both forwarding from STP point of view.

In the design illustrated in Figure 2-3, when GLBP is used as the FHRP, it is going to be less deterministic compared to HSRP or VRRP because the distribution of Address Resolution Protocol (ARP) responses is going to be random.

Enterprise Layer 2 LAN Common Design Options

Network designers have many design options for Layer 2 LANs. This section will help network designers by highlighting the primary and most common Layer 2 LAN design models used in traditional and today’s LANs, along with the strengths and weaknesses of each design model.

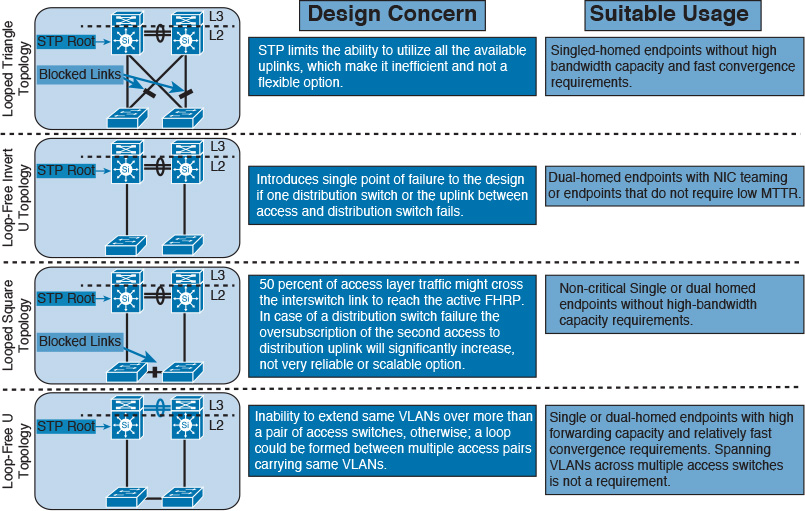

Layer 2 Design Models: STP Based (Classical Model)

In classical Layer 2 STP-based LAN networks, the connectivity from the access to the distribution layer switches can be designed in various ways and combined with Layer 2 control protocols and features discussed earlier to achieve certain design functional requirements. In general, there is no single best design that someone can suggest that can fit every requirement, because each design is proposed to resolve a certain issue or requirement. However, by understanding the strengths and weaknesses of each topology and design model (illustrated in Figure 2-4), network designers may then always select the most suitable design model that meets the requirements from different aspects, such as network convergence time, reliability, and flexibility. This section highlights the most common classical Layer 2 design models of LAN environments with STP, which can be applied to enterprise Layer 2 LAN designs.

Note

All the Layer 2 design models in Figure 2-4 share common limitations: the reliance on STP to avoid loss of connectivity caused by Layer 2 loops and the dependency on Layer 3 FHRP timers, such as VRRP to converge. These dependences naturally lead to an increased convergence time when a node or link fails. Therefore, as a rule of thumb, tuning and aligning STP and FHRP timers is a recommended practice to overcome these limitations to some extent.

Figure 2-4 summarizes some of the design concerns and lists suggested usage of each of the depicted design models in this figure [5].

Layer 2 Design Model: Switch Clustering Based (Virtual Switch)

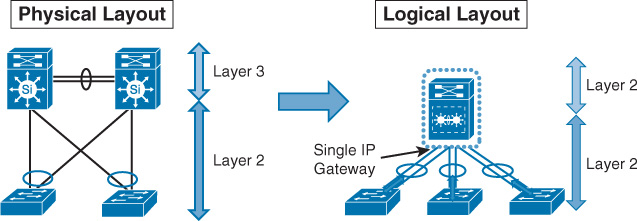

The concept of switch clustering significantly changed the Layer 2 design model between the access and distribution layer switches. With this design model, a pair of upstream distribution switches can appear as a one logical (virtual) switch from the access layer switch point of view. Consequently, this approach transformed the way access layer switches connect to the distribution layer switches, because there is no reliance on STP and FHRP anymore, which means the elimination of any convergence delays associated with STP and FHRP. In addition, from the uplinks and link aggregation perspective, one access switch can be connected (multihomed) to the two clustered distribution switches as one logical switch using one link aggregation bundle over multichassis link aggregation (mLAG), as illustrated in Figure 2-5.

As Figure 2-5 shows, all uplinks will be in forwarding state across both distribution switches from a Layer 2 point of view. There will be one virtual IP gateway that should permit the forwarding across both switches from the forwarding plane perspective. It is obvious that this design model can enhance network resiliency and convergence time, and maximize bandwidth capacity, by utilizing all uplinks. In addition, this design model supports the extension of the Layer 2 VLAN across access switches safely, without any concern about forming any Layer 2 loop. This makes the design model simple, reliable, easy to manage, and more scalable as compared to the classical STP-based design model.

Layer 2 Design Model: Daisy-Chained Access Switches

Although this design model might be a viable option to overcome some limitations, network designers commonly use it as an interim solution. This design can introduce undesirable network behaviors. For instance, the design shown in Figure 2-6 can introduce the following issues during a link or node failure:

![]() Dual active HSRP

Dual active HSRP

![]() Possibility of 50 percent loss of the returning traffic for devices that still use the distribution switch-1 as the active Layer 3 FHRP gateway

Possibility of 50 percent loss of the returning traffic for devices that still use the distribution switch-1 as the active Layer 3 FHRP gateway

When suggesting an alternative solution to overcome a given design issue or limitation, it is important to make sure that the suggested design option will not introduce new challenges or issues during certain failure scenarios. Otherwise, the newly introduced issues will outweigh the benefits of the suggested solution.

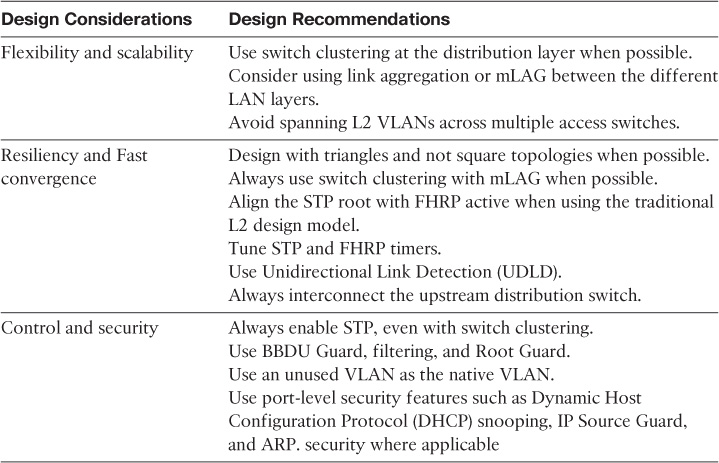

Layer 2 LAN Design Recommendations

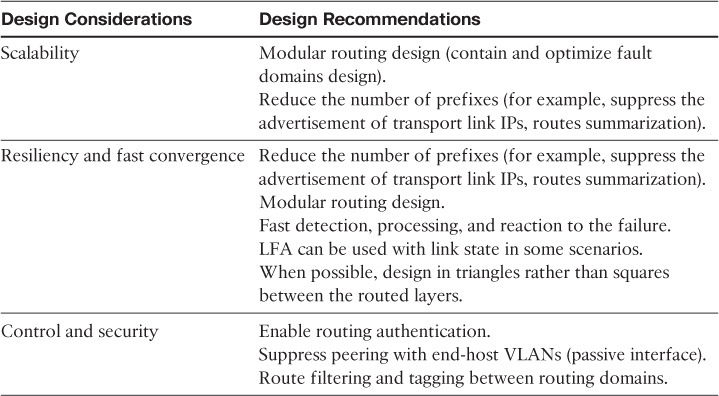

Table 2-2 summarizes the different Layer 2 LAN design considerations and the relevant design recommendations.

Enterprise Layer 3 Routing Design Considerations

This section covers the various routing design considerations and optimization concepts that pertain to enterprise-grade routed networks.

IP Routing and Forwarding Concept Review

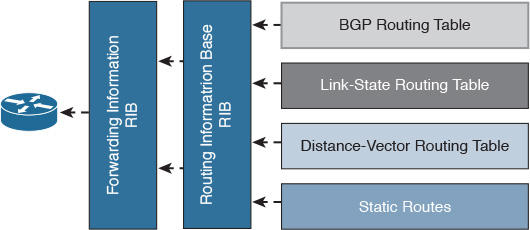

The main goal of routing protocols is to serve as a delivery mechanism to route packets to reach their intended destination. The end-to-end process of packets routing across the routed network is facilitated and driven by the concept of distributed databases. This concept is typically based on having a database of IP addresses (typically IPs of hosts and networks) on each Layer 3 node in the packet’s path, along with the next-hop IP addresses of the Layer 3 nodes that can be used to reach each of these IP addresses. This database is known as the Routing Information Database (RIB). In contrast, the Forwarding Information Base (FIB), also known as the forwarding table, contains the destination addresses and the interfaces required to reach those destinations, as depicted in Figure 2-7. In general, routing protocols are classified as either link-state, path-vector, or distance-vector protocols. This classification is based on how the mechanism of the routing protocol constructs and updates its routing table, and how it computes and selects the desired path to reach the intended IP destination.2

2. IETF draft: (Routing Information Base Info Model “draft-nitinb-i2rs-rib-info-model-02”)

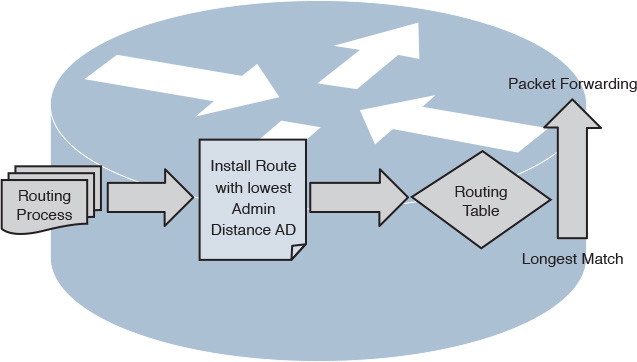

As illustrated in Figure 2-8, the typical basic forwarding decision in a router is based on three processes:

![]() Routing protocols

Routing protocols

![]() Routing table

Routing table

![]() Forwarding decision (switches packets)

Forwarding decision (switches packets)

Link-State Routing Protocol Design Considerations

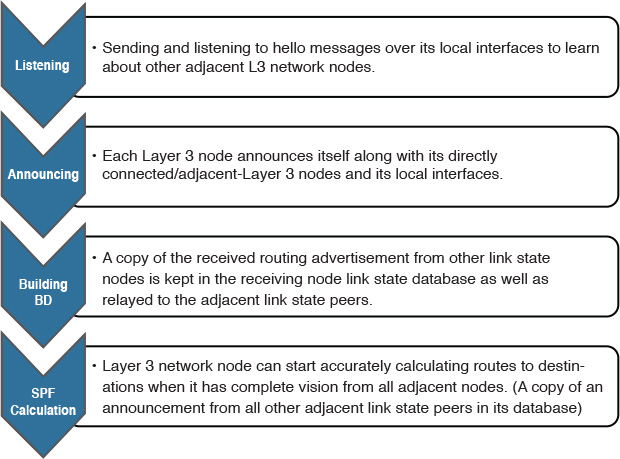

Open Shortest Path First (OSPF) and Intermediate System-to-Intermediate System (IS-IS) protocols as link-state routing protocols have a common conceptual characteristic in the way they build, interact, and handle L3 routing to some extent. Figure 2-9 illustrates the process of building and updating a link-state database (LSDB).

It is important to remember that although OSPF and IS-IS as link-state routing protocols are highly similar in the way they build the LSDB and operate, they are not identical! This section discusses the implications of applying link-state routing protocols (OSPF and IS-IS) on different network topologies, along with different design considerations and recommendations.

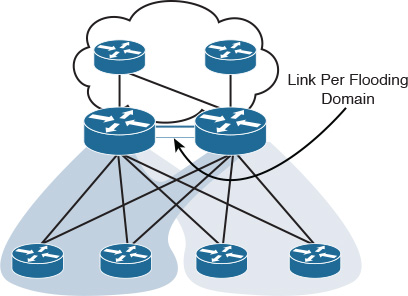

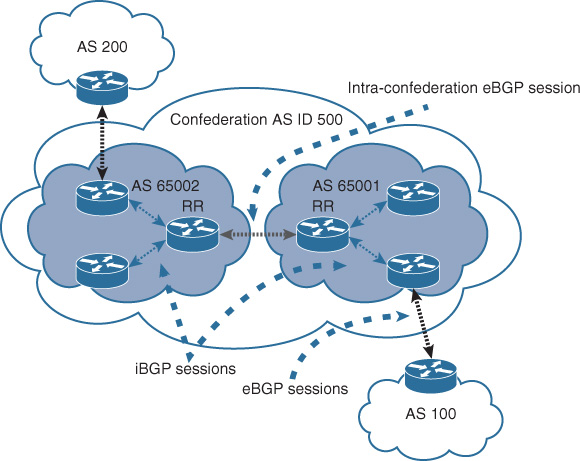

Link-State over Hub-and-Spoke Topology

In general, some implications should be considered when link-state routing protocols are applied on a hub-and-spoke topology, including the following:

![]() There is a concern with regard to scaling to a large number of spokes, because each spoke node typically will receive all other spoke nodes’ link-state information, because there is no effective means to control the distribution of routing information among these spokes.

There is a concern with regard to scaling to a large number of spokes, because each spoke node typically will receive all other spoke nodes’ link-state information, because there is no effective means to control the distribution of routing information among these spokes.

![]() Special consideration must be taken to avoid suboptimal routing, in which traffic can use remote sites (spokes) as a transit site to reach the hub or other spokes.

Special consideration must be taken to avoid suboptimal routing, in which traffic can use remote sites (spokes) as a transit site to reach the hub or other spokes.

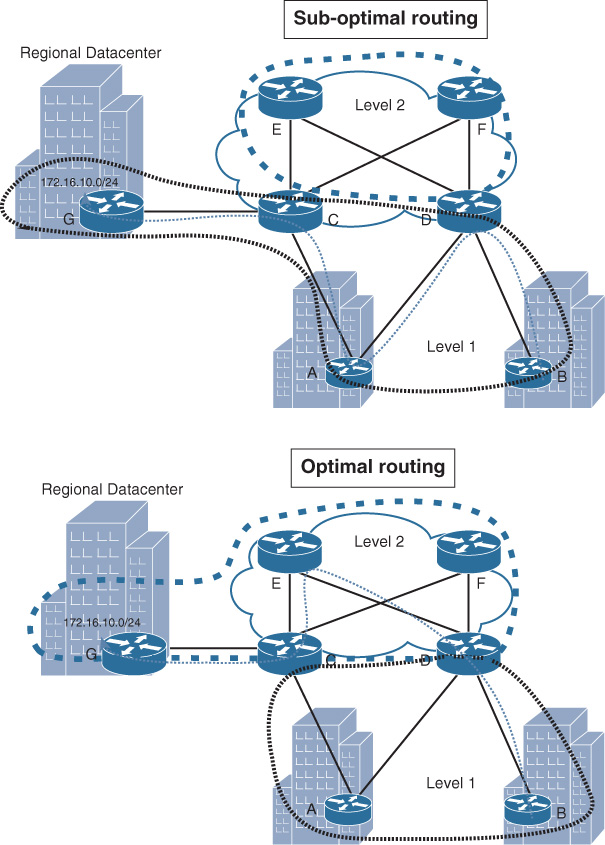

For instance, summarization of routing flooding domains in a multi-area/flooding domain design with multiple border routers requires specific routing information between the border routers (Area Border Routers [ABRs] in OSPF or L1/L2 in IS-IS) over a nonsummarized link, to avoid using spoke sites as a transit path, as illustrated in Figure 2-10 [13].

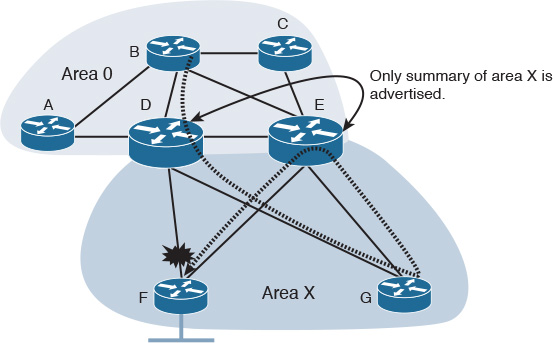

So, for each hub-and-spoke flooding domain to be added to the hub routers, you need to consider an additional link between the hub routers in that domain. This is a typical use case scenario to avoid suboptimal routing with link-state routing protocols. However, when the number of flooding domains (for example, OSPF areas) increases, the number of VLANs, subinterfaces, or physical interfaces between the border routers will grow as well, which will result in scalability and complexity concerns. One of the possible solutions is to have a single link with adjacencies in multiple areas. (RFC 5185) [13]. For instance, in the scenario illustrated in Figure 2-11, there is a hub-and-spoke topology that uses OSPF multi-area design.

If the link between router D and router F (part of OSPF area X) fails, any traffic from router B destined to the LAN connected to router F going toward the summary advertised route by router D will traverse across the more specific route over the path G, E, then F.

To optimize this design during this failure scenario, there are multiple possible solutions, and here network designers must decide which solution is the most suitable one with regard to other design requirements such as application requirements where delay could affect critical business applications:

![]() Place the inter-ABR link (D to E) in area X (simple and provide “north to south” optimal routing in this topology).

Place the inter-ABR link (D to E) in area X (simple and provide “north to south” optimal routing in this topology).

![]() Place each spoke in its own area with link-state advertisement (LSA) type 3 filtering. (May lead to complex operations and limited scalability; “depends on the network size.”)

Place each spoke in its own area with link-state advertisement (LSA) type 3 filtering. (May lead to complex operations and limited scalability; “depends on the network size.”)

![]() Disable route summarization at the ABRs; for example advertise more specific routes from ABR router E. (May not always be desirable because this means reduced scalability and the loss of some of the value of the OSPF multi-area design.)

Disable route summarization at the ABRs; for example advertise more specific routes from ABR router E. (May not always be desirable because this means reduced scalability and the loss of some of the value of the OSPF multi-area design.)

Note

The link between the two hub nodes (for example, ABRs) will introduce the potential of a single point of failure to the design. Therefore, link redundancy (availability) between the ABRs may need to be considered.

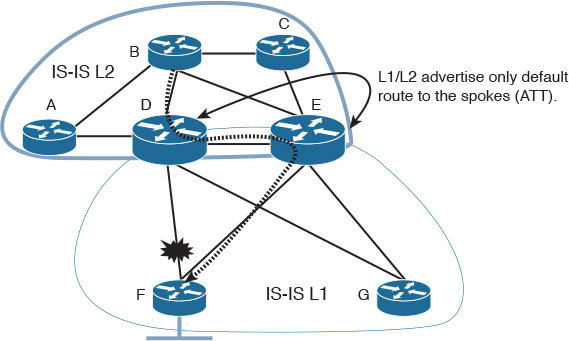

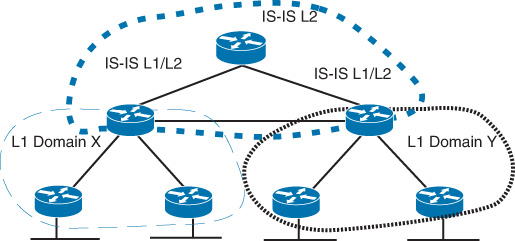

If IS-IS is applied to the topology in Figure 2-11 instead, using a similar setup where IS-IS L2 is to be used instead of the area 0 and IS-IS L1 is to be used by the spokes, the simplest way to optimize this architecture is to put the links between the border routers in IS-IS L1-L2 (overlapping levels capability), where we can extend L1 to overlap with L2 on the border router (ABR in OSPF), as illustrated in Figure 2-12. This will result in a topology that can support summarization with more optimal routing with regard to the failure scenario discussed above.

OSPF is a more widely deployed and proven link-state routing protocol in enterprise networks compared to IS-IS, especially with regard to hub-and-spoke topologies. IS-IS has limitations when it works on nonbroadcast multiple access (NBMA) multipoint networks.

OSPF Interface Network Type Considerations in a Hub-and-Spoke Topology

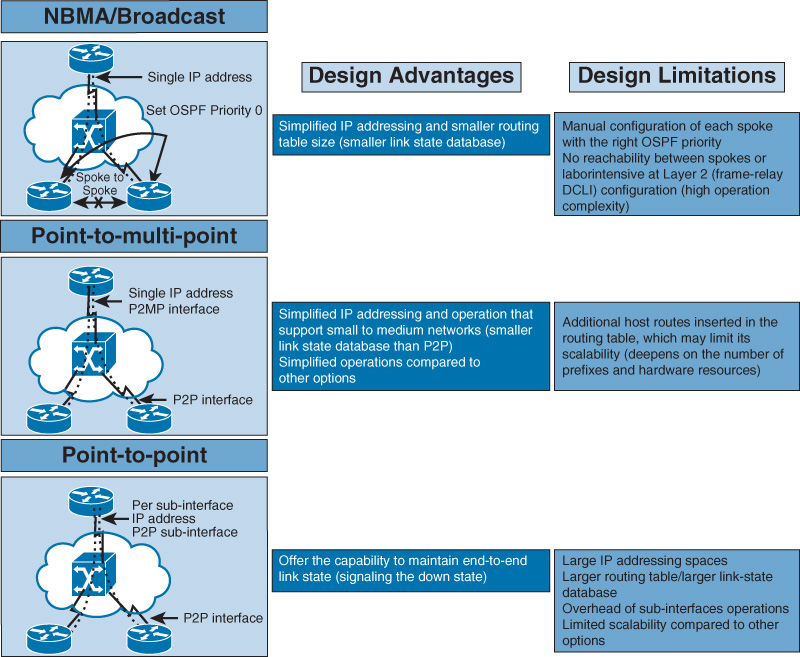

Figure 2-13 summarizes the different possible types of OSPF interfaces in a hub-and-spoke topology over NBMA transport (typically either Frame Relay or ATM), along with the associated design advantages and implications of each [13].

Link-State over Full-Mesh Topology

Fully meshed networks can offer a high level of redundancy and the shortest paths. However, the substantial amount of routing information flooding across a fully meshed network is a significant concern. This concern stems from the fact that each router will receive at least one copy of every new piece of information from each neighbor on the full mesh. For example, in Figure 2-14, each router has four adjacencies. When a router’s link connect to the LAN side fails, it must flood its LSA/LSP to each of the four neighbors. Each neighbor will then flood this LSA/LSP (link-state package) again to its neighbors. This process will culminate in a process like a broadcast being sent, due to this full-mesh connectivity and reflooding [13].

With link-state routing protocols, you can use the mesh group technique to reduce link-state information flooding in a full-meshed topology [23]. However, with link-state routing protocols in failure scenarios over a meshed topology, some routers may know about the failure before others within the mesh. This will typically lead to a temporarily inconsistent LSDB across the nodes within the network, which can result in transient forwarding loops. Even though the concept of a loop-free alternate (LFA) route can be considered to overcome situations like this, using LFA over a mesh topology will add complexity to the control plane.

Note

Later in this chapter, in the section “Hiding Topology and Reachability Information,” more details are provided about flooding domain and route summarization design considerations for link-state routing protocols, which can reduce the level of control plane complexity and optimize link-state information flooding and performance.

Note

Other mechanisms help to optimize and reduce link-state LSA/LSP flooding by reducing the transmission of subsequent LSAs/LSPs, such as OSPF flood reduction (described in RFC 4136). This is done by eliminating the periodic refresh of unchanged LSAs, which can be useful in fully meshed topologies

OSPF Area Types

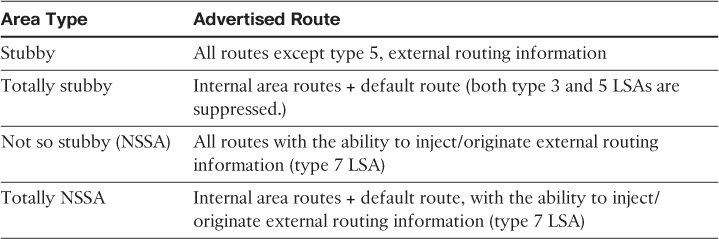

Table 2-3 contains a summarized review of the different types of OSPF areas [21, 22].

Each of the OSPF areas allows certain types of LSAs to be flooded, which can be used to optimize and control route propagation across OSPF routed domain. However, if OSPF areas are not properly designed and aligned with other requirements, such as application requirements, it can lead to serious issues because of the traffic black-holing and suboptimal routing that can appear as a result to this type of design. Subsequent sections in this book discuss these points in more detail.

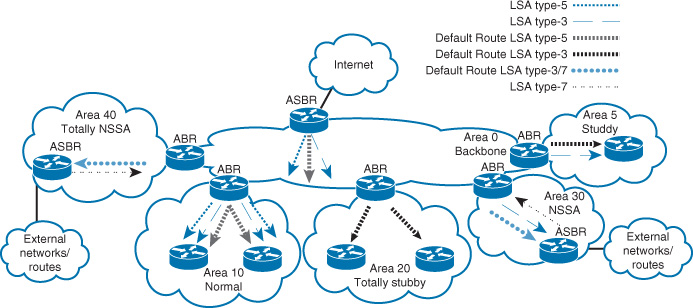

Figure 2-15 shows a conceptual high-level view of the route propagation, along with the different OSPF LSAs, in an OSPF multi-area design with different area types.

The typical design question is this: Where can these areas be used and why?

The basic standard answer is this: It depends on the requirements and topology.

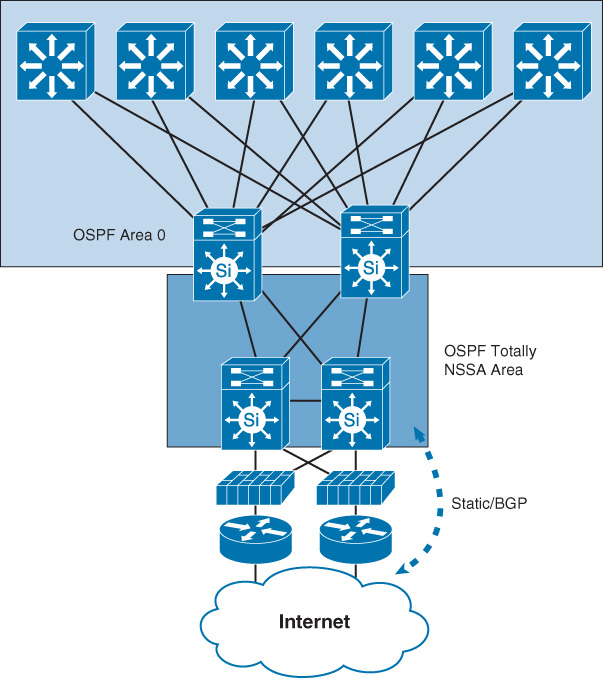

For instance, if no requirement specifies which path a route must take to reach external networks such as an extranet or the Internet, you can use the “totally NSSA” area type to simplify the design. For example, the scenario in Figure 2-16 is one of the most common design models that use OSPF NSSA. In this design model, the border area that interconnects the campus or data center network with the WAN or Internet edge devices can be deployed as totally NSSA. This deployment assumes that no requirement dictates which path should be used [15]. Furthermore, in the case of NSSA and multiple ABRs, OSPF selects one ABR to perform the translation from LSA type 7 to LSA type 5 and flood it into area 0 (normally the router with the highest router ID, as described in RFC 1587). This behavior can affect the design if the optimal path is required.

Note

RFC 3101 introduced the ability to have multiple ABRs perform the translation from LSA type 7 to type 5. However, the extra unnecessary number of LSA type 7 to type 5 translators may significantly increase the size of the OSPF LSDB. This can affect the overall OSPF performance and convergence time in large-scale networks with a large number of prefixes [RFC 3101].

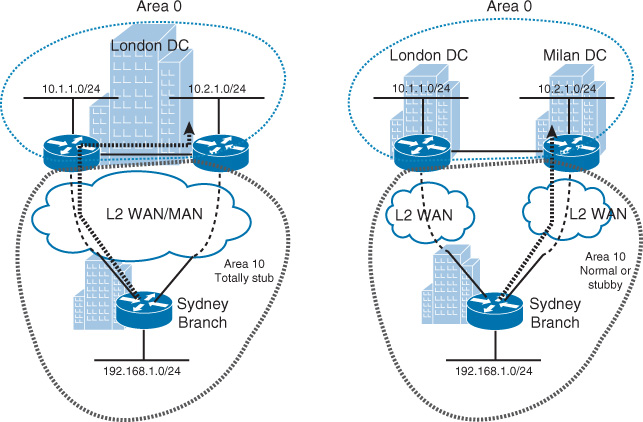

Similarly, in the scenario depicted on the left in Figure 2-17, a data center in London hosts two networks (10.1.1.0/24 and 10.2.1.0/24). Both WAN/MAN links to this data center have the same bandwidth and cost. Based on this setup, the traffic coming from the Sydney branch toward network 10.2.1.0/24 can take any path. If this is not compromising any requirement (in other words, suboptimal routing is not an issue), the OSPF area 10 can be deployed as a “totally stubby area” to enhance the performance and stability of remote site routers.

In contrast, the scenario on the right side of Figure 2-17 has a slightly different setup. The data centers are located in different geographic locations with a data center interconnect (DCI) link. In a scenario like this, the optimal path to reach the destination network can be critical, and using a totally stubby area can break the optimal path requirement. To overcome this limitation, there are two simple alternatives to use: either “normal OSPF area” or the “stubby area” for area 10. This ensures that the most specific route (LSA type 3) is propagated to the Sydney branch router to select the direct optimal path rather than crossing the international DCI [13].

In a nutshell, the goal of these types of different OSPF areas is to add more optimization to the OSPF multi-area design by reducing the size of the routing table and lowering the overall control plane complexity by reducing the size of the fault domains (link-state flooding domains). This size reduction can help to reduce overhead of the routers’ resources, such as CPU and memory. Furthermore, the reduction of the flooding domains’ size will help accelerate the overall network recovery time in the event of a link or node failure. However, in some scenarios where an optimal path is important, take care when choosing between these various area types.

In the scenarios illustrated in Figure 2-16 and Figure 2-17, asymmetrical routing is a possibility, which may be an issue if there are any stateful or stateless network devices in the path such as a firewall. However, this section focuses only on the concept of area design. Later in this book, you will learn how to manage asymmetrical routing at the network edge.

OSPF Versus IS-IS

It is obvious that OSPF and IS-IS as link-state routing protocols are similar and can achieve (to a large extent) the same result for enterprises in terms of design, performance, and limitations. However, OSPF is more commonly used by enterprises as the interior gateway protocol (IGP), for the following reasons:

![]() OSPF can offer a more structured and organized routing design for modular enterprise networks.

OSPF can offer a more structured and organized routing design for modular enterprise networks.

![]() OSPF is more flexible over hub-and-spoke topology with multipoint interfaces at the hub.

OSPF is more flexible over hub-and-spoke topology with multipoint interfaces at the hub.

![]() OSPF naturally runs over IP, which makes it a suitable option to be used over IP tunneling protocols such as generic routing encapsulation (GRE) and dynamic multipoint virtual private network (DMVPN), whereas with IS-IS, this is not a supported design.

OSPF naturally runs over IP, which makes it a suitable option to be used over IP tunneling protocols such as generic routing encapsulation (GRE) and dynamic multipoint virtual private network (DMVPN), whereas with IS-IS, this is not a supported design.

![]() In terms of staff knowledge and experience, OSPF is more widely deployed on enterprise-grade networks. Therefore, compared to IS-IS, more people have knowledge and expertise.

In terms of staff knowledge and experience, OSPF is more widely deployed on enterprise-grade networks. Therefore, compared to IS-IS, more people have knowledge and expertise.

However, if there is no technical barrier, both OSPF and IS-IS are valid options to consider.

Note

Some Cisco platforms and software versions do support IS-IS over GRE.3

3. “Cisco IOS XR Routing Configuration Guide for the Cisco CRS Router, Release 4.2.x,” http://www.cisco.com

Further Reading

OSPF Version 2, RFC 1247: http://www.ietf.org

OSPF for IPv6, RFC 2740: http://www.ietf.org

Domain-Wide Prefix Distribution with Two-Level IS-IS, RFC 5302: http://www.ietf.org

“OSPF Design Guide”: http://www.cisco.com

“How Does OSPF Generate Default Routes?”: http://www.cisco.com

“What Are OSPF Areas and Virtual Links?”: http://www.cisco.com

“OSPF Not So Stubby Area Type 7 to Type 5 Link-State Advertisement Conversion”: http://www.cisco.com

“IS-IS Network Types and Frame Relay Interfaces”: http://www.cisco.com

EIGRP Design Considerations

Enhanced Interior Gateway Routing Protocol (EIGRP) is an enhanced distance-vector protocol, relying on the Diffusing Update Algorithm (DUAL) to calculate the shortest path to a network. EIGRP, as a unique Cisco innovation, became highly valued for its ease of deployment, flexibility, and fast convergence. For these reasons, EIGRP is commonly considered by many large enterprises as the preferred IGP. EIGRP maintains all the advantages of distance-vector protocols while avoiding the concurrent disadvantages [16]. For instance, EIGRP does not transmit the entire routing information that exists in the routing table following an update event; instead, only the “delta” of the routing information will be transmitted since the last topology update. EIGRP is deployed in many enterprises as the routing protocol, for the following reasons:

![]() Easy to design, deploy, and support

Easy to design, deploy, and support

![]() Easier to learn

Easier to learn

![]() Flexible design options

Flexible design options

![]() Lower operational complexities

Lower operational complexities

![]() Fast convergence (subsecond)

Fast convergence (subsecond)

![]() Can be simple for small networks while at the same time scalable for large networks

Can be simple for small networks while at the same time scalable for large networks

![]() Supports flexible and scalable multi-tire campus and hub-and-spoke WAN design models

Supports flexible and scalable multi-tire campus and hub-and-spoke WAN design models

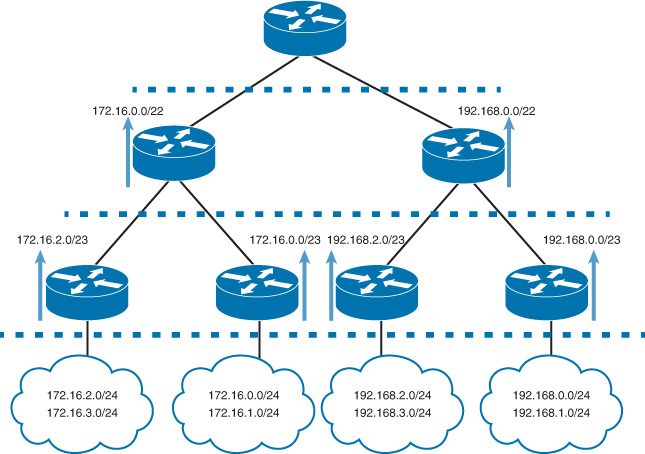

Unlike link-state routing protocols, such as OSPF, EIGRP has no hard edges. This is a key design advantage because hierarchy in EIGRP is created through routes summarization or routes filtering rather than relying on a protocol-defined boundary, such as OSPF areas. As illustrated in Figure 2-18, the depth of hierarchy depends on where the summarization or filtering boundary is applied. This makes EIGRP flexible in networks structured as a multitier architecture [19].

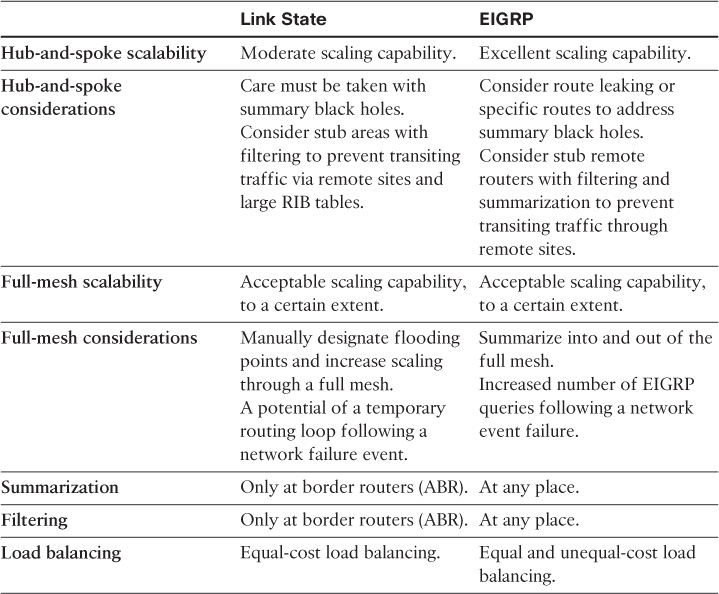

EIGRP: Hub and Spoke

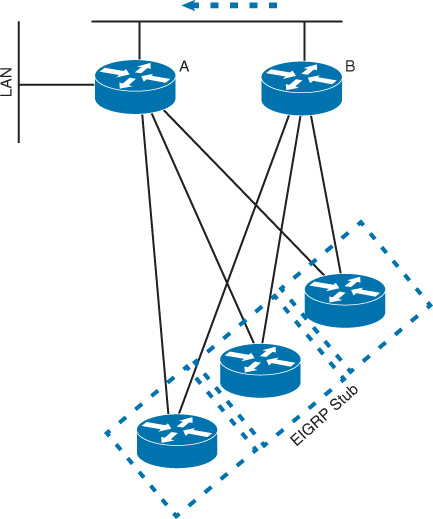

As discussed earlier, link-state routing protocols have some scaling limitations when applied on a hub-and-spoke topology. In contrast, EIGRP offers more flexible and scalable capabilities for the hub-and-spoke types of topologies. One of the main concerns in a hub-and-spoke topology is the possibility of a spoke or remote site being used as a transit path due to a configuration error or a link failure. With link-state routing protocols, several techniques to mitigate this type of issue were highlighted. However, there are still scalability limitations associated with it.

However, EIGRP offers the capability to mark the remote site (spoke) as a stub, which is unlike the OSPF stub (where all routers in the same stub area can exchange routes and propagate failure and update information). With EIGRP, when the spokes are configured as a stub, it will signal to the hub router that the paths through the spokes should not be used as transit paths. As a result, there will be significant optimization to the design. This optimization results from the decrease in EIGRP query scope and the reduction of the unnecessary overhead associated with responding to queries by the spoke routers (for example, EIGRP stuck-in-active [SIA] queries) [19].

In Figure 2-19, router B will see it has only one path to the LAN connected to router A, rather than four paths.

Consequently, enabling EIGRP Stub over a “hub-and-spoke” topology helps to reduce the overall control plane complexity as well as increases the scalability of the design to support large number of spokes without affecting its performance.

Note

With EIGRP, you can control what a stub router can advertise, such as directly connected links or redistributed static route. Therefore, network operators have more flexibility to control what is announced by the “stub” remote sites.

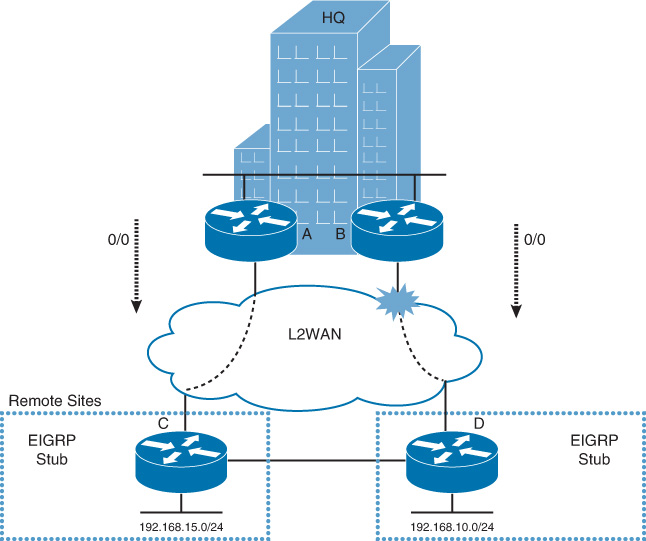

EIGRP Stub Route Leaking: Hub-and-Spoke Topology

You might encounter some scenarios like the one depicted in Figure 2-20, which is an extension to the EGRP stub design with a backdoor link between two remote sites. In this scenario, the HQ site is connected to the two remote sites over an L2 WAN. These remote sites are also interconnected directly via a backdoor link. Remote sites are configured as EIGRP stubs to optimize the remote sites’ EIGRP performance over the WAN.

The issue with the design in this scenario is that if the link between router B and router D fails, the following will result as a consequence of this single failure:

![]() Router A cannot reach network 192.168.10.0/24 because router D is configured as a stub. Also, router C is a stub, which will not advertise this network to router A anyway.

Router A cannot reach network 192.168.10.0/24 because router D is configured as a stub. Also, router C is a stub, which will not advertise this network to router A anyway.

![]() Router D will not be able to receive the default from router A because router C is a stub as well.

Router D will not be able to receive the default from router A because router C is a stub as well.

This means that the remote site connected to router D will be completely isolated, without taking any advantage of the backdoor link. To overcome this issue, EIGRP offers a useful feature called stub leaking, where both routers D and C in this scenario can advertise routes to each other selectively, even if they are configured as a stub. Route filtering might need to be incorporated in scenarios like this, when an EIGRP leak map is introduced into the design to avoid any potential suboptimal routing that might happen as a consequence of routes leaking.

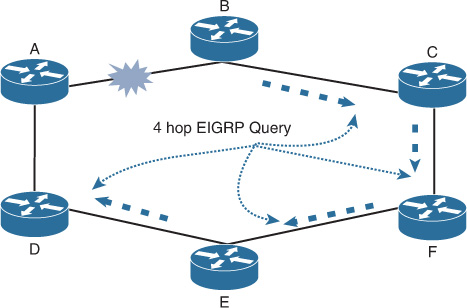

EIGRP: Ring Topology

Unlike link-state routing protocols, EIGRP has limitations with a ring topology. As depicted in Figure 2-21, the greater the number of nodes in the ring, the greater the number of queries to be sent during a link failure. As a general recommendation with EIGRP, always try to design in triangles where possible, rather than rings [20].

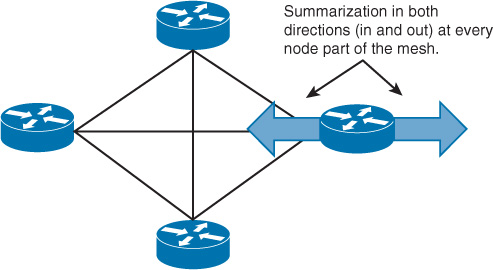

EIGRP: Full-Mesh Topology

EIGRP in a full-mesh topology (see Figure 2-22) is less desirable in comparison with link-state protocols. For example, with link-state protocols such as OSPF, network designers can designate one router to flood into the mesh and block flooding on the other routers, which can improve the topology. In contrast, with EIGRP, this capability is not available. The only way to mitigate the information flooding in an EIGRP mesh topology is by relying on route summarization and filtering techniques [19]. To optimize EIGRP in a mesh topology, the summarization must be into and out of the meshed network.

As discussed earlier, link state can lead to transient forwarding loops in ring and mesh topologies after a network component failure event. Therefore, both EIGRP and link state have limitations on these topologies, with different indications (fast and large number of EIGRP queries versus link-state transient loop).

EIGRP Route Propagation Considerations

EIGRP offers a high level of flexibility to network designers, which can fit different types of designs and topologies. However, like any other protocol, some limitations apply (especially with regard to route propagation) and may influence the design choices. Therefore, network designers must consider the following factors to avoid impacting the propagation of routing information, which can result in instable design:

![]() EIGRP bandwidth: By default, EIGRP is designed to use up to 50 percent of the main interface bandwidth for EIGRP packets; however, this value is configurable. The limitation with this concept occurs when there is a dialer or point-to-multipoint physical interface with several peers over one multipoint interface. In this scenario, EIGRP considers the bandwidth value on the main interface divided by the number of EIGRP peers on that interface to calculate the amount of bandwidth per peer. Consequently, when more peers are added over this multipoint interface, EIGRP will reach a point where it will not have enough bandwidth to operate over that dialer or multipoint interface appropriately. In addition, one of the common mistakes with regard to EIGRP and interface bandwidth is that sometimes network operators try to “influence” a best path selection decision in EIGRP DUAL by only tuning the bandwidth over an interface where the interface with the lowest bandwidth will be the least preferred. However, this approach can impact the EIGRP control plane peering functionality and scalability if it is tuned to a low value without proper planning.

EIGRP bandwidth: By default, EIGRP is designed to use up to 50 percent of the main interface bandwidth for EIGRP packets; however, this value is configurable. The limitation with this concept occurs when there is a dialer or point-to-multipoint physical interface with several peers over one multipoint interface. In this scenario, EIGRP considers the bandwidth value on the main interface divided by the number of EIGRP peers on that interface to calculate the amount of bandwidth per peer. Consequently, when more peers are added over this multipoint interface, EIGRP will reach a point where it will not have enough bandwidth to operate over that dialer or multipoint interface appropriately. In addition, one of the common mistakes with regard to EIGRP and interface bandwidth is that sometimes network operators try to “influence” a best path selection decision in EIGRP DUAL by only tuning the bandwidth over an interface where the interface with the lowest bandwidth will be the least preferred. However, this approach can impact the EIGRP control plane peering functionality and scalability if it is tuned to a low value without proper planning.

Therefore, the network designer must take this point into consideration and adopt alternatives, such as point-to-point subinterfaces under the multipoint interface. In addition, with overlay multipoint tunnel interfaces such as DMVPN the bandwidth may be required to be defined manually at the tunnel interface when there is a large number of remote spokes.

![]() Zero successor routes: When EIGRP tries to install routes in the RIB table and it is rejected, this is called zero successor routes because this route simply will not be propagated to other EIGRP neighbors in the network. This behavior typically happens due to one of the following two primary reasons:

Zero successor routes: When EIGRP tries to install routes in the RIB table and it is rejected, this is called zero successor routes because this route simply will not be propagated to other EIGRP neighbors in the network. This behavior typically happens due to one of the following two primary reasons:

![]() There is already the same route in the RIB table with a better administrative distance (AD).

There is already the same route in the RIB table with a better administrative distance (AD).

![]() When there are multiple EIGRP autonomous systems (AS) defined on the same router, the router will typically install any given route learned via both EIGRP autonomous systems with the same AD from one EIGRP AS, while the other will be rejected. Consequently, the route of the other EIGRP AS will not be propagated within its domain.

When there are multiple EIGRP autonomous systems (AS) defined on the same router, the router will typically install any given route learned via both EIGRP autonomous systems with the same AD from one EIGRP AS, while the other will be rejected. Consequently, the route of the other EIGRP AS will not be propagated within its domain.

Further Reading

savage-eigrp-xx, IETF draft, http://www.ietf.org

“Introduction to EIGRP,” http://www.cisco.com

“Configuration Notes for the Implementation of EIGRP over Frame Relay and Low Speed Links,” http://www.cisco.com

“What Does the EIGRP DUAL-3-SIA Error Message Mean?” http://www.cisco.com

Hiding Topology and Reachability Information Design Considerations

Technically, both topology and reachability information hiding can help to improve routing convergence time during a link or node failure. Topology and reachability information hiding also reduces control plane complexity and enhances network stability to a large extent. For example, if there is a link flapping in a remote site, this might cause all other remote sites to receive and process the update information every time this link flaps, which leads to instability and increased CPU processing.

However, to produce a successful design, the design must first align with the business goals and requirements (and not just be based on the technical drivers). Therefore, before deciding how to structure IGP flooding domains, network architects or designers must first identify the business’s goals, priorities, and drivers. Consider, for example, an organization that plans to merge with one of its business partners but with no budget allocated to upgrade any of the existing network nodes. When these two networks merge, the size of the network may increase significantly in a short period of time. As a result, the number of prefixes and network topology information will increase significantly, which will require more hardware resources such as memory or CPU.

Given that this business has no budget allocated for any network upgrade, in this case introducing topology and reachability information hiding to this network can optimize the overall network performance, stability, and convergence time. This will ultimately enable the business to meet its goal without adding any additional cost. In other words, the restructuring of IGP flooding domain design in this particular scenario is a strategic business-enabler solution.

However, in some situations, hiding topology and reachability information may lead to undesirable behaviors, such as suboptimal routing. Therefore, network designers must identify and measure the benefits and consequences by following the top-down approach. The following are some of the common questions that need to be thought about during the planning phase of the IGP flooding domain design:

![]() What are the business goals, priorities, and directions?

What are the business goals, priorities, and directions?

![]() How many Layer 3 nodes are in the network?

How many Layer 3 nodes are in the network?

![]() What is the number of prefixes?

What is the number of prefixes?

![]() Are there any hardware limitations (memory, CPU)?

Are there any hardware limitations (memory, CPU)?

![]() Is optimal routing a requirement?

Is optimal routing a requirement?

![]() Is low convergence time required?

Is low convergence time required?

![]() What IGP is used, and what is the used underlying topology?

What IGP is used, and what is the used underlying topology?

Furthermore, it is important that network designers understand how each protocol interacts with topology information and how each calculates its path, so as to be able to identify design limitations and provide valid optimization recommendations.

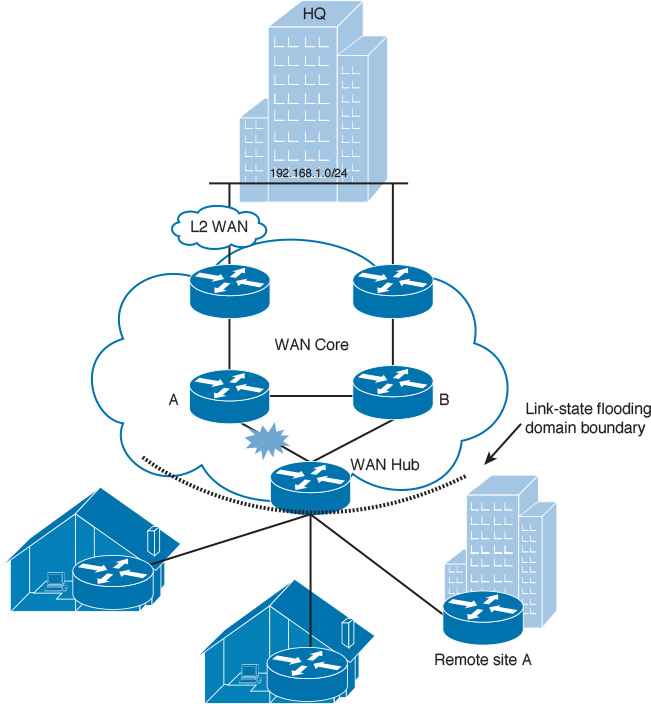

Link-state routing protocols take the full topology of the link-state routed network into account when calculating a path [18]. For instance, in the network illustrated in Figure 2-23, the router of remote site A can reach the HQ network (192.168.1.0/24) through the WAN hub router. Normally, if the link between the WAN hub router and router A in the WAN core fails, remote site A will be notified about this topology change “in a flat link-state design.” In fact, in any case, the remote site A router will continue to route its traffic via the WAN hub router to reach the HQ LAN 192.168.1.0/24.

In other words, in this scenario, the link failure notifications between the WAN hub router and the remote site routers are considered as unnecessary extra processing for the remote site routers. This extra processing could lead to other limitations in large networks with a large number of prefixes and nodes, such as network and CPU spikes. In addition, the increased size of the LSDB will impact routing calculation and router memory consumption [8]. Therefore, by introducing the principle of “topology hiding boundary” at the WAN hub router (for example, by using OSPF multi-area design), the overall routing design will be optimized (different fault domains) in terms of performance and stability.

A path-vector routing protocol (Border Gateway Protocol [BGP]) can achieve topology hiding by simply using either route summarization or filtering, and distance-vector protocols, by nature, do not propagate topology information. Moreover, with route summarization, network designers can achieve “reachability information hiding” for all the different routing protocols [19].

Note

Link state can offer built-in information hiding capabilities (route suppression) by using different type of flooding domains, such as L1/L2 in IS-IS and stubby types of areas in OSPF.

The subsequent sections examine where and why to break a routed network into multiple logical domains. You will also learn summarization techniques and some of the associated implications that you need to consider.

Note

Although route filtering can be considered as an option for hiding reachability information, it is often somewhat complicated with link-state protocols.

IGP Flooding Domains Design Considerations

As discussed earlier, modularity can add significant benefit to the overall network architecture. By applying this concept to the design of logical routing architectural domains, we can have a more manageable, scalable, and flexible design. To achieve this, we need to break a flat routing design into one that is more hierarchical and has modularity in its overall architecture. In this scenario, we may have to ask the following questions: How many layers should we consider in our design? How many modules or domains is good practice?

The simple answer to these questions depends on several factors, including the following:

![]() Design goal (simplicity versus scalability versus stability)

Design goal (simplicity versus scalability versus stability)

![]() Network topology

Network topology

![]() Network size (nodes, routes)

Network size (nodes, routes)

![]() Routing protocol

Routing protocol

![]() Network type (for example, enterprise versus service provider)

Network type (for example, enterprise versus service provider)

The following sections covers the various design considerations for IGP flooding domains, starting with a review of the structure of link-state and EIGRP domains.

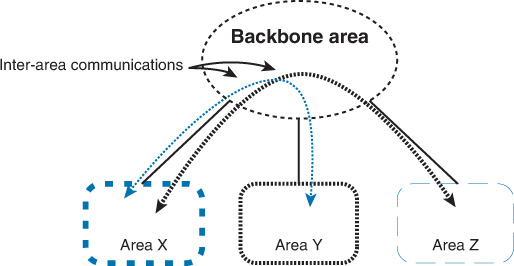

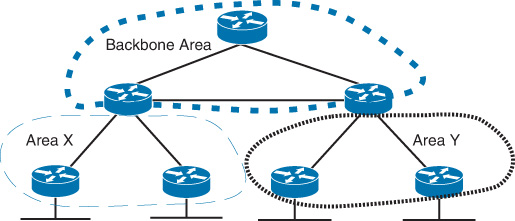

Link-State Flooding Domain Structure

Both OSPF and IS-IS as link-state routing protocols can divide the network into multiple flooding domains, as discussed earlier in this book. Dividing a network into multiple flooding domains, however, requires an understanding of the principles each protocol uses to build and maintain communication between the different flooding domains. In a multiple flooding domain design with OSPF, a backbone area is required to maintain end-to-end communication between all other areas (regardless of its type). In other words, area 0 in OSFP is like the glue that interconnects all other areas within an OSPF domain [22]. In fact, nonbackbone OSPF areas and area 0 (backbone area) interconnect and communicate in a hub-and -spoke fashion, as illustrated in Figure 2-24.

Similarly, with IS-IS, its levels chain (IS-IS flooding domains) must not be disjointed (L2 to L1/L2 to L1 and vice versa) for IS-IS to maintain end-to-end communications, where the level 2 can be seen as analogous to area 0 in OSPF.

The natural communication behavior of link-state protocols across multiple flooding domains requires at least one router to be dually connected to the core flooding domain (backbone area) and the other area or areas, where an LSDB for each area is stored along with separate shortest path first (SPF) calculations for each area. Moreover, the characteristic of the communication between link-state flooding domains (between border routers) is like a distance-vector protocol. In OSPF terminology, this router is called the Area Border Router (ABR). In IS-IS, the L1/L2 router is analogous to the OSPF ABR.

In general, OSPF and IS-IS are two-layer hierarchy protocols; however, this does not mean that they cannot operate well in networks with more hierarchies (as discussed later in this section).

In addition, although both OSPF and IS-IS are suitable for two-layer hierarchy network architecture, there are some differences in the way that their logical layout (flooding domains such as areas, levels) can be designed. For example, OSPF has a hard edge at the flooding domain borders. Typically, this is where routing policies are applied, such as route summarization and filtering, as shown in Figure 2-25.

By contrast, IS-IS routing information of the different levels (L1 and L2) is (technically) carried over different packets. This helps IS-IS have a softer edge at its flooding domain borders. This makes it more flexible than OSPF, because the L2 routing domain can overlap with the L1 domains, as shown in Figure 2-26.

Consequently, IS-IS can perform better when optimal routing is required with multiple border routers, whereas OSPF requires special consideration with regards to the inter-ABR links (for example, which area to be part of, or in which direction is optimal routing more important).

Recommendation: With both OSPF and IS-IS, the design must always reflect that the backbone cannot be partitioned in case of a link or node failure. Although an OSPF virtual link can help to fix partitioned backbone area issues, it is not a recommended approach. Instead, redesign of the logical or physical architecture is highly desirable in this case. Nevertheless, an OSPF virtual link may be used as an interim solution (see the following example).

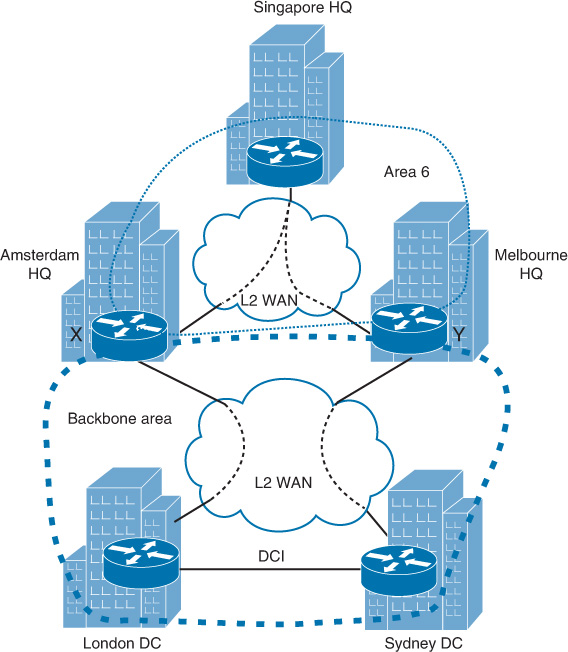

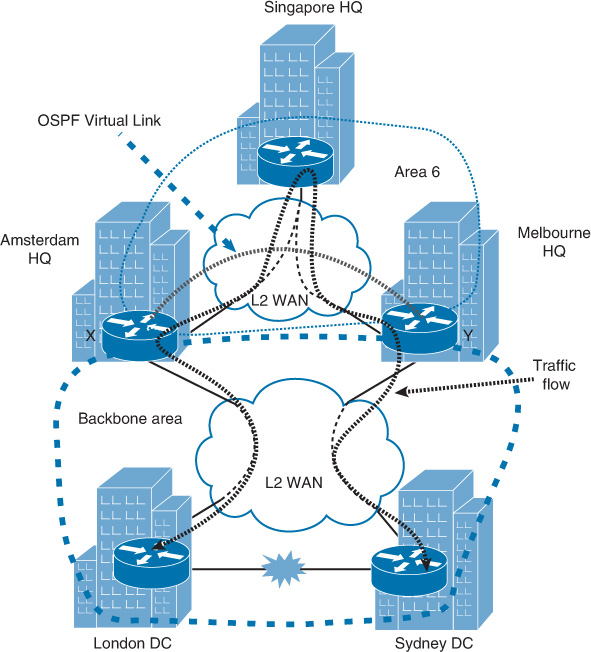

The scenario shown in Figure 2-27 illustrates poorly designed OSPF areas. It is considered a poor design because the OSPF backbone area has the potential to be partitioned if the direct interconnect link between the regional data centers (London and Sydney) fails. This will result in communication isolation between the London and Sydney data centers. However, let’s assume that this organization needs to use its regional HQs (Melbourne, Amsterdam, and Singapore), which are interconnected in a hub-and-spoke fashion, as a backup transit path when the link between the London and Sydney sites is down.

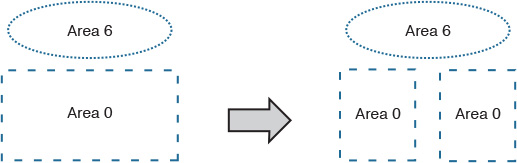

Based on the current OSPF area design, a nonbackbone area (area 6) cannot be used as a transit area. Figure 2-28 illustrates the logical view of OSPF areas before and after the failure event on the data center interconnect between London and Sydney data centers, which leads to a disjoint area 0 situation [22].

The ideal fix to this issue is to add redundant links from the London data center to WAN backbone router Y and/or from the Sydney data center to WAN backbone router X or to add a link between WAN backbone routers X and Y in area 0.

However, let’s assume that the provisioning of the links takes a while and this organization requires a quick fix to this issue. As shown in Figure 2-29, if you deploy an OSPF virtual link between WAN backbone routers X and Y in Amsterdam and Melbourne, respectively (across the hub site in Singapore), OSPF will consider this link as a point-to-point link. Both WAN backbone routers (ABRs) X and Y will form a virtual adjacency across this virtual link. As a result, this path can be used as an alternate path to maintain the communication between London and Sydney data centers when the direct link between them is down.

Note

The solution presented in this scenario is based on the assumption that traffic flowing over multiple international links is acceptable from the perspective of business and application requirements.

Note

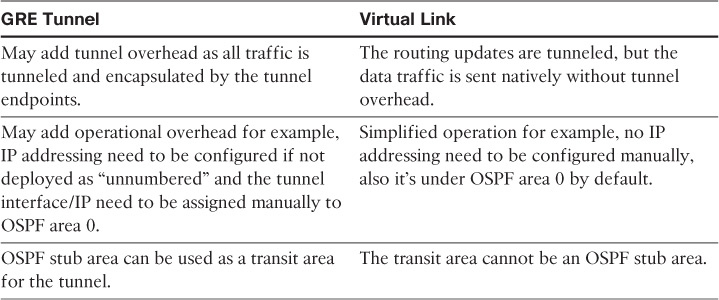

You can use a GRE tunnel as an alternative method to the OSPF virtual link to fix issues like the one just described; however, there are some differences between using a GRE tunnel versus an OSPF virtual link, as summarized in Table 2-4.

Link-State Flooding Domains

One of the most common questions when designing OSPF or IS-IS is this: What is the maximum number of routers that can be placed within a single area?

The common rule of thumb specifies between 50 and 100 routers per area or IS-IS level. However, in reality it is hard to generalize the recommended maximum number of routers per area because the maximum number of routers can be influenced by a number of variables, such as the following:

![]() Hardware resources (such as memory, CPU)

Hardware resources (such as memory, CPU)

![]() Number of prefixes (can be influenced by routes’ summarization design)

Number of prefixes (can be influenced by routes’ summarization design)

![]() Number of adjacencies per shared segment

Number of adjacencies per shared segment

Note

The amount of available bandwidth with regard to the control plane traffic such as link-state LSAs/LSPs is sometimes a limiting factor. For instance, the most common quality of service (QoS) standard models followed by many organizations allocate one of the following percentages of the interface’s available bandwidth for control (routing) traffic:4 4-class model, 7 percent; 8-class model, 5 percent; and 12-class model, 2 percent. This is more of a concern when the interconnection is a low-speed link such as legacy WAN link (time-division multiplexing [TDM] based, Frame Relay, or ATM) with limited bandwidth. Therefore, other alternatives are sometimes considered with these types of interfaces, such as passive interface or static routing.

4. “Medianet WAN Aggregation QoS Design 4.0,” http://www.cisco.com

For instance, many service providers run tens of hundreds of routers within one IS-IS level. Although this may introduce other design limitations with regard to modern architectures, in practice it is proven as a doable design. In addition, today’s router capabilities, in terms of hardware resources, are much stronger and faster than routers that were used five to seven years ago. This can have a major influence on the design, as well, because these routers can handle a high number of routes and volume of processing without any noticeable performance degradation.

In addition, the number of areas per border router is also one of the primary considerations in designing link-state routing protocols, in particular OSPF. Traditionally, the main constraint with the limited number of areas per ABR is the hardware resources. With the next generation of routers, which offer significant hardware improvements, ABRs can hold a greater number of areas. However, network designers must understand that additional areas to be added per ABR correlates to potential lower expected performance (because the router will store a separate LSDB per area).

In other words, hardware capabilities of the ABR are the primary deterministic factor of the number of areas that can be allocated per ABR, considering the number of prefixes per area as well. Traditionally, the rule of thumb is to consider two to three areas (including backbone area) per ABR. This is a foundation and can be expanded if the design requires more areas per ABR, with the assumption that the hardware resources of the ABR can handle this increase.

In addition to these facts and variables, network designers should consider the nature of the network and the concept of fault isolation and design modularity for large networks that can be designed with multiple functional fault domains (modules). For example, large-scale routed networks are commonly divided based on the geographic location for global networks or based on an administrative domain structure if they are managed by different entities.

EIGRP Flooding Domains Structure

As discussed earlier, EIGRP has no protocol-specific flooding domains or structure. However, EIGRP with route summarization or filtering techniques can break the flooding domains into multiple hierarchies of routing domains, which can reduce the EIGRP query scope, as depicted in Figure 2-30. This concept is a vital contributor to the optimization for the overall EIGRP design in terms of scalability, simplicity, and convergence time. In addition, EIGRP offers a higher degree of flexibility and scalability in networks with three and more levels in their hierarchies as compared to link-state routing protocols [19].

Routing Domain Logical Separation

The two main drivers for breaking a routed network into multiple logical domains (fault domains) are the following: to improve the performance of the networks and routers (fault isolation), and to modularize the design (to make it become simpler, more stable and scalable). These two drivers enhance network convergence and increase the overall routing architecture scalability. Furthermore, breaking the routed topology into multiple logical domains will facilitate topology aggregation and information hiding. It is critical to decide where a routing domain can be divided into two or multiple logical domains. In fact, several variables influence the location where the routing domains are broken or divided. The considerations discussed in the sections that follow are the primary influencers that help to determine the correct location of the logical routing boundaries. Network designers need to consider these when designing or restructuring a routed network.

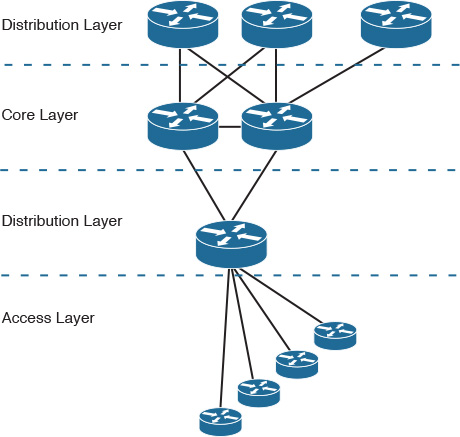

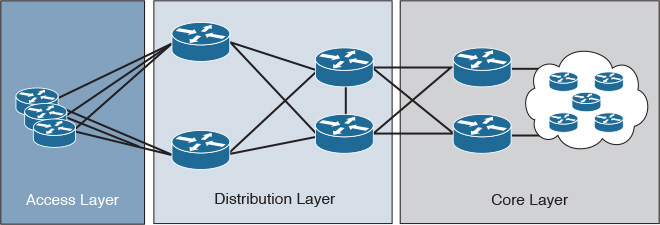

Underlying Physical Topology

As discussed in Chapter 1, “Network Design Requirements: Analysis and Design Principles,” the physical network layout is like the foundation of a building. As such, it is the main influencer when designing the logical structure of a routing domain (for example, a hub-and-spoke versus ring topology). For instance, the level of hierarchy held by a given network can impact the logical routing design if its structure includes two, three, or more tiers, as illustrated in Figure 2-31.

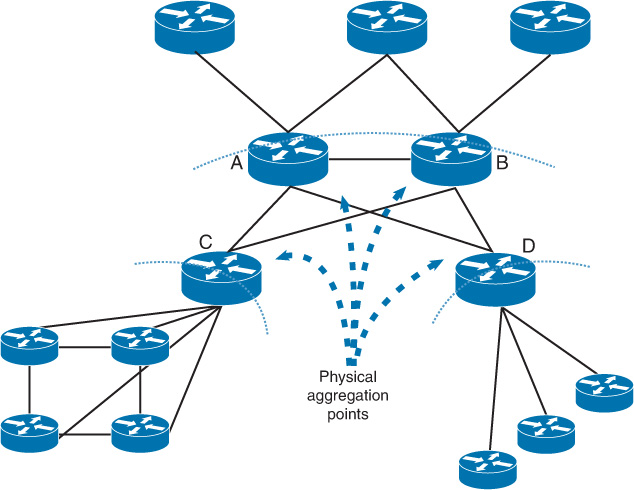

Moreover, the points in the network where the interconnections or devices meet (also known as chokepoints) at any given tier within the network are a good potential border location of a fault domain boundary, such as ABR in OSPF [19]. For instance, in Figure 2-32, the network is constructed of three-level hierarchies. Routers A and B and routers C and D are good potential points for breaking the routing domain (physical aggregation points). Also, these boundaries can be feasible places to perform route summarizations.

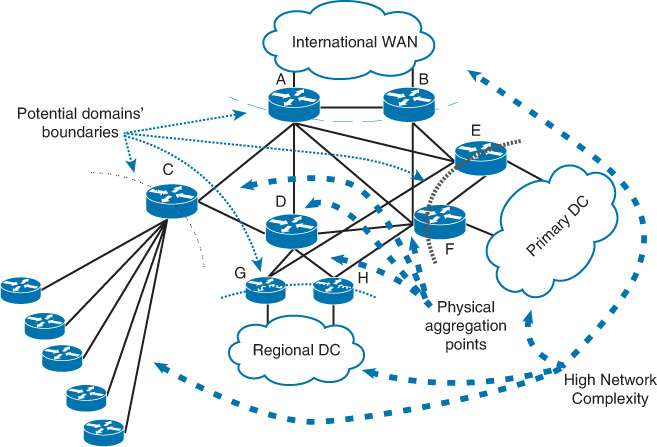

The other important factor with regard to the physical network layout is to break areas that have a high density of interconnections into separate logical fault domains where possible. As a result, devices in each fault domain will have smaller reachability databases (for example, LSDB) and will only compute paths within their fault domain, as illustrated in Figure 2-33. This will ultimately lead to the reduction of the overall control plane design complexity [8]. This concept will promote a design that can facilitate the support of other design principles, including simplicity, modularity, scalability, and topology and reachability information hiding.

The network illustrated in Figure 2-33 has four different functional areas:

![]() The primary data center

The primary data center

![]() The regional data center

The regional data center

![]() The international WAN

The international WAN

![]() The hub-and-spoke network for some of the remote sites

The hub-and-spoke network for some of the remote sites

From the perspective of logical separation, you should place each one of the large parts of the network into its own logical domain. The logical topology can be broken using OSPF areas, IS-IS levels, or EIGRP route summarization. The question you might be asking is this: Why has the domain boundary been placed at routers G and H rather than router D? Technically, both are valid places to break the network into multiple logical domains. However, if we place the domain boundary at router D, both the primary data center network and regional data center will be under same logical fault domain. This means the network may be less scalable and associated with lower control plane stability because routers E and F will have a full view of the topology of the regional data center network connected to routers G and H. In addition, routers G and H most probably will face the same limitations as routers E and F. As a result, if there is any link flap or routing change in the regional data center network connected to router G or H, it will be propagated across to routers E and F (unnecessary extra load and processing).

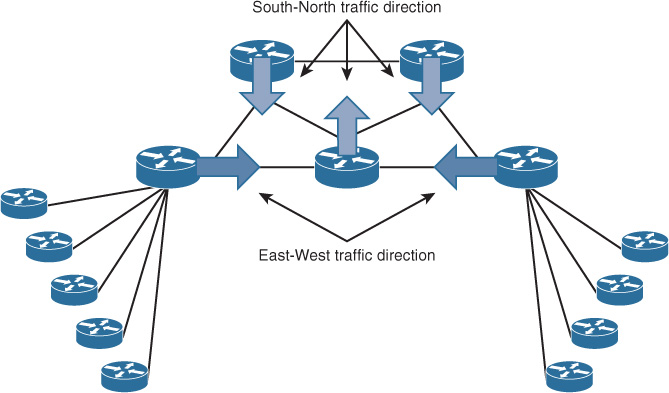

Traffic Pattern and Volume

By understanding traffic pattern (for example, south-north versus east-west) and traffic volume trends, network designers can better understand the impact if a logical topology were to be divided into multiple domains on certain points (see Figure 2-34). For example, OSPF always prefers the path over the same area regardless of the link cost over other areas. (For more information about this, see the section “IGP Traffic Engineering and Path Selection: Summary.”) In some situations, this could lead to suboptimal routing, where a high volume of traffic will travel across low-capacity links or expensive links with strict billing that not every type of communications should go over it; this results from the poor design of OSPF areas, which did not consider bandwidth or cost requirements.

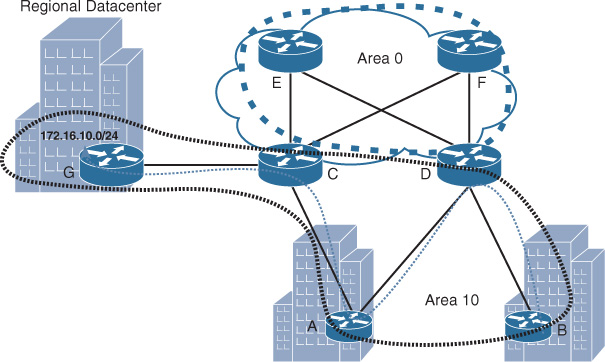

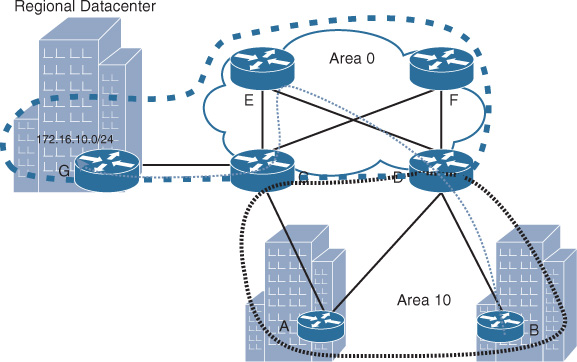

Similarly, if the traffic pattern is mostly north-south, such as in a hub-and-spoke topology where no communication between the spokes is required, this can help network designers to avoid placing the logical routing domain boundary at points likely to using spoke sites as transit sites (suboptimal routing). For instance, the scenario depicted in Figure 2-35 demonstrates how the application of the logical area boundaries on a network can influence the path selection. Traffic sourced from router B going to the regional data center behind router G should (optimally) go through router D, and then across one of the core routers E or F, and finally to router C to reach the data center over one of the core high-speed links. However, the traffic is currently traversing the low-speed link via router A. This path (B-D-A-C-G) is within the same area (area 10), as shown in Figure 2-35.

No route filtering or any type of summarization is applied to this network. This suboptimal routing results entirely from the poor design of OSPF areas. If you apply the concepts discussed in this section, you can optimize this design and fix the issue of suboptimal routing, as follows:

![]() First, the physical network is a three-tier hierarchy. Routers C and D are the points where the access, data center, and core links meet, which makes them a good potential location to be the area border (which is already in place).

First, the physical network is a three-tier hierarchy. Routers C and D are the points where the access, data center, and core links meet, which makes them a good potential location to be the area border (which is already in place).

![]() Second, if you divide this topology into functional domains, you can, for example, have three parts (core, remote sites, and data center), with each placed in its own area. This can simplify summarization and introduce modularity to the overall logical architecture.

Second, if you divide this topology into functional domains, you can, for example, have three parts (core, remote sites, and data center), with each placed in its own area. This can simplify summarization and introduce modularity to the overall logical architecture.

![]() The third point here is traffic pattern. It is obvious that there will be traffic from the remote sites to the regional data center, which needs to go over the high-speed links rather than going over the low-speed links by using other remote sites as a transit path.

The third point here is traffic pattern. It is obvious that there will be traffic from the remote sites to the regional data center, which needs to go over the high-speed links rather than going over the low-speed links by using other remote sites as a transit path.

Based on this analysis, the simple solution to this design is to either place the data center in its own area or to make the data center part of area 0, as illustrated in Figure 2-36, with area 0 extended to include the regional data center.

Note

Although both options are valid solutions, on the CCDE exam the correct choice will be based on the information and requirements provided. For instance, if one of the requirements is to achieve a more stable and modular design, a separate OSPF area for the regional data center will be the more feasible option in this case.

Similarly, if IS-IS is used in this scenario as illustrated in Figure 2-37, router B will always use router A as a transit path to reach the regional data center prefix. Over this path (B-D-A-C-G), the regional data center prefix behind router G will be seen as IS-IS level 1, and based on IS-IS route selection rules, this path will be preferred compared to the one over the core, in which it will be announced as an IS-IS level 2 route. (For more information about this, see the section “IGP Traffic Engineering and Path Selection: Summary.”) Figure 2-37 suggests a simple possible solution to optimize IS-IS flooding domain design (levels): including the regional data center as part of IS-IS level 2. This ensures that traffic from the spokes (router B in this example) destined to the regional data center will always traverse the core network rather transiting any other spoke’s network.

Route Summarization

The other major factor when deciding where to divide logical topology of a routed network is where summarization or reachability information hiding can take place. The important point here is that the physical layout of the topology must be taken into account. In other words, you cannot decide where to place the reachability information hiding boundary (summarization) without referring to what the physical architecture looks like and where the points are that can enhance the overall routing design if summarization is enabled. Subsequent sections in this chapter cover route summarization design considerations in more detail.

Security Control and Policy Compliance

This pertains more to what areas of a certain network have to be logically separated from other parts of the network. For example, an enterprise might have a research and development lab (R&D) where different types of unified communications applications are installed, including routers and switches. Furthermore, the enterprise security policy may dictate that this part of the network must be logically contained and only specific reachability information needs to be leaked between this R&D lab environment and the production network. Technically, this will lead to increased network stability and policy control.

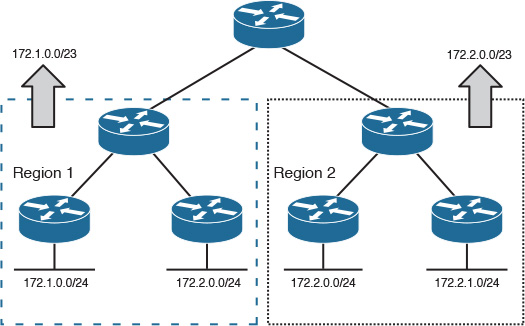

Route Summarization

By having a well-structured IP address align with the physical layout with reachability information hiding using routes summarization, as shown in Figure 2-38, network designers can achieve an optimized level of network design simplicity, scalability, and stability.

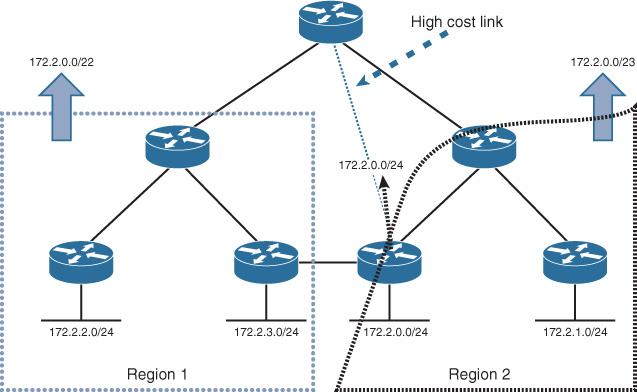

For example, based on the routes’ summarization structure illustrated in Figure 2-38, if there is any link flap in a remote site in region 2, it will not affect the remote site routers of region 1 in processing or updating their topology database (which in some situations might cause unnecessary path recalculation and processing, which in turn may lead to service interruption). Usually, route summarization facilitates the reduction of the RIB table size by reducing the number of route counts. This means less memory, lower CPU utilization, and faster convergence time during a network change or following any failure event. In other words, the boundary of the route summarization almost always overlaps with the boundary of the fault domain.

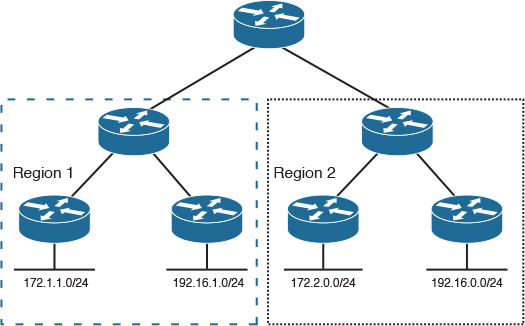

However, not every network has a structured network IP addressing like the one shown in Figure 2-38. Therefore, network designers must consider alternatives to overcome this issue. In some situations, the solution is “not to summarize.” For instance, Figure 2-39 illustrates a network with unstructured IP addressing, and the business may not able to afford changing their IP scheme in the near future.

Moreover, in some scenarios, the unstructured physical connectivity can introduce challenges with route summarization. For example, in Figure 2-40, summarization can lead to forcing all the traffic from the hub site to always prefer the high-cost and low-bandwidth link to reach 172.2.0.0/24 network (more specific route over the high-cost nonsummarized link), which may lead to undesirable outcome from the business point of view (for example slow applications’ response time over this link).

As a general rule of thumb (not always), summarization should be considered at the routing logical domain boundaries. The reason why summarization might not always be considered at the logical boundary domain is because in some designs it can lead to suboptimal routing or traffic black-holing (also known as summary black holes). The following subsections discuses summary suboptimal routing and summary black-holing in more detail.

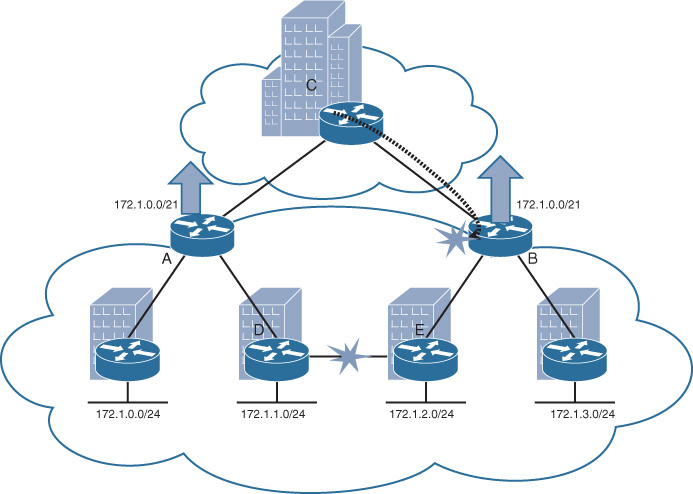

Summary Black Holes

The principle of route summarization is based on hiding specific reachability information. This principle can optimize many network designs, as discussed earlier; however, it can lead to traffic black-holing in some scenarios because of the specific hidden routing information. In the scenario illustrated in Figure 2-41, router A and B send the summary route only (172.1.0.0/21) with the same metric toward router C. Based on this design, in case of link failure between router D and E, the routing table of router C will remain intact, because it is receiving only the summary. Consequently, there is potential for traffic black-holing. For instance, traffic sourced from router C destined to network 172.1.1.0/24 landing at router B will be dropped because of this summarization black-holing. Moreover, the situation can become even worse if router C is performing per-packet load balancing across routers A and B. In this case, 50 percent of the traffic is expected to be dropped. Similarly, if router C is load balancing on a per-session basis, hypothetically some of the sessions will reach their destinations and others may fail. As a result, route summarization in this scenario can lead to a serious connectivity issues in some failure situations [18], [19].

To mitigate this issue and enhance the design in Figure 2-41, summarization either should be avoided (this option might not be always desirable because it can reduce the stability and scalability in large networks) or at least one nonsummarized link must be added between the summarizing routers (in this scenario, between routers A and B, as illustrated in Figure 2-42). The nonsummarized link can be used as an alternate path to overcome the route summarization black-holing issue described previously.

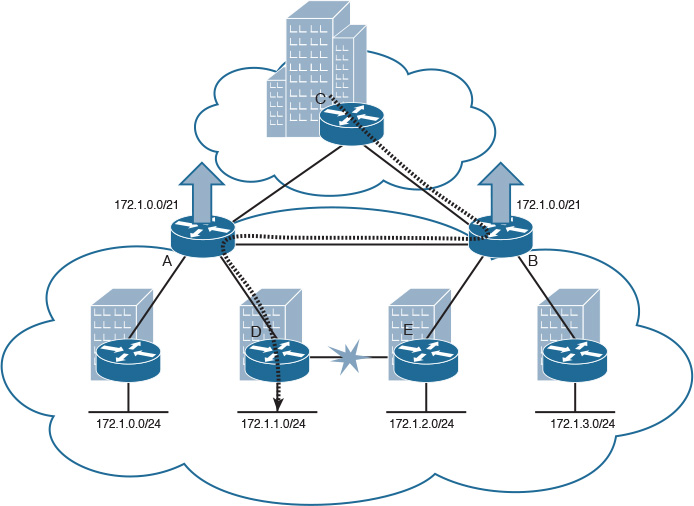

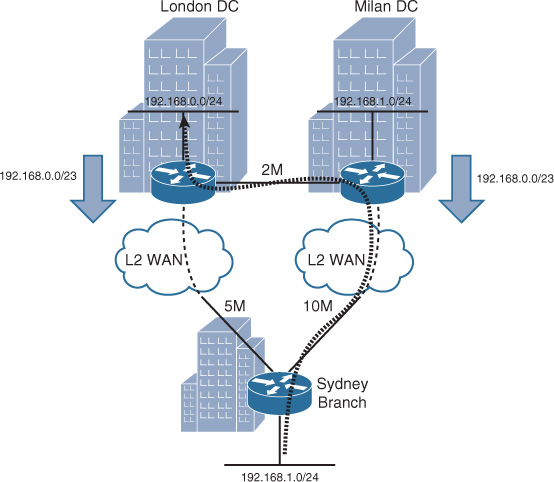

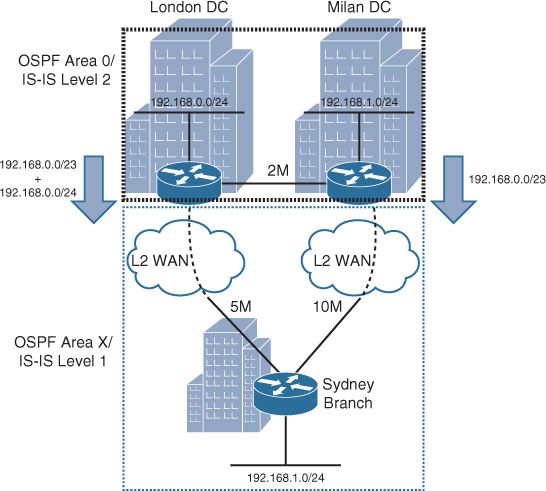

Suboptimal Routing

Although hiding reachability information with route summarization can help to reduce control plane complexity, it can lead to suboptimal routing in some scenarios. This suboptimal routing, in turn, may lead traffic to use a lower-bandwidth link or an expensive link, over which the enterprise might not want to send every type of traffic. For example, if we use the same scenario discussed earlier in the OSPF areas, we then apply summarization on the data center edge routers of London and Milan and assume that the link between Sydney and Milan is a high-cost link that has a typically lower routing metric, as depicted in Figure 2-43.

Note

The example in Figure 2-43 is “routing protocol” neutral. It can apply to all routing protocols in general.

As illustrated in Figure 2-43, the link between the Sydney branch and Milan data center is 10 Mbps, and the link to London is 5 Mbps. In addition, the data center interconnect between Milan and London data centers is only 2 Mbps. In this particular scenario, summarization toward the Sydney branch from both data centers will typically hide the more specific route. Therefore, the Sydney branch will send traffic destined to any of the data centers over the high-bandwidth link (with lower routing metric); in this case, the Sydney-Milan path will be preferred (almost always higher bandwidth = lower path metric). This behavior will cause suboptimal routing for traffic destined to London data center network. This suboptimal routing in turn can lead to an undesirable experience, because rather than having 5 Mbps between Sydney branch and London data center, their maximum bandwidth will be limited to the data center interconnect link capacity, which is 2 Mbps in this scenario. This is in addition to the extra cost and delay that will from the traffic having to traverse multiple international links.

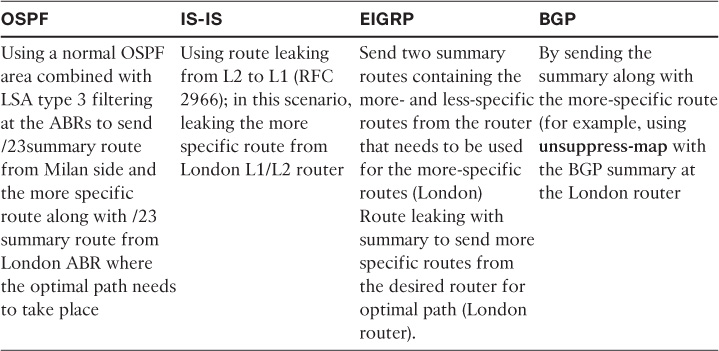

Even so, this design limitation can be resolved via different techniques based on the use of the routing protocol, as summarized in Table 2-5.

Figure 2-44 illustrates link-state areas/levels application with regard to the discussed scenario and the suggested solutions, because the different areas/levels designs can have a large influence on the overall traffic engineering and path selection.

with IS-IS, L1-L2 (ABR) may send default route toward the L1 domain and the route leaking at the London ABR will leak/send the more specific local prefix for optimal routing.

Based on the these design considerations and scenarios, we can conclude that although route summarization can optimize the network design for the several reasons (discussed earlier in this chapter), in some scenarios summarization from the core networks toward the edge or remote sites can lead to suboptimal routing. In addition, summarization from the remote sites or edge routers toward the core network may lead to traffic black holes in some failure scenarios. Therefore, to provide a robust and resilient design, network designers must pay attention to the different failure scenarios when considering route summarization [19].

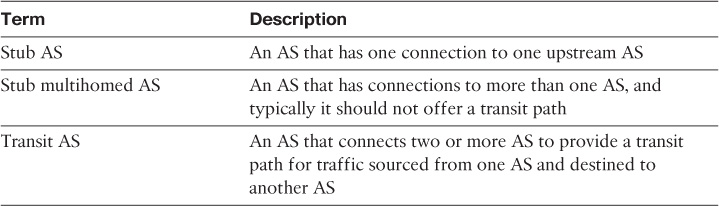

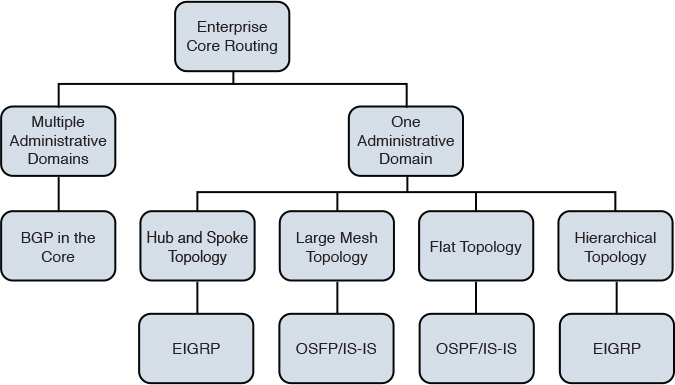

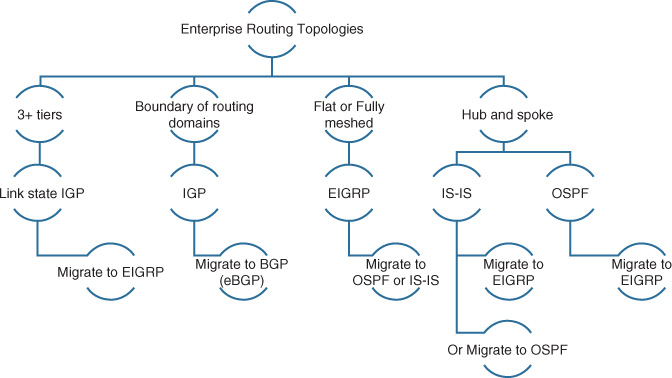

IGP Traffic Engineering and Path Selection: Summary

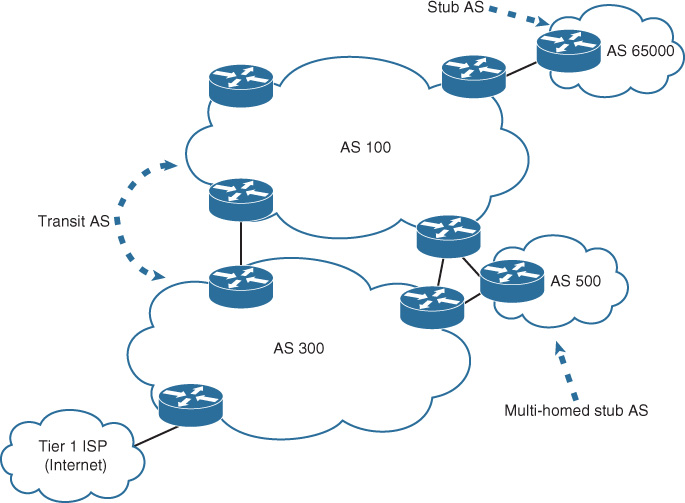

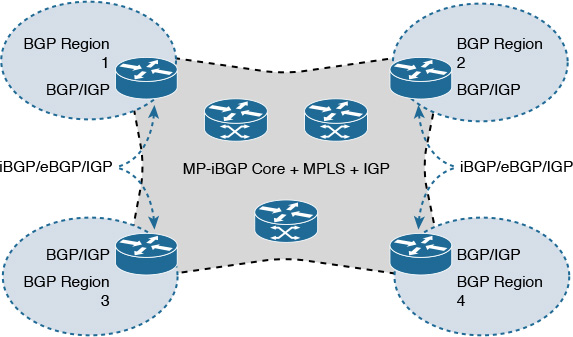

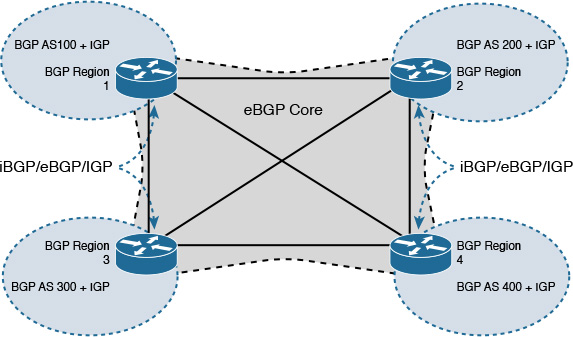

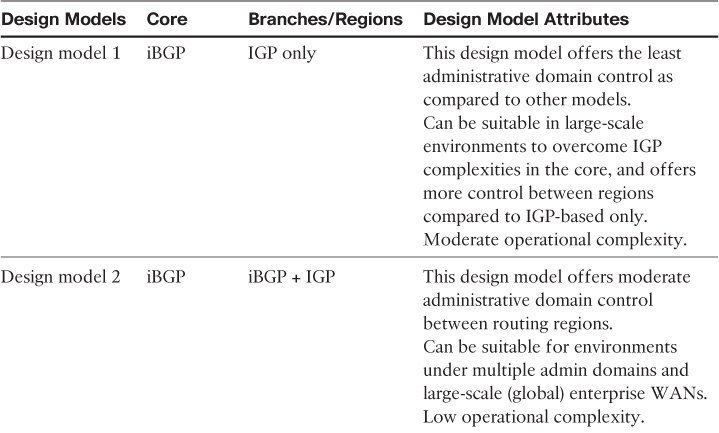

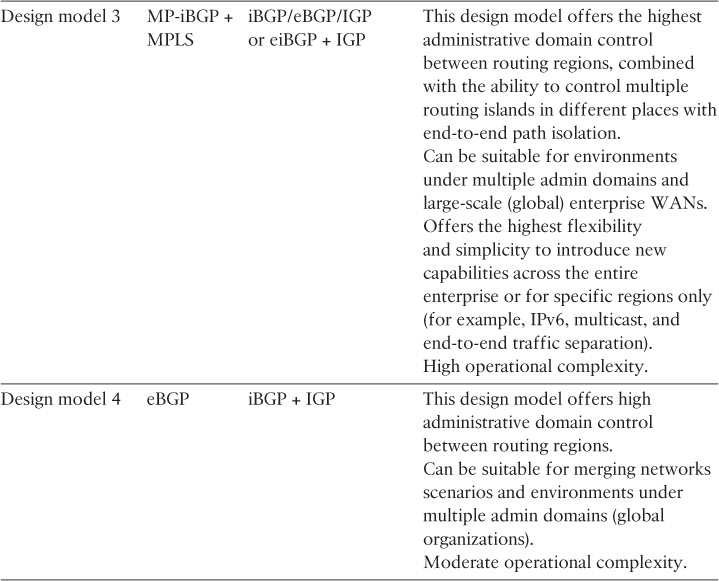

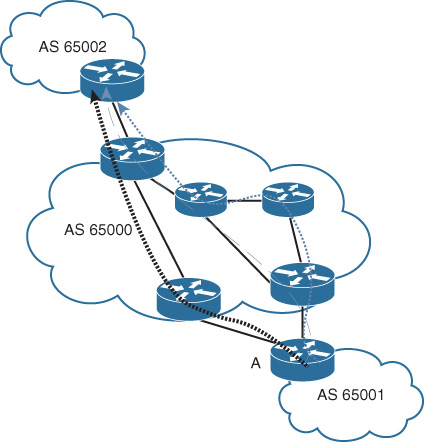

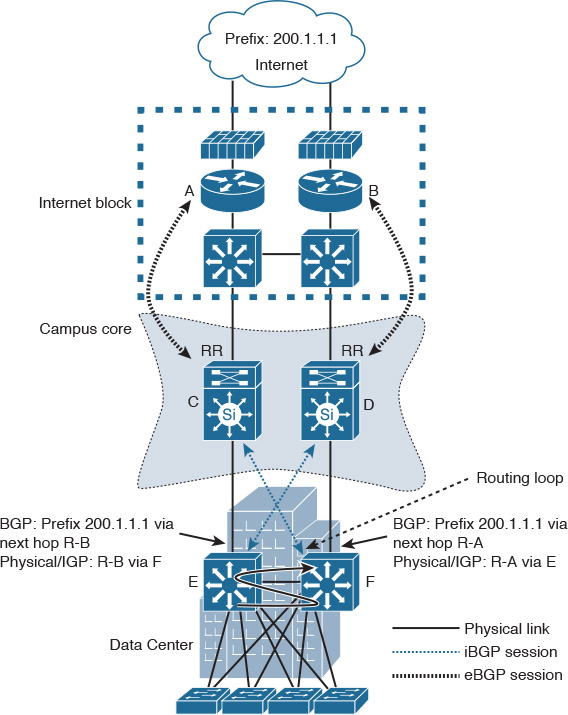

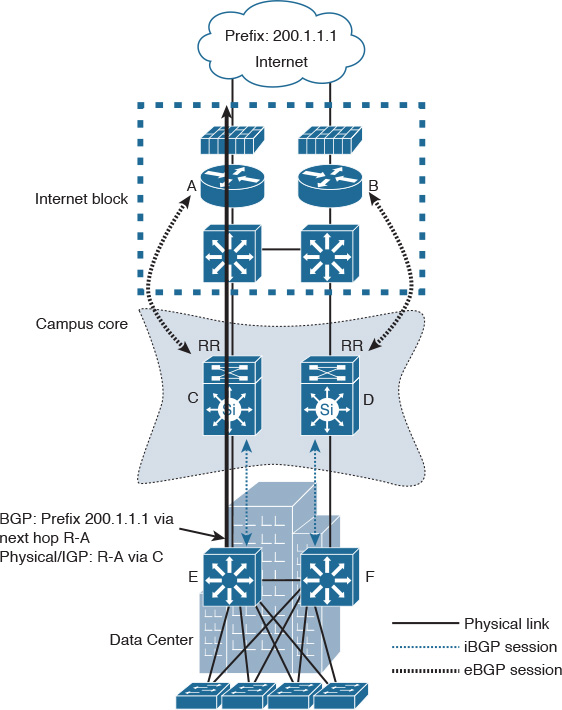

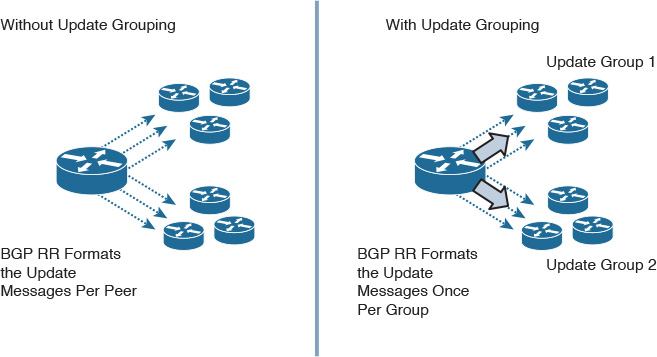

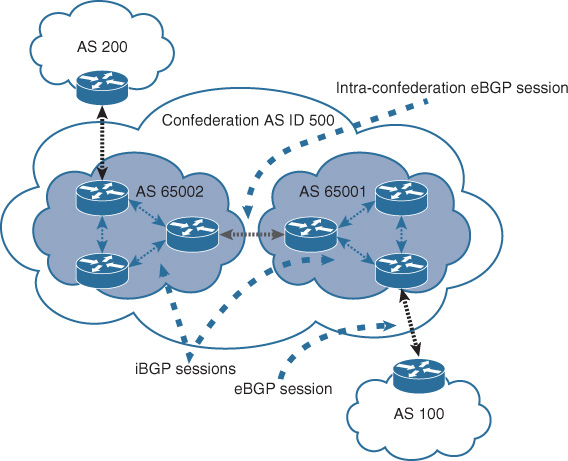

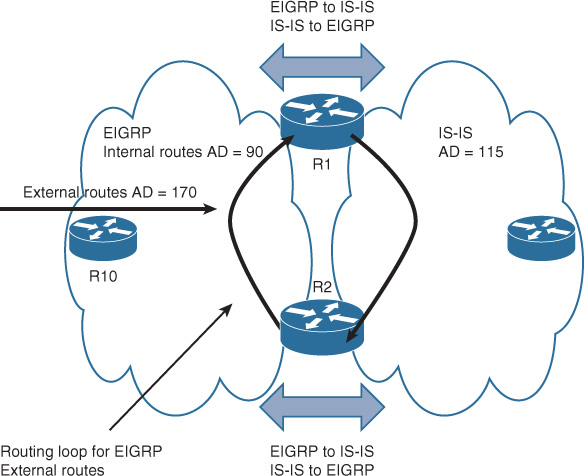

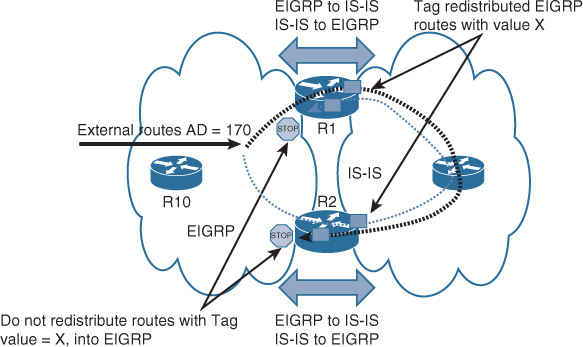

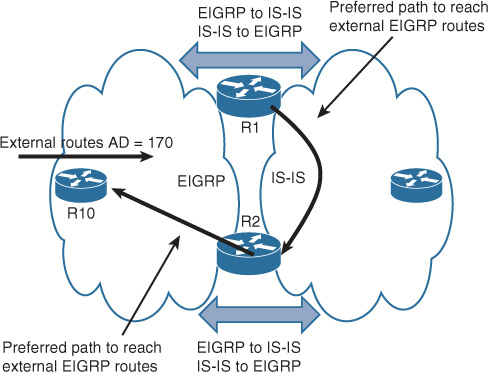

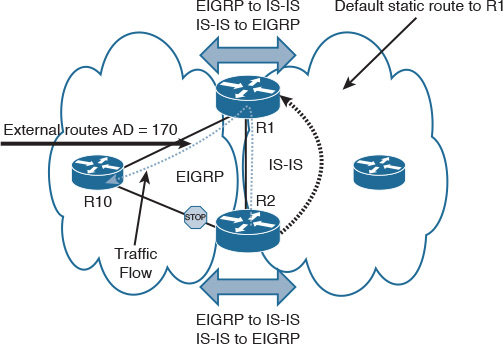

By understanding the variables that influence a routing protocol decision to select a certain path, network designers can gain more control to influence route preference over a given path based on a design goal. This process is also known as traffic engineering.