Chapter 7

WLAN Media and Application Services

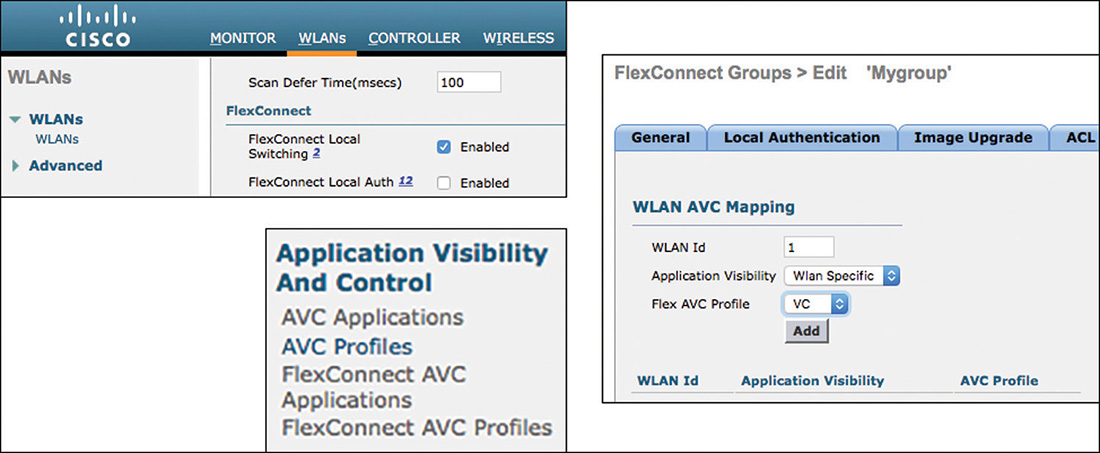

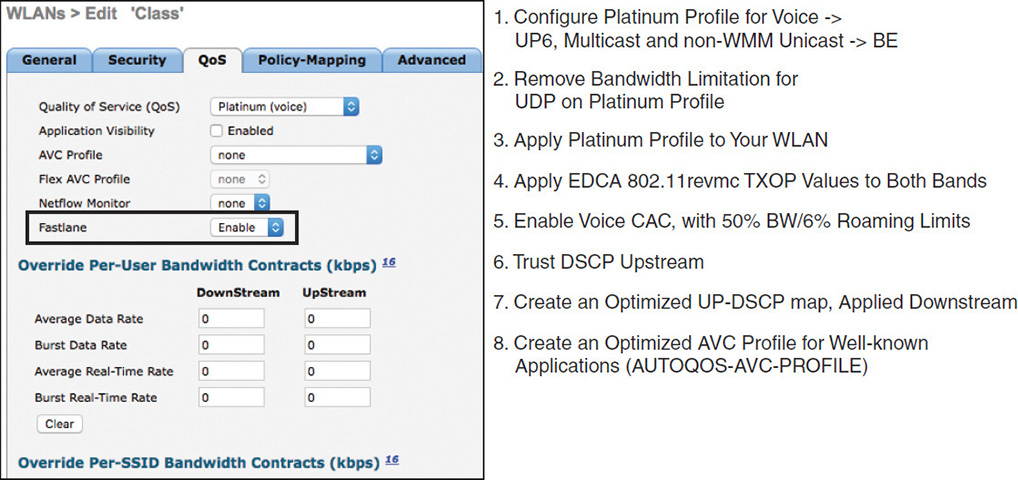

Introduction

Providing differentiated treatment for different types of applications is a difficult challenge for Wi-Fi networks (and CCIE candidates). Wi-Fi networks are inherently unstable. Speeds are half duplex, overhead renders the links less deterministic, devices move, and users tend to bring old devices that disrupt the performance of your latest shiny fast phone or laptop.

In this context, providing a good application experience for your users requires a combination of sound RF design, a good understanding of your client database, and a solid background in QoS foundations and QoS configuration. This last aspect is difficult to acquire because you will find that, in AireOS, QoS features that saw light in the early days of Wi-Fi QoS (and are now nearly obsolete) stand next to QoS features that just appeared in the 802.11 standard. This chapter will help you triage all these elements and give you the basis you need to provide a good quality experience to your users. You should also be able to face the exam in confidence. This chapter is organized into two sections. The first section gives you the technical background you need to understand application differentiated services over Wi-Fi. The second section shows you how these elements are configured on the various platforms, AireOS, switches, and Autonomous APs. More specifically, the two sections are organized as follows:

Section 1 provides the survival concepts needed to understand QoS over 802.11. This section first introduces the notion of differentiated services to help you understand DSCP markings and IP Precedence. These notions are then related to QoS design and the concept of traffic classes. Operating QoS classes implies recognizing traffic, and the following subsections explain how traffic is identified and marked, with Layer 3 techniques for IPv4 and IPv6, and with Layer 2 techniques, with 802.11e and also 802.1Q, 802.1D, and 802.1p, to which 802.11e often gets compared. This first section then examines different techniques to handle congestion.

Section 2 covers the configuration of differentiated treatment for application services over the various architectures. The first architecture to be covered is AireOS, and you will see how QoS profiles, bandwidth contracts, QoS mappings, and trust elements are configured. You also learn how to configure congestion management techniques, including EDCA parameters, CAC, and AVC. You then see how Fastlane creates specific additions for iOS and MacOS devices. You then learn how application services are configured for autonomous APs. Quite a few items available on AireOS are also configured on autonomous APs; however, autonomous APs are Layer 2 objects. Therefore, they present some variations that you need to understand and master. Finally, you will see how QoS is configured on switches. Your goal is not to become a QoS expert but to be able to autonomously configure a switch to provide a basic support for the QoS policies you configured on your WLAN infrastructure.

At the end of this chapter, you should have a good understanding of real-time application support for wireless clients.

QoS Survival Concepts for Wireless Experts

QoS is not a static set of configuration commands designed long ago by a circle of wise network gurus and that are valid forever. In fact, QoS models, traffic definitions, and recommendations have evolved over the years as common traffic mixes in enterprise networks have changed. With new objects (such as IoT), new protocols, and new traffic types, QoS continues to evolve. However, you do not need to be a QoS expert to pass the CCIE Wireless exam, you just need to understand the logic of QoS and survive by your own, configuring standard QoS parameters that will affect your Wi-Fi network’s most sensitive traffic (typically Voice). In short, your task is to understand QoS well enough to make Voice over Wi-Fi work.

The Notion of Differentiated Treatment

Let’s start with a quiz question: What is the second most configured feature in Cisco networking devices? If you read the title of this chapter, then you probably guessed: QoS (the first one is routing).

QoS is therefore a fundamental concept. However, to help you survive the complexities of the CCIE lab exam, but also to help you face real deployment use cases, we need to dispel a few legends. The first legend is that QoS is about lack of bandwidth and that adding bandwidth solves the problem and removes the need for QoS. This is deeply untrue. QoS is about balance between the incoming quantity of bits per second and the practical limitations of the link where these bits must be sent.

Let’s look at a nonwireless example to help clarify this concept. Suppose that you need to send a video flow over a 1 Gbps switch interface. The communicated bandwidth, 1 Gbps, is only an approximation over time of the capacity of the link. One gigabit per second is the measure of the transmission capability of the Ethernet port and its associated cable (over 1 second, this port can transmit one billion bits). But behind the port resides a buffer (in fact probably more than one, each buffer being allocated to a type of traffic), whose job is to store the incoming bits until they can be sent on the line, and a scheduler, whose job is to send blocks of bits to the cable.

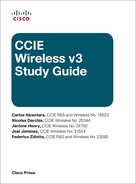

A high-end switch buffer can store 5.4 MB. If the switch port is built around the notion of four queues, that’s 1.35 MB per queue. Now, your port can send 1 gigabit per second, which translates to about 125 kilobytes per microseconds. If you map the buffer to the link speed, you see that the buffer can store up to 10.8 ms of data before being filled up.

Suppose that your switch receives a flow of video packets, representing a modest 2 MB burst intended to draw a full 32-color 1080p video frame. You can immediately see that, even if the port is completely idle before the burst arrives from within the switch bus (let’s assume that the internal bus is faster than 1 Gbps; a common speed for a good switch can be around 480 Gbps), the buffer can saturate long before the entire flow is stored and then transmitted on the line, as shown in Figure 7-1.

From the outside, you see a modest 2 MB burst of packets, a very low link utilization (less than 1%), and yet several bytes are dropped. The issue is that 2 MB should never have been sent to that queue in less than 11 ms, and this is where QoS comes into play. Here, more bandwidth does not solve the problem (you already have enough bandwidth, and your link utilization is overall less than 1%). What solves the problem is making sure that the right quantity of packets gets through the interface buffer in a reasonable amount of time, so as not to saturate the interface beyond capacity.

The same issue can be seen in the wireless world. Suppose that you send voice packets, using no QoS (best effort) from a smartphone to an access point. These packets are very small (one audio payload can typically represent 160 bytes), so bandwidth is typically a non-issue. Yet, oblivious to the presence of voice traffic (as you use best effort), the AP suddenly jumps to another channel to scan (RRM). The AP is likely to be gone for about 80 ms, during which your phone will fail to receive an ACK for the last voice packet, will retry many times while rate shifting down, then drop the packet and attempt to send the next one, and so on. When the AP returns to the channel, you will have lost three consecutive packets, which is enough for the user to detect a short drop in the audio flow and complain about Wi-Fi. Yet, troubleshooting the issue will show no oversubscription of the link. The issue is not bandwidth. The issue is that proper priority was not given to this real-time traffic over other tasks. Typically, 30 ms in the cell is the maximum time interval allowed for a Wi-Fi driver to send a real-time voice frame (UP 6). After that delay, this type of frame is dropped from the queue. Here again, the solution is not more bandwidth but proper servicing over time of packets based on their specific category. In other words, ensure that voice packets are not delayed for more than 30 ms in the cell, regardless of the available bandwidth. This is again what QoS does.

Another lasting legend about QoS is that it should be configured on weak links (typically, WAN and Wi-Fi). However, QoS is not a Wi-Fi or a WAN problem—it is an end-to-end problem. Each medium has unique characteristics, and the complexity of configuring QoS is to make sure to translate the requirements of each traffic type into configurations adapted to the local medium. Therefore, you need a mix of global configurations and local particulars to express a global QoS intent for each traffic and translate this intent to each local medium specific constraints. As you saw from the bandwidth urban legend, your Wi-Fi could be configured for QoS and yet traffic could be dropped on the switch side, if you neglected the LAN side because of its large bandwidth.

This end-to-end concern, and also the fact that Wi-Fi is one of the weakest links, are the reasons why the CCIE Wireless exam expects from you the ability to configure QoS for Wi-Fi but also have reasonable knowledge on how the intent you expressed through your Wi-Fi QoS configuration would translate on the switch port to your access point or your WLC. You do not need to be a QoS expert, but you must be able to verify that QoS will not stop at the AP or WLC port. This ability translates into the knowledge of the different families of traffic and the various possibilities brought by QoS to offer a different treatment for different types of traffic: prioritize urgent business-relevant traffic and delay or drop traffic of lower urgency or importance depending on the link capacity.

Traditionally, these requirements translate into different possible types of actions and mechanisms:

QoS Design: Your first task is to determine which traffic matters for your network. Most of the time, this determination will bring you to create different categories of traffic.

Classification and marking: After traffic starts flowing and enters your network, you must identify it; when identification is completed, you may want to put a tag on each identified traffic packet so as not to have to run the identification task at every node.

Congestion management tools: When congestion occurs, multiple tools can help manage the available bandwidth for the best. In traditional QoS books, this section would cover many subsections, such as Call Admission Control mechanisms, policing, shaping, queue management, and a few others. In the context of the CCIE Wireless exam, you need to understand the main principles, and we will group under this category topics that matter for you.

Link efficiency mechanisms: On some links, you can compress or simplify packets to send the same amount of information using less bandwidth. This is especially important on WAN connections but is completely absent from Wi-Fi links. You will find efficiency mechanisms for Wi-Fi, such as MIMO, blocks, and a few others, and they do improve transmissions. However, this is not efficiency in the sense of transmission compression or simplification. The term link efficiency is important to know in the context of QoS, but you will not need to implement such a mechanism, with a QoS approach, for Wi-Fi.

The listed actions and mechanisms have different relative importance for the CCIE Wireless exam. The following sections cover what you need to know, and we refer to the category names along the way to help your learning.

QoS Design

Just as you do not start building a house without a blueprint, you also do not start configuring QoS without a QoS blueprint. This blueprint lists the types of traffic that you expect in your network and organizes these types into categories. As a Wireless CCIE, your job is not to build an enterprise QoS blueprint. However, a basic understanding of what this blueprint covers will help you reconcile the apparent inconsistencies between 802.11e QoS marking and DSCP/802.1p marking, and will also show you why some vendors get it right and others do not.

The main concern behind this QoS blueprint, often called a QoS model, is about arbitration between packets. Suppose you have two packets that get onto an interface (toward any type of medium). Suppose that you have to decide which packet to send first (because your outgoing medium has a slower data rate than wherever these two packets came from), or you have to decide to pick one to send and drop the other (because your interface buffer cannot store the second packet, and because the second packet will not be useful anymore if it is delayed, or for any other reason). If you have built a QoS model, and if you know which packet belongs to which class in that model, you can make a reasonable choice for each packet.

A very basic QoS model runs around four classes: real-time traffic (voice or real-time conferencing), control traffic for these real-time flows (but this category also includes your network control traffic, such as RIP updates), data, and everything else. Data is often called transactional data, and it matches specific data traffic that you identified as business relevant and that you care about, such as database traffic, email, FTP, backups, and the like. Everything else represents all other traffic that may or may not be business relevant. If it is business relevant, it is not important enough that it needed to be identified in the transactional data category.

The four-queue model is simple and matches the switching queuing structure available in old switches (and vice versa; the switches queues were built to match the common QoS model implemented by most networks). This model was common 15 years ago, but it proved a bit too simple and over the years was replaced by a more complex 8-class model. Keep in mind that this was during the 2000s (you will see in a few pages why this is important).

The 8-class model separates real-time voice from real-time video. One main reason is that video conferencing increased in importance during these years. If you happen to use video conferencing, you probably notice that the sound is more important than the image. If your boss tells you that you are “…ired,” you want to know if the word was hired or fired. Sound is critical. However, if your boss’s face appears to pixilate for a second, the impact on the call is lower. Also, real-time video is video conferencing, and the 4-class model did not have a space for streaming video. It is different from transactional data because it is almost real-time, but you can buffer a bit of it. By contrast, video conferencing cannot be buffered because it is real-time.

Similarly, network control updates (like RIP or EIGRP updates) are not the same as real-time application signaling. As you will see in the next section, real-time application signaling provides statistical information about the call. It is useful to know if, and how, the call should be maintained. You can drop a few of these statistical packets without many consequences. Of course, if you drop too many of these packets, the call will suffer (but only one call will suffer). By contrast, if you drop EIGRP updates, your entire network collapses. The impact is very different, and putting both in the same control category was too simplistic.

Finally, there is clearly all the rest of the traffic, but there is also a category of traffic that you know does not matter. A typical example is peer-to-peer downloads (such as BitTorrent). In most networks, you want to either drop that traffic or make sure that it is sent only if nothing else is in the pipe. This traffic is called scavenger and should not compete with any other form of traffic, even the “Everything else” traffic that may or may not be business relevant.

The result is that the 8-class model implements the following categories: Voice, Interactive Video, Streaming Video, Network Control, (real-time traffic) Signaling, Transactional Data, Best Effort, and Scavenger.

The 8-class model is implemented in many networks. In the past decade, another subtler model started to gain traction, which further breaks down these eight classes. For example, the Network Control class is divided in two, with the idea that you may have some network control traffic that is relevant only locally, whereas some other network control traffic is valid across multiple sections of your network. Similarly, real-time video can be one way (broadcast video of your CEO’s quarterly update) or two ways (video conferencing). Usually, the broadcast is seen as more sensitive to delay and losses than the two-way video because the broadcast impacts multiple receivers (whereas an issue in a video-conference packet usually impacts only one receiver). Also, some traffic may be real time but not video (such as gaming). With the same splitting logic, a single Transactional Data category came to be seen as too simple. There is indeed the transactional data traffic, but if there is a transaction, traffic like FTP or TFTP does not fit well in that logic. Transactional should be a type of traffic where a user requests something from a server and the server sends it (ERP applications or credit card transactions, for example). By contrast, traffic such as file transfers or emails is different. This type of traffic can be delayed more than the real transactional data traffic and can send more traffic too, so it should have its own category. In fact, it should be named bulk data. In the middle (between the transactional data and the bulk data classes), there is all the rest, which is business-relevant data that implies data transfer but is neither transactional nor bulk. Typically, this would be your management traffic, where you upload configs, code updates, your telnet to machines, and the like. This class is rightly called Network Management (or Operations, Administration and Maintenance, or OAM).

This further split results in a 12-class model, with the following categories:

Network Control and Internetwork Control

VoIP

Broadcast Video

Multimedia Conferencing

Real-time Interactive

Multimedia Streaming

Signaling

Transactional Data

Network Management (OAM)

Bulk Data

Best Effort

Scavenger

A great document to learn more about these categories (and, as you will see in the DSCP part, their recommended marking) is the IETF RFC 4594 (https://www.ietf.org/rfc/rfc4594.txt).

Keep in mind that there is not a “right” QoS model. It all depends on what traffic you expect in your network, how granular you want your categorization to be, and of course, what you can do with these classes. If your switches and routers have only four queues (we’ll talk about your WLC and APs soon), having a 12-class model is great, but probably not very useful. You may be able to use your 12 classes in a PowerPoint but not in your real network.

In most networks, though, starting from a 12-class model allows the QoS designers to have fine-grained categories that match most types of traffic expected in a modern network. When some network devices cannot accommodate 12 categories, it is always possible to group categories into common buckets.

Application Visibility and Identification

Although QoS design should be your first action, it implies a knowledge of QoS mechanisms and categories. It is therefore useful to dwell on classification fundamentals, especially because Wi-Fi has a heavy history in the classification field.

Classifying packets implies that you can identify the passing traffic. The highest classification level resides at the application layer. Each application opens a certain number of sockets in the client operating system to send traffic through the network. These sockets have different relative importance for the performances of the application itself.

An example will help you grasp the principles. Take a voice application like Jabber. When the application runs, your smartphone microphone records the room audio, including (hopefully) the sound of your voice. Typically, such an application splits the audio into small chunks, for example, of 20 milliseconds each.

Note

You will find that there can be a lot of complexity behind this process, and that the process described here is not strictly valid for all applications. For example, some applications may slice time in chunks of 20 ms but record only a 10-millisecond sample over that interval, and consider it a good-enough representation of the overall 20 millisecond sound. Other applications will use 8 or 10 millisecond slices, for example.

Each 20-millisecond audio sample is converted into bits. The conversion process uses what is called a codec (to “code” and “decode”). Each codec results in different quantities of bits for the same audio sample. In general, codecs that generate more bits also render a sound with more nuances than codecs with a smaller bit encoding. A well-known reference codec is G.711, which generates about 160 bytes (1280 bits) of payload for each 20 ms sound sample. With 50 samples per second, this codec consumes 64 kbps, to which you must add the overhead of all the headers (L2, L3, L4) for the payload transmission. Other codecs, like PDC-EFR, consume only 8 kbps, at the price of a less-nuanced representation of the captured audio. It is said to be more “compressed” than G.711. Some codecs are also called elastic, in that they react to congestion by increasing the compression temporarily. The art of compressing as much as possible, while still rendering a sound with a lot of nuances, is a very active field of research, but you do not need to be a codec expert to pass the CCIE Wireless exam.

As you use Jabber, the application opens a network socket to send the flow of audio to the network. Keep in mind that the audio side of the application is different from the transmission side of your smartphone. Your network buffer receives from the application 160 bytes every 20 ms. However, transmitting one audio frame over an 802.11n or 802.11ac network can take as little as a few hundred microseconds. The function of the network stack is to empty the buffer, regardless of the number of packets present. When your Wi-Fi card gains access to the medium, it sends one block or multiple frames, depending on the operating system’s internal network transmission algorithm, until the buffer is empty. As soon as a new packet gets into the network buffer, the driver reattempts to access the medium, and will continue transmitting until the buffer is empty again.

Therefore, there is no direct relationship between the voice part of the application and the network part. Your smartphone does not need 1 second to send 1 second worth of audio, and your smartphone also does not need to access the medium strictly every 20 milliseconds. The application will apply a timestamp on every packet. On the receiver side, the application will make sure that packets are decoded back into a sound and played in order at the right time and pace (based on each packet timestamp).

The receiver will also send, at intervals, statistics back to the sender to inform it about which packets were lost, which were delayed, and so on. These statistic messages are less important than the audio itself but are still useful for the sender to determine, for example, whether the call can continue or whether the transmission has degraded beyond repair (in which case the call is automatically terminated). Sending such statistics implies opening another socket through the operating system. It is common to see real-time voice sent over UDP while the statistics part would be sent over a TCP socket. In a normal audio call, both sides exchange sounds, and therefore both sides run the previous processes.

Note

Some codecs use Voice Activity Detection, or VAD. When the microphone captures silence, or audio below a target volume threshold, the application decides that there is nothing to send and does not generate any packet for that sampling interval. However, this mechanism sometimes gives the uncomfortable impression of “dead air” to the listener. Many codecs prefer to send the faint sound instead. Others send a simplified (shorter) message that instructs the application on the listener’s end to play instead a preconfigured “reassuring white noise” for that time interval.

Many well-known applications can be recognized by the ports they use. For example, TCP 80 is the signature of web traffic. This identification is more complex for real-time applications because they commonly use a random port in a range. For example, Jabber Audio, like many other real-time voice applications, uses a random UDP port between 16384 and 32766. The port can change from one call to the next. This randomness helps ensure that two calls on the same site would not use the same port (which can lead to confusion when devices renew or change their IP addresses) and improves the privacy of the calls by making port prediction more difficult for an eavesdropper.

Processes like Network Based Application Recognition (NBAR, which is now implementing its second generation and is therefore often called NBAR2) can use the ports to identify applications. When the port is not usable, the process can look at the packet pattern (packet size, frequency, and the like) to identify the application. Looking beyond the port is called Deep Packet Inspection (DPI). NBAR2 can identify close to 1,400 different applications. Identification can rely on specific markers. For example, a client opening a specific (random) port to query a Cisco Spark server in the cloud then receives an answer containing an IP address, and then exchanges packets over the same port with that IP address, is likely to have just opened a Spark call to that address. NBAR2 can easily identify this pattern. When the whole exchange is encrypted, NBAR2 can rely on the packet flow (how many packets, of what size, at what interval) to fingerprint specific applications. NBAR2 can recognize more than 170 encrypted applications with this mechanism.

Therefore, deploying NBAR2 at the edge of your network can help you identify which application is sending traffic. However, running DPI can be CPU intensive. After you have identified the type of traffic carried by each packet, you may want to mark this packet with a traffic identifier so as not to have to rerun the same DPI task on the next networking device but apply prioritization rules instead. In some cases, you can also trust the endpoint (your wireless client for example) to mark each packet directly.

This notion of “trust” will come back in the “trust boundary” discussion, where you will decide whether you want to trust (or not) your clients’ marking.

Layer 3, Layer 2 QoS

Each packet can be marked with a label that identifies the type of traffic that it carries. Both Layer 3 headers (IPv4 and IPv6) and most media Layer 2 headers (including Ethernet and 802.11) allow for such a label. However, Layer 2 headers get removed when a packet transits from one medium to the other. This is true when a packet goes through a router. The router strips Layer 2 off, looks up the destination IP address, finds the best interface to forward this packet, then builds the Layer 2 header that matches that next interface medium type before sending (serializing) the packet onto the medium.

This Layer 2 removal also happens without a router. When your autonomous AP receives an 802.11 frame, it strips off the 802.11 header and replaces it with an 802.3 header before sending it to the Ethernet interface. When the AP is managed by a controller, the AP encapsulates the entire 802.11 frame into CAPWAP and sends it to the WLC. The WLC looks at the interface where the packet should be sent and then removes the 802.11 header to replace it (most likely) with an 802.3 header before sending it out.

In all cases, the original Layer 2 header is removed. For this reason, we say that the Layer 2 header is only locally significant (it is valid only for the local medium, with its specific characteristics and constraints). By contrast, the Layer 3 part of the packet is globally significant (it exists throughout the entire packet journey). This is true for the entire header, but is also true for the QoS part of that header. As a consequence, the QoS label positioned in the Layer 3 section of the packet expresses the global QoS intent—that is to say a view, valid across the entire journey, on what this packet represents (from a QoS standpoint). The label expresses a classification (what type of traffic this packet carries). Because your network carries multiple types of traffic (organized in categories, as detailed in the next section), each label represents a type of traffic but also expresses the relative importance of this traffic compared to the others. For example, if your network includes five traffic categories labeled 1 to 5, and if 5 is the most important category, any packet labeled 5 will be seen as not just “category 5” but also “most important traffic.” However, keep in mind that the label you see is “5,” and nothing else. You need a mapping mechanism to translate this (quantitative) number into a qualitative meaning (“most important traffic”).

The way Layer 3 QoS is expressed depends on the IP system you use (IPv4 or IPv6) and the tagging system you use (for example, IP Precedence or DSCP). Luckily, they are similar and inclusive of one another.

IPv4 QoS Marking

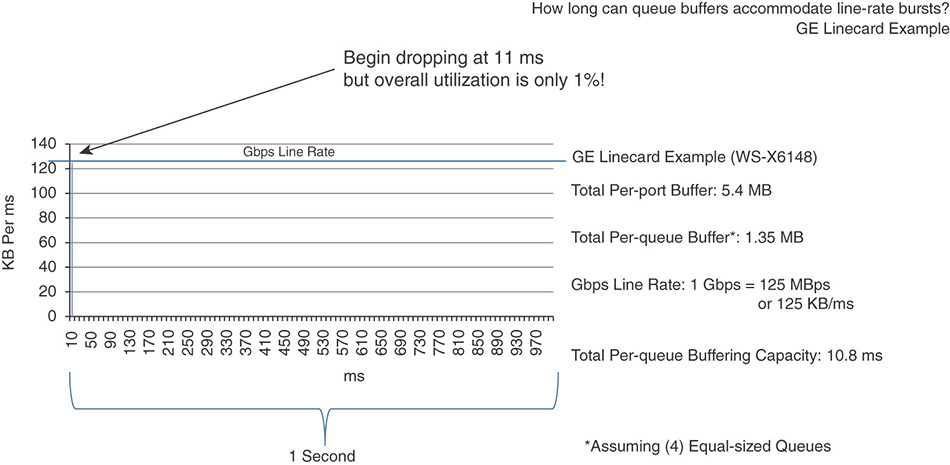

The IPv4 header has a field called Type of Service (ToS).

Note

Do not confuse Layer 3 Type of Service (ToS) and Layer 2 (e.g. 802.1) Class of Service (CoS). CoS is Layer 2, ToS is Layer 3. A way to remember it is to say that ToS is on Top, while CoS is Closer to the cable.

Using ToS, QoS can be expressed in two ways:

Three bits can be used to mark the QoS value. This is the IP Precedence system. You must be aware of its existence, but the next system (DSCP) incorporates IP Precedence. In most networks, IP Precedence is used only to express a QoS label compatible with other systems that also only allow 3 bits to express QoS (for example, 802.1 or 802.11). The IPv4 ToS field contains 8 bits. When IP Precedence is used, only the most significant bits (MSB, the bits on the left side) are used, resulting in a labeling scale from 000 to 111 (0 to 7 in decimal). The other bits (on the right) are usually left to be 0, although they can in theory be used to express other information, such as reliability or delay.

Another system, differentiated services code point (DSCP), extends the number of possibilities. DSCP uses the 6 bits on the left, thus creating 64 different QoS values. The other last 2 bits (on the right side) mark explicit congestion notification (ECN), thus informing the destination point about congestion on the link. For our purpose, these 2 rightmost bits are always 0 (because they do not express a QoS category), and we’ll ignore them in this section. Figure 7-2 shows the location of the QoS field in an Ethernet frame.

Figure 7-2 DSCP and CoS Fields

Both DSCP and IP Precedence keep the rightmost bit at 0 (this bit is called MBZ, “must be zero”). This means, among other things, that you should never find in a standard network an odd DSCP value. All DSCP values are expected to be even numbers (again, unless the network is nonstandard and the admin uses experimental QoS marking).

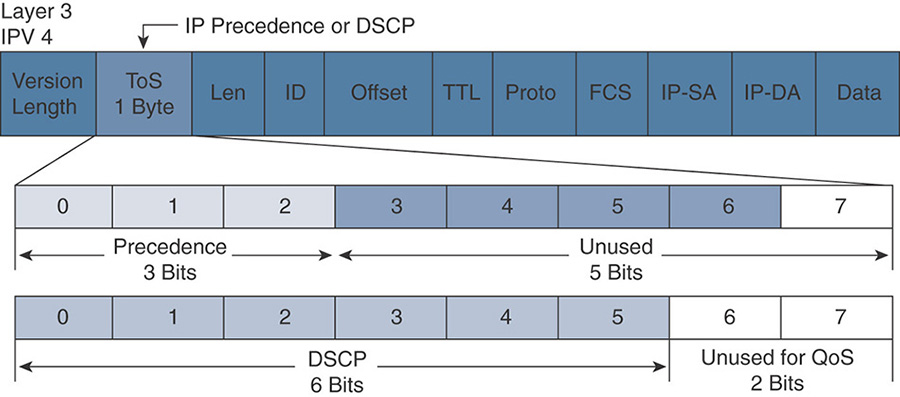

DSCP is more complex than IP Precedence because it further divides the 6 left bits into three sections. As a CCIE Wireless, you do not need to be a QoS expert. In fact, you can probably survive the exam with no clear knowledge of DSCP internals, but gaining some awareness will undoubtedly help you design your networks’ QoS policies properly.

Within the DSCP 6-bit label, the three leftmost bits (the 3 MSBs) express the general importance of the traffic, here again on scale from 000 to 111 (0 to 7 in decimal). “Importance” is a relative term. In theory, you could decide that 101 would mean “super important,” while 110 would mean “don’t care.” If you were to do so, you would be using experimental QoS. Experimental, because translating this importance beyond your own network (into the WAN, for example) would become pretty challenging, as any other network could mean anything they wanted with the same bits. To simplify the label structure and help ensure end-to-end compatibility of packet markings between networks, the IETF has worked on multiple RFCs that organize possible traffic types in various categories, and other RFCs that suggest a standardized (that is, common for all) marking for these different categories of traffic. A great RFC to start from is RFC 4594. Reading it will give you a deeper understanding of what types of traffic categories are expected in various types of networks, and what relative importance and marking they should receive. Cisco (and the CCIEW exam) tend to follow the IETF recommendations.

Note

There is in fact only one exception, voice signaling, that the IETF suggests labeling CS5, while Cisco recommends using CS3. The IETF rationale is that signaling belongs to the Voice category and should therefore be right below the audio flows in terms of priority. The Cisco rationale is that this is signaling (statistical exchanges about the voice flow, and not real-time data itself), and that therefore it should come after other types of real-time traffic, such as real-time video.

In most systems, a higher value (for example, 110 vs. 010) represents a type of traffic that is more sensitive to losses or additional delays. This does not mean that the traffic is more important, but simply that delaying or losing packets for that traffic category has more impact on the network user quality of experience. A typical case is real-time voice and FTP. If you drop a voice packet, it typically cannot be re-sent later (the receiving end has only a short window of opportunity to receive this packet on time and play it in the flow of the other received audio packets). By contrast, an FTP packet can be dropped and re-sent without major impact, especially because FTP uses TCP (which will make sure that the lost packet is identified as such and re-sent properly).

Because the three leftmost bits express the general importance of the traffic, and because you have more bits to play with, each set of leftmost bits represent what is called a class of traffic. For example, you could follow the IETF recommendations and label all your real-time video traffic with the leftmost bits 100. But within that category, that class of traffic, you may have different types of flows. For example, your network may carry the business-relevant Spark real-time video traffic and personal traffic (for example, Viber or Facetime). If congestion occurs, and you have no choice but to drop or delay one video packet, which one should you choose? Because you want to keep your job as a network admin, you will probably choose to forward that Spark video packet where your boss is discussing a million-dollar contract and delay or drop that Facetime video packet where your colleague tells his aunt how great her coffee tastes. This wise choice means that you have to further label your video traffic, and this is exactly what the next two bits in DSCP do. They are called drop probability bits. Remember that the last bit on the right is called MBZ (must be zero) and will therefore always be zero in normal networks, and under normal circumstances. Figure 7-3 shows the structure of how DSCP values translate to per-hop behavior (PHB) classes.

The slight complexity of DSCP is that the class bits and the drop probability work in reverse logic.

The three leftmost bits represent the general traffic class, and the convention is that a higher number means a traffic more sensitive to delay and loss (a traffic that you should delay, or drop less, if possible). So you would delay or drop 010 before you delay or drop 100.

By contrast, the drop probability bits represent, within a class, which traffic should be dropped or delayed first. This means that a drop probability of 11 is higher than a drop probability of 10, and the traffic marked 11 will be dropped before the traffic marked 10.

Let’s take a few examples. Suppose that you have a packet marked 101 00 0. Class is 101, drop probability is 00 (and MBZ is, of course, 0).

Suppose that you have a congestion issue and have to delay a packet marked 101 00 0 or a packet marked 100 00 0. Because 101 is higher than 100, you will probably delay 100 00 0. (We suppose here and later that you follow the IETF reasonable and common rules.)

Now suppose that you have to choose between a packet marked 100 10 0 and a packet marked 100 11 0. Because the class is the same, you cannot use the class to make a choice. But because one packet has a drop probability of 11, higher than the other (10), you will choose to delay the packet marked 100 11 0 (because it belongs to the same class as the other but its drop probability is higher). This difference between the class and the drop probability confuses a lot of people, so don’t feel sad if you feel that it is uselessly complicated. In the end, you just get used to it.

Now, if you have to choose between a packet marked 101 11 0 and a packet marked 100 01 0, you will delay or drop the packet marked 100 01 0 because its class is lower than the other one. You see that the class is the first criterion. It is only when the class is the same that you will look at the drop probability. When the class is enough to make a decision, you do not look at the drop probability.

Per Hop Behavior

These DSCP numbers can be difficult to read and remember. Most of the time, the binary value is converted to decimal. For example, 100 01 0 in binary is 34 in decimal. 34 is easier to remember, but it does not show you the binary value that would help you check the class and drop probability values.

The good news is that this general QoS architecture, called Diffserv (for Differentiated Services, because different packets with different marking may receive different levels of service, from “send first” to “delay or drop”), also includes a naming convention for the classes. This naming convention is called the Per Hop Behavior (PHB). The convention works the following way:

When the general priority group (3 bits on the left) is set to 000, the group is called best-effort service; no specific priority is marked.

When the general priority group contains values but the other bits (Drop Probability and MBZ) are all set to 0, the QoS label is in fact contained in 3 bits. As such, it is equivalent to the IP Precedence system mentioned earlier. It is also easily translated into other QoS systems (802.11e or 802.1D) that use only 3 bits for QoS. That specific marking (only 3 bits of QoS) is called a Class Selector (CS), because it represents the class itself, without any drop probability.

When the general priority group ranges from 001 to 100 (001, 010, 011, and 100) and contains a drop probability value, the traffic is said to belong to the Assured Forwarding (AF) class. Transmission will be assured, as long as there is a possibility to transmit.

When the general priority group is set to 101, traffic belongs to the Expedited Forwarding (EF) class. This value is decimal 5, the same as the value used for voice with IP Precedence. In the EF class, for voice traffic, the drop probability bits are set to 11, which gives a resulting tag of 101110, or 46. “EF” and “46” represent the same priority value with DSCP. This is a value you should remember (Voice = 101110 = EF = DSCP 46), but you should also know that 44 is another value found for some other voice traffic, called Voice Admit (but it’s less common). The EF name is usually an equivalent to DSCP 46 (101110). EF is in reality the name of the entire class. Voice Admit (DSCP 44) also belongs to the EF category, but it is handled specially (with Call Admission Control), so you will always see it mentioned with a special note to express that the target traffic is not your regular EF = DSCP 46.

With the exception of EF, all the other classes are usually indicated with a number that shows which level they represent. For example, AF1 will represent all traffic starting with 001. AF4 will represent all traffic starting with 100. Then, when applicable, a second number will show the drop probability. For example, the PHB AF41 represents the DSCP value 100 01 0 (DSCP 34 in decimal).

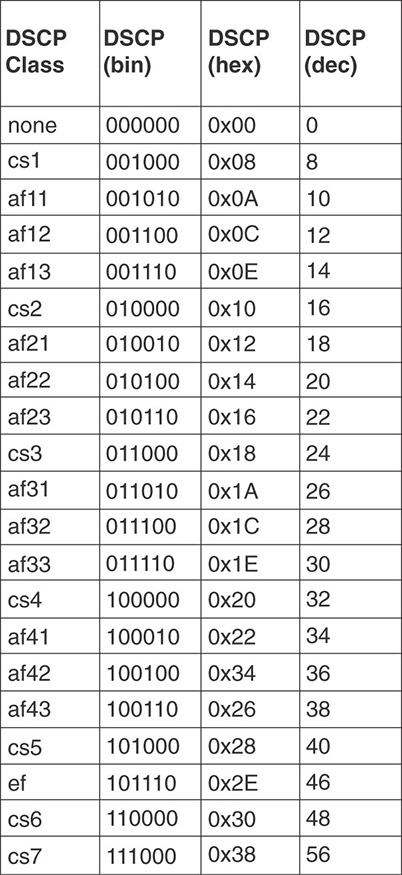

Table 7-1 summarizes the AF values.

AF Class |

Drop Probability |

DSCP Value |

AF Class 1 |

Low |

001 01 0 |

|

Medium |

001 10 0 |

|

High |

001 11 0 |

AF Class 2 |

Low |

010 01 0 |

|

Medium |

010 10 0 |

|

High |

010 11 0 |

AF Class 3 |

Low |

011 01 0 |

|

Medium |

011 10 0 |

|

High |

011 11 0 |

AF Class 4 |

Low |

100 01 0 |

|

Medium |

100 10 0 |

|

High |

100 11 0 |

When there is no drop probability, the class is called CS and a single number indicates the level. For example, the PHB CS4 represents the DSCP value 100 00 0.

Figure 7-4 will help you visualize the different values. Notice that AF stops at AF4 (there is no AF5, which would overlap with EF anyway, but there is also no AF6 or AF7). Beyond Voice, the upper classes (CS6 and CS7) belong to a category that RFC 4594 (and others) call the Network Control traffic. This is your EIGRP update or your CAPWAP control traffic. You are not expected to have plenty of these, so a common class is enough. Also, you should never have to arbitrate between packets in this class. In short, if you drop your CS6 traffic your network collapses, so you should never have to drop anything there (RFC 4594 sees CS7 as “reserved for future use,” so your network control traffic likely only contains CS6 packets).

You do not need to learn all the values in this table, but you should at least know DSCP 46 (EF) for Voice, AF 41 (DSCP 34), which are used for most real-time video flows, and CS3 (voice signaling in Cisco networks).

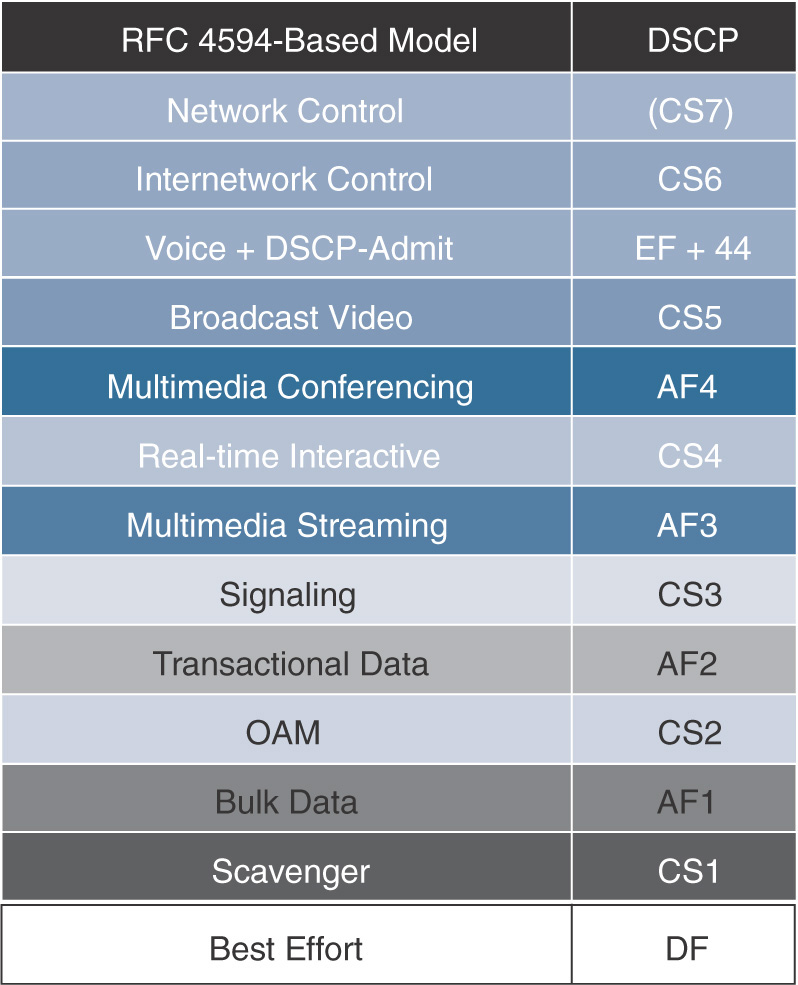

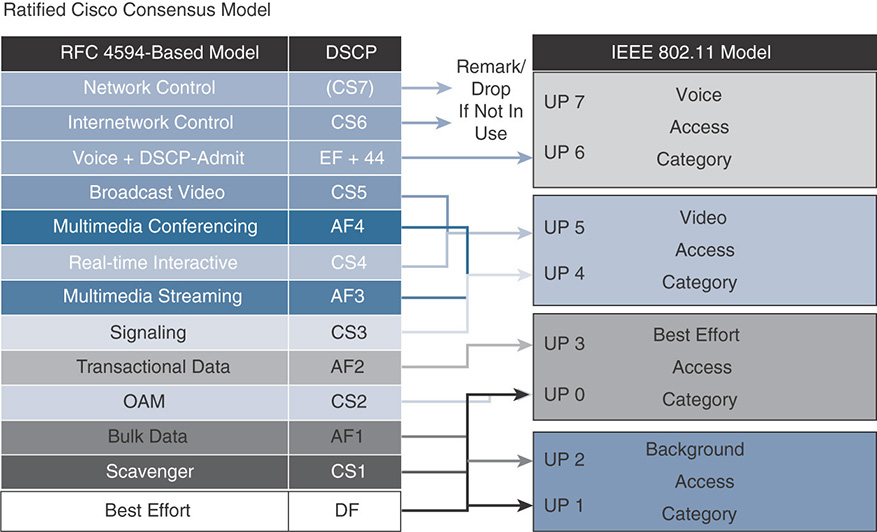

You may wonder how this table maps to the various 12 classes, and this is an important question. Figure 7-5 provides a structured view:

Notice that traffic higher in the list is not “more important.” It is usually more sensitive to delay and loss (as you learned earlier). The reason why this is fundamental to the understanding of QoS is “marking bargaining.” Throughout your QoS career, you will often encounter customers with requirements like this:

Customer: I want to prioritize my business-relevant traffic. I use Spark audio.

You: I see with AVC that you also have Facetime audio traffic. We’ll remark this one as Best Effort and will keep Spark audio as EF.

Customer: Wait, Facetime is not business relevant but it is still Voice, so it is delay sensitive.

You: Yes, but if we mark Facetime EF, it will compete with your business-relevant Spark audio traffic.

Customer: Okay, but can’t you give it something higher than BE that shows that we care for our employees’ personal calls, even if they are not business relevant? Something, like, say 38 or 42?

You: Dear customer, you have absolutely no understanding of QoS.

Your answer to the customer is very reasonable (at least technically). Each class will correspond to specific configuration on switches, routers (and you will see soon, WLCs and APs). Facetime is either in the same bucket as Spark and should be treated the same way (making sure that 50 packets per seconds can be forwarded with low delay, and so on), or it is not business relevant and is sent as best effort (which means “We’ll do our best to forward these packets as fast as we can, unless something more important slows us down”). There is no QoS bargaining, for multiple reasons. One of them is that you probably do not have a QoS configuration for DSCP 42, as your customer suggests. Another reason is that if you create a configuration for DSCP 42, you will have to borrow buffers and queues that are now used for something else, and your Facetime packets will compete with other traffic (for example, with the CEO daily broadcast). If you mark Facetime “something else,” like CS4 or CS5, it will again conflict with other traffic.

These are the reasons why Table 7-1 represents a series of classes, each of which will correspond to a specific set of configured parameters to service that class to best match its requirements and needs. It does not mean that voice is more important than broadcast video, for example. The table just lists typical traffic types and a label that can be applied to each traffic type. In turn, this label will be used on each internetworking device to recognize the associated traffic. With the knowledge of what this traffic type needs, each networking device will forward the packets in conditions that will provide the best possible service, taking into account the current conditions of the network links.

This understanding is also important to realize that Scavenger (CS1) is not “higher” than Best Effort (CS0, 000 00 0), even if it is listed above BE. In fact, all your configurations will purposely send CS1 traffic only if there is nothing else in the queue. If a BE packet comes in, it will go first, and CS1 will only be sent at times when it does not conflict with something else.

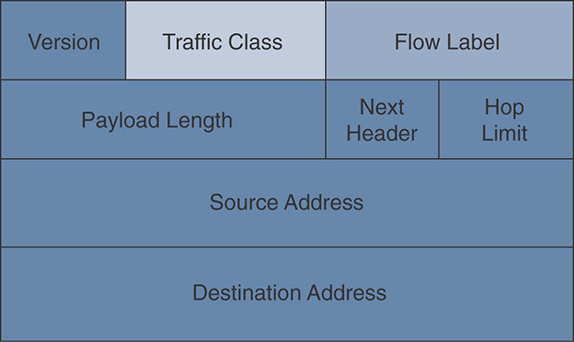

IPv6 Layer 3 Marking

DSCP may sound a bit complicated, but it is very efficient. We covered the IPv4 case; now let’s spend a few minutes on IPv6. The good news is that IPv6 QoS marking follows the logic and mechanics of IPv4. The IPv6 header also has an 8-bit field called the Traffic Class field, where you can mark QoS. Just like for IPv4 QoS, we ignore the rightmost 2 bits. They are also called Explicit Congestion Notice bits and are used on some media to inform the other end about a congestion on the line. Because we do not use them in Wi-Fi and because they do not express a QoS category, we ignore them.

The other 6 bits are the same as in the IPv4 header, so you can put your DSCP value there. This makes DSCP easy to use. The same value and categories are expressed in the IPv4 and the IPv6 header, as shown in Figure 7-6.

IPv6 adds another section in the header called the Flow Label. This field is not present in IPv4. It is useful for QoS in general because it allows the sender to put a label on a traffic flow. This helps identify all packets in a given flow, and helps classification. With labels, your intermediate networking device can check the label and identify the traffic without having to look at ports or run a deep packet inspection. This is useful in general, but you will not be tested on IPv6 Flow Labels on the CCIE Wireless exam.

Layer 2 802.1 QoS Marking

Remember that Layer 3 QoS marks the global QoS intent for your packet, the general category to which this packet should belong. Your packet is then transported across different media. Each medium has specific characteristics and constraints, and they cannot all categorize QoS families over 6 bits. You saw that DSCP used 6 bits, which translated into (in theory) 64 possible categories. In fact, a count from CS7 to CS0 shows that we are only using 21 values, not 64. However, the DSCP scale is open to 64 possibilities, and your network can decide to implement some experimental values if needed.

Many Layer 2 mediums do not have the possibility of expressing 64 levels of categorization because of physical limitations. Switches, for example, build transmission buffers. You may remember from the section “The Notion of Differentiated Treatment” that the general buffer is split into subbuffers for each type of traffic category. If you had to build 64 subbuffers, each of them would be too small to practically be useful or your general buffer would have to be enormous. In both cases, prioritizing 64 categories over a single line does not make much processing sense when you are expected to switch packets at line rate (1 Gbps or 10 Gbps). However, most switches can use DSCP but they use an 8- or 12-queue model.

The Ethernet world was the first to implement a QoS labeling system. It was built around 3 bits (8 categories, with values ranging from 0 to 7). The initial IP Precedence system at the IP layer was founded on the idea that there would be an equivalence between the Layer 2 and the Layer 3 QoS categories. But as you know, DSCP expanded the model. Today, the Ethernet world is aware that using L3 QoS is reasonable and that Layer 2 should translate the DSCP value, when needed, into the (older) Ethernet 3-bit model. You will find many switches that understand the Ethernet 3-bit QoS model but only use the DSCP value for their traffic classification and prioritization.

You are not taking a Routing and Switching CCIE, so you do not need to be an expert in Layer 2 QoS. However, 802.11 got its inspiration from 802.1 QoS, so it may be useful for you to see the background picture.

That picture may be confusing for non-Switching people, because 802.1 QoS appeared historically in several protocols, and you may have heard of 802.1p, 802.1D, and 802.1Q. In fact, it is just a question of historic perspective. 802.1p is the name of an IEEE task group that designed QoS marking at the MAC layer. Being an 802.1 branch, the target was primarily Ethernet. They worked between 1995 and 1998. The result of their work was published in the 802.1D standard.

Note

In the 802.1 world, lower cases are task groups, like 802.1p, and capital letters are full standards, like 802.1D. This is different from 802.11, where there is only one standard and the standard itself has no added letter. Lower cases are amended to the standard (like 802.11ac), and capital letters indicate deprecated amendments, usually politely named “recommended practices,” like 802.11F.

802.1D incorporates the 802.1p description of MAC Layer QoS but also includes other provisions, like interbridging or Spanning Tree. A revision of 802.1D was published in 2004; however, the 802.1Q standard, first published in 2003 and revised in 2005 and 2011, leverages the 802.1D standard for part of the frame header while adding other parameters (like the VLAN ID). In summary, 802.1p is the original task group, for which results were published in 802.1D and whose elements appear in the 802.1Q section of the 802.1 and 802.3 headers. Very simple.

More critical than history is the resulting QoS marking logic. 802.1p allows for 3 bits to be used to mark QoS, with values ranging from 0 to 7 (8 values total). Each value is called a Class of Service (CoS). They are named as shown in Table 7-2, in 802.1Q-2005.

Table 7-2 Classes of Service Names and Values

Class Name |

Value (Binary/Decimal) |

Best Effort |

0 / 000 |

Background |

1 / 001 |

Excellent Effort |

2 / 010 |

Critical Applications |

3 / 011 |

Video |

4 / 100 |

Voice |

5 / 101 |

Internetwork control |

6 / 110 |

Network Control |

7 / 111 |

Caution

Table 7-2 is not what the original 802.1p-1998 or 802.1D said. We’ll come back to this point soon. However, this is the current practice today, based on 802.1Q.

You see that there is a natural mapping from DSCP CS classes to these 8 values. CS7 and CS6 map to CoS 7 and 6, respectively. Voice (EF) maps to CoS 5, Video (AF4 family) to CoS 4, the critical Signaling (CS3) and Multimedia Streaming (AF3) map to CoS 3, all data (AF2 and CS2) maps to CoS 2, BE is still 0, and Background (CS1) is CoS 1. You need to squeeze several classes into one, and there are a few uncomfortable spots (like CS5), but overall, the DSCP to CoS mapping works.

It would be much more difficult to map the other way around, from CoS to DSCP, because you would not be able to use the 21 values (not to mention 64); you would use only 8. This will come back to us soon.

802.11 QoS Marking

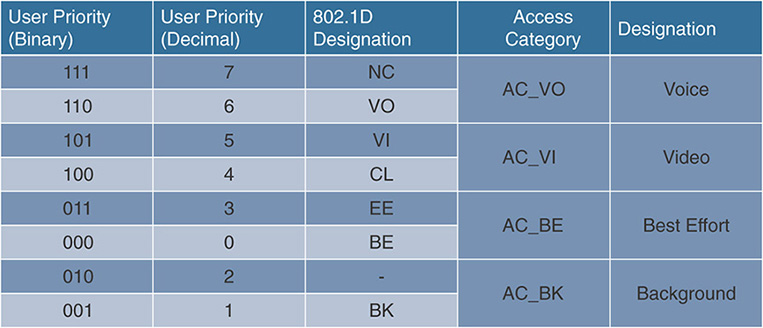

It is now time to look at 802.11 L2 QoS marking. It looks like we took the scenic route to get there, but all you learned will help you make sense of what will look strange in the way 802.11 opposes 802.1 or DSCP. You undoubtedly remember from your CCNA or CCNP studies that the 802.11e amendment, published in 2005 and incorporated in 802.11-2007, lists the labels displayed in Figure 7-7.

This table is an approximation of the table that appears as 20.i in 802.11e (and Table 10.1 in 802.11-2016). We added the binary value. A few elements are striking, in light of the previous pages:

The labels are called priorities (User Priorities, UP). This is because there is not a lot of buffering play available in a half-duplex medium. We will come back to this point very soon.

UPs are grouped two by two into access categories. Each access category has an intended purpose (voice, and so on).

The values differ notably from the 802.1Q table. This is something a wireless expert must understand.

Table 7-2 was 802.1Q. Table 7-3 is the original 802.1p table that was translated into 802.1D-2004:

Table 7-3 802.1p / 802.1D-2004 Classes

Class Name |

Value (Binary/Decimal) |

Best Effort |

0 / 000 |

Background |

1 / 001 |

Spare |

2 / 010 |

Excellent Effort |

3 / 011 |

Controlled Load |

4 / 100 |

Video |

5 / 101 |

Voice |

6 / 110 |

Network Control |

7 / 111 |

You can immediately see that there is a disconnect between 802.1p/802.1D and 802.1Q-2005. Voice is 6 in 802.1p/802.1d-2004 but 5 in 802.1Q-2005. Similarly, Video gets 5 versus 4, not to mention Excellent Effort downgraded from 4 to 3. At the same time, it looks like 802.11 is following 802.1p/802.1D instead of following 802.1Q. What gives?

It all comes back to this notion of the QoS model. Back at the end of the 1990s, when the 802.1p group was working, a standard model was around four queues, and a new trend was to add more queues. The most urgent traffic was seen as Network Control, and the most urgent client data traffic was seen as Voice. This is what gave birth to the 802.1p model.

When the 802.11e work started in 2000, it built on the work of a consortium, Wireless Multimedia Extensions (WME), led by Microsoft, that was already trying to implement QoS markings among Wi-Fi vendors. They (of course) followed the IEEE recommended values of the days (802.1p). The 802.11e draft was finished in 2003, and the 2003 to 2005 period was about finalizing details and publishing the document.

In between, the IETF recognized that Network Control Traffic should be split into two categories: level 6, which is the standard internetwork control traffic, and an additional category of even higher level that should in fact be reserved for future use or for emergencies (label 7). In such a model, Voice is not 6 anymore but 5, and Video moves from 5 to 4. This change was brought into the inclusion of 802.1D into the revised 802.1Q-2005, but 802.11e was already finalized.

The result is a discrepancy between the standard 802.1Q marking for Voice and Video (5 and 4, respectively), and the standard 802.11e marking for these same traffic types (6 and 5, respectively). A common question you will face as an expert is: Why did 802.11 keep this old marking? Why did they not update like 802.1Q did?

There are multiple answers to this question, but one key factor to keep in mind is that the 802.11e marking is neither old, wrong, obsolete, nor anything along that line. Ethernet is a general medium that can be found at the Access, Distribution, and Core layers. You expect any type of traffic on such a medium, including routing updates. By contrast, Wi-Fi is commonly an access medium. You do not expect EIGRP route updates to commonly fly between a wireless access point and a wireless client (wireless backbones are different and are not access mediums). As such, there is no reason to include an Internetwork Control category for 802.11 QoS. We already lose UP 7 if we keep it for future use as recommended by IETF; it would be a waste to also lose UP 6 and be left with only 5 values (plus 0). Therefore, the choice made by the IEEE 802.11 group, to keep the initial 802.1D map, is perfectly logical, and is not laziness (and yes, the 802.11 folks also know that 802.1Q changed the queues). In the future, it may happen more that 802.11 becomes a transition medium toward other networks (for example, toward your ZigBee network of smart home devices). If this happens, it may make sense to change the 802.11e queues to include an additional Internetwork Control queue. Until that day comes, a simple translation mechanism happens at the boundary between the 802.11 and the 802.1 medium (UP 6 is changed to CoS 5, UP 5 to CoS 4, while the rest of the header is converted too). As a CCIE Wireless, you need to know that these are the right markings and resist the calls to unify the markings. Current best practices are to use UP6/PHB EF/DSCP 46/CoS 5 for Voice and UP 5/PHB AF41/DSCP 34/CoS 4 for real-time video. The only exception is that CoS tends to be ignored more and more, as DSCP is used instead (more on this in the upcoming section). Any request to use UP 5 for Voice is a trap into which you should not fall.

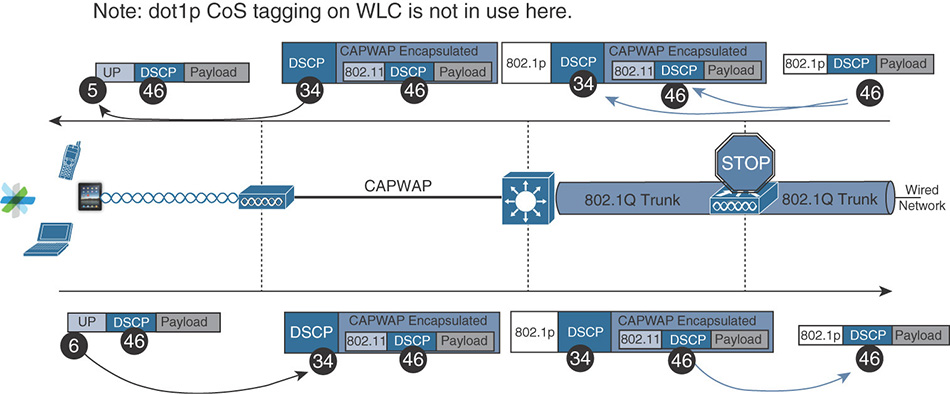

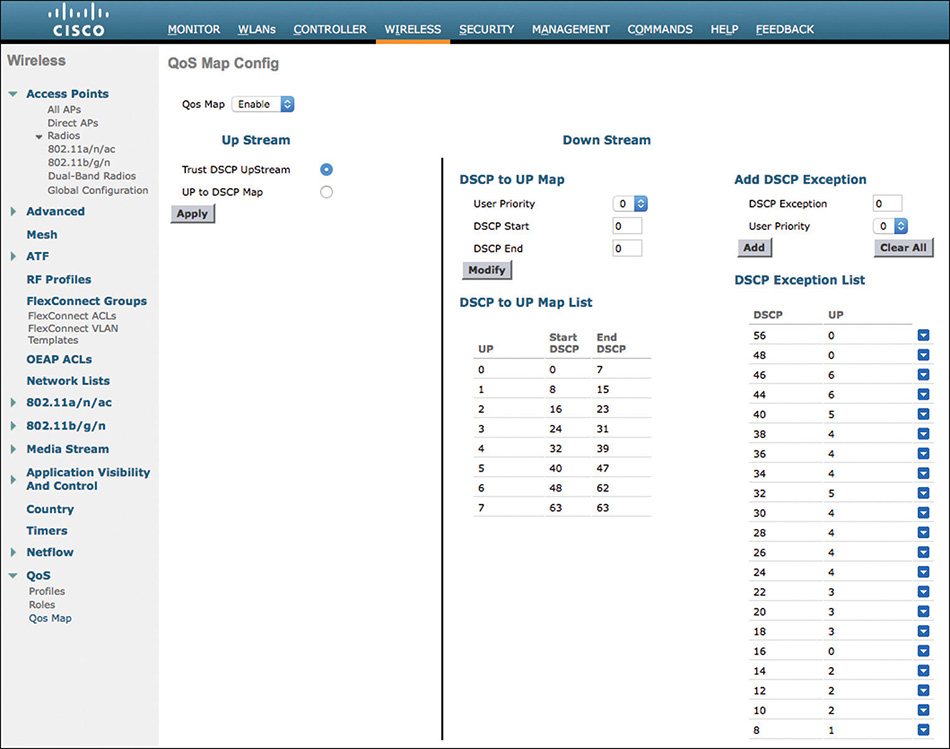

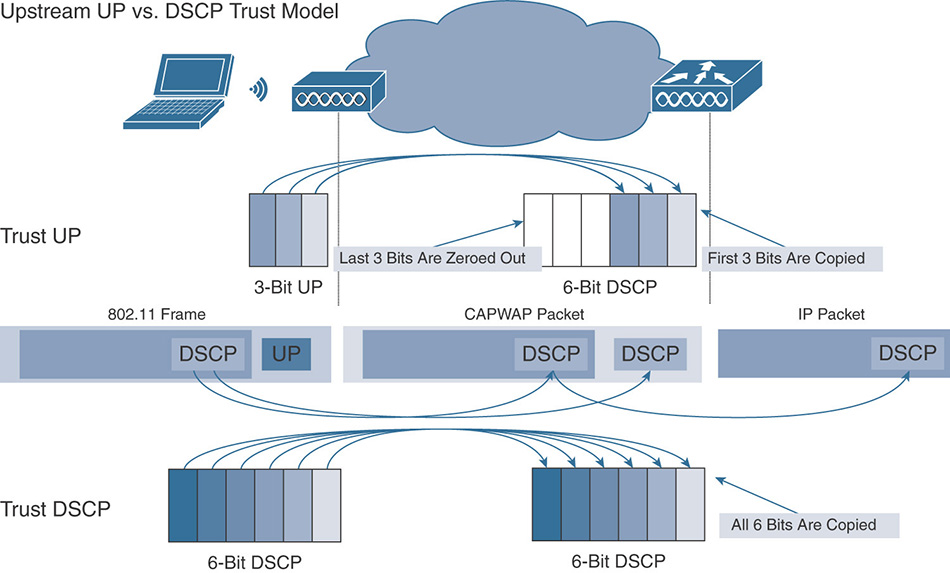

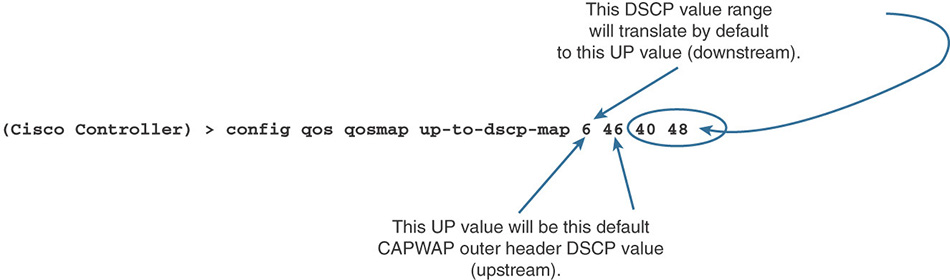

DSCP to UP and UP to DSCP Marking

In most scenarios, you should try to use DSCP to express your QoS intent (because DSCP is independent from the local medium). However, there are cases where you need to work from UP and decide the best DSCP, or when you need to decide which UP is best for a given DSCP value. These requirements mean that you need to know what the recommended practices are for this mapping. Fear not, there is a macro that we will examine in the “Configurations” section that will help you configure the right numbers.

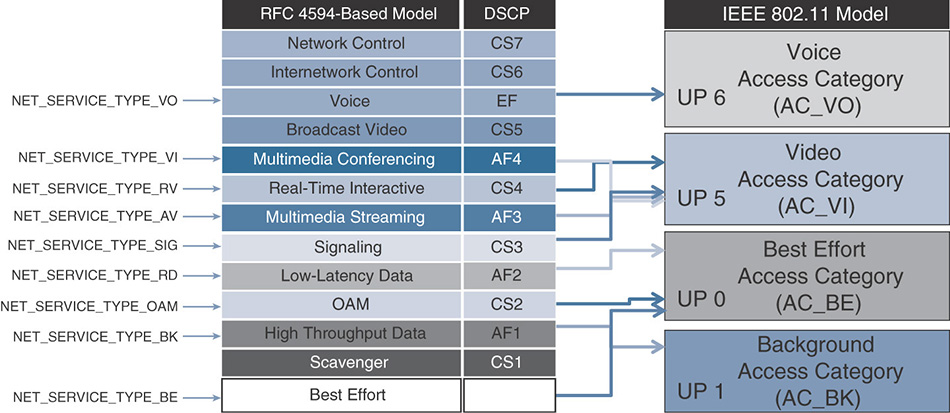

Downstream

Downstream traffic will come from the wire and will have a DSCP value. You need to decide which UP to use. Table 7-4 shows the Cisco recommended mapping for a 12-class QoS model. You will see each of 12 categories, their associated recommended DSCP marking (as per RFC 4594), and the associated recommended UP marking. This mapping, shown in Figure 7-8, is not just recommended by Cisco but is also an IETF standard (RFC 8325, https://tools.ietf.org/html/rfc8325).

Table 7-4 UP to DSCP Recommended Mapping

Incoming UP Value |

Recommended DSCP Value to Map To |

0 - 000 |

0 (CS0) – 000 00 0 |

1 - 001 |

8 (CS1) – 001 00 0 |

2 - 010 |

16 (CS2) – 010 00 0 |

3 - 011 |

24 (CS3) – 011 00 0 |

4 - 100 |

32 (CS4) – 100 00 0 |

5 - 101 |

34 (AF41) – 100 01 0 |

6 - 110 |

46 (EF) – 101 11 0 |

7 - 111 |

56 (CS7) – 111 00 0 |

Notice that this model uses all UPs (except 7, still reserved “for future use”). This rich mapping implies that some traffic, within the same access category (AC), will have different UPs. You will see in the “Congestion Management” section that the access method (AIFSN, TXOP) is defined on a per access category basis. This implies that both UPs in the same AC should have the same priority level over the air. However, Cisco APs (and most professional devices) have an internal buffering system that prioritizes higher UPs. In other words, although there is no difference between UP 4 and UP 5 as far as serialization goes (the action of effectively sending the frame to the air), the internal buffering mechanisms in Cisco APs will process the UP 5 frame in the buffer before the UP 4 frame, thus providing an internal QoS advantage to UP 5 over UP 4, even if you do not see this advantage in the IEEE 802.11 numbers.

Upstream

Upstream traffic should come with DSCP marking (see the next section). In that case, you should use DSCP and ignore UP. However, you may have networks and cases where UP is all you have to work from, and you need to derive the DSCP value from the UP value. This is a very limiting case because you have only 8 UP values to work from, and this does not fit a 12-class model. It also makes it impossible to map to potentially 64 DSCP values. Even if you keep in mind that only 21 DSCP values are really used (counting all the AF, CS, and EF values in the DSCP table in the previous pages), 8 UPs definitely is not enough. So you should avoid using UP to derive DSCP whenever possible, but if you have to make this mapping, the recommended structure is shown in Table 7-4.

Note that the mapping structure is very basic: For most UP values, simply map to the generic Class Selector (CS). Then, map Video and Voice to their recommended DSCP value (AF41 and EF, respectively).

Note

There is an important semantic exception to make for this table. You will see that UP 7 is recommended to be mapped to CS 7. This is the mapping recommendation. This mapping recommendation does not mean that you should have UP 7 or CS7 traffic in your network. It just says that if you have UP 7 traffic in your network, the only logical DSCP value to which UP 7 could be mapped is CS 7.

However, you should not have any UP 7 (or DSCP CS 7) traffic in your network, first because there is typically no network control traffic that would flow through your Wi-Fi access network (unless your Wi-Fi link bridges to other networks, in which case it is not “access” anymore but has become “infrastructure”), but also because CS7 is reserved for future use. So the actual recommendation is to remap any incoming CS 7 traffic (or UP 7 traffic) to 0, because such marking is not normal and would be the sign of something strange happening in your network (unless you are consciously using this marking for some experimental purpose). You will see in the “Configurations” section how this remarking is done.

Even if you have network control traffic flowing through your Wi-Fi network, the IETF recommendation is in fact to use CS 6 and map it to UP 6. It will be in the same queue as your voice traffic, which is not great. But UP 6 is better than UP 7 (which should stay reserved for future use). The expectation is that you should not have a large volume of network control traffic anyway. Therefore, positioning that traffic in the same queue as voice is acceptable because of the criticality of such traffic and the expected low volume. If you have a large volume of network control traffic over your Wi-Fi link, and you plan to also send real-time voice traffic on that link, you have a basic design issue. A given link should not be heavily used for voice traffic and be at the same time a network control exchange highway.

Trust Boundary and Congestion Mechanisms

Marking packets correctly is critical, and understanding how to translate one marking system into another when packets are routed or switched between mediums is necessary. However, marking only expresses the sensitivity of a packet to delay, jitter, loss, and bandwidth constraints. After this sensitivity is expressed, you need to look at it and do something with it.

Trust Boundary

At this point, you should understand that traffic can be identified (using various techniques, including Deep Packet Inspection, or simple IP and port identification). After identification is performed, you can apply a label to that traffic to express its category. This label can be applied at Layer 2, at Layer 3, or both. Layer 3 expresses the general intent of the traffic, while Layer 2 expresses the local translation of that general intent. After a packet is labeled, you do not need to perform classification again (you already know the traffic category)—that is, if you can trust that label. This brings the notion of trust boundary. The trust boundary represents the point where you start trusting the QoS label on a frame or packet. At that point, you examine each packet and decide its classification and associated marking. Beyond that point, you know that packets have been inspected and that their marking truly reflects their classification. Therefore, all you have to do is act upon the packet QoS label (to prioritize, delay, or drop packets based on various requirements examined in the next section).

But where should the trust boundary be located? Suppose that you receive a packet from a wireless client and this packet is marked EF (DSCP 46). Should you automatically assume that this packet carries voice traffic and should be prioritized accordingly, or should you examine the packet with Deep Packet Inspection (DPI) and confirm what type of traffic is carried? You may then discover that the packet is effectively voice, or that it is BitTorrent.

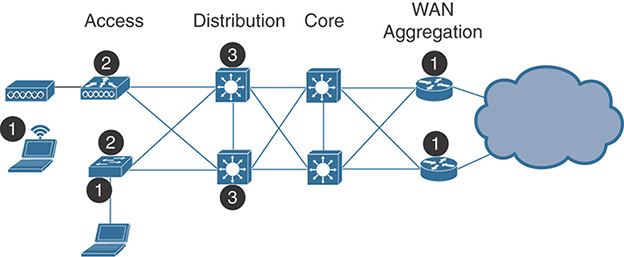

There is no right and wrong answer for this trust question, and Figure 7-9 illustrates the various options. In a corporate environment, where you expect only corporate devices and corporate activity, you may decide to fully trust your endpoints and clients. From an efficiency standpoint, this scenario is optimal because you should classify and recognize traffic as close as possible to the traffic source. If classification is done at the endpoint level, and all you have to do is act upon the marking displayed in the endpoint packets, your network QoS tasks are all around prioritization and congestion management. Classifying at the endpoint level removes the need for the network to perform DPI. DPI is CPU-intensive, so there is a great advantage in trusting the client marking. In that case, your trust boundary is right at the client level (points labeled 1 on the left).

However, in most networks, you cannot trust the client marking. This lack of trust in the client QoS marking is not necessarily a lack of trust in the users (although this component is definitely present in networks open to the general public) but is often a consequence of differences in QoS implementations among platforms (in other words, your Windows clients will not mark a given application the same way as your MacOS, iOS, or Android clients). Therefore, you either have to document each operating system behavior for each application and create individual mappings and trust rules accordingly, or run DPI at the access layer (on the access switch for wired clients and on the AP or the WLC for wireless clients). In that case, your trust boundary moves from the client location to the access layer. This location of the trust boundary is also acceptable. This is the reason why you will see NBAR2/AVC be configured commonly on supporting access switches, and on APs/WLCs. They are the access layer (labeled 2 in the illustration). On the other side of the network, the first incoming router is the location where AVC rules will also be configured, because the router is the incoming location for external traffic. (It is the point under your control that is located as close as possible to the traffic source when traffic comes from the outside of your network, and is therefore also labeled 1 in the illustration.)

If you cannot perform packet classification at the access layer, you can use the aggregation layer (labeled 3 in the illustration). However, traffic from all your access network transits through aggregation. Therefore, performing classification at this point is likely to be highly CPU intensive and should therefore be avoided, if possible, especially if classification means DPI.

One point where you should never perform classification is at the core layer. At this layer, all you want to do is to switch packets as fast as possible from one location to another. This is not the place where traffic inspection should happen.

As a wireless expert, you should know that the point of entry to your network (the WAN aggregation router) is one location where classification should occur. You should also decide whether you can trust your wireless clients’ marking. As you will see later in this chapter, in most cases you cannot trust the client marking. The reason is not so much that you cannot trust your users but rather that different operating systems have different rules for application QoS marking. Therefore, unless your network is exclusively composed of iOS and MacOS devices, with a very small set of applications, running AVC on the WLC will be required if you want to have precise QoS rules in your network. This is a common rule in the CCIE Wireless exam. In the real world, running AVC consumes CPU resources, and you will want to examine the impact of AVC on your WLCs CPU before freezing your configuration to AVC Enabled and leaving for a 3-month vacation.

Congestion Management

The trust boundary determines the location where you start trusting that the QoS label present on a packet truly reflects the packet classification. Regardless of where the trust boundary lies, there will be locations in the network (before or after the trust boundary) where you will have to act upon packets to limit the effect of congestion. It is better if your trust boundary is before that congestion location (otherwise you will act upon packets based on their QoS label without being sure that the QoS label is really representing the packet class).

Multiple techniques are available to address existing or potential congestion. In QoS books, you will see many sections on this topic because this is one of the main objectives of QoS. However, as a wireless expert, you do not need deep insight into switching and routing techniques to address observed or potential congestion. In the context of CCIE Wireless, this section is limited to the techniques that are relevant to your field.

802.11 Congestion Avoidance

One key method to limit the effect of congestion is to avoid congestion in the first place. This avoidance action is grouped under the general name of congestion avoidance.

In your CCNA studies, you heard about the 802.11 access method, Carrier Sense Multiple Access with Collision Avoidance (CSMA/CA). This is one implementation of congestion avoidance, where any 802.11 transmitter listens to the medium until the medium is free for the duration of a Distributed Interframe Space (DIFS).

Note

Remember that there are two time units in a cell, the slot time and the short interframe space (SIFS). With 802.11g/a/n/ac, the slot time is 9 μs and the SIFS 16 μs. The DIFS is calculated as 1 DIFS = 1 SIFS + 2 slot times. The slot time is the speed at which stations count time (especially during countdown operations).

This “wait and listen” behavior is already a form of congestion avoidance. Then, to avoid the case where more than one station is waiting to transmit (in which case both stations would wait for a silent DIFS, then transmit at the end of the silent DISF, and thus collide), each station picks up a random number within a range, then counts down from that number (at the speed of one number per slot time) before transmitting. At each point of the countdown, each station is listening to the medium. If another station is transmitting, the countdown stops, and resumes only after the medium has been idle for a DIFS. This random number pick, followed by countdown, is also a form of congestion avoidance because it spreads the transmissions over a period of time (thus limiting the number of occurrences where two stations access the medium at the same time).

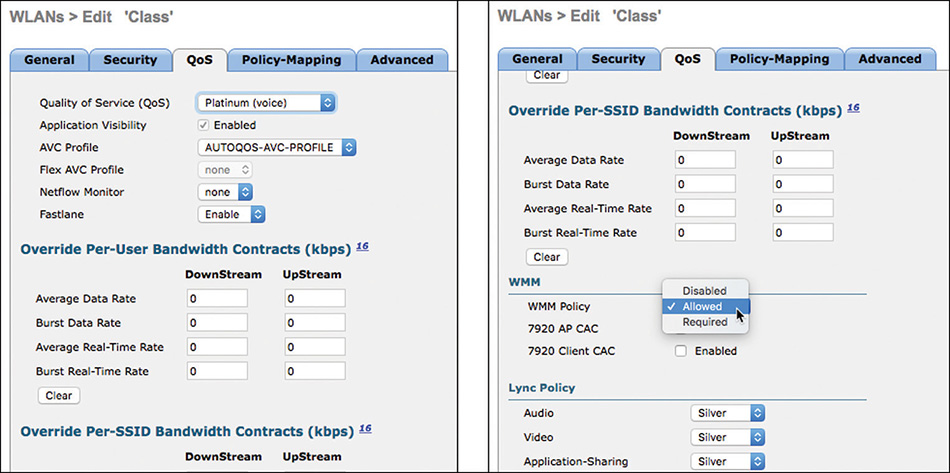

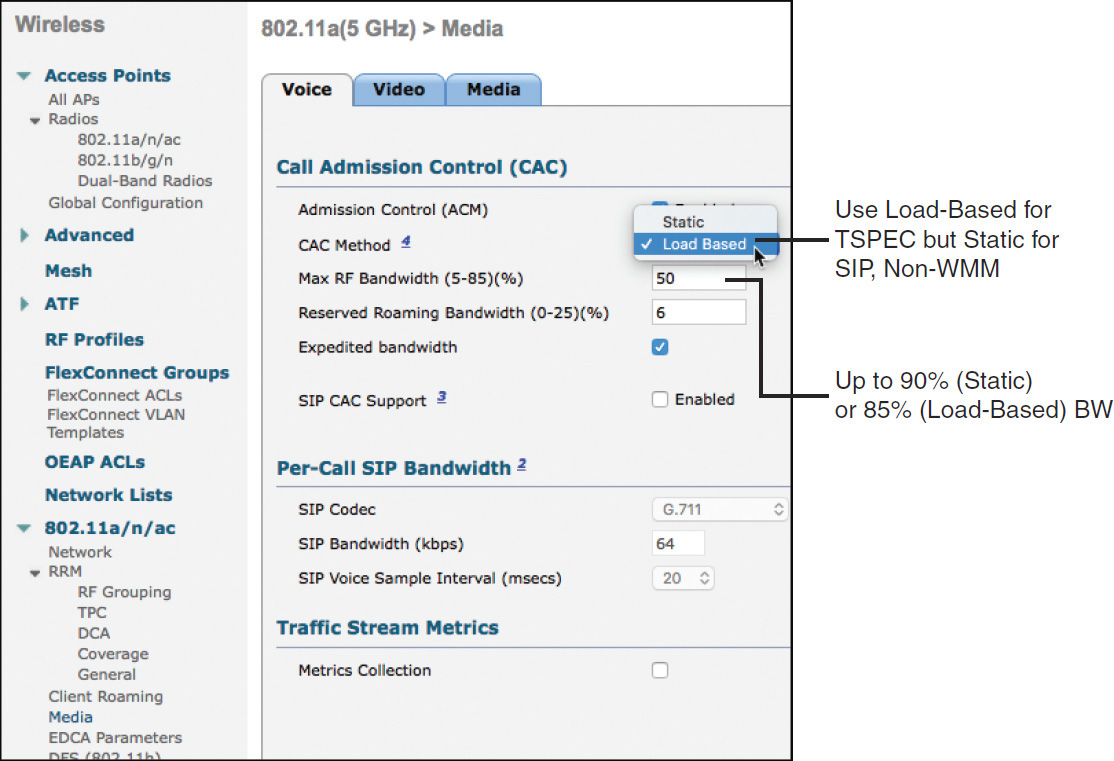

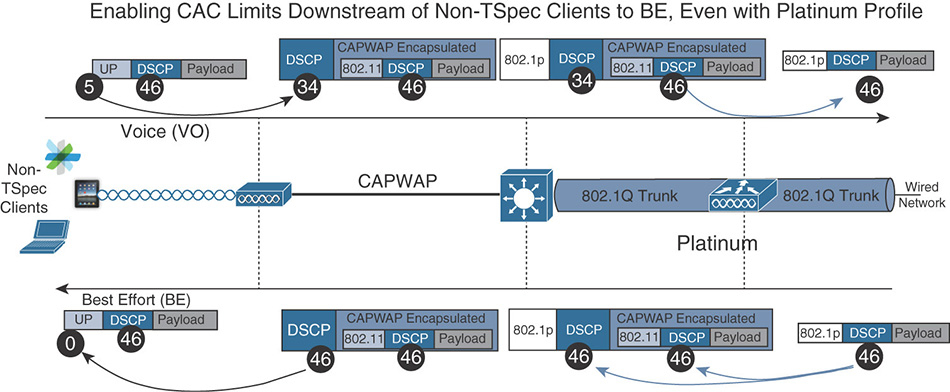

If your network is 802.11n or 802.11ac, it must implement WMM, which is a Wi-Fi Alliance certification that validates a partial implementation of 802.11e. WMM validates that devices implement access categories (AC). It tests that a device has four ACs but does not mandate a specific DSCP to UP mapping, and within each AC does not verify which of the two possible UPs is used. As 802.11n was published in 2009, it is likely that your networks will implement the WMM access method rather than the legacy access method.

WMM also implements the part of 802.11e called Enhanced Distributed Channel Access (EDCA). With WMM and EDCA, your application classifies traffic into one of the four ACs, and the corresponding traffic is sent down the network stack with this categorization. In reality, this classification can be done through a socket call at the application layer that will either directly specify the AC or, more commonly, will specify another QoS value (typically DSCP, but sometimes UP), that will in turn be translated at the Wi-Fi driver level into the corresponding AC.

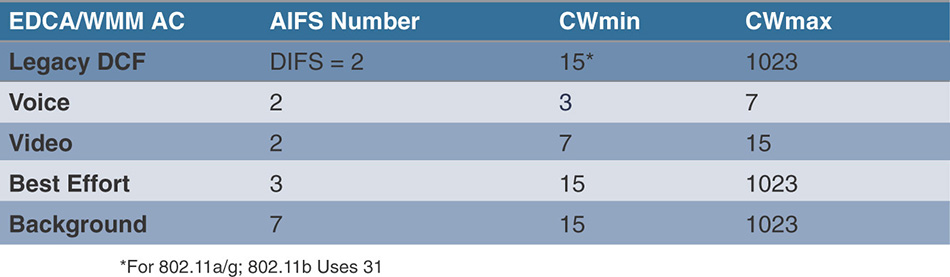

Each AC has a different initial wait time (the DIFS for non-WMM traffic). Because that initial duration is arbitrated depending on which AC the traffic belongs to, the initial wait time is called the Arbitrated Interframe Space (AIFS). Because it is calculated in reference to the DIFS, it is also often called the Arbitrated Interframe Space Number (AIFSN). The AIFSN is smaller for ACs intended for traffic that is more sensitive to loss and delay. This difference means that the driver will start counting down sooner for voice traffic than, for example, best effort or background traffic.

For each packet, the driver also picks up a random number. After the medium has been idle for the duration of the AIFSN matching the AC within which the packet is waiting, the driver will count down from that random number. The range of numbers from where the random number is picked is called the Contention Window (CW). You will see CW as a number, but keep in mind that a random number is picked within a range. So if you see CW = 15, do not think that the device should wait 15 slot times. A random number will be picked within 0 and 15, and the device would count down from that number.

The same logic applies here as for the AIFSN. ACs more sensitive to loss and delay will pick a number within a smaller range. Although the number is random (and therefore any of the ACs may pick, for example, 1 or 2), ACs with a small range have more statistical chances of picking smaller numbers more often than ACs with larger ranges.

When the countdown ends (at 0), the matching packet is sent. All four ACs are counting down in parallel. This means that it may (and does) happen that two ACs get to 0 at the same time. This is a virtual collision. 802.11 states that, in that case, the driver should consider that a collision really occurred and should apply the collision rules (below). However, some vendors implement a virtual bypass and send the packet with the highest AC, and either only reset the counter of the packet with the lower AC or send that packet with the lower AC right after the packet with the higher AC. It is difficult to know whether your client vendor implements these shortcuts unless you have visibility into the driver. Cisco does not implement these shortcuts because they violate the rules of 802.11, and because they result in weird imbalances between applications. However, Cisco implements 8 queues (per client), which means that packets with UP 5 and packets with UP 4, although belonging to the same AC, will be counting down in parallel (whereas a simpler driver would count down for one, then count down for the other, because the packet would be one after the other in the same queue). When two packets of the same AC but with different UPs end up at 0 at the same time, this is the only case where the packet with larger UP is sent first, then (after the matching AIFSN) the packet with the lower UP is also sent. This allows for a differentiated treatment between packets of the same AC but different UPs while not violating the 802.11 rules (that treats inter-AC collisions as virtual collisions, not intra-AC collisions).

Collisions can occur virtually, but also physically (when two stations send at the same time). In this case, a collision will be detected for unicast frames because no acknowledgement is received (but note that a collision will not be detected by the sender of broadcast or multicast traffic). In that case, the contention window doubles. Most drivers double the random number they picked initially, although 802.11 states that the window itself should double, and a random number can be picked from this new, twice-as-large, window. The reason for doubling the number itself is another form of congestion avoidance. If a collision happened, too many stations had too much traffic to send within too short a time interval. Because you cannot reduce the number of transmitters (you have no control over the number of stations having traffic to send at this point in time), your only solution is to work on the time dimension and extend the time interval across which that same number of stations is going to send.

This doubling of time is logical from a protocol design standpoint but not very efficient for time-sensitive traffic. Therefore, an upper limit is also put on this extension of the contention window. The contention window can only grow up to a maximum value that is smaller for voice than for video, smaller for video than for best effort, and smaller for best effort than for background traffic. Therefore, the contention window can span between an initial value, called the minimum contention window value (CWmin), and a max number, called the maximum contention window value (CWmax).

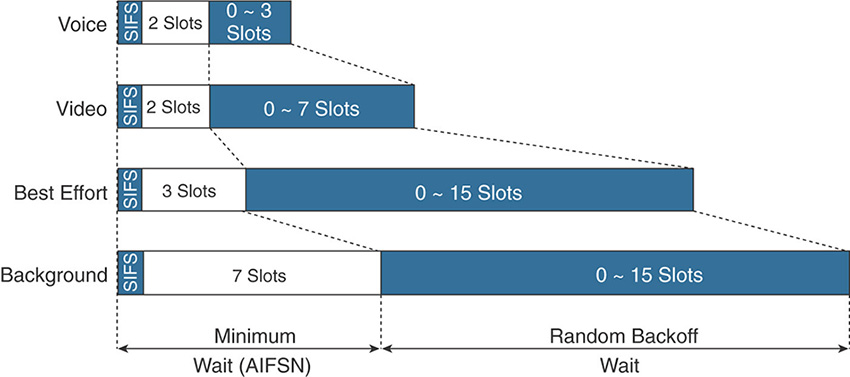

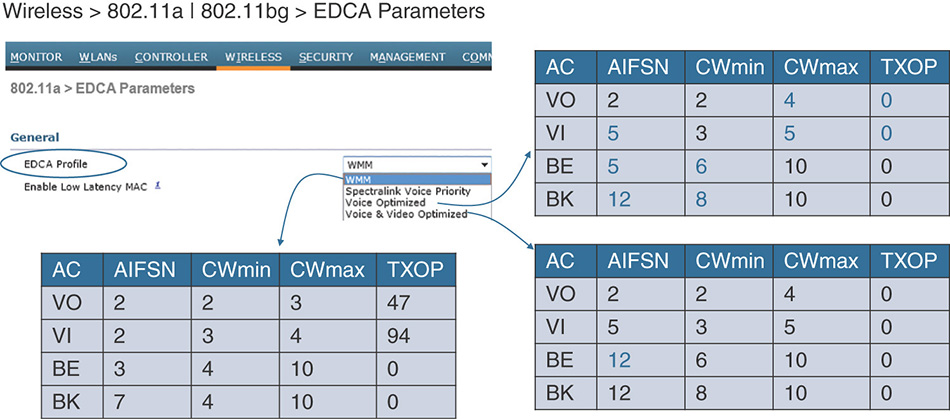

The different AIFSN and CW values are represented in Figure 7-10. You will note that the AIFSN for voice has the same value as the DIFS. However, the CWmin provides enough statistical advantage to voice that a smaller AIFSN would not have been useful. As a matter of fact, early experiments (while 802.11e was designed) showed that any smaller AIFSN for voice or video would give such a dramatic advantage to these queues over non-WMM traffic that non-WMM stations would almost never have a chance to access the medium.

Also, it is the combined effect of the AIFS and the CW that provides a statistical differentiation for each queue. As illustrated in Figure 7-11, you can see that voice has an obvious advantage over best effort. Non-WMM traffic would have the same AIFS/DIFS as the voice queue, but the CW is so much larger for best effort that voice gets access to the medium much more often than best effort.

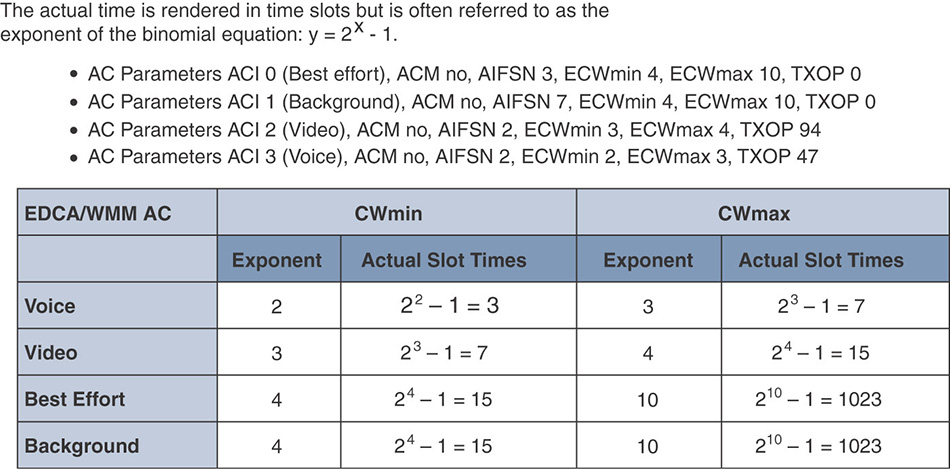

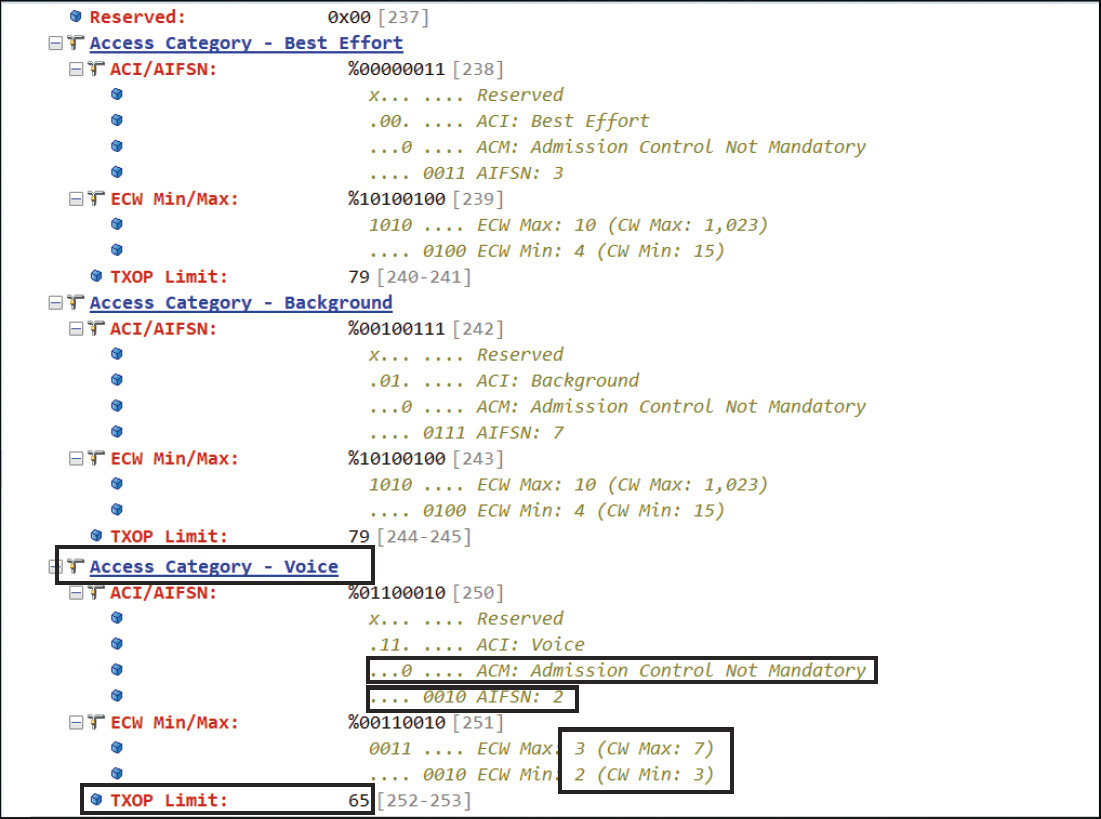

Note that if you capture traffic and look at the CW values, you may not see the right numbers. This is because 802.11 defines them as exponents, as shown in Figure 7-12.

A value of 3 (0011) would in fact mean (23 − 1), which is 7. This exponent view provides a compact way to express numbers with the least number of consumed bits.

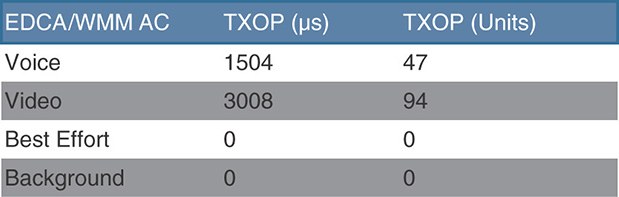

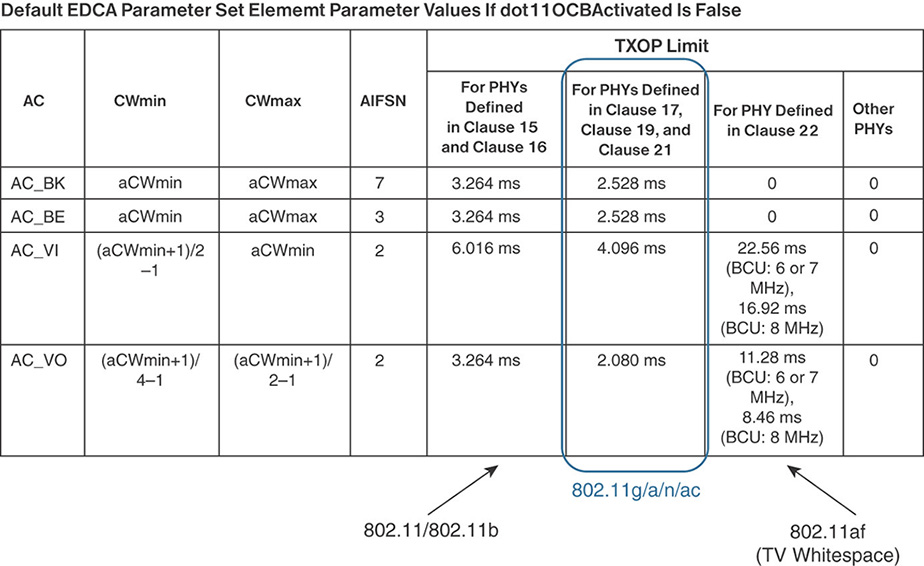

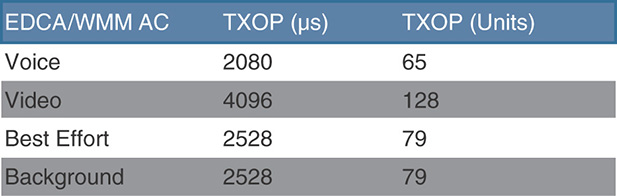

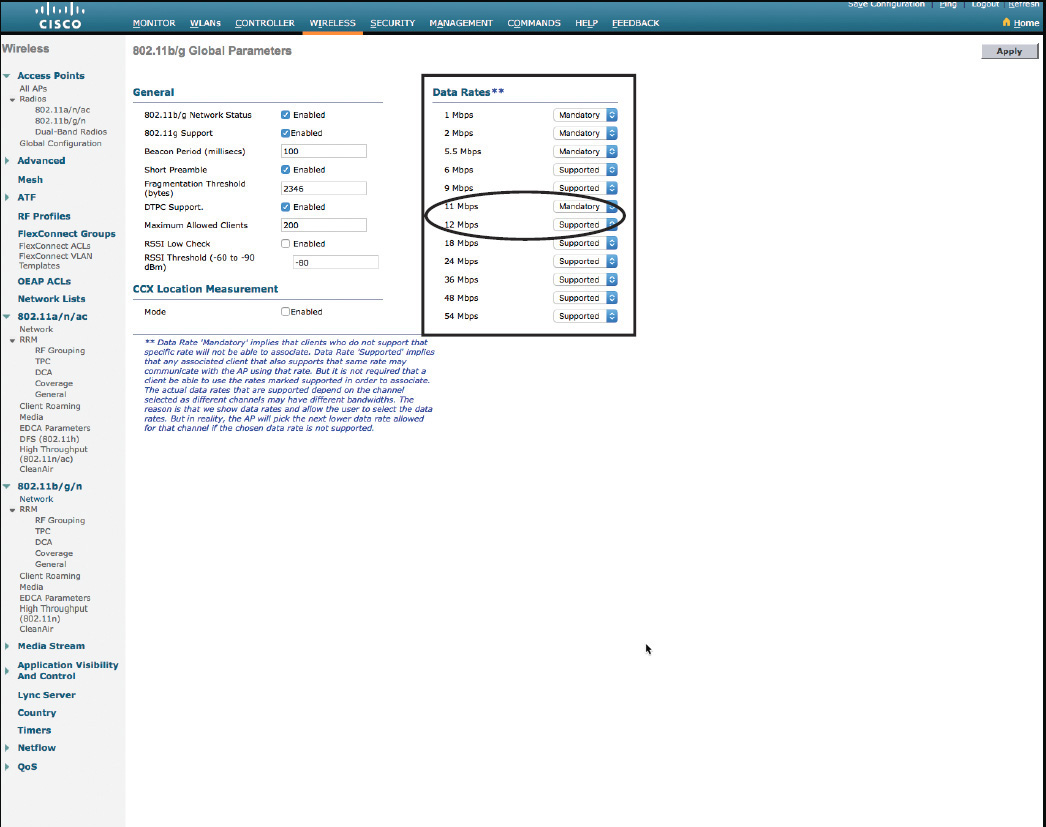

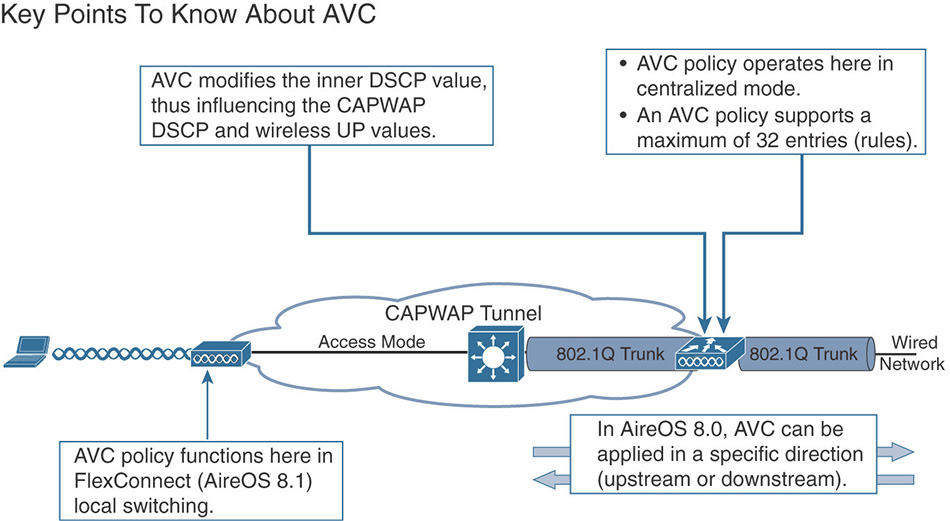

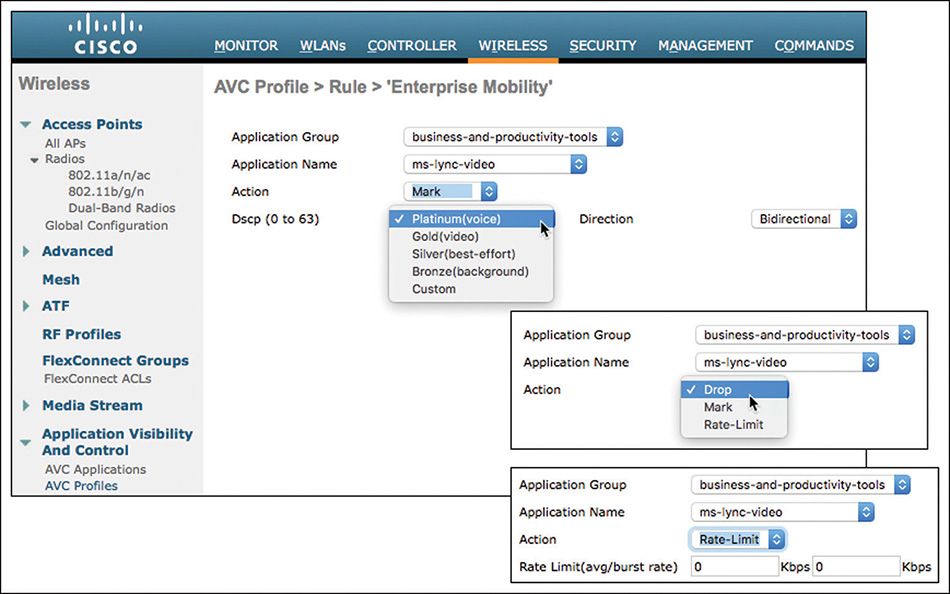

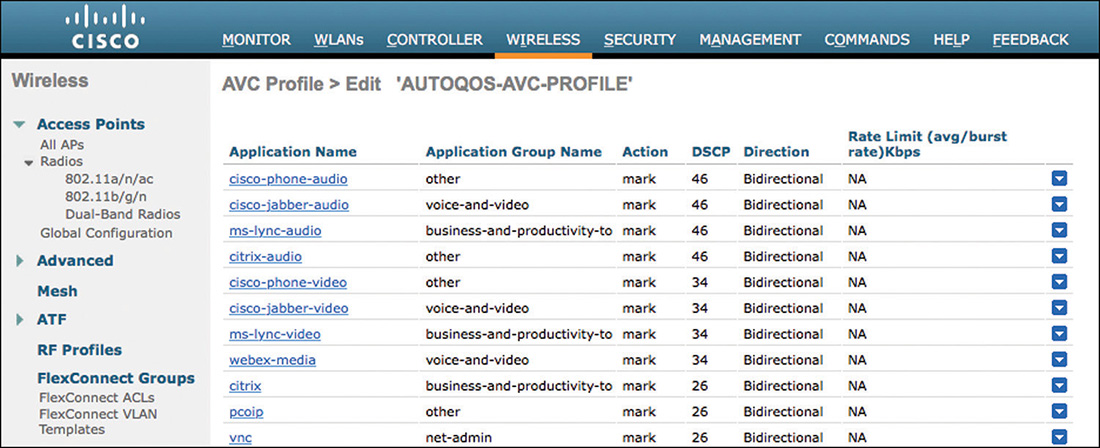

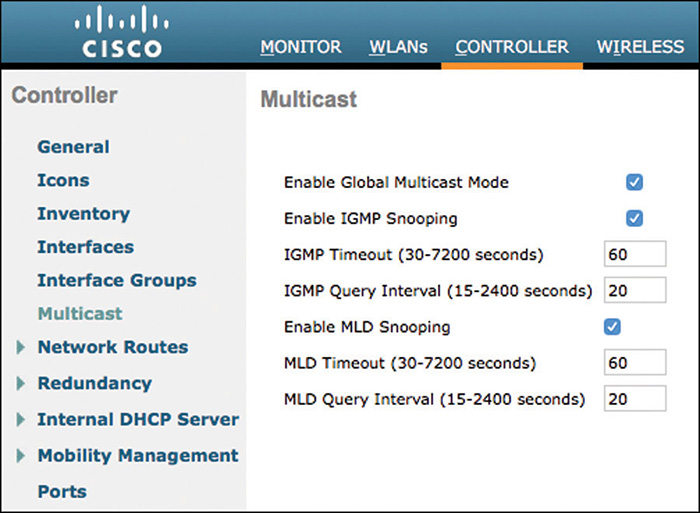

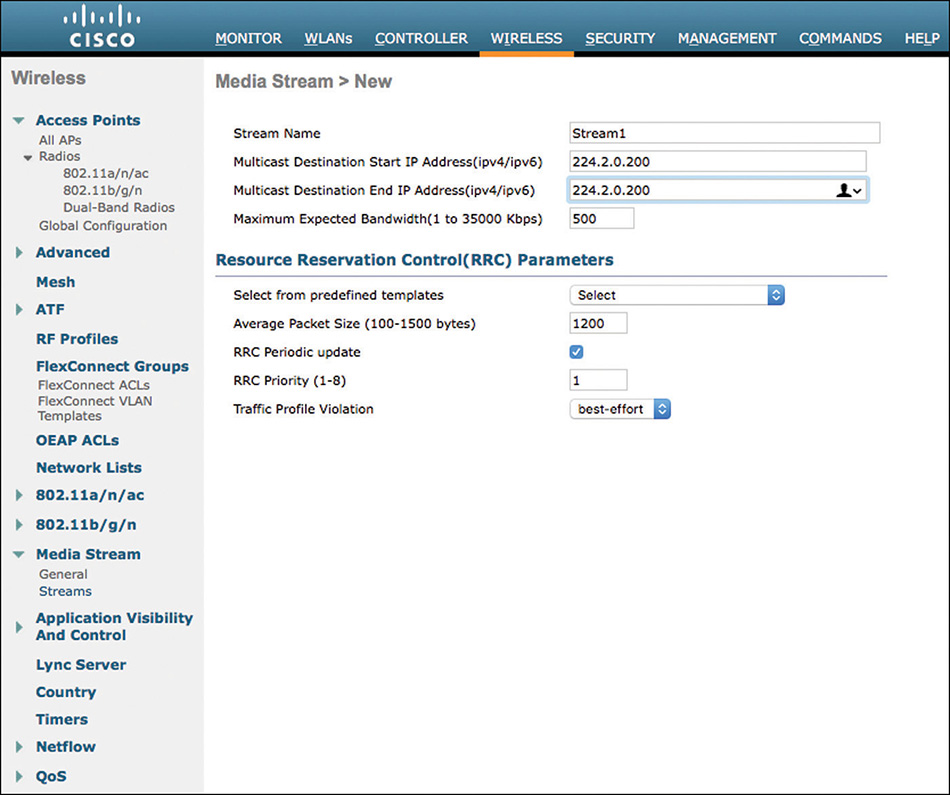

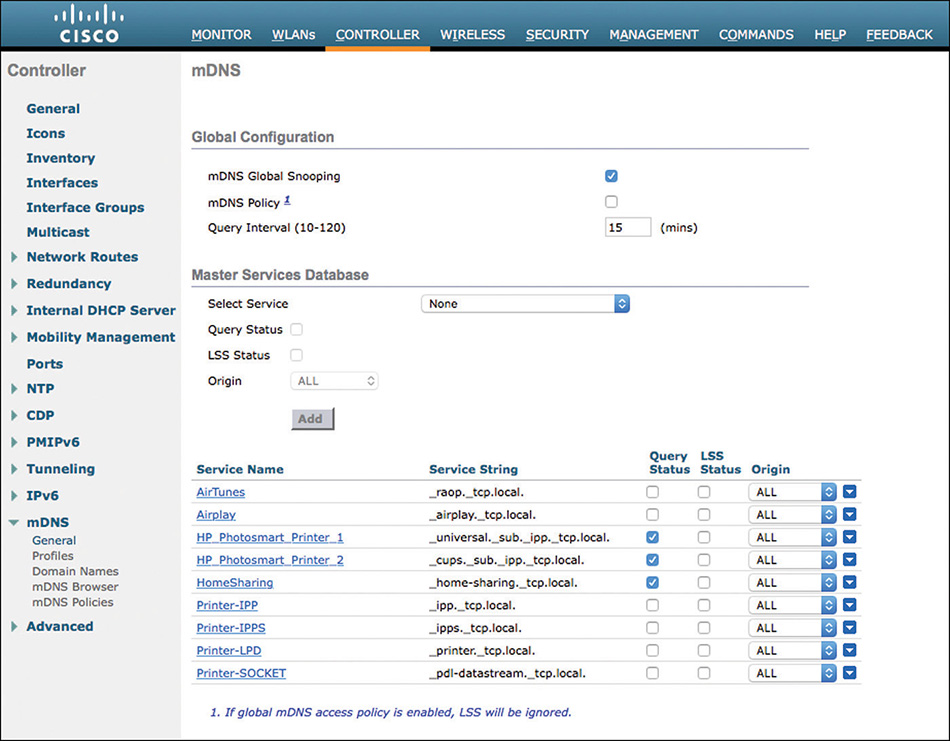

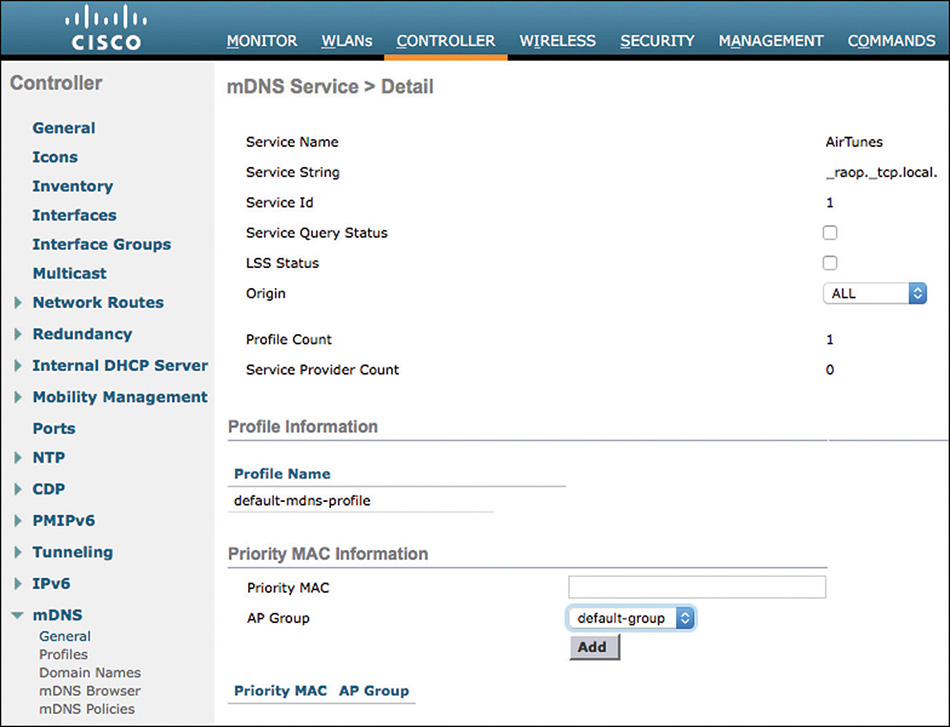

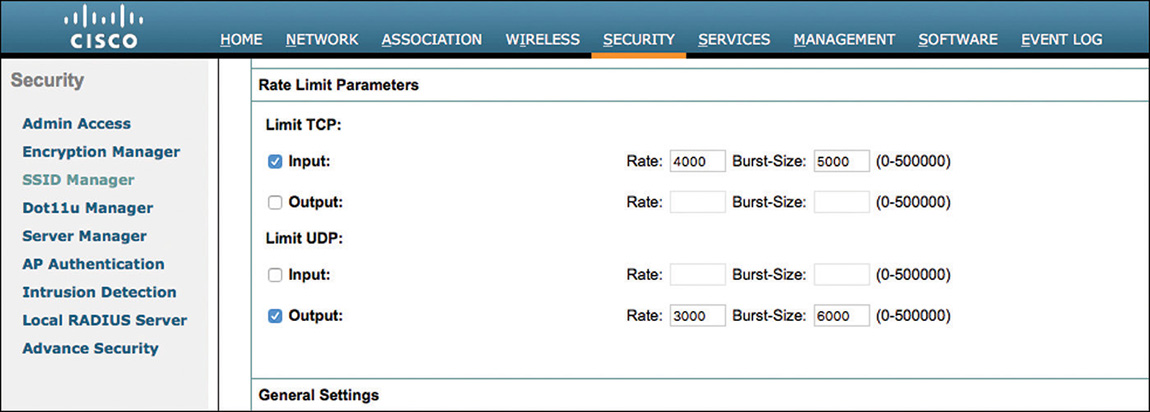

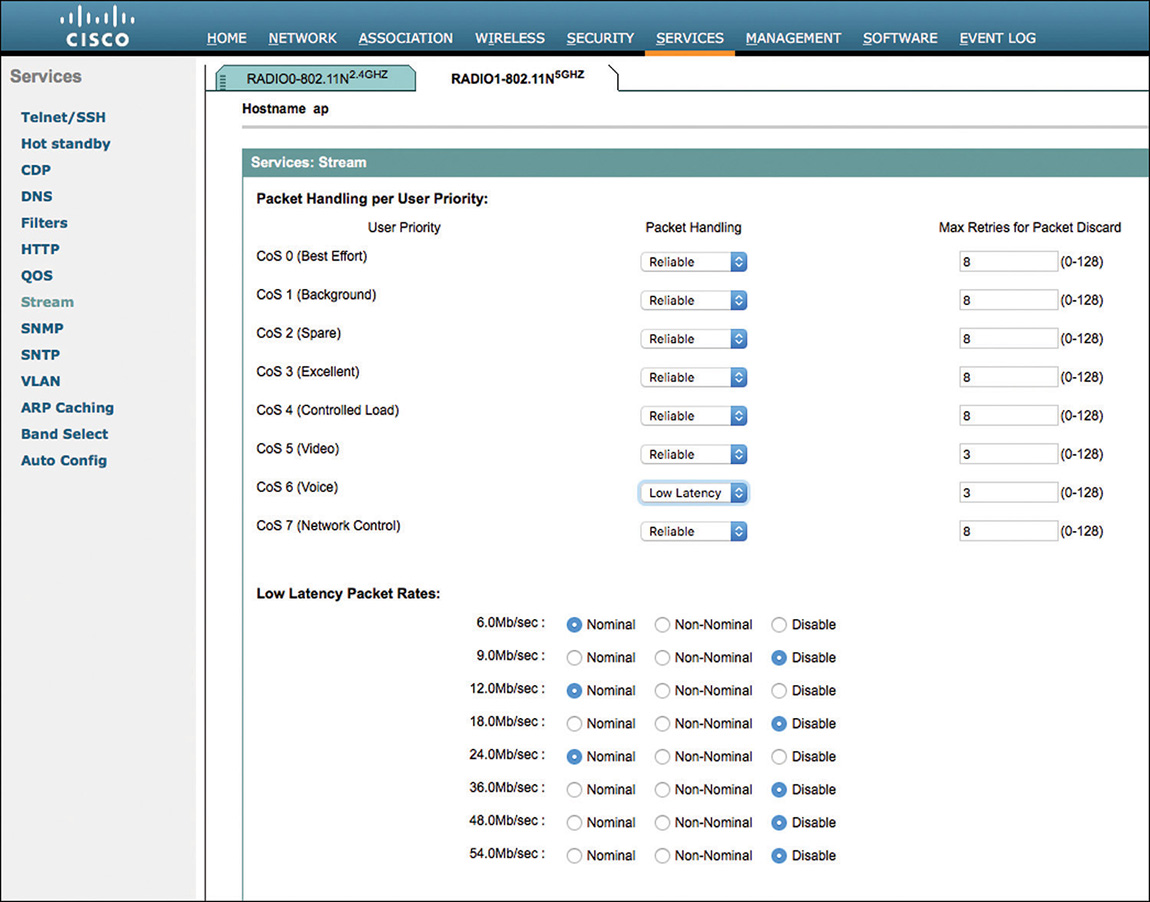

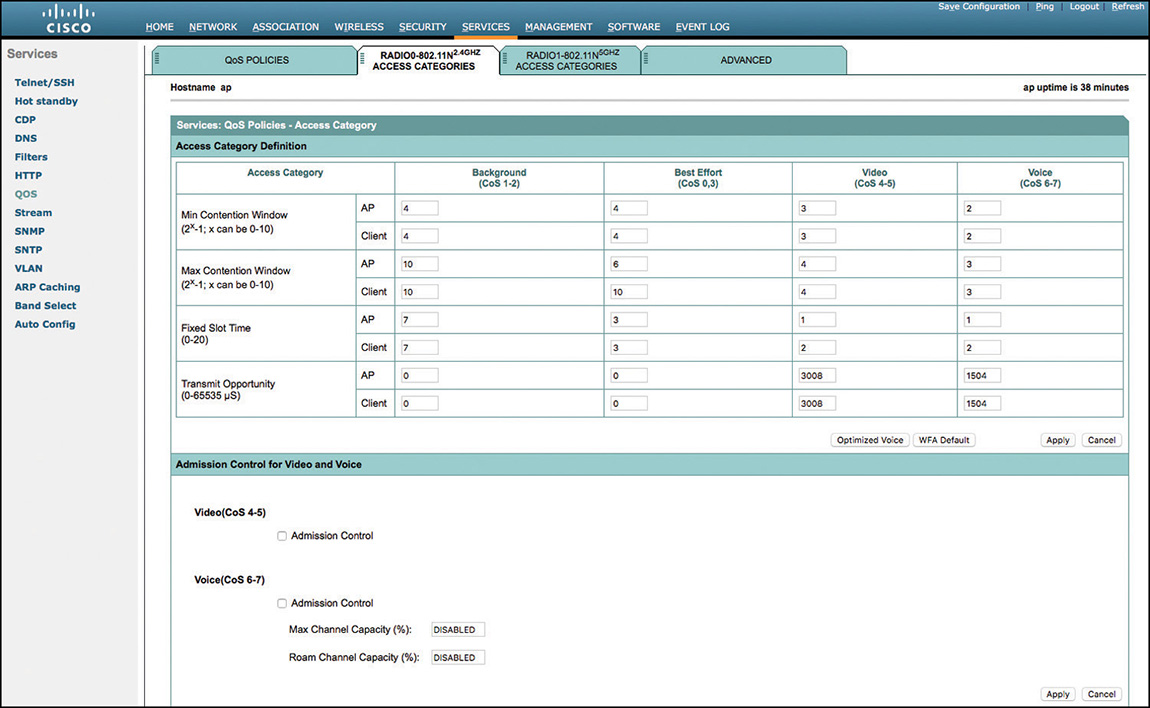

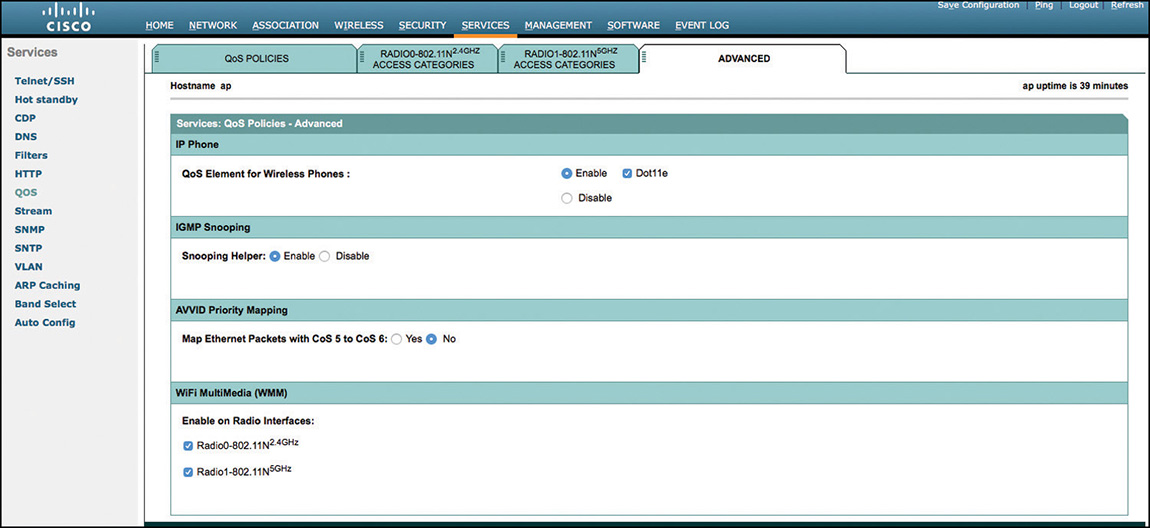

TXOP