Chapter 9. WAN Availability and QoS

This chapter covers the following subjects:

WAN Design Methodologies: This section discusses the processes of identifying business and technology strategies, assessing the existing network, and creating a design that is scalable, flexible, and resilient.

Design for High Availability: This section covers removing the single points of failure from a network design by using software features or hardware-based resiliency.

Internet Connectivity: This section discusses public network access and securely connecting business locations.

Backup Connectivity: This section discusses providing an alternative WAN path between locations when primary paths are unavailable.

QoS Strategies: This section discusses design models for providing QoS service differentiation.

Designing End-to-End QoS Policies: This section discusses options for QoS mechanisms such as queuing, policing, and traffic shaping.

This chapter covers WAN design and QoS. Expect plenty of questions on the ENSLD 300-420 exam about the selection and use of WAN designs in enterprise networks. A CCNP enterprise designer must understand WAN availability and the QoS models that are available to protect traffic flows in the network. This chapter starts with WAN methodologies and then covers WAN availability with deployment models using MPLS, hybrid, and Internet designs. This chapter also explores backup connectivity and failover designs. Finally, it covers QoS strategies and designing end-to-end QoS policies.

“Do I Know This Already?” Quiz

The “Do I Know This Already?” quiz helps you identify your strengths and deficiencies in this chapter’s topics. This quiz, derived from the major sections in the “Foundation Topics” portion of the chapter, helps you determine how to spend your limited study time. Table 9-1 outlines the major topics discussed in this chapter and the “Do I Know This Already?” quiz questions that correspond to those topics. You can find the answers in Appendix A, “Answers to the ‘Do I Know This Already?’ Quiz Questions Q&A Questions.”

Table 9-1 “Do I Know This Already?” Foundation Topics Section-to-Question Mapping

Foundation Topics Section | Questions Covered in This Section
WAN Design Methodologies | 1–2
Design for High Availability | 3
Internet Connectivity | 4–5
Backup Connectivity | 6
QoS Strategies | 7–8
Designing End-to-End QoS Policies | 9–10

1. Which of the following is a measure of data transferred from one host to another in a given amount of time?

Reliability

Response time

Throughput

Jitter

2. Which of the following is a description of the key design principle scalability?

Modularity with additional devices, services, and technologies

Redundancy through hardware, software, and connectivity

Ease of managing and maintaining the infrastructure

Providing enough capacity and bandwidth for applications

3. What percentage of availability allows for four hours of downtime in a year?

99.5%

99.99%

99.999%

99.95%

4. What Internet connectivity option provides the highest level of resiliency for services?

Single-router dual-homed

Single-router single-homed

Dual-router dual-homed

Shared DMZ

5. Which of the following eliminates single points of failure with the router and the circuit?

Dual-router dual-homed

Single-router dual-homed

Shared DMZ

Single-router single-homed

6. What backup option allows for both a backup link and load-sharing capabilities using the available bandwidth?

Dial backup

Secondary WAN link

IPsec tunnel

GRE tunnel

7. Which of the following adds a strict priority queue to modular class-based QoS?

FIFO

CBWFQ

WFQ

LLQ

8. Which of the following is a mechanism to handle traffic overflow using a queuing algorithm with QoS?

Congestion management

Traffic shaping and policing

Classification

Link efficiency

9. Which QoS model uses Resource Reservation Protocol (RSVP) to explicitly request QoS for the application along the end-to-end path through devices in the network?

DiffServ

IntServ

CBWFQ

BE

10. What technique does traffic shaping use to release the packets into the output queue at a preconfigured rate?

Token bucket

Leaky bucket

Tagging

Interleaving

Foundation Topics

This chapter describes the WAN design and QoS topics you need to master for the ENSLD 300-420 exam. These topics include WAN methodologies in the enterprise edge, WAN availability, and WAN designs including backup and failover options. In addition, this chapter describes quality of service (QoS) and how it can be used to prioritize network traffic and better utilize the available WAN bandwidth.

WAN Design Methodologies

WAN design methodologies should be used when designing enterprise edge networks. Key WAN design processes include the following:

Identifying the network requirements: This includes reviewing the types of applications, the traffic volume, and the traffic patterns in the network.

Assessing the existing network: This involves reviewing the technologies used and the locations of hosts, servers, network equipment, and other end nodes.

Designing the topology: This is based on the availability of technology as well as the projected traffic patterns, technology performance, constraints, and reliability.

When designing the WAN topology, remember that the design should describe the functions that the enterprise modules should perform. The expected service levels provided by each WAN technology should be explained. WAN connections can be characterized by the cost of renting the transmission media from the service provider to connect two or more sites.

New network designs should be flexible and adaptable to future technologies and should not limit the customer’s options going forward. For example, collaboration applications such as VoIP and video are common now, and most enterprise network designs should be able to support them. The customer should not have to undergo major hardware upgrades to implement these types of technologies. The ongoing support and management of the network is another important factor, and the design’s cost-effectiveness is important as well.

Table 9-2 lists key design principles that can help serve as the basis for developing network designs.

Table 9-2 Key Design Principles

Design Principle | Description
High availability | Redundancy through hardware, software, and connectivity
Scalability | Modularity with additional devices, services, and technologies
Security | Measures to protect business data
Performance | Enough capacity and bandwidth for applications
Manageability | Ease of managing and maintaining the infrastructure
Standards and regulations | Compliance with applicable laws, regulations, and standards
Cost | Appropriate security and technologies given the budget

High availability is what most businesses and organizations strive for in sound network designs. The key components of application availability are response time, throughput, and reliability. Real-time applications such as voice and video are not very tolerant of jitter and delay.

Table 9-3 identifies various application requirements for data, voice, and video traffic.

Table 9-3 Application Requirements for Data, Voice, and Video Traffic

Characteristic | Data File Transfer | Interactive Data Application | Real-Time Voice | Real-Time Video
Response time | Reasonable | Within a second | One-way delay less than 150 ms with low delay and jitter | Minimum delay and jitter
Throughput and packet loss tolerance | High/medium | Low/low | Low/low | High/medium
Downtime (high reliability = low downtime) | Reasonable | Low | Low | Minimum

Response Time

Response time is a measure of the time between a client user request and a response from the server host. An end user will be satisfied with a certain level of delay in response time. However, there is a limit to how long the user will wait. This amount of time can be measured and serves as a basis for future application response times. Users perceive the network communication in terms of how quickly the server returns the requested information and how fast the screen updates. Some applications, such as a request for an HTML web page, require short response times. On the other hand, a large FTP transfer might take a while, but this is generally acceptable.

Throughput

In network communications, throughput is a measure of data transferred from one host to another in a given amount of time. Bandwidth-intensive applications have a greater impact on a network’s throughput than does interactive traffic such as a Telnet session. Most high-throughput applications involve some type of file-transfer activity. Because throughput-intensive applications tolerate longer response times, you can usually schedule them for periods when time-sensitive traffic volumes are lower, such as after hours.
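Throughput is simple arithmetic: bits moved divided by elapsed time. The following minimal sketch converts a measured transfer into megabits per second and estimates how long a bulk transfer would take at a sustained rate. The function names and the 500 MB backup figure are hypothetical, and the estimate ignores protocol overhead and retransmissions.

```python
def throughput_mbps(bytes_transferred: int, seconds: float) -> float:
    """Average throughput in megabits per second (1 Mbps = 10**6 bit/s)."""
    if seconds <= 0:
        raise ValueError("duration must be positive")
    return bytes_transferred * 8 / seconds / 1_000_000


def transfer_time_s(bytes_to_send: int, rate_mbps: float) -> float:
    """Seconds needed to move data at a sustained rate (no protocol overhead)."""
    return bytes_to_send * 8 / (rate_mbps * 1_000_000)


# 125 MB measured over 10 seconds:
print(throughput_mbps(125_000_000, 10))   # 100.0
# Hypothetical 500 MB after-hours backup over a 100 Mbps path:
print(transfer_time_s(500_000_000, 100))  # 40.0
```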

Reliability

Reliability is a measure of a given application’s availability to its users. Some organizations require rock-solid application reliability, such as five nines (99.999%); this level of reliability costs more than the reliability most other applications need. For example, financial and security exchange commissions require nearly 100% uptime for their applications. These types of networks are built with a large amount of physical and logical redundancy. It is important to determine the level of reliability needed for the network you are designing. Reliability goes further than availability by measuring not only whether the service is there but whether it is performing as it should.

Bandwidth Considerations

Table 9-4 compares several WAN technologies in terms of speeds and media types.

Table 9-4 Physical Bandwidth Comparison

WAN Connectivity | Bandwidth: Up to 100 Mbps | Bandwidth: 1 Gbps to 10 Gbps
Copper | Fast Ethernet | Gigabit Ethernet, 10 Gigabit Ethernet
Fiber | Fast Ethernet | Gigabit Ethernet, 10 Gigabit Ethernet, SONET/SDH, dark fiber
Wireless (802.11, LTE/5G) | 802.11a/g, LTE/LTE Advanced | 802.11n/ac Wave 1/Wave 2, LTE Advanced Pro/5G

A WAN designer must engineer the network with enough bandwidth to support the needs of the users and applications that will use the network. How much bandwidth a network needs depends on the services and applications that will require network bandwidth. For example, VoIP requires more bandwidth than interactive Secure Shell (SSH) traffic. A large number of graphics or CAD drawings require an extensive amount of bandwidth compared to file or print sharing information being transferred on the network. A big driver in increasing demand for bandwidth is the expanded use of collaboration applications that utilize video interactively.

When designing bandwidth for a WAN, remember that implementation and recurring costs are important factors. It is best to begin planning for WAN capacity early. When link utilization reaches around 50% to 60%, you should consider capacity increases and monitor the link closely. When link utilization reaches around 75%, immediate attention is required to avoid the congestion problems and packet loss that occur as utilization nears full capacity.
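The capacity-planning guidance above can be expressed as a small decision function. This is a sketch only: it treats the approximate 50% and 75% thresholds from the text as hard cut-offs, and it assumes utilization is an average measured over some monitoring interval that you choose; the function name and messages are hypothetical.

```python
def capacity_action(utilization: float) -> str:
    """Map average link utilization (0.0-1.0) to the planning guidance in the text.

    Thresholds (about 50% and about 75%) come from the text; using them as
    exact cut-offs is a simplification for illustration.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0.0 and 1.0")
    if utilization >= 0.75:
        return "immediate attention: congestion and packet loss likely"
    if utilization >= 0.50:
        return "plan capacity increase and monitor closely"
    return "normal"


for u in (0.40, 0.55, 0.80):
    print(f"{u:.0%}: {capacity_action(u)}")
```

In practice, peak-hour averages are more useful than daily averages, because congestion on delay-sensitive traffic happens at the peaks.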

QoS techniques become increasingly important when delay-sensitive traffic such as VoIP is using the limited bandwidth available on the WAN. LAN bandwidth, on the other hand, is generally inexpensive and plentiful, although QoS can still be necessary on the LAN for demanding real-time applications. To provide connectivity on the LAN, you typically need to be concerned only with hardware and implementation costs.

Design for High Availability

Most businesses need a high level of availability, especially for their critical applications. The goal of high availability is to remove the single points of failure in the network design by using software features or hardware-based resiliency. Redundancy is critical in providing high levels of availability for the enterprise. Some technologies have built-in techniques that enable them to be highly available. For technologies that do not have high availability, other techniques can be used, such as additional WAN circuits or backup power supplies.

Defining Availability

System availability is the ratio of the expected uptime to the total time over the same period. For example, consider 4 hours of downtime per year. A year has 365 days, which equals 8760 hours (365 × 24 = 8760). Subtracting the 4 hours of downtime from the annual total of 8760 hours leaves 8756 hours of uptime. Calculating 8756 / 8760 × 100 gives the availability percentage, which in this case is about 99.95%.

Table 9-5 shows the availability percentages from 99% to 99.999999%, along with amounts of downtime per year.

Table 9-5 Availability Percentages

Availability | Downtime per Year | The Nines of Availability | Targets
99.000000% | 3.65 days | Two nines |
99.900000% | 8.76 hours | Three nines |
99.990000% | 52.56 minutes | Four nines | Branch WAN high availability
99.999000% | 5.256 minutes | Five nines | Branch WAN high availability
99.999900% | 31.536 seconds | Six nines | Ultra high availability
99.999990% | 3.1536 seconds | Seven nines | Ultra high availability
99.999999% | 0.31536 seconds | Eight nines | Ultra high availability
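The availability calculation and the downtime column of Table 9-5 follow from the same formula: availability = uptime / total time. The sketch below reproduces the 4-hour example and two rows of the table. It assumes a 365-day (non-leap) year, as the text does; the function names are illustrative.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 s (non-leap year, as in the text)


def downtime_per_year_s(availability_pct: float) -> float:
    """Allowed downtime per year, in seconds, for an availability percentage."""
    return (1 - availability_pct / 100) * SECONDS_PER_YEAR


def availability_pct(downtime_hours_per_year: float) -> float:
    """Availability = uptime / total time, expressed as a percentage."""
    total_hours = 365 * 24  # 8760
    return (total_hours - downtime_hours_per_year) / total_hours * 100


print(f"{availability_pct(4):.3f}%")                 # 99.954%
print(f"{downtime_per_year_s(99.99) / 60:.2f} min")  # 52.56 min (four nines)
print(f"{downtime_per_year_s(99.9999):.3f} s")       # 31.536 s (six nines)
```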

Figure 9-1 illustrates WAN router paths and the impacts to availability depending on the level of redundancy used.

Figure 9-1 Router Paths and Availability Examples
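One common way to reason about the effect of redundancy on a path like those in Figure 9-1 is to combine component availabilities: components in series must all be up, so their availabilities multiply, while redundant paths in parallel fail only if every path fails. The sketch below assumes failures are independent, which real networks only approximate (shared power, a shared carrier, or a common conduit breaks that assumption). The component availability values are hypothetical.

```python
from math import prod


def series(*availabilities: float) -> float:
    """All components must be up (for example, router followed by circuit)."""
    return prod(availabilities)


def parallel(*availabilities: float) -> float:
    """At least one redundant path must be up; assumes independent failures."""
    return 1 - prod(1 - a for a in availabilities)


# Hypothetical component availabilities, for illustration only.
router, circuit = 0.999, 0.995
single_path = series(router, circuit)           # one router, one link
dual_path = parallel(single_path, single_path)  # two independent router+link paths

print(f"single router, single link: {single_path:.5%}")
print(f"dual routers, dual links:   {dual_path:.5%}")
```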

Deployment Models

There are three common deployment models for WAN connectivity, each with pros and cons:

MPLS WAN: Single- or dual-router MPLS VPN

Hybrid WAN: MPLS VPN and Internet VPN

Internet WAN: Single- or dual-router Internet VPN

An MPLS WAN uses single or dual routers for the MPLS VPN connections. It provides the strongest SLA guarantees for both QoS capabilities and network availability. However, this option is the most expensive, and it ties the organization to the service provider.

A hybrid WAN combines an MPLS VPN and an Internet VPN on a single router or on a pair of routers. This deployment model balances cost between the higher-cost MPLS VPN connection and the lower-cost Internet VPN used for backup. With a hybrid WAN, traffic can be split between the MPLS VPN for higher-priority traffic and the Internet VPN for lower-priority traffic. Newer WAN designs also use SD-WAN with both MPLS and Internet-based transports.

An Internet WAN includes a single router or dual routers using Internet-based VPN only. This deployment model is the lowest-cost option but lacks the SLAs and QoS capabilities offered by carriers. The enterprise would be responsible for providing SLAs to the end users.

Redundancy Options

Depending on the cost of downtime for an organization, different levels of redundancy can be implemented for a remote site. The more critical WAN sites will use higher levels of redundancy. With any of the deployment options—MPLS WAN, hybrid WAN, or Internet WAN—you can design redundant links with redundant routers, a single router with redundant links, or a single router with a single link.

For the most critical WAN sites, you typically want to eliminate single points of failure by designing with dual routers and dual WAN links along with dual power supplies. This highly available option offers failover capabilities, but it comes with a higher price tag and is more complex to manage. A lower-cost option is to use a single router with dual power supplies and multiple WAN links, providing power and link redundancy. Non-redundant, single-homed sites are the lowest cost, but they have multiple single points of failure inherent in the design, such as the WAN carrier or WAN link.

Single-Homed Versus Multi-Homed WANs

The advantages of working with a single WAN carrier are that you only have one vendor to manage, and you can work out a common QoS model that can be used throughout your WAN. The major drawback with a single carrier is that if the carrier has an outage, it can be catastrophic to your overall WAN connectivity. This also makes it difficult to transition to a new carrier because all your WAN connectivity is with a single carrier.

On the other hand, if you have dual WAN carriers, the fault domains are segmented, and there are typically more WAN offerings to choose from because you are working with two different carriers. This also allows for greater failover capabilities with routing and software redundancy features. The disadvantages with dual WAN carriers are that the overall design is more complex to manage, and there will be higher recurring WAN costs.

Single-Homed MPLS WANs

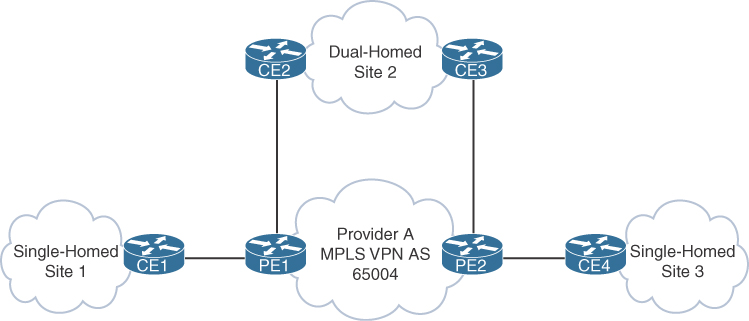

In a single-MPLS-carrier design, each site connects to an MPLS VPN from one provider. Some sites might be single-homed, and others might be dual-homed to the same MPLS VPN. Each site has CE routers that peer with the provider using eBGP, and iBGP is used for any CE-to-CE peering within a site. Each CE router advertises its local prefixes to the provider with BGP and either redistributes the BGP routes learned from the provider into the IGP or uses default routing. Common IGPs are OSPF and EIGRP.

Figure 9-2 illustrates a single-MPLS-carrier design with single- and dual-homed sites.

Figure 9-2 Single-MPLS-Carrier Design Example

Multi-Homed MPLS WANs

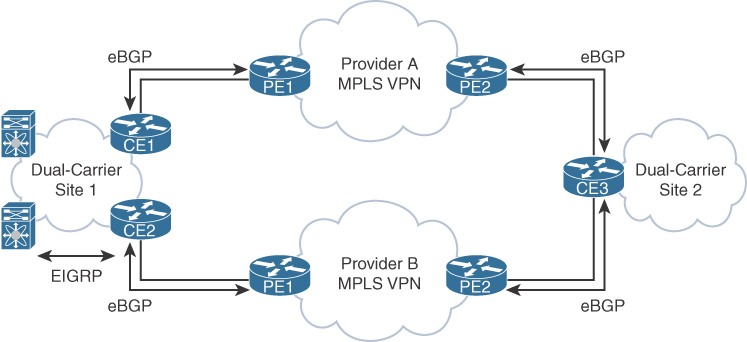

In a dual-MPLS-carrier design, each site is connected to both provider A and provider B. Some sites might have two routers for high availability, and others might have only a single router but with two links for link and provider redundancy. For example, each CE router would redistribute local routes from EIGRP into BGP. Routes from other sites would be redistributed from BGP into EIGRP as external routes. For sites that have two routers, filtering or tagging of the routes in and out of BGP would be needed to prevent routing loops.

Figure 9-3 illustrates a dual-MPLS-carrier design with single and dual routers.

Figure 9-3 Dual-MPLS-Carrier Design Example

Hybrid WANs: Layer 3 VPN with Internet Tunnels

Hybrid WAN designs use an MPLS VPN for the primary connection and an Internet tunnel for the backup connection. In this design, eBGP is used to peer with the MPLS VPN provider, and EIGRP is used as the internal IGP. At each site, the CE router learns routes from the MPLS VPN via BGP and redistributes them from BGP into EIGRP; it also redistributes local EIGRP routes into BGP and uses EIGRP to peer with the other local routers at the site. The Internet tunnel routers use EIGRP to exchange routes inside the VPN tunnels, and because they run only EIGRP, they do not need to redistribute routing information.

On the MPLS VPN router, the eBGP-learned routes (administrative distance 20) are preferred over the EIGRP routes learned through the Internet tunnel router (internal EIGRP, administrative distance 90). On the Internet tunnel router, however, the routes learned across the tunnel (internal EIGRP, administrative distance 90) are preferred over the BGP routes that the MPLS VPN router redistributed into EIGRP, which arrive as external EIGRP routes (administrative distance 170). Therefore, if you want the MPLS VPN path to be the primary path, you need to run an FHRP between the dual-homed routers, with the MPLS VPN-connected router as the active router. That way, hosts use the MPLS VPN path as the primary path, and the Internet tunnel path serves as the backup path for failover. Another option is to modify the routing protocol metrics so that the MPLS VPN path is preferred.

Figure 9-4 illustrates a hybrid WAN design with an MPLS VPN and an Internet VPN.

Figure 9-4 Hybrid WAN Design Example
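The route-preference behavior described for the hybrid WAN can be sketched by comparing administrative distances for a single remote prefix on each of the two routers. This model uses the Cisco IOS default administrative distances and deliberately ignores metrics, route maps, and tagging; the next-hop labels are hypothetical.

```python
from dataclasses import dataclass

# Cisco IOS default administrative distances (lower is preferred).
DEFAULT_AD = {
    "connected": 0,
    "static": 1,
    "ebgp": 20,
    "eigrp_internal": 90,
    "ospf": 110,
    "eigrp_external": 170,
    "ibgp": 200,
}


@dataclass
class Candidate:
    source: str    # key into DEFAULT_AD
    next_hop: str  # hypothetical label for the path


def best_path(candidates: list[Candidate]) -> Candidate:
    """Pick the route for one prefix by lowest administrative distance."""
    return min(candidates, key=lambda c: DEFAULT_AD[c.source])


# MPLS VPN router: learns the remote prefix from the provider (eBGP)
# and from the local Internet tunnel router (internal EIGRP).
mpls_router = best_path([
    Candidate("ebgp", "MPLS provider"),
    Candidate("eigrp_internal", "Internet tunnel router"),
])

# Internet tunnel router: learns the prefix across the tunnel (internal EIGRP)
# and from the MPLS VPN router, where BGP was redistributed (external EIGRP).
tunnel_router = best_path([
    Candidate("eigrp_internal", "Internet tunnel"),
    Candidate("eigrp_external", "MPLS VPN router"),
])

print(mpls_router.next_hop)    # MPLS provider
print(tunnel_router.next_hop)  # Internet tunnel
```

Because each router picks a different exit, the site's hosts follow whichever router is their gateway, which is why the FHRP active role (or metric tuning) decides the primary path.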

Internet Connectivity

Most enterprises have multiple sites with different numbers of users at the sites, but they are usually grouped into two site types: larger central WAN sites and smaller branch WAN sites. The larger site types typically host more of the users and services. The smaller branch offices tend to have a low user count and a smaller number of hosted services. Both central and branch sites typically need Internet access, but there are high availability considerations to think about when selecting the Internet access design for a given site type. When choosing an Internet connectivity option, remember to consider the business requirements and the budget allocated for the design.

Internet connectivity options include the following:

Dual-router dual-homed: Provides the highest level of resiliency for Internet connectivity with full redundancy in hardware, links, and Internet service providers.

Single-router dual-homed: Provides a good level of redundancy for Internet connectivity through the use of multiple links and multiple Internet service providers.

Single-router single-homed: Provides the bare minimum for Internet connectivity, with no redundancy for the hardware, links, or Internet service providers.

Figure 9-5 shows Internet connectivity options with different levels of redundancy.

Because central sites have larger user populations, they normally have higher Internet bandwidth connectivity and centralized access control for the Internet traffic flows. Although most branch offices have Internet connections, many of them still have their Internet traffic backhauled over the WAN to the central site, where centralized access control can occur.

Figure 9-5 Internet Connectivity Options

Internet for Remote Sites

When designing the Internet traffic flows for remote site locations, you have two main options to consider. One option, referred to as centralized Internet access, involves tunneling all the Internet traffic back to the data center or main site. With this option, you have more control over the Internet traffic with centralized security services such as URL filtering, firewalling, and intrusion prevention. However, this approach has drawbacks: the bandwidth requirements and cost will be higher for your WAN links to the branch locations, and it increases the delay for any Internet-based traffic. Another option is to allow Internet-destined traffic at each branch to use the dedicated local Internet connection or VPN split tunneling. The advantage of this approach is that your bandwidth requirements and the cost of your MPLS VPN links will be lower for your branch locations because you do not need to transport Internet traffic on them. This approach also has a drawback, however: local Internet access may violate your security policy by exposing more Internet attachment points in your organization that need protection with security services.

Here are some pros and cons of each of these options:

Centralized Internet for each remote site: Higher bandwidth is available, and security policies are centralized, but traffic flows are suboptimal. This option might require additional redundancy at the Internet edge, which may or may not be present.

Direct Internet for remote site: Traffic flows are optimal, but it is more difficult to manage distributed security policies. This option also has a higher risk of Internet attacks due to the greater number of attachment points.

High Availability for the Internet Edge

When you have decided to have two Internet routers, each with a link to two different Internet service providers, you need to think about the logical design for the routers, including failover options. Logical Internet high availability design considerations include the following:

Use a public BGP AS number for eBGP connections to the ISPs.

Use provider-independent IP address space to allow for advertisement to both ISPs.

Receive full or partial Internet routing tables to optimize forwarding outbound.

Use HSRP/GLBP or an IGP such as EIGRP or OSPF internally.

Backup Connectivity

Redundancy is a critical component of WAN design for the remote site because of the unreliable nature of WAN links compared to the LANs that they connect. Many enterprise edge solutions require high availability between the primary and remote sites. Because many remote site WAN links have lower reliability and lack bandwidth, they are good candidates for most WAN backup designs.

Remote site offices should have some type of backup strategy to deal with primary link failures. Backup links can either be permanent WAN or Internet-based connections.

WAN backup options are as follows:

Secondary WAN link: Adding a secondary WAN link makes the network more fault tolerant. This solution offers two key advantages:

Backup link: The backup link provides for network connectivity if the primary link fails. Dynamic or static routing techniques can be used to provide routing consistency during backup events. Application availability can also be increased because of the additional backup link.

Additional bandwidth: Load sharing allows both links to be used at the same time, increasing the available bandwidth. Load balancing can be achieved over the parallel links using automatic routing protocol techniques.

IPsec tunnel across the Internet: An IPsec VPN backup link can redirect traffic to the corporate headquarters when a network failure has been detected on the primary WAN link.

SD-WAN with MPLS and Internet tunnel: With SD-WAN using two transports, an Internet link can carry traffic to the corporate headquarters, either by load balancing with the MPLS link or by taking over during a failover event when a network failure has occurred.
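The combination of load sharing and backup described in these options can be illustrated with per-flow hashing: each flow is pinned to one link so its packets stay in order, and flows are rehashed onto the surviving link when one fails. This is a generic sketch, not the algorithm used by Cisco CEF, a routing protocol, or any SD-WAN product. It uses CRC32 as an assumed deterministic hash, and the link names and flow tuples are hypothetical.

```python
import zlib


def pick_link(flow: tuple, links: list[str], link_up: dict[str, bool]) -> str:
    """Per-flow load sharing with failover.

    Hash the flow's 5-tuple so all its packets use the same link, choosing
    only among links that are currently up.
    """
    available = [link for link in links if link_up[link]]
    if not available:
        raise RuntimeError("no WAN link available")
    key = repr(flow).encode()
    return available[zlib.crc32(key) % len(available)]


links = ["mpls", "internet"]
state = {"mpls": True, "internet": True}
# (source IP, destination IP, protocol, source port, destination port)
flows = [(f"10.1.1.{i}", "10.9.9.9", 6, 40000 + i, 443) for i in range(1, 9)]

print({f[0]: pick_link(f, links, state) for f in flows})  # each flow pinned to one link

state["mpls"] = False  # primary circuit fails
print({pick_link(f, links, state) for f in flows})        # {'internet'}
```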

Failover

An option for network connectivity failover is to use the Internet as the failover transport between sites. However, keep in mind that this type of connection does not support bandwidth guarantees. The enterprise also needs to set up the tunnels and advertise the company’s networks internally so that remote offices have reachable IP destinations. IP SLA monitoring can be leveraged along with a floating static route to provide failover.
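The IP SLA plus floating static route approach can be modeled as route selection between two static routes for the same prefix. The primary route is tied to a reachability probe and is withdrawn when the probe fails; the floating static route has a higher administrative distance, so it is used only while the primary is withdrawn. The prefix, next-hop names, and the distance of 250 are hypothetical; any distance higher than the primary route's works for a floating static route.

```python
from dataclasses import dataclass


@dataclass
class StaticRoute:
    prefix: str
    next_hop: str
    distance: int
    tracked: bool = False  # True if the route depends on a reachability probe


def install(routes: list[StaticRoute], probe_ok: bool) -> StaticRoute:
    """Return the route the router would use for the prefix.

    A tracked route is withdrawn while its probe is failing; among the
    remaining routes, the lowest administrative distance wins.
    """
    usable = [r for r in routes if not r.tracked or probe_ok]
    return min(usable, key=lambda r: r.distance)


routes = [
    StaticRoute("10.20.0.0/16", "MPLS-PE", distance=1, tracked=True),  # primary
    StaticRoute("10.20.0.0/16", "Tunnel0", distance=250),              # floating static
]

print(install(routes, probe_ok=True).next_hop)   # MPLS-PE
print(install(routes, probe_ok=False).next_hop)  # Tunnel0
```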

Security is of great importance when you rely on the Internet for network connectivity, so a secure tunnel using IPsec needs to be deployed to protect the data during transport.

Figure 9-6 illustrates connectivity between the headend or central site and a remote site using traditional MPLS Layer 3 VPN IP connections for the primary WAN link. The IPsec tunnel is a failover tunnel that provides redundancy for the site if the primary WAN link fails.

Figure 9-6 WAN Failover Using an IPsec Tunnel

IPsec tunnels are configured between the source and destination routers using tunnel interfaces. Packets that are destined for the tunnel have the standard formatted IP header. IP packets that are forwarded across the tunnel need an additional GRE/IPsec header placed on them, as well. As soon as the packets have the required headers, they are placed in the tunnel with the tunnel endpoint as the destination address. After the packets cross the tunnel and arrive on the far end, the GRE/IPsec headers are removed. The packets are then forwarded normally, using the original IP packet headers. An important design consideration to keep in mind is that you might need to modify the MTU sizes between the source and destination of the tunnel endpoints to account for the larger header sizes of the additional GRE/IPsec headers.
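The MTU adjustment is header arithmetic. A basic GRE encapsulation adds 24 bytes (a 20-byte outer IPv4 header plus a 4-byte GRE header without optional fields), and IPsec ESP adds more, by an amount that depends on the transform set, mode, and padding. The sketch below computes the largest inner IP packet and the matching TCP MSS. The 58-byte ESP figure is an assumption for illustration only, not a value for any particular configuration; in practice, designers often choose a round, conservative value below the computed figure.

```python
GRE_OVERHEAD = 24  # 20-byte outer IPv4 header + 4-byte base GRE header
IPV4_HEADER = 20
TCP_HEADER = 20


def tunnel_ip_mtu(physical_mtu: int = 1500, ipsec_overhead: int = 0) -> int:
    """Largest inner IP packet that fits without fragmenting the outer packet."""
    return physical_mtu - GRE_OVERHEAD - ipsec_overhead


def tcp_mss(ip_mtu: int) -> int:
    """TCP maximum segment size that fits in the tunnel IP MTU (no IP/TCP options)."""
    return ip_mtu - IPV4_HEADER - TCP_HEADER


# GRE only:
print(tunnel_ip_mtu(), tcp_mss(tunnel_ip_mtu()))  # 1476 1436

# GRE over IPsec, assuming 58 bytes of ESP overhead (illustrative only):
mtu = tunnel_ip_mtu(ipsec_overhead=58)
print(mtu, tcp_mss(mtu))  # 1418 1378
```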

QoS Strategies

Quality of service (QoS) is a fundamental network technology that has been around for over 20 years and is still relevant in today’s networks, even though bandwidth has been increasing rapidly over the years. QoS gives network operators techniques to help manage the contention for network resources and in turn provide better application experiences for end users. To help us with this, Cisco supports three main models for providing QoS service differentiation: best-effort (BE), Differentiated Services (DiffServ), and Integrated Services (IntServ). These three models are different in how they enable applications to be prioritized throughout the network and how they handle the delivery of data packets with a specified level of service.

Best-Effort QoS

The best-effort (BE) QoS model is typically the default QoS model and does not implement any QoS behaviors that give one traffic class priority over another. It is the easiest of the three models because you do not need to configure anything for it to work. You would not want to use best-effort QoS for any real-time applications such as voice or video traffic. Best effort is the last-resort treatment, applied to the remaining traffic after you have prioritized all the other important traffic classes that are sensitive to delay, jitter, and/or bandwidth within the network.

DiffServ

The DiffServ QoS model separates traffic into multiple classes that can be used to satisfy varying QoS requirements. A packet’s class can be marked directly in the packet header, which places the packet into one of several treatment categories.

With the DiffServ model, packets are classified and marked to receive a per-hop behavior (PHB) at the edge of the network. Then the rest of the network along the path to the destination uses the DSCP value to provide proper treatment. Each network device then treats the packets according to the defined PHB. The PHB can be specified in different ways, such as by using the 6-bit Differentiated Services Code Point (DSCP) setting in IP packets or by using ACLs with source and destination addresses.

Priorities are marked in each packet using DSCP values to classify the traffic according to the specified QoS policy for the traffic class. Typically, the marking is performed per packet at the QoS domain boundaries within the network. Additional policing and shaping operations can be implemented to enable greater scalability.

Table 9-6 maps applications to DSCP and decimal values.

Table 9-6 DSCP Mapping Table

Application | DSCP | Decimal Value
Network control | CS7 | 56
Internetwork control | CS6 | 48
VoIP | EF | 46
Broadcast video | CS5 | 40
Multimedia conferencing | AF4 | 34–38
Real-time interaction | CS4 | 32
Multimedia streaming | AF3 | 26–30
Signaling | CS3 | 24
Transactional data | AF2 | 18–22
Network management | CS2 | 16
Bulk data | AF1 | 10–14
Scavenger | CS1 | 8
Best-effort | Default | 0
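The decimal values in Table 9-6 follow from the bit layout of the 6-bit DSCP field. A Class Selector CSn places n in the three high-order bits (8 × n), and an Assured Forwarding codepoint AFxy encodes class x and drop precedence y as 8x + 2y, which is why each AF class in the table spans three values. The helper names below are illustrative.

```python
def cs(n: int) -> int:
    """Class Selector CSn: the three high-order DSCP bits carry n (0-7)."""
    return n << 3


def af(x: int, y: int) -> int:
    """Assured Forwarding AFxy: class x (1-4), drop precedence y (1-3)."""
    return (x << 3) | (y << 1)


EF = 46  # Expedited Forwarding

print(cs(7), cs(6), EF, cs(5))        # 56 48 46 40
print([af(4, y) for y in (1, 2, 3)])  # [34, 36, 38]
print([af(1, y) for y in (1, 2, 3)])  # [10, 12, 14]
```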

IntServ

The IntServ QoS model was designed for the needs of real-time applications such as video, multimedia conferencing, and virtual reality. It provides the end-to-end QoS treatment that real-time applications require by explicitly reserving network resources and giving QoS treatment to user packet flows. With the IntServ model, applications ask the network for an explicit resource reservation per flow, and admission control mechanisms serve as key building blocks for establishing end-to-end QoS throughout the network.

IntServ uses Resource Reservation Protocol (RSVP) to explicitly request QoS for the application along the end-to-end path through devices in the network. Before an application begins transmitting, it requests that each network device reserve the necessary bandwidth along the path. The network, in turn, accepts or rejects the reservation per flow based on available network resources.

IntServ requires several functions on each of the routers and switches between the source and destination of the packet flow:

Admission control: Determines whether the requested flows can be accepted without impacting existing reservations

Classification: Identifies traffic that requires different levels of QoS

Policing: Allows or drops packets when traffic does not conform to the QoS policy

Queuing and scheduling: Forwards traffic for permitted QoS reservations
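The admission control function can be sketched as an all-or-nothing check along the path: a per-flow reservation is accepted only if every hop has enough unreserved bandwidth, and it is then committed at each hop. This is a simplified model. Real RSVP signaling (PATH and RESV messages, soft state, and refresh timers) is not modeled, and the router names and bandwidth figures are hypothetical.

```python
def reserve(remaining_kbps: dict[str, int], path: list[str], request_kbps: int) -> bool:
    """Accept a per-flow reservation only if every hop on the path can carry it."""
    if any(remaining_kbps[hop] < request_kbps for hop in path):
        return False  # reject: at least one hop lacks resources, nothing is reserved
    for hop in path:
        remaining_kbps[hop] -= request_kbps  # commit the reservation hop by hop
    return True


# Hypothetical remaining reservable bandwidth per router, in kbps.
remaining = {"R1": 1000, "R2": 300, "R3": 1000}
path = ["R1", "R2", "R3"]

print(reserve(remaining, path, 256))  # True  (R2 left with 44 kbps)
print(reserve(remaining, path, 256))  # False (R2 cannot admit a second flow)
```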

Designing End-to-End QoS Policies

Cisco has developed many different QoS mechanisms, such as queuing, policing, and traffic shaping, to enable network operators to manage and prioritize the traffic flowing on a network. Applications that are delay sensitive, such as VoIP, require special treatment to ensure proper application functionality.

Classification and Marking

For a flow to have priority, it must be classified and marked. Classification is the process of identifying the type of traffic. Marking is the process of setting a value in the IP header based on the classification. The following are examples of technologies that support classification:

Network-based application recognition (NBAR): This technology uses deep packet content inspection to identify network applications. An advantage of NBAR is that it can recognize applications even when they do not use standard network ports. Furthermore, it matches fields at the application layer. Before NBAR, classification was limited to Layer 4 TCP and User Datagram Protocol (UDP) port numbers.

Committed access rate (CAR): CAR uses a rate limit to set precedence and allows customization of the precedence assignment by user, source or destination IP address, and application type.
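The following sketch shows the classify-then-mark sequence. It uses the kind of Layer 4 port matching that predates NBAR, which is exactly the limitation that NBAR's payload inspection removes. The DSCP values are the standard code points (EF = 46, AF41 = 34, CS3 = 24). The port choices, including the 16384 to 32767 RTP range, are illustrative assumptions rather than a recommended policy.

```python
# Standard DSCP code points (decimal values).
DSCP = {"EF": 46, "AF41": 34, "CS3": 24, "DEFAULT": 0}

# Hypothetical Layer 4 classification policy; the ports are assumptions.
VOICE_RTP_PORTS = range(16384, 32768)                          # assumed RTP media port range
CLASS_BY_PORT = {("udp", 5060): "CS3", ("tcp", 5060): "CS3"}   # SIP signaling


def classify(protocol: str, dst_port: int) -> str:
    """Map a packet to a DSCP name using only its protocol and destination port."""
    if protocol == "udp" and dst_port in VOICE_RTP_PORTS:
        return "EF"
    return CLASS_BY_PORT.get((protocol, dst_port), "DEFAULT")


def mark(tos_byte: int, dscp_name: str) -> int:
    """Write the DSCP into the upper 6 bits of the IPv4 ToS byte, keeping the 2 ECN bits."""
    return (DSCP[dscp_name] << 2) | (tos_byte & 0x03)


print(hex(mark(0x00, classify("udp", 20000))))  # 0xb8 (EF)
```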

Shaping

Traffic shaping and policing are mechanisms that inspect traffic and take action based on the traffic’s characteristics, such as DSCP or IP precedence bits set in the IP header.

Traffic shaping slows down the rate at which packets that match certain criteria are sent out an interface (egress). Traffic shaping uses a token bucket technique to release packets into the output queue at a preconfigured rate. Traffic shaping helps eliminate potential bottlenecks by throttling back the traffic rate at the source. In enterprise environments, traffic shaping is used to smooth the flow of traffic going out to the provider. This is desirable for several reasons. For example, it prevents the provider from dropping traffic that exceeds the contracted rate.
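The token bucket behavior can be illustrated with a small simulation. This is a minimal sketch that makes two assumptions: tokens refill continuously rather than in Bc-sized increments per Tc interval, and the link's own transmission time is ignored. The function name `shape` is hypothetical.

```python
def shape(packets, rate_bps, bucket_bytes):
    """Token bucket shaper: return the departure time of each packet.

    packets: list of (arrival_time_s, size_bytes) in arrival order.
    Packets are never dropped; a packet that finds too few tokens is
    buffered until the bucket refills at rate_bps.
    """
    rate = rate_bps / 8          # token refill rate in bytes per second
    tokens = bucket_bytes        # the bucket starts full
    clock = 0.0                  # time of the last token update
    departures = []
    for arrival, size in packets:
        if size > bucket_bytes:
            raise ValueError("a packet larger than the bucket can never conform")
        # FIFO: a packet leaves no earlier than its arrival or the previous departure.
        t = max(arrival, departures[-1] if departures else 0.0)
        tokens = min(bucket_bytes, tokens + (t - clock) * rate)
        clock = t
        if tokens < size:
            # Hold (buffer) the packet until enough tokens have accumulated.
            t += (size - tokens) / rate
            tokens, clock = size, t
        tokens -= size
        departures.append(t)
    return departures


# Three back-to-back 1000-byte packets shaped to 8 kbps (1000 bytes/s).
print(shape([(0.0, 1000)] * 3, rate_bps=8000, bucket_bytes=1000))  # [0.0, 1.0, 2.0]
```

The burst leaves one packet per second instead of all at once. That smoothing is what keeps traffic under a provider's contracted rate.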

Policing

Policing involves tagging or dropping traffic, depending on the match criteria. Generally, policing is used to limit the rate of traffic coming into an interface (ingress) and uses a “leaky bucket mechanism.” Policing can forward traffic that conforms to the policy and drop traffic that violates it. Policing is also referred to as committed access rate (CAR). One example of using policing is giving preferential treatment to critical application traffic by elevating it to a higher class and reducing best-effort traffic to a lower-priority class.

When you contrast traffic shaping with policing, remember that traffic shaping buffers packets, while policing can be configured to drop packets. In addition, policing propagates bursts, but traffic shaping does not.
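The shaping-versus-policing contrast can be made concrete with a policer sketch. The text describes a leaky bucket. This sketch swaps in a token-bucket meter, a common formulation for policer meters, and should be read as an illustration rather than Cisco's implementation. The `police` function and the "remark" action mentioned in its docstring are hypothetical.

```python
def police(packets, rate_bps, bucket_bytes, exceed_action="drop"):
    """Single-rate policer: return (arrival_s, size_bytes, action) per packet.

    Nothing is buffered or delayed. Conforming packets are transmitted at
    once; exceeding packets get exceed_action (for example "drop", or a
    hypothetical "remark" to a lower class).
    """
    rate = rate_bps / 8
    tokens = bucket_bytes
    last = 0.0
    results = []
    for arrival, size in packets:
        # Credit tokens earned since the previous packet, up to the bucket size.
        tokens = min(bucket_bytes, tokens + (arrival - last) * rate)
        last = arrival
        if size <= tokens:
            tokens -= size
            results.append((arrival, size, "transmit"))
        else:
            results.append((arrival, size, exceed_action))
    return results


burst = [(0.0, 1000)] * 3
print([a for _, _, a in police(burst, 8000, 1000)])  # ['transmit', 'drop', 'drop']
print([a for _, _, a in police(burst, 8000, 3000)])  # ['transmit', 'transmit', 'transmit']
```

A shaper would delay the second and third packets instead of dropping them. With a larger bucket, the policer forwards the whole burst at time 0, which is what "policing propagates bursts" means in practice.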

Queuing

Queuing refers to the buffering process used by routers and switches when they receive traffic faster than it can be transmitted. Different queuing mechanisms can be implemented to influence the order in which the different queues are serviced (that is, how different types of traffic are emptied from the queues).

QoS is an effective tool for managing a WAN’s available bandwidth. Keep in mind that QoS does not add bandwidth; it only helps you make better use of the existing bandwidth. For chronic congestion problems, QoS is not the answer; in such situations, you need to add more bandwidth. However, by prioritizing traffic, you can make sure that your most critical traffic gets the best treatment and available bandwidth in times of congestion. One popular QoS technique is to classify your traffic based on a protocol type or a matching access control list (ACL) and then give policy treatment to the class. You can define as many classes as needed to identify your most important traffic. The remaining unmatched traffic then uses a default class in which the traffic can be treated as best-effort.

Table 9-7 describes QoS options for optimizing bandwidth.

Table 9-7 QoS Options

QoS Category | Description

Classification | Identifies and marks flows

Congestion management | Handles traffic overflow using a queuing algorithm

Link-efficiency mechanisms | Reduce latency and jitter for network traffic on low-speed links

Traffic shaping and policing | Prevent congestion by policing ingress and egress flows

Congestion Management

Two types of output queues are available on routers: the hardware queue and the software queue. The hardware queue uses the first-in, first-out (FIFO) strategy. The software queue schedules packets first and then places them in the hardware queue. Keep in mind that the software queue is used only during periods of congestion. The software queue uses QoS techniques such as priority queuing, custom queuing, weighted fair queuing, class-based weighted fair queuing, low-latency queuing, and traffic shaping and policing.
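The interplay between the two queues can be sketched as a toy model. In this model, the software queue fills only when the FIFO hardware queue is full. The `OutputInterface` class and the hardware queue depth are hypothetical. The software queue here is plain FIFO, whereas a real router would apply PQ, CBWFQ, LLQ, or another scheduler at that point.

```python
from collections import deque


class OutputInterface:
    """Toy interface with a FIFO hardware queue (transmit ring) and a software queue."""

    def __init__(self, hw_limit):
        self.hw_limit = hw_limit
        self.hw_queue = deque()   # always FIFO
        self.sw_queue = deque()   # where PQ, CBWFQ, LLQ, and so on would reorder packets

    def enqueue(self, pkt):
        # No congestion: the hardware queue has room, so the software queue is bypassed.
        if len(self.hw_queue) < self.hw_limit and not self.sw_queue:
            self.hw_queue.append(pkt)
        else:
            # Congestion: hold the packet in the software queue.
            self.sw_queue.append(pkt)

    def transmit_one(self):
        """Send the head of the hardware queue, then refill it from the software queue."""
        if not self.hw_queue:
            return None
        pkt = self.hw_queue.popleft()
        if self.sw_queue:
            # A real scheduler would pick the next packet by policy; this sketch is FIFO.
            self.hw_queue.append(self.sw_queue.popleft())
        return pkt


iface = OutputInterface(hw_limit=2)
for pkt in ["p1", "p2", "p3", "p4"]:
    iface.enqueue(pkt)
print(list(iface.hw_queue), list(iface.sw_queue))  # ['p1', 'p2'] ['p3', 'p4']
```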

Priority Queuing

Priority queuing (PQ) is a queuing method that establishes four interface output queues that serve different priority levels: high, medium, normal (the default), and low. Unfortunately, PQ can starve other queues if too much data is in one queue because higher-priority queues must be emptied before lower-priority queues are serviced.
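The starvation problem follows directly from strict-priority dequeuing. This is a minimal sketch that uses the four Cisco IOS PQ queue names, with "normal" as the default queue. The `pq_dequeue` function and the traffic pattern are hypothetical.

```python
from collections import deque

# PQ queue names, highest priority first; "normal" is the default queue.
PQ_LEVELS = ("high", "medium", "normal", "low")


def pq_dequeue(queues):
    """Strict priority: always serve the highest-priority non-empty queue."""
    for level in PQ_LEVELS:
        if queues[level]:
            return queues[level].popleft()
    return None


queues = {level: deque() for level in PQ_LEVELS}
queues["low"].append("low-1")
served = []
for i in range(3):
    queues["high"].append(f"high-{i}")   # high-priority traffic keeps arriving
    served.append(pq_dequeue(queues))
print(served)  # ['high-0', 'high-1', 'high-2']; low-1 is still waiting
```

As long as the high queue never empties, the low queue is never served.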

Custom Queuing

Custom queuing (CQ) uses up to 16 individual output queues. Byte size limits are assigned to each queue so that when the limit is reached, CQ proceeds to the next queue. The network operator can customize these byte size limits. CQ is fairer than PQ because it allows some level of service to all traffic. This queuing method is considered legacy due to improvements in the other queuing methods.
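A single CQ pass can be sketched as a byte-count round robin. In this sketch, whole packets are sent, so a queue may overshoot its byte limit by part of one packet before CQ moves on. The queue contents and byte limits are hypothetical.

```python
from collections import deque


def cq_round(queues, byte_limits):
    """One custom-queuing round-robin pass.

    Each queue sends whole packets until its byte limit is reached (or it
    empties); then CQ proceeds to the next queue.
    """
    sent = []
    for queue, limit in zip(queues, byte_limits):
        sent_bytes = 0
        while queue and sent_bytes < limit:
            name, size = queue.popleft()
            sent_bytes += size
            sent.append(name)
    return sent


voice = deque([("v1", 200), ("v2", 200), ("v3", 200)])
bulk = deque([("d1", 1500), ("d2", 1500)])
print(cq_round([voice, bulk], [400, 1500]))  # ['v1', 'v2', 'd1']
print(cq_round([voice, bulk], [400, 1500]))  # ['v3', 'd2']
```

Unlike PQ, every queue gets some service in every round, which is why CQ is considered fairer.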

Weighted Fair Queuing

Weighted fair queuing (WFQ) ensures that traffic is separated into individual flows or sessions without requiring that you define ACLs. WFQ uses two categories to group sessions: high bandwidth and low bandwidth. Low-bandwidth traffic has priority over high-bandwidth traffic. High-bandwidth traffic shares the service according to assigned weight values. WFQ is enabled by default on serial interfaces running at E1 speed (2.048 Mbps) or below.
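The reason low-bandwidth flows are served ahead of bulk flows can be shown with the generic fair-queuing "virtual finish time" idea. This is a simplified sketch, not Cisco's exact WFQ algorithm. It assumes that all packets are already queued at time 0 and that flow IDs and weights are given as inputs, whereas Cisco's WFQ identifies flows and computes weights itself.

```python
import heapq


def wfq_order(flows, weights):
    """Send order for packets that are all queued at time 0.

    flows:   {flow_id: [size_bytes, ...]} in per-flow arrival order
    weights: {flow_id: weight}; a larger weight earns a larger share
    Each packet's virtual finish time is its flow's cumulative bytes divided
    by the flow's weight; the smallest finish time is sent first.
    """
    heap = []
    for flow_id, sizes in flows.items():
        finish = 0.0
        for seq, size in enumerate(sizes):
            finish += size / weights[flow_id]
            heapq.heappush(heap, (finish, flow_id, seq))
    order = []
    while heap:
        _, flow_id, seq = heapq.heappop(heap)
        order.append(f"{flow_id}-{seq}")
    return order


flows = {"telnet": [64, 64], "ftp": [1500, 1500]}
print(wfq_order(flows, {"telnet": 1, "ftp": 1}))
# ['telnet-0', 'telnet-1', 'ftp-0', 'ftp-1']: the low-bandwidth flow goes first
```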

Class-Based Weighted Fair Queuing

Class-based weighted fair queuing (CBWFQ) extends WFQ capabilities by providing support for modular user-defined traffic classes. CBWFQ lets you define traffic classes that correspond to match criteria, including ACLs, protocols, and input interfaces. Traffic that matches the class criteria belongs to that specific class. Each class has a defined queue that corresponds to an output interface.

After traffic has been matched and belongs to a specific class, you can modify its characteristics, such as by assigning bandwidth and specifying the maximum queue limit and weight. During periods of congestion, the bandwidth assigned to the class is the guaranteed bandwidth that is delivered to the class.
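The guaranteed-bandwidth behavior during congestion can be sketched as an allocation function. Each class first receives its demand, up to its guarantee. How the remaining capacity is shared varies by platform and configuration. This sketch simply assumes that leftover bandwidth is shared in proportion to the guarantees. The class names and rates are hypothetical.

```python
def cbwfq_share(link_kbps, guarantees, demands):
    """Divide a congested link among CBWFQ classes.

    guarantees: {class: guaranteed kbps} (must not exceed the link in total)
    demands:    {class: offered kbps}
    Step 1: each class gets its demand, up to its guaranteed bandwidth.
    Step 2 (assumption): leftover bandwidth goes to classes that still want
    more, in proportion to their guarantees.
    """
    if sum(guarantees.values()) > link_kbps:
        raise ValueError("guarantees exceed the link rate")
    alloc = {c: min(demands[c], guarantees[c]) for c in guarantees}
    leftover = link_kbps - sum(alloc.values())
    while leftover > 1e-9:
        hungry = [c for c in alloc if demands[c] - alloc[c] > 1e-9]
        weight = sum(guarantees[c] for c in hungry)
        if not hungry or weight == 0:
            break
        given = 0.0
        for c in hungry:
            extra = min(leftover * guarantees[c] / weight, demands[c] - alloc[c])
            alloc[c] += extra
            given += extra
        leftover -= given
    return alloc


print(cbwfq_share(
    100,
    guarantees={"business": 40, "bulk": 40, "default": 20},
    demands={"business": 80, "bulk": 10, "default": 60},
))  # {'business': 60.0, 'bulk': 10, 'default': 30.0}
```

Each class still receives at least its guarantee, or its full demand if that is smaller, regardless of how the excess is shared.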

One of the key advantages of CBWFQ is its modular nature, which makes it extremely flexible for most situations. CBWFQ policies are built with the Modular QoS CLI (MQC), which is the framework for building QoS policies. In the MQC, you can define as many classes as needed to separate network traffic.

Low-Latency Queuing

Low-latency queuing (LLQ) adds a strict priority queue to CBWFQ. The strict priority queue allows delay-sensitive traffic such as voice to be sent first, before other queues are serviced. That gives voice preferential treatment over the other traffic types. Unlike PQ, LLQ provides for a maximum threshold on the priority queue to prevent lower-priority traffic from being starved by the priority queue.
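The effect of the priority-queue threshold can be shown with one congested scheduling interval. This is a simplified sketch in which traffic is expressed as byte budgets per interval, and the numbers are hypothetical. Voice is served first but capped, so the CBWFQ classes always keep the remaining capacity.

```python
def llq_interval(link_bytes, priority_cap_bytes, voice_bytes, other_bytes):
    """One congested scheduling interval under LLQ.

    The strict priority queue (voice) is served first, but only up to
    priority_cap_bytes; voice beyond the cap is dropped so that it cannot
    starve the CBWFQ classes, which share whatever capacity is left.
    Returns (voice_sent, voice_dropped, other_sent).
    """
    if priority_cap_bytes > link_bytes:
        raise ValueError("priority cap cannot exceed the link capacity")
    voice_sent = min(voice_bytes, priority_cap_bytes)
    voice_dropped = voice_bytes - voice_sent
    other_sent = min(other_bytes, link_bytes - voice_sent)
    return voice_sent, voice_dropped, other_sent


print(llq_interval(10_000, 3_300, voice_bytes=2_000, other_bytes=20_000))  # (2000, 0, 8000)
print(llq_interval(10_000, 3_300, voice_bytes=5_000, other_bytes=20_000))  # (3300, 1700, 6700)
```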

Without LLQ, CBWFQ would not have a priority queue for real-time traffic. The additional classification of other traffic classes is done using the same CBWFQ techniques. LLQ is the standard QoS method for many VoIP networks.

Link Efficiency

With Cisco IOS, several link-efficiency mechanisms are available. Link fragmentation and interleaving (LFI), Multilink PPP (MLP), and Real-Time Transport Protocol (RTP) header compression can provide for more efficient use of bandwidth.

Table 9-8 describes Cisco IOS link-efficiency mechanisms.

Table 9-8 Link-Efficiency Mechanisms

Mechanism | Description

Link fragmentation and interleaving (LFI) | Reduces delay and jitter on slower-speed links by fragmenting large packets and interleaving smaller delay-sensitive packets (Telnet, VoIP) between the fragments.

Multilink PPP (MLP) | Bonds multiple links between two nodes, which increases the available bandwidth. MLP can be used on analog or digital links and is based on RFC 1990.

Real-Time Transport Protocol (RTP) header compression | Provides increased efficiency for applications that take advantage of RTP on slow links. Compresses RTP/UDP/IP headers from 40 bytes down to 2–5 bytes.
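The arithmetic behind LFI and RTP header compression is short enough to work through directly. This sketch assumes a 64-kbps link, an 80-byte fragment size, and a 20-byte voice payload sent 50 times per second (typical of G.729 with 20-ms packetization). Layer 2 overhead is ignored, and the function names are hypothetical.

```python
def serialization_delay_ms(frame_bytes, link_bps):
    """Time needed to clock a frame onto the link."""
    return frame_bytes * 8 * 1000 / link_bps


def voip_stream_bps(payload_bytes, rtp_udp_ip_header_bytes, packets_per_second):
    """IP-level bandwidth of one voice stream (Layer 2 overhead ignored)."""
    return (payload_bytes + rtp_udp_ip_header_bytes) * 8 * packets_per_second


# LFI: a voice packet queued behind a 1500-byte frame on a 64-kbps link
print(serialization_delay_ms(1500, 64_000))  # 187.5 ms without fragmentation
print(serialization_delay_ms(80, 64_000))    # 10.0 ms with 80-byte fragments

# RTP header compression: 40-byte headers versus 2-byte compressed headers
print(voip_stream_bps(20, 40, 50))  # 24000 bps
print(voip_stream_bps(20, 2, 50))   # 8800 bps
```

On slow links, fragmentation bounds how long a voice packet can wait behind a data frame, and header compression cuts the per-call bandwidth by roughly two-thirds in this example.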

Window Size

The window size defines the upper limit of frames that can be transmitted without getting a return acknowledgment. Transport protocols such as TCP rely on acknowledgments to provide connection-oriented, reliable transport of data segments. For example, if the TCP window size is set to 8192, the source stops sending data after 8192 bytes if no acknowledgment has been received from the destination host. In some cases, the window size might need to be modified because of unacceptable delay on larger WAN links. If the window size is not adjusted to match the delay, retransmissions can occur, which affects throughput significantly. Adjusting the window size to suit the link's bandwidth and delay can improve throughput.
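Why delay matters for the window size can be seen with two quick calculations. A sender can deliver at most one window of data per round trip. The window needed to keep a link busy is therefore the bandwidth-delay product. This sketch ignores loss, slow start, and window scaling. The 100-ms RTT and 10-Mbps link are hypothetical values.

```python
def window_limited_bps(window_bytes, rtt_ms):
    """Upper bound on throughput: one window of data per round trip."""
    return window_bytes * 8 * 1000 / rtt_ms


def window_for_link_bytes(link_bps, rtt_ms):
    """Bandwidth-delay product: the window needed to keep the link busy."""
    return link_bps * rtt_ms / 8000


print(window_limited_bps(8192, 100))           # 655360.0 bps, whatever the link speed
print(window_for_link_bytes(10_000_000, 100))  # 125000.0 bytes for a 10-Mbps link
```

With the 8192-byte window from the example above, a 100-ms WAN path cannot exceed about 655 kbps. Keeping a 10-Mbps link full would require a window of about 125,000 bytes.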

References and Recommended Readings

RFC 1990: The PPP Multilink Protocol, https://tools.ietf.org/html/rfc1990.

Cisco, “Campus QoS Design Simplified,” https://www.ciscolive.com/c/dam/r/ciscolive/emea/docs/2018/pdf/BRKCRS-2501.pdf.

Cisco, “Cisco IOS Quality of Service Solutions Configuration Guide Library, Cisco IOS Release 15M&T,” http://www.cisco.com/c/en/us/td/docs/ios-xml/ios/qos/config_library/15-mt/qos-15-mt-library.html.

Cisco, “DSCP and Precedence Values,” https://www.cisco.com/c/en/us/td/docs/switches/datacenter/nexus1000/sw/4_0/qos/configuration/guide/nexus1000v_qos/qos_6dscp_val.pdf.

Cisco, “Highly Available Wide Area Network Design,” https://www.ciscolive.com/c/dam/r/ciscolive/us/docs/2019/pdf/BRKRST-2042.pdf.

Cisco, “Module 4: Enterprise Network Design,” Designing for Cisco Internetwork Solution Course (DESGN) v3.0.

Wikipedia, “LTE: LTE (telecommunications),” en.wikipedia.org/wiki/LTE_(telecommunication).

Exam Preparation Tasks

As mentioned in the section “How to Use This Book” in the Introduction, you have a couple of choices for exam preparation: the exercises here, Chapter 13, “Final Preparation,” and the exam simulation questions on the companion website.

Review All Key Topics

Review the most important topics in the chapter, noted with the Key Topic icon in the outer margin of the page. Table 9-9 lists these key topics and the page number on which each is found.

Table 9-9 Key Topics

Key Topic Element | Description | Page

List | WAN design methodologies | 300

| Key design principles | 301

| Application requirements for data, voice, and video traffic | 301

| Physical bandwidth comparison | 302

| Availability percentages | 303

List | Deployment models | 304

Paragraph | Single-homed MPLS WANs | 305

Paragraph | Dual-homed MPLS WANs | 306

Paragraph | Hybrid WANs: Layer 3 VPN with Internet tunnels | 306

List | Internet for remote sites | 308

List | WAN backup options | 309

Paragraph | Failover | 310

Paragraph | DiffServ | 311

| DSCP mapping table | 311

Paragraph | IntServ | 312

List | Classification technologies | 312

Paragraph | Shaping | 313

Paragraph | Policing | 313

Paragraph | Queuing | 313

Table 9-7 | QoS options | 313

Table 9-8 | Link-efficiency mechanisms | 315

Complete Tables and Lists from Memory

Print a copy of Appendix D, “Memory Tables,” found on the companion website, or at least the section for this chapter, and complete the tables and lists from memory. Appendix E, “Memory Tables Answer Key,” includes completed tables and lists to check your work.

Define Key Terms

Define the following key terms from this chapter and check your answers in the glossary:

Q&A

The answers to these questions appear in Appendix A. For more practice with exam format questions, use the exam engine on the companion website.

1. Which of the following is based on the availability of technology as well as the projected traffic patterns, technology performance, constraints, and reliability?

Designing the topology

Assessing the existing network

Identifying the network requirements

Characterizing the existing network

2. Which design principle involves redundancy through hardware, software, and connectivity?

Performance

Security

Scalability

High availability

3. Which application requires round-trip times of less than 400 ms with low delay and jitter?

Data file transfer

Real-time voice

Real-time video

Interactive data

4. Which of the following is a measure of a given application’s availability to its users?

Response time

Throughput

Reliability

Performance

5. Which of the following defines the upper limit of frames that can be transmitted without a return acknowledgment?

Throughput

Link efficiency

Window size

Low-latency queuing

6. Which of the following is the availability target range for branch WAN high availability?

99.9900%

99.9000%

99.0000%

99.9999%

7. Which WAN deployment model provides for the best SLA guarantees?

MPLS WAN with dual routers

Hybrid WAN with MPLS and Internet routers

Internet WAN with dual routers

Internet WAN with a single router

8. Which Internet connectivity option provides for the highest level of resiliency?

Single-router single-homed

Single-router dual-homed

Dual-router dual-homed

GRE tunnels

9. When designing Internet for remote sites, which option provides control for security services such as URL filtering, firewalling, and intrusion prevention?

Centralized Internet

Direct Internet

Direct Internet with split tunnel

IPsec with split tunnel

10. Which design considerations are most important for Internet high availability design? (Choose two.)

Using a public BGP AS number for eBGP connections to ISPs

Using provider-independent IP address space for advertisements to ISPs

Using BGP communities

Using extended ACLs

11. Which WAN backup option provides for redundancy and additional bandwidth?

Backup link

IPsec tunnel

GRE tunnel

NAT-T

12. Which failover option can be used to back up the primary MPLS WAN connection?

BGP

GLBP

HSRP

IPsec tunnel

13. Which of the following is not a model for providing QoS?

Best-effort

DiffServ

IntServ

NSF

14. In QoS markings, what DSCP value is used for VoIP traffic?

AF4

CS2

EF

CS5

15. Which QoS method uses a strict priority queue in addition to modular traffic classes?

CBWFQ

Policing

WFQ

LLQ

16. Within RSVP, what function is used to determine whether the requested flows can be accepted?

Admission control

Classification

Policing

Queuing and scheduling

17. Which of the following slows down the rate at which packets are sent out an interface (egress) by matching certain criteria?

Policing

CAR

Shaping

NBAR

18. What is the buffering process that routers and switches use when they receive traffic faster than it can be transmitted?

Policing

Queuing

NBAR

Shaping

19. What do service providers use to define their service offerings at different levels?

SWAN

WAN tiers

WWAN

SLA

20. Which of the following has mechanisms to handle traffic overflow using a queuing algorithm?

Link-efficiency mechanisms

Classification

Congestion management

Traffic shaping and policing

21. Which QoS category identifies and marks flows?

Congestion management

Traffic shaping and policing

Link-efficiency mechanisms

Classification and marking

22. Which design principle balances the amount of security and technologies with the budget?

Performance

Standards and regulations

Cost

Security

23. Which application type has requirements for low throughput and response time within a second?

Real-time video

Interactive data

Real-time voice

Interactive video

24. Which of the following WAN connectivity options has bandwidth capabilities of 1 Gbps to 10 Gbps?

802.11a

LTE

LTE Advanced Pro

LTE Advanced

25. How many days of downtime per year occur with 99.00000% availability?

8.76 days

5.2 days

3.65 days

1.2 days

26. With dual-router and dual-path availability models, how much downtime is expected per year?

4 to 9 hours per year

26 hours per year

5 hours per year

5 minutes per year

27. Which deployment model for WAN connectivity has a single router or dual routers and uses both MPLS and an Internet VPN?

Hybrid WAN

Internet WAN

MPLS WAN

VPLS WAN

28. When designing Internet connectivity for high availability, which of the following is a design consideration?

Use public address space for internal addressing

Use private address space for route advertising to the ISPs

Block all Internet routes

Use HSRP/GLBP or an IGP internally

29. Which of the following is an important design consideration when using IPsec over GRE tunnels?

QoS classification

MTU size

Header type

Payload length

30. When using DSCP to classify traffic, which of the following is prioritized the most?

Signaling

Transactional data

Real-time interaction

VoIP