4

The Role of the Teacher in Making Sense of Classroom Experiences and Effecting Better Learning

A CHANGE IN PERSPECTIVE

In this chapter, I describe a personal and professional odyssey as I developed from being a teacher alone in my own classroom, to a researcher on students' thinking, to a professional developer of a practical framework for making sense of classroom experiences and designing environments for effecting better learning. No longer alone, I am now a participant in collaborations among teachers, researchers, developers, and policymakers. But, the story starts nearly 40 years ago.

During my first few years of teaching, I focused mostly on what I was doing as a teacher. I carefully crafted my presentations and revised them to make them clearer. I was told by my administrators and by my students that I was “one of the best teachers” they had had, but I wondered precisely what learning effects I had had on my students. The students did well on my tests as long as I kept the questions close to the procedures that I had “trained” them to do. But, when I slipped in questions that required a deep understanding of the concepts and reasoning I supposedly had been teaching, I was disappointed. I became more curious about the nature of learning in my classroom.

After only 4 years of teaching, I had the opportunity to participate in research and curriculum development on the national level with Project Physics (1970). The methods used by the researchers in that project were very sophisticated and served the needs of a large curriculum development project, but they were not useful for my interest in improving my effectiveness as a teacher in my own classroom. Although the results seemed far removed from the learning issues in my classroom, the experience sparked my interest in research.

Six years into my teaching career, I began working part-time at the University of Washington with Professor Arnold Arons, a colleague and mentor through whom (not from whom) I learned a lot about science and about the capabilities and difficulties of developing conceptual understanding. In working with our university students (mostly teachers and prospective teachers), Arons coached me to keep my hands in my pockets and make the students show me what they did, or what they would do, about the problem. Prior to that, my inclination was not unlike that of many well-meaning teachers whose approach is: “Here, let me show you how to do it,” from which the students learned little more than how “smart” I was.

Arons also coached me to listen to what the students were saying, reminding me that I had two ears but only one mouth and to use them in that proportion. In addition to my learning much about physics, I changed my perspective from a focus on me as a deliverer of knowledge to a focus on my students and what they were learning. My critical questions as a teacher became: “What is the understanding of my students?” and “What experiences can I put before the students to cause them to have to rethink their present understanding and reconstruct their understanding in order to make it more consistent with a broader set of phenomena?”

This has evolved into my line of classroom research and has affected my teaching greatly. When I finished my doctoral dissertation, I applied for a grant to support released time for me to conduct research on the teaching and learning of my students. That has become the natural, and practical, setting within which I conduct a line of research. At the same time, my primary responsibility has been to teach, or, more correctly, to be responsible for my students' learning. Now, in the classroom, I always wear both the hat of a researcher and the hat of a teacher. Each perspective helps me to direct, and to make sense of, the results of the other.

Classroom-based research questions focused my attention on what students were learning. How better could I understand my students' thinking, their conceptual understanding, and their reasoning in the natural setting of the classroom? What effects, if any, did my teaching have on their learning? How could I effect better learning? Will my results be of use beyond my classroom?

In the early stages of my action research, my activities as a researcher were informal. They amounted mostly to anecdotes that, to me, represented evidence of either the learning I intended or the learning that did not occur. I looked for correlations between gross measures like grades in my class (e.g., high school physics) and possible predictor variables like grades in other courses (e.g., geometry) and more “standardized” measures (e.g., the Classroom Test of Formal Operations; A. Lawson, personal communication, 1977).

Sometimes, I was testing an intervention as short as a particular lesson and, at other times, the effects of aspects of an entire year's program. Occasionally, I conducted a controlled experiment. Sometimes, I simply gathered descriptive data and attempted to interpret the results.

Gradually, there evolved a line of investigation in my classroom that focused on describing my students' initial and developing understanding and reasoning about the content of the courses I was teaching. Later, that line of investigation evolved into designing and testing instructional interventions explicitly adapted to address students' difficulties in understanding and reasoning.

This chapter has two parts. First, I describe my attempts as a teacher-researcher to create a coherent view within which I can make sense of my experiences in the classroom. In the second part, I describe how, from this view, I redesign classroom experiences to effect better learning on the part of my students.

MAKING SENSE OF CLASSROOM EXPERIENCES

Data Gathering in My Classroom

After I began to listen to my students more carefully and to solicit their ideas, I needed to gather data systematically. I enlisted the help of my students and their parents who, at the beginning of the school year, were asked to consider and sign consent forms for participation in my studies. I warned my students that I might be doing some atypical teaching and assessment during my efforts to better understand and address their thinking. I bought a small, battery-powered audio recorder that I kept on my desk in the classroom. Later, I bought a video camera and recorder that I set up when I anticipated discussions that might be informative to other teachers. While students interacted in small groups, I carried the audio recorder with me and turned it on when I came to an interesting discussion or when students came to me with questions or ideas they had about the phenomena under investigation. During large group discussions, when it appeared that an informative discussion was likely to develop, I started the recorder and let it run throughout the class period.

On one such occasion, early in my experience as a classroom researcher, the audio recorder was running when we were beginning the study of force and motion. I had asked the students about the forces on a book “at rest” on the front table. The students drew and wrote their answers on paper quietly. While I was walking around the room, I noticed two dominant answers, which differed on whether the table exerted a force. One suggested that the table exerted an upward force (consistent with the scientists' view), and the other suggested no such upward force. When our discussion began, I drew those two diagrams on the board and took a poll. There was an observer in the class that day, so I asked him to record the number of students who raised their hands during these brief surveys. The answers were divided approximately evenly between those who thought that the table exerted an upward force and those who thought that it did not.

I asked for volunteers to support one or the other of these positions and discovered that the difference revolved around whether one believed that passive objects like tables could exert forces. I decided to test my conjecture by putting the book on the outstretched hand of a student. We took a poll on the students' beliefs about this situation. Nearly everyone thought that the hand exerted an upward force. I inquired about the difference between the two situations, and the students argued that the hand was alive and that the person made muscular adjustments to support the book, especially when I stacked several additional books on top of the first one. The observer recorded the number of students who raised their hands. The teacher side of me wanted the students to see the similarities between the table and the hand situations, but it was clear that the students were seeing the differences. Again thinking about how I would address their concern, I pulled a spring out of a drawer, hung it from a support, and attached the book to the spring. The spring stretched until the book was supported. I asked again for diagrams and took another poll, recorded by the observer. Nearly all of the students believed that the spring must be exerting an upward force. I countered by asking whether the spring was alive or how this situation was like the book on the hand. The students did not believe that the spring was alive with muscular activity, but they said it could stretch or deform and adjust in a way that supported the book. And how was this different from the table? They suggested that the table was rigid; it did not stretch or deform like the spring. “Ability to deform or adjust” now seemed to be the difference between these believable situations and my target situation of the book on the table. I put on my teacher hat, darkened the room, pulled out a light projector, and set it up so that the light reflected off the tabletop onto the far wall.
Using this “light lever,” I alternately put heavy objects on the table and took them off, and we watched the spot of light move on the far wall. The students concluded that the table must be bending also. With my teacher hat still in place, I summarized by suggesting that force is a concept invented by humankind. As such, we are free to define force in any way we want, but the scientist notes the similarity of “at rest” in several situations. Then, wanting to be consistent, the scientist looks for one explanation that works for all of the situations: the explanation involving balanced forces. This means that the scientist's definition of force will include “passive” support by tables as well as “active” support by things like hands or springs.

The description of this action research became the material in my first published research article (Minstrell, 1982a). The situation has been analyzed since by other researchers and incorporated into curricular materials (Camp et al., 1994). It is important to note that, in this discovery mode, the “hats” of researcher and teacher are being interchanged quickly in an effort to both understand the students' thinking and affect their learning.

It was a memorable lesson for me and for my students. It made them think differently about whether actions are active or passive and about the idea of force. These lessons, which students keep referring back to later in the year or in subsequent years, I have come to call “benchmark lessons,” a metaphor borrowed from surveying, in which benchmarks are reference markers cemented into rock (diSessa & Minstrell, 1998).

To find out what students were thinking, I designed problematic situations and set them before the students at the beginning of most units of study. These tasks were typically in the form of preinstruction quizzes, but only the students' honest effort, not the “correctness” of the answer, counted for credit (Minstrell, 1982b). Students were asked for an answer and for reasoning showing how the answer made sense. From the sorts of tasks I set and from the answers and the rationale students gave, I inferred their conceptual understanding. In this research approach, I was using methods similar to the clinical interviews conducted by cognitive scientists except that I was interviewing my whole class (Bruer, 1993). As a teacher, I tended to choose activities driven by the class as a whole rather than by an individual learner. Still, the method allowed me to “know” the tentative thinking of most of the individuals in my class as well as the thinking of the class in general.

This procedure allowed me to “discover” aspects of my students' thinking. For example, before I started a dynamics unit, I used the University of Massachusetts Mechanics Diagnostic (J. Clement, personal communication, 1982) to identify ideas my students seemed to have about the forces that objects exerted on each other during interaction. Even though most high school students were able to repeat the phrase “for every action, there is an equal and opposite reaction,” they did not apply the idea to objects interacting. I found that most students initially attended to surface features and argued that the heavier, or the stronger, or the more active object, or the one that inflicted the greater change in the other object, exerted the greater force. Often, that was as far as I could go: learning about students' thinking, forming a hypothesis about it, and then instructing with that thinking in mind.

However, as time and opportunity allowed, I also attempted to “verify” my hypotheses about students' thinking. I designed problematic situations that contained those features specifically, and based on my assumptions about the students' thinking, I predicted their answers and rationale. If they responded according to my prediction, I had some degree of confirmation that my assumptions about their thinking were correct.

Notice that the procedure is consistent with science as a method. As a researcher, I was generating and testing hypotheses about students' thinking. As a teacher, I wanted to know generally what the thinking was so that I could choose or design more relevant activities. But, my efforts also had aspects of engineering, that is, designing more effective learning environments. I wanted to design benchmark lessons that might have a better chance of initiating change in students' conceptions, for example, by incorporating a broader set of phenomena, constructing new conceptions or new models that likely would be more consistent with formal scientific thinking. The results of these more systematic approaches to identifying students' ideas have appeared in a working document accumulating facets of students' thinking in physics (Minstrell, 1992; also see www.talariainc.com/facet).

Describing Facets of Students' Thinking About Events and Ideas

Between the research I was doing and the accumulation of research others had done relative to my interests in the teaching of introductory physics, my list of students' misperceptions, misconceptions, procedural errors, and so forth was getting quite long, too long. As a classroom teacher, I needed a practical way of organizing students' thinking so that I could address it in the classroom. I grouped the problematic ideas and approaches around students' understanding or application of significant “big ideas” in the discipline of physics, for example, the meaning of average velocity. Other ideas were organized around explanations or interpretations of some classic event in the physical world, for example, explaining falling bodies.

The usual term applied to these problematic ideas was “misconception,” and that bothered me. Many of the ideas seemed valid in certain contexts. For example, “heavier falls faster” is a conclusion that applies pretty well to situations like dropping a marble and a sheet of paper. Part of the complexity of understanding science is to know the conditions under which such a conclusion holds and when it doesn't and to understand why that can happen in some situations and not in others. Also, some of the schemata students applied were more procedural errors than misconceptions, for example, finding the average velocity by simply adding the initial and final velocities and dividing by 2 in a context in which an object is accelerating in a nonuniform way. In discussions, my colleagues and I talked about these various sorts of problematic aspects of students' thinking as different facets of students' thinking about physics. The term “facets” has stuck with us.
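The procedural error mentioned here, averaging the initial and final velocities when the acceleration is nonuniform, can be checked numerically. The following is a minimal Python sketch, not part of any curriculum material; the function names and the two velocity functions (`uniform`, with constant acceleration, and `nonuniform`, with v = t²) are hypothetical choices made only for illustration. Average velocity is computed as displacement divided by elapsed time, with the displacement approximated by a midpoint-rule sum.

```python
def true_average_velocity(v, t0, t1, steps=100_000):
    """Average velocity as displacement / elapsed time; the displacement is
    approximated with a midpoint-rule integral of v(t) over [t0, t1]."""
    dt = (t1 - t0) / steps
    displacement = sum(v(t0 + (i + 0.5) * dt) for i in range(steps)) * dt
    return displacement / (t1 - t0)


def endpoint_average(v, t0, t1):
    """The shortcut (v_initial + v_final) / 2, valid only when the
    acceleration is uniform (velocity changes linearly with time)."""
    return (v(t0) + v(t1)) / 2


def uniform(t):
    """Hypothetical velocity with constant acceleration."""
    return 2 * t + 1


def nonuniform(t):
    """Hypothetical velocity whose acceleration grows with time."""
    return t ** 2


for name, v in [("uniform", uniform), ("nonuniform", nonuniform)]:
    print(name, round(true_average_velocity(v, 0, 3), 3), endpoint_average(v, 0, 3))
```

For the uniform case the two methods agree; for the nonuniform case the shortcut overestimates the average velocity, which is exactly the context in which the facet becomes a problem.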

Facets, then, are individual pieces, or constructions of a few pieces of knowledge or strategies of reasoning. “Facet clusters” are the small collections of facets about some big idea or explanations of some big event. Most important of all is that facets represent a practical way of talking about students' thinking in the classroom. Teachers should be able to recognize particular facets in what students say or do in the classroom when discussing ideas or events related to the discipline (Minstrell, in press).

I make no claim about what is actually going on inside the learner's head. But, the facets perspective is useful in describing the products of students' thinking and in guiding the subsequent instruction in the classroom. I have attempted to order the facets within a cluster from those that are the most problematic to some that are less troublesome and so on up the ladder to the learning target. This rough ranking is not for scoring but to help teachers monitor progress up the ladder of facets of thinking by individual students or by the class as a whole. The ranking is typically based on either or both of two criteria: how problematic future learning will be for a student who displays the facet, and, based on experience with effective teaching, which facet tends to arise first as the unit develops. A critical test of the importance of an idea is whether, if the student leaves my class with this idea, their subsequent learning in this domain could be severely curtailed. Those ideas get addressed first. Less problematic ideas are likely treated later in the unit of instruction. Of course, in instruction, while I am challenging each problematic facet, I am attempting to build a case for the goal facet, the learning target, the standard for learning the big ideas of the unit. An example cluster of facets is discussed in the next section along with instructional implications associated with the facets.

INSTRUCTIONAL DESIGN BASED ON STUDENTS' THINKING

Using Facets to Create a Facet-Based Learning Environment

In this section of the chapter, I describe how the facet organization of students' thinking is used to create a facet-based learning environment. The purpose of the environment is to guide students as they construct a more scientific understanding of the related ideas. Facets can be and are being used to diagnose students' ideas, to choose or design instructional activities, and to design assessment activities and interpret results (Bruer, 1993; Hunt & Minstrell, 1994; Minstrell, 1989, 1992, in press; Minstrell & Stimpson, 1996). I use a particular facet cluster to demonstrate the creation and implementation of such a facet-based learning environment. I consider one part of one unit in our physics program and use this context to discuss the practical implications of my assumptions about students' understanding and learning.

One of the goals in our introductory physics course is understanding the nature of gravity and its effects and differentiating gravitational effects from the effects of ambient fluid media (e.g., air or water) on objects in them, whether the objects are at rest or moving through the fluid. For many introductory physics students, an initial difficulty involves confusion about which effects are due to gravity and which are due to the surrounding fluid medium. When one attempts to weigh something, does it weigh what it does because the air pushes down on it? Or does the air somehow distort the scale reading that would otherwise give the true weight of the object? Or does air have absolutely no effect? Because these have been issues for beginning students, the students are usually highly motivated to engage in thoughtful discussion of them. This content serves as the context for my description of a facet-based instructional design.

Eliciting Students' Ideas Prior to Instruction in Order to Build an Awareness of the Initial Understanding

At the beginning of several units or subunits, my teacher colleagues and I administer a preinstruction survey containing one or more questions. One purpose is to give the teacher knowledge of what the learning issues will be in this content with this group of students and specific knowledge of which students exhibit what sorts of initial ideas. A second purpose is to help students become more aware of the content and issues involved in the upcoming unit.

When I first began doing research on students' conceptions, I apologized to the students for asking these questions at the beginning of units. The students convinced me to stop apologizing, saying “I hate these pre-instruction questions, but they help me know what I am going to need to learn and know by the end of the unit.” At the end of the year, students reported that these questions were one of the worst things about my course but that they were also one of the best things about the course. They didn't like answering the questions, but the questions were an important tool for promoting the students' learning.

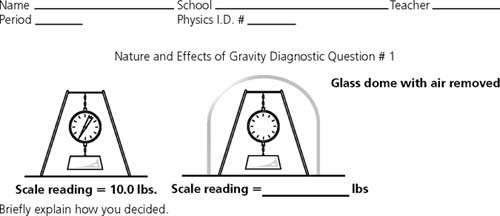

To get students involved in separating effects of gravity from effects of the ambient medium, we use the following question associated with Fig. 4.1.

First, suppose we weigh some object on a large spring scale, not unlike the ones we have at the market. The object apparently weighs 10 lbs, according to the scale. Now we put the same apparatus, scale, object and all, under a very large glass dome, seal the system around the edges, and pump out all the air. That is, we use a vacuum pump to remove all the air from under the glass dome. What will the scale reading be now? Answer as precisely as you can at this point in time. [pause] And, in the space provided, briefly explain how you decided.

Thus, we elicit the students' ideas, their best answer (a guess if need be), and their rationale for how that answer seems to make sense to them at this time. (I encourage you, the reader, to answer this question now as well and as precisely as you can. Or, to let you off the hook a bit, what are the various answers and rationales you believe students would give?)

Students write their answers and their rationale. From their words, a facet diagnosis can be made relatively easily. The facets associated with this cluster, “Separating medium effects from gravitational effects,” can be seen in Table 4.1. Students who give an answer of zero pounds for the scale reading in a vacuum usually are thinking that air only presses down, and “without air there would be no weight, like in space” (coded as Facet 319). Other students suggest a number “a little less than 10,” because “air is very light, so it doesn't press down very hard, but it does press down some”; thus, taking the air away will only decrease the scale reading slightly (Facet 318). Other students suggest there will be no change at all: “Air has absolutely no effect on the scale reading.” This answer could result either from a belief that surrounding fluids don't exert any forces or pressures on objects in them (Facet 314), or from a belief that fluid pressures on the top and bottom of an object are equal (Facet 315). Typically a few students suggest “the scale reading will be greater because air, like water, pushes upward with a buoyant force on things.” A very few students answer that there will be a large increase in the scale reading, “because of the [buoyant] support by the air.” These students seem to be thinking that air or water only exerts upward forces (Facet 317). Note that many textbooks stop at this point, and readers go away believing that the surrounding fluid only pushes upward on submerged objects. A few students suggest that although there are pressures from above and below, there is a net upward pressure by the fluid: “There is a resultant slight buoyant force” (Facet 310, an acceptable workable idea at this point).

The numbering scheme for the facets allows for more than simply marking the answers “right” or “wrong.” The codes ending with a high digit (9, 8, and sometimes 7) represent common facets used by our students at the beginning of the instruction. In the example facet cluster, 319, 318, and 317 each represent an approach that has the fluid pushing in one direction only. Codes ending in 0 or 1 are used to represent goals of learning. In the example cluster, 310 is an appropriate conceptual modeling of the situation and 311 represents an appropriate mathematical modeling of the situation. These goal facets represent the sort of reasoning or understanding that would be productive at this level of learning and instruction. Codes ending in middle digits often represent some formal learning.

The scale is only roughly ordinal in the sense that a facet ending in 5 is less likely to be problematic than a facet ending in 9. But, facets with adjacent numbers are not necessarily very distinguishable; for example, Facet 314 is not likely to be distinctly better than 315. More important than the scale is that each facet represents a different sort of understanding, one that likely requires particular lessons to address it. Even so, if and when data are coded, the teacher/researcher can visually scan the class results to identify dominant targets for the focus of instruction.
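Scanning coded class results for dominant targets amounts to tallying facet codes. The following is a minimal Python sketch under stated assumptions: the facet descriptions are paraphrased from the prose of this section rather than copied from Table 4.1; the helper names `facet_band` and `dominant_targets` are hypothetical; a final digit of 7 is always treated as an "initial" facet, although the text says this holds only sometimes; and the response list is invented for illustration, not real class data. Because the scale is only roughly ordinal, the code uses the codes only to break ties in frequency.

```python
from collections import Counter

# Facets of the cluster "Separating medium effects from gravitational effects,"
# paraphrased from the chapter's prose (hypothetical wording, not Table 4.1).
FACETS = {
    310: "net upward push by the fluid (conceptual goal)",
    311: "appropriate mathematical model (goal)",
    314: "surrounding fluid exerts no force",
    315: "fluid pressures above and below are equal",
    317: "fluid pushes up only",
    318: "air pushes down only, and only slightly",
    319: "air pushing down is what causes weight",
}


def facet_band(code: int) -> str:
    """Rough band from the last digit (assumption: 7 always counts as 'initial')."""
    last = code % 10
    if last in (0, 1):
        return "goal"
    if last >= 7:
        return "initial"
    return "middle"


def dominant_targets(codes, top=2):
    """Tally non-goal facet codes and return (code, count, description) tuples,
    most frequent first; ties go to the higher, more problematic code."""
    counts = Counter(c for c in codes if facet_band(c) != "goal")
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], -kv[0]))
    return [(code, n, FACETS.get(code, "uncoded")) for code, n in ranked[:top]]


# Hypothetical coded responses for one class (illustrative only, not real data).
responses = [319, 318, 318, 317, 310, 318, 319, 314]
for code, n, text in dominant_targets(responses):
    print(code, n, text)
```

The tally only suggests where instruction might start; since each facet calls for its own lessons, the output is a prompt for the teacher's judgment rather than a score.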

Benchmark Instruction to Initiate Change in Understanding and Reasoning

By committing their answers and rationale to paper, students demonstrate some ownership of the tentative ideas expressed. They become more engaged and interested in finding out what does happen. Students are now motivated to participate in activities that can lead to resolution. In the classroom, this benchmark lesson usually begins with a discussion of students' answers and rationale. We call this stage “benchmark instruction” because the lesson tends to be a reference point for subsequent lessons (diSessa & Minstrell, 1998). It unpacks the issues in the unit and provides clues to potential resolution of those issues. In this stage, students are encouraged to share their answers and the associated rationale. As we encourage students to express their ideas, we teachers attempt to maintain neutrality in leading the discussion. That is, we express sincere interest in hearing what the students are thinking, and we try to help them clarify these initial thoughts. But, we refrain from expressing judgment of the correctness of their answers and rationale. This helps keep the focus on students doing the thinking, and it honors the potential (but testable) validity of students' facets of knowledge and reasoning. We accept the students' experiences as valid, and we accept, as tentative hypotheses, the sense they make of those experiences (van Zee & Minstrell, 1997).

Note that many of the ideas and their corresponding facets have valid aspects.

After the initial sharing of answers and rationale, many threads of students' present understanding of the situation have been unraveled and lie on the table for consideration. The next phase of the discussion moves toward allowing fellow students to identify strengths and limitations of the various suggested individual threads. “Is this idea ever true? When, in what contexts? Is this idea valid in this context? Why or why not?”

Seeing the various threads unraveled, students are motivated to know “what is the right answer?” Because young learners' knowledge (and perhaps that of almost anyone for whom a content domain is new) resides more in features of specific contexts than in general theoretical principles, their motivation centers on what will happen in this specific situation. At this point, they are ready for the question, “How can we find out what happens?” They readily suggest “Try it. Do the experiment and see what happens.” In this case, the teacher can “just happen to be prepared” with a vacuum pump, bell jar, spring scale, and an object to hang on the scale. After first demonstrating some effects of a vacuum environment, perhaps using a partially filled balloon, the class is ready to determine the result of the original problem relating to scale readings in a vacuum.

The experiment is run, air is evacuated, and the result is “no detectable difference in the scale reading in the vacuum versus in air.” Note that this is not the final understanding I want by the end of the unit, but it does summarize the results based on the measurements we have taken with the instruments available so far. This is an opportunity for the class to revisit their earlier investigations in measurement. “What can we conclude from the experiment? Given the uncertainty of the scale reading, can we say for sure that the value is exactly the same? What original suggested answers can we definitely eliminate? What answers can we not eliminate, knowing the results of this experiment? What ideas can we conclude do not apply?” We can eliminate the answer “it would weigh nothing,” and we can eliminate the idea that “downward push of air is what causes weight.” We can eliminate the answers reflecting a “great weight increase,” and we can eliminate the idea that “air has a major buoyant effect on this object.” We cannot eliminate “the scale reading would be a tiny bit more” or “a tiny bit less,” but also, we don't know that “exactly the same” is the right answer either. Thus, our major conclusion is that the effects of gravity and the effects of a surrounding fluid medium (e.g., air) are different sets of effects. This idea will be important again later when we investigate and try to explain the motions of falling bodies.

Meanwhile, we have addressed the thinking of those students who thought that air pressure was what caused weight, the 319 facet. We still have many other students believing that air only presses down (318). In fact, about 50% of my students typically believe that air only pushes down (318 and 319). Plus, there is another 10% to 15% who believe that air only pushes up (317). Many of these other issues are left unresolved for now. We don't yet have the definitive answer. We are mirroring an aspect of the nature of science. Rarely do experiments give results indicating exactly the “right” answer (unless the experiments are highly constrained, as in the Klahr, Chen, & Toth chapter in this volume, regarding training on the control of variables). But, experiments usually do eliminate many of the potential answers and ideas. Additional concerns about the direction and magnitude of pushes by air and water are taken up in subsequent investigations.

Elaboration Instruction to Explore Contexts of Application of Other Threads Related to New Understanding and Reasoning

Additional discussion and laboratory investigations allow students to test the contexts of validity for other threads of understanding and reasoning related to the effects of the surrounding fluid medium. Ordinary daily experiences are brought out for investigation: a glass of water with a plastic card over the opening, then inverted (done carefully, the water does not come out); a vertical straw dipped in water and a finger placed over the upper end (the water does not come out of the lower end until the finger is removed from the top); an inverted cylinder lowered into a larger cylinder of water (it “floats,” and as you push the inverted cylinder down, you can see the water rise relative to the inside of the inverted cylinder); and a water-filled 2-L plastic soda bottle with three holes at different levels down the side (uncapped, water from the lowest hole comes out fastest; capped, air goes in the top hole and water comes out the bottom hole).

It typically takes two or three class periods for students to work through each of these situations and to present their results from investigating one of the situations to the rest of the class. Although each experiment is a new, specific context, the teacher encourages the students to come to general conclusions about the effects of the surrounding fluid. “In what directions can air and water push?” “What can each experiment tell us that might relate to all of the other situations, including the original benchmark, preinstruction problem?”

Possible related conclusions include “air and water can push upward, and from the side as well as down,” and “the push from water is greater the deeper one goes in the container.” The latter is probably true of air as well, given reported differences in air pressure between sea level and greater altitudes such as those at the tops of mountains or the levels where planes fly. That can also get brought out through text material and by sharing firsthand experiences, such as siphoning water from a higher level to a lower level or boiling water at high elevation versus at low elevation. Of course, new issues get opened up too, including “stickiness” of water and “sucking” by vacuums. The former gets us into cohesion and adhesion. Sucking is a hypothesized mechanism that appears to work but is not needed; addressing it requires raising the issue of parsimony in scientific explanation. Each of these elaboration experiences gives students additional evidence that Facets 319, 318, and 317 are not appropriate. The experiences also provide evidence that water and air can push in all directions and that the push is greater the deeper one goes in the fluid. These are the sorts of big ideas that emerge out of the experiences and consensus discussions.

In addition to encouraging investigation of issues, the teacher can help students note the similarity between what happens to an object submerged in a container of water and what happens to an object submerged in the “ocean of air” around the earth. What are the similarities and differences between air and water as surrounding fluids? To the extent that students learn that the fluid properties of air and water are similar, they can transfer what they learn about water to the less observable properties of air.

A final experiment for this subunit affords students the opportunity to try their new understanding and reasoning in yet one more specific context. A solid metal slug is weighed successively in air, then partially submerged in water (scale reading is slightly less), then totally submerged just below the surface of the water (scale reading is even less), and finally, totally submerged deep in the container of water (scale reading is the same as in any other position, as long as it is totally submerged). From the scale reading in air, students are asked to predict (qualitatively compare) each of the other results, then do the experiment, record their results, and finally, interpret those results. One last task asks the students to relate these results and the results of the previous experiments to the original benchmark experience. This activity specifically addresses the facets that water does not push on things (314) and that water pushes equally from above and below (315). Thus, the order of the instructional activities moves gradually up the facet ladder.

By seeing that air and water have similar fluid properties, students are prepared to build an analogy. “Weighing in water is to weighing out of water (in air) as weighing in the ocean of air is to weighing out of the ocean of air (in a vacuum).” Thus, students are now better prepared to answer the original question about weighing in a surround of air, and they have developed a more principled view of the situation.

Some teachers have also had students make the same observations for the metal slug being weighed in other fluids less dense than water (but denser than air). The differences in the scale reading turn out to be less dramatic than for water. Thus, students have additional evidence that the same sorts of effects exist but that the magnitude of the effects is smaller, which helps them bridge from denser fluids (e.g., water), to progressively less dense fluids (e.g., alcohol), to very low-density fluids (e.g., air).

Because students' cognition is often associated with the specific features of each situation, a paramount task for instruction is to help students recognize the common features that cut across the various situations. Students argue consistently within a given context. That is, to the extent that different situations have similar surface features, students will employ similar facets. If the surface features of two situations differ, they may alter the facet applied. Thus, we need to guide them in seeing common features across the variety of situations. The features we want them to recognize as common are those closer to the principles incorporated in the goal facets, for example, 310 and 311. Then, the facets of their understanding and reasoning can be generalized to apply across situations with different surface features: air and water push upward and sideways in addition to downward, and the push (in all directions) is greater deeper in the container. Rather than starting with the common, theoretical principles and pointing out their specific applications, we start with specific situations, identify ideas that apply in each, attempt to recognize common features, and derive ideas (facets) that are more productive across situations.

Some of the students' original ideas may combine to help interpret another situation. In the last experiment, the water pushes upward on the bottom of the metal cylinder and downward on its top. The upward push is apparently greater, accounting for the drop in the scale reading when the slug is weighed in water. The water in the straw is pushed up by the air beneath the “slug” of water and pushed down by the air in the straw above it. The upward push is apparently greater than the downward push, and the difference supports the weight of the slug of water in the straw. The surface features of the two experiments are very different. Students need encouragement to see that, in both situations, the slug is apparently partially supported by the difference between the pushes by the fluid above and the fluid below it. Part of coming to understand physics is coming to see the world differently. The general, principled view can be constructed inductively from experiences and from the ideas that apply across a variety of specific situations. The facets are our representation of the students' ideas. They originate with and are used by the students, although they may be elicited from the students by the skilled instructor with curricular tools based on the facets research. Thus, the generalized understandings and explanations are constructed by students from their own earlier ideas. In this way, I am attempting to bridge from students' ideas to the formal ideas of physics.

Assessment Embedded Within Instruction

Sometime after the benchmark instruction and the more focused elaboration lessons, after the class begins to come to tentative resolution on some of the issues, it is useful to give students the opportunity to individually check their understanding and reasoning. Although I sometimes administer these questions on paper in large-group format, I prefer to allow the students to quiz themselves at the point they feel they are ready. They think they understand, but they need opportunities to check and fine-tune the understanding. To address this need for ongoing formative assessment, Earl Hunt, other colleagues at the University of Washington, and I developed a computerized tool to assist the teacher in individualizing the assessment and keeping records on student progress. When students feel they are ready, they are encouraged to work through computer-presented questions and problems, appropriate to the unit being studied, using a program called DIAGNOSER. I discuss the program in some detail here because it represents instructional innovation in the form of technological assistance with learning, consistent with designing facet-based learning environments.

Technically, the present version of DIAGNOSER is a HyperCard program, carefully engineered to run on a Macintosh Classic or newer. In 1987, we wanted to keep within these design requirements to ensure that we had a program that could be run in the schools. A more complete technical description is presented elsewhere (Levidow, Hunt, & McKee, 1991). Here, I discuss what the DIAGNOSER looks like from the students' view and how it is consistent with my pedagogical framework (Hunt & Minstrell, 1994).

The DIAGNOSER is organized into units that parallel units of instruction in our physics course. Within our example unit, there is a cluster of questions that focus on the effects of a surrounding medium on scale readings when attempting to weigh an object. Within each cluster, the DIAGNOSER contains several question sets made up of pairs of questions. Each set may address specific situations dealt with in the recent instruction, to emphasize to students that I want them to understand and be able to explain these situations. Sets may also depict a novel problem context related to this cluster. I want continually to encourage students to extend the contexts of their understanding and reasoning.

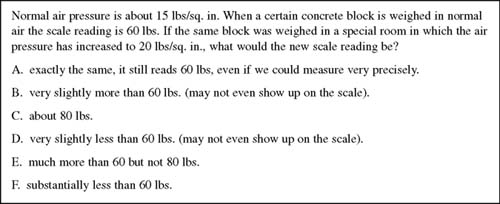

The delivery of each question set consists of four HyperCard screens. The first screen contains a phenomenological question, typically asking the student “what would happen if … ?” For example, the phenomenological question in Fig. 4.2 asks the student to predict what would happen to an original scale reading of 60 lbs if air pressure were increased from its normal value of approximately 15 lbs/sq in to about 20 lbs/sq in.

The appropriate observations or predictions are presented in a multiple-choice format, with each alternative representing an answer derivable from understanding or reasoning associated with a facet in this cluster. From the student's choice, the system makes a preliminary facet diagnosis. For example, if, in Fig. 4.2, the student had chosen either answer B or E, each of those answers would be consistent with the thinking that air pushes down and therefore contributes to scale readings (Facet 318.1).

FIG. 4.2. DIAGNOSER Phenomenological question screen 3100.

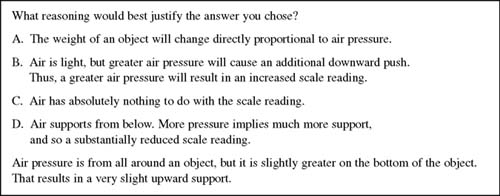

The second screen asks the student, “What reasoning best justifies the answer you chose?” Again, the format is multiple choice, with each choice briefly paraphrasing a facet as applied to this problem context. From the student's choice, the system makes a second diagnosis. For example, on the screen presented in Fig. 4.3, the student may have picked answer B, suggesting that although air is light, it still contributes to an increased scale reading (again, Facet 318.1).

On the computer, each of the two screens has an alternative “write a note to the instructor” button. Clicking on this option allows the student to leave a note about their interpretation of the question or about their difficulties with the content. These notes can be scrutinized by the teacher/researcher to assist the individual student. The notes are also helpful in improving DIAGNOSER questions, and in modifying activities to improve learning.

Students are also allowed to move back and forth between the question and the reasoning screens. This is done to encourage students to reflect on experiences, to think about why they have answered the question the way they have, and to seek more general reasons for answering questions in specific contexts.

The reasoning screen is followed by a diagnosis feedback screen. What this screen says depends on precisely what the student did on the question and reasoning screens. Logically, there are five generic possibilities. First, the student could have chosen the “right” answer for the phenomenological question and productive, “physics-like” reasoning, in which case the student is given an encouraging message acknowledging that she was being consistent. Second, the student could have chosen an incorrect prediction and an associated problematic reasoning, both coded with the same problematic facet, as in our previous example. In this case, the student is encouraged for being consistent but urged to follow a prescription for help (on the next screen; see Fig. 4.4). Third, the student could have chosen a correct prediction but problematic reasons. Fourth, the student could have chosen an incorrect prediction for what seem to be productive reasons. In either of these last two cases, the apparent inconsistency between prediction and reasoning is pointed out, and students are encouraged to follow the prescription for help (see Fig. 4.5). Finally, the student may have chosen an incorrect prediction and inconsistent, questionable reasoning. In this case, the double trouble is noted, and the student is told that the system will attempt to address the phenomenological trouble first and then address the reasoning, if that is still necessary.

FIG. 4.3. DIAGNOSER Reasoning question screen 3100.

The fourth screen in each sequence is the prescription screen. If the student's answers were diagnosed as associated with productive understanding and reasoning, then the student is very mildly commended and is encouraged to try more questions to be surer. The rationale is that although the student's ideas seem OK in this context, overcongratulating the student may allow him to get by with a problematic facet that just didn't happen to show up in this problem situation.

If the student's answers were diagnosed as potentially troublesome, the student is issued a prescription lesson associated with the problematic facet. For example, if the student chose one or more answers consistent with “air pushes downward,” he would get a prescription suggesting experiences or information inconsistent with that facet (318.1; see Fig. 4.6). Typically, the student is encouraged to think about how his ideas would apply to some common, everyday experience, or he may be encouraged to do an experiment he has not yet done. In either case, the experience was chosen because its results would likely challenge the problematic facet apparently invoked by the student. The prescriptive lessons have been suggested, and tested for effectiveness, by classroom teachers.

FIG. 4.4. DIAGNOSER Feedback screen for consistent answer and reasoning but indicating a conceptual difficulty.

FIG. 4.5. DIAGNOSER Feedback screen for inconsistent answer and reasoning and indicating problematic reasoning.
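The five generic cases on the diagnosis feedback screen amount to a small decision procedure over the two facet codes diagnosed from the question and reasoning screens. The Python sketch below is only an illustration of that logic, not the DIAGNOSER's HyperCard implementation; the names `GOAL_FACETS`, `Feedback`, `is_goal`, and `classify` are hypothetical, and it assumes, for illustration, that an answer counts as productive when its base facet code is one of the goal facets 310 or 311.

```python
from enum import Enum

# Assumption for illustration: goal facets are those whose base code is 310 or 311.
GOAL_FACETS = {"310", "311"}


class Feedback(Enum):
    """The five generic outcomes of the diagnosis feedback screen."""
    CONSISTENT_PRODUCTIVE = 1          # right prediction, productive reasoning
    CONSISTENT_PROBLEMATIC = 2         # wrong prediction, same problematic facet in reasoning
    RIGHT_PREDICTION_BAD_REASONS = 3   # inconsistency is pointed out
    WRONG_PREDICTION_GOOD_REASONS = 4  # inconsistency is pointed out
    DOUBLE_TROUBLE = 5                 # address the prediction first, then the reasoning


def is_goal(facet_code: str) -> bool:
    """True if a code such as '310' or '318.1' has a goal facet as its base code."""
    return facet_code.split(".")[0] in GOAL_FACETS


def classify(prediction_facet: str, reasoning_facet: str) -> Feedback:
    """Map the facets diagnosed on the question and reasoning screens to a feedback case."""
    prediction_ok = is_goal(prediction_facet)
    reasoning_ok = is_goal(reasoning_facet)
    if prediction_ok and reasoning_ok:
        return Feedback.CONSISTENT_PRODUCTIVE
    if prediction_ok:
        return Feedback.RIGHT_PREDICTION_BAD_REASONS
    if reasoning_ok:
        return Feedback.WRONG_PREDICTION_GOOD_REASONS
    if prediction_facet == reasoning_facet:
        return Feedback.CONSISTENT_PROBLEMATIC
    return Feedback.DOUBLE_TROUBLE


print(classify("318.1", "318.1"))  # Feedback.CONSISTENT_PROBLEMATIC
```

For the example in Figs. 4.2 and 4.3, where both answers were coded 318.1, the sketch returns the "consistent but problematic" case: the student is credited for consistency and pointed to the prescription. Checking the goal status of each answer first separates the two inconsistent cases; only when both answers are problematic does the exact facet code matter.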

Note the emphasis on consistency. From the students' view, they are generally trying to be consistent. We want to reward students for having thoughtful reasons for the answers they give; hence the two-part feedback: first for consistency and second for the potential productivity of their ideas.

If students generally are seeking consistency, we can challenge them in situations where they have not been consistent. On the DIAGNOSER, one challenge comes if there is an apparent inconsistency between the facet associated with their answer to the question and the facet associated with their reasoning. Even if students are consistent between question and reasoning in the DIAGNOSER, if their facet diagnosis is not consistent with class experiments or with common daily experience, we attempt to point out that inconsistency to them. Thus, we capitalize on students' tendency to be consistent (within a given situation) and then challenge them to extend that consistency from within a situation to across situations as well. Note that consistency here means consistency in apparent facet diagnosis. Students are usually more consistent within a particular problem situation than they are between problem situations. Features of different problems tend to cue up different facets.

The DIAGNOSER is typically run in parallel with other instructional activities going on in the classroom. Some students are working on DIAGNOSER while others are working in groups on paper-and-pencil problem solving or are conducting additional laboratory investigations. In the case of our example subunit, the class may even be moving ahead into the next subunit, more directly investigating gravitational effects. Although students may work on the program individually or in small groups of two or three, they are not graded on their performance on it. It is a formative assessment tool to help them assess their own thinking, and it is a tool to help the teacher assess additional instructional needs for the class as a whole or for students individually. The teacher gets the facet diagnosis for each student's or each small group's responses and can use that information to identify which students or groups still need help, and with which facet of their understanding. It is a device to assist the students and teacher in keeping a focus on understanding and reasoning, on students' learning relative to goal facets.

FIG. 4.6. DIAGNOSER Prescription lesson screen for facet 318.1, “air pressure is downward only.”

Application of Ideas and Further Assessment of Knowledge

A unit of instruction may consist of several benchmark experiences and many more elaboration experiences, together with the associated DIAGNOSER sessions. Sometime after a unit is completed, students' understanding and reasoning are tested to assess the extent to which instruction has yielded more productive understanding and reasoning. When designing questions for paper-and-pencil assessment, we attempt to create at least some questions that test the extent to which understanding and reasoning are applied beyond the specific contexts dealt with in class. Has learning been a genuine reweaving into a new fabric of understanding that generalizes across specific contexts, or has instruction resulted in more brittle, situation-bound knowledge?

In designing tests, the cluster of facets becomes the focus for a particular test question. In our example cluster, test questions probe students' thinking about situations in which the local air pressure is substantially changed, questions similar to the elicitation question or the DIAGNOSER question used as examples. Have students moved away from believing that air pressure is the cause of gravitational force? Other questions focus on interpreting the effects of a surrounding fluid medium, as they help us infer the forces on an object in that fluid medium. Do students now believe that the fluid pushes in all directions? Can they cite evidence for the idea that greater pushes by the fluid are applied at greater depths? Can they integrate all these ideas together to correctly predict, qualitatively, what effects the fluid medium will have on an object in the fluid? In subsequent units, dynamics for example, do students integrate this qualitative understanding of relative pushes to identify and diagram relative magnitudes of forces acting on submerged objects?

Whether the question is in multiple-choice or open-response format, I attempt to develop a list of expected answers and associated rationales based on the individual facets in that cluster. After inventing a problem context relevant to the cluster, we read each facet in the cluster, predict the answer, and characterize the sort of rationale students would use if they were operating under this facet. Assuming we have designed clear question situations, and that our lists and characterizations of facets have been sufficiently descriptive of students' understanding and reasoning, we can trace the development of their thinking by recording the trail of facets from preinstruction, through DIAGNOSER, to postunit quizzes, and to final tests in the course.
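The trail of facets described above can be kept as a simple per-student record ordered by assessment point. The Python sketch below is a hypothetical illustration, not the record format of the actual DIAGNOSER or of our course records; the class `FacetTrail` and the stage names are invented for illustration and simply follow the sequence named in the text, and the facet codes in the usage lines are made up.

```python
from dataclasses import dataclass, field
from typing import Optional

# Assessment points in the order named in the text (names are illustrative).
STAGES = ["preinstruction", "DIAGNOSER", "postunit quiz", "final test"]


@dataclass
class FacetTrail:
    """Hypothetical record of one student's diagnosed facets across assessments."""
    student: str
    facets: dict = field(default_factory=dict)  # stage name -> facet code

    def record(self, stage: str, facet_code: str) -> None:
        """Store the facet diagnosed at a given assessment point."""
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage}")
        self.facets[stage] = facet_code

    def trail(self) -> list:
        """Return (stage, facet) pairs in instructional order, skipping unassessed stages."""
        return [(stage, self.facets[stage]) for stage in STAGES if stage in self.facets]

    def latest(self) -> Optional[str]:
        """Return the most recently diagnosed facet, or None if nothing is recorded."""
        pairs = self.trail()
        return pairs[-1][1] if pairs else None


# Usage with invented codes for one hypothetical student.
trail = FacetTrail("student A")
trail.record("preinstruction", "319")
trail.record("DIAGNOSER", "315")
trail.record("final test", "310")
print(trail.trail())  # [('preinstruction', '319'), ('DIAGNOSER', '315'), ('final test', '310')]
```

Ordering by a fixed list of stages, rather than by the order in which entries happen to be recorded, keeps each trail readable as a progression from preinstruction toward the goal facets.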

FACET-BASED LEARNING ENVIRONMENTS PROMOTE LEARNING

For the sample of results described in this section, I continue to focus on separating gravitational effects from effects of the surrounding fluid. The answers for each question associated with diagnosis or assessment were coded using the facets from the cluster for “Separating medium effects from gravitational effects” (see Table 4.1, “310 Cluster of Facets”).

The preinstruction question called for free-response answers. For that question, 3% of our students wrote answers coded at the most productive level of understanding (see Table 4.2). On the embedded assessment (DIAGNOSER), given after students completed the elaboration experiences, 81% of the answers to a similar multiple-choice phenomenological question and 59% of the answers to the associated reasoning question were coded 310.

Revisiting the “object in fluid” context in subsequent instruction helped maintain the most productive level of understanding and reasoning about buoyancy at nearly the 60% level. By the end of the first semester, the class had integrated force-related ideas (dynamics) into the context of fluid effects on objects submerged in the fluid medium. On a question in this area, 60% of the students chose, and then briefly defended in writing, an answer coded 310. On the end-of-year final, for the three related questions, answers coded 310 were chosen by students 55%, 56%, and 63% of the time, respectively. Given the difficulty of conceptually understanding the mechanisms of buoyancy, and given that the instructional activities took less than 5 hours of class, I consider these numbers a considerable accomplishment.

When we look at the other end of the spectrum of understanding and reasoning facets, we see substantial movement away from believing that “downward pressure causes gravitational effects” (Facet 319) and that “fluid mediums push mainly in the downward direction” (Facet 318). On the free-response preinstruction quiz, these two facets accounted for 49% of the data (see Table 4.2). In the DIAGNOSER, they accounted for about 5% to 20% of the data, depending on the question. Similar results were achieved on both the first-semester final and the second-semester final, some 7 months after the few days of focused instruction. Much of this movement away from the problematic “pressure down” facets did not make it all the way to the most productive facet. Much student thinking moved instead to intermediate facets: that there are no pushes by the surrounding fluid of air (Facet 314) or that the pushes up and down by the surrounding air are equal (Facet 315). Many of these same students were not stuck on these intermediate facets in the water context, only in the air context. This makes sense, because they have direct evidence that when the cylinder is submerged, water pressure causes a difference in the scale reading. In the air case, the preponderance of the evidence is that if there is any difference due to depth, it doesn't matter. For example, force diagrams for a metal slug hung in the air in the classroom don't usually include forces by the surrounding air, because those effects are negligible except for very lightweight objects, such as balloons.

| Scale reading* (lbs) | Percent | Facet code |
|---|---|---|
| s ≥ 20 | 2% | 317 |
| 20 > s > 11 | 11% | 317 |
| 11 ≥ s > 10 | 3% | 310 |
| s = 10 | 35% | 314/315 |
| 10 > s ≥ 9 | 12% | 318 |
| 9 > s > 1 | 17% | 318 |
| 1 ≥ s ≥ 0 | 20% | 319 |

* “s” represents the predicted scale reading.
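The coding rule in the table above can be read as a function from a predicted scale reading to a facet code. The Python sketch below is hypothetical: the names `ROWS`, `code_prediction`, and `percent_by_facet` are invented for illustration, and it assumes, as the bins appear to imply, that the object reads 10 lbs in air, so that a prediction of exactly 10 lbs means no change. It also totals the table's percentages by facet, which reproduces the 49% figure for Facets 318 and 319 (12 + 17 + 20) cited in the text.

```python
from collections import defaultdict

# Rows of the table: (bin of predicted reading s, percent of answers, facet code).
ROWS = [
    ("s >= 20", 2, "317"),
    ("20 > s > 11", 11, "317"),
    ("11 >= s > 10", 3, "310"),
    ("s = 10", 35, "314/315"),
    ("10 > s >= 9", 12, "318"),
    ("9 > s > 1", 17, "318"),
    ("1 >= s >= 0", 20, "319"),
]


def code_prediction(s: float) -> str:
    """Assign a facet code to a predicted scale reading s (lbs) using the table's bins.

    Adjacent rows that share a facet code (317, 318) are merged into one test.
    Comments compare s with an assumed 10-lb reading in air.
    """
    if s < 0:
        raise ValueError("a scale reading cannot be negative")
    if s > 11:
        return "317"      # reading rises substantially
    if s > 10:
        return "310"      # reading rises slightly
    if s == 10:
        return "314/315"  # no change
    if s > 1:
        return "318"      # reading falls
    return "319"          # reading falls to (nearly) zero


def percent_by_facet() -> dict:
    """Total the table's percentages for each facet code."""
    totals = defaultdict(int)
    for _bin, percent, facet in ROWS:
        totals[facet] += percent
    return dict(totals)


totals = percent_by_facet()
print(totals["318"] + totals["319"])  # 49
```

Merging the adjacent bins that share a code keeps the function short while leaving the table's finer bins available for reporting.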

Similar facet-based instruction is now being used by networks of physics teachers across Washington State (Hunt & Minstrell, 1994). These teachers are getting improved learning effects. After adopting and using a facet-based learning environment in their classes, on the average, these teachers were able to increase the performance of the next cohort of students by 15% over the previous cohort.

The teachers see their role as changed. Formerly, they focused on what they were doing as teachers. They were presenting activities. Now they focus on student learning, on guiding students across the gap from initial facets of thinking toward the learning target facet. The teachers attempt to make sense of what their students are saying and doing, and they design and adjust their instruction to challenge problematic facets and effect improved learning. They have a practical, theoretical view of teaching and learning that they can implement in their classrooms.

The idea of facet-based learning environments is generalizable to other teaching and learning contexts. David Madigan and colleagues have done preliminary research to identify facets of thinking in introductory statistics at the university level. Complete with a web-served version of DIAGNOSER, they have effected better learning in their courses (Schaffner, 1997). At Talaria Inc. in Seattle, researchers and developers are building facet-based learning environments to effect better learning within many topics in elder care. Yoshi Nakamura and David Madigan (1997) have identified facets of understanding in pain management for elder care. By diagnosing learners' understanding of pain management and implementing instruction specific to the diagnosis, they effected significantly better understanding among caregivers. Minstrell at Talaria, in concert with Earl Hunt at the University of Washington and the Office of Superintendent of Public Instruction for Washington State, is creating facet-based assessment systems to serve teachers and to effect better learning in science and mathematics for students in the state of Washington. It appears that facets and facet clusters can help teachers in many content areas to better focus on their students' thinking and to effect better learning.

FINAL REMARKS

Teaching is an ill-defined problem where “… every teacher-student interaction can change the teacher's goals and choice of operators” (Bruer, 1993). There are multiple solutions that depend on the prior experiences, knowledge, interests, and motivation of the students and teachers who are present. The teaching-learning process is too complex to specify completely in advance, but I have found that there are specific actions we can take and tools we can use to effect better learning in complex, content-rich learning environments.

Teachers need to be able to make sense of experiences in the classroom and to organize their instructional actions within a coherent framework of learning and teaching, such as that suggested by Donovan, Bransford, and Pellegrino (1999). Facet-based learning environments have provided that coherent framework for me and my fellow teachers. Facets are used to describe students' initial ideas and can be used to track the development of ideas during instruction. The facet clusters provide us with a guide for bridging the gap from students' ideas to the learning standards and from research to practice (Minstrell, in press).

When we know the problematic ideas students use, we can design our curriculum, our assessment items, and our teaching strategies to elicit these ideas and challenge them. Our instructional design to foster development of learner understanding involves eliciting students' ideas with preinstruction questions. Then, we carefully design benchmark lessons that challenge some initial ideas and elaboration lessons that allow students to propose and test initial hypotheses. The classroom becomes a community generating understanding together through respectfully encouraging expression of tentative ideas while at the same time promoting critical reflection and analysis of evidence for and against the shared ideas. Elaboration activities offer an opportunity to test the reliability and validity of new ideas and to explore contexts of application of the new ideas. Through discussions of experiences, we encourage students to summarize principles, the big ideas, that apply across a range of contexts. Assessment embedded within instruction allows students to check on their understanding and allows teachers to monitor progress and identify instructional needs of individuals or of the whole class. Revisiting the ideas and issues by applying them in subsequent units helps students see the value of the new ideas. In subsequent assessments, we go back to the facets of problematic thinking to see the extent to which students have moved away from thinking based on superficial features and have moved toward more principled thinking. The organization and instruction based on facets is effective in promoting development of even the weaker students. Tuning our instruction to address the specific thinking of learners can improve learning across the sciences.

Teachers need to better understand their students' thinking. They need to design or choose instruction to effect better learning. The facets perspective supports teachers' attempts to make sense of classroom experiences and to facilitate more effective learning.

ACKNOWLEDGMENTS

I would like to thank my former students and my teacher colleagues for sharing their classrooms with me and with each other, and for contributing substantially to the data leading to facets descriptions of students' thinking and to benchmark and elaboration lessons. I would also like to thank my colleagues at Talaria Inc. and at the University of Washington, most notably Earl Hunt, for their critical review of theoretical ideas and for their assistance in the transfer of the facets framework into technological tools. Together, I know we have constructed a practical, research-based framework that can assist students and teachers in effecting better learning. Finally, I want to thank the editors of this book and their reviewers for their thoughtful, constructive comments.

Research and development of the facets perspective was supported by grants from the James S. McDonnell Foundation Program in Cognitive Studies for Educational Practice (CSEP) and the National Science Foundation Program for Research in Teaching and Learning (RTL). The writing of this chapter was partially supported by grants #REC-9906098 and #REC-9972999 from the National Science Foundation Program for Research in Educational Policy and Practice (REPP). The ideas expressed are those of the author and do not necessarily represent the beliefs of the foundations.

REFERENCES

Bruer, J. (1993). Schools for thought: A science for learning in the classroom. Cambridge, MA: MIT Press.

Camp, C., & Clement, J. (1994). Preconceptions in mechanics: Lessons dealing with students' conceptual difficulties. Dubuque, IA: Kendall Hunt.

diSessa, A., & Minstrell, J. (1998). Cultivating conceptual change with benchmark lessons. In J. Greeno & S. Goldman (Eds.), Thinking practices in mathematics and science learning (pp. 155–187). Mahwah, NJ: Lawrence Erlbaum Associates.

Donovan, M., Bransford, J., & Pellegrino, J. (Eds.). (1999). How people learn: Bridging research and practice. Washington, DC: National Research Council.

Hunt, E., & Minstrell, J. (1994). A collaborative classroom for teaching conceptual physics. In K. McGilly (Ed.), Classroom lessons: Integrating cognitive theory and classroom practice (pp. 51–74). Cambridge, MA: MIT Press.

Levidow, B., Hunt, E., & McKee, C. (1991). The DIAGNOSER: A HyperCard tool for building theoretically based tutorials. Behavior Research Methods, Instruments, & Computers, 23(2), 249–252.

Minstrell, J. (1982a, January). Explaining the “at rest” condition of an object. The Physics Teacher, pp. 10–14.

Minstrell, J. (1982b). Conceptual development research in the natural setting of the classroom. In M. B. Rowe (Ed.), Education for the 80's: Science (pp. 129–143). Washington, DC: National Education Association.

Minstrell, J. (1989). Teaching science for understanding. In L. Resnick & L. Klopfer (Eds.), ASCD 1989 Yearbook: Toward the thinking curriculum: Current cognitive research. Washington, DC: Association for Supervision and Curriculum Development.

Minstrell, J. (1992). Facets of students' knowledge and relevant instruction. In R. Duit, F. Goldberg, & H. Niedderer (Eds.), Proceedings of the international workshop: Research in physics learning—theoretical issues and empirical studies (pp. 110–128). Kiel, Germany: The Institute for Science Education at the University of Kiel (IPN).

Minstrell, J. (in press). Facets of students' thinking: Designing to cross the gap from research to standards-based practice. In K. Crowley, C. Schunn, & T. Okada (Eds.), Designing for science: Implications from professional, instructional, and everyday science. Mahwah, NJ: Lawrence Erlbaum Associates.

Minstrell, J., & Stimpson, V. (1996). A classroom environment for learning: Guiding students' reconstruction of understanding and reasoning. In R. Glaser & L. Schauble (Eds.), Innovations in learning: New environments for education (pp. 175–202). Mahwah, NJ: Lawrence Erlbaum Associates.

Nakamura, Y., & Madigan, D. (1997). A facet-based learning approach for elder care (FABLE): A computer-based tool for teaching geriatric pain management skills. Unpublished manuscript.

Project Physics. (1970). New York: Holt, Rinehart & Winston.

Schaffner, A. (1997). Tools for the advancement of undergraduate statistics education. Unpublished doctoral dissertation, University of Washington, Seattle.

van Zee, E., & Minstrell, J. (1997). Developing shared understanding in a physics classroom. International Journal of Science Education, 19(2), 209–228.