Chapter 2. Reliability and Safety Problems

If anything can go wrong,

it will (and at the worst

possible moment).

A VARIANT OF MURPHY’S LAW

ONE OF THE MOST IMPORTANT CHALLENGES in the design of computer-related systems is to provide meaningful assurances that a given system will behave dependably, despite hardware malfunctions, operator mistakes, user mistakes, malicious misuse, and problems in the external environment—including lightning strikes and power outages that affect system performance, as well as circumstances outside the computer systems relating to processes being controlled or monitored, such as a nuclear meltdown.

With respect to reliability issues, a distinction is generally made among faults, errors, and failures in systems. Basically, a fault is a condition that could cause the system to fail. An error is a deviation from expected behavior. Some errors can be ignored or overcome. However, a failure is an error that is not acceptable. A critical failure is a failure that can have serious consequences, such as causing damage to individuals or otherwise undermining the critical requirements of the system.

The following 11 sections of this chapter present past events that illustrate the difficulties in attaining reliability and human safety. These events relate to communication systems and computers used in space programs, defense, aviation, public transit, control systems, and other applications. Many different causes and effects are represented. Each application area is considered in summary at the end of the section relating to that area.

It is in principle convenient to decouple reliability issues from security issues. This chapter considers reliability problems, whereas security is examined in Chapters 3 and 5. In practice, it is essential that both reliability and security be considered within a common framework, because of factors noted in Chapter 4. Such an overall system perspective is provided in Chapter 7. In that chapter, a discussion of how to increase reliability is given in Section 7.7.

2.1 Communication Systems

Communications are required for many purposes, including for linking people, telephones, facsimile machines, and computer systems with one another. Desirable requirements for adequate communications include reliable access and reliable transmission, communication security, privacy, and availability of the desired service.

2.1.1 Communications Reliability Problems

This section considers primarily cases in which the reliability requirements failed to be met for communications involving people and computers. Several security-related communications problems are considered in Chapter 5.

The 1980 ARPAnet collapse

In the 1970s, the ARPAnet was a network linking primarily research computers, mostly within the United States, under the auspices of the Advanced Research Projects Agency (ARPA) of the Department of Defense (DoD). (It was the precursor of the Internet, which now links together many different computer networks worldwide.) On October 27, 1980, the ARPAnet experienced an unprecedented outage of approximately 4 hours, after years of almost flawless operation. This dramatic event is discussed in detail by Eric Rosen [139], and is summarized briefly here.

The collapse of the network resulted from an unforeseen interaction among three different problems: (1) a hardware failure resulted in bits being dropped in memory; (2) a redundant single-error-detecting code was used for transmission, but not for storage; and (3) the garbage-collection algorithm for removing old messages was not resistant to the simultaneous existence of one message with several different time stamps. This particular combination of circumstances had not arisen previously. In normal operation, each net node broadcasts a status message to each of its neighbors once per minute; 1 minute later, that message is then rebroadcast to the iterated neighbors, and so on. In the absence of bogus status messages, the garbage-collection algorithm is relatively sound. It keeps only the most recent of the status messages received from any given node, where recency is defined as the larger of two close-together 6-bit time stamps, modulo 64. Thus, for example, a node could delete any message that it had already received via a shorter path, or a message that it had originally sent that was routed back to it. For simplicity, 32 was considered a permissible difference, with the numerically larger time stamp being arbitrarily deemed the more recent in that case. In the situation that caused the collapse, the correct version of the time stamp was 44 [101100 in binary], whereas the bit-dropped versions had time stamps 40 [101000] and 8 [001000]. The garbage-collection algorithm noted that 44 was more recent than 40, which in turn was more recent than 8, which in turn was more recent than 44 (modulo 64). Thus, all three versions of that status message had to be kept.

From then on, the normal generation and forwarding of status messages from the particular node were such that all of those messages and their successors with newer time stamps had to be kept, thereby saturating the memory of each node. In effect, this was a naturally propagating, globally contaminating effect. Ironically, the status messages had the highest priority, and thus defeated all efforts to maintain the network nodes remotely. Every node had to be shut down manually. Only after each site administrator reported back that the local nodes were down could the network be reconstituted; otherwise, the contaminating propagation would have begun anew. This case is considered further in Section 4.1. Further explanation of the use of parity checks for detecting any arbitrary single bit in error is deferred until Section 7.7.

The 1986 ARPAnet outage

Reliability concerns dictated that logical redundancy should be used to ensure alternate paths between the New England ARPAnet sites and the rest of the ARPAnet. Thus, seven separate circuit links were established. Unfortunately, all of them were routed through the same fiber-optic cable, which was accidentally severed near White Plains, New York, on December 12, 1986 (SEN 12, 1, 17).1

The 1990 AT&T system runaway

In mid-December 1989, AT&T installed new software in 114 electronic switching systems (Number 4 ESS), intended to reduce the overhead required in signaling between switches by eliminating a signal indicating that a node was ready to resume receiving traffic; instead, the other nodes were expected to recognize implicitly the readiness of the previously failed node, based on its resumption of activity. Unfortunately, there was an undetected latent flaw in the recovery-recognition software in every one of those switches.

On January 15, 1990, one of the switches experienced abnormal behavior; it signaled that it could not accept further traffic, went through its recovery cycle, and then resumed sending traffic. A second switch accepted the message from the first switch and attempted to reset itself. However, a second message arrived from the first switch that could not be processed properly, because of the flaw in the software. The second switch shut itself down, recovered, and resumed sending traffic. That resulted in the same problem propagating to the neighboring switches, and then iteratively and repeatedly to all 114 switches. The hitherto undetected problem manifested itself in subsequent simulations whenever a second message arrived within too short a time. AT&T finally was able to diagnose the problem and to eliminate it by reducing the messaging load of the network, after a 9-hour nationwide blockade.2 With the reduced load, the erratic behavior effectively went away by itself, although the software still had to be patched correctly to prevent a recurrence. Reportedly, approximately 5 million calls were blocked.

The ultimate cause of the problem was traced to a C program that contained a break statement within an if clause nested within a switch clause. This problem can be called a programming error, or a deficiency of the C language and its compiler, depending on your taste, in that the intervening if clause was in violation of expected programming practice. (We return to this case in Section 4.1.)

The Chicago area telephone cable

Midmorning on November 19, 1990, a contractor planting trees severed a high-capacity telephone line in suburban Chicago, cutting off long-distance service and most local service for 150,000 telephones. The personal and commercial disruptions were extensive. Teller machines at some banks were paralyzed. Flights at O’Hare International Airport were delayed because the air-traffic control tower temporarily lost contact with the main Federal Aviation Administration air-traffic control centers for the Chicago area. By midafternoon, Illinois Bell Telephone Company had done some rerouting, although outages and service degradations continued for the rest of the day.3

The New York area telephone cable

An AT&T crew removing an old cable in Newark, New Jersey, accidentally severed a fiber-optic cable carrying more than 100,000 calls. Starting at 9:30 A.M. on January 4, 1991, and continuing for much of the day, the effects included shutdown of the New York Mercantile Exchange and several commodities exchanges; disruption of Federal Aviation Administration (FAA) air-traffic control communication in the New York metropolitan area, Washington, and Boston; lengthy flight delays; and blockage of 60 percent of the long-distance telephone calls into and out of New York City.4

Virginia cable cut

On June 14, 1991, two parallel cables were cut by a backhoe in Annandale, Virginia. The Associated Press (perhaps having learned from the previously noted seven-links-in-one-conduit separation of New England from the rest of the ARPAnet) had requested two separate cables for their primary and backup circuits. Both cables were cut at the same time, because they were adjacent!

Fiber-optic cable cut

A Sprint San Francisco Bay Area fiber-optic cable was cut on July 15, 1991, affecting long-distance service for 3.5 hours. Rerouting through AT&T caused congestion there as well.5

SS-7 faulty code patch

On June 27, 1991, various metropolitan areas had serious outages of telephone service. Washington, D.C. (6.7 million lines), Los Angeles, and Pittsburgh (1 million lines) were all affected for several hours. Those problems were eventually traced to a flaw in the Signaling System 7 protocol implementation, and were attributed to an untested patch that involved just a few lines of code. A subsequent report to the Federal Communications Commission (written by Bell Communications Research Corp.) identified a mistyped character in the April software release produced by DSC Communications Corp. (“6” instead of “D”) as the cause, along with faulty data, failure of computer clocks, and other triggers. This problem caused the equipment and software to fail under an avalanche of computer-generated messages (SEN, 17, 1, 8-9).

Faulty clock maintenance

A San Francisco telephone outage was traced to improper maintenance when a faulty clock had to be repaired (SEN, 16, 3, 16-17).

Backup battery drained

A further telephone-switching system outage caused the three New York airports to shut down for 4 hours on September 17, 1991. AT&T’s backup generator hookup failed while a Number 4 ESS switching system in Manhattan was running on internal power (in response to a voluntary New York City brownout); the system ran on standby batteries for 6 hours until the batteries depleted, unbeknownst to the personnel on hand. The two people responsible for handling emergencies were attending a class on power-room alarms. However, the alarms had been disconnected previously because of construction in the area that was continually triggering the alarms! The ensuing FAA report concluded that about 5 million telephone calls had been blocked, and 1174 flights had been canceled or delayed (SEN 16, 4; 17, 1).

Air-traffic control problems

An FAA report to a House subcommittee listed 114 “major telecommunication outages” averaging 6.1 hours in the FAA network consisting of 14,000 leased lines across the country, during the 13-month period from August 1990 to August 1991. These outages led to flight delays and generated safety concerns. The duration of these outages ranged up to 16 hours, when a contractor cut a 1500-circuit cable at the San Diego airport in August 1991. That case and an earlier outage in Aurora, Illinois, are also noted in Section 2.4. The cited report included the following cases as well. (See SEN 17, 1.)

• On May 4, 1991, four of the FAA’s 20 major air-traffic control centers shut down for 5 hours and 22 minutes. The cause: “Fiber cable was cut by a farmer burying a dead cow. Lost 27 circuits. Massive operational impact.”

• The Kansas City, Missouri, air-traffic center lost communications for 4 hours and 16 minutes. The cause: “Beaver chewed fiber cable.” (Sharks have also been known to chomp on undersea cables.) Causes of other outages cited included lightning strikes, misplaced backhoe buckets, blown fuses, and computer problems.

• In autumn 1991, two technicians in an AT&T long-distance station in suburban Boston put switching components on a table with other unmarked components, and then put the wrong parts back into the machine. That led to a 3-hour loss of long-distance service and to flight delays at Logan International Airport.

• The failure of a U.S. Weather Service telephone circuit resulted in the complete unavailability of routine weather-forecast information for a period of 12 hours on November 22, 1991 (SEN 17, 1).

Problems in air-traffic control systems are considered more generally in Section 2.4, including other cases that did not involve communications difficulties.

The incidence of such outages and partial blockages seems to be increasing, rather than decreasing, as more emphasis is placed on software and as communication links proliferate. Distributed computer-communication systems are intrinsically tricky, as illustrated by the 1980 ARPAnet collapse and the similar nationwide saturation of AT&T switching systems on January 15, 1990. The timing-synchronization glitch that delayed the first shuttle launch (Section 2.2.1) and other problems that follow also illustrate difficulties in distributed systems. A discussion of the underlying problems of distributed systems is given in Section 7.3.

2.1.2 Summary of Communications Problems

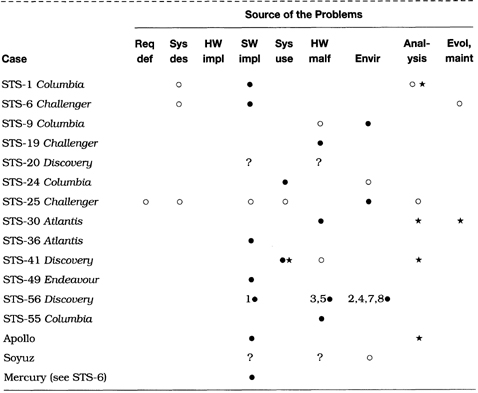

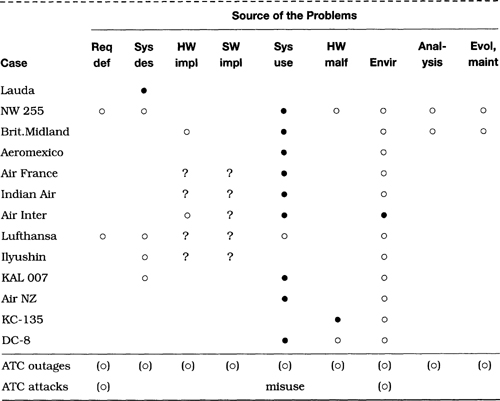

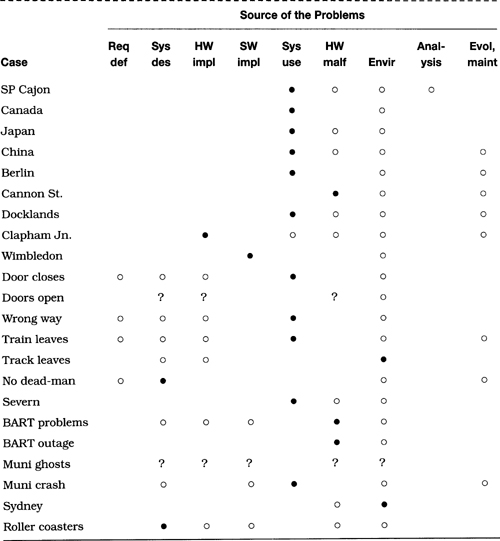

Section 2.1 considers a collection of cases related to communications failures. Table 2.1 provides a brief summary of the causes of these problems. The column heads relate to causative factors enumerated in Section 1.2, and are as defined in Table 1.1 in Section 1.5. Similarly, the table entries are as defined in Table 1.1.

Table 2.1 Summary of communications problems

The causes and effects in the cases in this section vary widely from one case to another. The 1980 ARPAnet outage was triggered by hardware failures, but required the presence of a software weakness and a hardware design shortcut that permitted total propagation of the contaminating effect. The 1986 ARPAnet separation was triggered by an environmental accident, but depended on a poor implementation decision. The 1990 AT&T blockage resulted from a programming mistake and a network design that permitted total propagation of the contaminating effect. A flurry of cable cuttings (Chicago, New York area, Virginia, and the San Francisco Bay Area) resulted from digging accidents, but the effects in each case were exacerbated by designs that made the systems particularly vulnerable. The SS-7 problems resulted from a faulty code patch and the absence of testing before that patch was installed. The New York City telephone outage resulted from poor network administration, and was complicated by the earlier removal of warning alarms.

In summary, the primary causes of the cited communications problems were largely environmental, or were the results of problems in maintenance and system evolution. Hardware malfunctions were involved in most cases, but generally as secondary causative factors. Software was involved in several cases. Many of the cases have multiple causative factors.

The diversity of causes and effects noted in these communications problems is typical of what is seen throughout each of the sections of this chapter relating to reliability, as well as in succeeding chapters relating to security and privacy. However, the specific factors differ from one type of application to another. As a consequence, the challenge of avoiding similar problems in the future is itself quite diversified. (See, for example, Chapter 7.)

2.2 Problems in Space

In this section, we consider difficulties that have occurred in space programs, involving shuttles, probes, rockets, and satellites, to illustrate the diversity among reliability and safety problems.

One of the most spectacular uses of computers is in the design, engineering, launch preparation, and real-time control of space vehicles and experiments. There have been magnificent advances in this area. However, in the firm belief that we must learn from the difficulties as well as the successes, this section documents some of the troubles that have occurred.

Section 2.2.1 considers problems that have arisen in the shuttle program, plus a few other related cases involving risks to human lives. Section 2.2.2 considers space exploration that does not involve people on board.

2.2.1 Human Exploration in Space

Despite the enormous care taken in development and operation, the space program has had its share of problems. In particular, the shuttle program has experienced many difficulties. The National Aeronautics and Space Administration (NASA) has recorded hundreds of anomalies involving computers and avionics in the shuttle program. A few of the more instructive cases are itemized here, including several in which the lives of the astronauts were at stake. Unfortunately, details are not always available regarding what caused the problem, which makes it difficult to discuss how that problem could have been avoided, and which may at times be frustrating to both author and reader.

The order of presentation in this section is more or less chronological. The nature of the causes is extremely diverse; indeed, the causes are different in each case. Thus, we begin by illustrating this diversity, and then in retrospect consider the nature of the contributing factors. In later sections of this chapter and in later chapters, the presentation tends to follow a more structured approach, categorizing problems by type. However, the same conclusion is generally evident. The diversity among the causes and effects of the encountered risks is usually considerable — although different from one application area to another.

The first shuttle launch (STS-1)

One of the most often-cited computer-system problems occurred about 20 minutes before the scheduled launch of the first space shuttle, Columbia, on April 10, 1981. The problem was extensively described by Jack Garman (“The ‘Bug’ Heard ‘Round the World” [47]), and is summarized here. The launch was put on hold for 2 days because the backup computer could not be initialized properly.

The on-board shuttle software runs on two pairs of primary computers, with one pair in control as long as the simultaneous computations on both agree with each other, with control passing to the other pair in the case of a mismatch. All four primary computers run identical programs. To prevent catastrophic failures in which both pairs fail to perform (for example, if the software were wrong), the shuttle has a fifth computer that is programmed with different code by different programmers from a different company, but using the same specifications and the same compiler (HAL/S). Cutover to the backup computer would have to be done manually by the astronauts. (Actually, the backup fifth computer has never been used in mission control.)

Let’s simplify the complicated story related by Garman. The backup computer and the four primary computers were 1 time unit out of phase during the initialization, as a result of an extremely subtle 1-in-67 chance timing error. The backup system refused to initialize, even though the primary computers appeared to be operating perfectly throughout the 30-hour countdown. Data words brought in one cycle too soon on the primaries were rejected as noise by the backup. This system flaw occurred despite extraordinarily conservative design and implementation. (The interested reader should delve into [47] for the details.)

A software problem on shuttle (STS-6)

The Johnson Space Center Mission Control Center had a pool of four IBM 370/168 systems for STS mission operations on the ground. During a mission, one system is on-line. One is on hot backup, and can come on-line in about 15 seconds. During critical periods such as launch, reentry, orbit changes, or payload deployment, a third is available on about 15 minutes notice. The third 370 can be supporting simulations or software development during a mission, because such programs can be interrupted easily. Prior to STS-6, the 370 software supported only one activity (mission, simulation, or software development) at a time. Later, the Mature Operations Configuration would support three activities at a time. These would be called the Dual and Triple Mission Software deliveries. STS-6 was the first mission after the Dual Mission Software had been installed. At liftoff, the memory allocated to that mission was saturated with the primary processing, and the module that allocated memory would not release the memory allocated to a second mission for Abort Trajectory calculation. Therefore, if the mission had been aborted, trajectories would not have been available. After the mission, a flaw in the software was diagnosed and corrected (SEN 11, 1).

Note that in a similar incident, Mercury astronauts had previously had to fly a manual reentry because of a program bug that prevented automatic control on reentry (SEN 8, 3).

Shuttle Columbia return delayed (STS-9)

On December 8, 1983, the shuttle mission had some serious difficulties with the on-board computers. STS-9 lost the number 1 computer 4 hours before reentry was scheduled to begin. Number 2 took over for 5 minutes, and then conked out. Mission Control powered up number 3; they were able to restart number 2 but not number 1, so the reentry was delayed. Landing was finally achieved, although number 2 died again on touchdown. Subsequent analysis indicated that each processor failure was due to a single loose piece of solder bouncing around under 20 gravities. Astronaut John Young later testified that, had the backup flight system been activated, the mission would have been lost (SEN 9, 1, 4; 14, 2).

Thermocouple problem on Challenger (STS-19)

On July 29, 1985, 3 minutes into ascent, a failure in one of two thermocouples directed a computer-controlled shutdown of the center engine. Mission control decided to abort into orbit, 70 miles up — 50 miles lower than planned. Had the shutdown occurred 30 seconds earlier, the mission would have had to abort over the Atlantic. NASA has reset some of the binary thermocouple limits via subsequent software changes. (See SEN 14, 2.)

Discovery launch delay (STS-20)

An untimely—and possibly experiment-aborting—delay of the intended August 25, 1985, launch of the space shuttle Discovery was caused when a malfunction in the backup computer was discovered just 25 minutes before the scheduled launch. The resulting 2-day delay caused serious complications in scheduling of the on-board experiments, although the mission was concluded successfully. A reporter wrote, “What was puzzling to engineers was that the computer had worked perfectly in tests before today. And in tests after the failure, it worked, though showing signs of trouble.”6 (Does that puzzle you?)

Arnold Aldrich, manager of the shuttle program at Johnson, was quoted as saying “We’re about 99.5 percent sure it’s a hardware failure.” (The computers were state of the art as of 1972, and were due for upgrading in 1987.) A similar failure of the backup computer caused a 1-day delay in Discovery’s maiden launch in the summer of 1984 (SEN 10, 5).

Near-disaster on shuttle Columbia (STS-24)

The space shuttle Columbia came within 31 seconds of being launched without enough fuel to reach its planned orbit on January 6, 1986, after weary Kennedy Space Center workers mistakenly drained 18,000 gallons of liquid oxygen from the craft, according to documents released by the White House panel that probed the shuttle program. Although NASA said at the time that computer problems were responsible for the scrubbed launch, U.S. Representative Bill Nelson from Florida flew on the mission, and said that he was informed of the fuel loss while aboard the spacecraft that day.

According to the appendix [to the panel report], Columbia’s brush with disaster . . . occurred when Lockheed Space Operations Co. workers “inadvertently” drained super-cold oxygen from the shuttle’s external tank 5 minutes before the scheduled launch. The workers misread computer evidence of a failed valve and allowed a fuel line to remain open. The leak was detected when the cold oxygen caused a temperature gauge to drop below approved levels, but not until 31 seconds before the launch was the liftoff scrubbed.7

The Challenger disaster (STS-25)

The destruction of the space shuttle Challenger on January 28, 1986, killed all seven people on board. The explosion was ultimately attributed to the poor design of the booster rockets, as well as to administrative shortcomings that permitted the launch under cold-weather conditions. However, there was apparently a decision along the way to economize on the sensors and on their computer interpretation by removing the sensors on the booster rockets. There is speculation that those sensors might have permitted earlier detection of the booster-rocket failure, and possible early separation of the shuttle in an effort to save the astronauts. Other shortcuts were also taken so that the team could adhere to an accelerating launch schedule. The loss of the Challenger seems to have resulted in part from some inadequate administrative concern for safety—despite recognition and knowledge of hundreds of problems in past flights. Problems with the O-rings — especially their inability to function properly in cold weather—and with the booster-rocket joints had been recognized for some time. The presidential commission found “a tangle of bureaucratic underbrush”: “Astronauts told the commission in a public hearing . . . that poor organization of shuttle operations led to such chronic problems as crucial mission software arriving just before shuttle launches and the constant cannibalization of orbiters for spare parts.” (See the Chicago Tribune, April 6, 1986.)

Shuttle Atlantis computer fixed in space (STS-30).8

One of Atlantis’ main computers failed on May 7, 1989. For the first time ever, the astronauts made repairs — in this case, by substituting a spare processor. It took them about 3.5 hours to gain access to the computer systems by removing a row of lockers on the shuttle middeck, and another 1.5 hours to check out the replacement computer. (The difficulty in making repairs was due to a long-standing NASA decision from the Apollo days that the computers should be physically inaccessible to the astronauts.) (See SEN 14, 5.)

Shuttle Atlantis backup computer on ground delays launch (STS-36)

A scheduled launch of Atlantis was delayed for 3 days because of “bad software” in the backup tracking system. (See SEN 15, 2.)

Shuttle Discovery misprogramming (STS-41)

According to flight director Milt Heflin, the shuttle Discovery was launched on October 6, 1990, with incorrect instructions on how to operate certain of its programs. The error was discovered by the shuttle crew about 1 hour into the mission, and was corrected quickly. NASA claims that automatic safeguards would have prevented any ill effects even if the crew had not noticed the error on a display. The error was made before the launch, and was discovered when the crew was switching the shuttle computers from launch to orbital operations. The switching procedure involves shutting down computers 3 and 5, while computers 1 and 2 carry on normal operations, and computer 4 monitors the shuttle’s vital signs. However, the crew noticed that the instructions for computer 4 were in fact those intended for computer 2. Heflin stated that the problem is considered serious because the ground prelaunch procedures failed to catch it.9

Intelsat 6 launch failed

In early 1990, an attempt to launch the $150 million Intelsat 6 communications satellite from a Titan III rocket failed because of a wiring error in the booster, due to inadequate human communications between the electricians and the programmers who failed to specify in which of the two satellite positions the rocket had been placed; the program had selected the wrong one (Steve Bellovin, SEN 15, 3). Live space rescue had to be attempted. (See the next item.)

Shuttle Endeavour computer miscomputes rendezvous with Intelsat (STS-49)

In a rescue mission in May 1992, the Endeavour crew had difficulties in attempting to rendezvous with Intelsat 6. The difference between two values that were numerically extremely close (but not identical) was effectively rounded to zero; thus, the program was unable to observe that there were different values. NASA subsequently changed the specifications for future flights.10

Shuttle Discovery multiple launch delays (STS-56)

On April 5, 1993, an attempt to launch Discovery was aborted 11 seconds before liftoff. (The engines had not yet been ignited.) (There had been an earlier 1-hour delay at 9 minutes before launch, due to high winds and a temperature-sensor problem.) Early indications had pointed to failure of a valve in the main propulsion system. Subsequent analysis indicated that the valve was operating properly, but that the computer system interpreted the sensor incorrectly—indicating that the valve had not closed. A quick fix was to bypass the sensor reading, which would have introduced a new set of risks had there subsequently been a valve problem.11

This Discovery mission experienced many delays and other problems. Because of the Rube Goldberg nature of the mission, each event is identified with a number referred to in Table 2.2 at the end of the section. (1) The initial attempted launch was aborted. (2) On July 17, 1993, the next attempted launch was postponed because ground restraints released prematurely. (3) On July 24, a steering-mechanism failure was detected at 19 seconds from blastoff. (4) On August 4, the rescheduled launch was postponed again to avoid potential interference from the expected heavy Perseid meteor shower on August 11 (in the aftermath of the reemergence of the comet Swift-Tuttle). (5) On August 12, the launch was aborted when the engines had to be shut down 3 seconds from blastoff, because of a suspected failure in the main-engine fuel-flow monitor. (An Associated Press report of August 13, 1993, estimated the expense of the scrubbed launches at over $2 million.) (6) The Columbia launch finally went off on September 12, 1993. Columbia was able to launch two satellites, although each had to be delayed by one orbit due to two unrelated communication problems. (7) An experimental communication satellite could not be released on schedule, because of interference from the payload radio system. (8) The release of an ultraviolet telescope had to be delayed by one orbit because communication interference had delayed receipt of the appropriate commands from ground control.

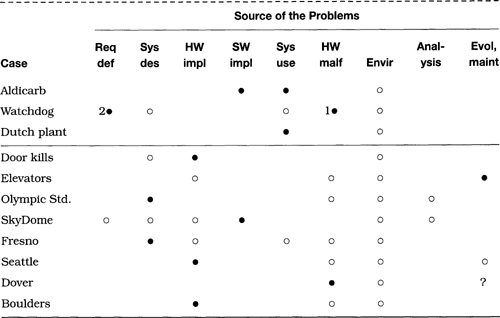

Table 2.2 Summary of space problems

Shuttle Columbia aborted at -3 seconds (STS-55)

On March 22, 1993, Columbia’s main engines were shut down 3 seconds before liftoff, because of a leaky valve. (The mission finally went off on April 26, 1993.)

Columbia launch grounded due to safety software (STS-58)

The planned launch of Columbia on October 14, 1993, had to be scrubbed at the last minute because of a “glitch in the computer system designed to ensure safety on the ground during launches.”12 The launch went off successfully 4 days later.

Apollo: The moon was repulsive

The Apollo 11 software reportedly had a program flaw that resulted in the moon’s gravity appearing repulsive rather than attractive. This mistake was caught in simulation. (See SEN 9, 5, based on an oral report.)

Software mixup on the Soyuz spacecraft

According to Aviation Week (September 12, 1988, page 27), in the second failed reentry of the Soviet Soyuz-TM spacecraft on September 7, 1988, the engines were shut down in the last few seconds due to a computer problem: “Instead of using the descent program worked out for the Soviet-Afghan crew, the computer switched to a reentry program that had been stored in the Soyuz TM-5 computers in June for a Soviet-Bulgarian crew. Soviet officials said . . . that they did not understand why this computer mixup occurred.” Karl Lehenbauer noted that the article stated that the crew was committed to a reentry because they had jettisoned the orbital module that contained equipment that would be needed to redock with the Mir space station. The article also noted that Geoffrey Perry, an analyst of Soviet space activities with the Kettering Group, said “the crew was not flying in the same Soyuz that they were launched in, but instead were in a spacecraft that had been docked with the Mir for about 90 days. Perry said that is about half the designed orbital life of the Soyuz.” (See SEN 13, 4.)

2.2.2 Other Space-Program Problems

The problems itemized in Section 2.2.1 involved risks to human lives (except for the Intelsat 6 launch, which is included because of the rescue mission). Some of these cases necessitated extra-vehicular human actions as well. Next, we examine problems relating to uninhabited space missions.

Atlas-Agena went beserk due to missing hyphen

On what was expected to be the first U.S. spacecraft to do a Venus flyby, an Atlas-Agena became unstable at about 90 miles up. The $18.5 million rocket was then blown up on command from the control center at Cape Kennedy. Subsequent analysis showed that the flight plan was missing a hyphen that was a symbol for a particular formula.13

Mariner I lost

Mariner I was intended to be the first space probe to visit another planet (Venus). Apparent failure of an Atlas booster during launch on July 22, 1962, caused the range officer to destroy the booster rather than to risk its crashing in a populated area. However, in reality, the rocket was behaving correctly, and it was the ground-based computer system analyzing the launch that was in error—as the result of a software bug and a hardware failure. The software bug is noteworthy. The two radar systems differed in time by 43 milliseconds, for which the program supposedly compensated. The bug arose because the overbar had been left out in the handwritten guidance equations in the expression R dot bar sub n. Here R denotes the radius; the dot indicates the first derivative — that is, the velocity; the bar indicates smoothed rather than raw data; and n is the increment. When a hardware fault occurred, the computer processed the track data incorrectly, leading to the erroneous termination of the launch.14

There had been earlier erroneous reports that the problem was due to a comma that had been entered accidentally as a period in a DO statement (which really was a problem with the Mercury software, as noted in the next item). The confusion was further compounded by a report that the missing overbar might have been the interchange of a minus sign and a hyphen, but that was also false (SEN 13, 1). This case illustrates the difficulties we sometimes face in trying to get correct details about a computer failure.

DO I=1.10 bug in Mercury software

Project Mercury’s FORTRAN code had a syntax error something like DO I=1.10 instead of DO I=1,10. The substitution of a comma for a period was discovered in an analysis of why the software did not seem sufficiently accurate, even though the program had been used successfully in previous suborbital missions; the error was corrected before the subsequent orbital and moon flights, for which it might have caused problems. This case was reported in RISKS by Fred Webb, whose officemate had found the flaw in searching for why the program’s accuracy was poor (SEN 15, 1). (The erroneous 1.10 would cause the loop to be executed exactly once.)

Ohmage to an Aries launch

At White Sands Missile Range, New Mexico, a rocket carrying a scientific payload for NASA was destroyed 50 seconds after launch because its guidance system failed. The loss of the $1.5-million rocket was caused by the installation of an improper resistor in the guidance system.15 (The flight was the twenty-seventh since the first Aries was launched in 1973, but was only the third to experience failure.)

Gemini V lands 100 miles off course

Gemini V splashed down off-course by 100 miles because of a programmer’s misguided short-cut. The intended calculation was to compute the earth reference point relative to the sun as a fixed point, using the elapsed time since launch. The programmer forgot that the earth does not come back to the same point relative to the sun 24 hours later, so that the error cumulatively increased each day.16

Titan, Orion, Delta, Atlas, and Ariane failures

The disaster of the space shuttle Challenger (January 28, 1986) led to increased efforts to launch satellites without risking human lives in shuttle missions. However, the Challenger loss was followed by losses of the Titan III (34-D) on April 18, 1986; the Nike Orion on April 25, 1986 (although it was not reported until May 9); and the Delta rocket on May 3, 1986. The Titan III loss was the second consecutive Titan III failure, this launch having been delayed because of an accident in the previous launch during the preceding August, traced to a first-stage turbo-pump. A subsequent Titan IV loss is noted in Section 7.5. A further Titan IV blew up 2 minutes after launch on August 2, 1993, destroying a secret payload thought to be a spy satellite.17 The failure of the Nike Orion was its first, after 120 consecutive successes. The Delta failure followed 43 consecutive successful launches dating back to September 1977. In the Delta-178 failure, the rocket’s main engine mysteriously shut itself down 71 seconds into the flight—with no evidence of why! (Left without guidance at 1400 mph, the rocket had to be destroyed, along with its weather satellite.) The flight appeared normal up to that time, including the jettisoning of the first set of solid rockets after 1 minute out. Bill Russell, the Delta manager, was quoted thus: “It’s a very sharp shutdown, almost as though it were a commanded shutdown.” The preliminary diagnosis seemed to implicate a short circuit in the engine-control circuit. The May 22, 1986, launch of the Atlas-Centaur was postponed pending the results of the Delta investigation, because both share common hardware. The French also had their troubles, when an Ariane went out of control and had to be destroyed, along with a $55 million satellite. (That was its fourth failure out of 18 launches; 3 of the 4 involved failure of the third stage.) Apparently, insurance premiums on satellite launches skyrocketed as a result. (See SEN 11, 3.) Atlas launches in 1991, August 1992, and March 1993 also experienced problems. The first one had to be destroyed shortly after liftoff, along with a Japanese broadcasting satellite. The second went out of control and had to be blown up, along with its cable TV satellite. The third left a Navy communications satellite in a useless orbit, the failure of the $138 million mission being blamed on a loose screw. The losses in those 3 failed flights were estimated at more than $388 million. The first successful Atlas flight in a year occurred on July 19, 1993, with the launch of a nuclear-hardened military communications satellite.18

Voyager missions

Voyager 1 lost mission data over a weekend because all 5 printers were not operational; 4 were configured improperly (for example, off-line) and one had a paper jam (SEN 15, 5).

Canaveral rocket destroyed

In August 1991, an off-course rocket had to be destroyed. A technician had apparently hit a wrong key while loading the guidance software, installing the ground-test version instead of the flight software. Subsequently, a bug was found before a subsequent launch that might have caused the rocket to err, even if the right guidance software had been in place (Steve Bellovin, SEN 16, 4).

Viking antenna problem

The Viking probe developed a misaligned antenna due to an improper code patch. (An orally provided report is noted in SEN 9, 5.)

Phobos 1 and 2

The Soviets lost their Phobos 1 Mars probe after it tumbled in orbit and the solar cells lost power. The tumbling resulted from a single character omitted in a transmission from a ground controller to the probe. The change was necessitated because of a transfer from one command center to another. The omission caused the spacecraft’s solar panels to point the wrong way, which prevented the batteries from staying charged, ultimately causing the spacecraft to run out of power. Phobos 2 was lost on March 27, 1989, because a signal to restart the transmitter while the latter was in power-saver mode was never received.19

Mars Observer vanishes

The Mars Observer disappeared from radio contact on Saturday, August 21, 1993, just after it was ready to pressurize its helium tank (which in turn pressurizes the hydrazine-oxygen fuel system), preparatory to the rocket firings that were intended to slow down the spacecraft and to allow it to be captured by Martian gravity and settle into orbit around Mars. The subsequent request to switch on its antenna again received no response. Until that point, the mission had been relatively trouble free—except that the spacecraft’s instructions had to be revised twice to overcome temporary problems. Speculation continued as to whether a line leak or a tank rupture might have occurred. Glenn Cunningham, project manager at the Jet Propulsion Laboratory, speculated on several other possible scenarios: the Observer’s on-board clock could have stopped, the radio could have overheated, or the antenna could have gone askew. Lengthy analysis has concluded that the most likely explanation is that a change in flight plan was the inadvertent cause. The original plan was to pressurize the propellant tanks 5 days after launch; instead, the pressurization was attempted 11 months into the flight, in hopes of minimizing the likelihood of a leak. Apparently the valves were not designed to operate under the alternative plan, which problem now seems most likely to have been the cause of a fuel-line rupture resulting from a mixture of hydrazine and a small amount of leaking oxidizer.20

Landsat 6

Landsat 6 was launched on October 5, 1993. It was variously but erroneously reported as (1) having gotten into an improper orbit, or (2) being in the correct orbit but unable to communicate. On November 8, 1993, the $228 million Landsat 6 was declared officially missing. The object NASA had been tracking turned out to be a piece of space junk.21

These problems came on the heels of the continued launch delays on another Discovery mission, a weather satellite that died in orbit after an electronic malfunction, and the Navy communications satellite that was launched into an unusable orbit from Vandenburg in March 1993 ($138 million). Shuttle launches were seriously delayed.22

Galileo troubles en route to Jupiter

The $1.4 billion Galileo spacecraft en route to Jupiter experienced difficulties in August 1993. Its main antenna jammed, and its transmissions were severely limited to the use of the low-gain antenna.

Anik E-1, E-2 failures

Canadian Telesat’s Anik E-1 satellite stopped working for about 8 hours on January 21, 1994, with widespread effects on telephones and the Canadian Press news agency, particularly in the north of Canada. Minutes after Anik E-1 was returned to service, Anik E-2 (which is Canada’s main broadcast satellite) ceased functioning altogether. Amid a variety of possible explanations (including magnetic storms, which later were absolved), the leading candidate involved electron fluxes related to solar coronal holes.

Xichaing launchpad explosion

On April 9, 1994, a huge explosion at China’s Xichiang launch facility killed at least two people, injured at least 20 others, and destroyed the $75 million Fengyun-2 weather satellite. The explosion also leveled a test laboratory. A leak in the on-board fuel system was reported as the probable cause.23

Lens cap blocks satellite test

A Star Wars satellite launched on March 24, 1989, was unable to observe the second-stage rocket firing because the lens cap had failed to be removed in time. By the time that the lens was uncovered, the satellite was pointing in the wrong direction (SEN 14, 2).

2.2.3 Summary of Space Problems

Table 2.2 provides a brief summary of the problems cited in Section 2.2. The abbreviations and symbols used for table entries and column headings are as in Table 1.1.

From the table, we observe that there is considerable diversity among the causes and effects, as in Table 2.1, and as suggested at the beginning of Section 2.2.1. However, the nature of the diversity of causes is quite different from that in Table 2.1. Here many of the problems involve software implementation or hardware malfunctions as the primary cause, although other types of causes are also included.

The diversity among software faults is evident. The STS-1 flaw was a subtle mistake in synchronization. The STS-49 problem resulted from a precision glitch. The original STS-56 launch postponement (noted in the table as subcase (1)) involved an error in sensor interpretation. The Mariner I bug was a lexical mistake in transforming handwritten equations to code for the ground system. The Mercury bug involved the substitution of a period for a comma. The Gemini bug resulted from an overzealous programmer trying to find a short-cut in equations of motion.

The diversity among hardware problems is also evident. The multiple STS-9 hardware failures were caused by a loose piece of solder. The STS-19 abort was due to a thermocouple failure. STS-30’s problem involved a hard processor failure that led the astronauts to replace the computer, despite the redundant design. The original STS-55 launch was aborted because of a leaky valve. Two of the STS-56 delays (subcases 3 and 5) were attributable to equipment problems. The Mariner I hardware failure in the ground system was the triggering cause of the mission’s abrupt end, although the software bug noted previously was the real culprit. The mysterious shutdown of the Delta-178 main engine adds still another possible cause.

Several cases of operational human mistakes are also represented. STS-24 involved a human misinterpretation of a valve condition. STS-41 uncovered incorrect operational protocols. The Atlas-Agena loss resulted from a mistake in the flight plan. Voyager gagged on output data because of an erroneous system configuration. The loss of Phobos 1 was caused by a missing character in a reconfiguration command.

The environmental causes include the cold climate under which the Challenger was launched (STS-25), weightlessness mobilizing loose solder (STS-9), and communication interference that caused the delays in Discovery’s placing satellites in orbit (STS-56, subcases 7 and 8).

Redundancy is an important way of increasing reliability; it is considered in Section 7.7. In addition to the on-board four primary computers and the separately programmed emergency backup computer, the shuttles also have replicated sensors, effectors, controls, and power supplies. However, the STS-1 example shows that the problem of getting agreement among the distinct program components is not necessarily straightforward. In addition, the backup system is intended primarily for reentry in an emergency in which the primary computers are not functional; it has not been maintained to reflect many of the changes that have occurred in recent years, and has never been used. A subsystem’s ability to perform correctly is always dubious if its use has never been required.

In one case (Apollo), analytical simulation uncovered a serious flaw before launch. In several cases, detected failures in a mission led to fixes that avoided serious consequences in future missions (STS-19, STS-49, and Mercury).

Maintenance in space is much more difficult than is maintenance in ground-based systems (as evidenced by the cases of STS-30 and Phobos), and consequently much greater care is given to preventive analysis in the space program. Such care seems to have paid off in many cases. However, it is also clear that many problems remain undetected before launch.

2.3 Defense

Some of the applications that stretch computer and communication technology to the limits involve military systems, including both defensive and offensive weapons. We might like to believe that more money and effort therefore would be devoted to solving problems of safety and lethality, system reliability, system security, and system assurance in defense-related systems than that expended in the private sector. However, the number of defense problems included in the RISKS archives suggests that there are still many lessons to be learned.

2.3.1 Defense Problems

In this section, we summarize a few of the most illustrative cases.

Patriot clock drift

During the Persian Gulf war, the Patriot system was initially touted as highly successful. In subsequent analyses, the estimates of its effectiveness were seriously downgraded, from about 95 percent to about 13 percent (or possibly less, according to MIT’s Ted Postol; see SEN 17, 2). The system had been designed to work under a much less stringent environment than that in which it was actually used in the war. The clock drift over a 100-hour period (which resulted in a tracking error of 678 meters) was blamed for the Patriot missing the scud missile that hit an American military barracks in Dhahran, killing 29 and injuring 97. However, the blame can be distributed—for example, among the original requirements (14-hour missions), clock precision, lack of system adaptability, extenuating operational conditions, and inadequate risks analysis (SEN, 16, 3; 16, 4). Other reports suggest that, even over the 14-hour duty cycle, the results were inadequate. A later report stated that the software used two different and unequal versions of the number 0.1—in 24-bit and 48-bit representations (SEN 18, 1, 25). (To illustrate the discrepancy, the decimal number 0.1 has as an endlessly repeating binary representation 0.0001100110011 . . . . Thus, two different representations truncated at different lengths are not identical—even in their floating-point representations.) This case is the opposite of the shuttle Endeavour problem on STS-49 noted in Section 2.2.1, in which two apparently identical numbers were in fact representations of numbers that were unequal!

Vincennes Aegis system shoots down Iranian Airbus

Iran Air Flight 655 was shot down by the USS Vincennes’ missiles on July 3, 1988, killing all 290 people aboard. There was considerable confusion attributed to the Aegis user interface. (The system had been designed to track missiles rather than airplanes.) The crew was apparently somewhat spooked by a previous altercation with a boat, and was working under perceived stress. However, replay of the data showed clearly that the airplane was ascending—rather than descending, as believed by the Aegis operator. It also showed that the operator had not tracked the plane’s identity correctly, but rather had locked onto an earlier identification of an Iranian F-14 fighter still on the runway. Matt Jaffe [58] reported that the altitude information was not displayed on the main screen, and that there was no indication of the rate of change of altitude (or even of whether the plane was going up, or going down, or remaining at the same altitude). Changes were recommended subsequently for the interface, but most of the problem was simplistically attributed to human error. (See SEN 13, 4; 14, 1; 14, 5; 14, 6.) However, earlier Aegis system failures in attempting to hit targets previously had been attributed to software (SEN 11, 5).

“Friendly Fire”—U.S. F-15s take out U.S. Black Hawks

Despite elaborate precautions designed to prevent such occurrences, two U.S. Army UH-60 Black Hawk helicopters were shot down by two American F-15C fighter planes in the no-fly zone over northern Iraq on April 14, 1994, in broad daylight, in an area that had been devoid of Iraqi aircraft activity for many months. One Sidewinder heat-seeking missile and one Amraam radar-guided missile were fired. The fighters were operating under instructions from an AWACS plane, their airborne command post. The AWACS command was the first to detect the helicopters, and instructed the F-15s to check out the identities of the helicopters. The helicopters were carrying U.S., British, French, and Turkish officers from the United Nations office in Zakho, in northern Iraq, and were heading eastward for a meeting with Kurdish leaders in Salahaddin. Both towns are close to the Turkish border, well north of the 26th parallel that delimits the no-fly zone. All 26 people aboard were killed.

After stopping to pick up passengers, both helicopter pilots apparently failed to perform the routine operation of notifying their AWACS command plane that they were underway. Both helicopters apparently failed to respond to the automated “Identification: Friend or Foe” (IFF) requests from the fighters. Both fighter pilots apparently did not try voice communications with the helicopters. A visual flyby apparently misidentified the clearly marked (“U.N.”) planes as Iranian MI-24s. Furthermore, a briefing had been held the day before for appropriate personnel of the involved aircraft (F-15s, UH-60s, and AWACS).

The circumstances behind the shootdowns remained murky, although a combination of circumstances must have been present. One or both helicopter pilots might have neglected to turn on their IFF transponder; one or both frequencies could have been set incorrectly; one or both transponders might have failed; the fighter pilots might not have tried all three of the IFF modes available to them. Furthermore, the Black Hawks visually resembled Russian helicopters because they were carrying extra external fuel tanks that altered their profiles. But the AWACS plane personnel should have been aware of the entire operation because they were acting as coordinators. Perhaps someone panicked. An unidentified senior Pentagon offical was quoted as asking “What was the hurry to shoot them down?” Apparently both pilots neglected to set their IFF transponders properly. In addition, a preliminary Pentagon report indicates that the controllers who knew about the mission were not communicating with the controllers who were supervising the shootdown.24

“Friendly fire” (also called fratricide, amicicide, and misadventure) is not uncommon. An item by Rick Atkinson25 noted that 24 percent of the Americans killed in action—35 out of 146—in the Persian Gulf war were killed by U.S. forces. Also, 15 percent of those wounded—72 out of 467—were similarly victimized by their colleagues. During the same war, British Warrior armored vehicles were mistaken for Iraqi T-55 tanks and were zapped by U.S. Maverick missiles, killing 9 men and wounding 11 others. Atkinson’s article noted that this situation is not new, citing a Confederate sentry who shot his Civil War commander, Stonewall Jackson, in 1863; an allied bomber that bombed the 30th Infantry Division after the invasion of Normandy in July 1944; and a confused bomber pilot who killed 42 U.S. paratroopers and wounded 45 in the November 1967 battle of Hill 875 in Vietnam. The old adage was never more appropriate: With friends like these, who needs enemies?

Gripen crash

The first prototype of Sweden’s fly-by-wire Gripen fighter plane crashed on landing at the end of its sixth flight because of a bug in the flight-control software. The plane is naturally unstable, and the software was unable to handle strong winds at low speeds, whereas the plane itself responded too slowly to the pilot’s controls (SEN 14, 2; 14, 5). A second Gripen crash on August 8, 1993, was officially blamed on a combination of the pilot and the technology (SEN 18, 4, 11), even though the pilot was properly trained and equipped. However, pilots were not informed that dangerous effects were known to be possible as a result of large and rapid stick movements (SEN 19, 1, 12-13).

F-111s bombing Libya jammed by their own jamming

One plane crashed and several others missed their targets in the 1986 raid on Libya because the signals intended to jam Libya’s antiaircraft facilities were also jamming U.S. transmitters (SEN 11, 3; 15, 3).

Bell V22 Ospreys

The fifth of the Bell-Boeing V22 Ospreys crashed due to the cross-wiring of two roll-rate sensors (gyros that are known as vyros). As a result, two faulty units were able to outvote the good one in a majority-voting implementation. Similar problems were later found in the first and third Ospreys, which had been flying in tests (SEN 16, 4; 17, 1). Another case of the bad outvoting the good in flight software is reported by Brunelle and Eckhardt [18], and is discussed in Section 4.1.

Tornado fighters collide

In August 1988, two Royal Air Force Tornado fighter planes collided over Cumbria in the United Kingdom, killing the four crewmen. Apparently, both planes were flying with identical preprogrammed cassettes that controlled their on-board computers, resulting in both planes reaching the same point at the same instant. (This case was reported by Dorothy Graham, SEN 15, 3.)

Ark Royal

On April 21, 1992, a Royal Air Force pilot accidentally dropped a practice bomb on the flight deck of the Royal Navy’s most modern aircraft carrier, the Ark Royal, missing its intended towed target by hundreds of yards. Several sailors were injured. The cause was attributed to a timing delay in the software intended to target an object at a parametrically specified offset from the tracked object, namely the carrier. 26

Gazelle helicopter downed by friendly missile

On June 6, 1982, during the Falklands War, a British Gazelle helicopter was shot down by a Sea Dart missile that had been fired from a British destroyer, killing the crew of four (SEN 12, 1).

USS Scorpion

The USS Scorpion exploded in 1968, killing a crew of 99. Newly declassified evidence suggests that the submarine was probably the victim of one of its own conventional torpedoes, which, after having been activated accidentally, was ejected. Unfortunately, the torpedo became fully armed and sought its nearest target, as it had been designed to do.27

Missiles badly aimed

The U.S. guided-missile frigate George Philip fired a 3-inch shell in the general direction of a Mexican merchant ship, in the opposite direction from what had been intended during an exercise in the summer of 1983 (SEN 8, 5). A Russian cruise missile landed in Finland, reportedly 180 degrees off its expected target on December 28, 1984 (SEN 10, 2). The U.S. Army’s DIVAD (Sgt. York) radar-controlled antiaircraft gun reportedly selected the rotating exhaust fan in a latrine as a potential target, although the target was indicated as a low-priority choice (SEN 11, 5).

Air defense

For historically minded readers, there are older reports that deserve mention. Daniel Ford’s book The Button28 notes satellite sensors being overloaded by a Siberian gas-field fire (p. 62), descending space junk being detected as incoming missiles (p. 85), and a host of false alarms in the World-Wide Military Command and Control System (WWMCCS) that triggered defensive responses during the period from June 3 to 6, 1980 (pp. 78-84) and that were eventually traced to a faulty integrated circuit in a communications multiplexor. The classical case of the BMEWS defense system in Thule, Greenland, mistaking the rising moon for incoming missiles on October 5, 1960, was cited frequently.29 The North American Air Defense (NORAD) and the Strategic Air Command (SAC) had 50 false alerts during 1979 alone—including a simulated attack whose outputs accidentally triggered a live scramble/alert on November 9, 1979 (SEN 5, 3).

Early military aviation problems

The RISKS archives also contain a few old cases that have reached folklore status, but that are not well documented. They are included here for completeness. An F-18 reportedly crashed because of a missing exception condition if . . . then . . . without the else clause that was thought could not possibly arise (SEN 6, 2; 11, 2). Another F-18 attempted to fire a missile that was still clamped to the plane, resulting in a loss of 20,000 feet in altitude (SEN 8, 5). In simulation, an F-16 program bug caused the virtual plane to flip over whenever it crossed the equator, as the result of a missing minus sign to indicate south latitude.30 Also in its simulator, an F-16, flew upside down because the program deadlocked over whether to roll to the left or to the right (SEN 9, 5). (This type of problem has been investigated by Leslie Lamport in an unpublished paper entitled “Buridan’s Ass”—in which a donkey is equidistant between two points and is unable to decide which way to go.)

2.3.2 Summary of Defense Problems

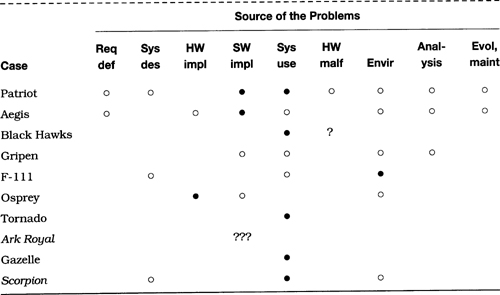

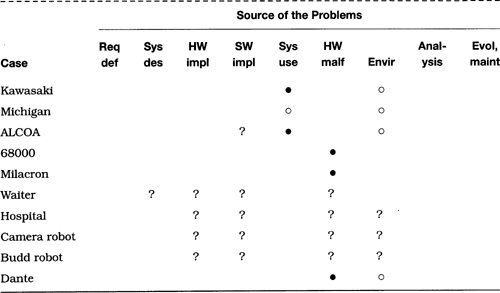

Table 2.3 provides a brief summary of the causative factors for the cited problems. (The abbreviations and symbols are given in Table 1.1.)

Table 2.3 Summary of defense problems

The causes vary widely. The first six cases in the table clearly involve multiple causative factors. Although operator error is a common conclusion in such cases, there are often circumstances that implicate other factors. Here we see problems with system requirements (Patriot), hardware (Osprey), software (lack of precision in the Patriot case, a missing exception condition in the F-18, and a missing sign in the F-16), and the user interface (Aegis). The Black Hawk shootdown appears to have been a combination of errors by many different people, plus an overreliance on equipment that may not have been turned on. The environment also played a role—particularly electromagnetic interference in the F-111 case.

2.4 Civil Aviation

This section considers the basic problems of reliable aviation, the risks involved, and cases in which reliability and safety requirements failed to be met. The causes include human errors and computer-system failures. The effects are serious in many of the cited cases.

2.4.1 Risks in Automated Aviation

Section 2.4.1 originally appeared as a two-part Inside Risks column, CACM 37, 1 and 2, January and February 1994, written by Robert Dorsett—who is the author of a Boeing 727 systems simulator and TRACON II for the Macintosh.

The 1980s saw a tremendous increase of the use of digital electronics in newly developed airliners. The regulatory requirement for a flight engineer’s position on larger airplanes had been eased, such that only two pilots were then required. The emerging power of advanced technology has resulted in highly automated cockpits and in significant changes to flight management and flight-control philosophies.

Flight management

The Flight Management System (FMS) combines the features of an inertial navigation system (INS) with those of a performance-management computer. It is most often used in conjunction with screen-based flight instrumentation, including an artificial horizon and a navigation display. The crew can enter a flight plan, review it in a plan mode on the navigation display, and choose an efficiency factor by which the flight is to be conducted. When coupled with the autopilot, the FMS can control virtually the entire flight, from takeoff to touchdown. The FMS is the core of all modern flight operations.

Systems

As many functions as possible have been automated, from the electrical system to toilet-flush actuators. System states are monitored by display screens rather than more than 650 electromechanical gauges and dials. Most systems’ data items are still available via 10 to 15 synoptic pages, on a single systems display. It is not feasible for the pilots to monitor long-term changes in any onboard system; they must rely on an automatic alerting and monitoring system to do that job for them. Pilots retain limited control authority over on-board systems, if advised of a problem.

Flight control

In 1988, the Airbus A320 was certified; it was the first airliner to use a digital fly-by-wire flight-control system. Pilots control a conventional airliner’s flight path by means of a control column. The control column is connected to a series of pulleys and cables, leading to hydraulic actuators, which move the flight surfaces. Fly-by-wire control eliminates the cables, and replaces them with electrical wiring; it offers weight savings, reduced complexity of hardware, the potential for the use of new interfaces, and even modifications of fundamental flight-control laws — resulting from having a computer filter most command inputs.

Communications

All new airliners offer an optional ARINC Communication and Reporting System (ACARS). This protocol allows the crew to exchange data packets, such as automatically generated squawks and position reports, with a maintenance or operations base on the ground. Therefore, when the plane lands, repair crews can be ready, minimizing turnaround time. ACARS potentially allows operations departments to manage fleet status more efficiently, in real time, worldwide, even via satellite.

Similar technology will permit real-time air-traffic control of airliners over remote locations, such as the Pacific Ocean or the North Atlantic, where such control is needed.

Difficulties

Each of the innovations noted introduces new, unique problems. The process of computerization has been approached awkwardly, driven largely by market and engineering forces, less by the actual need for the features offered by the systems. Reconciling needs, current capabilities, and the human-factors requirements of the crew is an ongoing process.

For example, when FMSs were introduced in the early 1980s, pilots were under pressure to use all available features, all the time. However, it became apparent that such a policy was not appropriate in congested terminal airspace; pilots tended to adopt a heads-down attitude, which reduced their ability to detect other traffic. They also tended to use the advanced automation in high-workload situations, long after it clearly became advisable either to hand-fly or to use the autopilot in simple heading- and altitude-select modes. By the late 1980s, airlines had started click-it-off training. Currently, many airlines discourage the use of FMSs beneath 10,000 feet.

Increased automation has brought the pilot’s role closer to that of a manager, responsible for overseeing the normal operation of the systems. However, problems do occur, and the design of modern interfaces has tended to take pilots out of the control loop. Some research suggests that, in an emergency situation, pilots of conventional aircraft have an edge over pilots of a modern airplane with an equivalent mission profile, because older airplanes require pilots to participate in a feedback loop, thus improving awareness. As an example of modern philosophies, one manufacturer’s preflight procedure simply requires that all lighted buttons be punched out. The systems are not touched again, except in abnormal situations. This design is very clever and elegant; the issue is whether such an approach is sufficient to keep pilots in the loop.

Displays

The design of displays is an ongoing issue. Faced with small display screens (a temporary technology limitation), manufacturers tend to use tape formats for airspeed and altitude monitoring, despite the cognitive problems. Similarly, many systems indications are now given in a digital format rather than in the old analog formats. This display format can result in the absence of trend cues, and, perhaps, can introduce an unfounded faith in the accuracy of the readout.

Control laws

Related to the interface problem is the use of artificial control laws. The use of unconventional devices, such as uncoupled sidesticks, dictates innovations in control to overcome the device’s limitations; consequently, some flight-control qualities are not what experienced pilots expect. Moreover, protections can limit pilot authority in unusual situations. Because these control laws are highly proprietary—they are not standardized, and are not a simple consequence of the natural flying qualities of the airplane—there is potential for significant training problems as pilots transition between airplane types.

Communications

The improvement of communications means that ground personnel are more intimately connected with the flight. The role of an airliner captain has been similar to that of a boat captain, whose life is on the line and who is in the sole position to make critical safety judgments—by law. With more real-time interaction with company management or air-traffic control, there are more opportunities for a captain to be second-guessed, resulting in distributed responsibility and diminished captain’s authority. Given the increasingly competitive, bottom-line atmosphere under which airlines must operate, increased interaction will help diminish personnel requirements, and may tend to impair the safety equation.

Complexity

The effect of software complexity on safety is an open issue. Early INSs had about 4 kilobytes of memory; modern FMSs are pushing 10 megabytes. This expansion represents a tremendous increase in complexity, combined with a decrease in pilot authority. Validating software to the high levels of reliability required poses all but intractable problems. Software has allowed manufacturers to experiment with novel control concepts, for which the experience of conventional aircraft control gathered over the previous 90 years provides no clear guidance. This situation has led to unique engineering challenges. For example, software encourages modal thinking, so that more and more features are context-sensitive.

A Fokker F.100 provided a demonstration of such modality problems in November 1991. While attempting to land at Chicago O’Hare, the crew was unable to apply braking. Both the air and ground switches on its landing gear were stuck in the air position. Since the computers controlling the braking system thought that the plane was in the air, not only was the crew unable to use reverse thrust, but, more controversially, they also were unable to use nosewheel steering or main gear braking—services that would have been available on most other airliners in a similar situation.

As another example, the flight-control laws in use on the Airbus A320 have four distinct permutations, depending on the status of the five flight-control computers. Three of these laws have to accommodate individual-component failure. On the other hand, in a conventional flight-control system, there is but one control law, for all phases of flight.

Regulation

The regulatory authorities are providing few direct standards for high-tech innovation. As opposed to conventional aircraft, where problems are generally well understood and the rules codified, much of the modern regulatory environment is guided by collaborative industry standards, which the regulators have generally approved as being sound. Typically a manufacturer can select one of several standards to which to adhere. On many issues, the position of the authorities is that they wish to encourage experimentation and innovation.

The state of the industry is more suggestive of the disarray of the 1920s than of what we might expect in the 1990s. There are many instances of these emerging problems; Boeing and Airbus use different display color-coding schemes, not to mention completely different lexicons to describe systems with similar purposes. Even among systems that perform similarly, there can be significant discrepancies in the details, which can place tremendous demands on the training capacity of both airlines and manufacturers. All of these factors affect the safety equation.

2.4.2 Illustrative Aviation Problems

There have been many strange occurrences involving commercial aviation, including problems with the aircraft, in-flight computer hardware and software, pilots, air-traffic control, communications, and other operational factors. Several of the more illuminating cases are summarized here. A few cases that are not directly computer related are included to illustrate the diversity of causes on which airline safety and reliability must depend. (Robert Dorsett has contributed details that were not in the referenced accounts.)

Lauda Air 767

A Lauda Air 767-300ER broke up over Thailand, apparently the result of a thrust reverser deploying in mid-air, killing 223. Of course, this event is supposed to be impossible in flight.31 Numerous other planes were suspected of flying with the same defect. The FAA ordered changes.

757 and 767 autopilots

Federal safety investigators have indicated that autopilots on 757 and 767 aircraft have engaged and disengaged on their own, causing the jets to change direction for no apparent reason, including 28 instances since 1985 among United Airlines aircraft. These problems have occurred despite the autopilots being triple-modular redundant systems.32

Northwest Airlines Flight 255

A Northwest Airlines DC-9-82 crashed over Detroit on August 17, 1987, killing 156. The flaps and the thrust computer indicator had not been set properly before takeoff. A computer-based warning system might have provided an alarm, but it was not powered up—apparently due to the failure of a $13 circuit breaker. Adding to those factors, there was bad weather, confusion over which runway to use, and failure to switch radio frequencies. The official report blamed “pilot error.” Subsequently, there were reports that pilots in other aircraft had disabled the warning system because of frequent false alarms.33

British Midland 737 crash

A British Midland Boeing 737-400 crashed at Kegworth in the United Kingdom, killing 47 and injuring 74 seriously. The right engine had been erroneously shut off in response to smoke and excessive vibration that was in reality due to a fan-blade failure in the left engine. The screen-based “glass cockpit” and the procedures for crew training were questioned. Cross-wiring, which was suspected—but not definitively confirmed—was subsequently detected in the warning systems of 30 similar aircraft.34

Aeromexico crash

An Aeromexico flight to Los Angeles International Airport collided with a private plane, killing 82 people on August 31, 1986 — 64 on the jet, 3 on the Piper PA-28, and at least 15 on the ground. This crash occurred in a government-restricted area in which the private plane was not authorized to fly. Apparently, the Piper was never noticed on radar (although there is evidence that it had appeared on the radar screen), because the air-traffic controller had been distracted by another private plane (a Grumman-Yankee) that had been in the same restricted area (SEN 11, 5). However, there were also reports that the Aeromexico pilot had not explicitly declared an emergency.

Collisions with private planes

The absence of technology can also be a problem, as in the case of a Metroliner that collided with a private plane that had no altitude transponder, on January 15, 1987, killing 10. Four days later, an Army turboprop collided with a private plane near Independence, Missouri. Both planes had altitude transponders, but controllers did not see the altitudes on their screens (SEN 12, 2).

Air France Airbus A320 crash

A fly-by-wire Airbus A320 crashed at the Habsheim airshow in the summer of 1988, killing 3 people and injuring 50. The final report indicates that the controls were functioning correctly, and blamed the pilots. However, there were earlier claims that the safety controls had been turned off for a low overflight. Dispute has continued to rage about the investigation. One pilot was convicted of libel by the French government for criticizing the computer systems (for criticizing “public works”), although he staunchly defends his innocence [6]. There were allegations that the flight-recorder data had been altered. (The flight recorder showed that the aircraft hit trees when the plane was at an altitude of 32 feet.) Regarding other A320 flights, there have been reports of altimeter glitches, sudden throttling and power changes, and steering problems during taxiing. Furthermore, pilots have complained that the A320 engines are generally slow to respond when commanded to full power.35

Indian Airlines Airbus A320 crash

An Indian Airlines Airbus A320 crashed 1000 feet short of the runway at Bangalore, killing 97 of the 146 passengers. Some similarities with the Habsheim crash were reported. Later reports said that the pilot was one of the airline’s most experienced and had received “excellent” ratings in his Airbus training. Airbus Industrie apparently sought to discredit the Indian Airlines’ pilots, whereas the airlines expressed serious apprehensions about the aircraft. The investigation finally blamed human error; the flight recorder indicated that the pilot had been training a new copilot (SEN 15, 2; 15, 3; 15, 5) and that an improper descent mode had been selected. The crew apparently ignored aural warnings.

French Air Inter Airbus A320 crash

A French Air Inter Airbus A320 crashed into Mont Sainte-Odile (at 2496 feet) on automatic landing approach to the Strasbourg airport on a flight from Lyon on January 20, 1992. There were 87 people killed and 9 survivors. A combination of human factors (the integration of the controller and the human-machine interface, and lack of pilot experience with the flightdeck equipment), technical factors (including altimeter failings), and somewhat marginal weather (subfreezing temperature and fog) was blamed. There was no warning alarm (RISKS 13, 05; SEN 17, 2; 18, 1, 23; 19, 2, 11).

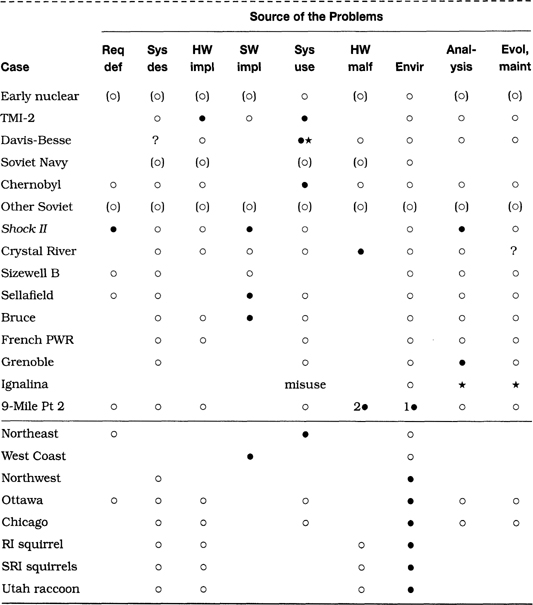

Lufthansa Airbus A320 crash