Chapter 3. Security Vulnerabilities

Caveat emptor.

(Let the buyer beware.)

THIS CHAPTER CONSIDERS security vulnerabilities. It examines some of the characteristic system flaws that can be exploited. It also discusses a few remarkable cases of system flaws. Chapter 5 presents specific examples of cases in which such vulnerabilities have been exploited or have otherwise caused problems.

The informal definition of security given in Section 1.1 implies freedom from undesirable events, including malicious and accidental misuse. In the computer sense, undesirable events might also include the results of hardware malfunctions. In this natural usage, security would be an all-inclusive term spanning many computer-system risks. In common technical usage, however, computer security and communication security generally refer to protection against human misuse, and exclude protection against malfunctions. Two particularly important security-related challenges concern assuring confidentiality and maintaining integrity. A third involves maintaining system availability despite threats from malicious or accidental system misuse. Chapter 4 demonstrates the close coupling between reliability and security concepts; many of the techniques for achieving one contribute to enhancing the other. The following security-related terms are used here:

• Confidentiality (or secrecy) means that information is protected from unintended disclosure. Computer-system mechanisms and policies attempt to enforce secrecy—for example, to protect individual rights of privacy or national defense. The system does not accidentally divulge sensitive information, and is resistant to attempts to gain access to such information.

• Integrity means literally that a resource is maintained in an unimpaired condition, or that there is a firm adherence to a specification (such as requirements or a code of values). In the computer sense, system integrity means that a system and its system data are maintained in a (sufficiently) correct and consistent condition, whereas (user) data integrity means that (user) data entities are so maintained. In this sense, the danger of losing integrity is clearly a security concern. Integrity could be related to unintended disclosure (in that nonrelease is a property to be maintained), but is generally viewed more as being protection against unintended modification. From the inside of a system, integrity involves ensuring internal consistency. From an overall perspective, integrity also includes external consistency; that is, the internals of the system accurately reflect the outside world. Related to external consistency are notions such as the legality of what is done within the system and the compatibility with external standards. Integrity in the larger sense also involves human judgments.

• Availability means that systems, data, and other resources are usable when needed, despite subsystem outages and environmental disruptions. From a reliability point of view, availability is generally enhanced through the constructive use of redundancy, including alternative approaches and various archive-and-retrieval mechanisms. From a security point of view, availability is enhanced through measures to prevent malicious denials of service.

• Timeliness is relevant particularly in real-time systems that must satisfy certain urgency requirements, and is concerned with ensuring that necessary resources are available quickly enough when needed. It is thus a special and somewhat more stringent case of availability. In certain critical systems, timeliness must be treated as a security property—particularly when it must be maintained despite malicious system attacks.

Once again, there is considerable overlap among these different terms. Also important are requirements for human safety and for overall survivability of the application, both of which depend on the satisfaction of requirements relating to reliability and security:

• System survivability is concerned with the ability to continue to make resources available, despite adverse circumstances including hardware malfunctions, software flaws, malicious user activities, and environmental hazards such as electronic interference.

• Human safety is concerned with the well-being of individuals, as well as of groups of people. In the present context, it refers to ensuring the safety of anyone who is in some way dependent on the satisfactory behavior of systems and on the proper use of technology. Human safety with respect to the entire application may depend on system survivability, as well as on system reliability and security.

There is clearly overlap among the meanings of these terms. In the sense that they all involve protection against dangers, they all are components of security. In the sense that protection against dangers involves maintenance of some particular condition, they might all be considered as components of integrity. In the sense that the system should behave predictably at all times, they are all components of reliability. Various reliability issues are clearly also included as a part of security, although many other reliability issues are usually considered independently. Ultimately, however, security, integrity, and reliability are closely related. Any or all of these concepts may be constituent requirements in a particular application, and the failure to maintain any one of them may compromise the ability to maintain the others. Consequently, we do not seek sharp distinctions among these terms; we prefer to use them more or less intuitively.

The term dependable computing is sometimes used to imply that the system will do what is expected with high assurance, under essentially all realistic circumstances. Dependability is related to trustworthiness, which is a measure of trust that can reasonably be placed in a particular system or subsystem. Trustworthiness is distinct from trust, which is the degree to which someone has faith that a resource is indeed trustworthy, whether or not that resource is actually trustworthy.

3.1 Security Vulnerabilities and Misuse Types

Security vulnerabilities can arise in three different ways, representing three fundamental gaps between what is desired and what can be achieved. Each of these gaps provides a potential for security misuse.

First, a technological gap exists between what a computer system is actually capable of enforcing (for example, by means of its access controls and user authentication) and what it is expected to enforce (for example, its policies for data confidentiality, data integrity, system integrity, and availability). This technological gap includes deficiencies in both hardware and software (for systems and communications), as well as in system administration, configuration, and operation. Discretionary access controls (DACs) give a user the ability to specify the types of access to individual resources that are considered to be authorized. Common examples of discretionary controls are the user/group/world permissions of Unix systems and the access-control lists of Multics. These controls are intended to limit access, but are generally incapable of enforcing copy protection. Attempts to constrain propagation through discretionary copy controls tend to be ineffective. Mandatory access controls (MACs) are typically established by system administrators, and cannot be altered by users; they can help to restrict the propagation of copies. The best-known example is multilevel security, which is intended to prohibit the flow of information in the direction of decreasing information sensitivity. Flawed operating systems may permit violations of the intended policy. Unreliable hardware may cause a system to transform itself into one with unforeseen and unacceptable modes of behavior. This gap between access that is intended and access that is actually authorized represents a serious opportunity for misuse by authorized users. Ideally, if there were no such gap, misuse by authorized users would be a less severe problem.
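The multilevel-security rule described above, that information must not flow toward lower sensitivity, can be illustrated with a small sketch. The level names, their ordering, and the function names are assumptions made for this example, not drawn from any particular system; the checks follow the familiar "no read up, no write down" formulation.

```python
# Hypothetical sensitivity levels, ordered from least to most sensitive.
LEVELS = {"unclassified": 0, "confidential": 1, "secret": 2, "top-secret": 3}


def may_flow(source_level: str, destination_level: str) -> bool:
    """Information may flow only toward equal or greater sensitivity."""
    return LEVELS[source_level] <= LEVELS[destination_level]


def may_read(subject_level: str, object_level: str) -> bool:
    # Reading moves information from the object to the subject.
    return may_flow(object_level, subject_level)


def may_write(subject_level: str, object_level: str) -> bool:
    # Writing moves information from the subject to the object.
    return may_flow(subject_level, object_level)


if __name__ == "__main__":
    print(may_read("secret", "confidential"))   # True: reading down is allowed
    print(may_write("secret", "confidential"))  # False: writing down would leak
```

Because the rule is applied to every read and write, and users cannot change the levels, a copy of sensitive data made by an authorized reader still cannot be written into a less sensitive container. A discretionary control has no comparable hold on copies.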

Second, a sociotechnical gap exists between computer system policies and social policies such as computer-related crime laws, privacy laws, and codes of ethics. This sociotechnical gap arises when the socially expected norms are not consistent with or are not implementable in terms of the computer system policies. For example, issues of intent are not addressed by computer security policies, but are relevant to social policies. Intent is also poorly covered by existing “computer crime” laws.

Finally, a social gap exists between social policies and actual human behavior. This social gap arises when people do not act according to what is expected of them. For example, authorized users may easily diverge from the desired social policies; malicious penetrators do not even pretend to adhere to the social norms.

The technological gap can be narrowed by carefully designed, correctly implemented, and properly administered computer systems and networks that are meaningfully secure, at least to the extent of protecting against known vulnerabilities. For example, those systems that satisfy specific criteria (see Section 7.8.1) have a significant advantage with respect to security over those systems that do not satisfy such criteria. The sociotechnical gap can be narrowed by well-defined and socially enforceable social policies, although computer enforcement still depends on narrowing the technological gap. The social gap can be narrowed to some extent by a narrowing of the first two gaps, with some additional help from educational processes. Malicious misuse of computer systems can never be prevented completely, particularly when perpetrated by authorized users. Ultimately, the burden must rest on more secure computer systems and networks, on more enlightened management and responsible employees and users, and on sensible social policies. Monitoring and user accountability are also important.

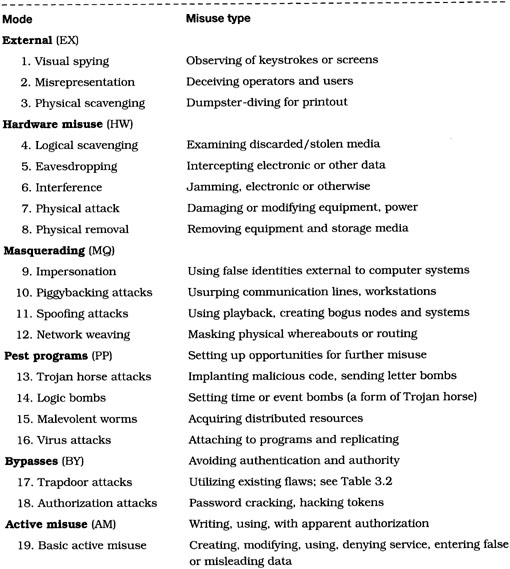

Computer misuse can take many forms. Various classes of misuse are summarized in Figure 3.1 and Table 3.1. For visual simplicity, the figure is approximated as a simple tree. However, it actually represents a system of descriptors, rather than a taxonomy in the usual sense, in that a given misuse may involve multiple techniques within several classes.

Figure 3.1 Classes of techniques for computer misuse

Adapted from P.G. Neumann and D.B. Parker, “A summary of computer misuse techniques,” Twelfth National Computer Security Conference, Baltimore, 1989.

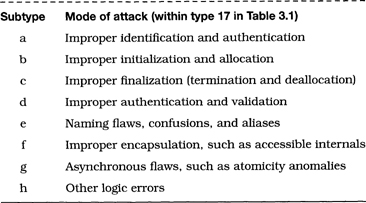

Table 3.1 Types of computer misuse

Adapted from P.G. Neumann and D.B. Parker, “A summary of computer misuse techniques,” Twelfth National Computer Security Conference, Baltimore, 1989.

The order of categorization depicted is roughly from the physical world to the hardware to the software, and from unauthorized use to misuse of authority. The first class includes external misuses that can take place without any access to the computer system. The second class concerns hardware misuse, and generally requires involvement with the computer system itself. Two examples in this second class are eavesdropping and interference (usually electronic or electromagnetic, but optical and other forms are also possible). The third class includes masquerading in a variety of forms. The fourth includes the establishment of deferred misuse—for example, the creation and enabling of a Trojan horse (as opposed to subsequent misuse that accompanies the actual execution of the Trojan horse—which may show up in other classes at a later time) or other forms of pest programs discussed in Section 3.2. The fifth class involves bypass of authorization, possibly enabling a user to appear to be authorized — or not to appear at all (for example, to be invisible to the audit trails). The remaining classes involve active and passive misuse of resources, inaction that might result in misuse, and misuse that helps in carrying out additional misuses (such as preparation for an attack on another system, or use of a computer in a criminal enterprise).

The main downward-sloping right diagonal path in Figure 3.1 indicates typical steps and modes of intended use of computer systems. The leftward branches all involve misuse; the rightward branches represent potentially acceptable use—until a leftward branch is taken. (Each labeled mode of usage along the main-diagonal intended-usage line is generally the antithesis of the corresponding leftward misuse branch.) Every leftward branch represents a class of vulnerabilities that must be defended against—that is, detected, avoided, or recovered from. The means for prevention, deterrence, avoidance, detection, and recovery typically differ from one branch to the next. (Even inaction may imply misuse, although no abusive act of commission may have occurred.)

The two classes of misuse types that are of main interest here are preplanned pest programs, such as Trojan horses, viruses, and worms (with effects including time bombs, logic bombs, and general havoc), and bypasses of authority (trapdoor exploitations and authorization attacks). These classes are discussed next. Several other forms are important in the present context, and are also considered.

3.2 Pest Programs and Deferred Effects

Various types of malicious attacks employ the insertion of code that can result in subsequent malicious effects; these are collectively referred to as pest programs. Pest-program attacks involve the creation, planting, and arming of software using methods such as Trojan horses, logic bombs, time bombs, letter bombs, viruses, and malicious worms. Generically, many of these are subcases of Trojan horse attacks.

3.2.1 Pest Programs in General

This section considers pest programs in general computer systems. Section 3.2.2 considers personal-computer viruses.

• A Trojan horse is an entity (typically but not always a program) that contains code or something interpretable as code, which when executed can have undesired effects (such as the clandestine copying of data or the disabling of the computer system).

• A logic bomb is a Trojan horse in which the attack is detonated by the occurrence of some specified logical event, such as the first subsequent login by a particular user.

• A time bomb is a logic bomb in which the attack is detonated by the occurrence of some specified time-related logical event, such as the next time the computer date reaches Friday the Thirteenth.

• A letter bomb is a peculiar type of Trojan horse attack whereby the harmful agent is not contained in a program, but rather is hidden in a piece of electronic mail or data; it typically contains characters, control characters, or escape sequences squirreled away in the text such that its malicious activities are triggered only when it is read in a particular way. For example, it might be harmless if read as a file, but would be destructive if read as a piece of electronic mail. This kind of attack can be prevented if the relevant system programs are defensive enough to filter out the offending characters. However, if the system permits the transit of such characters, the letter bomb would be able to exploit that flaw and thus to be triggered.

• A virus is in essence a Trojan horse with the ability to propagate itself iteratively and thus to contaminate other systems. There is a lack of clarity in terminology concerning viruses, with two different sets of usage: one for strict-sense viruses, another for personal-computer viruses. A strict-sense virus, as defined by Cohen [27], is a program that alters other programs to include a copy of itself. A personal-computer virus is similar, except that its propagation from one system to another is typically the result of human inadvertence or obliviousness. Viruses often employ the effects of a Trojan horse attack, and the Trojan horse effects often depend on trapdoors that either are already present or are created for the occasion. Strict-sense viruses are generally Trojan horses that are self-propagating without any necessity of human intervention (although people may inadvertently facilitate the spread). Personal-computer viruses are usually Trojan horses that are propagated primarily by human actions such as moving a floppy disk from one system to another. Personal-computer viruses are rampant, and represent a serious long-term problem (see Section 3.2.2). On the other hand, strict-sense viruses (that attach themselves to other programs and propagate without human aid) are a rare phenomenon; none are known to have been perpetrated maliciously, although a few have been created experimentally.

• A worm is a program that is distributed into computational segments that can execute remotely. It may be malicious, or may be used constructively—for example, to provide extensive multiprocessing, as in the case of the early 1980s experiments by Shoch and Hupp at Xerox PARC [155].
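The defensive filtering mentioned in the letter-bomb item above can be sketched as follows. This is a minimal illustration, assuming that a mail reader should pass only printable characters plus newline and tab; the function name and the decision to substitute a placeholder rather than drop the offending characters are assumptions for the example.

```python
def sanitize_for_display(text: str, placeholder: str = "?") -> str:
    """Replace control characters (including ESC) so that a terminal or
    mail reader never interprets them as commands."""
    allowed_controls = {"\n", "\t"}
    cleaned = []
    for ch in text:
        if ch in allowed_controls or ch.isprintable():
            cleaned.append(ch)
        else:
            cleaned.append(placeholder)
    return "".join(cleaned)


if __name__ == "__main__":
    # ESC [ 2 J is a typical terminal escape sequence (clear screen).
    message = "Hello\x1b[2J world\x07"
    print(sanitize_for_display(message))  # Hello?[2J world?
```

Once the escape character is neutralized, the remaining characters of the sequence are displayed as ordinary text and have no special effect.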

The setting up of pest programs may actually employ misuses of other classes, such as bypasses or misuse of authority, or may be planted via completely normal use, as in a letter bomb. The subsequent execution of the deferred misuses may also rely on further misuse methods. Alternatively, execution may involve the occurrence of some logical event (for example, a particular date and time or a logical condition), or may rely on the curiosity, innocence, or normal behavior of the victim. Indeed, because a Trojan horse typically executes with the privileges of its victim, its execution may require no further privileges. For example, a Trojan horse program might find itself authorized to delete all the victim’s files.

A comparison of pest-program attacks with other modes of security misuse is given in Section 3.6.

3.2.2 Personal-Computer Viruses

Personal-computer viruses may attack in a variety of ways, including corruption of the boot sector, hard-disk partition tables, or main memory. They may alter or lock up files, crash the system, and cause delays and other denials of service. These viruses take advantage of the fact that there is no significant security or system integrity in the system software. In practice, personal-computer virus infection is frequently caused by contaminated diagnostic programs or otherwise infected floppy disks. See Section 5.1 for further discussion of virus attacks.

3.3 Bypass of Intended Controls

This section begins with a discussion of bypass vulnerabilities, and then considers a particularly important subset typified by password vulnerabilities, along with the corresponding types of attacks that can exploit these vulnerabilities.

3.3.1 Trapdoors

A trapdoor is an entry path that is not normally expected to be used. In some cases, its existence is accidental and is unknown to the system developers; in other cases, it is intentionally implanted during development or maintenance. Trapdoors and other system flaws often enable controls to be bypassed. Bypass of controls may involve circumvention of existing controls, or modification of those controls, or improper acquisition of otherwise-denied authority, presumably with the intent to misuse the acquired access rights subsequently. Common modes of unauthorized access result from system and usage flaws (for example, trapdoors that permit devious access paths) such as those summarized in Table 3.2 (see [105], with subtended references to earlier work by Bisbey, Carlstedt, Hollingworth, Popek, and other researchers).

Table 3.2 Types of trapdoor attacks

Inadequate identification, authentication, and authorization of users, tasks, and systems

Lack of checking for identity and inadequate authentication are common sources of problems. System spoofing attacks may result, in which one system component masquerades as another. The debug option of sendmail and the .rhosts file exploited by the Internet Worm [138, 150, 159] are examples (see Section 5.1.1). For example, the .rhosts mechanism enables logins from elsewhere without any authentication being required, a truly generous concept when enabled. These exploitations also represent cases in which there was an absence of required authority. (Password-related trapdoors are noted separately after this itemization.)

Improper initialization

Many bypasses are enabled by improper initialization, including improper initial domain selection or improper choice of security parameters; improper partitioning, as in implicit or hidden sharing of privileged data; and embedded operating-system parameters in application memory space. If the initial state is not secure, then everything that follows is in doubt. System configuration, bootload, initialization, and system reinitialization following reconfiguration are all serious sources of vulnerabilities, although often carried out independently of the standard system controls.

Improper finalization

Even if the initialization is sound, the completion may not be. Problems include incompletely handled aborts, accessible vestiges of deallocated resources (residues), incomplete external device disconnects, and other exits that do not properly clean up after themselves. In many systems, deletion is effected by the setting of a deleted flag, although the information is still present and is accessible to system programmers and to hardware maintenance personnel (for example). A particularly insidious flaw of this type has existed in several popular operating systems, including in TENEX, permitting accidental piggybacking (tailgating) when a user’s communication line suffers an interruption; the next user who happens to be assigned the same login port is simply attached to the still active user job. Several examples of accidental data residues are noted in Section 5.2.

Incomplete or inconsistent authentication and validation

User authentication, system authentication, and other forms of validation are often a source of bypasses. Causes include improper argument validation, type checking, and bounds checks; failure to prevent permissions, quotas, and other programmed limits from being exceeded; and bypasses or misplacement of control. The missing bounds check in the C library routine gets, exploited by the finger attack used in the Internet Worm, is a good example. Authentication problems are considered in detail in Section 3.3.2.

Improper naming

Naming is a common source of difficulties (for example, see Section 6.5), particularly in creating bypasses. Typical system problems include aliases (for example, multiple names with inconsistent effects that depend on the choice of name), search-path anomalies, and other context-dependent effects (same name, but with different effects, depending on the directories or operating-system versions).

Improper encapsulation

Proper encapsulation involves protecting the internals of a system or subsystem to make them inaccessible from the outside. Encapsulation problems that cause bypasses include a lack of information hiding, accessibility of internal data structures, alterability of audit trails, midprocess control transfers, and hidden or undocumented side effects.

Sequencing problems

The order in which events occur and the overlap among different events that may occur simultaneously introduce serious security bypass problems, as well as serious reliability problems, especially in highly distributed systems. Problems include incomplete atomicity or sequentialization, as in faulty interrupt or error handling; flawed asynchronous functionality, such as permitting undesired changes to be made between the time of validation and the time of use; and incorrect serialization, resulting in harmful nondeterministic behavior, such as critical race conditions and other asynchronous side effects. A race condition is a situation in which the outcome is dependent on internal timing considerations. It is critical if something else depending on that outcome may be affected by its nondeterminism; it is noncritical otherwise. The so-called time-of-check to time-of-use (TOCTTOU) problem illustrates inadequate atomicity, inadequate encapsulation, and incomplete validation; an example is the situation in which, after validation is performed on the arguments of a call by reference, it is then possible for the caller to change the arguments.

Other logic errors

There are other diverse cases leading to bypass problems—for example, inverted logic (such as a missing not operation), conditions or outcomes not properly matched with branches, and wild transfers of control (especially if undocumented).

Tailgating may occur accidentally when a user is attached randomly to an improperly deactivated resource, such as a port through which a process is still logged in but to which the original user of the process is no longer attached (as in the TENEX problem noted previously). Unintended access may also result from other trapdoor attacks, logical scavenging (for example, reading a scratch tape before writing on it), and asynchronous attacks (for example, incomplete atomic transactions and TOCTTOU discrepancies). For example, trapdoors in the implementation of encryption can permit unanticipated access to unencrypted information.
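The TOCTTOU discrepancy can be made concrete with a short sketch in which a caller-supplied object is validated and then changed before it is used. The directory policy, the function names, and the callback that stands in for the scheduling gap between check and use are all assumptions introduced for illustration.

```python
import copy

ALLOWED_DIRECTORY = "/home/alice/"  # hypothetical policy for the example


def is_permitted(request: dict) -> bool:
    return request["path"].startswith(ALLOWED_DIRECTORY)


def vulnerable_open(request: dict, between_check_and_use=lambda: None) -> str:
    """Validates the caller's object, then uses the same object later."""
    if not is_permitted(request):
        raise PermissionError(request["path"])
    between_check_and_use()          # stands in for a scheduling gap
    return "opened " + request["path"]


def safer_open(request: dict, between_check_and_use=lambda: None) -> str:
    """Takes a private copy first, then validates and uses only the copy."""
    private = copy.deepcopy(request)
    if not is_permitted(private):
        raise PermissionError(private["path"])
    between_check_and_use()
    return "opened " + private["path"]


if __name__ == "__main__":
    req = {"path": "/home/alice/notes.txt"}
    print(vulnerable_open(req, lambda: req.update(path="/etc/passwd")))
    # opened /etc/passwd

    req = {"path": "/home/alice/notes.txt"}
    print(safer_open(req, lambda: req.update(path="/etc/passwd")))
    # opened /home/alice/notes.txt
```

Copying the arguments into storage that the caller cannot reach, before validating them, is one way to restore the atomicity and encapsulation that the flawed version lacks.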

3.3.2 Authentication and Authorization Vulnerabilities

Password attacks

Password attacks are one of the most important classes of authentication attacks, and are particularly insidious because of the ubiquity of passwords. Some of the diversity among password attacks is illustrated in the following paragraphs.

Exhaustive trial-and-error attacks

Enumerating all possible passwords was sometimes possible in the early days of time-sharing systems, but is now much less likely to succeed because of limits on the number of tries and auditing of failed login attempts.
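The two countermeasures named here, a limit on the number of tries and auditing of failures, might be sketched as follows. The class name, the default limit of three, and the direct comparison of plaintext strings are simplifying assumptions for illustration; a real system would compare transformed passwords and would need a policy for unlocking accounts.

```python
class LoginGuard:
    """Counts consecutive failed attempts per user and locks the account
    once a limit is reached; every outcome is recorded for auditing."""

    def __init__(self, max_failures: int = 3):
        self.max_failures = max_failures
        self.failures = {}      # user name -> consecutive failure count
        self.audit_log = []     # (user name, outcome) records

    def attempt(self, user: str, supplied: str, expected: str) -> bool:
        if self.failures.get(user, 0) >= self.max_failures:
            self.audit_log.append((user, "locked"))
            return False
        if supplied == expected:
            self.failures[user] = 0
            self.audit_log.append((user, "success"))
            return True
        self.failures[user] = self.failures.get(user, 0) + 1
        self.audit_log.append((user, "failure"))
        return False


if __name__ == "__main__":
    guard = LoginGuard(max_failures=3)
    for guess in ("aaa", "aab", "aac"):
        guard.attempt("alice", guess, "s3cret")
    # Even the correct password is now refused until the lock is cleared.
    print(guard.attempt("alice", "s3cret", "s3cret"))  # False
```

Note that a lockout of this kind can itself be abused to deny service to a legitimate user, which is one reason the audit record matters.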

Guessing of passwords

Guessing is typically done based on commonly used strings of characters (such as dictionary words and proper names) or strings that might have some logical association with an individual under attack (such as initials, spouse’s name, dog’s name, and social-security number). It is amazing how often this approach succeeds.

Capture of unencrypted passwords

Passwords are often available in an unencrypted form, in storage, as they are being typed, or in transit (via local or global net, or by trapdoors such as the Unix feature of using /dev/mem to read all of memory). For example, the Ethernet permits promiscuous mode sniffing of everything that traverses the local network; it is relatively easy to scan for commands that are always followed by passwords, such as local and remote logins.

Derivation of passwords

Certain systems or administrators constrain passwords to be algorithmically generated; in such cases, the algorithms themselves may be used to shorten the search. Occasionally, inference combined with a short-cut search algorithm can be used, as in the TENEX connect flaw, where the password comparison was done sequentially, one character at a time; in that case, it was possible to determine whether the first character was correctly guessed by observing page fault activity on the next serially checked password character, and then repeating the process for the next character—using a match-and-shift approach. Another attack, which appears to be increasing in popularity, is the preencryptive dictionary attack, as described by Morris and Thompson [96] and exploited in the Internet Worm. That attack requires capturing the encrypted password file, and then encrypting each entry in a dictionary of candidate words until a match is found. (It is possible to hinder this attack somewhat in some systems by making the encrypted password file unreadable by ordinary users, as in the case of a completely encapsulated shadow password file that is ostensibly accessible to only the login program and the administrators. However, usurpation of administrator privileges can then lead to compromise.)
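The match-and-shift inference against a character-at-a-time comparison can be illustrated with a simulation. In this sketch the count of characters examined stands in for the page-fault observation; that substitution, the function names, and the lowercase alphabet are assumptions for the example, and the code models the idea rather than the TENEX implementation.

```python
import hmac
import string


def sequential_compare(guess: str, secret: str):
    """Early-exit comparison. Returns (matched, characters_examined); the
    second value models the side information an observer could obtain."""
    examined = 0
    for g, s in zip(guess, secret):
        examined += 1
        if g != s:
            return False, examined
    return len(guess) == len(secret), examined


def recover(secret: str, alphabet: str = string.ascii_lowercase, pad: str = "a") -> str:
    """Match-and-shift search: settle one position at a time using the leak."""
    known = ""
    while True:
        for ch in alphabet:
            candidate = known + ch
            if sequential_compare(candidate, secret)[0]:
                return candidate
            # Probe one position further: if the comparison got past
            # `candidate`, its last character must have been right.
            _, examined = sequential_compare(candidate + pad, secret)
            if examined > len(candidate):
                known = candidate
                break
        else:
            raise ValueError("secret uses characters outside the alphabet")


def constant_time_equal(guess: str, secret: str) -> bool:
    """Comparison whose running time does not depend on where a mismatch occurs."""
    return hmac.compare_digest(guess.encode(), secret.encode())


if __name__ == "__main__":
    print(recover("tenex"))  # tenex
```

The search needs at most two probes per alphabet character per position, so the work grows linearly with password length instead of exponentially. A comparison that reveals nothing about the position of the first mismatch, such as the standard library's `hmac.compare_digest`, removes the information on which the search depends.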

Existence of universal passwords

In rare cases, a design or implementation flaw can result in the possibility of universal passwords, similar to skeleton keys that open a wide range of locks. For example, there has been at least one case in which it was possible to generate would-be passwords that satisfy the login procedure for every system with that login implementation, without any knowledge of valid passwords. In that case, a missing bounds check permitted an intentionally overly long password consisting of a random sequence followed by its encrypted form to overwrite the stored encrypted password, which defeated the password-checking program [179]. This rather surprising case is considered in greater detail in Section 3.7.
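The mechanism behind such a universal password can be illustrated with a toy simulation. Everything in the sketch is an assumption made for illustration: the eight-byte fields, the adjacent layout of input buffer and stored value, and a truncated SHA-256 digest standing in for the system's password encryption. It is not a reconstruction of the actual flawed program.

```python
import hashlib

FIELD = 8  # bytes per field in the simulated login record


def toy_hash(password: bytes) -> bytes:
    """Stand-in for the system's one-way password transformation."""
    return hashlib.sha256(password).digest()[:FIELD]


def make_record(real_password: bytes) -> bytearray:
    """Layout: [ typed-password buffer | stored transformed password ]."""
    padded = real_password.ljust(FIELD, b"\0")
    return bytearray(FIELD) + bytearray(toy_hash(padded))


def login_unchecked(record: bytearray, typed: bytes) -> bool:
    record[0:FIELD] = bytes(FIELD)          # clear the input buffer
    for i, byte in enumerate(typed):        # copy with no length check
        record[i] = byte                    # may spill into the next field
    return toy_hash(bytes(record[:FIELD])) == bytes(record[FIELD:])


def login_checked(record: bytearray, typed: bytes) -> bool:
    if len(typed) > FIELD:                  # the missing bounds check
        return False
    return login_unchecked(record, typed)


if __name__ == "__main__":
    chosen = b"AAAAAAAA"                    # arbitrary sequence
    forged = chosen + toy_hash(chosen)      # sequence followed by its transform
    print(login_unchecked(make_record(b"s3cret"), forged))  # True
    print(login_checked(make_record(b"s3cret"), forged))    # False
```

The forged input succeeds without any knowledge of the real password because the overlong copy replaces the stored value with one that the attacker computed; a single length check before the copy closes the hole.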

Absence of passwords

In certain cases, trapdoors exist that require no passwords at all. For example, the .rhosts tables enable unauthenticated logins from any of the designated systems. Furthermore, some Unix remote shell (rsh) command implementations permit unauthenticated remote logins from a similarly named but bogus foreign user. The philosophy of a single universal login authentication for a particular user that is valid throughout all parts of a distributed system and, worse yet, throughout a network of networks represents an enormous vulnerability, because one compromise anywhere can result in extensive damage throughout. (In the context of this book, the verb to compromise and the noun compromise are used in the pejorative sense to include penetrations, subversions, intentional and accidental misuse, and other forms of deviations from expected or desired system behavior. The primary usage is that of compromising something such as a system, process, or file, although the notion of compromising oneself is also possible, for example by accidentally introducing a system flaw or invoking a Trojan horse. To avoid confusion, we do not use compromise here in the sense of a mutual agreement.)

Nonatomic password checking

Years ago, there were cases of applications in which the password checking could be subverted by a judiciously timed interrupt during the authentication process. The scenario typically involved attempting to log in with a legitimate user name and an incorrect password, then interrupting, and miraculously discovering oneself logged in. This was a problem with several early higher-layer authenticators.

Editing the password file

If the password file is not properly protected against writing, and is not properly encapsulated, it may be possible to edit the password file and to insert a bogus but viable user identifier and (encrypted) password of your own choosing, or to install your own variant password file from a remote login.

Trojan horsing the system

Trojan horses may be used to subvert the password checking, for example by insertion of a trapdoor into an authorization program within the security perimeter. The classical example is the C-compiler Trojan horse described by Thompson [167], in which a modification to the C compiler caused the next recompilation of the Unix login routine to have a trapdoor. This case is noted in Section 3.7. (Trojan horses may also capture passwords when typed or transmitted, as noted earlier under capture of unencrypted passwords.)

The variations within this class are amazingly rich. Although the attack types noted previously are particularly dangerous for fixed, reusable passwords, there are also comparable attack techniques for more sophisticated authentication schemes, such as challenge-response schemes, token authenticators, and other authenticators based on public-key encryption. Even if one-time token authenticators are used for authentication at a remote authentication server, the presence of multiple decentralized servers can permit playback of a captured authenticator within the time window (typically on the order of three 30-second intervals), resulting in almost instantaneous penetrations at all the other servers.
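The playback problem with decentralized servers can be sketched as follows. The class and parameter names are assumptions for illustration, the 90-second window corresponds to the three 30-second intervals mentioned above, and the check that a token is genuine is omitted so that only the replay logic is shown.

```python
class AuthServer:
    """Accepts a token once within its validity window. The set of used
    tokens is passed in, so it can be private to one server or shared."""

    def __init__(self, used_tokens: set, window_seconds: int = 90):
        self.used_tokens = used_tokens
        self.window_seconds = window_seconds

    def accept(self, token: str, issued_at: int, now: int) -> bool:
        if not 0 <= now - issued_at <= self.window_seconds:
            return False                    # outside the time window
        if token in self.used_tokens:
            return False                    # already seen: playback
        self.used_tokens.add(token)
        return True


if __name__ == "__main__":
    # Independent servers: each remembers only what it has seen itself.
    a, b = AuthServer(set()), AuthServer(set())
    print(a.accept("492817", issued_at=0, now=10))  # True (legitimate use)
    print(b.accept("492817", issued_at=0, now=20))  # True (playback succeeds)

    # Servers sharing one record of used tokens.
    shared = set()
    c, d = AuthServer(shared), AuthServer(shared)
    print(c.accept("492817", issued_at=0, now=10))  # True
    print(d.accept("492817", issued_at=0, now=20))  # False (playback refused)
```

Sharing the record of used authenticators closes this particular gap, at the cost of requiring timely and trustworthy communication among the servers.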

There are many guidelines for password management, but most of them can be subverted if the passwords are exposed. Even if callback schemes are used in an attempt to ensure that a dial-up user is actually in the specified place, callback can be defeated by remotely programmable call forwarding. Techniques for overcoming these vulnerabilities are considered in Section 7.9.2.

3.4 Resource Misuse

In addition to the two forms of malicious attacks (pest programs and bypasses) already discussed, there are various forms of attack relating to the misuse of conferred or acquired authority. Indeed, these are the most common forms of attack in some environments.

3.4.1 Active Misuse of Resources

Active misuse of apparently conferred authority generally alters the state of the system or changes data. Examples include misuse of administrative privileges or superuser privileges; changes in access-control parameters to enable other misuses of authority; harmful data alteration and false data entry; denials of service (including saturation, delay, or prolongation of service); the somewhat exotic salami attacks in which numerous small pieces (possibly using roundoff) are collected (for example, for personal or corporate gain); and aggressive covert-channel exploitation. A covert channel is a means of signaling without using explicit read-write and other normal channels for information flow; signaling through covert channels is typically in violation of the intent of the security policy. Covert storage channels tend to involve the ability to detect the state of shared resources, whereas covert timing channels tend to involve the ability to detect temporal behavior. For example, a highly constrained user might be able to signal 1 bit of information to an unconstrained user on the basis of whether or not a particular implicitly shared resource is exhausted, perhaps requiring some inference to detect that event. If it is possible to signal 1 bit reliably, then it is usually possible to signal at a higher bandwidth. If it is possible to signal only unreliably, then error-correcting coding can be used. (See Section 4.1.) Note that the apparently conferred authority may have been obtained surreptitiously, but otherwise appears as legitimate use.
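The covert storage channel described above, and the use of coding to cope with unreliable signaling, can be sketched as follows. Everything here is a hypothetical illustration: `SharedPool` stands in for an implicitly shared resource, the `flipped` argument models observations that the receiver misreads, and a simple repetition code with majority voting is used as the most elementary form of error-correcting coding (the text does not specify a particular code).

```python
class SharedPool:
    """Hypothetical implicitly shared resource with a fixed number of slots."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.in_use = 0

    def allocate(self, n: int) -> bool:
        if self.in_use + n > self.capacity:
            return False  # resource exhausted
        self.in_use += n
        return True

    def release(self, n: int) -> None:
        self.in_use = max(0, self.in_use - n)


def send_bit(pool: SharedPool, bit: int) -> None:
    """Constrained sender: exhaust the pool to signal 1, leave it free for 0."""
    pool.release(pool.capacity)
    if bit:
        pool.allocate(pool.capacity)


def receive_bit(pool: SharedPool) -> int:
    """Receiver infers the bit from whether its own small request fails."""
    if pool.allocate(1):
        pool.release(1)
        return 0
    return 1


def transmit(bits, repeat, flipped=()):
    """Send each bit `repeat` times; observations at indices in `flipped` are misread."""
    pool = SharedPool(capacity=4)
    observations = []
    for bit in bits:
        for _ in range(repeat):
            send_bit(pool, bit)
            seen = receive_bit(pool)
            if len(observations) in flipped:
                seen ^= 1  # model an unreliable observation
            observations.append(seen)
    return observations


def decode(observations, repeat):
    """Majority vote over each group of `repeat` observations."""
    groups = [observations[i:i + repeat] for i in range(0, len(observations), repeat)]
    return [1 if 2 * sum(g) > repeat else 0 for g in groups]


message = [1, 0, 1, 1]
uncoded = decode(transmit(message, repeat=1, flipped={1}), repeat=1)
coded = decode(transmit(message, repeat=5, flipped={0, 3, 7, 12, 19}), repeat=5)
```

With no repetition, a single misread observation corrupts the message; with five repetitions per bit, the message survives as long as fewer than half of the observations for each bit are misread, at the cost of a fivefold reduction in bandwidth.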

3.4.2 Passive Misuse of Resources

Passive misuse of apparently conferred authority generally results in reading information without altering any data and without altering the system (with the exception of audit-trail and status data). Passive misuse includes browsing (without specific targets in mind), searching for specific patterns, aggregating data items to derive information that is more sensitive than are the individual items (for example, see [84]), and drawing inferences. An example that can employ both aggregation and inference is provided by traffic analysis that is able to derive information without any access to the data. In addition, it is sometimes possible to derive inferences from a covert channel even in the absence of a malicious program or malicious user that is signaling intentionally.

These passive misuses typically have no appreciable effect on the objects used or on the state of the system (except, of course, for the execution of computer instructions and possibly resulting audit data). They do not need to involve unauthorized use of services and storage. Note that certain events that superficially might appear to be passive misuse may in fact result in active misuse—for example, through time-dependent side effects.

3.5 Other Attack Methods

Several other attack methods are worth noting here in greater detail—namely, the techniques numbered 1 through 12 in Table 3.1, collected into general categories as follows:

External misuse. Generally nontechnological and unobserved, external misuse is physically removed from computer and communication facilities. It has no directly observable effects on the systems and is usually undetectable by the computer security systems; however, it may lead to subsequent technological attacks. Examples include visual spying (for example, remote observation of typed keystrokes or screen images), physical scavenging (for example, collection of waste paper or other externally accessible computer media such as discards—so-called dumpster diving), and various forms of deception (for example, misrepresentation of oneself or of reality) external to the computer systems and telecommunications.

Hardware misuse. There are two types of hardware misuse, passive and active. Passive hardware misuse tends to have no (immediate) side effects on hardware or software behavior, and includes logical scavenging (such as examination of discarded computer media) and electronic or other types of eavesdropping that intercept signals, generally unbeknownst to the victims. Eavesdropping may be carried out remotely (for example, by picking up emanations) or locally (for example, by planting a spy-tap device in a terminal, workstation, mainframe, or other hardware-software subsystem). Active hardware misuse generally has noticeable effects, and includes theft of computing equipment and physical storage media; hardware modifications, such as internally planted Trojan horse hardware devices; interference (electromagnetic, optical, or other); and physical attacks on equipment and media, such as interruption of or tampering with power supplies or cooling. These activities have direct effects on the computer systems (for example, internal state changes or denials of service).

Masquerading. Masquerading attacks include impersonation of the identity of some other individual or computer subject; spoofing attacks that take advantage of successful impersonation; piggybacking attacks that gain physical access to communication lines or workstations; playback attacks that merely repeat captured or fabricated communications; and network weaving that masks physical whereabouts and routing via both telephone and computer networks, as practiced by the Wily Hackers [162, 163]. Playback attacks might typically involve replay of a previously used message or preplay of a not-yet used message. In general, masquerading activities often appear to be indistinguishable from legitimate activity.

Inaction. The penultimate case in Table 3.1 involves misuse through inaction, in which a user, operator, administrator, maintenance person, or perhaps surrogate fails to take an action, either intentionally or accidentally. Such cases may logically be considered as degenerate cases of misuse, but are listed separately because they may have quite different origins.

Indirect attacks. The final case in Table 3.1 involves system use as an indirect aid in carrying out subsequent actions. Familiar examples include performing a dictionary attack on an encrypted password file (that is, attempting to identify dictionary words used as passwords, and possibly using a separate machine to make detection of this activity more difficult [96]); factoring of extremely large numbers, as in attempting to break a public-key encryption mechanism such as the Rivest-Shamir-Adleman (RSA) algorithm [137], whose strength depends on a product of two large primes being difficult to factor; and scanning successive telephone numbers in an attempt to identify modems that might be attacked subsequently.
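The dictionary attack mentioned above can be sketched in a few lines. This is a minimal illustration under loud assumptions: SHA-256 is used as a stand-in one-way transformation (it is not the scheme of any particular system), and the password file, user names, and word list are invented for the example.

```python
import hashlib


def encrypt_password(password: str) -> str:
    """Stand-in one-way transformation; not any real system's password scheme."""
    return hashlib.sha256(password.encode()).hexdigest()


# Hypothetical captured password file: user name -> stored (encrypted) password.
password_file = {
    "alice": encrypt_password("sunshine"),    # a dictionary word
    "bob": encrypt_password("T9#vLp2!rW"),    # not in any word list
}

# Hypothetical word list; a real attack would use a far larger dictionary.
dictionary = ["password", "dragon", "sunshine", "monkey"]


def dictionary_attack(entries, words):
    """Encrypt each candidate word once, then look for matches in the file."""
    precomputed = {encrypt_password(word): word for word in words}
    return {
        user: precomputed[stored]
        for user, stored in entries.items()
        if stored in precomputed
    }


cracked = dictionary_attack(password_file, dictionary)
```

Because the candidate words are encrypted only once and the comparison is done against a copy of the file, the work can be carried out entirely on a separate machine, which is what makes the activity hard to detect on the target system.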

3.6 Comparison of the Attack Methods

Misuse of authority is of considerable concern here because it can be exploited in either the installation or the execution of malicious code, and because it represents a major threat modality. In general, attempts to install and execute malicious code may employ a combination of the methods discussed in Sections 3.2 through 3.5. For example, the Wily Hackers [162, 163] exploited trapdoors, masquerading, Trojan horses to capture passwords, and misuse of (acquired) authority. The Internet Worm [138, 150, 159] attacked four different trapdoors, as discussed in Section 5.1.1.

The most basic pest-program problem is the Trojan horse. The installation of a Trojan horse often exploits system vulnerabilities, which permit penetration by either unauthorized or authorized users. Furthermore, when they are executed, Trojan horses may exploit other vulnerabilities such as trapdoors. In addition, Trojan horses may cause the installation of new trapdoors. Thus, there can be a strong interrelationship between Trojan horses and trapdoors. Time bombs and logic bombs are special cases of Trojan horses. Letter bombs are messages that act as Trojan horses, containing bogus or interpretively executable data.

The Internet Worm provides a graphic illustration of how vulnerable some systems are to a variety of attacks. It is interesting that, even though some of those vulnerabilities were fixed or reduced, equally horrible vulnerabilities still remain today. (Resolution of the terminology war over whether the Internet Worm was a worm or a virus depends on which set of definitions is used.)

3.7 Classical Security Vulnerabilities

Many security flaws have been discovered in computer operating systems and application programs. In several cases, the flaws have been corrected or the resultant threats reduced. However, discovery of new flaws is generally running ahead of the elimination of old ones.

We consider here a few particularly noteworthy security flaws.

The C-compiler Trojan horse

A now-classical Trojan horse involved a modification to the object code of the C compiler such that, when the login program was next compiled, a trapdoor would be placed in the object code of login [167]. No changes were made either to the source code of the compiler or to the source code of the login routine. Furthermore, the Trojan horse was persistent, in that it would survive future recompilations. Thus, it might be called a stealth Trojan horse, because there were almost no visible signs of its existence, even after the login trapdoor had been enabled. This case was, in fact, perpetrated by the developers of Unix to demonstrate its feasibility and the potential power of a compiler Trojan horse and not as a malicious attack. But it is an extremely important case because of what it demonstrated.

A master-password trapdoor

Young and McHugh [179] (SEN 12, 3) describe an astounding flaw in the implementation of a password-checking algorithm that permitted bogus overly long master passwords to break into every system that used that login program, irrespective of what the real passwords actually were and despite the fact that the passwords were stored in an encrypted form. The flaw, shown in Figure 3.2, was subtle. It involved the absence of strong typing and bounds checking on the field in the data structure used to store the user-provided password (field b in the figure). As a result, typing an overly long bogus password overwrote the next field (field c in the figure), which supposedly contained the encrypted form of the expected password. By choosing the second half of the overly long sequence to be the encrypted form of the first half, the attacker was able to induce the system to believe that the bogus password was correct.

Figure 3.2 Master-password flaw and attack (PW = password)
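The mechanics of the flaw can be simulated as a sketch. The field width, the record layout (field b followed immediately by field c), and the `encrypt` function below are assumptions made for illustration; the original program and its encryption algorithm are not reproduced here. A `bytearray` is used to imitate adjacent fields in memory that are written without bounds checking.

```python
import hashlib

FIELD = 8  # assumed width of each field, in bytes


def encrypt(password: bytes) -> bytes:
    """Stand-in one-way function yielding FIELD bytes; not the original algorithm."""
    return hashlib.sha256(password).digest()[:FIELD]


def make_record(real_password: bytes) -> bytearray:
    """Field b (typed password, initially empty) followed by field c (encrypted password)."""
    return bytearray(bytes(FIELD) + encrypt(real_password))


def flawed_login(record: bytearray, typed: bytes) -> bool:
    """Copy the typed password into field b with no bounds check, then compare."""
    record[0:FIELD] = bytes(FIELD)   # clear field b
    record[0:len(typed)] = typed     # unchecked copy: may spill into field c
    typed_field = bytes(record[0:FIELD]).rstrip(b"\0")
    stored = bytes(record[FIELD:2 * FIELD])
    return encrypt(typed_field) == stored


def checked_login(record: bytearray, typed: bytes) -> bool:
    """Same check, but overly long input is rejected before the copy."""
    if len(typed) > FIELD:
        return False
    return flawed_login(record, typed)


# The attacker knows nothing about the real password.
first_half = b"AAAAAAAA"
master_password = first_half + encrypt(first_half)

ok_real = flawed_login(make_record(b"s3cret"), b"s3cret")
ok_wrong = flawed_login(make_record(b"s3cret"), b"guess")
ok_master = flawed_login(make_record(b"s3cret"), master_password)
ok_master_checked = checked_login(make_record(b"s3cret"), master_password)
```

In the simulation the overly long input replaces the stored encrypted password with the encrypted form of its own first half, so the comparison succeeds regardless of the real password; a simple length check before the copy is enough to defeat this particular attack.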

Undocumented extra argument bypasses security

Sun’s login program for the 386i had an argument, installed for convenience, that bypassed authentication. Discovery and abuse of that argument would have allowed anyone to become omnipotent as root (SEN 14, 5).

Integer divide breaks security

Sun Microsystems announced and fixed a bug in September 1991: “A security vulnerability exists in the integer division on the SPARC architecture that can be used to gain root privileges.” (This case is included in the on-line reportage from Sun as Sun Bug ID 1069072; the fix is Sun Patch ID 100376-01.)

Flaws in hardware or in applications

There are numerous security flaws that appear not in an operating system but rather in the application or, in some cases, in the hardware. Infrared-reprogrammable parking meters are vulnerable to attack by simulating the appropriate signals (SEN 15, 5). Cellular telephones are vulnerable to radio-frequency monitoring and tampering with identifiers. Several of the Reduced Instruction Set Computer (RISC) architectures can be crashed from user mode (SEN 15, 5). Hitachi Data Systems has the ability to download microcode dynamically to a running system; unfortunately, so do penetrators (SEN 16, 2). There was also a report of an intelligent system console terminal that was always logged in, for which certain function keys could be remotely reprogrammed, providing a Trojan horse superuser attack that could execute any desired commands and that could not be disabled (Douglas Thomson, SEN 16, 4).

3.8 Avoidance of Security Vulnerabilities

Subtle differences in the types of malicious code are relatively unimportant, and often counterproductive. Rather than try to make fine distinctions, we must attempt to defend against all the malicious-code types systematically, within a common approach that is capable of addressing the underlying problems. The techniques for an integrated approach to combatting malicious code necessarily cover the entire spectrum, except possibly for certain vulnerabilities that can be ruled out completely—for example, because of constraints in the operating environment, such as if all system access is via hard-wired lines to physically controlled terminals. Thus, multipurpose defenses are more effective in the long term than are defenses aimed at only particular attacks. Besides, the attack methods tend to shift with the defenses. For these reasons, it is not surprising that the defensive techniques in the system-evaluation criteria are, for the most part, all helpful in combatting malicious code and trapdoor attacks, or that the set of techniques necessary for preventing malicious code is closely related to the techniques necessary for avoiding trapdoors. The weak-link nature of the security problem suggests a close coupling between the two types of attack.

Malicious-code attacks such as Trojan horses and viruses are not covered adequately by the existing system-evaluation criteria. The existence of malicious code would typically not show up in a system design, except possibly for accidental Trojan horses (an exceedingly rare breed). Trojan horses remain a problem even in the most advanced systems.

There are differences among the different types of malicious-code problems, but it is the similarities and the overlaps that are most important. Any successful defense must recognize both the differences and the similarities, and must accommodate both. In addition, as noted in Chapter 4, the similarities between security and reliability are such that it is essential to consider both together—while handling them separately where that is convenient. That approach is considered further in Chapter 7, where the role of software engineering is discussed (in Section 7.8).

To avoid such vulnerabilities, and eventually to reduce the security risks, we need better security in operating systems (for mainframes, workstations, and personal computers), database management systems, and application systems, plus better informed system administrators and users. Techniques for improving system security are also discussed in Chapter 7.

3.9 Summary of the Chapter

Security vulnerabilities are ubiquitous, and are intrinsically impossible to avoid completely. The range of vulnerabilities is considerable, as illustrated in Figure 3.1 and Table 3.1. Some of those vulnerabilities are due to inadequate systems, weak hardware protection, and flawed operating systems; personal computers are particularly vulnerable if used in open environments. Other vulnerabilities are due to weaknesses in operations and use. Certain vulnerabilities may be unimportant in restrictive environments, as in the case of systems with no outside connectivity. Misuse by trusted insiders may or may not be a problem in any particular application. Thus, analyzing the threats and risks is necessary before deciding how serious the various vulnerabilities are.

Awareness of the spectrum of vulnerabilities and the concomitant risks can help us to reduce the vulnerabilities in any particular system or network, and can pinpoint where the use of techniques for defensive design and operation would be most effective.

Challenges

C3.1 Vulnerabilities are often hidden from general view—for example, because computer-system vendors do not wish to advertise their system deficiencies, or because users are not sufficiently aware of the vulnerabilities. Such vulnerabilities, however, often are well known to communities that are interested in exploiting them, such as malicious hackers or professional thieves. Occasionally, the vulnerabilities are known to system administrators, although these people are sometimes the last to find out. Ask at least two knowledgeable system administrators how vulnerable they think their systems are to attack; you should not be surprised to get a spectrum of answers ranging from everything is fine to total disgruntlement with a system that is hopelessly nonsecure. (The truth is somewhere in between, even for sensibly managed systems.) Try to explain any divergence of viewpoints expressed by your interviewees, in terms of perceived vulnerabilities, threats, and risks. (You might also wish to consider the apparent knowledge, experience, truthfulness, and forthrightness of the people whom you questioned.)

C3.2 Consider a preencryptive dictionary attack on passwords. Suppose that you know that all passwords for a particular system are constrained to be of the form abcdef, where abc and def represent distinct lower-case three-letter English-language dictionary words. (For simplicity, you may assume that there are 50 such three-letter words.) How would the constrained password attack compare with an exhaustive search of all six-letter character strings? How does the constrained password attack compare with an attack using English-language words of not more than six letters? (For simplicity, assume that there are 7000 such words in the dictionary.) Readers of other languages may substitute their own languages. This challenge is intended only to illustrate the significant reduction in password-cracking effort that can result if it is known that password generation is subject to constraints.

C3.3 Describe two password-scheme vulnerabilities other than those listed in Section 3.3.2. You may include nontechnological vulnerabilities.

C3.4 For your favorite system, search for the 26 types of vulnerabilities described in this chapter. (If you attempt to demonstrate the existence of some of these vulnerabilities, please remember that this book does not recommend your exploiting such vulnerabilities without explicit authorization; you might wind up with a felony conviction. Furthermore, the defense that “no authorization was required” may not work.)