Guest Chapter by Madeline Gannon

Designing for the body can be challenging with today’s digital tools. 3D-modeling environments tend to be empty virtual spaces that give no reference to the human body. Chapter 8 showed how 3D scanning and parametric modeling can help tailor your digital tools for crafting wearables. But even when customized, these tools still have limitations. At the end of the day, you are 3D-modeling in a tool that was built to design cars or buildings, not wearables. So, what would a digital tool native to wearables design look like? How would it help overcome the design challenges that are inherent to wearables?

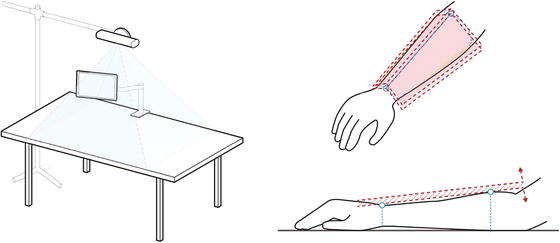

This chapter looks at Tactum, an experimental interface that uses the human body as an interactive canvas for digital design and fabrication. Tactum, shown in Figure 10-1, is an augmented 3D-modeling tool for designing ready-to-print wearables directly on your body. This design system was created by Madeline Gannon’s Madlab.cc. Instead of using a screen, mouse, and keyboard to 3D-model a design, it uses depth sensing and projection mapping to detect touch gestures and display digital designs directly on the user’s skin. Using Tactum, a person can touch, poke, rub, or pinch the geometry projected onto their arm to design a wearable. Once a design is finalized, a simple gesture exports the digital geometry for 3D printing. Because wearables designed with Tactum are created and scaled to the user’s body, they are ready to be worn immediately after printing.

Figure 10-1. Tactum is a gesture-based interface that lets you customize wearables directly on your body

This chapter takes a deep dive into the technical details that made Tactum possible. It also gives you some background on computer interfaces that use the skin and discusses the future potential of augmented modeling tools.

Skin-Centric Interfaces

In the past ten years, a rich body of work has been developing for using your skin, instead of a screen, as the primary interface for mobile computing. Researchers have been working on various methods for sensing and displaying interactions with the skin. Figure 10-2 shows three recent research projects that explore the practicalities of using hands and arms for navigating menus, dialing phone numbers, and remembering input. The consensus among these researchers is that skin offers a surprising number of possibilities that smartphones lack:

Skin interactions can be detected through devices worn on the body, in the body, or in the environment.

Skin can be both an input and output surface by combining sensors and projectors.

The human body has proprioceptive qualities (a spatial awareness of itself), which makes visual feedback not entirely necessary for effective interaction.

Unlike your cell phone, your skin is always available and with you.

Your skin innately provides tactile feedback.

Because skin is stretchy, you can get multidimensional input.

Figure 10-2. Researchers in human-computer interaction have been exploring skin as an interface for mobile computing. This image shows Skinput, by Chris Harrison, et al. (2010), in which buttons projected onto the skin work just like buttons on a screen. (Images courtesy of Chris Harrison, Scott Saponas, Desney Tan, and Dan Morris, Microsoft Research; licensed under CC BY 3.0.)

Tactum builds on this existing work by creating a way to 3D-model directly on the skin. Notice in the previous list that a few of the possibilities are particularly useful for 3D modeling: projecting onto the skin lets you map and simulate 3D models on the body; sensing diverse input lets you use a number of natural gestures for modeling; and multidimensional input has potential to use the skin as a digitally deformable surface. With these opportunities in mind, Tactum focuses specifically on designing for the forearm: not only is it easily accessible, but many wearables—including watches, gadgets, jewelry, and medical devices—can be made for the arm.

Sensing the Body

Tactum uses a single depth sensor to detect tactile interactions with the body. The system detects touch gestures by first tracking the medial axis of the forearm as it moves around the workstation. It can then isolate the small volume of space directly above the forearm. When a finger enters this small volume of space, the system knows that a user is touching the skin. Tactum then starts tracking the behaviors of the touch (such as duration, position, velocity, and acceleration) to classify a specific touch gesture. Using this method, Tactum can detect up to nine different skin-centric gestures for 3D modeling. Figure 10-4 shows the range of natural gestures that are sent to Tactum’s 3D-modeling back end.

Figure 10-3. (left) The initial workstation setup for Tactum. (right) The segmented, red touch zones on the forearm are tracked by Tactum’s depth sensor as the user moves in the workstation

Figure 10-4. Using a single depth sensor, Tactum can detect up to nine different gestures for 3D modeling
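The touch-zone idea can be illustrated with a little geometry. The following is a minimal sketch, not Tactum's actual code: it assumes the forearm's medial axis is a straight segment from wrist to elbow, that the arm has a fixed radius, and that the touch zone is a thin shell above the skin. The function names and the millimeter values are hypothetical.

```python
import math

def closest_point_on_segment(p, a, b):
    """Return the point on segment a-b that is closest to p (3D tuples, in mm)."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    ab_len_sq = sum(c * c for c in ab)
    if ab_len_sq == 0.0:
        return tuple(a)  # degenerate axis: wrist and elbow coincide
    # Project p onto the axis, then clamp so the result stays on the segment.
    t = sum(ap[i] * ab[i] for i in range(3)) / ab_len_sq
    t = max(0.0, min(1.0, t))
    return tuple(a[i] + t * ab[i] for i in range(3))

def in_touch_zone(fingertip, wrist, elbow, arm_radius_mm=35.0, zone_depth_mm=15.0):
    """True if the fingertip is within the thin volume just above the forearm.

    arm_radius_mm and zone_depth_mm are assumed values for illustration only.
    """
    nearest = closest_point_on_segment(fingertip, wrist, elbow)
    distance = math.dist(fingertip, nearest)
    return distance <= arm_radius_mm + zone_depth_mm

wrist, elbow = (0.0, 0.0, 0.0), (250.0, 0.0, 0.0)
print(in_touch_zone((100.0, 40.0, 0.0), wrist, elbow))  # finger resting on the arm
print(in_touch_zone((100.0, 80.0, 0.0), wrist, elbow))  # finger hovering well above it
```

Treating the forearm as a capsule around its medial axis keeps the test cheap enough to run on every depth frame; a real system would more likely compare the fingertip against the measured arm surface.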

Although the sensors may have changed, the principles for tracking and detecting the body have remained the same. Tactum uses depth data from the sensor in the following steps:

Find and segment the forearm.

Find the index finger and thumb of the opposite hand.

When the index or thumb enters the touch zone of the forearm, begin recording the gesture.

Identify the gesture, and send it to the 3D-modeling back end.
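The last two steps, recording a gesture and identifying it, can be sketched as a small classifier over the recorded touch samples. This is an illustrative simplification under stated assumptions: the `TouchSample` type, the thresholds, and the three labels (borrowed from the touch, poke, and rub gestures mentioned earlier) are hypothetical, and Tactum's real classifier distinguishes up to nine gestures.

```python
import math
from dataclasses import dataclass

@dataclass
class TouchSample:
    t: float  # time in seconds
    x: float  # position along the forearm, in mm
    y: float  # position around the forearm, in mm

def summarize(samples):
    """Return (duration in s, path length in mm, mean speed in mm/s) for one touch."""
    duration = samples[-1].t - samples[0].t
    path = sum(math.hypot(b.x - a.x, b.y - a.y)
               for a, b in zip(samples, samples[1:]))
    mean_speed = path / duration if duration > 0 else 0.0
    return duration, path, mean_speed

def classify(samples, poke_max_s=0.25, move_min_mm=10.0):
    """Label a recorded touch; both thresholds are assumed, not measured."""
    duration, path, _ = summarize(samples)
    if path < move_min_mm:
        # The finger stayed put: a short contact is a poke, a long one a touch.
        return "poke" if duration <= poke_max_s else "touch"
    return "rub"  # the finger traveled across the skin

recording = [TouchSample(0.0, 0.0, 0.0), TouchSample(0.5, 30.0, 0.0)]
print(classify(recording))  # rub
```

Velocity and acceleration could be derived the same way, by differencing consecutive samples, and used to separate gestures that share a similar path length.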

3D Modeling Back End

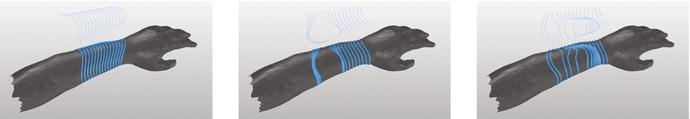

The gestures detected by Tactum’s depth sensor are fed directly to a 3D-modeling back end. This is where digital geometry is processed without the designer having to know the details. In the example in Figure 10-5, a live 3D model is being wrapped and piped around an existing 3D scan of the forearm. As the designer touches and pinches the skin, their tactile interactions are sent to the modeling back end. From here, the wearable design’s animated 3D-printable geometry is dynamically updated by a particular gesture. The updated geometry is simultaneously projected back onto the designer’s body. Although Figure 10-5 shows a 3D scan of the body, it’s not entirely necessary when designing in Tactum; however, working from a 3D scan helps ensure that the wearable has an exact fit once the printed form is placed back on the body.

Figure 10-5. The 3D-modeling back end dynamically updates the wearable design based on tactile interactions with the skin
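To get a feel for what wrapping geometry around the arm involves, consider the simplified sketch below. It is not how Tactum's back end works: in place of a 3D scan it assumes the forearm is a tapered cylinder, and it maps a flat design coordinate (u, v) onto that surface. The function name and all dimensions are hypothetical.

```python
import math

def wrap_point(u, v, wrist_radius_mm, elbow_radius_mm, length_mm):
    """Map a flat design coordinate onto a tapered cylinder standing in for the forearm.

    u in [0, 1] runs from wrist to elbow along the x axis.
    v in [0, 1] runs once around the arm.
    """
    # The radius grows linearly from the wrist to the elbow.
    radius = wrist_radius_mm + u * (elbow_radius_mm - wrist_radius_mm)
    angle = 2.0 * math.pi * v
    return (u * length_mm, radius * math.cos(angle), radius * math.sin(angle))

# A point at the wrist end of the pattern lands on the wrist circle.
print(wrap_point(0.0, 0.0, 25.0, 40.0, 250.0))  # (0.0, 25.0, 0.0)
```

Working from a 3D scan replaces the idealized cylinder with the arm's measured surface, which is why a scan helps the printed form fit exactly.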

Fabrication-Aware Design

Fabrication-aware design embeds the technical expertise of an experienced fabricator into the workflow of a digital design environment. With Tactum, the internal digital geometry is built with an awareness of how it will be physically produced: it only allows for 3D-printable geometry to be generated. Therefore, no matter how much or little the geometry is manipulated by the designer, the digital geometry is always exported as a valid, 3D-printable mesh. Figure 10-6 shows the projected geometry that the designer sees. Once they achieve a satisfactory design, they can close their fist to export the geometry for fabrication.

Figure 10-6. Dynamic 3D model being projected back onto the body. Once a desired geometry is found, the design can be exported for 3D printing

Tactum keeps the animated digital geometry scaled and attached to the designer’s body. This gives the printed form a level of ergonomic intelligence: wearable designs inherently fit the designer. Moreover, with the constraints for 3D printing embedded into the geometry, every design is immediately ready to be 3D-printed and worn on the body.
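One common requirement for a valid, 3D-printable mesh is that it be watertight: in a closed triangle mesh, every edge is shared by exactly two faces. The sketch below checks that property. It is only an illustration of the kind of constraint involved; Tactum is described as generating printable geometry by construction, and the chapter does not say which checks, if any, it runs. The function name is hypothetical.

```python
from collections import Counter

def is_watertight(faces):
    """Return True if every edge of a triangle mesh is shared by exactly two faces.

    faces is a list of (a, b, c) vertex-index triples.
    """
    edge_counts = Counter()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            # Store each edge with its smaller index first so direction is ignored.
            edge_counts[(min(u, v), max(u, v))] += 1
    return bool(edge_counts) and all(n == 2 for n in edge_counts.values())

tetrahedron = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
print(is_watertight(tetrahedron))      # True: a closed solid
print(is_watertight(tetrahedron[:3]))  # False: one face is missing
```

Watertightness is necessary but not sufficient for a good print; wall thickness and overhangs also matter, and they depend on the printing process.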

Intuitive Gestures, Precise Geometry

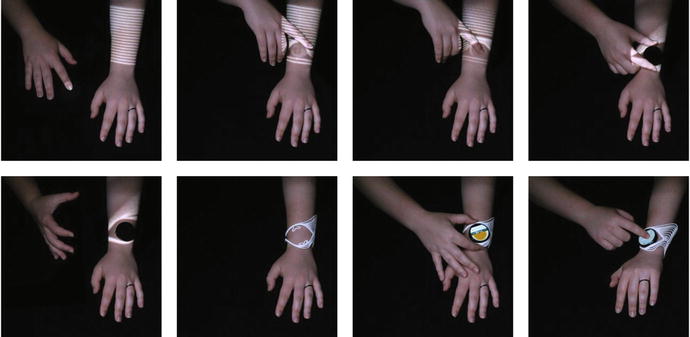

Gestures within Tactum are designed to be as natural as possible: as you touch, poke, or pinch your skin, the projected geometry responds as dynamic feedback. Although these gestures are intuitive and expressive, they are also fairly imprecise: they are only as precise as your hands. This means the minimum tolerance during design is around 20 mm, the approximate size of a fingertip. This 20 mm limit is adequate for many design scenarios, as in Figure 10-7, which shows the creation of a new design. However, to design a wearable around an existing object, such as a smartwatch, you need more precise control over the digital geometry.

Figure 10-7. This sequence of images shows the process of designing a smartwatch band directly on the body with Tactum. The actual watch face can be used as a physical reference for the digital design

Figure 10-7 shows how Tactum can be used to create a new watch band for a Moto 360 smartwatch. As in the previous example, you can use skin gestures to shape the overall design: touching and pinching the skin lets a designer set the position and orientation of the watch face and the distribution of the bands. However, the watch-band design also requires mechanical components to function: it needs clips that hold the watch to the watch band and a clasp that holds the watch band to the body. Moreover, for the watch to fit and the watch band to function, these mechanical components require precise measurements and tolerances that go beyond what you can detect from skin gestures.

To overcome this limitation, Tactum can connect premade geometry that was precisely 3D-modeled in a traditional CAD program to specific parts of a wearable design. In this example, the clips are first 3D-modeled using conventional modeling techniques. They are then imported and parametrically attached to the abstract design definition of the watch band: the two clips sit precisely 41 mm apart, but their position and orientation are entirely dependent on where the designer places the watch face on their skin. This parametric association between the overall watch-band design and any premade, imported geometry prevents a designer from directly modifying any high-precision geometry and preserves the wearable’s functional constraints.
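The parametric relationship between the clips and the watch face can be sketched in a few lines. This is a simplified illustration, not Tactum's implementation: it assumes a flat 2D coordinate system on the skin, a watch-face center and band angle supplied by the gesture, and a hypothetical `clip_positions` function. Only the 41 mm spacing comes from the example above.

```python
import math

CLIP_SPACING_MM = 41.0  # fixed by the watch hardware; gestures never change it

def clip_positions(face_center, band_angle_rad, spacing_mm=CLIP_SPACING_MM):
    """Place two clips a fixed distance apart, centered on the watch face.

    face_center is (x, y) in mm and band_angle_rad is the band direction;
    both come from the imprecise gesture. The spacing stays exact.
    """
    cx, cy = face_center
    dx, dy = math.cos(band_angle_rad), math.sin(band_angle_rad)
    half = spacing_mm / 2.0
    return ((cx - half * dx, cy - half * dy),
            (cx + half * dx, cy + half * dy))

first, second = clip_positions((100.0, 20.0), math.radians(30))
print(first, second)
print(math.dist(first, second))  # stays at 41 mm wherever the face is placed
```

The gesture controls only the inputs (where the face sits and how it is oriented), while the constrained dimension is a constant the designer cannot reach. That separation is what lets imprecise input drive precise output.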

Tactum uses intelligent geometry to strike a balance between intuitive gestures and precise constraints. Here, the exact geometries for the clips and clasp of the smartwatch are topologically defined within the band’s parametric model. The user-manipulated geometry defines the overall form and aesthetic of the watch band. The clips and clasp, although dependent on the overall watch-band geometry, cannot be directly modified by any gestures. The CAD back end places and generates those precise geometries once the user has finalized a design. Figure 10-8 shows how the existing smartwatch fits directly into the watch band designed through Tactum.

Figure 10-8. Tactum can design wearables around preexisting objects, such as this watch band for a Moto 360 smartwatch. Skin-centric gestures are used to set the overall design of the watch band. The high-precision mechanical components of the watch band can be imported from conventional CAD programs and parametrically attached to the overall design

Pre-scanning the body is not entirely necessary when designing a wearable in Tactum. However, it ensures an exact fit once the printed form is placed back on the body. Between the 3D scan, the intelligent geometry, and intuitive interactions, Tactum is able to coordinate imprecise skin-based gestures to create very precise designs around very precise forms.

Physical Artifacts

Tactum has been used to create a series of physical artifacts around the forearm. These artifacts test a range of different interactive geometries, materials, modeling modes, and fabrication machines. Figure 10-9 shows a PLA print made from a standard desktop 3D printer, a nylon and rubber print made from a selective laser sintering (SLS) 3D printer, and a rubbery print made from a stereolithography (SLA) 3D printer.

Figure 10-9. Tactum has been used to fabricate a number of wearables using different 3D printing processes. (left) An armlet printed from PLA on a desktop FDM printer. (center) A splint prototype printed from nylon and rubber on an SLS printer. (right) A cuff printed from a rubbery material on an SLA printer

Future Applications

Although Tactum is an experimental interface, there are real-world implications for skin-centric design tools. Many wearables today are still made using high-skill analog techniques: for example, a special-effects artist sculpting a mask onto an actor; a tailor fitting garments to a client; a prosthetist molding a socket on a residual limb; or a doctor wrapping a cast around a patient. These professions may benefit from integrating digital technologies into their workflows, but they should not have to abandon the dexterous abilities of their own two hands. Skin-centric design tools show a potential for balancing the best of digital and analog techniques for on-body design.

Summary

This chapter profiled Tactum, an experimental interface that uses the designer’s body as an interactive canvas for digital design and fabrication. You learned about related work in skin-based interfaces and saw how Tactum uses depth sensors to detect tactile gestures on the skin. This chapter also showed how gestures—although relatively imprecise—can be used to 3D-model precise, functional objects directly on the body. Finally, the chapter concluded with a series of 3D-printed artifacts fabricated using a range of printing processes. In the next chapter, we look at how wearables can integrate into our everyday lives for health and wellness.