Chapter 5. Loading Data

Before running queries or using analytic tools, Greenplum needs to ingest data. There are multiple ways to move data into Greenplum. In the sections that follow, we explore each of them.

INSERT Statements

The simplest way to insert data is to use the INSERT SQL statement, which facilitates inserting a few rows of data. However, because the insert is done via the master node of Greenplum Database, it cannot be parallelized.

An insert like the one that follows is fine for populating the values in a small lookup table:

INSERT INTO faa.d_cancellation_codes

VALUES ('A', 'Carrier'),

('B', 'Weather'),

('C', 'NAS'),

('D', 'Security'),

('', 'none');

There are also set-based INSERT statements that you can parallelize (these are discussed later in this chapter).

COPY Command

The COPY command is a psql command. There is also the COPY statement in SQL. There are minor differences, one of which is that only a user with superuser privileges can use COPY.

You can run COPY in psql scripts. psql is the command-line tool for accessing Greenplum as well as PostgreSQL. A Greenplum version is available as part of the normal installation process.

COPY is efficient for loading smallish datasets—a few thousand rows or so—but because it is single-threaded through the master server, it is less useful for loads of millions of rows or more.

The data is in a file, the top lines of which look like this:

dog_id, dog_name, dog_dob 123,Fido,09/09/2010 456,Rover,01/21/2014 789,Bonzo,04/15/2016

The corresponding table would be as follows:

CREATE TABLE dogs (dog_id int, dog_name text, dog_dob date) distributed randomly;

Here’s the SQL statement that copies the three rows of data to the table dogs:

COPY dogs FROM '/home/gpuser/Exercises/dogs.csv' CSV HEADER LOG ERRORS SEGMENT REJECT LIMIT 50 ROWS;

Raw data is often filled with errors. Without the REJECT clause, the COPY statement would fail if there were errors. In this example, the REJECT clause allows the script to continue loading until there are 50 errors on a segment. The LOG ERRORS clause will place the errors in a log file. This is explained in the Greenplum SQL Command Reference.

You also can use COPY to unload small amounts of data:

COPY dogs TO '/home/gpuser/Exercises/dogs_out.csv' CSV HEADER;

The gpfdist Process

For large datasets, neither INSERT nor COPY will be nearly as performant as Greenplum’s parallel loading techniques. These make use of external tables and the gpfdist tool. gpfdist is a process that runs on the server on which the raw data resides. It sits there passively until a Greenplum SQL statement implicitly calls it to request data from an external table.

The data is in a file, the top lines of which are as follows:

dog_id, dog_name, dog_dob 123,Fido,09/09/2010 456,Rover,01/21/2014 789,Bonzo,04/15/2016

The corresponding table would be as follows:

CREATE TABLE dogs (dog_id int, dog_name text, dog_dob date) distributed randomly;

The external table definition could be as shown here:

CREATE READABLE EXTERNAL TABLE dogs_ext like(dogs)

LOCATION ('gpfdist://10.0.0.99:8081/dogs.csv')

FORMAT 'csv' (header)

LOG ERRORS SEGMENT REJECT LIMIT 50 rows;

Start the gpfdist process on the host housing the data to be loaded. The -d command-line argument points to the directory in which the files live:

gpfdist -d /home/gpuser/data/ -p 8081 > gpfdist.log 2>&1 &

After the gpfdist process has been started, the data can easily be imported by using the following statement:

INSERT INTO dogs AS SELECT * FROM dogs_ext;

When the INSERT statement is executed, all of the segments engaged in the INSERT statement will issue requests to the gpfdist process running on the server with address 10.0.0.99 for chunks of data. They will parse each row, and if the row should belong to the segment that imports it, it will be stored there. If not, the row will be shipped across the private interconnect to the segment to which it belongs and it will be stored there. This process is known as Scatter-Gather. The mechanics of this are completely transparent to the user community.

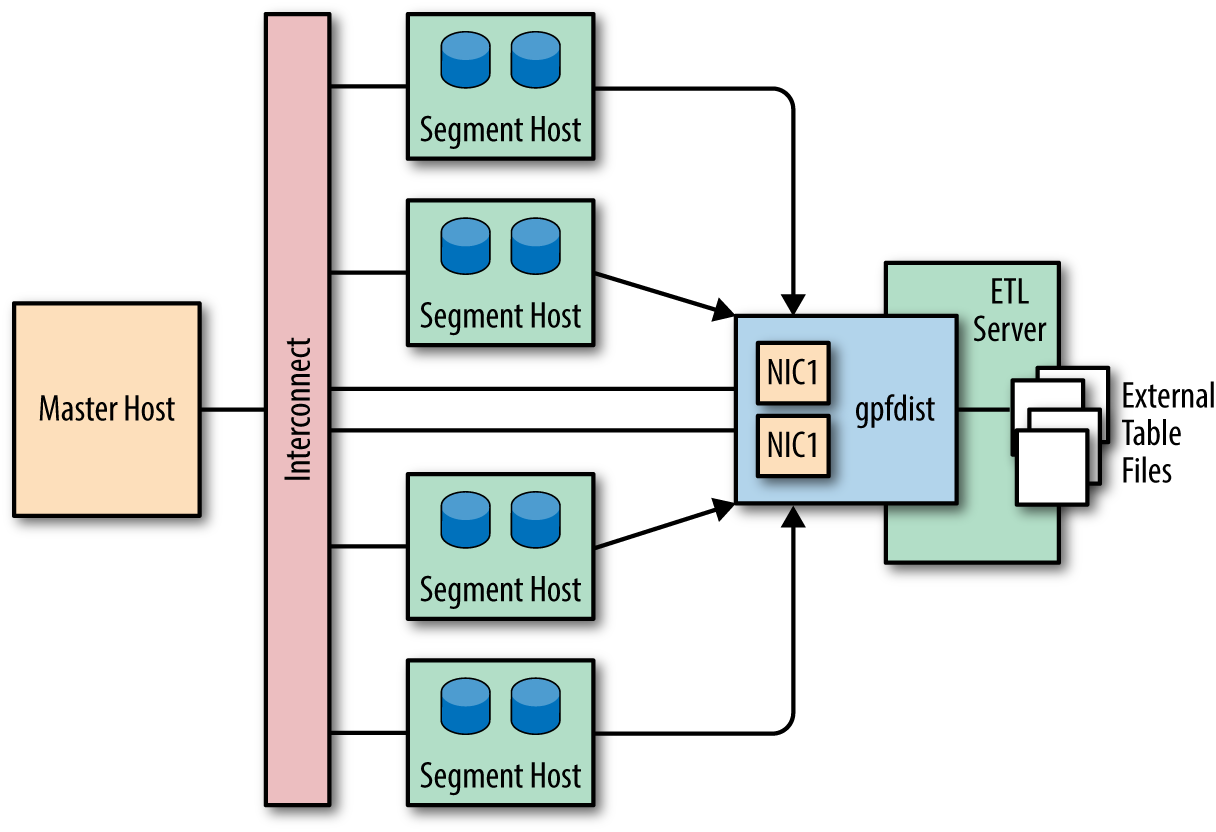

The Greenplum documentation describes a number of methods for deploying gpfdist. In particular, the number of gpfdist processes per external server can have a large impact on load speeds. Figure 5-1 shows one example in which one gpfdist process is running on the external ETL server.

Figure 5-1. External table using single gpfdist instance with multiple network interface cards

The gpload Tool

Many Greenplum users employ the gpload tool. The gpload command is an integral part of Greenplum. It uses a YAML configuration file to describe the loading of data. In many organizations, there are dedicated data-loading teams that might not actually have much SQL experience or might not even have database accounts. For these teams, gpload is an ideal tool.

Here’s what gpload does behind the scenes:

-

Creates a user session in a database

-

Creates an external table that describes the data to be loaded

-

Starts the

gpfdistprogram on the host where the data is located -

Performs a parallel load of the data from the source to the target table

For this to happen, the configuration file or the command-line argument or relevant environment variable must specify the database user, the target database and table, the location of the target data, and the Greenplum master host of the cluster.

Following is a very simple YAML configuration file for our dog table example:

VERSION: 1.0.0.1

DATABASE: dogpound

USER: gpuser

HOST: mdw-1

PORT: 5432

GPLOAD:

INPUT:

- SOURCE:

LOCAL_HOSTNAME:

- data_host.foobar.com

PORT: 8081

FILE:

- /home/gpuser/data/*

- FORMAT: csv

- DELIMITER: ','

OUTPUT:

- TABLE: public.dogs

- MODE: INSERT

Notice that there are two PORT fields in the YAML file. The first is the Greenplum listener port to which user sessions attach. The second is the port that gpfdist and Greenplum use to transfer data.

There are many useful optional features in gpload:

-

Logging load errors for potential correction.

-

SQL commands that you can run before and after the load operation. You can use these to add audit commands or check the error files.

-

Ability to truncate the target table before loading. This is useful when loading into staging tables on a regular basis.

-

Mapping makes it possible for you to apply functions or expressions to data as part of the ingest process. You could use this to encrypt data or transform it in other ways.

The gpload command automates what gpfdist does, as described in the previous section.

Additional Resources

The Rollout Blog has a good tutorial on YAML.

The Greenplum Documentation has a thorough discussion of loading and unloading data.

Pivotal’s Jon Roberts has written Outsourcer, a tool that does Change Data Capture from Oracle and SQLServer into Greenplum. Though this is not a Pivotal product and is thus not supported by Pivotal, Jon makes every effort to maintain, support, and enhance this product.