Chapter 15. Good Cop, Bad Cop

In the past I played whack-a-mole against abusers. I spent many person-months on algorithmic approaches to fighting abuse. My efforts focused on content analysis, sophisticated filters, and automated user “rehab.” In the end, the abusers (as a collective) won. While I gave up and left for more fulfilling endeavors, the abusers gradually figured out ways to bypass filters, create multiple accounts, and keep flooding message boards and public forums with spam.

Anyone who has ever seriously tried to block email spam knows that these kinds of arms races are very hard to win. We are very few, the abusers are many, and there always seem to be more of them. They have learned to share sophisticated tools (such as CAPTCHA breakers) so effectively that the pros and the least sophisticated script kiddies are no longer two separate groups.

The good news is that there is a better way, but it requires a totally new and fresh approach.

In particular, I’ve become a true believer in community moderation and collaborative filtering, after seeing the success of schemes like those of Y! Answers and Slashdot. In essence, these successful sites empower their (good) users to take ownership of the abuse and quality-level goals. They create strong incentives for good users to put effort into moderating the sites. Users who consistently exhibit good judgment and a willingness to contribute get “strength points,” making them more and more powerful in the never-ending game of stamping out abuse.

In contrast, abusers have almost no power. The more they abuse, the more points they lose. New accounts start with zero reputation and zero power, which makes them useless in the game.

Once we reach a critical mass of good and willing users, the incentive system feeds itself. The abusers may bypass a filter, but they cannot fool an army of dedicated human beings.
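The point scheme described above can be sketched in a few lines. This is only an illustration: the class name, the point values, and the rule that an account's moderation weight equals its points are all assumptions, not the actual mechanics of Y! Answers or Slashdot.

```python
from dataclasses import dataclass

# Hypothetical point values, chosen only for illustration.
REWARD_GOOD_CALL = 2   # awarded when a moderation action is upheld
PENALTY_ABUSE = 5      # deducted when a post is confirmed as abusive


@dataclass
class Account:
    name: str
    points: int = 0    # new accounts start with zero reputation

    def record_good_call(self) -> None:
        self.points += REWARD_GOOD_CALL

    def record_abuse(self) -> None:
        # Floor at zero: an abuser ends up with no power, not negative power.
        self.points = max(0, self.points - PENALTY_ABUSE)

    @property
    def moderation_weight(self) -> int:
        """How much this account's flag or vote counts (assumed: its points)."""
        return self.points


veteran = Account("veteran")
for _ in range(10):
    veteran.record_good_call()

throwaway = Account("throwaway")   # freshly registered by an abuser
throwaway.record_abuse()

print(veteran.moderation_weight, throwaway.moderation_weight)
```

Under these assumptions, registering a fresh account buys an abuser nothing, because influence has to be earned through moderation calls that are later upheld.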

Community Management

Administrators are people, too! And they deserve good interfaces. Far too often, since the earliest days of the Web, beautiful sites have been launched to the public with minimally functional content management and administrative functionality, or sometimes none at all. Similarly, a social website has an engine room, too: it needs an admin side where community managers can help cultivate the best contributions and downplay or discourage the worst.

But how can people be expected to behave well if they don’t know what constitutes good behavior? Thus it’s important to establish and clearly communicate the behavioral norms of the community and to actively participate in the community, particularly in its impressionable early days, modeling good behavior and demonstrating how to get things done.

Norms

In the context of managing social networks and information in the public domain, norms are the patterns of behavior expected of people operating or working with a system (as opposed to behaviors established by law).

Norms are socially enforced and less restrictive than rules, although that says little about how effective a norm is compared with a legally established rule. In many cases, norms appear to be more effective than rules at shaping user behavior, because actions are more visible to a broader community. This aligns with the fundamental observation that broad community involvement in management works far better than management entrusted to a select few.

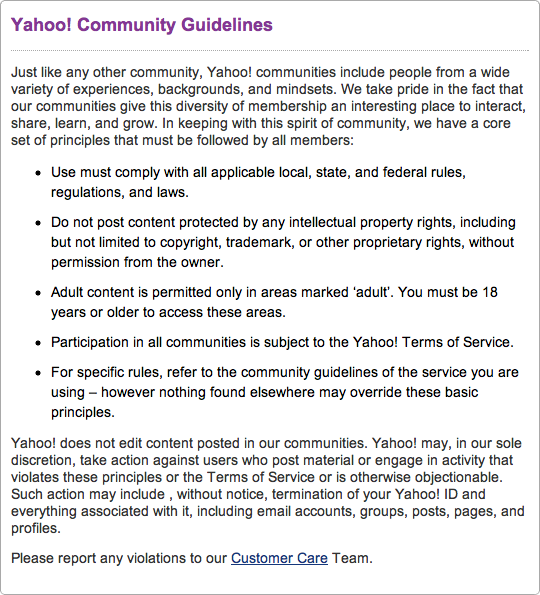

A key building block of any community interface is a published set of guidelines that is easy to find and crystal-clear (Figure 15-1).

Role Model

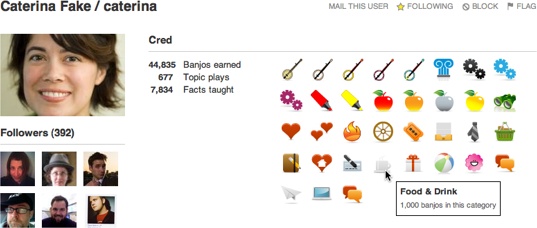

If you conceive of the founders or creators of a social website as a kind of external deity ruling with an iron fist, you conjure up one sort of image of a community, but if instead you picture the founders manifesting (or “incarnating”?) as ordinary users in the system, then they can walk among the common folk and demonstrate how life in this microcosm is really intended to be lived (Figure 15-2). This doesn’t preclude attending to and learning from the innovations and revealing mistakes of your users, but it is a proven method for getting patterns and norms of behavior established from the get-go.

Potemkin Village (Anti-Pattern)

Users may want separate areas for discussing separate topics, and site creators may have an elaborate vision of a complex arrangement of topics and groups. But instead of creating a complicated empty scaffolding in hopes of enticing a community to take root (the “if you build it, they will come” fallacy), start small and compact, and then prepare to grow organically (Figure 15-3).

Create one main topic, a pinned (permanently on top) welcome topic, and perhaps a separate help topic, and nothing else. Resist the urge to anticipate the contours of the conversations and groups. Wait until people are begging for a subtopic, then fork the original group. Repeat.

This way, any pioneer community members will all interact in a single shared space, with no dilution of numbers. By the time they want to start sequestering topics from one another you’ll have already reached critical mass.

Continue to resist calving off new groups until they are clearly and undeniably needed.

Building out a thorough structure of anticipated groups or discussions before a site has any real life in it creates a “Potemkin Village,” an empty, fake site that dissipates any early momentum it might otherwise gather across too many cubbyholes.

Collective Governance

Determine how much self-governing you want your community members to do, and then give them the tools to do it with. They will want to make their own decisions. They may want to use voting to do so sometimes.

Also, confer with your users when deciding on the direction of the site. Don’t necessarily bow to the majority’s will, but some decisions are best made when given over to the community.

As Craigslist has wrestled with sustainable ideas for making money without damaging the “magic” of its community, the founder has frequently asked the community to help decide the direction of the site. For example, the decision to charge for rental listings in a few hot real estate markets and keep everything else free was made by consensus within the existing user community.

Group Moderation

The goal of community moderation is to foster rich conversations, connections, relationships, and activities. Reward the kinds of participation you wish to see more of, and gently discourage the behaviors you believe are counterproductive.

When dealing with flame wars and sock puppets (see Flame Wars and Sock Puppets), “don’t feed the trolls.” Whenever possible, de-escalate. Give problem users “time outs” (suspensions from posting privileges), and when necessary, freeze entire threads or topics to let emotions cool.
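A time-out can be modeled as nothing more than an expiry timestamp per user. The sketch below assumes a hypothetical in-memory `TimeOuts` class; a real site would persist this state and check it wherever posts are accepted.

```python
from datetime import datetime, timedelta, timezone


class TimeOuts:
    """Tracks temporary suspensions of posting privileges."""

    def __init__(self):
        self._until = {}  # username -> moment the suspension ends

    def suspend(self, user, duration, now=None):
        now = now or datetime.now(timezone.utc)
        self._until[user] = now + duration

    def can_post(self, user, now=None):
        now = now or datetime.now(timezone.utc)
        ends = self._until.get(user)
        # Users with no suspension, or an expired one, may post.
        return ends is None or now >= ends


start = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
timeouts = TimeOuts()
timeouts.suspend("hothead", timedelta(hours=24), now=start)

print(timeouts.can_post("hothead", now=start + timedelta(hours=1)))
print(timeouts.can_post("hothead", now=start + timedelta(hours=25)))
print(timeouts.can_post("bystander", now=start))
```

Because the suspension lapses on its own, no moderator has to remember to lift it once emotions have cooled.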

For incorrigible characters, consider banning them (but there’s always the risk they will simply reregister with a new account), or put them in a “Hall of Mirrors” in which only they (and perhaps other spammers and trolls) can see their posts. They will wonder why no one is falling for their tricks anymore.
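The Hall of Mirrors comes down to a visibility filter applied when a page is rendered. Here is a minimal sketch, assuming a hypothetical `Post` record and a set of usernames that moderators have placed in the hall:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    author: str
    text: str


def visible_posts(posts, viewer, mirrored):
    """Return the posts that `viewer` should see.

    `mirrored` is the set of usernames placed in the Hall of Mirrors.
    Their posts are shown only to viewers who are also in that set,
    so they still see their own posts (and each other's).
    """
    if viewer in mirrored:
        return list(posts)
    return [p for p in posts if p.author not in mirrored]


posts = [Post("alice", "Welcome!"), Post("troll", "Buy pills"), Post("bob", "Hi")]
mirrored = {"troll"}

print([p.author for p in visible_posts(posts, "bob", mirrored)])
print([p.author for p in visible_posts(posts, "troll", mirrored)])
```

The troll's own view is unchanged, which is the point: nothing on the page signals that anyone else has stopped seeing the posts.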

Official moderators (paid, employed staff) can do only so much. They will need to find allies in the community itself. Promote from within. Create labels (see Labels) and identify the most helpful community members. Grant moderation privileges to trusted users, and harness the feedback of all users to promote the best contributions and bury the worst.

Collaborative Filtering

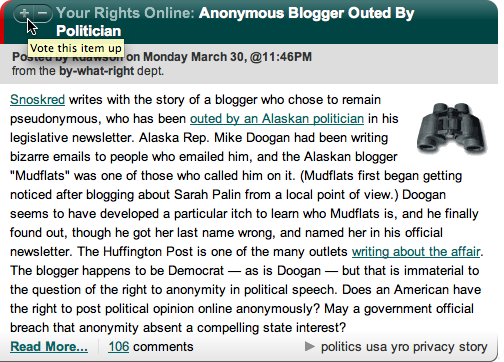

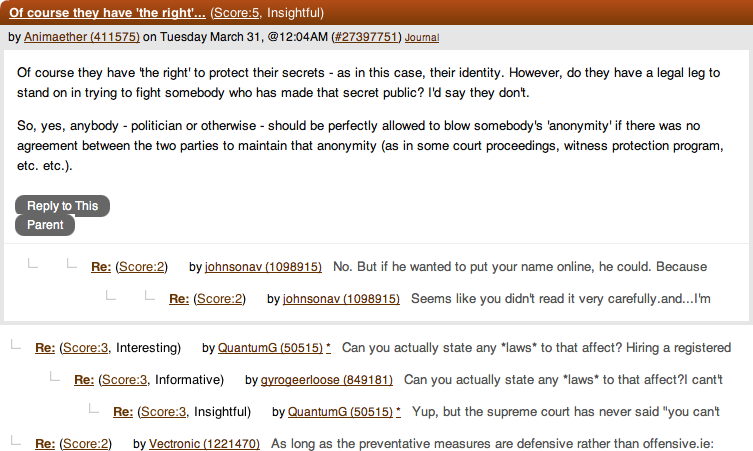

People need help finding the best contributions in an online community (Figure 15-4).

Use this pattern when you have a large base of contributors and a wide range of quality.

How

Enable authenticated users to vote up or down, or otherwise rate content. Optionally give users with higher reputation status more privileges to highlight or hide content. Aggregate the votes, and use this to determine sorting and display order (Figure 15-5).
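One way to aggregate votes is a reputation-weighted net score. The sketch below is an assumption-laden illustration rather than the algorithm any of the sites listed under "As seen on" uses: the function name, the weight scale, and the rule that only a user's latest vote on an item counts are all hypothetical.

```python
from collections import defaultdict


def rank_content(votes, reputation, default_weight=1.0):
    """Sort content ids by reputation-weighted net score, best first.

    votes: iterable of (user, content_id, value) with value +1 or -1.
    reputation: mapping of user -> vote weight (hypothetical scale).
    Only the most recent vote per (user, content) pair counts.
    """
    latest = {}
    for user, content_id, value in votes:
        if value not in (1, -1):
            raise ValueError("vote value must be +1 or -1")
        latest[(user, content_id)] = value

    scores = defaultdict(float)
    for (user, content_id), value in latest.items():
        scores[content_id] += value * reputation.get(user, default_weight)

    # Ties are broken by content id so the order is deterministic.
    return sorted(scores, key=lambda cid: (-scores[cid], cid))


votes = [
    ("ann", "post-1", +1),
    ("ben", "post-1", -1),
    ("ann", "post-2", +1),
    ("cat", "post-2", +1),
    ("ben", "post-3", -1),
]
reputation = {"ann": 3.0, "ben": 1.0, "cat": 1.0}

print(rank_content(votes, reputation))
```

A plain net score like this favors items that have collected many votes. Sites with large vote volumes often add time decay or a confidence adjustment, which this sketch leaves out.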

Why

The collective wisdom of the community can help filter out the best contributions and conversations.

Related patterns

Reputation Influences Behavior

Thumbs Up/Down Ratings

Vote to Promote

As seen on

Kuro5hin (http://www.kuro5hin.org/)

Slashdot (http://slashdot.org/)

Yahoo! Answers (http://answers.yahoo.com/)

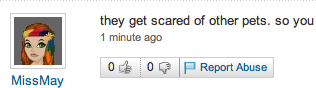

Report Abuse

Any active, successful social system online is subject to abuse. We know it will occur, so we need to have processes in place for identifying and mitigating it. People need a way to report it that isn’t too inconvenient and doesn’t require them to type in or restate information that we could glean from context.

In a growing community, abuse reporting scales up faster than human beings can handle it, so an escalation strategy is needed to deal with the consequences of popularity.

Use when

Use this pattern to give your users the ability to self-moderate content that is contributed by the community (Figure 15-6).

How

The experience of reporting abuse should be as simple and transparent as possible. Do not ask the user to enter data that could have been captured automagically. Make it clear to the user how the report will be handled, without overpromising, and then deliver the user cleanly back to the context from which he reported the abuse. Where possible, immediately hide the reported content from the user who reported it:

Offer a Report Abuse link on any community-generated content (optionally include the standard flag icon).

Reporting abuse should take the user to a simple form.

Abuse reports should be tracked as signals along with other evidence of abuse.

For highly granular user-generated content (such as a stream of vitality updates on a page), the Report Abuse affordance must be made available individually for each item, without overwhelming the page with a stream of Report Abuse links or little flags.
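Treating reports as signals means a report is one input to a decision, not the decision itself. A minimal sketch follows, assuming a hypothetical automated filter score between 0 and 1 and made-up weights and thresholds:

```python
from dataclasses import dataclass, field


@dataclass
class AbuseSignals:
    """Evidence collected about one piece of content."""
    reporters: set = field(default_factory=set)  # distinct users who reported it
    filter_score: float = 0.0                    # 0..1 from an automated filter (assumed)

    def add_report(self, reporter: str) -> None:
        # A set ignores repeat reports from the same user.
        self.reporters.add(reporter)

    def score(self, report_weight: float = 0.25) -> float:
        # Hypothetical combination: each distinct report adds a fixed
        # amount on top of the automated score, capped at 1.0.
        return min(1.0, self.filter_score + report_weight * len(self.reporters))


def needs_review(signals: AbuseSignals, threshold: float = 0.5) -> bool:
    """True once the combined evidence reaches the review threshold."""
    return signals.score() >= threshold


item = AbuseSignals(filter_score=0.125)
item.add_report("ann")
item.add_report("ann")      # duplicate, counted once
print(needs_review(item))   # one distinct report: below the threshold

item.add_report("ben")
print(needs_review(item))   # two distinct reports: escalate to review
```

Counting distinct reporters rather than raw clicks keeps one determined user from escalating an item single-handedly.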

Report Abuse link

Use consistent terminology for labeling the report link. Some sites prefer “Report Abuse,” and others use “Flag.”

Optional icon

Optionally, include a consistent icon to mark the link for reporting abuse. (Reporting abuse is sometimes colloquially referred to as “flagging” abusive content, hence the iconic symbol for the abuse report button is a flag.) It should be easy for the user to spot a Report Abuse flag icon and click it to initiate the abuse reporting process:

Avoid the flag icon in contexts where it will conflict with existing terminology or symbols (as in Mail, where “flagging” a message indicates that it is important and not that it is abusive).

Likewise, if the icon doesn’t suit the design, then use only the Report Abuse link text.

Do not use the icon without text. (It’s OK to use text without an icon.)

Abuse report form

The form should be as simple as possible (but no simpler):

Clicking the Report Abuse link should take the user to a form where he can select the type or nature of the abuse and optionally fill in more context.

The user should not be required to manually enter the relevant URL or page title or any other metadata we can glean from the source page.

If possible, use an inline short form for people who are already signed in (they just choose from two categories—offensive or illegal—optionally make a comment, and they’re done).

Signed-out users will need a full-page form where they can indicate how they can be contacted.
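The form rules above can be expressed as a small constructor that takes everything it can from the page and asks the user only for a category, an optional comment, and (when signed out) contact details. All names here are hypothetical, and the example URL is a placeholder.

```python
from dataclasses import dataclass
from typing import Optional

CATEGORIES = ("offensive", "illegal")  # the two short-form choices


@dataclass(frozen=True)
class PageContext:
    """Metadata the page already knows; the user never types it."""
    url: str
    title: str
    content_id: str
    signed_in_user: Optional[str] = None


@dataclass(frozen=True)
class AbuseReport:
    url: str
    title: str
    content_id: str
    category: str
    comment: str
    reporter: Optional[str]
    contact: Optional[str]


def build_report(ctx, category, comment="", contact=None):
    if category not in CATEGORIES:
        raise ValueError(f"unknown category: {category!r}")
    if ctx.signed_in_user is None and not contact:
        # Signed-out users must say how they can be reached.
        raise ValueError("contact details required when signed out")
    return AbuseReport(ctx.url, ctx.title, ctx.content_id,
                       category, comment, ctx.signed_in_user, contact)


ctx = PageContext("https://example.com/t/42", "Help topic", "comment-7", "ann")
report = build_report(ctx, "offensive")
print(report.url, report.category, report.reporter)
```

For a signed-in user the only required input is the category, which is what makes an inline short form feasible.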

Confirmation

Submission of the form should generate a success message that does not promise any specific action, and then should return reporting users to the original context where they initially started the abuse-reporting process. Optionally, hide the offending content from them while the response to the report is pending.

Abuse tracking

After a user submits an abuse report, it must be reviewed by a customer care agent (unless a reputation system is in place to track signals of abuse). As a site scales up, additional considerations have to be factored into the abuse-reporting process:

Just provide a way for a user to report abusive content and send the request to a support team for review.

Add priority to different requests by allowing users to choose whether this abuse violates community guidelines or is illegal.

Take into consideration whether you should inform the original poster about the abuse report.

Take into consideration whether an appeal mechanism should be provided.
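Prioritizing by report type maps naturally onto a priority queue. The sketch below assumes that reports marked illegal are reviewed before guideline violations and that reports of equal priority are handled in arrival order. The class and the priority table are illustrative only.

```python
import heapq
import itertools

# Lower number = reviewed sooner. The ordering is an assumption.
PRIORITY = {"illegal": 0, "guidelines": 1}


class ReviewQueue:
    def __init__(self):
        self._heap = []
        # Sequence number keeps arrival order within the same priority.
        self._counter = itertools.count()

    def submit(self, content_id, kind):
        heapq.heappush(self._heap, (PRIORITY[kind], next(self._counter), content_id))

    def next_for_review(self):
        """Pop the most urgent report, or return None if the queue is empty."""
        if not self._heap:
            return None
        _, _, content_id = heapq.heappop(self._heap)
        return content_id


queue = ReviewQueue()
queue.submit("post-1", "guidelines")
queue.submit("post-2", "illegal")
queue.submit("post-3", "guidelines")

print([queue.next_for_review() for _ in range(3)])
```

Notifying the original poster and handling appeals would hang off the review step and are not modeled here.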

Why

Providing users a standard way to report abusive content and behavior complements any algorithmic and behavioral signals of abuse gathered.

Related patterns

Reputation Influences Behavior

As seen on

Craigslist (http://craigslist.org/)

Yahoo! (http://www.yahoo.com/)

Most social sites

Sources

This pattern is based on the Report Abuse component pattern written primarily by Micah Alpern at Yahoo!.

What’s the Story?

Shara Karasic says, “Good stories are the glue of the strongest online and offline communities. Every group, from hunter-gatherer tribes to modern physicists, has a story that binds them together with history, mission, and purpose. Weave your story into your interface and interactions, and let your users become the main characters in that story.”

Further Reading

“Community Lessons from Flickr’s Heather Champ,” from Brian Oberkirch’s Only Connect blog, http://www.brianoberkirch.com/2008/03/07/community-lessons-from-flickrs-heather-champ/

Derek Powazek’s posts on community, http://powazek.com/posts/category/community

“The Virtual Community,” by Howard Rheingold, http://www.well.com/~hlr/vcbook/