In a typical DevOps pipeline, teams leave code building and package management to the DevOps tool, such as GitLab or Azure DevOps. DevSecOps expects more than that: every developer and IT professional must take responsibility for code security, quality, and reviews. The collaborative nature of open source communities provides high-quality code review and constructive criticism of code changes. A small organization might not be able to enjoy the benefits of hundreds of collaborators online, but they can use their own engineers and architects and develop the initial versions of their product without peer reviews. Regardless of the automation platform, scripts and packages can be introduced in the pipeline that require a merge request1 to be peer-reviewed. Even before a merge request is created, a well-defined DevOps2 pipeline can notify the contributor about the potential problems that a change might have, such as the following:

Performance degradation of the solution

Code complexity

Vulnerabilities in the code

Potential code smell and anti-patterns being introduced

The number of test cases failing or being ignored by developers and the code that is not being covered by the test cases

These cases differ from repository to repository, and from project to project. The gist of DevOps is that it automates the process as long as the policies are met and no serious problems are found. As soon as the DevOps pipeline finds a problem, it breaks the pipeline and alerts the operations team or the developer responsible for a fix.
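The gate described above can be sketched as a small policy check. This is only an illustration, written in Python to keep it self-contained; the `ChangeReport` fields, the `DEFAULT_POLICY` limits, and both function names are hypothetical and do not come from GitLab, Azure DevOps, or any other tool.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ChangeReport:
    """Hypothetical summary of the checks run against one change."""
    failed_tests: int
    ignored_tests: int
    coverage_percent: float
    vulnerabilities: int
    code_smells: int


# Hypothetical limits; each repository would define its own policy.
DEFAULT_POLICY: Dict[str, float] = {
    "max_failed_tests": 0,
    "max_ignored_tests": 0,
    "min_coverage_percent": 80.0,
    "max_vulnerabilities": 0,
    "max_code_smells": 5,
}


def policy_violations(report: ChangeReport,
                      policy: Dict[str, float] = DEFAULT_POLICY) -> List[str]:
    """Return readable violations; an empty list means every policy is met."""
    violations = []
    if report.failed_tests > policy["max_failed_tests"]:
        violations.append(f"{report.failed_tests} failing test(s)")
    if report.ignored_tests > policy["max_ignored_tests"]:
        violations.append(f"{report.ignored_tests} ignored test(s)")
    if report.coverage_percent < policy["min_coverage_percent"]:
        violations.append(f"coverage {report.coverage_percent}% is below the minimum")
    if report.vulnerabilities > policy["max_vulnerabilities"]:
        violations.append(f"{report.vulnerabilities} known vulnerabilities")
    if report.code_smells > policy["max_code_smells"]:
        violations.append(f"{report.code_smells} code smell(s)")
    return violations


def run_gate(report: ChangeReport) -> str:
    """Stop the pipeline by raising when any policy is violated."""
    violations = policy_violations(report)
    if violations:
        raise RuntimeError("Pipeline stopped: " + "; ".join(violations))
    return "passed"
```

A clean report returns `"passed"`, while a report with failing tests or low coverage raises an error whose message could be forwarded to the contributor or the operations team.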

The value of the code is measured by the impact it has, and a positive value is generated by a positive influence of the code and application.

Writing a secure application is a broad topic. Even engineers with two to three years of experience can write buggy code.3 That is why organizations enforce security principles and code reviews in their development environments. GitLab, for example, is a very innovative company working on a hosted and self-managed DevOps toolchain. Looking at its online repository, one can easily see how strict its DevOps pipelines are. Every action that happens on its repositories must go through rigorous testing before it’s even reviewed. This careful analysis of the code (regardless of the contribution size, contributor profile, repository, and previous history) helps GitLab ensure the project is healthy at any point. This behavior leads to a successful implementation of DevOps and automation, which then leads to a better product for the market.

I have contributed to open source repositories owned by Microsoft, Google, and GitLab. Apart from a few repository-specific jobs, all the repositories had a similar flow of checks that my contributions needed to pass before they reached a final review. Regardless of whether your contribution is a major feature, a bug fix, or a simple typo correction, a DevOps cycle runs the contribution through all the necessary steps of verification and then notifies the respective owners to review the commit.

By this time, the contribution has already been checked against the code style policies and scanned for errors and bugs, security issues, and dependency and/or license issues. A report built from these checks is provided to the reviewers as a supporting “yes” or “no.” It is the job of the reviewers to further verify the changes. This cycle creates a collaborative environment where contributors and peer reviewers work closely to improve the project.

Moving away from abstract theory about secure code writing and reviewing processes, in this chapter we will discuss the ways in which you can ensure that developers are writing secure code and that every piece of code committed to the repository is tested for known vulnerabilities. Our focus is .NET Core and repositories that contain .NET Core based projects. Since .NET Core has a vast ecosystem of deployment platforms, ranging from desktops, servers, and web frameworks to gaming and mobile, we will study how we can introduce security verification for code in DevOps pipelines.

Write Less, Write Secure

Software engineers enjoy writing code for applications, and sometimes they like to write a lot of code. It is sometimes said that “good software” is well understood by humans. While that statement is true, it does not mean that code has to be written in a verbose manner. By the end of this chapter, we will explore the concept of microservices and how .NET Core can be used to develop (cloud-native4) microservices. Modern runtimes have a steep learning curve when it comes to best practices. For ASP.NET Core, many software engineers barely touch the internals of the framework as they develop the software. This means that some effort needs to be made in order to fully explore and understand the platform/framework.

When we consider the complete deployment suite offered by .NET Core, it becomes a demanding job to make sure that our products are bug free and do not contain any performance or security related issues. Take the example of a web application5 that finds the orders made by a specific user. A naïve approach would be to query the database, fetch all the records, and then process them on the server side. The upside is that all the orders are available in the cache and subsequent queries can be performed quickly, as the data has been loaded into memory. This code has a problem: our application is repeating tasks that a database engine can perform several times better. Relational databases use SQL to query the data. SQL exposes several clauses that help database developers write queries that filter or prepare the data to be presented back to users. We can improve the efficiency of our program by modifying our queries to return only the orders made by the specific user. In this case, we will pass the user (or customer) ID as a parameter.

A simple and straightforward approach is to write a SQL query and insert the user ID with one of the string-replacement methods. In C#, these can be string interpolation, string concatenation, or string format helpers. This improves the overall performance6 of the database engine and our web application. Our database does the heavy lifting of query processing and returns only the data that the application needs. Our application, in turn, can present the data to the user without having to filter it. This approach has a minor coding problem that turns into a major production bug. The problem is leaving your SQL inputs unsanitized or unescaped, and the resulting vulnerability is called SQL Injection. SQL Injection is one of many kinds of “injection” in computer science, and it is one of the harmful kinds. We can solve the problem by passing inputs as query parameters instead of splicing them into the SQL text. We will come back to this point later in this chapter.
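To make the risk concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module and an in-memory database, so it can run anywhere. The `orders` table, its rows, and the two function names are invented for illustration; the same contrast applies to string interpolation versus parameters in C#.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id TEXT, item TEXT)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, item) VALUES (?, ?)",
    [("alice", "keyboard"), ("alice", "mouse"), ("bob", "monitor")],
)


def orders_unsafe(customer_id: str):
    # Vulnerable: the input becomes part of the SQL text itself.
    query = (
        f"SELECT item FROM orders WHERE customer_id = '{customer_id}' ORDER BY id"
    )
    return [row[0] for row in conn.execute(query)]


def orders_safe(customer_id: str):
    # The value travels separately from the SQL text, so it is only ever data.
    query = "SELECT item FROM orders WHERE customer_id = ? ORDER BY id"
    return [row[0] for row in conn.execute(query, (customer_id,))]


# An attacker-controlled "customer ID" that rewrites the WHERE clause.
malicious = "nobody' OR '1'='1"

print(orders_unsafe(malicious))  # every customer's orders leak
print(orders_safe(malicious))    # no customer has this literal ID
```

With the interpolated query, the crafted input turns the filter into a condition that is always true. With the parameterized query, the same input is compared as a plain string and matches nothing.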

You must design your relational databases according to the normalization rules. In other words, keep it simple.

You should write SQL that is as readable and understandable as possible.

You should group or filter the data on single columns and avoid using multiple columns in a single clause.

You should avoid using asterisk or select-all "*" in SELECT queries and should try to write the column names for the data.

You should never concatenate the queries and always use parameters for inputs.

You can use a JOIN clause or INNER queries to return the data, and then your database can decide how to return the data. Always choose readability in SQL queries.
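Several of these points can be seen together in one short query. The sketch below again uses Python's `sqlite3` module with an in-memory database; the `customers` and `orders` tables and the `orders_for` helper are hypothetical. It names its columns instead of using `*`, joins two normalized tables, filters on a single column, and passes the input as a named parameter.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        item TEXT NOT NULL
    );
    INSERT INTO customers (id, name) VALUES (1, 'Alice'), (2, 'Bob');
    INSERT INTO orders (customer_id, item)
    VALUES (1, 'keyboard'), (2, 'monitor'), (1, 'mouse');
""")

# Explicit column names, one JOIN, one single-column filter, one parameter.
ORDERS_FOR_CUSTOMER = """
    SELECT c.name, o.item
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    WHERE c.id = :customer_id
    ORDER BY o.id
"""


def orders_for(customer_id: int):
    """Return (customer name, item) pairs for one customer."""
    return db.execute(ORDERS_FOR_CUSTOMER, {"customer_id": customer_id}).fetchall()


print(orders_for(1))
```

Keeping the query text in one named constant also makes it easier to review, because the SQL never changes at run time; only the parameter value does.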

These points are not even a summary of the best practices7 for SQL. Since databases apply to every domain that .NET Core covers, it’s very important to discuss SQL and NoSQL databases and their pitfalls. You will find databases being used in mobile apps, web apps, progressive web apps, all the way to games, machine learning apps, and microservices. Ensuring that SQL best practices are applied on your repositories can help reduce the number of bugs that might show up in the apps.

Several .NET Core object-relational mappers follow these practices to ensure security and performance efficiency. Entity Framework Core, for example, uses LINQ8 to convert C# expressions into native SQL queries that are executed by the database engine. When you write a LINQ query to fetch the number of posts in your blog, Entity Framework Core converts the call to the most suitable SQL query, taking security, efficiency, and performance into consideration.

Communication

Encryption

Consistency

Service discovery

In later chapters we will solve these problems one by one. With each solution, code complexity increases and makes it difficult to manage the software project. This leads to using service mesh components that manage these tasks for our microservice architectures.

SAST, DAST, IAST, and RASP

SAST: Static application security testing

DAST: Dynamic application security testing

IAST: Interactive application security testing

RASP: Runtime application self-protection

These approaches differ in when they are executed. You will stumble upon the static code analysis techniques the most. In this chapter, I will give static code analysis the higher priority and dynamic code analysis a secondary priority. The code analysis tools that we reviewed in the previous chapter fall in the category of static code analysis. Static code analysis does not have to be performed in a CI pipeline only. It can also run in an IDE to provide real-time analysis reports.
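To show what "static" means in practice, the following toy checker inspects source code without ever running it. It is a deliberately small sketch built on Python's standard `ast` module, not a substitute for a real analyzer; the `find_dynamic_sql` function and the sample `SOURCE` are made up for this example. It flags calls to any `.execute(...)` method whose first argument is an f-string or a concatenation.

```python
import ast

# Sample code to analyze. It is only parsed, never executed.
SOURCE = '''
def load(conn, user_id):
    conn.execute(f"SELECT item FROM orders WHERE customer_id = '{user_id}'")
    conn.execute("SELECT item FROM orders WHERE customer_id = ?", (user_id,))
    conn.execute("SELECT item FROM orders WHERE id = " + user_id)
'''


def find_dynamic_sql(source: str):
    """Return line numbers where .execute() receives SQL built at run time."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        is_execute_call = (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr == "execute"
            and len(node.args) > 0
        )
        if not is_execute_call:
            continue
        first_arg = node.args[0]
        # f-strings parse as JoinedStr; "text" + value parses as BinOp.
        if isinstance(first_arg, (ast.JoinedStr, ast.BinOp)):
            findings.append(node.lineno)
    return sorted(findings)


print(find_dynamic_sql(SOURCE))
```

The checker reports the two risky calls and stays silent about the parameterized one. Because it needs only the source text, the same check could run in an editor while the developer types or as a step in a CI pipeline.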

One important aspect to note about dynamic analysis or real-time analysis is that it works on applications that are already running in the production environment. In production, finding a bug matters less than patching it. In this scenario, RASP offers a useful feature: it can patch bugs and apply a firewall to the web application. Most cloud vendors provide a web firewall option that protects your web app from malicious requests and hacking attempts and applies a temporary patch on your website. This patch can protect your website against common bugs.

Microsoft Azure offers a Web Application Firewall, which can help block common XSS and SQL injection attacks on your web application.

Since we are focusing on .NET Core and its security and performance related concerns, we will only focus on static code analysis. However, dynamic code analysis and web firewalls are available for .NET Core as well as for other runtimes. You can check a guide about cloud technologies to learn more about those tools and products.

Developer Training

It is important for an organization to train its developers and testers per industry standards for code and security. This can differ from organization to organization, and the importance of training can vary from developer to developer. The goal is to ensure that the code developers produce is of high quality. This improves the development experience and reduces the number of review rounds and wasted build resources.

There are several platforms that provide high-quality instructor-led training resources. It is also a good approach for senior staff, such as senior developers, operations leads, and marketing managers, to provide a one-hour webinar on modern tools and technologies for junior staff. Several organizations9 implement this approach to support an educational environment.

Analyzers for Secure Code

Code analysis packages can aid software development and improve code quality. As code complexity grows, even the most experienced software developers and engineers sometimes fail to catch bugs in the code. For .NET Core environments, such tools are available as NuGet packages and as IDE extensions such as ReSharper. It is best to use these tools to notify the developers about potential code smells before the code is checked in. This happens before the DevOps pipeline runs and helps decrease infrastructure costs.

Runtime Selection and Configuration

It is also good practice to use production-like environments for the build and testing stages. This approach helps ensure that the code will work in the production environments. Development machines usually have variables and scripts set up to aid the program’s execution. These can be problematic in the production environment, and sometimes they are not available at all.

Create and build an artifact to run tests on it with a debug profile.

Create and build an artifact to run A/B testing and use Docker image’s labels to deploy each separately.

Utilize the production Docker images to run tests and verify the package is of high quality before approving a code merge.

Test the software package on different runtimes/stress environments to ensure performance does not degrade.

Docker is arguably the de facto standard when it comes to containerization and cloud-native solution development. Your teams should run tests against Docker environments too. Docker containers are also vulnerable to security and performance bugs. You should scan the images before you run your tests on them. Docker Hub provides you with good tools that you can use to run scans on your built images, and a list of known vulnerabilities in existing images, so you can avoid those images in your development environment.

Verify the Docker image is free from known vulnerabilities.

Check if the Docker image—and the code inside it—is compliant with international laws and standards.

Detect and remove any virus programs injected from the build environment or parent images.

The .NET Core and .NET Framework images are available separately based on your needs

The .NET Core SDK supports environment variables and configuration files. These files are used to store necessary information for the program to start and execute. You can create separate configuration files for each environment on which your team must run the application. Since .NET Core applications—especially ASP.NET Core—must utilize a middleware registry to start the pipeline, you can easily configure which file to read the configuration from. This lets your developers configure a local database to connect to, instead of the production database.

Never store passwords, connection strings, or sensitive information such as API keys in a configuration file.

Always use settings and values local to your development machine to avoid unnecessary exposure of keys and connection strings.

Avoid checking the configuration files into version control; create a local configuration file instead.13

Always override the default configurations with the platform-provided settings. Microsoft Azure provides App Service settings that can be used to configure the app startup.
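The rules above amount to layering: harmless defaults live in a file, and anything sensitive or environment-specific arrives from the platform at startup. The sketch below illustrates that idea in Python; the `APP_` prefix, the setting names, and the `load_settings` helper are all hypothetical. ASP.NET Core applies a similar layering when it reads `appsettings.json` and then environment variables, but this is not its implementation.

```python
import json
import os
from typing import Dict, Mapping

# Checked-in defaults: safe for version control because they hold no secrets.
DEFAULTS_JSON = '{"database_url": "sqlite:///local-dev.db", "log_level": "debug"}'


def load_settings(defaults_json: str,
                  environ: Mapping[str, str],
                  prefix: str = "APP_") -> Dict[str, str]:
    """Start from file defaults, then let prefixed environment variables win."""
    settings = json.loads(defaults_json)
    for name, value in environ.items():
        if name.startswith(prefix):
            # APP_DATABASE_URL overrides (or adds) the "database_url" setting.
            settings[name[len(prefix):].lower()] = value
    return settings


# On a developer machine, nothing is overridden unless APP_* variables are set.
settings = load_settings(DEFAULTS_JSON, os.environ)
print(sorted(settings))
```

In production, the hosting platform would supply values such as `APP_DATABASE_URL` or `APP_API_KEY`, so connection strings and keys never need to appear in the repository.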

Code Smells, Bugs, Performance Issues and Naive Errors

Finally, code smells and bugs pollute the source code repository and decrease the value of the product. There are bugs that are visible to the end users and there are bugs that are not visible to the end users. Just because a bug is not visible to the end user does not mean that it has a low priority. Think of a software issue where a bad algorithm is being used that is eating up the maximum amount of your infrastructure resources. An end user might not complain but your accounts and finance departments will.

These types of errors are commonly the most difficult to detect and remove. This is where load testing/stress testing comes into play. You can run statistical tests on the web apps and software written in .NET Core using QA and testing suites. The purpose of these QA tests is to verify the code quality and find the regions where the code takes the most time. Your engineers can then review the parts of your code that have performance issues.

Unlike logical issues, which you can verify using unit testing, you cannot reliably unit test whether a website responds to a user query in less than 200 milliseconds. Even if you time a request-response from your API, you cannot be certain that a website would perform similarly in a production environment. For such reasons, statistical testing of the code is used. Many QA tools, such as Apache JMeter, provide a feature that lets you run the test on the software and generate hypotheses that show a fail/pass scenario for your website’s performance.

These QA tests are run against a running app, and the responses are captured and logged. A request is made a couple hundred times, imitating real users on the Internet. The responses are used as data samples and the rest of the calculations are done by statistics. This helps your engineers see whether the app would function properly in high-traffic scenarios, based on statistical tests. Later in the book we will see how to use Apache JMeter to perform basic load tests on our applications, and whether we can integrate the entire process into our CI/CD pipeline.
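As a simplified stand-in for what a load-testing tool reports, the sketch below turns a few hundred recorded response times into a pass/fail answer against the 200-millisecond target mentioned earlier. It uses one simple statistic, the 95th percentile, from Python's standard `statistics` module. The sample data and function names are invented, and a real tool would use richer measurements.

```python
import statistics
from typing import Sequence


def percentile_95(latencies_ms: Sequence[float]) -> float:
    """95th percentile of the sampled response times."""
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    return statistics.quantiles(latencies_ms, n=100)[94]


def meets_target(latencies_ms: Sequence[float], limit_ms: float = 200.0) -> bool:
    """Pass when the 95th percentile stays within the limit."""
    return percentile_95(latencies_ms) <= limit_ms


# Hypothetical samples, standing in for a couple hundred recorded responses.
steady = [120.0] * 198 + [950.0] * 2     # two slow outliers
degraded = [120.0] * 170 + [950.0] * 30  # 15% of the requests are slow

print(meets_target(steady), meets_target(degraded))
```

A percentile is used instead of a single timing because one slow response should not fail the build, while a consistent slowdown should. That is the sense in which the decision is left to statistics.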

For each negative outcome, engineers need to rework the application and add a patch to fix the problem. This also means that for each bug fix, performance increase, or code improvement, your entire application has to be rebuilt, deployed, and restarted on the server. That is where microservices architectures come into play; more on that later in this chapter.

Vulnerabilities in Web Apps

The Internet is the “wild west” of computer science, where anything can go wrong. A single-threaded single-user .NET Core based desktop or console application might not be exposed to all the dangers and vulnerabilities that a web-based application might be exposed to. Different standards of the hypertext protocol bring several vulnerabilities that are difficult to tackle and sometimes even discover. Recently,14 Chinese hackers were able to hack into Google Chrome, Microsoft’s Edge,15 Apple’s Safari, Office 365, and many other software products that run on the Internet. This shows how network-based software is more vulnerable to attack than offline software.

We should talk about a few vulnerabilities that should be “must knows” for every software developer who writes software for web applications. These problems range from front-end HTML and JavaScript-based problems and vulnerabilities to back-end based session and runtime vulnerabilities. The solution for each of these problems lies in their specific domain—for front-end bugs, front-end libraries provide a patch for each problem, and the same for the back-end runtimes and frameworks.

The most common problems found in front-end apps are cross-site scripting, cross-site request forgery, and several JavaScript-based problems such as prototype pollution. You should review a complete list of vulnerabilities in JavaScript front-end libraries (Angular, React, and so on) in Snyk’s report16 and also review a list of vulnerabilities in the dependencies17 of these libraries and frameworks. Some vulnerabilities are caused by poor handling by end users, yet they can still be fixed in code. If you review the list, you will find JavaScript as a language being the root cause for most vulnerabilities. It is our responsibility to prevent these errors.

Fixing Injection and Scripting Attacks

Most vulnerabilities are caused by poorly tested software code. ASP.NET Core code requires more test checks than front-end modules such as React. Most front-end constructs fail to build if you use them inappropriately. In React, for example, if you try to pass an HTML snippet as a prop to a component, React does not insert it into the page as markup. The reason is to avoid HTML injection in the DOM. React does, however, support a special prop, dangerouslySetInnerHTML,18 which you can use to pass the HTML.

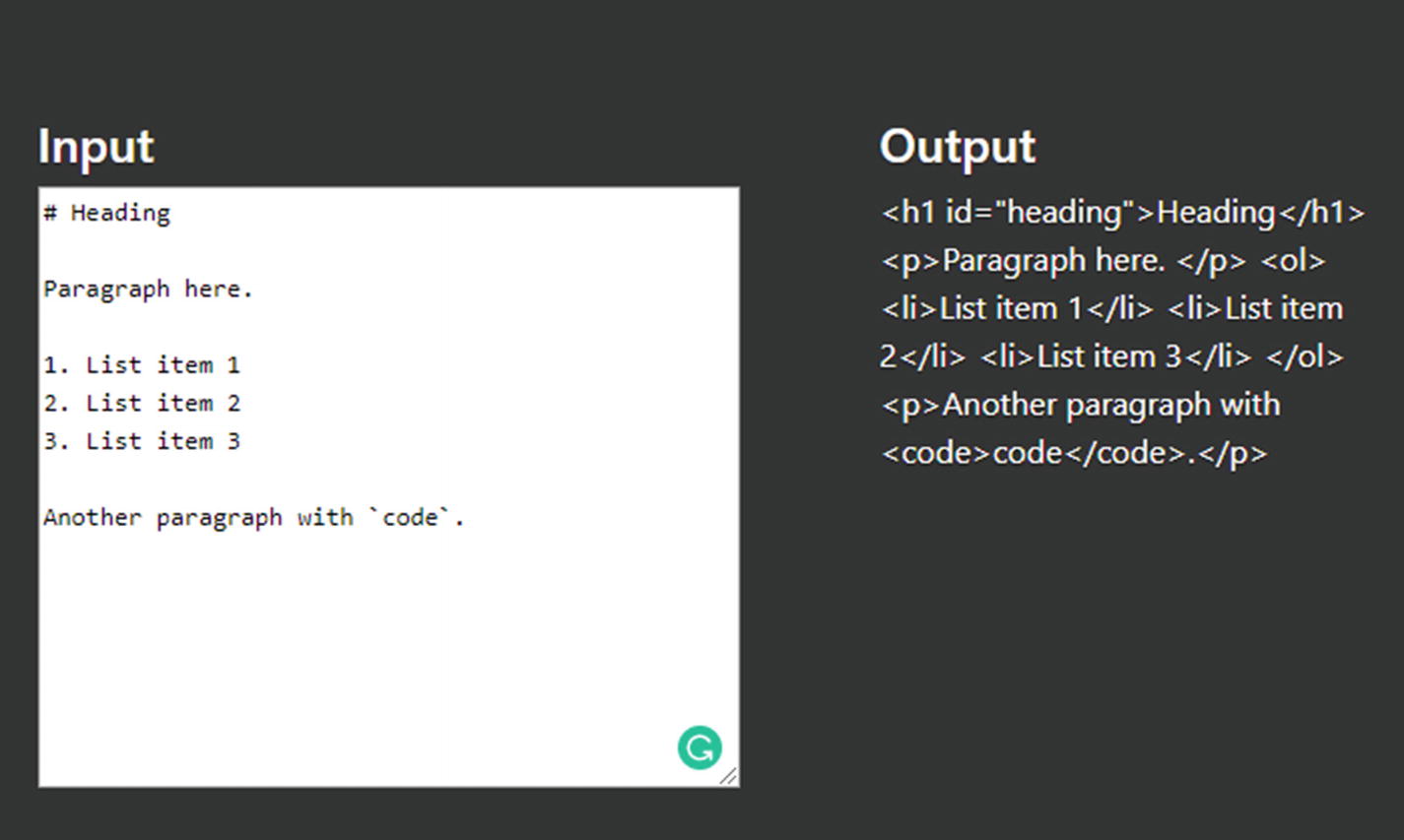

EditorComponent is a React component that exposes a Markdown editor tab.

This component is responsible for attaching a couple of event handlers that take input from the user and convert that Markdown to potential HTML. Then, in our components, we render the HTML in the DOM.

You can ignore the dependency listing and code structure, since that is not important in this example. What is important is how React handles the HTML injections.

MarkdownInputComponent exposes a textarea field that is used to input the Markdown.

MarkdownPreviewComponent exposes the paragraph element that renders the content.

Markdown content along with its HTML representation side by side

What happens here is that our Markdown content is converted to HTML, but React escapes this HTML when rendering it. So <h1> becomes &lt;h1&gt;. This prevents HTML injection in the DOM and protects the client from malicious or vulnerable script code.
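The effect of escaping can be reproduced outside React. The sketch below uses Python's standard `html.escape` function only to show the transformation; it says nothing about how React implements it internally. The `render_as_text` helper and the sample markup are made up for this example.

```python
import html


def render_as_text(markup: str) -> str:
    """Escape markup so a browser shows it as text instead of parsing it."""
    return html.escape(markup)


# HTML produced from Markdown, including a script a user slipped in.
converted = "<h1>Release notes</h1><script>alert('x')</script>"
safe_output = render_as_text(converted)

print(safe_output)
```

After escaping, the output contains no literal `<` or `>` characters, so the browser has nothing to interpret as an element or a script. The original markup can still be recovered with `html.unescape`, which shows that no content was lost.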

But if you need to inject the Markdown-rendered HTML, you might need to skip this check. In such cases, React allows you to use the prop we spoke about previously and render the HTML directly through the element’s innerHTML.

Markdown rendered as HTML in the application

This could still lead to problems, as Markdown does not prevent raw HTML from being embedded in a Markdown document. You can restrict the HTML content, or run tests on it, before rendering it in the DOM.
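One way to restrict the HTML is an allowlist: keep a small set of harmless tags, drop every attribute, and discard anything else. The sketch below shows the idea with Python's standard `html.parser` module. The `ALLOWED_TAGS` set and the `sanitize` function are hypothetical, and a hand-written filter like this is for illustration only; production code should rely on a well-tested sanitizing library.

```python
from html import escape
from html.parser import HTMLParser

# Hypothetical allowlist of tags that Markdown output is expected to contain.
ALLOWED_TAGS = {"h1", "h2", "p", "em", "strong", "ul", "ol", "li", "code"}


class AllowlistSanitizer(HTMLParser):
    """Rebuild markup from allowed tags only, with all attributes removed."""

    def __init__(self) -> None:
        super().__init__(convert_charrefs=True)
        self.parts = []
        self._skip_depth = 0  # greater than 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag in ALLOWED_TAGS and not self._skip_depth:
            self.parts.append(f"<{tag}>")  # attributes are dropped on purpose

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self._skip_depth = max(0, self._skip_depth - 1)
        elif tag in ALLOWED_TAGS and not self._skip_depth:
            self.parts.append(f"</{tag}>")

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(escape(data))  # text is always re-escaped


def sanitize(markup: str) -> str:
    parser = AllowlistSanitizer()
    parser.feed(markup)
    parser.close()
    return "".join(parser.parts)


print(sanitize('<h1 onclick="evil()">Title</h1><script>alert(1)</script>'))
```

The heading survives without its `onclick` handler, and the script element disappears together with its contents. Only output that has been filtered this way would be a reasonable candidate for direct rendering.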

React ensures that you know what you are doing, and that you and only you are responsible for the problems caused by directly injecting HTML into the DOM. That is why the prop name starts with “dangerously” and you need to pass a special object with __html as a field name. You can avoid having to do this manually by using community-driven packages and libraries. For example, you can add the Remarkable NPM package to your projects, or you can use the react-mde NPM package to use their React components, which are well tested against issues that you might have to test yourself.

Injection attacks are not limited to front-end libraries; several injection problems occur on the back end too. SQL Injection is one such problem. In an ASP.NET Core web application (and this applies to other .NET Core platforms such as mobile, cloud-native, games, and ML.NET) you should consider using an ORM that performs your SQL queries. Entity Framework Core is the most widely used ORM for .NET Core apps.

You can use C# language features such as LINQ to query the data. Most C# features, such as asynchronous development, are supported and provide excellent performance in high-demanding environments.

Scripting Problems: XSS, Token Forgery, and Session Hijacks

NPM also suggests a possible solution to these problems and allows you to fix them using a single command. Note that NPM does not guarantee a solution to the vulnerability itself, but it will attempt to move to a version of the package that does not contain the vulnerability. You can execute npm audit fix and NPM will try to patch it if it can. In a larger project you might run into issues when you audit a complete solution. If your teams utilize a central build system, it will face more problems when it comes to fixing and handling build warnings and errors.

Automated Tests

We will explore automated tests and their benefits for DevOps in the later parts of this book. For the time being, we can list automated testing suites as a way of preventing bugs in the software.

Unit tests, integration tests, and load testing suites enable you to verify whether your application fulfills a service-level objective. Organizations can define their own test suites to verify software stability. Build pipelines can run the known tests on the packages. It is your responsibility to add tests as they become necessary. One such example is adding tests for newly discovered errors and bugs in the software code.
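As an illustration of adding a test for a newly discovered bug, the sketch below uses Python's standard `unittest` module. The `parse_quantity` function and the bug it guards against are invented for this example. The second test records a defect so that the build pipeline catches it if it ever returns.

```python
import unittest


def parse_quantity(raw: str) -> int:
    """Parse an order quantity from user input; values below 1 are rejected."""
    value = int(raw.strip())
    if value < 1:
        raise ValueError("quantity must be at least 1")
    return value


class ParseQuantityTests(unittest.TestCase):
    def test_accepts_surrounding_whitespace(self):
        self.assertEqual(parse_quantity(" 3 "), 3)

    def test_rejects_negative_quantity(self):
        # Regression test for a hypothetical bug report: "-2" was once accepted.
        with self.assertRaises(ValueError):
            parse_quantity("-2")
```

Once a test like this is in the repository, every later change is checked against it automatically, which is how a single bug report turns into a lasting safeguard.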

As shown in the previous chapter, we can use static-code analysis tools to find the tests necessary in future packages. You can use dynamic code analysis and web firewalls to detect and monitor bugs and add them to your team’s list of work. Your engineers can then select an item from this list and work on the test cases.

I will revisit automated tests in a later part of this book; for now, we will study how to separate the application’s parts based on the domain and explore the benefits.

Microservices: Separation of Concerns

Software development practices and software testing approaches are different in each module for .NET Core applications. You cannot run tests on an ML.NET project the way you might test a Xamarin.Forms mobile application. A test written for a web application is different in terms of testing strategies as well as the libraries used. Thus, keeping everything in a single archive or package makes the code base and repository bloated with scripts. Most of the scripts that are generated for a web-based application’s test are run less than half the time. In your personal hobby application, you might change the front-end design, color, or typography every week to keep the content fresh. But you will change the back-end of your web app only as needed, when you are working on bug fixes or feature improvements. In a typical enterprise application, this would be different, as the team needs to work on feature improvements, performance, and security related issues every day. This increases the execution of test suites on the web application, but it might decrease it in a different application.

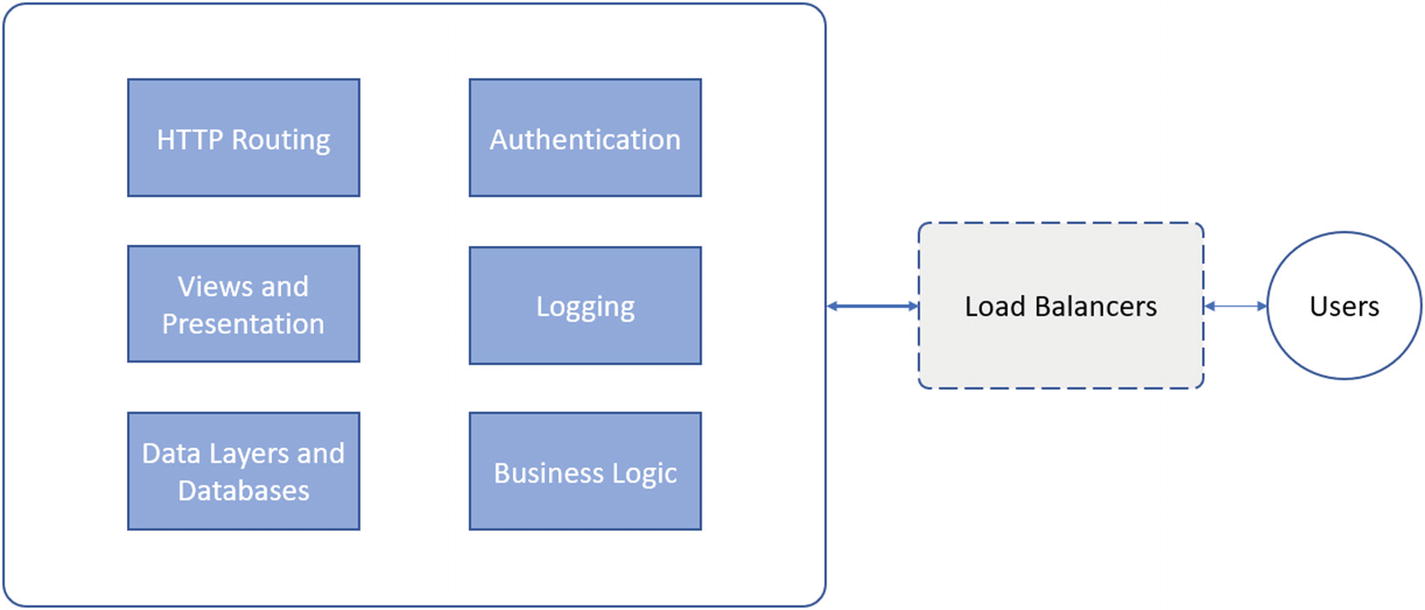

In a typical application, users connect directly to a monolith application, sometimes deployed behind a load balancer, and that application is completely responsible for and contains the entire solution

As seen in Figure 3-4, a monolith application contains the code for every aspect of the solution, from the business logic to logging and views. If there is a crash in any one of the components, the entire application crashes and impacts all the active users. Concepts and domains such as cloud-native Web APIs and services attempt to solve this problem.

MVC controllers

Web API controllers

Views, or React, Angular, and Vue based front-end libraries

Models and data layers: DbContexts in Entity Framework Core

Logging services

Cache and session stores

Static files for CSS, JavaScript, and other media

You can easily distribute these files across multiple projects and manage them accordingly. Load balancers are more optimized to work on the static files; thus, you can extract the files in a separate project and run them behind a load balancer or proxy server. Nginx is a valid candidate in this category. Similarly, you should plan to separate the controllers from the views and data.

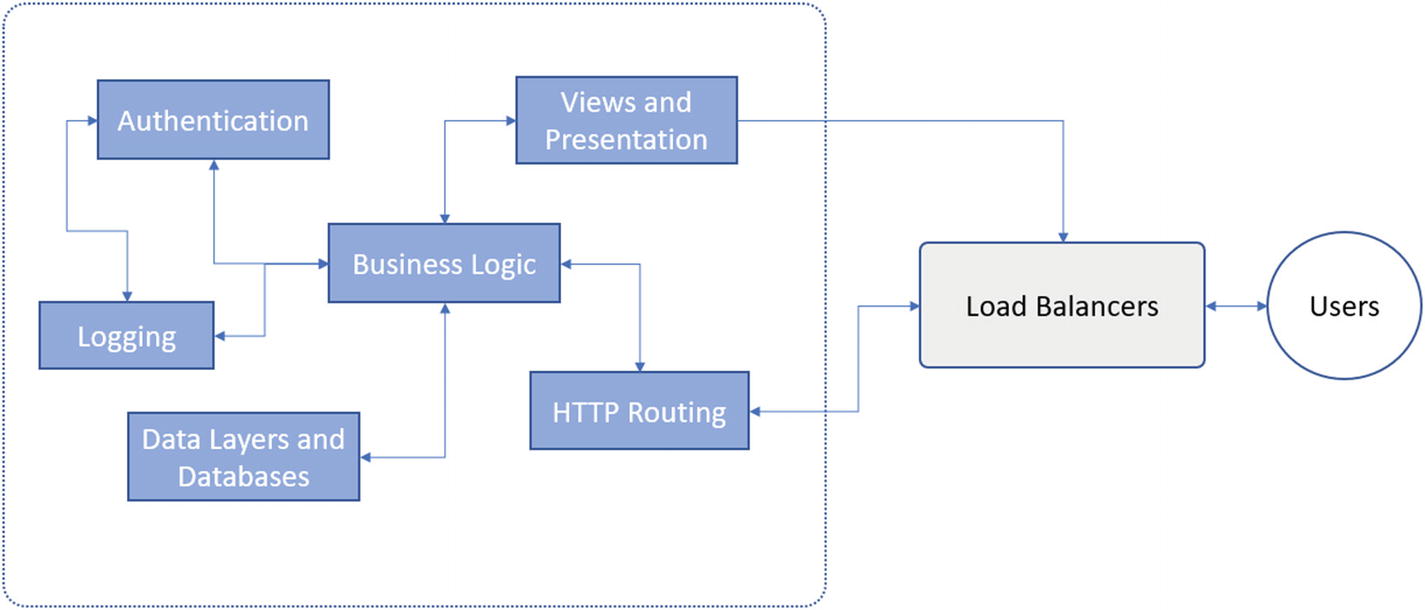

Different services can be deployed separately as microservices and the complete solution can be broken down as each microservice. In this configuration a load balancer is necessary, because your customers will not know the hostname or the port that a service would be listening on

The microservices term is applied to this architecture in which each service is developed, tested, deployed, managed, and improved separately. This decreases the overall complexity of a project and enables the developers to focus on the project itself and the improvement of an individual service. Every service is developed separately, which removes the CI/CD burden of every other service. Each service has a separate lifecycle and a CI/CD pipeline, disconnected from the other CI/CDs. Modifying the code of one service will not impact the other services.

As shown in Figure 3-5, separation of concerns enables the developers to work on products separately. Each service can communicate with a different service (or all services hosted). If a single service is down, your entire cluster does not crash. Your customers can continue to use the application if the logging component crashes. Logging components and their status will be updated on the operations team’s dashboard and they can fix the problems.
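The logging example can be sketched in a few lines. The code below is an illustration in Python with invented names (`ServiceUnavailable`, `place_order`, and the two logger functions); in a real system the logger would be a network call to a separate service.

```python
from typing import Callable, Dict


class ServiceUnavailable(Exception):
    """Raised when a downstream service cannot be reached."""


def broken_logger(message: str) -> None:
    # Simulates a logging microservice that has crashed.
    raise ServiceUnavailable("logging service is down")


def working_logger(message: str) -> None:
    print(f"[log] {message}")


def place_order(item: str, log: Callable[[str], None]) -> Dict[str, object]:
    """Accept the order even when the logging service fails."""
    order = {"item": item, "status": "accepted", "logged": True}
    try:
        log(f"order accepted: {item}")
    except ServiceUnavailable:
        # The failure is noted for the operations team, not shown to the customer.
        order["logged"] = False
    return order


print(place_order("keyboard", working_logger))
print(place_order("keyboard", broken_logger))
```

In both calls the order is accepted. Only the `logged` flag differs, which is the kind of signal an operations dashboard could pick up while customers keep using the application.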

I drew the diagram in Figure 3-5 with one point in mind: it should explain the chaos that enters the architecture. If you compare the figure of a monolith to a microservice, you can easily find the monolith to be simpler and better designed. In the case of microservices, chaos is added as a by-product. In return, microservices offer greater control over scalability and performance.

N-Tier Products with Hidden Databases

If you design an application using legacy architectures, you can still secure your application and improve performance. Regardless of your hosting and deployment platform, you can protect your production resources from external access. On cloud platforms like Microsoft Azure, AWS, or GCP, you can use internal virtual networks to restrict access to individual resources.

N-Tier is sometimes misunderstood, and there is no perfect way to put or explain what an N-Tier development approach is. N-Tier—or multitier application design approach—means that the data, the business logic, and the presentation layers of your application are all separated. In a cloud-first environment, we can apply this concept to every resource that is being used—from web hosting to databases to logging and monitoring services, all the way to user identity management, caching and backing up controls.

A cloud-based application can be hosted as a simple web application. A web application is then delegated with access to other resources inside the deployment platform. Every cloud platform offers networking products that can help organizations create an isolated infrastructure in the cloud. The tier-based separation system is a legacy model of development and deployment of the products.

Let’s look at an example of how cloud-based resources can be connected to each other in a virtual network and be disconnected from external Internet access.

I demonstrate the scenario using Microsoft Azure. You are free to use Alibaba Cloud, Google Cloud Platform, AWS, or your favorite cloud platform. The concepts, services, features, and products have different names by different cloud vendors, but they offer similar services. I recommend that you use Microsoft Azure if you want to follow along with the tutorial.

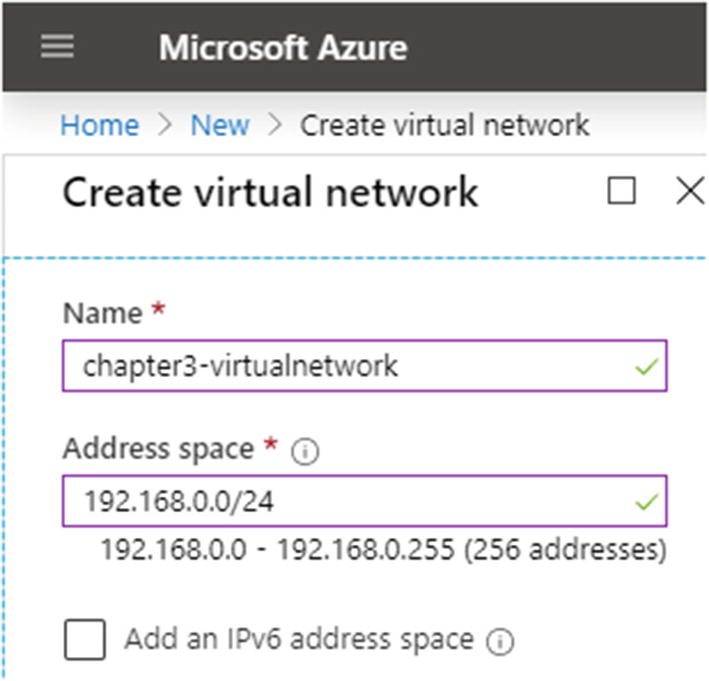

A virtual network uses the address space to allocate the resources in your subscription. You should discuss this with your network administrator to understand which CIDR to use

You can then add resources to this network or use it as a central hub for monitoring traffic inside your corporate network.

Corporate Applications

One application of virtual networks is within the corporate networks and corporate applications. A virtual network provides access to services deployed in it. Internet-based access is not allowed. Corporate applications can utilize virtual private networks, or VPN for short, to access the services deployed in the virtual networks.

This serves as a security layer on top of your software packages. You can deploy the applications on the cloud within a virtual network. Say you have a web application that provides a static HTML page to your customers. Your resources within a virtual private network can be utilized by the web page. Your customers access the website (static HTML or a framework-based web application).

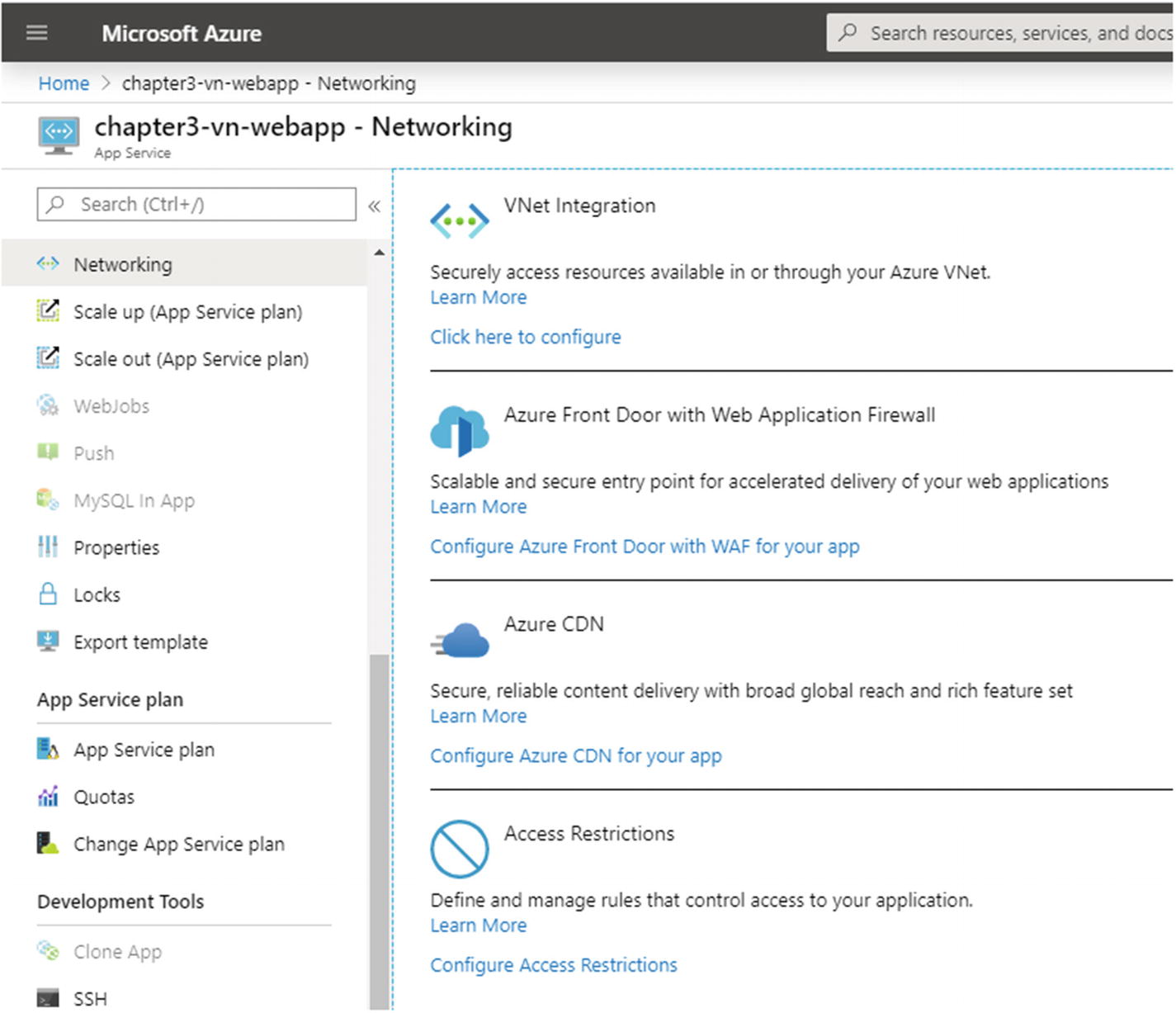

The Azure Portal showing the Networking tab for the web application, with the settings and features that can help you improve the site’s performance and security

A private virtual network to hide resources in plain-cloud.

A web application firewall to secure your web applications from being hacked or from malicious attempts.

CDN support to replicate your static resources, such as style sheets, JavaScript code, HTML, and media resources. This improves the overall performance of a static website.

A rule-based network control to allow or deny access to the website.

Network feature of virtual network integration configuration in Azure Portal. I am selecting an already created virtual network and the subnet

Virtual network integration showing the number of allowed addresses available in the subnet and the total virtual network address space available

You can modify the virtual network settings to add more resources to the network. Remember that each resource must consume a separate IP address to be made available.

In a cloud environment, the physical resources do not count, and virtual resources can be created and grouped using online resources. For example, in this scenario where you are limited to eight addresses in the subnet, you can create a load balancer resource in the virtual network and add any number of resources to that load balancer. Similarly, you can also connect your virtual network to other networks using virtual private network (VPN) services.

You can also use other products and services of cloud platforms such as identity services. The benefit of identity services is that your .NET Core applications no longer need to manage the user accounts and profiles on their own databases.

Increasing Scalability

As previously seen in Chapter 2, .NET Core enables developers to create user identity databases inside the application. For a starter or a proof-of-concept application, it is a good practice. As your users grow, you should separate the database and application code. This increases the overall scalability of your applications. An application that is hardcoded with databases and contains the user or session information in the memory is more likely to fail.

ASP.NET has been using in-proc session management for users since its early days. The design requirement for an N-Tier app demands the separation of different layers. We can architect the application in a microservice approach and provide different endpoints for services. Once our application has been separated from the hardcoded resources, we can better scale the applications. Several database engines offer this kind of data synchronization support. In my own experience, I have used the Redis cache database19 to move session management out of process.

A cloud-native application often utilizes Docker or Kubernetes engines to orchestrate the web applications. If you have ever worked with Kubernetes, you must be aware of the difficulties that a DevOps engineer might face while working with Stateful20 deployments. If you are using a cloud platform such as Azure to deploy your databases and other resources, you can use a stateless deployment on Kubernetes and have your work done. Your web resources are managed for scalability and availability by the cloud, and your web app is managed by Kubernetes.
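One way to move the session state out of the web process is sketched below. It assumes an ASP.NET Core 3.x style Startup class and the Microsoft.Extensions.Caching.StackExchangeRedis NuGet package. The Redis address, the instance name, and the timeout are placeholder values for illustration, not recommendations.

```csharp
using System;
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        // Register Redis as the distributed cache. The session middleware
        // stores its data in whichever IDistributedCache is registered.
        services.AddStackExchangeRedisCache(options =>
        {
            options.Configuration = "localhost:6379"; // assumed Redis endpoint
            options.InstanceName = "SampleApp:";      // assumed key prefix
        });

        services.AddSession(options =>
        {
            options.IdleTimeout = TimeSpan.FromMinutes(20); // illustrative value
            options.Cookie.HttpOnly = true;
            options.Cookie.IsEssential = true;
        });

        services.AddControllersWithViews();
    }

    public void Configure(IApplicationBuilder app)
    {
        app.UseRouting();
        app.UseSession(); // must run before the endpoints that read the session
        app.UseEndpoints(endpoints => endpoints.MapDefaultControllerRoute());
    }
}
```

With the session data held in Redis, any instance of the web application can serve any request, which is what allows the application to be scaled out.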

If you are provisioning the services yourself, it’s better to convert your applications to be stateless.

Communication in Services

Message encoding and transmission

Authentication and authorization

Message delivery reports and retry attempts

Remote procedures and services

For .NET Framework, Microsoft introduced many network services and frameworks. Windows Communication Foundation (also known as WCF) was one of the initial frameworks used to develop online services. If you are using or have been using WCF, you can easily migrate your services to modern web services development frameworks. One major problem with WCF is that it is still a monolith approach to the development of services. Most of the services that you develop provide best-in-class authentication, performance, caching, and distribution strategies. Another major problem with WCF is that it is strictly tied to the .NET Framework. To consume the services, you need to create a client that is compliant with the .NET Framework and its requirements for a client. Most of the clients in today’s world are low-powered devices such as IoT, handheld devices like Android and iOS devices, and so on. WCF does not work in these scenarios, even with Xamarin as a platform of development for mobile applications.

WCF offers several benefits on top of these, the first one being a framework with decades of good performance and efficient web service development and deployment. WCF offers bidirectional communication between the client and server. In an application that requires real-time messaging, WCF can offer duplex options to provide native performance to send the messages.

The .NET Core framework offers more than what WCF can offer: extensibility. .NET Core has the complete suite of Microsoft-developed protocols and community-driven frameworks and runtimes.

TCP

TCP-based communication is provided and supported out of the box for several apps written in .NET Core. The System.Net namespace, supported by the .NET Core framework, offers a vast variety of objects that help write network-oriented apps. The TCP stack for networking is old but provides a reliable method of communication between multiple resources, with guaranteed, ordered delivery.

You, as a developer, do not need to test the validity of your apps to run on the TCP stack if you are using .NET Core provided TCP/UDP protocols. TCP is also the simplest method of communication in applications.
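A minimal sketch of TCP communication with the System.Net types follows. It starts a listener on the loopback interface, sends one message, and reads the echoed reply. The TcpEcho class and its RoundTripAsync method are hypothetical names, and the sketch assumes a short, non-empty message that fits in a single read on the server side.

```csharp
using System;
using System.Net;
using System.Net.Sockets;
using System.Text;
using System.Threading.Tasks;

public static class TcpEcho
{
    public static async Task<string> RoundTripAsync(string message)
    {
        if (string.IsNullOrEmpty(message))
        {
            throw new ArgumentException("Message must not be empty.", nameof(message));
        }

        // Port 0 asks the operating system for any free port.
        var listener = new TcpListener(IPAddress.Loopback, 0);
        listener.Start();
        int port = ((IPEndPoint)listener.LocalEndpoint).Port;

        // Server side: accept one client and echo back what it sent.
        Task serverTask = Task.Run(async () =>
        {
            using (TcpClient peer = await listener.AcceptTcpClientAsync())
            {
                NetworkStream stream = peer.GetStream();
                byte[] buffer = new byte[1024];
                int read = await stream.ReadAsync(buffer, 0, buffer.Length);
                await stream.WriteAsync(buffer, 0, read);
            }
        });

        // Client side: connect, send the message, and read the echo.
        using (var client = new TcpClient())
        {
            await client.ConnectAsync(IPAddress.Loopback, port);
            NetworkStream stream = client.GetStream();
            byte[] payload = Encoding.UTF8.GetBytes(message);
            await stream.WriteAsync(payload, 0, payload.Length);

            byte[] reply = new byte[payload.Length];
            int total = 0;
            while (total < reply.Length)
            {
                int n = await stream.ReadAsync(reply, total, reply.Length - total);
                if (n == 0) break; // the server closed the connection
                total += n;
            }

            await serverTask;
            listener.Stop();
            return Encoding.UTF8.GetString(reply, 0, total);
        }
    }
}
```

Note that nothing in this exchange is encrypted. The bytes travel as they are, which is why the later sections on TLS matter for anything sensitive.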

A common alternative to TCP is a named pipe. A named pipe is a UNIX concept that allows a program to communicate with another program using a pipe.21 The benefit of using a pipe is that it is available on all UNIX and Linux platforms. There is an extra bit of security to pipes as well. A program can access the pipes that it has created, as a file descriptor. Only the program that created the pipe can forward the file descriptor (the FD) to another program for communication. External processes cannot access the FD of the program’s pipe. A TCP based communication uses a hostname and the port to communicate, which can be sniffed. Note that the FD of a pipe can also be exploited in several ways, but that is beyond the scope of this book.

A TCP programming sample can be found on my GitHub profile at https://github.com/afzaal-ahmad-zeeshan/tcpserver-dotnetcore. You can fork the project and run it through the code analysis tools as an exercise.

HTTP/2, gRPC, and Beyond

Many modern buzzwords are being introduced to support the modern traffic load and performance requirements. HTTP/2, gRPC, SignalR, and many other technologies have been introduced in the .NET Core runtime. Microsoft introduced support for HTTP/2 in their Edge browser, and every other web browser company has done it in the past or is doing so right now. The problem with these is that not everybody has adopted the frameworks. HTTP/2 improves on HTTP/1.1 by multiplexing many requests and responses over a single connection to the server.

The dotnet new grpc command creates a new ASP.NET Core application with gRPC support. gRPC uses Protocol Buffers22 (Protobuf) to generate services that provide strongly typed responses. When we combine these solutions, the result becomes cross-platform in nature and offers microservices out of the box. gRPC is also proposed as an alternative to plain HTTP-based APIs for better performance and lower overhead.

The problem with gRPC is the same as with WCF: the clients need to be compliant with the framework.

It is interesting to note that this problem spans across the basic networking model we use in computing. HTTP web servers require HTTP-based clients and TCP servers require a TCP handshake between servers and clients. We do not feel so different about HTTP and TCP, because they are a vital part of our operating systems and runtimes. You can use HTTP clients and SDKs on every platform. WCF, HTTP/2, gRPC, and similar runtimes and SDKs are not common platforms of choice, and thus it seems different when we talk about native support of a communication channel.

For the teams that use .NET Core for their development, gRPC does not pose any problem. You can develop gRPC clients using .NET Core (I will show one such example later) and then deploy the solutions on any platform you want. The platforms that can be targeted include basic web applications, console programs, mobile apps (using Xamarin), microservices, and cloud-native applications. They all are possible candidates for peer-to-peer or remote procedure operations. The primary difference between gRPC and WCF is that WCF is based on the .NET Framework, which is available on the Windows operating system only.

On the other hand, gRPC clients can be developed using .NET Core, which targets the .NET Standard. This enables gRPC to be used across devices, platforms, and screens.

One common point to remember is that gRPC relies on TLS to encrypt the data. gRPC itself has no layer that adds security, so without TLS the message travels on the wire unprotected. It is recommended to always use TLS and SSL certificates to verify the identity of the endpoints: the server and the clients. A single certificate can improve the overall experience for the users. We can also swap the components of the gRPC layers, such as HTTP/2, to improve performance. One such implementation is the addition of the QUIC protocol to the suite. In this case, we apply the TLS and SSL encryptions but use the QUIC protocol to send and receive the messages. The binary messages transferred by gRPC represent the states of the messages. A web application must refrain from sending sensitive information on the network if the channel is not secured. Most applications can utilize a VPN to secure the communication when using an SSL certificate is not possible.

You can keep things simple and encrypt the data before sending it if your application cannot be secured behind an SSL certificate. You can use the framework’s security libraries, such as the ones offered by the .NET Core framework.
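As a rough sketch of encrypting a message before it leaves the application, the following uses the AesGcm type from System.Security.Cryptography, which is available from .NET Core 3.0 onward. AES-GCM is my choice for this illustration; the text above does not prescribe an algorithm. The PayloadProtector class and the nonce, tag, ciphertext layout are assumptions, and distributing the key safely to both parties is a separate problem that this sketch does not address. On .NET 8 and later, the constructor overload that also takes the tag size is preferred over the single-argument one used here.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class PayloadProtector
{
    private const int NonceSize = 12; // 96-bit nonce, the size AES-GCM expects
    private const int TagSize = 16;   // 128-bit authentication tag

    // Encrypts the message and returns nonce | tag | ciphertext.
    // The key must be 16, 24, or 32 bytes long.
    public static byte[] Encrypt(byte[] key, string message)
    {
        byte[] plaintext = Encoding.UTF8.GetBytes(message);
        byte[] nonce = new byte[NonceSize];
        RandomNumberGenerator.Fill(nonce); // a fresh nonce for every message
        byte[] ciphertext = new byte[plaintext.Length];
        byte[] tag = new byte[TagSize];

        using (var aes = new AesGcm(key))
        {
            aes.Encrypt(nonce, plaintext, ciphertext, tag);
        }

        byte[] output = new byte[NonceSize + TagSize + ciphertext.Length];
        Buffer.BlockCopy(nonce, 0, output, 0, NonceSize);
        Buffer.BlockCopy(tag, 0, output, NonceSize, TagSize);
        Buffer.BlockCopy(ciphertext, 0, output, NonceSize + TagSize, ciphertext.Length);
        return output;
    }

    // Reverses Encrypt. Throws a CryptographicException if the payload
    // was modified in transit or the key is wrong.
    public static string Decrypt(byte[] key, byte[] payload)
    {
        byte[] nonce = new byte[NonceSize];
        byte[] tag = new byte[TagSize];
        byte[] ciphertext = new byte[payload.Length - NonceSize - TagSize];
        Buffer.BlockCopy(payload, 0, nonce, 0, NonceSize);
        Buffer.BlockCopy(payload, NonceSize, tag, 0, TagSize);
        Buffer.BlockCopy(payload, NonceSize + TagSize, ciphertext, 0, ciphertext.Length);

        byte[] plaintext = new byte[ciphertext.Length];
        using (var aes = new AesGcm(key))
        {
            aes.Decrypt(nonce, ciphertext, tag, plaintext);
        }
        return Encoding.UTF8.GetString(plaintext);
    }
}
```

The receiver calls PayloadProtector.Decrypt with the same key. Because GCM authenticates the data, a tampered payload fails to decrypt instead of silently yielding garbage.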

gRPC Sample

This defines the complete contract of a request and response. You can see that we are configuring the C# client namespace here as well. A service is defined as Greeter and has a method called SayHello accepting HelloRequest and returning HelloReply. This enables gRPC to be simple in definition. You will use a proto-compiler to compile the service to native programming languages such as Java, C#, and C++. For .NET Core applications, the build tools and compilers are available through NuGet package managers.

The .NET Core CLI will automatically build everything and run the project for you. The security is added because gRPC requires23 you to use HTTPS for traffic and communication.

If you are new to development, then you can use the .NET Core CLI (the dotnet dev-certs https --trust command) to create a self-signed development certificate and add it to your system.

This will create a listing of developer certificates in the trusted certificates registry on your machine. This will prevent your applications from crashing (due to certificate failures) or your browsers from showing an error each time you try to access the resource in development mode.

Website running gRPC shows an error message telling the service can only be consumed from a gRPC client

This shows that our application connects to the gRPC server and communicates properly.

Using Secure Cryptographic Methods

When working with secure systems or writing custom code for data security, you should consider using framework-provided cryptographic types and functions. This ranges from the basic data encryption/decryption to password hashing and storage.

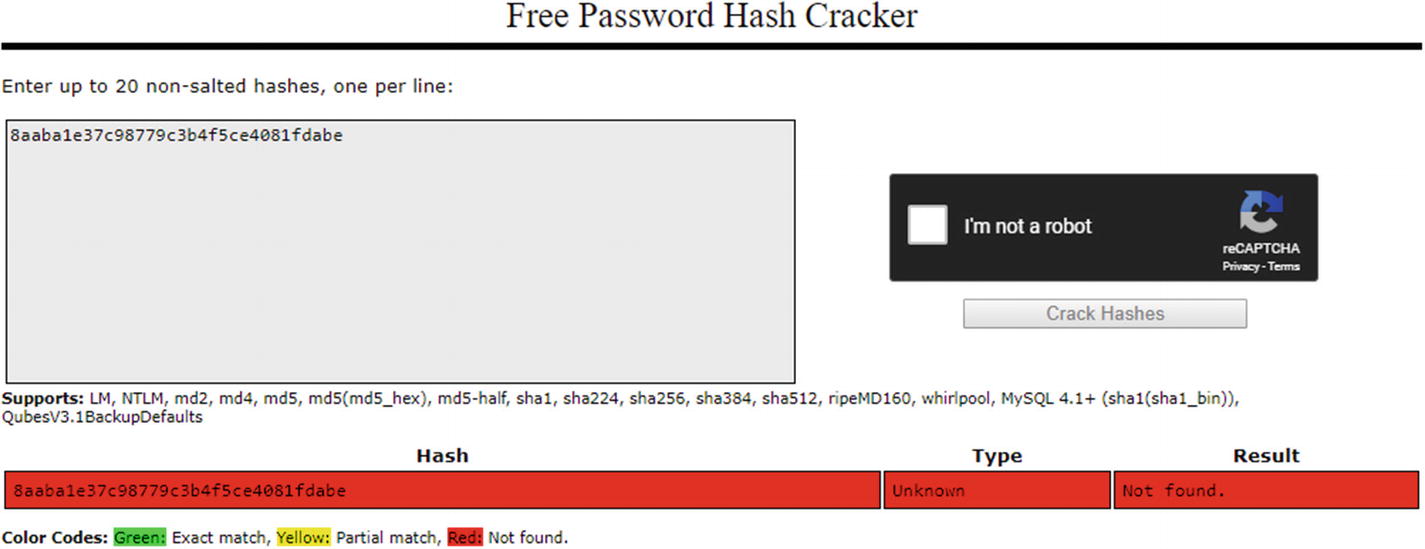

You should never write your own code to encrypt or decrypt the data. That is the first rule in cryptography. Frameworks and runtime providers have invested years of research and study in the authoring of a cryptographic library. Microsoft has done extensive research25 for their cryptographic classes and the functions exposed by each class. This ensures that apps running on the .NET Framework and using the types and methods exposed by the System.Security.Cryptography namespace are secure and developers can trust the types. In a homegrown implementation, the key sizes and patterns can be deduced by a hacker and can lead to exploitation of user data. Using old and breakable cryptographic hashing functions is like storing the data in plain-text. MD5 is known to have several rainbow tables that can expose users’ sensitive information.

The crackstation website showcasing a cracked hash of MD5 type

A strong password is safe when it comes to rainbow table attacks. Try a-veryStrongP@ssw0rd!!! for example. This will not be found in the rainbow tables, at least at the time of authoring this book, as the tables consist of precomputed hashes paired with their plain-text passwords. You cannot expect every user on your system to adhere to these standards unless you enforce these policies in your password standards. There is also a risk of password hash collision.

The crackstation.net website failed to decipher an MD5 of a strong password

You will notice that there is a mention of “salt” in the web page. Salting is the process of adding arbitrary random noise to the input. So, your input p@ssw0rd is modified before it is hashed and stored in the database. This increases the strength of the password and helps prevent collision between similar passwords. If your database with user information is exposed, an attacker might try to apply a rainbow table attack on the values. Two identical values in the database would suggest the same password for more than one user. The addition of salt prevents this. The salt has to be random in nature. The typical random number generators are not secure for the password-salting process. The Random class in the System namespace is predictable and can be exploited. You should consider using any class that extends RandomNumberGenerator, such as RNGCryptoServiceProvider. These types are designed to produce cryptographically strong random values. You can use their values as salt and hash your passwords to prevent attackers from recovering the plain-text passwords from the stored hashes.
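The salting process described above can be sketched as follows. The salt comes from RandomNumberGenerator, as recommended in the text. For the hashing step I use PBKDF2 through Rfc2898DeriveBytes, which is my own choice for the illustration and not something the text prescribes. The PasswordHasher class, the sizes, and the iteration count are illustrative assumptions. The overload taking a HashAlgorithmName requires .NET Core 2.0 or later, and FixedTimeEquals requires .NET Core 2.1 or later.

```csharp
using System;
using System.Security.Cryptography;

public static class PasswordHasher
{
    private const int SaltSize = 16;        // bytes of random salt per password
    private const int HashSize = 32;        // bytes of derived hash to store
    private const int Iterations = 100_000; // illustrative work factor

    // Generates a fresh random salt and derives a hash from the password.
    // Store both values; the salt is not a secret.
    public static (byte[] Salt, byte[] Hash) HashPassword(string password)
    {
        byte[] salt = new byte[SaltSize];
        using (RandomNumberGenerator rng = RandomNumberGenerator.Create())
        {
            rng.GetBytes(salt);
        }
        return (salt, Derive(password, salt));
    }

    // Recomputes the hash with the stored salt and compares the result
    // in constant time to avoid leaking information through timing.
    public static bool Verify(string password, byte[] salt, byte[] expectedHash)
    {
        byte[] actual = Derive(password, salt);
        return CryptographicOperations.FixedTimeEquals(actual, expectedHash);
    }

    private static byte[] Derive(string password, byte[] salt)
    {
        using (var kdf = new Rfc2898DeriveBytes(
            password, salt, Iterations, HashAlgorithmName.SHA256))
        {
            return kdf.GetBytes(HashSize);
        }
    }
}
```

Because every call to HashPassword draws a new salt, two users with the same password end up with different stored hashes, which is the property the paragraph above describes.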

MD5 and SHA1 for File Hashes

You can use MD5 and SHA1 hashing26 functions to verify the authenticity of the files in your filesystem. Although I would prohibit the use of MD5 or SHA1 for password hashing (as discussed in the previous section, MD5 and SHA1 have known rainbow tables and attacks, respectively, and their hashes can be mapped back to the plain-text passwords), it is common to use MD5 or SHA1 for file hashing.

You might have experienced on a download site that a special hash (hexadecimal string) is provided along with the download file buttons. These strings are used to verify the authenticity of the file. It might be that a file was corrupted on the server, on the network, or on your own machine. This text helps you verify that the file you downloaded and are going to install on your machine is authentic. Several open source projects use this scheme to ensure their customers know that the file is not modified.

You can use this approach to prevent any false claims from your users against you, if their system is compromised. If this hash is different for the executables, then you know the system has been modified outside your control.

The MD5 hash is smaller, as it uses a 128-bit hash size. There is little benefit to increasing the hash size for basic filesystem management, although as your files grow in number and size, it would be useful to use SHA1 or SHA2 family variants. This can help you in your DevOps cycles as you maintain and manage the releases of your software product.
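Verifying a downloaded file against a published hash can be sketched as follows. The FileHashVerifier class is a hypothetical helper. The Matches method uses SHA-256 from the SHA2 family, while ComputeHex accepts any HashAlgorithm, so MD5 or SHA1 can be passed in when that is what the publisher provides.

```csharp
using System;
using System.IO;
using System.Security.Cryptography;

public static class FileHashVerifier
{
    // Hashes the file with the given algorithm and returns the digest
    // as a lowercase hexadecimal string.
    public static string ComputeHex(string path, HashAlgorithm algorithm)
    {
        using (FileStream stream = File.OpenRead(path))
        {
            byte[] digest = algorithm.ComputeHash(stream);
            return BitConverter.ToString(digest).Replace("-", "").ToLowerInvariant();
        }
    }

    // Compares the file's SHA-256 digest with the hexadecimal string
    // published next to the download, ignoring case and whitespace.
    public static bool Matches(string path, string publishedHex)
    {
        using (SHA256 sha256 = SHA256.Create())
        {
            string actual = ComputeHex(path, sha256);
            return string.Equals(actual, publishedHex.Trim(), StringComparison.OrdinalIgnoreCase);
        }
    }
}
```

A release step in a build pipeline could call ComputeHex for each artifact and publish the values, and an installer or a support engineer could later call Matches to confirm that an executable has not changed since it was released.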

Apply SSL Across Domain

While using the algorithms for encryption and decryption, ensure that you are aware of these algorithms and how they work. It is a good approach to use HTTPS (encrypted traffic on the HTTP protocol), but it is always best to know when to use HTTP. Microservices and service-mesh architectures make it possible to use HTTP traffic inside the cluster to avoid the cost of a TLS handshake in each request and session. You must configure all public-facing endpoints to always use SSL-based traffic and always enforce SSL for cookies as well. It is a common misunderstanding that sending the content inside the HTTP body can secure the content on the network. The problem is that your content is available in plain-text and can easily be read. Only HTTPS is capable of safely encrypting your traffic. HTTPS protects your website from man-in-the-middle attacks. Apply a QA check to validate all communication inside your service to use HTTPS.
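For an ASP.NET Core application, enforcing HTTPS on public-facing endpoints and on cookies might look like the following sketch. It assumes an ASP.NET Core 3.x style Startup class; the controller setup is a placeholder for whatever the application actually serves.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Hosting;
using Microsoft.AspNetCore.Http;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Hosting;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        // Mark every cookie as Secure so that browsers send it
        // only over HTTPS connections.
        services.Configure<CookiePolicyOptions>(options =>
        {
            options.Secure = CookieSecurePolicy.Always;
        });

        services.AddControllers();
    }

    public void Configure(IApplicationBuilder app, IWebHostEnvironment env)
    {
        if (!env.IsDevelopment())
        {
            // Ask browsers to use HTTPS for all future visits to this host.
            app.UseHsts();
        }

        app.UseHttpsRedirection(); // redirect plain HTTP requests to HTTPS
        app.UseCookiePolicy();     // apply the cookie policy configured above
        app.UseRouting();
        app.UseEndpoints(endpoints => endpoints.MapControllers());
    }
}
```

The redirection and HSTS middleware cover every page, including the ones with no forms, which addresses the pitfall of protecting only the pages that accept input.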

A common pitfall with the HTTP/HTTPS is that sometimes organizations apply HTTPS only on the pages where they have a form. A web page with no input requires HTTPS too. Consider having a home page with basic HTML that redirects the users to their login pages. If your page is not encrypted, a hacker could attempt to modify the HTML code and redirect your users to a malicious website. This is a common problem if your website’s domain name is long. Your users might not be able to understand the basic difference between the URLs. Several phishing attempts use this approach to lead the traffic away.

It is always necessary to apply all safety checks throughout your website. If you are a corporation working in the banking sector, the number of attacks on your website is huge as compared to a blog website. Your website might have state-of-the-art security protocols implemented on the accounts and transaction channels. But if your home page is unencrypted, an attacker can modify the HTML code and redirect your customers to a malicious website. The attackers might not be able to get any benefit out of it, but your customers might expose their credentials to the malicious website.

A single domain certificate is available at a cheap price, and some are available for free. Let’s Encrypt is one such product that comes to mind. You can use Let’s Encrypt to quickly generate certificates by authorizing your ownership of the domain. Let’s Encrypt certificates are valid for 90 days and you can always request a new certificate when you need to. Several cloud providers support Let’s Encrypt certificates. There are community-driven tutorials27 and articles that show how you can set up the certificates yourself. If you are using Microsoft Azure’s App Service, you can use the Let’s Encrypt WebJob to request28 an SSL certificate for your domain.

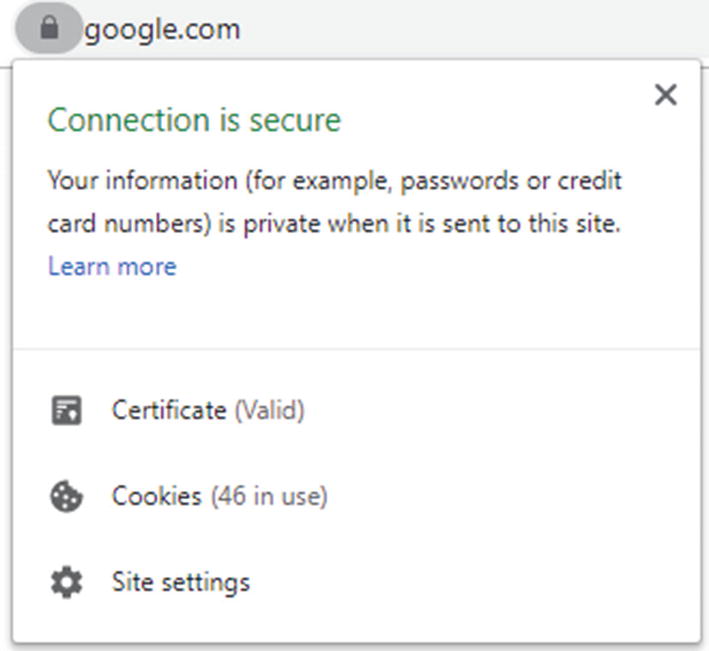

Modern browsers also have a bias against unencrypted websites and their traffic.

I do not include expired certificates as a problem here. That is a different scenario and is caused most likely by the laziness of Ops teams or the configurations under which your machine is running as a user.

Google Chrome showing the website connection status for Google’s home page

Google has been shown29 to lower the ranking of unencrypted websites in its search results. The Google Security Team says that Google will start to rank HTTPS pages over HTTP pages even if they have no links to them. The importance of HTTPS is bigger than the certificate cost.

Another problem with using mixed mode HTTP and HTTPS is that your website is loaded as one request per resource. If you use mixed mode HTTP and HTTPS, your website is slowed down by the HTTPS request (due to the TLS handshake) and some requests are not making proper use of the TLS handshake already made. Most browsers will also try to prevent the resources being loaded from an unencrypted host. Static resources, such as images, CSS, and JavaScript files, are more prone to attacks by malware and man-in-the-middle attacks. Application of HTTPS across domains also prevents these problems.

Things get interesting when you develop solutions using modern web standards. Progressive web applications, or PWA for short, work only with HTTPS.30 Most standards enforced by the SEO ranking tools also consider SSL certification a primary and leading factor31 in site ranking. Some websites have shown a double amount32 of traffic after application of SSL certificate on their web servers. Organizations have discovered that PWAs provide a better conversion rate as compared to native apps. Teams develop their solutions as a PWA to quickly turn their traffic into business value. Google recently notified33 users of their AdSense product that they are moving from a native experience to a PWA experience. For you, this is possible only if you use SSL certificates on your website.

Summary

The focus of this chapter was to bring in the common concepts and software packages that are used to secure the software and improve the quality of your products. I discussed several tools that I use in my day-to-day development, as well as in the organizations that I have worked with. .NET Core is a new framework and is currently in the process of being adopted.

A vulnerable application leads to a poor user experience and bad performance. Migrating your applications to a new platform, like cloud-native architecture, does not help with security. Most cloud platforms provide a service that controls and manages the network traffic, such as a web application firewall. As mentioned earlier, code stability is a feature added at development time. Build pipelines are configured to verify the code changes, validate the code for security measurements, and identify any flaws and code smells.

Architecture and design

Input validation and code security

Notable security loopholes and attack possibility

Network architecture and security policies

Security framework and libraries used

You will find several attacks and service degradation reasons listed in a cybersecurity book or website. Most of these are not directly related to the code, such as social engineering, but they can be mitigated by proper design.

With organizations that have multiple deployments each day (or every two days), automation supports the development and deployment lifecycles. In the next chapter, we discuss how we can introduce these security and performance validations into build pipelines.