Previous chapters covered the initial CI phases of the DevOps cycle. A complete DevOps cycle incorporates the production environment as well. From security scanning to runtime selection, process warmup, continuous monitoring, and protection, DevSecOps takes care of everything. A DevOps engineer must manage the details of the production environment as well as the development cycles. It is important for a secure application to run in a secure environment. If the environment is compromised, your secure application can be tampered with (as in the case of a web application served over plain HTTP) or taken over completely by an attacker. In this chapter, I discuss security and performance from the point of view of the hosting platform. The term “hosting platform” applies to the environment where your solutions run. A hosting platform for a .NET Core solution is not always a cloud environment or your go-to web hosting provider. A .NET Core solution can run on a mobile device (as in the case of a Xamarin.Forms application) or it can run on a user’s device (as in the case of a desktop application). I discuss the common practices that can help you protect your applications and resources.

A complete solution is more than an executable performing a task on the provided input. Modern solutions integrate third-party (or in-house) APIs to provide customized solutions to end users. Engineers tend to use hosted platforms with managed virtual machines to host their processes. This approach is called Infrastructure-as-a-Service (IaaS). With this approach, you (the engineer) are responsible for managing the entire software and runtime stack, including the OS updates and patches. This is the type of service subscription that IaC tends to target and provide support for. If you do not wish to work on OS upgrades and runtime management, then you can purchase a hosting subscription. All cloud vendors provide a solution hosting subscription, sometimes called a “platform.” This subscription is called Platform-as-a-Service (PaaS). I cover these two deployment models in this chapter. These models are the ones that DevOps engineers tackle in their regular day jobs.

There is another type of service subscription that takes much of the configuration overhead away from the consumer and provides a managed service to them. This model of subscription is called Software-as-a-Service (SaaS). A common example of this service model is Office 365. If you use hosted document editors such as Google Docs, you are using a SaaS product. The benefit of using a SaaS product is that you are only responsible for the data that you generate in the application. With a SaaS product, the vendor or provider is responsible for managing the security and regulations of the product.

FTP ports for publishing

SMTP ports for emails

CPanel ports for administrative tasks

Custom ports configured by you

All these ports are enabled to provide control over the environment. If these ports are only reachable from inside a virtual network, the exposure is limited. If they are not, they become a security risk. External users can scan the network to find the open ports and attempt to bypass the security. A virtual network can prevent direct access, adding a layer of security. Similarly, the network that you have created for a web server should only grant external access to the web server. Secondary resources such as databases and log servers should be kept private. There are problems beyond an average network or port exposure, such as Distributed Denial of Service (DDoS) attacks, reverse-engineering, bad encryption methods, and more.

If you provision production environments, do not skip this chapter. This chapter covers the sort of attacks your solutions should be able to withstand. It also gives you tips and material that point you toward solutions for such scenarios. I will share my personal experience of handling sensitive information for my own mobile and web applications. Even if you do not handle the production environments, read this chapter, as it will help you design and develop the software with Ops teams in mind.

Host Platforms

When I design a solution, I always ask myself, “Where am I going to host this solution?” This question helps me understand several areas of the production environment that I need to design and develop for. It helps in planning whether the solution will be an N-tier, microservice, cloud-native, or serverless model. The cloud platforms make it easier for developers to publish their solutions to the customers with a single click. Vendors also make it simple for developers to lift-and-shift their products to make them cloud-native.

There is a common misconception that bringing your solutions to the cloud can automatically improve their performance or increase their efficiency. I can give at least a dozen examples of clients who got the opposite results by following a lift-and-shift approach. This does not mean that a lift-and-shift approach is always a bad idea. The core culprit is the method applied to the lift-and-shift. I have worked with customers who wanted to migrate their APIs to cloud environments. Alibaba Cloud and Microsoft Azure offer a smooth approach for designing, developing, and deploying the APIs that customers have in a serverless fashion. I have also written a few articles that discuss these approaches. You can read one of the articles at https://medium.com/@Alibaba_Cloud/architecting-serverless-applications-with-function-compute-11777da4a888.

Modern solutions face a never-ending debate over whether to use Docker for their deployments. Needless to say, whether you use Docker or not, your infrastructure can be automated. To keep things simple, I will introduce most of the concepts using Docker and also mention some direct deployment models.

Docker and Containers

A usual workflow used by Docker container developers to create a Docker container and push the changes to their release environments via web hooks in Docker Hub

Three separate environments control the automation job for your latest updates on the production environment. Apart from the security of these environments and their respective commands or parameters, you also need to configure their automation stability. You are responsible for migrating any legacy code or script. You must always be ready for change.

A sample Node.js application

Docker image building, Dockerfile

Docker Compose sample code

This example uses my Docker image and replicates it to three instances. Each instance is limited to 10% of a CPU and 100MB of RAM. From an IaC standpoint, this is excellent. You can review how your infrastructure is being used. Your applications request the resources and you allocate a top limit to them. Each time your application shows poor performance, you can review the IaC and apply any necessary changes. GitOps can then help you keep the changes in history and audit them in the future.
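The limits described above can be sketched as data. The following is a minimal illustration in Python, not the original listing: the service name `web` and the image name are hypothetical, and the structure assumes the Compose `deploy` section with `replicas` and `resources.limits`.

```python
import json


def build_compose(image: str, replicas: int = 3,
                  cpus: str = "0.10", memory: str = "100M") -> dict:
    """Return a Compose-style document that caps each replica's resources."""
    return {
        "services": {
            "web": {  # hypothetical service name
                "image": image,  # hypothetical image name supplied by the caller
                "deploy": {
                    "replicas": replicas,
                    "resources": {
                        "limits": {"cpus": cpus, "memory": memory},
                    },
                },
            }
        }
    }


if __name__ == "__main__":
    # JSON documents are generally valid YAML, so the output can be
    # saved and reviewed like any other version-controlled Compose file.
    print(json.dumps(build_compose("example/hello-node:latest"), indent=2))
```

Keeping the limits in one reviewable place is the point: a change from three replicas to five, or from 100MB to 200MB, then shows up as a small diff in version control.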

I have a separate repository for Kubernetes objects and scripts. You should check out that repository at https://github.com/afzaal-ahmad-zeeshan/hello-kubernetes.

Network Security

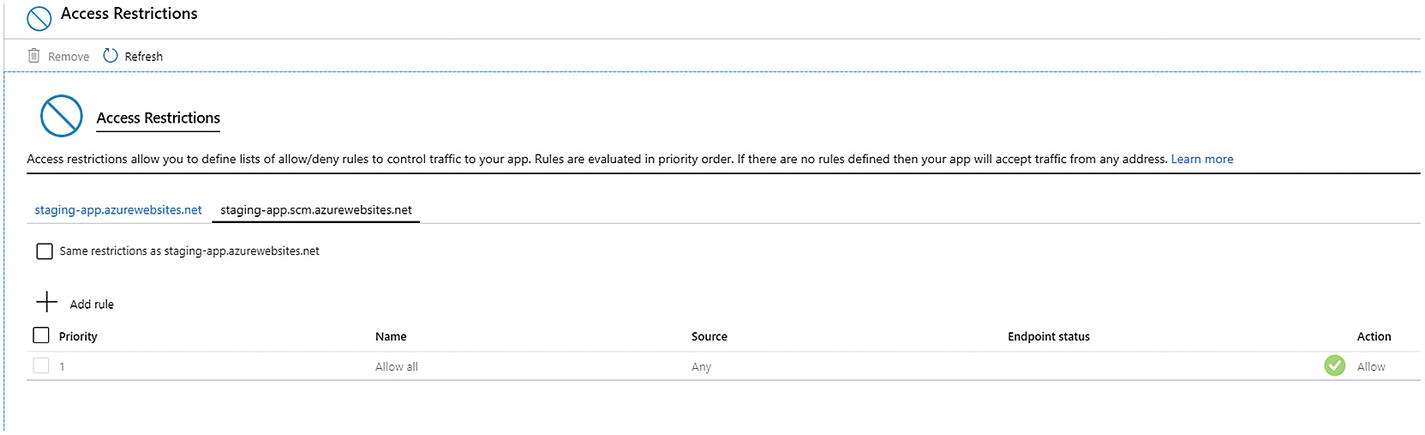

I mentioned previously that you should perform your QA and other tests (DAST, RASP, etc.) on the production environment. This will give you a better idea about your software and how customers are using it. This is also critical because you cannot perform QA operations directly on a production site. Hosting platforms offer a “staging” environment to enable QA teams to run their tests on a production-like environment. Microsoft Azure offers App Service Deployment Slots, for example. These instances run on your production resources and sometimes consume similar APIs that the production environment would have.

Deployment slots are not an important concept to mention here because not every cloud environment offers this service. Some cloud vendors require you to create two separate instances of an app hosting subscription with similar profiles. Then you forward the traffic from one instance to another using the networking APIs and SDKs of the cloud. Microsoft Azure provides an easy-to-manage option: a GUI. For deployment slots, it is recommended that you create a mock environment that provides a known response to every known query. This enables your QA team to perform tests on the production environment without consuming any production resources, such as databases.

Since the instances consume the resources from your production environment, they can also be open to requests from external sources, such as the Internet. You should use networking profiles to hide the services that you do not want to be accessible to the public Internet. Your engineers can access those resources by connecting to a central VPN network.

You can remove the production resources from the staging and QA environments. One reason for this is that your QA and staging usage data might pollute the production data. If you are using analytics tools, such as Google Analytics or Azure Application Insights, your QA data might get dumped into those reports. This inconsistency can lead to misleading reports and unwanted actions from marketing and sales teams.

Private networks

Content delivery networks (CDNs)

Web firewalls

Traffic managers

DNS and routing systems

The Azure App Service showing the networking options available in the Azure infrastructure. Different cloud vendors provide different approaches to support the networking requirements of a client and their software

Configuring the Azure Virtual Networks to grant/deny access to a specific IP address block for a service on Azure

Azure App Service Resource Group Template showing the configurations made in the previous step. These settings and configurations should be version controlled for future releases and deployments

As shown in Figure 6-4, the IP address configurations have been created. See the first element in the JSON “ipSecurityRestrictions” array. We can create more policies to further control how our application is accessed. If you download the template and version control it, you can review the changes to network visibility in future versions. Your code reviewers can review the changes in each file and separately accept or reject them. Your infrastructure will also become more transparent, as it shows which resources are created and how they are being used. You will also find these configuration settings in other cloud platforms, as well as DevOps and IaC tools like Terraform.
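To make the effect of such rules concrete, here is a minimal sketch of how a list shaped like the “ipSecurityRestrictions” array could be evaluated. It is a simplified model, not Azure's implementation: the rule values are hypothetical, lower priority numbers are assumed to be evaluated first, and any request that matches no rule is assumed to be denied once at least one rule exists.

```python
import ipaddress


def is_allowed(client_ip: str, restrictions: list[dict]) -> bool:
    """Evaluate rules in priority order; the first matching rule decides."""
    address = ipaddress.ip_address(client_ip)
    for rule in sorted(restrictions, key=lambda r: r["priority"]):
        network = ipaddress.ip_network(rule["ipAddress"], strict=False)
        if address in network:
            return rule["action"].lower() == "allow"
    # Simplifying assumption: with no rules everything is allowed,
    # otherwise unmatched traffic is denied.
    return not restrictions


RESTRICTIONS = [  # hypothetical values using documentation address ranges
    {"name": "office", "ipAddress": "203.0.113.0/24", "action": "Allow", "priority": 100},
    {"name": "blocked-host", "ipAddress": "203.0.113.7/32", "action": "Deny", "priority": 50},
]
```

A check like this can run in a review pipeline against the downloaded template, so that a change in network visibility is caught before it is deployed.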

The Azure portal showing the settings for the production environment as well as the control panel on Azure. We can map similar settings or apply different settings to these environments

Disabling the access will protect your control panel from any public access while still providing public access to your website. You configure the policies the way I showed on the previous page on access restrictions. You also need to configure firewalls and other protection mechanisms to protect your solutions from attacks.

Web Firewalls

Online resource protection against hacking attempts. These attempts can be unwanted HTTP requests against a login or authentication URL. WordPress, for example, hosts the login pages at {site}/wp-login.php. A web firewall should protect against any harmful inputs into the fields.

Remote access from a blocked IP address or a region.

A way to dynamically modify inputs before they are passed to the server. This has an application in case you detect a SQL Injection problem in your application. You can apply a patch to the user input before your developers update the code and publish a new version.

A way to rate limit the number of requests by a remote IP address. The rate limiting can be done based on the total requests or the average requests in a certain time span.
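The rate limiting item in the list above can be sketched as a sliding-window counter per client IP address. This is a minimal in-memory illustration, assuming a single process; the class name and parameters are hypothetical, and a managed web firewall would keep this state outside your application.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client within `window` seconds."""

    def __init__(self, limit: int, window: float, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self._hits = defaultdict(deque)  # client IP -> timestamps of recent requests

    def allow(self, client_ip: str) -> bool:
        now = self.clock()
        hits = self._hits[client_ip]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False
        hits.append(now)
        return True
```

The clock is injectable so that the behavior can be verified without waiting for real time to pass.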

Cloud platforms offer web firewalls as a service that you pay to receive. You do not have to add these paid services to your infrastructure; you can instead host a firewall yourself, using resources on top of your own infrastructure. Be aware that this approach opens up your solution to unknown problems and undefined application behavior.

Imagine you applied a security patch because of a potential SQL Injection or XSS bug found in your application. Unfortunately, your infrastructure crashed and had to be recreated on the cloud. If your IaC contained the settings for the web firewall, the patch would be reapplied and the application would continue smoothly. Otherwise, your application will be open to those bugs again. Therefore, you should consider open tools such as Terraform or Ansible to apply your infrastructure-level changes. They offer better automation and development experiences.

The Azure portal showing the blade to create a new Azure Front Door service. This service enables developers to create web firewalls for the service or to load-balance the application with multiple instances

Azure Front Door showing the GUI editor to configure custom domains (frontend hosts), load balancing (backend pools), or the policies to match the requests against or to apply to the requests

The Azure portal showing the backend update pool. This is the setting where we can add backend virtual machines or App Service instances to load balance the requests

Policy settings as shown in the Web Application Firewall settings on Azure platform

Web application firewall managed policies for protection against hacking or malicious attempts

There are more settings and attack profiles in the list than the screenshot can show. There are profiles for XSS, SQL Injection, HTTP protocol attacks, and URL and path traversal (such as passing /../ in the URL). You can always write the code to prevent these from within the application. This product can help protect your application without the request reaching your application and consuming your resources (every conditional block takes some CPU and RAM resources on your server).
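As an illustration of what an in-application check costs, here is a minimal sketch of a path traversal test. It assumes a single round of URL decoding and does not cover double-encoded input such as `%252e%252e`, which is one reason a managed rule set is attractive.

```python
from urllib.parse import unquote, urlsplit


def is_traversal_attempt(url: str) -> bool:
    """Return True if the decoded URL path contains a '..' segment."""
    path = unquote(urlsplit(url).path)
    # Treat backslashes like forward slashes before splitting into segments.
    return ".." in path.replace("\\", "/").split("/")
```

Every request passes through a check like this before your real handler runs, which is the CPU and RAM overhead the text refers to.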

The problem with this solution is that it’s not integrated with your infrastructure; remember what I mentioned a few paragraphs ago? This Azure Front Door instance is created outside the Azure App Service and you need to have its template downloaded and ready for redeployment.

Azure Front Door showing the template with the settings enabled for the pool we have

Other cloud vendors will provide a similar service in their own naming conventions. It would take extra time to discuss those solutions and replicate what I did here.

If you have any security recommendations from Azure about your products you can find them under the Security tab on the applications. Azure Security Center offers recommendations and tips to secure your products. Security comes in the form of performance improvements too. If your products have multiple ports open, you can disable some of them to prevent your programs from listening on those ports.

DDoS

Apply a load balancer in front of the resources that you have. Load balancers can smoothly send the traffic to resources that are healthy and can take the load off the incoming traffic.

Make your application stateless, so in case your load gets high, you can still provide a response to the customers. Note that a high amount of traffic can create a DDoS in your application. It doesn’t mean that the request is coming with the intentions of DDoS.

Purchase an anti-DDoS subscription for your products.

You can configure the DDoS prevention in your DNS, your load balancers, and proxy engines. Since DDoS is a common method of attack for bringing servers down, various hosting providers include free DDoS prevention support. You can also use rate and request limiting software to prevent this from happening in your applications. I have a separate section that covers how to do that in cloud environments later, called “API Management,” so make sure to read that section to learn how to set rate limiting to prevent extra loads on your web apps.

SSL and Encryption

The Azure App Service extensions tab offers the extensions that can be added to the resource. Currently we have a single extension added to the app instance, Azure’s Let's Encrypt

If you cannot find the extension, you should try “adding” the extension first. This extension lets me perform the task of obtaining a fresh SSL certificate for my website. The requirements are simple: I should own the domain for which I am requesting a certificate. The rest of the job is done by this extension. The result is free SSL and a lasting, trustworthy relationship with the customers. If you are using a VM, you can run the customized scripts on your machine as well.

Kubernetes, Docker, and native cloud orchestrators have built-in support for SSL configuration. You can create objects that provide internal (or self-hosted) SSL certificates to the services hosted in the clusters. This will help you optimize the cost of the entire infrastructure. Various service-related frameworks and service meshes, such as Istio, enable you to off-load the SSL to the framework. This enables developers to use self-signed certificates in the cluster and provide encryption with a premium certificate where needed.

Azure offers cheap SSL certificates to be installed and configured by Azure infrastructure

The Alibaba Cloud offers a similar certificate with different pricing. Each platform has its own offers for the certificates that it allows customers to purchase. Customers can always bring their own certificates on the cloud environments

Alibaba Cloud offers cheaper solution hosting options; it is unclear to me why their SSL certificates are so expensive. Nonetheless, I do not recommend purchasing SSL certificates unless your business requires it. Let’s Encrypt certificates are more than capable of providing a security layer for your websites and can build trust with your customers and search engines. Paying $70 USD per year for SEO is not expensive, but it is not needed when you can use Let’s Encrypt to do the same task. If you are working in, let’s say, Fintech, then you should purchase a premium certificate with maximum support by the certificate authority to protect your online and physical identities. A customer is likely to trust an entity that has their physical identity verified, such as an office building. Premium certificate publishers verify your identity before releasing a certificate, which is necessary for an enterprise.

Just as I mentioned in the “Docker and Containers” section, you must always be ready for change. Just recently, I read that Let’s Encrypt, the SSL certificate provider, was revoking around three million SSL certificates. This means that three million certificates will be invalidated on March 4, 2020 and users will see a Red Screen of Chrome because the certificate is not valid anymore. The solution is easy for the domain owners. Let’s Encrypt provides automation scripts and jobs that perform all the heavy lifting. For the Ops teams, this is the kind of job they need to take care of. I have more than four SSL certificates deployed with my websites and it would be a headache to rerun the jobs and capture the certificates manually.

If you can configure the DevOps pipeline to automatically fetch an updated SSL certificate on an as-needed basis, please do. Most cloud vendors do support jobs, such as WebJobs on Microsoft Azure. These jobs can be used to run periodically or ad hoc.
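A periodic job of this kind mostly needs to answer one question: is the certificate close to expiry? The following is a minimal sketch using only the Python standard library. The 30-day threshold is an assumption, and the renewal step itself (for example, invoking your Let’s Encrypt client) is left out.

```python
import socket
import ssl
from datetime import datetime, timezone


def fetch_not_after(hostname: str, port: int = 443, timeout: float = 5.0) -> str:
    """Return the 'notAfter' field of the certificate served by hostname."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


def days_remaining(not_after: str, now: datetime) -> float:
    """Days between `now` (timezone-aware) and the certificate's expiry."""
    expires = ssl.cert_time_to_seconds(not_after)
    return (expires - now.timestamp()) / 86400


def needs_renewal(not_after: str, now: datetime, threshold_days: int = 30) -> bool:
    """True when the certificate expires within the threshold."""
    return days_remaining(not_after, now) <= threshold_days
```

A scheduled job could call `needs_renewal(fetch_not_after("example.com"), datetime.now(timezone.utc))` and trigger the renewal only when it returns `True`. Note that `fetch_not_after` verifies the certificate, so an already expired or revoked certificate surfaces as a connection error rather than a date.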

API Management

So far you have seen different resources provided on the cloud that make it easier for you to configure how customers connect and interact with your resources. If you own an API that is to be consumed by customers (or by your own applications), you should consider hosting the API behind an API controller. There are several options to describe your API, such as Swagger. These profiles make it easier for your customers to access the service or try it out before purchasing it.

Enable you to deploy and host your API endpoints and documentation in a single place. This lets your customers visit and connect to your API (or developer-facing resources) on a single page.

Enable your customers to preview your APIs before integrating them into their solutions. Swagger is one such API documentation tool that can be used to help customers understand the request parameters and response types.

Protect your APIs from external attacks, such as SQL Injection. Azure Front Door is an example of such a product. API Management contains these software packages.

Provide you with an easy way to add or remove private or sensitive information that is required by your API. You can add a custom certificate to forward the requests to your API engine if the customer provides a valid API key.

Help you limit the consumption of your API by a single IP address or customer. You can also group customers, such as free users, and apply a rate limit to their requests per minute.

Enable a web application firewall to protect your application. Built-in DDoS protection is provided to you as part of the service.

There are more services that you can use with the API Management to control your APIs than you can write and integrate manually in your APIs or servers. Custom software development includes development and maintenance costs for each feature. Cloud-hosted API Management solutions provide a turn-key solution to provision, control, and manage your APIs at any scale. Of course, every vendor will have its own definition of the service that it is going to offer you. You need to check this with the software vendor for more information. Most API Management software comes at a cheaper price for all these offers. API Management software should become part of your infrastructure and should be deployed with your resources. API Management is software published as a service for you, but it should be generated as part of your infrastructure deployment, using IaC.

Create a central repository to manage the API products that we have in our product portfolio.

Create and manage self-hosted as well as third-party and CA-signed certificates to authenticate our applications and their clients.

Provide a central hub for the customers of our API to explore the API endpoints, API requests, and responses and to make those requests using multiple client SDKs.

Apply rate limiting on API consumption.

Create groups and subscriptions to provide a central policy and management system to operations teams.

Implement security and authentication protocols such as OAuth 2.0, OpenID, and so on, to provide delegated and federated authentication/authorization experiences to the customers.

Monitor and track API consumption to study the usage pattern or improve the underlying APIs.

Send notifications to customers for different events that occur in the application or the API Management thread.

Azure API Management has different pricing rules for customers. Users can always create a Developer license account to try the services

You can select the Developer subscription to try the product before purchasing a subscription for your APIs. It will take approximately 15-30 minutes to deploy and set up your API management service, so give it the time that it needs.

The screenshots and concepts shared in this section use the default configured API Management instance. You can follow along with the concepts in this section by creating a new instance of API Management. If you decide not to create a new instance, you can simply follow along with the text and visualize the concepts and how they apply to the API endpoints and the customers using these APIs.

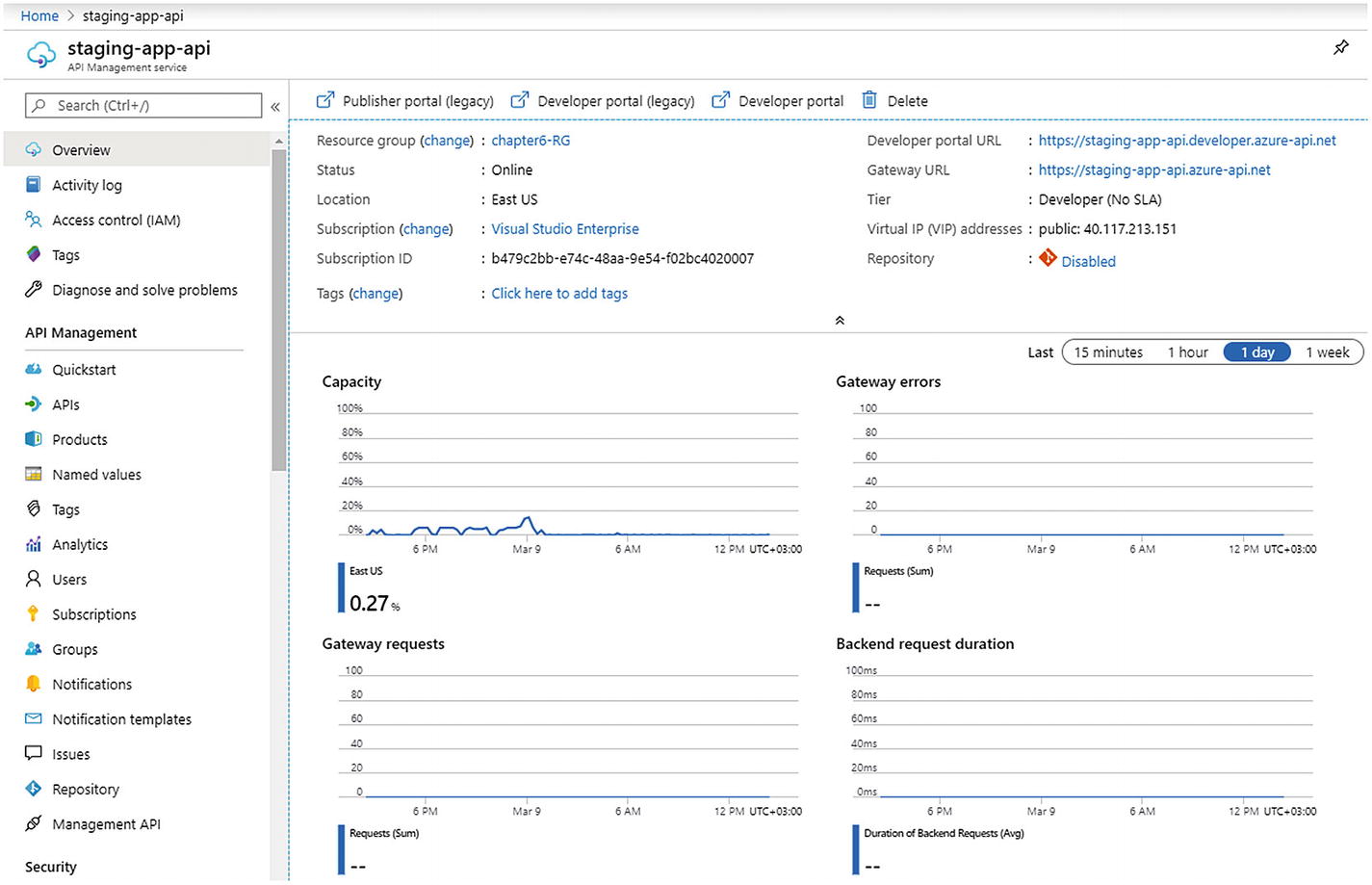

The Azure API Management dashboard, like other Azure products, offers complete information to the customers. It shows the default endpoints that will be used by their customers as well as their engineers. A good monitoring dashboard is also integrated in the service

The dashboard gives you complete access to your production as well as a developer side for the projects. The dashboard is where you can get an overview of the current status of your application. You will see the number of requests and how they are performing on the cloud. Azure Monitor can be used to further investigate the performance for your product.

A default API, called Echo API, is created for the customers to try out the API Management product. This service has default subscriptions, groups, and policies. You can explore more options about this API before creating your own using the options available under the API Creation tab

APIs can be added to Azure API Management using different formats. Microsoft provides an industry-supportive list of the APIs and formats and provides first-class support for adding Azure Functions to the list

These API configurations come in different shapes and sizes. Some of these configurations are pure XML-based configurations (such as SOAP-based Web Services) and some are JSON-based documentation for your web APIs.

You will notice that Microsoft Azure provides first-class support for Function App. Azure Function apps are serverless applications that are developed, deployed, and operated on Microsoft Azure. They are APIs in nature and perform well in the world of IoT and Edge Computing.

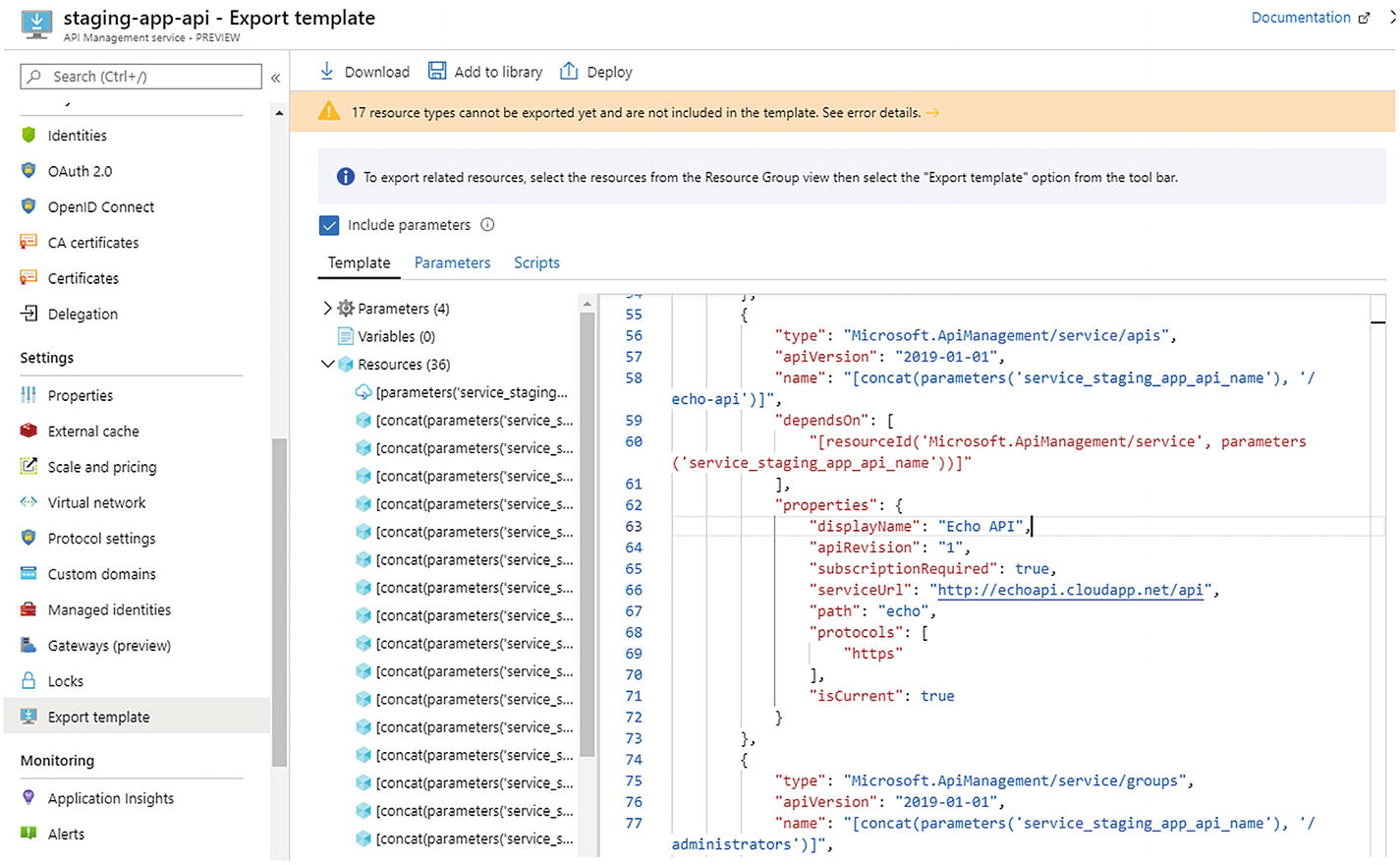

Azure API Management provides the template for the service that developers can use to version-control the application state, or to redeploy the application if there is a problem. This template contains the settings you make

API Management offers different authentication options, ranging from OAuth 2.0, OpenID Connect, all the way to Google, Twitter, and Facebook account sign up. You can use a username/password combination to allow users to join your service

API Management offers a basic username/password combination for user accounts as well as other settings. A simple approach to managing the authentication is by using self-signed certificates. You can generate them for free and deploy them on Azure as well as on client devices. You can see the Certificates tab under Security. Public/private certificates enable a secure option to authenticate the devices with a central server.

API Management has a default policy definition structure, defined in XML. You can add new policies to the product. This is an easy way to control how your products are consumed by customers. These values are also added to the resource template so, if you redeploy, all your settings are applied to the infrastructure again. Thanks to DevOps and IaC

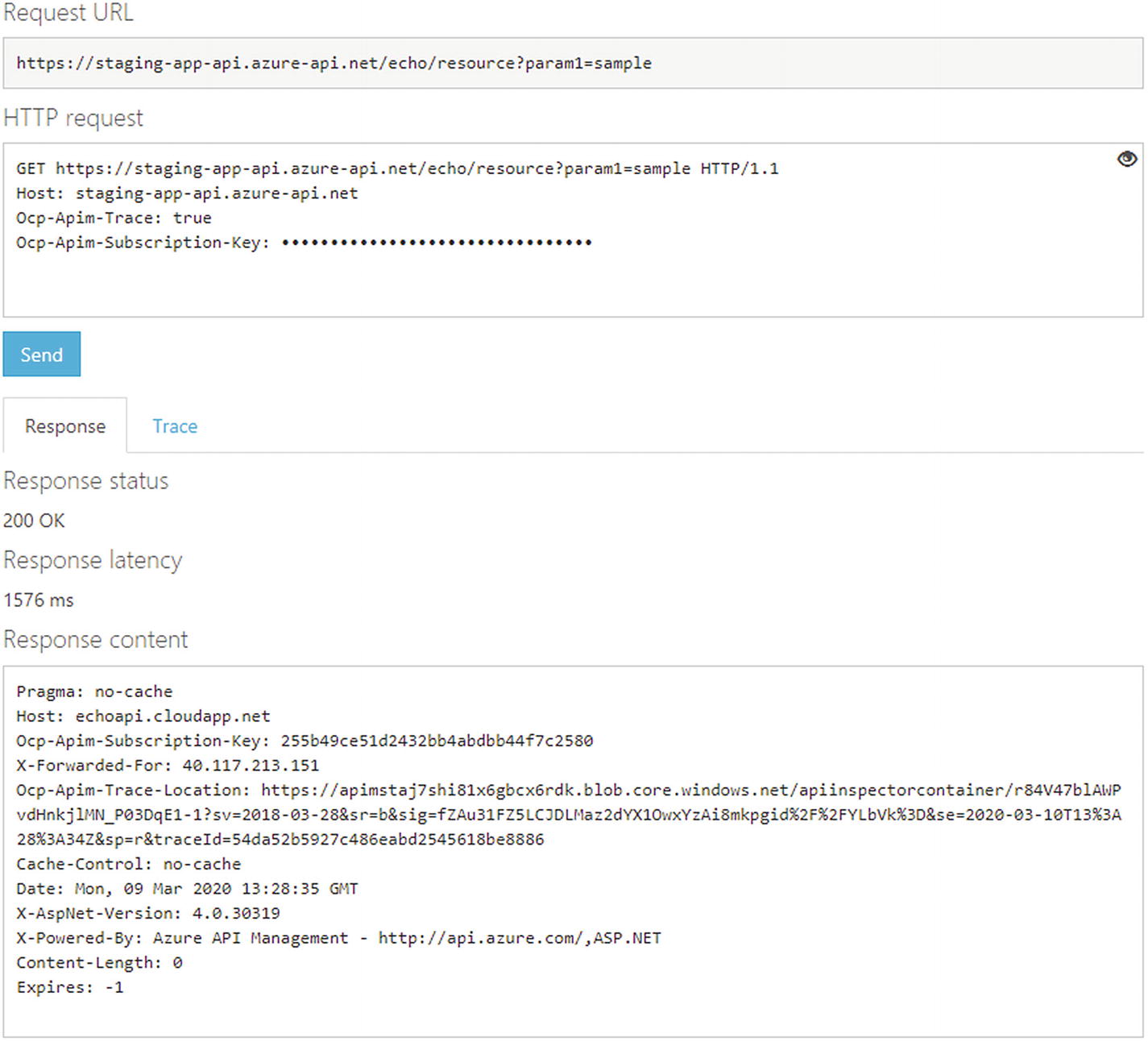

Azure API Management gives a default “Try-It” portal where customers can send requests using an API key that they are provided

A user can interact with the service using their own favorite programming or scripting language. They need to use the values to be passed in the HTTP header and body as needed by the API. Customers can study the API definition before trying it out, or Azure API Management can help them with that

If the usage of the API exceeds the allowed quota, API Management will automatically fail the request and provide an error message that tells what is wrong

Now, as shown in Figure 6-24, the response is 429. The server indicates that the number of requests has exceeded the allowed quota. The Retry-After: <value> header is added to the response headers, and the body of the response carries a message that explains the problem.
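On the client side, a well-behaved consumer waits for the period named in Retry-After before trying again. Here is a minimal sketch, assuming the header carries a number of seconds and that `send` is a hypothetical callable returning a `(status, headers, body)` tuple; an HTTP-date value in the header falls back to the default delay.

```python
import time


def retry_delay(headers: dict, default: float = 1.0) -> float:
    """Read the Retry-After header (in seconds), falling back to a default."""
    value = headers.get("Retry-After", "")
    return float(value) if value.strip().isdigit() else default


def call_with_retry(send, max_attempts: int = 3, sleep=time.sleep):
    """Call send() until it stops returning 429 or attempts run out.

    `send` is a hypothetical callable returning (status, headers, body).
    """
    for attempt in range(1, max_attempts + 1):
        status, headers, body = send()
        if status != 429 or attempt == max_attempts:
            return status, body
        # Respect the server's hint instead of retrying immediately.
        sleep(retry_delay(headers))
```

The header lookup here is case-sensitive, which is a simplification; a real HTTP client library usually exposes headers through a case-insensitive mapping.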

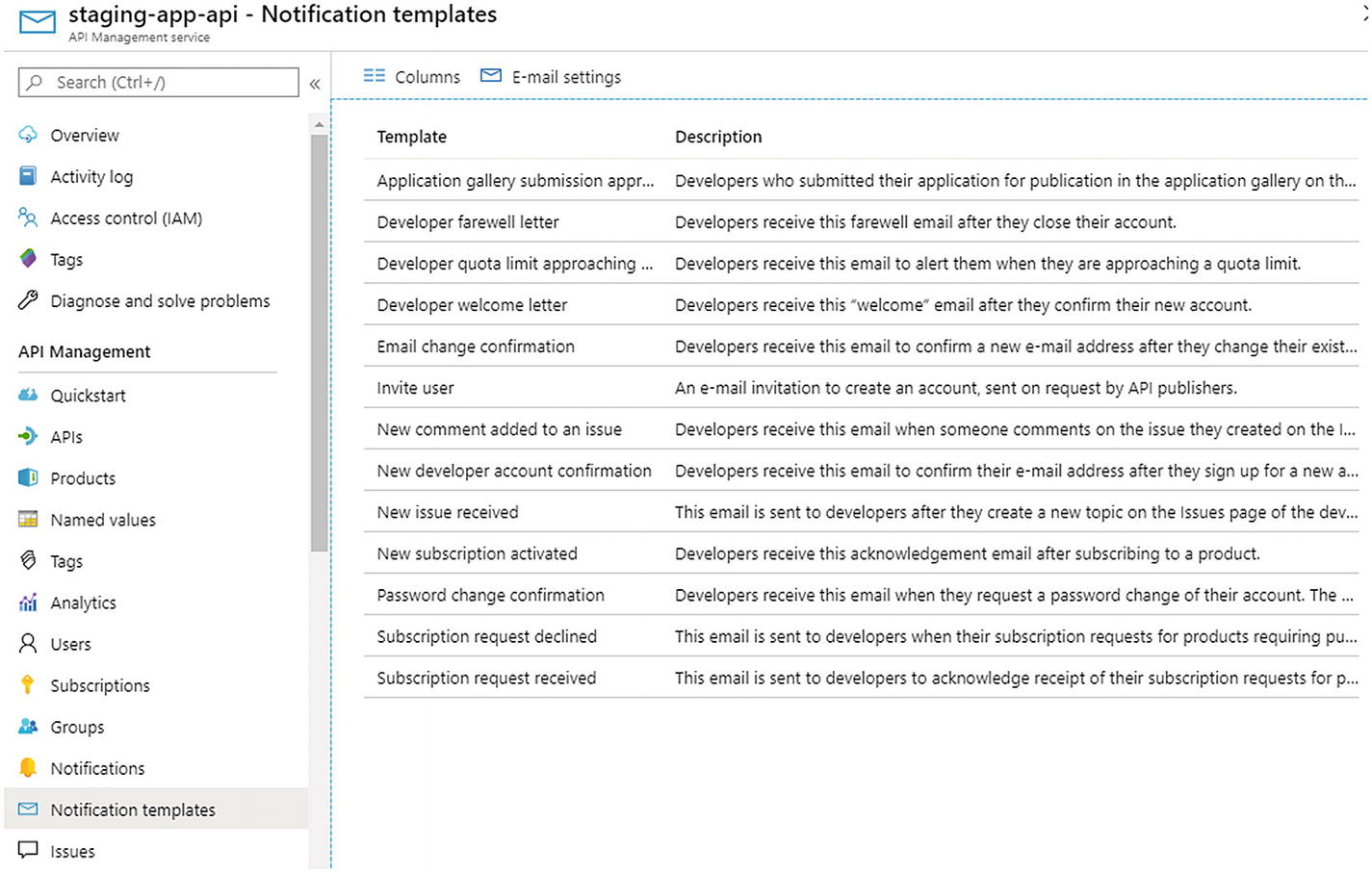

Azure API Management comes with default templates for notifications. Customers can modify the templates to meet their needs

You can edit the templates as needed.

So, in a nutshell, API Management provides a good umbrella service for the solutions you host for customers. You get to create groups to control how customers are using your product. Applying policies to a group of customers is easier and more ethical than preventing the whole userbase from accessing the application fully. Several online solution providers use this approach to earn revenue from their solutions and provide a limited set of services to the customers for free.

Configuration and Credentials

Your infrastructure requires some settings and configuration values in order to boot up and serve the clients. For an individual who is self-managing the production environment, it is fine to have the configuration files version controlled. If you have a team of engineers, probably friends working together on a solution, then you should avoid version controlling the files that hold sensitive values. You should certainly avoid it if you use public version control systems such as GitHub or GitLab with their public settings enabled. IaC tools provide features to securely input passwords and other details, such as the connection string of a web application.

Terraform enables you to add the secure information to its database (not a database in the literal sense). The engine then takes care of deploying your applications and passing the sensitive information to the configuration files as needed. This prevents engineers from being granted unwanted access to your resources. This also enables a high-speed DevOps pipeline because your jobs do not wait for a human to enter the configuration information before the resources are deployed to the cloud.
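On the application side, the counterpart of this approach is to read the injected values at startup rather than from a committed file. The following is a minimal sketch, assuming the pipeline exposes secrets as environment variables; the variable name `DB_CONNECTION_STRING` and the error type are hypothetical.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret was not injected by the pipeline."""


def read_secret(name: str, environ=os.environ) -> str:
    """Fetch a required secret; fail fast if it is absent or empty."""
    value = environ.get(name)
    if not value:
        # Report only the name, never the value, so logs stay clean.
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value


if __name__ == "__main__":
    try:
        read_secret("DB_CONNECTION_STRING")  # hypothetical variable name
        print("connection string loaded")
    except MissingSecretError as error:
        print(error)
```

Failing at startup keeps a misconfigured deployment from serving traffic with half of its settings missing.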

The scenario changes for non-web-based applications. With mobile applications, you cannot control who accesses the production release. A web application merely exposes generated HTML code that can be secured at the backend. With a mobile application, your entire application’s source code is downloaded and available on the client’s device.

Mobile Applications

If you are a Xamarin.Forms developer, you should pay attention to the resources you are releasing in your final APK product. I have been working with colleagues and on my own Android applications. One thing that I had to invest time in was where to put the API keys. Android does not provide a secure vault to place keys. It would be amazing to get a functionality in Android, out of the box, that allows developers to use their production certificates to encrypt their API keys and use them. That way, the Android platform could provide them with their API keys at runtime and prevent anyone from reverse-engineering the APK to download the keys. If your API keys are exposed, you are open to extra billing and charges, because whoever extracts the keys can consume your services at your expense.

There are many online solutions available to this problem, and Stack Overflow is full of string interpolation and encryption solutions. They might work, but if a user is smart enough to reverse-engineer your APK, they will be smart enough to quickly decrypt your API keys. The solution that feels good to me is to use a web-based API. Create a personal website with an API exposed that allows your mobile application to communicate. Keep the API keys and other sensitive passwords and connection strings on the website. A user is less likely to hack into your web application and extract the key, and if they do, you will learn about it.

With this approach, your keys are secure, and your users are getting the responses they need. There are other benefits to this approach as well. You get to maintain a cache around the API calls. I have a chat bot that I developed using Google’s Dialogflow that helps users get a movie recommendation (at random) or simply tells them which movies are currently playing at the theater. I am currently in the process of making a mobile application for it. Just imagine that a new movie gets released and users want to get a movie recommendation. Every second or third query (or request) would be for a list of movies that are playing.

The requests will differ in terms of location (people will be sending requests from Pakistan, India, Turkey, the US, etc.), but the rest of the request would be same—current show times. If every mobile device is sending a request to the API using my key (a highly discouraged approach!), it will quickly rate-limit my calls (remember the rate limiting features of APIs?) and my customers will likely feel a poor UX with my applications. If your API is like what API Management offers, then a customer might see an error message telling him that he has used the API key more than his designated quota, when in reality he never did. These problems arise when you give out keys and do not provide a central method to cache the responses or to provide the service. A central server will likely know the number of times it has used the API key and prevent requests in a graceful way.

If I instead save the API key on my website and have every client communicate with my website, I can cache the responses to similar requests. Everyone from Turkey asking for the current movie listing gets the same cached response, and everyone from Pakistan gets a different one (caching based on the location and query11). I do all this while also protecting my resources.
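Caching by location and query might look like the following sketch. It is written in Python for brevity and rests on assumptions: `ResponseCache`, the `fetch` callback (standing in for the real call that uses the protected API key), and the five-minute default lifetime are hypothetical and not taken from any particular library.

```python
import time
from typing import Callable, Dict, Tuple


class ResponseCache:
    """Caches upstream responses keyed by (location, query) for a fixed time."""

    def __init__(self, fetch: Callable[[str, str], str],
                 ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch          # performs the real, rate-limited API call
        self._ttl = ttl_seconds      # how long a cached answer stays fresh
        self._clock = clock          # injectable so the cache is easy to test
        self._entries: Dict[Tuple[str, str], Tuple[float, str]] = {}

    def get(self, location: str, query: str) -> str:
        # Normalize the key so "Turkey" and "turkey" share one entry.
        key = (location.lower(), query.lower())
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]         # fresh hit: no upstream call is made
        value = self._fetch(location, query)
        self._entries[key] = (now, value)
        return value
```

With this in place, a burst of identical requests from one country costs a single upstream call until the entry expires.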

If you are unsure whether the website is a safe place to put your API keys, or if you are using an open source platform to host your website,12 then you should invest some time in learning how to use secure vaults to protect your secrets.

Secure Vaults

Azure App Service hides the settings by default, but it lets customers reveal them with a single click. This is not safe, because anyone who has access to the settings can preview their values.

The values are hidden, but a single click shows them. To keep these values hidden from a user, you would have to remove that user from the group that can see the settings altogether, which is not practical in most scenarios. You need a separate store for the keys, one that does not allow a preview of the values (without a password, of course).

Every platform offers this functionality differently. Microsoft Azure has a product called Azure Key Vault that lets you write the keys to a secure vault (FIPS 140-2 standard compliant vaults). GitLab and GitHub let you store private information in their own protected settings for secrets. It does not matter which service you use; just make sure that if you need to embed sensitive information, you save it in a secure store that does not allow other users to preview it.
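Whichever vault you pick, the application side tends to look similar: the secret is injected at runtime and never appears in source code or logs. The sketch below, in Python, assumes the platform exposes the secret as an environment variable. `Secret`, `load_secret`, and the variable name `MOVIE_API_KEY` are hypothetical and stand in for whatever your vault integration provides.

```python
import os
from typing import Mapping


class Secret:
    """Holds a sensitive value and refuses to reveal it when printed or logged."""

    def __init__(self, value: str):
        self._value = value

    def reveal(self) -> str:
        """Return the raw value; call this only where the key is actually used."""
        return self._value

    def __repr__(self) -> str:
        return "Secret(****)"

    __str__ = __repr__


def load_secret(name: str, environ: Mapping[str, str] = os.environ) -> Secret:
    """Read a secret injected by the hosting platform, failing loudly if absent."""
    value = environ.get(name)
    if not value:
        raise RuntimeError(f"Required secret {name!r} is not configured")
    return Secret(value)
```

A call such as `load_secret("MOVIE_API_KEY")` then fails at startup when the secret is missing, rather than at the first customer request.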

System Failure and Post-Mortems

If you have prepared for the worst and applied all the configurations that are necessary to secure your platform and your customers, you have done your part. Internet solutions are expected to crash, and many online solution providers design around that expectation. Netflix, for example, uses the Chaos Monkey13 tool, which randomly terminates virtual machine instances running in production so that services are built to survive such failures. Replication is how you prepare for these failures, and it comes in several levels.

Replication can be performed within a single machine or datacenter. This mode of replication does not protect your resources if the whole datacenter fails, but it is cheap and offers a good amount of availability when a process or hard disk fails.

The next level of replication also takes place within a single datacenter, but on stacks that are not upgraded at the same time. This lets your customers keep connecting to the resource during service update periods.

The highest availability comes from deploying your instances in different datacenters. The datacenters should be far enough apart to absorb not only a datacenter failure but also a natural disaster. If you use the U.S. datacenters of a cloud platform, consider using Europe for failover to prevent service disruption.
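From the caller's point of view, multi-datacenter replication boils down to trying the next region when the preferred one is unreachable. The following Python sketch shows that idea only. `call_with_failover`, `AllRegionsFailed`, and the region names in the usage note are hypothetical, and cloud platforms usually perform this routing for you through a traffic manager or global load balancer.

```python
from typing import Callable, List, Sequence


class AllRegionsFailed(Exception):
    """Raised when no configured region could serve the request."""


def call_with_failover(regions: Sequence[str],
                       send: Callable[[str], str]) -> str:
    """Try each region in priority order and return the first good response."""
    errors: List[str] = []
    for region in regions:
        try:
            return send(region)
        except ConnectionError as exc:
            # Remember why this region failed, then fall through to the next.
            errors.append(f"{region}: {exc}")
    raise AllRegionsFailed("; ".join(errors))
```

Calling `call_with_failover(["us-east", "west-europe"], send)` keeps customers served from Europe while the U.S. datacenter is down.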

Customers also use hybrid cloud solutions. If your company has its own datacenter and is considering migrating to a public cloud platform, or has a customized cloud solution, you should consider subscribing to a backup plan. Cloud platforms offer backup plans on their own infrastructure with an SLA for availability. You pay a small fee every month and they provide an availability option from their datacenters. If your application crashes on your premises, your customers continue to access the service from the cloud. These services are available not only for web applications but also for complete virtual machine migrations. This lets your solution make the most of the hybrid environment and provide the availability that your customers expect from you.

If your software fails and you do not have a backup plan, you should at least have graceful exception handling in your application. ASP.NET Core14 lets you handle exceptions gracefully and show a helpful message to the customers. An application should also return the matching status code to the browser, so that the browser knows what happened as well. This helps customers with accessibility requirements better understand what happened. If you return a success status but the on-screen message says that something failed, screen readers might miss the context and mislead the customers. Your UX should be consistent for exceptions as well as for successful execution of the code.
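The point about keeping the status code and the message consistent can be illustrated with a small wrapper. This is a framework-neutral Python sketch, not ASP.NET Core's own exception-handling API. `safe_handler` and the messages are hypothetical, and mapping `LookupError` to 404 is just one possible convention.

```python
from typing import Callable, Tuple


def safe_handler(handler: Callable[[], str]) -> Callable[[], Tuple[int, str]]:
    """Wrap a handler so that failures yield a matching status code and message."""

    def wrapped() -> Tuple[int, str]:
        try:
            return 200, handler()
        except LookupError:
            # A missing resource is reported as 404, never as a "successful" page.
            return 404, "We could not find what you were looking for."
        except Exception:
            # Internal details stay out of the response; they belong in the logs.
            return 500, "Something went wrong on our side. Please try again later."

    return wrapped
```

The status and the text always agree, so a browser, a screen reader, and a monitoring tool all reach the same conclusion about the request.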

.NET Core has a vast ecosystem of logging solution providers. One of the oldest is ELMAH15. You should use a tool like it to track all the exceptions and errors happening in your system. A brief report of the exception and other metrics helps you perform a suitable post-mortem of the problems in your application. DevSecOps recommends a quick response to a bug or an exception, so work on the exception as soon as it shows up in your application’s metrics and analytics reports. You can use Azure Application Insights or Google Analytics to track your online solutions, and Firebase or Visual Studio App Center to manage the mobile apps built with Xamarin.Forms. All these online solutions provide reports that show you what went wrong, so that you can improve the application and prevent the exception from happening again.
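As a rough sketch of what such tools capture, the following Python example records every failure together with its stack trace and a piece of context before letting the error continue. It uses only Python's standard `logging` module. `ListHandler`, `run_and_record`, and the logger name are hypothetical, and a real setup would ship the records to a service such as the ones named above instead of keeping them in memory.

```python
import logging
from typing import Callable, List


class ListHandler(logging.Handler):
    """Keeps log records in memory; a stand-in for a real error-tracking sink."""

    def __init__(self) -> None:
        super().__init__()
        self.records: List[logging.LogRecord] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.records.append(record)


logger = logging.getLogger("postmortem-sketch")
logger.setLevel(logging.ERROR)
logger.propagate = False
handler = ListHandler()
logger.addHandler(handler)


def run_and_record(task: Callable[[], object], context: str) -> object:
    """Run a task; on failure, log the exception with its context and re-raise."""
    try:
        return task()
    except Exception:
        # logger.exception attaches the active exception and its traceback.
        logger.exception("task failed", extra={"context": context})
        raise
```

Each stored record then answers the first post-mortem questions: what failed, where in the code, and during which operation.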

Infrastructure Rollbacks

So, you have faced a problem with your system. Maybe the problem came from a recent update you made. If you integrated the cloud platform with a DevOps and IaC tool, such as Terraform, you can always roll the system back.

Most cloud platforms let you perform a rollback as needed on their service. You should not rely solely on these platform-specific products and features, because they can change at any time. Microsoft Azure supports Terraform as an alternative to its own ARM templates, and the features Terraform provides, its community support, and the range of cloud platforms it targets all make it a suitable product for IaC purposes.

If you want to get more technical about deployments and their history, you should read about how Kubernetes16 enables rollbacks for the deployments that are made on its platform. Note that you can also manually specify the image tag to pull a specific container image and refresh the deployment. You can perform this action on other platforms as well; we discussed the Azure App Service platform, which supports Docker containers. Always choose automatic updates and operations over manual tasks and configurations. Manual handling of the infrastructure is prone to errors and likely to cause system failures, crashes, and service disruptions. Manual handling is also less auditable and harder to check for underlying problems. Automatic operations are more likely to be verified by CI servers and are easier to roll back when they cause a problem. Version control systems such as Git let us tag a specific point in history and label it. We can use these labels to describe what that state does and move back (or forward) in time as needed. Rollbacks become easier and can be automated.
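The idea of moving back and forward between labeled states can be modeled in a few lines. The Python sketch below is a toy model and not how Kubernetes, Terraform, or Git implement it. `ReleaseHistory` and the tags used in the usage note are hypothetical. The one design choice worth noting is that a rollback is recorded as a new deployment, so the history stays complete and auditable.

```python
from typing import List, Tuple


class ReleaseHistory:
    """Tracks which tagged versions were deployed, in order."""

    def __init__(self) -> None:
        self._deployed: List[str] = []

    def deploy(self, tag: str) -> None:
        """Record that the version labeled `tag` is now live."""
        self._deployed.append(tag)

    @property
    def current(self) -> str:
        if not self._deployed:
            raise LookupError("nothing has been deployed yet")
        return self._deployed[-1]

    @property
    def history(self) -> Tuple[str, ...]:
        return tuple(self._deployed)

    def rollback_to(self, tag: str) -> None:
        """Redeploy a previously released tag; unknown tags are rejected."""
        if tag not in self._deployed:
            raise LookupError(f"tag {tag!r} was never deployed")
        # Appending instead of deleting keeps the audit trail intact.
        self._deployed.append(tag)
```

Deploying `v1.0` and `v1.1` and then calling `rollback_to("v1.0")` leaves `v1.0` live while the history still shows that `v1.1` was tried.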

Summary

Your infrastructure contains resources beyond your .NET Core code, and you are responsible for securing those services and products as well. Some of these services run alongside or on top of your .NET Core application, so securing your own code is not enough. Improving the infrastructure’s security and performance requires an understanding of different computer science concepts; trust me, nobody knows them all, and everyone Googles the solutions every now and then.

Our hosting environments use Docker containers or a similar technology to improve the developer experience. Many cloud platforms have Docker and other services integrated into their platform to provide a first-class experience to the development and operations teams. Although cloud vendors make security and privacy their top concern, it is your responsibility to make sure the hosting environment is secure and protected from external attacks. You might need to purchase a subscription if your cloud vendor requires one. The solutions range from basic rate limiting and load balancing, to SQL injection and XSS prevention, to SSL and encryption across your infrastructure.

If your application resides in a secure environment, it can better deliver the services that it promises. There are services that target different important areas of software production. If you are building APIs and hosting them on the cloud, you can create an integrated experience (like a marketplace for your APIs) on the cloud for your customers. This gives your customers a central place to learn about your APIs and try them out.

I also mentioned how you should keep your resources out of public repositories and away from public access. This applies directly to mobile and other handheld devices. If you are developing applications for Android or iOS, you must never hard-code API keys in the application, whether they belong to external or custom APIs. Your applications are likely to be reverse-engineered, and a reverse-engineered application is likely to expose its API keys in the process. An attacker can then use those API keys to make requests to the server.

In the end, I discussed how you can improve the system’s performance, availability, and replication with public cloud, private cloud, or hybrid cloud approaches. Hybrid cloud approaches are the most practical option for many businesses today, because not every business is ready to move its solutions entirely to the cloud, given the complex architectures of cloud-native solutions.

Now that you know how to improve the production environment, the next chapter discusses compliance and other regulations that apply to your perfect solution! Developing a solution and building it with your favorite IDE is not enough to publish it to the market. You need to make sure that it is ready to serve customers in the markets that you plan to target. Compliance with regulations such as GDPR requires a lot of developer time (especially if you are an individual), and you should take some time to understand what they mean for you and your application.