In this chapter, our last on the asynchronous shared memory model, we introduce atomic objects. An atomic object of a particular type is very much like an ordinary shared variable of that same type. The difference is that an atomic object can be accessed concurrently by several processes, whereas accesses to a shared variable are assumed to occur indivisibly. Even though accesses are concurrent, an atomic object ensures that the processes obtain responses that make it look like the accesses occur one at a time, in some sequential order that is consistent with the order of invocations and responses. Atomic objects are also sometimes called linearizable objects.

In addition to the atomicity property, most atomic objects that have been studied satisfy interesting fault-tolerance conditions. The strongest of these is the wait-free termination condition, which says that any invocation on a non-failing port eventually obtains a response. This property can be weakened to require such responses only if all the failures are confined to a designated set I of ports or to a certain number f of ports. The only types of failures we consider in this chapter are stopping failures.

Atomic objects have been suggested as building blocks for the construction of multiprocessor systems. The idea is that you should begin with basic atomic objects, such as single-writer/single-reader read/write atomic objects, which are simple enough to be provided by hardware. Then starting from these basic atomic objects, you could build successively more powerful atomic objects. The resulting system organization would be simple, modular, and provably correct. The problem, as yet unresolved, is to build atomic objects that provide sufficiently fast responses to be useful in practice.

Atomic objects are indisputably useful, however, as building blocks for asynchronous network systems. There are many distributed network algorithms that are designed to provide the user with something that looks like a centralized, coherent shared memory. Formally, many of these can be viewed as distributed implementations of atomic objects. We will see some examples of this phenomenon later, in Sections 17.1 and 18.3.3.

In Section 13.1, we provide the formal framework for the study of atomic objects. That is, we define atomic objects and give their basic properties, in particular, results about their relationship to shared variables of the same type and results indicating how they can be used in system construction.

Then in the rest of the chapter, we give algorithms for implementing particular types of atomic objects in terms of other types of atomic objects (or, equivalently, in terms of shared variables). The types of atomic objects we consider are read/write objects, read-modify-write objects, and snapshot objects. The results we present are only examples—there are many more such results in the research literature, and there is still much more research to be done.

13.1 Definitions and Basic Results

We first define atomic objects and their basic properties, then give a construction of a canonical wait-free atomic object of a given type, and then prove some basic results about composing atomic objects and about substituting them for shared variables in shared memory systems. These results can be used to justify the hierarchical construction of atomic objects from other atomic objects.

Many of the notions in this section are rather subtle. They are important, however, not only for the results in this chapter, but also for material involving fault-tolerance in Chapters 17 and 21. So we will go slowly here and present the ideas somewhat more formally than usual. On a first reading, you might want to skip the proofs and only read the definitions and results. In fact, you might want to start by reading only the definitions in Section 13.1.1, then skipping forward to Section 13.2 and referring back to this section as necessary.

13.1.1 Atomic Object Definition

The definition of an atomic object is based on the definition of a variable type from Section 9.4. You should reread that section now. In particular, recall that a variable type consists of a set V of values, an initial value v0, a set of invocations, a set of responses, and a function f : invocations ![]() V → responses

V → responses ![]() V. This function f specifies the response and new value that result when a particular invocation is made on a variable with a particular value.

V. This function f specifies the response and new value that result when a particular invocation is made on a variable with a particular value.

Also recall that the executions of a variable type are the finite sequences v0, a1, b1, v1, a2, b2, v2,…, vr and infinite sequences v0, a1, b1, v1, a2, b2, v2,…, where the a’s and b’s are invocations and responses, respectively, and adjacent quadruples are consistent with f. Also, the traces of a variable type are the sequences of a’s and b’s that are derived from executions of the type.

If ![]() is a variable type, then we define an atomic object A of type

is a variable type, then we define an atomic object A of type ![]() to be an I/O automaton (using the general definition of an I/O automaton from Chapter 8) satisfying a collection of properties that we describe in the next few pages. In particular, it must have a particular type of external interface (external signature) and must satisfy certain “well-formedness,” “atomicity,” and liveness conditions.

to be an I/O automaton (using the general definition of an I/O automaton from Chapter 8) satisfying a collection of properties that we describe in the next few pages. In particular, it must have a particular type of external interface (external signature) and must satisfy certain “well-formedness,” “atomicity,” and liveness conditions.

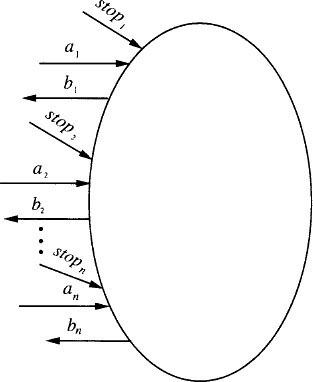

We begin by describing the external interface. We assume that A is accessed through n ports, numbered 1,…, n. Associated with each port i, A has some input actions of the form ai, where a is an invocation of the variable type, and some output actions of the form bi, where b is a response of the variable type. If ai is an input action, it means that a is an allowable invocation on port i, while if bi is an output action, it means that b is an allowable response on port i. We assume a technical condition: if ai is an input on port i and if f(a, v) = (b, w) for some v and w, then bi should be an output on port i. That is, if invocation a is allowed on port i, then all possible responses to a are also allowed on port i.

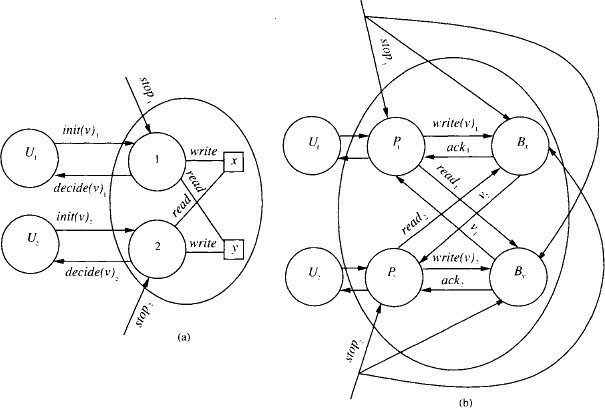

In addition, since we will consider the resiliency of atomic objects to stopping failures, we assume that there is an input stopi for each port i. The external interface is depicted in Figure 13.1.

Example 13.1.1 Read/write atomic object external interface

We describe an external interface for a 1-writer/2-reader atomic object for domain V. The object has three ports, which we label by 1, 2, and 3. Port 1 is a write port, supporting write operations only, while ports 2 and 3 are read ports, supporting read operations only. More precisely, associated with port 1 there are input actions of the form write(v)1 for all υ ∈ V and a single output action ack1. Associated with port 2 there is a single input action read2 and output actions of the form v2 for all υ ∈ V, and analogously for port 3. There are also stop1, stop2, and stop3 input actions, associated with ports 1, 2, and 3, respectively. The external interface is depicted in Figure 13.2.

Next, we describe the required behavior of an atomic object automaton A of a particular variable type ![]() . As in Chapters 10–12, we assume that A is composed with a collection of user automata Ui, one for each port. The outputs of Ui are assumed to be the invocations of A on port i, and the inputs of Ui are assumed to be the responses of A on port i. The stopi action is not part of the signature of Ui; it is assumed to be generated not by Ui, but by some unspecified external source.

. As in Chapters 10–12, we assume that A is composed with a collection of user automata Ui, one for each port. The outputs of Ui are assumed to be the invocations of A on port i, and the inputs of Ui are assumed to be the responses of A on port i. The stopi action is not part of the signature of Ui; it is assumed to be generated not by Ui, but by some unspecified external source.

The only other property we assume for Ui is that it preserve a “well-formedness” condition, defined as follows. Define a sequence of external actions of user Ui to be well-formed for user i provided that it consists of alternating invocations and responses, starting with an invocation. We assume that each Ui preserves well-formedness for i (according to the formal definition of “preserves” in Section 8.5.4). That is, we assume that the invocations of operations on each port are strictly sequential, each waiting for a response to the previous invocation. Note that this sequentiality requirement only refers to individual ports; we allow concurrency among the invocations on different ports.1 Throughout this chapter, we use the notation U to represent the composition of the separate user automata Ui, U = ΠUi.

We require that A ![]() U, the combined system consisting of A and U, satisfy several properties. First, there is a well-formedness condition similar to the ones used in Chapters 10, 11, and 12.

U, the combined system consisting of A and U, satisfy several properties. First, there is a well-formedness condition similar to the ones used in Chapters 10, 11, and 12.

Well-formedness: In any execution of A ![]() U and for any i, the interactions between Ui and A are well-formed for i.

U and for any i, the interactions between Ui and A are well-formed for i.

Since we have already assumed that the users preserve well-formedness, this amounts to saying that A also preserves well-formedness. This says that in the combined system A ![]() U, invocations and responses alternate on each port, starting with an invocation.

U, invocations and responses alternate on each port, starting with an invocation.

The next condition is the hardest one to understand. It describes the apparent atomicity of the operations, for a particular variable type T. Note that a trace of ![]() describes the correct responses to a sequence of invocations when all the operations are executed sequentially, that is, where each invocation after the first waits for a response to the previous invocation. The atomicity condition says that each trace produced by the combined system—which permits concurrent invocations of operations on different ports—“looks like” some trace of

describes the correct responses to a sequence of invocations when all the operations are executed sequentially, that is, where each invocation after the first waits for a response to the previous invocation. The atomicity condition says that each trace produced by the combined system—which permits concurrent invocations of operations on different ports—“looks like” some trace of ![]() .

.

The way of saying this formally is a little more complicated than you might expect, since we want a condition that makes sense even for executions of A ![]() U in which some of the invocations—the last ones on some ports—are incomplete, that is, have no responses. So we stipulate that each execution looks as if the operations that are completed and some of the incomplete ones are performed instantaneously at some points in their intervals.

U in which some of the invocations—the last ones on some ports—are incomplete, that is, have no responses. So we stipulate that each execution looks as if the operations that are completed and some of the incomplete ones are performed instantaneously at some points in their intervals.

In order to define atomicity for the system A ![]() U, we first give a more basic definition, of atomicity for a sequence of user actions. Namely, suppose that β is a (finite or infinite) sequence of external actions of A

U, we first give a more basic definition, of atomicity for a sequence of user actions. Namely, suppose that β is a (finite or infinite) sequence of external actions of A ![]() U that is well-formed

for every i (that is, for every i, β|ext(Ui) is well-formed for i). We say that β satisfies the atomicity property for

U that is well-formed

for every i (that is, for every i, β|ext(Ui) is well-formed for i). We say that β satisfies the atomicity property for ![]() provided that it is possible to do all of the following:

provided that it is possible to do all of the following:

- For each completed operation π, to insert a serialization point *π somewhere between π’s invocation and response in β.

- To select a subset Φ of the incomplete operations.

- For each operation π ∈ Φ, to select a response.

- For each operation π ∈ Φ, to insert a serialization point *π somewhere after π’s invocation in β.

These operations and responses should be selected, and these serialization points inserted, so that the sequence of invocations and responses constructed as follows is a trace of the underlying variable type ![]() :

:

For each completed operation π, move the invocation and response events appearing in β (in that order) to the serialization point *π. (That is, “shrink” the interval of operation π ∈ Φ to its serialization point.) Also, for each operation π, put the invocation appearing in β, followed by the selected response, at *π. Finally, remove all invocations of incomplete operations π ![]() Φ.

Φ.

Notice that the atomicity condition only depends on the invocation and response events—it does not mention the stop events. We can easily extend this definition to executions of A and of A ![]() U. Namely, suppose that α is any such execution that is well-formed for every i (that is, for every i, α|ext(Ui) is well-formed for i). Then we say that α satisfies the atomicity property for

U. Namely, suppose that α is any such execution that is well-formed for every i (that is, for every i, α|ext(Ui) is well-formed for i). Then we say that α satisfies the atomicity property for ![]() provided that its sequence of external actions, trace(α), satisfies the atomicity property for

provided that its sequence of external actions, trace(α), satisfies the atomicity property for ![]() .

.

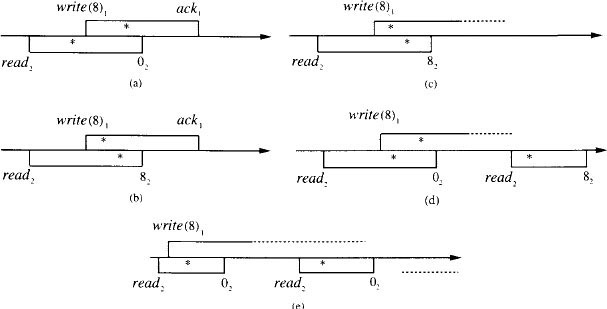

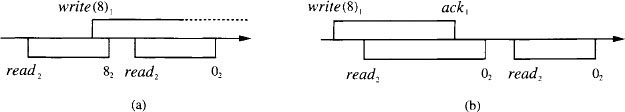

Example 13.1.2 Executions with serialization points

Figure 13.3 illustrates some executions of a single-writer/single-reader read/write object with domain V = ![]() and initial value v0 = 0 that satisfy the atomicity property for the read/write register variable type. The serialization points are indicated by stars. Suppose that ports 1 and 2 are used for writing and reading, respectively.

and initial value v0 = 0 that satisfy the atomicity property for the read/write register variable type. The serialization points are indicated by stars. Suppose that ports 1 and 2 are used for writing and reading, respectively.

In (a), a read operation that returns 0 and a write(8) operation overlap and the serialization point for the read is placed before that of the write(8). Then if the operation intervals are shrunk to their serialization points, the sequence of invocations and responses is read2, 02, write(8)1, ack1. This is a trace of the variable type. (See Example 9.4.4.)

In (b), the same operation intervals are assigned serialization points in the opposite order. The resulting sequence of invocations and responses is then write(8)1, ack1, read2, 82, again a trace of the variable type.

Each of the executions in (c) and (d) includes an incomplete write(8) operation. In each case, a serialization point is assigned to the write(8), because its result is seen by a read operation. For (c), the result of shrinking the operation intervals is write(8)1, ack1, read2, 82, whereas for (d), the sequence is read2, 02, write(8)1, ack1, read2, 82. Both are traces of the variable type. (Again, see Example 9.4.4.)

In (e), there are infinitely many read operations that return 0, and consequently the incomplete write(8) cannot be assigned a serialization point.

Figure 13.3: Executions of a single-writer/single-reader read/write object satisfying the atomicity property.

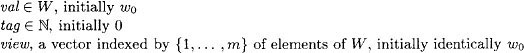

Example 13.1.3 Executions with no serialization points

Figure 13.4 illustrates some executions of a single-writer/single-reader read/write object that do not satisfy the atomicity property. In (a), there is no way to insert serialization points to explain the occurrence of a read that returns 8 followed by a read that returns 0. In (b), there is no way to explain the occurrence of a read that returns 0, after the completion of a write of 8.

Figure 13.4: Executions of a single-writer/single-reader read/write object that do not satisfy the atomicity property.

Now we are (finally) ready to define the atomicity condition for the combined system A ![]() U.

U.

Atomicity: Let α be a (finite or infinite) execution of A ![]() U that is well-formed for every i. Then α satisfies the atomicity property (as defined just before Example 13.1.2).

U that is well-formed for every i. Then α satisfies the atomicity property (as defined just before Example 13.1.2).

We can also express the atomicity condition in terms of a trace property (see the definition of a trace property in Section 8.5.2). Namely, define the trace property P so that its signature sig(P) is the external interface of A ![]() U and its trace set traces(P) is exactly the set of sequences that satisfy both of the following:

U and its trace set traces(P) is exactly the set of sequences that satisfy both of the following:

- Well-formedness for every i

- The atomicity property for

(For convenience, we include the stop actions in the signature of P, even though they are not mentioned in the well-formedness and atomicity conditions.) The interesting thing about P is that it is a safety property, as defined in Section 8.5.3. That is, traces(P) is nonempty, prefix-closed, and limit-closed. This is not obvious, because the atomicity property has a rather complicated definition, involving the existence of appropriate placements of serialization points and selections of operations and responses.

Theorem 13.1 P (the trace property defined above, expressing the combination of well-formedness and atomicity) is a safety property.

The proof of Theorem 13.1 uses König’s Lemma, a basic combinatorial lemma about infinite trees:

Lemma 13.2 (König’s Lemma) If G is an infinite tree in which each node has only finitely many children, then G has an infinite path from the root.

Proof Sketch (of Theorem 13.1). Nonemptiness is clear, since λ ∈ traces(P).

For prefix-closure, suppose that β ∈ traces(P) and let β’ be a finite prefix of β. Since β ∈ traces(P), it is possible to select a set Φ of incomplete operations, a set of responses for the operations in Φ, and a set of serialization points that together demonstrate the correctness of β. We show how to make such selections for β’.

Let γ denote the sequence obtained from β by inserting the selected serialization points. Let γ’ be the prefix of γ ending with the last element of β’. Then γ’ includes serialization points for all the complete operations in β’ and some subset of the incomplete operations in β′. Choose Φ’, the set of incomplete operations for β′, to consist of those incomplete operations in β′ that have serialization points in γ’. Choose a response for each operation π ∈ Φ’ as follows: If π is incomplete in β, that is, if π ∈ Φ, then choose the same response that is chosen for π in β. Otherwise choose the response that actually appears in β. Then it is not hard to see that the chosen set Φ′, its chosen responses, and the serialization points in γ′ together demonstrate the correctness of β′. This shows prefix-closure.

Finally, we show limit-closure. Consider an infinite sequence β and suppose that all finite prefixes of β are in traces(P). We use König’s Lemma.

The tree G that we construct in order to apply König’s Lemma describes the possible placements of serialization points in β. Each node of G is labelled by a finite prefix of β, with serialization points inserted for some subset of the operations that are invoked in β. We only include labels that are “correct” in the sense that they satisfy the following three conditions:

- Every completed operation has exactly one serialization point, and that serialization point occurs between the operation’s invocation and response.

- Every incomplete operation has at most one serialization point, and that serialization point occurs after the operation’s invocation.

- Every response to an operation π is exactly the response that is calculated for π using the function of the given variable type

at the serialization points. (Start with the initial value v0 and apply the function once for each serialization point, in order, with the corresponding invocation as the first argument. The response that is calculated for π is the response obtained when the function is applied for the serialization point *π.)

at the serialization points. (Start with the initial value v0 and apply the function once for each serialization point, in order, with the corresponding invocation as the first argument. The response that is calculated for π is the response obtained when the function is applied for the serialization point *π.)

- The label of the root is λ.

- The label of each non-root node is an extension of the label of its parent.

- The label of each non-root node ends with an element of β.

- The label of each non-root node contains exactly one more element of β than does the label of its parent node (and possibly some more serialization points).

Thus, at each branch point in G, a decision is made about which serialization points to insert, in which order, between two particular symbols in β. By considering the prefix-closure construction above, we can see that G can be constructed so that every finite prefix β′ of β, with every “correct” assignment of serialization points prior to the last symbol of β′, appears as the label of some node of G.

Now we apply König’s Lemma to the tree G. First, it is easy to see that each node of G has only finitely many children. This is because only operations that have already been invoked can have their serialization points inserted and there are only finitely many places to insert these serialization points.

Second, we claim that G contains arbitrarily long paths from the root. This is because every finite prefix β′ of the infinite sequence β is in traces(P), which means that β′ has an appropriate assignment of serialization points. This assignment yields a corresponding path in G of length |β′|.

Since G contains arbitrarily long paths from the root, it is infinite. Then König’s Lemma (Lemma 13.2) implies that G contains an infinite path from the root. The node labels on this path yield a correct selection of serialization points (and consequently, of incomplete operations and responses) for the entire sequence β. ![]()

Having defined the safety properties for atomic objects—well-formedness and atomicity—we now turn to liveness properties. The liveness properties we consider are termination conditions similar to those we gave for the agreement problem, in Section 12.1. The simplest requirement is for failure-free executions, that is, those executions in which no stop event occurs.

Failure-free termination: In any fair failure-free execution of A ![]() U, every invocation has a response.

U, every invocation has a response.

With this one liveness property, we can define “atomic objects.” Namely, we say that A is an atomic object of variable type ![]() if it guarantees the well-formedness condition, the atomicity condition for

if it guarantees the well-formedness condition, the atomicity condition for ![]() , and the failure-free termination condition, for all collections of users.

, and the failure-free termination condition, for all collections of users.

Note that if we wanted to consider only the failure-free case, then we could simplify the statement of the atomicity condition, because there would never be any need to consider incomplete operations. The reason we have given the more complicated statement of the atomicity condition is that we shall also consider failures.

As for the mutual exclusion problem in Section 10.2, it is possible to reformulate the entire definition of an atomic object equivalently in terms of a trace property P. This time, sig(P) includes all the external interface actions of the atomic object, including the stop actions as well as the invocation and response actions, and traces(P) expresses well-formedness, atomicity, and failure-free termination. Then an automaton A with the right interface is an atomic object of type ![]() exactly if, for all collections of users, fairtraces(A

exactly if, for all collections of users, fairtraces(A ![]() U)

U) ![]() traces(P).

traces(P).

We also consider some stronger termination conditions involving fault-tolerance.

Wait-free termination: In any fair execution of A ![]() U, every invocation on a non-failing port has a response.

U, every invocation on a non-failing port has a response.

That is, any port on which no failure occurs provides responses for all invocations, regardless of the failures that occur on any of the other ports. We generalize this property to describe termination in the presence of any number of failures.

f-failure termination, 0 ≤ f ≤ n: In any fair execution of A ![]() U in which stop events occur on at most f ports, every invocation on a non-failing port has a response.

U in which stop events occur on at most f ports, every invocation on a non-failing port has a response.

Failure-free termination and wait-free termination are the special cases of the f-failure termination condition where f is equal to 0 and n, respectively. A further generalization allows us to talk about the failure of any particular set of ports.

I-failure termination, I ![]() {1,…, n}: In every fair execution of A

{1,…, n}: In every fair execution of A ![]() U in which the only stop events occur on ports in I, every invocation on a non-failing port has a response.

U in which the only stop events occur on ports in I, every invocation on a non-failing port has a response.

Thus, f-failure termination is the same as I-failure termination for all sets I of ports of size at most f. We say that A guarantees wait-free termination, guarantees I-failure termination, and so on, provided that it guarantees the corresponding condition for all collections of users.

We close this section with a simple example of a shared memory system that is an atomic object.

Example 13.1.4 A read/increment atomic object

We define the read/increment variable type to have ![]() as its domain, 0 as its initial value, and read and increment as its operations.

as its domain, 0 as its initial value, and read and increment as its operations.

Let A be a shared memory system with n processes in which each port i supports both read and increment operations. A has n shared read/write registers x(i), 1 ≤ i ≤ n, each with domain ![]() and initial value 0. Shared variable x(i) is writable by process i and readable by all processes.

and initial value 0. Shared variable x(i) is writable by process i and readable by all processes.

When an incrementi input occurs on port i, process i simply increments its own shared variable, x(i). It can do this using only a write operation, by remembering the value of x(i) in its local state. When a readi occurs on port i, process i reads all the shared variables x(j) one at a time, in any order, and returns the sum.

Then it is not hard to see that A is a read/increment atomic object and that it guarantees wait-free termination. For example, to see the atomicity condition, consider any execution of A ![]() U. Let Φ be the set of incomplete increment operations for which a write occurs on a shared variable. For each increment operation π that is either completed or is in Φ, place the serialization point *π at the point of the write.

U. Let Φ be the set of incomplete increment operations for which a write occurs on a shared variable. For each increment operation π that is either completed or is in Φ, place the serialization point *π at the point of the write.

Now, note that any completed (high-level) read operation π returns a value v that is no less than the sum of all the x(i)’s when the read is invoked and no greater than the sum of all the x(i)’s when the read completes. Since each increment operation only increases this sum by 1, there must be some point within π’s interval at which the sum of the x(i)’s is exactly equal to the return value v. We place the serialization point *π at this point. These choices allow the shrinking needed to show atomicity.

13.1.2 A Canonical Wait-Free Atomic Object Automaton

In this subsection we give an example of an atomic object automaton C for a given variable type ![]() and given external interface. Automaton C guarantees wait-free termination. C is highly nondeterministic and is sometimes regarded as a “canonical wait-free atomic object automaton” for the given type and external interface. It can be used to help show that other automata are wait-free atomic objects.

and given external interface. Automaton C guarantees wait-free termination. C is highly nondeterministic and is sometimes regarded as a “canonical wait-free atomic object automaton” for the given type and external interface. It can be used to help show that other automata are wait-free atomic objects.

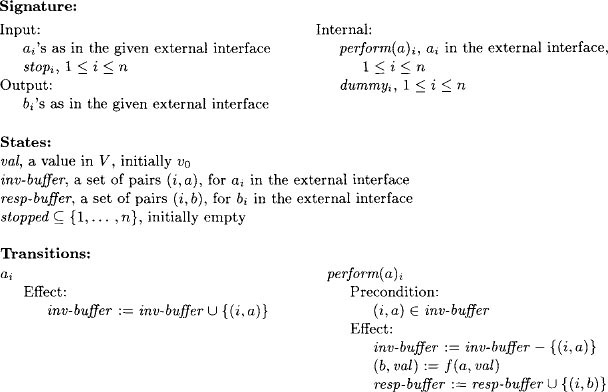

C automaton (informal):

C maintains an internal copy of a shared variable of type ![]() , initialized to the initial value v0. It also has two buffers, inv-buffer for pending invocations and resp-buffer for pending responses, both initially empty.

, initialized to the initial value v0. It also has two buffers, inv-buffer for pending invocations and resp-buffer for pending responses, both initially empty.

Finally, it keeps track of the ports on which a stop action has occurred, in a set stopped, initially empty.

When an invocation arrives, C simply records it in inv-buffer. At any time, C can remove any pending invocation from inv-buffer and perform the requested operation on the internal copy of the shared variable. When it does this, it puts the resulting response in resp-buffer. Also at any time, C can remove any pending response from resp-buffer and convey the response to the user.

A stopi event just adds i to stopped, which enables a special dummyi action having no effect. It does not, however, disable the other locally controlled actions involving i. All the locally controlled actions involving each port i, including the dummyi action, are grouped into one task. This means that after a stopi, actions involving i are permitted (but not required) to cease.

More precisely,

C automaton (formal):

Theorem 13.3 C is an atomic object with the given type and external interface, guaranteeing wait-free termination (for all collections of users).

Proof Sketch. Well-formedness is straightforward. To see wait-freedom, consider any fair execution α of C ![]() U and suppose that there are no failures on port i in α. Then the dummyi action is never enabled in α. The fairness of α then implies that every invocation on port i triggers a performi event and a subsequent response.

U and suppose that there are no failures on port i in α. Then the dummyi action is never enabled in α. The fairness of α then implies that every invocation on port i triggers a performi event and a subsequent response.

It remains to show atomicity. Consider any execution α of C ![]() U. Let Φ be the set of incomplete operations for which a perform occurs in α. Assign a serialization point *π to each operation π that is either completed in α or is in Φ: place *π at the point of the perform. Also, for each π ∈ Φ, select the response returned by the perform as the response for the operation. These choices allow the shrinking needed to show atomicity.

U. Let Φ be the set of incomplete operations for which a perform occurs in α. Assign a serialization point *π to each operation π that is either completed in α or is in Φ: place *π at the point of the perform. Also, for each π ∈ Φ, select the response returned by the perform as the response for the operation. These choices allow the shrinking needed to show atomicity. ![]()

C can be used to help verify that other automata are also wait-free atomic objects, as follows:

Theorem 13.4 Suppose that A is an I/O automaton with the same external interface as C. Suppose that fairtraces(A ![]() U)

U) ![]() fairtraces(C

fairtraces(C ![]() U) for every composition U of user automata. Then A is an atomic object guaranteeing wait-free termination.

U) for every composition U of user automata. Then A is an atomic object guaranteeing wait-free termination.

Proof Sketch. Follows from Theorem 13.3. For the well-formedness and atomicity, we use the fact that the combination of these two conditions is a safety property (Theorem 13.1), plus the fact that every finite trace can be extended to a fair trace (Theorem 8.7). The wait-freedom condition follows immediately from the definitions.

We also have a converse to Theorem 13.4, which says that every fair trace that is allowed for a wait-free atomic object is actually generated by C:

Theorem 13.5 Suppose that A is an I/O automaton with the same external interface as C. Suppose that A is an atomic object guaranteeing wait-free termination. Then fairtraces(A ![]() U)

U) ![]() fairtraces(C

fairtraces(C ![]() U), for every composition U of user automata.

U), for every composition U of user automata.

Proof. The proof is left as an exercise.

13.1.3 Composition of Atomic Objects

In this subsection, we give a theorem that says that the composition of atomic objects (using ordinary I/O automaton composition, defined in Section 8.2.1) is also an atomic object. Recall the definitions of compatible variable types and composition of variable types from the end of Section 9.4.

Theorem 13.6 Let {Aj}j∈J be a countable collection of atomic objects having compatible variable types {Tj}j∈J and all having the same set of ports {1,…, n}. Then the composition A = ∏ j∈J is an atomic object having variable type T = ∏j∈J Tj and having ports {1,…, n}.

Furthermore, if every Aj guarantees I-failure termination (for all collections of users), then so does A.

In atomic object A, port i handles all the invocations and responses that are handled on port i of any of the Aj. According to the definition of composition, the state of A has a piece for each Aj. The invocations and responses that are derived from Aj only involve the piece of the state of A associated with Aj. The stopi actions, however, affect all parts of the state. We leave the proof of Theorem 13.6 for an exercise.

13.1.4 Atomic Objects versus Shared Variables

The definition of an atomic object says that its traces “look like” traces of a sequentially accessed shared variable of the underlying type. What good is this?

The most important fact about atomic objects, from the point of view of system construction, is that it is possible to substitute them for shared variables in a shared memory system. This permits modular construction of systems: it is possible first to design a shared memory system and then to replace the shared variables by arbitrary atomic objects of the given types. Under certain circumstances, the resulting system “behaves in the same way” as the original shared memory system, as far as the users can tell.

In this section, we describe this substitution technique. First we give some technical conditions on the original shared memory system that are required for the replacement to work correctly. Next, we give the substitution construction. Finally, we define the sense in which the resulting system behaves in the same way as the original system and prove that, with the given conditions, the resulting system really does behave in the same way. Although the basic ideas are reasonably simple, there are a few details that have to be handled carefully in order to make the substitution technique work out right.

We begin with A, an arbitrary algorithm in the shared memory model of Chapter 9. We assume that A interacts with user automata Ui, 1 ≤ i ≤ n. We permit each process i of A to have any number of tasks. We also include stopi actions, as discussed in Section 9.6, and assume that each stopi event permanently disables all the tasks of process i.

Now for the technical conditions we mentioned above. Consider A in combination with any collection of user automata Ui. We assume that for each port i, there is a function turni that, for any finite execution α of the combined system, yields either the value system or user. This is supposed to indicate whose turn it is to take the next step, after α. Specifically, we require that if turni(α) = system, then Ui has no output step enabled in its state after α, while if turni(α) = user, then process i of A has no output or internal step, that is, no locally controlled step, enabled in its state after α.

For example, all the mutual exclusion algorithms in Chapter 10 and all the resource-allocation algorithms in Chapter 11 satisfy these conditions (if we add the stop actions). In those cases, turni(α) = system for any α after which Ui is in the trying or exit region, and turni(α) = user if Ui is in the critical or remainder region. In fact, the required conditions are implied by the restriction on process activity assumed near the end of Section 10.2 and at the end of Section 11.1.2.

For consensus algorithms, as studied in Chapter 12, we may define turni(α) = system for any α that contains an initi event, and turni(α) = user otherwise. Then to satisfy the conditions we need here, we would have to add a restriction, namely, that process i cannot do anything before an initi occurs. This condition is satisfied by the only algorithm in Chapter 12, RMWAgreement.

Now we give the substitution. Suppose that for each shared variable x of A, we are given an atomic object automaton Bx of the same type and the appropriate external interface. That is, Bx has ports 1,…, n, one for each process of A. On each port, it allows all invocations and responses that are used by process i in its interactions with shared variable x in algorithm A. It also has stopi inputs, one for each port, as usual.

Then we define Trans(A), the transformed version of A that uses the atomic objects Bx in place of its shared variables, to be the following automaton:

Trans(A) automaton:

Trans(A) is a composition of I/O automata, one for each process i and one for each shared variable x of algorithm A. For each variable x, the automaton is the atomic object automaton Bx. For each process i, the automaton is Pi, defined as follows.

The inputs of Pi are the inputs of A on port i plus the responses of each Bx on port i plus the stopi action. The outputs of Pi are the outputs of A on port i plus the invocations for each Bx on port i.

Pi’s steps simulate those of process i of A directly, with the following exceptions: When process i of A performs an access to shared variable x, Pi instead issues the appropriate invocation to Bx. After it does this, it suspends its activity, awaiting a response by Bx to the invocation. When a response arrives, Pi resumes simulating process i of A as usual. There is a task of Pi corresponding to each task of process i of A.

If a stopi event occurs, all tasks of Pi are thereafter disabled.

Example 13.1.5 A and Trans(A)

Consider a two-process shared memory system A that is supposed to solve some sort of consensus problem, using two read/write shared variables, x and y. We assume that process 1 writes x and reads y, and process 2 writes y and reads x. The interface between each Ui and A consists of actions of the form init(v)i, which are outputs of Ui and inputs of A, and actions of the form decide(v)i, which are outputs of A and inputs of Ui. In addition, stopi, i ∈ {1, 2} is an input of A. The architecture of this system is depicted in Figure 13.5, part (a).

The architecture of the transformed system Trans(A) is depicted in part (b). Note the external interfaces of the automata Bx and By. For example, Bx has inputs write(v)1 and read2 and outputs ack1 and v2.2 Bx also has inputs stop1 and stop2, which are identified with the stop1 input to P1 and the stop2 input to P2, respectively. This means, for example, that stop1 simultaneously disables all tasks of P1 and also has whatever effect stop1 has on the implementation Bx.

Now we give a theorem describing what is preserved by transformation Trans. Theorem 13.7 first describes conditions that hold for any execution α of Trans(A). Execution α does not have to be fair for these conditions to hold. These conditions say that α looks to the users like an execution α′ of A. Moreover, the same stop events occur in α and α′, although we allow for the possibility that the stop events could occur in different positions in the two executions.

Theorem 13.7 then goes on to identify some conditions under which the simulated execution α′ of the A system is guaranteed to be a fair execution. As you would expect, one of the conditions is that α is itself a fair execution of the Trans(A) system. But this is not enough—we also need to make sure that the object automata Bx do not cause processing to stop. So we include two other conditions that together ensure that this does not happen, namely, that all the failures that occur in α are confined to a particular set I of ports and that all the object automata Bx can tolerate failures on I (formally, they guarantee I-failure termination).

Theorem 13.7 Suppose that α is any execution of the system Trans(A) ![]() U. Then there is an execution α′ of A

U. Then there is an execution α′ of A ![]() U such that the following conditions hold:

U such that the following conditions hold:

- α and α′ are indistinguishable3 to U.

- For each i, a stopi occurs in α exactly if a stopi occurs in α′.

Moreover, if α is a fair execution, if every i for which stopi appears in α is in I, and if every Bx guarantees I-failure termination (for all collections of users), then α′ is also a fair execution.

Proof Sketch. We modify α to get α′ as follows. First, since each Bx is an atomic object, we can insert a serialization point *π in α between the invocation and response of each completed operation π on Bx and also after the invocation of each of a subset Φ of the incomplete operations on Bx. We also obtain responses for all the operations in Φ. These serialization points and responses can be guaranteed to satisfy the “shrinking” property described in the atomicity condition.

Next, we move the invocation and response events for each completed operation π on Bx so that they are adjacent and occur exactly at *π. Also, for each incomplete operation π in Φ—that is, each incomplete operation that has been assigned a serialization point—we place the invocation, together with the newly manufactured response, at *π. And for each incomplete operation that is not in Φ—that is, each incomplete operation that has not been assigned a serialization point—we simply remove the invocation event. There is one additional technicality: if any stopi event in α occurs after an invocation by process i and before the serialization point to which the invocation is moved, then that stopi event is also moved to the serialization point, just after the invocation and response. We move, add, and remove events in this way for all shared variables x.

We claim that it is possible to move all the events that we have moved in this construction without changing the order of events of any Pi (with one technical exception: a response to Pi by some Bx may be moved ahead of a stopi). This follows from two facts. First, by construction, Pi performs no locally controlled actions while it is waiting for a response to an invocation. And second, while Pi is waiting for a response, it is the system’s turn to take steps. This means that Ui will not perform any output steps, so Pi will receive no inputs.

Similarly, we claim that we can add the responses we have added and remove the invocations we have removed in this construction without otherwise affecting the behavior of Pi. This is because if Pi performs an incomplete operation in α, it does not do anything after that operation. It does not matter if Pi stops just before issuing the invocation, while waiting for a response, or just after receiving the response.

Since we have not changed anything significant by this motion, addition, and removal of events, we can simply fill in the states of the processes Pi as in α. (A technical exception: A response to Pi moved before a stopi might cause a different change in the state of Pi than it did in α.) The result is a new execution, α1, also of the system Trans(A) ![]() U. Moreover, it is clear that α and α1 are indistinguishable to U and have stop events for the same ports.

U. Moreover, it is clear that α and α1 are indistinguishable to U and have stop events for the same ports.

Now, α1 is an execution of Trans(A) ![]() U, which is not exactly what we need; rather, we need an execution of the system A

U, which is not exactly what we need; rather, we need an execution of the system A ![]() U. But notice that in α1, all the invocations and responses for the object automata Bx occur in consecutive matching pairs. So we replace those pairs by instantaneous accesses to the corresponding shared variables and thereby obtain an execution α′ of the system A

U. But notice that in α1, all the invocations and responses for the object automata Bx occur in consecutive matching pairs. So we replace those pairs by instantaneous accesses to the corresponding shared variables and thereby obtain an execution α′ of the system A ![]() U. Then α and α′ are indistinguishable to U and have stop events for the same ports. This proves the first half of the theorem.

U. Then α and α′ are indistinguishable to U and have stop events for the same ports. This proves the first half of the theorem.

For the second half, suppose that α is a fair execution of Trans(A) ![]() U, that I

U, that I ![]() {1,…, n}, that every i for which stopi appears in α is in I, and that each Bx guarantees I-failure termination. Then the only stopi inputs received by any Bx must be for ports i ∈ I. Thus, since every Bx guarantees I-failure termination, it must be that every Bx provides responses for every invocation by a process Pi for which no stopi event occurs in α. This fact, combined with the fairness assumption for processes Pi, is enough to imply that α′ is a fair execution of A

{1,…, n}, that every i for which stopi appears in α is in I, and that each Bx guarantees I-failure termination. Then the only stopi inputs received by any Bx must be for ports i ∈ I. Thus, since every Bx guarantees I-failure termination, it must be that every Bx provides responses for every invocation by a process Pi for which no stopi event occurs in α. This fact, combined with the fairness assumption for processes Pi, is enough to imply that α′ is a fair execution of A ![]() U.

U.

Thus, Theorem 13.7 implies that any algorithm for the shared memory model (with some simple restrictions) can be transformed to work with atomic objects instead of shared variables and that the users cannot tell the difference.

We give as a corollary the special case of Theorem 13.7 where the atomic objects Bx all guarantee wait-free termination. In this case, we can conclude that α′ is fair just by assuming that α is fair.

Corollary 13.8 Suppose that all the Bx guarantee wait-free termination. Suppose that α is any fair execution of Trans(A) x U. Then there is a fair execution α′ of A x U such that the following conditions hold:

- α and α′ are indistinguishable to U.

- For each i, a stopi occurs in α exactly if a stopi occurs in α′.

Proof. Immediate from Theorem 13.7, letting I = {1,…, n}.

In the special case where A is itself an atomic object, Theorem 13.7 implies that Trans(A) is also an atomic object. Including failure considerations, we obtain the following corollary.

Corollary 13.9 Suppose that A and all the Bx’s are atomic objects guaranteeing I-failure termination. Then Trans(A) is also an atomic object guaranteeing I-failure termination.

Proof. First let α be any execution of Trans(A) and a collection of users Ui. Then Theorem 13.7 yields an execution α′ of A ![]() U such that α and α′ are indistinguishable to U. Since A is an atomic object, α′ satisfies the well-formedness and atomicity properties. Since both of these are properties of the external interface of U, and α and α′ are indistinguishable to U, α also satisfies the well-formedness and atomicity properties.

U such that α and α′ are indistinguishable to U. Since A is an atomic object, α′ satisfies the well-formedness and atomicity properties. Since both of these are properties of the external interface of U, and α and α′ are indistinguishable to U, α also satisfies the well-formedness and atomicity properties.

It remains to consider the I-failure termination condition. Let α be any fair execution of Trans(A) and a collection of users Ui such that every i for which stopi appears in α is in I. Since all the Bx guarantee I-failure termination, Theorem 13.7 yields a fair execution α′ of A ![]() U, such that α and α′ are indistinguishable to U and α and α′ contain stop events for the same set of ports. Thus, every i for which stopi appears in α′ is in I.

U, such that α and α′ are indistinguishable to U and α and α′ contain stop events for the same set of ports. Thus, every i for which stopi appears in α′ is in I.

Now consider any invocation in α on a port i for which no stopi event occurs in α—that is, on a non-failing port. Since α and α′ are indistinguishable to U, the same invocation appears in α′. Because A guarantees I-failure termination, there is a corresponding response event in α′. Then, since α and α′ are indistinguishable to U, this response also appears in α. This is enough to show I-failure termination.

Hierarchical construction of shared memory systems. In the special case where each atomic object Bx is itself a shared memory system, we claim that Trans(A) can also be viewed as a shared memory system. Namely, each process i of Trans(A) (viewed as a shared memory system) is a combination of process Pi of Trans(A) and the processes indexed by i in all of the shared memory systems Bx. This combination is not exactly an I/O automaton composition, because the processes in the Bx’s are not I/O automata. However, the combination is easy to describe: the state set of process i of Trans(A) is just the Cartesian product of the state set of Pi and the state sets of all the processes indexed by i in all the Bx’s, and likewise for the start states. The actions associated with process i of Trans(A) are just the actions of all the component processes i, and similarly for the tasks.

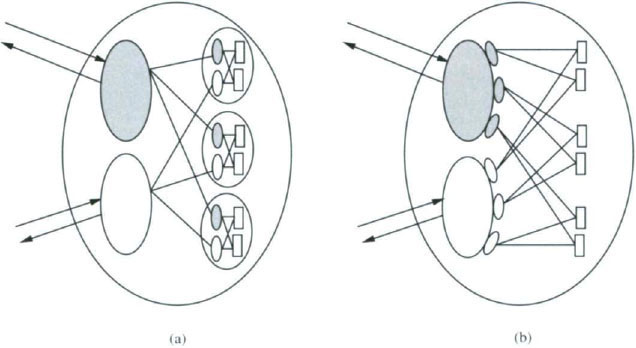

The situation is depicted in Figure 13.6. Part (a) shows Trans(A), including the shared memory systems Bx plugged in for all the shared variables x of A. (For simplicity, we have not drawn the stop input arrows.) All the shaded processes are associated with port 1. Part (b) shows the same system as in part (a), with the processes that are to be combined grouped together. Thus, all the shaded processes from part (a) are now combined into a single process 1 in part (b).

By the definition of Trans(A), the effect of a stopi event in the system of part (a) is to immediately stop all tasks of all the processes associated with port i—the tasks of Pi as well as the tasks of all the processes i of the Bx’s. This is the same as saying that stopi stops all tasks of the composed process i in the system of part (b), which is just what stopi is supposed to do when that system is regarded as a shared memory system.

Hierarchical construction of atomic objects. Finally, consider the very special case where shared memory system A is an atomic object guaranteeing I-failure termination and each atomic object Bx is a shared memory system that guarantees I-failure termination. Then Corollary 13.9 and the previous paragraph imply that Trans(A) is an atomic object guaranteeing I-failure termination and also that it is a shared memory system. This observation says that two successive layers of atomic object implementations in the shared memory model can be collapsed into one.

13.1.5 A Sufficient Condition for Showing Atomicity

Before presenting specific atomic object constructions, we give a sufficient condition for showing that a shared memory system guarantees the atomicity condition. This lemma enables us to avoid reasoning explicitly about incomplete operations in many of our proofs that objects are atomic.

For this lemma, we suppose that A is a shared memory system with an external interface appropriate for an atomic object for variable type ![]() . Also, we suppose that Ui, 1 ≤ i ≤ n, is any collection of users for A; as usual, U = Π Ui.

. Also, we suppose that Ui, 1 ≤ i ≤ n, is any collection of users for A; as usual, U = Π Ui.

Lemma 13.10 Suppose that the combined system A x U guarantees well-formedness and failure-free termination. Suppose that every (finite or infinite) execution α of A x U containing no incomplete operations satisfies the atomicity property. Then the same is true for every execution of A x U, including those with incomplete operations.

Proof. Let α be an arbitrary finite or infinite execution of the combined system A x U, possibly containing incomplete operations. We must show that α satisfies the atomicity property, that is, that α|ext(U) satisfies the atomicity property.

If α is finite, then the handling of stop events in a shared memory system implies that there is a finite failure-free execution α1, obtained by removing the stop events from α (and possibly modifying some state changes associated with inputs at ports on which a stop has occurred), such that α1|ext(U) = α|ext(U). By basic properties of I/O automata (in particular, Theorem 8.7), α1 can be extended to a fair failure-free execution α2 of A x U. Since A guarantees failure-free termination, every operation in α2 is completed. Then, by assumption, α2 satisfies the atomicity property, that is, α2|ext(U) satisfies the atomicity property. But α1|ext(U) is a prefix of α2|ext(U). Since, by Theorem 13.1, atomicity combined with well-formedness is a safety property and hence is prefix-closed, it follows that α1|ext(U) satisfies the atomicity property. Since α|ext(U) = α1|ext(U), we have that α|ext(U) satisfies the atomicity property, as needed.

On the other hand, suppose that α is infinite. By what we have just proved, any finite prefix α1 of α has the property that α1|ext(U) satisfies the atomicity property. But α|ext(U) is just the limit of the sequences of the form α1|ext(U). Since, by Theorem 13.1, atomicity combined with well-formedness is a safety property and hence is limit-closed, it follows that α|ext(U) satisfies the atomicity property, as needed.

13.2 Implementing Read-Modify-Write Atomic Objects in Terms of Read/Write Variables

We consider the problem of implementing a read-modify-write atomic object in the shared memory model with read/write shared variables. (See Section 9.4 for the definition of a read-modify-write variable type.) To be specific, we fix an arbitrary n and suppose that the read-modify-write object being implemented has n ports, each of which can support arbitrary update functions as inputs.

If all we require is an atomic object and we are not concerned about tolerating failures, then there are simple solutions. For instance,

RMWfromRW algorithm:

The latest value of the read-modify-write variable corresponding to the object being implemented is kept in a read/write shared variable x. Using a set of read/write shared variables different from x, the processes perform the trying part of a lockout-free mutual exclusion algorithm (for example, PetersonNP from Section 10.5.2) whenever they want to perform operations on the atomic object. When a process i enters the critical region of the mutual exclusion algorithm, it obtains exclusive access to x. Then process i performs its read-modify-write operation using a read step followed by a separate write step. After completing these steps, process i performs the exit part of the mutual exclusion algorithm.

However, this algorithm is not fault-tolerant: a process might fail while it is in its critical region, thereby preventing any other process from accessing the simulated read-modify-write variable. In fact, this limitation is not an accident. We give an impossibility result, even for the case where only a single failure is to be tolerated.

Theorem 13.11 There does not exist a shared memory system using read/write shared variables that implements a read-modify-write atomic object and guarantees 1-failure termination.

Proof. Suppose for the sake of contradiction that there is such a system, say B. Let A be the RMWAgreement algorithm for agreement in the read-modify-write shared memory model, given in Section 12.3. By Theorem 12.9, A guarantees wait-free termination and hence guarantees 1-failure termination (as defined for agreement algorithms in Section 12.1). Now we apply the transformation of Section 13.1.4 to A, using B in place of the single shared read-modify-write variable of A. Let Trans(A) denote the resulting system.

Claim 13.12 Trans(A) solves the agreement problem of Chapter 12 and guarantees 1-failure termination.

Proof. The proof of this is similar to that of Corollary 13.9. First let α be any execution of Trans(A) and a collection of users Ui. Then Theorem 13.7 yields an execution α′ of A x U such that α and α′ are indistinguishable to U. Since A solves the agreement problem, α′ satisfies the well-formedness, agreement, and validity properties. Then since α and α′ are indistinguishable to U, α also satisfies the well-formedness, agreement, and validity properties.

It remains to consider the 1-failure termination condition. Let α be any fair execution of Trans(A) and a collection of users Ui, in which init events occur on all ports and in which there is a stop event for at most one port. Since B guarantees 1-failure termination, Theorem 13.7 yields a fair execution α′ of A x U such that α and α′ are indistinguishable to U and contain stop events for the same set of ports. Thus, init events occur on all ports in α′, and there is a stop event for at most one port in α′.

Now consider any port i with no stopi event in α. Since α and α′ contain stop events for the same ports, there is also no stopi event in α′. Because A guarantees 1-failure termination, there is a decidei event in α′. Then, since α and α′ are indistinguishable to U, this decidei also appears in α. This is enough to show 1-failure termination.

However, by the paragraph at the end of Section 13.1, Trans(A) is itself a shared memory system in the read/write shared memory model. But then Trans(A) contradicts Theorem 12.8, the impossibility of agreement with 1-failure termination in the read/write shared memory model.

13.3 Atomic Snapshots of Shared Memory

In the rest of this chapter, we consider the implementation of particular types of atomic objects in terms of other types of atomic objects, or, equivalently, in terms of shared variables. This section is devoted to snapshot atomic objects, and the next is devoted to read/write atomic objects.

In the read/write shared memory model, it would be useful for a process to be able to take an instantaneous snapshot of the entire state of shared memory. Of course, the read/write model does not directly provide this capability—it only permits reads on individual shared variables.

In this section, we consider the implementation of such a snapshot. We formulate the problem as that of implementing a particular type of atomic object called a snaphot atomic object, using the read/write shared memory model. The variable type underlying a snapshot atomic object has as its domain V the set of vectors of some fixed length over a more basic domain W. The operations are of two kinds: writes to individual vector components, which we call update operations, and reads of the entire vector, which we call snap operations. A snapshot atomic object can simplify the task of programming a read/write system by allowing the processes to view the entire shared memory as a vector accessible by these powerful operations.

We start with a description of the problem, then give a simple solution that uses read/write shared variables of unbounded size. Then we show how the construction can be modified to work with bounded-size shared variables. Section 13.4.5 contains an application of snapshot atomic objects in the implementation of read/write atomic objects.

13.3.1 The Problem

We first define the variable type ![]() to which the snapshot atomic object will correspond; we call this a snapshot variable type.

to which the snapshot atomic object will correspond; we call this a snapshot variable type.

The definition begins with an underlying domain W with initial value ω0. The domain V of ![]() is then the set of vectors of elements of ω of a fixed length m. The initial value v0 is the vector in which every component has the value ω0. There are invocations of the form update(i, w), where 1 ≤ i ≤ m and ω∈ W, with response ack, and an invocation snap, with responses v ∈ V. An update(i, ω) invocation causes component i of the current vector to be set to the value ω and triggers an ack response. A snap invocation causes no change to the vector but triggers a response containing the current value of the entire vector.

is then the set of vectors of elements of ω of a fixed length m. The initial value v0 is the vector in which every component has the value ω0. There are invocations of the form update(i, w), where 1 ≤ i ≤ m and ω∈ W, with response ack, and an invocation snap, with responses v ∈ V. An update(i, ω) invocation causes component i of the current vector to be set to the value ω and triggers an ack response. A snap invocation causes no change to the vector but triggers a response containing the current value of the entire vector.

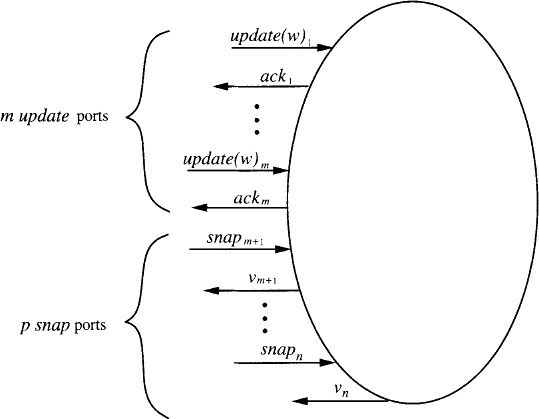

Next we define the external interface that we will consider. We assume that there are exactly n = m + p ports, where m is the fixed length of the vectors and p is some arbitrary positive integer. The first m ports are the update ports, and the remaining p ports are the snap ports. On each port i, 1 ≤ i ≤ m, we permit only invocations of the form update(i, w)—that is, only updates to the ith vector component are handled on port i. We sometimes abbreviate the redundant notation update(i, w)i, which indicates an invocation of update(i, w) on port i, as simply update(ω)i. On each port i, m + 1 ≤ i ≤ n, we permit only snap invocations. See Figure 13.7.

Notice that we are considering a special case of the general problem, where updates to each vector component arrive only at a single designated port and hence arrive sequentially. It is also possible to consider a more general case, where many ports allow updates to the same vector component. Of course, we could also consider the case where update and snap operations are allowed to occur on the same port.

We consider implementing the atomic object corresponding to this variable type and external interface using a shared memory system with n processes, one per port. We assume that all the shared variables are 1-writer/n-reader read/write shared variables. The implementations we describe guarantee wait-free termination.

13.3.2 An Algorithm with Unbounded Variables

The UnboundedSnapshot algorithm uses m 1-writer/n-reader read/write shared variables x(i), 1 ≤ i ≤ m. Each variable x(i) can be written by process i (the one connected to port i, which is the port for update(i, w) operations) and can be read by all processes. The architecture appears in Figure 13.8. Each variable x(i) holds values each of which consists of an element of ω plus some additional values needed by the algorithm. One of these additional values is an unbounded integer “tag.”

In the UnboundedSnapshot algorithm, each process i writes the values that it receives in updatei invocations into the shared variable x(i). A process performing a snap operation must somehow obtain consistent values from all the shared variables, that is, values that appear to have coexisted in the shared memory at some moment in time. The way it does this is based on two simple observations.

Observation 1: Suppose that whenever a process i performs an update(ω)i operation, it writes not only the value ω into x(i), but also a “tag” that uniquely identifies the update. Then, if a process j that is attempting to perform a snap operation reads all the shared variables twice, with the second set of reads starting after the first set of reads is finished, and if it finds the tag in each variable x(i) to be the same in the first and second set of reads, then the common vector of values returned in the two sets of reads is in fact a vector that appears in shared memory at some point during the interval of the snap operation. In particular, this vector is the vector of values at any point after the completion of the first set of reads and before the start of the second set.

Observation 1 suggests the following simple algorithm. Each process i performing an update(ω) operation writes ω into x(i), along with a unique local tag, obtained by starting with 1 for the first update at i and incrementing for each successive update at i.

Each process j performing a snap repeatedly performs a set of reads, one per shared variable, until two consecutive sets of reads are “consistent,” that is, they return identical tags for every x(i). When this happens, the vector of values returned by the second set of reads (which must be the same as that returned by the first set of reads) is returned as the response to the snap operation.

It is easy to see that whenever this simple algorithm completes an operation, the response is always “correct,” that is, it satisfies the well-formedness and atomicity conditions. However, it fails to guarantee even failure-free termination: a snap may never return, even in the absence of process failures, if new update operations keep getting invoked while the snap is active. A way out of this difficulty is provided by

Observation 2: If process j, while performing repeated sets of reads on behalf of a snap, ever sees the same variable x(i) with four different tags—say tag1, tag2, tag3, and tag4—then it knows that some updatei operation is completely contained within the interval of the current snap. In particular, the updatei operation that writes tag3 must be totally contained within the current snap.

To see why this is so, we argue first that the update that writes tag3 must begin after the beginning of the snap. This is because it begins after the end of the update that writes tag2, and the end of the update that writes tag2 must happen after the beginning of the snap interval (since the snap sees tag1).

Second, we argue that the update that writes tag3 must end before the end of the snap. This is because it ends before the beginning of the update that writes tag4, and the snap sees tag4.

Observations 1 and 2 suggest the UnboundedSnapshot algorithm. It extends the simple algorithm above so that before an update process i writes to x(i), it first executes its own embedded-snap subroutine, which is just like a snap. Then, when it writes its value and tag in x(i), it also places the result of its embedded-snap in x(i). A snap that fails to discover two sets of reads with identical tags despite many repeated attempts can use the result of an embedded-snap as a default snapshot value. A more careful description follows. In this description, each shared variable is a record with several fields; we use dot notation to indicate the fields.

UnboundedSnapshot algorithm:

Each shared variable x(i), 1 ≤ i ≤ m, is writable by process i and readable by all processes. It contains the following fields:

When a snapj input occurs on port j, m + 1 ≤ j ≤ n, process j behaves as follows. It repeatedly performs sets of reads, where a set consists of m reads, one read of each shared variable x(i), 1 ≤ i ≤ m, in any order. It does this until one of the following happens:

- Two sets of reads return the same x(i).tag for every i.

In this case, the snap returns the vector of values x(i).val, 1 ≤ i ≤ m, returned by the second set of reads. (This is the same as the vector returned by the first set of reads.)

- For some i, four distinct values of x(i).tag have been seen.

In this case, the snap returns the vector of values in x(i).view associated with the third of the four values of x(i).tag.

When an update(ω)i input occurs, process i behaves as follows. First, it performs an embedded-snap. This involves exactly the same work as is performed by a snap, except that the vector determined is recorded locally by process i instead of being returned to the user. Second, process i performs a single write to x(i), setting the three fields of x(i) as follows:

- x(i).val := ω

- x(i).tag is set to the smallest unused tag at i.

- x(i).view is set to the vector returned by the embedded-snap.

Finally, process i outputs acki.

Theorem 13.13 The UnboundedSnapshot algorithm is a snapshot atomic object guaranteeing wait-free termination.

Proof. The well-formedness condition is clear. Wait-free termination is also easy to see: the key is that every snap and every embedded-snap must terminate after performing at most 3m + 1 sets of reads. This is because after 3m + 1 sets of reads, there must either be two consecutive sets with no changes or else some variable x(i) with at least four different tags. In either of these two cases, the operation terminates.

It remains to show the atomicity condition. Fix any execution α of the UnboundedSnapshot algorithm plus users. In view of Lemma 13.10, we may assume without loss of generality that α contains no incomplete operations. We describe how to insert serialization points for all operations.

We insert the serialization point for each update operation at the point at which its write occurs. The insertion of serialization points for snap operations is a little more complicated. To describe this insertion, we find it helpful to assign serialization points not just to the snap operations but also to the embedded-snap operations.

First, consider any snap or embedded-snap that terminates by finding two consistent sets of reads. For each such operation, we insert the serialization point anywhere between the end of the first of its two sets of reads and the beginning of its second.

Second, consider those snap and embedded-snap operations that terminate by finding four different tags in the same variable. We insert serialization points for these operations one by one, in the order of their response events. For each such operation π, note that the vector it returns is the result of an embedded-snap ϕ whose interval is totally contained within the interval of operation π. Note that this operation ϕ has already been assigned a serialization point, since it completes earlier than π. We insert the serialization point for π at the same place as that for ϕ.

It is easy to see that all the serialization points are within the required intervals. For the update operations and for the snap and embedded-snap operations that terminate by finding two consistent sets of reads, this is obvious. For the snap and embedded-snap operations that terminate by finding four distinct tags, this can be argued by induction on the number of response events for such operations in α.

It remains to show that the result of shrinking the operation intervals to their respective serialization points is a trace of the underlying snapshot variable type. For this, first note that after any finite prefix α′ of α, there is a unique vector in V resulting from the write events in α′. Call this the correct vector after α′. It is enough to show that every snap operation returns the correct vector after the prefix of α up to the operation’s serialization point. More strongly, we argue that every snap and embedded-snap operation returns the correct vector for its serialization point.

This is clear for the operations that terminate by finding two consistent sets of reads. For the other snap and embedded-snap operations, we argue this by induction on the number of response events for such operations in α.

Complexity analysis. The UnboundedSnapshot algorithm uses m shared variables, each of which can take on an unbounded set of values. Even if the underlying domain ω is finite, the variables are still unbounded because of the unbounded tags. For time complexity, a non-failing process executing a snap performs at most 3m + 1 sets of reads, or at most (3m + 1)m shared memory accesses, for a total time that is O (m2l), where l is an upper bound on process step time. A non-failing process executing an update also performs O (m2) shared memory accesses, for a total time that is O (m2l); this is because of its embedded-snap operation.

13.3.3 An Algorithm with Bounded Variables*

The main problem with the UnboundedSnapshot algorithm is that it uses unbounded-size shared variables to store the unbounded tags. In this subsection, we sketch an improved algorithm called BoundedSnapshot, which replaces the unbounded tags with bounded data. In order to achieve this improvement in efficiency, the BoundedSnapshot algorithm uses some mechanisms that are more complicated than simple tags.

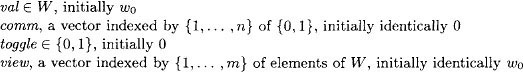

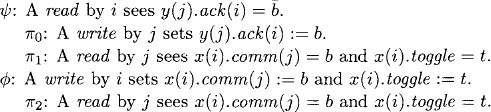

Note that the unbounded tags are used in the UnboundedSnapshot algorithm only for the purpose of allowing processes performing snap and embedded-snap operations to detect when new update operations have taken place. This information could, however, be communicated using a less powerful mechanism than a tag, in particular, using a combination of handshake bits and a toggle bit.

The handshake bits work as follows. There are now n + m shared variables: variables x(i), 1 ≤ i ≤ m as in the UnboundedSnapshot algorithm, plus new variables y(j), 1 ≤ j ≤ n. Each variable x(i) is writable by update process i and readable by all processes, as before. Each variable y(j), 1 ≤ j ≤ m, is writable by update process j (specifically, by the embedded-snap part of update process j) and is readable by all update processes, and each variable y(j), m + 1 ≤ j ≤ n, is writable by snap process j and readable by all update processes. Note that, unlike in the UnboundedSnapshot algorithm, the execution of the snap and embedded-snap operations in BoundedSnapshot involve writing to shared memory.

For each update process i, 1 ≤ i ≤ m, there are n pairs of handshake bits, one pair per process j. The pair of bits for (i, j) allow process i to tell process j about new updates by process i and also allow process j to acknowledge that it has seen this information. Specifically, x(i) contains a length n vector comm of bits, where the element comm(j) in variable x(i)—which we denote by x(i).comm(j)—is used by process i to communicate with process j about new updates by process i. And y(j) contains a length m vector ack of bits, where the element ack(i) in variable y(j)—which we denote by y(j).ack(i)—is used by process j to acknowledge that it has seen new updates by process i. Thus, the pair of handshake bits for (i, j) are x(i).comm(j) and y(j).ack(i).

The way these handshake bits are used is roughly as follows. When a process i executes an update(w), it begins by reading all the handshake bits y(j).ack(i). Then it performs its write to x(i); when it does this, it writes the value w and embedded snap response view, as it does in UnboundedSnapshot, and in addition writes the handshake bits in comm. In particular, for each j, it sets the bit comm(j) to be unequal to the value of y(j).ack(i) read at the beginning of the operation.

A process j performing a snap or embedded-snap repeatedly tries to perform two sets of reads, looking for the situation where nothing has changed in between the two sets of reads. But this time, changes are detected using the handshake bits rather than integer-valued tags. Specifically, before each attempt to find two consistent sets of reads, process j first reads all the handshake bits x(i).comm(j) and sets each handshake bit y(j).ack(i) equal to the value of x(i).comm(j) just read. (Thus, the update operations attempt to set the handshake bits unequal and the snap and embedded-snap operations attempt to set them equal.) Process j looks for changes to the handshake bits comm(j) in between its two sets of reads; if it finds such changes on 2m + 1 separate attempts, then it knows it has seen the results of four separate update operations by the same process i and can adopt the vector view produced by the third of these operations.

The handshake protocol described so far is simple and is “sound” in the sense that every time a process performing a snap or embedded-snap detects a change, a new update has in fact occurred. However, it turns out that the handshake is not sufficient to discover every update—it is possible for two consecutive updates by a process i not to be distinguished by some other process j. Consider, for example, the following situation.

Example 13.3.1 Insufficiency of handshake bits

Suppose that at some point during an execution, x(i).comm(j) = 0 and y(j).ack(i) = 1, that is, the handshake bits used to tell j about i’s updates are unequal. Then the following events may occur, in the indicated order. (The actions involving the two processes i and j appear in separate columns.)

In this sequence of events, process j performs three reads of x(i).comm(j). The first of these is just a preliminary test; the second and third are part of an attempt to find two consistent sets of reads. Here, process j determines as a result of its second and third reads that no updates have occurred in between. This is erroneous.

To overcome this problem, we augment the handshake protocol with a second mechanism: each x(i) contains an additional toggle bit that is flipped by process i during each of its write steps. This ensures that each update changes the value of the shared variable x(i). In a bit more detail, the protocol works as follows: