Networking on a Docker Engine is provided by default by a bridge network, the docker0 bridge. The docker0 bridge is local in scope to a Docker host and is created by default when Docker is installed. Unless configured otherwise, Docker containers running on a Docker host are connected to the docker0 bridge network and communicate with each other over it.

The Problem

The default docker0 bridge network has the following limitations:

The bridge network is limited in scope to the local Docker host. It provides container-to-container networking on that host, not multi-host networking.

The bridge network isolates the Docker containers on the host from external access. A Docker container may expose one or more ports, and the ports may be published on the host for access by external clients, as illustrated in Figure 10-1, but by default the docker0 bridge does not provide any access to clients outside the network.

Figure 10-1. The default docker0 bridge network

The Solution

The Swarm mode (Docker Engine >=1.12) creates an overlay network called ingress for the nodes in the Swarm. The ingress overlay network is a multi-host network to route ingress traffic to the Swarm; external clients use it to access Swarm services. Services are added to the ingress network if they publish a port. The ingress overlay network has a default gateway and a subnet and all services in the ingress network are exposed on all nodes in the Swarm, whether a service has a task scheduled on each node or not. In addition to the ingress network, custom overlay networks may be created using the overlay driver. Custom overlay networks provide network connectivity between the Docker daemons in the Swarm and are used for service-to-service communication. Ingress is a special type of overlay network and is not for network traffic between services or tasks. Swarm mode networking is illustrated in Figure 10-2.

Figure 10-2. The Swarm overlay networks

The following Docker networks are used or could be used in Swarm mode.

The Ingress Network

The ingress network is created automatically when Swarm mode is initialized. On Docker for AWS, the ingress network is available out of the box because the managed service has Swarm mode enabled by default. The default overlay network called ingress extends to all nodes in the Swarm, whether or not a node has a service task scheduled. The ingress network provides load balancing among a service’s tasks. All services that publish a port are added to the ingress network. Even a service created in an internal network is added to ingress if the service publishes a port. If a service does not publish a port, it is not added to the ingress network. A service publishes a port with the --publish or -p option using the following docker service create command syntax.

docker service create --name <SERVICE-NAME> --publish <PUBLISHED-PORT>:<TARGET-PORT> <IMAGE>

If the <PUBLISHED-PORT> is omitted, the Swarm manager selects a port in the range 30000-32767 to publish the service.
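As a concrete instance of the syntax above, the two forms of --publish can be wrapped in small shell helpers. This is a minimal sketch: the function names publish_service and publish_service_auto are hypothetical, and the commands must be run on a Swarm manager node.

```shell
# Hypothetical helper: publish TARGET_PORT of IMAGE on PUBLISHED_PORT on every Swarm node.
# $1 = service name, $2 = published port, $3 = target port, $4 = image
publish_service() {
  docker service create --name "$1" --publish "$2:$3" "$4"
}

# Hypothetical helper: omit the published port so that the Swarm manager
# chooses one in the range 30000-32767.
# $1 = service name, $2 = target port, $3 = image
publish_service_auto() {
  docker service create --name "$1" --publish "$2" "$3"
}
```

For example, publish_service hello-world 8080 80 tutum/hello-world publishes container port 80 on port 8080 of every node.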

The following ports must be open between the Swarm nodes to use the ingress network.

Port 7946 TCP/UDP is used for the container network discovery

Port 4789 UDP is used for the container ingress network
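How these ports are opened depends on the platform; on AWS it is normally done with security group rules. For hosts that filter traffic with iptables, the rules could look like the following sketch. The function name swarm_overlay_rules is hypothetical, and the function only prints the rules so they can be reviewed before being applied as root.

```shell
# Print (do not apply) iptables rules that admit Swarm overlay traffic.
# $1 = CIDR block of the other Swarm nodes (an assumption, for example 172.31.0.0/16)
swarm_overlay_rules() {
  # Container network discovery uses port 7946 over both TCP and UDP
  echo "iptables -A INPUT -s $1 -p tcp --dport 7946 -j ACCEPT"
  echo "iptables -A INPUT -s $1 -p udp --dport 7946 -j ACCEPT"
  # Overlay (including ingress) data traffic uses port 4789 over UDP
  echo "iptables -A INPUT -s $1 -p udp --dport 4789 -j ACCEPT"
}
```

Running swarm_overlay_rules 172.31.0.0/16 prints three rules, one per port and protocol combination listed above.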

Custom Overlay Networks

Custom overlay networks are created using the overlay driver and services may be created in the overlay networks. A service is created in an overlay network using the --network option of the docker service create command. Overlay networks provide service-to-service communication. One Docker container in the overlay network can communicate directly with another Docker container in the network, whether the container is on the same node or a different node. Only Docker containers for Swarm service tasks can connect with each other using the overlay network, not just any Docker containers running on the hosts in a Swarm. Docker containers started with the docker run <img> command, for instance, cannot connect to a Swarm overlay network with docker network connect <overlay network> <container> (unless the network was created with the --attachable option, which is not used in this chapter). Nor are Docker containers on Docker hosts that are not in a Swarm able to connect and communicate with Docker containers in the Swarm directly. Docker containers in different Swarm overlay networks cannot communicate with each other directly, as each Swarm overlay network is isolated from other networks.

While the default overlay network in a Swarm, ingress, extends to all nodes in the Swarm whether a service task is running on it or not, a custom overlay network whose scope is also the Swarm does not extend to all nodes in the Swarm by default. A custom Swarm overlay network extends to only those nodes in the Swarm on which a service task created with the custom Swarm overlay network is running.

An “overlay” network overlays the underlay network of the hosts and the scope of the overlay network is the Swarm. Service containers in an overlay network have different IP addresses and each overlay network has a different range of IP addresses assigned. On modern kernels, the overlay networks are allowed to overlap with the underlay network, and as a result, multiple networks can have the same IP addresses.

The docker_gwbridge Network

Another network that is created automatically (in addition to the ingress network) when the Swarm mode is initialized is the docker_gwbridge network. The docker_gwbridge network is a bridge network that connects all the overlay networks, including the ingress network, to a Docker daemon’s host network. Each service container is connected to the local Docker daemon host’s docker_gwbridge network.

The Bridge Network

A bridge network is a network on a host that is managed by Docker. Docker containers on the host communicate with each other over the bridge network. A Swarm mode service that does not publish a port is also created in the bridge network. So are the Docker containers started with the docker run command. This implies that a Swarm mode Docker service that does not publish a port is in the same network as Docker containers started with the docker run command.

This chapter covers the following topics:

Setting the environment

Networking in Swarm mode

Using the default overlay network ingress to create a service

Creating a custom overlay network

Using a custom overlay network to create a service

Connecting to another Docker container in the same overlay network

Creating an internal network

Deleting a network

Setting the Environment

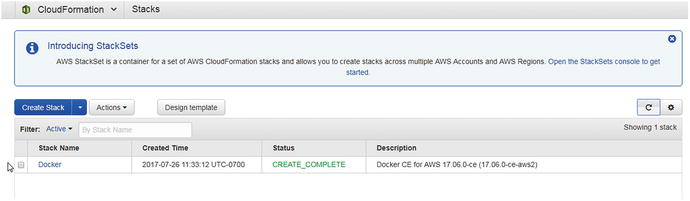

Create a three-node Docker Swarm on Docker for AWS, as discussed in Chapter 3. An AWS CloudFormation stack, shown in Figure 10-3, is used to create a Swarm.

Figure 10-3. AWS CloudFormation stack

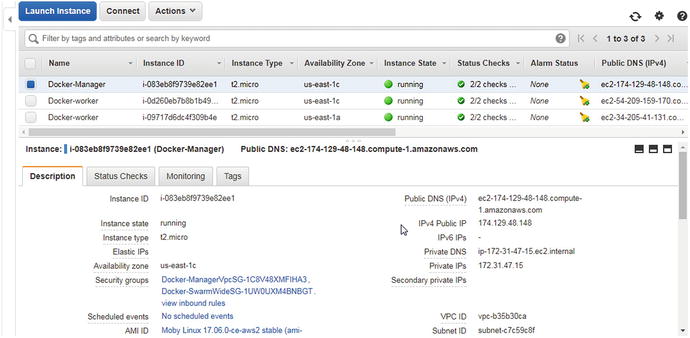

Obtain the public IP address of the Swarm manager node, as shown in Figure 10-4.

Figure 10-4. Obtaining the public IP address of a Swarm manager node instance

Use SSH to log in to the Swarm manager instance.

[root@localhost ~]# ssh -i "docker.pem" [email protected]
Welcome to Docker!

List the Swarm nodes: one manager and two worker nodes.

~ $ docker node ls
ID                           HOSTNAME                        STATUS  AVAILABILITY  MANAGER STATUS
npz2akark8etv4ib9biob5yyk    ip-172-31-47-123.ec2.internal   Ready   Active
p6wat4lxq6a1o3h4fp2ikgw6r    ip-172-31-3-168.ec2.internal    Ready   Active
tb5agvzbi0rupq7b83tk00cx3 *  ip-172-31-47-15.ec2.internal    Ready   Active        Leader

Networking in Swarm Mode

The Swarm mode provides some default networks, which may be listed with the docker network ls command. These networks are available not just on Docker for AWS but on any platform (such as CoreOS) in Swarm mode.

~ $ docker network ls
NETWORK ID     NAME              DRIVER    SCOPE
34a5f77de8cf   bridge            bridge    local
0e06b811a613   docker_gwbridge   bridge    local
6763ebad69cf   host              host      local
e41an60iwval   ingress           overlay   swarm
eb7399d3ffdd   none              null      local

We discussed most of these networks in a preceding section. The "host" network is the networking stack of the host. The "none" network provides no networking between a Docker container and the host networking stack and creates a container without network access.

The default networks are available on a Swarm manager node and Swarm worker nodes even before any service task is scheduled.

The listed networks may be filtered using the driver filter set to overlay.

docker network ls --filter driver=overlay

Only the ingress network is listed. No other overlay network is provisioned by default.

~ $ docker network ls --filter driver=overlay
NETWORK ID     NAME      DRIVER    SCOPE
e41an60iwval   ingress   overlay   swarm

The network of interest is the overlay network called ingress, but all the default networks are discussed in Table 10-1 in addition to being discussed in the chapter introduction.

Table 10-1. Docker Networks

| Network | Description |
| --- | --- |
| bridge | The bridge network is the docker0 network created on all Docker hosts. The Docker daemon connects containers to the docker0 network by default. Any Docker container started with the docker run command, even on a Swarm node, connects to the docker0 bridge network. |
| docker_gwbridge | Used for communication among Swarm nodes on different hosts. The network is used to provide external connectivity to a container that lacks an alternative network for connectivity to external networks and other Swarm nodes. When a container is connected to multiple networks, its external connectivity is provided via the first non-internal network, in lexical order. |
| host | Adds a container to the host’s network stack. The network configuration inside the container is the same as the host’s. |
| ingress | The overlay network used by the Swarm for ingress, which is external access. The ingress network is only for the routing mesh/ingress traffic. |
| none | Adds a container to a container-specific network stack; the container lacks a network interface. |

The default networks cannot be removed and, other than the ingress network, a user does not need to connect directly to or use the other networks. To find detailed information about the ingress network, run the following command.

docker network inspect ingress

The ingress network's scope is the Swarm and the driver used is overlay. The subnet and gateway are 10.255.0.0/16 and 10.255.0.1, respectively. The ingress network is not an internal network, as indicated by the Internal setting of false, which implies that the network is connected to external networks. The ingress network has an IPv4 address and the network is not IPv6 enabled.

~ $ docker network inspect ingress
[
  {
    "Name": "ingress",
    "Id": "e41an60iwvalbeq5y3stdfem9",
    "Created": "2017-07-26T18:38:29.753424199Z",
    "Scope": "swarm",
    "Driver": "overlay",
    "EnableIPv6": false,
    "IPAM": {
      "Driver": "default",
      "Options": null,
      "Config": [
        {
          "Subnet": "10.255.0.0/16",
          "Gateway": "10.255.0.1"
        }
      ]
    },
    "Internal": false,
    "Attachable": false,
    "Ingress": true,
    "ConfigFrom": {
      "Network": ""
    },
    "ConfigOnly": false,
    "Containers": {
      "ingress-sbox": {
        "Name": "ingress-endpoint",
        "EndpointID": "f646b5cc4316994b8f9e5041ae7c82550bc7ce733db70df3f66b8d771d0f53c4",
        "MacAddress": "02:42:0a:ff:00:02",
        "IPv4Address": "10.255.0.2/16",
        "IPv6Address": ""
      }
    },
    "Options": {
      "com.docker.network.driver.overlay.vxlanid_list": "4096"
    },
    "Labels": {},
    "Peers": [
      {
        "Name": "ip-172-31-47-15.ec2.internal-17c7f752fb1a",
        "IP": "172.31.47.15"
      },
      {
        "Name": "ip-172-31-47-123.ec2.internal-d6ebe8111adf",
        "IP": "172.31.47.123"
      },
      {
        "Name": "ip-172-31-3-168.ec2.internal-99510f4855ce",
        "IP": "172.31.3.168"
      }
    ]
  }
]
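When only the subnet and gateway are of interest, the --format option of docker network inspect can extract them from the full description with a Go template. The following is a minimal sketch; the helper name network_ipam is hypothetical.

```shell
# Hypothetical helper: print "<subnet> <gateway>" for each IPAM configuration of a network.
# $1 = network name or ID
network_ipam() {
  docker network inspect \
    --format '{{range .IPAM.Config}}{{println .Subnet .Gateway}}{{end}}' "$1"
}
```

For the ingress network described above, network_ipam ingress would be expected to print the 10.255.0.0/16 subnet followed by the 10.255.0.1 gateway.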

Using the Default Bridge Network to Create a Service

To create a service in Swarm mode using the default bridge network, no special option needs to be specified. The --publish or -p option must not be specified. Create a service for the mysql database.

~ $ docker service create \
> --env MYSQL_ROOT_PASSWORD='mysql' \
> --replicas 1 \
> --name mysql \
> mysql
likujs72e46ti5go1xjtksnky

The service is created and the service task is scheduled on one of the nodes.

~ $ docker service ls
ID            NAME   MODE        REPLICAS  IMAGE         PORTS
likujs72e46t  mysql  replicated  1/1       mysql:latest

The service may be scaled to run tasks across the Swarm.

~ $ docker service scale mysql=3
mysql scaled to 3
~ $ docker service ps mysql
ID            NAME     IMAGE         NODE                           DESIRED STATE  CURRENT STATE          ERROR  PORTS
v4bn24seygc6  mysql.1  mysql:latest  ip-172-31-47-15.ec2.internal   Running        Running 2 minutes ago
29702ebj52gs  mysql.2  mysql:latest  ip-172-31-47-123.ec2.internal  Running        Running 3 seconds ago
c7b8v16msudl  mysql.3  mysql:latest  ip-172-31-3-168.ec2.internal   Running        Running 3 seconds ago

The mysql service created is not added to the ingress network, as it does not publish a port.

Creating a Service in the Ingress Network

In this section, we create a Docker service in the ingress network. The ingress network is not to be specified using the --network option of docker service create. A service must publish a port to be created in the ingress network. Create a Hello World service published (exposed) on port 8080.

~ $ docker service rm hello-world
hello-world
~ $ docker service create \
> --name hello-world \
> -p 8080:80 \
> --replicas 3 \
> tutum/hello-world
l76ukzrctq22mn97dmg0oatup

The service creates three tasks, one on each node in the Swarm.

~ $ docker service ls
ID            NAME         MODE        REPLICAS  IMAGE                     PORTS
l76ukzrctq22  hello-world  replicated  3/3       tutum/hello-world:latest  *:8080->80/tcp
~ $ docker service ps hello-world
ID            NAME           IMAGE                     NODE                           DESIRED STATE  CURRENT STATE           ERROR  PORTS
5ownzdjdt1yu  hello-world.1  tutum/hello-world:latest  ip-172-31-14-234.ec2.internal  Running        Running 33 seconds ago
csgofrbrznhq  hello-world.2  tutum/hello-world:latest  ip-172-31-47-203.ec2.internal  Running        Running 33 seconds ago
sctlt9rvn571  hello-world.3  tutum/hello-world:latest  ip-172-31-35-44.ec2.internal   Running        Running 32 seconds ago

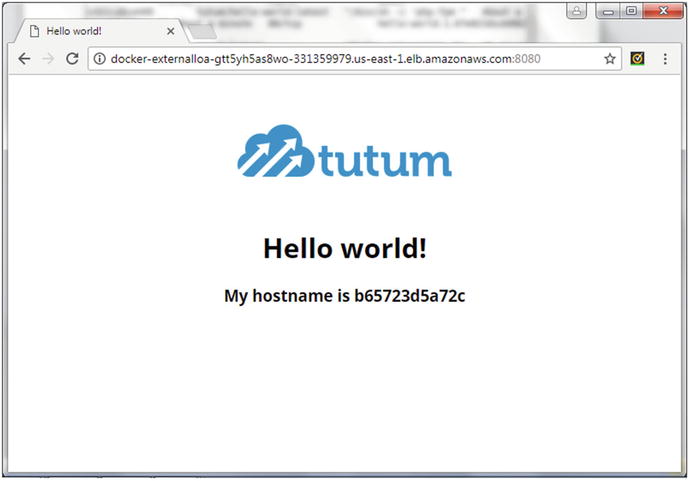

The service may be accessed on any node instance in the Swarm on port 8080 using the <Public DNS>:<8080> URL. If an elastic load balancer is created, as for Docker for AWS, the service may be accessed at <LoadBalancer DNS>:<8080>, as shown in Figure 10-5.

Figure 10-5. Invoking a Docker service in the ingress network using EC2 elastic load balancer public DNS
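Because the routing mesh exposes a published port on every node, the same request should succeed against each node's public DNS name. The sketch below assumes curl is installed on the client; the helper name probe_nodes and the host names passed to it are hypothetical.

```shell
# Hypothetical helper: print the HTTP status returned by http://HOST:PORT/ for each host.
# $1 = published port; remaining arguments = node host names or IP addresses
probe_nodes() {
  port=$1
  shift
  for host in "$@"; do
    # -s silences progress, -o discards the body, -m bounds the wait in seconds,
    # -w prints only the HTTP status code
    code=$(curl -s -o /dev/null -m 5 -w '%{http_code}' "http://$host:$port/")
    echo "$host $code"
  done
}
```

For example, probe_nodes 8080 <node-1 public DNS> <node-2 public DNS> prints one line per node with the status code each returned.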

The <PublishedPort> 8080 may be omitted in the docker service create command.

~ $ docker service create \
> --name hello-world \
> -p 80 \
> --replicas 3 \
> tutum/hello-world
pbjcjhx163wm37d5cc5au2fog

Three service tasks are started across the Swarm.

~ $ docker service ls
ID            NAME         MODE        REPLICAS  IMAGE                     PORTS
pbjcjhx163wm  hello-world  replicated  3/3       tutum/hello-world:latest  *:0->80/tcp
~ $ docker service ps hello-world
ID            NAME           IMAGE                     NODE                           DESIRED STATE  CURRENT STATE           ERROR  PORTS
xotbpvl0508n  hello-world.1  tutum/hello-world:latest  ip-172-31-37-130.ec2.internal  Running        Running 13 seconds ago
nvdn3j5pzuqi  hello-world.2  tutum/hello-world:latest  ip-172-31-44-205.ec2.internal  Running        Running 13 seconds ago
uuveltc5izpl  hello-world.3  tutum/hello-world:latest  ip-172-31-15-233.ec2.internal  Running        Running 14 seconds ago

The Swarm manager automatically assigns a published port (30000), as listed in the output of the docker service inspect command.

~ $ docker service inspect hello-world
[
  "Spec": {
    "Name": "hello-world",
    ...
    "EndpointSpec": {
      "Mode": "vip",
      "Ports": [
        {
          "Protocol": "tcp",
          "TargetPort": 80,
          "PublishMode": "ingress"
        }
      ]
    }
  },
  "Endpoint": {
    "Spec": {
      "Mode": "vip",
      "Ports": [
        {
          "Protocol": "tcp",
          "TargetPort": 80,
          "PublishMode": "ingress"
        }
      ]
    },
    "Ports": [
      {
        "Protocol": "tcp",
        "TargetPort": 80,
        "PublishedPort": 30000,
        "PublishMode": "ingress"
      }
    ],
    "VirtualIPs": [
      {
        "NetworkID": "bllwwocjw5xejffmy6n8nhgm8",
        "Addr": "10.255.0.5/16"
      }
    ]
  }
}
]
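The automatically assigned port can also be read directly, without scanning the full JSON, by applying a Go template to the Endpoint.Ports field shown above. This is a minimal sketch; the helper name published_ports is hypothetical.

```shell
# Hypothetical helper: print each published port of a service, one per line.
# $1 = service name or ID
published_ports() {
  docker service inspect \
    --format '{{range .Endpoint.Ports}}{{println .PublishedPort}}{{end}}' "$1"
}
```

For the hello-world service above, published_ports hello-world would be expected to print 30000.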

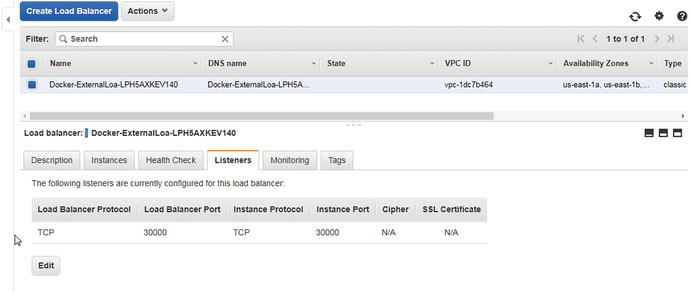

Even though the service publishes a port (30000 or another available port in the range 30000-32767), the AWS elastic load balancer for the Docker for AWS Swarm does not add a listener for that port. We add a listener with a <Load Balancer Port:Instance Port> mapping of 30000:30000, as shown in Figure 10-6.

Figure 10-6. Adding a load balancer listener
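The same listener could also be added from the command line instead of the console. The sketch below assumes that the AWS CLI is installed and configured, that the Swarm's load balancer is a Classic Load Balancer, and that TCP is an acceptable listener protocol; the helper name add_elb_listener is hypothetical.

```shell
# Hypothetical helper: add a listener that maps a load balancer port to the same instance port.
# $1 = name of the Classic Load Balancer, $2 = port published by the Swarm service
add_elb_listener() {
  aws elb create-load-balancer-listeners \
    --load-balancer-name "$1" \
    --listeners "Protocol=TCP,LoadBalancerPort=$2,InstanceProtocol=TCP,InstancePort=$2"
}
```

For example, add_elb_listener <load balancer name> 30000 requests the 30000:30000 mapping described above.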

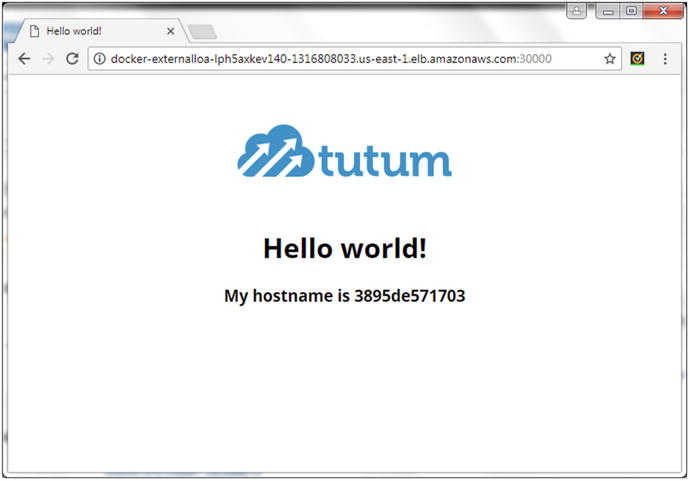

Invoke the service at the <Load Balancer DNS>:<30000> URL, as shown in Figure 10-7.

Figure 10-7. Invoking a Hello World service on port 30000

Creating a Custom Overlay Network

We used the default overlay network ingress provisioned in Swarm mode. The ingress network is only for the Swarm mode routing mesh in which all nodes are included. The Swarm routing mesh is provided so that each node in the Swarm may accept connections on published ports for services in the Swarm even if a service does not run a task on a node. The ingress network is not for service-to-service communication.

A custom overlay network may be used in Swarm mode for service-to-service communication. Next, create an overlay network using some advanced options, including setting subnets with the --subnet option and the default gateway with the --gateway option, as well as the IP range with the --ip-range option. The --driver option must be set to overlay and the network must be created in Swarm mode. A matching subnet for the specified IP range must be available. A subnet is a logical subdivision of an IP network. The gateway is a router that links a host’s subnet to other networks. The following command must be run from a manager node.

~ $ docker network create \
> --subnet=192.168.0.0/16 \
> --subnet=192.170.0.0/16 \
> --gateway=192.168.0.100 \
> --gateway=192.170.0.100 \
> --ip-range=192.168.1.0/24 \
> --driver overlay \
> mysql-network
mkileuo6ve329jx5xbd1m6r1o
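The requirement that a gateway and an IP range fall inside one of the specified subnets can be checked before the network is created. The following is a minimal sketch in POSIX shell arithmetic for IPv4 only; the helper names ip_to_int and in_cidr are hypothetical and are not part of Docker.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed (exit status 0) when address $1 lies inside CIDR block $2.
in_cidr() {
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")      # network part before the slash
  bits=${2#*/}                    # prefix length after the slash
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}
```

With these helpers, in_cidr 192.168.0.100 192.168.0.0/16 succeeds, which matches the first gateway in the command above, while in_cidr 10.0.0.100 192.168.0.0/16 fails.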

The custom overlay network is created and listed in networks as an overlay network with Swarm scope.

~ $ docker network ls
NETWORK ID     NAME              DRIVER    SCOPE
34a5f77de8cf   bridge            bridge    local
0e06b811a613   docker_gwbridge   bridge    local
6763ebad69cf   host              host      local
e41an60iwval   ingress           overlay   swarm
mkileuo6ve32   mysql-network     overlay   swarm
eb7399d3ffdd   none              null      local

Listing only the overlay networks should list the ingress network and the custom mysql-network.

~ $ docker network ls --filter driver=overlay
NETWORK ID     NAME            DRIVER    SCOPE
e41an60iwval   ingress         overlay   swarm
mkileuo6ve32   mysql-network   overlay   swarm

The detailed information about the custom overlay network mysql-network lists the subnets and gateways.

~ $ docker network inspect mysql-network
[
  {
    "Name": "mysql-network",
    "Id": "mkileuo6ve329jx5xbd1m6r1o",
    "Created": "0001-01-01T00:00:00Z",
    "Scope": "swarm",
    "Driver": "overlay",
    "EnableIPv6": false,
    "IPAM": {
      "Driver": "default",
      "Options": null,
      "Config": [
        {
          "Subnet": "192.168.0.0/16",
          "IPRange": "192.168.1.0/24",
          "Gateway": "192.168.0.100"
        },
        {
          "Subnet": "192.170.0.0/16",
          "Gateway": "192.170.0.100"
        }
      ]
    },
    "Internal": false,
    "Attachable": false,
    "Ingress": false,
    "ConfigFrom": {
      "Network": ""
    },
    "ConfigOnly": false,
    "Containers": null,
    "Options": {
      "com.docker.network.driver.overlay.vxlanid_list": "4097,4098"
    },
    "Labels": null
  }
]

Only a single overlay network can be created for specific subnets, gateways, and IP ranges. Using a different subnet, gateway, or IP range, a different overlay network may be created.

~ $ docker network create \
> --subnet=10.0.0.0/16 \
> --gateway=10.0.0.100 \
> --ip-range=10.0.1.0/24 \
> --driver overlay \
> mysql-network-2
qwgb1lwycgvogoq9t62ea4ny1

The mysql-network-2 is created and added to the list of networks.

~ $ docker network ls
NETWORK ID     NAME              DRIVER    SCOPE
34a5f77de8cf   bridge            bridge    local
0e06b811a613   docker_gwbridge   bridge    local
6763ebad69cf   host              host      local
e41an60iwval   ingress           overlay   swarm
mkileuo6ve32   mysql-network     overlay   swarm
qwgb1lwycgvo   mysql-network-2   overlay   swarm
eb7399d3ffdd   none              null      local

New overlay networks are made available only to worker nodes that have containers using the overlay. While the new overlay networks mysql-network and mysql-network-2 are available on the manager node, they are not extended to the two worker nodes. Use SSH to log in to a worker node.

[root@localhost ~]# ssh -i "docker.pem" [email protected]
Welcome to Docker!

The mysql-network and mysql-network-2 networks are not listed on the worker node.

~ $ docker network ls
NETWORK ID     NAME              DRIVER    SCOPE
255542d86c1b   bridge            bridge    local
3a4436c0fb00   docker_gwbridge   bridge    local
bdd0be4885e9   host              host      local
e41an60iwval   ingress           overlay   swarm
5c5f44ec3933   none              null      local

To extend the custom overlay network to worker nodes, create a service in the network that runs a task on the worker nodes, as we discuss in the next section.

The Swarm mode overlay networking is secure by default. The gossip protocol is used to exchange overlay network information between Swarm nodes. The nodes encrypt and authenticate the information exchanged using the AES algorithm in GCM mode. Manager nodes rotate the encryption key for gossip data every 12 hours by default. Data exchanged between containers on different nodes on the overlay network may also be encrypted using the --opt encrypted option, which creates IPsec tunnels between all the nodes on which tasks are scheduled. The IPsec tunnels also use the AES algorithm in GCM mode, and their encryption keys are also rotated every 12 hours. The following command creates an encrypted network.

~ $ docker network create \
> --driver overlay \
> --opt encrypted \
> overlay-network-2
aqppoe3qpy6mzln46g5tunecr

A Swarm scoped network that is encrypted is created.

~ $ docker network ls
NETWORK ID     NAME                DRIVER    SCOPE
34a5f77de8cf   bridge              bridge    local
0e06b811a613   docker_gwbridge     bridge    local
6763ebad69cf   host                host      local
e41an60iwval   ingress             overlay   swarm
mkileuo6ve32   mysql-network       overlay   swarm
qwgb1lwycgvo   mysql-network-2     overlay   swarm
eb7399d3ffdd   none                null      local
aqppoe3qpy6m   overlay-network-2   overlay   swarm

Using a Custom Overlay Network to Create a Service

If a custom overlay network is used to create a service, the --network option must be specified. The following command creates a MySQL database service in Swarm mode using the custom Swarm scoped overlay network mysql-network.

~ $ docker service create \
> --env MYSQL_ROOT_PASSWORD='mysql' \
> --replicas 1 \
> --network mysql-network \
> --name mysql-2 \
> mysql
ocd9sz8qqp2becf0ww2rj5p5n

The mysql-2 service is created. Scale the mysql-2 service to three replicas and list the service tasks for the service.

~ $ docker service scale mysql-2=3
mysql-2 scaled to 3

Docker containers in two different networks for the two services—mysql (bridge network) and mysql-2 (mysql-network overlay network)—are running simultaneously on the same node.

A custom overlay network is not extended to all nodes in the Swarm until the nodes have service tasks that use the custom network. The mysql-network does not get extended to and get listed on a worker node until after a service task for mysql-2 has been scheduled on the node.
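Since a custom overlay network extends only to nodes that run a task using it, listing the nodes on which a service's tasks are running indicates where the network should be present. This is a minimal sketch to run on a manager node; the helper name service_nodes is hypothetical.

```shell
# Hypothetical helper: print the distinct nodes on which a service has running tasks.
# $1 = service name or ID
service_nodes() {
  docker service ps \
    --filter desired-state=running \
    --format '{{.Node}}' "$1" | sort -u
}
```

For example, service_nodes mysql-2 lists the nodes on which mysql-network would be expected to appear in the docker network ls output.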

A Docker container managed by the default Docker Engine bridge network docker0 cannot connect with a Docker container in a Swarm scoped overlay network. For overlay networks that are not attachable, such as the ones created in this chapter (the docker network inspect output lists "Attachable": false), using a Swarm overlay network in a docker run command, connecting to a Swarm overlay network with a docker network connect command, or linking a Docker container with a Swarm overlay network using the --link option of the docker network connect command is not supported. Such overlay networks can only be used by a Docker service in the Swarm.

For connecting between service containers:

Docker containers for the same or different services in the same Swarm scoped overlay network are able to connect with each other.

Docker containers for the same or different services in different Swarm scoped overlay networks are not able to connect with each other.

In the next section, we discuss an internal network, but before we do so, the external network should be introduced. The Docker containers we have created so far are external network containers. The ingress network and the custom overlay network mysql-network are external networks. External networks provide a default route to the gateway. The host and the wider Internet may connect to a Docker container in the ingress or custom overlay networks. As an example, run the following command to ping google.com from a Docker container; the Docker container should be in the ingress overlay network or a custom Swarm overlay network.

docker exec -it <containerid> ping -c 1 google.com

A connection is established and data is exchanged.

~ $ docker exec -it 3762d7c4ea68 ping -c 1 google.com
PING google.com (172.217.7.142): 56 data bytes
64 bytes from 172.217.7.142: icmp_seq=0 ttl=47 time=0.703 ms
--- google.com ping statistics ---
1 packets transmitted, 1 packets received, 0% packet loss
round-trip min/avg/max/stddev = 0.703/0.703/0.703/0.000 ms

Creating an Internal Overlay Network

In this section, we discuss creating and using an internal overlay network. An internal network does not provide external connectivity. What makes a network internal is that a default route to a gateway is not provided for external connectivity from the host or the wider Internet.

First, create an internal overlay network using the --internal option of the docker network create command. Other options, such as --label, may be added; they have no bearing on whether the network is internal.

~ $ docker network create \
> --subnet=10.0.0.0/16 \
> --gateway=10.0.0.100 \
> --internal \
> --label HelloWorldService \
> --ip-range=10.0.1.0/24 \
> --driver overlay \
> hello-world-network
pfwsrjeakomplo5zm6t4p19a9

The internal network is created and listed just the same as an external network would be.

~ $ docker network ls
NETWORK ID     NAME                  DRIVER    SCOPE
194d51d460e6   bridge                bridge    local
a0674c5f1a4d   docker_gwbridge       bridge    local
pfwsrjeakomp   hello-world-network   overlay   swarm
03a68475552f   host                  host      local
tozyadp06rxr   ingress               overlay   swarm
3dbd3c3ef439   none                  null      local

In the network description, the Internal setting is true.

core@ip-172-30-2-7 ~ $ docker network inspect hello-world-network
[
  {
    "Name": "hello-world-network",
    "Id": "58fzvj4arudk2053q6k2t8rrk",
    "Scope": "swarm",
    "Driver": "overlay",
    "EnableIPv6": false,
    "IPAM": {
      "Driver": "default",
      "Options": null,
      "Config": [
        {
          "Subnet": "10.0.0.0/16",
          "IPRange": "10.0.1.0/24",
          "Gateway": "10.0.0.100"
        }
      ]
    },
    "Internal": true,
    "Containers": null,
    "Options": {
      "com.docker.network.driver.overlay.vxlanid_list": "257"
    },
    "Labels": {
      "HelloWorldService": ""
    }
  }
]
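The Internal setting can also be tested in a script without reading the full description. The following is a minimal sketch that uses the --format option of docker network inspect; the helper name is_internal_network is hypothetical.

```shell
# Hypothetical helper: succeed (exit status 0) when the named network is internal.
# $1 = network name or ID
is_internal_network() {
  [ "$(docker network inspect --format '{{.Internal}}' "$1")" = "true" ]
}
```

For example, is_internal_network hello-world-network && echo internal would be expected to print internal for the network created above, and nothing for the ingress network.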

Create a service that uses the internal network with the --network option.

~ $ docker service create \
> --name hello-world \
> --network hello-world-network \
> --replicas 3 \
> tutum/hello-world
hm5pf6ftcvphdrd2zm3pp4lpj

The service is created and the replicas are scheduled.

Obtain the container ID for one of the service tasks, d365d4a5ff4c.

~ $ docker ps
CONTAINER ID  IMAGE                     COMMAND                 CREATED             STATUS             PORTS  NAMES
d365d4a5ff4c  tutum/hello-world:latest  "/bin/sh -c 'php-f..."  About a minute ago  Up About a minute         hello-world.3.r759ddnl1de11spo0zdi7xj4z

As before, ping google.com from the Docker container.

docker exec -it <containerid> ping -c 1 google.com

A connection is not established because the container is in an internal overlay network.

~ $ docker exec -it d365d4a5ff4c ping -c 1 google.com
ping: bad address 'google.com'

A connection can still be established between containers in the same internal network, as the limitation is only on external connectivity. To demonstrate, obtain the container ID for another container in the same internal network.

~ $ docker ps
CONTAINER ID  IMAGE                     COMMAND                 CREATED        STATUS        PORTS  NAMES
b7b505f5eb8d  tutum/hello-world:latest  "/bin/sh -c 'php-f..."  3 seconds ago  Up 2 seconds         hello-world.6.i60ezt6da2t1odwdjvecb75fx
57e612f35a38  tutum/hello-world:latest  "/bin/sh -c 'php-f..."  3 seconds ago  Up 2 seconds         hello-world.7.6ltqnybn8twhtblpqjtvulkup
d365d4a5ff4c  tutum/hello-world:latest  "/bin/sh -c 'php-f..."  7 minutes ago  Up 7 minutes         hello-world.3.r759ddnl1de11spo0zdi7xj4z

Connect between two containers in the same internal network. A connection is established.

~ $ docker exec -it d365d4a5ff4c ping -c 1 57e612f35a38
PING 57e612f35a38 (10.0.1.7): 56 data bytes
64 bytes from 10.0.1.7: seq=0 ttl=64 time=0.288 ms
--- 57e612f35a38 ping statistics ---
1 packets transmitted, 1 packets received, 0% packet loss
round-trip min/avg/max = 0.288/0.288/0.288 ms

If a service created in an internal network publishes (exposes) a port, the service gets added to the ingress network and, even though the service is in an internal network, external connectivity is provisioned. As an example, we add the --publish option of the docker service create command to publish the service on port 8080.

~ $ docker service create \
> --name hello-world \
> --network hello-world-network \
> --publish 8080:80 \
> --replicas 3 \
> tutum/hello-world
mqgek4umisgycagy4qa206f9c

Find a Docker container ID for a service task.

~ $ docker ps
CONTAINER ID  IMAGE                     COMMAND                 CREATED         STATUS         PORTS   NAMES
1c52804dc256  tutum/hello-world:latest  "/bin/sh -c 'php-f..."  28 seconds ago  Up 27 seconds  80/tcp  hello-world.1.20152n01ng3t6uaiahpex9n4f

Connect from the container in the internal network to the wider external network at google.com, as an example. A connection is established.

~ $ docker exec -it 1c52804dc256 ping -c 1 google.com
PING google.com (172.217.7.238): 56 data bytes
64 bytes from 172.217.7.238: seq=0 ttl=47 time=1.076 ms
--- google.com ping statistics ---
1 packets transmitted, 1 packets received, 0% packet loss
round-trip min/avg/max = 1.076/1.076/1.076 ms

Deleting a Network

A network that is not in use may be removed with the docker network rm <networkid> command. Multiple networks may be removed in the same command. As an example, we can list and remove multiple networks.

~ $ docker network ls
NETWORK ID     NAME                  DRIVER    SCOPE
34a5f77de8cf   bridge                bridge    local
0e06b811a613   docker_gwbridge       bridge    local
wozpfgo8vbmh   hello-world-network   overlay   swarm
6763ebad69cf   host                  host      local
e41an60iwval   ingress               overlay   swarm
mkileuo6ve32   mysql-network         overlay   swarm
qwgb1lwycgvo   mysql-network-2       overlay   swarm
eb7399d3ffdd   none                  null      local
aqppoe3qpy6m   overlay-network-2     overlay   swarm

Networks that are being used by a service are not removed.

~ $ docker network rm hello-world-network mkileuo6ve32 qwgb1lwycgvo overlay-network-2
hello-world-network
Error response from daemon: rpc error: code = 9 desc = network mkileuo6ve329jx5xbd1m6r1o is in use by service ocd9sz8qqp2becf0ww2rj5p5n
qwgb1lwycgvo
overlay-network-2
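When several networks are removed in a script, removing them one at a time makes it easier to report which ones were kept because they are still in use. This is a minimal sketch; the helper name remove_unused_networks is hypothetical.

```shell
# Hypothetical helper: try to remove each named network and report the outcome.
# Arguments = network names or IDs
remove_unused_networks() {
  for net in "$@"; do
    # docker network rm exits with a non-zero status when the network is in use or unknown
    if docker network rm "$net" >/dev/null 2>&1; then
      echo "removed: $net"
    else
      echo "kept (in use or missing): $net"
    fi
  done
}
```

For example, remove_unused_networks hello-world-network mysql-network would be expected to report mysql-network as kept while the mysql-2 service is still using it.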

Summary

This chapter discussed the networking used by the Docker Swarm mode. The default networking used in Swarm mode is the overlay network ingress, which is a multi-host network spanning all Docker nodes in the same Swarm to provide a routing mesh, so that each node is able to accept ingress connections for services on published ports. A custom overlay network may also be used to create a Docker service, with the difference that a custom overlay network provides service-to-service communication instead of ingress communication and extends to a Swarm worker node only if a service task using the network is scheduled on the node. The chapter also discussed the difference between an internal and an external network. In the next chapter, we discuss logging and monitoring in Docker Swarm mode.