A service task container in a Swarm has access to the filesystem inherited from its Docker image, so data built into the image is integral to every container created from it. At times, however, a Docker container needs to store or access data on a persistent filesystem. Although a container has its own writable filesystem, that filesystem is removed along with the container, and in Swarm mode a restarted or rescheduled task runs in a new container. To keep data across container restarts, the data must be persisted somewhere outside the container.

The Problem

Data stored only within a container could result in the following issues:

The data is not persistent. It is removed along with the Docker container, and a task that Swarm restarts gets a new container without that data.

The data cannot be shared with other Docker containers or with the host filesystem.

The Solution

Modular design based on the Single Responsibility Principle (SRP) recommends that data be decoupled from the Docker container. Docker Swarm mode provides mounts for sharing data and for making data persist across container startup and shutdown. Docker Swarm mode provides two types of mounts for persistent service data (a third type, tmpfs, keeps data in memory only and does not persist it):

Volume mounts

Bind mounts

The default is the volume mount. A mount for a service is created using the --mount option of the docker service create command.

Volume Mounts

Volume mounts are named volumes on the host mounted into a service task’s container. The named volumes on the host persist even after a container has been stopped and removed. The named volume may be created before creating the service in which the volume is to be used or the volume may be created at service deployment time. Named volumes created at deployment time are created just prior to starting a service task’s container. If created at service deployment time, the named volume is given an auto-generated name if a volume name is not specified. An example of a volume mount is shown in Figure 6-1, in which a named volume mysql-scripts, which exists prior to creating a service, is mounted into service task containers at the directory path /etc/mysql/scripts.

Figure 6-1. Volume mount
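The create-if-missing behavior described above can be modeled in a few lines of Python. This is a minimal sketch under stated assumptions, not Docker's implementation: a node's volumes are represented as a plain set, the function name `ensure_volume` is hypothetical, and the 64-character hex name only imitates the shape of the auto-generated names that appear in `docker volume ls` output later in this chapter.

```python
import secrets
from typing import Optional, Set


def ensure_volume(node_volumes: Set[str], name: Optional[str] = None) -> str:
    """Return the name of the volume a task container mounts on one node.

    Hypothetical model of the rule: an existing named volume is reused,
    a missing named volume is created, and an omitted name is replaced by
    a random 64-character hex name (unique on this node only).
    """
    if name is None:
        name = secrets.token_hex(32)  # 32 random bytes -> 64 hex characters
    node_volumes.add(name)  # adding an existing name is a no-op
    return name


manager_volumes = {"mysql-scripts"}                     # created beforehand
print(ensure_volume(manager_volumes, "mysql-scripts"))  # reused
print(ensure_volume(manager_volumes, "nginx-root"))     # created at deployment
auto_name = ensure_volume(manager_volumes)              # auto-generated name
print(len(auto_name))  # 64
```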

Each task container uses the named volume on the host on which it is running. Because every host has its own copy of the named volume, the volumes on different hosts may store the same or different data.

When using volume mounts, volume contents are not replicated across the cluster. For example, if you add files to the mysql-scripts volume from a task on one node, those files are accessible only to tasks running on that same node; replicas running on other nodes do not have access to them.
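The per-node behavior can be illustrated with a toy in-memory model. The `cluster` structure and the `write_file` and `list_files` helpers are hypothetical; they only show that a local named volume with the same name is a separate store on each node.

```python
from collections import defaultdict

# Hypothetical model of a cluster: node -> volume -> {file name: contents}.
# Each node stores its own, independent copy of a local named volume.
cluster = defaultdict(lambda: defaultdict(dict))


def write_file(node: str, volume: str, filename: str, contents: str) -> None:
    """Write a file into the named volume on one node only."""
    cluster[node][volume][filename] = contents


def list_files(node: str, volume: str) -> list:
    """List the files a task on `node` sees in the named volume."""
    return sorted(cluster[node][volume])


# A task on node-1 adds a script to its local mysql-scripts volume ...
write_file("node-1", "mysql-scripts", "createtable.sql", "CREATE TABLE t (id INT);")

# ... but a replica on node-2 mounts a different, still-empty volume.
print(list_files("node-1", "mysql-scripts"))  # ['createtable.sql']
print(list_files("node-2", "mysql-scripts"))  # []
```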

Bind Mounts

Bind mounts are filesystem paths on the host on which a service task is scheduled. The host filesystem path is mounted into a service task's container at the specified directory path. Before the service is created, the host filesystem path must exist on each host in the Swarm on which a task may be scheduled. If node constraints exclude certain nodes from the service deployment, the bind mount path does not have to exist on those nodes. Keep in mind that a service that uses a bind mount is not portable as such: if the service is deployed in production, the host directory path must exist on each host in the production Swarm on which a task may be scheduled.

The host filesystem path does not have to be the same as the destination directory path in a task container. As an example, the host path /db/mysql/data is mounted as a bind mount into a service’s containers at directory path /etc/mysql/data in Figure 6-2. A bind mount is read-write by default, but could be made read-only at service deployment time. Each container in the service has access to the same directory path on the host on which the container is running, but the host directory path could store different or the same data.

Figure 6-2. Bind mount
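Before deploying a service with a bind mount, it can help to check which schedulable nodes lack the source path. The sketch below is a hypothetical helper operating on a hand-built inventory of node paths; it does not query Docker, and the node names are illustrative.

```python
from typing import Dict, List, Set


def nodes_missing_source(node_paths: Dict[str, Set[str]],
                         eligible_nodes: Set[str], src: str) -> List[str]:
    """Return the eligible nodes on which the bind-mount source is missing.

    node_paths maps each node to the host paths that exist on it;
    eligible_nodes holds the nodes left after applying placement
    constraints. A task scheduled on a returned node would fail to start.
    """
    return sorted(n for n in eligible_nodes if src not in node_paths.get(n, set()))


node_paths = {
    "manager": {"/db/mysql/data"},
    "worker-1": {"/db/mysql/data"},
    "worker-2": set(),  # the directory was never created here
}
all_nodes = set(node_paths)
print(nodes_missing_source(node_paths, all_nodes, "/db/mysql/data"))  # ['worker-2']

# A constraint that excludes worker-2 removes the requirement on that node.
print(nodes_missing_source(node_paths, all_nodes - {"worker-2"}, "/db/mysql/data"))  # []
```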

Swarm mode mounts provide shareable named volumes and filesystem paths on the host that persist across a service task startup and shutdown. A Docker image’s filesystem is still at the root of the filesystem hierarchy and a mount can only be mounted on a directory path within the root filesystem.

This chapter covers the following topics:

Setting the environment

Types of mounts

Creating a named volume and getting detailed info about it

Using a volume mount

Removing a volume

Creating and using a bind mount

Setting the Environment

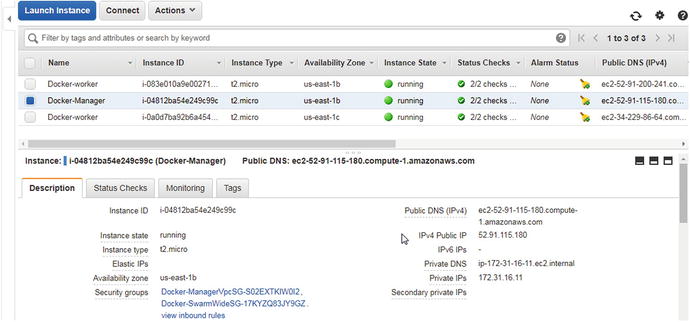

Create a Docker for AWS-based Swarm consisting of one manager node and two worker nodes, as discussed in Chapter 3. The Docker for AWS Swarm is used for volume mounts. For bind mounts, create a three-node Swarm consisting of one manager and two worker nodes on CoreOS instances; creating a Swarm on CoreOS instances is discussed in Chapter 2. A CoreOS-based Swarm is used because Docker for AWS Swarm does not support bind mounts out of the box. Obtain the public IP address of the manager instance for the Docker for AWS Swarm from the EC2 console, as shown in Figure 6-3.

Figure 6-3. EC2 instances for Docker for AWS Swarm nodes

Log in to the manager instance over SSH.

[root@localhost ~]# ssh -i "docker.pem" docker@<manager-public-ip>
Welcome to Docker!

List the nodes in the Swarm. A manager node and two worker nodes are listed.

~ $ docker node ls
ID                           HOSTNAME                       STATUS  AVAILABILITY  MANAGER STATUS
8ynq7exfo5v74ymoe7hrsghxh    ip-172-31-33-230.ec2.internal  Ready   Active
o0h7o09a61ico7n1t8ooe281g *  ip-172-31-16-11.ec2.internal   Ready   Active        Leader
yzlv7c3qwcwozhxz439dbknj4    ip-172-31-25-163.ec2.internal  Ready   Active

Creating a Named Volume

A named volume to be used in a service as a mount of type volume may either be created prior to creating the service or at deployment time. A new named volume is created with the following command syntax.

docker volume create [OPTIONS] [VOLUME]

The options discussed in Table 6-1 are supported.

Table 6-1. Options for the docker volume create Command for a Named Volume

| Option | Description | Type | Default Value |
|---|---|---|---|
| --driver, -d | Specifies volume driver name | string | local |
| --label | Sets metadata for a volume | value | [] |
| --name | Specifies volume name | string | |
| --opt, -o | Sets driver-specific options | value | map[] |
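The options in Table 6-1 map directly onto command-line flags. As a sketch, the hypothetical Python helper below assembles a `docker volume create` command as an argument list; it only builds the list and does not run Docker.

```python
from typing import Dict, List, Optional


def volume_create_argv(name: str, driver: str = "local",
                       labels: Optional[Dict[str, str]] = None,
                       opts: Optional[Dict[str, str]] = None) -> List[str]:
    """Build a `docker volume create` command line from Table 6-1 options."""
    argv = ["docker", "volume", "create", "--driver", driver]
    for key, value in (labels or {}).items():
        argv += ["--label", f"{key}={value}"]   # one --label per label
    for key, value in (opts or {}).items():
        argv += ["--opt", f"{key}={value}"]     # driver-specific options
    argv.append(name)                           # the [VOLUME] argument
    return argv


print(" ".join(volume_create_argv("hello", labels={"msg": "hello"})))
# docker volume create --driver local --label msg=hello hello
```

On a host with Docker installed, the list could be passed to `subprocess.run(argv, check=True)`; passing a list rather than a single string avoids shell quoting issues with label values.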

Create a named volume called hello using the docker volume create command.

~ $ docker volume create --name hello
hello

Subsequently, list the volumes with the docker volume ls command. The hello volume is listed in addition to other named volumes that may exist.

~ $ docker volume ls
DRIVER  VOLUME NAME
local   hello

You can find detailed info about the volume using the following command.

docker volume inspect hello

In addition to the volume name and driver, the mountpoint of the volume is also listed.

~ $ docker volume inspect hello
[
    {
        "Driver": "local",
        "Labels": {},
        "Mountpoint": "/var/lib/docker/volumes/hello/_data",
        "Name": "hello",
        "Options": {},
        "Scope": "local"
    }
]
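Because `docker volume inspect` prints JSON, its output is easy to consume from a script. The sketch below parses the exact output shown above with Python's standard `json` module; on a live host the string would come from running the command instead.

```python
import json

# Output of `docker volume inspect hello`, copied from the session above.
inspect_output = """[{"Driver": "local","Labels": {},
"Mountpoint": "/var/lib/docker/volumes/hello/_data",
"Name": "hello","Options": {},"Scope": "local"}]"""

volumes = json.loads(inspect_output)  # inspect prints a JSON array
for volume in volumes:
    # Name, scope, and the host directory that backs the volume
    print(volume["Name"], volume["Scope"], volume["Mountpoint"])
# hello local /var/lib/docker/volumes/hello/_data
```

For a single field, `docker volume inspect --format '{{ .Mountpoint }}' hello` prints the mountpoint directly.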

The scope of a local driver volume is local; the other supported scope is global. A local volume exists on a single Docker host, whereas a volume with global scope, provided by a volume driver that supports it, is accessible from every Docker host in the cluster.

Using a Volume Mount

Use the hello volume in the docker service create command with the --mount option. The options discussed in Table 6-2 may be used both with bind mounts and volume mounts.

Table 6-2. Options for Volume and Bind Mounts

| Option | Required | Description | Default |
|---|---|---|---|
| type | No | Specifies the type of mount. One of three values may be specified: volume mounts a named volume into a container; bind bind-mounts a directory or file from the host into a container; tmpfs mounts a tmpfs into a container. | volume |
| src or source | Yes for type=bind only; No for type=volume | The source directory or volume. The option has different meanings for different types of mounts. type=volume: src specifies the name of the volume. If the named volume does not exist, it is created. If src is omitted, the named volume is created with an auto-generated name, which is unique on the host but may not be unique cluster-wide. An auto-generated named volume is removed when the container using the volume is removed; the docker service update command shuts down task containers and starts new ones, and so does scaling a service. A volume source must not be an absolute path. type=bind: src specifies the absolute path to the directory or file to bind-mount; the path must be absolute, not relative. The src option is required for a mount of type bind, and an error is generated if it is not specified. type=tmpfs: src is not supported. | |
| dst or destination or target | Yes | Specifies the mount path inside a container. If the path does not exist in a container's filesystem, the Docker Engine creates the mount path before mounting the bind or volume mount. The target must be an absolute path. | |
| readonly or ro | No | A boolean (true/false or 1/0) that indicates whether the Docker Engine mounts the volume or bind mount read-write or read-only. If the option is not specified, the engine mounts it read-write. If the option is specified with a value of true or 1, or with no value, the engine mounts it read-only. If the option is specified with a value of false or 0, the engine mounts it read-write. | false (read-write) |
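The rules in Table 6-2 can be made concrete with a small validator. This is a sketch, not Docker's own parser: `parse_mount` is a hypothetical function that splits a `--mount` value on commas (using the `csv` module so that quoted fields work), applies the default type of volume, and rejects the combinations the table rules out. Options from Tables 6-3 and 6-4 are deliberately not handled.

```python
import csv
import posixpath

ALIASES = {"source": "src", "destination": "dst", "target": "dst", "ro": "readonly"}
KNOWN_KEYS = {"type", "src", "dst", "readonly"}


def parse_mount(spec: str) -> dict:
    """Parse a --mount value and check it against the rules in Table 6-2.

    `spec` is the value as Docker receives it, after the shell has removed
    any quotes. Only the Table 6-2 options are handled.
    """
    mount = {"type": "volume", "readonly": False}
    for field in next(csv.reader([spec])):      # fields are comma-separated
        key, has_value, value = field.partition("=")
        key = ALIASES.get(key, key)
        if key not in KNOWN_KEYS:
            raise ValueError(f"option not covered by this sketch: {key}")
        if key == "readonly":
            # A bare `readonly`, or true/1, means read-only; false/0 means read-write.
            if not has_value or value in ("true", "1"):
                mount["readonly"] = True
            elif value in ("false", "0"):
                mount["readonly"] = False
            else:
                raise ValueError(f"invalid readonly value: {value}")
        else:
            mount[key] = value

    kind, src, dst = mount["type"], mount.get("src"), mount.get("dst")
    if kind not in ("volume", "bind", "tmpfs"):
        raise ValueError(f"unknown mount type: {kind}")
    if not dst or not posixpath.isabs(dst):
        raise ValueError("dst is required and must be an absolute path")
    if kind == "bind" and (not src or not posixpath.isabs(src)):
        raise ValueError("type=bind requires an absolute src path")
    if kind == "volume" and src and posixpath.isabs(src):
        raise ValueError("a volume src is a volume name, not an absolute path")
    if kind == "tmpfs" and src is not None:
        raise ValueError("src is not supported for type=tmpfs")
    return mount


print(parse_mount("type=bind,src=/etc/mysql/scripts,dst=/scripts,readonly"))
# {'type': 'bind', 'readonly': True, 'src': '/etc/mysql/scripts', 'dst': '/scripts'}
```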

Some of the mount options are only supported for volume mounts and are discussed in Table 6-3.

Table 6-3. Options for Volume Mounts

| Option | Required | Description | Default Value |
|---|---|---|---|
| volume-driver | No | Specifies the name of the volume-driver plugin to use for the volume. If the named volume does not already exist (or no name is given in src), the volume driver is used to create it. | local |
| volume-label | No | Specifies a metadata label to apply to the volume; repeat the option for each label. Example: volume-label=label-1=hello-world,volume-label=label-2=hello. | |
| volume-nocopy | No | Applies to an empty volume that is mounted in a container at a mount path at which files and directories already exist. Specifies whether the files and directories at the mount path (dst) in the container's filesystem are copied to the volume; the host can then access the files and directories copied into the named volume. A value of true or 1 disables copying; a value of false or 0 enables copying. | false (copying enabled); specifying the option without a value is the same as true |
| volume-opt | No | Specifies an option to be supplied to the volume driver when creating a named volume that does not exist, as a key/value pair; repeat the option for each driver option. Example: volume-opt=option-1=value1,volume-opt=option-2=value2. A named volume has to exist on each host on which a mount of type volume is to be mounted. Creating a named volume on the Swarm manager does not also create the named volume on the worker nodes. The volume-driver and volume-opt options are used to create the named volume on the worker nodes. | |
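The volume-nocopy behavior is easiest to see in a toy model. The function below is hypothetical and uses dictionaries of file name to contents; it copies the image's files into the volume only when the volume is empty and copying is enabled, which is the default when the option is not given.

```python
from typing import Dict


def mount_volume(volume: Dict[str, str], image_files_at_dst: Dict[str, str],
                 nocopy: bool = False) -> Dict[str, str]:
    """Return what a container sees at the mount path after mounting `volume`.

    If the volume is empty and copying is enabled (volume-nocopy=false),
    the files the image already had at the mount path are copied into the
    volume first. The mounted volume then hides the image's own files.
    """
    if not volume and not nocopy:
        volume.update(image_files_at_dst)
    return dict(volume)


image_files = {"my.cnf": "[mysqld]"}                  # files baked into the image
print(mount_volume({}, image_files))                  # {'my.cnf': '[mysqld]'}
print(mount_volume({}, image_files, nocopy=True))     # {}
print(mount_volume({"a.sql": "..."}, image_files))    # {'a.sql': '...'}
```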

The options discussed in Table 6-4 are supported only with a mount of type tmpfs.

Table 6-4. Options for the tmpfs Mount

| Option | Required | Description | Default Value |
|---|---|---|---|
| tmpfs-size | No | Size of the tmpfs mount in bytes | Unlimited on Linux |
| tmpfs-mode | No | Specifies the file mode of the tmpfs in octal | 1777 on Linux |
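The tmpfs-mode value is an ordinary octal permission mask, and tmpfs-size is a byte count. The small sketch below uses Python's standard `stat` module to show what the Linux default of 1777 looks like and converts a size in MiB to bytes; the helper names are hypothetical.

```python
import stat


def tmpfs_mode_string(mode: int) -> str:
    """Render a tmpfs-mode value the way `ls -ld` shows a directory."""
    return stat.filemode(stat.S_IFDIR | mode)


def mib_to_bytes(mib: int) -> int:
    """tmpfs-size takes a byte count; convert from mebibytes."""
    return mib * 1024 * 1024


print(tmpfs_mode_string(0o1777))  # drwxrwxrwt  (sticky, world-writable)
print(tmpfs_mode_string(0o700))   # drwx------
print(mib_to_bytes(64))           # 67108864
```

With these values, a mount such as `type=tmpfs,dst=/cache,tmpfs-size=67108864,tmpfs-mode=1777` would request a 64 MiB, world-writable, sticky tmpfs; the /cache path is an illustrative assumption.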

Next, we will use the named volume hello in a service created with Docker image tutum/hello-world. In the following docker service create command, the --mount option specifies the src as hello and includes some volume-label labels for the volume.

~ $ docker service create \
  --name hello-world \
  --mount src=hello,dst=/hello,volume-label="msg=hello",volume-label="msg2=world" \
  --publish 8080:80 \
  --replicas 2 \
  tutum/hello-world

The service is created and the service ID is output.

~ $ docker service create \
> --name hello-world \
> --mount src=hello,dst=/hello,volume-label="msg=hello",volume-label="msg2=world" \
> --publish 8080:80 \
> --replicas 2 \
> tutum/hello-world
8ily37o72wyxkyw2jt60kdqoz

Two service replicas are created.

~ $ docker service ls
ID            NAME         MODE        REPLICAS  IMAGE                     PORTS
8ily37o72wyx  hello-world  replicated  2/2       tutum/hello-world:latest  *:8080->80/tcp
~ $ docker service ps hello-world
ID            NAME           IMAGE                     NODE                           DESIRED STATE  CURRENT STATE           ERROR  PORTS
uw6coztxwqhf  hello-world.1  tutum/hello-world:latest  ip-172-31-25-163.ec2.internal  Running        Running 20 seconds ago
cfkwefwadkki  hello-world.2  tutum/hello-world:latest  ip-172-31-16-11.ec2.internal   Running        Running 21 seconds ago

The named volume is mounted in each task container in the service.

The service definition lists the mounts, including the mount labels.

~ $ docker service inspect hello-world
[
...
"Spec": {
    "ContainerSpec": {
        "Image": "tutum/hello-world:latest@sha256:0d57def8055178aafb4c7669cbc25ec17f0acdab97cc587f30150802da8f8d85",
        "Mounts": [
            {
                "Type": "volume",
                "Source": "hello",
                "Target": "/hello",
                "VolumeOptions": {
                    "Labels": {
                        "msg": "hello",
                        "msg2": "world"
                    },
...
]
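The Mounts entry in the service definition mirrors the fields of the --mount value. The hypothetical function below sketches that correspondence for the fields used in this chapter; it takes the value as the Docker CLI receives it after the shell strips quotes, and it silently ignores any other options.

```python
import csv
import json


def mount_spec_to_inspect(spec: str) -> dict:
    """Map a --mount value onto the Mounts entry `docker service inspect` shows."""
    entry = {"Type": "volume"}          # volume is the default mount type
    labels = {}
    for field in next(csv.reader([spec])):
        key, _, value = field.partition("=")
        if key == "type":
            entry["Type"] = value
        elif key in ("src", "source"):
            entry["Source"] = value
        elif key in ("dst", "destination", "target"):
            entry["Target"] = value
        elif key == "volume-label":
            # Each volume-label field carries one label as name=value.
            name, _, label_value = value.partition("=")
            labels[name] = label_value
        # Any other option is ignored in this sketch.
    if labels:
        entry["VolumeOptions"] = {"Labels": labels}
    return entry


spec = "src=hello,dst=/hello,volume-label=msg=hello,volume-label=msg2=world"
print(json.dumps(mount_spec_to_inspect(spec), indent=2))
```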

In the preceding example, a named volume is created before using the volume in a volume mount. As another example, create a named volume at deployment time. In the following docker service create command, the --mount option is set to type=volume with the source set to nginx-root. The named volume nginx-root does not exist prior to creating the service.

~ $ docker service create \
> --name nginx-service \
> --replicas 3 \
> --mount type=volume,source="nginx-root",destination="/var/lib/nginx",volume-label="type=nginx root dir" \
> nginx:alpine
rtz1ldok405mr03uhdk1htlnk

When the command is run, a service is created. The service description lists the volume mount under Mounts.

~ $ docker service inspect nginx-service
[
...
"Spec": {
    "Name": "nginx-service",
...
        "Mounts": [
            {
                "Type": "volume",
                "Source": "nginx-root",
                "Target": "/var/lib/nginx",
                "VolumeOptions": {
                    "Labels": {
                        "type": "nginx root dir"
                    },
...
]

The named volume nginx-root was not created prior to creating the service and is therefore created before starting containers for service tasks. The named volume nginx-root is created only on nodes on which a task is scheduled. One service task is scheduled on each of the three nodes.

~ $ docker service ps nginx-service
ID            NAME             IMAGE         NODE                           DESIRED STATE  CURRENT STATE           ERROR  PORTS
pfqinizqmgur  nginx-service.1  nginx:alpine  ip-172-31-33-230.ec2.internal  Running        Running 19 seconds ago
mn8h3p40chgs  nginx-service.2  nginx:alpine  ip-172-31-25-163.ec2.internal  Running        Running 19 seconds ago
k8n5zzlnn46s  nginx-service.3  nginx:alpine  ip-172-31-16-11.ec2.internal   Running        Running 18 seconds ago

Because a task is scheduled on the manager node, a named volume called nginx-root is created on the manager node, as the output of the docker volume ls command shows.

~ $ docker volume ls
DRIVER  VOLUME NAME
local   hello
local   nginx-root

Service tasks and task containers are also started on each of the two worker nodes, so an nginx-root named volume is created on each worker node as well. Listing the volumes on the worker nodes shows the nginx-root volume.

[root@localhost ~]# ssh -i "docker.pem" docker@<worker-1-public-ip>
Welcome to Docker!
~ $ docker volume ls
DRIVER  VOLUME NAME
local   hello
local   nginx-root
[root@localhost ~]# ssh -i "docker.pem" docker@<worker-2-public-ip>
Welcome to Docker!
~ $ docker volume ls
DRIVER  VOLUME NAME
local   hello
local   nginx-root

A named volume was specified in src in the preceding example. The named volume may be omitted as in the following service definition.

~ $ docker service create \
> --name nginx-service-2 \
> --replicas 3 \
> --mount type=volume,destination=/var/lib/nginx \
> nginx:alpine
q8ordkmkwqrwiwhmaemvcypc3

The service is created, and one replica is scheduled on each of the three Swarm nodes.

~ $ docker service ps nginx-service-2
ID            NAME               IMAGE         NODE                           DESIRED STATE  CURRENT STATE           ERROR  PORTS
kz8d8k6bxp7u  nginx-service-2.1  nginx:alpine  ip-172-31-25-163.ec2.internal  Running        Running 27 seconds ago
wd65qsmqixpg  nginx-service-2.2  nginx:alpine  ip-172-31-16-11.ec2.internal   Running        Running 27 seconds ago
mbnmzldtaaed  nginx-service-2.3  nginx:alpine  ip-172-31-33-230.ec2.internal  Running        Running 26 seconds ago

The service definition does not list a named volume.

~ $ docker service inspect nginx-service-2
[
...
"Spec": {
    "Name": "nginx-service-2",
    "ContainerSpec": {
        "Mounts": [
            {
                "Type": "volume",
                "Target": "/var/lib/nginx"
            }
        ],
...
]

When a volume name is not specified explicitly, named volumes with auto-generated names are created: one such volume on each node on which a service task runs. One of the volumes listed on the manager node has an auto-generated name.

~ $ docker volume ls
DRIVER  VOLUME NAME
local   305f1fa3673e811b3b320fad0e2dd5786567bcec49b3e66480eab2309101e233
local   hello
local   nginx-root

As another example of using named volumes as mounts in a service, create a named volume called mysql-scripts for a MySQL database service.

~ $ docker volume create --name mysql-scripts
mysql-scripts

The named volume is created and listed.

~ $ docker volume ls
DRIVER  VOLUME NAME
local   305f1fa3673e811b3b320fad0e2dd5786567bcec49b3e66480eab2309101e233
local   hello
local   mysql-scripts
local   nginx-root

The volume description lists the scope as local and lists the mountpoint.

~ $ docker volume inspect mysql-scripts
[
    {
        "Driver": "local",
        "Labels": {},
        "Mountpoint": "/var/lib/docker/volumes/mysql-scripts/_data",
        "Name": "mysql-scripts",
        "Options": {},
        "Scope": "local"
    }
]

Next, create a service that uses the named volume in a volume mount.

~ $ docker service create \
> --env MYSQL_ROOT_PASSWORD='mysql' \
> --mount type=volume,src="mysql-scripts",dst="/etc/mysql/scripts",volume-label="msg=mysql",volume-label="msg2=scripts" \
> --publish 3306:3306 \
> --replicas 2 \
> --name mysql \
> mysql
cghaz4zoxurpyqil5iknqf4c1

The service is created and listed.

~ $ docker service ls
ID            NAME         MODE        REPLICAS  IMAGE                     PORTS
8ily37o72wyx  hello-world  replicated  2/2       tutum/hello-world:latest  *:8080->80/tcp
cghaz4zoxurp  mysql        replicated  1/2       mysql:latest              *:3306->3306/tcp

Listing the service tasks indicates that the tasks are scheduled on the manager node and one of the worker nodes.

~ $ docker service ps mysql
ID            NAME     IMAGE         NODE                           DESIRED STATE  CURRENT STATE                   ERROR  PORTS
y59yhzwch2fj  mysql.1  mysql:latest  ip-172-31-33-230.ec2.internal  Running        Preparing 12 seconds ago
zg7wrludkr84  mysql.2  mysql:latest  ip-172-31-16-11.ec2.internal   Running        Running less than a second ago

The destination directory for the named volume is created in the Docker container. The Docker container on the manager node may be listed with docker ps and a bash shell on the container may be started with the docker exec -it <containerid> bash command.

~ $ docker ps
CONTAINER ID  IMAGE         COMMAND                 CREATED         STATUS         PORTS     NAMES
a855826cdc75  mysql:latest  "docker-entrypoint..."  22 seconds ago  Up 21 seconds  3306/tcp  mysql.2.zg7wrludkr84zf8vhdkf8wnlh
~ $ docker exec -it a855826cdc75 bash
root@a855826cdc75:/#

Change the directory to /etc/mysql/scripts in the container. Initially, the directory is empty.

root@a855826cdc75:/# cd /etc/mysql/scripts
root@a855826cdc75:/etc/mysql/scripts# ls -l
total 0
root@a855826cdc75:/etc/mysql/scripts# exit
exit

A task container for the service is created on one of the worker nodes and may be listed on the worker node.

~ $ docker ps
CONTAINER ID  IMAGE         COMMAND                 CREATED        STATUS        PORTS     NAMES
eb8d59cc2dff  mysql:latest  "docker-entrypoint..."  8 minutes ago  Up 8 minutes  3306/tcp  mysql.1.xjmx7qviihyq2so7n0oxi1muq

Start a bash shell for the Docker container on the worker node. The /etc/mysql/scripts directory on which the named volume is mounted is created in the Docker container.

~ $ docker exec -it eb8d59cc2dff bash
root@eb8d59cc2dff:/# cd /etc/mysql/scripts
root@eb8d59cc2dff:/etc/mysql/scripts# exit
exit

If a service whose volumes are created at deployment time is scaled so that tasks run on nodes that previously had none, the volumes are created on those nodes as well. To see the effect of scaling, create a MySQL database service with a volume mount whose named volume, mysql-scripts, does not exist prior to creating the service; remove the mysql-scripts volume first if it exists.

~ $ docker service create \
> --env MYSQL_ROOT_PASSWORD='mysql' \
> --replicas 1 \
> --mount type=volume,src="mysql-scripts",dst="/etc/mysql/scripts" \
> --name mysql \
> mysql
088ddf5pt4yb3yvr5s7elyhpn

The service task is scheduled on a node.

~ $ docker service ps mysql
ID            NAME     IMAGE         NODE                           DESIRED STATE  CURRENT STATE             ERROR  PORTS
xlix91njbaq0  mysql.1  mysql:latest  ip-172-31-13-122.ec2.internal  Running        Preparing 12 seconds ago

List the nodes; the node on which the service task is scheduled is the manager node.

~ $ docker node ls
ID                           HOSTNAME                       STATUS  AVAILABILITY  MANAGER STATUS
o5hyue3hzuds8vtyughswbosl    ip-172-31-11-41.ec2.internal   Ready   Active
p6uuzp8pmoahlcwexr3wdulxv    ip-172-31-23-247.ec2.internal  Ready   Active
qnk35m0141lx8jljp87ggnsnq *  ip-172-31-13-122.ec2.internal  Ready   Active        Leader

A named volume, mysql-scripts, and an ancillary volume with an auto-generated name are created on the manager node, on which the task is scheduled. The auto-named volume backs the /var/lib/mysql directory that the mysql image declares as a volume.

~ $ docker volume ls
DRIVER  VOLUME NAME
local   a2bc631f1b1da354d30aaea37935c65f9d99c5f084d92341c6506f1e2aab1d55
local   mysql-scripts

The worker nodes do not list the mysql-scripts named volume, because no task is scheduled on them.

~ $ docker volume ls
DRIVER  VOLUME NAME

Scale the service to three replicas. A replica is scheduled on each of the three nodes.

~ $ docker service scale mysql=3
mysql scaled to 3
~ $ docker service ps mysql
ID            NAME     IMAGE         NODE                           DESIRED STATE  CURRENT STATE                   ERROR  PORTS
xlix91njbaq0  mysql.1  mysql:latest  ip-172-31-13-122.ec2.internal  Running        Running about a minute ago
ifk7xuvfp9p2  mysql.2  mysql:latest  ip-172-31-23-247.ec2.internal  Running        Running less than a second ago
3c53fxgcjqyt  mysql.3  mysql:latest  ip-172-31-11-41.ec2.internal   Running        Running less than a second ago

A named volume mysql-scripts and an ancillary named volume with an auto-generated name are created on the worker nodes because a replica is scheduled.

[root@localhost ~]# ssh -i "docker.pem" docker@<worker-1-public-ip>
Welcome to Docker!
~ $ docker volume ls
DRIVER  VOLUME NAME
local   431a792646d0b04b5ace49a32e6c0631ec5e92f3dda57008b1987e4fe2a1b561
local   mysql-scripts
[root@localhost ~]# ssh -i "docker.pem" docker@<worker-2-public-ip>
Welcome to Docker!
~ $ docker volume ls
DRIVER  VOLUME NAME
local   afb2401a9a916a365304b8aa0cc96b1be0c161462d375745c9829f2b6f180873
local   mysql-scripts

The named volume mysql-scripts is persistent and is not removed when a service replica is shut down; the volumes with auto-generated names, however, are not persistent. As an example, scale the service back to one replica. Two of the replicas shut down, including the replica on the manager node.

~ $ docker service scale mysql=1
mysql scaled to 1
~ $ docker service ps mysql
ID            NAME     IMAGE         NODE                          DESIRED STATE  CURRENT STATE          ERROR  PORTS
3c53fxgcjqyt  mysql.3  mysql:latest  ip-172-31-11-41.ec2.internal  Running        Running 2 minutes ago

But the named volume mysql-scripts on the manager node is not removed even though no Docker container using the volume is running.

~ $ docker volume ls
DRIVER  VOLUME NAME
local   mysql-scripts

Similarly, the named volume on a worker node on which a service replica is shut down is not removed, even though no Docker container using it is running. The volume with the auto-generated name is removed when no container is using it, but the mysql-scripts named volume is not.
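The scale-up and scale-down behavior above can be summarized in a toy model. The `Node` class below is hypothetical: it treats the explicitly named volume as created on first use and kept afterwards, and the auto-named volume as tied to the lifetime of the task container that uses it, which is the behavior observed in the listings above.

```python
import secrets
from typing import Dict, Set


class Node:
    """Hypothetical model of the volumes on one Swarm node."""

    def __init__(self) -> None:
        self.volumes: Set[str] = set()
        self.auto_named: Dict[str, str] = {}   # task ID -> its auto-named volume

    def start_task(self, task_id: str, named_volume: str) -> None:
        self.volumes.add(named_volume)          # created on first use, then reused
        auto = secrets.token_hex(32)            # volume with an auto-generated name
        self.auto_named[task_id] = auto
        self.volumes.add(auto)

    def stop_task(self, task_id: str) -> None:
        # The auto-named volume goes away with the container that used it;
        # the explicitly named volume stays behind.
        self.volumes.discard(self.auto_named.pop(task_id))


nodes = {name: Node() for name in ("manager", "worker-1", "worker-2")}

nodes["manager"].start_task("mysql.1", "mysql-scripts")   # 1 replica
nodes["worker-1"].start_task("mysql.2", "mysql-scripts")  # scale to 3
nodes["worker-2"].start_task("mysql.3", "mysql-scripts")
nodes["manager"].stop_task("mysql.1")                     # scale back to 1
nodes["worker-1"].stop_task("mysql.2")

for name, node in nodes.items():
    print(name, len(node.volumes), "mysql-scripts" in node.volumes)
# manager 1 True
# worker-1 1 True
# worker-2 2 True
```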

Even after the mysql service is removed, the mysql-scripts volume is not removed.

~ $ docker service rm mysql
mysql
~ $ docker volume ls
DRIVER  VOLUME NAME
local   mysql-scripts

Removing a Volume

A named volume may be removed using the following command.

docker volume rm <VOL>

As an example, remove the named volume mysql-scripts.

~ $ docker volume rm mysql-scripts
mysql-scripts

If the volume you try to delete is used in a Docker container, an error is generated instead and the volume will not be removed. Even a named volume with an auto-generated name cannot be removed if it’s being used in a container.
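The in-use check can be sketched as follows. This is a hypothetical model, not Docker's code: `remove_volume` refuses to delete a volume while any container ID is recorded as using it, and the error type, message, and container ID are invented for illustration.

```python
from typing import Dict, List, Set


class VolumeInUseError(Exception):
    """Raised when a volume is still mounted by at least one container."""


def remove_volume(volumes: Set[str], users: Dict[str, List[str]], name: str) -> None:
    """Remove `name` unless a container still uses it (hypothetical model).

    `users` maps each volume name to the IDs of containers that mount it.
    """
    if users.get(name):
        raise VolumeInUseError(f"{name} is used by {', '.join(users[name])}")
    volumes.remove(name)  # KeyError if the volume does not exist


volumes = {"mysql-scripts", "nginx-root"}
users = {"nginx-root": ["container-1"]}
remove_volume(volumes, users, "mysql-scripts")  # succeeds: nothing uses it
try:
    remove_volume(volumes, users, "nginx-root")
except VolumeInUseError as err:
    print("not removed:", err)  # not removed: nginx-root is used by container-1
print(sorted(volumes))          # ['nginx-root']
```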

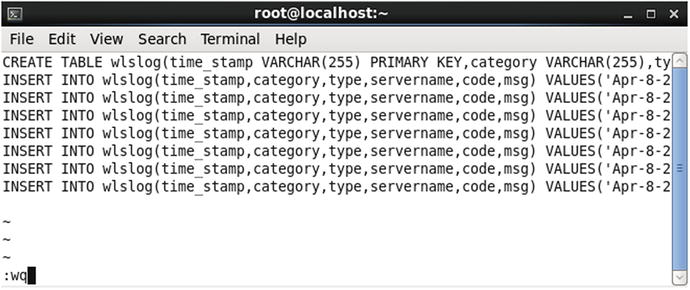

Creating and Using a Bind Mount

In this section, we create a mount of type bind. Bind mounts are suitable when data in directories that already exist on the host needs to be accessed from within Docker containers. type=bind must be specified with the --mount option when creating a service with a bind mount. The host source directory and the container target must both be absolute paths. The host source directory must exist prior to creating the service, whereas the target directory within each Docker container of the service is created automatically. Create a directory on the manager node and then add a file called createtable.sql to it.

core@ip-10-0-0-143 ~ $ sudo mkdir -p /etc/mysql/scripts
core@ip-10-0-0-143 ~ $ cd /etc/mysql/scripts
core@ip-10-0-0-143 /etc/mysql/scripts $ sudo vi createtable.sql

Save a SQL script in the sample SQL file, as shown in Figure 6-4.

Figure 6-4. Adding a SQL script to the host directory

Similarly, create the same directory on each worker node and add the SQL script to it.
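Since the source directory must exist on every node where a task may run, a pre-flight check on each node can catch mistakes early. The sketch below is a hypothetical helper using only the Python standard library; it checks that the source is absolute and exists on the local host and that the container target is an absolute POSIX path.

```python
import os
import posixpath
from typing import List


def check_bind_mount(src: str, dst: str) -> List[str]:
    """Pre-flight check for a bind mount on the current host.

    Returns a list of problems; an empty list means the mount looks valid.
    Run it on every node where a task may be scheduled.
    """
    problems = []
    if not os.path.isabs(src):
        problems.append(f"source {src} is not an absolute path")
    elif not os.path.exists(src):
        problems.append(f"source {src} does not exist on this host")
    if not posixpath.isabs(dst):  # container paths are POSIX paths
        problems.append(f"target {dst} is not an absolute path")
    return problems


print(check_bind_mount("/etc/mysql/scripts", "/scripts"))
```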

Create a service with a bind mount that’s using the host directory. The destination directory is specified as /scripts.

core@ip-10-0-0-143 ~ $ docker service create \
> --env MYSQL_ROOT_PASSWORD='mysql' \
> --replicas 3 \
> --mount type=bind,src="/etc/mysql/scripts",dst="/scripts" \
> --name mysql \
> mysql
0kvk2hk2qigqyeem8x1r8qkvk

Start a bash shell for the service container from the node on which a task is scheduled. The destination directory /scripts is listed.

core@ip-10-0-0-143 ~ $ docker ps
CONTAINER ID  IMAGE         COMMAND                 CREATED        STATUS        PORTS     NAMES
e71275e6c65c  mysql:latest  "docker-entrypoint.sh"  5 seconds ago  Up 4 seconds  3306/tcp  mysql.1.btqfrx7uffym2xvc441pubaza
core@ip-10-0-0-143 ~ $ docker exec -it e71275e6c65c bash
root@e71275e6c65c:/# ls -l
drwxr-xr-x. 2 root root 4096 Jul 24 20:44 scripts

Change the directory (cd) to the destination mount path /scripts. The createtable.sql script is listed in the destination mount path of the bind mount.

root@e71275e6c65c:/# cd /scripts
root@e71275e6c65c:/scripts# ls -l
-rw-r--r--. 1 root root 1478 Jul 24 20:44 createtable.sql

Each service task container sees the file in the host directory of the node on which it runs. Because the mount is read-write by default, files in the mount path may be modified or removed from within a container, and such changes are made to the host directory itself. As an example, remove the createtable.sql script from a container.

core@ip-10-0-0-137 ~ $ docker exec -it 995b9455aff2 bash
root@995b9455aff2:/# cd /scripts
root@995b9455aff2:/scripts# ls -l
total 8
-rw-r--r--. 1 root root 1478 Jul 24 20:45 createtable.sql
root@995b9455aff2:/scripts# rm createtable.sql
root@995b9455aff2:/scripts# ls -l
total 0
root@995b9455aff2:/scripts#

A mount may be made read-only by including an additional option in the --mount argument, as discussed earlier. To demonstrate a read-only mount, first remove the mysql service that is already running. Then create a service that mounts a read-only bind with the same command as before, except with the additional readonly option.

core@ip-10-0-0-143 ~ $ docker service create \
> --env MYSQL_ROOT_PASSWORD='mysql' \
> --replicas 3 \
> --mount type=bind,src="/etc/mysql/scripts",dst="/scripts",readonly \
> --name mysql \
> mysql
c27se8vfygk2z57rtswentrix

A read-only mount of type bind is mounted.

Access the container on a node on which a task is scheduled and list the sample script from the host directory.

core@ip-10-0-0-143 ~ $ docker exec -it 3bf9cf777d25 bash
root@3bf9cf777d25:/# cd /scripts
root@3bf9cf777d25:/scripts# ls -l
-rw-r--r--. 1 root root 1478 Jul 24 20:44 createtable.sql

Remove, or try to remove, the sample script. An error is generated.

root@3bf9cf777d25:/scripts# rm createtable.sql
rm: cannot remove 'createtable.sql': Read-only file system

Summary

This chapter introduced mounts in Swarm mode, focusing on two types: bind mounts and volume mounts. A bind mount mounts a pre-existing directory or file from the host into each container of a service. A volume mount mounts a named volume, which may or may not exist prior to creating a service, into each container in a service. The next chapter discusses configuring resources.