New Definitions of Old Issues and Need for Continuous Improvement

Abstract

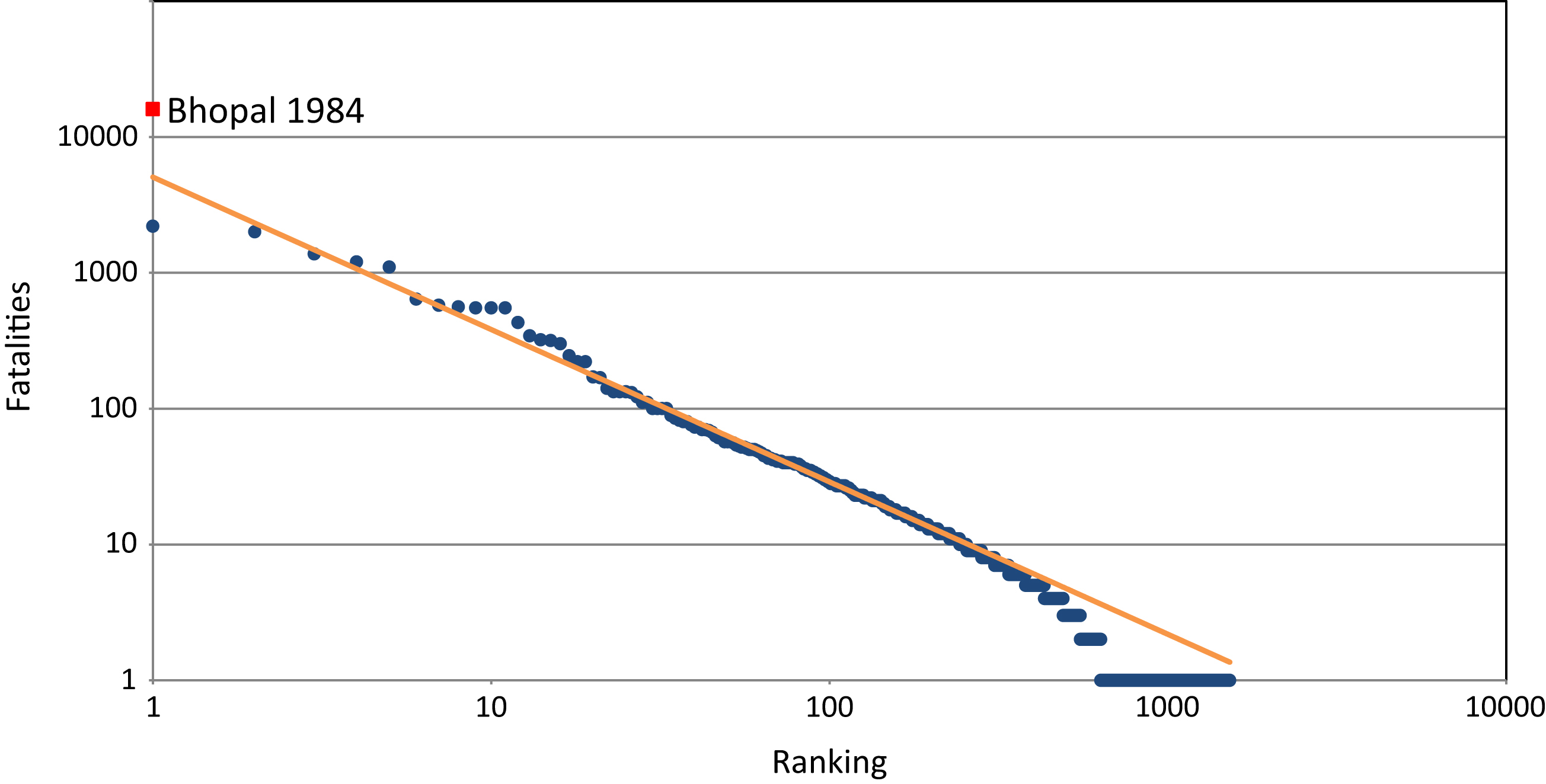

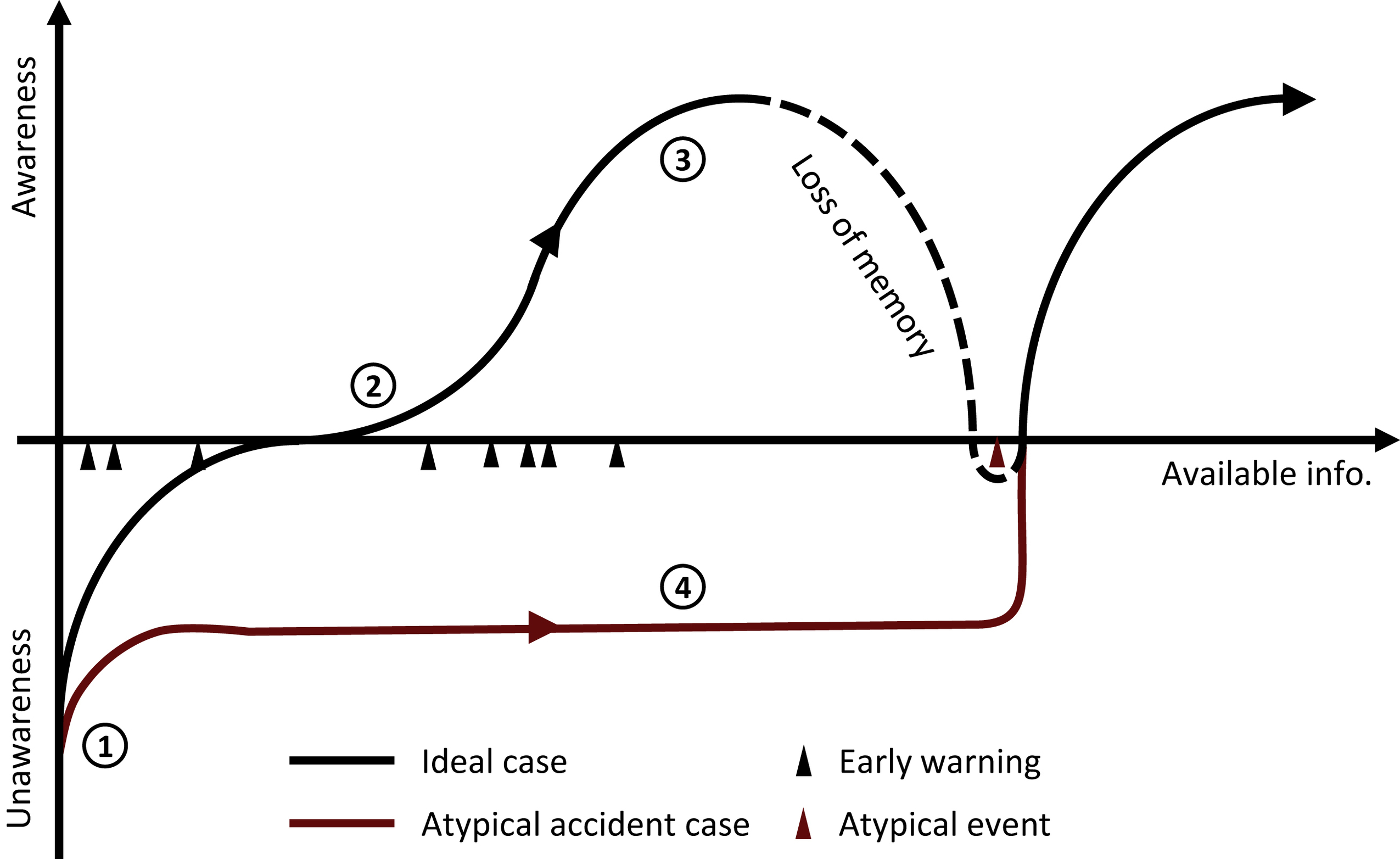

A number of definitions exist to describe major accidents and their specific features. In particular, several experts have sought to provide specific, effective definitions of low-probability, high-impact events, which are particularly challenging to classify owing to their rarity. These events may result from failures in preassessment, knowledge management, or likelihood evaluation, or they may simply be unpredictable. This chapter provides a brief overview of definitions of such extreme events, from atypical accidents to dragon kings, by way of the popular metaphor of the black swan. To a certain extent, these perspectives agree that conjunctions of "small things" can result in extreme effects. For this reason, this chapter suggests a twofold approach to limiting such events: well-known small failures should not be disregarded, and models and classifications should be continuously improved to keep track of the ever-changing industrial environment.

Keywords

1. Introduction

2. Atypical Accident Scenarios

Table 2.1

Definitions of Known/Unknown Events (Used by US Army Intelligence and Made Popular by Donald Rumsfeld [4])

| Category | Definition |
|---|---|
| Unknown knowns | Events we are not aware that we (can) know by means of available (but disregarded) information |
| Known knowns | Events we are aware that we know, for which risk can be managed with a certain level of confidence |
| Unknown unknowns | Events we are not aware that we do not know, for which risk cannot be managed |
| Known unknowns | Events we are aware that we do not know, for which we employ both prevention and learning capabilities |

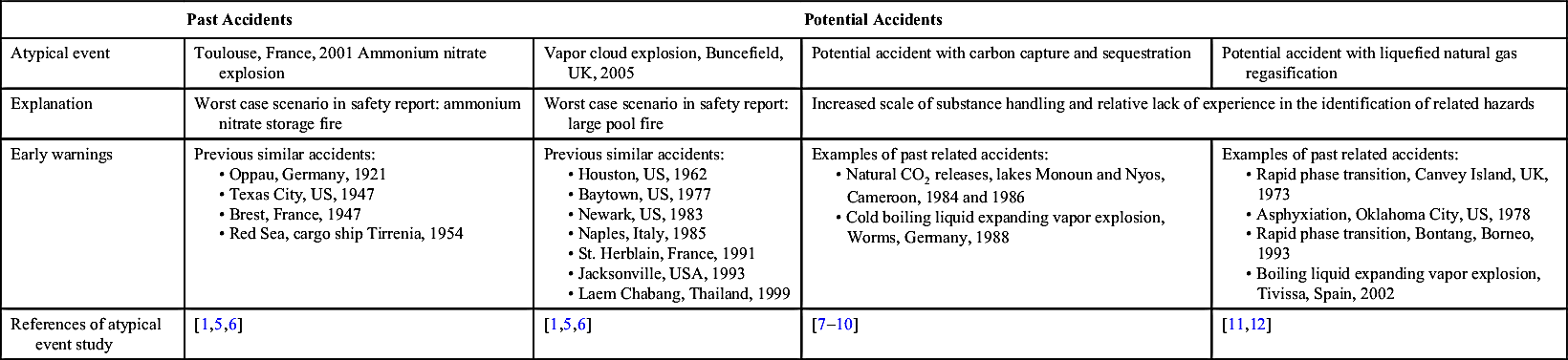

Table 2.2

| | Past accident | Past accident | Potential accident | Potential accident |
|---|---|---|---|---|
| Atypical event | Ammonium nitrate explosion, Toulouse, France, 2001 | Vapor cloud explosion, Buncefield, UK, 2005 | Potential accident with carbon capture and sequestration | Potential accident with liquefied natural gas regasification |
| Explanation | Worst case scenario in safety report: ammonium nitrate storage fire | Worst case scenario in safety report: large pool fire | Increased scale of substance handling and relative lack of experience in the identification of related hazards | Increased scale of substance handling and relative lack of experience in the identification of related hazards |
| Early warnings | Previous similar accidents: Oppau, Germany, 1921; Texas City, US, 1947; Brest, France, 1947; Red Sea, cargo ship Tirrenia, 1954 | Previous similar accidents: Houston, US, 1962; Baytown, US, 1977; Newark, US, 1983; Naples, Italy, 1985; St. Herblain, France, 1991; Jacksonville, US, 1993; Laem Chabang, Thailand, 1999 | Examples of past related accidents: natural CO₂ releases, Lakes Monoun and Nyos, Cameroon, 1984 and 1986; cold boiling liquid expanding vapor explosion, Worms, Germany, 1988 | Examples of past related accidents: rapid phase transition, Canvey Island, UK, 1973; asphyxiation, Oklahoma City, US, 1978; rapid phase transition, Bontang, Borneo, 1993; boiling liquid expanding vapor explosion, Tivissa, Spain, 2002 |
| References of atypical event study | [1,5,6] | [1,5,6] | [7–10] | [11,12] |

3. Black Swans

4. Dragon Kings