Chapter 5. Decision Making

Whether you’re deciding which house or which hamburger to buy, you make decisions every day, if not every minute. It therefore behooves you to learn more about the art and science of decision making.

Because the hacks in this chapter rely on the analysis of future events, they use a lot of math, but don’t let that scare you. You can often use the math hacks from Chapter 4 to simplify things.

This chapter addresses the following questions:

How important is your problem [Hack #44]?

How long will it last [Hack #45]?

What steps can you take to solve it [Hack #46] and [Hack #47]?

What can you do when all analysis fails [Hack #48]?

Perhaps most importantly, what do you do when you’ve got that Friday 7:30 feeling in your bones [Hack #49]?

Foresee Important Problems

Learn to foresee the most significant problems you’ll face by multiplying the probability of an event by its impact on human-friendly seven-point scales. The result is a final estimate of importance on a scale of 0 to 100.

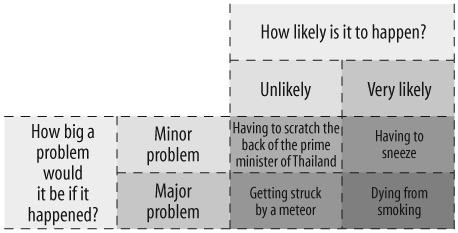

This decision-making hack is similar to the technique known as bulletproofing1 but with much finer resolution and a better ability to compare concerns. The idea of bulletproofing is to do “negative brainstorming” about all the things that could possibly go wrong with a project, and then to rank them by priority on a chart labeled “Minor problem” and “Major problem” on one axis, and “Unlikely” and “Very likely” on the other axis. Figure 5-1 ranks four things that could go wrong for an average smoker.

In Figure 5-1, “Getting struck by a meteor” and “Dying from smoking” are on the “Major problem” side of the chart, but “Getting struck by a meteor” is on the “Unlikely” side and “Dying from smoking” is on the “Very likely” side. The goal is to attend to the potential problems that are major problems if they occur and are very likely. Sneezing is, of course, very likely, but also not much of a problem; having to scratch the back of the prime minister of Thailand just isn’t worth thinking about, because it will probably never happen, and if it did, who cares?

What if we want a resolution that is higher than this simple binary measurement? That’s where the Likert Scale comes in. In the early 1930s, the psychologist Rensis Likert (pronounced lick-ert) developed the Likert Scale for questionnaires intended to measure attitudes. Attitudes are rated on either a five-point or a seven-point scale; the seven-point scale is considered more accurate, since it has a higher resolution.2,3

In Action

For our purposes, the important thing about a seven-point Likert Scale is that it is human-friendly; it makes it easy for humans to convert their fuzzy attitudes, intuitions, and estimates about a phenomenon into a crisp number from one to seven.

Tip

A seven-point scale also works well because seven items is about the limit for human short-term memory [Hack #11].

For example, Table 5-1 shows a seven-point Likert Scale designed to capture estimates of probability. Similarly, Table 5-2 shows a seven-point Likert Scale designed to measure how important something would be to someone.

| Point | Probability |
|---|---|
| 1 | Very improbable |
| 2 | Improbable |
| 3 | Somewhat improbable |
| 4 | Neither probable nor improbable |
| 5 | Somewhat probable |
| 6 | Probable |
| 7 | Very probable |

| Point | Importance |
|---|---|
| 1 | Very unimportant if it happens |
| 2 | Unimportant if it happens |
| 3 | Somewhat unimportant if it happens |
| 4 | Neither important nor unimportant if it happens |
| 5 | Somewhat important if it happens |
| 6 | Important if it happens |
| 7 | Very important if it happens |

An interesting thing happens if you combine these scales by multiplication: you get a useful measure of priority. For example, being struck by a meteor might have an importance of 7 (very important if it happens) but a probability of only 1 (very improbable). Thus, it would have a raw priority of 1 × 7 = 7, which should not be considered nearly as high a priority as dying from smoking, with an importance of 7 but also a probability of 7 (very probable), for a raw priority of 7 × 7 = 49. This kind of analysis will help you avoid such common human-reasoning errors as risk aversion, where a person will take a guaranteed $40 over a 50% chance of winning $100, even though the gamble is worth $50 on average.4

You might also find it interesting that if you double the raw-priority scores, which range from 1 to 49, you get a scale ranging from 2 to 98, which nicely approximates a percentage scale (0–100). There are statistical tricks you can perform to stretch the 2–98 scale onto the 0–100 scale exactly, but since the math is easy to do in your head without them and nothing is ever utterly important (100) or completely unimportant (0) anyway, why bother?
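The whole scoring rule fits in a few lines. Here is a minimal Python sketch, assuming both ratings are whole numbers on the seven-point scales of Tables 5-1 and 5-2; the function name `priority` is hypothetical and only illustrates the arithmetic described above.

```python
def priority(probability: int, importance: int) -> int:
    """Return a priority score from two 1-7 Likert ratings.

    The raw priority (probability x importance) runs from 1 to 49;
    doubling it gives 2 to 98, a rough stand-in for a 0-100 scale.
    """
    for rating in (probability, importance):
        if not 1 <= rating <= 7:
            raise ValueError("Likert ratings must be between 1 and 7")
    return probability * importance * 2


meteor = priority(probability=1, importance=7)    # raw 1 x 7 = 7, doubled: 14
smoking = priority(probability=7, importance=7)   # raw 7 x 7 = 49, doubled: 98
print(meteor, smoking)  # 14 98
```

Validating the ratings up front keeps a stray 0 or 10 from silently producing a score outside the 2–98 range.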

By the way, if this kind of analysis of concerns and problems depresses you, you can always run the analysis in the other direction and ask what is most likely to go right.

In Real Life

Shortly after I developed this hack, I tried it out while designing a new, free (open source) collectible card game called GameFrame (I describe the genesis of this game briefly in “Seed Your Mental Random-Number Generator” [Hack #19]). Some of the concerns I came up with were as follows.

No one will care:

- Probability = 4

Neither probable nor improbable. It’s a weird and esoteric game; nevertheless, people do some esoteric things out there on the World Weird Web.

- Significance = 6

Important if it happens. If no one cares, or few people care, it might sink the project.

- Priority = 48%

4 × 6 × 2

Someone else will publish a similar project first:

- Probability = 2

Improbable. Not only is the game weird and esoteric, but also it’s idiosyncratic, which means that if someone else has a similar project, it’s likely to be different enough that both projects can coexist.

- Significance = 6

Important if it happens. Even though the chances are low that someone else is producing a nearly identical game, if someone did, it could mean the end of the game project. It’s not like a text-editor program, where multiple editors with significantly overlapping functionality can coexist.

- Priority = 24%

2 × 6 × 2

People will resent the use of free (open source) tools to create the cards:

- Probability = 5

Somewhat probable. People are likely to be ticked off if they have to learn Perl or Scribus to design cards for the card game.

- Significance = 3

Somewhat unimportant if it happens. People can learn, and if they don’t want to use free tools, they can produce their own versions of the cards using commercial tools such as Adobe PageMaker.

- Priority = 30%

5 × 3 × 2

Some people will resent the free (open source) licensing clauses:

- Probability = 6

Probable. If people want to make their own proprietary versions of the game, they are even more likely to resent the free/open source licensing of the game content than the use of free tools, since the free-content rules can’t be sidestepped.

- Significance = 2

Unimportant if it happens. That the game will be free and open source is part of the bedrock of the design, so if people don’t like it, it’s just tough luck; they can go work for Hasbro or something.

- Priority = 24%

6 × 2 × 2

Someone will claim an intellectual property violation and sue:

- Probability = 2

Improbable. We are being very scrupulous about fair use of copyrighted information and mostly limiting ourselves to original text, graphics, and source code. In any case, people usually have to smell money to sue, and we’re a small, shoestring project at the moment.

- Significance = 7

Very important if it happens. No one presently on the project has the time, money, or other resources to defend a case in court. Since the project is primarily a card game and not computer software as such, it’s unlikely that the free software community would come to our aid. Also, because of the seemingly frivolous nature of games, we’d be unlikely to see much pro bono help from organizations such as the ACLU.

- Priority = 28%

2 × 7 × 2

These concerns rank from high to low as shown in Table 5-3.

| Priority | Outcome |
|---|---|
| 48 | No one will care. |
| 30 | People will resent the use of free (open source) tools to create the cards. |
| 28 | Someone will claim an intellectual property violation and sue. |
| 24 | Some people will resent the free (open source) licensing clauses. |
| 24 | Someone else will publish a similar project first. |
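With more than a handful of concerns, it helps to let a computer do the sorting. This minimal Python sketch recomputes each score from the ratings given above (probability × significance × 2) and ranks them; the dictionary layout is an assumption made for illustration. The two 24-point concerns are tied, so their relative order is arbitrary and may differ from the table.

```python
# The five GameFrame concerns with their ratings: (probability, significance).
concerns = {
    "No one will care.": (4, 6),
    "Someone else will publish a similar project first.": (2, 6),
    "People will resent the use of free (open source) tools to create the cards.": (5, 3),
    "Some people will resent the free (open source) licensing clauses.": (6, 2),
    "Someone will claim an intellectual property violation and sue.": (2, 7),
}

# Priority = probability x significance x 2, on the rough 0-100 scale.
scores = {text: p * s * 2 for text, (p, s) in concerns.items()}

# Highest priority first; sorted() is stable even with reverse=True,
# so tied concerns keep the order in which they were listed.
ranking = sorted(scores.items(), key=lambda item: item[1], reverse=True)

for text, score in ranking:
    print(f"{score:>3}  {text}")
```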

Thus, according to my estimates, the highest-priority concern I should have about the game’s success is that no one will care. Accordingly, I made a plan to publicize the game project.

End Notes

1. Bulletproofing. http://www.mycoted.com/creativity/techniques/bulet-proof.php.

2. Keegan, Gerard. “Likert Scale” (glossary entry). http://www.gerardkeegan.co.uk/glossary/gloss_l.htm.

3. Wikipedia entry. “Likert Scale.” http://en.wikipedia.org/wiki/Likert_scale.

4. Wikipedia entry. “Risk aversion.” http://en.wikipedia.org/wiki/Risk_aversion.

Predict the Length of a Lifetime

Many of us instinctively trust that things that have been around a long time are likely to be around a lot longer, and things that haven’t, aren’t. The formalization of this heuristic is known as Gott’s Principle, and the math is easy to do.

Physicist J. Richard Gott III has so far correctly predicted when the Berlin Wall would fall and calculated the duration of 44 Broadway shows.1 Controversially, he has predicted that the human race will probably exist for between 5,100 and 7.8 million more years, but no longer. He argues that this is a good reason to create self-sustaining space colonies: if the human race puts some eggs in other nests, we might extend the life span of our species in case of an asteroid strike or nuclear war on the home planet.2

Gott believes that his simple calculations can be extended to almost anything at all, within certain parameters. To predict how long something will be around by using these calculations, all you need to know is how long it has been around already.

In Action

Gott bases his calculations on what he calls the Copernican Principle (and what some people call, in this specific application, Gott’s Principle). The principle says that when you choose a moment in time to calculate the lifetime of a phenomenon, that moment is probably quite ordinary, not special or privileged, just as Copernicus told us the Earth does not occupy a privileged place in the universe.

It’s important to choose subjects at ordinary, unprivileged moments. Biasing your test by choosing subjects that you already believe to be near the beginning or end of their life span (such as the human occupants of a neonatal ward or a nursing home) will yield bad results. Further, Gott’s Principle is less useful where actuarial data already exists; plenty of such data is available on the human life span, for example.

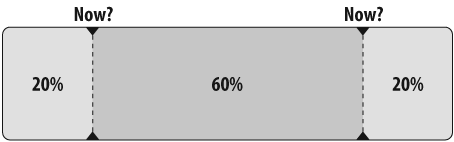

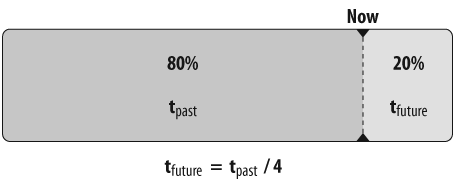

Having chosen a moment, let’s examine it. All else being equal, there’s a 50% chance the moment is somewhere in the middle 50% of the phenomenon’s lifetime, a 60% chance it’s in the middle 60%, a 95% chance it’s in the middle 95%, and so on. Therefore, there’s only a 25% chance that you’ve chosen a moment in the first fourth of its lifetime, a 20% chance it’s in the first fifth, a 2.5% chance it’s in the last 2.5% of the subject’s lifetime, and so on.

Table 5-4 provides equations for the 50%, 60%, and 95% confidence levels. The variable $t_{\text{past}}$ represents how long the object has existed, and $t_{\text{future}}$ represents how long it is expected to continue.

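| Confidence level | Minimum $t_{\text{future}}$ | Maximum $t_{\text{future}}$ |
|---|---|---|
| 50% | $t_{\text{past}} / 3$ | $3\,t_{\text{past}}$ |
| 60% | $t_{\text{past}} / 4$ | $4\,t_{\text{past}}$ |
| 95% | $t_{\text{past}} / 39$ | $39\,t_{\text{past}}$ |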

Let’s look at a simple example. Quick: whose work do you think is more likely to be listened to 50 years from now, Johann Sebastian Bach’s or Britney Spears'? Bach’s first work was performed around 1705. At the time of this writing, that’s 300 years ago. Britney Spears’ first album was released in January 1999, about 6.5 years or 79 months ago.

Consulting Table 5-4, for the 60% confidence level, we see that the minimum $t_{\text{future}}$ is $t_{\text{past}} / 4$, and the maximum is $4\,t_{\text{past}}$. Since $t_{\text{past}}$ for Britney’s music is 79 months, there is a 60% chance that Britney’s music will be heard for between 79 / 4 months and 79 × 4 months longer. In other words, we can be 60% sure that Britney will be a cultural force for somewhere between 19.75 months (1.6 years) and 316 months (26.3 years) from now.

Tip

Sixty percent is a good confidence level for quick estimation; not only is it a better-than-even chance, but the factors 1/4 and 4 are easy to use because of the phenomenon of aliquot parts [Hack #36].

By the same token, we can expect people to listen to Bach’s music for somewhere between another 300 / 4 and 300 × 4 years at the 60% confidence level, or somewhere between 75 years and 1,200 years from now. Thus, we can predict that there’s a good chance that Britney’s music will die with her fans, and there’s a good chance that Bach will be listened to in the fourth millennium.
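The table lookup is mechanical enough to script. Below is a minimal Python sketch that supports only the three confidence levels listed in Table 5-4; the names `GOTT_FACTORS` and `gott_interval` are hypothetical, and the result comes back in whatever unit you use for the elapsed lifetime.

```python
# Multipliers from Table 5-4, keyed by confidence level.
GOTT_FACTORS = {0.50: 3, 0.60: 4, 0.95: 39}


def gott_interval(t_past: float, confidence: float = 0.60) -> tuple:
    """Return (minimum, maximum) expected future lifetime.

    Applies t_past / k <= t_future <= k * t_past, where k is the
    multiplier for the chosen confidence level.
    """
    k = GOTT_FACTORS[confidence]
    return t_past / k, t_past * k


britney_months = gott_interval(79)   # 79 months since her first album
bach_years = gott_interval(300)      # 300 years since Bach's first performed work
print(britney_months)  # (19.75, 316)
print(bach_years)      # (75.0, 1200)
```

Keeping the unit implicit means the same function handles months for Britney and years for Bach without conversion.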

How It Works

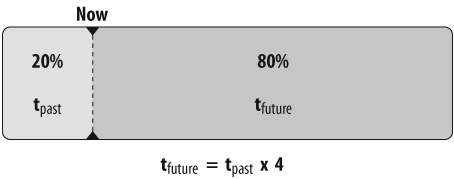

Suppose we are studying the lifetime of some object that we’ll call the target. As we’ve already seen, there’s a 60% chance we are somewhere in the middle 60% of the object’s lifetime (Figure 5-2).3

If we are at the very end of this middle 60%, we are at the second point marked “now?” in Figure 5-2. At this point, only 20% of the target’s lifetime remains (Figure 5-3), which means that $t_{\text{future}}$ (20%) is equal to one-fourth of $t_{\text{past}}$ (80%). This is the minimum remaining lifetime we expect at the 60% confidence level.

Similarly, if we are at the beginning of the middle 60% (the first point marked “now?” in Figure 5-2), 80% of the target’s existence lies in the future, as depicted in Figure 5-4. Therefore, $t_{\text{future}}$ (80%) is equal to $4 \times t_{\text{past}}$ (20%). This is the maximum remaining lifetime we expect at the 60% confidence level.

Since there’s a 60% chance we’re between these two points, we can calculate with 60% confidence that the future duration of the target ($t_{\text{future}}$) is between $t_{\text{past}} / 4$ and $4 \times t_{\text{past}}$.
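The same argument works for any confidence level. As a worked derivation added for illustration, let $c$ be the confidence level written as a fraction. The middle $c$ of the lifetime leaves $(1-c)/2$ at each end, so at the late edge of that window $t_{\text{future}}$ is $(1-c)/2$ of the total lifetime and $t_{\text{past}}$ is $(1+c)/2$; at the early edge the two are swapped. Dividing one by the other gives the bounds:

$$
\frac{1-c}{1+c}\,t_{\text{past}} \;\le\; t_{\text{future}} \;\le\; \frac{1+c}{1-c}\,t_{\text{past}}
$$

Substituting $c = 0.5$, $0.6$, and $0.95$ gives multipliers of $1.5/0.5 = 3$, $1.6/0.4 = 4$, and $1.95/0.05 = 39$, which are exactly the factors in Table 5-4.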

In Real Life

Suppose you want to invest in a company and you want to estimate how long the company will be around to determine whether it’s a good investment. You can use Gott’s Principle to do so. Although it’s not publicly traded, let’s take O’Reilly Media, the publisher of this book, as an example.

Tip

I certainly didn’t pick O’Reilly Media at random, and plenty of historical information is available about how long companies tend to last, but let’s try Gott’s Principle as a rough-and-ready estimate of O’Reilly’s longevity anyway. After all, there’s probably good data on the longevity of Broadway shows, but Gott didn’t shrink from analyzing them—and I hesitate to say that now that O’Reilly has published Mind Performance Hacks, its immortality is assured.

According to Wikipedia, O’Reilly started in 1978 as a consulting firm doing technical writing. It’s July 2005 as I write this, so O’Reilly has existed as a company for approximately 27 years. How long can we expect O’Reilly to continue to exist?

Here’s O’Reilly’s likely lifetime, calculated at the 50% confidence level:

- Minimum

27 / 3 = 9 years (until July 2014)

- Maximum

27 × 3 = 81 years (until July 2086)

Here are our expectations at the 60% confidence level:

- Minimum

27 / 4 = 6 years and 9 months (until April 2012)

- Maximum

27 × 4 = 108 years (until July 2113)

Finally, here’s our prediction with 95% confidence:

- Minimum

27 / 39 = 0.69 years = about 8 months and 1 week (until mid-March 2006)

- Maximum

27 × 39 = 1,053 years (until July 3058)
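Turning fractions of years into calendar dates is the fiddliest part of this calculation. This Python sketch works at month-level precision: it rounds each interval to whole months and pins every date to the first of the month, so its 95% lower bound lands on March 2006 rather than mid-March. The helper `add_months` is hypothetical; it exists because the standard `datetime` module has no built-in month arithmetic.

```python
from datetime import date

WRITTEN = date(2005, 7, 1)   # "July 2005 as I write this" (day of month assumed)
T_PAST_MONTHS = 27 * 12      # about 27 years since O'Reilly's 1978 founding
FACTORS = {0.50: 3, 0.60: 4, 0.95: 39}   # multipliers from Table 5-4


def add_months(d: date, months: int) -> date:
    """Hypothetical helper: move d forward by whole months, landing on the 1st."""
    total = d.year * 12 + (d.month - 1) + months
    return date(total // 12, total % 12 + 1, 1)


results = {}
for confidence, k in FACTORS.items():
    low = add_months(WRITTEN, round(T_PAST_MONTHS / k))   # round to whole months
    high = add_months(WRITTEN, T_PAST_MONTHS * k)
    results[confidence] = (low, high)
    print(f"{confidence:.0%}: {low:%B %Y} to {high:%B %Y}")
```

Run as written, it prints “50%: July 2014 to July 2086”, “60%: April 2012 to July 2113”, and “95%: March 2006 to July 3058”, matching the hand calculations above.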

In the post-dot-com economy, these figures look pretty good. For example, Apple Computer was founded in 1976, so its figures aren’t much better, and Microsoft was founded in 1975, so the same can be said for it. A real investor would want to consider many other factors, such as annual revenue and stock price, but as a first cut, it looks as though O’Reilly Media is at least as likely to outlive a hypothetical investor as to tank in the next decade.

End Notes

1. Ferris, Timothy. “How to Predict Everything.” The New Yorker, July 12, 1999.

2. Gott, J. Richard III. “Implications of the Copernican Principle for Our Future Prospects.” Nature, 363, May 27, 1993.

3. Gott, J. Richard III. “A Grim Reckoning.” http://pthbb.org/manual/services/grim.

Find Dominant Strategies

Sometimes, you can find the best of all possible strategies in what is far from the best of all possible worlds.

Some situations in life are like games, and the mathematical discipline of game theory, which studies game strategies, can be applied to them.

In game theory, a dominant strategy is a plan that’s better than all the other plans that you can choose, no matter what your opponents do. In other words, whatever the situation, a dominant strategy is never worse than any other course of action, and it is better than each of them in at least some situations. Look for a dominant strategy before looking for any other kind of strategy.1

In sequential games, such as chess or Go, players take turns. You consider your opponent’s previous moves, look ahead to anticipate her best moves, and extrapolate to find the optimal move to counter her; the initiative then passes to your opponent, who does the same.

On the other hand, in simultaneous games, where players’ moves are planned and are executed at the same time, seeking a dominant strategy is helpful. For example, in a presidential debate, you can only guess what your opponent will say and do. In such a situation, using a dominant strategy to know the best possible move regardless of your opponent’s move, which you cannot know, is indispensable—if a dominant strategy exists.

In Action

On that world-famous cookery game show, Titanium Chef, the contestants are busy cooking on opposite sides of the room, and neither can see what the other is doing. That makes Titanium Chef a simultaneous game and an ideal place to look for a dominant strategy.

Consider two contestants, Andi and Bruno. These two chefs must cook in one of two styles: Haute Cuisine and Home Cookin’. Both contestants have made a careful study of the judges’ previous preferences, and they know that two of the ten judges prefer Haute Cuisine, and the other eight prefer the guilty pleasure of Home Cookin’.

Furthermore, if Andi cooks in one style and Bruno cooks in the other style, each contestant will get all of the votes from the judges who prefer the particular style. If both contestants cook in the same style, they will split the votes of the judges who prefer that style, and the rest of the judges will pout and abstain. The winner receives $100,000; if there is a tie, the chefs split the prize.

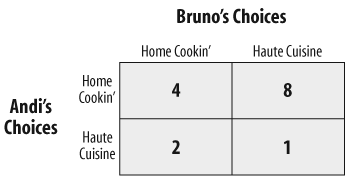

Consider Figure 5-5, which shows the number of votes Andi can expect to get in each possible situation.

If both contestants cook in the Home Cookin’ style, they can expect to split the eight votes of the judges who prefer that style, so Andi will get four votes. If both opponents cook Haute Cuisine, they will split the two available votes, and Andi will get one vote.

On the other hand, if Andi cooks Haute Cuisine and Bruno cooks Home Cookin', Andi will get both available votes for Haute Cuisine, for a total of two. If Andi selects Home Cookin’ and Bruno chooses Haute Cuisine, Andi will get all eight available votes for Home Cookin’.

No matter what Bruno does, Andi will fare better if she selects Home Cookin', so Home Cookin’ is Andi’s dominant strategy. You can check this by comparing the top row with the bottom row. Both values in the top row are better than their corresponding values in the bottom row. This means that Home Cookin’ strongly dominates the Haute Cuisine strategy. If a pair of cells being compared in this case were the same in value, the Home Cookin’ strategy would be said to weakly dominate the other one.2
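The row-by-row comparison can be written as a small function. This minimal Python sketch assumes both strategies list their payoffs in the same order of opponent moves; the function name `dominance` is hypothetical.

```python
def dominance(row_a, row_b):
    """Compare two strategies' payoffs pairwise, one pair per opponent move.

    Returns "strong" if row_a is better in every pair, "weak" if it is
    never worse and better at least once, and None otherwise.
    """
    pairs = list(zip(row_a, row_b))
    if all(a > b for a, b in pairs):
        return "strong"
    if all(a >= b for a, b in pairs) and any(a > b for a, b in pairs):
        return "weak"
    return None


# Andi's expected votes, indexed by Bruno's choice: (Home Cookin', Haute Cuisine).
andi_home = (4, 8)
andi_haute = (2, 1)

print(dominance(andi_home, andi_haute))  # strong
print(dominance(andi_haute, andi_home))  # None
```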

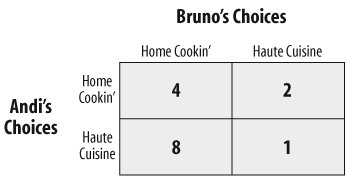

Now, let’s examine Bruno’s choices. Figure 5-6 shows Bruno’s expected outcome, depending on what each competitor picks.

Bruno also can expect eight votes if he chooses Home Cookin’ and Andi chooses Haute Cuisine, two votes if the opposite happens, one vote if both contestants choose Haute Cuisine, and four votes if both contestants choose Home Cookin’.

This time, we’re comparing columns, not rows. Both values in the left column (Home Cookin') are bigger than the values in the right column (Haute Cuisine), so Bruno’s dominant strategy is also Home Cookin’.

If both players are rational, both will select Home Cookin', since it’s the dominant strategy for both of them. If they do so, this episode of Titanium Chef will be a foregone conclusion: it will be a 4-4 tie, and each player will receive $50,000.

From Andi’s perspective, however, it’s always possible that Bruno will miscalculate or not have done his homework and will cook Haute Cuisine instead. In that case, her payoff is huge: she will sweep the judges, receive eight votes, and win $100,000. The same is true from Bruno’s perspective.

The worst either of them will do by choosing the dominant strategy is to tie and split the prize, but each has a chance to win outright if the opponent makes a mistake. Without the dominant strategy, either chef could be the one making the wrong choice, losing outright, and going home with empty pockets.

This simple example is intended for clarity of explanation only. For a more complex example of dominant and dominated strategies, see “Eliminate Dominated Strategies” [Hack #47].

Finding dominant strategies is important because a dominant strategy is your best strategy independent of the impact of your opponents’ strategies. It’s a way to maximize your potential win and minimize your loss, even before you start and regardless of what happens afterward.

Tip

What is “best” is considered here from a strictly selfish point of view, of course; you might wish to adopt the Golden Rule in some situations despite the fact that it probably wouldn’t be a dominant strategy in the game-theory sense.

You can find a dominant strategy in a simultaneous game by creating a table like those shown in Figures 5-5 and 5-6. Populate your table with values calculated any way you prefer, as long as you are consistent. A Likert Scale [Hack #44] provides a human-friendly way of evaluating outcomes.
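Here is a minimal Python sketch of that procedure for the Titanium Chef example. It assumes the vote counts described in the text for Figures 5-5 and 5-6 and a winner-take-all $100,000 prize split evenly on a tie. It treats a strategy as dominant when it is never worse than any alternative, whatever the opponent picks; the names `votes` and `dominant_strategy` are hypothetical.

```python
STYLES = ("Home Cookin'", "Haute Cuisine")

# votes[(andi_style, bruno_style)] = (Andi's votes, Bruno's votes).
# Eight judges prefer Home Cookin', two prefer Haute Cuisine.
votes = {
    ("Home Cookin'", "Home Cookin'"): (4, 4),
    ("Home Cookin'", "Haute Cuisine"): (8, 2),
    ("Haute Cuisine", "Home Cookin'"): (2, 8),
    ("Haute Cuisine", "Haute Cuisine"): (1, 1),
}


def dominant_strategy(player: int):
    """Return a style that is never worse than any other style for the
    player (0 = Andi, 1 = Bruno), whatever the opponent picks, or None."""
    def payoff(mine, theirs):
        key = (mine, theirs) if player == 0 else (theirs, mine)
        return votes[key][player]

    for candidate in STYLES:
        if all(payoff(candidate, theirs) >= payoff(other, theirs)
               for other in STYLES for theirs in STYLES):
            return candidate
    return None


andi, bruno = dominant_strategy(0), dominant_strategy(1)
andi_votes, bruno_votes = votes[(andi, bruno)]
PRIZE = 100_000
if andi_votes == bruno_votes:
    payout = (PRIZE / 2, PRIZE / 2)      # a tie splits the prize
elif andi_votes > bruno_votes:
    payout = (PRIZE, 0)
else:
    payout = (0, PRIZE)
print(andi, bruno, (andi_votes, bruno_votes), payout)
```

Both players come out with Home Cookin’, a 4-4 tie, and $50,000 each, as in the walk-through above.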

Note that in our Titanium Chef example, both players have a dominant strategy, and it’s the same one. Sometimes, however, each player has a different dominant strategy; if that were true on Titanium Chef, the contest would still be a foregone conclusion, but there would be a single winner.

Sometimes a player won’t have a dominant strategy at all. In that case, he should calculate what the other player’s dominant strategy is (if she has one) and make his best response to that strategy. It’s also important to avoid dominated strategies [Hack #47]. There are also situations (such as a game of Rock Paper Scissors) where no player has a dominant strategy; in such situations, don’t overthink things [Hack #48].

In Real Life

Imaginary game shows can be fun, but you might be wondering when you would get a chance to employ dominant strategies in real life. Remember, many real-life situations are gamelike, so you can apply game theory to them. During the Cold War, game theory was even applied to the nuclear arms race, so it can be applied to some very serious “games” indeed. Game theory is also widely used in economics and has even been used to explain some puzzles in evolutionary biology, such as why animals have evolved to cooperate. Consider that all of the following can be modeled as simultaneous games to which game theory can apply:

Deciding on a legal defense in a courtroom

Choosing which toys to manufacture for the holiday season

Deciding whether to attack at dawn

Deciding whether to be an early adopter of a new technology, or to wait and see if it catches on

As John von Neumann, one of the founders of game theory and inventors of the computer, put it:3

And finally, an event with given external conditions and given actors (assuming absolute free will for the latter) can be seen as a parlor game if one views its effects on the persons involved... There is scarcely a question of daily life in which this problem [of successful strategy] does not play a role.

It is often said that life is a game, but seldom is it said by someone who can back it up with hard figures. Pay attention to dominant strategies, and your life’s parlor games may be a little more successful.

End Notes

1. Dixit, Avinash K., and Barry J. Nalebuff. 1991. Thinking Strategically. W.W. Norton & Company, Inc.

2. Economic Science Laboratory. “Iterated deletion of Dominated strategies.” Economics Handbook. http://www.econport.org:8080/econport/request?page=man_gametheory_domstrat.

3. Bewersdorff, Jörg, translated by David Kramer. 2005. Luck, Logic, and White Lies: The Mathematics of Games. A K Peters, Ltd. An excellent recent book on applying game theory to situations people would normally think of as games, such as chess and poker.

Eliminate Dominated Strategies

Find your strongest strategy by systematically eliminating all of your weaker choices.

We’ve already seen that it’s important to find dominant strategies [Hack #46] when you make decisions, if possible. If you’re lucky enough to have a single dominant strategy, your choice is clear.

Sometimes, however, neither opponent has a dominant strategy. In that case, the opponents should eliminate dominated strategies from consideration, then keep eliminating any strategies that become dominated as a result, until a single strategy emerges as clearly superior. When each opponent has settled on a single strategy, they have reached a pure strategy equilibrium, which is the best that either opponent can rationally hope for.1

In Action

Welcome back to that world-famous cookery game show, Titanium Chef. On this episode, we have two time-traveling celebrity chefs named Pasta and Futurio. The ground rules for this episode are as follows:

Both chefs will choose a cuisine from their respective periods. Pasta will choose between Incan and Sumerian cuisine, and Futurio will choose among Andromedan, Rigelian, and Venusian cooking.

There are 10 judges on this episode, each of whom may either cast one vote for a chef or abstain from voting.

Each contestant will take home $10,000 times the number of votes she receives.

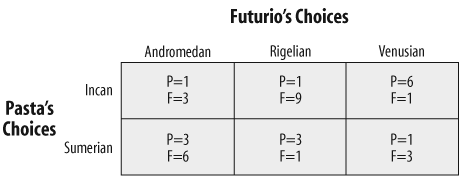

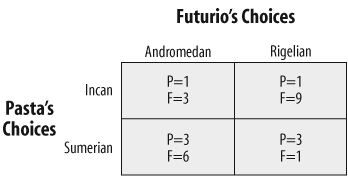

The Titanium Chef studio has been temporally shielded so that Pasta and Futurio can’t use their chronovision sets to predict their opponent’s cuisine. However, both Pasta and Futurio do have access to advanced computer simulations that can predict how many votes each chef will receive for every combination of cuisines the two of them might choose. Figure 5-7 shows the possible outcomes in each situation.

Remember, a dominant strategy is a plan that’s better than all the other plans that you can choose, no matter what your opponents do. In this scenario, a row is a dominant strategy for P if P’s votes in that row are never lower than in any other row, and sometimes higher, whichever column F picks. The same goes for F and a column, comparing F’s votes across columns.

As you can see, neither opponent has a dominant strategy. For example, Andromedan cuisine does not dominate Rigelian for Futurio, and vice versa, because each is better for one of Pasta’s strategies and worse for the other. However, Andromedan cooking does dominate Venusian cooking; Andromedan cooking is a better strategy than Venusian whether Pasta cooks Incan or Sumerian.

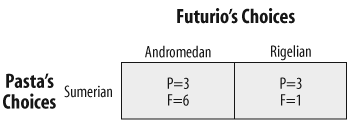

Thus, since both players are rational, and each knows the other to be rational as well (each knows the other has a reliable simulation of the contest), they both eliminate the dominated strategy of Venusian cooking from their calculations, leaving a simplified game that looks like Figure 5-8.

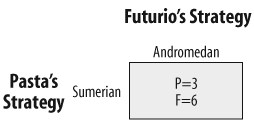

Futurio now has no clear choice, but eliminating Venusian cuisine as an option means that one of Pasta’s strategies is now dominated: the Incan row can be eliminated, since both of her values in the Sumerian row are higher. After eliminating the Incan cooking row, the game looks like Figure 5-9.

Narrowing Pasta’s choices down to Sumerian means that Futurio now has a dominant strategy. Andromedan food clearly dominates Rigelian food, with six votes to Rigelian cuisine’s one vote.

Eliminating the dominated Rigelian strategy means that the final pure strategy equilibrium is Sumerian versus Andromedan food. The outcome is that Pasta receives three votes ($30,000) and Futurio receives six votes ($60,000), as shown in Figure 5-10.

Thus, this episode of Titanium Chef was a foregone conclusion, and we didn’t even need to watch it or use a time machine to discover how it would turn out. Reality TV tends to work that way...

How It Works

This hack assumes that both opponents are rational. That might seem peculiar; what if your opponent isn’t?

It is sometimes possible to do better than game theory predicts, just as it’s possible to make a dumb move in chess that might pay off, and hope that your opponent doesn’t notice how dumb your move was. However, “maybe they won’t notice” is not a consistently winning strategy, so the wise player will put up the best possible defense and not pin all his hopes on his opponent being an idiot.2

Iterated elimination of dominated strategies works because it simplifies the game in question to a point where it can be handled more easily. Eliminating strategies which neither you nor any rational opponent would play may expose other strategies that can be eliminated the same way. Eventually, either each player will have only one strategy, or the game will at least be simplified to the point that you can analyze it in another way.3 Think of the process as analogous to reducing a fraction to its lowest terms: the situation being analyzed remains the same, but you can see the answer more clearly.
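The elimination loop can be sketched in Python. Figure 5-7’s full payoff table is not reproduced in the text, so this sketch uses a hypothetical table: only the Sumerian/Andromedan outcome (3 votes for Pasta, 6 for Futurio) and Futurio’s single vote for Sumerian/Rigelian come from the text, and the other numbers are invented so that the eliminations happen in the same order as in the walk-through. The sketch uses strict dominance, and all names are hypothetical.

```python
# HYPOTHETICAL payoffs: (Pasta's votes, Futurio's votes) for each pair of cuisines.
# Only ("Sumerian", "Andromedan") -> (3, 6) and Futurio's 1 vote for
# ("Sumerian", "Rigelian") come from the text; the rest are made up.
ROWS = ["Incan", "Sumerian"]                    # Pasta's strategies
COLS = ["Andromedan", "Rigelian", "Venusian"]   # Futurio's strategies
PAYOFF = {
    ("Incan", "Andromedan"): (2, 4),
    ("Incan", "Rigelian"): (1, 7),
    ("Incan", "Venusian"): (6, 3),
    ("Sumerian", "Andromedan"): (3, 6),
    ("Sumerian", "Rigelian"): (8, 1),
    ("Sumerian", "Venusian"): (5, 2),
}


def pasta_votes(row, col):
    return PAYOFF[(row, col)][0]


def futurio_votes(col, row):
    return PAYOFF[(row, col)][1]


def is_dominated(strategy, own, others, votes):
    """True if another of the player's remaining strategies earns strictly
    more votes than `strategy` against every remaining opposing strategy."""
    return any(
        all(votes(alt, o) > votes(strategy, o) for o in others)
        for alt in own
        if alt != strategy
    )


def iterated_elimination(rows, cols):
    """Delete strictly dominated columns (Futurio) and rows (Pasta),
    repeating until a full pass removes nothing."""
    rows, cols = list(rows), list(cols)
    changed = True
    while changed:
        changed = False
        for col in list(cols):
            if is_dominated(col, cols, rows, futurio_votes):
                print("Futurio drops", col)
                cols.remove(col)
                changed = True
        for row in list(rows):
            if is_dominated(row, rows, cols, pasta_votes):
                print("Pasta drops", row)
                rows.remove(row)
                changed = True
    return rows, cols


survivors = iterated_elimination(ROWS, COLS)
print(survivors, PAYOFF[(survivors[0][0], survivors[1][0])])
```

With these made-up numbers, it drops Venusian, then Incan, then Rigelian, and finishes with `(['Sumerian'], ['Andromedan']) (3, 6)`.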

In Real Life

You can use iterated elimination of dominated strategies in the same real-life situations in which you can find a dominant strategy [Hack #46]. In fact, finding a dominant strategy is just a special case of iterated elimination, in which a single round of elimination leaves only one strategy standing.

End Notes

1. Dixit, Avinash K., and Barry J. Nalebuff. 1991. Thinking Strategically. W.W. Norton & Company, Inc. The best book of which I’m aware on applying game theory to everyday life.

2. Bewersdorff, Jörg, translated by David Kramer. 2005. Luck, Logic, and White Lies: The Mathematics of Games. A K Peters, Ltd.

3. Economic Science Laboratory. “Iterated deletion of Dominated strategies.” Economics Handbook. http://www.econport.org:8080/econport/request?page=man_gametheory_domstrat.

See Also

The EconPort digital economics library has an Economics Handbook with a wonderfully lucid exposition of basic concepts in game theory: http://www.econport.org:8080/econport/request?page=man.

Don’t Overthink It

When each side in a game—or an important decision—is trying to outsmart the other, it might be time to flip a coin.

On our third trek through the foothills of game theory, let’s leave the wilds of Titanium Chef behind. Instead, imagine you are playing a game in which you hold a black Go stone in one hand and a white Go stone in the other. Your opponent must choose the hand holding the white stone. If she chooses correctly, she wins $1 from you; if she does not, she pays you $1.

Now imagine that your opponent is super-intelligent and will always outguess you. If you intentionally hide the white stone in your right hand, she will choose that hand. If you decide that she knows you will hide the stone in your right hand, and you try to outsmart her and hide it in your left, she will know that you know she knows, and she will decide to pick your left hand. No matter which hand you decide to hide the stone in while trying to outthink her, she will always be able to outthink you and pick the correct hand.

In this situation, the optimal strategy is to shake the Go stones in your cupped hands so that even you do not know which is which, and randomly take one in each hand. In fact, that is always your optimal strategy in this game against a rational opponent, and you must usually assume your opponent is rational. Similarly, your opponent’s rational strategy is to flip a coin to determine which hand to pick. You can never expect to do better than 50:50 against a rational opponent in this game, no matter which side you are on, and your optimal strategy is always a randomly chosen 50:50. Therefore, sometimes it’s simply best not to overthink things!
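To see why 50:50 is the only safe choice, it helps to put numbers on it. The following Python sketch uses hypothetical function names. It computes what the hider can count on winning per round when the white stone goes in the right hand with probability p and the opponent knows p and always picks the better hand. Any lopsided p gives her an edge. The guaranteed result falls to -1/2 at p = 1/4 or 3/4 and to -1 at p = 0 or 1. Only p = 1/2 brings it up to 0, which is the best this game allows.

```python
from fractions import Fraction


def hider_payoff(p_right: Fraction, seeker_picks_right: bool) -> Fraction:
    """Hider's expected winnings per round when the white stone is in the
    right hand with probability p_right and the seeker picks a fixed hand."""
    p_correct = p_right if seeker_picks_right else 1 - p_right
    # Seeker guesses right: hider pays $1. Seeker guesses wrong: hider wins $1.
    return (1 - p_correct) - p_correct


def guaranteed_payoff(p_right: Fraction) -> Fraction:
    """What the hider can count on against a seeker who knows p_right and
    always picks whichever hand is better for her."""
    return min(hider_payoff(p_right, True), hider_payoff(p_right, False))


if __name__ == "__main__":
    for p in (Fraction(0), Fraction(1, 4), Fraction(1, 2), Fraction(3, 4), Fraction(1)):
        print(f"P(white in right hand) = {p}: guaranteed {guaranteed_payoff(p)}")
```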

In Action

What situations does this hack apply to? Randomness is needed in games when having to go first would be a disadvantage. Consider Rock Paper Scissors (RPS). A player who goes first (this is never supposed to happen in the real game) will always lose to a rational opponent, who will play the perfect countermove: Rock to smash Scissors, Paper to wrap Rock, and Scissors to cut Paper. You can model a super-intelligent opponent simply as someone who always gets to go last.1
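The idea that a super-intelligent opponent is just one who moves last fits in a few lines of Python. The `counter` and `outcome` helpers here are illustrative names, not from any library. Whatever you play first, the last mover answers with its counter, and you lose every time.

```python
# Each key beats its value: Rock smashes Scissors, Paper wraps Rock,
# Scissors cut Paper.
BEATS = {"Rock": "Scissors", "Paper": "Rock", "Scissors": "Paper"}


def counter(move: str) -> str:
    """Return the move that beats `move`: what an opponent who sees your
    move first (who "goes last") will always play."""
    for winner, loser in BEATS.items():
        if loser == move:
            return winner
    raise ValueError(f"unknown move: {move}")


def outcome(mine: str, theirs: str) -> int:
    """+1 if my move wins, -1 if it loses, 0 for a tie."""
    if mine == theirs:
        return 0
    return 1 if BEATS[mine] == theirs else -1


if __name__ == "__main__":
    for first in BEATS:
        last = counter(first)
        print(f"{first} goes first, opponent answers {last}: {outcome(first, last)}")
```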

Extending our reasoning about the hidden-stone game, it’s not hard to see that the perfect RPS strategy against a perfect, rational opponent is one-third Rock, one-third Paper, and one-third Scissors, all chosen at random (perhaps by rolling dice). In terms of game theory, this is a mixed strategy equilibrium, meaning you are randomly mixing your strategies of Rock, Paper, and Scissors.
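You can also check the equilibrium claim numerically. This sketch uses hypothetical helper names and exact fractions to avoid rounding noise. It measures how much a rational opponent who knows your mix could win per round by always playing her single best reply. The uniform one-third mix gives her nothing to exploit (0 per round). A tilt toward Rock (1/2 Rock, 1/4 Paper, 1/4 Scissors) lets her win 1/4 per round by always playing Paper.

```python
from fractions import Fraction

MOVES = ("Rock", "Paper", "Scissors")
# Each key beats its value.
BEATS = {"Rock": "Scissors", "Paper": "Rock", "Scissors": "Paper"}


def outcome(mine: str, theirs: str) -> int:
    """+1 if my move wins, -1 if it loses, 0 for a tie."""
    if mine == theirs:
        return 0
    return 1 if BEATS[mine] == theirs else -1


def expected_vs(mix: dict, reply: str) -> Fraction:
    """My expected score per round if I randomize according to `mix`
    (move -> probability) and the opponent always plays `reply`."""
    return sum((p * outcome(move, reply) for move, p in mix.items()), Fraction(0))


def exploitability(mix: dict) -> Fraction:
    """How much per round an opponent who knows `mix` can win by always
    playing the single reply that does best against it."""
    return max(-expected_vs(mix, reply) for reply in MOVES)


if __name__ == "__main__":
    third = Fraction(1, 3)
    uniform = {"Rock": third, "Paper": third, "Scissors": third}
    rock_heavy = {"Rock": Fraction(1, 2), "Paper": Fraction(1, 4), "Scissors": Fraction(1, 4)}
    print("uniform mix:", exploitability(uniform))
    print("Rock-heavy mix:", exploitability(rock_heavy))
```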

Tip

“Find Dominant Strategies” [Hack #46] and “Eliminate Dominated Strategies” [Hack #47] discuss what to do in situations when randomness is not appropriate.

In Real Life

My friends and I play a game called Zendo,2 in which a player called the Master creates a secret game rule that determines whether any sculpture (called a Koan) made by the other players (called Students) “has the Buddha Nature.” As you might guess, the theme of the game is study in a Zen Buddhist monastery. However, a better theme for the game might have been scientific induction, as players attempt to use inductive logic to guess the secret rule. In fact, Zendo is heavily influenced by an earlier game called Eleusis3 in which the theme of Scientists playing against Nature is made explicit. Both games are excellent training in inductive reasoning skills [Hack #67].

Zendo, and even more so Eleusis, can be summed up by this quote from Good Omens, written by Neil Gaiman and Terry Pratchett:

God does not play dice with the universe; He plays an ineffable game of His own devising, which might be compared, from the perspective of any of the other players, to being involved in an obscure and complex version of poker in a pitch-dark room, with blank cards, for infinite stakes, with a Dealer who won’t tell you the rules, and who smiles all the time.4

When a Student creates a Koan and cries “Mondo!” all Students have the opportunity to guess whether the new Koan has Buddha Nature. If you guess correctly, you get a point, which is good; it lets you attempt to guess the secret rule later.

Interestingly enough, players get to vote on Koans by proffering a Go stone in their closed fist, in a way similar to the guessing game described earlier. My friends and I often find when we are playing the game as Students that in some sense we are trying to outsmart not only the Master, but also ourselves; we are often so far from learning the secret rule that whatever we guess consciously is comically bound to be wrong. In this case, we shake two Go stones in our cupped hands and proffer whichever one ends up in our right hand. This strategy tends to maximize our profit: the Master gives us points 50% of the time (more or less), we still learn something, and we have enough points when we need them to guess the secret rule.

Just so, sometimes in that “real” game against Nature, you have to trust and let go. Talk to a stranger. Delurk on a mailing list. Put up a blog or a wiki and see who stops by. Sometimes it’s spammers, sometimes it’s cranks, and sometimes it’s someone you really want to know. A random play in the game of life [Hack #49] can sometimes have a big payoff.

End Notes

Dixit, Avinash K., and Barry J. Nalebuff. 1991. Thinking Strategically. W.W. Norton & Company, Inc.

Heath, Kory. 2003. “Zendo.” http://www.wunderland.com/WTS/Kory/Games/Zendo.

Abbott, Robert. “Eleusis.” http://logicmazes.com/games/eleusis.html.

Gaiman, Neil, and Terry Pratchett. 1992. Good Omens: the Nice and Accurate Prophecies of Agnes Nutter, Witch: a Novel. Berkley Books.

Roll the Dice

Break out of your rut by making lists of tasks, recreations, books to read, or research avenues to investigate—including some you don’t want to—and rolling the dice to determine your fate.

Dicing, or dice living, is a decision-making technique developed by “Dice Man” Luke Rhinehart (pen name for George Cockcroft). While dicing is not a panacea, it is a many-sided remedy. It can:

Break through your analysis paralysis

Bring more fun into your life

Introduce novelty and unpredictability into the way you do things

As Rhinehart writes:1

Dicing is simply one of many ways to attack seriousness. If you list six options, some moral, some immoral, some ambitious and some trivial, some spiritual and some lusty, and let chance decide what you do, then you are in effect challenging the seriousness of your acts, you are saying it doesn’t matter what I do. When the die chooses an action I choose to do it with all my heart—that is the dice-person’s controlled folly.

Controlled folly is a term that Rhinehart appropriated from the fiction of Carlos Castaneda. To act with controlled folly is to act with the belief that your actions are useless, but to do them anyway, and to care about them. This is the essence of dicing.

In Action

The next time you’re bored or depressed, make a list of six or more possible options (for example, reading a random book, getting drunk, having sex, writing a book, working overtime, going to the gym). Then, roll a die or dice to choose among them.
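With exactly six options, one ordinary die does the job. With more, be careful how you combine dice. The sum of two dice runs from 2 to 12 and lands on 7 far more often than on 2 or 12, so it does not give every option a fair chance. One workaround is to read several six-sided rolls as the digits of a base-6 number. You then reroll whenever that number falls outside the largest range that divides evenly by the number of options. The Python sketch below does this; its function names are hypothetical.

```python
import random


def roll_d6(rng) -> int:
    """One roll of a fair six-sided die."""
    return rng.randint(1, 6)


def dice_choice(options, rng=random):
    """Pick one option uniformly using only six-sided dice.

    Rolls k dice and reads them as a base-6 number from 0 to 6**k - 1,
    rerolling when the number falls past the largest multiple of
    len(options) (rejection), so every option stays equally likely."""
    n = len(options)
    if n == 0:
        raise ValueError("need at least one option")
    k = 1
    while 6 ** k < n:
        k += 1
    span = 6 ** k
    limit = span - span % n  # largest multiple of n that fits in the span
    while True:
        value = 0
        for _ in range(k):
            value = value * 6 + (roll_d6(rng) - 1)
        if value < limit:
            return options[value % n]


if __name__ == "__main__":
    options = ["Read a random book", "Get drunk", "Have sex", "Write a book",
               "Work overtime", "Go to the gym", "Go skydiving"]
    print(dice_choice(options))
```

You can do the same thing by hand. With eight options, roll two dice and compute (first - 1) × 6 + (second - 1). Reroll if the result is 32 or more. Otherwise, take the remainder after dividing by 8, where 0 means the first option.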

Tip

It’s important to include on your list some options that are distasteful to you, some that are boring, and some that are frightening (skydiving, for example), at least from time to time. Part of the value of using dice to decide is to create the possibility of shaking up your life when you shake the dice.

Whichever option comes up, it’s crucial to the hack that you be completely obedient to the dice roll and do what it “tells” you to do. Otherwise, why roll the dice in the first place, except to gain insight into what you really want?

Dicing can also involve randomly role-playing [Hack #31] characters, emotions, and relationships with other people (patient/therapist, parent/child, lovers, enemies, and so on), always with controlled folly.

The Code

You can use the pyro program [Hack #20] to generate random options for you even more flexibly and powerfully than dice will.

Since my wife and I live in a Seattle suburb, we use pyro to generate things to do in the Seattle area. Here’s our datafile, whattodo.dat:

#@activity@
@funout@
@funout@
Order out from @takeout@. @funhome@
Stay home. @funhome@
#@funhome@
Cook a tasty meal together.
Design a game.
Go catalog shopping.
Just talk together.
Listen to @webradio@.
Listen to some audio together (roll for which).
Make jewelry together.
Play @game@.
Randomly surf the Web via @randomweb@.
Read aloud.
Reorganize @mess@.
Watch a movie at home (make a list and roll).
Work on a self-help book together.
Work on the Glass Bead Game together.
Write some parody lyrics.
#@funout@
Drive randomly, starting in @watown@.
Drive through downtown Seattle looking for adventure.
Eat out; make a list of restaurants and roll.
Go (window) shopping at @store@.
Go letterboxing.
Go on a thrift store expedition.
Go people-watching at @park@.
Go to Marymoor Park with the dogs.
See a movie (make a list of current movies and roll).
Take a road trip to Portland.
Visit a cafe.
Visit the @museumetc@.
Visit the library.
#@game@
Blokus
Boggle
Can't Stop
Carcassonne
Cathedral
Focus
Pickomino
Ploy
Rummy
Scrabble
Ultima
#@mess@
the bedroom
the computer room
the garage
#@museumetc@
Asian Art Museum
Experience Music Project
Science Fiction Museum
Seattle Aquarium
Seattle Art Museum
Woodland Park Zoo
#@park@
Occidental Park
the Des Moines pier
#@randomweb@
RandomURL.com
random Wikipedia pages
random pages from H2G2
#@store@
@bookstore@
@gamestore@
Fry's
Ikea
Math'n'Stuff
Silver Platters
#@bookstore@
Elliott Bay
Half Price Books
Third Place Books
Twice-Sold Tales
some chain bookstore that begins with 'B'
the University Bookstore
#@gamestore@
Game Wizard
Gary's Games and Hobbies
Genesis Games and Gizmos
Uncle's Games
#@takeout@
Chopsticks
Golden Dynasty
Jet City Pizza
Longhorn Barbecue
#@watown@
Bellevue
Redmond
Renton
Seattle
Tacoma
Tukwila
#@webradio@
BBC7
KEXP
This American Life

If you use this data, customize it with your own interests and locales; otherwise, it will be of no use to you, except to spy on what a tame life I lead in Seattle.
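The pyro script itself is written in Perl [Hack #20] and isn't reproduced here. To show the idea behind a datafile like whattodo.dat, here is a much smaller Python sketch of the same kind of expansion. It is not pyro and not a port of it, and its parsing rules are assumptions:

- A line of the form #@name@ starts a list.
- Every following non-blank line is an item in that list.
- Each @name@ inside an item is replaced, recursively, by a randomly chosen item from that list.

Under these rules, listing @funout@ twice under activity simply makes a night out twice as likely as ordering out or staying home. The SAMPLE text is a trimmed excerpt of the datafile with most items removed.

```python
import random
import re

HEADER = re.compile(r"#@(\w+)@")  # assumed list-header syntax
REF = re.compile(r"@(\w+)@")      # assumed reference syntax inside items


def parse_lists(text: str) -> dict:
    """Parse a pyro-style datafile (assumed format): a line '#@name@'
    starts a list, and each following non-blank line is one item."""
    lists, current = {}, None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        header = HEADER.fullmatch(line)
        if header:
            current = header.group(1)
            lists[current] = []
        elif current is not None:
            lists[current].append(line)
    return lists


def expand(lists: dict, name: str, rng=random) -> str:
    """Choose a random item from list `name`, then recursively replace each
    @other@ reference in it with a random item from list `other`."""
    item = rng.choice(lists[name])
    return REF.sub(lambda m: expand(lists, m.group(1), rng), item)


SAMPLE = """\
#@activity@
@funout@
@funout@
Order out from @takeout@. @funhome@
#@funout@
Visit the library.
#@funhome@
Read aloud.
#@takeout@
Chopsticks
"""

if __name__ == "__main__":
    print(expand(parse_lists(SAMPLE), "activity"))
```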

Running the Hack

See the “How to Run the Programming Hacks” section of the Preface if you need general instructions on running Perl scripts. If Perl is installed on your system, save the pyro script and the whattodo.dat file in the same directory, and then run pyro by typing the following command within that directory:

perl pyro whattodo.dat activity

If you’re on a Linux or Unix system, you might also be able to use the following shortcut:

./pyro whattodo.dat activity

The following console session shows the generation of weekend plans for Friday, Saturday, and Sunday nights in the Seattle area:

$ ./pyro whattodo.dat activity
Order out from Jet City Pizza. Listen to BBC7.
$ ./pyro whattodo.dat activity
Order out from Golden Dynasty. Design a game.
$ ./pyro whattodo.dat activity
See a movie (make a list of current movies and roll).

The key to “dicing up” activities with pyro is not to generate a huge list of things to do, then pick and choose (as you might when you morphologically force connections [Hack #20]), but to generate one activity for an evening and choose to do whatever comes up with the whole of your heart.

End Notes

Rhinehart, Luke. 2000. The Book of the Die: A Handbook of Dice Living. The Overlook Press.