Review

Kim R. Fowler, IEEE Fellow, Consultant

Review is a set of feedback paths within system development. The act of review has two primary objectives: (i) to confirm correct design and development and (ii) to expose and identify problems with design, development, or processes.

This chapter outlines the processes and procedures for various types of reviews, provides the formats for review, and describes when to conduct reviews. It gives examples and templates for minutes, action items, and agendas. It has checklists for preparing and debriefing a formal review and for basic topics to cover in formal design reviews.

Keywords

PERRU; peer review; code inspection; design review; minutes; action items; CoDR; SysDR; PDR; CDR; TRR

Introduction to review

Review is a set of feedback paths within system development. The act of review has two primary objectives: (i) to confirm correct design and development and (ii) to expose and identify problems with design, development, or processes.

The US Food and Drug Administration (FDA) defines design review in general terms: “Design review means a documented, comprehensive, systematic examination of a design to evaluate the adequacy of the design requirements, to evaluate the capability of the design to meet these requirements, and to identify problems” (21 CFR 820.3(h)) [1]. This definition of design review encompasses a variety of reviews, reviewers, processes, and procedures.

The California Department of Transportation defines technical review along expanded lines: “Technical reviews suggest alternative approaches, communicate status, monitor risk, coordinate activities within multi-disciplinary teams, and identify design defects. Technical reviews can monitor and take action on both technical and project management metrics that define progress” [2].

The Wikipedia definition focuses on using technical peer reviews to find problems: “Technical peer reviews are a well defined review process for finding and fixing defects, conducted by a team of peers with assigned roles. Technical peer reviews are carried out by peers representing areas of life cycle affected by material being reviewed (usually limited to 6 or fewer people). Technical peer reviews are held within development phases, between milestone reviews, on completed products or completed portions of products” [3].

The NASA Systems Engineering Handbook describes the mechanisms of technical reviews: “Typical activities performed for technical reviews include (1) identifying, planning, and conducting phase-to-phase technical reviews; (2) establishing each review’s purpose, objective, and entry and success criteria; (3) establishing the makeup of the review team; and (4) identifying and resolving action items resulting from the review” [4].

The IEEE 1028–1998 Standard identifies the following five types of reviews:

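1. Management reviews, e.g., control gates for decision processes during development

2. Technical reviews, e.g., evaluating a design or product for suitability and conformance to specifications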

3. Inspections, e.g., identifying errors or deviations from standards and specifications

4. Walkthroughs, e.g., authors of designs present and explain to peers who then examine the requirements or the design

5. Audits, which are part of the configuration management process.

The Project Plan or the Systems Engineering Management Plan (SEMP) should establish the process for conducting reviews. The plan should also describe the content and level of formality for different reviews and how to tailor each review for its purpose and type [2].

Part of a complete feedback system

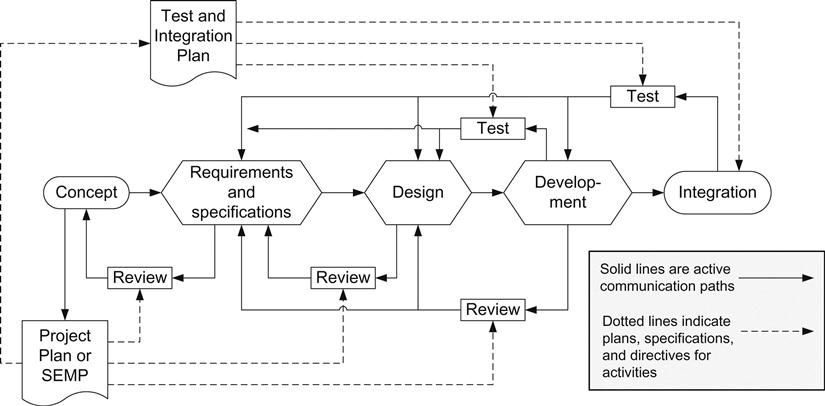

Design reviews, coupled with test and integration, are the primary feedback activities to assure adherence to and achievement of the project goals (Figure 13.1). These activities confirm whether your system’s design and development are as you planned them to be.

PERRU

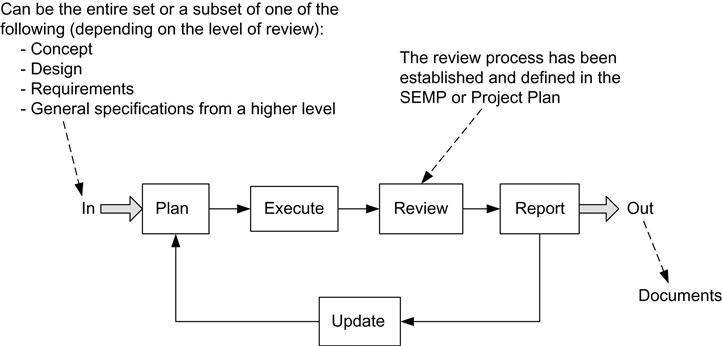

Every review should have a set of activities that are both consistent and thorough. Reviews fit into the system engineering structure that can be summarized in the acronym PERRU: plan, execute, review, report, and update. Figure 13.2, which is simplified from the original diagram in Chapter 1, illustrates this structure and data flow. The structure is self-similar: the same five activities recur at every level of every project or product development, from high-level processes down to finely detailed, low-level procedures.
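The self-similarity of PERRU can be sketched as a recursive walk over a nested set of processes. This is only an illustration: the tuple representation and the function name `perru_trace` are hypothetical, and running the subprocesses inside the parent's "execute" step is an assumption made for the sketch, not something Figure 13.2 specifies.

```python
PERRU_STEPS = ("plan", "execute", "review", "report", "update")

def perru_trace(process, depth=0):
    """Walk a nested process tree; every level runs the same five steps.

    process is a (name, subprocesses) tuple, where subprocesses is a list
    of further (name, subprocesses) tuples.
    """
    name, subprocesses = process
    trace = []
    for step in PERRU_STEPS:
        trace.append((depth, name, step))
        if step == "execute":
            # Assumption: lower-level processes run while the parent executes.
            for sub in subprocesses:
                trace.extend(perru_trace(sub, depth + 1))
    return trace

project = ("project", [("module", [])])
for depth, name, step in perru_trace(project):
    print("  " * depth + f"{name}: {step}")
```

The printout shows the module running its own plan, execute, review, report, and update inside the project's cycle.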

Review is necessary

Review is a necessary and critical part of system development. Unfortunately, review receives scant attention in too many situations. You should carefully consider and plan review and then perform it regularly.

General processes and procedures

The sum total of reviews is a multilevel effort within every project. Some reviews are small groups that meet for a short duration; examples include code walk-throughs and module reviews. Some reviews are group meetings to assess project progress or design. Some reviews are formal design reviews and sign-offs. Each has its structure; each has its purpose.

The Defense Acquisition University (DAU) in the United States describes some of the characteristics and components of a technical review. The website titles the description “Technical Review Best Practices: Easy in principle, difficult in practice.” Here are some of its points [5]:

• Steps, components, and characteristics for best practices in technical reviews include the following:

– Be event-based, e.g., the end of a design phase, such as a preliminary design review (PDR).

– Define objective criteria, up-front, for entry to and exit from each review; the Defense Acquisition Guidebook provides general suggestions for criteria.

– Involve and engage technical authorities and independent subject-matter experts.

– The Review Chair should be independent of the program team. (Note: in small development teams and companies this is seldom practical.)

– Reviews are only as good as those who conduct them.

• Review status of program development from a technical perspective.

– Involve all affected stakeholders.

– Involve all technical products, such as specifications, baselines, and risk assessments, that are relevant to the review.

• System-level reviews should occur after the corresponding reviews of the subsystems.
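The last point, that a system-level review follows the reviews of its subsystems, is an ordering constraint that you can check mechanically when you schedule reviews. Here is a minimal sketch using the standard-library `graphlib` module (Python 3.9 or later). The review names and the dictionary `review_prerequisites` are hypothetical examples, not taken from the DAU guidance.

```python
from graphlib import TopologicalSorter

# Hypothetical breakdown: each review maps to the reviews that must be
# completed before it can be held.
review_prerequisites = {
    "system PDR": {"power subsystem PDR", "software subsystem PDR"},
    "power subsystem PDR": {"battery module review"},
    "software subsystem PDR": set(),
    "battery module review": set(),
}

def review_order(prerequisites):
    """Return one valid order in which every review follows its prerequisites."""
    return list(TopologicalSorter(prerequisites).static_order())

order = review_order(review_prerequisites)
print(order)
```

If the prerequisites contain a cycle, `static_order()` raises `graphlib.CycleError`, which signals a scheduling mistake that needs correcting before the reviews are planned.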

General outline for review

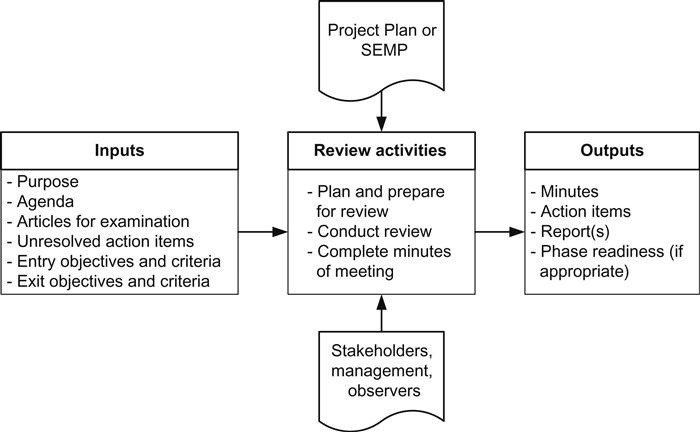

The California Department of Transportation reinforces these objectives with more thorough details [2]. Figure 13.3 outlines the basic flow and structure of a review. The following description is NOT an exhaustive list of necessary review activities for every occasion; it should serve you well in many situations, though.

You must clearly establish and publicize the purpose for the meeting and define the expected outcomes. Provide the required review inputs, which are products from the current phase. Also provide the unresolved action items from previous reviews; these action items may be carried over for either continuing discussion or decision. Finally, establish the entry and exit criteria.

The Project Plan (or SEMP) should describe the process to perform technical reviews. Involve the appropriate stakeholders in the review for optimum communication and decision making.

The outputs from a technical review include a number of potential items:

• Action items, which are critical concerns, assigned by the review team to appropriate staff, to be addressed, fixed, modified, eliminated, or reviewed before specified dates.

• Documented review of decisions and action item progress, which includes acceptance, rework with comments, deviations, and waivers to particular developments.

• Feedback to participants and management about the results of the meeting.

• A record of the meeting, sent to the participants for their review and comments. Accurate and complete (not necessarily extensive) minutes ensure that decisions, actions, and assignments are properly documented.

Plan a schedule of reviews. Plan specific activities for each meeting: who should attend; the technical and formal level of the review; the agenda; the input components, e.g., document drafts, software modules, or hardware; and the output components, e.g., minutes, action items, and decisions. State the criterion for each decision, such as 100% consensus, majority agreement, or project manager only. In preparing for each review, define the purpose, objectives, and intended outcomes of the meeting. Identify participants and their roles, invite them, and distribute the agenda and appropriate background material.
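Stating the decision criterion ahead of time removes ambiguity when the vote is taken. The following is a minimal sketch of the three criteria mentioned above. The names `DecisionRule` and `decision_passes` are hypothetical, and reading "majority" as strictly more than half of the recorded votes is an assumption.

```python
from enum import Enum

class DecisionRule(Enum):
    CONSENSUS = "100% consensus"
    MAJORITY = "majority agreement"
    PROJECT_MANAGER_ONLY = "project manager only"

def decision_passes(rule, votes, project_manager):
    """Apply a decision rule to recorded votes.

    votes maps each voting attendee to True (approve) or False (reject).
    project_manager is the key in votes for the project manager.
    """
    if not votes:
        raise ValueError("no votes recorded")
    if rule is DecisionRule.CONSENSUS:
        return all(votes.values())
    if rule is DecisionRule.MAJORITY:
        # Assumption: strictly more than half must approve.
        return sum(votes.values()) * 2 > len(votes)
    if rule is DecisionRule.PROJECT_MANAGER_ONLY:
        return votes[project_manager]
    raise ValueError(f"unknown rule: {rule}")

votes = {"chair": True, "project manager": False, "inspector": True}
print(decision_passes(DecisionRule.CONSENSUS, votes, "project manager"))  # prints False
print(decision_passes(DecisionRule.MAJORITY, votes, "project manager"))   # prints True
```

The same set of votes gives different outcomes under different rules, which is why the rule belongs in the plan and not in the discussion.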

Conduct the meeting in the following manner: Start on time. Clearly state the purpose of the review and provide an updated agenda to the attendees. Record the attendees present and their roles, e.g., presenter, chairperson, or observer, and, if need be, collect up-to-date contact information, such as e-mail, phone number, department, division, organization, or company. Review the ground rules for the review prior to discussion. Conclude one agenda item at a time. Manage discussions to focus on the topic. Document all decisions, actions, and assignments in the minutes. At the close of the review, summarize all decisions, actions, and assignments, and go over the agenda items and assignments for the next meeting. Confirm date, time, and place of the next meeting. Keep track of the time and then end on time.

After the review, send out a complete set of minutes that include all decisions, action items, and assignments. Follow up with the attendees to make sure the minutes are correct and complete. Periodically check on the progress of critical action items from the review.

Tailoring your review

Tailor the reviews to the size, type, and formality of the project. For example, if the project is a small COTS integration to control some short-term university experiments, then you may only need monthly reviews. The reviews can be informal and attended by the project engineer and the principal investigator. The meeting minutes may only be a paragraph or two that you e-mail out to the participants. On the other hand, developing a medical device may require a weekly status meeting of every engineering team, a monthly status meeting of the entire project, peer review of every completed module, system reviews of modules and integration, phase reviews, and stacks of documents.

Types of review

Peer review: Peer review is a very effective form of review; it involves team members looking over the logic, rationale, and implementation of a module, which can be software, electronic, or mechanical. Peer review generally refers to small, fairly short meetings (less than one and a half hours) where colleagues examine a basic, small unit or module. An example module might be a software routine of less than 60 lines. Another example module might be a circuit board with 50 or fewer components. Even though peer review may be informal, it still should have a stereotyped format including agenda, checklists, action items, and minutes—albeit simple versions of each.

Code inspections: Software code walk-throughs are a type of peer review. They are highly effective and an excellent way to encourage proper designs and good development processes. For some types of products, such as medical devices, code inspections are an important part of the formal development. You should still have procedures, which can be simple and straightforward, for recording notes or minutes and for maintaining a database of action items.

Design reviews: For larger, longer, and more critical programs, regular design reviews monitor progress, status, and conformance to requirements. The end of each development phase almost always has a formal design review; it is a high-level design review that ensures that the project’s architecture is balanced and appropriate so that the system’s functionality and performance meet the intended need. Design reviews are formal meetings and should have independent reviewers who are not directly associated with the project for more objective assessment.

Planning review: Verifies that plans, schedules, and activities are appropriate and timely for the project.

Concept of operations review: Ensures that the system operation meets the intended use and addresses the needs of the stakeholders. This review is reserved for large, complex projects where the concept of operations drives many requirements.

Requirements review: Ensures that the requirements meet the intent of the project. The requirements review checks that they are complete, appropriate, and address the user needs.

Component-level detailed design review: This is a particular form of peer review; it checks the logic, rationale, and implementation of the detailed design for a module. This can be a major review or may be a subset of a larger phase review.

Test readiness review (TRR): A review to confirm that components and subsystems are ready for verification tests. For system integration, the TRR examines the results of the verification tests and addresses concerns for integration.

Operational review: Ensures that the system is ready for deployment. This review confirms that training, support, and maintenance for the system are in place.

Frequency of review

The Project Plan or SEMP should establish a regular schedule for the reviews or at least define when reviews will follow specific events. In some instances, such as with code inspection, a schedule is not possible, but code inspections should occur after the completion of each module. Regardless, the Project Plan or SEMP should state either the timing or the criteria for holding a review.

Course of action, changes, and updates following review

Following each review, the program manager, system engineer, or appointed staff should monitor the effort to address the action items that derived from the review. An action item should be a clearly defined effort, focused on a single concern. Sometimes an action item can become a job order or a task assignment.

Managing the action items and the results of reviews is critical to a project. No development effort goes forward without some adjustment recommended in review. Here are the primary sources that record issues for adjustment:

• Minutes—peer reviews, design reviews, control board meetings, and failure review board meetings

• Logs—problem reports/corrective actions, engineering change requests, and engineering change notices

• Test results—bench tests, unit tests, system tests, integration, and acceptance tests

• Communications—memos, e-mail messages, notes, letters, and customer audits.

Roles and responsibilities

Project staff and consultants conduct internal reviews. Customers, audit agencies, and consultants may join project staff for design reviews and external reviews. The Project Plan or SEMP should determine when the reviews and audits may take place, though some audits might be unannounced or arranged later in the project, particularly if the project is mission-critical or safety-critical and certification or approval is required, e.g., FDA approval.

Formal reviews, particularly for project development in mission-critical or safety-critical markets, have a structured set of responsibilities. Less formal development can still benefit from these definitions and this framework.

Moderator: Conducts the technical peer review process and collects inspection data. Leads the technical peer review but does not perform the rework [3].

Inspectors: Review the design or code or plans and search for defects in work products [3].

Author: Responsible for the information about the work product and its design or code or plans; usually the designer of that portion of the project. Responsible for correcting all major defects and any minor defects as cost and schedule permit [3].

Reader: Guides the team through the work product during the technical peer review meeting. Reads or paraphrases each work product in detail. Performs duties of an inspector in addition to the reader’s role. (This is the one role that is only used in the largest of projects. I have never encountered a situation with a reader.) [3]

Recorder: Accurately records each defect found during an inspection meeting; typically each defect becomes an action item. May perform the duties of an inspector [3].

If your company has a Control Board, its function is to confirm implementation of the revisions or updates derived from reviews. For smaller projects (fewer than 15 or 20 staff members involved), the program manager or system engineer performs this function.

Components of a review

Agenda

All reviews should have an agenda, even if it is very brief. The agenda may just be a reference to a company procedure or a short set of e-mailed instructions. Generally, an agenda should have the following:

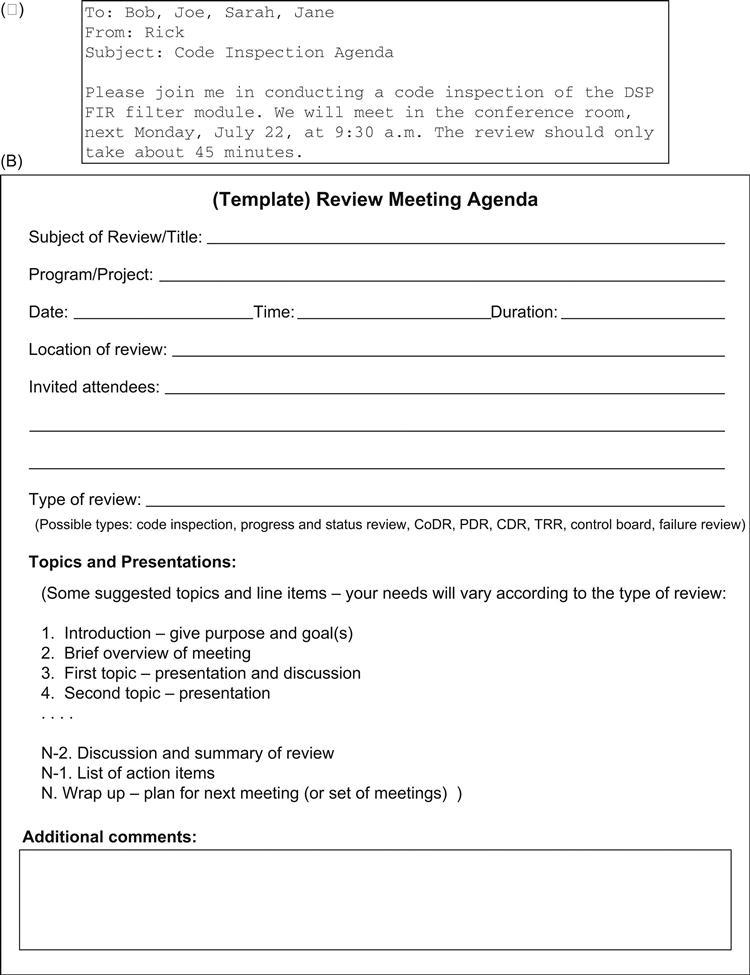

Figure 13.4 illustrates two examples of agendas for a technical review. The first, in Figure 13.4A, is simple and suffices for many situations. The second, in Figure 13.4B, is formal and detailed; it suits more complex projects.

Most agendas are specific for the topic and the project. Developing templates for the various reviews within a project may be useful for your company or group; this would be a forward-looking practice to save time on future projects. Unfortunately, not everyone takes the time to do this. If you happen to be reviewing company procedures and processes for quality assurance, the agenda for review or audit should address these questions:

Minutes

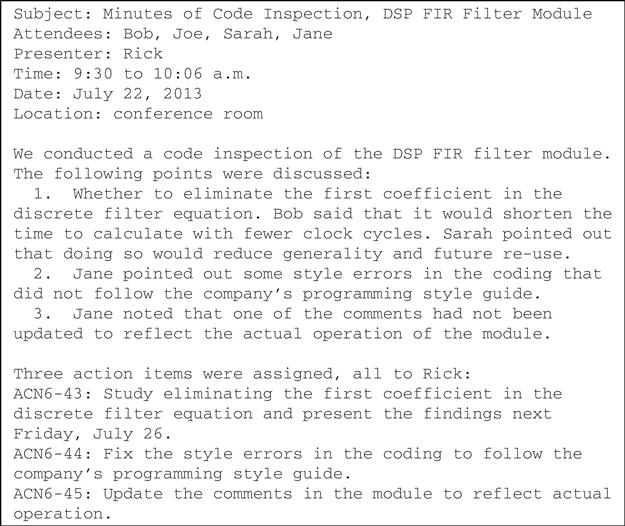

Minutes are the archival record of the review. Minutes should identify the primary topics discussed and the main points presented. They should reference any action items generated during the review. Minutes do not need exhaustive detail. Figure 13.5 illustrates one example of minutes. A basic format for the minutes from a review will include:

Action items

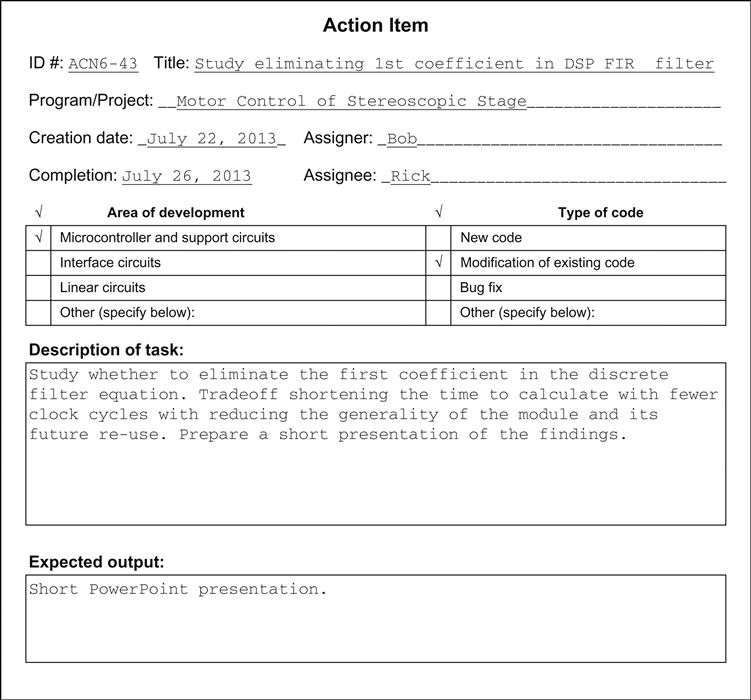

Reviews should generate action items when identified issues need addressing. All action items should be tracked in a database. Each action item should have the following fields:

Figure 13.6 illustrates an example action item.
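Even a small project can track action items with a very simple record. The sketch below is one possible shape, assuming only what this chapter says about action items: each addresses a single concern, is assigned to staff, has a due date, and comes out of a particular review. The class name, field names, and sample entries are hypothetical and are not a transcription of Figure 13.6.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class ActionItem:
    item_id: str
    concern: str          # one clearly defined concern per item
    assignee: str
    due: date
    source_review: str    # the review that generated the item
    closed: bool = False

def overdue_items(items, today):
    """Return the open action items whose due date has passed."""
    return [item for item in items if not item.closed and item.due < today]

items = [
    ActionItem("AI-001", "Confirm thermal margin of power board",
               "J. Doe", date(2024, 3, 1), "PDR"),
    ActionItem("AI-002", "Update interface control document",
               "A. Smith", date(2024, 4, 15), "PDR"),
    ActionItem("AI-003", "Rerun unit tests for motor driver",
               "J. Doe", date(2024, 2, 1), "peer review", closed=True),
]

late = overdue_items(items, today=date(2024, 3, 20))
print([item.item_id for item in late])  # prints ['AI-001']
```

A periodic check like `overdue_items` supports the follow-up on critical action items between meetings; a real project would more likely keep the records in a database or issue tracker than in a list.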

Checklist

Here are some items that might go into a checklist to ensure thorough coverage in preparing and conducting reviews [2].

1. Does the Project Plan or SEMP contain a section with a plan for reviews? If so, does the plan contain:

• The number or frequency of the reviews?

• The process for carrying out each review?

• Roles identified for each review?

• Level of formality identified for each review?

• A requirement that all action items be completed?

• A requirement that any unresolved actions receive program manager sign-off?

2. Here are some items to consider in preparing a larger review—particularly formal reviews:

• Identify the attendees and their roles as soon as possible.

• Check the location of the technical review for size, climate, configuration, equipment, furniture, noise, and lighting.

• Make sure to identify the purpose and outcomes expected.

• Develop an agenda and distribute it 10 days to two weeks before the meeting.

• Distribute supporting and background material to the attendees 10 days to two weeks before the meeting.

• Bring forward all unresolved assignments, identified in the previous meeting.

• Distribute the ground rules for the meeting beforehand and discuss them before the start of discussion.

• Create an attendance roster for the meeting that requests up-to-date contact information from each attendee.

3. Following a large meeting, here are some items to debrief:

• How well did the presenters prepare for the meeting?

• Were all issues resolved or identified?

• What were the status and recommended resolutions?

• Did the meeting start on time?

• Did all attendees make introductions, or were they introduced?

• Were the purpose of the meeting and the expected outcomes clearly stated?

• Did you distribute an updated agenda with the priorities assigned for each agenda item?

• Was each agenda item concluded before discussing the next item?

• Did the minutes document all decisions, assignments, and actions?

• Were the meeting and the results summarized at the end of the meeting?

• Did the meeting end on time?

• Were the minutes distributed to the attendees?

• Were all critical assignments and action items followed up between meetings?

• Were the meeting facilities appropriate and useful?

• Were all the food and refreshments appropriately supplied?

4. Some suggested ground rules [2]:

• Tell it like it is, but respect, honor, and trust one another.

• Work toward consensus, recognizing that disagreements in the meeting are acceptable and not an indication of failure.

• Upon agreement, we all support the decision.

• Hold one conversation at a time.

• Focus on issues, not on personalities; actively listen and question to understand.

Peer review and inspection

Peer review is extraordinarily useful in catching many of the most difficult problems, such as errors in logic and misinterpreted customer intent. Peer review is primarily a technical discourse; it can address architecture, schematic capture, programming, and code development. Peer review can take several forms—inspection, code walk-throughs, technical presentation, and discussion. Peer review should always collect and archive notes, agenda, and minutes—however brief they may be. These archived items are useful when performing a root cause analysis of problems or preparing for future upgrades.

Internal review

Internal reviews are those reviews conducted within the project, by company staff, and for the good of the project. While they include peer reviews, they also include meetings to review status and progress. All internal reviews should always collect and archive notes, agenda, and minutes—however brief they may be.

Formal design review

For larger, longer, and more critical programs, regular design reviews monitor progress, status, and conformance to requirements. Design reviews are formal meetings and should have independent reviewers who are not directly associated with the project for more objective assessment. The review committee for each formal review should consist of at least four members plus a designated chairman, none of whom should be members of the project team. I have to say this rarely happens; it is the ideal, but most small companies do not have the people, time, or resources to devote to independent review. Instead, they will put together design reviews with members of the project team presenting and reviewing and invite a customer or the client to attend and critique.

Regardless of the format, you should send a review package to each reviewer about two weeks ahead of the formal design review. The review package should contain a copy of all the slides to be presented at the review along with appropriate background material, the agenda, and ground rules.

Types of design reviews

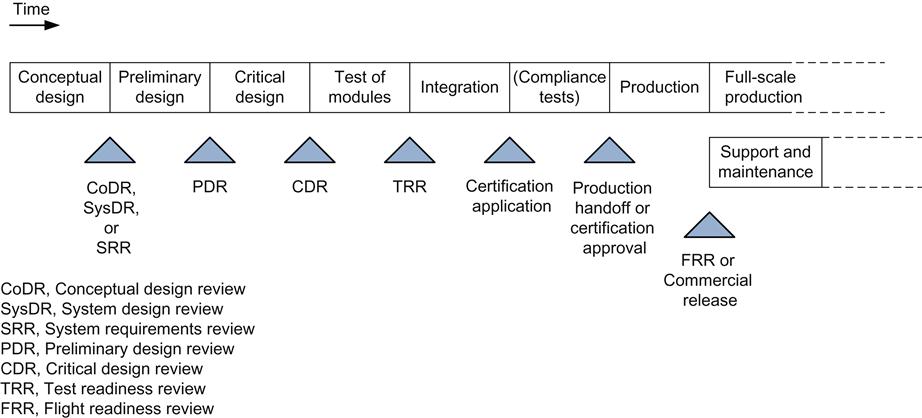

A number of design reviews can occur during the development timeline of an embedded system. Each one serves as a gateway to the next phase of the project. Figure 13.7 illustrates a timeline with example design reviews. I will describe some of the activities in these design reviews in the following subsections.
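The gateway idea can be expressed as a simple check before scheduling each review. The sketch below assumes a gate order of CoDR, PDR, CDR, and PRR, taken from the order of the subsections that follow. It also assumes a strict policy in which every earlier review is signed off and has no open action items before the next one is held. Your project may instead allow the next review to report on items still being closed. The names `GATES` and `can_hold` are hypothetical.

```python
# Assumed gate order, taken from the order of the subsections that follow.
GATES = ["CoDR", "PDR", "CDR", "PRR"]

def can_hold(review, signed_off, open_action_items):
    """Return True if `review` may be held under the assumed strict policy.

    signed_off: set of reviews already held and signed off.
    open_action_items: dict mapping a review to its count of unresolved
    action items (a missing entry counts as zero).
    """
    earlier = GATES[:GATES.index(review)]
    all_signed = all(gate in signed_off for gate in earlier)
    none_open = all(open_action_items.get(gate, 0) == 0 for gate in earlier)
    return all_signed and none_open

print(can_hold("PDR", {"CoDR"}, {"CoDR": 2}))  # prints False
print(can_hold("PDR", {"CoDR"}, {"CoDR": 0}))  # prints True
```

Projects that tailor their reviews can shorten or extend `GATES`; the check itself stays the same.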

Conceptual design review

The Conceptual Design Review (CoDR) closes the initial phase of the project. It can also be called the System Design Review (SysDR). The purpose of this phase is to develop a reasonable approach and architecture for the embedded system. The review examines the mission goals, objectives, and constraints; it also examines the first-pass requirements for the project and the approach to meet those requirements.

If the project is a larger, more complex system, such as a vehicle or medical device or spacecraft, you will probably hold another design review called the system requirements review (SRR). Usually the SRR takes place sometime toward the end of the concept phase or the beginning of the preliminary design phase. The US military describes it this way, “The SRR assesses the system requirements as captured in the system specification, and ensures that the system requirements are consistent with the approved materiel solution (including its support concept) as well as available technologies resulting from any prototyping efforts” [5].

Here are some example items that a CoDR or SysDR might address:

• Project organizational structure and interfaces

• Requirements process and management

– Mission: environment, available resources, interactions with other systems

– Performance: technical characteristics and constraints

– Major system functions and interfaces

• Research—literature, patent searches

• Trade studies to identify design constraints and feasibility

Preliminary design review

The Preliminary Design Review (PDR) closes the preliminary design phase of the project. A PDR should present the basic system in terms of the software, mechanical, power distribution, thermal management, and electronic designs with initial assessments for loads, stresses, margins, reliability, software requirements and basic structure, computational loading, design language, and development tools to be used in development. Finally, the PDR should present the preliminary estimates of weight, power consumption, and volume.

The US military describes a PDR this way, “The PDR establishes the allocated baseline (hardware, software, human/support systems). A successful PDR is predicated on the determination that the subsystem requirements; subsystem preliminary design; results of peer reviews; and plans for development, testing, and evaluation form a satisfactory basis for proceeding into detailed design and test procedure development” [5].

A PDR presents the design and interfaces through block diagrams, signal flow diagrams, and schematics. Within these topics, a PDR should present first concepts for logic diagrams, interface circuits, packaging plans, configuration layouts, preliminary analyses, modeling, simulation, and early test results. Here are some example items that a PDR might address:

• Closure of action items, anomalies, deviations, waivers and their resolution following the CoDR

• Completed research, tradeoffs, and feasibility

– Functional—what the system does

– Performance—how well the system does it

– Connections and interfaces—how it affects other systems

– Mechanical/structural design, analyses, and life tests

– Weight, power, data rate, commands, EMI/EMC

– Electrical, thermal, mechanical, and radiation design and analyses

• Software requirements and design

• Design verification, test flow, and test plans

– Test and support equipment design

• Risk and hazard analysis—which might include STPA, Safety Case, ETA, FMECA, and FTA

• Contamination requirements and control plan (this tends toward spacecraft development)

Critical design review

The Critical Design Review (CDR) closes the critical design phase of the project. A CDR presents the final designs through completed analyses, simulations, schematics, software code, and test results. It should present the engineering evaluation of the breadboard model of the project. A CDR must be held and signed off before design freeze and before any significant production begins. The design at CDR should be complete and comprehensive.

The US military describes a CDR this way, “The CDR establishes the initial product baseline. A successful CDR is predicated on the determination that the subsystem requirements, subsystem detailed designs, results of peer reviews, and plans for test and evaluation form a satisfactory basis for proceeding into system implementation and integration” [5].

The CDR should present all the same basic subjects as the PDR, but in final form. Here are some additional example items, beyond the items in a PDR, that a CDR might address:

• Closure of action items, anomalies, deviations, waivers, and their resolution following the PDR

• Final implementation plans including

– Engineering models, prototypes, flight units, and spares

• Updated risk management plan

– Updated risk and hazard analysis

– Qualification and environmental test plans

– Integration and compliance plans

– Status of procedures and verification plans

– Test flow and history of the hardware

– Completed support equipment and test jigs

• Identification of residual risk items

• Plans for distribution and support

Commercial release

Commercial release, or Flight Readiness Review (FRR) (for spacecraft), is the decision gate prior to manufacturing and full-scale production. Sometimes this review is called the Production Readiness Review (PRR). It has multiple purposes: to assure that the design of the item has been validated through the integration and acceptance test program; to assure that all deviations, waivers, and open items have been satisfactorily closed; to assure that the project, along with all the required support equipment, documentation, and operating procedures, is ready for production.

The US military describes a PRR this way, “The PRR examines a program to determine if the design is ready for production and if the prime contractor and major subcontractors have accomplished adequate production planning. The PRR determines if production or production preparations have unacceptable risks that might breach thresholds of schedule, performance, cost, or other established criteria” [5].

Here are some example items that commercial release or a PRR might address:

• Resolution of all anomalies through regression testing or test plan changes:

– Rework or replacement of hardware

– Assessment of could-not-duplicate failures and the residual risk

• Compliance certification results

• Measured test margins versus design estimates

• Demonstrate qualification and acceptance of operational and environmental margins

• Demonstrate the failure-free operating time and the results of life longevity tests

Other types of design reviews

Depending on the type of embedded system, you might require a number of different design reviews. The US military has the following definitions [5].

Alternative systems review (ASR)—The ASR assesses the preliminary materiel solutions that have been proposed and selects the one or more proposed materiel solution(s) that ultimately have the best potential to be developed into a cost-effective, affordable, and operationally effective and suitable system at an appropriate level of risk.

Flight readiness review (FRR)—The FRR is a subset of the TRR and is applicable only to aviation programs. The FRR assesses the readiness to initiate and conduct flight tests or flight operations.

Initial technical review (ITR)—The ITR is a multidisciplined technical review held to ensure that a program’s technical baseline is sufficiently rigorous to support a valid cost estimate as well as enable an independent assessment of that estimate.

In-service review (ISR)—The ISR is held to ensure that the system under review is operationally employed with well-understood and managed risk. It provides an assessment of risk, readiness, technical status, and trends in a measurable form. These assessments help to substantiate budget priorities for in-service support.

System functional review (SFR)—The SFR is held to ensure that the system’s functional baseline has a reasonable expectation of satisfying stakeholder requirements within the currently allocated budget and schedule. The SFR assesses whether the system’s proposed functional definition is fully decomposed to its lower level, and that preliminary design can begin.

System verification review (SVR)—The SVR is held to ensure the system under review can proceed into initial and full-rate production within cost (program budget), schedule (program schedule), risk, and other system constraints. The SVR assesses system functionality and determines if it meets the functional requirements as documented in the functional baseline.

Test readiness review (TRR)—The TRR is designed to ensure that the subsystem or system under review is ready to proceed into formal test. The TRR assesses test objectives, test methods and procedures, scope of tests, and safety; and it confirms that required test resources have been properly identified and coordinated to support planned tests [5].

Change control board

Companies developing mission-critical or safety-critical embedded systems sometimes have a separate function called the change control board (CCB). A CCB comprises company staff from different technical disciplines. It reviews requests for design changes after a design is frozen. The purpose of a CCB is to give technical oversight that manages change efficiently.

NASA has an entire document devoted to problem management that a CCB implements [6]. The US FDA discusses the use of a CCB to review changes to current products: “The Change Board will meet to review the technical content of all proposed changes to released documentation for accuracy and impact on safety, efficacy, reliability, product cost, parts and finished goods inventory, work-in-process, instruction and service manuals, data sheets, test procedures, product specifications, compatibility with existing products, and other factors…” [7].
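The idea that a change board weighs each proposed change against a fixed set of impact areas can be sketched as a small data structure. This is only an illustration, not a format prescribed by NASA or the FDA: the names ChangeRequest, IMPACT_AREAS, assess, and ready_for_decision are hypothetical, and the list of areas is an assumed subset of those named in the FDA quotation.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Assumed subset of the impact areas named in the FDA quotation above.
IMPACT_AREAS = (
    "safety",
    "efficacy",
    "reliability",
    "product cost",
    "test procedures",
    "compatibility",
)


@dataclass
class ChangeRequest:
    """Hypothetical record of one proposed change to a frozen design."""
    title: str
    document: str
    assessments: Dict[str, str] = field(default_factory=dict)

    def assess(self, area: str, note: str) -> None:
        """Record the board's finding for one impact area."""
        if area not in IMPACT_AREAS:
            raise ValueError(f"unknown impact area: {area}")
        self.assessments[area] = note

    def unreviewed_areas(self) -> List[str]:
        """Return the impact areas the board has not yet assessed."""
        return [a for a in IMPACT_AREAS if a not in self.assessments]

    def ready_for_decision(self) -> bool:
        """A decision is allowed only after every area has been assessed."""
        return not self.unreviewed_areas()


# Usage: a request cannot go to a decision until all areas are covered.
request = ChangeRequest("Swap voltage regulator", "schematic, power board")
request.assess("safety", "no change to isolation barrier")
print(request.unreviewed_areas())
```

The design choice here is that the record itself refuses to be "ready" until each area has a written finding, which mirrors the board's role of reviewing impact before a change is approved.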

Failure review board

A failure review board (FRB) is similar to a CCB except that it focuses solely on failures and their causes. Companies developing mission-critical or safety-critical embedded systems sometimes have a separate FRB. Like a CCB, an FRB comprises company staff from different technical disciplines. Its purpose is to review failures in products and pursue resolution.

An FRB often uses analysis tools such as a failure reporting, analysis, and corrective action system (FRACAS). This sort of tool records failures of components, subsystems, and processes; records the results of root cause analysis; identifies corrective actions; and provides cognizant people, including staff, clients, and customers, with the information necessary to fix the problem [8].

Sematech, Inc. has a document that gives the following description in its executive summary, “Failure Reporting, Analysis, and Corrective Action System (FRACAS) is a closed-loop feedback path in which the user and the supplier work together to collect, record, and analyze failures of both hardware and software data sets. The user captures predetermined types of data about all problems with a particular tool or software component and submits the data to that supplier. A Failure Review Board (FRB) at the supplier site analyzes the failures, considering such factors as time, money, and engineering personnel. The resulting analysis identifies corrective actions that should be implemented and verified to prevent failures from recurring” [9].
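The closed-loop character of FRACAS can be illustrated with a minimal sketch in which each failure report must pass through reporting, analysis, correction, and verification in order. This is an assumed simplification and not the Sematech format or any particular tool: the names Stage, FailureReport, and FailureLog, and the four stages themselves, are hypothetical choices made for the example.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Stage(Enum):
    """Assumed stages of the loop; real systems may define more."""
    REPORTED = 1
    ANALYZED = 2
    CORRECTED = 3
    VERIFIED = 4


@dataclass
class FailureReport:
    item: str
    description: str
    root_cause: Optional[str] = None
    corrective_action: Optional[str] = None
    stage: Stage = Stage.REPORTED

    def _advance(self, expected: Stage, new: Stage) -> None:
        # Refuse to skip a step; the loop closes only if each stage is done.
        if self.stage is not expected:
            raise ValueError(
                f"expected stage {expected.name}, found {self.stage.name}"
            )
        self.stage = new

    def analyze(self, root_cause: str) -> None:
        self._advance(Stage.REPORTED, Stage.ANALYZED)
        self.root_cause = root_cause

    def correct(self, action: str) -> None:
        self._advance(Stage.ANALYZED, Stage.CORRECTED)
        self.corrective_action = action

    def verify(self) -> None:
        self._advance(Stage.CORRECTED, Stage.VERIFIED)


@dataclass
class FailureLog:
    reports: List[FailureReport] = field(default_factory=list)

    def report(self, item: str, description: str) -> FailureReport:
        """Capture a new failure and add it to the log."""
        entry = FailureReport(item, description)
        self.reports.append(entry)
        return entry

    def open_reports(self) -> List[FailureReport]:
        """Failures whose corrective action has not yet been verified."""
        return [r for r in self.reports if r.stage is not Stage.VERIFIED]


# Usage: one failure walked through the whole loop.
log = FailureLog()
entry = log.report("power supply", "output droops under load")
entry.analyze("undersized inductor saturates")
entry.correct("replace inductor with higher-current part")
entry.verify()
print(len(log.open_reports()))
```

A failure review board could use the list of open reports as its standing agenda, since any report that has not reached the verified stage still needs attention.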

Audits and customer reviews

Audits may be conducted by certification agencies, customers, or company staff. Their primary purpose is to assure outside parties that your company’s processes and procedures are sufficient for the market. The best way for you to minimize the impact of an audit on your project development is to maintain open and clear communications with the agency or customer.

Audits and customer reviews come in many forms, from simple online surveys to monthly visits of three or four days at a time. While entertaining a customer takes time and effort away from your development, treating the customer as a partner in your project and using their expertise will help you in the long run. You will develop a better product and will gain a trusted client.

Static versus dynamic analysis

So far, I have described and discussed static methods to review technical design and progress. Static means that the review is a snapshot, taken at one instant in time, of the continuously developing product. The dynamic aspects of review are best handled in test and integration, which Chapter 14 describes in detail.

Debrief

One of the best things that a team can do is debrief at the end of a project. Ask, “What went right?” and “What went wrong?” Determine how you might do things better in future projects and record your notes and thoughts. This is a long-term view of development and it is an investment in your company’s future. Most companies do not take the time to review in retrospect to prepare for the future. This is unfortunate because thorough debriefing will reduce time and effort in future projects.

Acknowledgments

My thanks to both Geoff Patch and George Slack for their careful and thoughtful reviews, critiques, examples, and suggestions for this chapter.