Software Design and Development

Geoff Patch, CEA Technologies Pty. Ltd.

Software development for embedded systems is not like software development for desktop systems. This chapter provides detailed descriptions of the key differences between the two domains. It examines operating system support, real-time requirements, resource constraints, and safety. It then provides advice about tools and techniques to develop embedded system software, with the primary emphasis on processes that help the embedded system developer to reliably and repeatedly produce high quality embedded systems. The advice is based upon techniques that have developed over many years, and have been proven to work effectively in highly complex real-world military and industrial applications.

Keywords

Software; process; design; tools; techniques; quality; reliability

We are surrounded in our daily lives by embedded systems. Modern telephones, automobiles, domestic appliances, and entertainment devices all have one thing in common—large amounts of complex software running on a processor, or processors, embedded in the device. That software implements much of the functionality of the device.

The design and development of software for these systems is a specialized discipline requiring knowledge of constraints and techniques that are not relevant to more conventional areas of software. While the rapid proliferation of embedded systems is being reflected in the gradual introduction of relevant courses into university degrees, in many ways, the efficient production of high-quality embedded software is still an art rather than a science.

This chapter will examine software design and development in the embedded system domain. It explains the processes, tools, and techniques that may be used to overcome the difficulties of development in this environment.

Distinguishing characteristics

Before contemplating how to approach the development of embedded software, you need to understand what actually distinguishes embedded software from software developed for either the desktop or the server. Understanding the difference between embedded and other domains is extremely important. The distinguishing factors are not trivial; in many cases, they are not obvious. Whether obvious or not, these factors are critical drivers in embedded software development programs; appreciating their impact can differentiate eventual success from failure of a development program.

Not every embedded system has all of these characteristics, of course, and some nonembedded systems will share these characteristics. It would be surprising, however, to find an embedded system that doesn’t embody some of the features from the following list.

Minimal operating system support

A full featured desktop operating system (OS), such as Linux, provides an enormously powerful virtual machine for the desktop software developer to develop against. In most cases, the physical hardware underlying the OS platform is almost entirely abstracted away by large Application Programming Interfaces (APIs) that hide the complexities of dealing with the hardware on which the application is running. Beyond hardware abstraction, desktop OSs provide other abstractions such as virtual memory, multiprocessing, and threading that provide the illusion of multiple independent tasks operating concurrently. In fact these software processes sequentially share a single processor or a small number of cores on a multicore CPU.

The features of a desktop OS provides the desktop developer with enormous power and flexibility, but they come at a cost which includes the amount of storage required to store the OS image and the amount of RAM required to actually run the system. At the time of writing, large desktop OSs may require gigabytes of storage and RAM to operate successfully. As well as their storage requirements, such OSs also consume substantial amounts of processing power to provide their services.

In many cases, it is not feasible to use one of these large-scale OSs in an embedded system because it would make the device under development technically or commercially infeasible. In these circumstances, the developer is faced with two options.

For small systems, the best choice may be to have no OS at all. The embedded software may consist of a program that runs a control loop that polls devices for activity, or responds to flags being set by interrupt handlers. This solution is simple, inexpensive, and is often the best choice for small high-volume applications where minimizing cost is a key driver.

For more complex applications, particularly those where multiple activities must be conducted in parallel, the best choice is a Real Time Operating System (RTOS). An RTOS is a small, efficient, and fast OS specifically targeted at the embedded system domain and designed to meet performance deadlines. RTOSs provide many of the useful capabilities of the desktop OS such as multithreading, while avoiding the plethora of nice-to-have features that consume resources without adding core capabilities to the system.

RTOSs will be discussed in more detail later in this chapter.

Real-time requirements

A real-time software application must meet all processing deadlines. Ganssle and Barr define real time as “having timeliness requirements, typically in the form of deadlines that can’t be missed” [1]. A system fails if it does not meet either the logical or the timing correctness properties of the system. While not universal, timing constraints in embedded systems are extremely common.

A common mistake is to believe that a system with real-time requirements must be “fast.” A system with real-time requirements simply has to deliver numerically correct computational results while meeting its temporal constraints. A correct numerical result delivered late is a failure.

Determining real-time requirements in an embedded system depends entirely on the nature of the system. In radar systems, timing constraints are frequently specified in units of nanoseconds. In an automobile engine management system, timing constraints may be specified in microseconds or milliseconds. While it may be stretching the spirit of the definition, it’s entirely possible that a system may have timing constraints specified in seconds or minutes or even days.

However tight the constraints may be, the addition of timing requirements to a system is frequently a key discriminator between embedded systems and those in other domains. Spreadsheets, word processors, and web browsers work just as fast as they can; the result is delivered when it’s available. Users may wish for faster performance from such systems, but a complaint to a spreadsheet vendor that the calculations are performed slowly would most likely fall on deaf ears.

Real world sensor and actuator interfaces

Real-world interactions drive real-time requirements in an embedded system. This interaction senses some aspect of the external environment around the system; makes decisions based on the information received from the sensors or inputs; then drives change in the external environment through actuators or other control mechanisms.

The pattern of real-world sensing and control, subject to real-time constraints, is extremely common in the world of embedded systems. Moreover, it is one of the most difficult areas to understand fully and implement correctly. The detailed mathematical study of this topic falls under the subject of control systems found in Chapter 8.

Resource constrained

The computational capacity of most embedded systems is severely constrained. The processor environment is typically much less powerful than the current state of the art in the desktop environment.

This isn’t arbitrary. Nothing is free. Heavy duty computational power typically requires large processors in large physical packages, which require large amounts of electrical power. Thus begins a cascade effect on design: large amounts of electrical power require the installation of large power supplies; large amounts of power result in the generation of large amounts of heat, which necessitate heat sinks, fans, and other infrastructure to move the heat away from the processor and peripherals. All these items add complexity, weight, and volume to the embedded system.

As well as limiting the raw computational power of the processor, the physical environment will constrain many other aspects of the software. The amount of nonvolatile memory available to store the executable image often limits the size of the program. Both the amount of RAM available and the speed at which it operates will limit data structures. The availability of interfaces with the appropriate performance constraints will limit the speed of communication with other systems.

Factors that need to be considered in this area are size, weight, power, heat dissipation, and resistance to shock and vibration. (Chapters 8–10 go into the trade-offs for these factors and parameters.) In general, these factors will significantly influence the amount of processing power available to an embedded system software developer.

Single purpose

Embedded systems often have fixed and single purposes. An engine control module only manages the engine; it has no other function. An industrial process control system will be designed to control a well-defined set of industrial processes. It won’t have to download and play MP3 files off the Internet while maintaining the membership database of the operator’s basketball club.

Contrast the embedded system’s single-mindedness with a desktop system, which is inherently general and multipurpose. Users may install software developed by any third party and at least, in theory, they may run any number of programs simultaneously in any combination.

Educational institutions universally teach the principles involved in developing such general-purpose software as being the correct paradigm to follow for successful application development. This is true outside the embedded systems arena, but following this paradigm can have unexpected and even harmful consequences when used in the development of embedded systems.

For example, dynamic memory allocation techniques allow developers to allocate and deallocate blocks of memory as the load on their application changes. This is advantageous in a general-purpose system performing many different tasks simultaneously because it allows an application to use only the amount of memory it needs, which frees unused memory so that the OS can allocate it efficiently to other tasks. Unfortunately, general-purpose algorithms for memory allocation that work well on the desktop suffer from problems such as temporal nondeterminism and memory fragmentation that can make them totally unsuited for use in an embedded environment. If you are adding rows of cells to the bottom of a spreadsheet, you are unlikely to notice a delay of 10, 20, 30, or even 100 s of milliseconds every now and then when the spreadsheet has to allocate a block of memory to accommodate the new data. On the other hand, if the fuel injection control computer in your vehicle paused for 50 milliseconds to allocate some new memory as you accelerated and failed to supply fuel to the engine for around five engine revolutions, then that is something you would almost certainly notice.

Long life cycle

Embedded systems, particularly military and large-scale industrial control systems, have life cycles that far exceed those of desktop systems. Some may run as long as 30 or even 50 years! The impact of this long life cycle is that long-term maintainability of embedded system software is extremely important.

If an application such as a game has a life cycle of a year or two, it would be unprofitable to allocate extensive resources to ensuring the long-term maintainability of the software. On the other hand, if an embedded system has a life cycle of 20 years, then it’s likely that multiple generations of developers will support and maintain it over that extended time frame.

This extended product life cycle has major implications for the nature of embedded software. From the very start of the project, extensive effort must be put into documentation such as code comments, system interface specifications, and system operational models.

Reliability and design correctness

In many cases, the failure of a software system will result in nothing more than some degree of inconvenience to the operator. This is true even with embedded systems such as mobile phones and entertainment devices.

Many embedded systems, however, operate in mission-critical and safety-critical environments. The lives of hundreds of sailors may one day depend on the correct operation of their fire control radar as it guides a missile in the defense of their ship. The continuing heartbeat of a person wearing a heart pacemaker depends entirely on the correct operation of the software and electronics embedded into that device.

Under these circumstances, instead of mere inconvenience, the failure of a system or a design flaw may result in death, injury, economic loss, or environmental damage. These adverse consequences may affect either individuals or entire nations. Therefore, the emphasis in the design and development of such systems must be quality, correctness, and reliability above all else.

Safety

Operator health and safety in the world of desktop software development is typically limited to consideration of aspects such as choosing screen layouts and character fonts that minimize eyestrain. This is in sharp contrast to the world of embedded system development, where embedded devices frequently control powerful machines and processes at incredible speeds.

Embedded software controlling powerful machinery has the capability to injure and kill people and in notable cases it has done exactly that. Producing software that is reliable is a common design goal in all domains, but producing safe software is critical in the area of embedded systems.

Standards and certification

Most embedded systems must comply with domain specific standards and achieve certification by relevant authorities. This is particularly true in areas where the incorrect operation of the system may result in harm to people or the environment. Conversely, most desktop software is not developed against particular standards or subject to certification by any regulatory body.

Compliance with external standards has many costs. For example, developers must know the required standards and train to produce software that conforms to the standards. Work products, such as code and documentation, must then be externally audited to ensure compliance.

Cost

Embedded systems are expensive to build and maintain! Implantable medical devices take 5–7 years and between US$12 and 50 million to develop. Industrial and manufacturing test stations may cost millions and support can run into hundreds of thousands of US dollars each year. An automobile recall to simply reprogram an engine control module may only take 15 min per car but at a rate of US$50 per hour for 1 million cars that amounts to US$12.5 million; and that does not include the nonrecurring engineering cost to develop and test the new software!

Companies building embedded systems must employ, train, and then retain highly skilled staff with esoteric expertise. High-quality development tools for embedded software, such as compilers and debuggers, can cost orders of magnitude more than their desktop equivalents. The development of the system may require expensive electronic support tools, such as logic analyzers and spectrum analyzers. The development processes used, and the resulting work products, may be subject to standards compliance audits that increase the time and cost required to perform the development.

Product volume

Embedded systems usually minimize the cost of the processor environment in large volume manufacturing to save costs and to maximize profits, which helps the product to be commercially competitive. Minimizing the processor environment directly affects the software that operates it. Conversely, the costs of packaging and delivering desktop software, however, are generally small and have no impact on the development of the software.

This cost reduction in embedded systems usually results in the use of less capable devices in the processor environment. You must carefully consider this constraint in the design and implementation of the software.

Specialized knowledge

Developing software in any environment requires a combination of software development expertise and domain-specific knowledge. If you are going to produce an online shopping web site, you will need web programming and database skills, combined with some knowledge of retail activities, financial transaction processing, tax regulations, and various concerns specific to the business domain.

The same thing applies in the development of embedded systems, except that the domain knowledge required is usually much more specialized and complex than that found in most desktop systems. Esoteric mathematical techniques are commonplace in the world of embedded systems. For example, the fast Fourier transform (FFT) is a digital implementation of a method that extracts frequency information from time-sampled data. It is seldom seen in desktop applications, but may be found in numerous embedded systems, ranging from consumer audio to advanced radar systems.

Similarly, the Kalman filter is a complex algorithm originally devised for tracking satellites. While rare on the desktop, it has become commonplace in embedded systems. It is the basic algorithm for a vast variety of applications such as the Global Positioning System (GPS), Air Traffic Control, and advanced weapon control.

These techniques, along with others such as control system design, require years of advanced study to master. The people who have such advanced skills are in high demand.

Security

The issue of system security has historically received little attention in the embedded system space. Surprisingly, this was actually a reasonable policy to follow in most cases because embedded systems were typically isolated from potential sources of attack. An engine management system, isolated in the engine bay of a motor vehicle and connected only to the engine, provides few opportunities for compromise by a hostile actor.

Increases in processor power, however, have been accompanied by increases in connectivity throughout the world of computing; now embedded systems have become a part of this trend. As a consequence, previously isolated subsystems tended to be designed so that they could be connected to each other within a larger system. In the automotive world, the widespread adoption of the Controller Area Network (CAN) bus provided a reliable and inexpensive means for linking together the disparate control and monitoring systems within a motor vehicle [3]. This development provided many benefits, but it also meant that the engine management system no longer remained isolated.

The trend toward increasing connectivity has continued. Many embedded systems now have Internet connectivity, which is often implemented to provide remote system diagnostic and upgrade capabilities. Unfortunately, these enhanced capabilities carry with them the risks associated with exposing the components of the system to unauthorized external assaults, i.e., hacking.

The security of embedded systems was once an afterthought, if it was thought of at all. However, embedded systems play a massive part in the operation of our modern world. Many of these devices now have their presence exposed on the Internet or through other means. This means that security must be designed into the systems from the very start if they are to operate robustly in this unpredictable environment. Chapter 12 provides more insight into security issues.

The framework for developing embedded software

Human beings are inherently orderly creatures. From our earliest years, we are happiest and most prosperous when provided with clear rules and boundaries that are presented in a consistent and coherent manner and that provide tangible benefits as we grow. This desire for order and regularity is reflected in the laws and regulations that we implement to govern our lives. While restricting our actions in many areas, an orderly framework of laws allows a group of disparate individuals to come together as a society and work harmoniously together for the good of all.

The benefits of defining and then working within a commonly understood framework seem obvious, regardless of whether we are referring to an entire society or to a small business unit within an engineering organization. And yet the common experience of software developers in general, whether embedded developers or otherwise, is that their development activities are conducted in an environment that is remarkably unconstrained by rules and regulations.

This lack of structure and constraint is all the more remarkable when software development is contrasted with professional activities in other fields such as law, medicine, and accounting. For example, an accountant working for a corporation will work according to a very clearly defined set of corporate accounting procedures. These procedures will have been derived from the common corporate governance legislation that all companies are subject to within a country, with the result that there is a high degree of standardization within the profession and general agreement on how accounting should be done.

Similar comments could be made about professions such as law and medicine, but the situation with software development is still in a state of flux. Despite activities such as the Software Engineering Body of Knowledge (SWEBOK), there is no common understanding within the profession about how to do good software development [4]. Every organization produces software in a manner that is different to every other organization. Even different departments within the same company may work in completely different ways to produce similar products.

This situation is attributable in large part to the newness of the profession. People have been building bridges for thousands of years. Apart from the occasional mishap such as the Tacoma Narrows collapse, bridge building is a well-understood problem with well-understood and reliable solutions [5]. Large-scale software development, on the other hand, has only been widely conducted over a period of a little more than 40 years, so the common understanding of how to develop good software is still maturing. The growth of this understanding is made more difficult by the incredibly broad variety of applications software and the resistance of many practitioners to the very concepts of regulation and standardization.

In most cases, this resistance springs from a misguided belief that working within such a framework will crush creativity, eliminate elegance, and turn satisfying creative work into soul-destroying production line drudgery. The fact is that nothing could be further from the truth. Written English, for example, is produced within the context of very tight constraints regarding spelling, grammar, and punctuation. Yet within those constraints for written language, you can produce anything from a sonnet to a software user manual. In fact, the widely understood rules of written language do not hinder creativity or elegance, they encourage it.

This section aims to provide guidance for developing and implementing a high-level framework for software within embedded systems. This framework is not a complex or an unproven theoretical construct with burdensome overheads. It is a simple set of ideas that have been refined over many years of engineering practice. It has reliably and repeatedly produced high-quality embedded systems with minimum overhead.

Processes and standards

Many organizations engaged in the development of embedded systems don’t know what they’re doing. What I mean by this is that they don’t understand the mechanisms that conceive products and then deliver successful products. The result of this lack of understanding is that work is performed on a “cottage industry” basis and difficulties abound. Each project is approached differently. If more than one engineer is involved in a project, they will use different tools and different techniques. Communication between staff may be difficult, components may not integrate well together, and heroic efforts are often required of individuals to achieve deadlines.

Given a fair amount of luck a project that is run like this will succeed, but nobody will be quite sure why it was a success. Without the addition of luck, there will be just as much doubt as to why the effort was a failure.

A number of steps are required to minimize the requirement for large helpings of luck in successful development programs. First, you need to develop an understanding of operations. This involves studying and reflecting upon how development is performed within your organization, determining what works and what doesn’t work, and then documenting these observations in a set of operational processes. Second, once these documents have been developed, they should be promulgated within the organization and adopted as the corporate standard to be used by all engineers.

One size doesn’t fit all

Programs for developing embedded systems come in all shapes and sizes. It’s very unusual for one project to closely resemble the next project in dimensions such as scope, complexity, schedule, and staff requirements. For this reason in most cases, it’s more important for process documents to describe what (the ultimate goals and objectives) should be achieved rather than how (the exact procedures) it should be achieved.

For example, the level of system review conducted on a small project that occupies one person for two months will be different to that conducted on a project that occupies six people for three years. Applying a process that is suitable for one of those projects to the other project could be wildly inappropriate. What is appropriate, though, is to have a review process that specifies in part, that regardless of the size of a project, the preliminary design of the system should be documented and externally reviewed prior to the commencement of coding.

Specifying the process in this manner means that the review of the small project could be conducted by a couple of people in a morning, while the large project review might be conducted by ten people over a period of a week. In both cases, the desired business outcome occurs in accordance with the process, with a level of complexity and overhead incurred that is suited to the nature of the project.

While this advice is true in general, there may be cases where you want to be prescriptive, where one size really does have to fit everybody. For example, while some organizations allow projects to use various coding standards, the usual case is for a single standard to be applied at the corporate level. In this case, all code produced within the organization has to comply with the standard, regardless of the nature of the project that it is produced for.

It’s also worthwhile considering the nature of the projects that are undertaken by your organization. While there may be substantial differences between individual projects, it’s quite likely that most of them will fit into categories such as “small,” “medium,” and “large,” or perhaps “safety-critical” (or mission-critical) versus “nonsafety-critical (or nonmission-critical).” If this is true, then you should generate specific process templates applicable to each of these different categories of project. The result is that at the start of a project, the engineers involved can simply copy the template appropriate to their project, perform some minor tailoring, and have their project process ready to go with minimum effort.

Process improvement

We live in a rapidly changing world. Change applies to development environments just as much as to any other aspect of life, so you must ensure that corporate processes and their related documentation keep up with evolutionary change. If this does not happen, then either the enforcement of obsolete processes will inhibit necessary and valuable change or changes will occur anyway without the documentation being upgraded so that it eventually becomes useless.

You should examine each recommendation for change to ensure that it is beneficial. In many cases, proposals for process change arise naturally with the introduction of new technology or techniques. Recognizing and embracing such positive change enhances productivity and enhances staff involvement as they feel that they own the processes. (Again, confirmed and supported in Chapter 2—Ed.)

On the other hand, not all change is good. In particular, you should be aware of the concept of “normalization of deviance,” coined by sociologist Diane Vaughan in her book on the Challenger space shuttle disaster. The concept relates to a situation in a process-oriented environment where a small deviation is made from the methods described by a process, with no ill effect [6]. Each time such a process deviation occurs that doesn’t lead to a negative result people become more tolerant of that unofficial standard, with the result that actual working methods gradually diverge greatly from the documented processes. It is very likely that at some point, as with the Challenger disaster, the normalization of deviance will produce results that greatly diverge from being harmless to being catastrophic.

Regardless of how it eventuates, work practices will change. You should welcome and incorporate beneficial work practices into the overall process framework while recognizing harmful practices and taking active measures to reject them.

Process overhead

Complying with corporate processes takes time and effort. In a business environment, this equates to money. Process compliance should cost less than the benefits that are gained from such compliance and if this is not the case then you’re not only spinning your wheels, you’re also going backward! Therefore, an important aspect of process improvement efforts is studying how processes work and how to modify them, to minimize both the number of processes required for successful operations and the amount of effort required to comply with the processes.

Unnecessary processes, which don’t contribute business value or overly bureaucratic processes with high compliance costs, can actually impede rather than assist progress by wasting staff resources in an unproductive manner.

Process compliance

I have established that developing and implementing processes requires considerable management skill. Maintaining process compliance requires a similar, if not greater, level of management involvement.

No matter how you look at it, process compliance involves extra work and generally it is not nearly as interesting or exciting as writing a cool new piece of code. Furthermore, many of the long-term advantages of working within a process framework are not evident during the early stages of building a process-oriented environment. As a result, people tend to ignore processes, or put process-related work at the “bottom of the pile” so that it never gets done. There is hope! You can take a number of steps to address this problem:

1. Persuade staff members of the benefits of process compliance. This is generally a matter of explaining and demonstrating how a small amount of short-term pain can result in a large amount of long-term gain.

2. Actively involve staff members in process development. Workers will resist irrelevant or burdensome processes that are imposed by management without consultation with the people in the trenches. (Confirmed in Chapter 2—Ed.)

3. Encourage and enforce useful and effective processes. You might as well not have the process if it is ignored. If you honestly believe that a process is useful and beneficial for your business, then deviations from that process, no matter how small, must be corrected whenever they occur.

Your processes will be most beneficial for the predictable and routine within the work environment because they provide standard solutions to standard problems. That said, life will always throw you curve balls; unusual situations will arise that aren’t covered by your processes, or occasionally following your processes will produce undesirable results. Sometimes, for very good reasons, business demands may dictate that work be performed outside the process framework. Such cases should be atypical, you should violate processes only after due consideration and with full awareness of what you are doing. BUT…when it’s necessary don’t be afraid to do what has to be done to finish the job.

Under these circumstances, once the emergency has been resolved, it’s very tempting to put the unpleasantness behind you and carry on with getting the job done. While this is the easiest thing to do it’s also a mistake, because you will lose the opportunity to improve your processes by learning from what happened.

As soon as possible after the event, you should debrief all those involved. Get the players together and talk to them to get their story about what happened. For a variety of reasons, it’s likely that everybody will have a different story. Take written notes, work out what actually happened, analyze the event, and learn from it.

ISO 12207 reference process

The International Organization of Standardization (ISO) has produced ISO 12207—Software Lifecycle Processes [7]. It is a reference standard that covers the entire software development life cycle: acquisition, development and implementation, support and maintenance, and system retirement.

ISO 12207 details activities that you should conduct during each stage of software development within a project. This standard describes things that should be done during development, but not how to do them. You can best use the standard as a checklist of useful activities that can be tailored to provide a level of process overhead that is suitable for any given project.

Recommended process documents

The size and scope of your process documentation should depend on the size and nature of your organization. Your organization may only be you, sitting at a computer in your home office, or it may comprise you with a dozen other people working on a ground-breaking piece of new technology in a high-tech start-up.

You should have a documented set of processes that describe the standard approach that you take to standard issues or problems, which lets you concentrate on the unusual or interesting issues that arise. Suppose you started out alone, then one day someone starts working with you; documented procedures will save you time and money if you can say, “Read this…it’s how I’ve been doing things.” Suppose you are already working in a group, then one day somebody leaves; documented procedures will save you time and money if you can say to the replacement hire, “Read this…it’s how we do things around here.”

In every project, you should tailor the abstract guidance provided by each of these high-level documents into a set of concrete steps appropriate for the project. These procedural documents related to a specific project translate the general, “What do we want to achieve?” into, “This is how we are going to work on this project.”

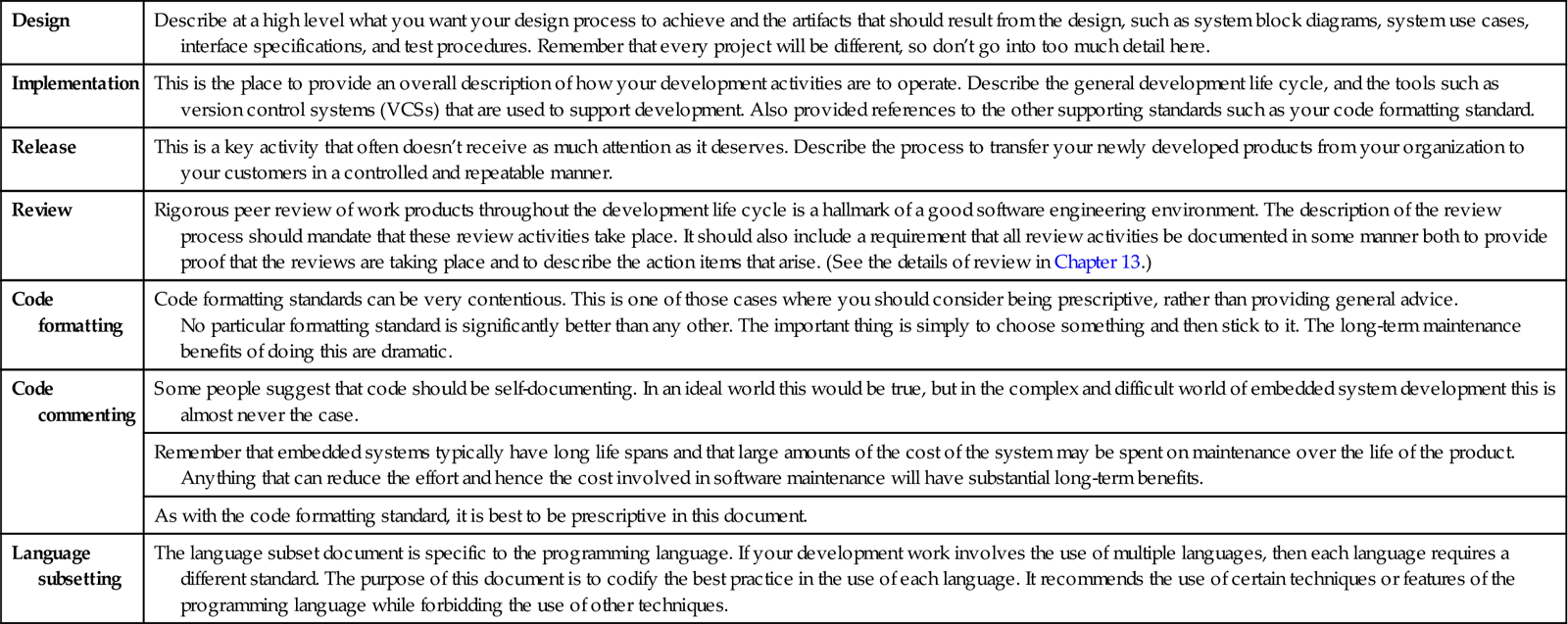

Table 11.1 lists a minimal set of process documents. Developing and implementing this small set of processes will immediately benefit your development activities.

Table 11.1

A minimal set of suggested process documents

| Design | Describe at a high level what you want your design process to achieve and the artifacts that should result from the design, such as system block diagrams, system use cases, interface specifications, and test procedures. Remember that every project will be different, so don’t go into too much detail here. |

| Implementation | This is the place to provide an overall description of how your development activities are to operate. Describe the general development life cycle, and the tools such as version control systems (VCSs) that are used to support development. Also provided references to the other supporting standards such as your code formatting standard. |

| Release | This is a key activity that often doesn’t receive as much attention as it deserves. Describe the process to transfer your newly developed products from your organization to your customers in a controlled and repeatable manner. |

| Review | Rigorous peer review of work products throughout the development life cycle is a hallmark of a good software engineering environment. The description of the review process should mandate that these review activities take place. It should also include a requirement that all review activities be documented in some manner both to provide proof that the reviews are taking place and to describe the action items that arise. (See the details of review in Chapter 13.) |

| Code formatting | Code formatting standards can be very contentious. This is one of those cases where you should consider being prescriptive, rather than providing general advice. No particular formatting standard is significantly better than any other. The important thing is simply to choose something and then stick to it. The long-term maintenance benefits of doing this are dramatic. |

| Code commenting | Some people suggest that code should be self-documenting. In an ideal world this would be true, but in the complex and difficult world of embedded system development this is almost never the case. |

| Remember that embedded systems typically have long life spans and that large amounts of the cost of the system may be spent on maintenance over the life of the product. Anything that can reduce the effort and hence the cost involved in software maintenance will have substantial long-term benefits. | |

| As with the code formatting standard, it is best to be prescriptive in this document. | |

| Language subsetting | The language subset document is specific to the programming language. If your development work involves the use of multiple languages, then each language requires a different standard. The purpose of this document is to codify the best practice in the use of each language. It recommends the use of certain techniques or features of the programming language while forbidding the use of other techniques. |

The embedded system world has displayed considerable interest in language subsetting over the last few years, primarily driven by the widespread use of C and C++ in embedded environments. Two notable efforts in this area come from the Motor Industry Software Reliability Association (MISRA) and Lockheed-Martin.

MISRA is a consortium of motor vehicle manufacturers. In the late 1998, they produced a standard called “Guidelines for the use of the C language in vehicle based software,” otherwise known as MISRA-C:1998. This document contains a set of rules for constraining the use of various aspects of the C language in mission-critical systems. While originally targeted at the automotive industry, a wide variety of other industries has adopted this standard. It has undergone a number of revisions, and the current version is MISRA-C:2012. As well as the C language standard, in 2008 MISRA also produced a C++ standard called “Guidelines for the use of the C++ language in critical systems.” This document is known as MISRA-C++:2008.

The work by Lockheed-Martin relates to their involvement in the Joint Strike Fighter Program. As part of developing the avionic software for this aircraft, Lockheed-Martin developed a language subset known as the Joint Strike Fighter Air Vehicle C++ Coding Standards, or JSF++ for short.

Both the MISRA and JSF++ standards provide a good basis for study and the development of a good language subset. In particular, the MISRA standards have become so popular that many common compilers and static code analysis tools provide inbuilt standard support that you can easily enable.

Requirements engineering

If someone were to produce an old-fashioned map showing the embedded systems software development process, then the area entitled “Requirements” would surely also be labeled “Here Be Dragons.” There is no part of the process that is more prone to difficulty and no part of the process that is more critical to the development of a system than getting the requirements right. (Chapter 6 goes into great detail on generating and maintaining requirements.)

It’s as simple as this. At the start of a project, someone will have a set of ideas in their head about what they want the system to do. Those ideas represent the system requirements. Your job is to construct a coherent list of those requirements and then to build a system that meets the requirements. If you get the requirements wrong at the start, then even if you do everything else perfectly you will end up building the wrong thing. You will build something that your customer doesn’t want and your project will be a failure. It’s simple to describe, but it sure isn’t easy to do.

Requirements engineering in embedded system software development has the added difficulty that rather than being the end product, the software is a component that is embedded in a larger system. This means that the system requirements are usually expressed in terms of what the system must achieve, instead of what the software must achieve, with the result that the detailed software requirements are buried and lost inside the system requirements. It can be very difficult for an engineer to translate a requirement from an application domain, such as radar tracking or engine management, into a meaningful software requirement.

If you are aware of the importance and difficulty of good requirements engineering and if you allocate the necessary resources to perform this part of the process effectively, then you’ve taken a large step toward mitigating the risks associated with having bad requirements. You also need to keep in mind that even with your best efforts, the inherent difficulty of gathering requirements means that it’s unlikely that you’ll get it right first time. New requirements will emerge during the development of your product, and you must be prepared to accept these changes and to be flexible enough to incorporate them into your product during the course of the development.

So what do you actually need to perform good requirements engineering? My view is that the critical activities involved in requirements engineering are:

1. Collection. This is the process of discussing with all stakeholders and building an initial list of all of the things that the product is supposed to do.

2. Analysis. After collecting the initial requirements, you need to study and analyze them for completeness and consistency. Even if only one person specifies the requirements, it’s likely that the requirements will contain internal conflicts and inconsistencies that must be resolved at this point.

3. Specification. Once you have collected all of the requirements, you need to formalize them into a System Requirements Specification. This document will define each requirement in the most precise language possible and will allocate unique identifiers to each requirement for consistency of reference. The identifiers enable other derived documents, such as test specifications, to remain synchronized with the requirements specification.

4. Validation. A final check of the completely defined and identified set of initial requirements contained in the System Requirements Specification to confirm that they meet all of the needs, and the overall intent, of the customer.

5. Management. Once you have been specified and validated the initial requirements, you may use them to commence the design activities. From this point, and throughout the project, you will discover that despite your best efforts, your requirements will still contain flaws that need fixing. Some requirements will disappear, new requirements will emerge, and others will change shape. Requirements management involves staying on top of this fluid situation and ensuring at all times that you have a solid specification that precisely defines what you are attempting to build.

There are a wide variety of software products available to assist in all of these areas of the requirements engineering process. For smaller projects, a spreadsheet and a word processor are probably all that you need. For larger projects with hundreds or thousands of requirements, it can be very difficult to manage the process without the support of dedicated requirements engineering tools.

A good example of such a product is the DOORS requirements tracking tool originally developed by Swedish firm Telelogic, and now supported and marketed by IBM. This consists of a suite of different tools providing various capabilities, but the key functionality supports managing the development of sets of complex requirements for large systems.

Version control

Automated VCSs are a key tool in any modern software engineering environment. Developing software without the assistance of such a tool is like running a race with a rock in your shoe. Yes, you will reach the finish line eventually, but the race will be slower and more painful than necessary.

VCSs apply to all software development projects and are not specifically related to embedded systems. Regardless, VCSs still deserve some discussion here because their use represents a best practice in modern software development.

The simplest view of VCSs is that they are document management systems specifically targeted at maintaining libraries of text files where the text files typically contain software source code. The systems may be used to store other types of files, but they are less effective when used to manage binary files such as word processor documents.

The conventional method of operation involves storing a master copy of each file in a central repository. A database management engine of some kind typically manages the repository of files. When an engineer needs to make a change to a file, he or she checks the file out of the repository and copies it to a local computer for editing. When the engineer has made the required changes, he or she checks the updated file back into the repository, which simply involves copying the file from the local computer back into the repository.

There are many variations on this basic theme. For example, rather than simply storing entire new copies of changed documents in the repository, some systems encode and store the sequence of changes between one version and the next. When many small changes are made to large files, as is typical in software development environments, this technique can dramatically reduce the storage requirements of the repository and the time taken to access the files. Some VCSs lock the repository copy of a file when it is checked out, which enforces the rule that only one person can be working on a file at any time. While this technique is simple to understand and implement, it can be extremely inconvenient in a team environment as it forces serial access to the file. More advanced systems allow parallel development where multiple users are able to check out files simultaneously for update, with the system assisting to resolve any editing conflicts that might occur at check in time.

Now let’s consider the primary benefit of VCS which, as the name implies, is the control and management of different versions of a software product. If the world was a perfect place, VCS would be far less useful. Unfortunately, the world is not a perfect place and Version 1.0 of most software systems is followed by Version 1.1, then Version 1.2…you get the picture.

Management of this in the trivial case where the entire system is built from one source file doesn’t present much of a challenge, as there’s a one-to-one mapping between each version of the source file and the resulting executable. Unfortunately, few real-world systems are this trivial. I am aware of a major avionics embedded system that consists of over 35,000 source files. Systems of this magnitude are not unusual, and control of the development process in this environment is an extremely complex task that would be virtually impossible without the assistance of a VCS.

The first benefit provided by a VCS is a history of the changes that have been made to each source file, including the date and time of the change, the name of the engineer who made the change, and useful comments about the purpose of the change. This feature is very beneficial in understanding how a system has evolved and who was responsible for the change process, but more importantly, it allows changes to revert when things go wrong, as they inevitably will. This ability to quickly back out of and recover from defective changes can be an absolute lifesaver.

A further complication in a large-scale development environment is that there is very little correlation between the changes that are made to each file because changes are made to different files for different reasons at different times. A new version of the system might require changes to 100 different source files. The next version of the system might require further changes to 35 of those files, with more changes being made to 100 different files, and each succeeding version will require some unpredictable combination of changes to different files. The result is that a snapshot of the state of all the files in the system on one day will most likely be very different to a snapshot taken a week later. Manually reverting to a previous version of the system under these conditions would be an extremely difficult task to perform manually as it would require reverting each individual file to the correct state, but a VCS provides the mechanisms for doing this that make it a trivial process. The usual process is to tag all of the files in a project with the version number or some other unique identifier. Once this tag is in place, it is possible to extract the entire system with a specified tag in a single operation.

The next advantage provided by a VCS involves the coordination of distributed development activities by multiple engineers. As the physical separation between developers increases, the task of coordinating their updates to shared source files increases. The members of a development team these days may be located in different offices within a building, different buildings on a corporate campus, different buildings in different cities, or even in different cities on different continents in different time zones.

Managing the activities of such teams, particularly the very widely distributed teams, is nearly impossible without the use of a VCS. It’s still hard even with the use of a VCS, but the difficulties are substantially reduced. Consider two engineers attempting to work on a source file over a period of several weeks when one person is located in Los Angeles and the other is located in Sydney. Thousands of miles and a 7-hour time difference separate these two people. Without a VCS, coordinating their work would require a substantial amount of time and effort to be invested in phone calls at inconvenient times, transfer of files back and forth, and recovery from errors. Automating this process via a VCS will increase productivity by eliminating almost all of that overhead.

Finally, a VCS provides a single convenient target for system backup and recovery processes. Without the use of a VCS, an organization’s code base may be spread over multiple folders on a server or over multiple servers. In the worst case, the code may be spread over the individual workstations belonging to the members of the development team. In this sort of environment, the failure of the hard drive in a machine containing the only copies of the source code for an important application can result in a disastrous waste of time and effort.

With the use of a VCS, the source code backup process can be greatly simplified. This simplification means that the process will be inherently more reliable because all that is required is that the source code repository be backed up on a periodic basis to ensure coverage across the entire code base.

The design of a good backup process is beyond the scope of this discussion, but it’s worthwhile pointing out that if all of your backup media are stored in the same physical location as the server containing your VCS repository, then you could be heading for trouble. A disaster such as a fire or flood might result in you ending up with no server and no backups either, so always incorporate offsite storage of media into your backup regime.

A final point worth noting is that a VCS is just a tool. Used correctly, it will substantially reduce the costs of developing large-scale software systems. Even when used incorrectly, it will still help, but perhaps not as much as it should. For example, if an engineer checks a source file out of the repository and then works on it intensively for a month without checking it back in to the repository, then many of the benefits of change logging, collaboration, and easy backup are lost. As with most other areas, the development of simple and well-understood VCS management processes are important in ensuring that the benefits of using the tool are fully realized.

Effort estimation and progress tracking

Software development is an opaque activity. No other form of organized human activity resembles it. There is no other form of organized human activity in which it is so difficult to understand what people involved in a project are doing or achieving.

Consider conventional real-world construction projects that involve lots of metal and concrete. Things built out of concrete can be measured in terms that humans can intuitively understand. The completed length of a freeway, the completed height of a dam, and the number of stories completed on a skyscraper are all things that you can easily express and convey numerically and pictorially. If a freeway construction project is due for completion in three weeks and there are still three miles of unfinished road under construction, then it will be obvious to the project team that the project is in trouble. Very importantly, the problem will also be obvious to outsiders, even those who are completely unfamiliar with any aspect of freeway construction.

Software, as the name implies, is the exact opposite of concrete. The construction material used in software development is human thought, which is an infinitely light, malleable, and flexible material. Software engineers are capable of building large, complex, and incredibly intricate mechanisms out of this material.

Unfortunately, software doesn’t come with the intuitions that are associated with conventional construction materials; consequently, the conventional paradigms for human-oriented measurement don’t apply to it. You can’t pick up a piece of software and heft it in your hand. You can’t weigh it or pull out a tape measure to measure its length. And because software doesn’t come with the intuitions associated with conventional construction materials, it is very difficult to represent and measure progress within a software development project. A software project may be in a very similar position to the freeway-building project with three miles of road to complete in three weeks without it being obvious to interested outsiders.

There are no magical solutions to this problem, but there are techniques and tools that can mitigate some of the risk associated with tracking the progress of a software project.

The intuitive metric for measuring progress in a software project is the number of lines of code that have been written. If a project requires 10,000 lines of code, and the developers have produced 8000 lines of code, then the project is 80% complete, right? Well, maybe. Or maybe not, because not all lines of code are created equal. The developers may have concentrated on the infrastructure aspects of the project, producing lots of straightforward code to accomplish simple tasks. The remaining 2000 lines of code may represent the core functionality of the application, containing complex algorithms and tricky performance issues that require detailed study, analysis, and testing. In this case it wouldn’t be at all unusual to discover that the last 2000 lines of code take as much time to complete as the first 8000. (This is the old rubric that 20% of the work takes 80% of the time.)

In some cases, lines of code may even be a perverse metric that indicates the opposite of what is actually correct. If one engineer can solve a problem in 1000 lines of code, and another can solve the same problem in 100 lines of code that execute 10 times as fast then it would probably be a mistake to consider that the first engineer is more productive than the second. Or perhaps the engineers are equally skilled and productive, but one is working in assembler language while the other is working in a higher level language such as C++, in which case the latter would be able to implement similar functionality in far fewer lines of code.

The keyword here is “functionality.” The best technique for approaching this difficult issue lies in assessing progress in terms of the amount of functionality completed, not the lines of code completed. In simple terms, this involves deriving defined points of functionality from the system requirements and allocating a measurement of complexity to each function point. The result is a single metric that represents the total functionality of the system, with greater functional density associated with different function points depending on their complexity.

This technique is known as Function Point Analysis, and it was defined by Allan Albrecht of IBM in the 1970s [8]. Much work has been done on this topic since then, and there are now a number of recognized standards for performing this analysis [9]. Even if you choose to perform your analysis in a less formal way, it is still an invaluable technique for providing insight into the complexity of a project.

Let’s assume that you’ve done a Function Point Analysis on your new project, along with other aspects of your design, and you’re ready to set to work. Unfortunately, your problems are not over. In fact, they’ve only just begun. Setting to work on implementing your function points sounds great, but who is going to do the work? Which engineers will complete which functions? How do you keep track of which function points are completed, which ones are in progress, and which ones haven’t been touched yet? Keep in mind also that your iterative testing program will reveal design and implementation defects that will require correction by somebody at some time during the project.

The difficulties of managing this resource allocation and scheduling problem are substantial, as are the risks to your project by getting things wrong in this area. Happily, there are tools available to help you mitigate this risk. They go by a variety of names, including “bug tracking systems,” “issue tracking systems,” and “ticketing systems.” I prefer to use the term “ticketing system” because it’s a neutral term that avoids the misconceptions associated with terms such as “bug tracker.”

The name “ticketing system” comes from the use of cards known as tickets, which were originally used to allocate work to staff members in a call center and help desk organizations. When a customer called in a problem, the phone operator would fill out a paper ticket with details of the problem and then place it in a queue that was serviced by the responsible engineers and technicians. During the process of working on the task, the engineer would annotate the ticket with details of the job as required. When the task was completed, the ticket would be marked as completed and filed with all of the other completed jobs.

A modern ticketing system is a software implementation of that workflow, with the tickets replaced by database entries, and a software application providing a front-end to the database that allows for queries and updates to the entries. There are a number of ticketing systems that are specifically designed for use in a software development environment. These usually provide specific support for aspects of the software development life cycle, along with features, such as integration into VCSs and report generation systems.

The benefits of using a ticketing system (or whatever you prefer to call it) during a software development project can’t be exaggerated. If you use tickets to allocate all of the work at the beginning of a project, then the list of tasks resides with the tickets. If you want to know what you have completed so far, the list of completed tickets is available at the click of a button. The same applies to tickets that are the subject of work in progress, and work that is yet to be started.

Using a high-quality ticketing system to allocate tasks to engineers and to track the progress of those tasks through to completion is a hallmark of good software engineering. If you are developing systems without one of these tools, then you may as well work with one hand tied behind your back.

Life cycle

A current direction in the life cycles of software development is toward Agile development techniques as espoused in The Agile Manifesto. Agile development techniques are human centered [10]. They recognize the psychology, strengths, and weaknesses of the humans involved as both customers and developers in the software development process.

Agile techniques are particularly well suited to the sort of exploratory software development characteristic of applications that require heavy human interaction, such as web-based transaction processing systems. The software development effort, in such systems, often focuses on the implementation of complex graphical user interfaces (GUIs), which aim to resolve the issues associated with human cognitive strengths and weaknesses. In particular, people generally cannot assess the strengths and weaknesses of a GUI without operating the interface.

One of the particular strengths of this development methodology is that it recognizes that humans are visually oriented creatures. It concentrates, therefore, on prototyping and the rapid experimental development of user facing components of application software. Short cycles of rapid application development combined with intense user feedback and code refactoring can produce very high velocities of development when this technique is used by skilled Agile developers.

Agile development techniques might apply to the development of embedded software. In this domain, Agile development must proceed with great caution since embedded systems fundamentally differ from desktop software, as described in the first part of this chapter.

Agile techniques are diametrically opposed to the “Design Everything First” waterfall methodologies exemplified in the life cycle methodology described in DOD-STD-2167A. This was a large highly prescriptive, documentation-centric methodology promulgated by the US Department of Defense in the 1970s which mandated that development should be conducted as a series of discrete steps, with each step, such as system design, fully completed before beginning the next step [11].

While attractive on the face of it, this methodology fails to recognize the fundamental fact of human fallibility. People make mistakes. Designs will contain errors, and committing to the completion of the entire design before commencing development commits the eventual development process to the implementation of an error prone design. This means that design errors will be propagated through the rest of the development cycle and may not be found until system integration or test time. Fixing these problems at this time will be much more expensive than doing it earlier in the project. Also, being closer to the delivery deadline means that the corrections will be developed under much more stressful circumstances, with it being likely that the quality of the work will be lower due to schedule pressure.

So, the embedded system developer is faced with a conundrum. Highly interactive and highly iterative agile development methodologies with minimal up-front design work well in the development of human centered applications with complex GUIs where user requirements are very uncertain. Unfortunately, most embedded systems don’t fit this description, and the application of agile techniques to the development of embedded software can be very problematic.

On the other hand, the heavyweight sequential methodologies that require that everything be designed up-front can also be problematic because the development process chokes on a flurry of design and implementation defects that are only discovered very late in the development cycle.

Unfortunately, there is no magical technique or methodology that provides an answer to this problem because the solution lies in compromise and good judgement developed through experience. You can apply some fundamental ideas though. Don’t attempt to design every aspect of your system up-front before commencing development. Conversely, resist the temptation to begin coding before you have thought about your system and produced at least a basic design.

Like Goldilocks and the Three Bears, you need to perform an amount of up-front design that is neither too much, nor too little, but just right. Your experience and good judgment determines what is “just right” for your project and your environment. In some situations, there’s no working around it and you will have to do very large amounts of highly detailed design work up-front, while in other cases a more exploratory approach may be entirely suitable.

Whatever the case may be, once the initial up-front design process is completed, commence a series of iterative development cycles. The phrase “design a little, code a little, test a little” best captures the essence of what is involved. The feedback process from the test phases is critical. You must recognize and correct design or implementation errors as early as possible, because such errors take more time and money to correct as the system gradually takes shape. This might be considered a hybrid approach between spiral and Agile development.

Finally, when you complete the development, test your entire system to ensure correct operation; this is validation. Given the testing phases embedded into the development cycles, this testing should actually be a confirmation of correctness as much as a search for errors.

Chapter 1 provides further information on life cycle models, including the waterfall model, the spiral model, and Agile development.

Tools and techniques

Real-time operating systems

Fairness is an admirable characteristic in most situations. People undoubtedly wish to be treated fairly in their dealings with other people, and they carry that desire over into their dealings with machines. General-purpose OSs are designed to allocate scarce resources between a variety of user and system level processes. It’s unsurprising that one of the primary goals of those systems is achieving a fair and equitable allocation of those resources between all of the competing processes that are running on the computer. Of course, “fairness” may be defined in a variety of ways. For example, an OS may be designed to allocate CPU resources fairly between computationally intensive background processes to achieve high throughput, at the expense of interactive users.

The primary driver for OS design is not a love of fairness on the part of the OS developers, but simply that OSs are general purpose in nature. The desktop operating environment is inherently chaotic and unpredictable. It’s highly unlikely that any two general-purpose computers have exactly the same characteristics in terms of applications and data files installed. This in turn means that the computational demands placed on the processors are almost totally unpredictable.

This unpredictability forces the OS designers to make very generic decisions about how their schedulers will allocate system resources between a multitude of competing processes. Fairness is a reasonable starting point when deciding how to slice the pie.

Embedded systems, however, represent exactly the opposite situation from that encountered on the desktop. Embedded systems are focused devices dedicated to the solution of a single problem. They highly constrain inputs and outputs and thoroughly define processing. Techniques appropriate to the desktop environment are prone to fail in the embedded world. Fairness as a resource allocation philosophy fails miserably in embedded systems.

Enter the RTOS. The primary distinguishing feature between a general-purpose OS and an RTOS is that an RTOS is not concerned with the fair allocation of system resources. An RTOS allocates each process a priority. At any given time, the process with the highest priority will be executing. As long as the highest priority process requires the use of the CPU, it will get it to the exclusion of other processes. This “highest priority first” scheduling philosophy is an extraordinarily powerful tool for the embedded system software developer. Used well, an RTOS can extract the maximum processing power out of a system with minimal overhead.

There are a large number of commercial and open source RTOS packages available to choose from, with a wide variety of features. The most compelling argument for adopting one of these systems is that they allow your developers to focus on their application domain, rather than on developing infrastructure that, while necessary, doesn’t contribute to your business goals.

For example, apart from their key task creation and scheduling functions, RTOSs also provide abstracted software layers and device drivers for processor bootstrap, interprocess communication, onboard timers, external communications ports, and peripherals of all varieties. Getting your expensive engineers to custom build this infrastructure rather than concentrating on your core business applications is almost certainly going to be more expensive and less efficient than acquiring a suitable product from people who are in the business of producing that infrastructure.

Design by Contract

Design by Contract (DBC) is a powerful software development technique developed by Prof. Bertrand Meyer. He espoused the concept in his book Object-Oriented Software Construction [12] and implemented it as a first-class component of the Eiffel programming language. Facilities for using DBC are now available in a wide variety of programming languages, either built into the language or implemented as libraries, preprocessors, and other tools.

DBC uses the concept of a contract from the world of business as a powerful metaphor to extend the notion of assertions that have been a common programming technique for many years. As in a business contract, the central idea of the DBC metaphor involves a binding arrangement between a customer and a supplier about a service that is to be provided.

In terms of software development, a supplier is a software module that provides a service, such as data through a public interface, while a customer is a separate software module that makes calls on that interface to obtain the data. A contract between the two modules represents a mutually agreed upon set of obligations that must be met by both the customer and supplier for the transaction to complete successfully. These obligations represent things such as valid system state upon entry to and exit from the call to the interface, valid input values, valid return types, and aspects of system state that will be maintained by the supplier.

There is a substantial difference between the conventional use of assertions and DBC. The old school use of assertions involves writing code and then adding assertions at various points throughout the code. As the name implies, Design by Contract involves incorporating assertions about the correctness of the program into the design process so that the assertions are written before the code is written.

This is a powerful idea. Creating contracts during system design forces the designer to think very carefully about program correctness right from the beginning. DBC provides convenient tools for guaranteeing that correctness is established and maintained during program operation.

Two standard elements for establishing DBC contracts are the REQUIRES() and ENSURES() clauses. These may be viewed as function calls that take a Boolean expression as an argument. If the Boolean expression evaluates to true, then program execution continues as expected. If the expression evaluates to false, then a contract has been violated and the contract violation mechanism will be invoked. You place one or more REQUIRES() clauses at the start of a function to establish the contract to which the function agrees prior to commencing work. You place one or more ENSURES() clauses at the end of the function to establish the state to which the function guarantees after it has done its work.

The key thing for you to understand is that DBC is intended to locate defects in your code. This might sound like a statement of the obvious, but it’s more subtle than it appears at first glance. The contracts that you embed throughout your code should establish the system state required for correct operation at that point of execution. They represent “The Truth” about the correct operation of the system. If a contract is violated, then a catastrophe has occurred and it is not possible for the system to continue to operate successfully. At that point, it is better for the system to signal a failure and terminate, rather than to propagate the catastrophe to some other point of the system where it may fail quietly and mysteriously, resulting in days or weeks of debugging to track backward from the point of failure.

DBC should not be used for validation of data received over interfaces, either from an operator or from another system. Normal defensive programming techniques should be used at the interface to reject bad data such as operator typographical errors. The difference here is between something bad that might actually happen under unusual circumstances versus something that must never happen at all. You should never invoke a DBC contract on something that could reasonably occur.

One way to look at your contracts is that they are testing for impossible conditions. You shouldn’t think, “I won’t test for that, because that will never occur.” You should think “I will test for that, because if that does occur, it indicates that there is a defect in the system and I want to trap it right now.”

So, the REQUIRES() statement says, “I require the system state to satisfy this condition before I can proceed. If the system is not in this state, then it is corrupt and I’ll halt.” Similarly the ENSURES() statement says, “I guarantee that inside this function I have successfully transformed the system state from where we were at the beginning, to this state. If this transformation has not been successful, then something has gone very wrong, the system is corrupt, and I’ll halt.”

You might look at ENSURE at the end of a function and think “Why should I test that? That pointer is never going to be NULL.” But the concern is that the pointer will never be NULL if the system is working correctly. What we’re catching with the ENSURE is the case where a maintainer (either you next week or somebody else in 10 years’ time) comes along, changes the system erroneously, and introduces a subtle bug that results in the pointer being NULL once every month, which causes the system to mysteriously crash.

To repeat: Ask yourself exactly what the system state should be at the start of a function for subsequent processing to succeed and then REQUIRE that to be so. At the end of the function, ask yourself to what state you’ve transitioned and ENSURE that you’ve successfully made that transition.

This process forces you to think more clearly about what you are doing and ensures that you have a much crisper mental model of your system inside your head during design and development. This leads to better design and implementation so that you avoid introducing defects in the first place.

An analogy I like is that DBC statements are the software equivalent of fuses in electrical systems. A blown fuse indicates that something is defective (or failed) while protecting your valuable equipment from an out-of-range value (i.e., too much current). A triggered DBC condition indicates that something is defective while protecting the rest of your system from that corruption.

1. Nothing comes for free. Using DBC incurs an overhead caused by the computation necessary to evaluate each of the DBC clauses. In systems with limited amounts of spare processing power, it may be the case that the evaluation of the DBC clauses involves a prohibitive amount of overhead. In such cases, it may be necessary to disable DBC evaluation for release after system development and testing has been completed.

2. The expressions provided in DBC clauses must not have any side effects. If the expressions do have side effects and DBC evaluation is disabled for release, then the released version will operate differently to the debug version at runtime, which is obviously highly undesirable.

3. It can be very difficult to decide what action to take in an operational system if a DBC clause evaluates to false. When a DBC clause is triggered, it presumably indicates the detection of a defect within the system. The simplest option when this happens is to display or log appropriate errors messages and then terminate execution of the program. While this is a simple solution, it may not be feasible to follow this course of action in a working embedded system. For example, if the system under consideration is the engine management system of a motor vehicle, then terminating the program while the vehicle is traveling down a freeway at high speed is probably not appropriate. On the other hand, doing nothing may be an equally bad choice given the detection of a potentially serious defect within the system.

4. Realistically, the action to be taken by the DBC processing upon detecting a defect is a choice that can only be made by the developers based on the information they have about the nature of their system.

Drawings