Chapter 8

Inviting to Participation

EAM 2.0

Content

Building Block 4: Participation in Knowledge

Collaborative Data Modeling: The ObjectPedia

Weak Ties and a Self-Organizing Application Landscape

Summing It Up: Assessment by the EA Dashboard

Building Block 5: Participation in Decisions

The Diagnostic Process Landscape

The Bazaar of IT Opportunities

Summing It Up: Assessment by the EA Dashboard

Building Block 6: Participation in Transformation

Mashing Up the Architecture Continuum

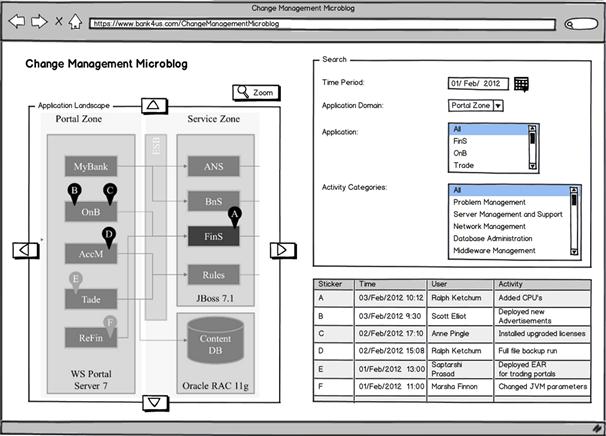

The Change Management Microblog

The Western intellectual tradition shows a certain bias against crowds. The ancient Greek philosopher Plato (424–348 BC), for instance, conceptualized the idea of a sophocracy in his classic The Republic (in Greek: Politeia). The essence of this government is that the most knowledgeable and wisest—the philosophers—should rule. The German poet Goethe made the point clear in 1833:

Nothing is more repugnant than the majority; it consists of a few strong trailblazers, rogues and weak characters that assimilate, and the crowd that trolls after them without a clue what it wants.

What contributions can be expected from those trolling behind, as Goethe puts it? Throughout the centuries, one can spot a conviction among Western thinkers that a crowd is never wiser than its most capable members. It is accompanied by the misanthropic belief that individuals even become “dumbed down” in a crowd; they vulgarize: laugh at insipid jokes, applaud harebrained slogans, or lower their ethical standards to a degree they otherwise would feel ashamed of. The television entertainment of our time indeed gives some evidence for such opinions.

Nevertheless, as general statements about the capabilities of crowds, these convictions are wrong. In his bestseller The Wisdom of Crowds (2005), James Surowiecki gathered an abundance of research results from the social sciences and economics that demonstrate the sometimes fabulous capabilities of crowds. The title of this book has become a headline for the intellectual capabilities that show up when individuals are networking: The crowd is often smarter than the sum of its members’ capabilities. The economist and Nobel laureate Friedrich August von Hayek wrote (1979, p. 54):

[…] the only possibility of transcending the capacity of individual minds is to rely on those super-personal, self-organizing forces which create spontaneous orders.

What von Hayek had in mind were the self-organizing forces of market economies. These economies are not planned or controlled by a mastermind; nevertheless they are unbeatably efficient in producing and distributing goods in response to actual needs. The “super-personal” forces at work, for instance, condense the whole knowledge about the circumstances of production and distribution and the criticality of a demand into a single number, the price, something individual minds generally fall short of. But von Hayek’s tenet goes beyond economics and is today also supported by spectacular crowd achievements such as Wikipedia or Linux.

Turning to IT architecture, we find that the traits of sophocracy are still carved into IT architecture as a discipline: Here’s the brain with the blueprint, the architect; and there are the hands implementing the plan accordingly. These traits are particularly visible in enterprise architecture, maybe due to the proximity to the illustrious CxO level, maybe because it sometimes is considered the peak of the IT architecture profession. Yet we know that wisdom does not reside with just a few, and that modern IT has grown into a challenge in which enterprise architects cannot afford to lock out insights and ideas, whatever their source.

This chapter is centered on building blocks accounting for the wisdom of crowds—proposals for ways to implement the third of the central maxims set out in Chapter 6, “Foundations of Collaborative EA”:

Foster and moderate open participation instead of relying only on experts and top-down wisdom

Our approach to putting this maxim into practice is EA 2.0, the application of Enterprise 2.0 to EA. From the public space we know that social software platforms and other Web 2.0 techniques elicit collaboration, knowledge sharing, and the super-personal spontaneous orders that von Hayek wrote about. Enterprise 2.0 banks, for good reason, on the hope of utilizing this momentum in a general enterprise context and for general enterprise ends. What is more compelling than pursuing this ideal for the sake of EA, too?

The following sections exemplify the EA 2.0 vision using concrete Enterprise 2.0 tools in the context of our Bank4Us case. We’re not saying that these are the only or the best tools. Instead, they should convey an idea of how to give birth to a viable EA 2.0 culture. We have arranged them into three major building blocks:

Building Block 4 pursues new traits of sharing and combining knowledge. ObjectPedia will be one of the examples demonstrating this aspect—a self-organizing, company-wide data dictionary of business objects that is roughly built along the lines of the famous archetype, Wikipedia.

Building Block 5 is about collaborative decision making. The diagnostic process landscape demonstrates how decisions can be prepared on the ground using crowd input, whereas our second example, the ITO bazaar, even shows how common decisions can be moderated in an Enterprise 2.0 fashion.

Finally, Building Block 6 exemplifies how the transformation that eventually changes the IT landscape can be fostered by participation.

But before we come to the building blocks, we start the EA 2.0 journey with a recap of the basic ingredients of Web 2.0 and Enterprise 2.0. We must come to a clear understanding of these somewhat fuzzy subjects and discuss their general benefits and pitfalls in a wider scope.

A primer on Enterprise 2.0

The term Enterprise 2.0 was coined by Andrew McAfee of the MIT Sloan School of Management, who was at Harvard Business School at the time. In 2006, McAfee published a paper, Enterprise 2.0: The Dawn of Emergent Collaboration (McAfee, 2006a), in which he described social software as a means of collaboration in an enterprise context. The paper gained a great deal of attention. Until then, social software had been regarded as a playground for leisure time or private activities; that it could also add value to the grave, professional world of modern enterprises was a new idea.

McAfee took up two trains of thought that were widely discussed and applied them to his research topic: the “impact of IT on how businesses perform and how they compete” (McAfee, 2009, p. 3). One train of thought is Web 2.0. The other is a series of related research about collective intelligence—the way communities collaborate, coordinate their activities, manage knowledge, and come to new insights.

Let’s first take a look at Web 2.0. There is no straightforward definition of this term. Even an authority like Sir Timothy Berners-Lee, inventor of HTML, professor at the Massachusetts Institute of Technology (MIT), and director of the World Wide Web Consortium (W3C), regarded it as “[…] a piece of jargon, nobody even knows what it means” and claimed that it actually adds nothing that wasn’t there in the good old Web 1.0 (Berners-Lee, 2006). The “tag cloud” of notions people associate with Web 2.0 is indeed diverse and full of concepts lacking a reliable definition.

The most important description of Web 2.0 goes back to Tim O’Reilly, founder and CEO of O’Reilly Media, in 2005. In his much-quoted article “What Is Web 2.0?” he defends the term against the criticism of fuzziness: “Like many important concepts, Web 2.0 doesn’t have a hard boundary, but rather, a gravitational core” (O’Reilly, 2005). In other words, just as there is no hard boundary between jazz and pure noise, there’s no hard boundary between things that are Web 2.0-like and things that are not—but that doesn’t render the concept meaningless.

Figure 8-1 Web 2.0 tag cloud.

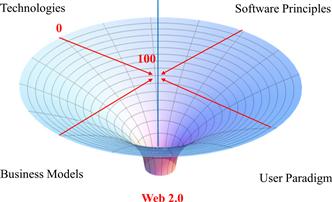

Web 2.0 is a conglomerate of technologies, software principles, business models, and, most important, a different user paradigm of the Internet as a social medium. Offerings that score fully on all these aspects clearly are at the gravitational core of Web 2.0, as shown in Figure 8-2.

Figure 8-2 Aspects and the gravitational core of Web 2.0.

Take, for instance, a Web application built on specific technologies according to specific software principles, distributed according to new business models, and addressing the user as a social being; this application would beyond doubt deserve the label “Web 2.0.” On the other hand, if we subtract these properties one by one, we move closer and closer to the boundary, until it eventually becomes doubtful whether the application still is Web 2.0.

We do not want to go deeper into the characteristic technologies, since there is a broad literature available on this topic. Nevertheless, to indicate what we mean by Web 2.0 technologies, here’s a short and certainly not exhaustive list:

• Feeds (RSS or Atom), permalinks, and trackbacks

• Web services (REST or SOAP/WS-*)

• Server-side component frameworks such as JavaServer Faces or Microsoft ASP.NET

• Full-stack frameworks for rapid development such as Ruby on Rails or Grails

• Client-side technologies such as AJAX, JavaScript, Adobe Flex, or Microsoft Silverlight

• Content and application integration technologies, namely portals and in particular mashups such as IBM Mashup Center or Software AG MashZone

These technologies are the enablers for the new Web 2.0 game. Feeds, permalinks, and trackbacks, to pick one example, are the foundation of the blogosphere, the social ecosystem of cross-connected weblogs.

The software principles outlined here as a second characteristic of Web 2.0 guide the way software is designed and developed on the new Web. The most eye-catching principle is the design for a richer user experience, or, in more glowing words, for joy of use. It is the quality leap from the stubborn, form-based request-response behavior of classical Web applications to the handsome comfort we’ve gotten used to with desktop applications. Modern Web applications offer fluid navigation, context-aware menus and help, visual effects and multimedia, and a look that doesn’t feel like something out of a bureaucrat’s cabinet.

Another principle is the so-called perpetual beta. Contrary to traditional software products that are released as ready-made, compact feature packs in relatively long-lasting release cycles, Web 2.0 software is typically in a state of continuous improvement. It is released frequently in short cycles, and upgrades happen seamlessly, without the blare of trumpets that announce traditional software releases.

The perpetual beta also invites users to be development partners. It is a trial-and-error approach to guessing what the user wants. Features are tentatively placed on a Website, but if users do not embrace them, the provider removes them. Both the “release soon and often” factor and the unresisting adaptation to user behavior also characterize lean and agile development approaches, as we know from Chapter 7. These approaches therefore are natural complements to Web 2.0.

The last principle we want to outline here is the design for remixability. This means that data and functionality are delivered to the client in the form of extensible services, as opposed to the closed set of functions that traditional applications offer. The services are intended to be remixed by the service consumer, even in ways the provider never anticipated. Their design therefore strives for lightweight APIs, versioning, and other ingredients of loose coupling.

Remixability brings us to the business models characterizing Web 2.0. These business models are no longer centered on competition between applications. Internet users today simply expect reasonable user interfaces; these are nothing they get excited about. What separates the wheat from the chaff are the services, in particular the data offered by a competitor. Companies that provide highly valuable data in a remixable way and that demonstrate operational excellence in ensuring performance, security, and other nonfunctional properties are gaining market share.

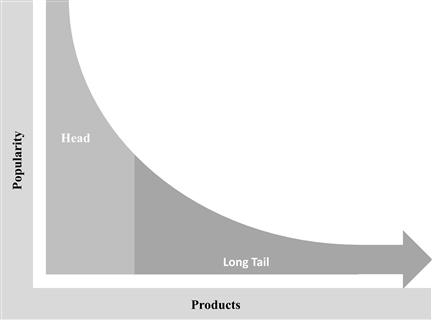

Another feature of Web 2.0 business models is that they serve the long tail. The long tail (see Figure 8-3) is a metaphor the journalist Chris Anderson (2006) applied to the large market segment of diversified low-volume offerings.

Figure 8-3 Long tail of product distribution.

Internet marketplaces such as Amazon demonstrate strong support of the long tail. Bookworms find rare bibliophilic editions there because Amazon remixes the services offered by smaller or specialized booksellers and creates a seamless link between seller and bookworm. This is a nice example of how the openness and remixability of Web 2.0 services enable providers to meet individualized, uncommon requirements.

A third feature of Web 2.0 business models is that they turn the formerly passive role of the consumer into active participation. Consumers act, for example, as marketing agents: They spread the news and, consciously or not, recommend products. Marketing folks use the (slightly insane) term viral marketing for the methods used to influence this genuinely autonomous consumer activity.

Tim O’Reilly finds this kind of marketing so characteristic that he writes: “You can almost make the case that if a site or product relies on advertising to get the word out, it isn’t Web 2.0” (O’Reilly, 2005). Consumers have an important say in the public appearance of a product. They evaluate products, write critiques and user guides, publish tips and tricks, relate products to each other, and so forth. In contrast to pre-Web 2.0 times, it is now much more difficult for a company to control the public image of its offerings.

The last feature of Web 2.0 business models we’d like to mention here is that services get better the more people use them. Examples of this characteristic are public spam filters or file distribution models such as BitTorrent. Such services functionally depend on being used, and the more users there are, the better the services work.

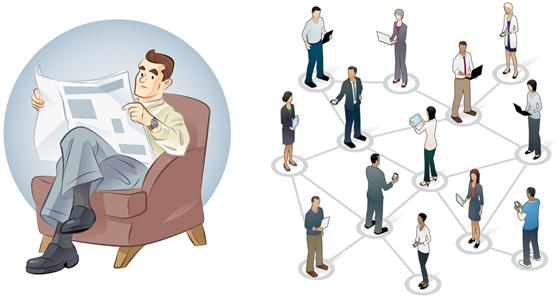

The features of business models already indicate what can be regarded as a litmus test for Web 2.0: the question of whether the Internet is utilized as just a rather one-directional publication channel or as a platform that addresses users as social beings and invites them to participate and exchange. Figure 8-4 nicely illustrates this distinction in paradigms.

Figure 8-4 Information source versus platform of participation.

The social momentum of Web 2.0 is particularly visible in social software, which is an important subcategory of Web 2.0 applications. The most prominent examples of social software such as Wikipedia, Facebook, or Twitter are ubiquitous and known to everyone. Tom Coates defines social software as “software which supports, extends, or derives added value from human social behavior” (Coates, 2005).

Though the definition is crisp and nicely emphasizes the value lying in IT-enabled social behavior, it is slightly too general. Email, for example, would fall under Coates’s definition, but email is generally not regarded as social software. Social software offers a platform, a space where actions are publicly visible and persistent. A platform invites an indeterminate cloud of people to react, whether sooner or later; that’s up to them. Email, on the contrary, opens a channel visible to a small group only and imposes an obligation to reply soon. Thus, email is ruled out of the social software equation.

But what about company portals—are they social software? According to McAfee (2009), this depends on how much structure they impose. Most portals we have seen are intended to support a tightly coupled working group in predefined tasks. They show a rich and somewhat rigid structure in terms of workflows, data and document types that can be published, permissions, and roles. This is not social software. Social software minimizes the pre-given structures and leaves the rest to self-organization. This doesn’t mean it is unstructured, but the pre-given landmarks are reduced to a few condensation-points where content can accumulate.

McAfee (2009) uses the term emergent to characterize structure that is not preplanned by a mastermind but emerges out of local activities in a community. The structure is, as McAfee puts it, irreducible: It results from network effects and economies of scale but cannot be deduced from the contributions of individual actors.

The tag clouds of the Yahoo! bookmarking service Delicious are an example of an emergent structure. Internet users make their bookmarks publicly available and assign tags to the bookmarks that, in their very subjective view, describe the contents of the bookmarked page. The aggregation of individual tags yields a tag cloud that puts Web content into categories with amazing accuracy and facilitates catchword-based searching. Such categorizations are called folksonomies—taxonomies created by a large crowd of folks.
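To make the aggregation step concrete, here is a minimal Python sketch of how individual, subjective tags could add up to a tag cloud. It is an illustration only: the bookmark data, user names, URLs, and the function names `tag_cloud` and `font_sizes` are assumptions made for this example and say nothing about how Delicious actually implemented its service.

```python
from collections import Counter

# Hypothetical bookmarks: (user, URL, free-form tags chosen by that user).
bookmarks = [
    ("alice", "https://example.org/esb-intro", ["esb", "soa", "integration"]),
    ("bob", "https://example.org/esb-intro", ["SOA", "middleware"]),
    ("carol", "https://example.org/esb-intro", ["soa", "esb"]),
    ("dave", "https://example.org/bpel-guide", ["bpel", "soa"]),
]


def tag_cloud(bookmarks, url):
    """Count how many users applied each tag to the given URL."""
    counts = Counter()
    for _user, bookmarked_url, tags in bookmarks:
        if bookmarked_url == url:
            # Lower-case and deduplicate so each user counts once per tag.
            counts.update({tag.lower() for tag in tags})
    return counts


def font_sizes(counts, smallest=10, largest=24):
    """Scale tag counts linearly to font sizes, as a rendered cloud would."""
    if not counts:
        return {}
    low, high = min(counts.values()), max(counts.values())
    span = (high - low) or 1  # avoid division by zero if all counts are equal
    return {
        tag: smallest + (count - low) * (largest - smallest) // span
        for tag, count in counts.items()
    }


cloud = tag_cloud(bookmarks, "https://example.org/esb-intro")
print(cloud.most_common())
print(font_sizes(cloud))
```

No single user defines the category system here; the weighting of "soa" over "middleware" emerges only from the aggregation, which is the sense in which the structure is emergent.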

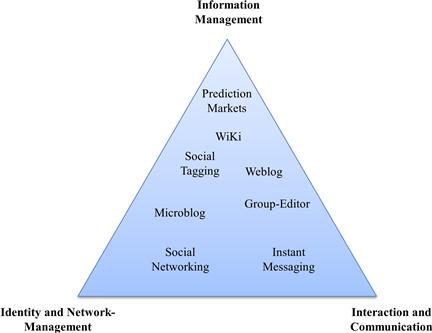

A common way to systematize the manifold social software applications is by means of a social software triangle, as shown in Figure 8-5. This triangle arranges application types according to their contribution to the three fundamental use cases of social software:

• Information management. Creating, aggregating, finding, evaluating, and maintaining information.

• Identity and network management. Shaping and presenting one’s personal profile, building and cultivating social ties.

• Interaction and communication. Direct and indirect communication, conversations, correspondence, information exchange, quotations, and comments.

Figure 8-5 The social software triangle.

Another well-established characterization of an information platform as social software is formulated by McAfee (2009, p. 3) and condensed into the acronym SLATES, which stands for:

• Search. Users must be able to find what they are looking for.

• Links. Users must be able to link content. Links structure the content in an emergent way and allow the relevance of a piece of content to be rated by the links referring to it (the basic idea behind Google’s PageRank algorithm).

• Authoring. Users must be able to contribute their knowledge, experience, insights, facts, opinions, and so on.

• Tags. Users must be able to apply tags to categorize content in an emergent way.

• Extensions. Users get automatic hints on content that might be interesting to them based on user behavior and preferences (“If you like this, you might like …”).

• Signals. Users can subscribe to alerts or feeds so that they are continuously informed about new or updated contents. By using feed aggregators, they also can choose to automatically incorporate the most recent content into their own content.
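As an illustration of the Links item in the list above, the following Python sketch ranks search hits by the number of pages that link to them. This is deliberately cruder than PageRank, which additionally weights each link by the rank of the page it comes from. The page names and the functions `inbound_link_counts` and `rank_search_hits` are hypothetical and chosen only for this example.

```python
from collections import Counter

# Hypothetical wiki pages mapped to the pages they link to.
links = {
    "CustomerEmpowerment": ["FinancialDashboard", "SelfService"],
    "FinancialDashboard": ["Account", "SelfService"],
    "SelfService": ["Account"],
    "Account": [],
}


def inbound_link_counts(links):
    """Count, for every page, how many distinct other pages link to it."""
    counts = Counter({page: 0 for page in links})
    for source, targets in links.items():
        for target in set(targets):
            if target != source:  # a page linking to itself does not count
                counts[target] += 1
    return counts


def rank_search_hits(hits, counts):
    """Order hits by inbound-link count, most-linked first; ties by name."""
    return sorted(hits, key=lambda page: (-counts[page], page))


counts = inbound_link_counts(links)
print(rank_search_hits(["CustomerEmpowerment", "SelfService", "Account"], counts))
```

The ordering is not configured by an editor; it changes whenever authors add or remove links, which is what makes the structure emergent in McAfee's sense.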

But let’s conclude here. We hope that the reader by now has a good grasp of what Web 2.0 is about, even though this notion cannot be defined with ultimate sharpness. At this point, we have also collected all the ingredients needed to state McAfee’s famous definition of Enterprise 2.0. It reads (McAfee, 2006b):

Enterprise 2.0 is the use of emergent social software platforms within companies, or between companies and their partners or customers.

Today, many organizations in which knowledge work is an essential part of the business have adopted social software in some way. Wikis, blogs, and Facebook-like social networks can be found in many companies or institutions. The momentum such tools have is not caused by technology but rather by the social dynamics they elicit. They fill what the sociologist Ronald Burt calls structural holes in a knowledge network (Burt, 1995): missing links between people that, if present, would provide information benefits.

The observation goes back to Mark Granovetter, who in 1973 published his influential paper The Strength of Weak Ties (Granovetter, 1973). Prior to this landmark paper, studies of social dynamics always emphasized the importance of the closely related group. This is a group of peers, friends, relatives, colleagues, and other people who have strong ties.

But Granovetter argued that for gathering information, solving problems, and drawing inspiration from unfamiliar ideas, the weak ties are at least as important. Strongly tied groups are in a sense islands of static knowledge. The members more or less share the same experiences, convictions, and tenets. Even if there are different opinions or backgrounds, the exchange usually ends in a “we agree to disagree” arrangement. Weak ties, on the other hand, are relationships to people you know only superficially or by name; they are bridges to other knowledge islands and can therefore be strong in acquiring new insights.

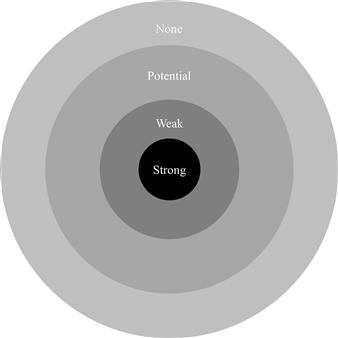

Drawing on the potential of the new Web 2.0 technologies, Andrew McAfee adopted Granovetter’s idea and extended it to what he calls the “bull’s eye” (Figure 8-6).

Figure 8-6 The bull’s eye.

At the center of the bull’s eye are strongly tied people. In the working world, these are the people you work with on a regular basis, people who belong to your community of practice. This is the inner circle that has been addressed by traditional IT tools for collaboration, the so-called groupware. Web 2.0 does not revolutionize this circle except that it provides easier access and a less rigorous structure.

The social net grows to the second circle of the bull’s eye if you take in people you worked with on a project a long time ago, people you met just once at a conference, or people who happen to be in your address book for some other reason; in short, people to whom you have weak ties. These are the ties that become important when you want to know something off the beaten track of your daily routine. They are the people you want to contact when all your buddies are shrugging their shoulders.

Maybe your concern is to introduce an orchestration engine into your enterprise service bus (ESB) and use the Business Process Execution Language (BPEL) as a means to implement business processes, but nobody in your company has any experience with the pros and cons of doing so. Today this might be the point at which you turn to professional networks such as LinkedIn or even Facebook. These tools not only provide an address book and lightweight connectivity; they also make you aware of what your weakly tied counterparts are doing and what they know. They provide what Enterprise 2.0 apologists call network awareness.

But the potential of Web 2.0 goes beyond people’s weak ties. The characteristic trait that people contribute content to platforms on a broad basis spawns potential ties between them. If you find something relevant in the blog of some author, or if some person leaves a comment or trackback in your own blog, it can be the starting point of a conversation or even a collaboration.

The outermost circle labeled “None” on the bull’s eye is not there in all versions of this diagram. It denotes the kind of social dynamic that is not centered on ties but rather on an anonymous aggregation of information contributed by people who have no intention of getting in contact with each other. Examples of such dynamics are tagging, rating, and prediction markets.

It is a remarkable feature of the bull’s eye that it has backtracks from the outer circles to the inner ones. Since the communities of practice at the inner circle also place their content on public platforms, they become more open to comments or opinions from the outer space, which is one of the major advantages of Web 2.0 with regard to the inner circle.

The public space offers convincing examples of how the means of Web 2.0, which look insignificant at first sight, can change the rules of the game. Take, for instance, the way we consume products today, aided by ratings, consumer forums, links to similar products, and so forth. Don’t we have reason to suspect that Enterprise 2.0 has similar potential, that it implies a major shift in the way people do knowledge-intensive work? This is the reason we analyze it here for its applicability to EA.

The introduction of Enterprise 2.0 into an organization is, on the other hand, not a straightforward thing. It is in no case sufficient to install a wiki, some social networking tool, a blogging platform, or other Web 2.0 software, then leave the rest to emergence. The hope that tools alone will make knowledge and communication flourish like a tropical rainforest is illusory. The introduction is to a large extent a question of organizational measures, and we list here just the ones we find most critical:

• Enterprise 2.0 platforms must be put into “the flow.” They should not come as an additional burden apart from the daily work processes. This might imply a clear-cut replacement of existing tools.

• Enterprise 2.0 platforms must be governed by carefully defined rules and norms. The authoring rules of Wikipedia are a good example of how this guides the direction, contents, and etiquette of such a system.

• Enterprise 2.0 platforms should come with a skeleton of predefined structure. This structure provides crystallization points at which contributions of the user community can sediment. A tabula rasa leaves too many contextual considerations to users and is perceived as an obstacle for starting to use the platform.

• Contributions to Enterprise 2.0 platforms must be valued. There’s a wide range of options here, from somewhat childish goodies to incentive-related goal agreements. But the most convincing signs of appreciation are that (a) authorities or highly respected persons start using the platform and (b) important content is published and discussed there. If the CEO writes a blog but the staff finds nothing more important there than her impressions of the New York marathon, the platform is not earnestly valued.

A thorough discussion of the weaknesses and threats of Enterprise 2.0 is beyond the scope of this book, so we refer the interested reader to Chapter 6 of McAfee (2009) for such a discussion. There is in fact a broad variety of pernicious things that can show up in a social platform—for example:

• Inappropriate content. Pictures of doggies and kittens, conversations about cycling training methods, off-topic discussions, and the like.

• Flame wars. Discussions for the sake of discussion, insults, airing of dirty laundry, mobbing, troll behavior, etc.

• Wrong content. Self-proclaimed experts giving wrong advice, employees uttering opinions that are contradictory to the company position, etc.

We tend to agree with Andrew McAfee that these downsides are, as he puts it, “red herrings”—threats that can be overcome by suitable norms, corrective actions of authorities, or role models. The self-healing capabilities of social platforms may also auto-correct them to some extent.

But the loss of confidentiality and the danger of security leaks are, in our view, more serious concerns than McAfee thinks. Envision a company that has outsourced a large part of its IT function to service providers, business partners, or freelancers. This typically results in a politically delicate conglomerate of conflicting interests. Making the IT strategy, the map of the IT landscape, product decisions, or other highly interesting topics public to all involved parties changes the game between service provider and buyer.

From the buyer’s perspective it gets more difficult to pull the wool over the provider’s eyes, and from the provider’s point of view it is simpler to exploit weak spots as cash cows. One might ask in such a constellation whether it is the new openness or the game of “partners” itself that smells fishy, but such shifts in the political equilibrium caused by Enterprise 2.0 must be given due consideration.

The most serious threat to Enterprise 2.0 certainly is that it simply doesn’t work due to a lack of participation. By April 2011, there were 3,649,867 English articles in Wikipedia, a tremendous number that exceeds other encyclopedias by orders of magnitude. At the same time, the number of contributors, defined as users with at least 10 edits since their arrival, amounts to 684,737. This is an impressive absolute number, but given that there are more than one billion English-speaking Wikipedia users, it melts down to a tiny share of about 0.068% of the whole.

If the same percentage applied to an Enterprise 2.0 platform featuring topics regarding the IT function of an enterprise, a theme that does not reach more than 1,000 addressees, it would yield less than one active contributor, and the whole idea would collapse to a homepage and publication channel of at best a handful of expert authors. Thus, countermeasures must be taken to raise the percentage of contributors to a viable level. Some of these measures have been outlined previously, for instance the advice to put Enterprise 2.0 tools into the flow, but we must keep an eye on this aspect in the following sections, where we ponder Enterprise 2.0 as an enabler for EA.
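The arithmetic behind this argument can be retraced in a few lines of Python. The Wikipedia figures are the ones quoted in the text, with "more than one billion" treated as exactly one billion; the target of 50 contributors is a purely hypothetical number chosen to show the size of the gap.

```python
# Figures quoted in the text (Wikipedia, April 2011).
contributors = 684_737       # users with at least 10 edits
users = 1_000_000_000        # "more than one billion", taken as a round lower bound

rate = contributors / users
print(f"contributor rate: {rate:.3%}")

# The same rate applied to an enterprise platform with 1,000 addressees.
addressees = 1_000
expected_contributors = rate * addressees
print(f"expected contributors among {addressees}: {expected_contributors:.2f}")

# Hypothetical target: what rate would be needed for 50 active contributors?
target_contributors = 50
required_rate = target_contributors / addressees
print(f"required rate: {required_rate:.1%}, "
      f"about {required_rate / rate:.0f} times the Wikipedia rate")
```

At the Wikipedia rate, a platform with 1,000 addressees could expect fewer than one regular contributor. Under the assumed target of 50 contributors, the participation rate would have to be roughly 73 times higher, which is why organizational countermeasures matter more than the tooling.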

Building Block 4: Participation in knowledge

Our first building block primarily invites the IT staff to participate. According to the British sitcom “The IT Crowd,” these folks lead a despised, nerdish life in the untidy, shabby basement of a company, in dazzling contrast to the glamorous marble and glass architecture the rest of the company enjoys. How can enterprise architects fight against a technophile subculture that rules the basement? How can they excavate the treasure of knowledge buried in the cellar?

The Strategy blog

Oscar Salgado, the chief architect of our Bank4Us EA case, has recently participated in the maxim workshop spelling out the CIO’s Closer to Customer mission statement set out in Chapter 3, activity EA-1, Defining the IT strategy. The outcome has now been published as a slideshow on the employee portal. But Oscar knows his IT crowd inside out: They will play a round of buzzword bingo with it and turn to something “more substantial.”

During the workshop, Oscar learned a lot about the business concerns, the market conditions, and the financial figures that are causing a headache for higher management. He understood that customer empowerment, one of the strategic goals of Bank4Us, is more than a marketing label. Many of the services still offered at the bank’s branch offices are too expensive and simply must be replaced with Internet-based self-services. This is not a matter of value-adds but of financial survival.

Oscar has also started to believe that the initiatives subsumed under customer empowerment can really make a difference to the consumer. The idea of a financial dashboard, for instance, that transparently shows the customer’s financial health state at any time and on any device—desktop, smartphone, or tablet—is something Oscar would actually like to have for himself. One workshop participant brought this example up, but such details didn’t make it to the strategy slides.

After some hesitation, Oscar decides to publish his insights regarding the strategy in some blog posts. He feels a bit uneasy about it because it is a different kind of writing than the impersonal, matter-of-fact stuff he usually authors. On the other hand, he is a seasoned consultant and knows that showing some passion about the strategic goals helps win over the people in the company. Therefore, he decides to make himself a spokesman of the strategy and utter some personal views at the risk that technocratic mockers among the IT crowd might enjoy tearing them apart. But he is convinced that a deeper understanding of the strategy’s motivation, a clearer view of what it means in practice, and a closer identification with the goals will help kick off the mission. Dave Callaghan, his boss and CIO, embraces the idea and encourages Oscar to do so.

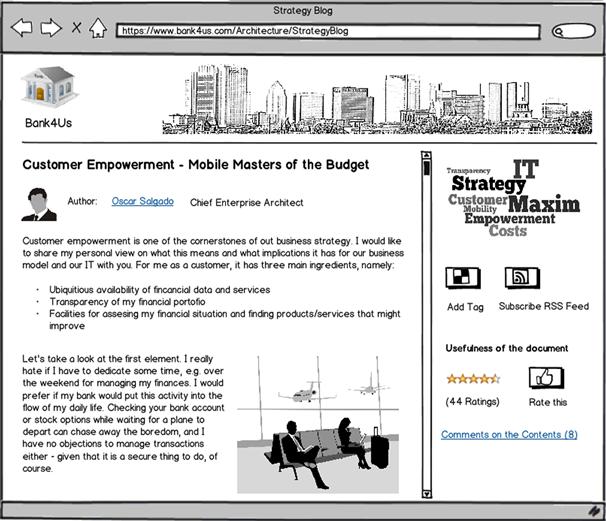

The EA group has long played with the idea of utilizing a social software platform (SSP) for the sake of architecture management. Leon Campista, a peer of Padma Valluri on the EA team focusing on processes and methods, is acting as an evangelist for Enterprise 2.0 and has set up an SSP on the corporate intranet to invite participation. Hence Oscar decides to volunteer as a trailblazer and publishes his first blog post on Leon’s SSP, as shown in Figure 8-7.

Figure 8-7 Oscar’s first post to the Strategy blog.

Oscar’s blog post addresses the economic reasons behind customer empowerment, the opportunities it offers, and the implications for the technical landscape. Nevertheless, the major part is less concrete and analytical; it is about finding the right slogans, scenarios, metaphors, and comparisons. Two Japanese founders of modern knowledge management, Ikujiro Nonaka and Hirotaka Takeuchi, would applaud this approach. Their classic The Knowledge Creating Company (1995) furnishes evidence of the importance of nonanalytical knowledge in giving organizations a common direction.

Oscar, for instance, compares messages that Bank4Us currently provides to its customers about their savings with the information parents receive from their teenage kids on a school trip to Paris. Every now and then, you get some difficult-to-decipher messages on how they’re doing, but all in all your insight and influence are rather limited. This resembles Oscar’s impression when he receives monthly account statements about his equity fund.

After publishing his post, Oscar remembers Leon’s advice that Enterprise 2.0 platforms must be valued (as explained in “A Primer on Enterprise 2.0”), and he asks some peers to read his blog. They in fact take up the blog as a means of spreading the word and add comments contributing their own notes and points of view.

All in all, the majority of the IT crowd welcomes the blog posts because they add some beef to the meager maxims of the strategy slides. Leon, acting as the technician behind the SSP, has added a widget to the blog whereby readers can assess the usefulness of the document. The enterprise architecture group wants to use this as a criterion for a regular clean sweep of the SSP to avoid the proverbial document graveyard.

Yet blogs are still rather unidirectional and similar to the bulletin-like publications found in most employee portals. Blogs elicit comments but do not invite participation in the full sense. There is another example at Bank4Us that goes one step further: the ObjectPedia.

Collaborative data modeling: The ObjectPedia

Data modeling has always been a headache at Bank4Us. Until a few years ago, the company did not have an explicit, enterprise-wide data model. Some units had a detailed, rigid model (in particular those dealing with accounts and money transfers), but others, such as marketing and sales, were drowning in an uncontrolled proliferation of concepts and database entities. However, with the advent of more and more cross-unit business processes and the struggle for a unified, seamless customer interface, the absence of an enterprise data model grew into an obstruction. This problem became particularly visible with the introduction of an enterprise service bus (ESB) as a data exchange platform.

Steve Pread, the enterprise architect in charge of the data architecture, has sent a cry for help to Padma Valluri, who maintains the EA processes at Bank4Us. The process for bringing business objects (BOs) into the ESB draws heavy criticism. To ensure the interoperability of services, Steve’s team had been made the sole authority for defining the BOs that are admissible as service parameters in the ESB.

The objective was a unified data model shared among all service providers and consumers in the ESB. But that turned out to be overambitious. Steve’s team was soon overloaded with requests from projects. Furthermore, his architects did not understand the semantics of the hundreds of BOs well enough. Finally, they also had to acknowledge that the dozen interpretations of a central BO like “customer” all had good reasons: The marketing view of a customer is essentially different from what bank transactions attribute to this entity. Hence, Steve asks Padma for a pitiless inspection of his approach.

Padma, seeing this as an opportunity to show the benefits of the recently developed lean toolkit, invites Steve to a process activity mapping of the BO definition, review, and approval process (see Building Block 1 in Chapter 7). They soon agree that more autonomy must be given to projects and that central control of data definition must be given up in favor of a federated, self-organizing approach.

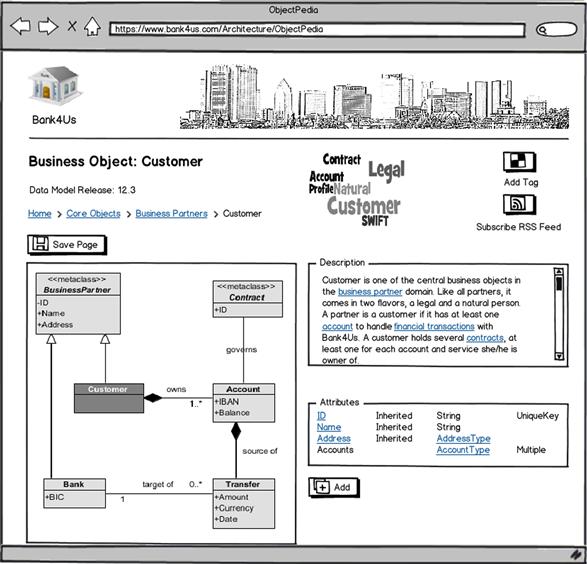

After some days of self-critical musing, Steve comes up with the idea of an ObjectPedia, a social software data dictionary along the same lines as Wikipedia. Padma immediately embraces this suggestion because it points in the direction of the just-in-time modeling and iterative design she regards as a prerequisite for the EA Kanban idea (see Building Block 3). Together Steve and Padma draft a first sketch of this platform, as shown in Figure 8-8.

Figure 8-8 Collaborative modeling: A sketch of ObjectPedia.

The page has some standard SSP functions that Padma and Steve find promising:

• Steve hopes that the tag cloud makes the search for architecture information more efficient. Finding a document (usually a Microsoft Office file) in the company portal that the enterprise architecture group used for publishing in the past eventually felt like searching for a needle in a haystack.

• Users can subscribe to feeds so that they are notified of changes on a page. Padma wants to raise common awareness of changes and automatically synch up all stakeholders as soon as an author publishes modifications.

But a website is just a start and is not sufficient for self-organization. Leon Campista, the EA 2.0 evangelist, reminds Padma and Steve of the advice that Enterprise 2.0 platforms must be governed by carefully defined rules and norms (see “A Primer on Enterprise 2.0”), and together they draw up the following ObjectPedia Charter:

• ObjectPedia shall follow the same code of conduct as Wikipedia, which lives on a respectful and forgiving netiquette.

• Authors must not create redundant concepts. If there is some potential to reuse an existing business object, it should be exploited.

• Authors must integrate new concepts into the existing model. Semantic relationships to existing entities must be explicated.

The charter also lists some rules governing the integration with the overall software development process:

• ObjectPedia is open to data architects from each project. The projects nominate their data architects, who then work on the BO model in a fully authorized, self-responsible way. There’s no authority from above reviewing the contents or moderating in case of conflicting views.

• The data model has several releases per year. At the end of such a cycle, a snapshot of the most recent data model is frozen, labeled with the release number, and activated in the service repository of the ESB.

• Before a project deploys a service provider implementation into production, it has to decide what data model release it adheres to. This decision is part of the service-level agreement (SLA).
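The release rules of the charter can be pictured with a small sketch. It is an illustration only, written under our own assumptions: the charter does not prescribe any implementation, and the names `ObjectPediaModel`, `freeze_release`, and `ServiceDeployment`, the release label `R1`, and the sample attributes are all hypothetical.

```python
from copy import deepcopy
from dataclasses import dataclass, field


@dataclass
class ObjectPediaModel:
    """Hypothetical stand-in for the living BO model plus its frozen releases."""
    working_copy: dict = field(default_factory=dict)  # BO name -> attribute list
    releases: dict = field(default_factory=dict)      # release label -> snapshot

    def edit(self, bo_name, attributes):
        # Project data architects may change the working copy at any time.
        self.working_copy[bo_name] = list(attributes)

    def freeze_release(self, label):
        # End of a cycle: snapshot the current model under a release label.
        if label in self.releases:
            raise ValueError(f"release {label} already exists")
        self.releases[label] = deepcopy(self.working_copy)
        return self.releases[label]


@dataclass
class ServiceDeployment:
    """A service provider declares the release it adheres to (part of the SLA)."""
    service: str
    model_release: str

    def business_object(self, model, bo_name):
        # Resolve the BO against the declared release, not the working copy.
        return model.releases[self.model_release][bo_name]


model = ObjectPediaModel()
model.edit("Customer", ["id", "name"])
model.freeze_release("R1")
model.edit("Customer", ["id", "name", "segment"])  # later edit, not yet released

deployment = ServiceDeployment("AccountService", "R1")
print(deployment.business_object(model, "Customer"))  # ['id', 'name']
```

The point of the sketch is the separation of concerns: authors keep editing the working copy, while a deployed service only ever sees the frozen release it has committed to.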

The charter gives wide autonomy to the projects and downsizes the role of Steve’s team to consultancy and moderation. ObjectPedia will have a version and change history as well as discussion pages, like Wikipedia. But with these facilities, projects are left to their own responsibility for resolving conflicts. They can approach Steve’s modeling gurus for advice but shouldn’t expect that they’ll get an authoritative model from the top down.

Padma, Steve, and Leon are convinced that this scheme might work. They present the idea to Oscar, the chief enterprise architect, on the next possible occasion and win him over to fight for a prototyping budget. But they also know that the effort goes beyond the planned SSP implementation: Weakening the grip in favor of self-organization is a brave step and needs some coaching during ramp-up. It is a long haul, as Andrew McAfee puts it (2009).

The Bank4Us example is a case where the designing capabilities of a central authority did not suffice to tame the complexity of an enterprise data model. Way back in our Reflections on Complexity, we gave some reasons that a network of autonomous designers has the potential to do better: It gets more hands on board and benefits from a diversity of knowledge. ObjectPedia shows how this can be achieved in an EA context.

Weak ties and a self-organizing application landscape

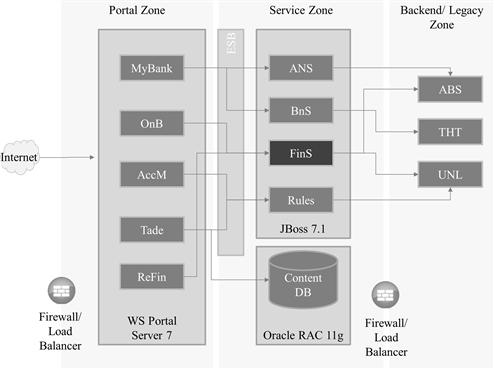

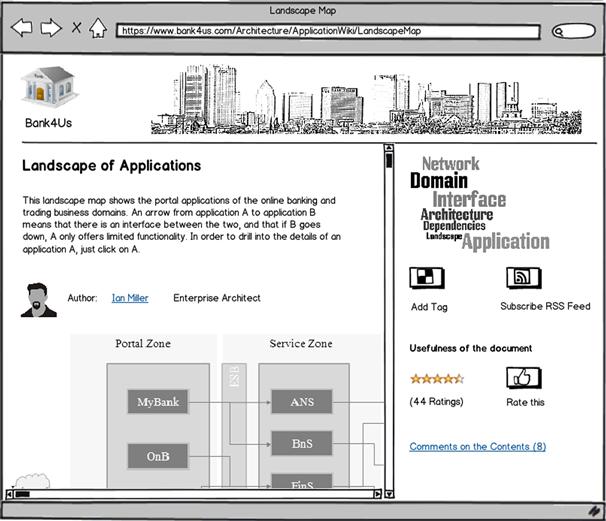

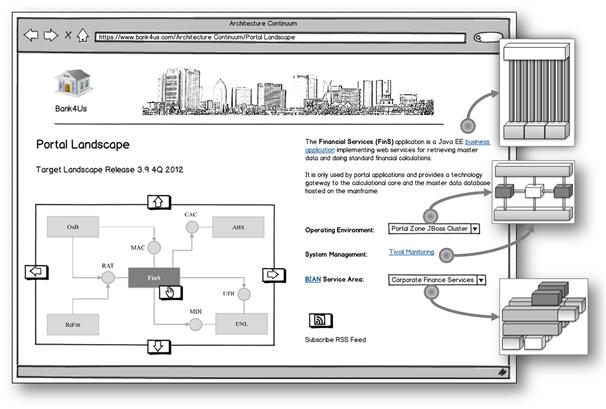

Ian Miller, the Bank4Us enterprise architect whom we already encountered on several occasions, has recently drafted a landscape map of the Internet portal applications in the online banking and trading business domains. An excerpt of this map is shown in Figure 8-9. The map is a view of the as-is architecture and addresses several stakeholders: developers, operational staff, and planners.

Figure 8-9 Ian’s landscape map of portal applications.

It was a strenuous effort to draw the full map consisting of 46 applications and 129 application interfaces. But Ian is an old hand and knows the fate of such architecture views: They are inaccurate from the first day onward, and their reliability melts like ice in summer with each release of an application. Developers and operational staff start ignoring the map as soon as they discover major inaccuracies, and planners will blame Ian if they base erroneous decisions on faults in the map. It has happened more than once that the credibility and reputation of the enterprise architecture group was damaged by such blaming that eventually escalated to the CIO.

The enterprise architecture group had pursued various approaches to ensure the accuracy and timeliness of models. They mandated that development projects had to submit their architecture input at defined milestones, and they monitored the projects by architecture compliance reviews as described in Chapter 3, “Monitoring the project portfolio (EA-6).”

But the results of these governance mechanisms were disappointing for various reasons: The projects unwillingly submitted just a minimum of information “to make the EAs happy,” or the project architect did not have sufficient insight into the de facto implementation, or the delivered input was already outdated when the release went into production. In many cases, models were simply abandoned or kept alive at high cost by frequent and laborious software archeology. The run for up-to-date models felt like an inverse “hare and hedgehog” race: Whenever the modeler was under the happy impression he had captured reality, the de facto implementation had already moved on to a different place.

To escape this dilemma, and with growing support for ObjectPedia in the offing, Ian decides to publish the landscape map to the SSP and open it for comments, corrections, amendments, and evaluations by the IT crowd. A realization of this idea is sketched in Figure 8-10.

Figure 8-10 Ian’s landscape map in the architecture SSP.

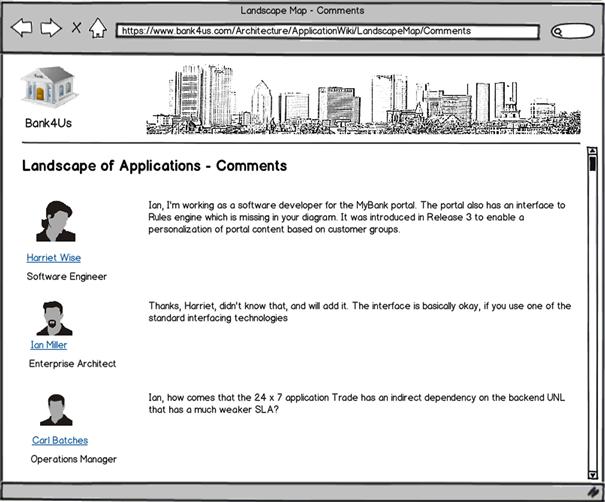

The site has some standard functions of an SSP: tag clouds to facilitate searching, feeds to raise the awareness of changes, and usefulness ratings to ease the regular cleanup. But the treasure is in the content-related comments that Ian harvests in a corresponding subpage (see Figure 8-11).

Figure 8-11 Comments on Ian’s landscape map.

The inaccuracy pointed out by software engineer Harriet and the service-level concern discovered by system operator Carl are exactly the kind of flaws that would probably not have been discovered without the SSP. Ian knew Carl, so Carl might have stated his concern in an ordinary review of the architecture specification. But Ian had no relation to Harriet; she was one of the so-called potential ties connected by the SSP.

So far, so good—but this site has shortcomings:

• The drafting of the landscape map still remains a solitary effort and invites participation only with hindsight.

• The review of the map is an additional burden to the project teams. In addition to the documents they have to provide for an architecture conformance review (ACR), they are now called to find flaws in Ian’s filings. This breaks the maxim that Enterprise 2.0 platforms must be put “into the flow.”

• Because the development process does not mandate review of the landscape map, the discovery of flaws is a matter of luck.

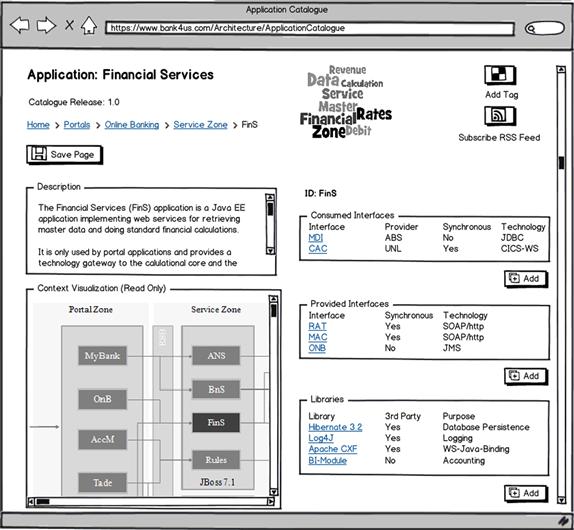

Hence, Ian would like to take one step further in the direction of a self-organizing application catalogue. The project teams are currently submitting their input to the ACR on Excel sheets. They could as well use an SSP site like the one shown in Figure 8-12.

Figure 8-12 The application catalogue site.

The site provides a template in which project architects can fill in the details about their applications. The landscape map is then generated from this input without further manual work.
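How a map might be derived from such template input can be sketched in a few lines. This is a minimal illustration under our own assumptions, not a description of the Bank4Us site: the template fields (`name`, `domain`, `calls`) and the application names are invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogueEntry:
    """One filled-in template; the field names are illustrative assumptions."""
    name: str
    domain: str
    calls: list = field(default_factory=list)  # names of applications it calls


def build_landscape_map(entries):
    """Derive nodes, interface edges, and dangling references from the entries."""
    known = {entry.name for entry in entries}
    edges = sorted((entry.name, target) for entry in entries for target in entry.calls)
    # Interfaces to applications nobody has catalogued yet reveal gaps in the input.
    dangling = sorted({target for _, target in edges if target not in known})
    return {"nodes": sorted(known), "edges": edges, "dangling": dangling}


entries = [
    CatalogueEntry("PortalApp", "online banking", ["TradingApp", "CustomerDB"]),
    CatalogueEntry("TradingApp", "trading", ["CustomerDB"]),
]
landscape = build_landscape_map(entries)
for source, target in landscape["edges"]:
    print(f"{source} -> {target}")
print("not yet catalogued:", landscape["dangling"])
```

Because the map is recomputed from the entries, an interface that points to an uncatalogued application shows up immediately as a gap instead of silently aging in a hand-drawn diagram.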

Meanwhile, Ian starts appreciating emergent models and wonders whether self-organization could also answer a notorious meta-problem of templates like the one shown in Figure 8-12. Such templates incorporate the taxonomy of enterprise architecture, for example, the EA understanding of the concept “application.” But the application domains differ and the technologies are diverse, so a template that makes sense to all is a least common denominator conveying just meager information.

Ian started his career as a software developer in the data warehouse (DW) domain, and he implemented ETL (extract, transform, load) flows by means of Perl and Structured Query Language (SQL). At that time, Common Object Request Broker Architecture (CORBA) was the ruling hype technology, and all architecture documentation standards at Bank4Us were targeted at CORBA applications. The templates were all about components, implementation-independent interfaces, IDL (Interface Definition Language) specifications, stubs, skeletons, and interface operations. Ian remembers how awkward it was to express his file- and database-centric mass data flows in these terms. It also felt as though his well-thought-out, useful flows were something dirty or half-legal.

From reviewing the input the project teams submit to the ACR, Ian got the impression that many project architects are scratching their heads as they fill in the templates. They do not feel at home in the taxonomy designed by the EA team. What if this taxonomy could also be emergent and he could invite the project architects to shape it according to their views on the subject?

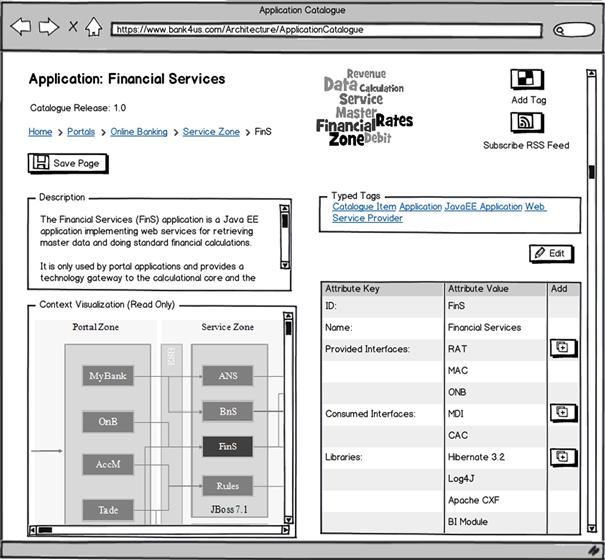

Recent research conducted at the Technical University of Munich gives an idea of what such an emergent taxonomy could look like (Buckle et al., 2010).15 The research group Software Engineering for Business Information Systems (SEBIS) promotes hybrid wikis as a tool for emergent meta-models. Hybrid wikis are not completely structureless like the ordinary tabula rasa wikis, but they also are not rigidly prestructured by templates, as in the case of Ian’s application catalogue site. Their structure is left to self-organization, and the taxonomy emerges while users are editing contents. Typed tags are the method of choice for achieving this functionality. These tags are not simply strings, as in the case of ordinary tags, but key-value pairs. An example is shown in Figure 8-13.

Figure 8-13 The application catalogue as a hybrid wiki.

The application FinS is tagged with several typed tags—for example, with CatalogueItem and JavaEE. Therefore, FinS inherits the simple attributes ID and Name from CatalogueItem and the multivalued attribute Libraries from JavaEE. As usual with tags, users can create their own typed tags while editing contents in the HybridWiki. An author in charge of some ETL flow would not pick the JavaEE tag but could annotate the flow with a new typed tag. This tag would be specific to ETL flows and list the target database tables and shared stored procedures being accessed. After creation, it would be available to other authors, too.
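The inheritance of attributes from typed tags can be sketched as follows. The sketch is our own simplification and does not reproduce the data model or API of any actual hybrid wiki; the classes `TypedTag` and `WikiPage`, the `ETLFlow` tag with its attribute names, and the library values are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class TypedTag:
    """A tag that contributes attribute definitions to every page it is put on."""
    name: str
    attributes: tuple  # pairs of (attribute name, is_multivalued)


@dataclass
class WikiPage:
    title: str
    tags: list = field(default_factory=list)
    values: dict = field(default_factory=dict)

    def schema(self):
        # The page's attributes are the union of what its typed tags contribute.
        merged = {}
        for tag in self.tags:
            merged.update(dict(tag.attributes))
        return merged

    def set_value(self, attribute, value):
        schema = self.schema()
        if attribute not in schema:
            raise KeyError(f"{attribute} is not defined by any tag on {self.title}")
        if schema[attribute]:  # multivalued attributes collect a list of values
            self.values.setdefault(attribute, []).append(value)
        else:
            self.values[attribute] = value


catalogue_item = TypedTag("CatalogueItem", (("ID", False), ("Name", False)))
java_ee = TypedTag("JavaEE", (("Libraries", True),))
# A typed tag an ETL author might create on the fly (attribute names invented).
etl_flow = TypedTag("ETLFlow", (("TargetTables", True), ("StoredProcedures", True)))

fins = WikiPage("FinS", tags=[catalogue_item, java_ee])
fins.set_value("Name", "FinS")
fins.set_value("Libraries", "lib-a")
fins.set_value("Libraries", "lib-b")
print(sorted(fins.schema()))  # ['ID', 'Libraries', 'Name']
```

Nothing in the page itself fixes its structure: adding or removing a typed tag changes the attributes the page offers, which is how the taxonomy can grow while users edit.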

The SEBIS research group distributes an open source hybrid wiki called Tricia. The video recording at (SEBIS, 2012) nicely demonstrates how a meta-model emerges on the fly while users are editing contents with this SSP. The researchers also discovered EA as an application domain for hybrid wikis and founded the industry collaboration Wiki4EAM to proceed in this direction. It will be interesting to follow their work.

Summing it up: Assessment by the EA Dashboard

Throughout this section we’ve come across blueprint examples of how Bank4Us embarks on Building Block 4, Participation in knowledge. The tools range from straightforward ones, like Oscar Salgado’s Strategy blog, to rather futuristic means, like Ian Miller’s vision of a hybrid wiki application catalogue.

The addressees of the given examples are the IT crowd, but knowledge sharing is certainly not confined to this target group. Ian’s application landscape, for example, can easily be extended by forum elements to a collaboratively written user manual that invites users to share their best practices and tricks. We don’t have to dwell on this; today half of the user knowledge about publicly available software is not in the authoritative manuals but instead written by the users themselves.

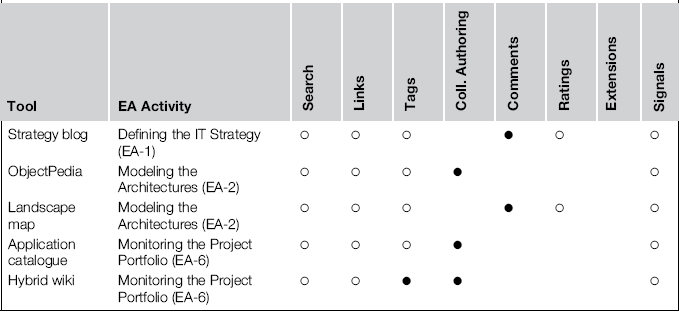

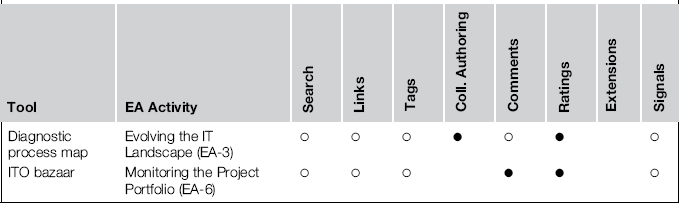

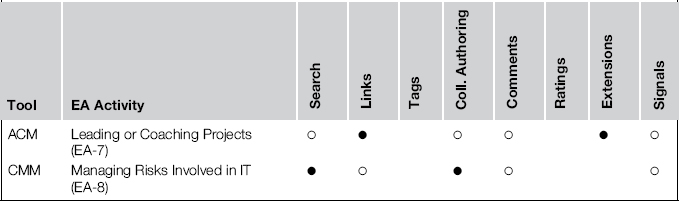

The examples support various EA activities (often even more than one) and apply several techniques of social software platforms (SSPs); Table 8-1 summarizes these.

Table 8-1 Building Block 4: Supported Activities and Employed Techniques

In this table, a solid bullet marks the most crucial techniques, whereas a hollow bullet denotes a feature that plays a role but is not key. Nevertheless, the general, ubiquitous functions of an SSP should not be underestimated. The ability to connect people and make the network participants aware of what others are thinking and doing is presumably a paramount benefit of an SSP. Only the landscape map explicitly highlighted this benefit, but it is implicit in the other examples, too.

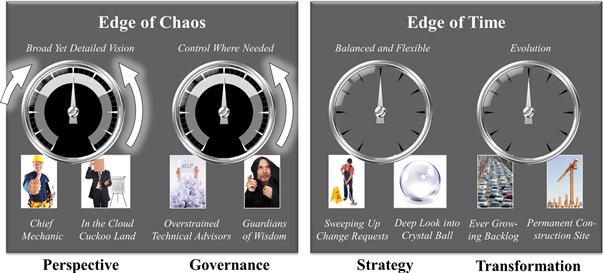

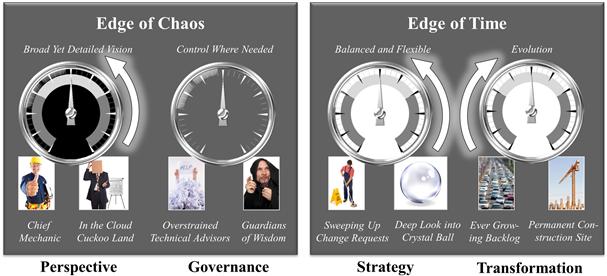

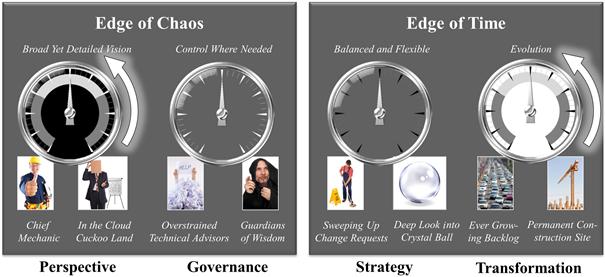

What more specific benefits can we expect for EA? Figure 8-14 measures the expected value of Building Block 4 on our EA Dashboard.

Figure 8-14 The EA Dashboard for Building Block 4, Participation in knowledge.

Since Building Block 4 is about knowledge but not about taking action, the expected impact is on the order-chaos dichotomy but not on the timeline.

Tools like ObjectPedia and the landscape map are dedicated to guiding EA out of “Cloud Cuckoo Land.” Their very essence is to meet implementation realities and local knowledge on the ground. The Strategy blog, on the other hand, demonstrates that EA 2.0 tools can also help propagate business concerns and visions among the IT crowd. The Perspective gauge therefore deserves arrows in both directions but with a stronger emphasis on getting our feet back on the ground.

ObjectPedia was initially designed to overcome a governance bottleneck: the solitary top-down definition of a data model by EA. The application catalogue and its futuristic twin, the hybrid wiki, exemplify how the mode of documentation obligations can change from top-down examinations to a more self-responsible authorship of projects. Hence, we expect that Building Block 4 can help the guardians of wisdom weaken the grip.

Building Block 5: Participation in decisions

The crucial question in this section is, how can enterprise architecture benefit from broader participation in finding future directions and taking decisions? Can the wisdom of crowds also improve decision making in EA?

In thinking about this question, one can easily lose footing. What we’re after is a realistic answer against the background of today’s enterprise constitutions. There’s no use in chasing butterflies in utopia: Grassroots democracy is not a model for companies. Which application server software we choose to use will not be a subject of general elections. Enterprise architects won’t form political parties and fight to win the votes for a particular target IT landscape.

Sometimes it is striking how close the work of an enterprise architect in a federated, “political” enterprise can come to such a mode. Nevertheless, it is more a fight for the consent and support of those with budget and influence; applause from the ground workers is neither necessary nor sufficient.

Democracy is marvelous because it respects the autonomy of the individual, not because it is particularly effective in making the right decisions. Unlike society as a whole, an enterprise is, as the name indicates, an organization pursuing particular business goals, and the autonomy of the employees is not its primary concern. Unless this changes, democracy also is not the only rational choice for enterprise constitutions. Hence, we’re not calling for democratic subversion with Building Block 5. Rather, our focus is on providing more and broader input into the decision-making process.

Today we are used to basing our decisions on the opinions and knowledge of Internet social networks. The orientation in terms of what friends, colleagues, neighbors, or relatives do and cherish has always been a trait in decision making, but the advent of social software platforms broadened the scope beyond the strong ties we formerly took into account. The decision to buy a book or a car or even to look for a new job is influenced by the comments, ratings, and cross-links we search for on the Internet. And usually we’re better off with it than with only reading one article written by a profound expert. Why should this day-to-day experience be wrong in enterprise architecture?

The diagnostic process landscape

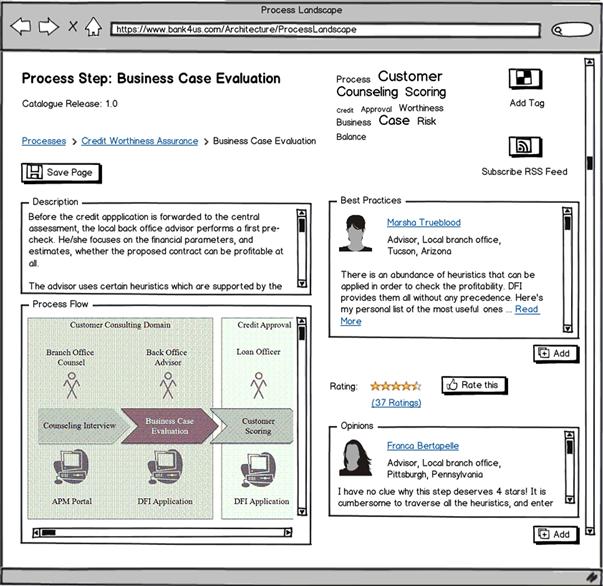

Bank4Us in the meantime has taken further steps toward Enterprise 2.0. The social software platform (SSP) launched by the EA group is now applied beyond the bounds of the IT crowd, too. The extensive office manuals giving instructions on how to perform activity X of process Y have been accompanied by a forum, as shown in Figure 8-15.

Figure 8-15 The process landscape forum.

The forum is gathering best practices from daily work; it is a self-written user manual. The user contributions arrange themselves around the process landscape model of EA, which works as a crystallization point for this knowledge. All users directly or indirectly involved in a process can post advice. The motives for authoring are varied: Some authors just want to help other poor strugglers, some recognize the SSP as a valuable source of information and want to pass knowledge back in return, whereas others enjoy being regarded as old hands and advisors in their specific areas.

Furthermore, it feels good that the SSP to some extent makes the practitioners owners of the process. This in particular holds true for the rating widget, which is also the interesting part with regard to choosing future directions and making decisions. It collects the pain points from those who feel the pain.

Franca Bertapelle, for example, a local advisor at the branch office in Pittsburgh, rates the business case evaluation process step (a customer applies for a loan, and Franca needs to qualify the case) quite low. She complains that she has to apply several heuristics to assess the profitability of a credit application, but there are no means to transfer data from one heuristic to the next, which implies that she has to type the same data again.

The idea for this rating and the “Have your say” box did not come from the EA group but from the users. Nevertheless, it has become an important diagnostic tool in activity EA-3, “Evolving the IT landscape.” In assessing the sanity of processes or applications, the EA group still collects input from experts, but they never draw conclusions without taking a look at what the users report. If the user ratings suggest that a process is cumbersome and poorly supported by IT, an expert’s praise for it will be taken with a grain of salt.

The diagnostic process landscape is a straightforward quick win with Enterprise 2.0. The next example, however, is more subtle and not only prepares decisions by gathering information but drives them to a result.

The bazaar of IT opportunities

Given the right circumstances, crowds are good not only at assessing present things but also at prognosticating the future. One of the most famous research projects in this direction is the Iowa Electronic Markets (IEM) project conducted by the Henry B. Tippie College of Business at the University of Iowa (Surowiecki, 2005, p. 40). The project started in 1988 and is still under way. IEM is an online, not-for-profit trading market where traders can trade prospects with real (but relatively small) amounts of money.17 Prospects are statements about a future course of events, for example:

• P1: The current German chancellor will be reelected next year: US$1

• P2: The Democrats win the US presidential elections next year

Traders can buy, for example, shares of P1, and if the German chancellor in fact wins the elections one year later, they receive US$1 as revenue per share. The price of the shares depends on the market supply and demand: If the price of a P1 share is 52 cents, IEM prognosticates a 52% probability that the current chancellor will win. The second prospect, P2, is more fine-grained: The revenue depends on the percentage of votes the Democrats win. Assume they attain 56%; then the owner of a P2 share receives 56 cents in return. A price of 48 cents per share is therefore interpreted as a prognosis that the Democrats will win 48% of the votes.
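The arithmetic behind the two kinds of prospects can be written down in a few lines. The function names are ours, amounts are in US cents, and the share counts are made up; the prices and the 56% result are the ones used in the text.

```python
def implied_probability(price_cents, payout_cents=100):
    """Winner-takes-all prospect (like P1): price / payout is read as a probability."""
    return price_cents / payout_cents


def winner_takes_all_payout(shares, event_happened, payout_cents=100):
    """Each share pays the full payout if the event occurs, otherwise nothing."""
    return shares * payout_cents if event_happened else 0


def vote_share_payout(shares, vote_share_percent):
    """Vote-share prospect (like P2): each share pays one cent per percentage point."""
    return shares * vote_share_percent


# P1 trades at 52 cents: the market reads this as a 52% chance of reelection.
print(implied_probability(52))                       # 0.52
# Ten P1 shares bought at 52 cents, chancellor reelected: 1000 - 520 cents profit.
print(winner_takes_all_payout(10, True) - 10 * 52)   # 480
# Ten P2 shares bought at 48 cents, Democrats reach 56%: 560 - 480 cents profit.
print(vote_share_payout(10, 56) - 10 * 48)           # 80
```

In both cases a trader profits only by being more accurate than the current price, which is what gives the market its incentive for honest estimates.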

The IEM frequently outperforms the survey-based prognosis of accredited statistical institutes—presumably because the traders pay more attention to it than to a nonbinding survey and the market mechanism has more self-correction capabilities than the plain summation of polled opinions.

Two items that frequently need to be prognosticated in IT are the effort and the business value of a solution alternative. Effort estimation finds support in a rich toolbox of techniques,18 but business value estimation is still in its infancy. Big strategic IT initiatives usually undergo a business case assessment that puts a number on their expected value. But for so-called IT opportunities, such an approach causes too much effort or is impossible in principle due to a lack of predictability.

The term IT opportunity (ITO) was coined by Broadbent and Kitzis (2005) and denotes the bag of ideas and bleeding-edge innovations complementing the big strategic initiatives. IT opportunities are typically risky with regard to the expected outcome or the technology. They are the wildest among the Wild Cats in the Ward-Peppard square (see the section “Assessing Applications”).

Broadbent and Kitzis recommend designing a management process for the highly volatile bag of ITOs in parallel with strategic planning. The process should be shaped like a funnel with a broad entry for gathering the rich plenty of ideas but an early and rigid cutting down to the really smart ones. What would be more suitable than a marketplace for implementing this funnel? Folks can easily place an offer, but if it doesn’t sell, it’s out.

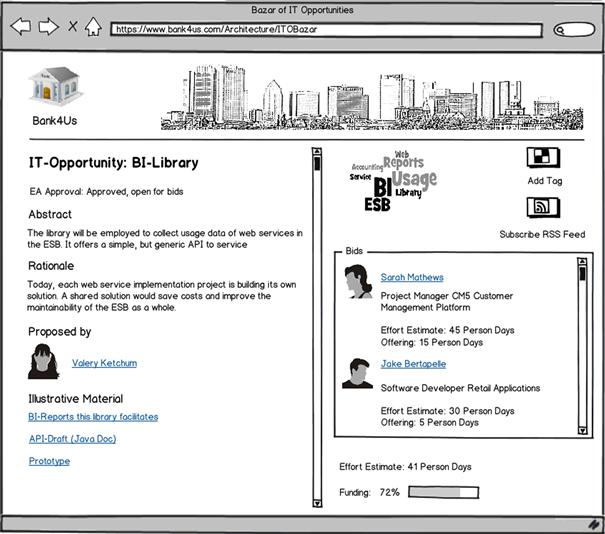

Padma Valluri, the enterprise architect at Bank4Us who focuses on EA processes, finds marketplaces like IEM highly inspiring. Together with Leon Campista, the Enterprise 2.0 evangelist, she proposes the idea of an ITO bazaar and eventually convinces the EA group and management to furnish it in the EA social software platform (SSP). The prototype Leon helped her set up is shown in Figure 8-16.

Figure 8-16 First bids in the ITO bazaar.

The site again shows many basic functions of an SSP: tags, RSS feeds, links to people and further assets, and the possibility of leaving suggestions. But its most remarkable feature is the bidding widget on the right side. There people can bet how much effort is involved in the realization of the ITO and, as an option, can also offer a certain amount of person days they are willing to contribute to the solution.

Betting on the effort is just a game. People who come close to the de facto effort (determined after the implementation) are rewarded with a corresponding number of points that can be converted into money in an online store.

Offering a certain amount of work time, however, is more serious. Bidders are responsible for ensuring that, if the ITO indeed becomes a project, they can fulfill their offering. Project managers like Sarah Mathews should ensure that their teams have the needed capacity, and individual software developers like Jake Bertapelle should agree with their supervisors to be exempt from other duties to the extent needed.

There is no automation behind the ITO bazaar that kicks off projects. If an ITO gains sufficient interest, meaning that the offered person days add up to more than 100% of the estimated effort, the bidders are invited to a meeting with the project portfolio management. The meeting serves to check that the preconditions for a kickoff are met and, in particular, that all bidders can deliver their shares.
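The interest threshold can be made concrete with a short sketch. One assumption needs to be stated loudly: the text does not say how the individual effort bets are condensed into one estimate, so the median used here is our choice for illustration. The function names and all numbers are made up.

```python
from statistics import median


def interest_ratio(effort_bets, offered_person_days):
    """Offered person days divided by the crowd's effort estimate.

    Assumption: the estimate is the median of all effort bets; how Bank4Us
    aggregates the bets is not specified.
    """
    estimated_effort = median(effort_bets)
    return sum(offered_person_days) / estimated_effort


def ready_for_portfolio_meeting(effort_bets, offered_person_days):
    # "Sufficient interest" means the ratio exceeds 100%.
    return interest_ratio(effort_bets, offered_person_days) > 1.0


effort_bets = [40, 50, 60]  # person days, made-up numbers
offers = [30, 15, 10]       # person days offered by bidders, made-up numbers
print(interest_ratio(effort_bets, offers))               # 1.1
print(ready_for_portfolio_meeting(effort_bets, offers))  # True
```

Crossing the threshold only triggers the invitation to the meeting; the decision to kick off a project remains with the people in the room.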

This bidding mechanism helps estimate the true business value of an ITO: Only if there is a need for the proposed solution, and sufficient faith in the success of the idea, does the ITO get funded. Furthermore, the mechanism mitigates a chicken-race problem related to the funding of shared, cross-unit software components: In the past, the first unit to jump on such a component had to pay the price all alone.

There was a saying at Bank4Us that “The first guy who wants to swim has to pay for the swimming pool,” an attitude that is a brake on both innovation and cross-unit collaboration. The bidding mechanism puts an end to this idea by bargaining a fair sharing of the efforts.

The ITO bazaar is open to the IT crowd but also to others with sparkling ideas about the profitable exploitation of information technology. The filings therefore range from rather down-to-earth, technology-driven submissions like the one shown in Figure 8-16 to high-flying exciters. But this variety is put under EA governance: Each ITO needs approval from the EA group before it is open for trading. The reasons for erecting such a quality gate are:

• Filings contradicting strategic maxims, the future application landscape, or the technology reference model are likely to be rejected. This is to ensure that the ITOs do not head in a different direction from the non-ITO initiatives planned by the strategy board.

• Filings that are sheer nonsense or rather naïve with regard to technical feasibility are sifted out. Insignificant ideas are also sieved so that the bazaar does not dissipate energies in tiny code writings here and there.

Whether such a quality gate should be erected was the subject of fierce discussion at Bank4Us. Some opponents believed it endangers the free development of the market (the discussions showed the usual traits of debates about market regulation). The convincing argument in favor of such a gate was a look at the governance of open source software programs: They also employ quality sieves to prevent the essence of the program from being eroded by nonfitting projects or the grooming of hobbyhorses.19

Experience will show whether the “business model” of the ITO bazaar described above will work out. This model is the essence of the ITO bazaar, and its functioning needs more attention and care than mere tooling. The responsible parties at Bank4Us therefore decided to set up frequent monitoring and optimization of the trading rules.

Summing it up: Assessment by the EA Dashboard

Building Block 5, Participation in decisions, applies the principles and techniques of Enterprise 2.0 to choosing future directions and making decisions in connection with EA. We have seen two examples from Bank4Us of how this looks in practice:

• The rather straightforward diagnostic process landscape exemplified how key performance indicators regarding IT can be polled from a wider crowd of IT stakeholders. The diversity of viewpoints captured by such a poll can counterbalance the tunnel vision experts sometimes fall victim to.

• The more subtle ITO bazaar provides an innovative answer to how IT opportunities should be managed. The trading mechanism on one hand prognosticates the business value of solutions and on the other solves a problem with the funding of shared software components.

The EA activities and SSP techniques of these examples are summarized in Table 8-2.20

Table 8-2 Building Block 5: Supported Activities and Employed Techniques

In summary, what do we expect from Building Block 5 for EA? Figure 8-17 measures the expected impact by the EA Dashboard.

Figure 8-17 The EA Dashboard for Building Block 5, Participation in decisions.

The essence of participating in decisions is giving the crowd a voice in identifying where there is need for action and in exploring alternatives. We can therefore expect it to set the perspective right when that perspective has lost contact with ground-level realities (Cloud Cuckoo Land). Furthermore, the building block is an antidote against tunnel vision in strategy, a single faraway vision that is mostly based on the beliefs and wishes of the top brass. The reality check provided by the SSP may draw EA away from its hypnotic fixation on the crystal ball and uncover additional tracks worth following. Finally, participating in decisions establishes a funnel for ideas and innovations, as the ITO bazaar demonstrates. It can therefore help us escape from a congealed state that anxiously focuses on keeping the existing system running; hence, the transformation gauge rises, too.

Building Block 6: Participation in transformation

The previous two building blocks provided good reason to assert that EA 2.0 techniques spur on knowledge sharing and organizational learning and are valuable tools in preparing to make and actually making decisions. But how can they help when you eventually go hands-on?

Building Block 6 addresses transformation: implementing change up to deploying and operating the next release of the IT landscape. Transformation in a sense is an ultimate practical test of EA: How well EA guides projects and eventually realizes the target landscape is one of its most important success criteria. What can Enterprise 2.0 contribute to the effectiveness of EA in the transformation phase? This is the question we explore in the following section.

Mashing up the architecture continuum

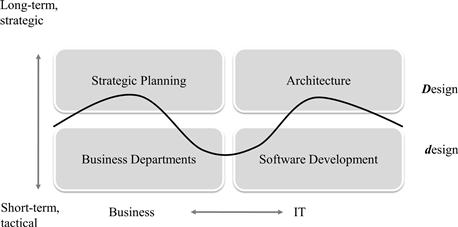

Enterprise architectures are not monoliths; they are mosaics composed of architectures designed by different owners. In Chapter 7 we came across a classification of architecture types by granularity into strategic, segment, and capability architectures. The architectures of this classification are nested according to the dimensions of time horizon, subject matter, and level of detail. They range from long-term, far-reaching, and high-level strategic architectures to short-term, special, and detailed capability architectures. Furthermore, these architectures are usually crafted by different teams of architects.

Beneath the capability architectures, we even find another layer of architectures made up of the daily design decisions of development teams and therefore yet another team contributing to the overall picture. This daily hands-on layer is not irrelevant. During the 2010 conference of The Open Group in Amsterdam, Len Fehskens, vice president of this organization, asked where the borderline between architectural Design (with a capital D) and design (with a small d) actually is. This line is indeed difficult to draw when adopting a definition of Design such as Booch’s explanation of architecture, “[…] the significant design decisions that shape a system, where significant is measured by cost of change” (Booch, 2006). On this ground, the borderline presumably meanders across the teams, as shown in Figure 8-18.

Figure 8-18 The borderline between Design and design.

The assumption that design decisions on a lower layer can be revised at low cost turns out to be wrong in practice: The innocent decision for a certain primary key in some database table design can freeze change in some wider application domain for years.21

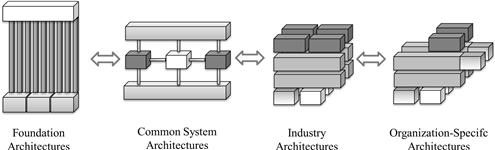

Another rationale for continuity is reuse. The Open Group has conceptualized the vision of what they call the architecture continuum. It is a subdivision of the enterprise architecture according to generality. Figure 8-19 depicts the four divisional classes.22

Figure 8-19 The Open Group’s architecture continuum.

The flow from left to right goes from general to special, from technology focused to business focused, and from logical to physical.

• Foundation architectures are the most generic type of architecture. They typically consist of a general taxonomy and a frame of functional domains. The TOGAF Technical Reference Model (TRM) is an example of foundation architecture (The Open Group, 2009, p. 575 ff).

• Common system architectures are one step more specific and typically specify building blocks for particular aspects—for instance, security, system management, or networking services. The TOGAF Integrated Information Infrastructure Reference Model (III-RM) is an example of a common system architecture that addresses several aspects centered on the vision of a boundary-less, enterprise-wide information flow (The Open Group, 2009, p. 607 ff).

• Industry architectures specify building blocks for particular verticals. They add branch specifics on top of the two previous architectures. Examples are the telecom NGOSS23 architecture and the finance BIAN.24

• Organization-specific architectures are the most specific architectures that structure the IT landscape of an individual enterprise.

The more specific architectures on the right reuse the more generic ones on the left. They either use the capabilities of general building blocks (for instance, the functionality of a general single sign-on security solution) or are embedded in a more general frame (for instance, as concrete SOA service providers in the service landscape of BIAN).
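The direction of reuse along the continuum can be expressed as an ordering of the four classes. The sketch below is only an illustration: the enum and the function name `may_reuse` are assumptions, and whether reuse within the same class should also be allowed is a modeling choice that the text leaves open.

```python
from enum import IntEnum

class Generality(IntEnum):
    """The four classes of the architecture continuum, from general to specific."""
    FOUNDATION = 1
    COMMON_SYSTEM = 2
    INDUSTRY = 3
    ORGANIZATION_SPECIFIC = 4

def may_reuse(user: Generality, provider: Generality) -> bool:
    """True if `user` is more specific than `provider` (reuse flows right to left)."""
    return user > provider
```

With this ordering, an organization-specific architecture may reuse an industry architecture such as BIAN, but not the other way around.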

The two architecture classifications, by generality and by granularity, are different but not entirely orthogonal: The foundational architectures typically belong to the strategic class, just to name one correlation. Nevertheless, there are strategic elements in the organization-specific architectures as well as quite detailed capability architectures among the common system architectures, so the two classifications are founded on largely independent dimensions.

Common to both classifications is a rich network of links that exist between the different architectures—and this eventually is where Web 2.0 comes into play. The design principle of remixability and the focus on linking and backtracking content provide the ideal ground for mashing up a true architecture continuum. Web 2.0 renders possible an architecture knowledge repository that is consistently navigable across multiple dimensions, integrates data and models from various sources, and facilitates a continuous flow from high-level strategic plans to code design or from standards to custom solutions.

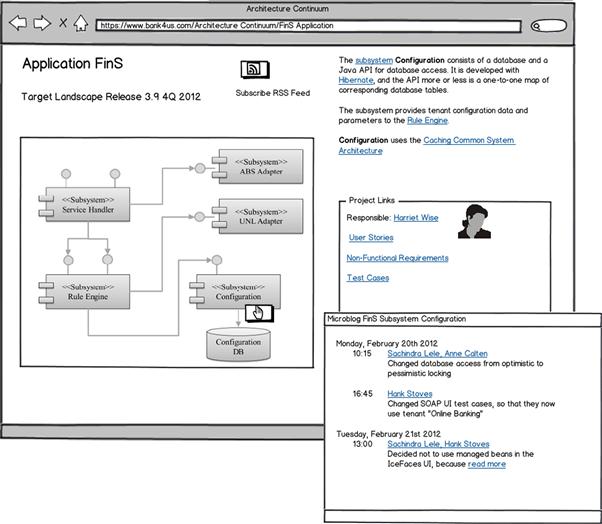

Padma Valluri, the lean evangelist among the enterprise architects, is excited by this vision set forth by her EA 2.0 counterpart, Leon Campista. She is convinced that a continuous flow of architecture design across strategic, segment, and capability teams down to code design (with a small d) cannot be achieved with plain old paper documentation. But this flow is at the heart of the EA Kanban and is a prerequisite for a continuous integration of designs. Padma therefore supports Leon’s struggle for some time and budget that would allow Leon and his helping hands to prototype the idea sketched in Figures 8-20 and 8-21.

Figure 8-20 The application landscape mashup.

Figure 8-21 The application-level mashup and microblog.

The prototype’s first page shows a map of the application landscape mashed up with information stemming from various sources. The map serves as a navigational component—a mouse click on an application rectangle, an interface bubble, or a dependency arrow brings up related information in the other widgets of the page. The information these widgets display depends on the selected item and whether it is an application, an interface, or a dependency. For an application, the linked-in information is about system management, operating environments, the BIAN service area the application is mapped to, and other items worth knowing. An interface, on the other hand, is displayed with a different set of items.
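The selection behavior described above amounts to a lookup from the kind of landscape item to the set of widgets to refresh. The following sketch assumes hypothetical widget names; only the application entries are named in the text, and the entries for interfaces and dependencies are invented placeholders.

```python
# Widget sets per item kind. Only the "application" entries come from the
# text; the other two lists are assumptions for illustration.
WIDGETS_BY_KIND = {
    "application": ["system management", "operating environments", "BIAN service area"],
    "interface": ["protocol", "provider", "consumers"],
    "dependency": ["source", "target", "criticality"],
}

def widgets_for(item: dict) -> list[str]:
    """Return the widgets to refresh when `item` is clicked on the map."""
    kind = item["kind"]
    if kind not in WIDGETS_BY_KIND:
        raise ValueError(f"unknown landscape item kind: {kind!r}")
    return WIDGETS_BY_KIND[kind]
```

Keeping the mapping in data rather than in branching code makes it easy to add a new item kind or widget without touching the navigation logic.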

The links highlighted in Figure 8-20 are pointers to more generic architectures. They either point to entire pages—the System Management link to the Tivoli Monitoring common system architecture is such a case—or to data items provided by the higher-level architectures, as in the case of the BIAN combo box that displays the service areas available in this industry-specific architecture.

While these navigation links are paths from specific to more general architectures, a different kind of magic happens when we double-click on the FinS application rectangle: The SSP drills into a deeper level of detail. Figure 8-21 shows the target page, the decomposition of FinS into subsystems, which is the highest application-internal part of Kruchten’s Logical View25 on the software architecture.

The logical view is mashed up with links to more generic architecture building blocks—for instance, with a link to a common database caching architecture. Double-clicking on the subsystem rectangle, on the other hand, zooms deeper into the inner structure, eventually down to class diagrams, scenarios, pages with HTML-formatted code headers, and so on.

In addition, there are links into requirements management and quality assurance. They direct the user to requirements and test cases pertinent to the subsystem that is currently in focus. These navigation paths connect the architecture SSP with other knowledge islands of a transformation program.

Leon’s plans also include microblogging as a means to capture the daily design decisions and changes. The postings are grouped by software subsystem. Here is an example: While pair programming, Sachindra Lele and Anne Calten (two developers) decide to switch database access from optimistic to pessimistic transactional locking and report this decision to the microblog.

This can be a useful piece of information some days later if a database deadlock or lock timeout suddenly occurs in the next round of load testing. Multiple channels are open to this microblog: the SCM26 system, a standalone desktop or Web user interface, SMS, a mobile app, or whatever else is used in daily work.
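A microblog of this kind needs little more than postings keyed by subsystem and a way to query recent entries. The sketch below is a hypothetical data model: the class names, the channel labels, the subsystem name "persistence", and the timestamp are assumptions, not details of Leon's prototype.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class Posting:
    subsystem: str
    authors: tuple[str, ...]
    text: str
    posted_at: datetime
    channel: str = "web"   # assumed labels, e.g. "scm", "sms", "mobile"

class DesignMicroblog:
    """Keeps design-decision postings grouped by software subsystem."""

    def __init__(self) -> None:
        self._by_subsystem: dict[str, list[Posting]] = defaultdict(list)

    def post(self, posting: Posting) -> None:
        self._by_subsystem[posting.subsystem].append(posting)

    def recent(self, subsystem: str, since: datetime) -> list[Posting]:
        """Return postings for `subsystem` made at or after `since`."""
        return [p for p in self._by_subsystem.get(subsystem, []) if p.posted_at >= since]

# Usage with the example from the text (subsystem and date are invented).
blog = DesignMicroblog()
blog.post(Posting(
    subsystem="persistence",
    authors=("Sachindra Lele", "Anne Calten"),
    text="Switched database access from optimistic to pessimistic locking.",
    posted_at=datetime(2012, 3, 5, 14, 30),
    channel="scm",
))
```

When a lock timeout shows up in load testing, a call such as `blog.recent("persistence", since=...)` would surface the locking change as a candidate cause.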

The benefits that Leon and Padma list to promote the architecture continuum mashup (ACM) idea are:

• The ACM enforces referential integrity between the architectures and therefore a stringent modeling of architectures from top down to coding and from generic building blocks to highly specific ones.

• The ACM makes people aware of what architecture building blocks there already are and therefore fosters reuse.

• The ACM is a knowledge repository that makes the transformational paths visible and navigable and therefore is a means to monitor and control the transformation. Wrong directions and frictions can be spotted more easily.

• The ACM is a tool for collaborating on working copies of architectures and therefore makes possible the EA Kanban vision of just-in-time (JIT) modeling and of a steady flow of design from top to bottom.

• The ACM closes gaps between knowledge islands of a transformation program and therefore helps in making the left hand know what the right hand is doing.
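The referential integrity named in the first bullet can be illustrated with a check for links that point to building blocks missing from the repository. This is a sketch under stated assumptions: the repository is reduced to a dictionary of link targets, and the entries, including the dangling "Legacy SSO" target, are invented for illustration.

```python
# Hypothetical repository content: building block id -> ids it links to.
links = {
    "FinS": ["BIAN service area", "Tivoli Monitoring", "DB caching"],
    "FinS/subsystem-A": ["DB caching", "Legacy SSO"],
    "BIAN service area": [],
    "Tivoli Monitoring": [],
    "DB caching": [],
}

def dangling_links(links: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Return (source, target) pairs whose target is not in the repository."""
    known = set(links)
    return [
        (source, target)
        for source, targets in links.items()
        for target in targets
        if target not in known
    ]

broken = dangling_links(links)
```

Here `broken` contains the single pair `("FinS/subsystem-A", "Legacy SSO")`, which a real repository could either reject on save or flag for review.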

Leon’s microblog is a ground-level example illustrating the last bullet quite well. The next section’s Bank4Us case, however, comes up with an even more thrilling story of how Enterprise 2.0 can bridge gaps between knowledge islands.

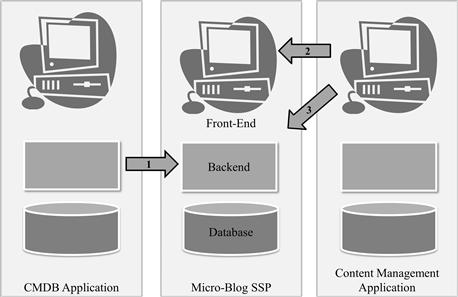

The change management microblog

Knowledge islands can be fatal. One of the most well-known examples is the failure of the US intelligence community to spot the 9/11 attacks in advance. “In the months preceding 9/11 troubling signs were apparent to intelligence agents and analysts throughout the world,” writes McAfee (2006b, p. 31). But there were 16 agencies making up the US intelligence community, and they shared information reluctantly (if at all) and ineffectively. Furthermore, the prevalent safety culture in these agencies was “need-to-know”—do not let people know more than what they absolutely need to do their duties. Hence, all the knowledge was there, but no one was able to piece the jigsaw together.

In 2011, 10 years after 9/11, a similar case was discovered in Germany. A small group of extremists had committed acts of terror and murdered a still undetermined number of people over a period of 10 years. In retrospect, the units of the highly federated law enforcement agencies had all the bits of information they needed to arrest the group years earlier, but the bits were “safely” isolated and locked away.

Enterprises are not doing better than governmental agencies in this respect: They also fall victim to knowledge islands. The consequences may not be as deadly, particularly when thinking of the comparatively harmless failures in IT, but they nevertheless do harm. When a consultant is helping companies with IT problems, the value the consultant adds often lies not in the additional knowledge she brings but in the fact that she listens to stakeholders from all parties. It sometimes is stunning: All the knowledge is already there, but it needs an external player to put the puzzle together.

Likewise, when tracking an IT failure back to its root causes, one often discovers that the problem could have been avoided if only two or three people had shared their knowledge. A test engineer, under full steam to get a release out the door, manually changes the JVM heap size27 of an application server to get rid of out-of-memory exceptions and kick off functional integration testing.

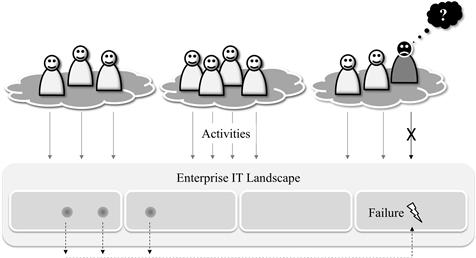

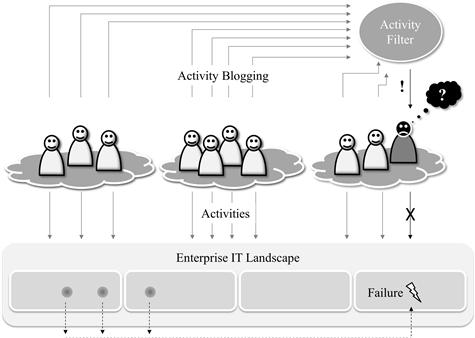

One week later, the same lack of memory rocks the boat when the tested release is being deployed in production, this time with a customer-facing outage that is brought to the attention of the CIO. The information about the change simply got lost in the chain from test engineer via project manager and release coordinator to the administrator of the production server.