Chapter 5

Define Endpoint Types and Operations

API design affects not only the structure of request and response messages, which we covered in Chapter 4, “Pattern Language Introduction.” It is equally, or even more, important to position the API endpoints and their operations within the architecture of the distributed system under construction (the terms endpoints and operations were introduced in the API domain model in Chapter 1, “Application Programming Interface (API) Fundamentals”). If positioning is done without careful thought, in a hurry, or not at all, the resulting API provider implementation is at risk of being hard to scale and maintain when inconsistencies degrade conceptual integrity; API client developers might find it difficult to learn and use the resulting mishmash of APIs.

The architectural patterns in this chapter play a central role in our pattern language. Their purpose is to connect high-level endpoint identification activities with detailed design of operations and message representations. We employ a role- and responsibility-driven approach for this transition. Knowing about the technical roles of API endpoints and the state management responsibilities of their operations allows API designers to justify more detailed decisions later and also helps with runtime API management (for instance, infrastructure capacity planning).

This chapter corresponds to the Define phase of the Align-Define-Design-Refine (ADDR) process outlined in the introduction to Part 2 of the book. You do not have to be familiar with ADDR to be able to apply the patterns in this chapter.

Introduction to API Roles and Responsibilities

Business-level ideation activities often produce collections of candidate API endpoints. Such initial, tentative design artifacts typically start from API design goals expressed as user stories (of various forms), event storming output, or collaboration scenarios [Zimmermann 2021b]. When the API realization starts, these interface candidates have to be defined in more detail. API designers seek an appropriate balance between architectural concerns such as granularity of the services exposed by the API (small and specific vs. large and universal) and degree of coupling between clients and API provider implementations (as low as possible, as high as needed).

The requirements for API design are diverse. As explained previously, goals derived from the business-level activities are a primary source of input, but not the only one; for instance, external governance rules and constraints imposed by existing backend systems have to be taken into account as well. Consequently, the architectural roles of APIs in applications and service ecosystems differ widely. Sometimes, API clients just want to inform the provider about an incident or hand over some data; sometimes, they look for provider-side data to continue their processing. When responding to client requests, providers may simply return a data element already available—or may perform rather complex processing steps (including calls to other APIs). Some of the provider-side processing, whether simple or complex, may change the provider state, some might leave this state untouched. Calls to API operations may or may not be part of complex interaction scenarios and conversations. For instance, long-running business processes such as online shopping and insurance claims management involve complex interactions between multiple parties.

The granularity of the operations varies greatly. Small API operations are easy to write, but there might be many of them, and they have to be composed, with their invocations coordinated over time; a few large API operations may be self-contained and autonomous, but they can be difficult to configure, test, and evolve. The runtime operations management of many small units also differs from that of a few big ones; there is a flexibility versus efficiency trade-off.

API designers have to decide how to give operations a business meaning (this, for instance, is a principle in service-oriented architectures [Zimmermann 2017]). They also have to decide if and how to manage state; an operation might simply return a calculated response but might also have a permanent mutational effect on the provider-side data stores.

In response to these challenges, the patterns in this chapter deal with endpoint and operation semantics in API design and usage. They carve out the architectural role of API endpoints (emphasis on data or activity?) and the responsibilities of operations (read and/or write behavior?).

Challenges and Desired Qualities

The design of endpoints and operations, expressed in the API contract, directly influences the developer experience in terms of function, stability, ease of use, and clarity.

Accuracy: Calling an API rather than implementing its features oneself requires a certain amount of trust that the called operation will deliver correct results reliably; in this context, accuracy means the functional correctness of the API implementation with regard to its contract. Such accuracy certainly helps build trust. Mission-critical functionality deserves particular attention. The more important the correct functioning of a business process and its activities is, the more effort should be spent on their design, development, and operations. Preconditions, invariants, and postconditions of operations in the API contract communicate what clients and providers expect from each other in terms of request and response message content.

Distribution of control and autonomy: The more work is distributed, the more parallel processing and specialization become possible. However, distribution of responsibilities and shared ownership of business process instances require coordination and consensus between API client and provider; integrity guarantees have to be defined, and consistent activity termination must be designed. The smaller and more autonomous an endpoint is, the easier it becomes to rewrite it; however, many small units often have a lot of dependencies among each other, making an isolated rewrite activity risky; think about the specification of pre- and postconditions, end-to-end testing, and compliance management.

Scalability, performance, and availability: Mission-critical APIs and their operations typically have demanding SERVICE LEVEL AGREEMENTS that go along with the API DESCRIPTION. Two examples of mission-critical components are day trading algorithms at a stock exchange and order processing and billing in an online shop. A 24/7 availability requirement is an example of a highly demanding, often unrealistic quality target. Business processes with many concurrent instances, implemented in a distributed fashion involving a large number of API clients and multiple calls to operations, can only be as good as their weakest component in this regard. API clients expect the response times for their operation calls to stay in the same order of magnitude when the number of clients and requests increases. Otherwise, they will start to question the reliability of the API.

Assessing the consequences of failure or unavailability is an analysis and design task in software engineering but also a business leadership and risk management activity. An API design exposing business processes and their activities can ease the recovery from failures but also make it more difficult. For example, APIs might provide compensating operations that undo work done by previous calls to the same API; however, a lack of architectural clarity and request coordination might also lead to inconsistent application state within API clients and providers.

Manageability: While one can design for runtime qualities such as performance, scalability, and availability, only running the system will tell whether API design and implementation are adequate. Monitoring the API and its exposed services is instrumental in determining its adequacy and what can be done to resolve mismatches between stated requirements and observed performance, scalability, and availability. Monitoring supports management disciplines such as fault, configuration, accounting, performance, and security management.

Consistency and atomicity: Business activities should have all-or-nothing semantics; once their execution is complete, the API provider finds itself in a consistent state. However, the execution of the business activity may fail, or clients may choose to explicitly abort or compensate it (here, compensation refers to an application-level undo or other follow-up operation that resets the provider-side application state to a valid state).

Idempotence: Idempotence is another property that influences or even steers the API design. An API operation is idempotent if multiple calls to it (with the same input) return the same output and, for stateful operations, have the same effect on the API state. Idempotence helps deal with communication errors by allowing simple message retransmission.

Auditability: Compliance with the business process model is ensured by audit checks performed by risk management groups in enterprises. All APIs that expose functionality that is subject to audit must support such audits and implement related controls so that it is possible to monitor the execution of business activities with logs that cannot be tampered with. Satisfying audit requirements is a design concern but also influences service management at runtime significantly. The article “Compliance by Design—Bridging the Chasm between Auditors and IT Architects,” for instance, introduces “Completeness, Accuracy, Validity, Restricted Access (CAVR)” compliance controls and suggests how to realize such controls, for instance, in service-oriented architectures [Julisch 2011].
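To illustrate the idempotence quality listed above, the following is a minimal sketch of a state-changing operation that tolerates message retransmission. It assumes that clients attach a unique request ID to each logical request; the class `PaymentEndpoint`, the operation `charge`, and the receipt format are hypothetical names introduced for illustration only.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical provider-side endpoint; all names are illustrative assumptions.
class PaymentEndpoint {
    // Remembers the response that was produced for each request ID.
    private final Map<String, String> processedRequests = new HashMap<>();
    private int balance = 0;

    // Repeated calls with the same requestId return the stored response
    // and do not change the balance a second time.
    synchronized String charge(String requestId, int amount) {
        String previous = processedRequests.get(requestId);
        if (previous != null) {
            return previous; // retransmission: replay the earlier response
        }
        balance += amount;
        String receipt = "charged:" + amount + ":balance=" + balance;
        processedRequests.put(requestId, receipt);
        return receipt;
    }

    synchronized int balance() {
        return balance;
    }
}
```

A client that did not receive a response can simply resend the request with the same ID; the provider state is affected once, and both calls yield the same output.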

Patterns in this Chapter

Resolving the preceding design challenges is a complex undertaking; many design tactics and patterns exist. Many of those have already been published (for instance, in the books that we list in the preface). Here, we present patterns that carve out important architectural characteristics of API endpoints and operations; doing so simplifies and streamlines the application of these other tactics and patterns.

Some of the architectural questions an API design has to answer concern the input to operations:

What can and should the API provider expect from the clients? For instance, what are its preconditions regarding data validity and integrity? Does an operation invocation imply state transfer?

The output produced by API implementations when processing calls to operations also requires attention:

What are the operation postconditions? What can the API client in turn expect from the provider when it sends input that meets the preconditions? Does a request update the provider state?

In an online shopping example, for instance, the order status might be updated and can be obtained in subsequent API calls, with the order confirmation containing all (and only) the purchased products.
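The online shopping example can be sketched in a few lines of Java. This is an illustration under stated assumptions, not an implementation from the chapter: the class `ShopEndpoint`, its operations, and the status values `OPEN` and `CONFIRMED` are hypothetical. The sketch checks preconditions before processing and establishes the postcondition that the confirmation contains all (and only) the purchased products.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical shop endpoint; names and status values are illustrative assumptions.
class ShopEndpoint {
    private final List<String> cart = new ArrayList<>();
    private String orderStatus = "OPEN";

    // Precondition: the order is still open.
    void addToCart(String product) {
        requireOpen();
        cart.add(product);
    }

    // Preconditions: the order is open and the cart is not empty.
    // Postconditions: the status is CONFIRMED and the returned confirmation
    // lists exactly the products that were added to the cart.
    List<String> placeOrder() {
        requireOpen();
        if (cart.isEmpty()) {
            throw new IllegalStateException("precondition violated: cart is empty");
        }
        orderStatus = "CONFIRMED";
        return List.copyOf(cart);
    }

    // Lets clients obtain the updated status in subsequent calls.
    String getOrderStatus() {
        return orderStatus;
    }

    private void requireOpen() {
        if (!"OPEN".equals(orderStatus)) {
            throw new IllegalStateException("precondition violated: order is not open");
        }
    }
}
```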

Different types of APIs deal with these concerns differently. A key decision is whether the endpoint should have activity- or data-oriented semantics. Hence, we introduce two endpoint roles in this chapter. These types of endpoints correspond to architectural roles as follows:

The pattern PROCESSING RESOURCE can help to realize activity-oriented API endpoints.

Data-oriented API endpoints are represented by INFORMATION HOLDER RESOURCES.

The section “Endpoint Roles” covers PROCESSING RESOURCE and INFORMATION HOLDER RESOURCE. Specialized types of INFORMATION HOLDER RESOURCES exist. For instance, DATA TRANSFER RESOURCE supports integration-oriented APIs, and LINK LOOKUP RESOURCE has a directory role. OPERATIONAL DATA HOLDERS, MASTER DATA HOLDERS, and REFERENCE DATA HOLDERS differ concerning the characteristics of data they expose in terms of data lifetime, relatedness, and mutability.

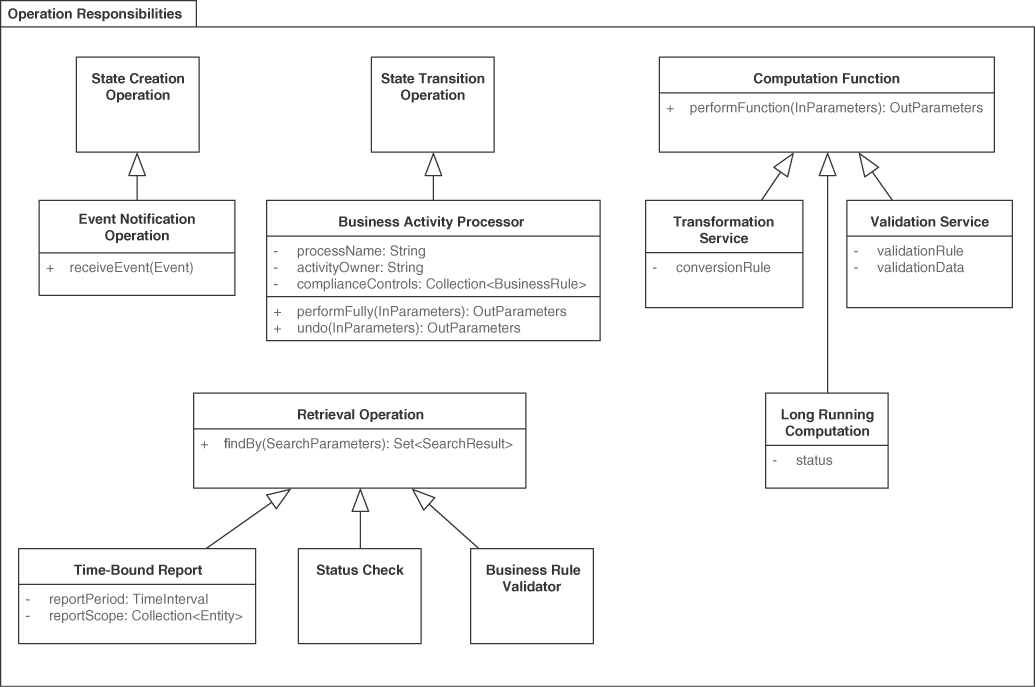

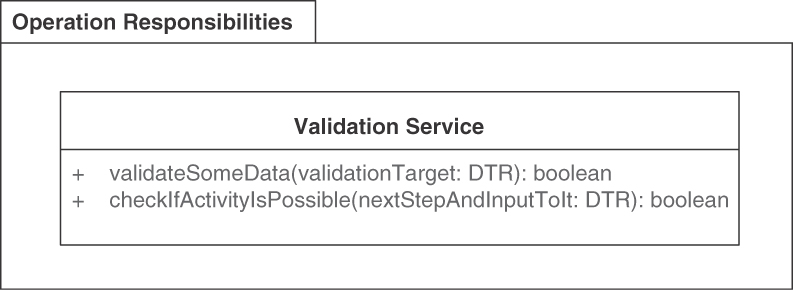

The four operation responsibilities found in these types of endpoints are COMPUTATION FUNCTION, RETRIEVAL OPERATION, STATE CREATION OPERATION, and STATE TRANSITION OPERATION. These types are covered in the section “Operation Responsibilities.” They differ in terms of client commitment (preconditions in API contract) and expectation (postconditions), as well as their impact on provider-side application state and processing complexity.
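The four operation responsibilities can be contrasted in a small sketch. It assumes a single in-memory endpoint; the class `OrderEndpoint`, its method names, and the status values are hypothetical and serve only to show how the responsibilities differ in their read and write access to provider-side state.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical endpoint illustrating the four operation responsibilities.
class OrderEndpoint {
    private final Map<Integer, String> orderStatus = new HashMap<>();
    private int nextId = 1;

    // COMPUTATION FUNCTION: neither reads nor writes provider-side state.
    int computeTotalInCents(int unitPriceInCents, int quantity) {
        return unitPriceInCents * quantity;
    }

    // STATE CREATION OPERATION: creates new provider-side state.
    int createOrder() {
        int id = nextId++;
        orderStatus.put(id, "CREATED");
        return id;
    }

    // RETRIEVAL OPERATION: read-only access to provider-side state.
    Optional<String> getStatus(int orderId) {
        return Optional.ofNullable(orderStatus.get(orderId));
    }

    // STATE TRANSITION OPERATION: reads and updates existing state.
    boolean confirmOrder(int orderId) {
        if (!"CREATED".equals(orderStatus.get(orderId))) {
            return false; // precondition violated: unknown or already confirmed order
        }
        orderStatus.put(orderId, "CONFIRMED");
        return true;
    }
}
```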

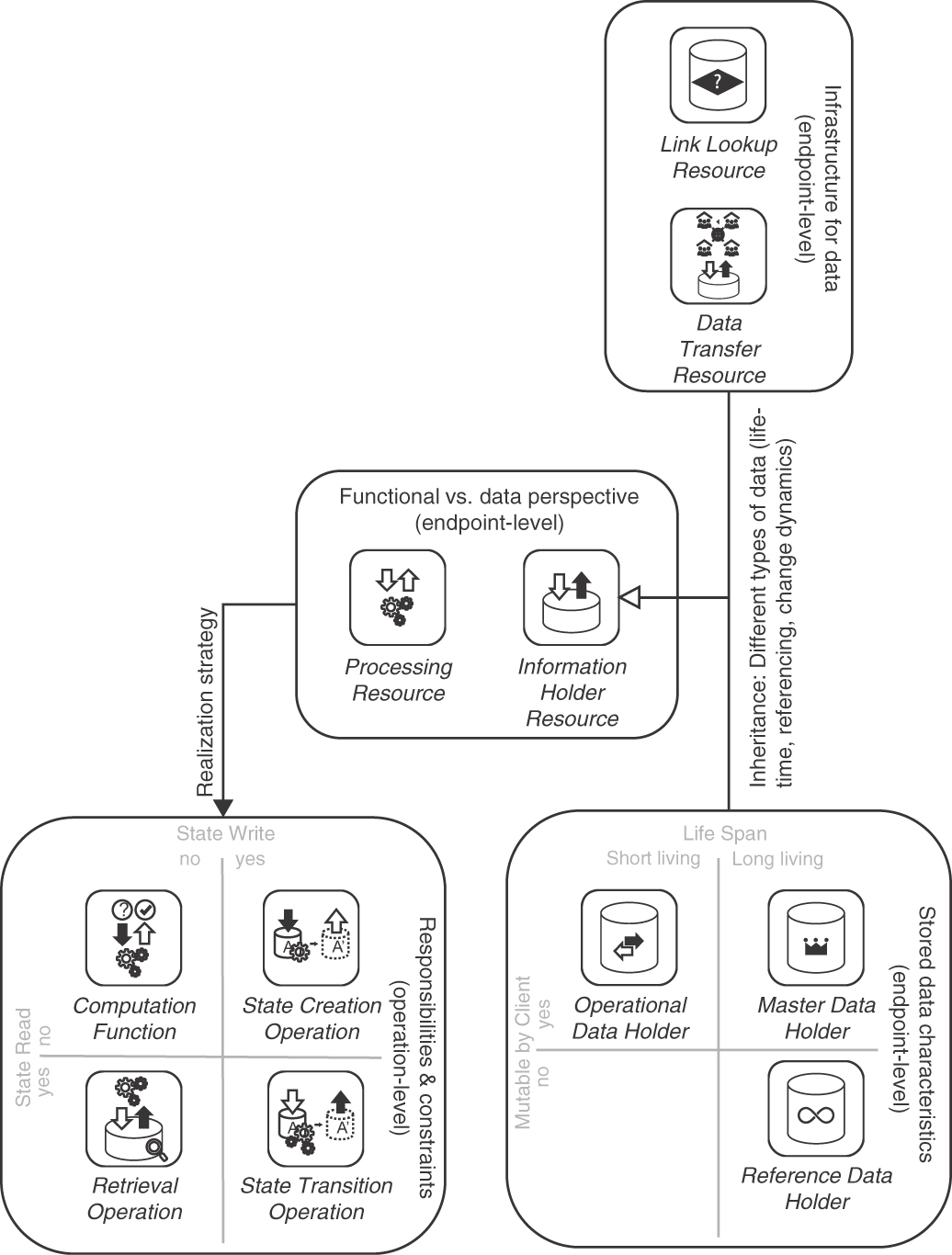

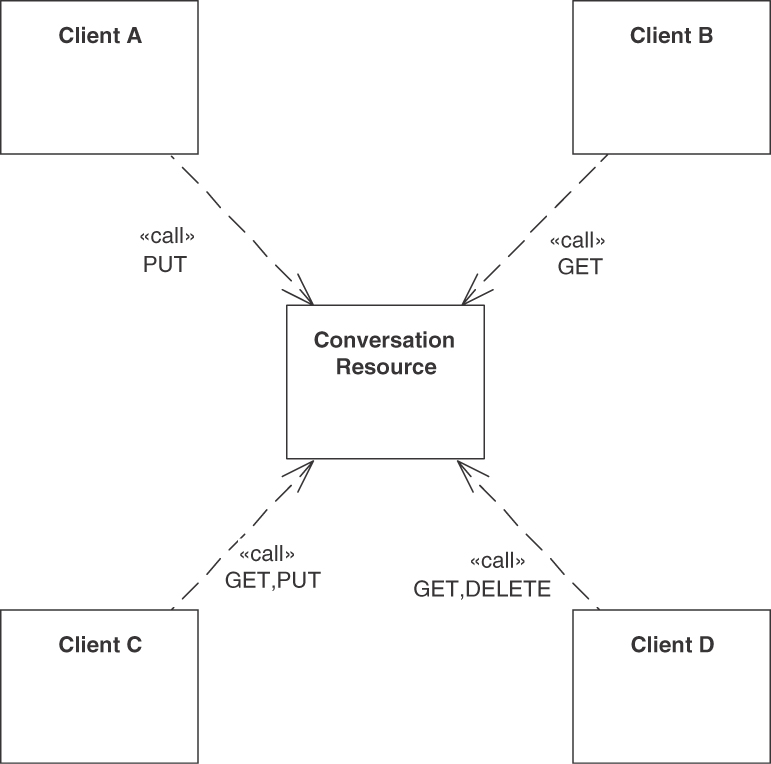

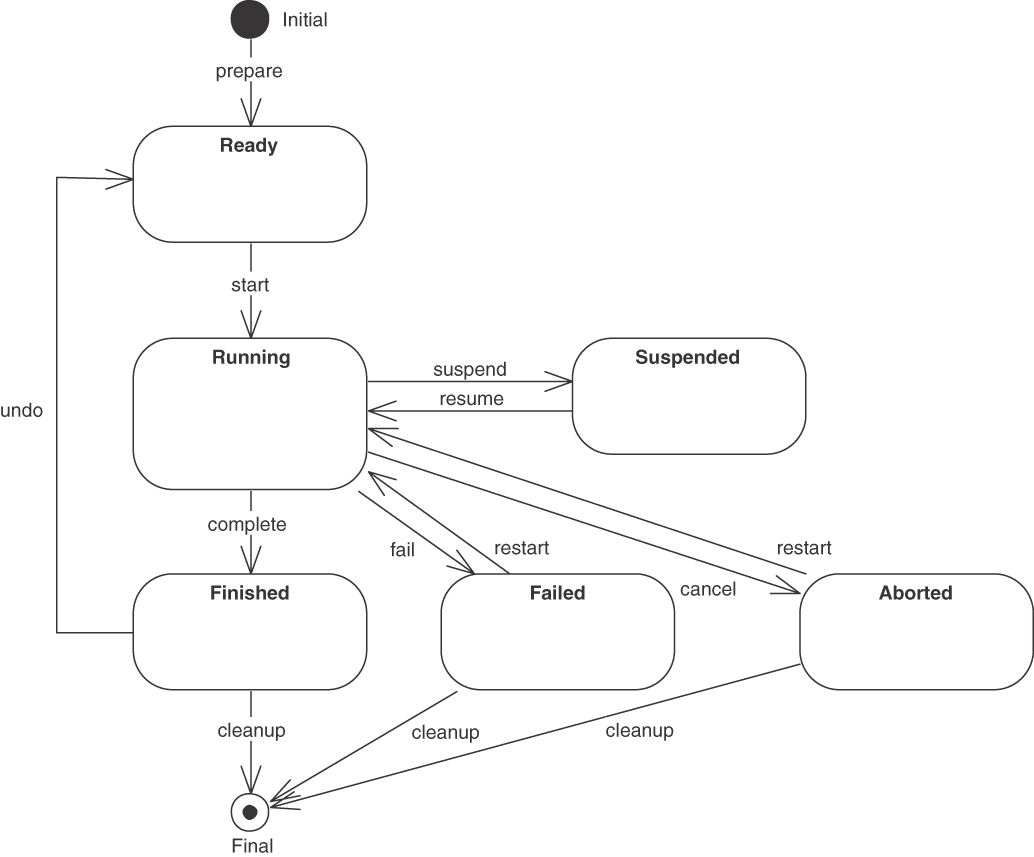

Figure 5.1 summarizes the patterns in this chapter.

Figure 5.1 Pattern map for this chapter (endpoint roles and operations responsibilities)

Endpoint Roles (aka Service Granularity)

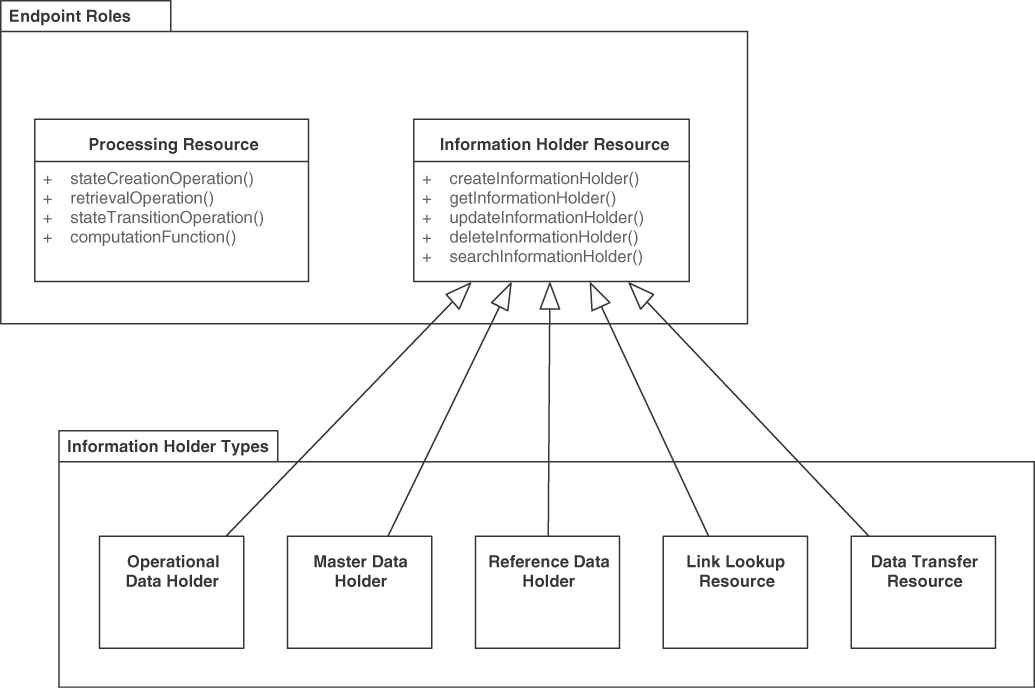

Refining the pattern map for this chapter, Figure 5.2 shows the patterns representing two general endpoint roles and five types of information holders.

Figure 5.2 Patterns distinguishing endpoint roles

The two general endpoint roles are PROCESSING RESOURCE and INFORMATION HOLDER RESOURCE. They may expose different types of operations that write, read, read-write, or only compute. There are five INFORMATION HOLDER RESOURCE specializations, answering the following question differently:

How can data-oriented API endpoints be classified by data lifetime, link structure, and mutability characteristics?

Let us cover PROCESSING RESOURCE first, followed by INFORMATION HOLDER RESOURCE and its five specializations.

Pattern: PROCESSING RESOURCE

When and Why to Apply

The functional requirements for an application have been specified, for instance, in the form of user stories, use cases, and/or analysis-level business process models. An analysis of the functional requirements suggests that something has to be computed or a certain activity is required. This cannot or should not be done locally; remote FRONTEND INTEGRATION and/or BACKEND INTEGRATION APIs are required. A preliminary list of candidate API endpoints might have been collected already.

How can an API provider allow its remote clients to trigger actions in it?

Such actions may be short-lived, standalone commands and computations (application-domain-specific ones or technical utilities) or long-running activities in a business process; they may or may not read and write provider-side state.

We can ask more specifically:

How can clients ask an API endpoint to perform a function that represents a business capability or a technical utility? How can an API provider expose the capability of executing a command to its clients that computes some output from the client’s input and, possibly, from the provider’s own state?

When invoking provider-side processing upon request from remote clients, general design concerns are as follows:

Contract expressiveness and service granularity (and their impact on coupling): Ambiguities in the invocation semantics harm interoperability and can lead to invalid processing results (which in turn might cause bad decisions to be made and consequently other harm). Hence, the meaning and side effects of the invoked action (such as a self-contained command or part of a conversation), including the representations of the exchanged messages, must be made clear in the API DESCRIPTION. The API DESCRIPTION must be clear about what endpoints and operations do and do not do; preconditions, invariants, and postconditions should be specified. State changes, idempotence, transactionality, event emission, and resource consumption in the API implementation should also be defined. Not all of these properties have to be disclosed to API clients, but they still must be described in the provider-internal API documentation.

API designers have to decide how much functionality each API endpoint and its operations should expose. Many simple interactions give the client a lot of control and can make the processing highly efficient, but they also introduce coordination effort and evolution challenges; few rich API capabilities can promote qualities such as consistency but may not suit each client and therefore may waste resources. The accuracy of the API DESCRIPTION matters as much as that of its implementation.

Learnability and manageability: An excessive number of API endpoints and operations leads to orientation challenges for client programmers, testers, and API maintenance and evolution staff (which might or might not include the original developers); it becomes difficult to find and choose the ones appropriate for a particular use case. The more options available, the more explanations and decision-making support have to be given and maintained over time.

Semantic interoperability: Syntactic interoperability is a technical concern for middleware, protocol, and format designers. The communication parties must also agree on the meaning and impact of the data exchanged before and after any operation is executed.

Response time: Having invoked the remote action, the client may block until a result becomes available. The longer the client has to wait, the higher the chances that something will break (either on the provider side or in client applications). The network connection between the client and the API may time out sooner or later. An end user waiting for slow results may click refresh, thus putting additional load on an API provider serving the end-user application.

Security and privacy: If a full audit log of all API invocations and resulting server-side processing has to be maintained (for instance, because of data privacy requirements), statelessness on the provider side is an illusion even if application state is not required from a functional requirement point of view. Personal sensitive information and/or otherwise classified information (for example, by governments or enterprises) might be contained in the request and response message representations. Furthermore, in many scenarios one has to ensure that only authorized clients can invoke certain actions (that is, commands, conversation parts); for instance, regular employees are usually not permitted to increase their own salary in the employee management systems integrated via COMMUNITY APIS and implemented as microservices. Hence, the security architecture design has to take the requirements of processing-centric API operations into account, for instance, in its policy decision point (PDP) and policy enforcement point (PEP) design and when deciding between role-based access control (RBAC) and attribute-based access control (ABAC). The PROCESSING RESOURCE is the subject of API security design [Yalon 2019] but also is an opportunity to place PEPs into the overall control flow. The threat model and controls created by security consultants, risk managers, and auditors also must take processing-specific attacks into account, for instance, denial-of-service (DoS) attacks [Julisch 2011].

Compatibility and evolvability: The provider and the client should agree on the assumptions concerning the input/output representations as well as the semantics of the function to be performed. The client expectations should match what is offered by the provider. The request and response message structures may change over time. If, for instance, units of measure change or optional parameters are introduced, the client must have a chance to notice this and react to it (for instance, by developing an adapter or by evolving itself into a new version, possibly using a new version of an API operation). Ideally, new versions are forward and backward compatible with existing API clients.
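The compatibility concern can be made concrete with a small sketch of an optional parameter that changes the unit of measure. It assumes a first operation version that always interpreted distances as kilometers and a later version that adds an optional unit; the names `DistanceRequest` and `ShippingCostFunction`, the unit codes, and the rate of 10 cents per kilometer are hypothetical.

```java
import java.util.Optional;

// Hypothetical request message: later clients may set the unit,
// earlier clients leave it empty.
record DistanceRequest(double value, Optional<String> unit) {}

// Hypothetical operation that stays compatible with clients of the earlier version.
class ShippingCostFunction {
    double costInCents(DistanceRequest request) {
        // A missing unit defaults to kilometers, the assumed original behavior.
        String unit = request.unit().orElse("km");
        double kilometers = switch (unit) {
            case "km" -> request.value();
            case "mi" -> request.value() * 1.609344;
            default -> throw new IllegalArgumentException("unsupported unit: " + unit);
        };
        return kilometers * 10.0; // assumed rate: 10 cents per kilometer
    }
}
```

Because the new parameter is optional and its default reproduces the earlier semantics, existing clients continue to receive the same results, while an unsupported unit is rejected explicitly rather than being misinterpreted.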

These concerns conflict with each other. For instance, the richer and the more expressive a contract is, the more has to be learned, managed, and tested (with regard to interoperability). Finer-grained services might be easier to protect and evolve, but there will be many of them, which have to be integrated. This adds performance overhead and may raise consistency issues [Neri 2020].

A “Shared Database” [Hohpe 2003] that offers actions and commands in the form of stored procedures could be a valid integration approach (and is used in practice), but it creates a single point of failure, does not scale with a growing number of clients, and cannot be deployed or redeployed independently. Shared Databases containing business logic in stored procedures do not align well with service design principles such as single responsibility and loose coupling.

How It Works

Add a PROCESSING RESOURCE endpoint to the API exposing operations that bundle and wrap application-level activities or commands.

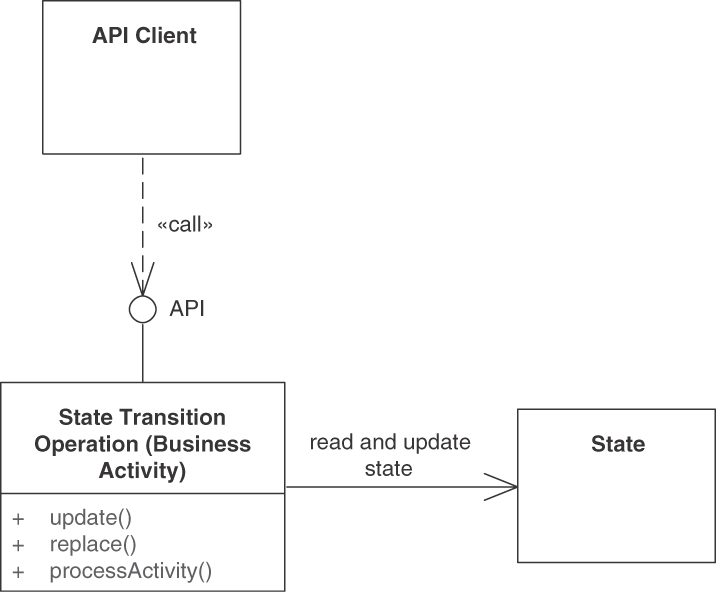

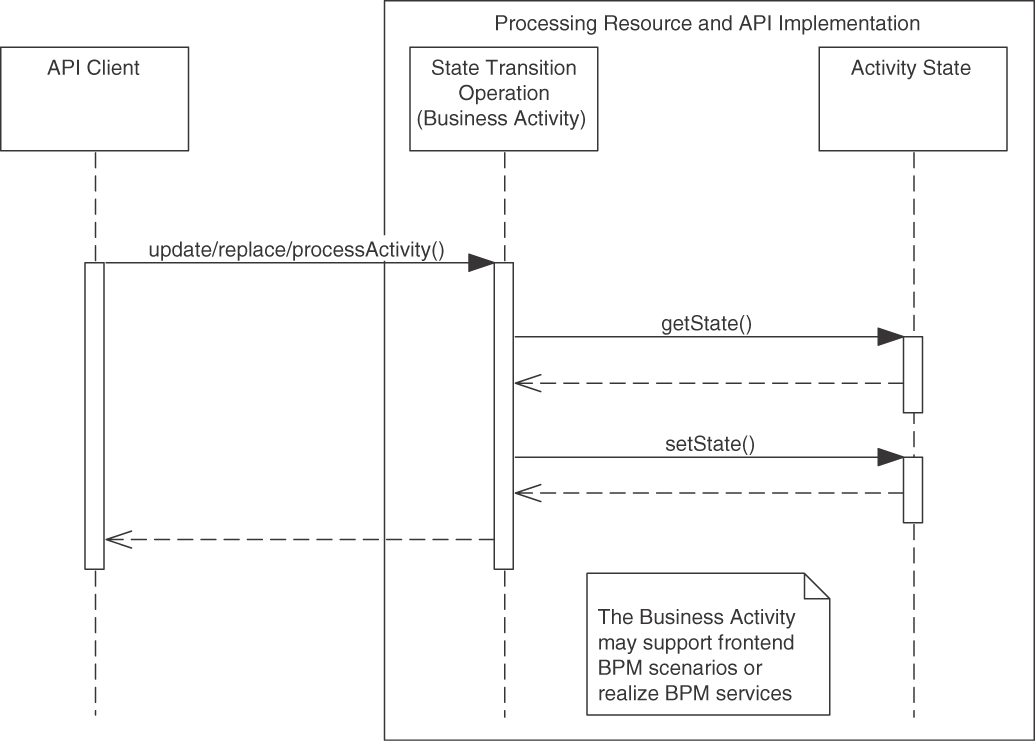

For the new endpoint, define one or more operations, each of which takes over a dedicated processing responsibility (“action required”). COMPUTATION FUNCTION, STATE CREATION OPERATION, and STATE TRANSITION OPERATION are common in activity-oriented PROCESSING RESOURCES. RETRIEVAL OPERATIONS mostly are limited to mere status/state checks here and are more commonly found in data-oriented INFORMATION HOLDER RESOURCES. For each of these operations, define a “Command Message” for the request. Add a “Document Message” for the response when realizing an operation as a “Request-Reply” message exchange [Hohpe 2003]. Make the endpoint remotely accessible for one or more API clients by providing a unique logical address (for instance, a Uniform Resource Identifier [URI] in HTTP APIs).

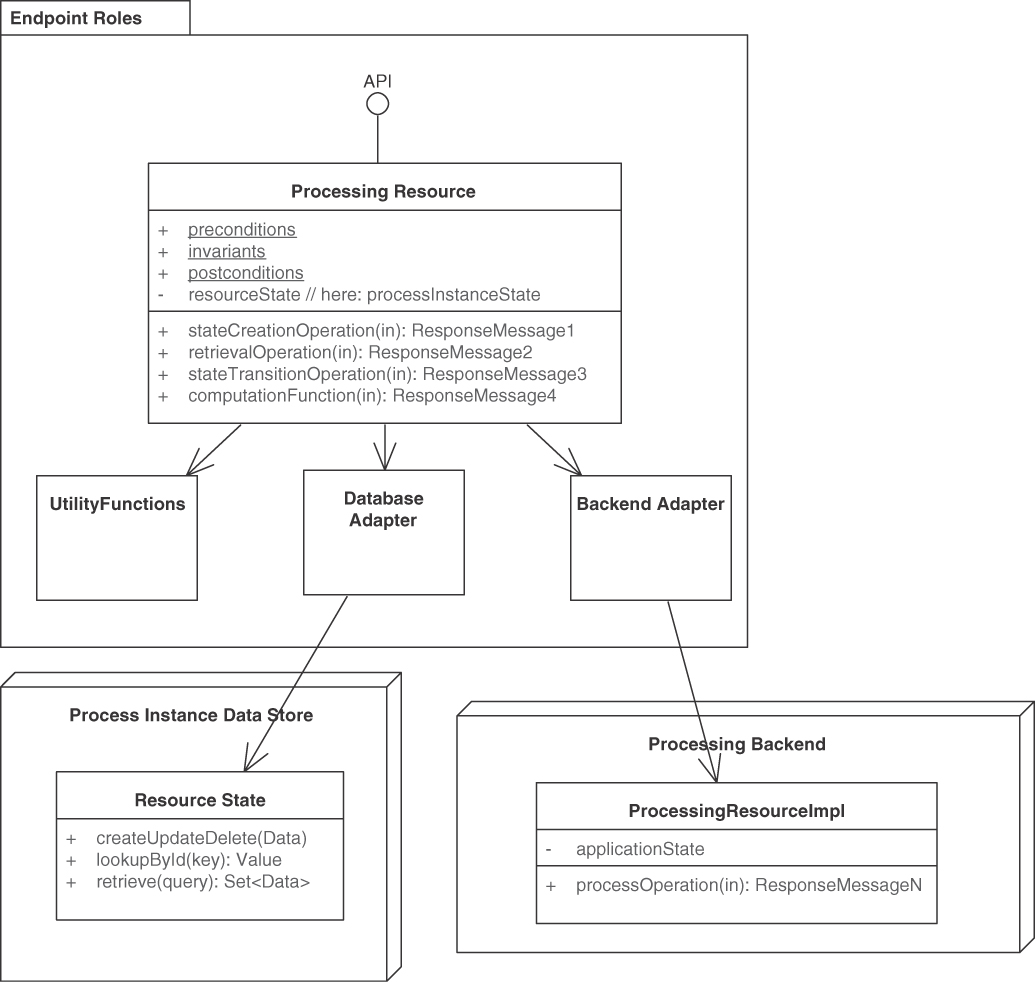

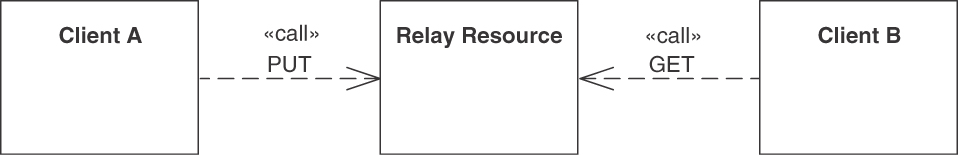

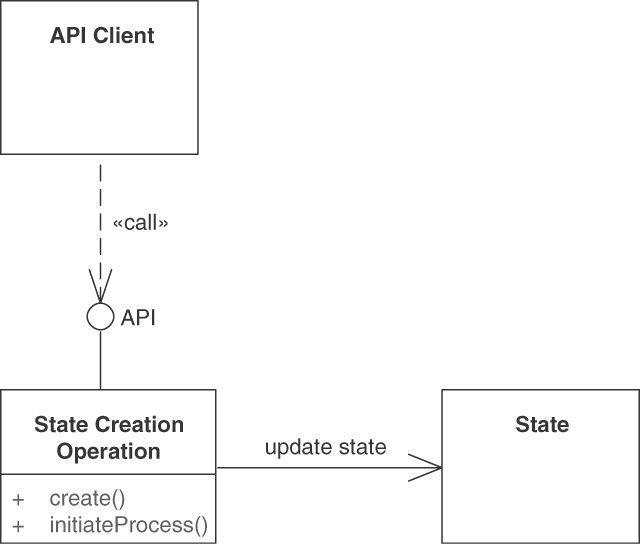

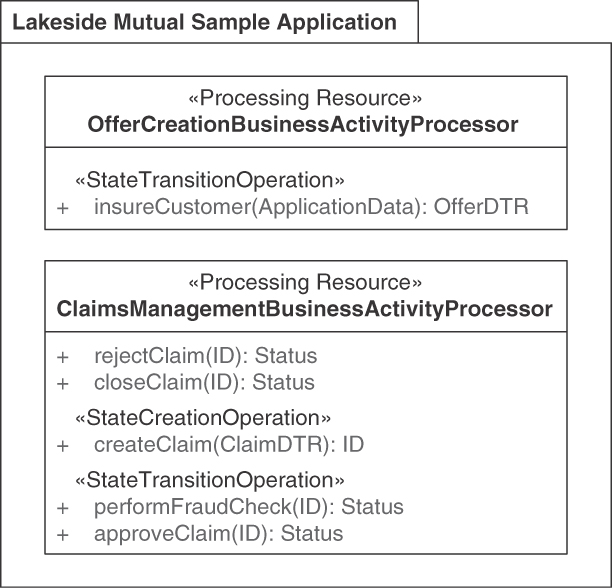

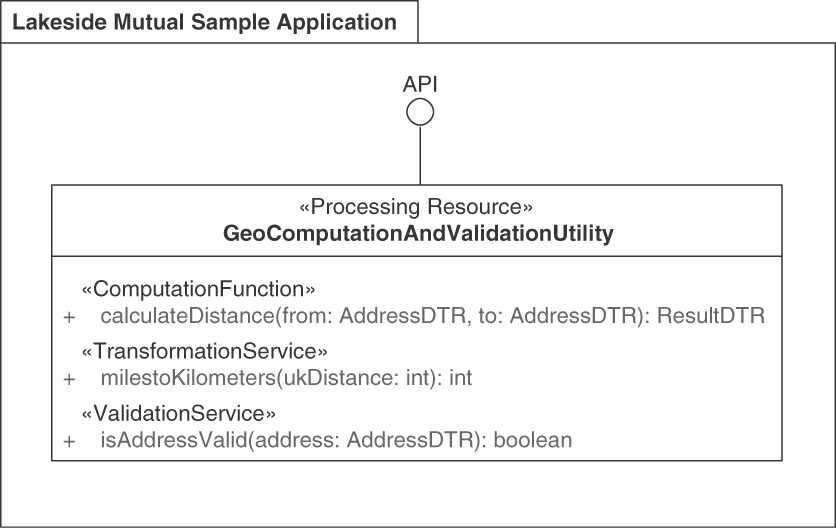

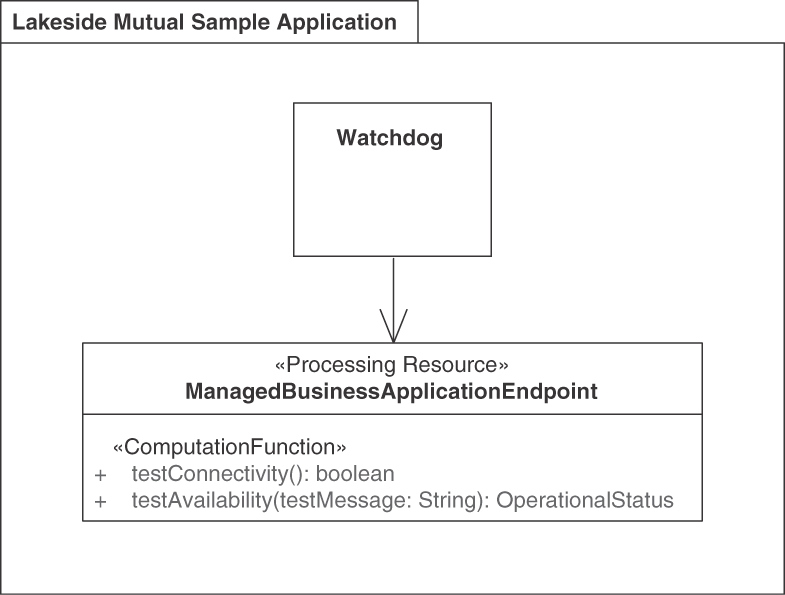

Figure 5.3 sketches this endpoint-operation design in a UML class diagram.

Figure 5.3 PROCESSING RESOURCES represent activity-oriented API designs. Some operations in the endpoint access and change application state, others do not. Data is exposed only in request and response messages

The request message should make the performed action explicit and allow the API endpoint to determine which processing logic to execute. These actions might represent a general-purpose or an application-domain-specific functional system capability (implemented within the API provider or residing in some backend and accessed via an outbound port/adapter) or a technical utility.
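A minimal sketch of such an explicit action in the request message follows. It assumes a single execute operation that dispatches on an action name; the records `CommandMessage` and `DocumentMessage`, the class `ProcessingEndpoint`, and the two sample actions are hypothetical and only hint at the “Command Message” and “Document Message” roles.

```java
import java.util.Map;
import java.util.function.Function;

// Hypothetical request ("Command Message") and response ("Document Message").
record CommandMessage(String action, Map<String, String> parameters) {}
record DocumentMessage(boolean success, String body) {}

// Hypothetical PROCESSING RESOURCE with a single execute operation.
class ProcessingEndpoint {
    // Maps each supported action name to the processing logic to execute.
    private final Map<String, Function<Map<String, String>, String>> handlers = Map.of(
        "toUpperCase", p -> p.getOrDefault("text", "").toUpperCase(),
        "length", p -> String.valueOf(p.getOrDefault("text", "").length())
    );

    DocumentMessage execute(CommandMessage command) {
        Function<Map<String, String>, String> handler = handlers.get(command.action());
        if (handler == null) {
            return new DocumentMessage(false, "unknown action: " + command.action());
        }
        return new DocumentMessage(true, handler.apply(command.parameters()));
    }
}
```

The two string utilities stand in for technical utilities; an application-domain-specific capability would be registered in the same way.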

The request and response messages possibly can be structured according to any of the four structural representation patterns ATOMIC PARAMETER, ATOMIC PARAMETER LIST, PARAMETER TREE, and PARAMETER FOREST. The API DESCRIPTION has to document the syntax and semantics of the PROCESSING RESOURCE (including operation pre- and postconditions as well as invariants).

The PROCESSING RESOURCE can be a “Stateful Component” or a “Stateless Component” [Fehling 2014]. If invocations of its operations cause changes in the (shared) provider-side state, the approach to data management must be designed deliberately; required decisions include strict vs. weak/eventual consistency, optimistic vs. pessimistic locking, and so on. The data management policies should not be exposed in the API (which would make them visible to the API client); instead, opening and closing (or committing and rolling back) system transactions should be placed inside the API implementation, preferably at the operation boundary. Application-level compensating operations should be offered to handle things that cannot be undone easily by system transaction managers. For instance, an email that is sent in an API implementation cannot be taken back once it has left the mail server; a second mail, “Please ignore the previous mail,” has to be sent instead [Zimmermann 2007; Richardson 2018].
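The email example can be sketched as a compensating operation. The class `NotificationResource` and its methods are hypothetical, and an in-memory list stands in for the mail server, so this is an illustration of the idea rather than a mail integration.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical mail-sending resource; the outbox list stands in for a mail server.
class NotificationResource {
    private final List<String> outbox = new ArrayList<>();

    // Sends a mail and returns its position in the outbox as a handle.
    int send(String recipient, String text) {
        outbox.add(recipient + ": " + text);
        return outbox.size() - 1;
    }

    // Compensating operation: the original mail cannot be recalled,
    // so a follow-up mail is sent to the same recipient instead.
    int compensate(int mailHandle) {
        String original = outbox.get(mailHandle);
        String recipient = original.substring(0, original.indexOf(':'));
        return send(recipient, "Please ignore the previous mail");
    }

    List<String> sentMails() {
        return List.copyOf(outbox);
    }
}
```

Note that compensation adds to the provider-side state instead of rolling it back; both mails remain in the outbox.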

Example

The Policy Management backend of the Lakeside Mutual case contains a stateful PROCESSING RESOURCE InsuranceQuoteRequestCoordinator that offers STATE TRANSITION OPERATIONS, which move an insurance quotation request through various stages. The resource is implemented as an HTTP resource API in Java and Spring Boot:

    @RestController
    @RequestMapping("/insurance-quote-requests")
    public class InsuranceQuoteRequestCoordinator {

        @Operation(summary = "Updates the status of an existing " +
            "Insurance Quote Request")
        @PreAuthorize("isAuthenticated()")
        @PatchMapping(value = "/{id}")
        public ResponseEntity<InsuranceQuoteRequestDto> respondToInsuranceQuote(
                Authentication authentication,
                @Parameter(description = "the insurance quote " +
                    "request's unique id", required = true)
                @PathVariable Long id,
                @Parameter(description = "the response that " +
                    "contains the customer's decision whether " +
                    "to accept or reject an insurance quote",
                    required = true)
                @Valid @RequestBody
                InsuranceQuoteResponseDto insuranceQuoteResponseDto) {
            // ... (method body not shown in this excerpt)
        }
    }

The Lakeside Mutual application services also contain RiskComputationService, a stateless PROCESSING RESOURCE that implements a single COMPUTATION FUNCTION called computeRiskFactor:

    @RestController
    @RequestMapping("/riskfactor")
    public class RiskComputationService {

        @Operation(summary = "Computes the customer's risk factor.")
        @PostMapping(value = "/compute")
        public ResponseEntity<RiskFactorResponseDto> computeRiskFactor(
                @Parameter(description = "the request containing " +
                    "relevant customer attributes (e.g., birthday)",
                    required = true)
                @Valid @RequestBody
                RiskFactorRequestDto riskFactorRequest) {
            // getAge and the two-argument computeRiskFactor are helper
            // methods that are not shown in this excerpt.
            int age = getAge(riskFactorRequest.getBirthday());
            String postalCode = riskFactorRequest.getPostalCode();
            int riskFactor = computeRiskFactor(age, postalCode);
            return ResponseEntity.ok(new RiskFactorResponseDto(riskFactor));
        }
    }

Discussion

Business activity- and process-orientation can reduce coupling and promote information hiding. However, instances of this pattern must make sure not to come across as remote procedure call (RPC) tunneled in a message-based API (and consequently be criticized because RPCs increase coupling, for instance, in the time and format autonomy dimensions). Many enterprise applications and information systems do have “business RPC” semantics, as they execute a business command or transaction from a user that must be triggered, performed, and terminated somehow. According to the original literature and subsequent collections of design advice [Allamaraju 2010], an HTTP resource does not have to model data (or only data), but can represent such business transactions, long-running ones in particular (see footnote 1). Note that “REST was never about CRUD” [Higginbotham 2018]. The evolution of PROCESSING RESOURCES is covered in Chapter 8.

1. Note that HTTP is a synchronous protocol as such; hence, asynchrony has to be added on the application level (or by using QoS headers or HTTP/2) [Pautasso 2018]. The DATA TRANSFER RESOURCE pattern describes such design.

A PROCESSING RESOURCE can be identified when applying a service identification technique such as dynamic process analysis or event storming [Pautasso 2017a]; this has a positive effect on the “business alignment” tenet in service-oriented architectures. One can define one instance of the pattern per backend integration need becoming evident in a use case or user story; if a single execute operation is included in a PROCESSING RESOURCE endpoint, it may accept self-describing action request messages and return self-contained result documents. All operations in the API have to be protected as mandated by the security requirements.
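As one way to protect an operation, the following sketch places a policy check at the operation boundary, reusing the salary example from the security discussion earlier in this pattern. It is a simplified illustration: the record `Caller`, the class `SalaryResource`, and the role name `HR_MANAGER` are hypothetical, and the policy combines one role check (in the spirit of RBAC) with one attribute-based rule (in the spirit of ABAC).

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical caller identity as it might be derived from an authenticated request.
record Caller(String employeeId, Set<String> roles) {}

// Hypothetical processing-centric resource with an authorization check.
class SalaryResource {
    private final Map<String, Integer> salaries = new HashMap<>();

    SalaryResource(Map<String, Integer> initialSalaries) {
        salaries.putAll(initialSalaries);
    }

    // Policy decision: the caller needs the assumed HR_MANAGER role
    // and must not be the employee whose salary is changed.
    private boolean mayRaise(Caller caller, String targetEmployeeId) {
        boolean hasRole = caller.roles().contains("HR_MANAGER");
        boolean isSelf = caller.employeeId().equals(targetEmployeeId);
        return hasRole && !isSelf;
    }

    // Policy enforcement at the operation boundary, before any state change.
    boolean raiseSalary(Caller caller, String targetEmployeeId, int amount) {
        if (!mayRaise(caller, targetEmployeeId) || !salaries.containsKey(targetEmployeeId)) {
            return false;
        }
        salaries.merge(targetEmployeeId, amount, Integer::sum);
        return true;
    }

    // Returns the salary of a known employee.
    int salaryOf(String employeeId) {
        return salaries.get(employeeId);
    }
}
```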

In many integration scenarios, activity- and process-orientation would have to be forced into the design, which makes it hard to explain and maintain (among other negative consequences). In such cases, INFORMATION HOLDER RESOURCE is a better choice. It is possible to define API endpoints that are both processing- and data-oriented (just like many classes in object-oriented programming combine storage and behavior). Even a mere PROCESSING RESOURCE may have to hold state (but will want to hide its structure from the API clients). Such joint use of PROCESSING RESOURCE and INFORMATION HOLDER RESOURCE is not recommended for microservices architectures due to the amount of coupling possibly introduced.

Different types of PROCESSING RESOURCES require different message exchange patterns, depending on (1) how long the processing will take and (2) whether the client must receive the result immediately to be able to continue its processing (otherwise, the result can be delivered later). Processing time may be difficult to estimate, as it depends on the complexity of the action to be executed, the amount of data sent by the client, and the load/resource availability of the provider. The Request-Reply pattern requires at least two messages that can be exchanged via one network connection, such as one HTTP request-response pair in an HTTP resource API. Alternatively, multiple technical connections can be used, for instance, by sending the command via an HTTP POST and polling for the result via HTTP GET.
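The alternative of submitting a command and polling for its result can be sketched as follows. The class `ReportResource` and its methods are hypothetical; the comments indicate which HTTP verb each operation would plausibly map to, and `runPendingJobs` stands in for a background worker so that the sketch stays deterministic.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical long-running PROCESSING RESOURCE using submit-and-poll.
class ReportResource {
    private final Map<Integer, String> pending = new ConcurrentHashMap<>();
    private final Map<Integer, String> results = new ConcurrentHashMap<>();
    private final AtomicInteger nextJobId = new AtomicInteger(1);

    // Would map to HTTP POST: accepts the command and returns a job ID right away.
    int submit(String input) {
        int jobId = nextJobId.getAndIncrement();
        pending.put(jobId, input);
        return jobId;
    }

    // Stands in for a background worker that completes the accepted jobs.
    void runPendingJobs() {
        for (Integer jobId : pending.keySet()) {
            String input = pending.remove(jobId);
            if (input != null) {
                results.put(jobId, "report for " + input);
            }
        }
    }

    // Would map to HTTP GET: empty while the job is still being processed.
    Optional<String> poll(int jobId) {
        return Optional.ofNullable(results.get(jobId));
    }
}
```

The client is not blocked while the provider works, at the price of additional requests and of provider-side state for pending and finished jobs.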

Decomposing the PROCESSING RESOURCE to call operations in other API endpoints should be considered (it is rather common that no single existing or to-be-constructed system can satisfy all processing needs, due to either organizational or legacy system constraints). Most of the design difficulty lies in how to decompose a PROCESSING RESOURCE to a manageable granularity and set of expressive, learnable operations. The Stepwise Service Design activity in our Design Practice Reference (DPR) [Zimmermann 2021b] investigates this problem set.

Related Patterns

This pattern explains how to emphasize activity; its INFORMATION HOLDER RESOURCE sibling focuses on data orientation. PROCESSING RESOURCES may contain operations that differ in the way they deal with provider-side state (stateless services vs. stateful processors): STATE TRANSITION OPERATION, STATE CREATION OPERATION, COMPUTATION FUNCTION, and RETRIEVAL OPERATION.

PROCESSING RESOURCES are often exposed in COMMUNITY APIS, but also found in SOLUTION-INTERNAL APIS. Their operations are often protected with API KEYS and RATE LIMITS. A SERVICE LEVEL AGREEMENT that accompanies the technical API contract may govern their usage. To prevent technical parameters from creeping into the payload in request and response messages, such parameters can be isolated in a CONTEXT REPRESENTATION.

The three patterns “Command Message,” “Document Message,” and “Request-Reply” [Hohpe 2003] are used in combination when realizing this pattern. The “Command” pattern in [Gamma 1995] codifies a processing request and its invocation data as an object and as a message, respectively. PROCESSING RESOURCE can be seen as the remote API variant of the “Application Service” pattern in [Alur 2013]. Its provider-side implementations serve as “Service Activators” [Hohpe 2003].

Other patterns address manageability; see our evolution patterns in Chapter 8, “Evolve APIs,” for design-time advice and remoting patterns books [Voelter 2004; Buschmann 2007] for runtime considerations.

More Information

PROCESSING RESOURCES correspond to “Interfacers” that provide and protect access to service providers in responsibility-driven design (RDD) [Wirfs-Brock 2002].

Chapter 6 in SOA in Practice [Josuttis 2007] is on service classification; it compares several taxonomies, including the one from Enterprise SOA [Krafzig 2004]. Some of the examples in the process services type/category in these SOA books qualify as known uses of this pattern. These two books include project examples and case studies from domains such as banking and telecommunications.

“Understanding RPC vs REST for HTTP APIs” [Sturgeon 2016a] talks about RPC and REST, but taking a closer look, it actually (also) is about deciding between PROCESSING RESOURCE and INFORMATION HOLDER RESOURCE.

The action resource topic area/category in the API Stylebook [Lauret 2017] provides a (meta) known use for this pattern. Its “undo” topic is also related because undo operations participate in application-level state management.

Pattern: INFORMATION HOLDER RESOURCE

When and Why to Apply

A domain model, a conceptual entity-relationship diagram, or another form of glossary of key application concepts and their interconnections has been specified. The model contains entities that have an identity and a life cycle as well as attributes; entities cross reference each other.

From this analysis and design work, it has become apparent that structured data will have to be used in multiple places in the distributed system being designed; hence, the shared data structures have to be made accessible from multiple remote clients. It is not possible or is not easy to hide the shared data structures behind domain logic (that is, processing-oriented actions such as business activities and commands); the application under construction does not have a workflow or other processing nature.

How can domain data be exposed in an API, but its implementation still be hidden?

More specifically,

How can an API expose data entities so that API clients can access and/or modify these entities concurrently without compromising data integrity and quality?

Modeling approach and its impact on coupling: Some software engineering and object-oriented analysis and design (OOAD) methods balance processing and structural aspects in their steps, artifacts, and techniques; some put a strong emphasis on either computing or data. Domain-driven design (DDD) [Evans 2003; Vernon 2013], for instance, is an example of a balanced approach. Entity-relationship diagrams focus on data structure and relationships rather than behavior. If a data-centric modeling and API endpoint identification approach is chosen, there is a risk that many CRUD (create, read, update, delete) APIs operating on data are exposed, which can have a negative impact on data quality because every authorized client may manipulate the provider-side data rather arbitrarily. CRUD-oriented data abstractions in interfaces introduce operational and semantic coupling.

Quality attribute conflicts and trade-offs: Design-time qualities such as simplicity and clarity; runtime qualities such as performance, availability, and scalability; as well as evolution-time qualities such as maintainability and flexibility often conflict with each other.

Security: Cross-cutting concerns such as application security also make it difficult to deal with data in APIs. A decision to expose internal data through an API must consider the required data read/write access rights for clients. Personal sensitive information and/or information classified as confidential might be contained in the request and response message representations. Such information has to be protected. For example, the risk of the creation of fake orders, fraudulent claims, and so on, has to be assessed and security controls introduced to mitigate it [Julisch 2011].

Data freshness versus consistency: Clients desire data obtained from APIs to be as up-to-date as possible, but effort is required to keep it consistent and current [Helland 2005]. Also, what are the consequences for clients if such data may become temporarily or permanently unavailable in the future?

Compliance with architectural design principles: The API under construction might be part of a project that has already established a logical and a physical software architecture. It should also play nice with respect to organization-wide architectural decisions [Zdun 2018], for instance, those establishing architectural principles such as loose coupling, logical and physical data independence, or microservices tenets such as independent deployability. Such principles might include suggestive or normative guidance on whether and how data can be exposed in APIs; a number of pattern selection decisions are required, with those principles serving as decision drivers [Zimmermann 2009; Hohpe 2016]. Our patterns provide concrete alternatives and criteria for making such architectural decisions (as we discussed previously in Chapter 3).

One could think of hiding all data structures behind processing-oriented API operations and data transfer objects (DTOs) analogous to object-oriented programming (that is, local object-oriented APIs expose access methods and facades while keeping all individual data members private). Such an approach is feasible and promotes information hiding; however, it may limit the opportunities to deploy, scale, and replace remote components independently of each other because either many fine-grained, chatty API operations are required or data has to be stored redundantly. It also introduces an undesired extra level of indirection, for instance, when building data-intensive applications and integration solutions.

Another possibility would be to give direct access to the database so that consumers can see for themselves what data is available and directly read and even write it if allowed. The API in this case becomes a tunnel to the database, where consumers can send arbitrary queries and transactions through it; databases such as CouchDB provide such data-level APIs out-of-the-box. This solution completely removes the need to design and implement an API because the internal representation of the data is directly exposed to clients. Breaking basic information-hiding principles, however, it also results in a tightly coupled architecture where it will be impossible to ever touch the database schema without affecting every API client. Direct database access also introduces security threats.

How It Works

Add an INFORMATION HOLDER RESOURCE endpoint to the API, representing a data-oriented entity. Expose create, read, update, delete, and search operations in this endpoint to access and manipulate this entity.

In the API implementation, coordinate calls to these operations to protect the data entity.

Make the endpoint remotely accessible for one or more API clients by providing a unique logical address. Let each operation of the INFORMATION HOLDER RESOURCE have one and only one of the four operation responsibilities (covered in depth in the next section):

STATE CREATION OPERATIONS create the entity that is represented by the INFORMATION HOLDER RESOURCE.

RETRIEVAL OPERATIONS access and read an entity but do not update it. They may search for and return collections of such entities, possibly filtered.

STATE TRANSITION OPERATIONS access existing entities and update them fully or partially; they may also delete them.

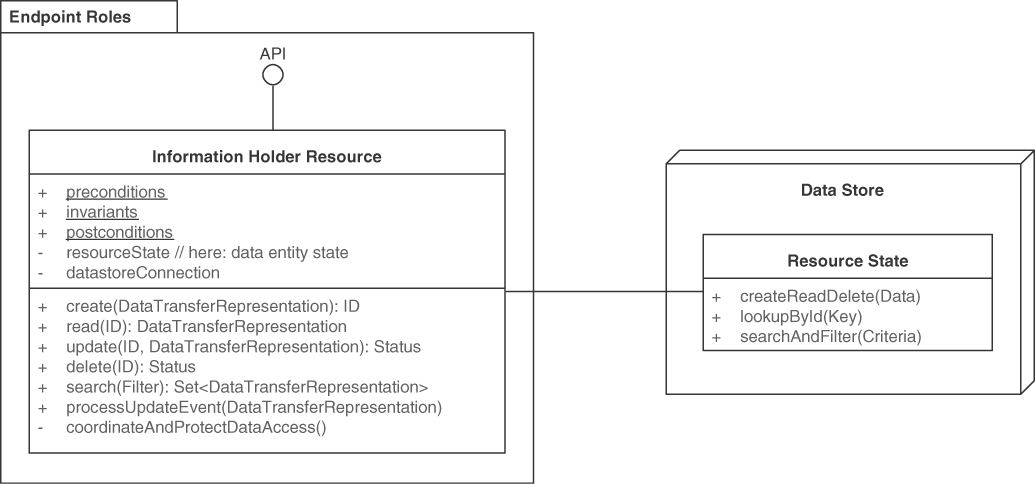

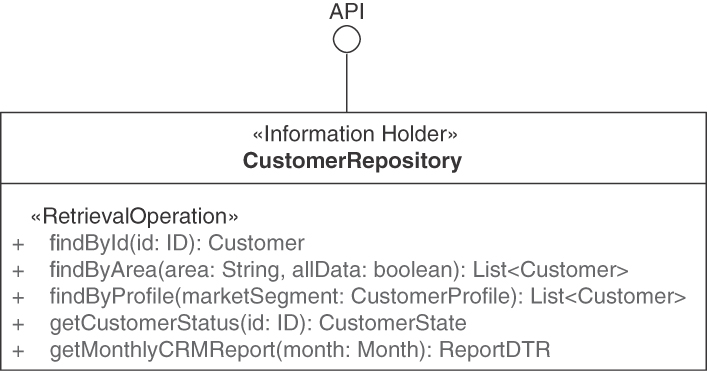

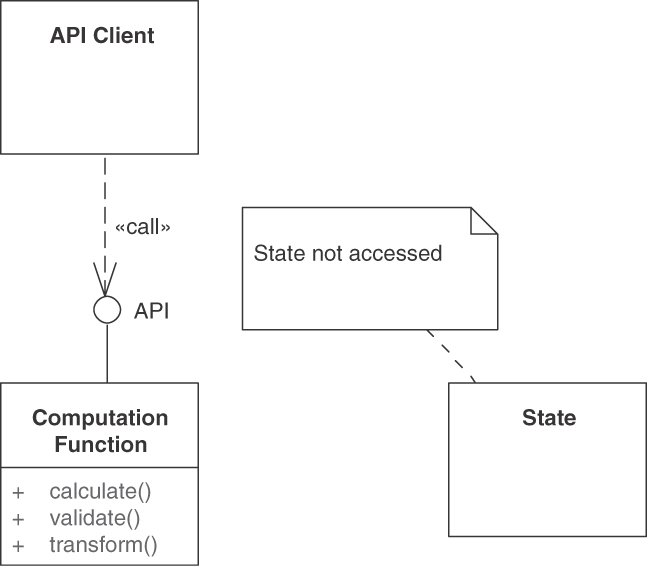

For each operation, design the request and, if needed, response message structures. For instance, represent entity relationships as LINK ELEMENTS. If basic reference data such as country codes or currency codes are looked up, the response message typically is an ATOMIC PARAMETER; if a rich, structured domain model entity is looked up, the response is more likely to contain a PARAMETER TREE that represents the data transfer representation (a term from the API domain model introduced in Chapter 1) of the looked-up information. Figure 5.4 sketches this solution.
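The difference between the two response shapes can be illustrated with a small Java sketch. It is not taken from the Lakeside Mutual code base; all type names, sample values, and the link URI are assumptions made for illustration only.

```java
import java.net.URI;
import java.util.List;

// Hypothetical nested DTO: one branch of the PARAMETER TREE.
record AddressDto(String street, String city, String countryCode) {}

// Hypothetical PARAMETER TREE for a rich entity. The relationship to
// contracts is represented by LINK ELEMENTS instead of embedded data.
record CustomerDto(String customerId, String name, AddressDto address,
                   List<URI> contractLinks) {}

class LookupSketch {
    // Reference data lookup: the response is a single ATOMIC PARAMETER.
    static String lookupCountryName(String countryCode) {
        return switch (countryCode) {
            case "CH" -> "Switzerland";
            case "DE" -> "Germany";
            default -> throw new IllegalArgumentException(
                    "unknown country code: " + countryCode);
        };
    }

    // Entity lookup: the response is a PARAMETER TREE with nested elements.
    // The returned sample data is made up for this sketch.
    static CustomerDto lookupCustomer(String customerId) {
        return new CustomerDto(customerId, "Max Mustermann",
                new AddressDto("Seestrasse 1", "Rapperswil", "CH"),
                List.of(URI.create("/contracts/c-17")));
    }
}
```

A client that only needs a country name receives a plain string, whereas a client looking up a customer receives a nested structure and can follow the contract links on demand.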

Figure 5.4 INFORMATION HOLDER RESOURCES model and expose general data-oriented API designs. This endpoint role groups information-access-oriented responsibilities. Its operations create, read, update, or delete the data held. Searching for data sets is also supported

Define operation-level pre- and postconditions as well as invariants to protect the resource state. Decide whether the INFORMATION HOLDER RESOURCE should be a “Stateful Component” or a “Stateless Component,” as defined in [Fehling 2014]. In the latter case, there still is state (because the exposed data has to be held somewhere), but the entire state management is outsourced to a backend system. Define the quality characteristics of the new endpoint and its operation, covering transactionality, idempotence, access control, accountability, and consistency:

Introduce access/modification control policies. API KEYS are a simple way of identifying and authorizing clients, and more advanced security solutions are also available.

Protect the concurrent data access by applying an optimistic or a pessimistic locking strategy from the database and concurrent programming communities. Design coordination policies.

Implement consistency-preserving checks, which may support “Strict Consistency” or “Eventual Consistency” [Fehling 2014].
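One way to realize the optimistic locking option is a version number that clients echo back with each update. The following Java sketch shows the idea with an in-memory store; the class names, the version field, and the exception type are hypothetical, and a real implementation would typically delegate such checks to a database or use HTTP conditional requests.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical versioned representation of the data entity.
record VersionedCustomer(String id, String address, long version) {}

class StaleVersionException extends RuntimeException {
    StaleVersionException(String message) { super(message); }
}

class CustomerHolderSketch {
    private final Map<String, VersionedCustomer> store = new ConcurrentHashMap<>();

    // STATE CREATION OPERATION: new entities start at version 1.
    VersionedCustomer create(String id, String address) {
        VersionedCustomer created = new VersionedCustomer(id, address, 1);
        if (store.putIfAbsent(id, created) != null) {
            throw new IllegalStateException("duplicate id: " + id);
        }
        return created;
    }

    // RETRIEVAL OPERATION: reads the entity without changing it.
    Optional<VersionedCustomer> read(String id) {
        return Optional.ofNullable(store.get(id));
    }

    // STATE TRANSITION OPERATION: succeeds only if the client has seen the
    // latest version; compute() runs the check and the update atomically.
    VersionedCustomer changeAddress(String id, String newAddress, long expectedVersion) {
        return store.compute(id, (key, current) -> {
            if (current == null) {
                throw new IllegalArgumentException("unknown id: " + id);
            }
            if (current.version() != expectedVersion) {
                throw new StaleVersionException("expected version " + expectedVersion
                        + " but found " + current.version());
            }
            return new VersionedCustomer(id, newAddress, current.version() + 1);
        });
    }
}
```

A client whose update is rejected re-reads the entity, merges its change, and retries. A pessimistic strategy would instead lock the entity before the client starts editing it.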

Five patterns in our language refine this general solution to data-oriented API endpoint modeling: OPERATIONAL DATA HOLDER, MASTER DATA HOLDER, REFERENCE DATA HOLDER, DATA TRANSFER RESOURCE, and LINK LOOKUP RESOURCE.

Example

The Customer Core microservice in the Lakeside Mutual sample exposes master data. The semantics and its operations (for example, changeAddress(...)) of this service are data- rather than action-oriented (the service is consumed by other microservices that realize the PROCESSING RESOURCE pattern). Hence, it exposes a CustomerInformationHolder endpoint, realized as an HTTP resource:

    @RestController
    @RequestMapping("/customers")
    public class CustomerInformationHolder {

        @Operation(summary = "Change a customer's address.")
        @PutMapping(value = "/{customerId}/address")
        public ResponseEntity<AddressDto> changeAddress(
                @Parameter(description = "the customer's unique id", required = true)
                @PathVariable CustomerId customerId,
                @Parameter(description = "the customer's new address", required = true)
                @Valid @RequestBody AddressDto requestDto) {
            [...]
        }

        @Operation(summary = "Get a specific set of customers.")
        @GetMapping(value = "/{ids}")
        public ResponseEntity<CustomersResponseDto> getCustomer(
                @Parameter(description = "a comma-separated list of customer ids", required = true)
                @PathVariable String ids,
                @Parameter(description = "a comma-separated list of the fields "
                        + "that should be included in the response", required = false)
                @RequestParam(value = "fields", required = false, defaultValue = "")
                String fields) {
            [...]
        }
    }

This CustomerInformationHolder endpoint exposes two operations: a read-write STATE TRANSITION OPERATION, changeAddress (HTTP PUT), and a read-only RETRIEVAL OPERATION, getCustomer (HTTP GET).

Discussion

INFORMATION HOLDER RESOURCES resolve their design forces as follows:

Modeling approach and its impact on coupling: Introducing INFORMATION HOLDER RESOURCES often is the consequence of a data-centric approach to API modeling. Processing will typically shift to the consumer of the INFORMATION HOLDER RESOURCE. The INFORMATION HOLDER RESOURCE then is solely responsible for acting as a reliable source of linked data. The resource may serve as a relationship sink, source, or both.

It depends on the scenario at hand and the project goals/product vision whether such an approach is adequate. While activity- or process-orientation is often preferred, it simply is not natural in a number of scenarios; examples include digital archives, IT infrastructure inventories, and server configuration repositories. Data-oriented analysis and design methods are well suited to identify INFORMATION HOLDER endpoints but sometimes go too far, for instance, when tackling system behavior and logic.2

2. One of the classic cognitive biases is that every construction problem looks like a nail if you know how to use a hammer (and have one at hand). Analysis and design methods are tools made for specific purposes.

Quality attribute conflicts and trade-offs: Using an INFORMATION HOLDER RESOURCE requires carefully considering security, data protection, consistency, availability, and coupling implications. Any change to the INFORMATION HOLDER RESOURCE content, metadata, or representation formats must be controlled to avoid breaking consumers. Quality attribute trees can steer the pattern selection process.

Security: Not all API clients may be authorized to access each INFORMATION HOLDER RESOURCE in the same way. API KEYS, client authentication, and ABAC/RBAC help protect each INFORMATION HOLDER RESOURCE.

Data freshness versus consistency: Data consistency has to be preserved for concurrent access of multiple consumers. Likewise, clients must deal with the consequences of temporary outages, for instance, by introducing an appropriate caching and offline data replication and synchronization strategy. In practice, the decision between availability and consistency is not as binary and strict as the CAP theorem suggests, which is discussed by its original authors in a 12-year retrospective and outlook [Brewer 2012].

If several fine-grained INFORMATION HOLDERS appear in an API, many calls to operations might be required to realize a user story, and data quality is hard to ensure (because it becomes a shared, distributed responsibility). Consider hiding several of them behind any type of PROCESSING RESOURCE.

Compliance with architectural design principles: The introduction of INFORMATION HOLDER RESOURCE endpoints may break higher-order principles such as strict logical layering that forbids direct access to data entities from the presentation layer. It might be necessary to refactor the architecture [Zimmermann 2015]—or grant an explicit exception to the rule.

INFORMATION HOLDER RESOURCES have the reputation for increasing coupling and violating the information-hiding principle. A post in Michael Nygard’s blog calls for a responsibility-based strategy for avoiding pure INFORMATION HOLDER RESOURCES, which he refers to as “entity service anti-pattern.” The author recommends always evolving away from this pattern, because it creates high semantic and operational coupling, and rather “focus[ing] on behavior instead of data” (which we describe as PROCESSING RESOURCE) and “divid[ing] services by life cycle in a business process” [Nygard 2018b] (which we see as one of several service identification strategies). In our opinion, INFORMATION HOLDER RESOURCES do have their place both in service-oriented systems and in other API usage scenarios. However, any usage should be a conscious decision justified by the business and integration scenario at hand—because of the impact on coupling that is observed and criticized. For certain data, it might be better indeed not to expose it at the API level but hide it behind PROCESSING RESOURCES.

Related Patterns

“Information Holder” is a role stereotype in RDD [Wirfs-Brock 2002]. This general INFORMATION HOLDER RESOURCE pattern has several refinements that differ with regard to mutability, relationships, and instance lifetimes: OPERATIONAL DATA HOLDER, MASTER DATA HOLDER, and REFERENCE DATA HOLDER. The LINK LOOKUP RESOURCE pattern is another specialization; the lookup results may be other INFORMATION HOLDER RESOURCES. Finally, DATA TRANSFER RESOURCE holds temporary shared data owned by the clients. The PROCESSING RESOURCE pattern represents complementary semantics and therefore is an alternative to this pattern.

STATE CREATION OPERATIONS and RETRIEVAL OPERATIONS can typically be found in INFORMATION HOLDER RESOURCES, modeling CRUD semantics. Stateless COMPUTATION FUNCTIONS and read-write STATE TRANSITION OPERATIONS are also permitted in INFORMATION HOLDER RESOURCES but typically operate on a lower level of abstraction than those of PROCESSING RESOURCES.

Implementations of this pattern can be seen as the API counterpart to the “Repository” pattern in DDD [Evans 2003; Vernon 2013]. An INFORMATION HOLDER RESOURCE is often implemented with one or more “Entities” from DDD, possibly grouped into an “Aggregate.” Note that no one-to-one correspondence between INFORMATION HOLDER RESOURCE and Entities should be assumed because the primary job of the tactical DDD patterns is to organize the business logic layer of a system, not a (remote) API “Service Layer” [Fowler 2002].

More Information

Chapter 8 in Process-Driven SOA is devoted to business object integration and dealing with data [Hentrich 2011]. “Data on the Outside versus Data on the Inside” by Pat Helland explains the differences between data management on the API and the API implementation level [Helland 2005].

“Understanding RPC vs REST for HTTP APIs” [Sturgeon 2016a] covers the differences between INFORMATION HOLDER RESOURCES and PROCESSING RESOURCES in the context of an RPC and REST comparison.

Various consistency management patterns exist. “Eventually Consistent” by Werner Vogels, the Amazon Web Services CTO, addresses this topic [Vogels 2009].

Pattern: OPERATIONAL DATA HOLDER

When and Why to Apply

A domain model, an entity-relationship diagram, or a glossary of key business concepts and their interconnections has been specified; it has been decided to expose some of the data entities contained in these specifications in an API by way of INFORMATION HOLDER RESOURCE instances.

The data specification unveils that the entity lifetimes and/or update cycles differ significantly (for instance, from seconds, minutes, and hours to months, years, and decades) and that the frequently changing entities participate in relationships with slower-changing ones. For instance, fast-changing data may act mostly as link sources, while slow-changing data appears mostly as link targets.3

3. The context of this pattern is similar to that of its sibling pattern MASTER DATA HOLDER. It acknowledges and points out that the lifetimes and relationship structure of these two types of data differ (in German: Stammdaten vs. Bewegungsdaten; see [Ferstl 2006; White 2006]).

How can an API support clients that want to create, read, update, and/or delete instances of domain entities that represent operational data that is rather short-lived, changes often during daily business operations, and has many outgoing relations?

Several desired qualities are worth calling out, in addition to those applying to any kind of INFORMATION HOLDER RESOURCE.

Processing speed for content read and update operations: Depending on the business context, API services dealing with operational data must be fast, with low response time for both reading and updating its current state.

Business agility and schema update flexibility: Depending on the business context (for example, when performing A/B testing with parts of the live users), API endpoints dealing with operational data must also be easy to change, especially when the data definition or schema evolves.

Conceptual integrity and consistency of relationships: The created and modified operational data must meet high accuracy and quality standards if it is business-critical. For instance, system and process assurance audits inspect financially relevant business objects such as invoices and payments in enterprise applications [Julisch 2011]. Operational data might be owned, controlled, and managed by external parties such as payment providers; it might have many outgoing relations to similar data and longer-lived, less frequently changing master data. Clients expect that the referred entities will be correctly accessible after the interaction with an operational data resource has successfully completed.

One could think of treating all data equally to promote solution simplicity, irrespective of its lifetime and relationship characteristics. However, such a unified approach might yield only a mediocre compromise that meets all of the preceding needs somehow but does not excel with regard to any of them. If, for instance, operational data is treated as master data, one might end up with an overengineered API with regard to consistency and reference management that also leaves room for improvement with regard to processing speed and change management.

How It Works

Tag an INFORMATION HOLDER RESOURCE as OPERATIONAL DATA HOLDER and add API operations to it that allow API clients to create, read, update, and delete its data often and fast.

Optionally, expose additional operations to give the OPERATIONAL DATA HOLDER domain-specific responsibilities. For instance, a shopping basket might offer fee and tax computations, price update notifications, discounting, and other state-transitioning operations.

The request and response messages of such OPERATIONAL DATA HOLDERS often take the form of PARAMETER TREES; however, the other types of request and response message structure can also be found in practice. One must be aware of relationships with master data and be cautious when including master data in requests to and responses from OPERATIONAL DATA HOLDERS via EMBEDDED ENTITY instances. It is often better to separate the two types in different endpoints and realize the cross references via LINKED INFORMATION HOLDER instances.

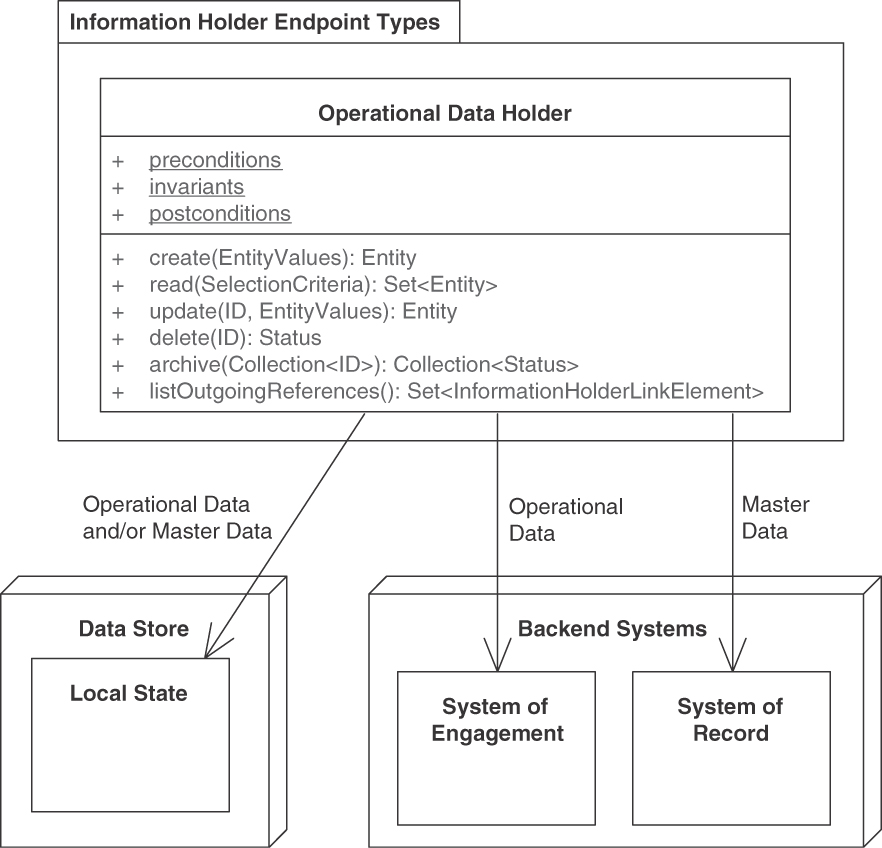

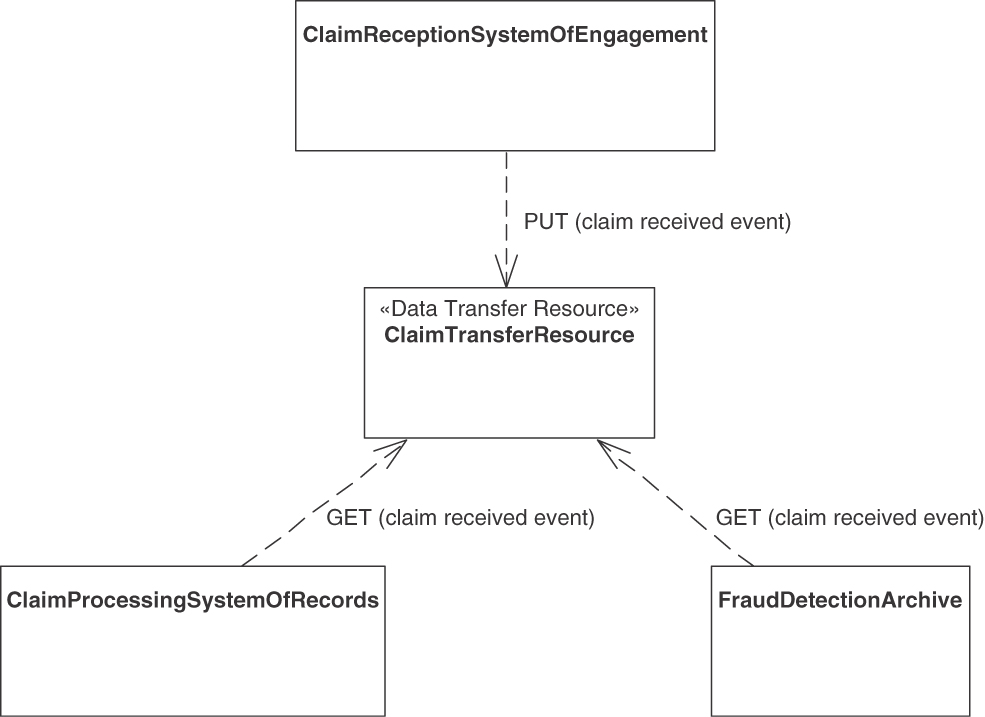

Figure 5.5 sketches the solution. A System of Engagement is used to support everyday business and typically holds operational data; related master data can be found in a System of Record. The API implementation might also keep its own Data Stores in addition to integrating with such backend systems, which may hold both operational data and master data.

Figure 5.5 OPERATIONAL DATA HOLDER: Operational data has a short to medium lifetime and may change a lot during daily business. It may reference master data and other operational data

OPERATIONAL DATA HOLDERS accessed from multiple concurrent clients should provide transactional guarantees in terms of isolation and atomicity so that multiple clients may attempt to access the same data items at the same time while their state remains consistent. If failures occur during the interaction with a specific client, the state of the OPERATIONAL DATA HOLDER should be reverted to the last known consistent state. Likewise, update or creation requests being retried should be de-duplicated when not idempotent. Closely related OPERATIONAL DATA HOLDERS should also be managed and evolved together to assure their clients that references across them will remain valid. The API should provide atomic update or delete operations across all related OPERATIONAL DATA HOLDERS.
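De-duplication of retried creation requests is often realized with a client-supplied request identifier. The Java sketch below illustrates this under simplifying assumptions: the class, the requestId parameter, and the in-memory maps are hypothetical, and persistence as well as expiry of remembered request identifiers are left out.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

class OrderHolderSketch {
    // Remembers which order was created for which client request.
    private final Map<String, Long> orderIdByRequestId = new ConcurrentHashMap<>();
    private final Map<Long, String> itemByOrderId = new ConcurrentHashMap<>();
    private final AtomicLong nextOrderId = new AtomicLong(1);

    // Creating an order is not idempotent by nature. The client-supplied
    // requestId (a hypothetical parameter) lets the provider recognize a
    // retry and return the order created by the first attempt.
    long createOrder(String requestId, String item) {
        return orderIdByRequestId.computeIfAbsent(requestId, key -> {
            long orderId = nextOrderId.getAndIncrement();
            itemByOrderId.put(orderId, item);
            return orderId;
        });
    }

    int orderCount() {
        return itemByOrderId.size();
    }
}
```

A client that does not receive a response can safely resend the request with the same identifier; the second call returns the identifier of the existing order rather than creating a duplicate.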

OPERATIONAL DATA HOLDERS are good candidates for event sourcing [Stettler 2019] whereby all state changes are logged, making it possible for API clients to access the entire history of state changes for the specific OPERATIONAL DATA HOLDER. This may increase the API complexity, as consumers may want to refer to or retrieve arbitrary snapshots from the past as opposed to simply querying the latest state.
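To make the event sourcing idea more concrete, the following Java sketch logs quantity changes of a shopping basket and rebuilds the state at any point in history by replaying the log. The event type and class names are hypothetical, and the sketch ignores persistence and concurrency.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical event: the quantity of one basket item changed by delta.
record ItemQuantityChanged(String item, int delta) {}

class BasketEventLogSketch {
    // Append-only log of all state changes.
    private final List<ItemQuantityChanged> events = new ArrayList<>();

    void append(ItemQuantityChanged event) {
        events.add(event);
    }

    int eventCount() {
        return events.size();
    }

    // Replays the first upTo events to rebuild the quantity of an item as
    // it was at that point; pass eventCount() for the latest state.
    int quantityOf(String item, int upTo) {
        int quantity = 0;
        for (ItemQuantityChanged event : events.subList(0, upTo)) {
            if (event.item().equals(item)) {
                quantity += event.delta();
            }
        }
        return quantity;
    }
}
```

The upTo parameter hints at the additional API complexity mentioned above: once history is exposed, operations need a way to address a position in it.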

Example

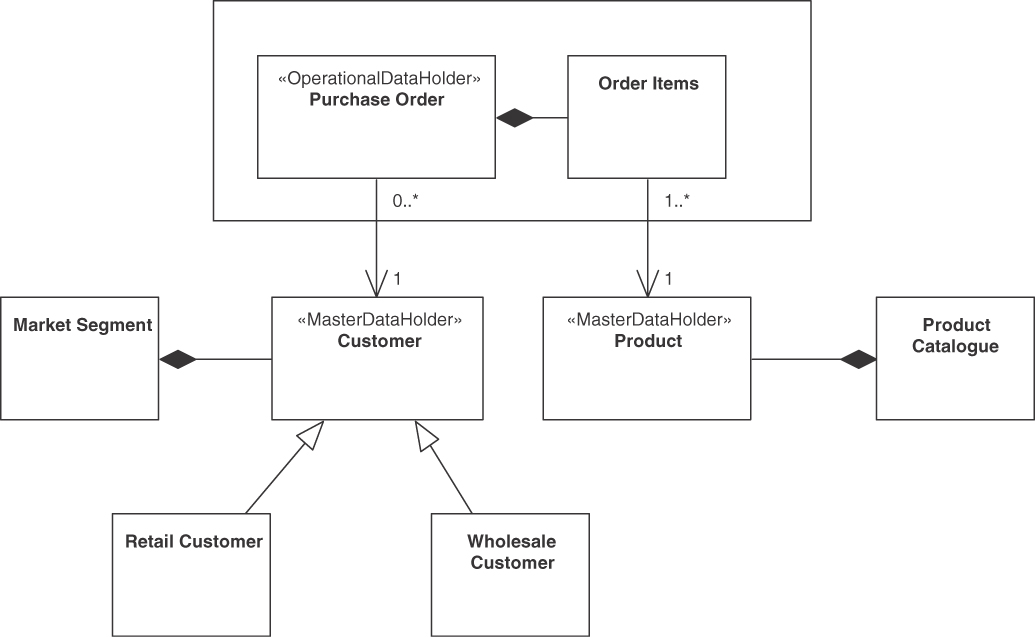

In an online shop, purchase orders and order items qualify as operational data; the ordered products and the customers placing an order meet the characteristics of master data. Hence, these domain concepts are typically modeled as different “Bounded Context” instances (in DDD) and exposed as separate services, as shown in Figure 5.6.

Figure 5.6 Online shop example: OPERATIONAL DATA HOLDER (Purchase Order) and MASTER DATA HOLDERS (Customer, Product) and their relations

Lakeside Mutual, our sample application from the insurance domain, manages operational data such as claims and risk assessments that are exposed as Web services and REST resources (see Figure 5.7).

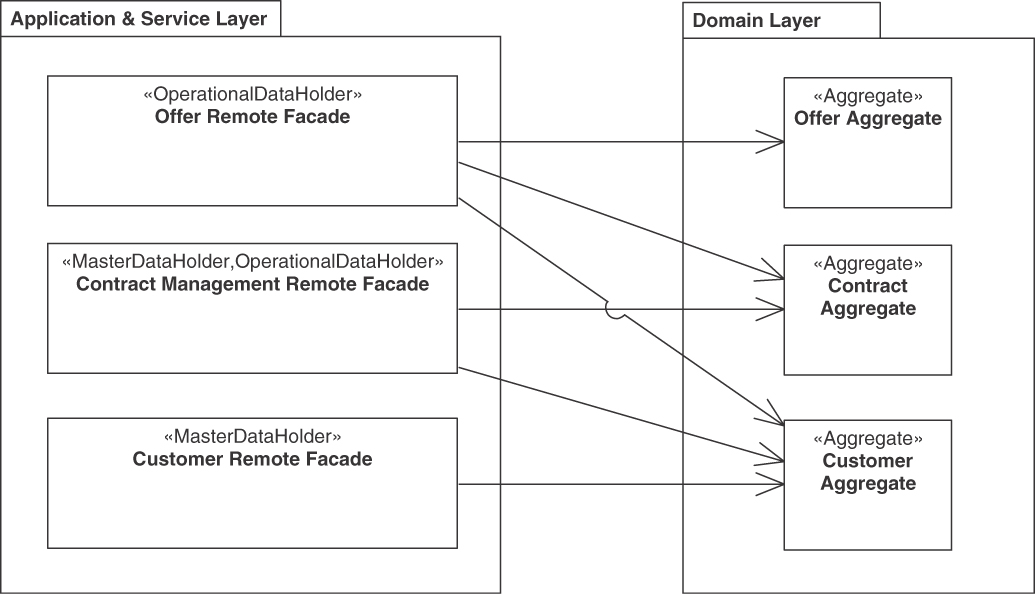

Figure 5.7 Examples of combining OPERATIONAL DATA HOLDER and MASTER DATA HOLDER: Offers reference contracts and customers, contracts reference customers. In this example, the remote facades access multiple aggregates isolated from each other. The logical layer names come from [Evans 2003] and [Fowler 2002]

Discussion

The pattern primarily serves as a “marker pattern” in API documentation, helping to make technical interfaces “business-aligned,” which is one of the SOA principles and microservices tenets [Zimmermann 2017].

Sometimes even operational data is kept for a long time: in a world of big data analytics and business intelligence insights, operational data is often archived for analytical processing, such as in data marts, data warehouses, or semantic data lakes.

The fewer inbound dependencies an OPERATIONAL DATA HOLDER has, the easier it is to update. A limited lifetime of data and data definitions makes API evolution less challenging; for instance, backward compatibility and integrity management become less of an issue. It might even be possible to rewrite OPERATIONAL DATA HOLDERS rather than maintain older versions of them [Pautasso 2017a]. Relaxing their consistency properties from strict to eventual [Fehling 2014] can improve availability.

The consistency and availability management of OPERATIONAL DATA HOLDERS may prioritize the conflicting requirements differently than MASTER DATA HOLDERS (depending on the domain and scenario). Business agility, schema update flexibility, and processing speed are determined by the API implementation.

The distinction between master data and operational data is somewhat subjective and dependent on application context; data that is needed only temporarily in one application might be a core asset in another one. For instance, think about purchases in an online shop. While the shopper cares about the order only until it is delivered and paid for (unless there is a warranty case or the customer wants to return the good or repeat the same order in the future), the shop provider will probably keep all details forever to be able to analyze buying behavior over time (customer profiling, product recommendations, and targeted advertisement).

The OPERATIONAL DATA HOLDER pattern can help to satisfy regulatory requirements expressed as compliance controls. An example of such a requirement and compliance control is “all purchase orders reference a customer that actually exists in a system of record and in the real world.” Enforcing this rule prevents (or finds) cases of fraud [Julisch 2011].

Related Patterns

Longer-living information holders with many incoming references are described by the patterns MASTER DATA HOLDER (mutable) and REFERENCE DATA HOLDER (immutable via the API). An alternative, less data- and more action-oriented pattern is PROCESSING RESOURCE. All operation responsibilities patterns, including STATE CREATION OPERATION and STATE TRANSITION OPERATION, can be used in OPERATIONAL DATA HOLDER endpoints.

Patterns from Chapters 4, 6, and 7 are applied when designing the request and response messages of the operations of the OPERATIONAL DATA HOLDER. Their suitability heavily depends on the actual data semantics. For instance, entering items into a shopping basket might expect a PARAMETER TREE and return a simple success flag as an ATOMIC PARAMETER. The checkout activity then might require multiple complex parameters (PARAMETER FOREST) and return the order number and the expected delivery date in an ATOMIC PARAMETER LIST. The deletion of operational data can be triggered by sending a single ID ELEMENT and might return a simple success flag and/or ERROR REPORT representation. PAGINATION slices responses to requests for large amounts of operational data.

The “Data Type Channel” pattern in [Hohpe 2003] describes how to organize a messaging system by message semantics and syntax (such as query, price quote, or purchase order).

OPERATIONAL DATA HOLDERS referencing other OPERATIONAL DATA HOLDERS may choose to include this data in the form of an EMBEDDED ENTITY. By contrast, references to MASTER DATA HOLDERS often are not included/embedded but externalized via LINKED INFORMATION HOLDER references.

More Information

The notion of operational (or transactional) data has its roots in the database and information integration community and in business informatics (Wirtschaftsinformatik) [Ferstl 2006].

Pattern: MASTER DATA HOLDER

When and Why to Apply

A domain model, an entity-relationship diagram, a glossary, or a similar dictionary of key application concepts has been specified; it has been decided to expose some of these data entities in an API by way of INFORMATION HOLDER RESOURCES.

The data specification unveils that the lifetimes and update cycles of these INFORMATION HOLDER RESOURCE endpoints differ significantly (for instance, from seconds, minutes, and hours to months, years, and decades). Long-living data typically has many incoming relationships, whereas shorter-living data often references long-living data. The data access profiles of these two types of data differ substantially.4

4. The context of this pattern is similar to that of its alternative pattern OPERATIONAL DATA HOLDER. It emphasizes that the lifetimes and relationship structure of these two types of data differ. Here we are interested in master data, often contrasted with operational data, also called transactional data (in German, Stammdaten vs. Bewegungsdaten; see [Ferstl 2006; White 2006]).

How can I design an API that provides access to master data that lives for a long time, does not change frequently, and will be referenced from many clients?

In many application scenarios, data that is referenced in multiple places and lives long has high data quality and data protection needs.

Master data quality: Master data should be accurate because it is used directly, indirectly, and/or implicitly in many places, from daily business to strategic decision making. If it is not stored and managed in a single place, uncoordinated updates, software bugs, and other unforeseen circumstances may lead to inconsistencies and other quality issues that are hard to detect. If it is stored centrally, access to it might be slow due to overhead caused by access contention and backend communication.

Master data protection: Irrespective of its storage and management policy, master data must be well protected with suitable access controls and auditing policies, as it is an attractive target for attacks, and the consequences of data breaches can be severe.

Data under external control: Master data may be owned and managed by dedicated systems, often purchased by (or developed in) a separate organizational unit. For instance, there is an application genre of master data management systems specializing in product or customer data. In practice, external hosting (strategic outsourcing) of these specialized master data management systems happens and complicates system integration because more stakeholders are involved in their evolution.

Data ownership and audit procedures differ from those of other types of data. Master data collections are assets with a monetary value appearing in the balance sheets of enterprises. Therefore, their definitions and interfaces often are hard to influence and change; due to external influences on its life cycle, master data may evolve at a different speed than operational data that references it.

One could think of treating all entities/resources equally to promote solution simplicity, irrespective of their lifetime and relationship patterns. However, such an approach runs the risk of not satisfactorily addressing the concerns of stakeholders, such as security auditors, data owners, and data stewards. Hosting providers and, last but not least, the real-world correspondents of the data (for instance, customers and internal system users) are other key stakeholders of master data whose interests might not be fulfilled satisfactorily by such an approach.

How It Works

Mark an INFORMATION HOLDER RESOURCE to be a dedicated MASTER DATA HOLDER endpoint that bundles master data access and manipulation operations in such a way that the data consistency is preserved and references are managed adequately. Treat delete operations as special forms of updates.

Optionally, offer other life-cycle events or state transitions in this MASTER DATA HOLDER endpoint. Also optionally, expose additional operations to give the MASTER DATA HOLDER domain-specific responsibilities. For instance, an archive might offer time-oriented retrieval, bulk creations, and purge operations.

A MASTER DATA HOLDER is a special type of INFORMATION HOLDER RESOURCE. It typically offers operations to look up information that is referenced elsewhere. A MASTER DATA HOLDER also offers operations to manipulate the data via the API (unlike a REFERENCE DATA HOLDER). It must meet the security and compliance requirements for this type of data.

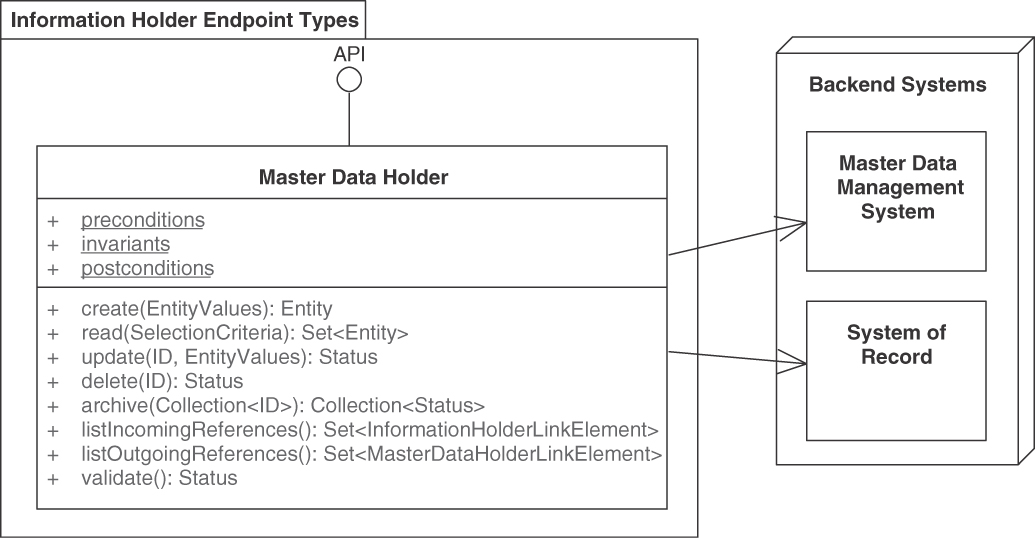

Figure 5.8 shows its specific design elements.

Figure 5.8 MASTER DATA HOLDER. Master data lives long and is frequently referenced by other master data and by operational data. It therefore faces specific quality and consistency requirements

The request and response messages of MASTER DATA HOLDERS often take the form of PARAMETER TREES. However, more atomic types of request and response message structure can also be found in practice. Master data creation operations typically receive a simple to moderately complex PARAMETER TREE because master data might be complex but is often created in one go, for instance, when being entered completely by a user in a form (such as an account creation form). They usually return an ATOMIC PARAMETER or an ATOMIC PARAMETER LIST to report the ID ELEMENT or LINK ELEMENT that identifies the master data entity uniquely/globally and to report whether or not the creation request was successful (for instance, using the ERROR REPORT pattern). Reasons for failure can be duplicate keys, violations of business rules and other invariants, or internal server-side processing errors (for instance, temporary unavailability of backend systems).
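The creation contract described above might be sketched as follows in Python. This is a minimal illustration under stated assumptions: the class `CustomerMasterDataHolder`, the `ErrorReport` structure, the error codes, and the duplicate check on `contact.email` are hypothetical and are not taken from Lakeside Mutual or any other implementation.

```python
import uuid
from dataclasses import dataclass


@dataclass
class ErrorReport:
    """Hypothetical ERROR REPORT structure: a code plus a readable message."""
    code: str
    message: str


class CustomerMasterDataHolder:
    """In-memory stand-in for a MASTER DATA HOLDER endpoint (illustrative only)."""

    def __init__(self):
        self._customers = {}  # customer id -> nested customer data

    def create_customer(self, customer: dict):
        """Accept a nested structure; return (customer_id, None) or (None, ErrorReport)."""
        email = customer.get("contact", {}).get("email")
        if not email:
            # Violation of an (assumed) business rule
            return None, ErrorReport("INVALID_INPUT", "contact.email is required")
        if any(c["contact"]["email"] == email for c in self._customers.values()):
            # Duplicate key: the email is assumed to be unique per customer
            return None, ErrorReport("DUPLICATE_KEY", f"customer with email {email} exists")
        customer_id = str(uuid.uuid4())  # plays the role of the ID ELEMENT
        self._customers[customer_id] = customer
        return customer_id, None
```

The request is a small tree (a customer with a nested contact structure), whereas the response is flat: either an identifier or an error report.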

A master data update may come in two forms:

Coarse-grained full update operation that replaces most or all attributes in a master data entity such as customer or product. This form corresponds to the HTTP PUT verb.

Fine-grained partial update operation that updates only one or a few of the attributes in a master data entity, for instance, the address of a customer (but not its name) or the price of a product (but not its supplier and taxation rules). In HTTP, the verb PATCH has such semantics.
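The difference between the two update forms can be illustrated with a short Python sketch. The function names and the dictionary-based store are assumptions made for this example; a real endpoint would add validation, concurrency control, and persistence.

```python
def full_update(store: dict, entity_id: str, new_state: dict) -> dict:
    """Replace the whole entity (PUT-like semantics)."""
    if entity_id not in store:
        raise KeyError(entity_id)
    store[entity_id] = dict(new_state)
    return store[entity_id]


def partial_update(store: dict, entity_id: str, changes: dict) -> dict:
    """Change only the given attributes (PATCH-like semantics)."""
    if entity_id not in store:
        raise KeyError(entity_id)
    store[entity_id] = {**store[entity_id], **changes}
    return store[entity_id]


customers = {"c1": {"name": "Ada Lovelace", "address": "1 Main St"}}
# Only the address changes; the name is left untouched.
after_patch = dict(partial_update(customers, "c1", {"address": "2 Side St"}))
# The entity is replaced; attributes missing from the new state are gone.
after_put = dict(full_update(customers, "c1", {"name": "Ada King"}))
```

Note that in this sketch the full update drops attributes that the client omits, which is one reason why fine-grained updates are often more convenient for clients that know only part of the entity.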

Read access to master data is often performed via RETRIEVAL OPERATIONS that offer parameterized search-and-filter query capabilities (possibly expressed declaratively).
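A parameterized search-and-filter operation might look like the following sketch. The function name, the filter parameters `city` and `name_prefix`, and the `limit` default are hypothetical; they merely show how optional query parameters narrow a result set.

```python
def find_customers(customers, *, city=None, name_prefix=None, limit=10):
    """Return up to `limit` customers matching all filters that are set."""
    matches = [
        c for c in customers
        if (city is None or c["city"] == city)
        and (name_prefix is None or c["name"].startswith(name_prefix))
    ]
    return matches[:limit]
```

Filters that are not supplied are ignored, so a call without any filter returns the first `limit` entries.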

Deletion might not be desired. If supported, delete operations on master data sometimes are complicated to implement due to legal compliance requirements. There is a risk of breaking a large number of incoming references when removing master data entirely. Hence, master data often is not deleted at all but is put into an immutable “archived” state in which updates are no longer possible. This also allows keeping audit trails and historic data manipulation journals; master data changes are often mission-critical and thus must be nonrepudiable. If deletion is indeed necessary (and this can be a regulatory requirement), the data may actually be hidden from (some or all) consumers but still preserved in an invisible state (unless yet another regulatory requirement forbids this).
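Treating deletion as a special form of update can be sketched as follows. The class and exception names, the two states, and the simple list-based journal are assumptions for illustration only; they do not show how hiding data from consumers or regulatory erasure would be handled.

```python
from enum import Enum


class State(Enum):
    ACTIVE = "active"
    ARCHIVED = "archived"


class ArchivedEntityError(Exception):
    """Raised when a client tries to change archived master data."""


class ArchivingMasterDataHolder:
    """Hypothetical holder that archives entities instead of removing them."""

    def __init__(self):
        self._entities = {}  # entity id -> {"data": dict, "state": State}
        self.journal = []    # append-only audit trail of (operation, entity id)

    def create(self, entity_id: str, data: dict) -> None:
        self._entities[entity_id] = {"data": dict(data), "state": State.ACTIVE}
        self.journal.append(("create", entity_id))

    def update(self, entity_id: str, changes: dict) -> None:
        entity = self._entities[entity_id]
        if entity["state"] is State.ARCHIVED:
            raise ArchivedEntityError(entity_id)  # archived data is immutable
        entity["data"].update(changes)
        self.journal.append(("update", entity_id))

    def delete(self, entity_id: str) -> None:
        """Delete is a state change: the entity stays, so references keep resolving."""
        self._entities[entity_id]["state"] = State.ARCHIVED
        self.journal.append(("archive", entity_id))

    def read(self, entity_id: str) -> dict:
        entity = self._entities[entity_id]
        return {**entity["data"], "state": entity["state"].value}
```

Because the entity is never physically removed in this sketch, operational data that references it can still resolve the reference after the delete call.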

In an HTTP resource API, the address (URI) of a MASTER DATA HOLDER resource can be widely shared among clients referencing it, which can access it via HTTP GET (a read-only method that supports caching). The creation and update calls make use of POST, PUT, and PATCH methods, respectively [Allamaraju 2010].

Please note that the use of the words create, read, update, and delete in the context of this pattern should not be taken to imply that CRUD-based API designs are the intended or only possible solution for realizing the pattern. Such designs quickly lead to chatty APIs with poor performance and scalability properties and introduce unwanted coupling and complexity. Beware of such API designs! Instead, follow an incremental approach during resource identification that aims to first identify well-scoped interface elements such as Aggregate roots in DDD, business capabilities, or business processes. Even larger formations, such as Bounded Contexts, may serve as starting points. In infrequent cases, domain Entities can also be considered to supply endpoint candidates. This inevitably will lead to MASTER DATA HOLDER designs that are semantically richer and more meaningful, with a more positive impact on the mentioned qualities. In DDD terms, we aim for a rich and deep domain model, as opposed to an anemic domain model [Fowler 2003]; this should be reflected in the API design. In many scenarios, it makes sense to identify and call out master data (as well as operational data) in the domain models so that later design decisions can use this information.

Example

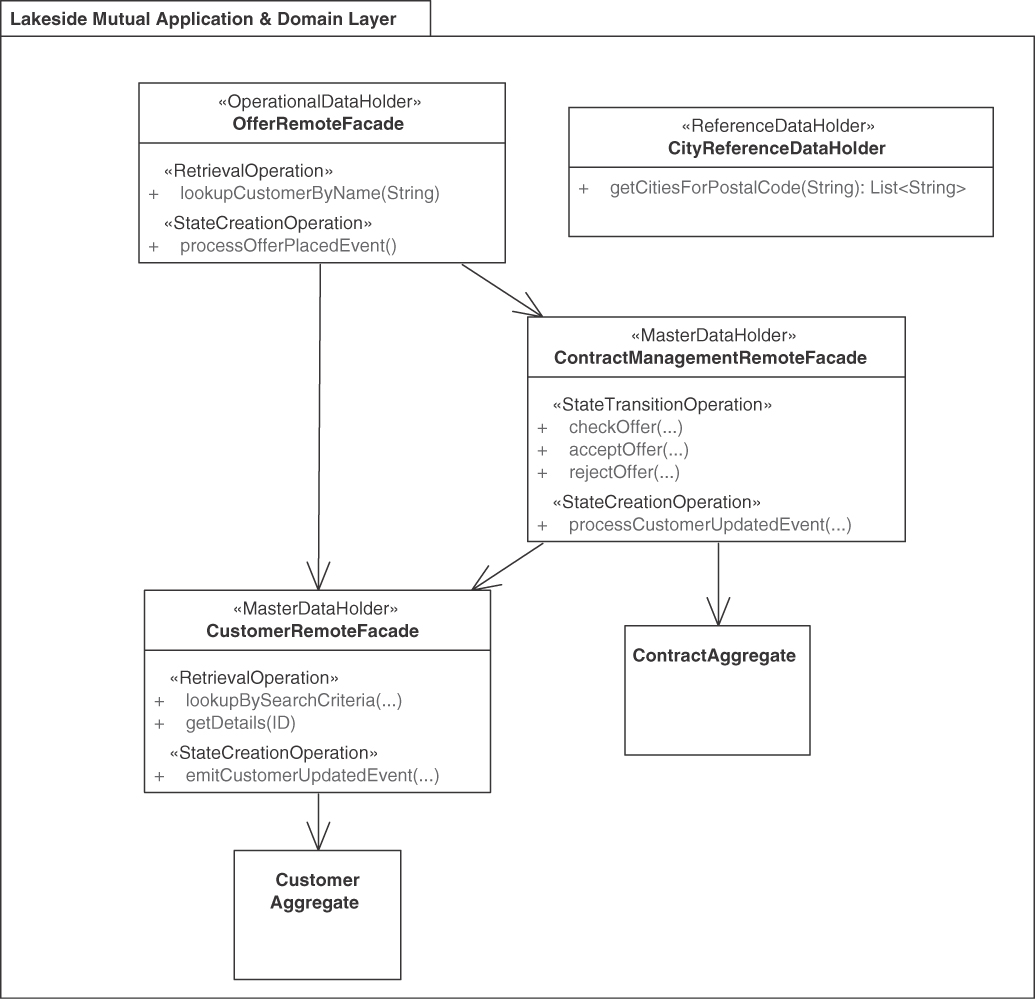

Lakeside Mutual, our sample application from the insurance domain, features master data such as customers and contracts that are exposed as Web services and REST resources, thus applying the MASTER DATA HOLDER pattern. Figure 5.9 illustrates two of these resources as remote facades.

Figure 5.9 Example of OPERATIONAL DATA HOLDER and MASTER DATA HOLDER interplay. Operational data references master data, but not vice versa. An application of the REFERENCE DATA HOLDER pattern is also shown

In this example, the remote facades (offer, contract, customer) access each other and two domain-layer aggregates in the API implementation.

Discussion

Tagging an API endpoint as a MASTER DATA HOLDER can help achieve the required focus on data quality and data protection.

Master data by definition has many inbound dependencies and might also have outbound ones. Since such data is often under external control, tagging an API endpoint as MASTER DATA HOLDER also helps to control and limit where such external dependency is introduced. This way, there will be only one API providing fresh access to a specific master data source in a consistent way.

Master data often is a valuable company asset that is key to success in the market (it might even turn a company into an acquisition target). Hence, when exposed as part of the API, it is particularly important to plan its future evolution in a roadmap that respects backward compatibility, considers digital preservation, and protects the data from theft and tampering.

Related Patterns

The MASTER DATA HOLDER pattern has two alternatives: REFERENCE DATA HOLDER (with data that is immutable via the API) and OPERATIONAL DATA HOLDER (exposing shorter-lived data with fewer incoming references).

More Information

The notion of master data versus operational data comes from literature in the database community (more specifically, information integration) and in business informatics (Wirtschaftsinformatik in German) [Ferstl 2006]. It plays an important role in online analytical processing (OLAP), data warehouses, and business intelligence (BI) efforts [Kimball 2002].

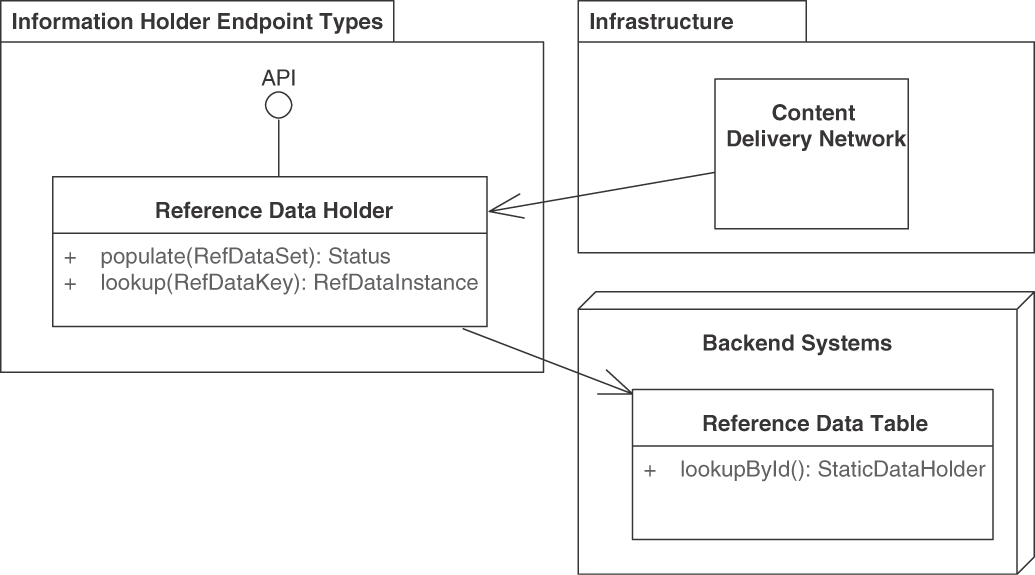

Pattern: REFERENCE DATA HOLDER

When and Why to Apply

A requirements specification unveils that some data is referenced in most if not all system parts, but it changes only very rarely (if ever); these changes are administrative in nature and are not caused by API clients operating during everyday business. Such data is called reference data. It comes in many forms, including country codes, zip codes, geolocations, currency codes, and units of measurement. Reference data often is represented by enumerations of string literals or numeric value ranges.

The data transfer representations in the request and response messages of API operations may either contain or point at reference data to satisfy the information needs of a message receiver.

How should data that is referenced in many places, lives long, and is immutable for clients be treated in API endpoints?

How can such reference data be used in requests to and responses from PROCESSING RESOURCES or INFORMATION HOLDER RESOURCES?

Two desired qualities are worth calling out (in addition to those applying to any kind of INFORMATION HOLDER RESOURCE).

Do not repeat yourself (DRY): Because reference data rarely changes (if ever), there is a temptation to simply hardcode it within the API clients or, if using a cache, retrieve it once and then store a local copy forever. Such designs work well in the short run and might not cause any imminent problems until the data and its definitions have to change.5 Because the DRY principle is violated, the change will impact every client, and if clients are out of reach, it may not be possible to update them.

5. For instance, it was sufficient to use two digits for calendar years through 1999.

Performance versus consistency trade-off for read access: Because reference data rarely changes (if at all), it may pay off to introduce a cache to reduce round-trip access response time and reduce traffic if it is referenced and read a lot. Such replication tactics have to be designed carefully so that they function as desired and do not make the end-to-end system overly complex and hard to maintain. For instance, caches should not grow too big, and replication has to be able to tolerate network partitions (outages). If the reference data does change (on schema or on content level), updates have to be applied consistently. Two examples are new zip codes introduced in a country and the transition from local currencies to the Euro (EUR) in many European countries.
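To make the trade-off tangible, here is a minimal sketch of a client-side cache with a time-to-live (TTL). The class name, the `fetch` callback, and the injectable `clock` parameter are assumptions for this example; the sketch deliberately ignores size limits, network partitions, and schema changes, which the text above names as additional design concerns.

```python
import time


class ReferenceDataCache:
    """Keeps a local copy of reference data and refreshes it after `ttl_seconds`."""

    def __init__(self, fetch, ttl_seconds, clock=time.monotonic):
        self._fetch = fetch        # callable that retrieves the full data set
        self._ttl = ttl_seconds
        self._clock = clock        # injectable so that expiry can be tested
        self._data = None
        self._expires_at = None    # None means nothing has been fetched yet

    def get(self):
        now = self._clock()
        if self._expires_at is None or now >= self._expires_at:
            # Cache miss or expired copy: one round trip to the provider
            self._data = self._fetch()
            self._expires_at = now + self._ttl
        return self._data
```

A long TTL reduces traffic and response times but delays the visibility of changes such as newly introduced zip codes; a short TTL does the opposite. Storing the copy forever corresponds to an infinite TTL.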

One could treat static and immutable reference data just like dynamic data that is both read and written. This works fine in many scenarios but misses opportunities to optimize the read access, for instance, via data replication in content delivery networks (CDNs), and might lead to unnecessary duplication of storage and computing efforts.

How It Works

Provide a special type of INFORMATION HOLDER RESOURCE endpoint, a REFERENCE DATA HOLDER, as a single point of reference for the static, immutable data. Provide read operations but no create, update, or delete operations in this endpoint.

Update the reference data elsewhere if required, by directly changing back-end assets or through a separate management API. Refer to the REFERENCE DATA HOLDER endpoint via LINKED INFORMATION HOLDERS.

The REFERENCE DATA HOLDER may allow clients to retrieve the entire reference data set so that they can keep a local copy that can be accessed multiple times. They may want to filter its content before doing so (for instance, to implement some auto-completion feature in an input form in a user interface). It is also possible to look up individual entries of the reference data only (for instance, for validation purposes). For example, a currency list can be copy-pasted all over the place (as it never changes), or it can be retrieved and cached from the REFERENCE DATA HOLDER API, as described here. Such an API can provide a complete enumeration of the list (to initialize and refresh the cache) or feature the ability to project/select the content (for instance, a list of European currency names), or allow clients to check whether some value is present in the list for client-side validation (“Does this currency exist?”).
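The three kinds of read operations mentioned in the currency example could be sketched like this. The class name, the operation names, and the `region` attribute are assumptions made for illustration, and the four entries are only a small sample of existing currency codes.

```python
from types import MappingProxyType

# Sample entries; the "region" attribute is an illustrative assumption.
_CURRENCIES = MappingProxyType({
    "CHF": {"name": "Swiss franc", "region": "Europe"},
    "EUR": {"name": "Euro", "region": "Europe"},
    "JPY": {"name": "Japanese yen", "region": "Asia"},
    "USD": {"name": "US dollar", "region": "Americas"},
})


class CurrencyReferenceDataHolder:
    """Read-only endpoint sketch: no create, update, or delete operations."""

    def list_all(self) -> dict:
        """Complete enumeration, for instance to initialize or refresh a cache."""
        return {code: dict(entry) for code, entry in _CURRENCIES.items()}

    def select(self, region: str) -> list:
        """Projection/selection: names of the currencies in one region."""
        return sorted(e["name"] for e in _CURRENCIES.values() if e["region"] == region)

    def exists(self, code: str) -> bool:
        """Validation support: does this currency code exist?"""
        return code in _CURRENCIES
```

The holder hands out copies only, so a client that modifies its local copy cannot change the reference data seen by other clients.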

Figure 5.10 sketches the solution.

Figure 5.10 REFERENCE DATA HOLDER. Reference data lives long but cannot be changed via the API. It is referenced often and in many places

The request and response messages of REFERENCE DATA HOLDERS often take the form of ATOMIC PARAMETERS or ATOMIC PARAMETER LISTS, for instance, when the reference data is unstructured and merely enumerates certain flat values.

Reference data lives long but hardly ever changes; it is referenced often and in many places. Hence, the operations of REFERENCE DATA HOLDERS may offer direct access to a reference data table. Such lookups can map a short identifier (such as a provider-internal surrogate key) to a more expressive, human-readable identifier and/or entire data set.

The pattern does not prescribe any type of implementation; for instance, a relational database might come across as an overengineered solution when managing a list of currencies; a file-based key-value store or indexed sequential access method (ISAM) files might be sufficient. Key-value stores such as Redis or a document-oriented NoSQL database such as CouchDB or MongoDB may also be considered.

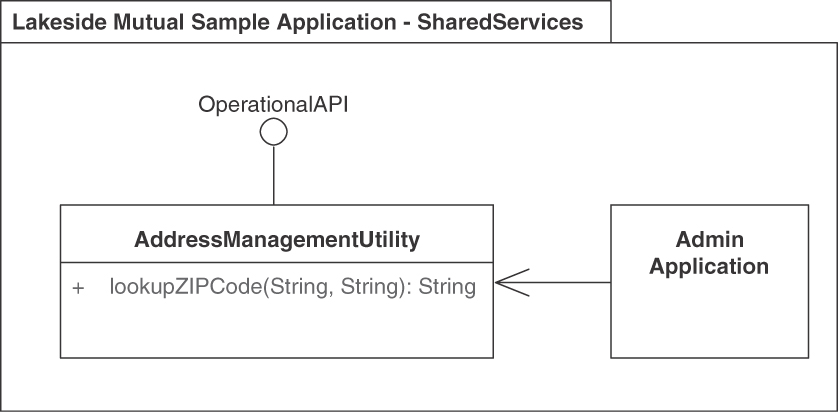

Example

Figure 5.11 shows an instance of the pattern that allows API clients to look up zip codes based on addresses, or vice versa.

Figure 5.11 REFERENCE DATA HOLDER: Zip code lookup

Discussion

The most common usage scenario for this pattern is the lookup of simple text data whose value ranges meet certain constraints (for example, country codes, currency codes, or tax rates).

Explicit REFERENCE DATA HOLDERS avoid unnecessary repetition. The purpose of a REFERENCE DATA HOLDER is to give a central point of reference for helping disseminate the data while keeping control over it. Read performance can be optimized; immutable data can be replicated rather easily (no risk of inconsistencies as long as it never changes).

Dedicated REFERENCE DATA HOLDERS have to be developed, documented, managed, and maintained. This effort will still be less than that required to upgrade all clients if such reference data gets hardcoded in them.

DRY: Clients no longer have to implement reference data management on their own, at the expense of introducing a dependency on a remote API. This positive effect can be viewed as a form of data normalization, as known from database design and information management.

Performance versus consistency trade-off for read access: The pattern hides the actual data behind the API and therefore allows the API provider to introduce proxies, caches, and read-only replicas behind the scenes. The only effect that is visible to the API clients is an improvement (if done right) in terms of quality properties such as response times and availability, possibly expressed in the SERVICE LEVEL AGREEMENT that accompanies the functional API contract.

A standalone REFERENCE DATA HOLDER sometimes turns out to cause more work and complexity than it adds value (in terms of data normalization and performance improvements). In such cases, one can consider merging the reference data with an already existing, more complex, and somewhat more dynamic MASTER DATA HOLDER endpoint in the API by way of an API refactoring [Stocker 2021a].

Related Patterns

The MASTER DATA HOLDER pattern is an alternative to REFERENCE DATA HOLDER. It also represents long-living data, but this data remains mutable via the API. OPERATIONAL DATA HOLDERS represent more ephemeral data.

The section “Message Granularity” in Chapter 7, “Refine Message Design for Quality,” features two related patterns, EMBEDDED ENTITY and LINKED INFORMATION HOLDER. Simple static data is often embedded (which eliminates the need for a dedicated REFERENCE DATA HOLDER) but can also be linked (with the link pointing at a REFERENCE DATA HOLDER).

More Information

“Data on the Outside versus Data on the Inside” introduces reference data in the broad sense of the word [Helland 2005]. Wikipedia provides links to inventories/directories of reference data [Wikipedia 2022b].

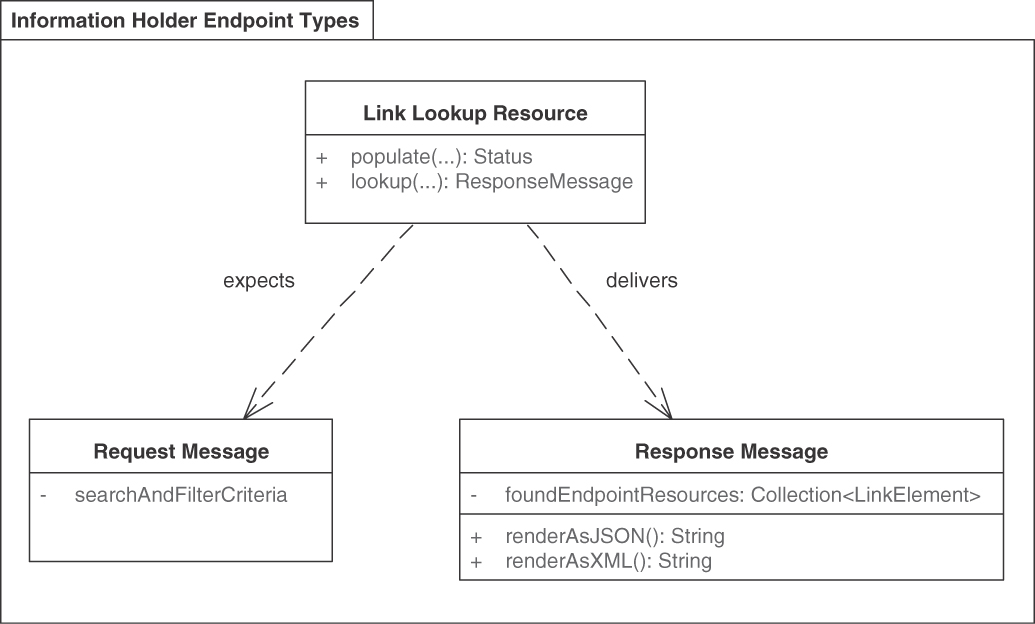

Pattern: LINK LOOKUP RESOURCE

When and Why to Apply

The message representations in request and response messages of an API operation have been designed to meet the information needs of the message receivers. To do so, these messages may contain references to other API endpoints (such as INFORMATION HOLDER RESOURCES and/or PROCESSING RESOURCES) in the form of LINK ELEMENTS. Sometimes it is not desirable to expose such endpoint addresses to all clients directly because this adds coupling and harms location and reference autonomy.

How can message representations refer to other, possibly many and frequently changing, API endpoints and operations without binding the message recipient to the actual addresses of these endpoints?

Following are two reasons to avoid an address coupling between communication participants: