Chapter 8

Maintain and monitor SQL Server

Previous chapters covered the importance and logistics of database backups, but what else do you need to do on a regular basis to maintain a healthy SQL Server?

This chapter lays the foundation for the what and why of Microsoft SQL Server monitoring, based on dynamic management objects (DMOs), Database Consistency Checker (DBCC) commands, Extended Events (which replace Profiler/trace), and other tools provided by Microsoft.

Beyond simply setting up these tools, this chapter reviews what to look for on SQL Server instances on Windows and Linux, as well as SQL monitoring solutions in the Azure portal.

A DBA has a lot to monitor in their databases: corrupt data files, unused or poorly maintained indexes and statistics, properly sized data files, and baselined performance metrics, just to start. This chapter covers these topics and more.

All sample scripts in this book are available for download at https://MicrosoftPressStore.com/SQLServer2022InsideOut/downloads.

Detect, prevent, and respond to database corruption

After database backups, the second most important task concerning database integrity is proper configuration to prevent—and monitoring to mitigate—database corruption. A very large part of this is a proactive schedule of detection for rare cases when corruption occurs despite your best efforts. This isn’t a complicated topic and mostly revolves around configuring one setting and regularly running one command.

Set the database’s page verify option

For all databases, the page verify option should be CHECKSUM. CHECKSUM has been the default, and the superior, setting since SQL Server 2005.

If you still have databases whose page verify option is not CHECKSUM, you should change this setting immediately. The legacy NONE or TORN_PAGE_DETECTION options are a clear sign that a database has been moved over the years from a pre–SQL Server 2005 version. This setting is never changed automatically; you must change it yourself after restoring a database up to a new version of SQL Server.

Warning

Before making the change to the CHECKSUM page verify option, take a full backup!

If corruption is found with the newly enabled CHECKSUM setting, the database can drop into a SUSPECT state, in which it becomes inaccessible. It is entirely possible that changing a database from NONE or TORN_PAGE_DETECTION to CHECKSUM could result in the discovery of existing, even long-present database corruption.
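To find the databases that still need this change, you can query sys.databases, which exposes the setting as page_verify_option_desc. The following is a minimal sketch; DemoDb is a placeholder database name, and the ALTER DATABASE statement should run only after you have taken the full backup recommended in the warning.

```sql
-- List databases whose page verify option is not CHECKSUM
SELECT name, page_verify_option_desc
FROM sys.databases
WHERE page_verify_option_desc <> N'CHECKSUM';

-- After taking a full backup, switch a database (placeholder name) to CHECKSUM
ALTER DATABASE [DemoDb] SET PAGE_VERIFY CHECKSUM;
```

Existing pages do not receive a checksum until they are next written to disk, so pages that are never modified stay unprotected until an operation such as an index rebuild rewrites them.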

You should periodically run DBCC CHECKDB on all databases. This is a time-consuming but crucial process: run it at least once within each backup retention period, and consider it nearly as important as regular database backups.

The only reliable solution to database corruption is restoring from a known good backup.

For example, if you keep local backups around for one month, you should ensure that you perform a successful DBCC CHECKDB at least once per month, but more often is recommended. This ensures you will at least have a recovery point for uncorrupted, unchanged data, and a starting point for corrupted data fixes.
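Checking every database can be sketched as a simple loop over sys.databases. This is a minimal sketch, assuming it runs under a sysadmin login during a maintenance window; skipping tempdb and any database that is not ONLINE is a simplification chosen for this example, not a rule from this chapter. Chapter 9 covers proper scheduling and automation.

```sql
-- Sketch: run DBCC CHECKDB against every online database except tempdb
DECLARE @db sysname, @sql nvarchar(max);

DECLARE db_cursor CURSOR LOCAL FAST_FORWARD FOR
    SELECT name
    FROM sys.databases
    WHERE state_desc = N'ONLINE'
      AND name <> N'tempdb';

OPEN db_cursor;
FETCH NEXT FROM db_cursor INTO @db;

WHILE @@FETCH_STATUS = 0
BEGIN
    -- QUOTENAME protects against unusual characters in database names
    SET @sql = N'DBCC CHECKDB (' + QUOTENAME(@db) + N') WITH NO_INFOMSGS;';
    EXEC sys.sp_executesql @sql;
    FETCH NEXT FROM db_cursor INTO @db;
END;

CLOSE db_cursor;
DEALLOCATE db_cursor;
```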

The DBCC CHECKDB command covers other more granular database integrity check tasks, including DBCC CHECKALLOC, DBCC CHECKTABLE, and DBCC CHECKCATALOG, all of which are important and only rarely need to be run separately to split up the workload.

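Running DBCC CHECKDB with no other parameters performs an integrity check on the database of the current context. Options that go inside the parentheses, such as NOINDEX and the repair options, require you to specify a database name, a database ID, or 0 (for the current database); WITH options such as NO_INFOMSGS can be used either way.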

On large databases, DBCC CHECKDB is a resource-intensive operation (CPU, memory, and I/O) that can take hours and slows other user queries because of that resource consumption, so it should be run outside of business hours. To further limit its impact, consider specifying the MAXDOP option (more on that in a moment). You can track the progress of a DBCC CHECKDB operation (as well as backup and restore operations) by checking the value of sys.dm_exec_requests.percent_complete for the executing session.
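A minimal progress query might look like the following. The LIKE filters on the command column are an assumption about how you want to scope the results; estimated_completion_time is reported in milliseconds, so the sketch converts it to minutes.

```sql
-- Sketch: progress of running DBCC, BACKUP, and RESTORE commands
SELECT
      r.session_id
    , r.command
    , r.percent_complete
    , estimated_minutes_remaining = r.estimated_completion_time / 60000.0
    , r.start_time
FROM sys.dm_exec_requests AS r
WHERE r.command LIKE N'DBCC%'
   OR r.command LIKE N'BACKUP%'
   OR r.command LIKE N'RESTORE%';
```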

Here are some parameters worth noting:

NOINDEX. This can reduce the duration of the integrity check by skipping checks on nonclustered rowstore and columnstore indexes. It is not recommended.

Example usage:

DBCC CHECKDB (databasename, NOINDEX);

REPAIR_REBUILD. This performs only repairs that carry no possibility of data loss. However, its potential benefit is limited. You should run this only after considering other options, including a backup and restore, because although it might have some success, it is unlikely to result in a complete repair. It can also be very time consuming, because it may rebuild indexes based on attempted repair data. Like all repair options, it requires the database to be in SINGLE_USER mode.

Review the DBCC CHECKDB documentation at https://learn.microsoft.com/sql/t-sql/database-console-commands/dbcc-checkdb-transact-sql.

Example usage:

DBCC CHECKDB (databasename, REPAIR_REBUILD);

REPAIR_ALLOW_DATA_LOSS. You should run this only as a last resort to achieve a partial database recovery, because it can resolve errors by simply deallocating pages, potentially creating gaps in rows or columns. You must run this in SINGLE_USER mode, and you should run it in EMERGENCY mode. Review the DBCC CHECKDB documentation for a number of caveats, and do not execute this command casually.

Example usage (last resort only, not recommended!):

ALTER DATABASE WideWorldImporters SET EMERGENCY;
ALTER DATABASE WideWorldImporters SET SINGLE_USER;
DBCC CHECKDB ('WideWorldImporters', REPAIR_ALLOW_DATA_LOSS);
ALTER DATABASE WideWorldImporters SET MULTI_USER;

Note

A complete review of EMERGENCY mode and REPAIR_ALLOW_DATA_LOSS is detailed in this blog post by Microsoft’s original DBCC CHECKDB engineer, Paul Randal: http://sqlskills.com/blogs/paul/checkdb-from-every-angle-emergency-mode-repair-the-very-very-last-resort.

WITH NO_INFOMSGS. This suppresses informational status messages and returns only errors.

Example usage:

DBCC CHECKDB (databasename) WITH NO_INFOMSGS;

WITH ESTIMATEONLY. This estimates the amount of space required in tempdb for the CHECKDB operation.

Example usage:

DBCC CHECKDB (databasename) WITH ESTIMATEONLY;

WITH MAXDOP = n. Similar to limiting the maximum degree of parallelism in other areas of SQL Server, this option limits the CHECKDB operation’s parallelism, possibly extending duration but potentially reducing CPU utilization. SQL Server Enterprise edition supports parallel execution of the DBCC CHECKDB command, up to the server’s MAXDOP setting. Therefore, in Enterprise edition, consider MAXDOP = 1 to run the command single-threaded, or MAXDOP = 0 to override the other limits on maximum degree of parallelism and allow CHECKDB unlimited parallelism so it can potentially finish sooner. Outside of Enterprise and Developer editions of SQL Server, objects are not checked in parallel.

Example usage, combined with the aforementioned NO_INFOMSGS option to show multiple parameters:

DBCC CHECKDB (databasename) WITH NO_INFOMSGS, MAXDOP = 0;

You can see all the syntax options for CHECKDB, and those options that can be used together, at https://learn.microsoft.com/sql/t-sql/database-console-commands/dbcc-checkdb-transact-sql#syntax.

For more information on automating DBCC CHECKDB, see Chapter 9, “Automate SQL Server administration.”

Repair database data file corruption

Of course, the only real remedy to data corruption after it has happened is to restore from a backup that predates the corruption. The well-documented DBCC CHECKDB option for REPAIR_ALLOW_DATA_LOSS, discussed previously, should be a last resort.

It is possible to repair missing pages in clustered indexes by piecing together missing columns in nonclustered indexes. If you are fortunate enough that corruption is only in nonclustered indexes, you can simply rebuild those indexes to recover from corruption. However, in many cases, clustered index or system pages are corrupt, meaning the only option is to restore the database. It is also possible to recover from data corruption, admittedly a lucky endeavor that this author has benefited from, by identifying the objects reported by DBCC CHECKDB and performing index rebuild operations on them.

Finally, availability groups provide a built-in data-corruption detection and automatic repair capability by using uncorrupted data on one replica to replace inaccessible data on another.

For more information on this feature of availability groups, see Chapter 11, “Implement high availability and disaster recovery.”

Recover from database transaction log file corruption

In addition to following guidance in the previous chapter on the importance of backups, you can reconstitute a corrupted or lost database transaction log file by using the code that follows. A lost transaction log file could result in the loss of uncommitted data (or in the case of delayed durability tables, the loss of data that hasn’t been made durable in the log yet), but in the event of a disaster recovery involving the loss of the .ldf file with an intact .mdf file, this could be a valuable step.

It is possible to rebuild a blank transaction log file in a new file location for a database by using the following command:

ALTER DATABASE DemoDb SET EMERGENCY;
ALTER DATABASE DemoDb SET SINGLE_USER;
ALTER DATABASE DemoDb REBUILD LOG
ON (NAME = DemoDb_Log, FILENAME = 'F:\DATA\DemoDb_new.ldf');
ALTER DATABASE DemoDb SET MULTI_USER;

Note

Rebuilding a blank transaction log file using ALTER DATABASE … REBUILD LOG is not supported for databases containing a MEMORY_OPTIMIZED_DATA filegroup.
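The NAME value in REBUILD LOG must match the log file’s logical name. As a sketch, assuming the database is named DemoDb as in the preceding example, you can look up the logical and physical file names in sys.master_files:

```sql
-- Sketch: logical and physical file names for the DemoDb database
SELECT logical_name = name, physical_name, type_desc
FROM sys.master_files
WHERE database_id = DB_ID(N'DemoDb');
```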

Database corruption in Azure SQL Database

Like many other administrative concerns with a platform as a service (PaaS) database, integrity checks for Azure SQL Database are automated. Microsoft takes data integrity in its PaaS database offering very seriously and provides strong assurances of assistance and recovery for this product. Although corruption is rare, Azure engineering teams respond 24×7 globally to data-corruption reports. The Azure SQL Database engineering team details its response promises at https://azure.microsoft.com/blog/data-integrity-in-azure-sql-database/.

Note

While Azure SQL Managed Instance has many PaaS-like qualities, automated integrity checks are not one of them. You should set up maintenance plans to execute DBCC CHECKDB, index maintenance, and other maintenance topics discussed in this chapter for Azure SQL Managed Instance.

We discuss Azure SQL Managed Instance in detail in Chapter 18, “Provision Azure SQL Managed Instance.”

Maintain indexes and statistics

Index fragmentation occurs when insert, update, and delete activity in a table finds too little free space on a page, causing data to be split across pages. It can also happen when index pages fall out of logical order, resulting in inefficient scans. In other words, index fragmentation is the disorganization of rowstore data within the data files that SQL Server maintains. Removing fragmentation is really about minimizing the number of pages that queries must touch when they read or write data. Reducing fragmentation in database objects is vastly different from reducing fragmentation at the drive level and has little in common with the Windows Disk Defragmenter application. Although it doesn’t translate to page locations on disk, and has even less relevance on storage area networks (SANs), it does translate to the I/O activity required to retrieve data.

In performance terms, the higher the amount of fragmentation (easily measurable in dynamic management views, as discussed later), the more activity is required for accessing the same amount of data.

The causes of index fragmentation are writes. Our data would stay nice and tidy if applications would stop writing to it! Updates and deletes inevitably have a significant effect on clustered and nonclustered index fragmentation, and inserts can also cause fragmentation, depending on the clustered index design.

The information in this section is largely unchanged from previous versions of SQL Server and applies to SQL Server instances, databases in Azure SQL Database, Azure SQL Managed Instance, and even dedicated SQL pools in Azure Synapse Analytics (formerly known as Azure SQL Data Warehouse).

Change the fill factor when beneficial

Each rowstore index on disk-based objects has a numeric property called a fill factor that specifies the percentage of space to be filled with rowstore data in each leaf-level data page of the index when it is created or rebuilt. The instance-wide default fill factor is 100 percent, which is represented by the setting value 0, and means that each leaf-level data page will be filled with as much data as possible. A fill factor setting of 80 (percent) means that 20 percent of the space on each leaf-level data page will be intentionally left empty when the index is created or rebuilt. You can adjust this fill factor percentage for each index to manage the efficiency of data pages.

A non-default fill factor may help reduce the number of page splits, which occur when the Database Engine attempts to add a new row of data or update an existing row with more data to a page that does not have enough space to add a new row. In this case, the Database Engine will clear out space for the new row by moving a proportion of the old rows to a new page. A page split can be a time-consuming and resource-consuming operation, with many page splits possible during writes, and will lead to index fragmentation.

However, a non-default fill factor also increases the number of pages needed to store the same data, and therefore the number of reads needed for query operations. For example, a fill factor of 50 roughly doubles the number of leaf-level pages, and with it the space on the drive and the reads needed to access the data, compared to the default fill factor of 0.

In most workloads, data is read far more often than it is inserted, updated, or deleted. Indexes therefore benefit from a high or default fill factor, usually more than 80, because it is almost always more important to keep the number of reads to a manageable level than to minimize the resources needed to perform a page split. You can deal with index fragmentation by using the REBUILD or REORGANIZE commands, as discussed in the next section.

If the key value for an index is constantly increasing, such as an autoincrementing IDENTITY or SEQUENCE-populated column as the first key of a clustered index, new rows are added to the last page of the index, so there are no gaps to fill. For a table in which data is always inserted sequentially and never updated, changing the fill factor from the default may offer no advantage. Even after fine-tuning a fill factor, the reduction in page splits might not produce a noticeable improvement in write performance. The design of your database may affect your choice of fill factor; for example, if your clustered index key is a GUID, you may choose to lower the fill factor.

You can set a fill factor when an index is first created, or you can change it by using the ALTER INDEX ... REBUILD syntax, as discussed in the next section.
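As a sketch of the REBUILD approach, the following sets a fill factor on an existing index in the WideWorldImporters sample and then reads the stored value back from sys.indexes. The value 90 is purely illustrative, not a recommendation.

```sql
-- Rebuild one index, leaving roughly 10 percent free space on each leaf-level page
ALTER INDEX FK_Sales_Orders_CustomerID ON Sales.Orders
REBUILD WITH (FILLFACTOR = 90);

-- Review the fill factor stored for each index on the table (0 means the default, 100 percent)
SELECT index_name = name, fill_factor
FROM sys.indexes
WHERE object_id = OBJECT_ID('Sales.Orders');
```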

Note

The OPTIMIZE_FOR_SEQUENTIAL_KEY feature, introduced in SQL Server 2019, can further benefit IDENTITY and SEQUENCE-populated columns. For more on this recommended new feature, see Chapter 15, “Understand and design indexes.”

Track page splits

If you intend to fine-tune the fill factor for important tables to maximize the performance/storage space ratio, you can measure page splits in two ways: with a query on a DMV (discussed here), and with an Extended Event session (covered later in this chapter).

You can use the performance counter DMV to measure page splits in aggregate on Windows Server, as shown here:

SELECT * FROM sys.dm_os_performance_counters WHERE counter_name = 'Page Splits/sec';

The cntr_value increments whenever a page split is detected. The counter name is a bit misleading, because to calculate page splits per second you must sample the incrementing value twice and divide the difference by the number of seconds between the samples. When you view this metric in Performance Monitor, the calculation is done for you.
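The two-sample calculation can be sketched as follows. The 10-second interval is an arbitrary choice for this example, and the object_name filter assumes the counter lives under the Access Methods object, whose prefix differs between default and named instances.

```sql
-- Sketch: approximate Page Splits/sec from two samples of the cumulative counter
DECLARE @first bigint, @second bigint, @seconds int = 10;

SELECT @first = cntr_value
FROM sys.dm_os_performance_counters
WHERE counter_name = 'Page Splits/sec'
  AND object_name LIKE '%Access Methods%';

WAITFOR DELAY '00:00:10';  -- keep in sync with @seconds

SELECT @second = cntr_value
FROM sys.dm_os_performance_counters
WHERE counter_name = 'Page Splits/sec'
  AND object_name LIKE '%Access Methods%';

SELECT page_splits_per_sec = (@second - @first) * 1.0 / @seconds;
```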

You can also track page_split events alongside statement execution by adding the page_split event to sessions such as the Transact-SQL (T-SQL) template in the Extended Events wizard. You’ll see an example of this later in this chapter, in the section “Use Extended Events to detect page splits.”

Extended Events and the sys.dm_os_performance_counters DMV are discussed in more detail later in this chapter in the section “Query performance metrics with DMVs.” This section also includes a sample session script to track page_split events.
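For a quick look before then, a minimal session might look like the following sketch. The session name and target file name are hypothetical; the database_name and sql_text actions are included to tie each split to a database and statement. On a busy server this event can fire very frequently, so run such a session briefly.

```sql
-- Hypothetical session name; the .xel file is written to the default log directory
CREATE EVENT SESSION [track_page_splits] ON SERVER
ADD EVENT sqlserver.page_split (
    ACTION (sqlserver.database_name, sqlserver.sql_text))
ADD TARGET package0.event_file (SET filename = N'track_page_splits.xel');
GO

ALTER EVENT SESSION [track_page_splits] ON SERVER STATE = START;
```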

Monitor index fragmentation

You can find the extent to which an index is fragmented by interrogating the sys.dm_db_index_physical_stats dynamic management function (DMF).

Unlike most DMVs, this function can have a significant impact on server performance because it can tax I/O. To query this DMF, you must be a member of the sysadmin server role or the db_ddladmin or db_owner database roles. Alternatively, you can grant the VIEW DATABASE STATE or VIEW SERVER STATE permissions. The sys.dm_db_index_physical_stats DMF is often joined to catalog views like sys.indexes or sys.objects, which require the user to have some permissions to the tables in addition to VIEW DATABASE STATE or VIEW SERVER STATE.

For more information, visit https://learn.microsoft.com/sql/relational-databases/system-dynamic-management-views/sys-dm-db-index-physical-stats-transact-sql#permissions and https://learn.microsoft.com/sql/relational-databases/security/metadata-visibility-configuration.

Keep this in mind when scripting this operation for automated index maintenance. (We talk more about automating index maintenance in Chapter 9.)

Some of the following samples can be executed against the WideWorldImporters sample database. You can download and then restore the WideWorldImporters-Full.bak file from this location: https://go.microsoft.com/fwlink/?LinkID=800630. For example, to find the fragmentation level of all indexes on the Sales.Orders table in the WideWorldImporters sample database, you can use a query such as the following:

USE WideWorldImporters;

SELECT
      DB = db_name(s.database_id)
    , [schema_name] = sc.name
    , [table_name] = o.name
    , index_name = i.name
    , s.index_type_desc
    , s.partition_number -- if the object is partitioned
    , avg_fragmentation_pct = s.avg_fragmentation_in_percent
    , s.page_count -- pages in object partition
FROM sys.indexes AS i
CROSS APPLY sys.dm_db_index_physical_stats
    (DB_ID(), i.object_id, i.index_id, NULL, NULL) AS s
INNER JOIN sys.objects AS o ON o.object_id = s.object_id
INNER JOIN sys.schemas AS sc ON o.schema_id = sc.schema_id
WHERE i.is_disabled = 0
AND i.object_id = OBJECT_ID('Sales.Orders');

The sys.dm_db_index_physical_stats DMF accepts five parameters: database_id, object_id, index_id, partition_number, and mode. The mode parameter defaults to LIMITED, the fastest method, but you can set it to SAMPLED or DETAILED. These additional modes are rarely necessary, but they provide more data, as well as more precise data; some result set columns are NULL in LIMITED mode. For the purposes of determining fragmentation, the default mode of LIMITED (used when you pass NULL or the literal 'LIMITED') suffices.

The five parameters of the sys.dm_db_index_physical_stats DMF are all nullable. For example, if you run the following script, you will see fragmentation statistics for all databases, objects, indexes, and partitions:

SELECT * FROM sys.dm_db_index_physical_stats(NULL,NULL,NULL,NULL,NULL);

We recommend against executing this in a production environment during operational hours because, again, it can have a significant impact on server resources, resulting in a noticeable drop in performance.
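An automated maintenance script typically narrows this output to a list of candidates. The following is a minimal sketch for the current database; the 1,000-page, 5 percent, and 30 percent thresholds are illustrative assumptions rather than values from this chapter, so tune them for your workload. Because it still scans every index, run it during a maintenance window.

```sql
-- Sketch: fragmented index candidates in the current database (thresholds are assumptions)
SELECT
      [schema_name] = sc.name
    , [table_name] = o.name
    , index_name = i.name
    , s.partition_number
    , avg_fragmentation_pct = s.avg_fragmentation_in_percent
    , s.page_count
    , suggested_action = CASE
          WHEN s.avg_fragmentation_in_percent >= 30 THEN 'REBUILD'
          ELSE 'REORGANIZE' END
FROM sys.dm_db_index_physical_stats (DB_ID(), NULL, NULL, NULL, 'LIMITED') AS s
INNER JOIN sys.indexes AS i
    ON i.object_id = s.object_id AND i.index_id = s.index_id
INNER JOIN sys.objects AS o ON o.object_id = s.object_id
INNER JOIN sys.schemas AS sc ON sc.schema_id = o.schema_id
WHERE s.index_id > 0                          -- skip heaps
  AND s.alloc_unit_type_desc = N'IN_ROW_DATA' -- one row per index partition
  AND i.is_disabled = 0
  AND o.is_ms_shipped = 0
  AND s.page_count >= 1000
  AND s.avg_fragmentation_in_percent >= 5
ORDER BY s.avg_fragmentation_in_percent DESC;
```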

Maintain indexes

After your automated script has identified the objects most in need of maintenance with the aid of sys.dm_db_index_physical_stats, it should proceed with steps to remove fragmentation in a timely fashion during a maintenance window. The commands to remove fragmentation are ALTER INDEX and ALTER TABLE, with REBUILD and REORGANIZE options. We explain the differences later, but briefly, rebuild is more thorough and potentially disruptive, whereas reorganize is less thorough, not disruptive, but often sufficient.

You must implement index maintenance for both rowstore and columnstore indexes; we cover strategies for both in this section.

Ideally, your automated index maintenance script runs as often as possible during regularly scheduled maintenance windows and for a limited amount of time. For example, if your business environment allows for a maintenance window each night between 1 a.m. and 4 a.m., try to run index maintenance each night in that window. If possible, modify your script to avoid starting new work after 4 a.m., or use the RESUMABLE option and pause any in-progress rebuild at 4 a.m. (More on the latter strategy in the upcoming section “Rebuild indexes.”) In databases with very large tables, index maintenance may require more time than you have within a single maintenance window, so try to use the limited time in each window to the greatest effect. Given ample time, this approach of frequent, short maintenance periods tends to reduce fragmentation better than, for example, a single very long maintenance period during a weekend. The RESUMABLE option also allows the active portion of the transaction log to be cleared by a log backup while an index rebuild is paused.

For more on maintenance plans and automating index maintenance, including the typical “care and feeding” of a SQL Server, see Chapter 9.

For more on enabling the accelerated database recovery option, see Chapter 6, “Provision and configure SQL Server databases.”

Rebuild indexes

Performing an INDEX REBUILD operation on a rowstore index (clustered or nonclustered) physically re-creates the index B-tree, including its leaf level. The goal is to make storage more efficient and to match the physical order of the pages to the logical order provided by the index key. A rebuild operation is destructive to the index object and blocks other queries attempting to access its pages unless you provide the ONLINE option. Because the rebuild operation destroys and re-creates the index, it also updates the statistics for that index, eliminating the need for a subsequent UPDATE STATISTICS step on that index as part of regular maintenance.

Long-term table locks are held during the rebuild operation. One major advantage of SQL Server Enterprise edition remains the ability to specify the ONLINE option, which allows for rebuild operations that are significantly less disruptive to other queries, though not completely. This makes index maintenance feasible on SQL Servers with round-the-clock activity.

Consider using ONLINE with index rebuild operations whenever short maintenance windows are insufficient for rebuilding fragmented indexes offline. An online index rebuild, however, might take longer than an offline rebuild. There are also scenarios for which an online rebuild is not possible, including the deprecated data types image, text, and ntext, or the xml data type. Since SQL Server 2012, it has been possible to perform ONLINE rebuilds of indexes that include varchar(max), nvarchar(max), and varbinary(max) columns.

For the syntax to rebuild the FK_Sales_Orders_CustomerID nonclustered index on the Sales.Orders table with the ONLINE functionality in Enterprise edition, see the following code sample:

ALTER INDEX FK_Sales_Orders_CustomerID
ON Sales.Orders
REBUILD WITH (ONLINE = ON);

It’s important to note that if you perform any kind of index maintenance on the clustered index of a rowstore table, it does not affect the nonclustered indexes. Nonclustered index fragmentation will not change if you rebuild the clustered index.

Instead of rebuilding each index on a table individually, you can rebuild all indexes on a table by replacing the name of the index with the keyword ALL. For example, to rebuild all indexes on the Sales.OrderLines table, do the following:

ALTER INDEX ALL ON [Sales].[OrderLines] REBUILD;

This is usually overkill and inefficient, however, because not all indexes have the same level of fragmentation or need for maintenance. Remember, we should perform index maintenance as granularly as possible.

For memory-optimized tables, we recommend a manual routine maintenance step using the ALTER TABLE … ALTER INDEX … REBUILD syntax. This is not to reduce fragmentation in the in-memory data; rather, it is to examine the number of buckets in a memory-optimized table’s hash indexes. For more information on rebuilding hash indexes and bucket counts, see Chapter 15.
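As a sketch of that examination, sys.dm_db_xtp_hash_index_stats reports bucket usage for each hash index in the current database. As a rough guide, a high share of empty buckets suggests the bucket count is larger than needed, while long average chains suggest it is too small or the key has many duplicates. The table and index names in the ALTER TABLE statement are hypothetical placeholders, and the bucket count shown is only an example.

```sql
-- Review bucket usage for every hash index in the current database
SELECT
      table_name = OBJECT_NAME(hs.object_id)
    , index_name = i.name
    , hs.total_bucket_count
    , hs.empty_bucket_count
    , hs.avg_chain_length
    , hs.max_chain_length
FROM sys.dm_db_xtp_hash_index_stats AS hs
INNER JOIN sys.indexes AS i
    ON i.object_id = hs.object_id AND i.index_id = hs.index_id;

-- Hypothetical names: rebuild a hash index with a new bucket count
ALTER TABLE dbo.MyMemoryOptimizedTable
    ALTER INDEX IX_MyHashIndex
    REBUILD WITH (BUCKET_COUNT = 1048576);
```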

Note

You can change the data compression option for indexes with the rebuild operation using the DATA_COMPRESSION option. For more detail on data compression, see Chapter 3, “Design and implement an on-premises database infrastructure.”
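For example, the following sketch rebuilds one index with page compression and then confirms the setting in sys.partitions. PAGE is an illustrative choice; ROW and NONE are the other values for rowstore indexes.

```sql
-- Rebuild one index with page compression
ALTER INDEX FK_Sales_Orders_CustomerID ON Sales.Orders
REBUILD WITH (DATA_COMPRESSION = PAGE);

-- Confirm the compression setting for each index partition on the table
SELECT index_id, partition_number, data_compression_desc
FROM sys.partitions
WHERE object_id = OBJECT_ID('Sales.Orders');
```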

Aside from ONLINE, there are other options that you might want to consider for rebuild operations. Let’s look at them:

SORT_IN_TEMPDB. Use this when you want to create or rebuild an index using tempdb for sorting the index data, potentially increasing performance by distributing the I/O activity across multiple drives. This also means that these sorting worktables are written to the tempdb database transaction log instead of the user database transaction log, potentially reducing the impact on the user database log file, and allowing for the user database transaction log to be backed up during the operation.

MAXDOP. Use this to mitigate some of the impact of index maintenance by limiting how many processors the operation can use in parallel (MAXDOP = 1 prevents parallelism entirely). This can cause the operation to run longer but reduces its impact on other workloads.

WAIT_AT_LOW_PRIORITY. First introduced in SQL Server 2014, this is the first of a set of parameters that you can use to instruct the ONLINE index maintenance operation to try not to block other operations. This feature is known as Managed Lock Priority, and this syntax is not usable outside of online index operations and partition-switching operations. (SQL Server 2022 also introduced the ability to use WAIT_AT_LOW_PRIORITY for DBCC SHRINKDATABASE and DBCC SHRINKFILE operations.) Here is the full syntax:

ALTER INDEX PK_Sales_OrderLines on [Sales].[OrderLines] REBUILD WITH (ONLINE = ON (WAIT_AT_LOW_PRIORITY (MAX_DURATION = 5 MINUTES, ABORT_AFTER_WAIT = SELF)));

The MAX_DURATION and ABORT_AFTER_WAIT parameters instruct the statement on how to proceed if it is blocked by another operation. The online index operation waits at low priority, allowing other operations to proceed.

The ABORT_AFTER_WAIT parameter specifies the action to take at the end of the MAX_DURATION wait:

SELF instructs the statement to terminate its own process, ending the online rebuild step.

BLOCKERS instructs the statement to terminate the other processes that are blocking the online index operation, terminating what are potentially user transactions. Use with caution.

NONE instructs the statement to continue to wait. When combined with MAX_DURATION = 0, it is essentially the same behavior as not specifying WAIT_AT_LOW_PRIORITY.

RESUMABLE. Introduced in SQL Server 2017, this feature makes it possible to initiate an online index rebuild that can be paused and resumed later, even after a server shutdown. You can also specify a MAX_DURATION in minutes when starting a resumable index rebuild operation, which pauses the operation if it exceeds the specified duration. You cannot specify the ALL keyword for a resumable operation, and the SORT_IN_TEMPDB = ON option is not compatible with the RESUMABLE option.
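As a sketch of how these options combine, the first statement below rebuilds an index online while sorting in tempdb and running single-threaded; the second starts a resumable rebuild that pauses itself after 60 minutes. The 60-minute limit is an arbitrary example, SORT_IN_TEMPDB is left out of the second statement because it is incompatible with RESUMABLE, and ONLINE requires Enterprise or Developer edition.

```sql
-- Online rebuild, sorting in tempdb, limited to a single processor
ALTER INDEX FK_Sales_Orders_CustomerID ON Sales.Orders
REBUILD WITH (ONLINE = ON, SORT_IN_TEMPDB = ON, MAXDOP = 1);

-- Resumable online rebuild that pauses itself if it runs longer than 60 minutes
ALTER INDEX PK_Sales_OrderLines ON Sales.OrderLines
REBUILD WITH (ONLINE = ON, RESUMABLE = ON, MAX_DURATION = 60 MINUTES);
```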

Note

Starting with SQL Server 2019, the RESUMABLE syntax can also be used when creating an index. An ALTER INDEX and CREATE INDEX statement can be similarly paused and resumed.

To leverage resumable index maintenance operations, you can see a list of resumable and paused index operations in a new DMV, sys.index_resumable_operations, where the state_desc field will reflect RUNNING (and pausable) or PAUSED (and resumable).

Here is a sample scenario of a paused/resumed index maintenance operation on a large table in the sample WideWorldImporters database:

ALTER INDEX PK_Sales_OrderLines on [Sales].[OrderLines]
REBUILD WITH (ONLINE = ON, RESUMABLE = ON);

From another session, show that the index rebuild is RUNNING with the RESUMABLE option:

SELECT object_name = object_name (object_id), *
FROM sys.index_resumable_operations;

From a third session, run the following to pause the operation:

ALTER INDEX PK_Sales_OrderLines on [Sales].[OrderLines] PAUSE;

You can then show that the index rebuild is paused:

SELECT object_name = object_name (object_id), * FROM sys.index_resumable_operations;

This sample uses a relatively small table, so the index rebuild might complete before you can execute the pause. When the pause does succeed, the session running the original index maintenance is disconnected and receives a severe error message. In the SQL Server Error Log, however, the event is recorded not as a severe error but as an informational note that “An ALTER INDEX ‘PAUSE’ was executed for….”

To resume the index maintenance operation, you have two options:

Reissue the same index maintenance operation. SQL Server returns a warning that it is resuming the existing operation instead.

ALTER INDEX PK_Sales_OrderLines on [Sales].[OrderLines] REBUILD WITH (ONLINE = ON, RESUMABLE = ON);

Issue a RESUME to the same index.

ALTER INDEX PK_Sales_OrderLines on [Sales].[OrderLines] RESUME;
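While a resumable operation is running or paused, you can watch its progress in sys.index_resumable_operations, and if you decide not to finish it, you can abort it instead of resuming. A minimal sketch against the same sample index:

```sql
-- Progress and state of resumable index operations in the current database
SELECT
      object_name = OBJECT_NAME(object_id)
    , name
    , state_desc
    , percent_complete
    , last_pause_time
FROM sys.index_resumable_operations;

-- Discard the operation instead of resuming it
ALTER INDEX PK_Sales_OrderLines ON [Sales].[OrderLines] ABORT;
```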

Reorganize indexes

Performing a REORGANIZE operation on an index uses fewer system resources and is much less disruptive than performing a full rebuild, while still accomplishing the goal of reducing fragmentation. It physically reorders the leaf-level pages of the index to match the logical order. It also compacts pages to match the fill factor on the index, though it does not allow the fill factor to be changed. This operation is always performed online, so long-term table locks (except for schema locks) are not held, and queries or modifications to the underlying table or index data will not be blocked by the schema lock during the REORGANIZE transaction.

Because the REORGANIZE operation is not destructive, it does not automatically update the statistics for the index afterward as a rebuild operation does. Thus, you should always follow a REORGANIZE step with an UPDATE STATISTICS step.

For more on statistics objects and their impact on performance, see Chapter 15.

The following example presents the syntax to reorganize the PK_Sales_OrderLines index on the Sales.OrderLines table:

ALTER INDEX PK_Sales_OrderLines on [Sales].[OrderLines] REORGANIZE;

None of the options available to rebuild that we covered in the previous section are available to the REORGANIZE command. The only additional option that is specific to REORGANIZE is the LOB_COMPACTION option. It compresses large object (LOB) data, which affects only LOB data types: image, text, ntext, varchar(max), nvarchar(max), varbinary(max), and xml. By default, this option is enabled, but you can disable it for non-heap tables to potentially skip some activity, though we do not recommend it. For heap tables, LOB data is always compacted.
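Because a reorganize does not refresh statistics, pairing it with an UPDATE STATISTICS step can be sketched like this. LOB_COMPACTION = ON is already the default and is spelled out only for clarity; targeting the single statistics object for the index is one possible scope, and you could instead update every statistics object on the table.

```sql
-- Reorganize the index (LOB_COMPACTION = ON is the default)
ALTER INDEX PK_Sales_OrderLines ON [Sales].[OrderLines] REORGANIZE
WITH (LOB_COMPACTION = ON);

-- REORGANIZE leaves statistics untouched, so update the index's statistics explicitly
UPDATE STATISTICS [Sales].[OrderLines] PK_Sales_OrderLines;
```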

Update index statistics

SQL Server uses statistics to describe the distribution and nature of the data in tables. The Query Optimizer needs the auto create statistics setting enabled (it is enabled by default) so it can create single-column statistics when compiling queries. These statistics help the Query Optimizer create optimal query plans. The auto update statistics option causes statistics to be updated automatically when they are accessed by a T-SQL query, but only when the table is discovered to have passed a threshold of rows changed. Without relevant and up-to-date statistics, the Query Optimizer might not choose the best way to run queries.
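Both options are database-level settings, so a quick check of sys.databases shows whether any database has them disabled. A minimal sketch; the WideWorldImporters name in the ALTER DATABASE statements is only an example.

```sql
-- Review the statistics-related options for every database on the instance
SELECT name,
       is_auto_create_stats_on,
       is_auto_update_stats_on,
       is_auto_update_stats_async_on
FROM sys.databases
ORDER BY name;

-- Re-enable the defaults for a database where they were turned off (example database)
ALTER DATABASE [WideWorldImporters] SET AUTO_CREATE_STATISTICS ON;
ALTER DATABASE [WideWorldImporters] SET AUTO_UPDATE_STATISTICS ON;
```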

An update of index statistics should accompany index REORGANIZE steps, to ensure that statistics on the table are current, but not index REBUILD steps, because the REBUILD command already updates the index statistics.

The basic syntax to update the statistics for an individual table is as follows:

UPDATE STATISTICS [Sales].[Invoices];

The only command option to be aware of concerns the depth to which the statistics are scanned before being recalculated. By default, SQL Server samples a statistically significant number of rows in the table. Starting with database compatibility level 130, this sampling is done with a parallel process. This is fast and adequate for most workloads. You can optionally choose to scan the entire table by specifying the FULLSCAN option, or a sample of the table based on a percentage of rows or a fixed number of rows using the SAMPLE option, but these options are typically reserved for cases of unusual data skew where the default sampling may not provide adequate coverage for your column or index.
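The scan-depth options look like this in practice. A minimal sketch; the 25 PERCENT and 100000 ROWS values are arbitrary illustrations, not recommendations.

```sql
-- Read every row in the table to build the statistics (most accurate, most expensive)
UPDATE STATISTICS [Sales].[Invoices] WITH FULLSCAN;

-- Sample a percentage of the table's rows
UPDATE STATISTICS [Sales].[Invoices] WITH SAMPLE 25 PERCENT;

-- Sample a fixed number of rows
UPDATE STATISTICS [Sales].[Invoices] WITH SAMPLE 100000 ROWS;
```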

You can manually verify that statistics are being kept up to date when the auto update statistics option is enabled. The sys.dm_db_stats_properties DMF accepts an object_id and a stats_id, which is functionally the same as the index_id if the statistics object corresponds to an index. The sys.dm_db_stats_properties DMF returns information such as the modification_counter (the number of rows changed since the last statistics update) and the last_updated date, which is NULL if the statistics object has never been updated since it was created.

Not all statistics are associated with an index; automatically created column statistics, for example, are not. There will generally be more statistics objects than index objects. The sys.dm_db_stats_properties function works in both SQL Server and Azure SQL Database. You can easily tell whether a statistics object (which you can find by querying sys.stats) was automatically created by its naming convention, _WA_Sys_<column_id_hex>_<object_id_hex>, or by looking at the user_created and auto_created columns in the same view.
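To see these properties side by side, you can join sys.stats to sys.dm_db_stats_properties. A minimal sketch for a single table (Sales.Invoices, chosen only as an example); OUTER APPLY is used so that a statistics object still appears even if the function returns no row for it.

```sql
-- List statistics objects on one table with their origin, freshness, and change counter
SELECT
    stats_name = s.name,
    s.stats_id,
    s.auto_created,
    s.user_created,
    sp.last_updated,
    sp.rows,
    sp.rows_sampled,
    sp.modification_counter
FROM sys.stats AS s
OUTER APPLY sys.dm_db_stats_properties(s.object_id, s.stats_id) AS sp
WHERE s.object_id = OBJECT_ID(N'Sales.Invoices')
ORDER BY sp.modification_counter DESC;
```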

For more on statistics objects and their impact on performance, see Chapter 15.


Reorganize columnstore indexes

You must also maintain columnstore indexes, but these use different internal objects to measure the fragmentation of the internal columnstore structure. Columnstore indexes need only the REORGANIZE operation. For more on designing columnstore indexes, see Chapter 15.

You can review the current structure of the row groups in columnstore indexes by using the DMV sys.dm_db_column_store_row_group_physical_stats. This returns one row per row group of the columnstore structure. The state of a row group, and the current count of row groups by their states, provides some insight into the health of the columnstore index. Most row groups should be in the COMPRESSED state. Row groups in the OPEN and CLOSED states are part of the delta store and are awaiting compression. These delta store row groups are served up seamlessly alongside compressed data when queries use columnstore data.

The number of deleted rows in a row group is also an indication that the index needs maintenance. As the ratio of deleted rows to total rows in a row group in the COMPRESSED state increases, the performance of the columnstore index is reduced. If deleted_rows represents a large share of total_rows in a row group, a REORGANIZE step will be beneficial.
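A summary query can make both signals visible: the count of row groups in each state and the share of deleted rows. A minimal sketch that runs in the current database; the deleted_pct column is computed here for convenience and is not a column of the DMV.

```sql
-- Summarize columnstore row groups by table, index, and state, with the share of deleted rows
SELECT
    table_name = OBJECT_SCHEMA_NAME(rg.object_id) + N'.' + OBJECT_NAME(rg.object_id),
    rg.index_id,
    rg.state_desc,
    row_group_count = COUNT(*),
    total_rows = SUM(rg.total_rows),
    deleted_rows = SUM(rg.deleted_rows),
    deleted_pct = 100. * SUM(rg.deleted_rows) / NULLIF(SUM(rg.total_rows), 0)
FROM sys.dm_db_column_store_row_group_physical_stats AS rg
GROUP BY rg.object_id, rg.index_id, rg.state_desc
ORDER BY table_name, rg.index_id, rg.state_desc;
```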

Performing a REBUILD operation on a columnstore index is essentially the same as performing a drop/re-create and is not necessary. However, if you want to force the rebuild process, the WITH (ONLINE = ON) syntax is supported starting with SQL Server 2019 for rebuilding (and creating) columnstore indexes. A REORGANIZE step for a columnstore index, just as for a rowstore index, is an online operation that has minimal impact on concurrent queries.

You can also use the REORGANIZE WITH (COMPRESS_ALL_ROW_GROUPS = ON) option to force all delta store row groups, both OPEN and CLOSED, to be compressed into the columnstore. This can be useful when you observe many compressed row groups with fewer than 100,000 rows.

Without COMPRESS_ALL_ROW_GROUPS, only CLOSED delta store row groups are compressed, although existing compressed row groups can still be combined. Typically, compressed row groups should contain up to about one million rows each (the maximum is 1,048,576), but SQL Server might create smaller compressed row groups that reflect how the rows were inserted, especially if they were inserted in bulk operations.
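The option is specified as part of the REORGANIZE statement. A minimal sketch; NCCX_Sales_OrderLines is assumed to be the name of a columnstore index on Sales.OrderLines, so substitute the name of your own columnstore index.

```sql
-- Force all delta store row groups, OPEN and CLOSED, into compressed columnstore format.
-- NCCX_Sales_OrderLines is an assumed index name; replace it with your columnstore index.
ALTER INDEX NCCX_Sales_OrderLines ON [Sales].[OrderLines]
    REORGANIZE WITH (COMPRESS_ALL_ROW_GROUPS = ON);
```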

We talk more about automating index maintenance in Chapter 9.


Manage database file sizes

It is important to understand the distinction between the size of database data and log files, which act simply as reservations for SQL Server to work in, and the data within those reservations. Note that this section does not apply to Azure SQL Database, where this level of file management is not available because it is managed automatically.

In SQL Server Management Studio (SSMS), you can right-click a database, select Reports, select Standard Reports, and then choose Disk Usage to view the Disk Usage report for that database. It shows how much data is in the database's files.

Alternatively, the following query uses the FILEPROPERTY function to reveal how much data there is inside a file reservation. We again use the undocumented but well-understood sp_msforeachdb stored procedure to iterate through each of the databases, accessing the sys.database_files catalog view.

DECLARE @FILEPROPERTY TABLE
( DatabaseName sysname
 ,DatabaseFileName nvarchar(500)
 ,FileLocation nvarchar(500)
 ,FileId int
 ,[type_desc] varchar(50)
 ,FileSizeMB decimal(19,2)
 ,SpaceUsedMB decimal(19,2)
 ,AvailableMB decimal(19,2)
 ,FreePercent decimal(19,2) );

--Inside the dynamic SQL string, ''SpaceUsed'' escapes the single quotes around the property name
INSERT INTO @FILEPROPERTY
exec sp_MSforeachdb 'USE [?];
SELECT
  Database_Name = d.name
, Database_Logical_File_Name = df.name
, File_Location = df.physical_name
, df.file_id
, df.type_desc
, FileSize_MB = CAST(size/128.0 as decimal(19,2))
, SpaceUsed_MB = CAST(CAST(FILEPROPERTY(df.name, ''SpaceUsed'') AS int)/128.0 AS decimal(19,2))
, Available_MB = CAST(size/128.0 - CAST(FILEPROPERTY(df.name, ''SpaceUsed'') AS int)/128.0
    AS decimal(19,2))
, FreePercent = CAST(((size/128.0) - (CAST(FILEPROPERTY(df.name, ''SpaceUsed'') AS int)/128.0))
    / (size/128.0) * 100. AS decimal(19,2))
FROM sys.database_files as df
CROSS APPLY sys.databases as d
WHERE d.database_id = DB_ID();'

SELECT * FROM @FILEPROPERTY
WHERE SpaceUsedMB is not null
ORDER BY FreePercent asc; --Find files with least amount of free space at top

Run this on a database in your environment to see how much data there is within database files. You might find that some data or log files are near full, whereas others have a large amount of space. Why would this be?

Files that have a large amount of free space might have grown in the past but have since been emptied out. If a transaction log in the full recovery model has grown for a long time without a transaction log backup, the .ldf file will have grown unchecked. Later, when a transaction log backup is taken, causing the log to truncate, the log will be nearly empty, but the size of the .ldf file itself will not have changed. It isn't until you perform a shrink operation that the .ldf file gives its unused space back to the operating system (OS). You should almost never shrink a data file, and certainly not on a schedule. The two main exceptions are if you mistakenly oversize a file or you applied data compression to a number of large database objects. In these cases, shrinking files as a one-time corrective action may be appropriate.
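When a log file keeps growing, sys.databases reports what is currently preventing the log from being truncated and reused. A minimal sketch; a log_reuse_wait_desc value of LOG_BACKUP indicates that the log is waiting on a transaction log backup.

```sql
-- Show the recovery model and the current reason each database's log cannot be reused
SELECT name, recovery_model_desc, log_reuse_wait_desc
FROM sys.databases
ORDER BY name;
```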

You should manually grow your database and log files to a size that is well ahead of the database’s growth pattern. You might fret over the best autogrowth rate, but ideally, autogrowth events are best avoided altogether by proactive file management.

Autogrowth events can be disruptive to user activity, causing all transactions to wait while the database file asks the OS for more space and grows. Depending on the performance of the I/O system, this could take seconds, during which activity on the database must wait. Depending on the autogrowth setting and the size of the write transactions, multiple autogrowth events could occur in succession.

Growth of database data files is also greatly sped up by instant file initialization, which is covered in Chapter 3.


Understand and find autogrowth events

You should change autogrowth rates for database data and log files from the initial (and far too small) default settings, but, more importantly, you should maintain enough free space in your data and log files so that autogrowth events do not occur. As a proactive DBA, you should monitor the space in database files and grow the files ahead of time, manually and outside of peak business hours.
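Growing a file ahead of time and changing its autogrowth increment are both done with the MODIFY FILE clause of ALTER DATABASE. A minimal sketch; the logical file name WWI_UserData and the 20,480-MB and 512-MB values are assumptions for illustration, so check sys.database_files for your own logical file names. The new SIZE must be larger than the file's current size, or the statement fails.

```sql
-- Grow the data file ahead of demand, outside of peak business hours.
-- WWI_UserData is an assumed logical file name; check sys.database_files.
ALTER DATABASE [WideWorldImporters]
    MODIFY FILE (NAME = N'WWI_UserData', SIZE = 20480MB);

-- Replace a small or percentage-based autogrowth setting with a fixed increment
ALTER DATABASE [WideWorldImporters]
    MODIFY FILE (NAME = N'WWI_UserData', FILEGROWTH = 512MB);
```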

You can view recent autogrowth events in a database via a report in SSMS or a T-SQL script (see the code example that follows). In SSMS, in Object Explorer, right-click the database name. Then, on the shortcut menu that opens, select Reports, select Standard Reports, and then select Disk Usage. An expandable/collapsible region of the report contains data/log files autogrow/autoshrink events.

The autogrowth report in SSMS reads data from the SQL Server instance’s default trace, which captures autogrowth events. This data is not captured by the default Extended Events session, called system_health, but you could capture autogrowth events with the sqlserver.database_file_size_change event in an Extended Event session.
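A minimal sketch of such a session follows. The session name file_size_changes is hypothetical, and because the event_file target is given a file name without a path, SQL Server writes the .xel files to the instance's default log directory. This session captures manual file size changes as well as autogrowth events.

```sql
-- Capture data and log file size changes (including autogrowth) with Extended Events
CREATE EVENT SESSION [file_size_changes] ON SERVER
ADD EVENT sqlserver.database_file_size_change
(
    ACTION (sqlserver.database_name, sqlserver.sql_text)
)
ADD TARGET package0.event_file
(
    SET filename = N'file_size_changes.xel'
)
WITH (STARTUP_STATE = ON);

-- Start the session now; STARTUP_STATE = ON restarts it with the service
ALTER EVENT SESSION [file_size_changes] ON SERVER STATE = START;
```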

To view and analyze autogrowth events more quickly, and for all databases simultaneously, you can query the SQL Server instance’s default trace yourself. The default trace files are limited to 20 MB, and there are at most five rollover files, yielding 100 MB of history. The amount of time this covers depends on server activity. The following sample code query uses the fn_trace_gettable() function to open the default trace file in its current location:

SELECT
  DB = g.DatabaseName
, Logical_File_Name = mf.name
, Physical_File_Loc = mf.physical_name
, mf.type
-- The size in MB (converted from the number of 8-KB pages) by which the file increased.
, EventGrowth_MB = convert(decimal(19,2),g.IntegerData*8/1024.)
, g.StartTime --Time of the autogrowth event
-- Length of time (in seconds) necessary to extend the file.
, EventDuration_s = convert(decimal(19,2),g.Duration/1000./1000.)
, Current_Auto_Growth_Set = CASE
    WHEN mf.is_percent_growth = 1
    THEN CONVERT(varchar(10), mf.growth) + '%'
    ELSE CONVERT(varchar(30), mf.growth*8./1024.) + 'MB'
  END
, Current_File_Size_MB = CONVERT(decimal(19,2),mf.size*8./1024.)
, d.recovery_model_desc
FROM fn_trace_gettable(
  (select substring((SELECT path
     FROM sys.traces WHERE is_default =1), 0, charindex('log_',
     (SELECT path FROM sys.traces WHERE is_default =1),0)+4)
   + '.trc'), DEFAULT) AS [g]
INNER JOIN sys.master_files mf
  ON mf.database_id = g.DatabaseID
  AND g.FileName = mf.name
INNER JOIN sys.databases d
  ON d.database_id = g.DatabaseID
WHERE g.EventClass IN (92, 93) --92 = Data File Auto Grow, 93 = Log File Auto Grow
ORDER BY StartTime desc;

Understanding autogrowth events helps explain what happens to database files when they don't have enough space. They must grow, or transactions cannot be accepted. What about the opposite scenario, where a database file has "too much" space? We cover that next.

Shrink database files

We need to be as clear as possible about this: Shrinking database files is not something that you should do regularly or casually. If you find yourself every morning shrinking a database file that grew overnight, stop. Think. Isn’t it just going to grow again tonight?

One of the main concerns with shrinking a data file is that, to free space at the end of the file, it moves pages into free space earlier in the file without regard to their logical order, which creates fragmentation. Aside from potentially ensuring autogrowth events in the future, shrinking a file creates the need for further index maintenance to alleviate the fragmentation. A shrink step can be time consuming, can block other user activity, and is not part of a healthy maintenance plan.

Database data and logs under normal circumstances—and in the case of the full recovery model with regular transaction log backups—grow to the size they need to be because of actual usage. Frequent autogrowth events and shrink operations are bad for performance and create fragmentation.

To increase the concurrency of shrink operations, SQL Server 2022 introduces the WAIT_AT_LOW_PRIORITY option, which allows DBCC SHRINKDATABASE and DBCC SHRINKFILE to wait patiently for locks. The same keyword is used with online index maintenance commands and behaves similarly. When you specify WAIT_AT_LOW_PRIORITY, the shrink operation waits until it can claim the schema stability (Sch-S) and schema modification (Sch-M) locks it needs. Other queries won't be blocked until the shrink can actually proceed, resulting in less potential for blocked queries. The WAIT_AT_LOW_PRIORITY option is less configurable for the two shrink commands and is hard-coded to a 1-minute timeout. If after 1 minute the shrink operation cannot obtain the necessary locks to proceed, it is canceled.
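A minimal sketch of a one-time log file shrink using the new option; the 1,024-MB target size is an arbitrary example, and WWI_Log is the log file name used in this chapter's other WideWorldImporters examples.

```sql
USE [WideWorldImporters];
-- One-time shrink of the log file toward a 1,024-MB target (target_size is in MB),
-- waiting at low priority for the locks it needs instead of blocking other queries
DBCC SHRINKFILE (N'WWI_Log', 1024) WITH WAIT_AT_LOW_PRIORITY;
```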

Shrink data files

Try to proactively grow database files to avoid autogrowth events altogether. You should shrink a database file only as a one-time event to solve one of three scenarios:

A drive volume is out of space and, in an emergency break-fix scenario, you reclaim unused space from a database data or log file.

A database transaction log grew to a much larger size than is normally needed because of an adverse condition and should be reduced back to its normal operating size. An adverse condition could be a transaction log backup that stopped working for a timespan, a large uncommitted transaction, or a replication or availability group issue that prevented the transaction log from truncating.

For the rare situation in which a large amount of data was deleted from a database file, an amount of data that is unlikely ever to exist in the database again, a one-time shrink file operation might be appropriate.

Shrink transaction log files

For the case in which a transaction log file should be reduced in size, the best way to reclaim the space and re-create the file with optimal virtual log file (VLF) alignment is to first create a transaction log backup to truncate the log file as much as possible. If transaction log backups have not recently been generated on a schedule, it may be necessary to create another transaction log backup to fully clear out the log file. Once empty, shrink the log file to reclaim all unused space, then immediately grow the log file back to its expected size in increments of no more than 8,000 MB at a time. This allows SQL Server to create the underlying VLF structures in the most efficient way possible.
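Before shrinking, it can help to confirm that the log is mostly empty and to see how many VLFs it currently contains. A minimal sketch using sys.dm_db_log_space_usage and sys.dm_db_log_info (the latter is available starting with SQL Server 2016 SP2), run in the context of the example database.

```sql
USE [WideWorldImporters];
-- How full is the transaction log right now?
SELECT total_log_size_mb = total_log_size_in_bytes / 1048576.,
       used_log_space_mb = used_log_space_in_bytes / 1048576.,
       used_log_space_in_percent
FROM sys.dm_db_log_space_usage;

-- How many VLFs does the log contain, and how many are still active?
SELECT vlf_count = COUNT(*),
       active_vlf_count = SUM(CASE WHEN vlf_active = 1 THEN 1 ELSE 0 END)
FROM sys.dm_db_log_info(DB_ID());
```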

For more information on VLFs in your database log files, see Chapter 3.


The following sample script of this process assumes that a transaction log backup has already been taken to truncate the database transaction log and that the database log file is mostly empty. It grows the transaction log file to 9 GB (9,216 MB or 9,437,184 KB). Note the intermediate step of growing the file first to 8,000 MB, then to its intended size.

USE [WideWorldImporters];
--TRUNCATEONLY releases unused space at the end of the file to the OS without moving data
DBCC SHRINKFILE (N'WWI_Log' , 0, TRUNCATEONLY);
GO
USE [master];
--Grow to 8,000 MB (8,192,000 KB) first, then to the intended 9,216 MB (9,437,184 KB)
ALTER DATABASE [WideWorldImporters]
MODIFY FILE ( NAME = N'WWI_Log', SIZE = 8192000KB );
ALTER DATABASE [WideWorldImporters]
MODIFY FILE ( NAME = N'WWI_Log', SIZE = 9437184KB );
GO

Caution

You should never enable the autoshrink database setting. It automatically returns any free space of more than 25 percent of the data file or transaction log. You should shrink a database only as a one-time operation to reduce file size after unplanned or unusual file growth. This setting could result in unnecessary fragmentation, overhead, and frequent rapid log autogrowth events. This setting was originally intended, and might only be appropriate, for tiny local and/or embedded databases.
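To audit for this setting, query sys.databases, and then turn it off wherever you find it. A minimal sketch; the database name in the ALTER DATABASE statement is only an example.

```sql
-- Find databases with autoshrink enabled
SELECT name FROM sys.databases WHERE is_auto_shrink_on = 1;

-- Disable it (example database)
ALTER DATABASE [WideWorldImporters] SET AUTO_SHRINK OFF;
```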

Monitor activity with DMOs

SQL Server provides a suite of internal dynamic management objects (DMOs) in the form of views (DMVs) and functions (DMFs). It is important for you as a DBA to have a working knowledge of these objects because they unlock the analysis of SQL Server outside of built-in reporting capabilities and third-party tools. In fact, third-party tools that monitor SQL Server almost certainly use these very dynamic management objects.

DMO queries are discussed in several other places in this book:

Chapter 14 discusses reviewing, aggregating, and analyzing cached execution plan statistics, including the Query Store feature introduced in SQL Server 2016.

Chapter 14 also discusses reporting from DMOs and querying performance monitor metrics within SQL Server DMOs.

Chapter 15 covers index usage statistics and missing index statistics.

Chapter 11 details high availability and disaster recovery features like automatic seeding.

The section “Monitor index fragmentation” earlier in this chapter talked about using a DMF to query index fragmentation.

Observe sessions and requests

Any connection to a SQL Server instance is a session and is reported live in the DMV sys.dm_exec_sessions. Any actively running query on a SQL Server instance is a request and is reported live in the DMV sys.dm_exec_requests. Together, these two DMVs provide a thorough and far more detailed replacement for the sp_who or sp_who2 system stored procedures, as well as the deprecated sys.sysprocesses system view, with which longtime DBAs might be more familiar. With DMVs, you can do so much more than replace sp_who.

By joining these two DMVs with a handful of other DMOs, we can build a query that returns a wealth of live information, including:

Complete connection source information

The actual runtime statement currently being run (like DBCC INPUTBUFFER, but not limited to 254 characters)

The actual plan XML (provided with a blue hyperlink in the SSMS results grid)

Request duration

Cumulative resource consumption

The current and most recent wait types experienced

Sure, it might not be as easy to type in as sp_who2, but it provides much more data, which you can easily query and filter. Save this as a go-to script in your personal DBA tool belt. If you are unfamiliar with any of the data being returned, take some time to dive into the result set and explore the information it provides; it will be an excellent hands-on learning resource. You might choose to add more filters to the WHERE clause specific to your environment. Let’s take a look:

SELECT
  when_observed = sysdatetime()
, s.session_id, r.request_id
, session_status = s.[status] -- running, sleeping, dormant, preconnect
, request_status = r.[status] -- running, runnable, suspended, sleeping, background
, blocked_by = r.blocking_session_id
, database_name = db_name(r.database_id)
, s.login_time, r.start_time
, query_text = CASE
    WHEN r.statement_start_offset = 0
     and r.statement_end_offset= 0 THEN left(est.text, 4000)
    ELSE SUBSTRING (est.[text], r.statement_start_offset/2 + 1,
      CASE WHEN r.statement_end_offset = -1
        THEN LEN (CONVERT(nvarchar(max), est.[text]))
        ELSE r.statement_end_offset/2 - r.statement_start_offset/2 + 1
      END
    ) END --the actual query text is stored as nvarchar,
          --so we must divide by 2 for the character offsets
, qp.query_plan
, cacheobjtype = LEFT (p.cacheobjtype + ' (' + p.objtype + ')', 35)
, est.objectid
, s.login_name, s.client_interface_name
, endpoint_name = e.name, protocol = e.protocol_desc
, s.host_name, s.program_name
, cpu_time_ms = r.cpu_time, tot_time_ms = r.total_elapsed_time --reported in milliseconds
, wait_time_ms = r.wait_time, r.wait_type, r.wait_resource, r.last_wait_type
, r.reads, r.writes, r.logical_reads --accumulated request statistics
FROM sys.dm_exec_sessions as s
LEFT OUTER JOIN sys.dm_exec_requests as r on r.session_id = s.session_id
LEFT OUTER JOIN sys.endpoints as e ON e.endpoint_id = s.endpoint_id
LEFT OUTER JOIN sys.dm_exec_cached_plans as p ON p.plan_handle = r.plan_handle
OUTER APPLY sys.dm_exec_query_plan (r.plan_handle) as qp
OUTER APPLY sys.dm_exec_sql_text (r.sql_handle) as est
LEFT OUTER JOIN sys.dm_exec_query_stats as stat on stat.plan_handle = r.plan_handle
  AND r.statement_start_offset = stat.statement_start_offset
  AND r.statement_end_offset = stat.statement_end_offset
WHERE 1=1 --Veteran trick that makes for easier commenting of filters
  AND s.is_user_process = 1 --retrieve only user sessions
  AND s.session_id <> @@SPID --ignore this session
ORDER BY r.blocking_session_id desc, s.session_id asc;

Notice that the preceding script returned wait_type and last_wait_type. Let's dive into these important performance signals now.

Understand wait types and wait statistics

Wait statistics in SQL Server are an important source of information and can be a key resource for finding bottlenecks in performance at the aggregate level and at the individual query level. A wait is a signal recorded by SQL Server indicating what SQL Server is waiting on when attempting to finish processing a query. This section provides insights into this broad and important topic. However, entire books, training sessions, and software packages have been developed to address wait type analysis.

You can query wait statistics, and they provide value, in SQL Server instances as well as in Azure SQL Database and Azure SQL Managed Instance, though there are some waits specific to the Azure SQL Database platform (which we'll review). As with many DMOs, querying wait statistics doesn't require membership in the sysadmin server role, only the VIEW SERVER STATE permission, or in the case of Azure SQL Database, VIEW DATABASE STATE.

You saw in the query in the previous section the ability to see the current and most recent wait type for a session. Let’s dive into how to observe wait types in the aggregate, accumulated at the server level or at the session level. Waits can occur when a request is in the runnable or suspended state. SQL Server can track many different wait types for a single query, many of which are of negligible duration or are benign in nature. There are quite a few waits that can be ignored or that indicate idle activity, as opposed to waits that indicate resource constraints and blocking. There are more than 1,000 distinct wait types in SQL Server 2022 and even more in Azure SQL Database. Some are better documented and understood than others. We review some that you should know about later in this section.

You can see the complete list of wait types at https://learn.microsoft.com/sql/relational-databases/system-dynamic-management-views/sys-dm-os-wait-stats-transact-sql#WaitTypes.


Monitor wait type aggregates

To view accumulated waits for a session, which live only until the close or reset of the session, use the sys.dm_exec_session_wait_stats DMV.

In sys.dm_exec_requests, you can see the current wait type and most recent wait type, but this isn't always that interesting or informative. Potentially more interesting would be to see all the accumulated wait stats for an ongoing session. This code sample shows how the DMV returns one row per session, per wait type experienced, for user sessions:

SELECT * FROM sys.dm_exec_session_wait_stats
ORDER BY wait_time_ms DESC;

There is a distinction between the two time measurements in this query and others. The value from signal_wait_time_ms indicates the amount of time the thread waited on CPU activity, correlated with time spent in the runnable state. The wait_time_ms value indicates the accumulated time in milliseconds for the wait type, including the signal_wait_time_ms, and so includes time the request spent in the runnable and suspended states. Typically, wait_time_ms is the wait measurement that we aggregate. The waiting_tasks_count is also informative, indicating the number of times this wait_type was encountered. By dividing wait_time_ms by waiting_tasks_count, you can get an average number of milliseconds (ms) each task encountered this wait.
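Filtering the same DMV to your own session makes the two components easy to separate: signal wait time (runnable, waiting for CPU) and resource wait time, computed here as wait_time_ms minus signal_wait_time_ms. A minimal sketch; the resource_wait_time_ms and avg_ms_per_wait columns are derived in the query, not returned by the DMV.

```sql
-- Wait statistics accumulated by the current session, split into signal and resource time
SELECT wait_type,
       waiting_tasks_count,
       wait_time_ms,
       signal_wait_time_ms,
       resource_wait_time_ms = wait_time_ms - signal_wait_time_ms,
       avg_ms_per_wait = 1.0 * wait_time_ms / NULLIF(waiting_tasks_count, 0)
FROM sys.dm_exec_session_wait_stats
WHERE session_id = @@SPID
ORDER BY wait_time_ms DESC;
```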

You can view aggregate wait types at the instance level with the sys.dm_os_wait_stats DMV. It returns the same measurements as sys.dm_exec_session_wait_stats, but without the session_id, because it includes all activity in the SQL Server instance without any granularity to database, query, time frame, and so on. This can be useful for getting the "big picture," but it is limited over long spans of time because the wait_time_ms counter accumulates, as illustrated here:

SELECT TOP (25)
  wait_type
, wait_time_s = wait_time_ms / 1000.
, Pct = 100. * wait_time_ms/nullif(sum(wait_time_ms) OVER(),0)
, avg_ms_per_wait = wait_time_ms / nullif(waiting_tasks_count,0)
FROM sys.dm_os_wait_stats as wt ORDER BY Pct DESC;

Eventually, the wait_time_ms numbers will be so large for certain wait types that trends or changes in wait type accumulation rates will be mathematically difficult to see. You want to use the wait stats to keep a close eye on server performance as it trends and changes over time, so you need to capture these accumulated wait statistics in chunks of time, such as one day or one week.

--Script to set up capturing these statistics over time
CREATE TABLE dbo.usr_sys_dm_os_wait_stats
( id int NOT NULL IDENTITY(1,1)
, datecapture datetimeoffset(0) NOT NULL
, wait_type nvarchar(512) NOT NULL
, wait_time_s decimal(19,1) NOT NULL
, Pct decimal(9,1) NOT NULL
, avg_ms_per_wait decimal(19,1) NOT NULL
, CONSTRAINT PK_sys_dm_os_wait_stats PRIMARY KEY CLUSTERED (id)
);

--This part of the script should be in a SQL Agent job, run regularly
INSERT INTO
dbo.usr_sys_dm_os_wait_stats
(datecapture, wait_type, wait_time_s, Pct, avg_ms_per_wait)
SELECT
  datecapture = SYSDATETIMEOFFSET()
, wait_type
, wait_time_s = convert(decimal(19,1), round( wait_time_ms / 1000.0,1))
, Pct = 100. * wait_time_ms / nullif(sum(wait_time_ms) OVER(),0) --100. avoids integer division
, avg_ms_per_wait = isnull(1. * wait_time_ms / nullif(waiting_tasks_count,0), 0)
FROM sys.dm_os_wait_stats wt
WHERE wait_time_ms > 0
ORDER BY wait_time_s;

Using the metrics returned in the preceding code, you can calculate the differences between successive captures of these always-ascending values to determine the waits accumulated in each interval. You can customize the schedule for this data to be captured in tables, building your own internal wait stats reporting table.
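A minimal sketch of that interval calculation with the LAG window function, reading from the dbo.usr_sys_dm_os_wait_stats table created by the setup script. Because that table stores only wait_time_s, this sketch computes deltas of wait time only; a negative delta suggests the counters were reset between captures.

```sql
-- Wait time accumulated between each capture and the previous capture of the same wait type
SELECT datecapture,
       wait_type,
       wait_time_s,
       wait_time_s_delta = wait_time_s
           - LAG(wait_time_s) OVER (PARTITION BY wait_type ORDER BY datecapture)
FROM dbo.usr_sys_dm_os_wait_stats
ORDER BY wait_type, datecapture;
```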

The sys.dm_os_wait_stats DMV is reset, and all accumulated metrics are lost, upon restart of the SQL Server service, but you can also clear them manually. Understandably, this clears the statistics for the whole SQL Server instance. Here is a sample script that clears them:

DBCC SQLPERF ('sys.dm_os_wait_stats', CLEAR);

You can also view the current waits of running queries in the DMV sys.dm_os_waiting_tasks, which contains more data than simply the wait_type; it also shows the blocking resource address in the resource_description field. This data is also available in sys.dm_exec_requests.
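A minimal sketch of such a query, limited to user sessions by joining to sys.dm_exec_sessions:

```sql
-- Current waits for user sessions, with the resource each task is waiting on
SELECT wt.session_id,
       wt.wait_type,
       wt.wait_duration_ms,
       wt.blocking_session_id,
       wt.resource_description
FROM sys.dm_os_waiting_tasks AS wt
INNER JOIN sys.dm_exec_sessions AS s ON s.session_id = wt.session_id
WHERE s.is_user_process = 1
ORDER BY wt.wait_duration_ms DESC;
```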


For a complete breakdown of the information that can be contained in the resource_description field, see http://learn.microsoft.com/sql/relational-databases/system-dynamic-management-views/sys-dm-os-waiting-tasks-transact-sql.

The Query Store also tracks aggregated wait statistics for the queries it captures. The waits tracked by the Query Store are grouped into wait categories and are not as detailed as the DMVs, but they do give you a quick idea of what a query is waiting on.
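The sys.query_store_wait_stats catalog view exposes these per-plan wait categories. A minimal sketch, assuming it runs in a database with Query Store enabled and wait statistics capture turned on (the default starting with SQL Server 2017):

```sql
-- Top plan and wait category combinations by total wait time in the current database
SELECT TOP (25)
       ws.plan_id,
       ws.wait_category_desc,
       total_wait_ms = SUM(ws.total_query_wait_time_ms)
FROM sys.query_store_wait_stats AS ws
GROUP BY ws.plan_id, ws.wait_category_desc
ORDER BY total_wait_ms DESC;
```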

For more information on reviewing waits in the Query Store, see Chapter 14.


Understand wait resources

What if you observe a query wait occurring live, and want to figure out what data the query is actually waiting on?

SQL Server 2019 delivered some new tools to explore the archaeology involved in identifying the root of waits. While an exhaustive look at the different wait resource types—some more cryptic than others—is best documented in Microsoft’s online resources, let’s review the tools provided.

The undocumented DBCC PAGE command (and its accompanying Trace Flag 3604) was used for years to review the information contained in a page, based on a specific page number. Whether you were trying to see the data at the source of waits or trying to peek at corrupted pages reported by DBCC CHECKDB, the DBCC PAGE command didn't return any visible data without first enabling Trace Flag 3604. Now, for some cases, we have a pair of new functions, sys.dm_db_page_info and sys.fn_PageResCracker. Both can be used only when the sys.dm_exec_requests.wait_resource value begins with PAGE. So, the new tools leave out other common wait_resource types like KEY.

These functions, introduced in SQL Server 2019, are preferable to DBCC PAGE because they are fully documented and supported. They can be combined with sys.dm_exec_requests, the hub DMV for all things active in SQL Server, to return potentially useful information about the object in contention when PAGE blocking is present:

SELECT r.request_id, pi.database_id, pi.file_id, pi.page_id, pi.object_id,
  pi.page_type_desc, pi.index_id, pi.page_level, rows_in_page = pi.slot_count
FROM sys.dm_exec_requests AS r
CROSS APPLY sys.fn_PageResCracker (r.page_resource) AS prc
CROSS APPLY sys.dm_db_page_info(r.database_id, prc.file_id, prc.page_id, 'DETAILED') AS pi;

Benign wait types

Many of the waits in SQL Server do not affect the performance of user workload. These waits are commonly referred to as benign waits and are frequently excluded from queries analyzing wait stats. The following code contains a starter list of wait types that you can mostly ignore when querying the sys.dm_os_wait_stats DMV for aggregate wait statistics. You can append its WHERE clause to your own wait statistics queries.

SELECT * FROM sys.dm_os_wait_stats AS wt
WHERE
      wt.wait_type NOT LIKE '%SLEEP%' --can be safely ignored, sleeping
  AND wt.wait_type NOT LIKE 'BROKER%' -- internal process
  AND wt.wait_type NOT LIKE '%XTP_WAIT%' -- for memory-optimized tables
  AND wt.wait_type NOT LIKE '%SQLTRACE%' -- internal process
  AND wt.wait_type NOT LIKE 'QDS%' -- asynchronous Query Store data
  AND wt.wait_type NOT IN ( -- common benign wait types
   'CHECKPOINT_QUEUE'
  ,'CLR_AUTO_EVENT','CLR_MANUAL_EVENT' ,'CLR_SEMAPHORE'
  ,'DBMIRROR_DBM_MUTEX','DBMIRROR_EVENTS_QUEUE','DBMIRRORING_CMD'
  ,'DIRTY_PAGE_POLL'
  ,'DISPATCHER_QUEUE_SEMAPHORE'
  ,'FT_IFTS_SCHEDULER_IDLE_WAIT','FT_IFTSHC_MUTEX'
  ,'HADR_FILESTREAM_IOMGR_IOCOMPLETION'
  ,'KSOURCE_WAKEUP'
  ,'LOGMGR_QUEUE'
  ,'ONDEMAND_TASK_QUEUE'
  ,'REQUEST_FOR_DEADLOCK_SEARCH'
  ,'XE_DISPATCHER_WAIT','XE_TIMER_EVENT'
  --Ignorable HADR waits
  ,'HADR_WORK_QUEUE'
  ,'HADR_TIMER_TASK'
  ,'HADR_CLUSAPI_CALL');

Through your own research into your workload, and in future versions of SQL Server, as more wait types are added, you can grow this list so that important and actionable wait types rise to the top of your queries. A prevalence of these wait types shouldn't be a concern; they're unlikely to be generated by or negatively affect user requests.

Wait types to be aware of

This section shouldn’t be the start and end of your understanding of, or research into, wait types. Many wait types can be investigated along multiple avenues in your SQL Server instance, and some have names that can mislead a DBA about their origin. Some wait types, or groups of them, deserve particular attention because they indicate a condition worth investigating. Many wait types are always present in all applications but become problematic when they appear with high frequency and/or with large cumulative wait times. “Large” here is, of course, relative to your workload and your server.

Different instance workloads will have different wait type profiles. A wait type at the top of the aggregate sys.dm_os_wait_stats list is not necessarily the main or only performance problem on a SQL Server instance. It is likely that all SQL Server instances, even finely tuned ones, will show these wait types near the top of the aggregate waits list. You should track and trend these wait stats, perhaps using the script example in the previous section.

Important waits include the following, provided in alphabetical order:

ASYNC_NETWORK_IO. This wait type is associated with the retrieval of data by a client, including SQL Server Management Studio and Azure Data Studio. It is the time spent waiting while the remote client receives and finally acknowledges the data sent to it. This wait almost certainly has very little to do with network speed, network interfaces, switches, or firewalls. Any client, including your workstation or even SSMS running locally on the server, can incur small amounts of ASYNC_NETWORK_IO as data is retrieved to be processed. Transactional and snapshot replication distribution will incur ASYNC_NETWORK_IO. You will see a large amount of ASYNC_NETWORK_IO generated by reporting applications such as Tableau, SSRS, SQL Server Profiler, and Microsoft Office products. The next time a rudimentary Access database application tries to load the entire contents of the Sales.OrderLines table, you’ll likely see ASYNC_NETWORK_IO.

Reducing ASYNC_NETWORK_IO, like reducing many of the waits we discuss in this chapter, has little to do with hardware purchases or upgrades. It has more to do with poorly designed queries and applications, so the solution is usually an application change. Suggest to the developers of client applications that incur large amounts of ASYNC_NETWORK_IO that they:

- eliminate redundant queries
- use server-side filtering instead of client-side filtering
- use server-side data paging instead of client-side data paging
- use client-side caching

CXPACKET. A common and often-overreacted-to wait type, CXPACKET is a parallelism wait. In a vacuum, execution plans that are created with parallelism run faster. But at scale, with many execution plans running in parallel, the server’s resources might take longer to process the requests. The time parallel threads spend waiting on each other is measured in part as CXPACKET waits.

When CXPACKET is the predominant wait type experienced over time by your SQL Server, consider adjusting both the Maximum Degree of Parallelism (MAXDOP) and the Cost Threshold for Parallelism (CTFP) settings when performance tuning. Make these changes in small, measured increments, and don’t overreact to performance problems with a small number of queries. As you change these configuration settings, use the Query Store to benchmark and trend the performance of high-value and high-cost queries.

If large queries are already a performance problem and multiple large queries regularly run simultaneously, raising the CTFP might not solve the problem. Query tuning and index changes, including the creation of columnstore indexes, are the obvious solutions. In addition to these, use MAXDOP to limit parallelization for very large queries.

Until SQL Server 2016, MAXDOP could be set in three ways:

- at the server level
- at the query level
- selectively for sessions via Resource Governor (more on this toward the end of this chapter in the section “Protect important workloads with Resource Governor”)

Since SQL Server 2016, MAXDOP has also been available as a database-scoped configuration. You can also use the MAXDOP query hint in any statement to override the database-level or server-level MAXDOP setting.
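The three levels at which you can adjust these settings look roughly like this in T-SQL. The numbers are placeholders, not recommendations, because appropriate values depend on your core count and workload. Sales.OrderLines stands in for any large table.

```sql
-- Illustrative values only; baseline with Query Store before and after changing them.

-- Server level: both settings are advanced options.
EXEC sys.sp_configure N'show advanced options', 1;
RECONFIGURE;
EXEC sys.sp_configure N'cost threshold for parallelism', 50;
EXEC sys.sp_configure N'max degree of parallelism', 8;
RECONFIGURE;

-- Database level (SQL Server 2016 and later): run while connected to the target database.
ALTER DATABASE SCOPED CONFIGURATION SET MAXDOP = 4;

-- Query level: the hint overrides the database and server settings for this statement.
SELECT COUNT_BIG(*)
FROM Sales.OrderLines
OPTION (MAXDOP 2);
```

A Resource Governor workload group can also set MAX_DOP. Queries in that group cannot exceed that limit, even when they use the MAXDOP hint.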

IO_COMPLETION. This wait type is associated with synchronous read and write operations that are not related to row data pages. Examples include reading log blocks or virtual log file (VLF) information from the transaction log, and reading or writing merge join operator results, spools, and buffers to disk. It is difficult to associate this wait type with a single activity or event. However, a spike in IO_COMPLETION could indicate that these same operations are now waiting on the I/O system to complete.

LCK_M_*. Lock waits have to do with blocking and concurrency (or lack thereof). (Chapter 14 looks at isolation levels and concurrency.) Suppose a request is writing, and another request in READ COMMITTED or higher isolation is trying to lock that same row data. The blocked request will then report one of the 60+ different LCK_M_* wait types. For example, LCK_M_IS means that the thread wants to acquire an Intent Shared lock, but some other thread holds the resource in an incompatible lock mode.

High aggregate lock waits don’t mean you should reduce the isolation level of your transactions. READ UNCOMMITTED is not a good solution, whereas read committed snapshot isolation (RCSI) and snapshot isolation are good solutions; see Chapter 14 for more details. Otherwise, optimize execution plans for efficient access, for example, by reducing scans and avoiding long-running multistep transactions. Also, avoid index rebuild operations without the ONLINE option. (See the “Rebuild indexes” section earlier in this chapter for more information.)

The wait_resource column in sys.dm_exec_requests, or the resource_description column in sys.dm_os_waiting_tasks, provides a map to the exact location of the lock contention inside the database.

For a complete breakdown of the information that can be contained in the resource_description field in your version of SQL Server, visit https://learn.microsoft.com/sql/relational-databases/system-dynamic-management-views/sys-dm-os-waiting-tasks-transact-sql.
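To see the wait_resource and resource_description values for requests that are blocked right now, you can join the two DMVs. This sketch filters to LCK_M_* waits only. A parallel request can have several waiting tasks per session, so you may see more than one row per session_id.

```sql
-- Current lock waits, longest first, with the blocker and the contended resource.
SELECT wt.session_id,
       wt.wait_type,
       wt.wait_duration_ms,
       wt.blocking_session_id,
       wt.resource_description,   -- detailed lock resource from the waiting task
       r.wait_resource,           -- the same location as reported for the request
       r.database_id
FROM sys.dm_os_waiting_tasks AS wt
LEFT OUTER JOIN sys.dm_exec_requests AS r
    ON r.session_id = wt.session_id
WHERE wt.wait_type LIKE N'LCK[_]M[_]%'   -- brackets make the underscores literal
ORDER BY wt.wait_duration_ms DESC;
```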

MEMORYCLERK_XE. Strictly speaking, MEMORYCLERK_XE is a memory clerk, reported in sys.dm_os_memory_clerks, rather than a wait type. Its memory consumption could spike if you have allowed Extended Events session targets to consume too much memory. We discuss Extended Events later in this chapter. For now, watch out for the maximum buffer size allowed for the ring_buffer session target, among other in-memory targets.

OLEDB. This self-explanatory wait type describes waits associated with long-running external communication via the OLE DB provider. That provider is commonly used by SQL Server Integration Services (SSIS) packages, Microsoft Office applications (including querying Excel files), linked servers using the OLE DB provider, and third-party tools. This wait type can also be generated by internal commands such as DBCC CHECKDB. When you observe this wait in SQL Server, it is in most cases driven by long-running linked server queries.

PAGELATCH_* and PAGEIOLATCH_*. These two wait types are presented together not because they are similar in nature (they are not) but because they are often confused. To be clear, PAGELATCH has to do with contention over pages in memory, whereas PAGEIOLATCH relates to contention over pages in the I/O system (on the drive).

PAGELATCH_* contention deals with pages in memory. It can rise because of the overuse of temporary objects in memory, potentially with rapid access to the same temporary objects. It can also occur when reading data from an index or a heap in memory.

A rise in PAGEIOLATCH_* could be due to the performance of the storage system. Keep in mind that the performance of drive systems does not always respond linearly to increases in activity. Aside from throwing (a lot of!) money at faster drives, a more economical solution is to modify queries and/or indexes and reduce the footprint of memory-intensive operations, especially operations involving index and table scans.

PAGEIOLATCH_* contention has to do with a far more limiting and troubling performance condition: the overuse of reading from the slowest subsystem of all, the physical drives.

- PAGEIOLATCH_SH deals with reading data from a drive into memory so that the data can be accessed. Keep in mind that this doesn’t necessarily translate to a request’s row count, especially if index or table scans are required in the execution plan.
- PAGEIOLATCH_EX and PAGEIOLATCH_UP are waits associated with reading data from a drive into memory so that the data can be written to.

RESOURCE_SEMAPHORE. This wait type is accumulated when a request is waiting on memory to be allocated before it can start. Although this could be an indication of memory pressure caused by insufficient memory available to process the queries being executed, it is more likely caused by poor query design and poor indexing, resulting in inefficient execution plans. Aside from throwing money at more system memory, a more economical solution is to tune queries and reduce the footprint of memory-intensive operations. The memory grant feedback features that are part of Intelligent Query Processing help address these waits by improving memory grants for subsequent executions of a query.
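To see which requests currently hold memory grants and which are still waiting for one, you can query sys.dm_exec_query_memory_grants. In this sketch, a NULL grant_time marks a request that is still waiting for its grant. Those waiting requests are the ones accumulating RESOURCE_SEMAPHORE waits.

```sql
-- Memory grants currently granted or pending; pending rows are listed first.
SELECT mg.session_id,
       mg.request_id,
       is_waiting = CASE WHEN mg.grant_time IS NULL THEN 1 ELSE 0 END,
       mg.requested_memory_kb,
       mg.granted_memory_kb,
       mg.wait_time_ms,
       mg.query_cost,
       mg.dop,
       query_text = st.text
FROM sys.dm_exec_query_memory_grants AS mg
OUTER APPLY sys.dm_exec_sql_text(mg.sql_handle) AS st
ORDER BY is_waiting DESC, mg.requested_memory_kb DESC;
```

Very large requested_memory_kb values for modest queries are often a hint that the execution plan, rather than the server’s memory, is the thing to fix.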

SOS_SCHEDULER_YIELD. Another flavor of CPU pressure, and in some ways the opposite of the CXPACKET wait type, is the SOS_SCHEDULER_YIELD wait type. It indicates that SQL Server had to share time with, or yield to, other CPU tasks, which can be normal and expected on busy servers. CXPACKET is SQL Server complaining about too many threads in parallel. SOS_SCHEDULER_YIELD, in contrast, is the acknowledgement that there were more runnable tasks than the available schedulers could service. In either case, start by reducing CPU-intensive queries and by rescheduling or optimizing CPU-intensive maintenance operations. This is more economical than simply adding CPU capacity.

WAIT_XTP_RECOVERY. This wait type is expected when a database with memory-optimized tables is in recovery at startup. As with all wait types on performance-sensitive production SQL Server instances, you should baseline and measure it, but be aware that it is not usually a sign of any problem.

WRITELOG. The WRITELOG wait type is likely to appear on any SQL Server instance with heavy write activity, including availability group primary and secondary replicas. WRITELOG is time spent flushing the transaction log to a drive, and it is driven by physical I/O subsystem performance. On systems with heavy writes, this wait type is expected.

You could consider re-creating the heavy-write tables as memory-optimized tables to increase the performance of writes. Memory-optimized tables optionally allow for delayed durability, which uses a memory buffer to relieve a bottleneck in writing to the transaction log. The trade-off is that the most recently committed transactions can be lost if the server fails. For more information, see Chapter 14.
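Both PAGEIOLATCH_* and WRITELOG point at storage latency, which you can corroborate per file with sys.dm_io_virtual_file_stats. This sketch computes the average stall per read and per write since the instance started. Read stalls on data files relate to PAGEIOLATCH_*, and write stalls on log files relate to WRITELOG. What counts as a high value depends on your workload and hardware, so compare the results against your own baseline.

```sql
-- Average I/O stall per operation, per database file, since instance startup.
SELECT database_name = DB_NAME(vfs.database_id),
       mf.type_desc,                -- for example, ROWS for data files, LOG for log files
       mf.physical_name,
       vfs.num_of_reads,
       avg_read_stall_ms  = 1.0 * vfs.io_stall_read_ms  / NULLIF(vfs.num_of_reads, 0),
       vfs.num_of_writes,
       avg_write_stall_ms = 1.0 * vfs.io_stall_write_ms / NULLIF(vfs.num_of_writes, 0)
FROM sys.dm_io_virtual_file_stats(NULL, NULL) AS vfs   -- NULL, NULL = all databases, all files
INNER JOIN sys.master_files AS mf
    ON mf.database_id = vfs.database_id
   AND mf.file_id = vfs.file_id
ORDER BY avg_read_stall_ms DESC;
```

NULLIF prevents a divide-by-zero error for files that have not been read from or written to since startup.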

XE_FILE_TARGET_TVF and XE_LIVE_TARGET_TVF. These waits are associated with writing Extended Events sessions to their targets. A sudden spike in these waits would indicate that too much is being captured by an Extended Events session. Usually these aren’t a problem, because the asynchronous nature of Extended Events has a much lower impact than traces.
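To check how much memory Extended Events is using, including the MEMORYCLERK_XE memory clerk, you can query the memory clerk and session DMVs. In this sketch, total_buffer_size is reported in bytes. A growing dropped_event_count suggests that a session is capturing more events than its buffers can absorb.

```sql
-- Total memory held by the Extended Events memory clerk.
SELECT mc.type,
       pages_kb = SUM(mc.pages_kb)
FROM sys.dm_os_memory_clerks AS mc
WHERE mc.type = N'MEMORYCLERK_XE'
GROUP BY mc.type;

-- Buffer memory and dropped events per running Extended Events session.
SELECT s.name,
       s.total_buffer_size,       -- bytes
       s.dropped_event_count
FROM sys.dm_xe_sessions AS s
ORDER BY s.total_buffer_size DESC;
```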

Monitor with the SQL Assessment API

The SQL Assessment API is a code-delivered method for programmatically evaluating SQL Server instance and database configuration. It was first introduced in 2019 with SQL Server Management Objects (SMO) and the SqlServer PowerShell module. You can call the API to evaluate alignment with best practices, and then schedule those calls to monitor regularly for variance.

You can use this API to assess SQL Server 2012 and later instances, on both Windows and Linux. The assessment compares SQL Server configuration to rules stored as JSON files, and Microsoft provides a standard set of rules.

Review the default JSON configuration file at https://learn.microsoft.com/sql/tools/sql-assessment-api/sql-assessment-api-overview.


Use this standard JSON file as a template to assess your own best practices if you like. The assessment configuration files are organized into the following:

Probes. These usually contain a T-SQL query—for example, to return data from DMOs (discussed earlier in this chapter). You can also write probes against your own user tables to query for code-driven states or statuses that can be provided by applications, ETL packages, or custom error-handling.

Checks. These compare desired values with the actual values returned by probes, using logical conditions and thresholds. As with any SQL Server monitoring tool, you might want to change the numeric thresholds to suit your SQL environment.

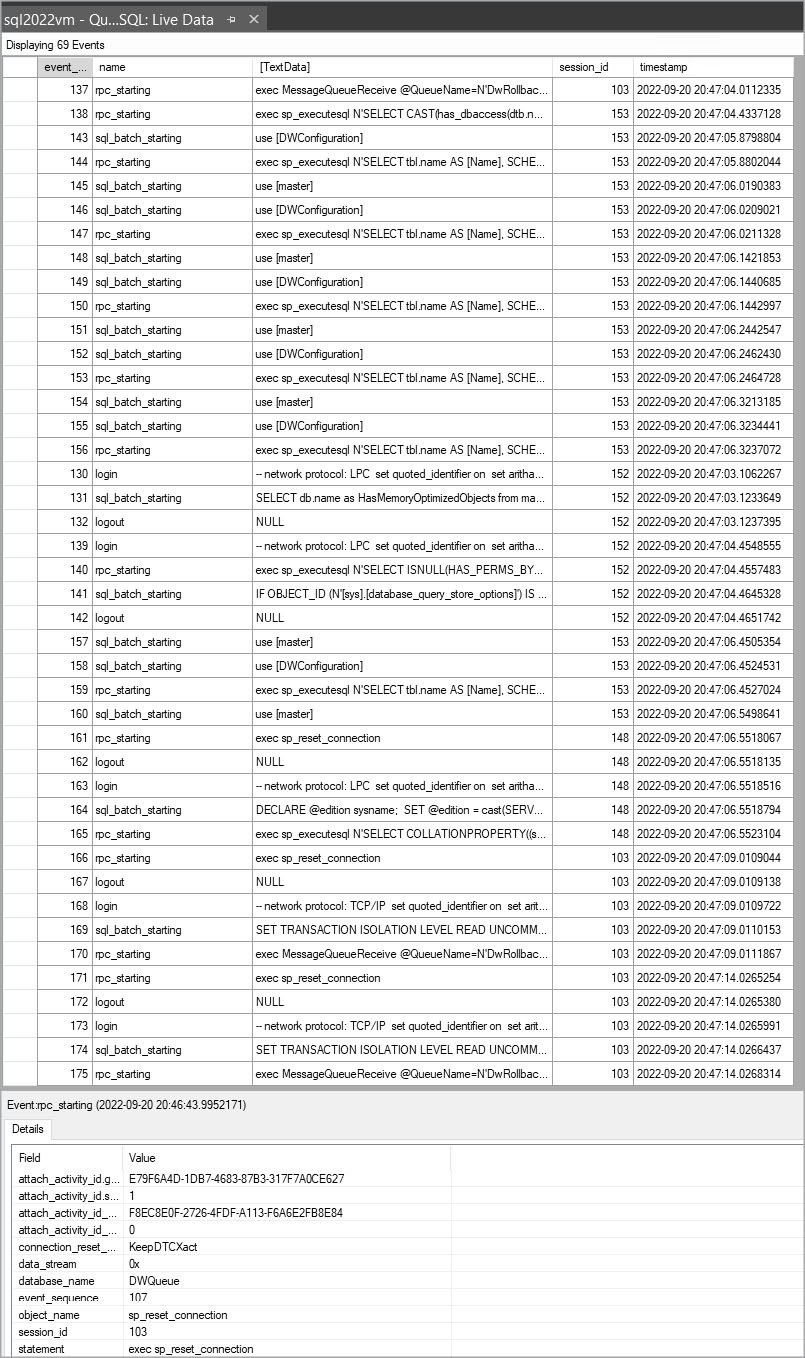

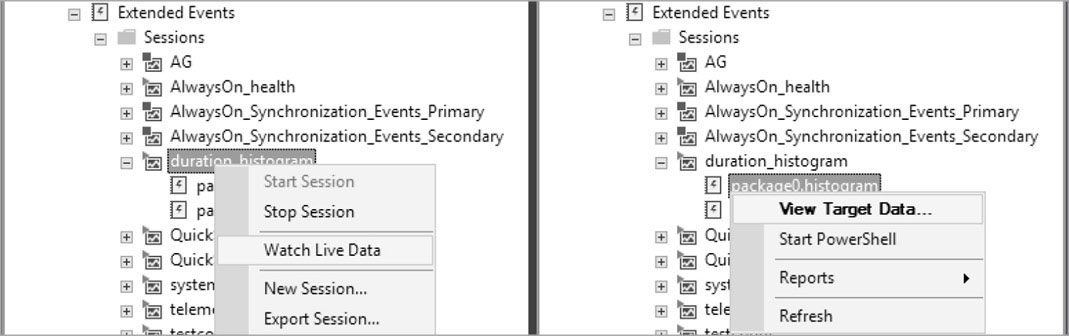

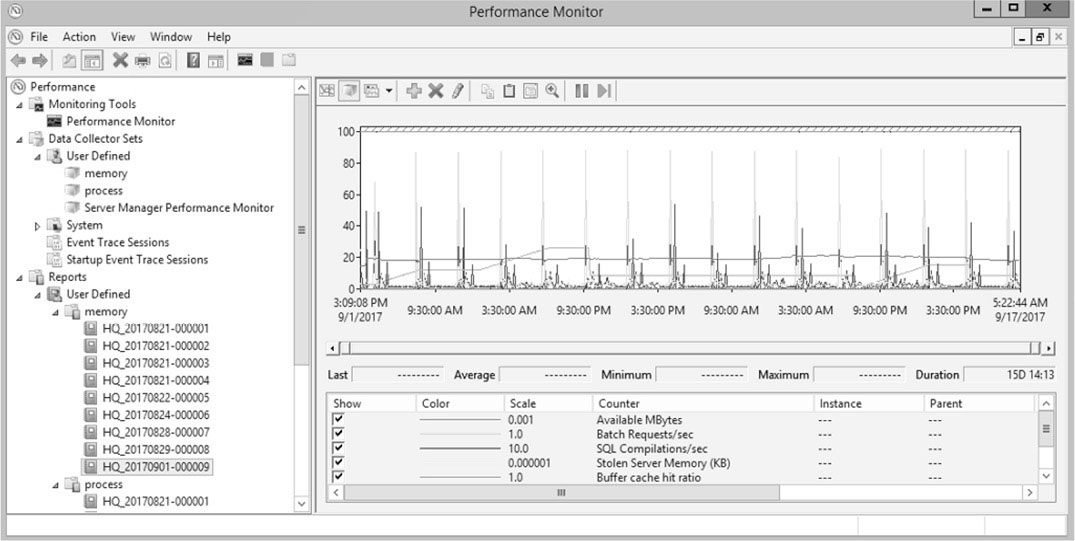

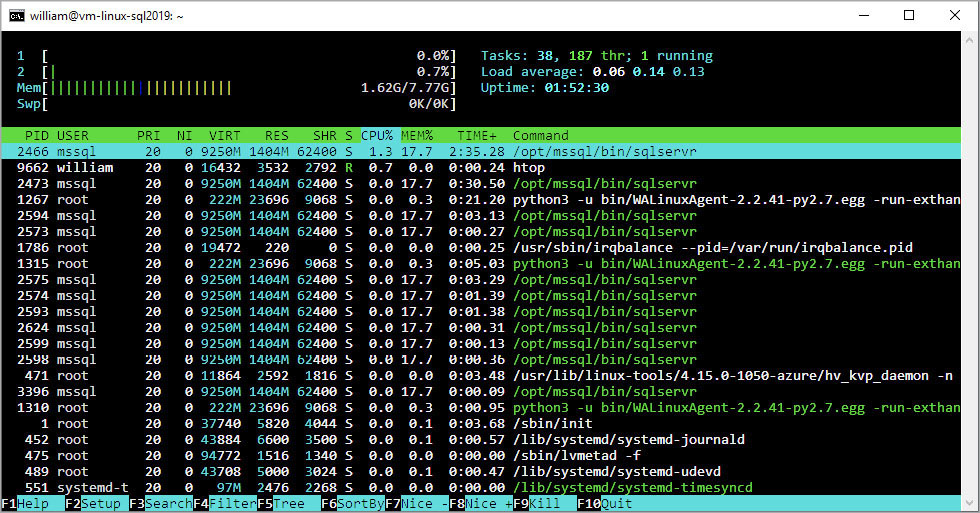

Get started with the SQL Assessment API