24. Case Study: System/360 Architecture

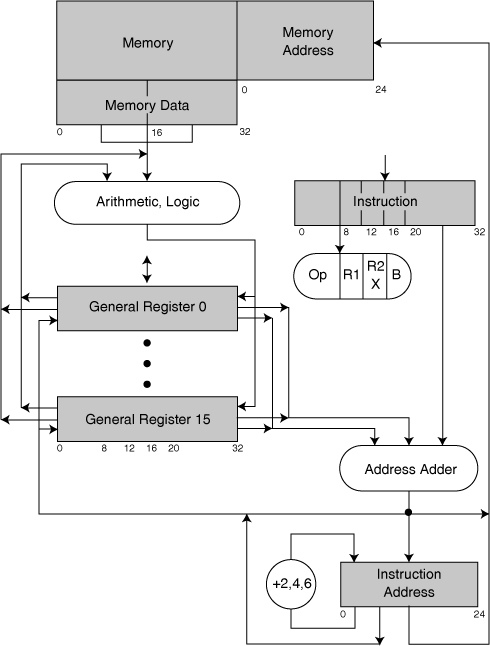

Basic programming model of the IBM System/360

Blaauw and Brooks [1997], Computer Architecture, Figure 12-78

TOM A. WISE [1966], FORTUNE MAGAZINE

[The IBM System/360 mainframe and its compatible successors]... was the workhorse of computers for such a long time and continues to be.

Highlights and Peculiarities

Boldest Decision

Drop all further development of each of IBM’s six existing product lines in favor of one new product line, exposing the existing customer base to competitors’ computers compatible with the existing IBM product lines. Needless to say, this decision was made by the CEO, Thomas J. Watson, Jr.

Bold Decision

Make the new six-computer product line strictly upward- and downward-binary-compatible, with exactly one architecture. This initiative came from Donald Spaulding, and Bob O. Evans made it a decision.

Bold Decision

Base the architecture on an 8-bit byte, making obsolete all existing I/O and auxiliary devices, even card punches.

Introduction and Context

Owner:

IBM Corporation

Designers:

Gene Amdahl, Architecture Manager; Gerrit Blaauw, Second Architect and manual author; Richard Case, George Grover, William Harms, Derek Henderson, Paul Herwitz, Graham Jones, Andris Padegs, Anthony Peacock, David Reid, William Stevens, William Wright; Frederick Brooks, Project Manager

Dates:

1961–1964

Context

Few computer architectures have had their rationales so thoroughly discussed as the IBM System/360 product family. The “Notes and References” section gives some of the most important rationale discussions.1 This case-study essay will therefore hit only the high spots.

In 1960 it was clear that IBM’s second-generation (discrete-transistor technology) computer product lines were running out of architectural gas (memory-addressing capacity, principally). IBM’s existing mutually incompatible product lines, each with its own software and market support, were

• IBM 650 (first-generation, vacuum tubes) and its incompatible transistorized successor, the 1620

• IBM 1401 and its incompatible successor, the 1410

• IBM 7070-7074

• IBM 702-705-7080

• IBM 701-704-709-7090

• IBM 7030 (Stretch, nine copies, no further marketing)

Of these, the first two, some two-thirds of the total fielded machines, were the responsibility of the General Products Division (GPD); the rest were the responsibility of the Data Systems Division (DSD). The 1410 and the 7070 competed directly with each other, as did the 7080 and the 7074. The several product lines represented quite distinct architectural philosophies and basic decisions.

DSD had started developing, in 1959, a new product line, the “8000 Series,” based on second-generation discrete transistor technology, reflecting Stretch architectural philosophy and designed to serve as successor replacements to the 7074, 7080, 7090, and Stretch. The first of these engineering models was running, and four models of the 8000 series had been through “zero-level” cost estimating, market forecasting, and pricing by January 1961. A key component of the market forecast was a new set of applications based on telephonic computer communications.

During the first half of 1961, there was a raging product fight within DSD as to whether to proceed at once with the 8000 Series, as I mistakenly advocated, or to wait three years and design a new product line to be produced with the forthcoming new integrated-circuit technology. The latter plan, championed by Bob O. Evans, won out. The 8000 Series effort was stopped, and in June work started on a new integrated-circuit DSD product line. Evans put me in charge, a totally unexpected action by a very big-minded man.

Meanwhile, corporate technical staffer Donald Spaulding became convinced that IBM needed a unified, corporate-wide new product line, not just a new DSD line that addressed only the upper half of the market. He persuaded Vice President T. V. Learson, who convened a corporate-wide strategy committee, the SPREAD committee, to develop such a plan. The committee was shrewdly put under the leadership of John Haanstra, Engineering VP of GPD, who might have been expected to most vigorously oppose any constraint on GPD autonomy, and whose 1401 product line was proving immensely successful (the first computer to sell more than 10,000 copies). The SPREAD committee produced its report at the end of 1961, and the Corporate Management Committee adopted its recommended New Product Line as the successor for all existing product lines.2 This stunningly bold move was later called, by Fortune magazine, “IBM’s $5,000,000,000 gamble.”3 Evans called it “You bet your company.” I was appointed Corporate Processor Manager to coordinate all the development activities. Fortunately, besides this staff-type corporate-wide authority, I had line responsibility for the market requirements and architecture efforts for the whole project, and line responsibility for the DSD computer engineering and all the programming efforts. Staff authority is paper signoff authority; line authority has money and people.

The SPREAD report called for six computers to be developed initially, with an ultra-low-cost machine and a super-supercomputer to come within a couple of years. The first six were christened Models 30, 40, 50, 60, 64, and 70; the later two, Models 20 and 90. Models 20 and 30 would be GPD responsibilities; the others, DSD responsibilities.

Objectives

Primary Goals

• Create a strictly upward- and downward-binary-compatible computer architecture.

• The computers must be suitable and competitive for business data processing, scientific engineering computing, and telecomputing.

• Broaden the capabilities for new applications so that IBM would have a steadily growing sales dollar volume, even as cost per computer dropped in half. We could not expect IBM’s market share of existing applications to increase substantially, or for those applications’ volumes to double quickly.

• Make each of the models cost-effective (competitive) in its own market, from the very low-cost to the fastest supercomputer.

Other Important Objectives

• Develop a single new total software support, exploiting binary compatibility to enable a single rich system to replace the multitude of incomplete second-generation systems. This must include a new operating system incorporating the fast-developing concepts from second-generation computers’ operating systems experience.

• Devise ways to help customers convert to System/360 from their second-generation systems, even as competitors offered compatible successor machines to IBM’s discontinued product lines.

• Provide an architecture, sometimes to be implemented in hardened technology, meeting the needs of IBM’s Federal Systems Division for both military and government civilian (such as NASA) products.

• Achieve new levels of reliability and maintainability, including ultra-reliable multiprocessor systems.

Opportunities as of June 1961

A New Architecture Necessary

Magnetic-core memories had proved to be quite reliable, and their costs had fallen radically. As a consequence, all customers wanted more memory. Since all the existing product lines had exhausted their addressing capacities, one or more major architectural revisions would be necessary. This gave us the opportunity to apply many lessons learned from first- and second-generation computer uses and users. These lessons were hard to exploit within the old architectures.

New, Cheaper Technology

IBM’s technology division was in hot pursuit of integrated circuits and would have an important way-station, called Solid Logic Technology (SLT), ready for volume manufacture in 1964. This promised cost savings of about a factor of two for computers of any given complexity, along with smaller sizes, lower power, and higher reliability. This drastic performance/cost increase promised to be incentive enough for customers to go through the painful and costly process of conversion to a new incompatible system.

Plenty of Design Time

The timing of the new technology meant that for once the system architects would have plenty of time, almost two years, to do a thorough and careful job.

New Kind of I/O Device

Random-access disk technology had progressed rapidly, enabling entirely new data-processing approaches and a radically different approach to operating systems.

New Telecomputing Capability

Computer communications technology, originally developed for air defense, was beginning to be attractive for commercial applications and had been pioneered in airline reservation systems.

Challenges and Constraints

Compatibility—Address Size

By far the greatest technical challenge was achieving strict (binary) upward and downward compatibility while enabling each level of computer to compete in its own market against rifle-shot competitors. How to keep the smallest machine low-cost, without thereby overly constraining the supercomputer? How to enable the supercomputer to be super-fast without burdening the low-cost one? The principal problem was address size. The top of the line needed lots of address bits; could the bottom of the line (serially implemented) afford the memory bit investment and the performance hit of fetching lots of empty address bytes?

Compatibility—Operation Set

How to provide complex operations such as floating-point for scientific applications and character-string operations for business data without compromising the cost objectives of the machines?

Broader Application Scope

A third major challenge was achieving the total systems diversity needed for new applications (especially communications and remote terminals), for compute-intensive systems, and for data-processing-intensive systems.

Conversion from Existing Systems

Conversion from second-generation systems was a nightmare that we didn’t spend much effort thinking about during the first year of the design.

Most Significant Design Decisions

8-Bit Byte

The byte is 8 bits rather than the 6-bit byte that had characterized all first- and second-generation computers (except Stretch). This was the biggest and most hotly debated decision. It had many ramifications. Floating-point precision argued for 48-bit words and 96-bit double words, hence 6-bit bytes. An instruction length of 24 bits was too small; 48, too large. And what would be the demand for the lowercase alphabet, almost unknown in earlier computers?

The future application promise of the lowercase alphabet was convincing to me. We settled on 8-bit bytes, 32-bit data words and single-address instructions, and 32- and 64-bit floating-point words.
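The character-set side of this argument is simple counting. As a rough sketch (the punctuation count below is an illustrative assumption, not a tally the designers are known to have used), a 6-bit code cannot hold both alphabet cases alongside digits and punctuation, while an 8-bit code has room to spare:

```python
import string

# The 26-letter alphabet and 10 digits are certain; the punctuation
# count is an assumption chosen only for this illustration.
UPPER = len(string.ascii_uppercase)   # 26
LOWER = len(string.ascii_lowercase)   # 26
DIGITS = len(string.digits)           # 10
PUNCTUATION = 20                      # assumed: space, period, comma, ...

def codes(bits_per_byte):
    """Number of distinct characters a byte of this width can encode."""
    return 2 ** bits_per_byte

def fits(bits_per_byte, with_lowercase):
    """True if the assumed inventory fits in one byte of the given width."""
    needed = UPPER + DIGITS + PUNCTUATION
    if with_lowercase:
        needed += LOWER
    return needed <= codes(bits_per_byte)

for bits in (6, 8):
    print(bits, codes(bits), fits(bits, False), fits(bits, True))
```

Under these assumptions the uppercase-only inventory fits in 64 codes, but adding lowercase does not; both fit easily in 256. The same byte width also fixes the natural word sizes: eight 6-bit bytes make a 48-bit word, and four 8-bit bytes make a 32-bit word.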

Failed Stack Architecture

We started with a stack architecture as an attack on the address-length problem. After pursuing this for six months, we found it worked fine for mid-range and up, but killed performance at the bottom of the line, where the stack had to be implemented in main memory, rather than in registers.

Design Competition

After the stack architecture failed, Amdahl proposed that we have an internal design competition. His idea worked brilliantly—Amdahl’s team and Blaauw’s team each independently came up with a base-register solution to the address-size problem. So we adopted that.
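A base-register scheme attacks the address-size problem by keeping the full address in a register and putting only a register number and a short displacement in each instruction. The sketch below assumes the field widths commonly documented for S/360 (sixteen general registers, a 12-bit displacement, and register number 0 meaning "no register"); none of these details is stated in this essay, and the function and constant names are hypothetical.

```python
ADDRESS_BITS = 24                       # address size chosen for 1964
ADDRESS_MASK = (1 << ADDRESS_BITS) - 1  # 24 bits reach 16 MiB of bytes
DISPLACEMENT_BITS = 12                  # assumed field width
REGISTER_FIELD_BITS = 4                 # assumed: 16 general registers

def effective_address(regs, base, index, displacement):
    """Base + index + displacement, truncated to the address size.

    Register number 0 is treated as 'no register' (contributes zero),
    which is an assumption of this sketch.
    """
    if not 0 <= displacement < (1 << DISPLACEMENT_BITS):
        raise ValueError("displacement must fit in 12 bits")
    base_value = regs[base] if base != 0 else 0
    index_value = regs[index] if index != 0 else 0
    return (base_value + index_value + displacement) & ADDRESS_MASK

regs = [0] * 16
regs[12] = 0x012000   # base register points at a program's data area
regs[3] = 0x000010    # index register steps through an array

print(hex(effective_address(regs, 12, 0, 0x345)))
print(hex(effective_address(regs, 12, 3, 0x345)))

# Bits spent per storage operand inside the instruction itself:
print(REGISTER_FIELD_BITS + DISPLACEMENT_BITS, "vs", ADDRESS_BITS)
```

With these widths, each storage operand costs 16 instruction bits rather than 24, so the small machines fetch fewer address bytes while the large machines can still reach the whole address space through the register contents.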

24-Bit Addresses

We reluctantly settled on this size, with addressing to the byte. We knew, and I publicly predicted in 1965, that we would at some point in the life of the architecture have to go to 32 bits, but we couldn’t afford it for 1964 implementations.4 Various wise provisions were made for that future jump, but unfortunately, the Branch and Link subroutine call instruction was inadvertently designed to use the upper 8 bits of address that should have been left untouched.

This is a clear example of the danger of team designs. I had failed to indoctrinate the whole team strongly enough with our vision for future expansion, and none of the reviews caught this mistake.
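To see why the Branch and Link behavior blocked a clean move to 32-bit addresses, consider a sketch of the word it leaves in the link register. The field layout assumed here (a 2-bit instruction-length code, a 2-bit condition code, and a 4-bit program mask packed above the 24-bit return address) follows the commonly documented S/360 definition but is not given in this essay; the function names are hypothetical.

```python
ADDRESS_MASK_24 = 0x00FFFFFF

def branch_and_link_word(return_address, ilc, cc, program_mask):
    """Pack status bits into the upper 8 bits above a 24-bit return address."""
    assert 0 <= ilc < 4 and 0 <= cc < 4 and 0 <= program_mask < 16
    return ((ilc << 30) | (cc << 28) | (program_mask << 24)
            | (return_address & ADDRESS_MASK_24))

def return_address_24(link_word):
    """What a 24-bit machine uses when returning: the low 24 bits only."""
    return link_word & ADDRESS_MASK_24

link = branch_and_link_word(0x001234, ilc=2, cc=1, program_mask=0xF)
print(hex(link))                     # upper byte now holds status, not zeros
print(hex(return_address_24(link)))  # the 24-bit return address survives

# If a later machine treated all 32 bits as an address, the same link word
# would point somewhere else entirely:
print(link == return_address_24(link))
```

Because existing programs leave nonzero status bits in the upper byte, a successor machine cannot simply reinterpret that byte as address bits without breaking them.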

Standard I/O Interface

To enable a wide diversity of specialized application systems, we designed a standard logical, electrical, and mechanical interface for the attachment of all I/O devices, as Buchholz had first done on Stretch. This radically reduced configuration and software costs and simplified engineering development of I/O devices and control units.

Supervisory Control Provisions

A carefully thought-out set of supervisory capabilities was designed, so the systems could be controlled by an operating system without manual intervention. These included an interruption system, memory protection, a privileged instruction mode, and a timer.

Single-Error Detection

Complete end-to-end single-error detection was mandated for all S/360 implementations, in spite of no evident customer desire to pay for such. This substantially helped in achieving the stiff reliability and maintainability goals.

Commercial data-processing computers from all manufacturers had from the initial UNIVAC incorporated extensive checking. Scientific computers, from the initial Burks, Goldstine, and von Neumann paper, had not. This seems inverted; surely a hardware error in calculating an atomic explosion matters more than one in a utility bill. I think the difference is that the scientific community routinely incorporated global checks such as energy conservation in their programs.

We had observed that people were by 1961 trusting the answers from their computers, so as a matter of professional responsibility we incorporated the hardware checking and hoped the extra cost would not kill the market.
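The simplest form of single-error detection is one parity bit per byte, which is consistent with the 4 parity bits per 32-bit word mentioned later for the Model 65. The following is a minimal sketch, assuming odd parity (the choice of odd over even is an assumption here, and the function names are hypothetical):

```python
def odd_parity_bit(byte):
    """Parity bit that makes the total number of 1 bits (data + parity) odd."""
    ones = bin(byte & 0xFF).count("1")
    return 1 - (ones % 2)

def parity_ok(byte, parity):
    """True if the 9-bit unit (8 data bits + parity) still has odd parity."""
    ones = bin(byte & 0xFF).count("1") + parity
    return ones % 2 == 1

data = 0b10110010
p = odd_parity_bit(data)

print(parity_ok(data, p))              # stored and read back intact
print(parity_ok(data ^ 0b00000100, p)) # one bit flipped: detected
print(parity_ok(data ^ 0b00000110, p)) # two bits flipped: slips through
```

Any single flipped bit changes the count of 1s from odd to even and is caught; a double flip restores odd parity and goes unnoticed, which is why the guarantee is stated as single-error detection.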

Decimal Arithmetic

In order to simplify conversion and user training for the huge data-processing market, we decided to incorporate decimal arithmetic as well as binary arithmetic. (All addressing was binary, in contrast to earlier 650, 1401, 1410, 7070, 7074, and 7080 systems.)

Providing a decimal datatype was probably a mistake; we should have instead seen to it that COBOL and other languages handled that problem by keeping money amounts in integral pennies, so there would be no fractional conversion error. How much omitting the decimal datatype would have hurt marketing, one can only speculate. The hardware cost was not substantial; the software cost and the added conceptual complexity were.
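The integral-pennies alternative can be illustrated in a few lines. This is only a sketch of the idea in a modern language, not of how any COBOL compiler of the period worked; `format_cents` is a hypothetical helper.

```python
def format_cents(cents):
    """Render a non-negative integer number of cents as dollars and cents."""
    return f"{cents // 100}.{cents % 100:02d}"

# Ten-cent charges held as binary fractions of a dollar:
as_fractions = 0.10 + 0.10 + 0.10

# The same charges held as whole pennies in binary integers:
as_cents = 10 + 10 + 10

print(as_fractions == 0.30)    # False: 0.10 has no exact binary representation
print(as_cents == 30)          # True: integer arithmetic is exact
print(format_cents(as_cents))  # 0.30
```

Keeping amounts in integer cents means binary hardware never has to represent a decimal fraction, so no fractional conversion error can arise; the decimal point appears only when the amount is printed.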

Multiprocessing

Provisions were made for multiple processors to be configured into a single system, with system control operating on whichever one was not failing.

Microprogrammed Implementation

We mandated, in the SPREAD report, microprogrammed implementation unless a particular engineering manager could show a 33 percent performance/cost advantage for conventional logic. This enabled the lower-end processors to include the fairly rich uniform operation set with the only cost being a little more control memory. Models 60 and 64 started development with conventional logic and switched during development to a single Model 65, with a microprogrammed implementation. Models 75 and 91 used conventional logic.

Emulation of Earlier Architectures

Stewart Tucker saw that the 32-bit-4-parity-bit memory and datapath word of the Model 65 implementation could gracefully accommodate the 36-bit-no-parity word of the 7090. He invented a microcoded 7090 emulator that used the Model 65 datapaths quite effectively. This breakthrough proved to be a major solution to the conversion problem for 7090, 7074, and 7080 customers.5

At a crucial point in January 1964, William Harms, Gerald Ottoway, and William Wright devised almost overnight a microprogrammed emulation of the 1401 on the Model 30. This mightily addressed the biggest single customer conversion problem.

No Virtual Memory

During S/360 architecture definition, virtual memory was invented on the Manchester Atlas, and operating systems using it were developed at Cambridge, MIT, and Michigan. We debated long and hard about whether to switch our design over. We decided not to, for performance reasons. This was a mistake, which was rectified in the first successor generation, System/370.

New Random-Access I/O Devices

The project spent a lot of development effort on a new drum for operating system residence and new disk files. We saw this as fundamental for new applications and for diversity of system configurations. Similarly, new single-line and multiline communications controllers were developed.

Input-Output Channels

I/O was handled by independently operating channels, essentially specialized stored-program units, some optimized for rapid block transfer and some for multiplexing up to 256 communication lines.

Milestone Events

Summer 1961

Work starts in DSD on the architecture of the new product line. Amdahl, Boehm, and Cocke from IBM Research join Blaauw’s architecture team from the 8000 series. Work begins on the stack approach.

January 1962

A corporate-wide effort is organized.

Spring 1962

The first performance evaluations show the stack architecture to be noncompetitive. A design competition leads to base-register addressing.

Summer 1962

The byte-size debate is settled.

Fall 1962

A first draft of the architecture manual is produced.

Fall 1963

The architecture manual is frozen.

January 1964

There is a major product fight between S/360 Model 30 and GPD’s 1401S, a six-times-faster 1401 successor pushed by Haanstra, now Division President of GPD. S/360 won by the invention of 1401 emulation on Model 30.

April 1964

The announcement is made of Models 30, 40, 50, 65, and 75, with a hint that Model 90 is coming.

February 1965

The first S/360 is shipped (Model 40).

August 1972

System/370 virtual memory is announced.

Early 1980s

System/370 XA 31-bit architecture is announced.

2000

z-Series 64-bit architecture is announced.6

Assessment

Firmness

One definition of firmness for a computer architecture would be “durability.” I predicted that the architecture would endure in various implementations for 25 years, with modifications to provide larger addresses.4 It is now 45 years since the S/360 announcement, and the architecture endures as progressively augmented. One recent implementation is the IBM z/90, announced in March 2007. It is still backward-compatible; S/360 programs will still run. These so-called mainframes continue to do a large portion of the world’s database work, running a descendant of MVS/360, VM/360, or, increasingly, Linux as the operating system.

Another definition of firmness would be “impact on the field.” Gordon Bell, himself a great computer architect for DEC, recently identified the System/360 as the most influential computer in history, referring to intellectual influence, not market presence, where the PC would win handily.7 The S/360’s switch to the 8-bit byte changed computer architecture completely and permanently. Its heavy emphasis on disk-based input-output configurations also changed system design radically.8

Gene Amdahl licensed S/360 architecture and implemented it exactly in the highly successful Amdahl Corporation computer family. RCA licensed the architecture and used it in its Spectra 70 family. Although RCA faithfully implemented all the architecture affecting Problem Mode, its architects chose to do an idiosyncratic version of the Supervisory Mode architecture. RCA’s version was licensed and extensively used by Siemens, Fujitsu, and Hitachi and was copied by the Soviets.

S/360 architecture clearly influenced DEC’s VAX family and the PDP-11 computer family, along with the PDP-11’s numerous microcomputer descendants such as the Motorola 6800 and 68000.

Usefulness—Competitiveness, Market by Market

Commercially, the System/360 gamble was a big success. IBM annual reports show average annual growth from 1964 to 1968 of 21 percent in revenue and 20 percent in profit.

Some 144 new products were announced on April 7, 1964. Many of these were various memory options. Most, however, were a stunning array of 8-bit input-output devices: multiple printers, some with variable character sets; multiple disks, some with replaceable cartridges; new tape systems; a spectrum of communications terminals and network devices; new card punches, readers, and printers; and miscellaneous devices such as check sorters and factory-data-input terminals. The richness of this collection, developed in many far-flung laboratories, enabled a near-infinite variety and scale of system configurations. The standard I/O interface and its software support meant that configuration growth and change were easy. CPU compatibility meant that the machine at the center of a configuration was often upgraded to a different model over a weekend, without changing the I/O configuration or the software.

All the models did well in their respective markets. The Model 30, with its disks and printer, was an instant success. The upward-compatible Model 20 did very well when it appeared soon after.

The Model 65 was a major success in applications previously performed on 7090, 7094, 7094 II, 7080, 7074, and other models. The new database techniques were well served by this model, and it and its descendant models dominated the field. It also did very well as the engineering computing workhorse. The serious competitors were mostly themselves computers with S/360 architecture, the so-called plug compatibles, usually running OS/360 software.

Models 75, 91, and others in that family were designed as scientific supercomputers. They split that market pretty evenly with the contemporary CDC and Cray machines, but the Cray descendants came to dominate it. Four Model 75s provided the ground-based computing for the Apollo program; hardened derivatives of System/360 served as the on-board computers.

Delight

The original architecture was rather clean, as was the careful conceptual separation of architecture, implementation, and technological realization.9 The requirement of strict upward and downward compatibility imposed a strict discipline that protected the low end from functional deficiency and the high end from excess. (Similarly, any writer learns that a strict page limit often yields cleaner and more effective writing.) Blaauw left well-placed spaces in the operation-code list for future additions. And additions there certainly have been, with the result that the operation-code set is no longer as orderly as it was.

Our biggest mistake technically was the failure to adopt virtual memory at the outset. This was a case of expert designers going wrong in a big way (Chapter 14).

Our biggest mistake esthetically and conceptually was our failure to recognize that an I/O channel was just another computer. Cray’s peripheral processors, introduced on the CDC 6600, are a superb embodiment of an elegant and powerful concept. Each of many concurrent I/O flows is controlled by an architecturally separate simple small binary computer, all implemented with one time-shared dataflow.

The ugliest thing in the original CPU architecture was the SS instruction format, which provided a base register but not a separate index register, as did all the other formats. As remarked above, Branch and Link uses high-order address bits that should have been reserved for the expansion to 32-bit addresses. Load Address cleared those same high-order bits.

A smaller mistake is that we initially failed to provide a guard digit in the definition of floating-point operations. We had to field-modify the first S/360 computers after delivery.
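The effect of a missing guard digit is easiest to see in a toy model. The sketch below uses a 3-digit decimal mantissa with truncation, purely for readability; it is not the S/360 floating-point format, and `subtract` is a hypothetical helper.

```python
from fractions import Fraction

def subtract(x_mant, x_exp, y_mant, y_exp, base=10, guard_digits=0):
    """Compute x - y the way a simple floating-point subtracter might.

    x = x_mant * base**x_exp and y = y_mant * base**y_exp, with
    x_exp >= y_exp. y is shifted right to line up with x; digits shifted
    past the guard digits are simply dropped (truncated).
    """
    shift = x_exp - y_exp
    if shift < 0:
        raise ValueError("this sketch expects x_exp >= y_exp")
    keep = min(shift, guard_digits)          # low-order digits retained
    x_aligned = x_mant * base ** keep
    y_aligned = y_mant // base ** (shift - keep)
    return (x_aligned - y_aligned) * Fraction(base) ** (x_exp - keep)

# 1.00 - 0.999, each operand held to three significant digits
exact = Fraction(1) - Fraction(999, 1000)
no_guard = subtract(100, -2, 999, -3, guard_digits=0)
one_guard = subtract(100, -2, 999, -3, guard_digits=1)

print(exact, no_guard, one_guard)
```

Without a guard digit, aligning 0.999 to 1.00 truncates it to 0.99, and the difference comes out as 1/100 instead of 1/1000, wrong by a full factor of the base. Keeping one extra digit during alignment recovers the exact answer in this case.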

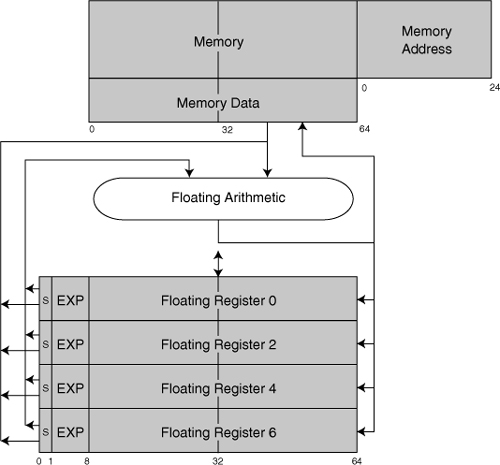

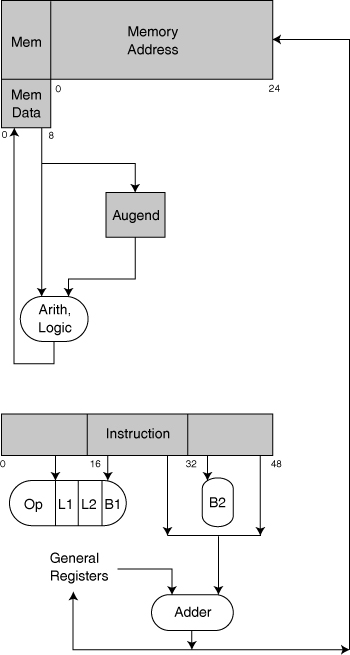

Perhaps the most telling esthetic critique of our effort would be a jeer that the S/360 was really three architectures under one cover: the basic 32-bit binary machine, the 64-bit floating-point machine with a different dataflow, and the byte-by-byte processor, with a quite different dataflow and even decimal arithmetic (chapter frontispiece, Figure 24-1, and Figure 24-2). In fact, when one adds selector channels and multiplex channels, there are really five architectures present. Microcoded implementations make it all work.

Figure 24-1 System/360 floating-point dataflow

Blaauw and Brooks [1997], Computer Architecture, Figure 12-79

Figure 24-2 System/360 byte-by-byte dataflow

Blaauw and Brooks [1997], Computer Architecture, Figure 12-80

What was achieved by these multiple concurrent architectures was a truly general-purpose computer family, adaptable by suitable processor, memory, and especially I/O configurations, to all kinds of applications and performance needs.

General Lessons Learned

1. Allow plenty of project time for design. It makes the product much better and useful longer, and it might even make delivery sooner by reducing rework.

2. Having multiple concurrent implementations of the same architecture strongly protects the architecture from bad compromises, when it is discovered that an implementation has (usually inadvertently) departed from the architecture. With only one implementation, it is always easier, cheaper, and faster to change the manual rather than the machine. Chapter 6 of The Mythical Man-Month treats this and other methods of ensuring conformity of implementation to architecture (rather than the reverse) in some detail.

3. Amdahl’s proposal for a design competition when our first design ran aground proved very fruitful. It produced great concurrence on many issues, and it quickly spotlighted the crucial differences. Moreover, it had a powerful positive effect on team morale. In 2008, I heard for the first time in over 40 years from Doug Baird, who had been a junior architect on the team. He still remembered appreciatively that his rather junior team had had a chance to put their design forward on the same basis as all the distinguished architects on the team.

4. For totally new designs, as opposed to follow-on products, from the beginning devote part of the design effort to establishing metrics for performance and other essential properties, and approximate cost surrogates (such as bits of register for third-generation computers).

5. Market forecasting methodology is designed for follow-on products, not radical innovations (a lesson elaborated in Chapter 19). Designers of totally new products should spend lots of early effort getting forecasters on board with the new concepts.

Notes and References

1. The most important treatments of the S/360 architecture rationale are

• Amdahl [1964], “Architecture of the IBM System/360”

• Blaauw and Brooks [1964], “Outline of the logical structure of System/360”

• Blaauw and Brooks [1997], Computer Architecture, Section 12.4

• Evans [1986], “System/360: A retrospective view”

• IBM Corp. [1961], “Processor products—final report of SPREAD Task Group, Dec. 28, 1961.”

• IBM Corp. [1964ff], IBM System/360 Principles of Operation, Form A22-6821-0

• IBM Systems Journal 3, no. 2 (all)

• Pugh [1991], IBM’s 360 and Early 370 Systems

2. IBM Corp. [1961], “Processor products—final report of SPREAD Task Group, Dec. 28, 1961.”

3. Wise, “IBM’s $5,000,000,000 gamble.”

4. Brooks [1965], “The future of computer architecture.”

5. Tucker [1965], “Emulation of large systems.” In the event, he did not map one 7090 floating-point word to one S/360 word, but spread out the parts.

6. http://en.wikipedia.org/wiki/System_370 contains an excellent treatment of the evolution of the architecture and of the highlights of the basic architecture (accessed December 2008). So does http://www.answers.com/topic/ibm-system-360, as of August 2009.

7. Bell [2008], “IT vet Gordon Bell talks about the most influential computers.”

8. Bell and Newell [1971], Computer Structures, Section 3, 561–637, gives another assessment and a quite detailed discussion.

9. Blaauw and Brooks [1997], Computer Architecture, treats this important three-way distinction in detail in Section 1.1.