Exploring split testing with AdWords

Setting up simple and powerful split tests

Declaring winning and losing ads

Generating ideas to test

Most people find that writing an effective AdWords ad is challenging. In the old days (2004, actually), advertisers found their ads swatted down constantly by the 0.5% CTR (click-through rate) threshold. That is, not even 5 in 1,000 searchers would click their ad, and Google felt that an ad so unattractive did not deserve to remain active.

The difficulty of successful ad creation is understandable — you have 130 characters to convince someone to choose your offer over 19 other close-to-identical listings on the same page. Plus, writing good ads is tough in the best of circumstances.

Perry Marshall gave a talk in which he demonstrated the need for split testing by challenging audience members — professional marketers all — to choose the more effective ad or headline from a series of 10 split tests. The best of us got no more than 4 or 5 out of 10 correct. As we held our hands up high and proud for having achieved 50 percent on the test, Perry shot us down: "If I had flipped a coin, I would have done as well as you. Congratulations. You guys are as smart as a penny."

If you want to be smarter than a penny, you must apply the most powerful tool in the marketer's arsenal: split testing.

In this chapter, I show you how to set up split testing with AdWords and analyze the results. I tell you about split-testing landing pages, as well as your entire sales process. Also, you discover only what you need to know about statistical significance (which, in this case, relates to your confidence level that the split-testing results are repeatable) to make the best choices about your ads.

Nothing leads to improvement faster than timely and clear feedback. While a million monkeys typing would eventually produce the entire works of Shakespeare, they would get there much faster if they got a banana every time they typed an actual word and an entire banana split when they managed a rhymed couplet in iambic pentameter. (Can you tell I've been reading Shakespeare For Dummies, by John Doyle and Ray Lischner?) And for every nonword, someone would chuck a copy of Typing Shakespeare For Monkeys at them.

Now suppose the monkeys could keep and understand a written record of the characters that produced bananas, banana splits, and no reward. After a while, you would see more and more real words and Shakespearean phrasing, and fewer xlkjdfsdfsr. Ouch!

Note

AdWords contains the world's simplest mechanism for getting timely and clear feedback on your ads. You can create multiple ads, which AdWords shows to your prospects in equal rotation, and you can receive automatic and ongoing feedback.

Split testing is not an AdWords innovation; direct marketers have been testing customers' response rates since Moses got two tablets of commandments. Reader's Digest used to choose headlines for its articles by sending postcards to readers, asking which articles they would be interested in reading in an upcoming issue. The list of articles was actually a list of headlines for the same article.

Here's how split testing works in AdWords:

Run multiple ads simultaneously within a single ad group.

Monitor the effectiveness of all ads at eliciting the customer response you want.

Continue monitoring until one of those ads has proven itself better at its calling.

Declare the proven ad the winner (or, in marketing geek-speak, the control).

Retire the less successful ad, replace it with a new challenger, and repeat the contest.

If the challenger does better, it becomes the new control. If the control maintains supremacy, you send a different challenger up against it.

Tip

The beauty of this split-testing system is that you can't help but improve your results over time. If a new ad proves worse than your control, simply delete it. And the added beauty is that you don't even have to know what you're doing to improve your ad's effectiveness. While market intelligence, creativity, and writing skill help, mere trial and error — when funneled through split testing — can boost your results significantly.

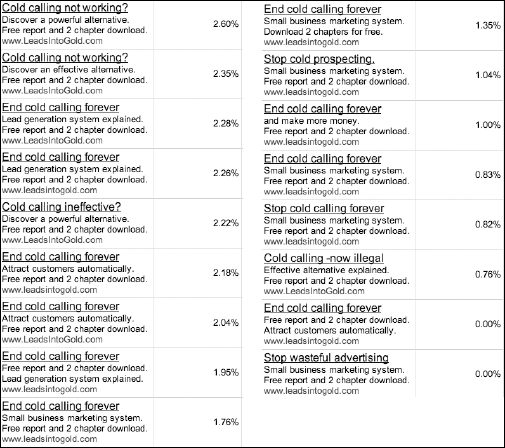

One of my early AdWords projects was an ad for a direct-marketing home-study course for small businesses (see the series of ads in Figure 13-1). An early ad, headlined "Cold calling — now illegal," achieved a 0.7% CTR. The final ad I used — "Cold calling not working?" — nearly quadrupled that with a 2.7% CTR. The big lesson from this long series of ads is this: I had no idea what I was doing at the time, yet I still succeeded. Take a few minutes and examine each of the ads carefully. Be honest — could you predict which of these ads would do better than the rest? I couldn't. I still can't. But the numbers don't lie, and I was able to turn a marginal product into a success thanks to split testing.

Split-testing with AdWords follows the five-step process outlined in the preceding section. You can prepare yourself to launch a series of split-testing ads by getting curious about what messages will be most compelling to your prospects. Turn each message into an ad and get ready to have fun.

Creating your second ad is even easier than creating your first (see Chapter 3 for step-by-step instructions):

From the Campaigns tab, click the Ads rollup tab to see a list of all the ads in your account.

Click the Ad Group name to the right of the ad you wish to challenge.

Click the New Ad button just above and to the left of the list of ads.

Choose Text Ad from the drop-down list.

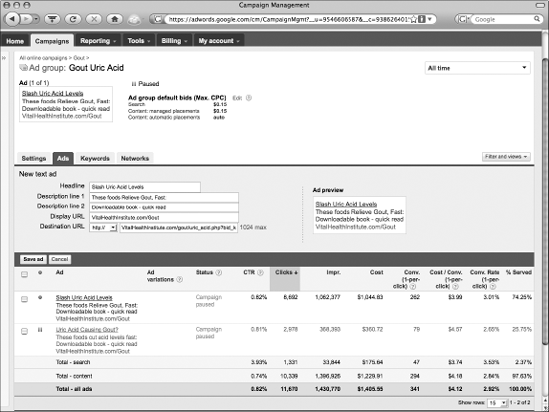

An ad template with sample copy appears on-screen, as shown in Figure 13-2.

Type over the existing copy and URL with your challenger ad's copy and URL.

Click the Save Ad button.

After your challenger ad is in place, you want to make sure that your two ads will compete fairly. Google assumes you're too busy (or lazy) to monitor your split tests, so the default setting is for AdWords to show the ad with the higher CTR more, and gradually let the poorer-performing ad slip into oblivion.

Tip

You want to override this setting for two reasons:

An ad with a lower CTR may still be the more profitable ad (see Chapter 15 for details of this apparent paradox).

When you let AdWords evaluate the ads without your supervision, you don't learn anything that makes you a smarter advertiser. The faster you declare winning and losing ads, the faster your marketing improves.

Here's how you override the AdWords default setting that may kill an ad without your approval. (Note: You establish this setting on the campaign level, so you may need to do this with each campaign.)

From the Campaigns tab, click the Campaigns rollup tab to generate a list of all campaigns in your account.

Check the name of the campaign you wish to edit.

Click the Settings rollup tab.

A page with various campaign-level settings appears.

Scroll down to Advanced Settings at the very bottom of the page, and click the Edit link next to Ad Rotation.

If you can't find the Ad Rotation setting, you may have to open the Ad Delivery section by clicking the Ad Delivery: Ad Rotation, Ad Frequency link at the bottom of the page.

Click the radio button next to Rotate: Show ads more evenly.

Click the Save button at the bottom of the Campaign Settings page.

Now you're split-testing properly. Once you install conversion tracking (see Chapter 15), you have the ability to compare the profitability of your ads. Until then, the only thing you can compare is the CTR.

Note

If you ever deploy Google's Conversion Optimizer tool on a campaign, you must revert to the default option, where Google shows your better performing ads more often.

Just as you wouldn't put a cake in a 350° F oven and not pay attention to when it was done, you wouldn't set up a split test and then ignore the results. You have three ways to check up on your cake to make sure it doesn't burn and the fork comes out clean:

Haphazardly: Check up on your cake when you think of it. As long as you catch it before the smoke alarm does, the cake might turn out okay.

Annoyingly: Set your watch to beep every few minutes to remind you to check the cake.

Geekily: Install a sensor in the oven that alerts you when the cake is done.

These three methods are available for monitoring your AdWords split tests as well:

With the haphazard method, you can look at each ad group once a day, once every three days, once a week, whenever Dartmouth wins a football game, and so on. The interval you choose should relate to the amount of traffic your ads are getting. For example, if you get 50 clicks per day, you might want to check your ads every day. A huge stream of traffic will give you a winner much quicker than a trickle, all other things being equal. But even if your traffic is massive, wait at least a couple of days before declaring a winner. Visitors checking out your ad at three o'clock in the morning on Sunday are likely to be very different from Monday afternoon visitors. You want to collect a representative sample to be sure your results are accurate.

With the annoying, repetitive method, you can create reports within AdWords and schedule those reports (see Chapter 15).

With the geeky, pass-the-buck method, you can subscribe to a third-party service that monitors all your split tests and e-mails you when you have a winner (see the section, "Automating your testing with Winner Alert" later in this chapter).

Okay, so you're watching your split tests with eagle eyes and keen concentration. How do you know when one ad has outperformed another? After all, as the investment ads say, "Past performance doesn't guarantee future results." Fortunately, the testing process is simple and straightforward when you're running a single test of two different ads. You just want to answer the question, "Is this result real, or just a random coincidence?" That's where your friend and mine — statistical significance — makes a welcome appearance.

If I flip a coin twice and it lands heads both times, should I assume that coin would always come up heads? Of course not — two flips do not give me enough data to reach that conclusion. What about four flips, all heads? Less likely, but still plausible? What about ten flips, all heads? Are we getting a tad suspicious now? It could still be due to random chance — after all, every single flip of a fair coin has an equal chance of landing heads or tails — or, possibly, this is no fair coin. If I get to 20, 30, or 100 flips with no tails in sight, I can be pretty sure something's up.

In your AdWords split testing, you're looking for information that will tell you that something's up. You want to know that one ad is truly better than another, and that the difference in CTR is not because of randomness. Just as with the coin, you can never know for absolute certain. Statistical significance tells you the probability that you're making the right choice.
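To put numbers on the coin example, here is a minimal Python sketch. It assumes a perfectly fair coin and independent flips; the function name is made up for illustration.

```python
def prob_all_heads(flips: int) -> float:
    """Chance that a fair coin lands heads on every one of `flips` independent tosses."""
    return 0.5 ** flips


# Print the chance of an unbroken run of heads for the streak lengths in the text.
for n in (2, 4, 10, 20):
    print(f"{n:>2} flips, all heads: 1 chance in {round(1 / prob_all_heads(n)):,}")
```

Two heads in a row happens by luck one time in 4, so it tells you nothing. Ten in a row happens one time in 1,024, and twenty in a row about one time in a million. The longer the streak, the harder it is to blame chance, and the same logic applies to a CTR gap between two ads.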

If you're doing it yourself, here are the steps to assessing the significance of your results and deciding whether to declare a winner:

From your AdWords account, click through to the Ad Group you want to test.

Click the Ad Variations tab, and write down the following numbers:

Number of clicks for Ad #1

Impressions for Ad #1

Number of clicks for Ad #2

Impressions for Ad #2

Go to www.askhowie.com/split and enter the four numbers in the appropriate fields.

Look at your confidence level and see whether you have a winner.
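If you're curious what a calculation like this can look like, the sketch below takes the same four numbers and runs a standard two-proportion z-test. That choice of test is my assumption; the online calculator may use a different formula, and the function name and sample figures are invented for illustration.

```python
import math


def split_test_confidence(clicks_1, impressions_1, clicks_2, impressions_2):
    """Return (ctr_1, ctr_2, confidence) using a two-sided two-proportion z-test.

    `confidence` is 1 minus the two-sided p-value: roughly, how sure you can be
    that the CTR gap is not just random noise.
    """
    ctr_1 = clicks_1 / impressions_1
    ctr_2 = clicks_2 / impressions_2
    # Pooled CTR: the click rate you'd expect if both ads were equally good.
    pooled = (clicks_1 + clicks_2) / (impressions_1 + impressions_2)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / impressions_1 + 1 / impressions_2))
    if std_err == 0:
        return ctr_1, ctr_2, 0.0  # no clicks at all (or all clicks): nothing to compare
    z = abs(ctr_1 - ctr_2) / std_err
    confidence = math.erf(z / math.sqrt(2))
    return ctr_1, ctr_2, confidence


# Hypothetical numbers: Ad #1 got 50 clicks on 2,000 impressions, Ad #2 got 80 on 2,000.
ctr_1, ctr_2, confidence = split_test_confidence(50, 2000, 80, 2000)
print(f"Ad #1 CTR {ctr_1:.2%}, Ad #2 CTR {ctr_2:.2%}, confidence {confidence:.1%}")
```

With these made-up numbers the confidence comes out above 99 percent, so Ad #2 would be a safe winner. Two ads with identical numbers produce a confidence of zero.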

I'm willing to accept a 95% threshold for my split testing. I can live with the knowledge that 1 out of every 20 split tests is giving me a bogus sense of confidence. Below that, I want to keep running the test until I achieve significance or until I'm satisfied that there really is no difference between the two ads.

Say you're testing two ads, and they're running neck and neck for days. Weeks. Months. In this case, you're losing money by continuing the test. Sure, at some point, the data might tip one way or the other, but the simple fact is the difference isn't going to be important in real life. Drop the challenger (keeping the control makes sense because it has more history behind it) and get a new challenger. As a rule of thumb, pull the plug on a test once each ad has at least 100 clicks and neither has pulled ahead.
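The two rules of thumb here (a 95% threshold, and a 100-click cutoff for tests that go nowhere) can be written as a small decision function. This is one reading of those rules, not a formal procedure. The function name, argument names, and return strings are hypothetical, and the confidence figure is whatever your significance calculator reports.

```python
def next_step(confidence, control_clicks, challenger_clicks,
              control_ctr, challenger_ctr,
              threshold=0.95, min_clicks=100):
    """Suggest what to do with a two-ad split test."""
    if confidence >= threshold:
        # The gap is unlikely to be luck: the ad with the higher CTR wins.
        if challenger_ctr > control_ctr:
            return "promote challenger"
        return "keep control"
    if control_clicks >= min_clicks and challenger_clicks >= min_clicks:
        # Plenty of data and still no clear difference: stop paying for this test.
        return "drop challenger"
    return "keep testing"


print(next_step(0.97, 120, 130, 0.020, 0.030))
print(next_step(0.60, 150, 140, 0.020, 0.021))
print(next_step(0.60, 40, 35, 0.020, 0.021))
```

The three calls cover a clear challenger win, a dead heat with plenty of clicks, and a test that simply needs more time. Either way you bring in a new challenger after the first two outcomes.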

Because of the way Google assigns quality scores to keyword/ad combinations, you need to make sure you're giving your challenger ads a fair chance. Google, like most people, prefers old friends to new acquaintances. This means that an ad that's been running for a while and doing well (meaning, good CTR and clearly relevant) will have a higher quality score than a new ad. Therefore, the control ad will display in a higher position, which gives it an artificial boost in CTR. So, you may incorrectly keep a control ad because of its unfair advantage.

The solution is to create a copy of the control ad and test it against the challenger. Both ads start out with zero impressions and an assumption of equal quality. Even though the copy is an exact clone of the control ad, Google doesn't recognize it as an old friend. Therefore, your split test will compare the ads fairly, allowing you to choose the real winner.

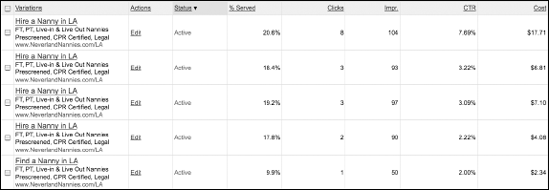

While you're at it, I recommend creating three copies of your control ad, not just one. That way, with the original and its three clones rotating evenly against a single challenger, you get to show your control ad (that is, the one you know is working the best) to 80 percent of searchers (four ads out of five), rather than 50 percent. Think about it: would you trust half your business to a new, totally untested salesperson? By creating multiple copies of the control ad, you're reducing your risk in case the new ad is a total failure. (See Figure 13-3.)

Figure 13-3. The control ad (top) achieved a much higher CTR than its three identical clones because of a better Quality Score.

Also, as Richard Mouser of www.scientificwebsitetesting.com points out, the different CTRs of the three identical copies of the control ad will converge at the point of statistical significance. So if you have three identical copies at CTRs of 3.1%, 1.6%, and 0.8%, then you know instantly you haven't run the test long enough to be confident in the results.
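One rough way to apply this sanity check is to measure how far apart the clones' CTRs are relative to their average. The sketch below does that. The 10 percent tolerance is an arbitrary assumption of mine, not a figure from Richard Mouser, and the function names and click counts are invented to match the CTRs in the example.

```python
def clone_ctr_spread(clone_stats):
    """Relative spread of CTRs across identical ads: (max - min) / mean.

    `clone_stats` is a list of (clicks, impressions) pairs, one per clone.
    """
    ctrs = [clicks / impressions for clicks, impressions in clone_stats]
    mean_ctr = sum(ctrs) / len(ctrs)
    if mean_ctr == 0:
        return float("inf")  # no clicks yet, so nothing has converged
    return (max(ctrs) - min(ctrs)) / mean_ctr


def clones_have_converged(clone_stats, tolerance=0.10):
    """True when identical ads show nearly identical CTRs (assumed tolerance)."""
    return clone_ctr_spread(clone_stats) <= tolerance


# Three identical clones at 3.1%, 1.6%, and 0.8% CTR, as in the example above.
early = [(31, 1000), (16, 1000), (8, 1000)]
print(clones_have_converged(early))
```

For the example CTRs the check fails by a wide margin, which matches the conclusion in the text: identical ads that disagree this much mean the test needs more time.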

Many AdWords beginners understand the concept of split testing, but do it haphazardly and without strategy. They conclude that split testing is too confusing and complicated, and give up on the most powerful weapon in their marketing arsenal. The following sections discuss three strategies to ensure a streamlined and effective split-testing process.

When you begin to split-test in an ad group, choose two very different ads. You may want to focus on different markets (stay-at-home dads versus divorced/widowed dads with full custody), different emotional responses (greed versus fear), or different benefits (lose weight versus prevent heart disease). Get the big picture right before drilling down to the details. It does you no good to test easy versus simple in a headline if your prospects don't care about ease or simplicity, but just whether it can run on batteries.

After you discover the right market, key benefits, and the emotional hot buttons of that market, you can start testing more specific elements (see the upcoming section, "Generating Ideas for Ad Testing").

Remember high school chemistry class? You had to buy a marble notebook and keep track of all your experiments, including date, hypothesis, experiment design, and results.

Chances are that your bright ideas about ad testing are not new. If you don't keep track somehow, you'll find yourself repeating experiments to which you already know the answer. Keeping track of your results in a marble notebook, or its digital equivalent (a Word document, private blog, or Excel spreadsheet), is therefore crucial to moving forward efficiently.
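If your digital notebook of choice is a spreadsheet, a few lines of Python can keep it tidy. This is only a sketch: the record fields mirror the chemistry-notebook columns (date, hypothesis, experiment design, results), while the class name, the extra ad group field, and the file name in the usage note are my own assumptions.

```python
import csv
from dataclasses import asdict, dataclass, fields
from datetime import date
from pathlib import Path


@dataclass
class SplitTestRecord:
    """One lab-notebook entry for a split test."""
    started: date
    ad_group: str
    hypothesis: str
    design: str
    result: str = ""  # fill in once the test has a winner (or a draw)


def append_record(path, record):
    """Append one record to a CSV log, writing the header row if the file is new."""
    path = Path(path)
    is_new = not path.exists() or path.stat().st_size == 0
    with path.open("a", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=[f.name for f in fields(SplitTestRecord)])
        if is_new:
            writer.writeheader()
        writer.writerow(asdict(record))
```

To log a test, call something like append_record("split_tests.csv", SplitTestRecord(date.today(), "Cold calling", "A question headline beats a statement", "Ad A vs. Ad B, even rotation")). The resulting file opens directly in Excel, so you can search it before repeating an experiment you've already run.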

Split-testing can become so mechanical, it's easy to forget the purpose is to make you smarter by learning what makes your customers tick — er, click.

Perry Marshall distinguishes between true market research and what he calls "opinion research." Opinion research is what people say they'll do. Market research is what they actually do. Split testing is a powerful form of market research that will provide answers to whatever questions you ask. As computer programmers are fond of saying, "Garbage In, Garbage Out." If you ask intelligent questions, you'll get useful answers.

So before you run a split test, take a moment to write down (in your lab notebook, of course) the question you want your prospects to answer for you. Then design a split test that asks that question.

The following figures show some examples of good questions and the split tests that were set up to answer them:

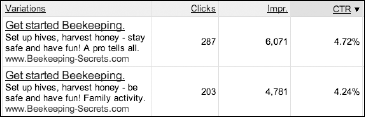

How much traffic do I give up if I put the price of the product in the ad? (See Figure 13-4.)

Will positioning my product as a "professional shares his secrets" increase clicks, compared to flagging the benefit of family fun? (See Figure 13-5.)

You want to test broadly different ideas before getting into details. Don't worry about whether description line 2 should have a comma in it before you've figured out the answers to your big questions. Imagine that you're searching for the most delicious plum in the world. First, you test the orchard to make sure it has plum trees and not orange trees. When you find the plum orchard, start testing trees to find the tree with the best plums. When you find the best tree, see whether you prefer the plums near the top or closer to the ground. On the north or the south side. Then taste the fruit on different limbs, and after you find the most promising limb, see which branch yields the best fruit.

David Bullock's (www.davidbullock.com) list of big questions from Chapter 6 comes in handy here:

Who is looking?

What are they looking for?

Why are they looking for it?

What will be the result of their search?

What does the searcher want the ultimate outcome to be?

What is the emotional good feeling they seek?

What emotional outcome are they trying to avoid?

Who does the searcher care about?

What does the searcher care about?

In other words, split-test the ads to discover the demographics and psychographics of your market. Who are they — working mothers or single professionals taking care of aging parents? What big benefit are they looking for in your product — saving time or assuaging guilt? Are they angry with their company or do they feel grateful? Who do they want to help them with this problem — Walter Cronkite or Jon Stewart?

Use your split tests to answer these questions as best you can. Write down a hypothesis and brainstorm two ads that will prove or disprove it. After you test the big ideas, turn your attention to the little things that can make a big difference:

Order of lines: If you're highlighting the benefit on line 1 and explaining a feature on line 2, try switching the order of the two lines.

Display URLs: If you buy a bunch of domain names related to your main domain, you can point them all to the same Web site and test which domain name attracts the right customers. If you have the .com and .org for the same domain, will one outperform the other?

Capitalization: Finding the right capitalization of your URL to make its meaning stand out is an art form. For example, I found that LeadsintoGold.com did better than leadsintogold.com in almost every test.

Synonyms: Try variations of your benefits: simple/easy/quick/no sweat.

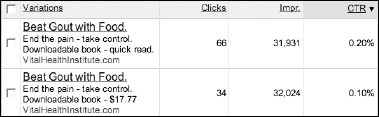

Punctuation: Perry Marshall talks about the cadence of an ad — the way the searchers hear it in the mind's ear can subtly influence whether they resonate with it. Use punctuation to make the phrase more melodic and persuasive. Figure 13-6 shows what happened when I used a comma to put the emphasis on You rather than Instead:

The ad with the comma is four times as effective as the other one. Without testing, there's no way I would have predicted the effect would be so profound.

With the proper tools, split testing can be the most powerful tactic in your entire marketing strategy. The following tools allow you to split test faster to improve faster.

My AdWords account is quite large at this point. I have 27 separate campaigns. Many of the campaigns include dozens of ad groups. Each of these groups is running a split test pretty much all the time. I probably have to monitor well over 100 split tests simultaneously. If I were to go into each ad group, pull out the data, and enter it into a statistical significance calculator, it would take me the better part of a day just to assess the tests. And that doesn't even include the time it takes me to think up new ad variations to challenge the winners.

If you're just starting out and you're running fewer than 10 ad groups at a time, you won't feel my pain. But after your campaigns grow, you'll either stop tracking the results of your tests or you'll wait too long to find winners. Wait too long and you're ignoring profit-growing market data and showing prospects suboptimal ads.

Note

To help alleviate this problem, I created a Winner Alert tool that automates the process of tracking statistical significance, and e-mails you whenever one of your split tests produces a winner. It's a great tool, and when you're ready for it, you can try it free for a month. Go to www.askhowie.com/winner for video demos and your coupon code.
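To give a feel for what automating this chore involves, here is a rough sketch that scans many ad groups at once and reports only the tests that have a winner. It is not how Winner Alert works internally. The two-proportion z-test, the function names, the data layout, and the sample numbers are all assumptions for illustration, and you would still need to get the click and impression figures out of your account yourself.

```python
import math


def confidence(clicks_a, imps_a, clicks_b, imps_b):
    """Confidence (1 minus the two-sided p-value) that two CTRs truly differ."""
    pooled = (clicks_a + clicks_b) / (imps_a + imps_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / imps_a + 1 / imps_b))
    if std_err == 0:
        return 0.0
    z = abs(clicks_a / imps_a - clicks_b / imps_b) / std_err
    return math.erf(z / math.sqrt(2))


def find_winners(ad_groups, threshold=0.95):
    """Map each ad group that has a significant result to its winning ad.

    `ad_groups` maps an ad group name to a pair of (clicks, impressions)
    tuples: the control first, then the challenger.
    """
    winners = {}
    for name, (control, challenger) in ad_groups.items():
        if confidence(*control, *challenger) >= threshold:
            control_ctr = control[0] / control[1]
            challenger_ctr = challenger[0] / challenger[1]
            winners[name] = "challenger" if challenger_ctr > control_ctr else "control"
    return winners


# Hypothetical account data for two ad groups.
account = {
    "Cold calling": ((50, 2000), (80, 2000)),
    "Lead generation": ((40, 2000), (42, 2000)),
}
print(find_winners(account))
```

Only the first ad group shows up in the output, because the second test is still too close to call. Run on a schedule, a script along these lines means you look only at the tests that need a decision.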

The Taguchi Method lets you test hundreds of variations in a fraction of the time it would take if you used a standard A-B split. It's not for beginners; the methodology is so complicated, it's easy to fall into the Garbage-In-Garbage-Out trap and believe you have the answer to Life, the Universe, and Everything because the printout looks so impressive.

Note

You should consider Taguchi testing if and only if your keywords get at least several thousand daily impressions each, and if the person setting up your test has experience using Taguchi for marketing. Taguchi testing was originally developed to reduce manufacturing errors, and many practitioners misapply a manufacturing mindset to the marketing process. David Bullock is the premier Taguchi marketer who applies the method to AdWords. You can find an article he wrote to protect you from incorrect and unnecessary use of Taguchi at www.askhowie.com/taguchi.

Although you can split-test other elements of your Web site using ad split testing (for example, running two visually identical ads with different destination URLs), you get better results by using Google's free Web Site Optimizer tool (see Chapter 14 for details).