Chapter 5

Reviews

After studying this chapter, you will be able to:

- – understand the value of different types of reviews;

- – understand the personal review;

- – understand the desk-check type of peer review;

- – understand the reviews described in the ISO/IEC 20246 standard, the CMMI®, and the IEEE 1028 standard;

- – understand the walk-through and inspection review;

- – understand the project launch review and project lessons learned review;

- – understand the measures related to reviews;

- – understand the usefulness of reviews for different business models;

- – understand the requirements of the IEEE 730 standard regarding reviews.

5.1 Introduction

Humphrey (2005) [HUM 05] collected years of data from thousands of software engineers showing that they unintentionally inject 100 defects per thousand lines of code. He also indicates that commercial software typically includes from one to ten errors per thousand lines of code [HUM 02]. These errors are like hidden time bombs that will explode when certain conditions are met. We must therefore put practices in place to identify and correct these errors at each stage of the development and maintenance cycle. In a previous chapter, we introduced the concept of the cost of quality, one component of which is the detection cost.

The detection cost is the cost of verification or evaluation of a product or service during the various stages of the development process. One of the detection techniques is conducting reviews. Another technique is conducting tests. But it must be remembered that the quality of a software product begins in the first stage of the development process, that is to say, when defining requirements and specifications. Reviews can detect and correct errors in the early phases of development, whereas tests can only be used once the code is available. We should therefore not wait for the testing phase to begin looking for errors. In addition, it is much cheaper to detect errors with reviews than with testing. This does not mean we should neglect testing, since it is essential for the detection of errors that reviews cannot discover.

Unfortunately, many organizations do not perform reviews and rely on testing alone to deliver a quality product. It often happens that, given the many problems encountered throughout development, the schedule and budget have been compressed to the point that tests are partially, if not completely, eliminated from the development or maintenance process. In addition, it is impossible to test a large software product completely. For example, for software that has barely 100 decisions (branches), there are more than 184,756 possible paths to test, and for software with 400 decisions, there are 1.38 × 10^11 possible paths to test [HUM 08].
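The two path counts quoted above coincide with the binomial coefficients C(20, 10) and C(40, 20), that is, the number of distinct routes through a 10 × 10 and a 20 × 20 grid of decisions. Reading the figures this way is an assumption on our part (the text does not say how they were derived), but the following Python sketch shows how quickly the number of paths grows under that assumption. The function name `grid_paths` is hypothetical.

```python
from math import comb


def grid_paths(side: int) -> int:
    """Number of monotone paths across a side x side grid of decisions.

    Assumption: the decisions form a square lattice, so a path is a choice
    of `side` steps in one direction out of 2 * side steps in total.
    """
    return comb(2 * side, side)


if __name__ == "__main__":
    for side in (10, 20):
        print(f"{side * side} decisions -> {grid_paths(side):,} paths")
```

Quadrupling the number of decisions multiplies the number of paths by several hundred thousand, which is why exhaustive path testing is not a realistic goal.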

In this chapter, we present reviews. We will see that there are many types of reviews ranging from informal to formal.

Informal reviews are characterized as follows:

- – There is no documented process to describe reviews and they are carried out in many ways by different people in the organization;

- – Participants’ roles are not defined;

- – Reviews have no stated objective, such as a fault detection rate;

- – They are not planned, they are improvised;

- – Measures, such as the number of defects, are not collected;

- – The effectiveness of reviews is not monitored by management;

- – There is no standard that describes them;

- – No checklist is used to identify defects.

Formal reviews will be discussed in this chapter as defined in the following text box.

In this chapter, we present two types of review as defined in the IEEE 1028 standard [IEE 08b]: the walk-through and the inspection. Professor Laporte contributed to the latest revision of this standard. We will also describe two reviews that are not defined in the standard: the personal review and the desk-check. These reviews are the least formal of all of the types of reviews. They are included here because they are simple and inexpensive to use. They can also help organizations that do not conduct formal reviews to understand the importance and benefits of reviews in general and establish more formal reviews.

Peer reviews are reviews of a product or activity conducted by colleagues during development, maintenance, or operations in order to present alternatives, identify errors, or discuss solutions. They are called peer reviews because managers do not participate in this type of review. The presence of managers often creates discomfort: participants hesitate to give opinions that could reflect badly on their colleagues, and the person who requested the review may be apprehensive of negative feedback from his or her own manager.

Figure 5.1 shows the variety of reviews as well as when they can be used throughout the software development cycle. Note the presence of phase-end reviews, document reviews, and project reviews. These reviews are used internally or externally for meetings with a supplier or customer.

Figure 5.1 Types of reviews used during the software development cycle [CEG 90] (© 1990 – ALSTOM Transport SA).

Figure 5.2 lists objectives for reviews. It should be noted that not every type of review targets all of these objectives. We will consider what the objectives are for each type of review in a subsequent section.

Figure 5.2 Objectives of a review.

Source: Adapted from Gilb (2008) [GIL 08] and IEEE 1028 [IEE 08b].

The types of reviews that should be conducted and the documents and activities to be reviewed or audited throughout the project are usually determined in the software quality assurance plan (SQAP) for the project, as explained by the IEEE 730 standard [IEE 14], or in the project management plan, as defined by the ISO/IEC/IEEE 16326 standard [ISO 09]. The requirements of the IEEE 730 standard will be presented at the end of this chapter.

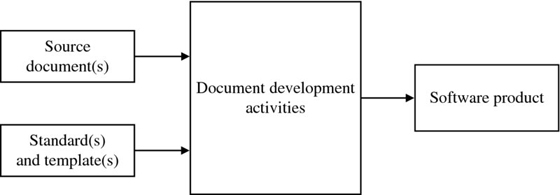

As illustrated in Figure 5.3, to produce a document, that is, a software product (e.g., documentation, code, or test), source documents are usually used as inputs to the development process. For example, to create a software architecture document, the developer should use source material such as the system requirements document, the software requirements, a software architecture document template, and possibly a software architecture style guide.

Figure 5.3 Process of developing a document.

A review of just the software product, for example, a requirements document, by its author is not sufficient to detect a large number of errors. As illustrated in Figure 5.4, once the author has completed the document, the software product is compared by his or her peers against the source documents used. At the end of the review, the peers who participated will have to decide if the document produced by the author is satisfactory as is, if significant corrections are required, or if the document must be corrected by the author and peer reviewed again. The third option is only used when the revised document is very important to the success of the project. As discussed below, when an author makes many corrections to a document, he or she can inadvertently introduce other errors. It is these errors that we hope to detect with another peer review.

Figure 5.4 Review process.

The advantage of reviews is that they can be used in the first phase of a project, for example, when requirements are documented, whereas tests can only be performed when the code is available. For example, if we depend on tests alone and errors are injected when writing the requirements document, these will only become apparent when the code is available. However, if we use reviews, then we can also detect and correct errors during the requirements phase. Errors are much easier to find and are less expensive to correct at this phase. Figure 5.5 compares errors detected using only tests and using a type of review called inspections.

Figure 5.5 Error detection during the software development life cycle [RAD 02].

For illustration purposes, we used an error detection rate of 50%. Several organizations have achieved higher detection rates, that is, well over 80%. This figure clearly illustrates the importance of establishing reviews from the first phase of development.
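The effect of a 50% detection rate can be approximated with a few lines of arithmetic. The Python sketch below is an illustration only: the number of defects injected in each phase is hypothetical, and the model (a review at the end of each phase removes a fixed fraction of all defects present) is a simplifying assumption rather than the method behind Figure 5.5.

```python
def escaped_defects(injected_per_phase, detection_rate):
    """Return the defects still present at the end of each phase.

    Each phase adds `injected` new defects; a review at the end of the
    phase removes `detection_rate` of all defects present at that point.
    """
    remaining = 0.0
    history = []
    for injected in injected_per_phase:
        remaining = (remaining + injected) * (1.0 - detection_rate)
        history.append(remaining)
    return history


# Hypothetical injection counts for requirements, design, and coding.
INJECTED = [20, 20, 20]

with_reviews = escaped_defects(INJECTED, 0.5)     # 50% rate, as in the text
without_reviews = escaped_defects(INJECTED, 0.0)  # nothing found before testing

if __name__ == "__main__":
    print("with reviews:   ", with_reviews)
    print("without reviews:", without_reviews)
```

Under these assumptions, 17.5 defects reach the test phase when each phase is reviewed, against 60 when testing is the only detection activity.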

5.2 Personal Review and Desk-Check Review

This section describes two types of reviews that are inexpensive and very easy to perform. Personal reviews do not require the participation of additional reviewers, while desk-check reviews require at least one other person to review the work of the developer of a software product.

5.2.1 Personal Review

A personal review is carried out by the author of a software product, who reviews his or her own work in order to find and fix as many defects as possible. A personal review should precede any activity that uses the software product under review.

The principles of a personal review are [POM 09]:

- – find and correct all defects in the software product;

- – use a checklist produced from your personal data, if possible, using the type of defects that you are already aware of;

- – follow a structured review process;

- – use measures in your review;

- – use data to improve your review;

- – use data to determine where and why defects were introduced and then change your process to prevent similar defects in the future.

The following practices should be followed to develop an effective and efficient personal review [POM 09]:

- – pause between the development of a software product and its review;

- – examine products in hard copy rather than electronically;

- – check each item on the checklist once completed;

- – update the checklists periodically to adjust to your personal data;

- – build and use a different checklist for each software product;

- – verify complex or critical elements with an in-depth analysis.

Figure 5.6 outlines the process of a personal review.

Figure 5.6 Personal review process.

Source: Adapted from Pomeroy-Huff et al. (2009) [POM 09].

As we can see, personal reviews are very simple to understand and perform. Since the errors made are often different for each software developer, it is much more efficient to update a personal checklist based on errors noted in previous reviews.
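As a minimal sketch of how a personal checklist might be derived from past review data, the Python example below counts the defect types recorded in a personal defect log and keeps the most frequent ones. The log entries, the function name, and the choice to rank by frequency alone are assumptions made for illustration.

```python
from collections import Counter


def build_personal_checklist(defect_log, max_items=5):
    """Return the most frequent defect types, most common first.

    `defect_log` is a list of defect-type labels noted in earlier reviews.
    """
    counts = Counter(defect_log)
    return [defect_type for defect_type, _ in counts.most_common(max_items)]


# Hypothetical defect log from previous personal reviews.
log = [
    "off-by-one",
    "missing null check",
    "off-by-one",
    "wrong operator",
    "off-by-one",
    "missing null check",
]

checklist = build_personal_checklist(log, max_items=2)

if __name__ == "__main__":
    for position, defect_type in enumerate(checklist, start=1):
        print(position, defect_type)
```

Re-running this after each review keeps the checklist focused on the errors that a particular developer actually makes.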

5.2.2 Desk-Check Reviews

A type of peer review that is not described in standards is the desk-check review [WAL 96], sometimes called the pass-around [WIE 02]. It is important to explain this type of peer review because it is inexpensive and easy to implement. It can be used to detect anomalies and omissions, improve a product, or present alternatives. This review is used for low-risk software products, or if the project plan does not allow for more formal reviews. According to Wiegers, this review is less intimidating than a group review such as a walk-through or inspection. Figure 5.7 describes the process for this type of review.

Figure 5.7 Desk-check review.

As shown in Figure 5.7, there are six steps. Initially, the author plans the review by identifying the reviewer(s) and a checklist. A checklist is an important element of a review as it enables the reviewer to focus on only one criterion at a time. A checklist is a reflection of the experience of the organization. Then, individuals review the software product and note comments on the review form provided by the author. When completed, the review form can be used as “evidence” during an audit.

In this book, several checklists are presented. Here is a list of some important features of checklists:

- – each checklist is designed for a specific type of document (e.g., project plan, specification document);

- – each item of a checklist targets a single verification criterion;

- – each item of a checklist is designed to detect major errors. Minor errors, such as misspellings, should not be part of a checklist;

- – each checklist should not exceed one page, otherwise it will be more difficult to use by the reviewers;

- – each checklist should be updated to increase efficiency;

- – each checklist includes a version number and a revision date.

The following text box presents a generic checklist, that is, a checklist that can be used for almost any type of document to be reviewed (e.g., project plan, architecture). For each type of software product (e.g., requirements or design), a specific checklist will be used. For a list designed to facilitate the detection of errors in requirements, we could add the EX identifier and include the following element: EX 1 (testable)—the requirement must be testable. For a list of verifications for a test plan, one might use the TP identifier.
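The checklist features listed above (one document type per checklist, one criterion per item, a version number, and a revision date) can be captured in a small data structure. The Python sketch below is one possible representation; the class names, field names, version, and date are hypothetical, and only the EX 1 item comes from the text.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class ChecklistItem:
    identifier: str  # e.g., "EX 1"
    keyword: str     # short label, e.g., "testable"
    criterion: str   # the single verification criterion targeted


@dataclass
class Checklist:
    document_type: str   # each checklist targets one type of document
    prefix: str          # identifier prefix, e.g., "EX" or "TP"
    version: str
    revision_date: date
    items: list = field(default_factory=list)

    def add(self, keyword: str, criterion: str) -> ChecklistItem:
        """Append an item, numbering it after the existing ones."""
        identifier = f"{self.prefix} {len(self.items) + 1}"
        item = ChecklistItem(identifier, keyword, criterion)
        self.items.append(item)
        return item


# Hypothetical version and revision date, for illustration only.
requirements = Checklist("requirements", "EX", "1.0", date(2024, 1, 15))
first = requirements.add("testable", "The requirement must be testable.")

if __name__ == "__main__":
    print(first.identifier, f"({first.keyword}):", first.criterion)
```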

In the third step of the desk-check process, the reviewers verify the document and record their comments on the review form. The author reviews the comments as part of step 4. If the author agrees with all the comments, he incorporates them into his document. However, if the author does not agree, or if he believes the comments have a major impact, then he should convene a meeting with the reviewers to discuss the comments. After this meeting, one of three options should be considered: the comment is incorporated as is, the comment is ignored, or it is incorporated with modifications. For the next step, the author can make the corrections and note the effort spent reviewing and correcting the document, that is, the time spent by the reviewers as well as the time spent by the author to correct the document and conduct the meeting if this is the case. The activities of the desk-check (DC) review are described in Figure 5.8. In the final step, the author completes the review form illustrated in Figure 5.9.

Figure 5.8 Desk-check review activities.

Figure 5.9 Desk-check review form.

Figure 5.9 illustrates a standard form used by reviewers to record their comments and the time they devoted to reviewing the document. The author of the document collects these data and adds the time it took to correct the document. The forms will be retained by the author as “evidence” for an audit by the SQA of the author's organization, or by the SQA of the customer.
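The data recorded during a desk-check can be summarized with a short sketch. The Python example below models the three possible dispositions of a comment and adds up the effort of the reviewers, the author, and any meeting. The names and the hours used are hypothetical and do not reproduce the form in Figure 5.9.

```python
from dataclasses import dataclass
from enum import Enum


class Disposition(Enum):
    INCORPORATED_AS_IS = "incorporated as is"
    IGNORED = "ignored"
    INCORPORATED_WITH_MODIFICATIONS = "incorporated with modifications"


@dataclass
class Comment:
    reviewer: str
    text: str
    disposition: Disposition


def total_effort_hours(reviewer_hours, author_correction_hours, meeting_hours=0.0):
    """Sum the review effort, in hours.

    `reviewer_hours` maps each reviewer to the time spent reviewing;
    `meeting_hours` is the total effort spent in a meeting, if one was held.
    """
    return sum(reviewer_hours.values()) + author_correction_hours + meeting_hours


# Hypothetical data for one desk-check review.
reviewer_hours = {"reviewer_a": 1.5, "reviewer_b": 2.0}
comments = [
    Comment("reviewer_a", "Requirement 4 is ambiguous.", Disposition.INCORPORATED_AS_IS),
    Comment("reviewer_b", "Rename section 2.", Disposition.IGNORED),
]
effort = total_effort_hours(reviewer_hours, author_correction_hours=1.0, meeting_hours=0.5)

if __name__ == "__main__":
    print(f"{len(comments)} comments, {effort} hours of effort")
```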

As an alternative to the distribution of hard copies to reviewers, one can place an electronic copy of the document, the review form, and the checklist in a shared folder on the intranet. Reviewers are invited to provide comments as annotations to the document over a defined period of time. The author can then view the annotated document, review the comments, and continue the desk-check review as described above.

In the next sections, we describe more formal reviews.

5.3 Standards and Models

In this section, we present the ISO/IEC 20246 standard on work product reviews, the Capability Maturity Model Integration (CMMI) model, and the IEEE 1028 standard, which lists requirements and procedures for software reviews.

5.3.1 ISO/IEC 20246 Software and Systems Engineering: Work Product Reviews

The purpose of ISO/IEC 20246 Work Product Reviews is [ISO 17d]: “to provide an International Standard that defines work product reviews, such as inspections, reviews and walk-throughs, that can be used at any stage of the software and systems life cycle. It can be used to review any system and software work product. ISO/IEC 20246 defines a generic process for work product reviews that can be configured based on the purpose of the review and the constraints of the reviewing organization. The intent is to describe a generic process that can be applied both efficiently and effectively by any organization to any work product. The main objectives of reviews are to detect issues, to evaluate alternatives, to improve organizational and personal processes, and to improve work products. When applied early in the life cycle, reviews are typically shown to reduce the amount of unnecessary rework on a project. The work product review techniques presented in ISO/IEC 20246 can be used at various stages of the generic review process to identify defects and evaluate the quality of the work product.”

ISO/IEC 20246 includes an annex that describes the alignment between the activities of the ISO/IEC 20246 standard and the procedures of the IEEE 1028 standard presented below.

5.3.2 Capability Maturity Model Integration

The CMMI® for Development (CMMI-DEV) [SEI 10a] is widely used by many industries. This model describes proven practices in engineering. In this model, a part of the “Verification” process area is devoted to peer reviews. Other verification activities will be considered in more detail in a later chapter. Figure 5.10 is an extract of the staged representation of the CMMI-DEV which describes peer reviews.

Figure 5.10 Peer reviews as described in the process area “Verification” of the CMMI-DEV.

Source: Adapted from Software Engineering Institute (2010) [SEI 10a].

The Process and Product Quality Assurance process area provides the following list of issues to be addressed when implementing peer reviews [SEI 10a]:

- – Members are trained and roles are assigned for people attending the peer reviews.

- – A member of the peer review who did not produce this work product is assigned to perform the quality assurance role.

- – Checklists based on process descriptions, standards, and procedures are available to support the quality assurance activity.

- – Non-compliance issues are recorded as part of the peer review report and are tracked and escalated outside the project when necessary.

According to the CMMI-DEV, these reviews are performed on selected work products to identify defects and to recommend other changes required. The peer review is an important and effective software engineering method, applied through inspections, walk-throughs or a number of other review procedures.

Reviews that meet the CMMI requirements listed in Figure 5.10 are described in the following sections.

5.3.3 The IEEE 1028 Standard

The IEEE 1028-2008 Standard for Software Reviews and Audits [IEE 08b] describes five types of reviews and audits and the procedures required for the completion of each type of review and audit. Audits will be presented in the next chapter. The introductory text of the standard indicates that the use of these reviews is voluntary. Although the use of this standard is not mandatory, it can be imposed by a client contractually.

The purpose of this standard is to define systematic reviews and audits for the acquisition, supply, development, operation, and maintenance of software. This standard describes not only “what to do” but also how to perform a review. Other standards define the context in which a review is performed and how the results of the review are to be used. Examples of such standards are provided in Table 5.1.

Table 5.1 Examples of Standards that Require the Use of Systematic Reviews

| Standard identification | Title of the standard |
| --- | --- |
| ISO/IEC/IEEE 12207 | Software Life Cycle Processes |
| IEEE 1012 | IEEE Standard for System and Software Verification and Validation |
| IEEE 730 | IEEE Standard for Software Quality Assurance Processes |

The IEEE 1028 standard provides minimum acceptable conditions for systematic reviews and software audits including the following attributes:

- – team participation;

- – documented results of the review;

- – documented procedures for conducting the review.

Conformance to the IEEE 1028 standard for a specific review, such as an inspection, can be claimed when all mandatory actions (indicated by “shall”) are carried out as defined in this standard for the review type used.

This standard provides descriptions of the particular types of reviews and audits included in the standard as well as tips. Each type of review is described with clauses that contain the following information [IEE 08b]:

- Introduction to review: describes the objectives of the systematic review and provides an overview of the systematic review procedures;

- Responsibilities: defines the roles and responsibilities needed for the systematic review;

- Input: describes the requirements for input needed by the systematic review;

- Entry criteria: describes the criteria to be met before the systematic review can begin, including the following:

  - Authorization;

  - Initiating event;

- Procedures: details the procedures for the systematic review, including the following:

  - Planning the review;

  - Overview of procedures;

  - Preparation;

  - Examination/evaluation/recording of results;

  - Rework/follow-up;

- Exit criteria: describes the criteria to be met before the systematic review can be considered complete;

- Output: describes the minimum set of deliverables to be produced by the systematic review.

5.3.3.1 Application of the IEEE 1028 Standard

Procedures and terminology defined in this standard apply to the acquisition of software, supply, development, operation, and maintenance processes requiring systematic reviews. Systematic reviews are performed on a software product according to the requirements of other local standards or procedures. The term “software product” is used in this standard in a very broad sense. Examples of software products include specifications, architecture, code, defect reports, contracts, and plans.

The IEEE 1028 standard differs significantly from other software engineering standards in that it does not only enumerate a set of requirements to be met (i.e., “what to do”), such as “the organization shall prepare a quality assurance plan,” but also describes “how to do it” at a level of detail that allows someone to conduct a systematic review properly. An organization that wants to implement these reviews can adapt the text of this standard to the notation of its own processes and procedures, adjust the terminology to that commonly used in the organization and, after using the reviews for a while, improve their descriptions.

This standard concerns only how reviews are conducted, not whether they are needed or how their results are used. The types of reviews and audits are [IEE 08b]:

- – management review: a systematic evaluation of a software product or process performed by or on behalf of the management that monitors progress, determines the status of plans and schedules, confirms requirements and their system allocation, or evaluates the effectiveness of the management approaches used to achieve fitness for purpose;

- – technical review: a systematic evaluation of a software product by a team of qualified personnel that examines the suitability of the software product for its intended use and identifies discrepancies from specifications and standards;

- – inspection: a visual examination of a software product to detect and identify software anomalies including errors and deviations from standards and specifications;

- – walk-through: a static analysis technique in which a designer or programmer leads members of the development team and other interested parties through a software product, and the participants ask questions and make comments about any anomalies, violation of development standards, and other problems;

- – audit: an independent assessment, by a third party, of a software product, a process or a set of software processes to determine compliance with the specifications, standards, contractual agreements, or other criteria.

Table 5.2 summarizes the main characteristics of reviews and audits of the IEEE 1028 standard. These features will be discussed in more detail in this chapter and in the following chapter on audits.

Table 5.2 Characteristics of Reviews and Audits Described in the IEEE 1028 Standard

| Characteristic | Management review | Technical review | Inspection | Walk-through | Audit |
| --- | --- | --- | --- | --- | --- |
| Objective | Monitor progress | Evaluate conformance to specifications and plans | Find anomalies; verify resolution; verify product quality | Find anomalies; examine alternatives; improve product; forum for learning | Independently evaluate conformance with objective standards and regulations |
| Recommended group size | Two or more people | Two or more people | 3–6 | 2–7 | 1–5 |
| Volume of material | Moderate to high | Moderate to high | Relatively low | Relatively low | Moderate to high |
| Leadership | Usually the responsible manager | Usually the lead engineer | Trained facilitator | Facilitator or author | Lead auditor |
| Management participates | Yes | When management evidence or resolution may be required | No | No | No; however, management may be called upon to provide evidence |
| Output | Management review documentation | Technical review documentation | Anomaly list, anomaly summary, inspection documentation | Anomaly list, action items, decisions, follow-up proposals | Formal audit report; observations, findings, deficiencies |

Source: Adapted from IEEE 1028 [IEE 08b].

In the following sections, walk-through and inspection reviews are described in detail. These reviews are described to clearly demonstrate the meaning of a “systematic review” as opposed to improvised and informal reviews.

5.4 Walk-Through

“The purpose of a walk-through is to evaluate a software product. A walk-through can also be performed to create discussion for a software product” [IEE 08b]. The main objectives of the walk-through are [IEE 08b]:

- – find anomalies;

- – improve the software product;

- – consider alternative implementations;

- – evaluate conformance to standards and specifications;

- – evaluate the usability and accessibility of the software product.

Other important objectives include the exchange of techniques, style variations, and the training of participants. A walk-through can highlight weaknesses, for example, problems of efficiency and readability, modularity problems in the design or the code or non-testable requirements. Figure 5.11 shows the six steps of the walk-through. Each step is composed of a series of inputs, tasks, and outputs.

Figure 5.11 The walk-through review.

Source: Adapted from Holland (1998) [HOL 98].

5.4.1 Usefulness of a Walk-Through

There are several reasons for the implementation of a walk-through process:

- – identify errors to reduce their impact and the cost of correction;

- – improve the development process;

- – improve the quality of the software product;

- – reduce development costs;

- – reduce maintenance costs.

5.4.2 Identification of Roles and Responsibilities

Four roles are described in the IEEE 1028: leader, recorder, author, and team member. Roles can be shared among team members. For example, the leader or author may play the role of recorder and the author could also be the leader. But, a walk-through shall include at least two members.

The standard defines the roles as follows (adapted from IEEE 1028 [IEE 08b]):

- – Walk-through leader

  - conduct the walk-through;

  - handle the administrative tasks pertaining to the walk-through (such as distributing documents and arranging the meeting);

  - prepare the statement of objectives to guide the team through the walk-through;

  - ensure that the team arrives at a decision or identified action for each discussion item;

  - issue the walk-through output.

- – Recorder

  - note all decisions and identified actions arising during the walk-through meeting;

  - note all comments made during the walk-through that pertain to anomalies found, questions of style, omissions, contradictions, suggestions for improvement, or alternative approaches.

- – Author

  - present the software product in the walk-through.

- – Team member

  - adequately prepare for and actively participate in the walk-through;

  - identify and describe anomalies in the software product.
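The role-sharing rule described in this section (roles may be combined, but at least two people must take part) can be checked mechanically. The Python sketch below is an illustration only; treating the leader, recorder, and author roles as mandatory is our assumption, and the function and participant names are hypothetical.

```python
# Roles assumed here to be required in every walk-through.
REQUIRED_ROLES = ("leader", "recorder", "author")


def validate_walkthrough(assignments):
    """Return a list of problems found in a role assignment.

    `assignments` maps a role name to the set of people holding it.
    One person may hold several roles.
    """
    problems = []
    for role in REQUIRED_ROLES:
        if not assignments.get(role):
            problems.append(f"no {role} assigned")
    participants = set()
    for names in assignments.values():
        participants |= set(names)
    if len(participants) < 2:
        problems.append("a walk-through needs at least two members")
    return problems


if __name__ == "__main__":
    solo = {"leader": {"Ana"}, "recorder": {"Ana"}, "author": {"Ana"}}
    print(validate_walkthrough(solo))
    pair = dict(solo, **{"team member": {"Ben"}})
    print(validate_walkthrough(pair))
```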

The IEEE 1028 standard lists improvement activities using data collected from the walk-throughs. These data should [IEE 08b]:

- – be analyzed regularly to improve the walk-through process;

- – be used to improve operations that produce software products;

- – be analyzed to identify the most frequently encountered anomalies, which can then be included in the checklists or considered when assigning roles;

- – be used regularly to assess the checklists for superfluous or misleading questions;

- – include preparation time, meeting time, and the number of participants, so that the relationship between these and the number and severity of anomalies detected can be determined.

To maintain the efficiency of walk-throughs, the data should not be used to evaluate the performance of individuals.
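One simple way to relate effort to results is to compute the number of anomalies found per person-hour for each walk-through. The Python sketch below uses this metric as an assumption; IEEE 1028 does not prescribe it, and the figures are hypothetical. The calculation is made per walk-through, not per participant, in keeping with the rule that the data are not used to evaluate individuals.

```python
def anomalies_per_person_hour(anomalies, preparation_hours, meeting_hours, participants):
    """Anomalies found per person-hour of walk-through effort.

    `preparation_hours` is the total preparation time of all participants;
    `meeting_hours` is the duration of the meeting, attended by everyone.
    """
    effort = preparation_hours + meeting_hours * participants
    if effort <= 0:
        raise ValueError("total effort must be positive")
    return anomalies / effort


if __name__ == "__main__":
    # Hypothetical walk-through: 12 anomalies, 4 hours of total preparation,
    # and a 1-hour meeting attended by 4 people.
    print(anomalies_per_person_hour(12, 4.0, 1.0, 4))
```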

IEEE 1028 also describes the procedures of walk-throughs.

5.5 Inspection Review

This section briefly describes the inspection process that Michael Fagan developed at IBM in the 1970s to increase the quality and productivity of software development.

The purpose of the inspection, according to the IEEE 1028 standard, is to detect and identify anomalies of a software product including errors and deviations from standards and specifications [IEE 08b]. Throughout the development or maintenance process, developers prepare written materials that unfortunately have errors. It is more economical and efficient to detect and correct errors as soon as possible. Inspection is a very effective method to detect these errors or anomalies.

According to the IEEE 1028 standard, inspection allows us to (adapted from [IEE 08b]):

- (a) verify that the software product satisfies its specifications;

- (b) check that the software product exhibits the specified quality attributes;

- (c) verify that the software product conforms to applicable regulations, standards, guidelines, plans, specifications, and procedures;

- (d) identify deviations from provisions of items (a), (b), and (c);

- (e) collect data, for example, the details of each anomaly and the effort associated with its identification and correction;

- (f) request or grant waivers for violation of standards where the adjudication of the type and extent of violations is assigned to the inspection jurisdiction;

- (g) use the data as input to project management decisions as appropriate (e.g., to make trade-offs between additional inspections versus additional testing).

Figure 5.12 shows the major steps of the inspection process. Each step is composed of a series of inputs, tasks and outputs.

Figure 5.12 The inspection process.

Source: Adapted from Holland (1998) [HOL 98].

The IEEE 1028 standard provides guidelines for typical inspection rates for different types of documents, expressed in pages or lines of code per hour. As an example, for the requirements document, IEEE 1028 recommends an inspection rate of 2–3 pages per hour. For source code, the standard recommends an inspection rate of 100–200 lines of code per hour.
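These rates make it possible to estimate how long an inspection will take. The Python sketch below applies the rates quoted above to a document of a given size; the function name and the example sizes (a 30-page requirements document and 600 lines of code) are hypothetical.

```python
def inspection_hours(size, slow_rate, fast_rate):
    """Return (minimum, maximum) inspection time in hours.

    `size` is in pages or lines of code; `slow_rate` and `fast_rate`
    bound the inspection rate in the same unit per hour.
    """
    if size < 0 or slow_rate <= 0 or fast_rate < slow_rate:
        raise ValueError("invalid size or rate range")
    return size / fast_rate, size / slow_rate


if __name__ == "__main__":
    # Rates from the text: 2-3 pages/hour and 100-200 lines of code/hour.
    print("30-page requirements document:", inspection_hours(30, 2, 3))
    print("600 lines of source code:     ", inspection_hours(600, 100, 200))
```

A 30-page requirements document therefore represents 10 to 15 hours of inspection, which suggests splitting large documents across several inspection sessions.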

Finally, IEEE 1028 also describes the procedures of inspection.

5.6 Project Launch Reviews and Project Assessments

In the SQAP of their projects, many organizations plan a project launch or kick-off meeting as well as a project assessment review, also called a lessons learned review.

5.6.1 Project Launch Review

The project launch review is a management review of the milestone dates, requirements, schedule, budget constraints, deliverables, members of the development team, suppliers, and so on. Some organizations also conduct kick-off reviews at the beginning of each major phase when a project is spread over a long period of time (e.g., several years).

Before the start of a project, team members ask themselves the following questions: who will the members of my team be? Who will be the team leader? What will my role and responsibilities be? What are the roles of the other team members and their responsibilities? Do the members of my team have all the skills and knowledge to work on this project?

The following text box describes the kick-off review meeting used for software projects at Bombardier Transport.

5.6.2 Project Retrospectives

If the poor cousin of software engineering is quality assurance, the poor cousin of quality assurance reviews is the project retrospective. It is ironic that a discipline such as software engineering, which depends so heavily on the knowledge of the people involved, dismisses the opportunity to learn and enrich the knowledge of an organization's members. The project retrospective review is normally carried out at the end of a project or at the end of a phase of a large project. Essentially, we want to know what has been done well in this project, what has gone less well, and what could be improved for the next project. The following terms are synonymous: lessons learned, post mortem, after-action review.

Basili et al. (1996) [BAS 96] published the first controlled experiments that captured experience. In this approach, called the Experience Factory, experience is gathered from software development projects, then packaged and stored in an experience database. Packaging refers to the generalization, adaptation, and formalization of the experience until it is easy to reuse. In this approach, the organization responsible for capturing the experience is separate from the project organization.

A post mortem review, conducted at the end of a phase of a project or at the end of a project, provides valuable information such as [POM 09]:

- – updating project data such as length, size, defects, and schedule;

- – updating quality or performance data;

- – a review of performance against plan;

- – updating databases for size and productivity;

- – adjustment of processes (e.g., checklist), if necessary, based on the data (notes taken on the process improvement proposal (PIP) forms, changes in design or code, lists of default controls indicated and so on).

There are several ways to conduct project retrospectives; Kerth (2001) lists 19 techniques in his book [KER 01].

Some techniques focus on creating an atmosphere of discussion in the project, others consider past projects, still others are designed to help a project team to identify and adopt new techniques for their next project, and some address the consequences of a failed project. Kerth recommends holding a 3-day session to make a lasting change in an organization [KER 01]. This section presents a less stringent and less costly approach to capturing the experience of project members.

Since a retrospective session may create some tension, especially if the project discussed has not been a total success, we propose rules of behavior so that the session is effective. The rules of behavior at these sessions are:

- – respect the ideas of the participants;

- – maintain confidentiality;

- – do not blame;

- – do not make any verbal comment or gesture during brainstorming;

- – do not comment when ideas are retained;

- – request more details regarding a particular idea.

The following quote outlines the basis of a successful assessment session.

The main items on the agenda during a project retrospective review are:

- – list the major incidents and identify the main causes;

- – list the actual costs and the actual time required for the project, and analyze variances from estimates;

- – review the quality of processes, methods, and tools used for the project;

- – make proposals for future projects (e.g., indicate what to repeat or reuse (methodology, tools, etc.), what to improve, and what to give up for future projects).

A retrospective session typically consists of three steps: first, the facilitator explains, along with the sponsor, the objectives of the meeting; second, he explains what a retrospective session is, the agenda and the rules of behavior; lastly, he conducts the session.

A retrospective session takes place as follows:

Step One

- – presentation of the facilitators by the sponsor;

- – introduction of participants;

- – presentation of the assumption:

- Regardless of what we discover, we truly believe that everyone did the best job, given his qualifications and abilities, resources, and project context.

- – presentation of the agenda of a typical retrospective session lasting approximately three hours:

- introduction;

- brainstorm to identify what went well and what could improve;

- prioritize items;

- identify the causes;

- write a mini action plan.

Step Two—Introduction to the retrospective session

- – what is a retrospective session?

- – when is a lesson really learned?

- what is individual learning, team learning?

- what is learning in an organization?

- – why have a retrospective session?

- – potential difficulties of a retrospective session;

- – session rules;

- – what is brainstorming? The rules of brainstorming are:

- no verbal comments or gestures;

- no discussion when ideas are retained.

Step Three—Conducting the retrospective session

- – chart a history (timeline) of the project (15–30 minutes);

- – conduct brainstorming (30 minutes);

- individually, identify on post-it notes:

- what went well during the project (e.g., what to keep)?

- what could be improved?

- were there any surprises?

- collect ideas and post them on the project history chart

- – clarify ideas (if necessary);

- – group similar ideas;

- – prioritize ideas;

- – find the causes using the “Five Whys” technique:

- what went well during the project?

- what could be improved?

- – final questions;

- for this project, name what you would have liked to change;

- for this project, name what you wish to keep.

- – write a mini action plan;

- what, who, when?

- – end the session;

- ensure the commitment to implement the action plan;

- thank the sponsor and the participants.

Even if logic dictates that conducting project retrospective or lessons learned sessions is beneficial for the organization, several factors can still hinder these types of sessions:

- – leading lessons learned sessions takes time and often management wants to reduce project costs;

- – lessons learned mainly benefit future projects rather than the current one;

- – a culture of blame (finger pointing) can significantly reduce the benefits of these sessions;

- – participants may feel embarrassed or have a cynical attitude;

- – the maintenance of social relationships between employees is sometimes more important than the diagnosis of events;

- – people may be reluctant to engage in activities that could lead to complaints, to criticism, or blame;

- – some people have beliefs that predispose them against the acceptance of lessons learned; beliefs such as “Experience is enough to learn” or “If you do not have experience, you will not learn anything”;

- – certain organizational cultures do not seem able or willing to learn.

5.7 Agile Meetings

For several years, agile methods have been used in industry. One of these methods, “Scrum,” advocates frequent short meetings. These meetings are held every day or every other day for about 15 minutes (no more than 30 minutes). Their purpose is to take stock and discuss problems. They are similar to the management meetings described in the IEEE 1028 standard but without the formality.

During these meetings, the “Scrum Master” typically asks three questions of the participants:

- – What have you accomplished, in the “to do” list of tasks (Backlog), since the last meeting?

- – What obstacles prevented you from completing the tasks?

- – What do you plan to accomplish by the next meeting?

These meetings allow all participants to be informed on the status of the project, its priorities, and the activities that need to be performed by members of the team. The effectiveness of these meetings is based on the skills of the “Scrum Master.” He should act as facilitator and ensure that the three questions are answered by all participants without drifting into problem-solving.

5.8 Measures

An entire chapter is devoted to measures. This section describes only the measures associated with reviews. Measures are mainly used to answer the following questions:

- – How many reviews were conducted?

- – What software products have been reviewed?

- – How effective were the reviews (e.g., number of errors detected per hour of review)?

- – How efficient were the reviews (e.g., number of hours per review)?

- – What is the density of errors in software products?

- – How much effort is devoted to reviews?

- – What are the benefits of reviews?

The measures that allow us to answer these questions are:

- – number of reviews held;

- – identification of the reviewed software product;

- – size of the software product (e.g., number of lines of code, number of pages);

- – number of errors recorded at each stage of the development process;

- – effort assigned to review and correct the defects detected.
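The measures listed above can be captured in a simple record per review and then aggregated to answer the questions at the start of this section. The sketch below is one possible layout, not a prescribed format: the `ReviewRecord` fields, the `summarize` function, and the two sample records are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ReviewRecord:
    product: str         # identification of the reviewed software product
    size: int            # size of the product (lines of code or pages)
    errors_found: int    # number of errors recorded during the review
    review_hours: float  # effort spent reviewing
    rework_hours: float  # effort spent correcting the defects detected


def summarize(records):
    """Aggregate basic review measures over a list of ReviewRecord."""
    total_hours = sum(r.review_hours for r in records)
    if not records or total_hours <= 0:
        raise ValueError("at least one review with non-zero effort is required")
    total_errors = sum(r.errors_found for r in records)
    return {
        "reviews_held": len(records),
        "errors_per_review_hour": total_errors / total_hours,
        "hours_per_review": total_hours / len(records),
        "total_effort_hours": total_hours + sum(r.rework_hours for r in records),
    }


# Hypothetical data, for illustration only.
records = [
    ReviewRecord("requirements specification", 40, 12, 6.0, 4.0),
    ReviewRecord("module A source code", 800, 8, 4.0, 2.0),
]
summary = summarize(records)
for name, value in summary.items():
    print(f"{name}: {value}")
```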

Tables 5.3 and 5.4, presented at a meeting of software practitioners, show the data that can be collected. Table 5.3 shows the number of reviews, the type of documents, and the errors documented during a project.

Table 5.3 A Company's Peer Review Data [BOU 05]

| Product type | Number of inspections | Number of lines inspected | Operational defects detected | Average OP defect density/1000 lines | Minor defects detected | Average minor defect density/1000 lines |

| Plans | 18 | 5903 | 79 | 13 | 469 | 79 |

| System requirements | 3 | 825 | 13 | 16 | 31 | 38 |

| Software requirements | 72 | 31476 | 630 | 20 | 864 | 27 |

| System design | 1 | 200 | – | – | 1 | 5 |

| Software design | 359 | 136414 | 109 | 1 | 1073 | 8 |

| Code | 82 | 30812 | 153 | 5 | 780 | 25 |

| Test document | 30 | 15265 | 62 | 4 | 326 | 21 |

| Process | 2 | 796 | 14 | 18 | 27 | 34 |

| Change request | 8 | 2295 | 56 | 24 | 51 | 22 |

| User document | 3 | 2279 | 1 | 0 | 89 | 39 |

| Other | 72 | 29216 | 186 | 6 | 819 | 28 |

| Totals | 650 | 255481 | 1303 | 5 | 4530 | 18 |
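The density columns of Table 5.3 can be recomputed from the defect and line counts. The sketch below assumes that density is defects per 1000 lines inspected, rounded to the nearest integer; that rounding rule is an assumption, although it reproduces the values shown in the table. Only three rows are copied here for brevity, and the function name `density_per_1000_lines` is hypothetical.

```python
# Selected rows copied from Table 5.3.
TABLE_5_3 = {
    "Plans": {"lines": 5903, "operational": 79, "minor": 469},
    "Code": {"lines": 30812, "operational": 153, "minor": 780},
    "Totals": {"lines": 255481, "operational": 1303, "minor": 4530},
}


def density_per_1000_lines(defects, lines):
    """Defects per 1000 lines inspected, rounded to the nearest integer
    (the rounding rule is an assumption)."""
    if lines <= 0:
        raise ValueError("lines must be positive")
    return round(1000 * defects / lines)


for product, row in TABLE_5_3.items():
    op = density_per_1000_lines(row["operational"], row["lines"])
    minor = density_per_1000_lines(row["minor"], row["lines"])
    print(f"{product}: operational {op}/1000 lines, minor {minor}/1000 lines")
```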

Table 5.4 Error Detection Throughout the Development Process [BOU 05]

| | Detection activity | | | | | | | | |

| Attributed activity | RA | HLD | DD | CUT | T&I | Post-release | Total | Activity escape | Post-activity escape |

| System design | 6 | 1 | 1 | 0 | 3 | 2 | 13 | | 15% |

| RA | 25 | 2 | 1 | 0 | 1 | 1 | 30 | 17% | 3% |

| HLD | | 32 | 7 | 2 | 8 | 3 | 52 | 38% | 6% |

| DD | | | 43 | 15 | 5 | 7 | 70 | 39% | 10% |

| CUT | | | | 58 | 21 | 4 | 83 | 30% | 5% |

| T&I | | | | | 8 | 2 | 10 | 20% | 20% |

| Total | 31 | 35 | 52 | 75 | 46 | 19 | 258 | | 7% |

Legend: attributed activity, project phase where the error occurred; detection activity, phase of the project where the error was found; RA, requirements analysis; HLD, preliminary design; DD, detailed design; CUT, coding and unit testing; T&I, test and integration; post-release, number of errors detected after delivery; activity escape, percentage of errors that were not detected during this phase (%); post-activity escape, percentage of errors detected after delivery (%).

The data collected allow us to estimate the number of residual errors and the defect detection efficiency for the development process as illustrated in Table 5.4. For example, of the 30 defects attributed to the requirements analysis activity, 25 were detected during requirements analysis itself, two during the development of the high-level design, one during the detailed design, none during coding and unit testing, one during test and integration, and one after delivery.

We can calculate the defect detection efficiency of the review conducted during the requirements phase:
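Taking detection efficiency to be the share of requirements defects that were caught during the requirements phase itself (the complement of the 17% activity escape in Table 5.4), the RA row gives:

$$\text{Defect detection efficiency}_{\text{RA}} = \frac{25}{30} \times 100 \approx 83\%$$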

We can also calculate the percentage of defects that originate from the requirements phase:
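Assuming this percentage is the RA row total divided by the grand total of Table 5.4, the calculation is:

$$\text{Defects originating in RA} = \frac{30}{258} \times 100 \approx 12\%$$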

It is therefore possible, given these data, to make different decisions for a future project. For example:

- – to reduce the number of defects injected during the requirements phase, we can study the 25 defects detected and try to eliminate them;

- – we can reduce the number of pages inspected per unit of time in order to increase defect detection;

- – there is a large number of defects that were not detected during preliminary and detailed design activities: 38% and 39%, respectively. A causal analysis of these defects could reduce these percentages.
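The escape percentages in Table 5.4 can be recomputed from the raw counts. The sketch below follows the definitions in the legend and makes two assumptions: blank cells in the table are read as zero, and percentages are rounded to the nearest integer. The names `DEFECTS` and `escape_rates` are hypothetical.

```python
PHASES = ["RA", "HLD", "DD", "CUT", "T&I", "Post-release"]

# Rows: attributed activity. Columns: detection activity, in PHASES order.
# Counts are copied from Table 5.4; blank cells are assumed to be zero.
DEFECTS = {
    "System design": [6, 1, 1, 0, 3, 2],
    "RA": [25, 2, 1, 0, 1, 1],
    "HLD": [0, 32, 7, 2, 8, 3],
    "DD": [0, 0, 43, 15, 5, 7],
    "CUT": [0, 0, 0, 58, 21, 4],
    "T&I": [0, 0, 0, 0, 8, 2],
}


def escape_rates(activity):
    """Return (activity_escape, post_activity_escape) in percent.

    activity_escape is None when the activity has no detection column
    of its own (system design in this table).
    """
    counts = DEFECTS[activity]
    total = sum(counts)
    post_activity = round(100 * counts[-1] / total)
    if activity in PHASES:
        caught_in_phase = counts[PHASES.index(activity)]
        activity_escape = round(100 * (total - caught_in_phase) / total)
    else:
        activity_escape = None
    return activity_escape, post_activity


for activity in DEFECTS:
    print(activity, escape_rates(activity))
```

Running the sketch reproduces the 38% and 39% activity escapes for high-level and detailed design discussed above.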

5.9 Selecting the Type of Review

To determine the type of review and its frequency, the criteria to be considered are: the risk associated with the software to be developed, the criticality of the software, software complexity, the size and experience of the team, the deadline for completion, and software size.

Table 5.5 is an example of a support matrix for selecting a type of review. The “Product” column lists the products to review. The “complexity” columns show the classification criterion and the type of review to be used at each level. In this example, the degree of complexity is rated as low, medium, or high. Complexity is defined as the level of difficulty of understanding a document and verifying it. A low complexity level indicates that a document is simple or easily checked, while a high complexity level indicates a product that is difficult to verify. Table 5.5 is only an example. The criteria for choosing the type of review and the product to review should be documented in the project plan or the SQAP.

Table 5.5 Example of a Matrix for the Selection of a Type of Review

| Technical drivers—complexity | |||

| Product | Low | Medium | High |

| Software requirements | Walk-through | Inspection | Inspection |

| Design | Desk-check | Walk-through | Inspection |

| Software code and unit test | Desk-check | Walk-through | Inspection |

| Qualification test | Desk-check | Walk-through | Inspection |

| User/operator manuals | Desk-check | Desk-check | Walk-through |

| Support manuals | Desk-check | Desk-check | Walk-through |

| Software documents, for example, Version Description Document (VDD), Software Product Specification (SPS), Software Version Description (SVD) | Desk-check | Desk-check | Desk-check |

| Planning documents | Walk-through | Walk-through | Inspection |

| Process documents | Desk-check | Walk-through | Inspection |
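A selection matrix such as Table 5.5 is easy to encode as a lookup table so that the choice of review type is applied consistently. The sketch below copies a subset of the rows from Table 5.5; the names `REVIEW_MATRIX` and `select_review` are hypothetical, and an organization would substitute the matrix documented in its own project plan or SQAP.

```python
LEVELS = ("low", "medium", "high")

# Review type by product and complexity level, copied from Table 5.5.
REVIEW_MATRIX = {
    "Software requirements": ("Walk-through", "Inspection", "Inspection"),
    "Design": ("Desk-check", "Walk-through", "Inspection"),
    "Software code and unit test": ("Desk-check", "Walk-through", "Inspection"),
    "Qualification test": ("Desk-check", "Walk-through", "Inspection"),
    "User/operator manuals": ("Desk-check", "Desk-check", "Walk-through"),
    "Planning documents": ("Walk-through", "Walk-through", "Inspection"),
    "Process documents": ("Desk-check", "Walk-through", "Inspection"),
}


def select_review(product, complexity):
    """Return the review type for a product at a given complexity level."""
    level = complexity.lower()
    if product not in REVIEW_MATRIX:
        raise ValueError(f"unknown product: {product!r}")
    if level not in LEVELS:
        raise ValueError(f"complexity must be one of {LEVELS}")
    return REVIEW_MATRIX[product][LEVELS.index(level)]


print(select_review("Design", "medium"))
print(select_review("Planning documents", "High"))
```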

In Chapter 1, we briefly introduced an example of the software quality for the aircraft engine manufacturer Rolls-Royce. Following is a concrete example of the application of code inspections at Rolls-Royce.

5.10 Reviews and Business Models

In Chapter 1, we presented the main business models for the software industry [IBE 02]:

- – Custom systems written on contract: The organization makes profits by selling tailored software development services for clients.

- – Custom software written in-house: The organization develops software to improve organizational efficiency.

- – Commercial software: The company makes profits by developing and selling software to other organizations.

- – Mass-market software: The company makes profits by developing and selling software to consumers.

- – Commercial and mass-market firmware: The company makes profits by selling software in embedded hardware and systems.

Each business model is characterized by its own set of attributes or factors: criticality, the uncertainty of needs and requirements (needs versus expectations) of the users, the range of environments, the cost of correction of errors, regulation, project size, communication, and the culture of the organization.

Business models help us understand the risks and the respective needs with regard to software practices. Reviews are techniques that detect errors and thus reduce the risk associated with a software product. The project manager, in collaboration with SQA, selects the type of review to perform and the documents or products to review throughout the life cycle in order to plan and budget for these activities.

The following section explains the requirements of the IEEE 730 standard with regard to project reviews.

5.11 Software Quality Assurance Plan

The IEEE 730 standard defines the requirements with respect to the review activities to be described in the SQAP of a project. Reviews are central when it comes time to assess the quality of a software deliverable. For example, product assurance activities may include SQA personnel participating in project technical reviews, software development document reviews, and software testing. Consequently, reviews are to be used for both product and process assurance of a software project. IEEE 730 recommends that the following questions be answered during project execution [IEE 14]:

- – Have periodic reviews and audits been performed to determine if software products fully satisfy contractual requirements?

- – Have software life cycle processes been reviewed against defined criteria and standards?

- – Has the contract been reviewed to assess consistency with software products?

- – Are stakeholder, steering committee, management, and technical reviews held based on the needs of the project?

- – Have acquirer acceptance tests and reviews been supported?

- – Have action items resulting from reviews been tracked to closure?

The standard also describes how reviews can be done in projects that use an agile methodology. It states that “reviews can be done on a daily basis,” which reflects the agile culture of conducting a daily activity.

We know that SQA activities need to be recorded during the course of a software project. These records serve as proof that the project performed the activities, and they can be produced when requested. Review results and completed review checklists can be a good source of evidence. Consequently, it is recommended that project teams keep a record of the meeting minutes for all technical and management reviews they conduct.

Finally, an organization should base process improvement efforts on the results of in-process as well as completed projects, on lessons learned, and on the results of ongoing SQA activities such as process assessments and reviews. Reviews can play an important role in the organization-wide improvement of software processes. Preventive actions are taken to keep potential problems from occurring. Non-conformances and other project information may be used to identify preventive actions. SQA reviews propose preventive actions and identify effectiveness measures. Once the preventive action is implemented, SQA evaluates the activity and determines whether the preventive action is effective. The preventive action process can be defined either in the SQAP or in the organizational quality management system.

5.12 Success Factors

Although reviews are relatively simple and highly effective techniques, several factors can greatly improve their effectiveness and efficiency. Conversely, many factors can undermine reviews to the point that an organization stops using them. Some factors related to an organization's culture, which can promote the development of quality software, are listed below.

Following are the factors related to an organization's culture that can harm the development of quality software.

5.13 Tools

Following are some tools for effective reviews.

5.14 Further Reading

- WIEGERS K. The seven deadly sins of software reviews. Software Development, vol. 6, issue 3, 1998, pp. 44–47.

- WIEGERS K. A little help from your friends. Peer Reviews in Software, Pearson Education, Boston, MA, 2002, Chapter 2.

- WIEGERS K. Peer review formality spectrum. Peer Reviews in Software, Pearson Education, Boston, MA, 2002, Chapter 3.

5.15 Exercises

- Develop a checklist for an architecture document.

- Identify the activities that must be performed by Quality Assurance.

- List the benefits of walk-throughs or inspections from the perspective of these key players:

  - development manager;

  - developers;

  - quality assurance;

  - maintenance personnel.

- Provide some reasons for not carrying out inspections.

- Name some objectives that are not the goal of an inspection.

- Calculate the residual error given the following: 16 errors were identified in a 36-page document. We know our error detection rate is 60% and that we inject 17% of new errors when we make corrections to the errors detected. Calculate the number of errors per page in the document that remain after completing the review. Explain your calculation.

- Develop a checklist from the Java/C++ programming guide.

- What benefits do these key players get from a review?

  - analysts,

  - developers,

  - managers,

  - SQA,

  - maintainers,

  - testers.

- Describe the advantages and disadvantages of formal reviews.

- Describe the advantages and disadvantages of informal reviews.

- Provide criteria for selecting a type of review.

- Why should we do project retrospectives?

- Complete the following table by putting an “X” in the appropriate columns.

| Objective of the peer review | Desk-check | Walk-through | Inspection |

| Find defects/errors | | | |

| Verify compliance with the specifications | | | |

| Verify compliance with standards | | | |

| Check that the software is complete and correct | | | |

| Assess maintainability | | | |

| Collect data | | | |

| Measure the quality of the software product | | | |

| Train personnel | | | |

| Transfer knowledge | | | |

| Ensure that errors were corrected | | | |