Chapter 12

Hybrid Architectures

THE AWS CERTIFIED ADVANCED NETWORKING – SPECIALTY EXAM OBJECTIVES COVERED IN THIS CHAPTER MAY INCLUDE, BUT ARE NOT LIMITED TO, THE FOLLOWING:

- Domain 1.0: Design and Implement Hybrid IT Network Architectures at Scale

1.1 Apply procedural concepts for the implementation of connectivity for hybrid IT architecture

1.2 Given a scenario, derive an appropriate hybrid IT architecture connectivity solution

1.4 Evaluate design alternatives leveraging AWS Direct Connect

1.5 Define routing policies for hybrid IT architectures

- Domain 4.0: Configure Network Integration with Application Services

4.2 Evaluate Domain Name System (DNS) solutions in a hybrid IT architecture

- Domain 5.0: Design and Implement for Security and Compliance

5.4 Use encryption technologies to secure network communications

Introduction to Hybrid Architectures

The goal of this chapter is to provide you with an understanding of how to design hybrid architectures using the technologies and AWS Cloud services discussed so far in this Study Guide. We go into detail on how AWS Direct Connect and Virtual Private Networks (VPNs) can be leveraged to enable common hybrid IT application architectures. In our discussion, hybrid refers to the scenario where on-premises applications communicate with AWS resources in the cloud. We focus on the connectivity aspects of these scenarios.

At their cores, AWS VPN connections and AWS Direct Connect enable communication between on-premises applications and AWS resources. They vary in their characteristics, and one may be preferable over the other for certain types of applications. Some hybrid applications might require the use of both. We cover these scenarios in this chapter.

We look at several application architectures, such as a Three-Tier web application, Active Directory, voice applications, and remote desktops (Amazon WorkSpaces). We examine the connectivity requirements of these applications when running in a hybrid mode and how AWS VPN connections or AWS Direct Connect can help fulfill these requirements. We also dive deeper into how to design for special routing scenarios, such as transitive routing.

Choices for Connectivity

In a hybrid deployment, you will have on-premises servers and clients that require connectivity to and from Amazon Elastic Compute Cloud (Amazon EC2) instances that reside in a Virtual Private Cloud (VPC) environment. There are three ways to establish this connectivity.

Accessing AWS resources using public IPs over the public Internet. You can assign public IP addresses to Amazon EC2 instances, and the on-premises applications can then reach these instances at those addresses. This is the easiest option to implement, but it might not be the best choice from a security and network performance perspective. For security, you can protect the Amazon EC2 instances with security groups and network Access Control Lists (ACLs) that allow traffic only from on-premises server IPs. You can also allow the instances' Elastic IP (IPv4) addresses or IPv6 addresses on the on-premises firewalls. You can encrypt traffic by using Transport Layer Security (TLS) at the transport layer or an encryption library at the application layer. Bandwidth and network performance will depend on your on-premises Internet connectivity, as well as on the type and size of the Amazon EC2 instance.
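The security group restriction described above can be sketched as follows. This is a minimal sketch, assuming the rule only needs to admit HTTPS (TCP 443) from a list of on-premises public prefixes; the prefixes and the security group ID are hypothetical placeholders. The function only builds the IpPermissions structure accepted by the EC2 AuthorizeSecurityGroupIngress API, so it runs without AWS credentials.

```python
import ipaddress


def onprem_https_permissions(onprem_cidrs, port=443):
    """Build an IpPermissions list (EC2 AuthorizeSecurityGroupIngress shape)
    that admits TCP traffic on `port` only from the given on-premises prefixes."""
    ipv4_ranges, ipv6_ranges = [], []
    for cidr in onprem_cidrs:
        net = ipaddress.ip_network(cidr)  # raises ValueError if host bits are set
        if net.version == 4:
            ipv4_ranges.append({"CidrIp": str(net), "Description": "on-premises"})
        else:
            ipv6_ranges.append({"CidrIpv6": str(net), "Description": "on-premises"})
    return [{
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": ipv4_ranges,
        "Ipv6Ranges": ipv6_ranges,
    }]


if __name__ == "__main__":
    # Documentation prefixes standing in for real on-premises egress addresses.
    perms = onprem_https_permissions(["203.0.113.0/24", "2001:db8::/32"])
    print(perms)
    # Applying it would need boto3 and credentials (group ID is hypothetical):
    # boto3.client("ec2").authorize_security_group_ingress(
    #     GroupId="sg-0123456789abcdef0", IpPermissions=perms)
```

Security groups are stateful, so return traffic is allowed automatically; network ACLs are stateless and would need matching inbound and outbound entries.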

Accessing AWS resources using private IPs leveraging site-to-site IP Security (IPsec) VPN over the public Internet. Using the public Internet, you can set up an encrypted tunnel between your on-premises environment and your VPC. This tunnel transports all traffic between the two environments in a secure, encrypted fashion. You can terminate this VPN on a Virtual Private Gateway (VGW) or on an Amazon EC2 instance. When terminating VPNs on the VGW, AWS takes care of the IPsec and Border Gateway Protocol (BGP) configuration on the AWS side and is responsible for the uptime, availability, and patching/maintenance of the VGW. You are responsible for configuring IPsec and routing at your end. When terminating a VPN on an Amazon EC2 instance, you are responsible for deploying the software, IPsec configuration, routing, high availability, and scaling. For more details, refer to Chapter 4, “Virtual Private Networks.” Because VPN connections run over the Internet, they are usually quick and easy to set up and can often leverage existing network equipment and connectivity. Note, however, that these connections are subject to jitter and bandwidth variability, depending on the path that your traffic takes across the Internet. Many applications run well over VPN. For hybrid applications that require more consistent network performance or higher network bandwidth, AWS Direct Connect is the more suitable option.

Accessing AWS resources over a private circuit leveraging the AWS Direct Connect service. The best way to remove the uncertainty of the public Internet in terms of performance, latency, and security is to set up a dedicated circuit between a VPC and your on-premises data center. AWS Direct Connect lets you establish a dedicated network connection between your network and one of the AWS Direct Connect locations. For more details, refer to Chapter 5, “AWS Direct Connect.”

Application Architectures

Now that you have a clearer understanding of the connectivity options, let’s examine various application types that require hybrid IT connectivity and how these connectivity options can be leveraged for each use case.

Three-Tier Web Application

A Three-Tier web application is commonly referred to as a web application stack. This type of application consists of multiple layers, each of which performs a specific function and is isolated in its own networking boundary. Typically, the stack consists of a web layer, which is responsible for accepting all incoming end-user requests, followed by an application layer, which is responsible for implementing the business logic of the application, and lastly followed by a database storage layer, which is responsible for storing the application data.

Usually, you would want all three layers to be either on-premises or in AWS to minimize latency between layers, but there are scenarios where a hybrid solution is more suitable. For example, you may be in the transition phase of migrating an application to AWS, or you may want to use Amazon EC2 instances to augment on-premises resources, such as by distributing application traffic across both your AWS and on-premises resources. In such scenarios, the application stack spans both AWS and on-premises, and a crucial component of the deployment's success is the connectivity between your on-premises data center and your VPC.

During this phased migration, you can start by deploying the web layer in AWS, while the application and database layers remain on-premises. Initially, you can have the web servers both in AWS and on-premises, using Application Load Balancer or Network Load Balancer to spread traffic across both of these stacks. Application Load Balancer and Network Load Balancer can route traffic directly to on-premises IP addresses that reside on the other end of the VPN or AWS Direct Connect connection. You need to make sure that the load balancer is in a subnet that has a route back to the on-premises network. We recommend testing this scenario for latency and user experience before deployment. Figure 12.1 depicts this hybrid use case.
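Registering on-premises web servers behind an Application Load Balancer or Network Load Balancer can be sketched as follows. This is a minimal sketch that only builds request parameters; it assumes, based on our reading of the Elastic Load Balancing documentation, that the target group uses target type ip, that on-premises IP targets must fall in RFC 1918 or RFC 6598 ranges, and that such targets are registered with AvailabilityZone set to "all". The target group name, VPC ID, and server addresses are hypothetical.

```python
import ipaddress

# Ranges Elastic Load Balancing accepts for IP targets outside the VPC
# (RFC 1918 plus RFC 6598 shared address space).
ALLOWED_OUTSIDE_VPC = [ipaddress.ip_network(n) for n in
                       ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16", "100.64.0.0/10")]


def ip_target_group_params(name, vpc_id, protocol="HTTP", port=80):
    """CreateTargetGroup parameters; TargetType 'ip' allows registering addresses."""
    return {"Name": name, "Protocol": protocol, "Port": port,
            "VpcId": vpc_id, "TargetType": "ip"}


def onprem_targets(ips, port=80):
    """RegisterTargets entries for on-premises servers reached over VPN or Direct Connect."""
    targets = []
    for ip in ips:
        addr = ipaddress.ip_address(ip)
        if not any(addr in net for net in ALLOWED_OUTSIDE_VPC):
            raise ValueError(f"{ip} cannot be registered as an on-premises IP target")
        # Targets outside the VPC are registered with AvailabilityZone "all".
        targets.append({"Id": str(addr), "Port": port, "AvailabilityZone": "all"})
    return targets


if __name__ == "__main__":
    params = ip_target_group_params("web-hybrid", "vpc-0example")  # hypothetical IDs
    targets = onprem_targets(["10.20.1.11", "10.20.1.12"])
    print(params, targets)
    # With boto3: elbv2 = boto3.client("elbv2")
    # arn = elbv2.create_target_group(**params)["TargetGroups"][0]["TargetGroupArn"]
    # elbv2.register_targets(TargetGroupArn=arn, Targets=targets)
```

The load balancer still needs a route back to these addresses, which is why the load balancer subnets must have a route to the on-premises network through the VGW.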

FIGURE 12.1 Hybrid web application using AWS Load Balancing

Another way to load-balance traffic between multiple environments is to use DNS-based load balancing. In this scenario, you would use a Network Load Balancer in AWS to load-balance traffic to your web layer. You would then create DNS records for your domain name that point to both the Network Load Balancer and the on-premises load balancer. If you're using Amazon Route 53 as your DNS provider, you can choose any of the seven supported routing policies to balance traffic across the Network Load Balancer and the on-premises load balancer. For example, with the weighted routing policy, you would create two records, one for the on-premises load balancer and another for the Network Load Balancer, and assign each record a relative weight that corresponds to how much traffic you want to send to each resource. For more details on Amazon Route 53 routing policies, refer to Chapter 6, “Domain Name System and Load Balancing.” This scenario is shown in Figure 12.2.
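The weighted routing policy can be sketched as a Route 53 change batch. This is a minimal sketch, assuming an A record with a static IP for the on-premises load balancer and an alias A record for the Network Load Balancer; the domain, IP address, hosted zone IDs, and NLB DNS name are hypothetical placeholders. The expected share for each record is its weight divided by the sum of all weights (Route 53 weights range from 0 to 255).

```python
def weighted_a_record(name, set_id, weight, ip=None, alias=None):
    """One UPSERT change for a weighted A record.

    Pass `ip` for a plain A record (e.g., the on-premises load balancer) or
    `alias=(hosted_zone_id, dns_name)` for an alias to a Network Load Balancer.
    """
    if not 0 <= weight <= 255:
        raise ValueError("Route 53 weights range from 0 to 255")
    rrset = {"Name": name, "Type": "A", "SetIdentifier": set_id, "Weight": weight}
    if alias is not None:
        zone_id, dns_name = alias
        rrset["AliasTarget"] = {"HostedZoneId": zone_id, "DNSName": dns_name,
                                "EvaluateTargetHealth": True}
    else:
        rrset["TTL"] = 60
        rrset["ResourceRecords"] = [{"Value": ip}]
    return {"Action": "UPSERT", "ResourceRecordSet": rrset}


def expected_share(weights):
    """Fraction of DNS responses each record should receive: weight / total.
    If every weight is 0, Route 53 answers with all records equally."""
    total = sum(weights.values())
    if total == 0:
        return {set_id: 1 / len(weights) for set_id in weights}
    return {set_id: w / total for set_id, w in weights.items()}


if __name__ == "__main__":
    weights = {"on-prem": 70, "aws-nlb": 30}
    batch = {"Changes": [
        weighted_a_record("www.example.com.", "on-prem", weights["on-prem"], ip="198.51.100.10"),
        weighted_a_record("www.example.com.", "aws-nlb", weights["aws-nlb"],
                          alias=("ZNLBZONEEXAMPLE", "my-nlb-0123.elb.us-east-1.amazonaws.com")),
    ]}
    print(expected_share(weights))
    # boto3.client("route53").change_resource_record_sets(
    #     HostedZoneId="Z0EXAMPLE", ChangeBatch=batch)
```

Shifting more traffic to AWS during the migration is then just a matter of raising the NLB record's weight and lowering the on-premises one.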

FIGURE 12.2 Hybrid web application using DNS and AWS load balancing

Active Directory

Active Directory is essential for Windows and/or Linux workloads in the cloud. When deploying application servers on AWS, you may choose to use the on-premises Active Directory servers and connect them to your VPC environment. You may also choose to deploy Active Directory servers in the VPC, which will act as a local copy of the on-premises Active Directory. This can be achieved by using AWS Directory Service for Microsoft Active Directory (Enterprise Edition) and establishing a trust relationship with on-premises Active Directory servers. In either scenario, you will require a VPN or an AWS Direct Connect private Virtual Interface (VIF) between your on-premises data center and AWS. Figure 12.3 depicts this hybrid use case.

FIGURE 12.3 Hybrid Active Directory setup

Domain Name System (DNS)

Similar to Active Directory, you may choose to use an on-premises server (colocated with the Active Directory Domain controller or otherwise) for assigning DNS names to your Amazon EC2 instances and/or resolving DNS queries for on-premises server names. In either case, you will require connectivity between the VPC and your on-premises data center. Typically, you will have one or more (for high availability) DNS forwarders sitting inside the VPC, which are responsible for forwarding DNS queries for VPC services to the VPC DNS and forwarding DNS queries for on-premises resources to the on-premises DNS server. Proper connectivity using AWS Direct Connect or VPN is required between the forwarder and the on-premises DNS server.
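The forwarding decision made by such a DNS forwarder can be sketched as a conditional-forwarding lookup. This is a minimal sketch, assuming a single on-premises zone and resolver (both hypothetical) and relying on the fact that the Amazon-provided DNS server for a VPC is reachable at the base of the VPC's primary CIDR plus two.

```python
import ipaddress


def vpc_dns_resolver(vpc_cidr):
    """Amazon-provided DNS for a VPC listens at the primary CIDR base plus two."""
    return str(ipaddress.ip_network(vpc_cidr).network_address + 2)


def choose_upstream(qname, onprem_zones, onprem_dns, vpc_cidr):
    """Conditional forwarding: on-premises zones go on-premises, everything else
    (including VPC-internal names) goes to the VPC resolver."""
    name = qname.rstrip(".").lower()
    for zone in onprem_zones:
        zone = zone.rstrip(".").lower()
        # Match the zone itself or a true subdomain, not a mere string suffix.
        if name == zone or name.endswith("." + zone):
            return onprem_dns
    return vpc_dns_resolver(vpc_cidr)


if __name__ == "__main__":
    zones = ["corp.example.com"]  # hypothetical on-premises domain
    for q in ("dc01.corp.example.com.", "ip-172-31-0-5.ec2.internal"):
        print(q, "->", choose_upstream(q, zones, "10.10.0.53", "172.31.0.0/16"))
```

In practice this logic lives in the forwarder's configuration (for example, conditional forwarders on a Windows DNS server), and queries sent to the on-premises resolver travel over the VPN or AWS Direct Connect private VIF.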

Applications Requiring Consistent Network Performance

Certain applications require a specific level of network consistency to ensure a proper end-user experience. This needs particular attention in a hybrid architecture, because traffic often flows between an on-premises environment and AWS. Customers usually architect hybrid IT connectivity so that all applications share the same AWS Direct Connect port and therefore contend for its bandwidth. A high-bandwidth application such as data archiving can saturate the circuit, degrading the performance of other applications.

To avoid this degradation, you can consider reserving a separate AWS Direct Connect connection solely for application traffic that requires Quality of Service (QoS). Essentially, if traffic on a shared connection cannot be policed, it is best to use separate connections. A sub-1 Gbps or 1 Gbps connection can be dedicated to this application traffic, giving the application the bandwidth it needs to function properly. In Figure 12.4, Application 1 has been given a dedicated 1 Gbps AWS Direct Connect connection, while the rest of the production environment shares a 10 Gbps AWS Direct Connect connection. If the two applications communicate with different on-premises subnets, they can be placed in two different subnets within the same VPC. You can then use BGP AS path prepending so that the 1 Gbps link is primary for one on-premises subnet and the 10 Gbps link is primary for the other. If the two applications communicate with the same on-premises subnet, place them in different VPCs and attach those VPCs to different AWS Direct Connect gateways. The private VIF on the 1 Gbps link should then be attached to the AWS Direct Connect gateway of the Application 1 VPC, and the private VIF on the 10 Gbps link to the AWS Direct Connect gateway of the Application 2 VPC.
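The AS path prepending plan for the same-VPC case can be modeled in a few lines. This is a simplified sketch, not a BGP implementation: it assumes every other BGP attribute is equal, so AWS chooses the advertisement with the shortest AS path. The private ASN 65000, the link names, and the two on-premises subnets are hypothetical.

```python
def build_advertisements(local_asn, primary_link_by_prefix, links, prepends=3):
    """Advertise every on-premises prefix on every link, prepending the local
    ASN `prepends` extra times on links that should be backup for that prefix."""
    adverts = {link: {} for link in links}
    for prefix, primary in primary_link_by_prefix.items():
        for link in links:
            extra = 0 if link == primary else prepends
            adverts[link][prefix] = (local_asn,) * (1 + extra)
    return adverts


def preferred_link(adverts):
    """For each prefix, pick the link whose AS path is shortest (other BGP
    attributes assumed equal, which is what makes prepending effective)."""
    best = {}
    for link, routes in adverts.items():
        for prefix, as_path in routes.items():
            if prefix not in best or len(as_path) < best[prefix][1]:
                best[prefix] = (link, len(as_path))
    return {prefix: link for prefix, (link, _) in best.items()}


if __name__ == "__main__":
    plan = {"10.1.0.0/24": "dx-1g", "10.2.0.0/24": "dx-10g"}  # hypothetical subnets
    adverts = build_advertisements(65000, plan, ["dx-1g", "dx-10g"])
    print(preferred_link(adverts))
```

Because each prefix is still advertised on both links, the longer path remains available as a backup if the primary connection fails.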

FIGURE 12.4 Quality of Service implementation

Within AWS Direct Connect routers, all traffic on a given port is shared, and certain traffic cannot be given priority over other traffic. You can, however, use QoS markings within your own networks and network devices. When packets leave your on-premises network and traverse service provider networks that honor Differentiated Services Code Point (DSCP) bits, QoS can be applied normally. When the traffic reaches AWS, DSCP is not used to modify traffic forwarding in AWS networks, but the header is preserved as it was received. This ensures that the return traffic carries the QoS markings that the intermediary service provider and your on-premises devices need to police traffic.
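To make the DSCP marking concrete: DSCP occupies the upper six bits of the IPv4 Type of Service byte (the IPv6 Traffic Class byte), and the lower two bits carry Explicit Congestion Notification. The sketch below reads the value from a raw IPv4 header; the sample header is synthetic, with only the version/IHL and ToS bytes filled in.

```python
def dscp_from_tos(tos):
    """DSCP is the upper six bits of the IPv4 ToS / IPv6 Traffic Class byte."""
    if not 0 <= tos <= 0xFF:
        raise ValueError("ToS/Traffic Class is a single byte")
    return tos >> 2


def ecn_from_tos(tos):
    """The lower two bits of the same byte carry ECN, not QoS class."""
    return tos & 0x03


def dscp_from_ipv4_header(header: bytes) -> int:
    """Read DSCP from a raw IPv4 header (version nibble must be 4)."""
    if len(header) < 20 or header[0] >> 4 != 4:
        raise ValueError("not an IPv4 header")
    return dscp_from_tos(header[1])


if __name__ == "__main__":
    # 0xB8 is the ToS byte commonly used for voice (DSCP 46, Expedited Forwarding).
    header = bytes([0x45, 0xB8]) + bytes(18)
    print(dscp_from_ipv4_header(header))
```

Because AWS leaves this byte untouched, a value such as 46 set by an on-premises phone is still present when the packet reaches the Amazon EC2 instance.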

If you are using VPN (over the Internet or AWS Direct Connect), the QoS configuration varies based on where you terminate the VPN on the AWS end. When you terminate VPN on the VGW, as with AWS Direct Connect, the VGW does not act on QoS markings. When you terminate VPN on an Amazon EC2 instance, you can honor the QoS markings and/or implement policy-based forwarding to prioritize certain traffic flows over others. This requires support from the VPN software running on the Amazon EC2 instance, configured appropriately. Using advanced libraries such as the Data Plane Development Kit (DPDK), you can get complete visibility into packet markings and implement custom packet forwarding logic.
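Prioritization on an Amazon EC2 VPN instance can be illustrated with a strict-priority queue keyed on DSCP. This is a toy sketch of the idea, not how any particular VPN product or DPDK application works. The mapping from DSCP values to priority classes is a hypothetical example policy.

```python
import heapq
import itertools

# Hypothetical class map: lower number is served first.
PRIORITY_BY_DSCP = {46: 0, 34: 1, 26: 1}  # EF (voice), AF41 (video), AF31
DEFAULT_PRIORITY = 2                        # best effort and everything else


class StrictPriorityQueue:
    """Dequeue higher-priority DSCP classes first; FIFO within a class."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker preserves arrival order

    def enqueue(self, packet, dscp):
        prio = PRIORITY_BY_DSCP.get(dscp, DEFAULT_PRIORITY)
        heapq.heappush(self._heap, (prio, next(self._seq), packet))

    def dequeue(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)
```

Strict priority can starve lower classes when high-priority traffic is heavy, so real schedulers usually cap the priority class's rate or use weighted scheduling instead.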

FIGURE 12.5 AWS CodeDeploy endpoint access over public VIF

Hybrid Operations

You can use AWS CodeDeploy and AWS OpsWorks to deploy code and launch infrastructure, both on-premises and in AWS. You can also use Amazon EC2 Run Command to remotely and securely manage virtual machines running in your data center or on AWS. Amazon EC2 Run Command provides a simple way to automate common administrative tasks, such as executing shell scripts and commands on Linux, running PowerShell commands on Windows, and installing software or patches. Together, these tools let you build a unified operational plane for virtual machines, both on-premises and on AWS.

For these tools to work, you need to enable access to AWS public endpoints from within the on-premises environment. This access can be over the public Internet or can be through AWS Direct Connect. By creating an AWS Direct Connect public VIF, you can enable access to all AWS endpoints in the Amazon public IP space. All traffic to and from the Amazon EC2, AWS CodeDeploy, and AWS OpsWorks endpoints will be sent over the AWS Direct Connect circuit. An example of this traffic flow is shown in Figure 12.5.
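A Run Command invocation against a mix of Amazon EC2 instances and on-premises servers can be sketched as SendCommand parameters (Run Command is now a capability of AWS Systems Manager). This is a minimal sketch: the node IDs are hypothetical, and it assumes the on-premises servers are already registered as managed nodes, whose IDs start with "mi-". The Systems Manager endpoint it targets is one of the public endpoints that must be reachable over the Internet or a public VIF.

```python
def ssm_endpoint(region):
    """Regional Systems Manager API endpoint; it sits in Amazon public IP space,
    so on-premises nodes can reach it over a public VIF or the Internet."""
    return f"https://ssm.{region}.amazonaws.com"


def run_shell_request(node_ids, commands, comment="hybrid maintenance"):
    """SendCommand parameters for the AWS-RunShellScript document.

    EC2 instance IDs start with 'i-'; on-premises managed nodes start with 'mi-'.
    """
    for node in node_ids:
        if not node.startswith(("i-", "mi-")):
            raise ValueError(f"unexpected managed node ID: {node}")
    return {
        "DocumentName": "AWS-RunShellScript",
        "InstanceIds": list(node_ids),
        "Parameters": {"commands": list(commands)},
        "Comment": comment,
    }


if __name__ == "__main__":
    req = run_shell_request(["i-0abc1234def567890", "mi-0123456789abcdef0"], ["uptime"])
    print(ssm_endpoint("us-east-1"), req)
    # boto3.client("ssm", region_name="us-east-1").send_command(**req)
```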

Remote Desktop Application: Amazon WorkSpaces

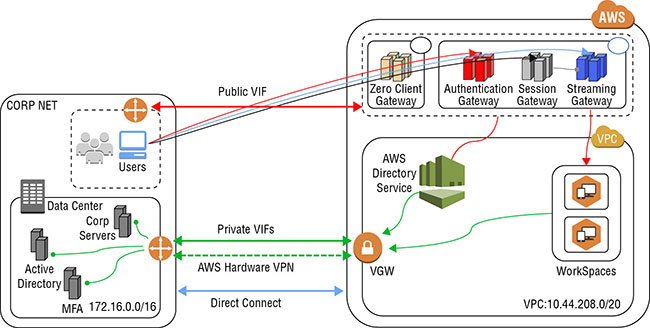

Amazon WorkSpaces is a managed, secure Desktop as a Service (DaaS) solution that runs on AWS. The networking requirements of Amazon WorkSpaces were briefly covered in Chapter 11, “Service Requirements.” In this section, we look at how you can leverage hybrid connectivity while using Amazon WorkSpaces. One common use case is connecting your on-premises Active Directory server with AWS Directory Service so that your existing enterprise user credentials work with Amazon WorkSpaces. The Active Directory section earlier in this chapter covered how you can use an AWS Direct Connect private VIF or VPN to enable this capability.

Another use case is for providing Amazon WorkSpaces users access to internal systems hosted in your data center, such as internal websites, human resources applications, and internal tools. You can connect your Amazon WorkSpaces VPC to your on-premises data center using an AWS Direct Connect private VIF or VPN. You would create a private VIF to the VPC in which Amazon WorkSpaces is hosted.

A third use case is for access to Amazon WorkSpaces from end-user clients. The Amazon WorkSpaces clients connect to a set of Amazon WorkSpaces PC over IP (PCoIP) proxy endpoints. The clients also require access to several other endpoints, such as update services, connectivity check services, registration, and CAPTCHA. These endpoints sit outside the VPC in the Amazon public IP range. You can use an AWS Direct Connect public VIF to enable connectivity to these IP ranges from your on-premises network to AWS. This connectivity option is depicted in Figure 12.6.

FIGURE 12.6 Using AWS Direct Connect and VPN for Amazon WorkSpaces connectivity

Application Storage Access

The two most commonly used AWS storage services in hybrid application architectures are Amazon Simple Storage Service (Amazon S3) and Amazon Elastic File System (Amazon EFS). In this section, we review how these storage services can be accessed over AWS Direct Connect or a VPN connection.

Amazon Simple Storage Service (Amazon S3)

Accessing Amazon S3 from on-premises networks is one of the most common hybrid IT use cases. Whether you have applications that use Amazon S3 as their primary storage layer, or you want to archive data to Amazon S3, low-latency and high-bandwidth access to the service is desirable.

Amazon S3 endpoints sit in the Amazon public IP space. You can easily access Amazon S3 endpoints using an AWS Direct Connect public VIF. If Amazon S3 is the only AWS Cloud service for which you want to use the public VIF, you can configure your on-premises router to route only to the Amazon S3 IP range and ignore all of the other routes advertised by AWS over the public VIF. You can determine the exact IP range of Amazon S3 in each AWS Region by programmatically calling the ec2 describe-prefix-lists Application Programming Interface (API). This API call describes the available AWS Cloud services in a prefix list format, which includes the prefix list name and prefix list ID of the service and the IP address range for the service.
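Extracting the Amazon S3 prefix list from a DescribePrefixLists response can be sketched as follows. The parser works on the response dictionary returned by boto3's describe_prefix_lists, where the Amazon S3 entry is named com.amazonaws.region.s3; the live lookup needs boto3 and credentials, and any sample values you test with (prefix list IDs and CIDRs) should be treated as illustrative, not real Amazon S3 ranges.

```python
def s3_prefix_list(response, region):
    """Return (prefix list ID, sorted CIDRs) for Amazon S3 from a
    DescribePrefixLists response dictionary."""
    wanted = f"com.amazonaws.{region}.s3"
    for pl in response.get("PrefixLists", []):
        if pl.get("PrefixListName") == wanted:
            return pl["PrefixListId"], sorted(pl.get("Cidrs", []))
    raise LookupError(f"no prefix list named {wanted}")


def fetch_s3_prefix_list(region):
    """Live lookup; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the parser above runs without boto3

    ec2 = boto3.client("ec2", region_name=region)
    return s3_prefix_list(ec2.describe_prefix_lists(), region)
```

The resulting CIDRs are what you would place in the on-premises prefix filter so that only Amazon S3 routes learned over the public VIF are accepted.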

Another way to get access to this same data is via the ip-ranges.json document located in the AWS documentation under the AWS General Reference, AWS IP Address Ranges section. The Amazon S3 IP range can be located by parsing the JSON file (manually or programmatically) and looking for all entries where the value of service is S3. The following is an example of an Amazon S3 entry:

{"ip_prefix": "52.92.16.0/20","region": "us-east-1","service": "S3"}
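Parsing ip-ranges.json programmatically might look like the sketch below. It assumes the published layout as we understand it: a top-level prefixes list of ip_prefix/region/service entries and an ipv6_prefixes list keyed by ipv6_prefix, downloadable from https://ip-ranges.amazonaws.com/ip-ranges.json. The demonstration uses only the example entry shown above rather than downloading the file.

```python
import json
import urllib.request

IP_RANGES_URL = "https://ip-ranges.amazonaws.com/ip-ranges.json"


def load_ip_ranges(url=IP_RANGES_URL, timeout=10):
    """Download and decode the published ip-ranges.json document."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def s3_ranges(doc, region=None):
    """Return (IPv4 prefixes, IPv6 prefixes) whose service is S3,
    optionally limited to one Region."""
    def wanted(entry):
        return entry.get("service") == "S3" and (region is None or entry.get("region") == region)

    v4 = sorted({e["ip_prefix"] for e in doc.get("prefixes", []) if wanted(e)})
    v6 = sorted({e["ipv6_prefix"] for e in doc.get("ipv6_prefixes", []) if wanted(e)})
    return v4, v6


if __name__ == "__main__":
    # The entry below is the example shown in the text; a real run would use load_ip_ranges().
    doc = {"prefixes": [{"ip_prefix": "52.92.16.0/20", "region": "us-east-1", "service": "S3"}],
           "ipv6_prefixes": []}
    print(s3_ranges(doc, "us-east-1"))
```

Amazon S3 prefixes also appear under the broader AMAZON service value, so filtering on service equal to S3 avoids pulling in every Amazon range.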

Note that as AWS continues to expand our services, the advertised IP ranges will change as AWS adds public ranges to services such as Amazon S3. These changes are automatically propagated using BGP route advertisements over the public VIF. If you are filtering routes or configuring static routes based on the Amazon S3 IP range as described, however, you will want to keep track of these changes. You can be notified when that happens by subscribing to an Amazon Simple Notification Service (Amazon SNS) topic that we make available for this purpose. The Amazon Resource Name (ARN) for this topic is arn:aws:sns:us-east-1:806199016981:AmazonIpSpaceChanged. A notification is generated any time the ip-ranges.json document changes. Upon receiving a change notification, you will have to check whether the Amazon S3 range has changed. This check can be automated with an AWS Lambda function triggered by the Amazon SNS topic.
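The SNS-triggered AWS Lambda check can be sketched as follows. This is a minimal sketch, assuming the notification message is a JSON document that includes a url field pointing to ip-ranges.json; the known parameter is a hypothetical stand-in for the Amazon S3 prefix set your routers currently use, which a real function would load from wherever you persist it. The fetch function is injectable so the logic can be exercised without network access.

```python
import json
import urllib.request


def _fetch_json(url):
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def s3_prefixes(doc, region):
    return {e["ip_prefix"] for e in doc.get("prefixes", [])
            if e.get("service") == "S3" and e.get("region") == region}


def handler(event, context, known=frozenset(), region="us-east-1", fetch=_fetch_json):
    """SNS-triggered Lambda sketch.

    `known` stands in for the S3 prefix set your routers currently use; in a real
    deployment it would be loaded from wherever you persist it (hypothetical)."""
    message = json.loads(event["Records"][0]["Sns"]["Message"])
    current = s3_prefixes(fetch(message["url"]), region)
    added = sorted(current - set(known))
    removed = sorted(set(known) - current)
    # A real function might now update router prefix filters or alert operators.
    return {"added": added, "removed": removed, "changed": bool(added or removed)}
```

Comparing sets rather than the whole file means notifications about other services' ranges produce changed set to False and can be ignored.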

Another way to access Amazon S3 from an on-premises network is to proxy all traffic through a fleet of Amazon EC2 instances that reside inside your application VPC. The idea is to use an AWS Direct Connect private VIF to send Amazon S3-bound traffic to a fleet of Amazon EC2 instances sitting behind a Network Load Balancer in the VPC. These Amazon EC2 instances run Squid and proxy all traffic to Amazon S3 using the gateway VPC endpoint for Amazon S3. This solution is depicted in Figure 12.7.

You can also leverage the transit VPC architecture, which is discussed in greater detail later in this chapter, to access Amazon S3 over AWS Direct Connect. In this method, you will have to rely on the Amazon S3 IP range to send traffic to the transit hub. The same consideration regarding IP range changes and subscribing to an Amazon SNS topic applies.

FIGURE 12.7 Accessing Amazon S3 over AWS Direct Connect private VIF

You will have to configure the end clients to use a proxy and enter the Network Load Balancer DNS name as the proxy server in the client proxy settings.

From a throughput and latency point of view, leveraging a public VIF (the first option discussed) should work best. You should be able to get the full bandwidth to Amazon S3, as determined by your AWS Direct Connect port speed. The option involving the transit VPC architecture is limited by the throughput of the transit Amazon EC2 instances, and IPsec packet processing adds latency. You also pay the compute costs of the transit Amazon EC2 instances and any licensing for the VPN software.

The option involving the use of an Amazon EC2-based proxy layer over private VIF supports horizontal scaling, so it can provide full bandwidth to Amazon S3, as determined by your AWS Direct Connect port speed. There is, however, additional latency involved with proxying traffic to intermediary hosts and the associated cost of running those hosts (Amazon EC2 compute cost plus proxy software licensing, if applicable).

Amazon Elastic File System (Amazon EFS)

Using AWS Direct Connect private VIF, you can simply attach an Amazon EFS file system to your on-premises servers, copy your data to it, and then process it in the cloud as desired, leaving your data in AWS for the long term.

After you create the file system, you can reference the mount targets by their IP addresses (which will be part of the VPC IP range), Network File System (NFS)-mount them on-premises, and start copying files. You need to add a rule to the mount target’s security group in order to allow inbound Transmission Control Protocol (TCP) and User Datagram Protocol (UDP) traffic to port 2049 (NFS) from your on-premises servers.
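Mounting a mount target by IP from an on-premises server can be sketched as building the mount command. This is a minimal sketch, assuming a Linux NFS client; the mount options are the NFSv4.1 options AWS documents as recommended for Amazon EFS, and the mount target IP, VPC CIDR, and mount point are hypothetical. The check reflects the point above that mount target addresses come from the VPC IP range.

```python
import ipaddress
import shlex

# Mount options AWS documents as recommended for Amazon EFS over NFSv4.1.
EFS_NFS_OPTIONS = "nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport"


def efs_mount_command(mount_target_ip, mount_point, vpc_cidr):
    """argv for mounting an EFS mount target by IP from an on-premises host."""
    ip = ipaddress.ip_address(mount_target_ip)
    if ip not in ipaddress.ip_network(vpc_cidr):
        raise ValueError(f"{ip} is not inside the VPC range {vpc_cidr}")
    return ["mount", "-t", "nfs", "-o", EFS_NFS_OPTIONS, f"{ip}:/", mount_point]


if __name__ == "__main__":
    cmd = efs_mount_command("172.31.16.20", "/mnt/efs", "172.31.0.0/16")  # hypothetical values
    print(shlex.join(cmd))  # run as root, e.g. via subprocess.run(cmd, check=True)
```

The mount succeeds only if the on-premises host has a route to the VPC CIDR over the private VIF and the mount target's security group allows NFS from the host's address.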

If you want to use the DNS names of the Amazon EFS mount, then you will have to set up a DNS forwarder in the VPC. This forwarder will be responsible for accepting all Amazon EFS DNS resolution requests from on-premises servers and forwarding them to the VPC DNS for actual resolution.

Hybrid Cloud Storage: AWS Storage Gateway

AWS Storage Gateway is a hybrid storage service that enables your on-premises applications to seamlessly use AWS Cloud storage. You can use the service for backup and archiving, disaster recovery, cloud bursting, multiple storage tiers, and migration. Your applications connect to the service through a gateway appliance using standard storage protocols, such as NFS and Internet Small Computer Systems Interface (iSCSI). The gateway connects to AWS storage services, such as Amazon S3, Amazon Glacier, and Amazon Elastic Block Store (Amazon EBS), providing storage for files, volumes, and virtual tapes in AWS.

In order for AWS Storage Gateway to be able to push data to AWS, it needs access to the AWS Storage Gateway service API endpoints and the Amazon S3 and Amazon CloudFront service API endpoints. These endpoints are in Amazon’s public IP space and can be accessed over the public Internet or by using AWS Direct Connect public VIF.

Application Internet Access

Applications that require Internet access use an Internet gateway in the VPC as the default gateway. For some sensitive applications, you may choose to deploy an Amazon EC2 instance-based firewall capable of advanced threat protection as the default gateway. Another option is to route all Internet-bound traffic back to your on-premises location and then send it via your on-premises Internet connection. Packet inspection of the traffic can be performed using an existing on-premises security stack. You may also choose to bring all Internet traffic on-premises if you want to source all traffic from the IPv4 address range that you own.

In order to bring all traffic back on-premises, whether it be over VPN or AWS Direct Connect, you need to advertise a default route to the VGW attached to the VPC. Making appropriate bandwidth estimations is recommended before enabling this traffic flow to ensure that Internet-bound traffic is not saturating the link, which would otherwise be used for hybrid IT traffic.
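Why a propagated default route pulls Internet-bound traffic on-premises without disturbing other flows comes down to longest-prefix matching in the VPC route table. The sketch below models a hypothetical route table after the on-premises router advertises 0.0.0.0/0 to the VGW; targets are labels, not real route table IDs.

```python
import ipaddress


def lookup(route_table, destination):
    """Longest-prefix match over a {cidr: target} route table."""
    dest = ipaddress.ip_address(destination)
    best = None
    for cidr, target in route_table.items():
        net = ipaddress.ip_network(cidr)
        if dest in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, target)
    return best[1] if best else None


# Hypothetical VPC route table after the on-premises router advertises 0.0.0.0/0.
ROUTES = {
    "172.31.0.0/16": "local",        # VPC CIDR
    "10.0.0.0/8": "vgw-propagated",  # on-premises networks
    "0.0.0.0/0": "vgw-propagated",   # default route learned from on-premises
}
```

Traffic within the VPC still matches the more specific local route, while anything destined to the Internet matches only 0.0.0.0/0 and leaves through the VGW; this assumes the route table has no competing default route to an Internet gateway.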

Access VPC Endpoints and Customer-Hosted Endpoints over AWS Direct Connect

Gateway VPC endpoints and interface VPC endpoints allow you to access AWS Cloud services without the need to send traffic via an Internet gateway or Network Address Translation (NAT) device in your VPC. Customer-hosted endpoints allow you to expose your own service behind a Network Load Balancer as an endpoint to another VPC. For more details on how these endpoints work, refer to Chapter 3, “Advanced Amazon Virtual Private Cloud (Amazon VPC).” In this section, we focus on how these endpoints can be reached over AWS Direct Connect or VPN.

Gateway VPC endpoints use the VPC route table and DNS to route traffic privately to AWS Cloud services. These mechanisms prevent access to gateway endpoints from outside the VPC, such as over AWS Direct Connect or an AWS managed VPN. Connections that arrive from VPN and AWS Direct Connect through the VGW therefore cannot natively access gateway endpoints. You can, however, build a self-managed proxy layer (as described in the Amazon S3 section of this chapter) to enable access to gateway VPC endpoints over an AWS Direct Connect private VIF or VPN.

Interface VPC endpoints and customer-hosted endpoints, both powered by AWS PrivateLink, can be accessed over AWS Direct Connect. When the interface endpoint or customer-hosted endpoint is created, the AWS Cloud service has a regional and zonal DNS name that resolves to the local IP addresses within your VPC. This IP address will be reachable over AWS Direct Connect private VIF. Accessing interface VPC endpoints or customer-hosted endpoints over AWS managed VPN or VPC peering, however, is not supported.

If you want to access interface VPC endpoints or customer-hosted endpoints over VPN, you have to terminate the VPN on an Amazon EC2 instance and apply source NAT to all incoming traffic, translating it to the IP address of the Amazon EC2 instance. This hides the original source IP and makes the traffic appear to originate from within the VPC. This method also works for accessing gateway VPC endpoints. For more details on Amazon EC2 instance-based VPN termination, refer to Chapter 4.

Encryption on AWS Direct Connect

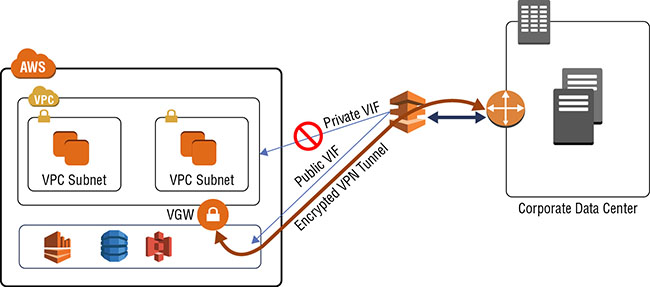

In Chapter 5, we briefly discussed how you can use IPsec site-to-site VPN over AWS Direct Connect to enable encryption. In this section, we examine the finer details of setting this up and the various options available.

If you want to encrypt all application traffic, ideally you should use TLS at the application layer. This approach is more scalable and does not impose challenges regarding high availability and throughput scalability, which inline encryption gateways impose. If your application is not able to encrypt data using TLS (or some other encryption library), or if you want to encrypt Layer 3 traffic (like Internet Control Message Protocol [ICMP]), you can set up site-to-site IPsec VPN over AWS Direct Connect.
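Application-layer TLS, the preferred option above, needs no special network design; the client only has to insist on a verified, modern TLS session. This is a minimal sketch using Python's standard ssl module. It assumes TLS 1.2 as an acceptable floor, and the optional CA bundle path is a hypothetical parameter for private certificate authorities.

```python
import socket
import ssl


def make_client_context(cafile=None):
    """Client-side TLS context: certificate and hostname verification on
    (the create_default_context defaults) and TLS 1.2 as the floor."""
    ctx = ssl.create_default_context(cafile=cafile)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def open_tls(host, port=443, timeout=5.0, cafile=None):
    """Open a verified TLS connection; the traffic is encrypted end to end
    regardless of whether it crosses AWS Direct Connect, VPN, or the Internet."""
    ctx = make_client_context(cafile)
    raw = socket.create_connection((host, port), timeout=timeout)
    try:
        return ctx.wrap_socket(raw, server_hostname=host)
    except Exception:
        raw.close()  # do not leak the TCP socket if the handshake fails
        raise
```

Because each connection carries its own encryption, throughput scales with the number of application hosts rather than with a pair of inline encryption gateways.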

The easiest way to set up IPsec VPN over AWS Direct Connect is to terminate VPN on the AWS-managed VPN endpoint, VGW. As discussed in Chapter 4, when setting up a VPN connection, VGW provides two public IP-based endpoints for terminating VPN. These public IPs can be accessed over an AWS Direct Connect public VIF. If required, you can configure your on-premises router to filter routes received from the AWS router over public VIF and allow only routing to the VPN endpoints. This will block the use of AWS Direct Connect for any other traffic destined to AWS public IP space. Figure 12.8 depicts this option.
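The route filtering described above can be modeled as keeping one host route per VPN endpoint and discarding everything else learned over the public VIF. This is a simplified sketch of the decision only; on a real router it would be a prefix list or route map on the BGP session. All addresses are documentation placeholders standing in for the VGW tunnel endpoints and the prefixes AWS advertises.

```python
import ipaddress


def endpoint_host_routes(received_prefixes, endpoint_ips):
    """From the prefixes AWS advertises over the public VIF, keep only what is
    needed to reach the VPN endpoints: one host route per endpoint, each mapped
    to the most specific received prefix that covers it. Everything else is dropped."""
    nets = [ipaddress.ip_network(p) for p in received_prefixes]
    routes = {}
    for ip in endpoint_ips:
        addr = ipaddress.ip_address(ip)
        covering = [n for n in nets if addr in n]
        if not covering:
            raise LookupError(f"{ip} is not covered by any prefix learned over the public VIF")
        most_specific = max(covering, key=lambda n: n.prefixlen)
        routes[f"{addr}/{addr.max_prefixlen}"] = str(most_specific)
    return routes
```

The same filter applies if the VPN instead terminates on an Amazon EC2 instance's Elastic IP address reached over the public VIF.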

FIGURE 12.8 VPN to VGW over AWS Direct Connect public VIF

Another way to encrypt traffic over AWS Direct Connect is to set up a site-to-site VPN to an Amazon EC2 instance inside a VPC. The details for setting up a site-to-site IPsec VPN to an Amazon EC2 instance from an on-premises environment are explained in Chapter 4. When using AWS Direct Connect, instead of the Amazon EC2 instance's Elastic IP address, you can use its private IP for VPN termination. You set up a private VIF to the VPC that contains the Amazon EC2 instance and use this private VIF to reach the instance's private IP. Figure 12.9 depicts this option.

FIGURE 12.9 VPN to Amazon EC2 instance over AWS Direct Connect private VIF

Note that the VPC IP range will be advertised over the private VIF. You want to use this routing domain only to reach the Amazon EC2 instance responsible for VPN termination; all other traffic destined to resources in the VPC should go through the VPN tunnel interface. You can use any suitable router feature or technique to achieve this. One way is Virtual Routing and Forwarding (VRF), where the BGP peering with the VGW over the private VIF is handled in a separate VRF on the router, and the VPN to the Amazon EC2 instance is established through that VRF. Outside the VRF, the next hop for the VPC IP range is the tunnel interface. An example is shown in Figure 12.10, where the customer router has a direct connection to AWS. It uses a private VIF to connect to a VPC with the Classless Inter-Domain Routing (CIDR) block 172.31.0.0/16. Native access to this VPC is restricted to the VRF. A VPN tunnel is set up to an Amazon EC2 instance in the VPC, and the tunnel interface on the customer router becomes the next hop for the VPC CIDR in the router's main routing table.
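The separation in Figure 12.10 can be modeled as two routing tables holding the same VPC prefix with different next hops. This is a toy sketch of the routing decision, not router configuration; the table names, next-hop labels, and the EC2 VPN instance address are hypothetical, and only the 172.31.0.0/16 CIDR comes from the example above.

```python
import ipaddress

# Hypothetical router state modeled on Figure 12.10.
ROUTING_TABLES = {
    # VRF holding the BGP session with the VGW over the private VIF.
    "vrf-dx": {"172.31.0.0/16": "private-vif"},
    # Main table: the VPC is reached through the IPsec tunnel to the EC2 instance.
    "main": {"172.31.0.0/16": "tunnel1", "0.0.0.0/0": "internet"},
}


def next_hop(table, destination):
    """Longest-prefix match within a single routing table (VRF)."""
    dest = ipaddress.ip_address(destination)
    candidates = [(ipaddress.ip_network(p), hop) for p, hop in ROUTING_TABLES[table].items()]
    matches = [(net, hop) for net, hop in candidates if dest in net]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


if __name__ == "__main__":
    vpn_instance_ip = "172.31.10.10"  # EC2 VPN endpoint, reached only inside the VRF
    print(next_hop("vrf-dx", vpn_instance_ip))  # IKE/ESP packets use the private VIF
    print(next_hop("main", "172.31.20.5"))      # application traffic uses the tunnel
```

The same destination resolves differently depending on which table the lookup happens in, which is exactly what keeps application traffic inside the encrypted tunnel.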

FIGURE 12.10 Isolating routing domains using VRF

Another way to set this up to avoid receiving the same route from VGW and the Amazon EC2 instance is by assigning the Amazon EC2 instance an Elastic IP address and using public VIF to access that Elastic IP address. As mentioned before, you can configure your on-premises router to filter routes received from an AWS router over public VIF and allow only routing to this Elastic IP address. This will block the usage of AWS Direct Connect for any other traffic destined to AWS public IP space. This setup is depicted in Figure 12.11.

FIGURE 12.11 VPN to Amazon EC2 over AWS Direct Connect public VIF

For high availability, you should have two Amazon EC2 instances in different Availability Zones for VPN termination.

You should also implement this setup with Internet as a backup in case the AWS Direct Connect connectivity is interrupted. For the first method where we created tunnels to VGW endpoints over public VIF, your router will have to use the Internet to access the same endpoints in the event of a failure. The IPsec tunnels and routing will have to be reestablished upon failure, resulting in minor downtime. If you want to have an active-active setup, you will have to set up a secondary active VPN connection with a different source IP at your end—VGW cannot create two VPN connections to the same customer gateway IP at the same time.

In the case of terminating VPN over private VIF to an Amazon EC2 instance using its private IP, Internet failover cannot be achieved to the same IP because RFC 1918 IP ranges are publicly non-routable. You will have to assign an Elastic IP address to the Amazon EC2 instance and set up a VPN tunnel to it using the Elastic IP address over the Internet. You can configure this through the BGP AS path prepend and local preference parameters, making the VPN tunnel over the private VIF the primary VPN tunnel and the Internet-based VPN tunnel the backup.
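The primary/backup behavior can be illustrated with a simplified BGP decision process that compares only LOCAL_PREF (higher wins) and then AS path length (shorter wins). This is a sketch under that simplification; the private ASNs 65000 (customer) and 65010 (EC2 VPN instance) and the path labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BgpPath:
    via: str
    local_pref: int
    as_path: tuple


def best_path(paths):
    """Simplified BGP decision: highest LOCAL_PREF, then shortest AS_PATH."""
    return min(paths, key=lambda p: (-p.local_pref, len(p.as_path)))


# Customer router's view of the VPC CIDR: LOCAL_PREF steers outbound traffic.
to_vpc = [
    BgpPath("tunnel-over-private-vif", local_pref=200, as_path=(65010,)),
    BgpPath("tunnel-over-internet", local_pref=100, as_path=(65010,)),
]

# EC2 instance's view of an on-premises prefix: the customer (ASN 65000)
# prepends on the Internet tunnel so return traffic also prefers the private VIF.
to_onprem = [
    BgpPath("tunnel-over-private-vif", local_pref=100, as_path=(65000,)),
    BgpPath("tunnel-over-internet", local_pref=100, as_path=(65000, 65000, 65000)),
]
```

LOCAL_PREF only influences the router that sets it, so it controls the customer's outbound path; AS path prepending is what influences the AWS side's choice of return path. If the private VIF tunnel goes down, its paths disappear and the Internet tunnel becomes best in both directions.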

Use of Transitive Routing in Hybrid IT

Chapter 3 and Chapter 4 discussed what transitive routing is and some of the limitations around establishing it natively on AWS. Those chapters also discussed how you can leverage Amazon EC2 instances to enable transitive routing by setting up VPN tunnels. Further, in Chapter 5, you saw how transit VPC architecture can be connected to AWS Direct Connect. In this section, we dive deeper into the transit VPC architecture.

In the context of hybrid connectivity, a transit VPC architecture allows on-premises environments to access AWS resources while transiting all traffic through a pair of Amazon EC2 instances. This is depicted in the architecture shown in Figure 12.12.

FIGURE 12.12 Transit VPC architecture

In this architecture, we have the transit hub VPC, which consists of Amazon EC2 instances with VPN termination software loaded on them. These Amazon EC2 instances are in different Availability Zones for high availability and act as a transit point for all remote networks. Spoke VPCs use a VGW as their VPN termination endpoint, with pairs of VPN tunnels from each spoke VPC to the hub Amazon EC2 instances. Remote networks connect to the transit VPC using redundant, dynamically routed VPN connections between your on-premises VPN termination equipment and the Amazon EC2 instances in the transit hub. This design supports the BGP dynamic routing protocol, which you can use to route traffic automatically around potential network failures and to propagate network routes to remote networks.

All communication from the Amazon EC2 instances, including the VPN connections between corporate data centers or other provider networks and the transit VPC, uses the transit VPC Internet gateway and the instances’ Elastic IP addresses.

AWS Direct Connect can most easily be used with the transit VPC solution by using a detached VGW. This is a VGW that has been created but not attached to a specific VPC. Within the VGW, AWS VPN CloudHub provides the ability to receive routes via BGP from both VPN and AWS Direct Connect and then re-advertise/reflect them back to each other. This enables the VGW to form the hub for your connectivity.

Transit VPC Architecture Considerations

Using VPC peering vs. transit VPC for spoke-VPC-to-spoke-VPC communication. For some inter-spoke VPC communication, VPC peering can be used instead of sending traffic via the Amazon EC2 instances in the transit hub VPC. This is a common scenario when you have two or more security zones: one trusted zone and one or more untrusted zones. You would use the transit VPC for communication between VPCs in trusted and untrusted zones. For VPCs that belong to the same trusted zone, you can bypass the transit VPC and use VPC peering directly. Direct VPC peering provides better throughput and availability and also reduces the traffic load on the transit VPC Amazon EC2 instances.

To make this work, set up a VPC peering connection to the target VPC and add an entry in the spoke VPC subnet route table that points to the peering connection as the next hop for reaching the remote VPC. Note that both spoke VPCs remain connected to the transit VPC. No architectural change is required apart from creating the VPC peering connection and adding a new static entry in the route tables. Assuming that the routes from the transit VPC are propagated from the VGW into the spoke VPC subnet route tables, there will be a propagated route to the target VPC with the VGW as the next hop, in addition to the static entry pointing to the peering connection that you just created. For routes with the same destination prefix, the static route takes priority. If you remove the static route, all traffic falls back to the transit VPC path. This scenario is depicted in Figure 12.13.

FIGURE 12.13 VPC peering vs. transit VPC for spoke-to-spoke communication
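The route choice in the spoke VPC can be sketched as a two-step rule: longest prefix match first, then a static route over a propagated route when the prefixes are identical. This Python model is a simplification for illustration only; the CIDRs and the target labels `vgw-spoke1` and `pcx-spoke1-spoke2` are hypothetical placeholders, not real resource IDs.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class VpcRoute:
    destination: str  # CIDR block
    target: str  # e.g. "local", a VGW, or a peering connection
    propagated: bool = False


def select_route(routes, destination_ip):
    """Longest prefix wins; for equal prefixes, a static route beats a propagated one."""
    addr = ipaddress.ip_address(destination_ip)
    candidates = [r for r in routes if addr in ipaddress.ip_network(r.destination)]
    if not candidates:
        return None
    return max(
        candidates,
        key=lambda r: (ipaddress.ip_network(r.destination).prefixlen, not r.propagated),
    )


# Hypothetical spoke 1 route table: spoke 2 is 10.2.0.0/16.
SPOKE_ROUTES = [
    VpcRoute("10.1.0.0/16", "local"),
    VpcRoute("10.2.0.0/16", "vgw-spoke1", propagated=True),  # learned via the transit VPC
    VpcRoute("10.2.0.0/16", "pcx-spoke1-spoke2"),  # static route to the peering connection
]

with_peering = select_route(SPOKE_ROUTES, "10.2.3.4").target
without_peering = select_route(
    [r for r in SPOKE_ROUTES if r.target != "pcx-spoke1-spoke2"], "10.2.3.4"
).target
```

Removing the static entry (the `without_peering` case) leaves only the propagated route, which is the fallback to the transit VPC path described above.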

Using AWS Direct Connect gateway vs. transit VPC infrastructure for on-premises-to-spoke-VPC communication. Similar to the rationale for spoke-VPC-to-spoke-VPC communication, if you want to access a trusted VPC from an on-premises environment, you can use AWS Direct Connect to send traffic directly to that VPC instead of through the transit VPC. In this scenario, your on-premises router receives two routes for the spoke VPC CIDR: one from the detached VGW and the other from the spoke VPC VGW. The route advertised by the spoke VPC VGW has a shorter AS path and is therefore preferred. This scenario is depicted in Figure 12.14.

Using VGW vs. Amazon EC2 instances over VPC peering for connecting spoke VPCs to the hub. In our architecture, each spoke VPC uses VGW capabilities for routing and failover to maintain highly available network connections to the transit VPC instances. VPC peering is not used to connect to the hub because VGW endpoints are not accessible over VPC peering. You can instead deploy Amazon EC2 instances in the spoke VPC for VPN termination. In that architecture, you can use VPC peering to carry the VPN connection between the Amazon EC2 instances in the spoke and the hub. This is not the recommended approach, however, because you have to maintain and manage additional Amazon EC2 VPN instances in every spoke VPC, which is potentially more expensive, less highly available, and more difficult to manage and operate. One benefit of this approach is higher hub-to-spoke VPN bandwidth than a VGW can support. This scenario is depicted in Figure 12.15.

FIGURE 12.14 Transit VPC vs. AWS Direct Connect Gateway for hybrid traffic

FIGURE 12.15 VGW vs. Amazon EC2 instances over VPC peering for connecting spoke VPCs to the hub

Using detached VGW vs. VPN from an on-premises VPN device to Amazon EC2 instances in the transit VPC. In our architecture, we use a detached VGW as a bridging point between the AWS Direct Connect circuit and the transit VPC infrastructure. The AWS Direct Connect private VIF is set up to this detached VGW. Alternatively, you can set up a private VIF to a VGW attached to the transit VPC and set up a VPN from an on-premises VPN termination device to the Amazon EC2 instances in the transit VPC. This setup is similar to setting up VPN over AWS Direct Connect, as discussed in the previous section. We did not choose that option by default because you have to manage the VPN on-premises, which adds overhead. One reason to choose it would be to achieve higher VPN throughput, which you can get by scaling the transit Amazon EC2 instances (assuming that your on-premises VPN infrastructure can support more throughput than a VGW VPN connection). For more information on scaling the VPN throughput of Amazon EC2 instance-based VPN termination, refer to Chapter 4. This option is more viable if you have a 10 Gbps AWS Direct Connect connection. This scenario is depicted in Figure 12.16.

FIGURE 12.16 Detached VGW vs. on-premises initiated VPN

Using BGP routing vs. static routes between hub and spokes. In our architecture, we use BGP to exchange routes. Alternatively, you can set up static routes between the spoke VPCs and the transit Amazon EC2 instances. While this is a valid approach, BGP is recommended because it makes recovery from failure and traffic rerouting seamless and automatic.

Transit VPC Scenarios

Transit VPC design can be leveraged in several scenarios:

- It can be used as a mechanism to reduce the number of tunnels required to establish VPN connectivity to a large number of VPCs. In this scenario, the transit point (that is, a pair of Amazon EC2 instances in transit VPC) becomes the middleman between the on-premises VPN termination endpoint and the VPCs. You only need to set up a pair of VPN tunnels to the transit point once from your on-premises device. As new VPCs are created, VPN tunnels can be set up between the transit point and the VGW of the VPCs without requiring any change in configuration on the on-premises devices. This is a desirable pattern because VPN tunnel creation in AWS can be automated, but VPN configuration changes on on-premises devices require manual effort with a potentially long approval process.

- It can be used to build a security layer for all hybrid traffic. Because the transit point has visibility into all hybrid traffic, you can also build an advanced threat protection layer at this point to inspect all inbound and outbound traffic. This is useful if you have untrusted remote networks connecting into your transit VPC hub.

- It can also be useful when you want to connect an on-premises/remote network to a VPC when both have the same, overlapping IP address range. The transit point becomes the NAT layer, translating addresses to a different range to enable communication between the two networks.

- It also allows remote access to VPC endpoints and customer-hosted endpoints. Similar to the previous scenario, the transit point will be responsible for performing a source NAT for all incoming packets. Note that only endpoints hosted in the hub VPC will be accessible to remote networks. Spoke VPC endpoints will not be accessible unless you are using Amazon EC2 instances for VPN termination in the spoke VPCs as well.

- It can also be used to build a highly available client-to-site VPN infrastructure on AWS. The transit Amazon EC2 instances act as VPN termination devices for remote clients. Remote VPN clients usually have a load balancing mechanism built in, which results in them connecting and establishing VPN tunnels to one of the Amazon EC2 VPN instances. Once connected, they can access any of the spoke VPCs and on-premises environments connected to the transit hub.

- It can span globally across multiple AWS Regions and remote customer sites, allowing you to create your own global VPN infrastructure leveraging AWS. Both the VGW in spoke VPCs and the Amazon EC2 instances in the hub VPC leverage public IP addresses for VPN termination, so they can connect even if they are in different AWS Regions. In addition, as long as a remote customer network has Internet connectivity or an AWS Direct Connect connection, it can reach the hub and join the VPN infrastructure irrespective of its location in the world. An example of this architecture is shown in Figure 12.17.

- Based on your traffic access patterns, you can deploy one transit hub per AWS Region and connect those transit hubs in a mesh using IPsec or Generic Routing Encapsulation (GRE) VPNs over cross-region VPC peering. This is desirable if there is a lot of communication between spoke networks within the same region. This scenario is depicted in Figure 12.18.
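The tunnel-reduction benefit of the first scenario is simple arithmetic. This Python sketch counts only the tunnels configured on the on-premises device. It assumes two tunnels per AWS VPN connection when the device connects to each VPC's VGW directly, and one tunnel to each of a pair of transit instances (the "pair of VPN tunnels" described above); both function names are illustrative.

```python
def on_prem_tunnels_without_transit(num_vpcs, tunnels_per_vpn_connection=2):
    """Tunnels on the on-premises device with one AWS VPN connection per VPC VGW."""
    return num_vpcs * tunnels_per_vpn_connection


def on_prem_tunnels_with_transit(num_transit_instances=2):
    """Tunnels on the on-premises device with a transit VPC.

    The count depends only on the number of transit instances, not on the
    number of spoke VPCs; new spokes are added on the AWS side.
    """
    return num_transit_instances


for vpcs in (10, 50, 100):
    print(vpcs, on_prem_tunnels_without_transit(vpcs), on_prem_tunnels_with_transit())
```

The second function ignores its spoke count entirely, which is the point: adding a VPC changes nothing on the on-premises device, so no on-premises change approval is needed.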

FIGURE 12.17 Global transit VPC

FIGURE 12.18 Global transit VPC with regional transit hub
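For the overlapping-address scenario, one common NAT technique is a 1:1 network mapping: each host keeps its offset within the prefix, and only the network portion changes. The chapter does not prescribe a specific NAT method, so treat this Python sketch as one illustrative option. The shared range 10.0.0.0/16 and the translated range 172.20.0.0/16 are hypothetical.

```python
import ipaddress


def translate(address, from_net, to_net):
    """1:1 static NAT: map an address in from_net to the same host offset in to_net."""
    src = ipaddress.ip_network(from_net)
    dst = ipaddress.ip_network(to_net)
    if src.prefixlen != dst.prefixlen:
        raise ValueError("a 1:1 mapping requires equal prefix lengths")
    addr = ipaddress.ip_address(address)
    if addr not in src:
        raise ValueError(f"{address} is not in {from_net}")
    offset = int(addr) - int(src.network_address)
    return str(dst.network_address + offset)


# Hypothetical: on-premises and the VPC both use 10.0.0.0/16, so the transit
# point presents on-premises hosts to the VPC as 172.20.0.0/16.
seen_by_vpc = translate("10.0.5.9", "10.0.0.0/16", "172.20.0.0/16")
# Return traffic is translated back on the way out of the transit point.
original = translate(seen_by_vpc, "172.20.0.0/16", "10.0.0.0/16")
```

In practice, the transit point also needs a second mapping in the other direction (presenting VPC hosts to on-premises under another non-overlapping range), because both sides still see the other's real range as local.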

Summary

In this chapter, we looked at how AWS Direct Connect and VPN can be leveraged to build hybrid IT architectures. You can leverage these technologies to run applications in hybrid mode.

If you are in the process of migrating a Three-Tier web application to AWS, you can distribute the web layer across AWS and your on-premises data center using an Application Load Balancer or DNS load balancing. You can also have the web layer only in AWS while the application and database layers are on-premises. You can use an AWS Direct Connect private VIF to connect the web layer in AWS to the application layer on-premises. You can also use AWS Direct Connect or VPN to make your on-premises Active Directory and DNS servers accessible to the resources inside your VPC.

For applications that require QoS, you have the option of using a separate AWS Direct Connect connection for isolating this traffic from other application traffic. If you are using Amazon EC2 instance-based VPN over the Internet or AWS Direct Connect, you can honor QoS markings on the VPN termination endpoints. Note that AWS endpoints like VGW do not honor QoS markings but will keep them intact.

You can leverage AWS Direct Connect or VPN to enable hybrid operations using AWS CodeDeploy, AWS OpsWorks, and Amazon EC2 Run Command. The endpoints for these services can be accessed using an AWS Direct Connect public VIF.

VPN or AWS Direct Connect connectivity is a crucial part of Amazon WorkSpaces architectures, enabling access to on-premises Active Directory and several other internal servers. End users can also use their Amazon WorkSpaces clients to connect to their WorkSpace using an AWS Direct Connect public VIF.

Amazon S3 is one of the most widely used AWS storage services across various applications, including hybrid IT applications. Applications that reside on-premises can access Amazon S3 over an AWS Direct Connect public VIF. Because AWS advertises its entire public IP range over an AWS Direct Connect public VIF by default, you can use the published Amazon S3 IP ranges to filter routes on your routers so that only traffic to Amazon S3 uses the public VIF. You can also set up a proxy layer consisting of a fleet of Amazon EC2 instances in your VPC and use it as an intermediary point to access Amazon S3. All traffic is proxied to this layer, which then uses a VPC endpoint to send traffic to Amazon S3. You can set this up in transparent or non-transparent mode.
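The Amazon S3 ranges are published by AWS in the `ip-ranges.json` file (https://ip-ranges.amazonaws.com/ip-ranges.json), whose `prefixes` entries carry `ip_prefix`, `region`, and `service` fields. This Python sketch takes that document as an already-parsed dictionary (for example, from `json.load`), extracts the `S3` prefixes, and applies them as a route filter. The sample data uses RFC 5737 documentation prefixes as placeholders, not real AWS ranges, and the filter accepts a received route if it equals or falls inside an allowed prefix; whether exact-match or more-specific matching fits your routers depends on how the prefixes are actually advertised.

```python
import ipaddress

# Hypothetical excerpt in the shape of ip-ranges.json; the prefixes are placeholders.
SAMPLE_IP_RANGES = {
    "syncToken": "0",
    "createDate": "2024-01-01-00-00-00",
    "prefixes": [
        {"ip_prefix": "192.0.2.0/24", "region": "us-east-1", "service": "S3"},
        {"ip_prefix": "198.51.100.0/24", "region": "us-east-1", "service": "EC2"},
        {"ip_prefix": "203.0.113.0/24", "region": "eu-west-1", "service": "S3"},
    ],
}


def s3_prefixes(ip_ranges, region=None):
    """Return the sorted, de-duplicated IPv4 prefixes tagged with service "S3"."""
    nets = {
        ipaddress.ip_network(p["ip_prefix"])
        for p in ip_ranges["prefixes"]
        if p["service"] == "S3" and (region is None or p["region"] == region)
    }
    return sorted(nets)


def filter_public_vif_routes(received, allowed):
    """Keep received routes that equal or fall inside one of the allowed prefixes."""
    allowed_nets = [ipaddress.ip_network(str(a)) for a in allowed]
    accepted = []
    for prefix in received:
        net = ipaddress.ip_network(prefix)
        if any(net.subnet_of(a) for a in allowed_nets):
            accepted.append(str(net))
    return accepted


allowed = s3_prefixes(SAMPLE_IP_RANGES, region="us-east-1")
accepted = filter_public_vif_routes(
    ["192.0.2.0/24", "192.0.2.128/25", "198.51.100.0/24"], allowed
)
```

Filtering on `service == "S3"` matters because the same addresses also appear under the broader `AMAZON` service entry; filtering on `AMAZON` would admit almost everything AWS advertises.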

Another commonly accessed storage service in a hybrid IT setup is Amazon EFS. You can access Amazon EFS by referencing the Amazon EFS mount targets by their IP addresses. These IP addresses belong to the VPC IP range and so can be reached over AWS Direct Connect private VIF.

You can also use AWS Direct Connect to access interface VPC endpoints and customer-hosted endpoints, both powered by AWS PrivateLink. AWS VPN connections do not support access to these endpoints, but you can use Amazon EC2 instance-based VPN termination along with source NAT to enable this access. Gateway VPC endpoints are not natively accessible over AWS Direct Connect or VPN. You can, however, use a fleet of proxy instances or Amazon EC2 instance-based VPN termination with source NAT, similar to the Amazon S3 setup discussed earlier.

We looked at how you can achieve encryption over AWS Direct Connect by setting up an IPsec VPN overlay. You can terminate a VPN connection on the VGW over an AWS Direct Connect public VIF. You can also set up a tunnel to an Amazon EC2 instance inside the VPC, either over an AWS Direct Connect private VIF using its private IP or over a public VIF using its Elastic IP address. You should also consider an Internet-based VPN connection as a backup in case there is a failure on your AWS Direct Connect links.

We discussed how transitive routing challenges can be solved using the transit VPC architecture. In this architecture, the transit hub VPC contains a pair of Amazon EC2 instances with VPN termination software installed. These Amazon EC2 instances act as a transit point for all remote networks. All spoke VPCs connect to the pair of Amazon EC2 instances using IPsec VPN, with a VGW as the VPN termination endpoint at their end. AWS Direct Connect is integrated with this architecture using a detached VGW, which bridges the AWS Direct Connect routing domain with the VPN infrastructure. When creating the transit VPC architecture, you have several architecture choices to make. In our example, we decided to use a VGW in the spoke VPCs for VPN termination instead of a pair of Amazon EC2 instances. This allows us to leverage the inherent active-standby high availability of the VGW and to reduce maintenance overhead. For similar reasons, we used a detached VGW instead of setting up a VPN from on-premises directly to the Amazon EC2 instances over an AWS Direct Connect private VIF. We reviewed the considerations for choosing one option over the other.

We also explored how we can leverage VPC peering for some use cases, thereby bypassing the transit hub. Similarly, we can directly access VPCs from on-premises using AWS Direct Connect gateway instead of using the transit hub. The transit VPC architecture has several use cases, including reducing the number of VPN tunnels required to connect your on-premises environment to large numbers of VPCs and implementing a security layer at the transit point. Transit VPC can also be useful when you want to connect an on-premises/remote network to a VPC when both have the same, overlapping IP address range. Transit VPC also allows remote access to VPC endpoints and customer-hosted endpoints. Lastly, transit VPC can be used to build a global VPN infrastructure.

Exam Essentials

Understand how AWS Direct Connect and VPN can be leveraged to enable several hybrid applications. You can leverage AWS Direct Connect and VPN to enable access to VPCs and other AWS resources from on-premises environments. This connectivity enablement is crucial for many hybrid applications, like the Three-Tier web application, Active Directory, DNS, and hybrid operations using AWS CodeDeploy, AWS OpsWorks, and Amazon EC2 Run Command. Each of these applications has certain requirements from a connectivity standpoint, and it is important to understand how these requirements can be fulfilled using AWS Direct Connect and VPN.

Understand how storage services like Amazon S3 and Amazon EFS can be accessed over AWS Direct Connect and VPN. Applications that are on-premises can use AWS Cloud services for their storage and archiving. It is important to understand how Amazon S3 and Amazon EFS can be accessed using AWS Direct Connect and VPN. Applications can access Amazon S3 using an AWS Direct Connect public VIF or via a proxy layer in a VPC over an AWS Direct Connect private VIF. Amazon EFS shares can be mounted using their private IPs over the private VIF.

Understand how VPC gateway endpoints and AWS PrivateLink endpoints can be accessed over AWS Direct Connect and VPN. Both interface endpoints and customer-hosted endpoints can be accessed over AWS Direct Connect private VIF. VPN does not allow access to these endpoints, but you can use Amazon EC2-based VPN termination along with source NAT to allow this connectivity. VPC gateway endpoints cannot be accessed natively over AWS Direct Connect. You can, however, build a proxy layer in the VPC or use Amazon EC2 instance-based VPN termination with source NAT to access the endpoint.

Understand how encryption can be achieved over AWS Direct Connect. You can set up IPsec VPN connections over AWS Direct Connect to achieve encryption. You can terminate VPN connections on the VGW over an AWS Direct Connect public VIF. You can also terminate them on an Amazon EC2 instance inside the VPC, reaching the instance over an AWS Direct Connect private VIF using its private IP or over a public VIF using its Elastic IP address. Internet backup to this infrastructure is an option for added redundancy.

Understand how transit VPC architectures work and the rationale behind various design decisions. Transit VPC architectures leverage a pair of Amazon EC2 instances to act as the transit point for remote networks. This is implemented in a hub-and-spoke model: The Amazon EC2 instances sit in the hub VPC, and the spoke VPCs use VGW at their end to set up IPsec VPN tunnels to the hub. Remote, on-premises networks can leverage AWS Direct Connect or IPsec VPN to connect to the hub.

Understand the various use cases for implementing transit VPC architectures. Transit VPC architectures can be leveraged to fulfill several requirements, such as reducing the number of VPN tunnels required to connect an on-premises environment to a large number of VPCs, implementing a security layer at the transit point, overcoming overlapping IP address ranges between VPC and on-premises networks, establishing remote access to VPC endpoints and customer-hosted endpoints, and building a global VPN infrastructure.

Resources to Review

- Global transit VPC solution brief:

- https://aws.amazon.com/answers/networking/aws-global-transit-network/

- Transit VPC implementation guide:

- http://docs.aws.amazon.com/solutions/latest/cisco-based-transit-vpc/welcome.html

- AWS Answers – Networking Section:

- https://aws.amazon.com/answers/networking/

- AWS re:Invent 2017: Networking Many VPCs: Transit and Shared Architectures (NET404):

- https://www.youtube.com/watch?v=KGKrVO9xlqI

- AWS re:Invent 2017: Extending Data Centers to the Cloud: Connectivity Options and Co (NET301):

- https://www.youtube.com/watch?v=lN2RybC9Vbk

Exercises

The best way to understand how hybrid IT connectivity works is to set it up and use it to access various AWS Cloud services, which is the goal of the exercises in this chapter.

Review Questions

1. You have an on-premises application that requires access to Amazon Simple Storage Service (Amazon S3) storage. How do you enable this connectivity while designing for high-bandwidth access with low jitter, high availability, and high scalability?

A. Set up an AWS Direct Connect public Virtual Interface (VIF).

B. Set up public Internet access to Amazon Simple Storage Service (Amazon S3).

C. Set up an AWS Direct Connect private VIF.

D. Set up an IP Security (IPsec) Virtual Private Network (VPN) to a Virtual Private Gateway (VGW).

2. You have two Virtual Private Clouds (VPCs) set up in AWS for different projects. AWS Direct Connect has been set up for hybrid IT connectivity. Your security team requires that all traffic going to these VPCs be inspected using a Layer 7 Intrusion Prevention System (IPS)/Intrusion Detection System (IDS). How will you architect this while considering cost optimization, scalability, and high availability?

A. Set up a transit VPC architecture with a pair of Amazon Elastic Compute Cloud (Amazon EC2) instances acting as a transit point for all traffic. These transit instances will host Layer 7 IPS/IDS software.

B. Use host-based IPS/IDS inspection on the end servers.

C. Deploy an inline IPS/IDS instance in each VPC and add an entry in the route table to point to the Amazon EC2 instance as the default gateway.

D. Use AWS WAF as an inline gateway for all hybrid traffic.

3. You have set up a transit Virtual Private Cloud (VPC) architecture and want to connect the spoke VPCs to the hub VPC. What termination endpoint should you choose on the spokes, considering the least management overhead?

A. Virtual Private Gateway (VGW)

B. Amazon Elastic Compute Cloud (Amazon EC2) instance

C. VPC peering gateway

D. Internet gateway

4. You are tasked with setting up IP Security (IPsec) Virtual Private Network (VPN) connectivity between your on-premises data center and AWS. You have an application on-premises that will exchange sensitive control information with an Amazon Elastic Compute Cloud (Amazon EC2) instance in the Virtual Private Cloud (VPC). This traffic should take priority in the VPN tunnel over all other traffic. How will you design this solution, considering the least management overhead?

A. Terminate a VPN connection on an Amazon EC2 instance loaded with software supporting Quality of Service (QoS) and use Differentiated Services Code Point (DSCP) markings to give priority to the application traffic as it is sent and received over the VPN tunnel.

B. Terminate VPN on a Virtual Private Gateway (VGW) and use DSCP markings to give priority to the application traffic as it is sent and received over the VPN tunnel.

C. Terminate a VPN connection on two Amazon EC2 instances. Use one instance for sensitive control information and the other instance for the rest of the traffic.

D. Move the sensitive application to a separate VPC. Create separate VPN tunnels to these VPCs.

5. Which of the following endpoints can be accessed over AWS Direct Connect?

A. Network Address Translation (NAT) gateway

B. Internet gateway

C. Gateway Virtual Private Cloud (VPC) endpoints

D. Interface VPC endpoints

6. You have to set up an AWS Storage Gateway appliance on-premises to archive all of your data to Amazon Simple Storage Service (Amazon S3) using the file gateway mode. You have AWS Direct Connect connectivity between your data center and AWS. You have set up a private Virtual Interface (VIF) to a Virtual Private Cloud (VPC), and you want to use that for sending all traffic to AWS. How will you architect this?

A. Set up a Squid HTTP proxy on an Amazon Elastic Compute Cloud (Amazon EC2) instance in the VPC. Configure the storage gateway to use this proxy.

B. Set up a storage gateway appliance in the VPC and use that as a gateway.

C. Create an IP Security (IPsec) Virtual Private Network (VPN) tunnel between the storage gateway and the VPC over a private VIF.

D. Configure the storage gateway to use a VPC private endpoint on the VPC.

7. You have a hybrid IT application that requires access to Amazon DynamoDB. You have set up AWS Direct Connect between your data center and AWS. All data written to Amazon DynamoDB should be encrypted as it is written to the database. How will you enable connectivity from the on-premises application to Amazon DynamoDB?

A. Set up a public Virtual Interface (VIF).

B. Set up a private VIF.

C. Set up IP Security (IPsec) Virtual Private Network (VPN) over public VIF.

D. Set up IPsec VPN over private VIF.

8. You have a transit Virtual Private Cloud (VPC) set up with the hub VPC in us-east-1 and the spoke VPCs spread across multiple AWS Regions. Servers in the VPCs in Mumbai and Singapore are experiencing high latency when connecting with each other. How do you re-architect your VPCs to maintain the transit VPC architecture and reduce latency in the overall architecture?

A. Set up a local transit hub VPC in the Mumbai region. Connect the VPCs in Mumbai and Singapore to this hub. Set up an IP Security (IPsec) Virtual Private Network (VPN) over cross-region VPC peering between the two hubs.

B. Set up a local transit hub in the Singapore region. Connect the VPCs in Mumbai and Singapore to this hub VPC. Set up a Generic Routing Encapsulation (GRE) VPN over cross-region VPC peering between the two hubs.

C. Add transit Amazon Elastic Compute Cloud (Amazon EC2) instances in the us-east-1 hub VPC dedicated to the traffic coming from the Mumbai and Singapore regions.

D. Add a transit VPC hub in us-east-1. Connect the VPCs in Mumbai and Singapore to this new hub and then connect the two hubs using VPC peering.

9. You have an application in a Virtual Private Cloud (VPC) that requires access to on-premises Active Directory servers for joining the company domain. How will you enable this setup, considering low latency for domain join requests?

A. Set up a Virtual Private Network (VPN) terminating on a Virtual Private Gateway (VGW) attached to the VPC.

B. Set up an AWS Direct Connect public Virtual Interface (VIF).

C. Set up an AWS Direct Connect private VIF.

D. Set up a VPN terminating on an Amazon Elastic Compute Cloud (Amazon EC2) instance in the VPC.

10. Which of the following is a good use case for leveraging the transit Virtual Private Cloud (VPC) architecture?

A. Allow on-premises resources access to any VPC globally in AWS.

B. Allow on-premises resources access to Amazon Simple Storage Service (Amazon S3).

C. Allow on-premises resources access to AWS resources while inspecting all traffic for compliance reasons.

D. Allow on-premises resources access to other remote networks.