6

Decisions and Actions

Decision‐making processes are widespread and usually associated with actions as a means of achieving specific objectives. This could be the determination of operating points of industrial machines to maximize their outputs, the selection of the best route in the traveling salesman problem introduced in Chapter 5, or simply the decision to drink coffee before sleeping. This chapter will introduce different approaches to making decisions, whether centralized, decentralized, or distributed, which are employed to govern actions based on informative data. In particular, we will discuss three different methodologies, namely optimization, game theory, and rule‐based decision. Our focus will be on mathematical and computational methods; examples from humans or animals are presented only as pedagogical illustrations, and thus, we ought to proceed with great care to avoid extrapolating such results. Therefore, one important remark before we start: decision‐making processes are generally normative, and thus, doctrinaire at some level even when they appear to be natural or spontaneous.

6.1 Introduction

Decision is defined in [1] as the act or process of deciding or a determination arrived at after consideration. However, decisions do not exist in the void: they always exist as a mediator between data and action. Hence, a decision‐making process refers to the way that decisions are made about possible action(s) given certain data as inputs. Decisions and actions are then closely related, but they are different processes.

In this book, we assume that decisions always refer to potential actions, but that deciding and acting elements are not necessarily the same. In the following, we will define the main terminology used here to avoid misunderstanding.

This definition reaffirms that the main elements in decision‐making processes are decision‐makers, not agents. A mathematical formalism is possible if some specific conditions concerning their possible choices hold. This is the focus of decision theory [2], employed in behavioral psychology and neoclassical economics, and whose basic assumptions about human beings are frequently questioned (e.g. [3–5]). We also share those concerns, and thus, our conceptualization of decision‐making will be presented in a form different from the ones usually found in the literature. Similar to previous chapters, we will introduce fundamental concepts and tools for decision‐making, as well as their limitations. The first step is to identify the forms that decision‐making processes can take.

6.2 Forms of Decision‐Making

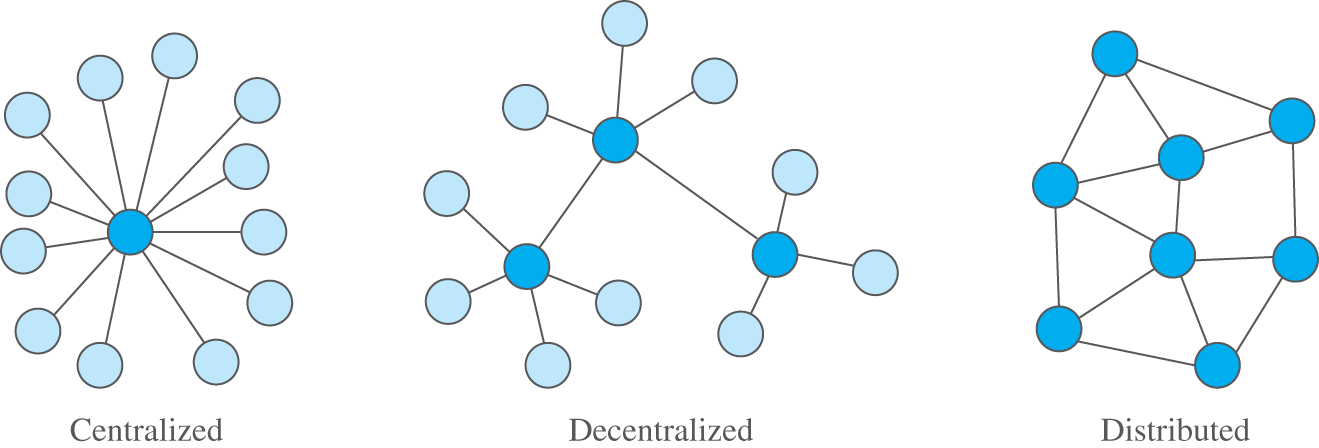

One way to classify decision‐making processes is the topological characteristic of the network composed of decision‐makers and agents connected through logical links. We can then discriminate decision‐making processes into three types: centralized, distributed, and decentralized. Figure 6.1 provides an illustration of the different types.

In centralized decision‐making, there is one element – the only decision‐maker – that has the attribute of deciding on the actions taken by all agents in a given system or process. This approach usually assumes that the agents have no autonomy and just follow the prescribed actions determined by the decision‐maker, and thus, agents are not involved in decision‐making processes. The only exception is a special case in which the decision‐maker itself is an agent – but a special agent because it determines the actions to be accomplished by itself and by all other agents. The network topology is usually the one‐to‐many topology, in which the central node is the decision‐maker and the agents are at the edge.

In distributed decision‐making, all elements are both potential decision‐makers and potential agents, i.e. all have the capability of deciding and acting. In comparison with the centralized approach, distributed decision‐making presumes that agents are relatively autonomous because they are part of decision‐making processes that define the actions to be taken. Distributed decision‐making is, in this sense, participatory and has two extreme regimes, namely: (i) unconstrained decision and action by all elements (i.e. full autonomy given the possible choices) and (ii) decision by consensus (i.e. all elements agree with the decision to be taken on individual actions). In between, there is a spectrum of possibilities, such as decision by the simple majority or by groups. Random networks with undirected links provide a usual topology for distributed decision‐making.
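The two ends of this spectrum that involve collective agreement, consensus and simple majority, can be sketched as aggregation rules over the individual choices. This is only an illustrative sketch: the function names and the example alternatives ("walk", "bus") are assumptions and do not come from the text.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence


def decide_by_consensus(votes: Sequence[Hashable]) -> Optional[Hashable]:
    """Return the alternative only if every element chose it; otherwise None."""
    if votes and all(v == votes[0] for v in votes):
        return votes[0]
    return None


def decide_by_majority(votes: Sequence[Hashable]) -> Optional[Hashable]:
    """Return the alternative chosen by more than half of the elements; otherwise None."""
    if not votes:
        return None
    alternative, count = Counter(votes).most_common(1)[0]
    return alternative if count > len(votes) / 2 else None


# Hypothetical choices made by five elements.
votes = ["walk", "walk", "bus", "walk", "bus"]
print(decide_by_consensus(votes))  # None
print(decide_by_majority(votes))   # walk
```

Both rules may return no decision at all (`None`), which is one simple way to see that a distributed process is not guaranteed to select an alternative.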

Figure 6.1 Examples of centralized, decentralized, and distributed networks.

Decentralized decision‐making can be seen as a hybrid between the centralized and distributed cases, which consists of more than one decision‐maker and may also include elements that are (i) only decision‐makers, (ii) only agents, and (iii) both decision‐makers and agents. In this case, decentralized decision‐making may take different forms, some resembling a centralized structure (e.g. few decision‐makers determining the action of many agents), others a distributed one (e.g. most elements are both decision‐makers and agents). It is also possible to have hierarchical decision‐making, where some decision‐makers of higher ranking impose constraints and rules on lower‐ranked ones. Trees and networks with hubs are typical topologies of this case, although not the only ones.

In summary, the proposed classification refers to how the decision‐makers are structured in relation to the agents. If there is only one decision‐maker that directly commands and controls all elements, then the decision is centralized. If all the elements are both potential decision‐makers and agents, then the decision is distributed. If there is more than one decision‐maker coexisting with other elements, then the decision is decentralized.
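The three cases in this summary can be sketched as a small classifier over the roles of the elements. This is a simplified sketch under stated assumptions: it only looks at who decides and who acts, it ignores the topology of the logical links, and the role labels and element names are hypothetical.

```python
from typing import Dict, Set

DECISION_MAKER = "decision-maker"
AGENT = "agent"


def classify(roles: Dict[str, Set[str]]) -> str:
    """Classify a decision-making process from the roles of its elements.

    `roles` maps each element name to a subset of {"decision-maker", "agent"}.
    """
    deciders = [name for name, r in roles.items() if DECISION_MAKER in r]
    if not deciders:
        raise ValueError("at least one decision-maker is required")
    if len(deciders) == 1:
        return "centralized"  # a single element decides for all agents
    if all(r == {DECISION_MAKER, AGENT} for r in roles.values()):
        return "distributed"  # every element both decides and acts
    return "decentralized"    # several decision-makers coexist with other elements


print(classify({"controller": {DECISION_MAKER}, "a1": {AGENT}, "a2": {AGENT}}))
# centralized
print(classify({"n1": {DECISION_MAKER, AGENT}, "n2": {DECISION_MAKER, AGENT}}))
# distributed
print(classify({"d1": {DECISION_MAKER}, "d2": {DECISION_MAKER, AGENT}, "a1": {AGENT}}))
# decentralized
```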

It is important to reinforce that, in all three types, the autonomy of agents and decision‐makers is constrained by the norms that govern the decisions and the possible choices for actions. Because both norms and choices are generally predefined, fixed and given, decision‐making processes are doctrinaire. One important remark is that distributed decision‐making is very often associated with autonomy for deciding and then acting. This view may be misleading because the level of autonomy is relative in the sense that decision‐makers are always limited by the norms, and agents are always limited by the possible choices of action. The following example illustrates the different approaches to decision‐making using an ordinary decision‐making process from our daily lives.

This example is provided as a didactic illustration of the different types of decision‐making processes. Although the proposed nomenclature is not standardized in the literature, the distinction between centralized, distributed, and decentralized decision‐making is broadly used to indicate the tendency of economic and political decentralization in the post‐Fordist age [6], which is also emulated by recent computer network architectures [7–9]. Another important reminder is that the relation between decision‐makers and agents is necessarily mediated by data processes, and thus, the decision‐makers logically control the agents by data commands, not by physical causation (in Example 6.1, physical causation would correspond to the parents being both decision‐makers and agents who decide on the clothes and directly dress the children). The following proposition generalizes this by using the concept of the level of processes introduced in Chapter 4.

This proposition has interesting implications that will be explored throughout this book. At this point, though, we will slightly shift the focus and investigate different formal frameworks for decision‐making processes as such, without considering the material reality they refer to.

6.3 Optimization

Optimization is a branch of mathematics that focuses on the selection of the optimal choice with respect to a given criterion among a (finite or infinite) set of existing alternatives. An optimization problem can be roughly defined by the questions: what is the maximum/minimum value that a given function can assume and how to achieve it given a set of constraints? Mathematically, we have [10]:

$$
\begin{aligned}
\min_{x} \quad & f_0(x) \\
\text{subject to} \quad & f_i(x) \leq b_i, \quad i = 1, \ldots, m,
\end{aligned}
\tag{6.1}
$$

where $x$ is the optimization variable, $f_0$ is the objective function, the $f_i$'s are the constraint functions, and the constants $b_i$'s are the constraint limits for $i = 1, \ldots, m$. The solution of (6.1), denoted by $x^*$, is called optimal, indicating that $f_0(z) \geq f_0(x^*)$ for any $z$ that satisfies the constraints $f_i(z) \leq b_i$. The proposed optimization problem is valid for vectors, and thus, the optimization variable is $x \in \mathbb{R}^n$ and the functions are $f_i : \mathbb{R}^n \rightarrow \mathbb{R}$. Besides, a maximization problem can always be formulated as a minimization: $\max_x f_0(x) = -\min_x \left(-f_0(x)\right)$. The optimization problem can be read as what is the minimum value of $f_0(x)$ in terms of $x$ subject to the constraints defined by $f_i(x)$ and $b_i$?
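To make the general form (6.1) concrete, the following sketch minimizes an objective over a grid of candidate values while enforcing inequality constraints. It is a brute‐force illustration, not a recommended solver. The toy problem (the width of a rectangle with a fixed perimeter that maximizes the area) and the perimeter value of 20 are assumptions chosen for illustration; the maximization is rewritten as the minimization of the negative area.

```python
def minimize_on_grid(objective, constraints, candidates):
    """Return the feasible candidate with the smallest objective value.

    `constraints` is a list of (function, limit) pairs, each encoding
    function(x) <= limit, as in the general form (6.1).
    """
    feasible = [x for x in candidates
                if all(f(x) <= limit for f, limit in constraints)]
    if not feasible:
        raise ValueError("no feasible candidate")
    return min(feasible, key=objective)


PERIMETER = 20.0  # hypothetical fixed perimeter


def negative_area(width):
    """Negative area of the rectangle; the height follows from the perimeter."""
    height = PERIMETER / 2 - width
    return -(width * height)


constraints = [
    (lambda w: -w, 0.0),            # width >= 0
    (lambda w: w, PERIMETER / 2),   # width <= half of the perimeter
]
candidates = [i / 100 for i in range(-200, 1201)]  # -2.00, -1.99, ..., 12.00

best_width = minimize_on_grid(negative_area, constraints, candidates)
print(best_width, -negative_area(best_width))  # 5.0 25.0
```

The result is the square (width equal to height), and the infeasible candidates outside the interval from 0 to 10 are discarded before the objective is evaluated.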

Mathematical optimization is clearly an important tool for decision‐making regardless of its type. In the following, we will provide some examples to illustrate this.

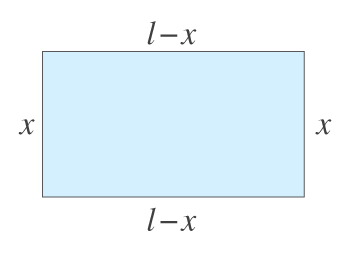

Figure 6.2 Rectangle with a fixed perimeter of length […].

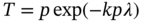

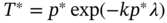

These two examples illustrate how optimization is usually carried out in a pedagogical manner. Optimization problems can be much more complicated, as indicated by the cases presented in [10]. Nevertheless, the formulation stated in (6.1) is generally valid for all optimization problems. Example 6.2 presented a case in which the optimal solution is guaranteed by the problem statement. Example 6.3 is different because it assumes that all the nodes act in the same way, employing the same access probability, while the optimization refers to a network‐level metric called spatial throughput. If the goal is to maximize the link throughput, the optimal solution taken by individual transmitters would lead to different results, as presented in [12] based on a more sophisticated scenario. The comparison between network and individual level optimization approaches will be the focus of Exercise 6.1. Note that in Example 6.3 the decision‐making is centralized by an element that indicates to the agents the access probability that they need to use to access the radio resource. In this case, this central element is a level 2 decision‐maker, and the transmitters are level 1 decision‐makers and also agents.
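The contrast between a network‐level objective and an individual one can be sketched with a much simpler model than the one in Example 6.3. The sketch below assumes a textbook slotted random‐access channel in which a slot is successful only when exactly one of `n` transmitters is active; this collision model, the number of nodes, and the function names are assumptions made for illustration and are not the spatial model used in the example.

```python
def network_throughput(p: float, n: int) -> float:
    """Expected number of successful transmissions per slot when all n nodes use p."""
    return n * p * (1 - p) ** (n - 1)


def link_throughput(p_own: float, p_others: float, n: int) -> float:
    """Success probability of one node using p_own while the other n - 1 use p_others."""
    return p_own * (1 - p_others) ** (n - 1)


n = 10  # hypothetical number of transmitters
grid = [k / 1000 for k in range(1001)]  # candidate access probabilities

# Centralized decision: one element picks the common p that maximizes the network metric.
p_central = max(grid, key=lambda p: network_throughput(p, n))

# Selfish decision: for any fixed behavior of the others, a node's own
# throughput grows with its own access probability.
p_selfish = max(grid, key=lambda p: link_throughput(p, 0.5, n))

print(p_central)                         # 0.1
print(p_selfish)                         # 1.0
print(network_throughput(p_selfish, n))  # 0.0
```

Under these assumptions, the centrally chosen probability equals one over the number of nodes, whereas the selfish choice, once adopted by everyone, drives the network throughput to zero.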

In the next section, we will look at a different problem defined when decision‐makers interact in a competitive manner, taking decisions based on their own self‐interest.

6.4 Game Theory

Game theory is a mathematical theory used as a support for strategic decision‐making in scenarios with more than one decision‐maker. Before we start to describe its fundamentals, it is important to state different views of such a theory to avoid possible pitfalls.

- [13]: Game theory is the mathematical study of interaction among independent, self‐interested agents. The audience for game theory has grown dramatically in recent years, and now spans disciplines as diverse as political science, biology, psychology, economics, linguistics, sociology, and computer science, among others.

- [14]: Game theory is an axiomatic‐mathematical theory that presents a set of axioms that people have to “satisfy” by definition to count as “rational.” This makes for “rigorous” and “precise” conclusions – but never about the real world. Game theory does not give us any information at all about the real world. Instead of confronting the theory with real‐world phenomena it becomes a simple matter of definition if real‐world phenomena are to count as signs of ‘rationality.’ It gives us absolutely irrefutable knowledge–but only since the knowledge is purely definitional.

- [15]: In many respects this enthusiasm is not difficult to understand. Game theory was probably born with the publication of The Theory of Games and Economic Behaviour by John von Neumann and Oskar Morgenstern (first published in 1944 with second and third editions in 1947 and 1953). They defined a game as any interaction between agents that is governed by a set of rules specifying the possible moves for each participant and a set of outcomes for each possible combination of moves. One is hard put to find an example of social phenomenon that cannot be so described. Thus a theory of games promises to apply to almost any social interaction where individuals have some understanding of how the outcome for one is affected not only by his or her own actions but also by the actions of others. This is quite extraordinary. From crossing the road in traffic, to decisions to disarm, raise prices, give to charity, join a union, produce a commodity, have children, and so on, it seems we will now be able to draw on a single mode of analysis: the theory of games.

At the outset, we should make clear that we doubt such a claim is warranted. This is a critical guide to game theory. Make no mistake though, we enjoy game theory and have spent many hours pondering its various twists and turns. Indeed it has helped us on many issues. However, we believe that this is predominantly how game theory makes a contribution. It is useful mainly because it helps clarify some fundamental issues and debates in social science, for instance those within and around the political theory of liberal individualism. In this sense, we believe the contribution of game theory to be largely pedagogical. Such contributions are not to be sneezed at.

This book presents a pragmatic account of game theory as a model for decision‐making under very specific assumptions, and thus, it is not considered to have any scientific value in terms of explaining human behavior, social dynamics, or nature. Game theory is an axiomatic construct that consists of decision‐makers that have choices that will result in different payoffs assuming that they (i) are instrumentally rational, i.e. always aim at maximizing their individual payoffs, (ii) have background knowledge about each other's rationality but do not communicate to coordinate decisions, and (iii) are completely aware of the rules of the game. In the following, we will formalize this by defining games in normal form.

This formal description of games is usually motivated by real‐world situations. There is a long list of games such as battle of the sexes, prisoners' dilemma, matching pennies, and dictator game. As a didactic example, we will analyze next one of those well‐known games representing the relation between two decision‐makers that could select aggressive and nonaggressive behaviors in a game called dove and hawk.
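A game in normal form with two decision‐makers can be stored as a payoff table, and its pure‐strategy Nash equilibria can be found by checking, for every pair of actions, that neither decision‐maker gains by deviating alone. The sketch below uses the classical hawk and dove payoff structure with a resource value `V` and a conflict cost `C`; the numerical values are hypothetical and are not necessarily the ones used in the examples of this chapter.

```python
from itertools import product

ACTIONS = ("hawk", "dove")  # aggressive and nonaggressive behavior
V, C = 2.0, 4.0             # hypothetical resource value and cost of conflict

# PAYOFF[(row action, column action)] = (row payoff, column payoff)
PAYOFF = {
    ("hawk", "hawk"): ((V - C) / 2, (V - C) / 2),
    ("hawk", "dove"): (V, 0.0),
    ("dove", "hawk"): (0.0, V),
    ("dove", "dove"): (V / 2, V / 2),
}


def pure_nash_equilibria(payoff, actions):
    """List action pairs from which no decision-maker gains by deviating alone."""
    equilibria = []
    for a_row, a_col in product(actions, repeat=2):
        u_row, u_col = payoff[(a_row, a_col)]
        row_ok = all(payoff[(alt, a_col)][0] <= u_row for alt in actions)
        col_ok = all(payoff[(a_row, alt)][1] <= u_col for alt in actions)
        if row_ok and col_ok:
            equilibria.append((a_row, a_col))
    return equilibria


print(pure_nash_equilibria(PAYOFF, ACTIONS))
# [('hawk', 'dove'), ('dove', 'hawk')]
```

With the cost of conflict larger than the value of the resource, the only pure equilibria are the asymmetric ones, in which the two decision‐makers choose different behaviors.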

Because of such an instability, this game is used in evolutionary game theory [16], which tries to explain animal behavior using game theory, in a model introduced in a seminal paper called The Logic of Animal Conflict [17]. The idea is to verify the dynamic changes of strategy when the game is played for different rounds, which lead to different sequences of behavior, thus resulting in long‐term strategies like retaliation or bullying.

The dove and hawk game also indicates the possibility of sequential games, where one decision‐maker is the first mover and the second reacts to the first. This is usually represented by a game in extensive form, which also helps to analyze the situation where there is the possibility to randomize the decisions through mixed strategies, which are defined next.

Game theory as briefly introduced here has an axiomatic assumption that all decision‐makers follow only one rule: maximize the individual payoff. Mixed strategies indicate the possibility of randomization of decisions using, for example, a random number generator, a coin, or an urn. For example, in the dove and hawk game with 50% probability as its Nash equilibrium, each decision‐maker could throw a fair coin and apply the following decision rule:

- if tails, then select aggressive,

- if heads, then select nonaggressive.

Nevertheless, the individual objective is still the maximization of expected payoffs after repeated games.
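The coin rule above can be checked numerically: at a mixed equilibrium, a decision‐maker must be indifferent between the two pure actions given the randomization of the other. The sketch below assumes the classical hawk and dove payoffs with a hypothetical resource value `V = 2` and conflict cost `C = 4`, chosen so that the equilibrium probability of the aggressive action is 50%; these values are assumptions for illustration.

```python
import random

V, C = 2.0, 4.0  # hypothetical values; V / C = 0.5


def expected_payoffs(q_aggressive: float):
    """Expected payoff of each pure action when the opponent is aggressive with probability q."""
    aggressive = q_aggressive * (V - C) / 2 + (1 - q_aggressive) * V
    nonaggressive = q_aggressive * 0.0 + (1 - q_aggressive) * V / 2
    return aggressive, nonaggressive


def coin_rule(rng: random.Random) -> str:
    """Fair-coin decision rule: tails selects aggressive, heads selects nonaggressive."""
    tails = rng.random() < 0.5
    return "aggressive" if tails else "nonaggressive"


print(expected_payoffs(0.5))   # (0.5, 0.5): indifference, so randomizing is a best response
print(expected_payoffs(0.25))  # (1.25, 0.75): a less aggressive opponent favors aggression
print(coin_rule(random.Random()))
```

When the opponent deviates from the 50% rule, the two expected payoffs differ and a payoff‐maximizing decision‐maker would no longer randomize.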

Note that it is possible to define many other decision rules, and payoff maximization is not the only option. In the following section, we will present more details of rule‐based decision‐making.

6.5 Rule‐Based Decisions

Every decision‐making process is based on rules. For instance, a decision‐maker who is willing to select the operating point that will lead to the optimal use of resources has an implicit rule: always select the operating point that will lead to such an optimal outcome. Likewise, the game‐theoretic decision‐makers are always ruled by payoff maximization. Rule‐based decisions are a generalization of the previous, excessively restrictive, rules.

Decision rules can generally be represented by input and output relations. For example, a decision‐maker could use the logic gate AND to select an alternative. In concrete terms, a given person will go to the restaurant only if all three of his/her friends go. After calling and confirming that all three will go, the person decides to go. Another option is to use an OR gate, in which case at least one of the friends needs to go.
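The two gate‐based rules of the restaurant example can be sketched directly as functions of the friends' confirmations. The function names and the example inputs are illustrative assumptions.

```python
from typing import Sequence


def go_with_and_rule(friends_going: Sequence[bool]) -> bool:
    """AND gate: go only if every friend has confirmed."""
    return all(friends_going)


def go_with_or_rule(friends_going: Sequence[bool]) -> bool:
    """OR gate: go if at least one friend has confirmed."""
    return any(friends_going)


confirmations = [True, True, False]  # hypothetical answers from the three friends
print(go_with_and_rule(confirmations))  # False
print(go_with_or_rule(confirmations))   # True
```

The same input data lead to opposite decisions, which shows that the outcome depends on the rule and not only on the data.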

Other cases could be proposed. Imitating decisions from other decision‐makers or repeating the same decision until some predetermined event happens are two common examples of rules of thumb. Another example is the preferential attachment process described in the previous chapter, where new nodes in a network select with higher chances to be connected to nodes already with a high degree. In the following, we will exemplify a rule‐based decision process designed to indicate the potential risks.

This simple example illustrates well the idea of rule‐based decision‐making that is usually employed in sensor networks [18]. Besides, decision systems designed to support experts (i.e. expert systems), which are widespread today, rely on rules [19]. Heuristics are another approach that is usually based on predefined or “learned” rules.
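A rule‐based decision of this kind is often implemented as a lookup table from observed events to an output level. The sketch below encodes the local‐station rules listed in the table of Exercise 6.3 (events A, B, and C mapped to a risk level); treating every unlisted combination as risk level 1 at the local station is an assumption, and the function name is hypothetical.

```python
# Rules taken from the local-station table of Exercise 6.3:
# (event A, event B, event C) -> risk level
LOCAL_RULES = {
    (True, False, True): 2,
    (False, True, True): 3,
}
DEFAULT_LEVEL = 1  # assumed level for every combination not listed above


def local_risk_level(event_a: bool, event_b: bool, event_c: bool) -> int:
    """Return the risk level that a local station reports for the observed events."""
    return LOCAL_RULES.get((event_a, event_b, event_c), DEFAULT_LEVEL)


print(local_risk_level(True, False, True))   # 2
print(local_risk_level(False, True, True))   # 3
print(local_risk_level(True, False, False))  # 1
```

Changing the decision rule only requires editing the table, which is one reason why rule books are easy to revise and, for the same reason, are not fixed like physical laws.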

We will return to this topic in later chapters. Now, it is important to discuss the limitations of the proposed methodologies for decision‐making.

6.6 Limitations

Decision‐making processes, as defined here, are always data‐mediated processes governed by decision rules, which are flexible and not universal. Decision rules are normative and open to change, never inviolable like physical laws. This imposes a fundamental limitation because the outcome of decision‐making processes can only be predicted if decision rules are known, assumed to be known, estimated, or arbitrarily stated. Even worse, this is only a necessary condition, not a sufficient one: even if the decision rules are known, the outcome of the decision‐making process can only be determined in a few special cases.

In this case, the mathematization introduced in Sections 6.3 and 6.4 refers to particular decision‐making processes supported by specific assumptions about decision rules, and thus, it is not universal and natural as frequently claimed. This is not to say that optimization and game theory are useless or irrelevant, but only to emphasize that their reach is limited, and that mathematically consistent results must always be critically scrutinized as scientific knowledge. It is important to repeat here: mathematical formalism is neither a necessary nor a sufficient condition to guarantee that a piece of knowledge is scientific.

Besides, decision‐making processes depend on structured and interpretable data as inputs. Except for particular decision rules that are independent of the input data (e.g. random selection between alternatives, or always select the same alternative regardless of the input), all decisions are informed. Hence, decision‐makers need data with the potential of being information about the possible alternatives of actions to be taken by the agents. Informed decision‐making processes need data to decrease uncertainty, and thus, lack of data, unstructured data, misinformation, and disinformation are always potential threats to them.

In this case, each logical link between different decision‐makers, or between decision‐makers and agents, creates a potential vulnerability for the decision‐making process, whose impact depends on its topological characteristics. Centralized decision‐making relies on one decision‐maker, and thus, the process is vulnerable to the operation of such an element. Decentralized and distributed decision‐making processes are dependent on their network topology, but they are usually more resilient and robust against attacks. However, depending on the deliberation method used by decision‐makers (which may be governed by different individual decision rules) in those topologies, a selection between alternatives may never happen and the decision‐making process may never converge to a choice.
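A minimal sketch of such a nonconverging deliberation is given below. It assumes two decision‐makers that update their choices simultaneously, each following the individual rule "adopt whatever the other chose in the previous round". The rule, the alternatives, and the stopping criterion (agreement) are assumptions made for illustration.

```python
def imitate_other(own_last: str, other_last: str) -> str:
    """Individual decision rule: copy the other's previous choice."""
    return other_last


def deliberate(rule, start, max_rounds=10):
    """Apply the rule to both decision-makers simultaneously until they agree."""
    state = start
    history = [state]
    for _ in range(max_rounds):
        if state[0] == state[1]:
            break  # agreement reached: a choice has been selected
        state = (rule(state[0], state[1]), rule(state[1], state[0]))
        history.append(state)
    return history


print(deliberate(imitate_other, ("left", "right"), max_rounds=4))
# [('left', 'right'), ('right', 'left'), ('left', 'right'), ('right', 'left'), ('left', 'right')]
```

Starting from disagreement, the two choices swap forever and no alternative is ever selected, regardless of how many rounds are allowed; an additional protocol (for example, a tie‐breaking rule) would be needed to force convergence.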

This brings in another limitation: individual decision‐makers need to have access to a rule book or doctrine that states how to determine the selection of one (or a few) alternatives among all possibilities. Moreover, in many cases of distributed and decentralized decision‐making processes, there should be an additional protocol involving a decision‐making process with many decision‐makers from the same or different levels. In game theory, this is exemplified by the knowledge of the rules of the game. The problem is even more challenging if we consider decision‐making processes of higher order where there is a decision‐making process to decide which rule book will be used to decide. This might lead to reflexive or self‐referential processes that are undecidable, such as the liar paradox and the halting problem studied by Turing [20].

Those limitations are the key to scientifically understanding “autonomous” decision‐making processes, which are the basis of cyber‐physical systems, thus avoiding design pitfalls and tendencies of universalization, which too often haunt cybernetics as elegantly described in [21]. We should stop at this point, though, and wait for the chapters to come where the theory of cyber‐physical systems will be presented, and the main limitations of decision‐making processes will be revisited.

6.7 Summary

This chapter introduced decision‐making processes performed by decision‐makers that will select, among possible alternatives, one action or a set of actions that agents will perform. We classified decision‐making processes with respect to the topological characteristics of the network composed of decision‐makers and agents. We also showed different approaches to analyze and perform decision‐making, namely optimization, game theory, and rule‐based decisions. Overall, our aim was to show that decision‐making processes in cyber‐physical systems are (i) mediated using potentially informative data as inputs and selecting action(s) to be performed by agent(s) as output, (ii) governed by decision rules or doctrines that are neither immutable as physical laws nor “natural” as usually claimed, (iii) susceptible to failures, and (iv) not universal.

Despite the immense specialized literature covering fields as diverse as evolutionary biology, psychology, political economy, and applied mathematics, there are few general texts that are worth reading. The paper [5] provides a well‐grounded overview of different approaches to human decision‐making in contrast to the mainstream orthodoxy [2, 4]. In a similar manner, the book [15] makes a critical assessment of game theory, indicating how its models and results should be employed with the focus on political economy. The paper [22] is an up‐to‐date overview of economics considering a more realistic characterization of human decision‐makers. The seminal paper [17] on explaining the evolution of animal behavior by using game theory provides interesting insights into the fields of theoretical biology and evolutionary game theory. In a technical vein, which is more related to the main focus of this book, the paper [19] can provide an interesting historical perspective of the early ages of expert systems. In their turn, the papers [7–9] discuss issues on decentralized and distributed decision‐making systems in the late 2010s and early 2020s.

Exercises

- 6.1 Wireless networks and decision‐making Compare the scenario presented in Example 6.3 with another considering that each transmitter acts selfishly in order to maximize its own link throughput […]. The optimization problem can be formulated as:

[…] (6.5)

- What is the optimal solution […] and […]?

- Plot the spatial throughput […] obtained when all the transmitters are selfish for […], […] and […] for […].

- Discuss the results.

- 6.2 Prisoners' dilemma Prisoners' dilemma is a well‐known game describing a situation where independent decision‐makers, which cannot communicate with each other, are stuck in a Nash equilibrium that is noncooperative, although it seems to be their best interest to be cooperative. The problem is described as follows. Two members A and B of a criminal gang are arrested and imprisoned. Each prisoner is in solitary confinement with no means of communicating with the other. The prosecutors lack sufficient evidence to convict the pair on the principal charge, but they have enough to convict both on a lesser charge. Simultaneously, the prosecutors offer each prisoner a bargain. Each prisoner is given the opportunity either to betray the other by testifying that the other committed the crime, or to cooperate with the other by remaining silent. The possible outcomes are:

- If A and B each betray the other (not cooperating with each other), each of them serves […] years in prison (payoff of […]).

- If A betrays B (not cooperating with B) but B remains silent (cooperating with A), A will serve […] years in prison (payoff […]) and B will serve […] years (payoff of […]).

- If B betrays A (not cooperating with A) but A remains silent (cooperating with B), B will serve […] years in prison (payoff […]) and A will serve […] years (payoff of […]).

- If A and B both remain silent, both of them will serve […] years in prison (payoff of […]).

| | Member A: Cooperate | Member A: Betray |
| --- | --- | --- |
| Member B: Cooperate | […] | […] |
| Member B: Betray | […] | […] |

- What is the relation between the payoff values […], […], […] and […] so that the game can be classified as a Prisoner's Dilemma? Note: Follow the approach used in Example 6.4 and note that the payoffs in the table are negative but the values of […] are positive.

- Would this game be a dilemma if the two members were trained to not betray each other, and thus, they have a background knowledge about how the other would decide? Justify and analyze this situation as if you were one of the captured persons.

- Study prisoners' dilemma as a sequential game following Example 6.5.

- Repeated prisoners' dilemma is usually used to study the evolution of cooperation in competitive games. In the abstract of the seminal paper [23] that introduces this idea, we read: Cooperation in organisms, whether bacteria or primates, has been a difficulty for evolutionary theory since Darwin. On the assumption that interactions between pairs of individuals occur on a probabilistic basis, a model is developed based on the concept of an evolutionarily stable strategy in the context of the Prisoner's Dilemma game. Deductions from the model, and the results of a computer tournament show how cooperation based on reciprocity can get started in an asocial world, can thrive while interacting with a wide range of other strategies, and can resist invasion once fully established. Potential applications include specific aspects of territoriality, mating, and disease. The task here is to critically assess the statements made by this text based on the discussions introduced in this chapter.


- 6.3 Alarm system Consider the scenario presented in Example 6.6. We assume that events A, B, and C are associated with a probability […], […], and […]. The following table defines the risk levels at the local stations.

| Event A | Event B | Event C | Risk level |
| --- | --- | --- | --- |
| True | False | True | Level 2 |
| False | True | True | Level 3 |

For the city level, the risk levels are given next.

| Local 1 | Local 2 | Risk level |
| --- | --- | --- |
| Level 3 | Level ‐ | Level 2 |
| Level ‐ | Level 3 | Level 2 |
| Level 3 | Level 3 | Level 3 |

All the other situations are classified as risk level 1.

- Compute the probability mass function of the risk levels at the local stations. Note: events A and B are mutually exclusive (i.e. they cannot be true at the same time).

- Compute the probability mass function of the risk level at the central station considering that the two local stations are independent of each other.

- Critically assess the results presented in (b) based on the assumption of independence.

- Determine the level that each decision‐making process has following Proposition 6.1.

References

- 1 Merriam‐Webster Dictionary. Decision; 2021. Last accessed 1 March 2021. https://www.merriam-webster.com/dictionary/decision.

- 2 Steele K, Stefánsson HO. Decision Theory. In: Zalta EN, editor. The Stanford Encyclopedia of Philosophy. winter 2020 ed. Metaphysics Research Lab, Stanford University; 2020.

- 3 Mirowski P. Against Mechanism: Protecting Economics from Science. Rowman & Littlefield Publishers; 1992.

- 4 Wolff RD, Resnick SA. Contending Economic Theories: Neoclassical, Keynesian, and Marxian. MIT Press; 2012.

- 5 Gigerenzer G. How to explain behavior? Topics in Cognitive Science. 2020;12(4):1363–1381.

- 6 Srnicek N. Platform Capitalism. John Wiley & Sons; 2017.

- 7 Baldwin J. In digital we trust: Bitcoin discourse, digital currencies, and decentralized network fetishism. Palgrave Communications. 2018;4(1):1–10.

- 8 Pournaras E, Pilgerstorfer P, Asikis T. Decentralized collective learning for self‐managed sharing economies. ACM Transactions on Autonomous and Adaptive Systems (TAAS). 2018;13(2):1–33.

- 9 Nguyen MN, Pandey SR, Thar K, Tran NH, Chen M, Bradley WS, et al. Distributed and democratized learning: philosophy and research challenges. IEEE Computational Intelligence Magazine. 2021;16(1):49–62.

- 10 Boyd S, Vandenberghe L. Convex Optimization. Cambridge University Press; 2004. Available at https://web.stanford.edu/~boyd/cvxbook/bv_cvxbook.pdf.

- 11 Popovski P. Wireless Connectivity: An Intuitive and Fundamental Guide. John Wiley & Sons; 2020.

- 12 Nardelli PHJ, Kountouris M, Cardieri P, Latva‐Aho M. Throughput optimization in wireless networks under stability and packet loss constraints. IEEE Transactions on Mobile Computing. 2013;13(8):1883–1895.

- 13 Leyton‐Brown K, Shoham Y. Essentials of game theory: a concise multidisciplinary introduction. Synthesis Lectures on Artificial Intelligence and Machine Learning. 2008;2(1):1–88.

- 14 Syll LP. Why game theory never will be anything but a footnote in the history of social science. Real‐World Economics Review. 2018;83:45–64.

- 15 Hargreaves‐Heap S, Varoufakis Y. Game Theory: A Critical Introduction. Routledge; 2004.

- 16 Vega‐Redondo F. Evolution, Games, and Economic Behaviour. Oxford University Press; 1996.

- 17 Smith JM, Price GR. The logic of animal conflict. Nature. 1973;246(5427):15–18.

- 18 Nardelli PHJ, Ramezanipour I, Alves H, de Lima CH, Latva‐Aho M. Average error probability in wireless sensor networks with imperfect sensing and communication for different decision rules. IEEE Sensors Journal. 2016;16(10):3948–3957.

- 19 Hayes‐Roth F. Rule‐based systems. Communications of the ACM. 1985;28(9):921–932.

- 20 Turing AM. On computable numbers, with an application to the Entscheidungsproblem. Proceedings of the London Mathematical Society. 1937;2(1):230–265.

- 21 Gerovitch S. From Newspeak to Cyberspeak: A History of Soviet Cybernetics. MIT Press; 2004.

- 22 Arthur WB. Foundations of complexity economics. Nature Reviews Physics. 2021;3:136–145.

- 23 Axelrod R, Hamilton WD. The evolution of cooperation. Science. 1981;211(4489):1390–1396.