Chapter 2

Relational Databases in Azure

Relational databases have been critical components of organizations' IT infrastructure for the last few decades. They are the most common way to store data due to their ease of use, the wide variety of solutions they can support, and the well-established best practices with which they are designed. Relational databases are useful for storing data elements that are related and must be stored in a consistent manner. This chapter will discuss the key features of a relational database, the different relational database offerings in Azure, basic management tasks for relational databases, and common query techniques for relational data.

Relational Database Features

Relational databases store data as collections of entities in the form of tables. In the context of data, entities can be described as nouns, such as persons, companies, countries, or products. Tables contain structured data that describes an entity and are composed of zero or more rows and one or more columns of data. Some of the columns might be special columns that are used to uniquely identify each row or act as a reference to another table that they might be related to. Rows might not include values for each column, but because relational databases are designed with a rigid schema, the row will still include that column in its definition. Default or null values are used when a value is not provided for a row. This organized approach to data storage allows relationships between entities that can easily be queried by a data analyst or a data processing solution. Let's examine the features of a relational database, starting with design considerations.

Relational Database Design Considerations

Design considerations for relational databases largely depend on what type of solution the database will be powering. As discussed in Chapter 1, “Core Data Concepts,” relational databases are commonly used to power online transaction processing (OLTP) and analytical systems. Solutions that are powered by OLTP databases have different write and read requirements than those powered by analytical databases. Even though OLTP databases often serve as data sources for data warehouses or online analytical processing (OLAP) systems, these requirements make it necessary to distribute and store data differently in each system.

OLTP Workload Design Considerations

Transactional data that is stored in an OLTP database involves interactions that are related to an organization's activities. These can include payments received from customers, payments made to suppliers, or orders that have been made. Typical OLTP databases are optimized to handle data that is written to them and must be able to ensure that transactions adhere to ACID properties (see Chapter 1 for more information on ACID properties). This guarantees the integrity of the records that are stored. Relational database management systems (RDBMSs) typically enforce these rules using locks or row versioning.

Regardless of whether a transaction is reading, inserting, updating, or deleting data, the data involved in the transaction must be reliable. This becomes harder to guarantee as the number of users running transactions concurrently on the same pieces of data increases, which can result in the following issues:

- Dirty reads can occur when a transaction is reading data that is being modified by another transaction. The transaction performing the read is reading the modified data that has not yet been committed. This potentially results in an inaccurate result set if the transaction modifying the data is rolled back to the original values.

- Nonrepeatable reads occur when a transaction reads the same row several times and returns different data each time. This is the result of one or more other transactions being able to modify the data between the reads within the transaction.

- Phantom reads occur when two identical queries running in the same transaction return different results. This can happen when another query inserts some data in between the execution of the two queries, resulting in the second query returning the newly inserted data.

To mitigate these issues, a transaction will request locks on different types of resources, such as rows and tables, that the transaction is dependent on. Transaction locks prevent dirty, nonrepeatable, and phantom reads by blocking other transactions from performing modifications on data objects involved in the transaction. Transactions will free their locks from a resource once they have finished reading/modifying it. While locks are critical for ensuring consistency, they can cause long wait times for users that have issued transactions that are being blocked. The following isolation levels can be assigned to a transaction to balance consistency versus performance depending on its requirements:

- Read Uncommitted is the lowest isolation level, only guaranteeing that physically corrupt data is not read. Transactions using this isolation level run the risk of returning dirty reads since uncommitted data is read.

- Read Committed transactions issue locks on involved data at the time of data modification to prevent other transactions from reading dirty data. However, data can be modified by other transactions between statements, which can result in nonrepeatable or phantom reads. This is the default isolation level for SQL Server and Azure SQL Database, although Azure SQL Database enables the row-versioning variant, Read Committed Snapshot, by default.

- Repeatable Read transactions issue read and write locks on involved data until the end of the transaction. No other transaction can modify data involved by a repeatable read transaction until the transaction has completed. However, other transactions can insert new rows into tables involved in a repeatable read transaction. This could possibly result in phantom reads occurring.

- Serializable is the highest isolation level and completely isolates transactions from one another. Statements cannot read data that has been modified but not yet committed by other transactions. In addition, other transactions cannot modify data that has been read by a serializable transaction, or insert new rows that would match its queries, until that transaction completes.
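The difference between the two lowest isolation levels can be sketched with a toy model. The following Python snippet is only an illustration and does not reflect how a database engine is implemented: `ToyRow` and its methods are hypothetical names, and the Read Committed reader here simply returns the last committed value, whereas a lock-based engine would make the reader wait until the writer finishes.

```python
"""Toy model of a dirty read; not how a real database engine works."""


class ToyRow:
    """One row holding a committed value and an optional uncommitted change."""

    def __init__(self, value):
        self.committed = value
        self.pending = None  # value written by an open transaction, if any

    def write(self, value):
        # A transaction modifies the row but has not committed yet.
        self.pending = value

    def commit(self):
        if self.pending is not None:
            self.committed = self.pending
            self.pending = None

    def rollback(self):
        # Discard the uncommitted change; the committed value is untouched.
        self.pending = None

    def read(self, isolation):
        if isolation == "READ UNCOMMITTED" and self.pending is not None:
            return self.pending  # dirty read: sees uncommitted data
        return self.committed    # higher levels never see pending data


balance = ToyRow(100)
balance.write(40)                                   # writer updates, no commit yet
dirty = balance.read("READ UNCOMMITTED")            # sees the uncommitted 40
clean = balance.read("READ COMMITTED")              # sees the committed 100
balance.rollback()                                  # writer rolls back
after_rollback = balance.read("READ UNCOMMITTED")   # the 40 never existed
print(dirty, clean, after_rollback)
```

The Read Uncommitted reader acted on a value that was later rolled back, which is exactly the inaccurate result described for dirty reads.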

SQL Server and Azure SQL Database also allow users to use row versioning to maintain versions of rows that are modified. Transactions can be specified to use row versions to view data as it existed at the start of the transaction instead of protecting it with locks. This allows the transaction to read a consistent copy of the data while mitigating performance concerns from locking. The following isolation levels support row versioning:

- Read Committed Snapshot is a version of the Read Committed isolation level that uses row versioning to present each statement in the transaction with a consistent snapshot of the data as it existed at the beginning of the statement. Locks are not used to protect the data from updates by other transactions. To enable Read Committed Snapshot, set the READ_COMMITTED_SNAPSHOT database option to ON.

- Snapshot isolation uses row versioning to return rows as they existed at the start of the transaction, regardless of whether another transaction modifies those rows. To enable Snapshot isolation, set the ALLOW_SNAPSHOT_ISOLATION database option to ON.
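To make the difference between the two row-versioning levels concrete, here is a minimal Python sketch. It is a toy model under simplifying assumptions: `VersionedRow`, the integer timestamps, and the method names are all hypothetical, and a real engine manages row versions very differently.

```python
"""Toy model of row versioning; names and timestamps are illustrative only."""


class VersionedRow:
    def __init__(self, value):
        self.versions = [(0, value)]  # (commit timestamp, value), oldest first

    def commit_write(self, timestamp, value):
        # Another transaction commits a new version of the row.
        self.versions.append((timestamp, value))

    def read_as_of(self, timestamp):
        # Return the newest version committed at or before the given timestamp.
        visible = [value for ts, value in self.versions if ts <= timestamp]
        return visible[-1]


price = VersionedRow(10)

transaction_start = 1                                  # reader's transaction begins
first_read = price.read_as_of(transaction_start)

price.commit_write(2, 12)                              # a writer commits at time 2

# Snapshot: every statement reads as of the start of the transaction.
second_read_snapshot = price.read_as_of(transaction_start)

# Read Committed Snapshot: each statement reads as of its own start (time 3).
second_read_rcsi = price.read_as_of(3)

print(first_read, second_read_snapshot, second_read_rcsi)
```

In this sketch the Snapshot reader keeps seeing the original value for the whole transaction, while the Read Committed Snapshot reader picks up the committed change on its next statement. Neither reader blocks the writer.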

Maintaining ACID compliance while also delivering high performance is no easy task. Design best practices for OLTP databases accomplish this by breaking up data into smaller chunks that are less redundant, a process known as data normalization. There are a few rules for data normalization, which can be defined as follows:

- First normal form (1NF) involves eliminating repeating groups in individual tables, creating separate tables for each set of related data, and identifying each set of related data with a primary key. This is essentially stating that you should not use multiple fields in a single table to store similar data. For example, a retail company may have customers that make multiple orders at different periods of time. Instead of duplicating the customers' information each time they place an order, place all customer information in a separate table called Customers and identify each customer with a unique primary key.

- Second normal form (2NF) takes 1NF a step further. Along with the rules that define 1NF, 2NF also involves creating separate tables for sets of values that apply to multiple records and then relating those tables via a foreign key. For example, if a customer's address is needed by the Customer, Order, and Shipping tables, separate the addresses into a single table such as the Customer table or their own Address table.

- Third normal form (3NF) builds on 1NF and 2NF by including a requirement to eliminate fields in tables that do not depend on the key. For example, let's assume that each product being sold by the retail company includes several subcategories. If each subcategory is stored in the Products table, then each product will be duplicated numerous times to include each subcategory. In this case, it is more efficient to create a Product Subcategory table and relate it to the Products table.
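The customer example from the normalization rules above can be sketched in a few lines. The snippet below uses Python's built-in sqlite3 module as a stand-in for SQL Server so that it runs anywhere. The table and column definitions are simplified assumptions made for illustration, not a schema taken from AdventureWorks.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when asked
conn.executescript("""
CREATE TABLE Customers (
    CustomerID INTEGER PRIMARY KEY,
    Name       TEXT NOT NULL,
    Phone      TEXT                      -- nullable: a customer may not provide one
);
CREATE TABLE Orders (
    OrderID    INTEGER PRIMARY KEY,
    CustomerID INTEGER NOT NULL REFERENCES Customers(CustomerID),
    OrderDate  TEXT NOT NULL
);
INSERT INTO Customers VALUES (1, 'Ana Diaz', NULL);
INSERT INTO Orders VALUES (100, 1, '2024-01-05'), (101, 1, '2024-02-11');
""")

# The customer's details live in one row, so one UPDATE is reflected in every order.
conn.execute("UPDATE Customers SET Phone = '555-0100' WHERE CustomerID = 1")
rows = conn.execute("""
    SELECT o.OrderID, c.Name, c.Phone
    FROM Orders AS o
    JOIN Customers AS c ON c.CustomerID = o.CustomerID
    ORDER BY o.OrderID
""").fetchall()
print(rows)

# The foreign key rejects an order for a customer that does not exist.
try:
    conn.execute("INSERT INTO Orders VALUES (102, 999, '2024-03-01')")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True
print(orphan_rejected)
```

Because customer details are stored once and referenced by key, the write touches a single row no matter how many orders the customer has placed. The cost is the join needed to read the data back together.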

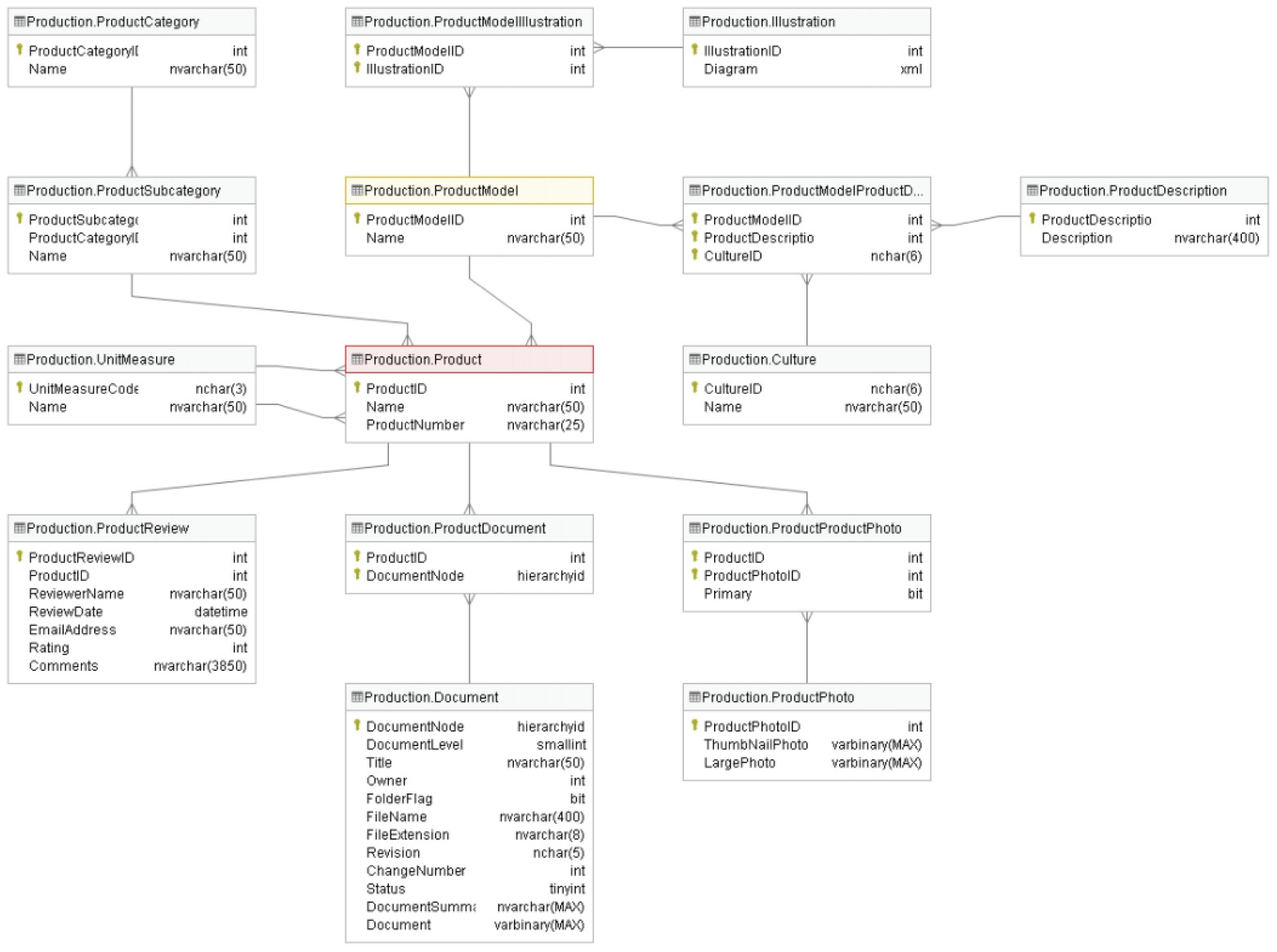

The more normalized a database is, the more efficiently the database will handle write operations. This is because normalized data avoids extra processing for redundant data. Typical OLTP databases follow 3NF to ensure that database writes are as efficient as possible. Figure 2.1 is a partial example of the AdventureWorks OLTP entity relationship diagram (ERD), focusing on entities that are related to products manufactured and sold by AdventureWorks. The entire ERD can be found at https://dataedo.com/download/AdventureWorks.pdf.

FIGURE 2.1 OLTP ERD

In this diagram, every entity that has multiple records is broken up into multiple tables to avoid redundant data storage. Related entities can have one-to-one or one-to-many relationships. For example, each product category has multiple product subcategories. Therefore, the relationship between the ProductCategory table and the ProductSubcategory table is one-to-many. The crow's foot symbol at the end of the relationship line signals that there are many product subcategories for each product category.

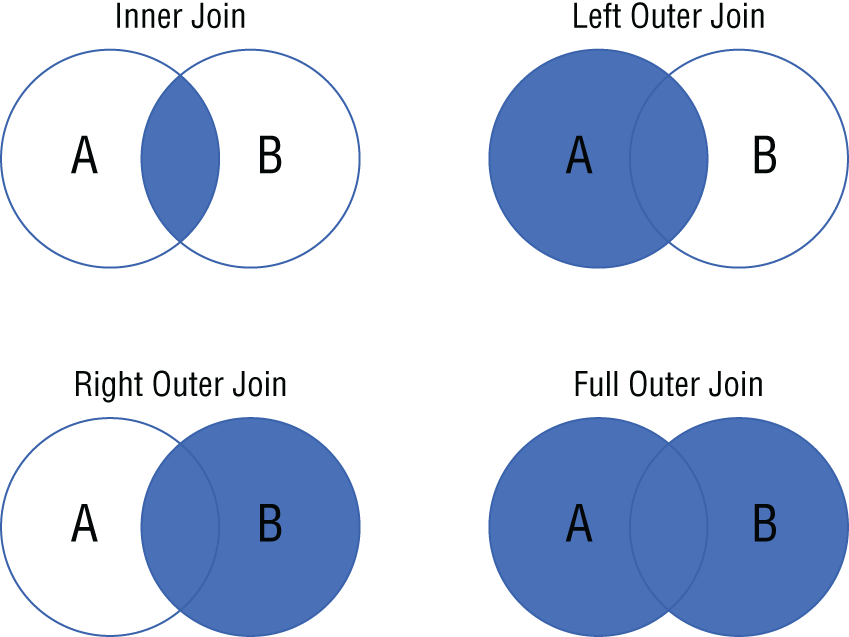

While this level of data normalization is highly efficient for writing and storing individual transactions, it can be less efficient for applications that perform large numbers of read operations. Queries that are issued from read-heavy applications (e.g., reporting and analytical applications) potentially require many joins to denormalize the data, making these queries very long and complex. Read operations that perform aggregations over large amounts of data are also very resource intensive for OLTP databases and can cause blocking issues for other transactions issued against the database. It is for these reasons that analytical databases carry different design best practices than their OLTP counterparts.

Analytical Workload Design Considerations

Data warehouses and online analytical processing (OLAP) systems are optimally designed for read-heavy applications. While OLTP systems focus on storing current transactions, data warehouses and OLAP models focus on storing historical data that can be used to measure a business's performance and predict what future actions it should take.

Data warehouses serve as central repositories of data from one or more disparate data sources, including various OLTP systems. Not only does this remove the burden of running analytical workloads from the OLTP database, it also enriches the OLTP data with other data sources that provide useful information for decision makers. Data warehouses can store data that is processed in batch and in real time to provide a single source of truth for an organization's analytical needs. Data analysts commonly run analytical queries against data warehouses that return aggregated calculations that can be used to support business decisions.

Data warehouses can be built using one of the symmetric multiprocessing (SMP) database offerings on Azure, such as Azure SQL Database, or on the massively parallel processing (MPP) data warehouse offering, Azure Synapse Analytics dedicated SQL pools. The choice largely depends on the amount of historical data that is going to be stored and the nature of the queries that will be issued to the data warehouse. A good rule of thumb is that if the size of the data warehouse is going to be less than 1 terabyte, then Azure SQL Database will do the trick. However, this is a general statement, and more consideration is needed when deciding between SMP and MPP. Chapter 5, “Modern Data Warehouses in Azure,” covers more detail on what to consider when designing a modern data warehouse.

OLAP models extract commonly used data for reporting from data warehouses to simplify data analysis. Like data warehouses, OLAP models are used for read-heavy scenarios and typically include the following predefined features to allow users to see consistent results without having to write their own logic:

- Aggregations that can be immediately reported against

- Time-oriented calculations

OLAP models come in two flavors: multidimensional and tabular. Multidimensional cubes such as those created with SQL Server Analysis Services (SSAS) were used in traditional business intelligence (BI) solutions to serve data as dimensions and measures. Tabular models, such as those hosted in Azure Analysis Services and those built in Power BI, serve data using relational modeling constructs (e.g., tables and columns) while storing metadata as multidimensional modeling constructs (e.g., dimensions and measures) behind the scenes. Tabular models have become the standard for OLAP models because they use similar design patterns to relational databases, make use of columnar storage that optimally compresses data for analytics, and leverage an easy-to-learn language, Data Analysis Expressions (DAX), that data analysts can use to create custom metrics. Chapter 6, “Reporting with Power BI,” will describe tabular models in detail and how they are used in Power BI.

Data warehouses and OLAP models store data in a way that is designed to be easy for analysts and developers to read. Tables in analytical systems are defined to be easily understood by business users so that they do not have to rely on IT every time they need to produce new analysis against historical data. Instead of using strict nomenclature and normalized rules that make OLTP systems ideal for storing transactional data, analytical systems flatten data so that business users can easily query data without having to join several tables together.

One common design pattern for data warehouses and OLAP models is the star schema. Star schemas denormalize data taken from OLTP systems, resulting in some attributes being duplicated in tables. This is done to make the data easier for analysts to read, allowing them to avoid having to join several tables in their queries. While denormalization is not optimal for write-heavy, transactional workloads, it will increase the performance of read-oriented, analytical workloads.

Star schemas work by relating business entities, also known as the nouns of the business, to measurable events. These can be broken down into the following classifications that are specific to a star schema:

- Dimension tables store information about business entities. Dimension tables store descriptive columns for each entity and a key column that serves as a unique identifier. Examples include date, customer, geography, and product dimensions. Dimension tables typically store a relatively small number of rows but many columns, depending on how many descriptors are necessary for a given dimension.

- Fact tables store measurable observations or events such as Internet sales, inventory, or sales quotas. Along with numeric measurements, fact tables contain dimension key columns for each dimension that a measure or observation is related to. These relationships determine the granularity of the data in the fact table. For example, an Internet sales fact table that has a dimension key for date is only as granular as the level of detail stored in the date dimension table. If the date dimension table only includes details for years and months, then queries performing time-based calculations will only be able to drill down to monthly sales. However, if it includes details for years, quarters, months, weeks, days, and hours, then queries will be able to perform more fine-grained analysis of the data.
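A small star schema makes the relationship between facts, dimensions, and granularity easier to see. The following sketch again uses Python's sqlite3 module as a stand-in for a data warehouse. The table names echo AdventureWorks DW, but the columns and rows are simplified, made-up assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE DimDate (
    DateKey INTEGER PRIMARY KEY, Year INTEGER, Month INTEGER, Day INTEGER
);
CREATE TABLE DimProduct (
    ProductKey INTEGER PRIMARY KEY, ProductName TEXT, Category TEXT
);
CREATE TABLE FactInternetSales (
    DateKey     INTEGER REFERENCES DimDate(DateKey),
    ProductKey  INTEGER REFERENCES DimProduct(ProductKey),
    SalesAmount REAL
);
INSERT INTO DimDate VALUES (20240105, 2024, 1, 5), (20240120, 2024, 1, 20),
                           (20240203, 2024, 2, 3);
INSERT INTO DimProduct VALUES (1, 'Road Bike', 'Bikes'), (2, 'Helmet', 'Accessories');
INSERT INTO FactInternetSales VALUES
    (20240105, 1, 1200.0), (20240120, 2, 35.0), (20240203, 1, 1150.0);
""")

# One join per dimension, then aggregate the measure at the chosen grain.
monthly_sales = conn.execute("""
    SELECT d.Year, d.Month, p.Category, SUM(f.SalesAmount) AS TotalSales
    FROM FactInternetSales AS f
    JOIN DimDate    AS d ON d.DateKey    = f.DateKey
    JOIN DimProduct AS p ON p.ProductKey = f.ProductKey
    GROUP BY d.Year, d.Month, p.Category
    ORDER BY d.Year, d.Month, p.Category
""").fetchall()
for row in monthly_sales:
    print(row)
```

Note that the category is stored directly in the product dimension instead of in a separate table, which is the kind of denormalization a star schema accepts in exchange for simpler queries. Because the date dimension here carries a Day column, the same fact table could also be summarized by day; without it, monthly totals would be the finest grain available.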

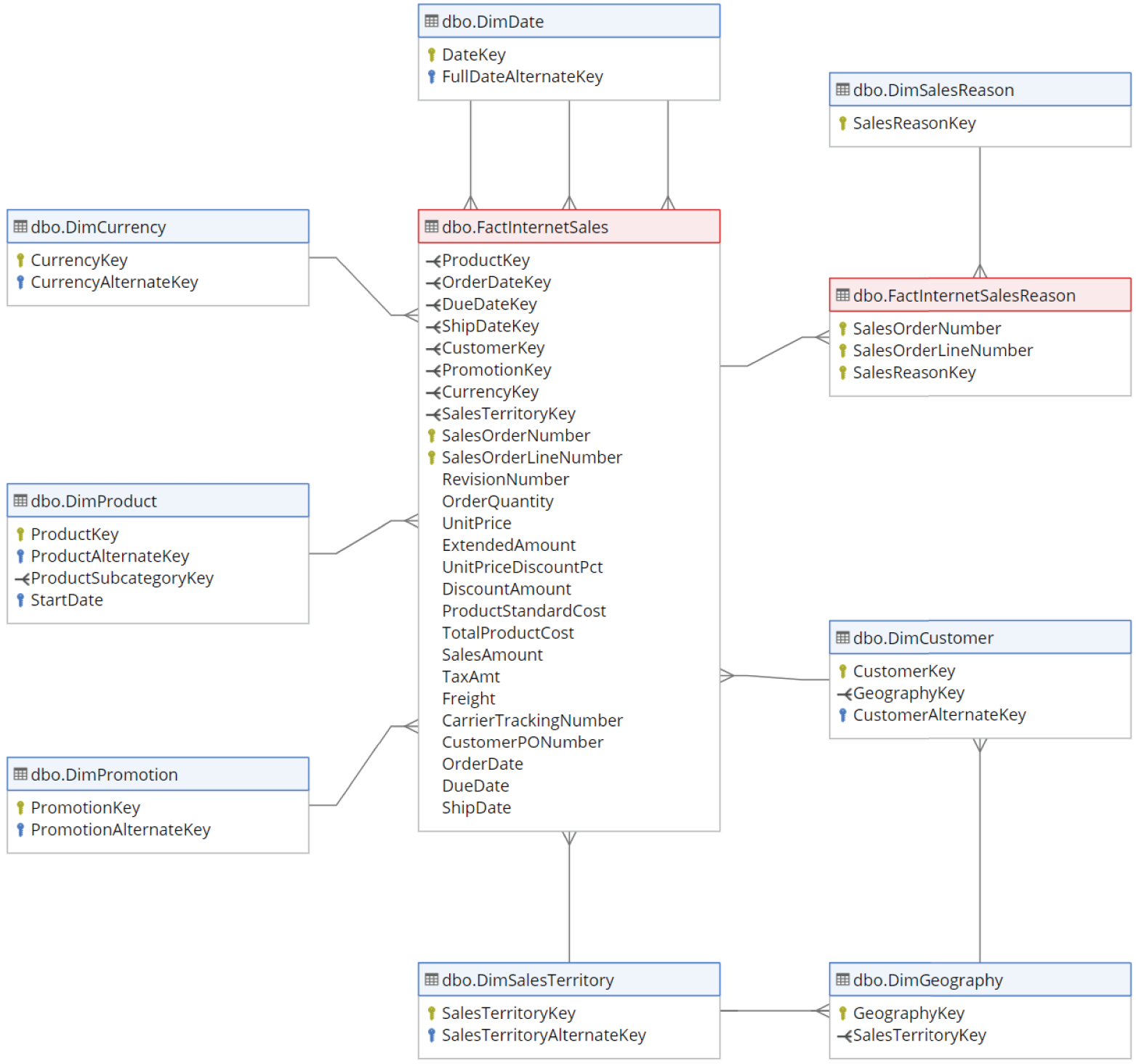

Figure 2.2 is a partial example of the AdventureWorks DW star schema, focusing on dimensions and facts related to Internet sales for products manufactured and sold by AdventureWorks. The entire diagram can be found at https://dataedo.com/samples/html/Data_warehouse/doc/AdventureWorksDW_4/modules/Internet_Sales_101/module.html.

FIGURE 2.2 Star schema

This diagram shows the relationship between the nouns involved in an online sale and the associated metrics. While not illustrated in the image, if you go to the link in the preceding paragraph, you will find more details on each dimension table and will see that they have many columns that provide high granularity for the sales metrics.

OLAP models take star schemas a step further by including business logic and predefined calculations that are ready to be used in reports. This level of abstraction is known as a semantic layer. It allows users to focus on building business-critical reports without needing to write SQL queries that perform aggregations and joins over the underlying data. Semantic layers are typically placed over data pulled from a data warehouse. Along with the business-friendly names that come with a star schema, semantic layers store calculations that allow users to easily filter and summarize data.

Relational Data Structures

Relational databases are composed of several different components. Take, for example, an OLTP database that powers a retail company's point-of-sale (POS) system. This database probably has a customer table that contains rows for every customer that has made a purchase. The table can include columns for each customer's first name, last name, phone number, address, and more. Every column has a predefined data type that inserted values must adhere to. If a customer chooses not to give a piece of information such as their phone number, a null value can be added as a placeholder so that the row maintains the structure of the table's schema. Every row is also assigned an ID that uniquely identifies the customer, also known as a primary key. Some columns are also used to relate to other tables, such as one that stores more information about the products involved in a purchase. A column that references the primary key of another table is known as a foreign key. The customer table can also include indexes that optimize how the data is organized so that queries can quickly retrieve data. These database structures and others are defined in the following sections.

Tables

Tables are structured database objects that store all the data in a database. Data is organized into rows and columns, with rows representing records of data and columns representing a field in the record. Along with user-defined tables that persist data, users can choose to create temporary tables that briefly store data that does not need to be persisted long term. These come in two varieties:

- Local temporary tables are only visible to the instance of a user connection, also known as a session, that they are built in. They are deleted as soon as the session is disconnected.

- Global temporary tables are visible to any user after they are created and are deleted when all user sessions referencing the table are disconnected.
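In T-SQL, local temporary table names start with a single number sign (#) and global temporary table names start with two (##). The session-scoped behavior of a local temporary table can be sketched with SQLite, which offers a comparable `CREATE TEMP TABLE` statement but has no equivalent of a global temporary table. The file name and table name below are hypothetical.

```python
import os
import sqlite3
import tempfile

with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "shop.db")
    session_a = sqlite3.connect(path)   # two connections act as two user sessions
    session_b = sqlite3.connect(path)

    # A temporary table belongs to the session (connection) that created it.
    session_a.execute("CREATE TEMP TABLE StagedOrders (OrderID INTEGER)")
    session_a.execute("INSERT INTO StagedOrders VALUES (1), (2)")
    rows_seen_by_a = session_a.execute(
        "SELECT COUNT(*) FROM StagedOrders"
    ).fetchone()[0]

    try:
        session_b.execute("SELECT COUNT(*) FROM StagedOrders")
        visible_in_b = True
    except sqlite3.OperationalError:    # the other session has no such table
        visible_in_b = False

    # Closing the session discards the temporary table and its rows.
    session_a.close()
    session_b.close()

print(rows_seen_by_a, visible_in_b)
```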

SMP and MPP databases allow users to create partitions on tables to horizontally distribute data across multiple filegroups in a database. This makes large tables easier to manage by allowing users to access individual partitions of data quickly and efficiently while the integrity of the overall table is maintained. MPP systems such as Azure Synapse Analytics dedicated SQL pools take this a step further. Along with being able to partition data across filegroups, MPP systems spread data across multiple distributions on one or more compute nodes. The types of distributed tables available in Azure Synapse Analytics dedicated SQL pools and when to use each are covered in Chapter 5, “Modern Data Warehouses in Azure.”

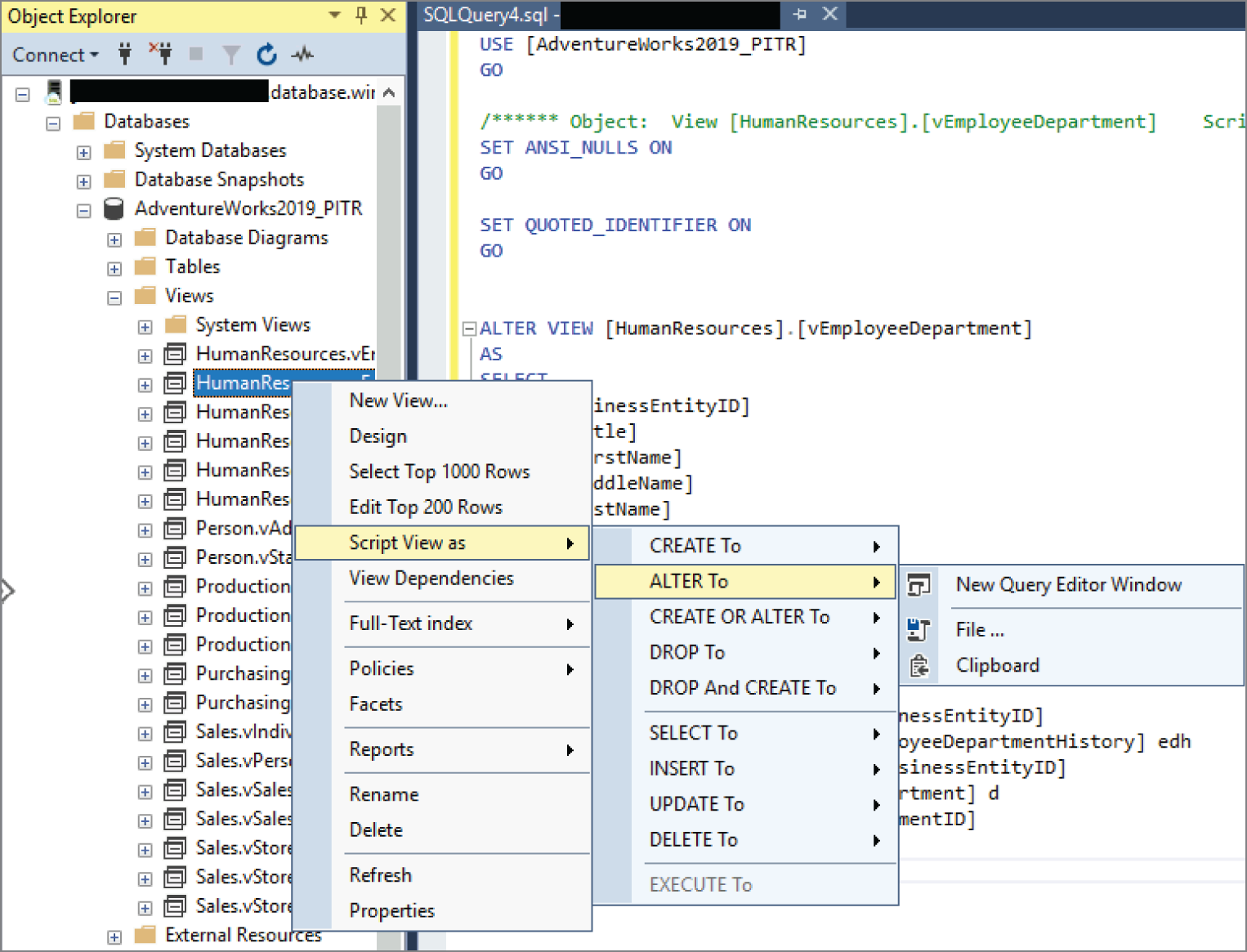

Views

Views are virtual tables whose contents are defined by a query. The rows and columns of data in a view come from the tables referenced in the query that defines the view. They act as a virtual layer to filter and combine data from regularly queried tables. Users can simplify their queries since views handle the complex filtering and joining of data that would normally need to be handled by the user. They are also useful security mechanisms because users can be granted access to a view without needing permissions on the underlying tables that make up the view.

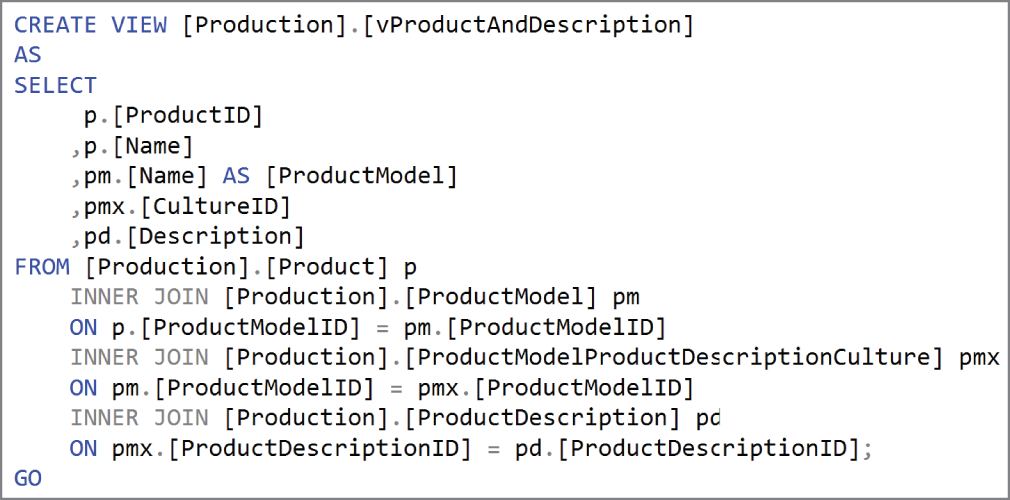

Figure 2.3 is an example of a view definition taken from the AdventureWorks OLTP database. This view queries the ProductModel, ProductModelProductDescriptionCulture, and ProductDescription tables to compile a list of products sold and their descriptions in multiple languages.

FIGURE 2.3 View definition

This view allows users querying product description information to simplify their queries from performing joins on multiple tables to only reading from one database object.
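The idea can be sketched with a cut-down version of the three tables. In the following Python and sqlite3 example, the table names come from the description above, but the columns, the sample rows, and the view name `vProductDescriptions` are assumptions made for illustration and are not the actual AdventureWorks definitions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE ProductModel (ProductModelID INTEGER PRIMARY KEY, Name TEXT);
CREATE TABLE ProductDescription (
    ProductDescriptionID INTEGER PRIMARY KEY, Description TEXT
);
CREATE TABLE ProductModelProductDescriptionCulture (
    ProductModelID INTEGER, ProductDescriptionID INTEGER, CultureID TEXT
);
INSERT INTO ProductModel VALUES (1, 'Mountain-100');
INSERT INTO ProductDescription VALUES (10, 'Top-of-the-line bike'),
                                      (11, 'Velo haut de gamme');
INSERT INTO ProductModelProductDescriptionCulture VALUES (1, 10, 'en'), (1, 11, 'fr');

-- The view hides the two joins behind a single queryable object.
CREATE VIEW vProductDescriptions AS
SELECT pm.Name AS ProductModel, pmx.CultureID, pd.Description
FROM ProductModel AS pm
JOIN ProductModelProductDescriptionCulture AS pmx
    ON pmx.ProductModelID = pm.ProductModelID
JOIN ProductDescription AS pd
    ON pd.ProductDescriptionID = pmx.ProductDescriptionID;
""")

french = conn.execute(
    "SELECT ProductModel, Description FROM vProductDescriptions WHERE CultureID = 'fr'"
).fetchall()
print(french)
```

The final query reads from one object and applies one filter, while the joins stay inside the view definition.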

Materialized views are a special type of view that can improve the performance of complex analytical queries issued against large data warehouse datasets. Unlike regular views, whose results are generated each time the view is used, materialized views are preprocessed and stored in the data warehouse. The data stored in a materialized view is updated as it is updated in the underlying tables. Materialized views that are defined by complex analytical queries improve performance and reduce the amount of time required to prepare data for analysis by pre-aggregating data and storing it in a manner that is ready to be used in reports.

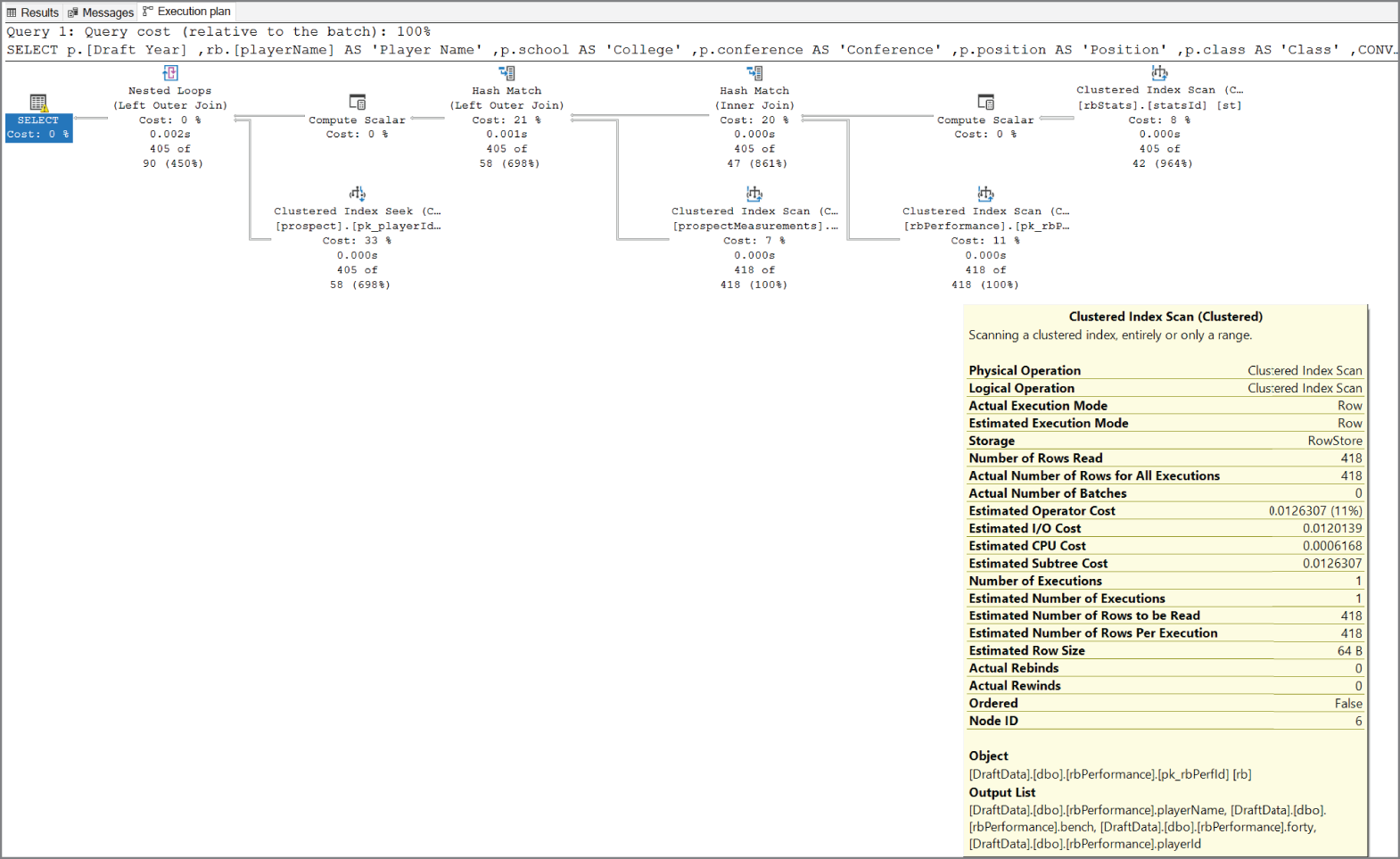

Indexes

Consider the index at the end of this book. Its purpose is to sort keywords and provide each keyword's location in the book. Database indexes work very similarly in that they sort a list of values and provide pointers to the physical locations of those values. Ideally, indexes are designed to optimize the way data is stored in database tables to best serve the types of queries that are issued to them.

Depending on the workload, indexes physically store data in a row-wise format (rowstore) or a column-wise format (columnstore). If queries are searching for values, also known as seeks, or for a small range of values, then rowstore indexes such as clustered and nonclustered indexes are ideal. On the other hand, columnstore indexes are best for database tables that store data that is commonly scanned and aggregated. The following are descriptions of the three commonly used types of indexes:

- Clustered indexes physically sort and store a table's rows based on the values of the index key. There can only be one clustered index per table because the clustered index determines the physical order of the data. Columns that include mostly unique values are ideal candidates for clustered indexes. For this reason, SQL Server creates a clustered index on primary key columns by default.

- Nonclustered indexes contain pointers to where data exists. There can be more than one nonclustered index on a table, and each one can be composed of multiple columns depending on the nature of the queries issued to the database. For example, queries that return data based on specific filter criteria can benefit from a nonclustered index on the columns being filtered. The nonclustered index allows the database engine to quickly find the data that matches the filter criteria.

- Columnstore indexes use column-based storage to optimize data held in a data warehouse. Instead of physically storing data in a row-wise format like that of a clustered or nonclustered index, columnstore indexes store data in a column-wise format. This provides a high level of compression and is optimal for analytical queries that perform aggregations over large amounts of data.
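The effect of a secondary index on a filtered query can be observed by comparing query plans before and after creating it. The sketch below uses Python's sqlite3 module. SQLite does not distinguish clustered, nonclustered, and columnstore indexes the way SQL Server does, so treat this only as an illustration of the seek-versus-scan idea behind a nonclustered index. The table, data, and index name are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Customers (CustomerID INTEGER PRIMARY KEY, LastName TEXT, City TEXT)"
)
conn.executemany(
    "INSERT INTO Customers (LastName, City) VALUES (?, ?)",
    [(f"Name{i}", f"City{i % 10}") for i in range(1000)],
)

query = "SELECT * FROM Customers WHERE LastName = 'Name42'"


def plan(sql):
    # The last column of each EXPLAIN QUERY PLAN row is a readable description.
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


plan_before = plan(query)   # without an index, every row has to be scanned
conn.execute("CREATE INDEX IX_Customers_LastName ON Customers (LastName)")
plan_after = plan(query)    # with the index, the engine can search it directly

print(plan_before)
print(plan_after)
```

The exact wording of the plan text varies between SQLite versions, but the first plan should describe a scan of the table and the second a search that uses `IX_Customers_LastName`.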

Proper index design can be the difference between a poorly performing database and one that runs like a charm. While index design best practices are out of scope for this book, I recommend the following article for guidelines on choosing an index strategy: https://docs.microsoft.com/en-us/sql/relational-databases/sql-server-index-design-guide?view=sql-server-ver15.

Stored Procedures

Stored procedures are groups of one or more T-SQL statements that perform actions on data in a database. They can be executed manually or via an external application (e.g., custom .NET application, Azure Data Factory). They can also be scheduled to run at predetermined periods of time with a SQL Server Agent job, such as every hour or every night at midnight. Stored procedures can accept input parameters and return multiple values as output parameters to the application calling them.

Code that is frequently used to perform database operations is an ideal candidate to be encapsulated in stored procedures. This eliminates the need to rewrite the same code repeatedly, which also reduces the chances of errors from code inconsistency. The application tier is also simplified since applications will only need to execute the stored procedure instead of needing to maintain and run entire blocks of T-SQL code.

Functions

Functions are like stored procedures in that they encapsulate commonly run code. The major difference between a user-defined function in SQL and a stored procedure is that functions must return a value. Stored procedures can be used to make changes to data without ever returning a response to the user running the stored procedure. Functions, on the other hand, only return data, which is typically the result of a complex calculation. Functions accept parameters and return either a single scalar value or a result set.
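The way a scalar function is called inline within a query can be sketched as follows. SQLite has no `CREATE FUNCTION` statement or stored procedures, so this example registers a Python function through the sqlite3 module instead. The mechanism differs from a T-SQL user-defined function, and the function name, table, and discount logic are hypothetical.

```python
import sqlite3


def line_total(unit_price, quantity, discount):
    """Scalar function: takes parameters and returns exactly one value."""
    return round(unit_price * quantity * (1 - discount), 2)


conn = sqlite3.connect(":memory:")
conn.create_function("LineTotal", 3, line_total)   # SQL name, argument count, callable
conn.execute("CREATE TABLE OrderLines (UnitPrice REAL, Quantity INTEGER, Discount REAL)")
conn.execute("INSERT INTO OrderLines VALUES (10.0, 3, 0.0), (20.0, 2, 0.5)")

# The function is evaluated once per row and only returns data; it changes nothing.
totals = [row[0] for row in conn.execute(
    "SELECT LineTotal(UnitPrice, Quantity, Discount) FROM OrderLines ORDER BY rowid"
)]
print(totals)
```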

Triggers

Triggers are groups of T-SQL statements that are executed automatically in response to a variety of events. These events can be data definition language (DDL), data manipulation language (DML), or logon related. Triggers are typically used when you want to do the following:

- Prevent certain changes to columns in tables.

- Perform an action based on a change to database schemas or underlying data.

- Log changes to the database schema.

- Enforce referential integrity throughout the database.
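Two of the uses listed above, preventing a change and performing an action in response to one, can be sketched with DML triggers. The example below uses SQLite through Python's sqlite3 module. SQLite's trigger syntax differs from T-SQL (for instance, it offers BEFORE triggers and has no DDL or logon triggers), and the tables and trigger names are invented for this illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Products (ProductID INTEGER PRIMARY KEY, Name TEXT, ListPrice REAL);
CREATE TABLE PriceAudit (ProductID INTEGER, OldPrice REAL, NewPrice REAL);

-- Prevent a change: reject any update that sets a negative price.
CREATE TRIGGER trg_block_negative_price
BEFORE UPDATE OF ListPrice ON Products
WHEN NEW.ListPrice < 0
BEGIN
    SELECT RAISE(ABORT, 'ListPrice cannot be negative');
END;

-- Perform an action on change: log every successful price update.
CREATE TRIGGER trg_log_price_change
AFTER UPDATE OF ListPrice ON Products
BEGIN
    INSERT INTO PriceAudit VALUES (OLD.ProductID, OLD.ListPrice, NEW.ListPrice);
END;

INSERT INTO Products VALUES (1, 'Helmet', 35.0);
""")

conn.execute("UPDATE Products SET ListPrice = 30.0 WHERE ProductID = 1")
try:
    conn.execute("UPDATE Products SET ListPrice = -5.0 WHERE ProductID = 1")
    negative_blocked = False
except sqlite3.DatabaseError:       # raised by RAISE(ABORT, ...) in the trigger
    negative_blocked = True

audit_rows = conn.execute("SELECT * FROM PriceAudit").fetchall()
current_price = conn.execute("SELECT ListPrice FROM Products").fetchone()[0]
print(negative_blocked, audit_rows, current_price)
```

Only the valid update reaches the audit table; the rejected update leaves both the product row and the audit log unchanged.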

Relational Database Offerings in Azure

Until recently, most organizations hosted their database systems in on-premises datacenters that they owned or leased. They were responsible for applying updates to the database software and had to make sure that the hardware hosting the databases was properly maintained. Business continuity aspects such as database backup management, high availability (HA), and disaster recovery (DR) standards would need to be implemented to ensure minimal downtime in case of database corruption or server downtime. Scalability is also a concern, as database servers that outgrow compute allocated to them require someone to physically add compute to the server. All these items require additional hardware and levels of expertise from employees, thus increasing the total cost of ownership (TCO) for a database.

Cloud-based hosting has fundamentally shifted how organizations calculate TCO for their relational databases. Many operations that surround database upgrades or patching, business continuity, and scalability are handled by the cloud company. This allows organizations to shift their focus from maintaining hardware and managing business continuity concerns to the needs of the database users. Provisioning and scaling a database is also much easier as almost every requirement is preconfigured. In short, databases can be easily deployed in Azure with the click of a button and scaled up and down with a slider (more on this later in the chapter).

Before getting into the different relational database offerings in Azure, it's important to understand the three types of cloud computing services. Having a foundational knowledge of how each of these is implemented is paramount to understanding the responsibilities and the TCO for hosting a database on Azure.

- Infrastructure as a Service (IaaS) offerings in Azure provide customers with the ability to create virtual infrastructure that mirrors an on-premises environment. IaaS offerings give organizations the ability to easily migrate their on-premises infrastructure to similar IaaS-based offerings in Azure without needing to completely redesign their applications using a cloud native approach. This is a typical first step for moving to the cloud as it allows organizations to offload the management of their hardware to Microsoft using a lift-and-shift strategy. While IaaS deployments allow organizations to no longer worry about maintaining the hardware powering an application, they will still need to manage maintenance at the operating system (OS) and application level. IaaS offerings include virtual machines that host services that would typically be hosted in a customer's on-premises environment, such as SQL Server, and are connected via an Azure Virtual Network (VNet). These services can easily connect to an organization's existing network infrastructure, allowing them to utilize a hybrid cloud strategy.

- Platform as a Service (PaaS) takes IaaS a step further by abstracting the OS and application software from the user. When deploying a PaaS offering, organizations can specify the resources they would like deployed, an initial size and compute tier depending on the intensity of the workload, what Azure region they would like them deployed to, and other optional or service-specific requirements. Azure will then provision the necessary resources to meet those specific requirements. Once deployed, all OS and software maintenance such as business continuity, upgrades, and patches are handled by Azure. This allows organizations to minimize the amount of effort required to maintain these services and instead focus on using them to build solutions that impact the business. Like IaaS offerings, PaaS offerings can also be interconnected via a VNet and connected to an organization's existing on-premises network infrastructure. PaaS services include Azure SQL Database, Azure SQL Managed Instance (MI), and all the open-source relational database offerings that are hosted on Azure.

- Software as a Service (SaaS) offerings represent the highest level of abstraction available to an organization hosting its infrastructure and applications on the cloud. Organizations simply purchase the number of licenses required for the service and then use it. Typical examples of SaaS offerings include Power BI Online and Office 365. None of the relational database offerings discussed in this chapter are SaaS offerings.

Azure SQL

Azure SQL is a broad term used to describe the family of SMP relational database products in Azure that are built upon Microsoft's SQL Server engine. These include one IaaS option with SQL Server on Azure Virtual Machines (VM) and two PaaS options with Azure SQL MI and Azure SQL Database. Azure SQL Database can be broken down even further into two different options: single database and elastic pool. There are also several service tiers available for each offering that best suit different types of workloads. With so many options available, organizations must weigh several factors when deciding which Azure SQL option is the most appropriate for their use cases:

- Cost—All three options include a base price that covers underlying infrastructure and licensing. Each option also includes hybrid licensing benefits that allow organizations to apply on-premises SQL Server licenses to reduce the cost of the service. Keep in mind that hosting a database in a virtual machine will require additional administration overhead that the PaaS options don't require.

- Service-level agreement (SLA)—All three options provide high, industry-standard SLAs. PaaS options guarantee a 99.99 percent SLA, while IaaS guarantees a 99.95 percent SLA for infrastructure, meaning that organizations will need to implement additional mechanisms to ensure database availability. You can refer to the following documentation for more information regarding Azure SQL SLAs:

https://docs.microsoft.com/en-us/azure/azure-sql/azure-sql-iaas-vs-paas-what-is-overview#service-level-agreement-sla.

- Migration timeline—This is a factor that must be considered if an organization is migrating to Azure as opposed to building an application from scratch with a cloud native design. Organizations may favor one option over another depending on how long the timeline is. For example, databases can be migrated to a virtual machine in a relatively short amount of time because virtual machines can host the same version of SQL Server as an on-premises SQL Server instance. Azure SQL MI also provides near feature parity with an on-premises SQL Server, but some changes may need to be applied, especially if the database that will be migrated is hosted on an older version of SQL Server.

- Administration—Azure SQL Database and Azure SQL MI minimize overhead by managing typical database administration activities such as database backups, patches, version upgrades, HA, and threat protection. However, this also limits the range of custom administrative activities that can be performed.

- Feature parity—Because Azure SQL Database abstracts the OS and database server components from the user, there are certain features of SQL Server that are not supported in Azure SQL Database. These include cross-database joins, CLR integration, and SQL Server Agent. Azure SQL MI is nearly 100 percent feature compatible with SQL Server but still maintains a few differences such as features that rely on Windows-related objects. SQL Server on Azure VMs provides 100 percent feature parity because it is the same as a SQL Server instance hosted on an on-premises virtual machine. However, because of potential SQL Server version differences, there could be feature differences when migrating from an old version of SQL Server to a newer version of SQL Server on Azure VM or one of the PaaS options. Deprecated features and features that are incompatible with the PaaS options can be discovered using the Data Migration Assistant (DMA). The DMA will be covered in further detail later in this chapter.
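To put the SLA percentages in the list above into concrete terms, the short sketch below converts an availability percentage into the downtime it permits. It assumes a 365-day year purely for illustration; the actual SLA terms define their own measurement period, and the function name is hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def allowed_downtime_minutes(sla_percent: float) -> float:
    """Return the maximum yearly downtime (in minutes) that an SLA permits."""
    unavailable_fraction = (100.0 - sla_percent) / 100.0
    return MINUTES_PER_YEAR * unavailable_fraction


for label, sla in [("PaaS options", 99.99), ("IaaS infrastructure", 99.95)]:
    minutes = allowed_downtime_minutes(sla)
    print(f"{label} at {sla}%: about {minutes:.1f} minutes per year")
```

Under this assumption, a 99.99 percent SLA allows roughly 53 minutes of downtime per year, while 99.95 percent allows roughly 4.4 hours.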

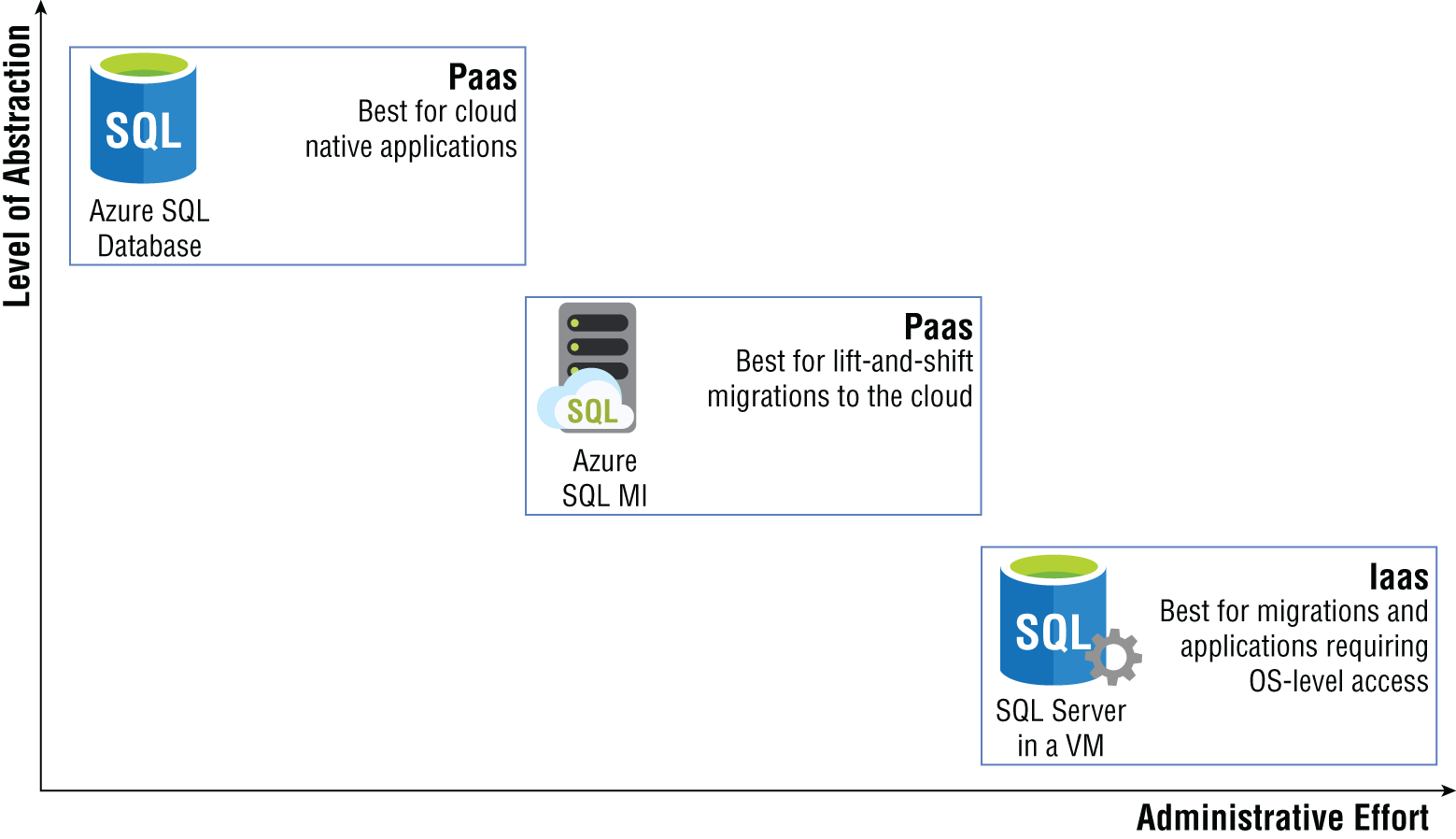

The various Azure SQL offerings come with different levels of abstraction and management. Figure 2.4 illustrates the relationship between abstraction and administrative effort for each option.

FIGURE 2.4 Azure SQL abstraction vs. administrative effort

As seen in the diagram, a SQL Server on Azure VM requires the most administrative effort because it provides full control over the SQL Server instance and the underlying OS. This is ideal for situations that require highly customized OS and/or database images or scenarios requiring very granular control over the SQL Server engine. Azure SQL MI removes the OS layer from the user's point of view but is more like an on-premises SQL Server instance than Azure SQL Database in that it provides a fully isolated environment encapsulated in a VNet and includes system databases. Users hosting their databases on Azure SQL MI still benefit from using a PaaS database in that patching, SQL Server version upgrades, backups, HA, DR, data encryption, auditing, and threat protection are all handled behind the scenes by Microsoft. Azure SQL Database completely abstracts the OS layer and database engine from users. Greenfield solutions that are developed using cloud native best practices typically use Azure SQL Database as their backend relational database.

Ultimately, choosing the right Azure SQL option comes down to the solution requirements and how much control is needed over the OS and database engine. The following sections explore each option in further detail.
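The decision described above can be sketched as a small helper. This is a deliberate simplification that looks only at the two control-related questions; a real decision would also weigh cost, SLA, and migration timeline. The function and parameter names are hypothetical.

```python
def suggest_azure_sql_option(needs_os_control: bool,
                             needs_instance_features: bool) -> str:
    """Suggest an Azure SQL option from two simplified requirements.

    needs_os_control: the solution needs control over the OS or very
        granular control over the SQL Server engine.
    needs_instance_features: the solution relies on instance-scoped
        features such as SQL Server Agent, CLR, or cross-database joins.
    """
    if needs_os_control:
        return "SQL Server on Azure VM"
    if needs_instance_features:
        return "Azure SQL Managed Instance"
    return "Azure SQL Database"


# A legacy application that depends on SQL Server Agent jobs
print(suggest_azure_sql_option(needs_os_control=False,
                               needs_instance_features=True))
```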

SQL Server on Azure Virtual Machine

There are several reasons why an organization would want to migrate its applications to the cloud. Perhaps the most common reason is to offload the maintenance of hardware and networking equipment that it either owns or leases to a cloud provider. Expiring datacenter leases or aging hardware force many companies to rethink how they manage their IT infrastructure. While many organizations will work to modernize their applications to be cloud native, most of them still use legacy applications that rely on features of SQL Server that are not available in the PaaS offerings of Azure SQL. There could also be specific situations that require fine-grained control over the database engine and the OS that it sits on. For these reasons, organizations may decide to migrate their existing SQL Server footprint to Azure SQL's IaaS offering: SQL Server on Azure VMs.

As far as the database engine is concerned, a SQL Server on Azure VM is no different than a SQL Server instance hosted on a physical server in an on-premises environment. This allows developers and database administrators to acclimate quickly to working with SQL Server in Azure. Engineers deploying a SQL Server on Azure VM can choose one of three approaches for doing so:

- Choose one of the available SQL Server VM images from the Azure marketplace. These images allow you to easily deploy a specific version of SQL Server on the OS of your choosing.

- Install your own SQL Server license on a VM. Users with existing VMs in Azure can choose to install SQL Server with an existing license to avoid deploying a new VM.

- Lift-and-shift existing VMs from an on-premises environment to Azure with Azure Migrate. Azure Migrate is a tool that can be used to assess and migrate on-premises infrastructure to Azure. VMs hosting SQL Server can be migrated to Azure using Azure Migrate without needing to deploy a new VM through the Azure Marketplace. More information on migrating VMs to Azure with Azure Migrate can be found at

https://docs.microsoft.com/en-us/azure/migrate/migrate-services-overview#azure-migrate-server-migration-tool.

Taking advantage of the ready-made images available in the Azure Marketplace greatly reduces the amount of time needed to provision a SQL Server VM in Azure. There are two licensing types available for SQL Server VMs: pay-as-you-go and bring your own license (BYOL). Pay-as-you-go simplifies licensing costs by billing you for the per-minute usage of the instance. Table 2.1 outlines the available pay-as-you-go SQL Server images in Azure.

TABLE 2.1 Available Pay-As-You-Go SQL Server images

| Version | Operating System | Edition |
|---|---|---|
| SQL Server 2019 | Windows Server 2019 | Enterprise, Standard, Web, Developer |
| SQL Server 2019 | Ubuntu 18.04 | Enterprise, Standard, Web, Developer |
| SQL Server 2019 | Red Hat Enterprise Linux (RHEL) 8 | Enterprise, Standard, Web, Developer |
| SQL Server 2019 | SUSE Linux Enterprise Server (SLES) v12 SP5 | Enterprise, Standard, Web, Developer |
| SQL Server 2017 | Windows Server 2016 | Enterprise, Standard, Web, Express, Developer |
| SQL Server 2017 | Red Hat Enterprise Linux (RHEL) 7.4 | Enterprise, Standard, Web, Express, Developer |
| SQL Server 2017 | SUSE Linux Enterprise Server (SLES) v12 SP2 | Enterprise, Standard, Web, Express, Developer |
| SQL Server 2017 | Ubuntu 16.04 LTS | Enterprise, Standard, Web, Express, Developer |
| SQL Server 2016 SP2 | Windows Server 2016 | Enterprise, Standard, Web, Express, Developer |
| SQL Server 2014 SP2 | Windows Server 2012 R2 | Enterprise, Standard, Web, Express |
| SQL Server 2012 SP4 | Windows Server 2012 R2 | Enterprise, Standard, Web, Express |
| SQL Server 2008 R2 SP4 | Windows Server 2008 R2 | Enterprise, Standard, Web, Express |

Organizations that have already purchased SQL Server licenses can also apply those licenses to reduce the VM's SQL Server cost component. This is known as bring your own license, or BYOL for short. Table 2.2 outlines the available BYOL SQL Server images in Azure.

TABLE 2.2 Available bring your own license SQL Server images

| Version | Operating System | Edition |
|---|---|---|
| SQL Server 2019 | Windows Server 2019 | Enterprise BYOL, Standard BYOL |
| SQL Server 2017 | Windows Server 2016 | Enterprise BYOL, Standard BYOL |
| SQL Server 2016 SP2 | Windows Server 2016 | Enterprise BYOL, Standard BYOL |
| SQL Server 2014 SP2 | Windows Server 2012 R2 | Enterprise BYOL, Standard BYOL |
| SQL Server 2012 SP4 | Windows Server 2012 R2 | Enterprise BYOL, Standard BYOL |

The available pay-as-you-go and BYOL SQL Server images are liable to change as new versions of SQL Server are introduced and older versions are deprecated. You can stay up to date on the available SQL Server VM images by referring to the tables in the following link: https://docs.microsoft.com/en-us/azure/azure-sql/virtual-machines/windows/sql-server-on-azure-vm-iaas-what-is-overview#get-started-with-sql-server-vms.

VM size and storage configuration must also be considered when creating a SQL Server Azure VM. There are multiple VM sizes available that include different virtual CPU quantities, memory sizes, and different disk sizes. Additional disks can be added to the VM depending on what is hosted in addition to SQL Server. There are also different categories of VM sizes that provide different baselines for performance, including these:

- Memory optimized—These provide stronger memory-to-vCPU ratios and are the Microsoft-recommended choice for SQL Server VMs on Azure.

- General purpose—These provide balanced memory-to-vCPU ratios and best serve smaller workloads such as development and test, web servers, and smaller database servers.

- Storage optimized—These are designed with optimized disk throughput and input-output (I/O) and are strong options for data analytics workloads.

These are general recommendations and should be used with application performance metrics to make the most appropriate VM choice for different workloads. Keep in mind that VMs use a pay-as-you-go cost model and can be stopped when not needed so that you are not charged during those times. However, most SQL Server VMs will need to stay online unless the SQL Server instance is a test instance. Organizations that will be using one or more SQL Server VMs for one or three years can purchase Azure Reserved Virtual Machine Instances. Once applied to a VM, Azure Reserved Virtual Machine Instances discount the VM's compute costs.

Deploying a ready-made SQL Server VM image from the Azure Marketplace will include a default storage configuration for data, log, and tempdb files. While these configurations are optimal for general workloads, many workloads may benefit from different ones. There may also be a need to optimize for cost versus performance for non-production workloads. Regardless of workload type, these are some general checklist items that should be considered when configuring storage for a SQL Server VM on Azure:

- Place data, log, and tempdb files on separate drives.

- Place tempdb on the local SSD drive. This drive is ephemeral and will deallocate resources when the VM is stopped.

- Consider using standard HDD/SSD storage for development and test workloads.

- Use premium SSD disks for data and log files for production SQL Server workloads.

- Use P30 and/or P40 disks for data files to ensure caching support.

- Use P30 through P80 disks for log files.

Collecting storage performance metrics for workloads that will be migrated to Azure will help determine the most appropriate disk configuration. More information on SQL Server on Azure VM storage configurations can be found at https://docs.microsoft.com/en-us/azure/azure-sql/virtual-machines/windows/performance-guidelines-best-practices-storage.
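Part of the storage checklist above can be expressed as a simple review function. The sketch below is illustrative only: `FilePlacement`, `check_storage_layout`, and the disk type labels are hypothetical names, not part of any Azure tool or SDK, and the checks cover only the drive separation, tempdb placement, and premium SSD items.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class FilePlacement:
    drive: str      # drive letter the files live on, e.g. "F"
    disk_type: str  # hypothetical labels: "premium_ssd", "standard_ssd",
                    # "standard_hdd", or "local_ssd"


def check_storage_layout(layout: Dict[str, FilePlacement],
                         production: bool) -> List[str]:
    """Return checklist warnings for a layout keyed by 'data', 'log', 'tempdb'."""
    warnings = []
    drives = [placement.drive for placement in layout.values()]
    if len(set(drives)) < len(drives):
        warnings.append("data, log, and tempdb files should be on separate drives")
    if layout["tempdb"].disk_type != "local_ssd":
        warnings.append("tempdb should be placed on the local SSD drive")
    if production:
        for name in ("data", "log"):
            if layout[name].disk_type != "premium_ssd":
                warnings.append(f"{name} files should use premium SSD disks in production")
    return warnings


layout = {
    "data": FilePlacement("F", "premium_ssd"),
    "log": FilePlacement("G", "premium_ssd"),
    "tempdb": FilePlacement("D", "local_ssd"),
}
print(check_storage_layout(layout, production=True))  # no warnings for this layout
```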

Business Continuity

There are multiple solutions available in Azure to ensure that data hosted on SQL Server VMs is highly available in the event of several outage scenarios, ranging from planned downtime to datacenter-level disasters. These include solutions that provide database backup management at the database level and high availability and disaster recovery (HADR) capabilities at both the VM and database levels.

Azure provides business continuity for disk storage by creating copies of the data stored on disk and storing them on Azure Blob storage. This type of redundancy can be broken down with the following options:

- Locally redundant storage (LRS) creates three copies of the data stored on disk and stores them in the same location in the same Azure region.

- Geo-redundant storage (GRS) stores three copies of the disk data in the same Azure region as the VM and then stores an additional three copies in a separate region.

While these services provide redundancy for data stored on Azure VMs, they should not be relied on as the only business continuity solution for SQL Server data. Database backups should also be taken to protect against application or user errors. Also, GRS does not support storing the data and log files of a database on separate disks. Each disk is copied independently and asynchronously, creating a risk of losing data in the event of an outage.

Organizations can choose to set up their own database backup strategy through maintenance plans that are run as a SQL Server Agent job on a scheduled basis. Backups can be stored on local storage or in Azure Blob storage. Azure also allows organizations to offload this process by using a service called Automated Backup. This service regularly creates database backups and stores them on Azure Blob storage without requiring a database administrator to set up the job on the database engine.

For true database-level HADR, organizations can add databases hosted on SQL Server VMs to a SQL Server Always On availability group. Availability groups, or AGs for short, replicate data from a set of user databases to one or more secondary SQL Server instances that are hosted on different VMs. The VMs, or server nodes, that host the primary and secondary SQL Server instances are clustered at the OS level. The cluster monitors the health of the server nodes and will promote a secondary server node to the primary if the existing primary experiences a failure.

Typical AG configurations include at least one secondary node in the same region as the primary to maintain HA and at least one secondary node in a different region for DR. Database connections will move, or fail over, to the HA node during planned downtime for the primary node. If the primary node and the secondary nodes in the same region as the primary are down at the same time, database connections will fail over to the DR node in the other region.
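The failover preference just described can be modeled in a few lines. This is a simplified sketch of the ordering only (same-region HA node first, then the DR node); an actual cluster also relies on quorum, health detection, and the AG's configured failover modes. The `Node` class and region names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    region: str
    healthy: bool


def pick_failover_target(primary: Node, secondaries: List[Node]) -> Optional[Node]:
    """Prefer a healthy secondary in the primary's region, then any healthy one."""
    healthy = [node for node in secondaries if node.healthy]
    same_region = [node for node in healthy if node.region == primary.region]
    if same_region:
        return same_region[0]             # HA node in the same region
    return healthy[0] if healthy else None  # DR node elsewhere, or nothing left


primary = Node("sql-primary", "region-a", healthy=False)
secondaries = [
    Node("sql-ha", "region-a", healthy=False),  # regional outage took this down too
    Node("sql-dr", "region-b", healthy=True),
]
target = pick_failover_target(primary, secondaries)
print(target.name if target else "no healthy secondary")
```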

AG configurations are not limited to Azure-only VMs. Hybrid scenarios are possible, allowing organizations to add on-premises SQL Server instances to the solution. This requires VPN connectivity between the Azure network that SQL Server Azure VM is in and the on-premises network that the on-premises SQL Server is in. Network requirements for SQL Server VMs on Azure and hybrid scenarios will be discussed in the next section.

Network Isolation

A critical component of any IaaS offering is its ability to be completely self-isolated within a virtual network. Virtual networks in Azure, otherwise known as VNets, provide the backbone for isolating communication between different services. A VNet can include one or more subnets depending on the services that it is hosting. VNets can connect to other Azure VNets using a service called VNet peering as well as connect to on-premises networks through a point-to-site VPN, site-to-site VPN, or an Azure ExpressRoute. Hybrid connections are critical for organizations that have a presence in Azure and continue to maintain some of their applications in their on-premises environment.

VNets enable organizations to block specific IP address ranges and network protocols from being able to access resources connected to them. This includes blocking access to and from the public Internet. Databases hosted on SQL Server VMs on Azure are therefore restricted to only being able to communicate with applications that have been approved by an organization's network security team.
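As a rough illustration of filtering by IP address range, the sketch below uses Python's standard `ipaddress` module to test whether a source address falls inside an approved range. It models only the address check; real network rules also evaluate direction, port, protocol, and rule priority. The CIDR ranges shown are hypothetical examples.

```python
import ipaddress
from typing import List


def is_allowed(source_ip: str, allowed_ranges: List[str]) -> bool:
    """Return True if source_ip falls inside any approved CIDR range."""
    address = ipaddress.ip_address(source_ip)
    return any(address in ipaddress.ip_network(cidr) for cidr in allowed_ranges)


# Hypothetical ranges approved by a network security team
approved = ["10.0.1.0/24", "10.0.2.0/24"]

print(is_allowed("10.0.1.25", approved))    # address inside an approved subnet
print(is_allowed("203.0.113.7", approved))  # public address outside every range
```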

Deploying through the Azure Portal

Deploying services in Azure can be done manually on the Azure Portal or automated using a scripting language (e.g., PowerShell or Bash) or an Infrastructure as Code template. SQL Server on Azure VMs are no different than any other service in this aspect, providing users multiple options for managing the deployment of their SQL Server databases on Azure. This section will cover the steps on how to manually deploy a SQL Server Azure VM through the Azure Portal. See the section “Deployment Scripting and Automation” later in this chapter to learn more about scripting and automating the deployment process for relational databases in Azure.

Use the following steps to create a SQL Server on Azure VM using the Azure Portal:

- Log into portal.azure.com and search for SQL virtual machines in the search bar at the top of the page. Click SQL virtual machines to go to the SQL virtual machines page in the Azure Portal.

- Click Create to start choosing the configuration options for your SQL Server on Azure VM.

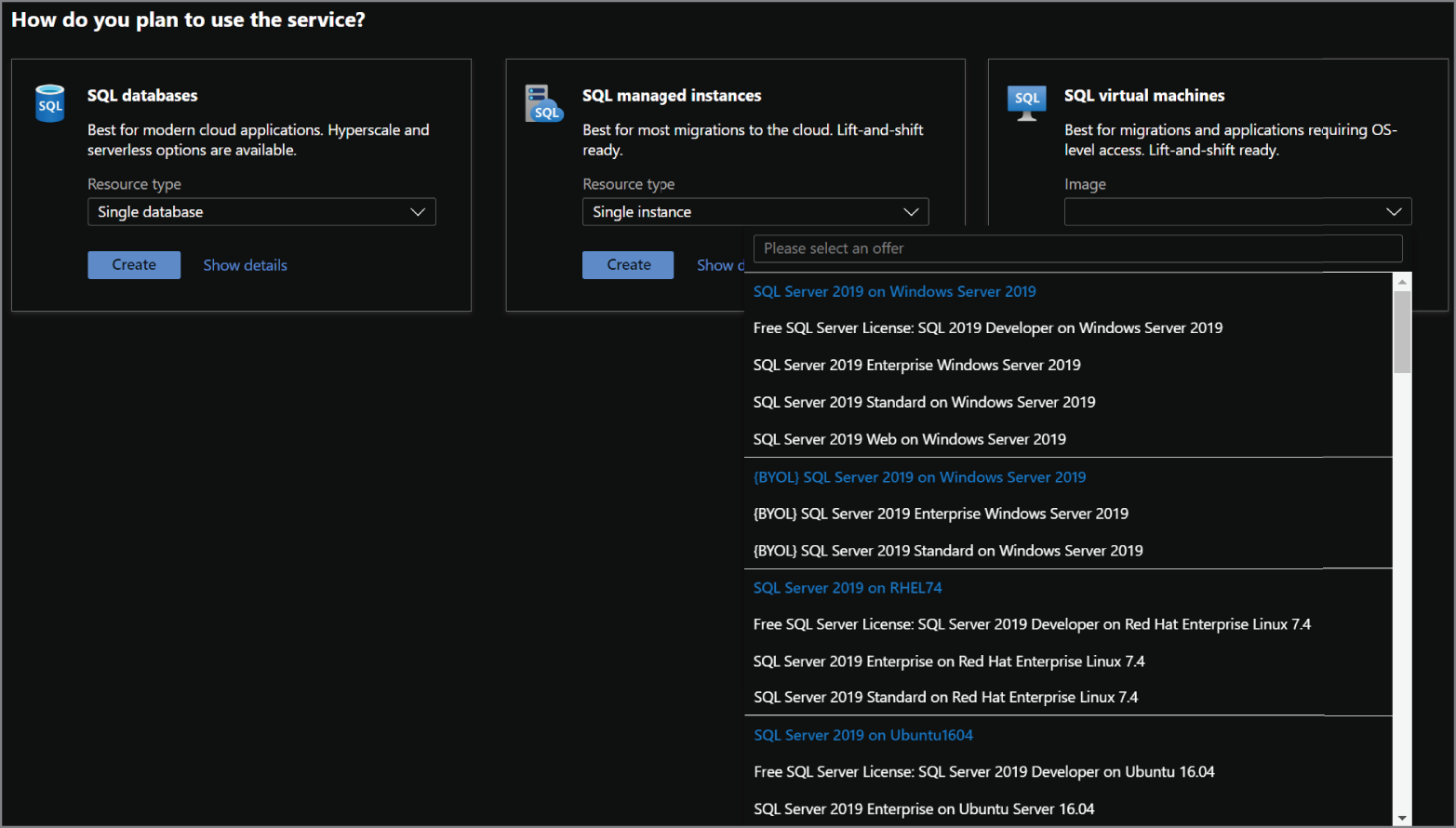

- Navigate to the SQL virtual machines option on the Select SQL deployment option page and select the VM image you would like to deploy. Figure 2.5 shows how this page is displayed and some of the options available after you click the Image drop-down arrow. Once you have selected an image, click the Create button to continue configuring the VM.

FIGURE 2.5 Select a SQL virtual machine image.

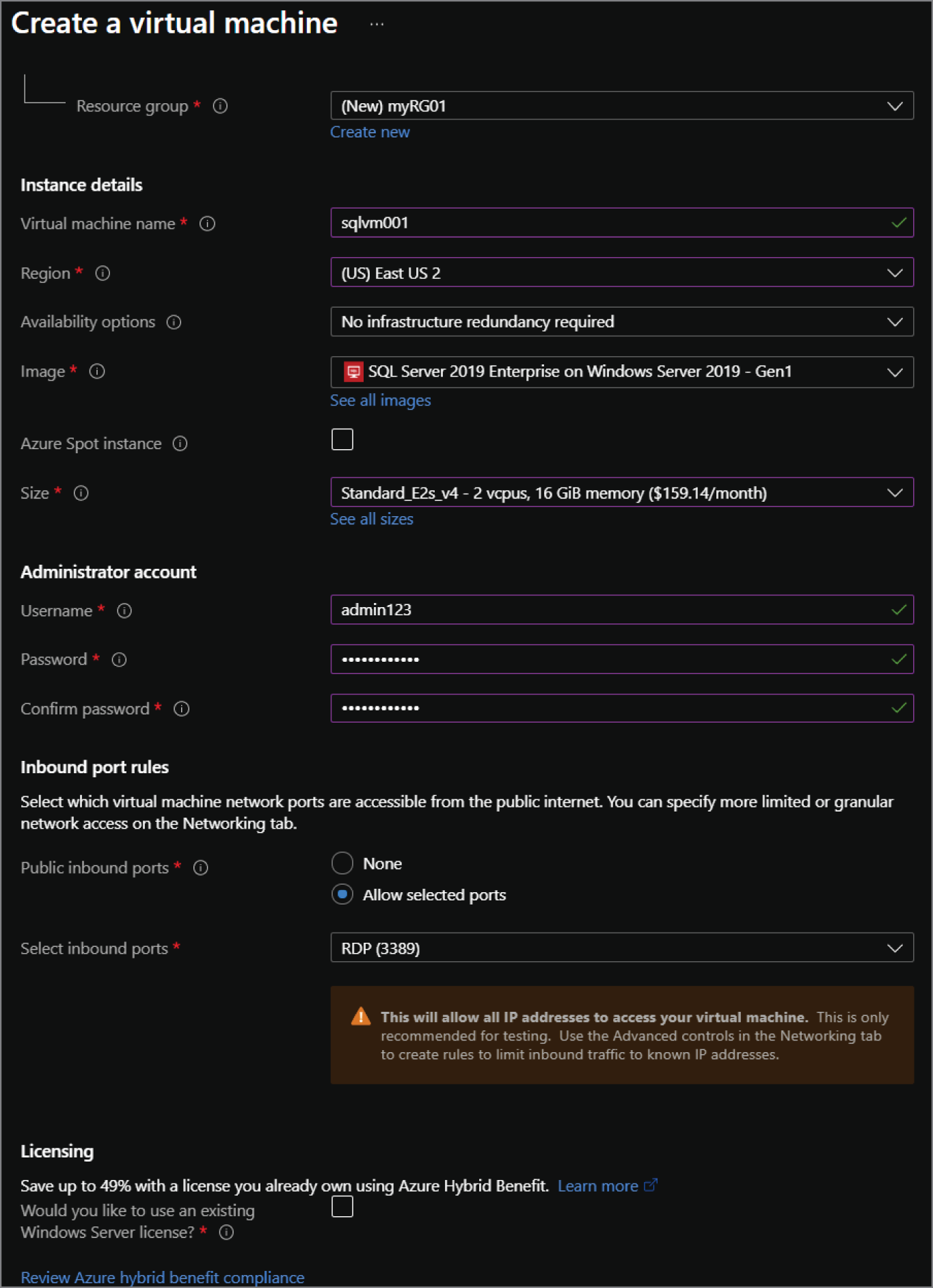

- The Create a virtual machine page includes eight tabs with different configuration options to tailor the SQL Server VM to fit your needs. Let's start by exploring the options available in the Basics tab. Along with the following list that describes each option, you can view a completed example of this tab in Figure 2.6.

- Choose the subscription and resource group that will contain the SQL Server VM. You can create a new resource group on this page if you have not already created one.

- Enter a name for the VM.

- Choose the Azure region you wish to deploy the image to.

- Select whether you would like to enable high availability for the VM by using an Availability Zone or an Availability Set. Note that this is high availability for the VM and not for the SQL Server instance.

- Review the VM image selected and change it if necessary.

- Choose the VM size.

- Set a username and password for the administrator account.

- Set any inbound network ports that you wish to be accessible from the public Internet.

- The last step on this page is optional: choose whether to apply an existing Windows Server license to the VM to reduce its cost.

FIGURE 2.6 Create a SQL virtual machine: Basics tab.

- The Disks tab focuses on the disk configuration for the OS. You can choose to change this from a Premium SSD to another disk type as well as change the encryption type used for the disk.

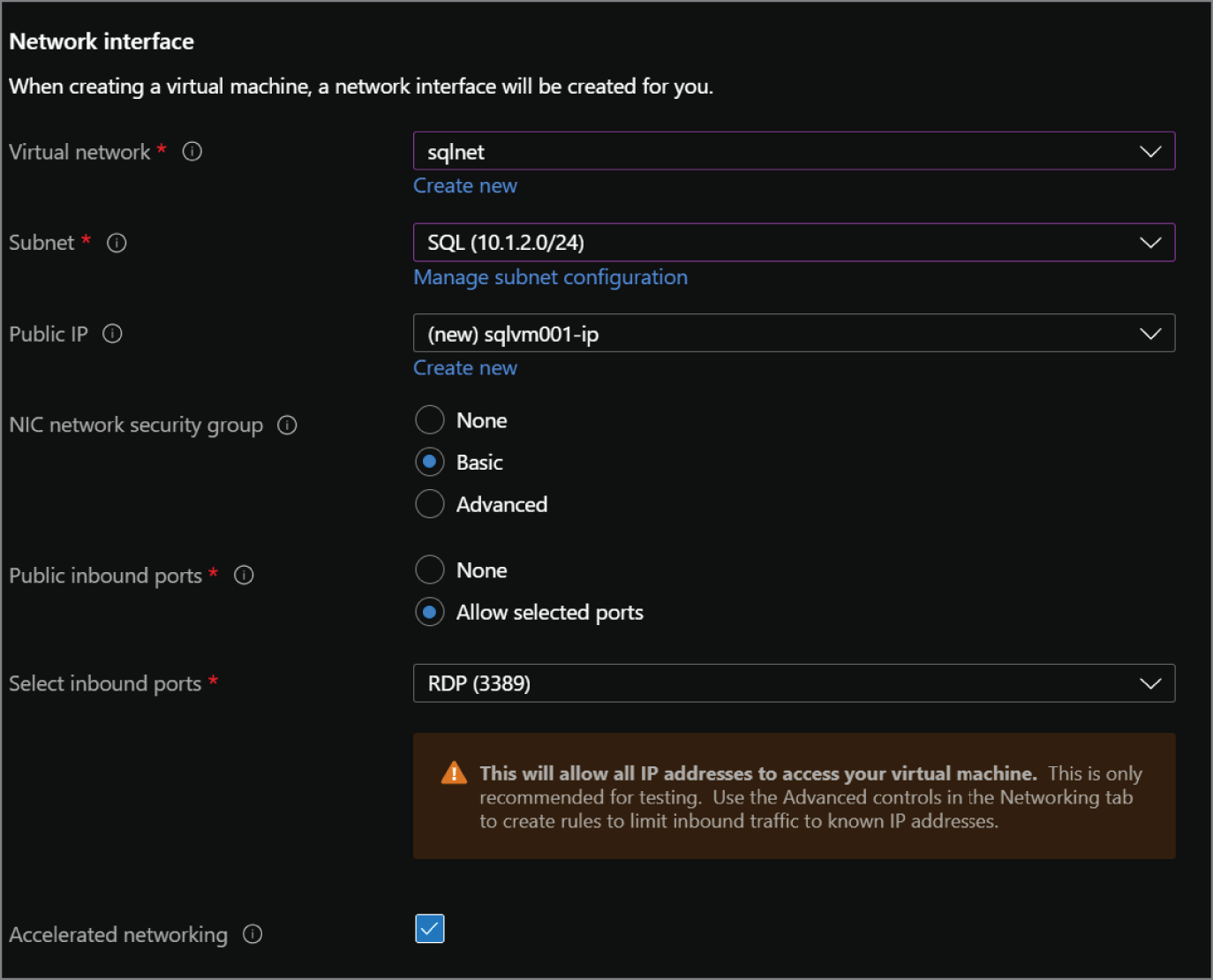

- The Networking tab provides the following network configuration options for the VM. A completed example of this tab can be seen in Figure 2.7.

- Choose the virtual network that the VM will be located in.

- Choose a subnet within that virtual network for the VM.

- Optionally choose a public IP address to be used for communication outside of the virtual network.

- If needed, revise the open inbound ports selected in the Basics tab.

FIGURE 2.7 Create a SQL virtual machine: Networking tab.

- The Management tab allows you to customize features such as Azure Security Center monitoring, enabling automatic shutdown for the VM, and when OS patches should be applied.

- The Advanced tab allows you to add any extensions or scripts to further customize the VM as it is being provisioned.

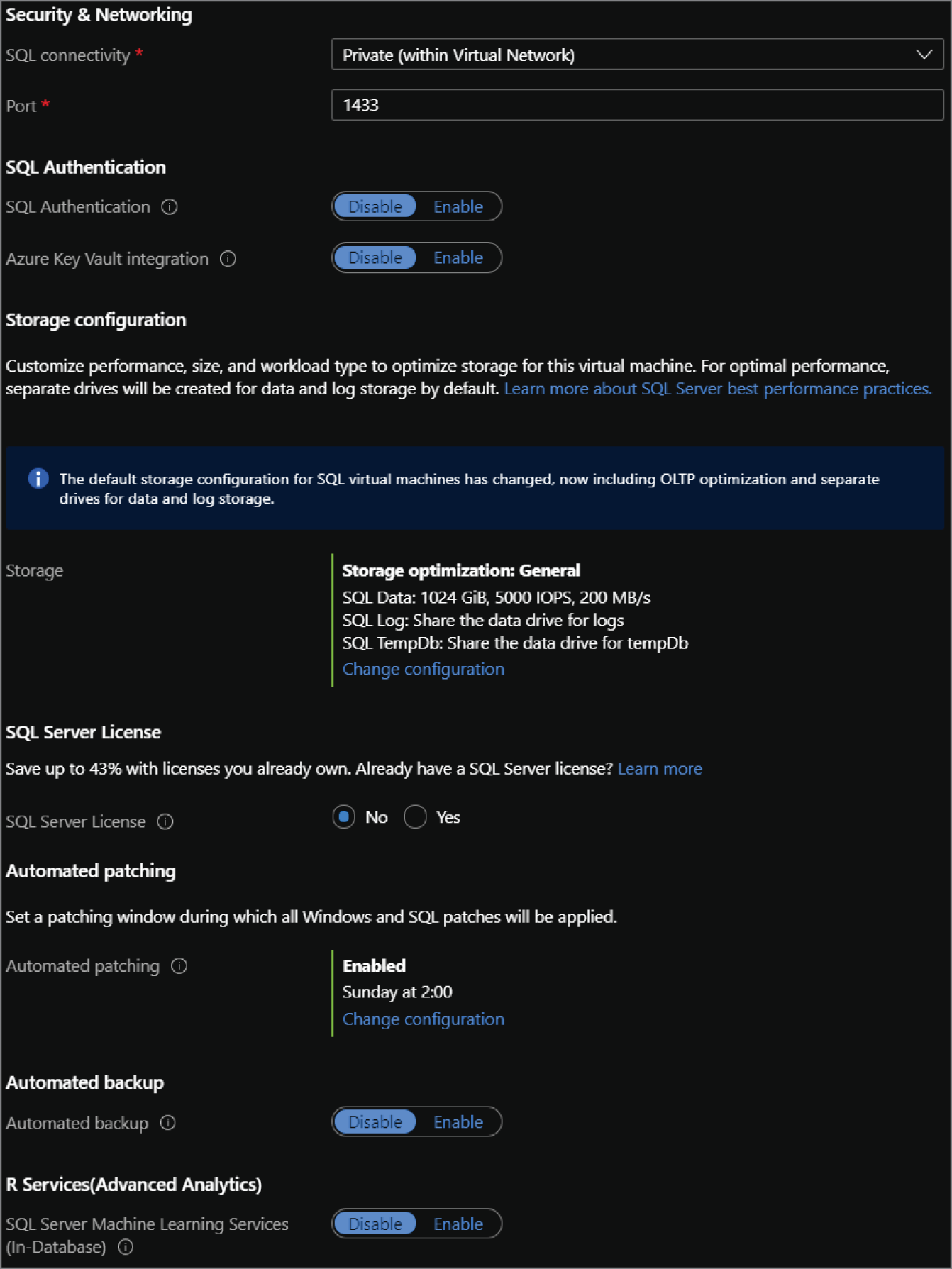

- The SQL Server settings tab provides the following configuration options for the SQL Server instance hosted on the VM. Figure 2.8 illustrates a completed view of this tab.

- Choose the level of network isolation for SQL. The default for this option is limiting communication to applications that are connected to the VNet the VM is in. However, there are options to further lock the SQL Server instance down so that only applications in the VM can communicate with it and to relax security by allowing any application communicating over the public Internet to access it.

- Choose whether you would like to enable SQL Authentication and Azure Key Vault Integration.

- Review the default storage configuration for SQL Server's data, log, and tempdb files. Edit the configuration if the default options do not meet your requirements.

- Choose to apply an existing SQL Server license to reduce the cost of SQL.

- Choose a time window for when patches can be applied to the OS and SQL.

- Choose to enable automated backups if you would like to offload backup management to Azure.

- The last optional setting is for R Services. This will enable users to perform machine learning activities in SQL Server using the R language.

- The Tags tab allows you to place tags on the resources deployed with the SQL Server VM. Tags are used to categorize resources for cost management purposes.

- Finally, the Review + Create tab allows you to review the configuration choices made during the design process. If you are satisfied with the choices made for the VM, click the Create button to begin provisioning the SQL Server VM.
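Before clicking through the tabs, it can help to write the intended choices down in one place. The sketch below is a planning aid only, assuming hypothetical names throughout (`SqlVmPlan`, `SqlConnectivity`, and the example values); it does not call Azure and is not an Azure SDK type.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class SqlConnectivity(Enum):
    LOCAL = "only applications inside the VM"
    PRIVATE = "applications connected to the VNet"  # the default described above
    PUBLIC = "any application over the public Internet"


@dataclass
class SqlVmPlan:
    name: str
    region: str
    image: str
    vm_size: str
    connectivity: SqlConnectivity = SqlConnectivity.PRIVATE
    inbound_ports: List[int] = field(default_factory=list)
    automated_backup: bool = False
    tags: Dict[str, str] = field(default_factory=dict)

    def review(self) -> List[str]:
        """List the choices worth double-checking on the Review + Create tab."""
        notes = []
        if self.connectivity is SqlConnectivity.PUBLIC:
            notes.append("SQL Server is reachable from the public Internet")
        if self.inbound_ports:
            notes.append(f"inbound ports open to the Internet: {sorted(self.inbound_ports)}")
        if not self.automated_backup:
            notes.append("automated backups are off; plan your own backup strategy")
        return notes


plan = SqlVmPlan(
    name="example-sql-vm",              # hypothetical values for illustration
    region="example-region",
    image="SQL Server 2019 on Windows Server 2019",
    vm_size="example memory-optimized size",
    inbound_ports=[3389],
    tags={"cost-center": "example"},
)
for note in plan.review():
    print(note)
```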

Azure SQL Managed Instance

Migrating SQL Server workloads to Azure can provide more benefits than simply offloading hardware management. Organizations can also take advantage of PaaS benefits that remove the overhead of managing a virtual machine, its OS, and the SQL Server instance. However, applications that require instance-scoped features will still need to be able to interact with the SQL Server instance. This leaves database architects with two options: (1) rearchitect the solution to use cloud native technologies in place of instance-scoped features, or (2) migrate to a technology that supports these features. Until a few years ago, this meant that organizations wishing to move to Azure needed either to commit a lot of time to rebuilding the solution or to move to SQL Server on a VM and take on virtual machine and SQL Server–level maintenance such as upgrades. It is for these reasons that Microsoft introduced Azure SQL Managed Instance.

Azure SQL Managed Instance, or Azure SQL MI for short, is a PaaS database offering on Azure. It abstracts the OS but includes a SQL Server instance so that users can continue using their existing SQL Server processes without having to manage hardware or virtual machines. This makes it the ideal solution for customers looking to migrate many databases to Azure with as little effort as possible. Azure SQL MI also includes many system databases such as model, msdb, and tempdb. It can be used to host a distribution database for transactional replication, SSRS databases, and SSIS data catalog databases.

FIGURE 2.8 Create a SQL virtual machine: Settings tab.

The Azure SQL MI database engine uses the latest version of SQL Server Enterprise Edition, with updates and patches applied by Microsoft as they are made available. Azure SQL MI is nearly 100 percent compatible with on-premises SQL Server and offers support for instance-scoped features such as the SQL Server Agent, common language runtime (CLR), linked servers, Database Mail, and distributed transactions. It also includes a native VNet implementation to provide network isolation for the databases it hosts.

Service Tiers

There are two service tiers available for Azure SQL MI:

- General Purpose is designed for applications with typical performance requirements.

- Business Critical is designed for applications with low latency and strict HA requirements. This tier uses a SQL Server Always On availability group for HA and enables one of the secondary nodes to be used for read-only workloads.

Table 2.3 outlines some of the key differences between the two tiers. The descriptions listed are for the Gen5 hardware version of Azure SQL MI.

TABLE 2.3 Azure SQL MI service tier characteristics

| Feature | General Purpose | Business Critical |
|---|---|---|
| Number of vCores | 4, 8, 16, 24, 32, 40, 64, 80 | 4, 8, 16, 24, 32, 40, 64, 80 |
| Max Memory | 20.4–408 GB (5.1 GB/vCore) | 20.4–408 GB (5.1 GB/vCore) |
| Storage Type | High Performance Azure Blob storage | Local SSD storage |
| Max Instance Storage | 2 TB for 4 vCores; 8 TB for other sizes | 1 TB for 4, 8, 16 vCores; 2 TB for 24 vCores; 4 TB for 32, 40, 64, 80 vCores |
| Max Number of Databases per Instance | 100 user databases | 100 user databases |
| Data/Log IOPS | Up to 30–40K IOPS per instance | 16K–320K (4000 IOPS/vCore) |
| Storage I/O Latency | 5–10 ms | 1–2 ms |

More information on the different Azure SQL MI service tiers can be found at https://docs.microsoft.com/en-us/azure/azure-sql/managed-instance/resource-limits#service-tier-characteristics. Each of these service tiers falls under the vCore-based purchasing model and can be scaled up or down in the Azure Portal or through an automation script as workload requirements change.
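The per-vCore figures in Table 2.3 make it easy to work out the limits for a given instance size. The sketch below encodes only the Gen5 values listed in the table; the function names are hypothetical, and current limits should always be confirmed against the documentation linked above.

```python
GB_PER_VCORE = 5.1                     # Max Memory row in Table 2.3
BUSINESS_CRITICAL_IOPS_PER_VCORE = 4000  # Data/Log IOPS row in Table 2.3
VALID_VCORES = (4, 8, 16, 24, 32, 40, 64, 80)


def _check(vcores: int) -> None:
    if vcores not in VALID_VCORES:
        raise ValueError(f"vCores must be one of {VALID_VCORES}")


def max_memory_gb(vcores: int) -> float:
    _check(vcores)
    return round(vcores * GB_PER_VCORE, 1)


def business_critical_iops(vcores: int) -> int:
    _check(vcores)
    return vcores * BUSINESS_CRITICAL_IOPS_PER_VCORE


def max_storage_tb(tier: str, vcores: int) -> int:
    _check(vcores)
    if tier == "General Purpose":
        return 2 if vcores == 4 else 8
    if tier == "Business Critical":
        if vcores <= 16:
            return 1
        return 2 if vcores == 24 else 4
    raise ValueError("tier must be 'General Purpose' or 'Business Critical'")


for vcores in (4, 24, 80):
    print(vcores, max_memory_gb(vcores), business_critical_iops(vcores),
          max_storage_tb("Business Critical", vcores))
```

For example, a 4-vCore instance works out to 20.4 GB of memory and, on Business Critical, 16,000 IOPS, matching the lower bounds in the table.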

The cost for Azure SQL MI can be reduced using a couple of different methods. First, organizations with existing SQL Server licenses can apply them to Azure SQL MI to reduce its cost. If an organization does not have or decides not to use existing licenses, they can choose to purchase reserved capacity. Like Azure Reserved Virtual Machine Instances for SQL Server on Azure VMs, reserved capacity allows organizations to commit to Azure SQL MI for one or three years. To purchase reserved capacity, you will need to specify the Azure region the Azure SQL MI will be deployed to, the service tier, and the length of the commitment.

Network Isolation

An Azure SQL MI is required to be placed inside a VNet upon creation. On top of this requirement, the subnet that the Azure SQL MI is deployed to must be dedicated to hosting one or more Azure SQL MIs. This requirement restricts access to databases hosted on the Azure SQL MI to only applications that can communicate with that VNet. On-premises networks that host applications connecting to Azure SQL MI can use a VPN or Azure ExpressRoute to communicate with the VNet in Azure.

Deploying an Azure SQL MI to a subnet for the first time creates more than just the database engine. Along with the database engine, the deployment will create the following:

- A virtual cluster to host each Azure SQL MI that is deployed to that subnet. An Azure SQL MI is made up of a set of service components that are hosted on a dedicated set of virtual machines that are abstracted from the user and run inside the subnet. Together, these virtual machines form a virtual cluster.

- A network security group (NSG) to control access to the SQL Managed Instance data endpoint by filtering traffic on port 1433 and ports 11000–11999 when SQL Managed Instance is configured for redirect connections. The NSG will be associated with the subnet once it is provisioned.

- A User Defined Route (UDR) table to route traffic that has on-premises private IP ranges as a destination through the virtual network gateway or network virtual appliance (NVA). The UDR table will be associated with the subnet once it is provisioned.

The subnet will also be delegated to the Microsoft.Sql/managedInstances resource provider. See the section “Azure Resource Manager Templates” later in this chapter for more information on resource providers.
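The port filtering performed by the NSG described above can be summarized in a small check. This sketch models only the port numbers named in the text (1433, plus 11000–11999 for redirect connections); it is not how an NSG is actually configured, and the function name is hypothetical.

```python
def is_data_endpoint_port(port: int, redirect_connections: bool) -> bool:
    """Return True if the port is used by the managed instance data endpoint."""
    if port == 1433:
        return True
    # The 11000-11999 range applies only when redirect connections are configured.
    return redirect_connections and 11000 <= port <= 11999


print(is_data_endpoint_port(1433, redirect_connections=False))
print(is_data_endpoint_port(11500, redirect_connections=True))
print(is_data_endpoint_port(11500, redirect_connections=False))
```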

Deploying through the Azure Portal

Use the following steps to create an Azure SQL MI through the Azure Portal:

- Log into portal.azure.com and search for SQL managed instances in the search bar at the top of the page. Click SQL managed instances to go to the SQL managed instances page in the Azure Portal.

- Click Create to start choosing the configuration options for your Azure SQL MI.

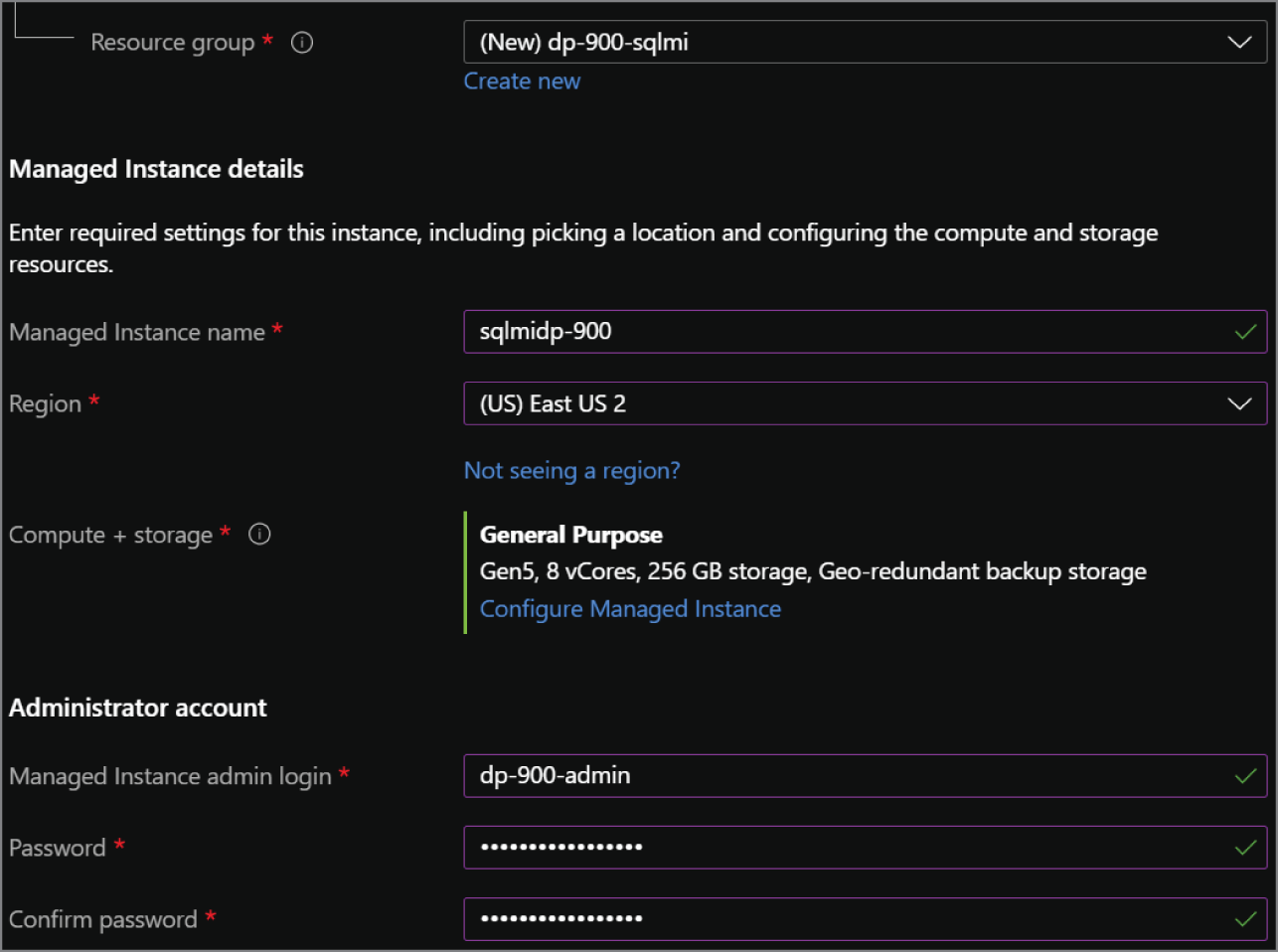

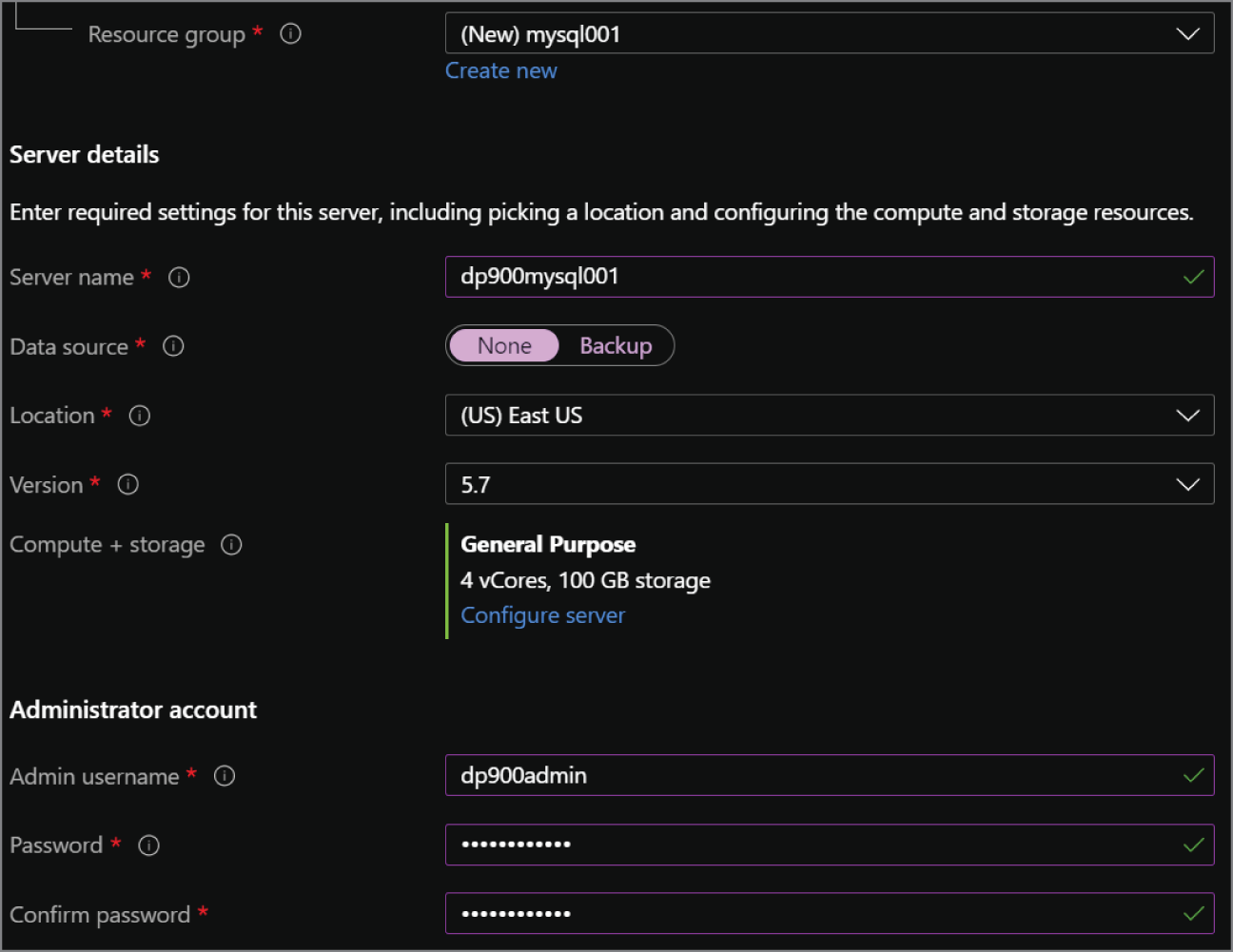

- The Create Azure SQL Database Managed Instance page includes five tabs with different configuration options to tailor the Azure SQL MI to fit your needs. Let's start by exploring the options available in the Basics tab. Along with the following list that describes each option, you can view a completed example of this tab in Figure 2.9.

- Choose the subscription and resource group that will contain the Azure SQL MI and the databases deployed to the instance. You can create a new resource group on this page if you have not already created one.

- Enter a name for the Azure SQL MI.

- Choose the Azure region you wish to deploy the instance to.

- Choose a tier for the instance (i.e., General Purpose or Business Critical), the number of vCores, the storage amount, and the type of redundancy for backup storage.

- Set a username and password for the administrator account.

FIGURE 2.9 Create Azure SQL Database Managed Instance: Basics tab.

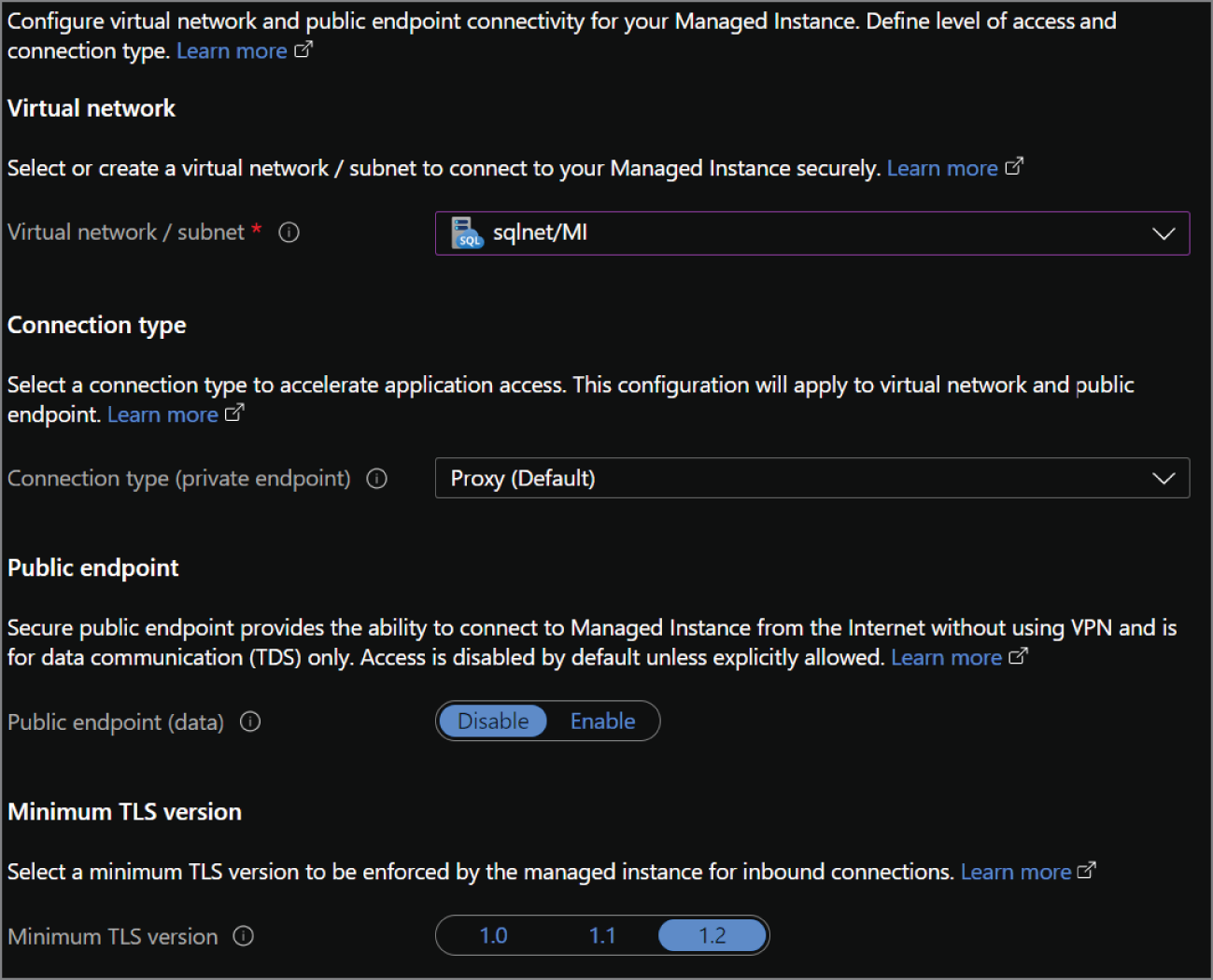

- The Networking tab provides the following network configuration options for the Azure SQL MI. A completed example of this tab can be seen in Figure 2.10.

- Choose the VNet and dedicated subnet that will host the Azure SQL MI.

- Decide whether to enable a public endpoint for the Azure SQL MI. Public endpoints are disabled by default to limit connectivity to applications that can communicate with the VNet that the Azure SQL MI is in.

- Choose the minimum TLS version that will be used to encrypt data in transit for inbound connections. The default TLS version is 1.2 and should be left as is unless there are specific requirements for a lower version.

FIGURE 2.10 Create Azure SQL Database Managed Instance: Networking tab.

- The Additional Settings tab provides options to change the collation, time zone, and maintenance window for the Azure SQL MI's underlying SQL Server database engine. It also includes an option to enable the instance as a secondary in an Azure SQL failover group for DR purposes.

- The Tags tab allows you to place a tag on the Azure SQL MI for cost management.

- Finally, the Review + Create tab allows you to review the configuration choices made during the design process. If you are satisfied with the choices made for the instance, click the Create button to begin provisioning the Azure SQL MI.

Azure SQL Database

Modern applications that are built from the ground up with cloud native best practices rely on database platforms that are flexible and minimize the amount of administrative effort needed to manage the database. Administrators must be able to easily scale performance resources up or down to meet dynamic demand requirements at the most cost-optimal price point. Modern applications are typically designed not to need instance-scoped features that are available in a platform like SQL Server as these features can be implemented using other cloud native offerings. For example, Azure Data Factory, Azure Logic Apps, or Azure Automation can be used to automate when stored procedures or other tasks in the database are run, eliminating the need for SQL Server Agent jobs to perform custom maintenance tasks that are not natively handled by Microsoft.

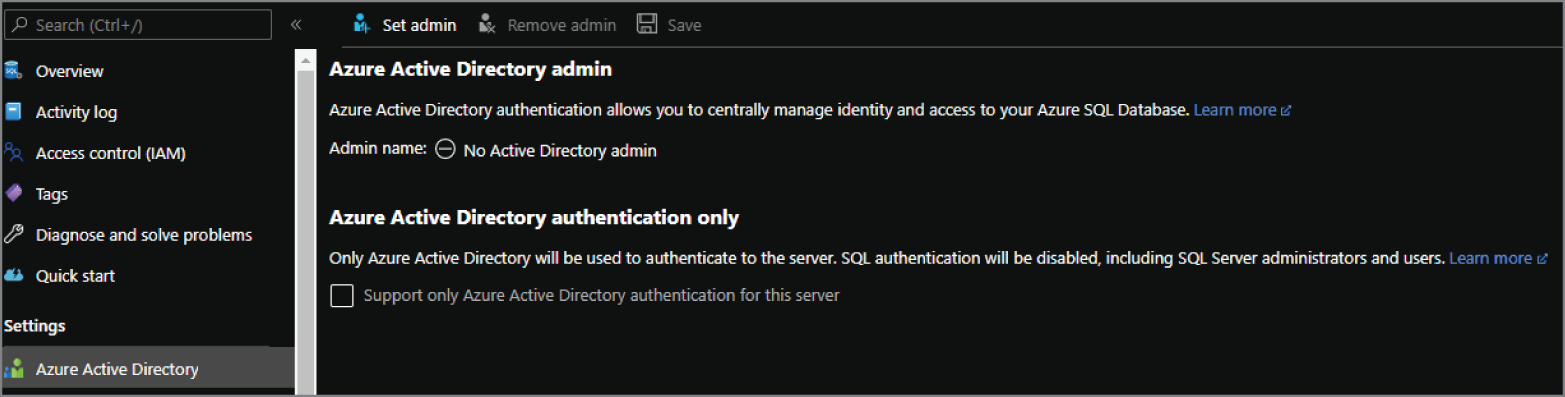

Azure SQL Database is a fully managed PaaS database engine that is designed to serve cloud native applications. It abstracts both the OS and the SQL Server instance so that users can fully focus on application development. Management operations such as upgrades, patches, backups, HA, and monitoring are also handled behind the scenes without requiring any effort from the user. Azure SQL Database comes with a 99.99 percent availability guarantee, regardless of the deployment option or service tier. Just like Azure SQL MI, Azure SQL Database uses the latest version of SQL Server Enterprise Edition. In fact, the newest features of SQL Server are released to Azure SQL Database before they are released to SQL Server.

Even though Azure SQL Database abstracts the physical SQL Server instance from the user, it still exposes a logical server. Unlike a physical server, the logical server does not expose any instance-scoped features. It instead serves as a parent resource for one or more Azure SQL databases, and maintains firewall, auditing, and threat detection rules for the databases it is associated with. The logical server also provides the connection endpoint that applications use to reach each Azure SQL Database associated with it.
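To make the idea of a connection endpoint more concrete, the following Python sketch assembles an ODBC-style connection string for a database on a logical server. It assumes the endpoint follows the `<server-name>.database.windows.net` naming pattern and listens on the default port 1433. The server, database, and login names are hypothetical, and the driver name is an assumption about what is installed on the client machine.

```python
def build_connection_string(server_name, database, user, password,
                            driver="ODBC Driver 18 for SQL Server"):
    """Build an ODBC connection string for a database on a logical server.

    Assumption: the logical server is reachable at
    <server_name>.database.windows.net on port 1433.
    """
    endpoint = f"{server_name}.database.windows.net"
    parts = [
        f"Driver={{{driver}}}",          # braces are part of ODBC syntax
        f"Server=tcp:{endpoint},1433",   # logical server endpoint and port
        f"Database={database}",          # the database selects the target
        f"Uid={user}",
        f"Pwd={password}",
        "Encrypt=yes",                   # encrypt traffic in transit
        "TrustServerCertificate=no",
    ]
    return ";".join(parts) + ";"


# Hypothetical names, for illustration only
print(build_connection_string("contoso-sql", "salesdb", "appuser", "<password>"))
```

Two databases on the same logical server would share the `Server` value and differ only in the `Database` value, which reflects the parent-child relationship described above.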

Azure SQL Database provides two deployment options that allow organizations to optimize database performance and cost:

- A single database is a fully managed, isolated database. This option leverages all the resources (e.g., CPU and memory) allocated to it and is used when a modern application needs a single reliable database.

- An elastic pool is a collection of single databases with a shared set of resources, such as CPU or memory. Elastic pools are useful in scenarios where some databases are used more than others during different time periods. This will reduce the cost of these databases since they will be sharing the same pool of resources.
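The cost argument for elastic pools can be illustrated with a little arithmetic. The Python sketch below uses made-up hourly demand figures (in arbitrary resource units) for three hypothetical databases whose busy periods do not overlap. It compares the capacity needed when each database is sized for its own peak with the capacity needed when one pool is sized for the combined peak. It does not model actual Azure pricing.

```python
# Hypothetical demand per time period, in arbitrary resource units
demand = {
    "emea_db": [80, 70, 20, 10],
    "amer_db": [10, 20, 80, 70],
    "apac_db": [20, 10, 10, 60],
}


def single_database_capacity(demand):
    """Each single database must be sized for its own peak."""
    return sum(max(series) for series in demand.values())


def elastic_pool_capacity(demand):
    """A pool only needs to cover the peak of the combined demand."""
    combined = [sum(period) for period in zip(*demand.values())]
    return max(combined)


print(single_database_capacity(demand))  # 220
print(elastic_pool_capacity(demand))     # 140
```

Because the peaks fall in different periods, the pool needs noticeably less total capacity than the three databases sized individually. If all databases peaked at the same time, the two numbers would be equal and pooling would offer no saving.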

These options can be broken down further by the following purchasing models that are available for Azure SQL Database:

- The DTU-based purchasing model offers a fixed blend of CPU, memory, and IOPS. This blended measure of compute is expressed in database transaction units (DTUs). The DTU-based purchasing model comes with a fixed amount of storage that varies for each service tier.

- The vCore-based purchasing model lets organizations choose how many virtual cores (vCores) they would like allocated. Service tiers using the vCore-based purchasing model allocate a fixed amount of memory per vCore that varies based on the hardware generation and compute option used. This purchasing model allows organizations to apply their existing SQL Server licenses to reduce the overall cost of the database. Reserved capacity is also exclusively available for the vCore-based purchasing model, allowing organizations to commit to Azure SQL Database for one or three years at a discounted rate. The vCore-based purchasing model provides two options for compute:

- Provisioned compute allows organizations to deploy a specific service tier with a set amount of compute resources. Provisioned compute can be dynamically scaled manually or through an automation script.

- Serverless compute allows organizations to specify a minimum and maximum vCore limit for a database. Databases configured to use serverless compute will automatically scale based on workload demand. Serverless compute also automatically pauses databases during inactive periods and resumes them when activity returns, which cuts back on compute costs. This option is only available for single databases.

Deciding on which purchasing model to choose comes down to how much control over compute resources you would like to have. The DTU-based purchasing model offers a fixed combination of resources that allows organizations to start developing very quickly. The vCore-based purchasing model allows organizations to choose the amount of compute resources, or a range of compute resources in the case of serverless. This model also includes a more extensive selection of storage sizes as well as more cost-saving options with reserved capacity or existing licenses.
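The behavior of serverless compute described earlier can be sketched as a small simulation. The Python function below is a deliberately simplified model, not the actual billing algorithm: it assumes compute is billed per interval, that usage is clamped between the configured minimum and maximum vCores while the database is online, and that the database pauses after a configurable number of consecutive idle intervals. The parameter names are hypothetical.

```python
def billed_vcores(demand, min_vcores, max_vcores, pause_after_idle):
    """Return the vCores billed in each interval of a demand series.

    demand: vCores the workload asks for per interval (0 means no activity).
    pause_after_idle: consecutive idle intervals tolerated before auto-pause.
    """
    billed = []
    idle_streak = 0
    for wanted in demand:
        if wanted == 0:
            idle_streak += 1
        else:
            idle_streak = 0  # activity resumes a paused database
        if idle_streak > pause_after_idle:
            billed.append(0.0)  # paused: no compute is billed
        else:
            # online: scale within the configured vCore range
            billed.append(min(max(wanted, min_vcores), max_vcores))
    return billed


usage = billed_vcores([2, 6, 0, 0, 0, 0, 1], min_vcores=0.5,
                      max_vcores=4, pause_after_idle=2)
print(usage)  # [2, 4, 0.5, 0.5, 0.0, 0.0, 1]
```

In this example the request for 6 vCores is capped at the maximum of 4, the first two idle intervals are billed at the minimum, and compute charges stop once the database pauses.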

Service Tiers

Azure SQL Database service tiers are different for each purchasing model. The DTU-based purchasing model offers Basic, Standard, and Premium tiers. Table 2.4 lists some of the common characteristics of these tiers.

TABLE 2.4 DTU-based purchasing model service tier characteristics

| Characteristic | Basic | Standard | Premium |
|---|---|---|---|
| DTUs | 5 | S0: 10, S1: 20, S2: 50, S3: 100, S4: 200, S6: 400, S7: 800, S9: 1600, S12: 3000 | P1: 125, P2: 250, P4: 500, P6: 1000, P11: 1750, P15: 4000 |
| Included Storage | 2 GB | 250 GB | P1–P6: 500 GB; P11 and above: 4 TB |
| Maximum Storage | 2 GB | S0–S2: 250 GB; S3 and above: 1 TB | P1–P6: 1 TB; P11 and above: 4 TB |
| Maximum Backup Retention | 7 days | 35 days | 35 days |
| CPU | Low | Low, Medium, High | Medium, High |
| IOPS | 1–4 IOPS per DTU | 1–4 IOPS per DTU | >25 IOPS per DTU |
| IO Latency | 5 ms (read), 10 ms (write) | 5 ms (read), 10 ms (write) | 2 ms (read/write) |
| Columnstore Indexes | N/A | S3 and above | Supported |
| In-Memory OLTP | N/A | N/A | Supported |
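As an illustration of how Table 2.4 can guide a sizing decision, the following Python sketch encodes the DTU and maximum storage values from the table and returns the service objectives that satisfy a set of requirements. The function name and parameters are hypothetical, and the list is ordered as in the table rather than by price, so the first match is not necessarily the cheapest option.

```python
# (service objective, DTUs, maximum storage in GB), taken from Table 2.4
SERVICE_OBJECTIVES = [
    ("Basic", 5, 2),
    ("S0", 10, 250), ("S1", 20, 250), ("S2", 50, 250),
    ("S3", 100, 1024), ("S4", 200, 1024), ("S6", 400, 1024),
    ("S7", 800, 1024), ("S9", 1600, 1024), ("S12", 3000, 1024),
    ("P1", 125, 1024), ("P2", 250, 1024), ("P4", 500, 1024),
    ("P6", 1000, 1024), ("P11", 1750, 4096), ("P15", 4000, 4096),
]


def candidates(min_dtus, min_storage_gb, in_memory_oltp=False, columnstore=False):
    """List service objectives that meet the stated requirements."""
    result = []
    for name, dtus, max_storage_gb in SERVICE_OBJECTIVES:
        is_premium = name.startswith("P")
        is_s3_plus = name.startswith("S") and dtus >= 100
        if dtus < min_dtus or max_storage_gb < min_storage_gb:
            continue
        if in_memory_oltp and not is_premium:
            continue  # In-Memory OLTP is Premium only
        if columnstore and not (is_premium or is_s3_plus):
            continue  # columnstore needs S3 and above, or Premium
        result.append(name)
    return result


print(candidates(40, 500))                       # starts with S3
print(candidates(100, 100, in_memory_oltp=True)) # Premium objectives only
```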

The vCore-based purchasing model offers the following three service tiers:

- General Purpose is used for most business workloads. This tier offers balanced compute and storage options.

- Business Critical is used for business applications that require high I/O performance. It is also the best option for applications that require high resiliency to outages by leveraging a SQL Server Always On availability group for HA.

- Hyperscale is used for very large OLTP databases (>4 TB) and can automatically scale storage and compute. Hyperscale databases use local SSDs for local buffer-pool cache and data storage. Long-term data storage is done with remote storage.

Table 2.5 lists the common characteristics for the vCore-based purchasing model service tiers:

TABLE 2.5 vCore-based purchasing model service tier characteristics

| Characteristic | General Purpose | Business Critical | Hyperscale |
|---|---|---|---|
| Storage | Uses remote storage. Provisioned compute: 5 GB–4 TB; serverless compute: 5 GB–4 TB | Uses local SSD storage. Provisioned compute: 5 GB–4 TB | Supports up to 100 TB |
| Availability | 1 replica, no read-scale replicas | 3 replicas, 1 read-scale replica | 1 read-write replica, 0–4 read-scale replicas |
| In-Memory | Not Supported | Supported | Partial Support |

Network Isolation

Unlike SQL Server on a VM and Azure SQL MI, a logical server for an Azure SQL Database does not come with a built-in private endpoint. This means that an Azure SQL Database is not isolated within a VNet by default. Network isolation for Azure SQL Database can instead be achieved by limiting access to the logical server's public endpoint through the server's firewall, restricting access to only services in a specific VNet or subnet, or explicitly adding a private endpoint that is associated with a subnet in a VNet.

Public endpoint access can be limited using the following settings:

- Allow Azure Services allows all resources hosted on Azure, such as an Azure VM or Azure Data Factory, to communicate with databases associated with the logical server. This setting is turned off by default, as turning it on typically provides database access to more resources than what is needed.

- IP firewall rules open port 1433 (the default port SQL Server listens on) to a specific IP address or a range of IP addresses. Firewall rules can be set at the server level to allow access to all databases associated with a logical server or at the database level to only allow access to a specific database.
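The way server-level and database-level IP firewall rules combine can be sketched in a few lines of Python. This is an illustrative model only, assuming each rule is a start and end IPv4 address and that a connection is admitted when either a server-level rule or a rule on the target database covers the client address. The addresses and database names are hypothetical.

```python
from ipaddress import ip_address


def is_allowed(client_ip, rules):
    """True if client_ip falls inside any (start, end) address range."""
    addr = ip_address(client_ip)
    return any(ip_address(start) <= addr <= ip_address(end)
               for start, end in rules)


def can_connect(client_ip, database, server_rules, database_rules):
    """Server-level rules cover every database; database-level rules
    only cover the database they are defined on."""
    return (is_allowed(client_ip, server_rules)
            or is_allowed(client_ip, database_rules.get(database, [])))


# Hypothetical rules, using documentation-reserved address ranges
server_rules = [("203.0.113.10", "203.0.113.20")]
database_rules = {"salesdb": [("198.51.100.7", "198.51.100.7")]}

print(can_connect("203.0.113.15", "hrdb", server_rules, database_rules))
print(can_connect("198.51.100.7", "hrdb", server_rules, database_rules))
```

The first client is admitted to any database because it matches a server-level rule. The second client matches only the rule defined on `salesdb`, so its attempt to reach `hrdb` is rejected.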

Private access to the logical server can also be enabled so that database connectivity is restricted to specific VNets. This type of access can be enabled using one of the following settings:

- Virtual network firewall rules restrict access to databases associated with a logical server to traffic using the private IP range of a VNet. Application traffic coming from a specific subnet in a VNet can be switched from using public IP addresses to private IP addresses by adding the Microsoft.Sql service endpoint to the subnet. The subnet can then be added as a virtual network rule in the logical server to allow traffic from that subnet to connect to databases associated with the logical server.

- Private Link is a service in Azure that allows you to add a private endpoint to a logical server. Private endpoints are private IP addresses within a specific subnet in a VNet. Once a private endpoint is attached, connectivity will be limited to other applications in the VNet or applications that can connect to the VNet through VNet peering, VPN, or Azure ExpressRoute.

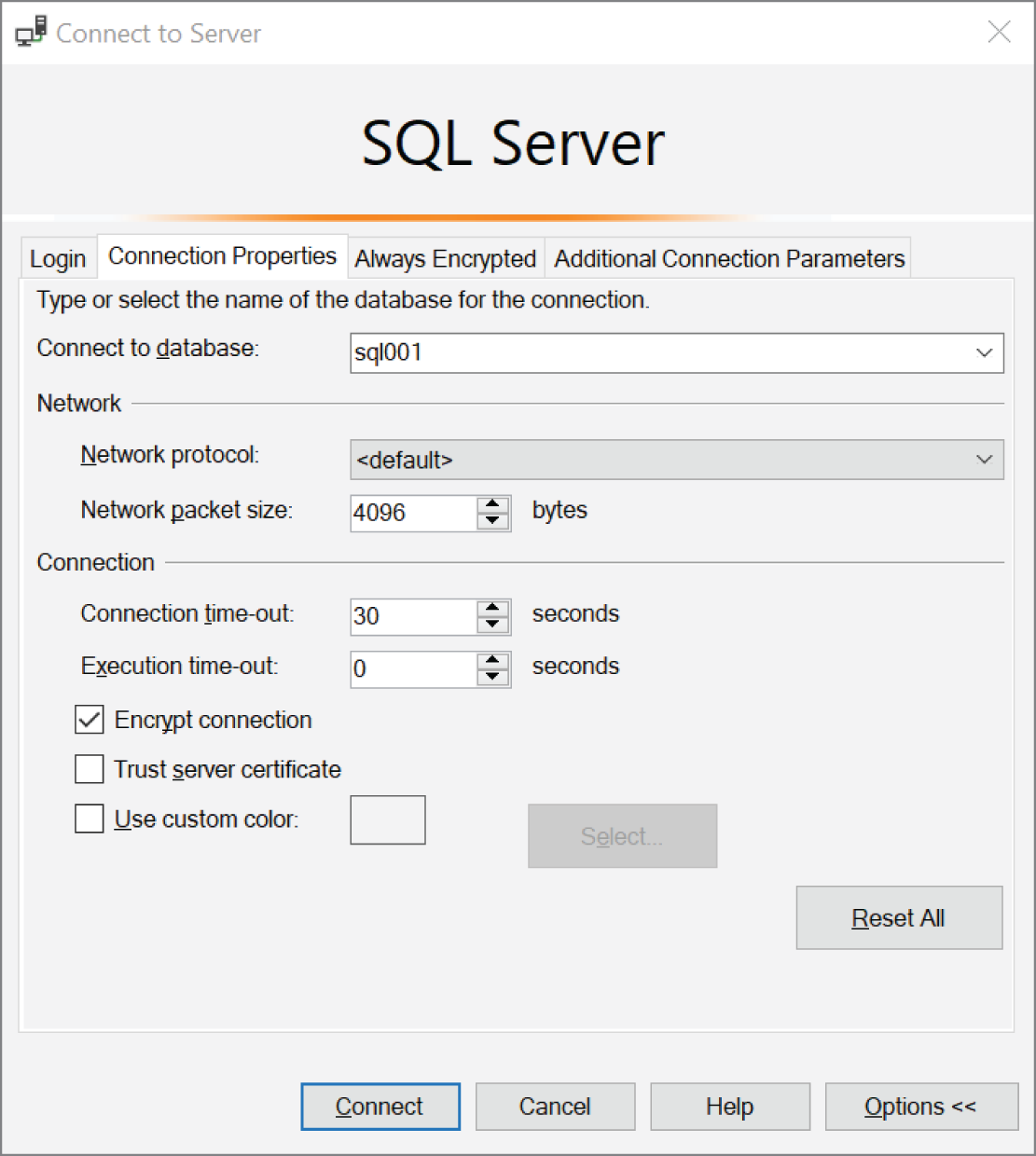

Deploying Through the Azure Portal

Use the following steps to create an Azure SQL Database through the Azure Portal:

- Log in to portal.azure.com and search for SQL databases in the search bar at the top of the page. Click SQL databases to go to the SQL databases page in the Azure Portal.

- Click Create to start choosing the configuration options for your Azure SQL Database.

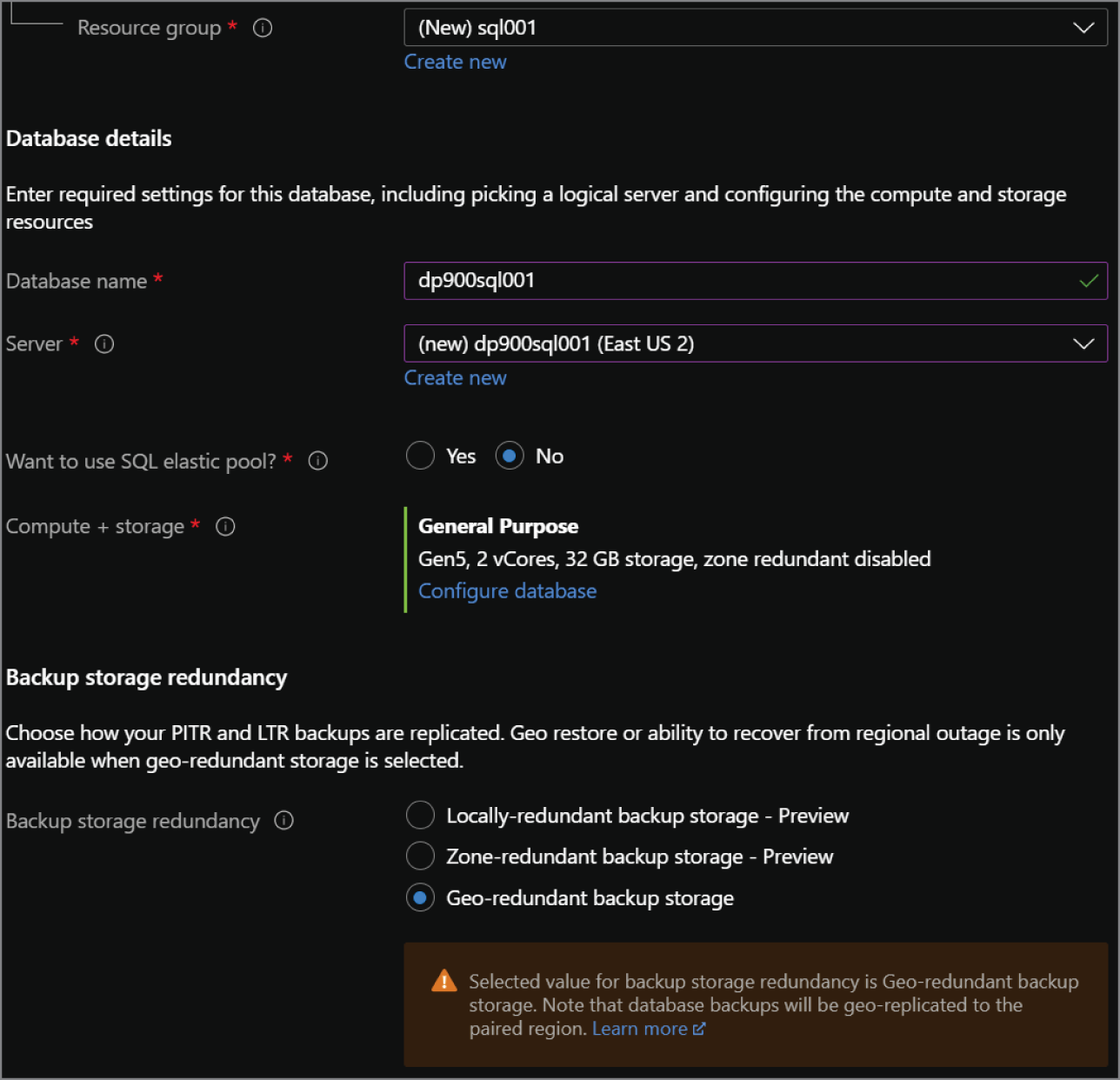

- The Create SQL Database page includes six tabs with different configuration options to tailor the Azure SQL Database to fit your needs. Let's start by exploring the options available in the Basics tab. Along with the following list that describes each option, you can view a completed example of this tab in Figure 2.12.

- Choose the subscription and resource group that will contain the Azure SQL Database. You can create a new resource group on this page if you have not already created one.

- Enter a name for the Azure SQL Database.

- Choose the logical server you wish to deploy the database to. You can create a new logical server on this page if there is not one already available. The logical server chosen will dictate which region the database will be deployed to. Note that creating a new logical server will also require you to set a username and password for the administrator account.

- Choose whether the database will be a part of an elastic pool.

- Click Configure database to choose the purchasing model and service tier. If you choose one of the vCore-based purchasing model service tiers, you will be given the option to apply existing SQL Server licenses, choose the number of vCores, and set the maximum amount of storage allocated for data. Choosing a DTU-based purchasing model service tier will give you options to change the number of DTUs allocated to the database and the maximum amount of storage allocated for data. Figure 2.11 is an example of completed configuration for a General Purpose database. As you can see, the database configuration comes with a monthly cost estimate.

- Choose the redundancy tier for database backups.

FIGURE 2.11 Configuring an Azure SQL Database

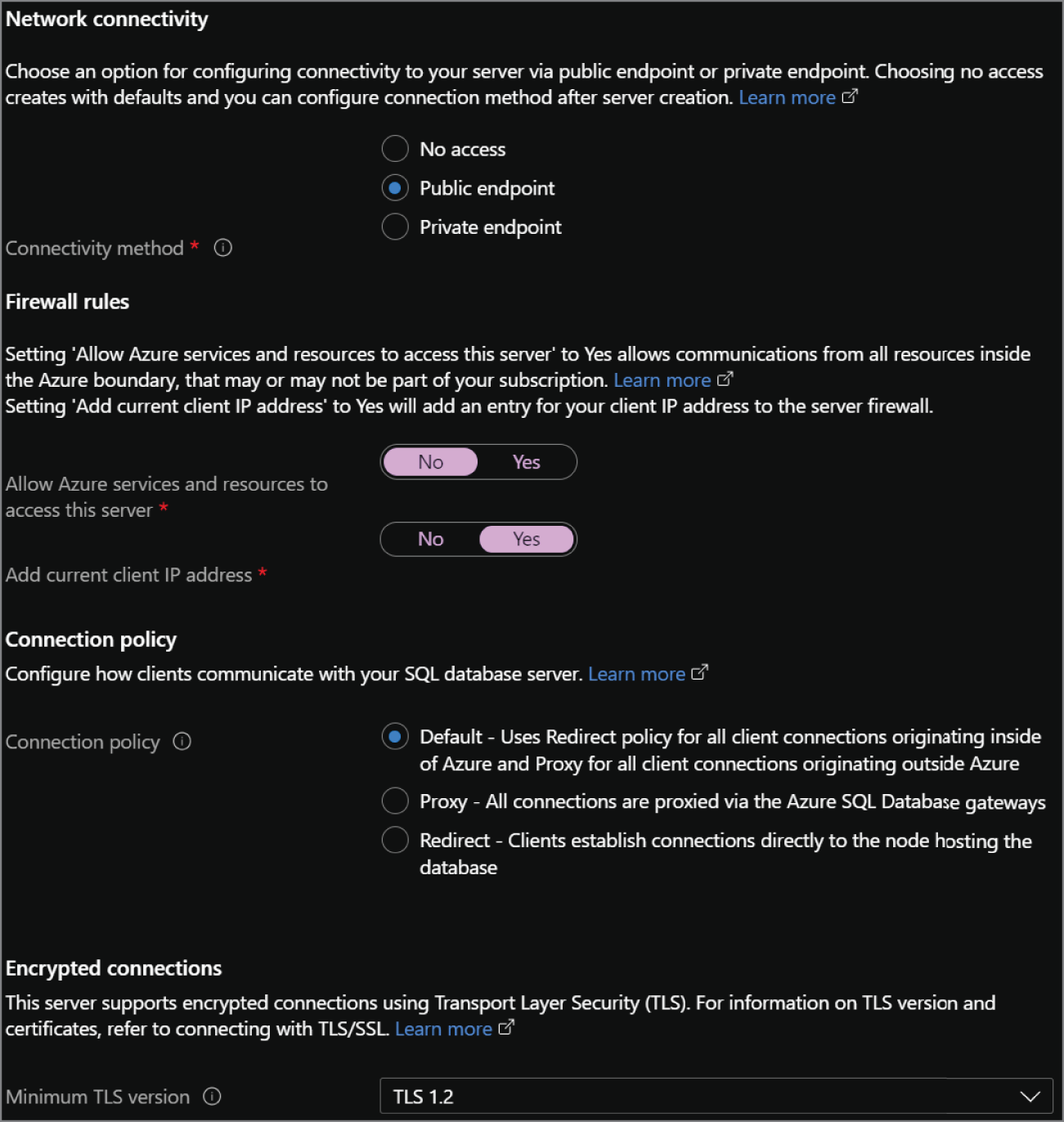

- The Networking tab allows you to configure network access and connectivity for your logical server if you are creating a new one. If you are deploying the database to an existing logical server, then most of the options will be grayed out because the database inherits the server's existing network settings. A completed example of configuring a new logical server can be seen in Figure 2.13.

FIGURE 2.12 Create Azure SQL Database: Basics tab.

- There are three options available for network connectivity. The first option, No Access, allows you to continue configuring your database and defer connectivity configuration until after it is provisioned. Public Endpoint displays a set of options specific to the logical server's firewall. These options control whether Azure services, and the client IP address from which you are deploying the logical server, are allowed to access the databases on the server. The final option, Private Endpoint, allows you to associate a private IP address from a VNet with the logical server. This isolates the databases within a VNet, allowing connectivity only from applications that can communicate with the VNet.

- Choose how client applications will communicate with the logical server.

- Choose the minimum TLS version that will be used to encrypt data in transit for inbound connections. The default TLS version is 1.2 and should be left as is unless there are specific requirements for a lower version.

FIGURE 2.13 Create Azure SQL Database: Networking tab.

- The Security tab allows you to choose if you would like to use Azure Defender for SQL to provide advanced threat protection for your data.

- The Additional Settings tab allows you to start your database as a blank database, from a backup, or from a sample provided by Microsoft. You can also choose if you would like to change the default collation for the database and the default maintenance window.

- The Tags tab allows you to place a tag on the Azure SQL Database for cost management.

- Finally, the Review + Create tab allows you to review the configuration choices made during the design process. If you are satisfied with the choices made for the database, click the Create button to begin provisioning the Azure SQL Database.

Scaling PaaS Azure SQL in the Azure Portal

Scaling Azure SQL MI or Azure SQL Database resources up or down depending on workload demand, also known as vertical scale, is very easy in the Azure Portal. The need to vertically scale can result from performance degradation due to a lack of compute resources or overallocated compute resources that result in unnecessary expenses. The speed at which users can vertically scale compute and storage resources through the Azure Portal allows organizations to react very quickly to a change in workload demand. Since this process is the same for Azure SQL MI and Azure SQL Database, this section will detail how to scale an Azure SQL MI as an example. The only difference between the two is that you will need to go to the SQL databases page to scale your Azure SQL Database instead of the SQL managed instances page.

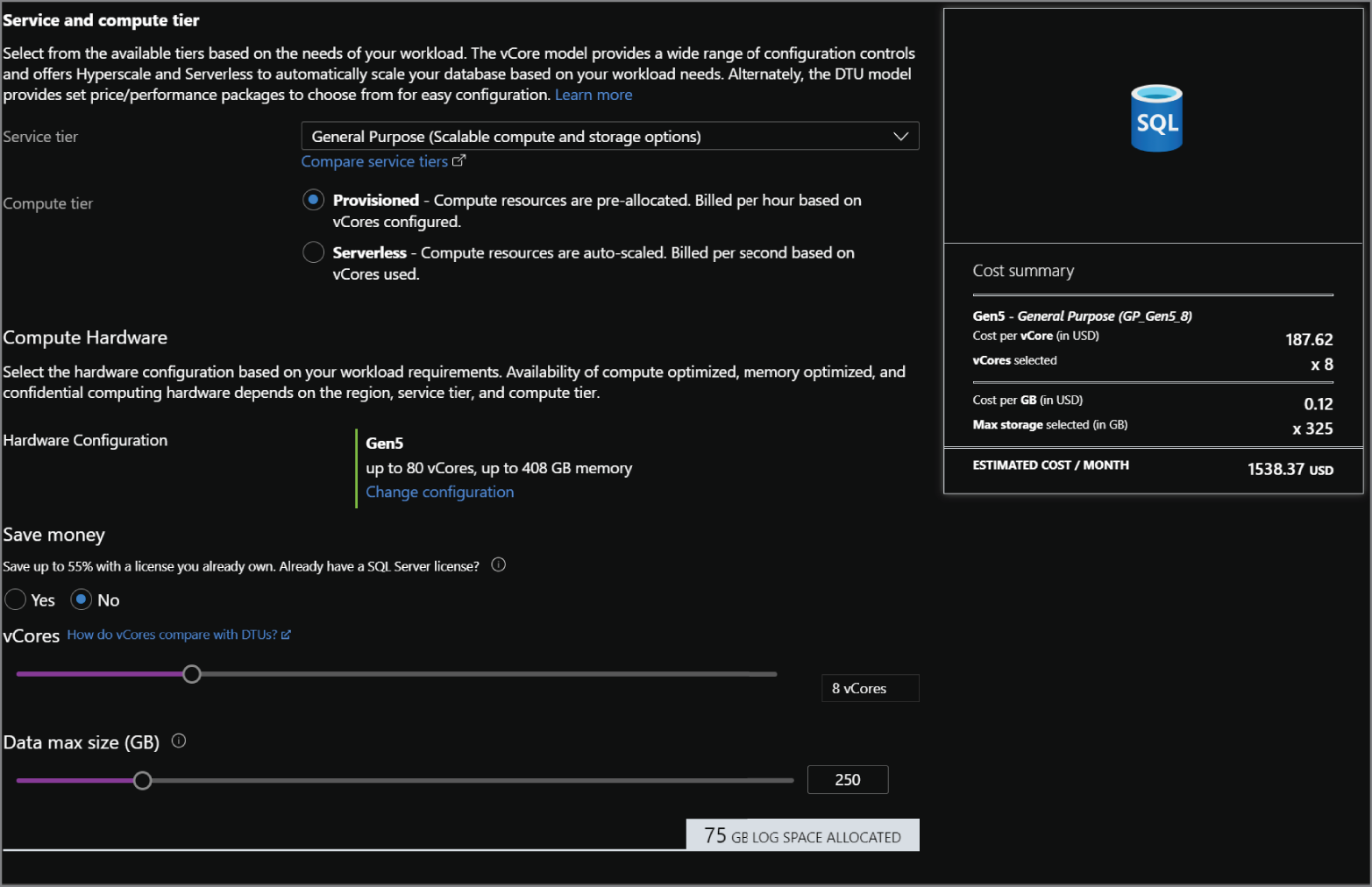

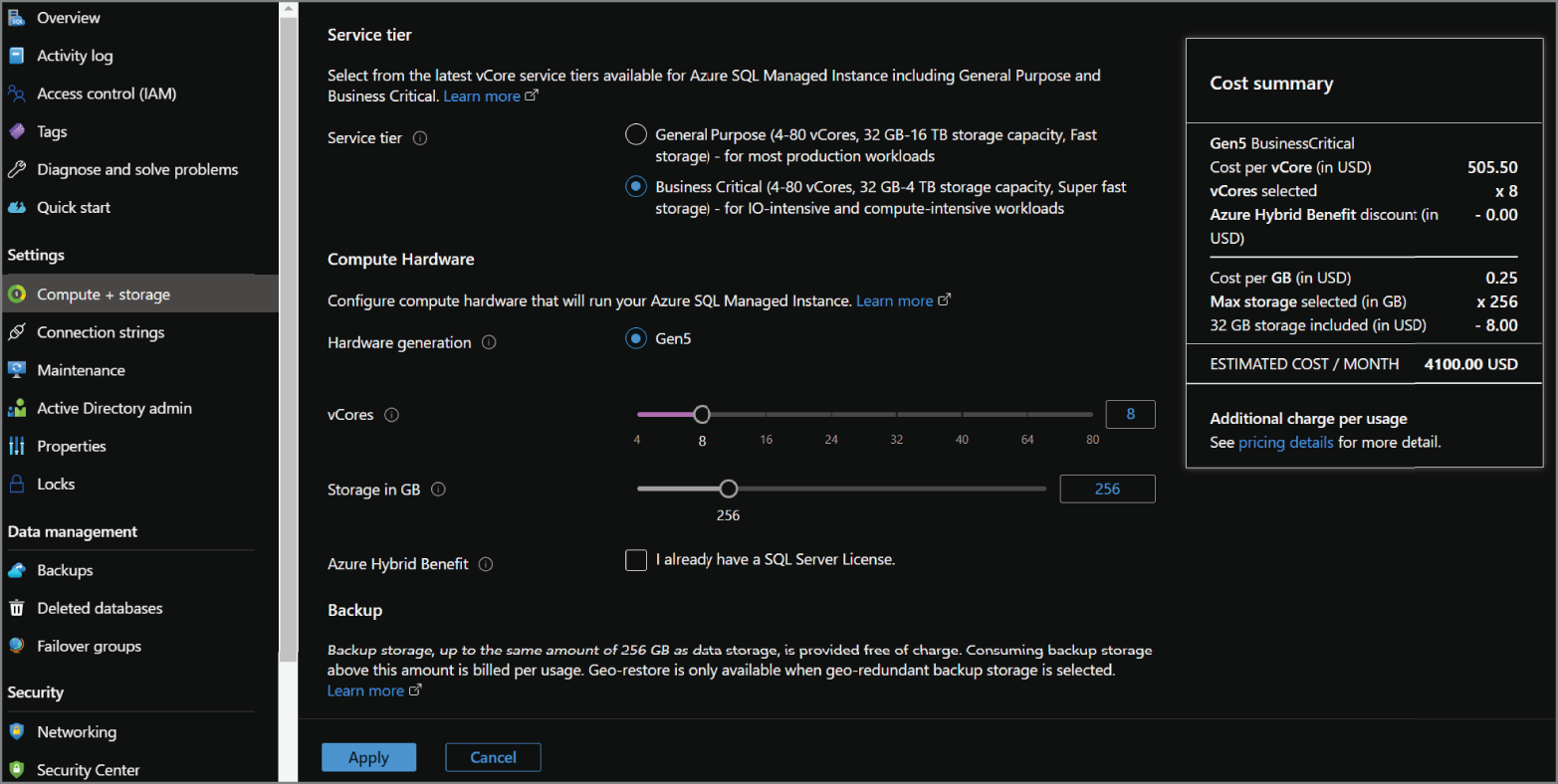

To scale an Azure SQL MI, go to the SQL managed instances page in the Azure Portal. Click your recently created Azure SQL MI and click the Compute + storage option under Settings. This page will allow you to change the service tier, number of vCores, and amount of storage allocated to the instance. The page will also update the cost summary for the instance as you change different configuration settings. Figure 2.14 illustrates an example of this process.

FIGURE 2.14 Scaling an Azure SQL MI

Business Continuity for PaaS Azure SQL

Azure manages backups for Azure SQL Database and Azure SQL MI databases by creating a full backup every week, differential backups every 12 to 24 hours, and transaction log backups every 5 to 10 minutes. These backups are stored in geo-redundant Azure Blob storage and are replicated to a separate Azure region. Backups are kept for 7 to 35 days, depending on the service tier and the retention settings set by an administrator. Long-term backup retention (LTR) can also be enabled to retain full database backups for up to 10 years.
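To show how the three backup types work together, the following Python sketch picks the backups that a conventional SQL Server restore sequence would need to reach a given point in time: the latest full backup, the latest differential taken after it, and the transaction log backups that follow. Azure performs this selection automatically, so the function is purely illustrative. The schedule used here (one full backup, differentials every 12 hours, logs every 10 minutes) and all names are assumptions chosen to match the intervals described above.

```python
from datetime import datetime, timedelta


def restore_chain(target, fulls, differentials, logs):
    """Pick the backups needed to restore to `target`.

    Each list holds backup completion times. Raises ValueError if no
    full backup exists at or before the target time.
    """
    full = max(t for t in fulls if t <= target)
    diffs = [t for t in differentials if full < t <= target]
    diff = max(diffs) if diffs else None
    base = diff or full
    needed_logs = []
    for t in sorted(logs):
        if t <= base:
            continue  # already contained in the full or differential
        needed_logs.append(t)
        if t >= target:
            break  # this log backup covers the target time
    return full, diff, needed_logs


start = datetime(2024, 1, 7)
fulls = [start]
differentials = [start + timedelta(hours=12 * i) for i in range(1, 5)]
logs = [start + timedelta(minutes=10 * i) for i in range(1, 300)]

target = start + timedelta(hours=25, minutes=25)
full, diff, needed = restore_chain(target, fulls, differentials, logs)
print(full, diff, len(needed))
```

For a target 25 hours and 25 minutes after the full backup, the chain consists of the full backup, the 24-hour differential, and the nine log backups taken after that differential.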