CHAPTER 10

A Chaotic Private Sector

HE NEEDED TO MEET the sales quota. A senior sales executive shared his story about selling AI. This story, he said, had repeated itself in multiple positions he had held at various organizations. He had led sales at three top AI firms. “Selling ERP was easy. Selling Salesforce is easy. There is a clear business need-and-solution relationship. But when it comes to machine learning, what are you really selling?” He went on: “Day after day, we would walk into client boardrooms and try to explain AI to them. We created true excitement. Everyone loved the glamorous part of it, the chatbots and all. They agreed that it is a large-scale disruptive technology. But when we asked them to apply it in a more strategic manner, they wouldn't go for it. With ERP, it was possible to make an enterprise sale, but not with AI. The alternative was to sell them baby steps, but that wasn't a meaningful deal size for us or a big ROI for them. We were not able to sell large deals.”

This situation is typical of many AI platform companies. What are you really selling? AI is being sold as a panacea for all ailments without any account of how it cures a single one. With no accompanying vision of how AI would transform the enterprise, the only path left for these firms was to sell insignificant use cases.

The absence of any direction from the government created a vacuum in the private sector. The gap existed on both the supply and demand sides.

In another case, when nothing else worked, the board of a Boston-based AI platform company explicitly told the sales team to sell $50,000 deals. The board told them to forget about mega-sized deals and instead focus on “logo collection,” a term for prioritizing the number of clients over the size of the deals. The board argued that investors respond to logos more than to average deal size or quality of revenue.

As another sales executive explained,

“It was not that we were not selling, it was just that we were selling peanut-sized deals. Everyone just wanted to experiment. There was no commitment to transform. There were no grand visions. Consulting firms became our partners, and they were also selling services, mostly old-style IT services. It seemed as if they just wanted to maximize their number of billable hours while bundling our software for nothing. We had sales, but they meant nothing. Most importantly, they did not help our clients at all. No real value was added.”

Right around the time the government was seeking RFI responses in 2016, many AI start-ups were being formed. One of them, based in San Francisco, was started by a math professor from a top university, and its executive team was composed of leading machine learning experts. The company developed powerful techniques in neural networks. When a bank approached it to solve a critical problem, the team analyzed the problem and solved it using deep learning. Despite its strong grasp of algorithms and a powerful success story from that use case, the firm began to have trouble scaling up. It burned through its invested capital quickly and could not keep pace with the demands of its investors, who needed to see a fast buildup of clients. The problem came down to how to sell machine learning projects. The firm tried to reuse the experience it had gained with the financial services client, but prospective clients responded in different ways. Some could not relate to the problem or did not understand it. Others did not understand the value proposition or were not ready even to experiment. And those who did understand the problem did not possess the data that the first client had. Eventually the firm was sold to a conventional IT firm.

A Texas-based AI platform company also failed to secure big wins. The firm received significant funding from strategic investors, but it faced the same challenges as the other firms. What was it really selling to the market? Was it a financial services platform? Was it health care? Or was it just an AI platform that could be used to develop machine learning applications more easily?

To muddy the waters even further, many business intelligence (BI) firms erased the B from their BI, replaced it with an A, and positioned themselves as AI firms. The AI in those platforms was like the caffeine in decaffeinated coffee, but it was marketed as AI nonetheless. Firms that had called themselves low-code platforms suddenly reemerged as AI firms.

When the supply side gets muddied, the transaction cost of technology adoption increases. There was no clarity about what AI did and what it meant. Anyone could call themselves an AI firm. In the DC area, government contractors began calling themselves AI firms, but only a few, like NCI, truly developed their capabilities. Elsewhere, this kind of genuine reinvention was largely absent.

THE SUPPLY SIDE PROBLEMS OF AI

American AI faced several supply-side issues. Specifically, there were five main problems:

- Dominant design of an AI platform;

- Confusion about machine learning;

- RPA confusion;

- Legacy IT issues;

- Use cases.

The concept of transaction cost is important to understand. In simple terms, it can be understood as the cost that transacting parties incur because of the risks to which a transaction exposes them. When the perceived risk is higher, the counterparties incur greater costs to verify the legitimacy of the transaction, of what is exchanged, and of the counterparty itself. This increases the overall cost of the transaction. Markets with greater standardization and credible institutions reduce transaction costs and hence tend to function more efficiently than markets where trading parties must treat each transaction separately and invest time and effort to verify its legitimacy, measure the value exchanged, and assess the credibility of the counterparties.

Just as transaction costs can be observed in markets, they can be applied to study technology adoption trends in society and business. Corporate executives have a responsibility to their shareholders. They also must protect their own careers and jobs. Acquiring and adopting new technologies carries risk. If no one has defined for them what it means to adopt AI, what the new processes and business models are, who the real AI players are, and how to embrace the new technology, they will remain hesitant and wary of adopting it. When they do adopt it, they will do so slowly and experimentally. As new models emerge and the perceived risk of adoption declines, adoption will become more enthusiastic. This happened with ERP. When a dominant design for enterprise resource planning emerged, for example from SAP, adoption became nearly universal.

AI is a complex technology, and developing it requires a different mindset than developing regular IT solutions. It needs different raw material, a different skill set, and a different development process. It redefines current processes and enables the creation of new processes that do not exist today. It requires immense creativity.

When both legitimate and illegitimate suppliers rush into the field claiming to do AI, when the definition of AI is muddied, when reports are issued that a large number of AI projects are failing, and when every time AI is brought up a cadre of ethicists, virtue signalers, futurists, and governance enthusiasts rises up to tie AI to a plethora of social, political, institutional, and economic issues, rapid adoption of the technology suffers. The repeated messaging about AI's evils neither truly addresses AI's real ethical or governance issues nor creates a social climate that encourages embracing the revolution. All it does is create fear and panic about AI. It simply taps into preexisting Hollywood-defined and celebrity-promoted fearmongering about AI and creates an unnecessary distraction. All of that increases the transaction cost associated with AI.

In Chapter 9 we discussed the demand side of AI in agencies and the challenges they face in developing an AI strategy and execution map. One would think the situation was different on the business side, but unfortunately, with the exception of a few companies, the demand side in the private sector is just as bad.

SENSEMAKING ABOUT AI

It was late in the evening, but the meeting continued. No one knew that within a few weeks the world would come to a halt and in-person meetings would either become a thing of the past or be held with masks and an ample supply of hand sanitizer. The executive team of a large financial firm was deliberating over its AI strategy, and passions were running high. Apparently, the CEO had discovered that a competitor had made AI one of its top priorities, and now he wanted to build his firm's capabilities in AI. One of the authors of this book (hereafter, in this chapter, the consultant) was helping them with the strategy. “We need AI as soon as possible,” a senior executive directed her team. “Why don't you just ask the programmers to write the AI code instead of writing regular code? Just teach them the AI language or whatever it is,” she demanded. The consultant explained that AI systems are developed differently and that they require data to train the system. Somehow, the executive and her team found this concept remarkably difficult to grasp.

They knew how to develop regular IT systems and just couldn't get their heads around what it means to train a system. Along the same lines, one of the most senior auditors in a US-allied country, who is also technology savvy, repeatedly asked one of the authors of this book why he couldn't just ask companies to provide the code of their machine learning systems so that his audit teams could audit them. In their defense, in regular IT, you collect requirements, engineer the solution, and then give it to the programmers to develop. Programmers write the code and testers test the program. There is no design risk in that process: as long as the program can be designed (architected or engineered), it can be developed. In other words, while bad code or bad architecture can create problems, there is no fundamental uncertainty about whether a regular or legacy IT program will work if it is done correctly. And they had all grown up with regular or legacy IT.

In fact, AI development has little in common with IT application development. AI takes shape from the data, whereas regular IT is built to process data. AI development is also not deterministic. In other words, until you actually start training the algorithm, you don't know whether it will learn to perform at the desired level.
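To make the contrast concrete, here is a minimal Python sketch that is not drawn from any real system: a hand-written rule of the kind conventional IT produces, next to a tiny perceptron, a simple textbook learning algorithm chosen here only for illustration. The function names, the toy loan rule, and the 0.95 target are all hypothetical. The training code is identical in both runs; only the data changes, and only by training do we learn that one task meets the target and the other does not. It also hints at why reading a learner's source code alone, as the auditor wanted, says little about how the trained system will behave.

```python
# Hypothetical illustration: a hand-written rule vs. a model trained from data.


def approve_loan_rule(income: float, debt: float) -> bool:
    """Conventional IT: a programmer writes the behavior down explicitly."""
    return income - debt > 20_000


def predict(w, b, x):
    """Linear threshold unit: output 1 if the weighted sum plus bias exceeds zero."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(samples, labels, epochs=20, lr=1):
    """Generic perceptron learner: identical code, behavior set by the data."""
    w, b = [0] * len(samples[0]), 0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            err = y - predict(w, b, x)  # -1, 0, or +1
            if err:  # adjust weights only on mistakes
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
    return w, b


def accuracy(w, b, samples, labels):
    """Fraction of samples the trained model classifies correctly."""
    hits = sum(predict(w, b, x) == y for x, y in zip(samples, labels))
    return hits / len(labels)


INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND_LABELS = [0, 0, 0, 1]  # linearly separable: a perceptron can learn it
XOR_LABELS = [0, 1, 1, 0]  # not linearly separable: a perceptron cannot
TARGET = 0.95  # hypothetical acceptance threshold

for name, labels in [("AND", AND_LABELS), ("XOR", XOR_LABELS)]:
    w, b = train_perceptron(INPUTS, labels)
    acc = accuracy(w, b, INPUTS, labels)
    print(name, acc, "meets target" if acc >= TARGET else "misses target")
```

The AND run reaches full accuracy. The XOR run cannot, because no single straight line separates its two classes, so it misses the target however long it trains. Nothing in the code itself announced either outcome in advance.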

Back to the financial services firm story. By the end of the evening, the consultant realized that his explanations were not helping; they had failed to show the executive team that its entire concept of AI was faulty. The executives insisted that all they needed was good programming staff, that they had “excellent programmers who have years of experience in developing IT applications,” and that “if they can do other systems, I [we] don't understand why they can't develop AI.” They requested a follow-up meeting, and the team met the following week.

After the meeting, the consultant realized he needed a different narrative to explain the AI process to the executives, and that was when he developed the concept of the AI supply chain.

THE AI SUPPLY CHAIN

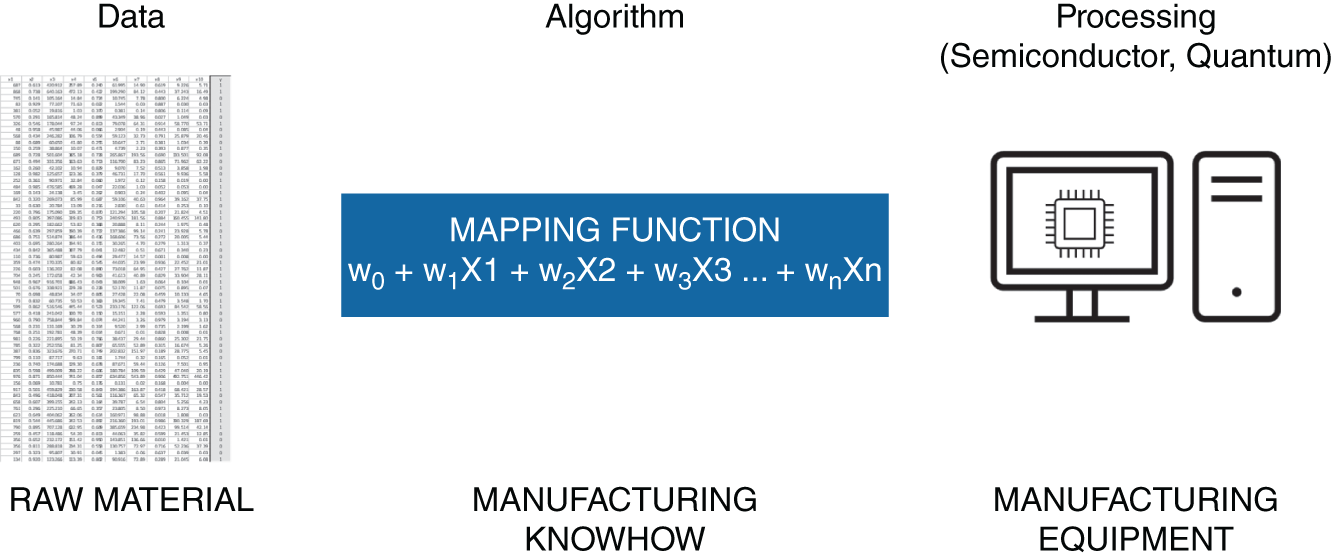

When the consultant returned the following week, he began explaining the idea of the AI supply chain to the management team, sketching the diagram shown above on a whiteboard.

The artificial intelligence supply chain is composed of three capability areas: data, algorithms, and processing power. As previously mentioned in the book, AI systems arise out of data, so it is helpful to compare AI with a manufacturing process, which depends on raw materials, production equipment, and know-how/processes. If you are manufacturing widgets, you need the raw materials that go into making the widgets, the best manufacturing process and know-how, and the manufacturing equipment. The manufacturing analogy requires further explanation. Unlike manufacturing on an assembly line, AI manufacturing can be viewed as customized manufacturing, where each product requires its own raw materials, equipment, assembly process, and know-how.

Since machine learning systems come from data, data can be viewed as the raw material in the supply chain. Therefore, having the raw materials in the right quantity and quality, in the right shape and form, and in the right packaging is important. In data's case, that means having plenty of data that is organized, usable, reliable, timely, and relevant to the problem at hand. Note that, given the way AI works, a stated problem can call for a certain type of data, or, alternatively, data can lead to the identification of a problem. Hence, knowledge grows in various ways, at times self-generating without any theoretical support. Sometimes a solution emerges for which a problem has not even been formulated.

Data scientists organize and process data, develop models, train the algorithms, and study the performance of various algorithms to solve a given problem. This work can be viewed as the manufacturing process and know-how of AI systems, and human skill is required to keep the supply chain flowing smoothly in all three areas.

Finally, processing power, the platform on which AI systems are manufactured, consists of processors (semiconductors) and can be viewed as the industrial equipment of the supply chain.

If you don't have the raw material of data, you will not be able to manufacture the product. If you don't have the equipment and the manufacturing know-how to put the product together, you will not be able to make it either. It is not about the programming. Programming is one small part of developing an AI system. The real skill lies in being able to conceptualize an idea, preprocess data, train the algorithm efficiently, and deploy the system.

Viewing AI as a supply chain can help assess the performance potential of a nation. A country that has more usable data (in terms of magnitude, types, and structures) possesses more raw material. A nation that possesses the talent and access to algorithms can create more AI faster. A country that has the semiconductor advantage can create bigger, better, and more advanced AI systems faster.

The story of American AI can be told in terms of the supply chain and when told that way, it gets even more interesting. America failed to manage its AI supply chain just as the country was unsuccessful in managing other supply chains. The world was changing, but America not only refused to change, its leaders doubled down and insisted on keeping the status quo.

THE ERA OF DATA MANAGEMENT

One of the greatest errors was the failure to identify data as the raw material and to make efforts to cultivate, nurture, grow, and expand it. To an outsider, it would seem that America was flourishing with data. After all, the entire decade of the 2010s seemed to be about data management and big data. To an insider, it was clear that the data management movement of the early 2010s was not designed for AI.

Data is intangible and can be used by anyone to make things you don't expect. In other words, you don't know in advance what AI systems can arise from a given body of data.

In the second decade of the twenty-first century, a movement took hold in America. It came from the field of data management. It is important to understand the difference between data management and data science. Data management is where data professionals organize and govern data in an enterprise. These professionals study the data a firm uses or needs and then identify ways to govern it so that it can be used in various applications across the firm. Governing data typically includes understanding where data resides in a firm, identifying who owns, controls, and contributes to it, and knowing how it is used by various applications and people. This is not data science. Data management professionals are not necessarily AI or data science people. But because both fields have "data" in their names, many people confuse data management with data science.

As data management rose, companies rushed to hire chief data officers (CDOs). CDOs and their staff usually came from the business side and were augmented by people with some background in technology. These were not AI teams.

The idea was to help corporate America make use of years of data, reduce systems proliferation, make decision-making more efficient, and give firms greater visibility into their data. But as soon as CDOs arrived with their lofty vision, organizational pushback began. For one, no one was clear whether this was a business function or a technology function. In many cases data management departments drew the wrath of both sides while trying to find their place in the corporate world. After much trial and error, these departments discovered that the best way to justify their existence was to turn their data management programs into collaborative endeavors and pass ownership to business leaders. That created many roles for managers on the business side, known as data stewards and data owners. If you were a management accountant for a firm, you became the data steward for the accounting data. If you were in HR, you owned the HR data. These functional managers were expected to participate in the data management programs and to perform that work in addition to their regular jobs. Many hated it. But CDO offices prevailed, and companies began significant data management work. Professional organizations such as DAMA helped codify data management knowledge. Analytics, business informatics, and similar applied areas rode the hype cycle and received significant attention. CEOs and CFOs bragged about their data management programs, although few could explain what the data management department was doing.

While data management functions drew some discontent from the business functions, nowhere was it stronger than in the CIO offices. IT and data management rapidly became one of the most prominent corporate rivalries, with an intensity that put the famous operations vs. sales rivalry to shame. First, the CDO offices were exposing many of the inefficiencies of the IT departments. IT shops had grown out of the chaos left by layers of legacy technologies, mergers and acquisitions, and failed projects. Like the dead and injured left on a medieval battlefield, the remnants of this chaos were visible in companies' digital footprints. Work was done on desktop spreadsheets, and the same data elements were repeated dozens of times within a firm. It was easy for the CDO office to point a finger at the CIO and link every corporate problem to an underperforming or troubled IT department. If sales were not materializing, it must be because of the firm's failure to use analytics effectively. If costs were too high, it must be because IT had failed to deploy a corporate-wide ERP. With the CDO in power, the CIO's office stood exposed. But the war didn't end there. Sensing the CIO's vulnerability, many CDOs attempted to fold CIO organizations into the CDO office. In a few cases such insurgencies succeeded, and CDOs became the owners of both data and technology, while in other cases the CDO vs. CIO conflict continued. The data management function reached maturity around 2014, perhaps its golden year.

“They really did not understand the data management field,” remarked a former CDO of a large financial institution. The data management projects were often combined with major IT undertakings such as large-scale data repositories and centralized data lakes. Many such projects failed to achieve results.

Nearly all major data management conferences focused on change management, as selling data management in the corporate environment was not an easy undertaking. The biggest complaint was that companies' executive leadership teams generally did not understand the contribution and role of data management organizations.

It is true that data management organizations played a role in introducing the need for analytics, but it is also true that the data management field did not develop with the needs of AI or the data science revolution in mind. If anything, data management created a new corporate layer. The lack of standards in data management produced a plethora of practices, and organizations approached data governance differently. If the AI revolution benefited from the data management layer, it was likely not by design.

Data management organizations were not tasked with identifying data that could be used as raw material to develop new AI applications. Their job was data governance in accordance with the general needs of a firm or a government agency. Fulfilling that goal requires setting priorities, being selective, and managing the data that serves a company's regular usage patterns, not data for developing new intelligent applications.

This is critical to understand. America was not preparing its data for the AI revolution. Throughout the 2010s, the focus of data management remained on “relevant” data, where relevance was defined by what was in the immediate periphery of the corporate strategy and what could be executed by the technology departments. But when you think about data as a raw material, any data can become “relevant.” For example, weather data, shopping data, and psychological data seem very different. A firm interested in learning more about its customers may not think to use weather data. Yet weather may drive significant buying behaviors and patterns. This is where the idea of unmined “raw material” comes into play. You just don't know what data will become relevant to the strategy. This implies that you need not only to store and organize as much data as possible but also to conduct a series of experiments to discover interesting links between data.
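Such a series of experiments can start very simply. The Python sketch below screens candidate data sources by their Pearson correlation with sales. It assumes invented weekly figures rather than any real dataset, and the series names (`avg_temperature_c`, `promo_emails_sent`) and the helper `rank_candidates` are hypothetical. A strong correlation only flags a candidate for further experiments; it does not by itself show that weather causes buying behavior.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def rank_candidates(target, candidates):
    """Return (name, r) pairs sorted by strength of linear association."""
    scores = {name: pearson(series, target) for name, series in candidates.items()}
    return sorted(scores.items(), key=lambda kv: abs(kv[1]), reverse=True)


# Invented toy numbers for five weeks; not real data.
weekly_sales = [120, 135, 160, 170, 195]
candidates = {
    "avg_temperature_c": [10, 15, 20, 25, 30],
    "promo_emails_sent": [5, 3, 5, 3, 4],
}

for name, r in rank_candidates(weekly_sales, candidates):
    print(f"{name}: r = {r:+.2f}")
```

With these toy numbers the temperature series ranks first, which is exactly the kind of unexpected link a firm focused only on "relevant" data would never test.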

While Big Tech recognized the need for all data, most firms focused on a small segment of relevant, in-use data. This was a strategic blunder on both fronts, government and companies. Had the White House report been written with foresight, it would have advised companies and agencies not only to focus on the data immediately at hand but also to look for ways to add and organize data for AI.

THINK WITH SENSORS

Data is everywhere, but you will never know about it unless you have the sensors needed to bring it in. Just as eyes, ears, tongue, nose, and hands play a key role in capturing data for human consumption, the AI supply chain needs sensors to capture data. It is simple to understand that eyes (the sensors) are needed to bring in visual information, and how much information is brought in depends on the quality and performance of the eye. A human eye at peak performance can capture significant data, but someone who is color-blind or has eyesight problems captures less. On the other hand, the eyes of an eagle can capture more data than a human eye. Similarly, a dog's or a bear's sense of smell and a whale's hearing are better-performing sensors than the human nose or ears. This gives us at least five measures for assessing installed sensors:

- How many sensors are installed?

- How do they perform? (quality of data)

- How many different types of sensors are installed?

- How much data can they capture?

- How quickly or efficiently can they capture the data?

Thus, to compete effectively in the AI supply chain, specialization in the raw material of data is critical, and achieving it requires sensors.

What happens once data is brought in is a different problem. Just because a sensor can bring data into an entity does not mean the entity can retain and use that data. A sensor's capability is limited to enabling an entity to bring the data in; it is the bridge through which an entity interacts with the external world. In our case, we can consider a nation's AI supply chain as a living, breathing entity.

Long before the AI supply chain became the most critical supply chain in the world, corporate America failed to recognize the critical need to acquire and sustain a leadership position in this area.

RPA IS THE AI IN AMERICA

At a dinner meeting at a restaurant in Atlanta, the VP of AI of one of the largest commercial banks shared his story.

“We were implementing RPA and had set up a center of excellence (COE) where several people were working. Once, the CEO walked past the COE room, stopped, turned back, and came in. We were shocked. He asked us what we were doing, and we explained that we were developing robots to do human work. He seemed fascinated and asked to see something in action. We showed him a chatbot that responded to a customer inquiry and interacted with the customer to answer some basic questions. Overwhelmed with excitement, he invited the VP of AI to do a demo at the upcoming board meeting and then brought the board members to the COE. Once board members visited the COE and saw the demo, not only did we receive major funding to bring in additional RPA, but our program also expanded to develop more bots.” We (the co-authors) asked him whether they were also working on machine learning projects; he said they couldn't think of many use cases, so they would wait on that.

There is nothing wrong with RPA. The problem was that while Chinese firms were embracing machine learning, American industry was trapped in the RPA world. RPA was presented as the AI that firms needed, so much so that it became synonymous with AI.

While a new and transformational technology was in abundant supply, the demand side of AI was obscure and underdeveloped. Research on the possibilities of AI in business was scarce.

Despite such confusion, there was no shortage of capital flowing into the industry.

A group of entrepreneurs from the UK created an interesting technology. It could read data, identify input tags on a screen, and insert data to update forms. This was a very basic digital robot. There was no learning involved in its operation: it was set up to do something, and it performed that operation. They named their firm Blue Prism. The technology was perhaps less innovative than the word the entrepreneurs used to market it: robotic. Little did they know that one day RPA would become the symbol of automation in America.
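To show what "no learning involved" means in practice, here is a hypothetical Python sketch of a rule-based form-filling script in the spirit of the description above. It is not Blue Prism's actual technology; the `FIELD_MAP` names, the input tags, and the `fill_form` helper are invented for illustration. Every mapping is configured in advance by a person, and the script performs exactly that mapping every time.

```python
# Hypothetical field mapping that a developer configures in advance.
FIELD_MAP = {
    "customer_name": "txtName",
    "account_no": "txtAccount",
    "amount": "txtAmount",
}


def fill_form(record: dict, field_map: dict = FIELD_MAP) -> dict:
    """Copy values from a source record into form inputs using fixed rules.

    Nothing is learned: the same record always yields the same form, and an
    unexpected layout raises an error until a person updates the mapping.
    """
    form = {}
    for source_field, input_tag in field_map.items():
        if source_field not in record:
            raise KeyError(f"missing field {source_field!r}; the script cannot adapt")
        form[input_tag] = str(record[source_field])
    return form


print(fill_form({"customer_name": "A. Smith", "account_no": "0042", "amount": 250}))
```

If the source record changes shape, the script does not adjust; someone has to edit `FIELD_MAP`. A machine learning system, by contrast, derives its behavior from training data.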

As the AI wave rose, RPA was able to position itself as the AI technology—so much so that for many American businesses and agencies, their AI strategy became synonymous with their RPA strategy. Never before had a technology taken advantage of a rising wave like that. Never before had such a misnomer triggered this level of adoption. To a large extent, RPA became the face of the AI revolution in America.

At a social level, China's experience of artificial intelligence came through the game of go; America had no such parallel. America took a completely different path to sensemaking about AI.

Besides RPA, America was receiving other marketing messages about AI, from which a social and business meaning of the technology was being formed. A series of television ads, news articles, magazine articles, and other mass media introduced America to cognitive automation. Microsoft placed an ad in which the rapper Common repeated the term “AI” several times. Ads began showing robots and intelligent machines. America's social psychology was not responding to an intelligent machine that had learned to defeat the grandmasters in a game considered a matter of national pride; instead, it was being shaped by marketing. So while the Chinese concept of AI turned out to be deep learning, the technology used to train the algorithm to play and win the game of go, America faced a gigantic vacuum in giving meaning to AI. To some extent, this vacuum was filled by RPA.

The bold and dynamic marketing of Blue Prism and other RPA firms, and their use of the term “RPA,” was strategically aligned with the AI wave. Many foreign RPA start-ups and firms established US operations and captured significant market share in the early stages of market shaping. Blue Prism announced many large-scale clients. Other major RPA firms, for example UiPath, Automation Anywhere, and WorkFusion, expanded the market and gained significant market share.

THE FUTURISTS AND VIRTUE SIGNALING

The rise of artificial intelligence had taken a lethal turn. In an experiment gone bad, machines developed consciousness and began taking over the world. As their first action, they launched nuclear weapons to destroy humankind. From the ashes of what was left of humanity rose a resistance movement that attempted to take the world back from the robots. Sci-fi plots such as these were part of the American social consciousness long before the modern AI revolution. It is safe to say that the American understanding of intelligent machines was shaped largely by the fear of machines viewing humans as adversaries and trying to take over the world. While this sci-fi scenario made hundreds of millions of dollars for Hollywood producers and directors, more recently it was used by futurists and virtue signalers to take a stake in the AI future.

Futurism and virtue signaling became just as much an issue in the corporate world as in government. It was as if guilt by association was being established for AI. Futurists come in two forms: those who focus on the future of work and those who paint a picture of what the world of the future will look like. AI governance and ethics became favorite topics and departments. Many companies recruited AI governance staff, mostly people with HR experience, as if AI deployed to automate accounts payable or accounts receivable was going to become some kind of racist, chauvinistic, and bigoted robot. The governance marketing was so strong that when companies talked about their AI programs they felt obligated to discuss their governance and ethics programs. Money was spent on spokespeople whose job was to reduce Americans' fear of AI, money that should have been spent on building data capabilities. The fearmongering continued, and guilt by association gripped the corporate world. Meanwhile, the real culprits of governance and ethics problems in AI, Big Tech, developed AI without such concerns. In some cases, when their ethics and governance boards did not conform to how Big Tech wanted to define ethics and governance, the boards were dismantled with total impunity.

For everyone else, AI remained a hodgepodge of RPA and governance, a formidable and fearful force of potential evil that required significant ethics and governance. Meanwhile, China rapidly acquired sophisticated deep learning capability and moved at the speed of relevance.