CHAPTER 6

Decompose It: Unpacking the Details

The everyday meanings of most terms contain ambiguities significant enough to render them inadequate for careful decision analysis.

—Ron Howard, Father of Decision Analysis1

Recall the cybersecurity analyst mentioned in Chapter 5 whose estimate of a loss was “$0 to $500 million” and who worried how upper management would react to such an uninformative range. Of course, if such extreme losses really were a concern, it would be wrong to hide them from upper management. Fortunately, there is an alternative: just decompose it. Surely such a risk would justify at least a little more analysis.

Impact usually starts out as a list of unidentified and undefined outcomes. Refining this is just a matter of understanding the object of measurement as discussed in Chapter 2. That is, we have to figure out what we are measuring by defining it better. In this chapter, we discuss how to break up an ambiguous pile of outcomes into at least a few major categories of impacts.

In Chapter 3 we showed how to build a simple quantitative model that makes exact replacements for the steps in the familiar risk matrix but uses quantitative methods. This is a minimal baseline, which we can make more detailed through decomposition. In Chapter 4 we discussed research showing that decomposing an uncertainty helps especially when the uncertainty is great, as is usually the case in cybersecurity. Now we will exploit the benefits of decomposition by showing how the simple model in Chapter 3 could be given more detail.

Decomposing the Simple One‐for‐One Substitution Model

Every row in our simple model shown in Chapter 3 (Table 3.2) had only two inputs: a probability of an event and a range of a loss. Both the event probability and the range of the loss could be decomposed further. We can say, for example, that if an event occurs, we can assess the probability of the type of event (was it a sensitive data breach, denial of service, etc.?). Given this information, we could further modify a probability. We can also break the impact down into several types of costs: legal fees, breach investigation costs, downtime, and so on. Each of these costs can be computed based on other inputs that are simpler and less abstract than some aggregate total impact.

Now let's add a bit more detail as an example of how you could use further decomposition to add value.

Just a Little More Decomposition for Impact

A simple decomposition strategy for impact that many in cybersecurity are already familiar with is confidentiality, integrity, and availability, or “C, I, and A.” As you probably know, “confidentiality” refers to the improper disclosure of information. This could be a breach of millions of records, or it could mean stealing corporate secrets and intellectual property. “Integrity” means modifying the data or behavior of a system, which could result in improper financial transactions, damaging equipment, misrouting logistics, and so on. Lastly, “availability” refers to some sort of system outage resulting in a loss of productivity, sales, or other costs of interference with business processes. We aren't necessarily endorsing this approach for everyone, but many analysts in cybersecurity find that these decompositions make good use of how they think about the problem.

Let's simplify it even further, in the way we've seen one company do it, by combining confidentiality and integrity. Perhaps we believe availability losses are fairly common compared to others and that estimating availability lends itself to using other information we know about the system, such as the types of business processes the system supports, how many users it has, how it might affect productivity, and whether it could impact sales while it is unavailable. In Table 6.1, we show how this small amount of additional decomposition could look if we added it to the spreadsheet for the one‐for‐one substitution model shown in Chapter 3.

To save room, we are only showing here the impact decomposition for two types of impact. Each of these impact types would have its own annual probability and there would be formulas for combining all of these inputs. To see the entire spreadsheet, including how we determined which of these impacts occurred and how the costs are added up, just go to the full spreadsheet example at www.howtomeasureanything.com/cybersecurity. In addition to the original model shown in Chapter 3, you will also see this one and some other variations on decomposition.

TABLE 6.1 Example of Slightly More Decomposition of Impacts (see spreadsheet for entire set of columns)

| Event | Confidentiality and Integrity 90% CI ($000): Lower Bound | Confidentiality and Integrity 90% CI ($000): Upper Bound | Availability 90% CI, Duration of Outage (hours): Lower Bound | Availability 90% CI, Duration of Outage (hours): Upper Bound | Availability 90% CI, Cost per Hour ($000): Lower Bound | Availability 90% CI, Cost per Hour ($000): Upper Bound |
|---|---|---|---|---|---|---|
| AA | $50 | $50 | 0.25 | 4 | $2 | $10 |
| AB | $100 | $10,000 | 0.25 | 8 | $1 | $10 |
| AC | $200 | $25,000 | 0.25 | 12 | $40 | $200 |
| AD | $100 | $15,000 | 0.25 | 2 | $2 | $10 |
| AE | $250 | $30,000 | 1 | 24 | $5 | $50 |

The next step in this decomposition is to determine whether confidentiality and integrity losses occurred, whether availability losses occurred, or both. When availability losses are experienced, the loss is computed by multiplying the duration of the outage in hours by the cost per hour of the outage. Any confidentiality and integrity losses are then added in.

Just as we did in the simpler model in Chapter 3, we generate thousands of values for each row. In each random trial we determine the type of event and its cost. The entire list of event costs is totaled for each of the thousands of trials, and a loss exceedance curve is generated. As before, each row could have a proposed control, which would reduce the likelihood and perhaps impact of the event (these reductions can also be randomly selected from defined ranges).
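The simulation just described can be sketched in a few lines of Python. This is only an illustration, not the spreadsheet from the website. The ranges for rows AB, AC, and AD come from Table 6.1, but the annual event probabilities and the split between event types are hypothetical placeholders, since the table does not show them. The sketch also assumes every range is a lognormal 90% CI and that all draws are independent.

```python
import math
import random

Z90 = 1.645  # z-score bounding a two-sided 90% interval


def lognormal_from_ci(rng, lower, upper):
    """Draw one value from a lognormal whose 90% CI is (lower, upper)."""
    mu = (math.log(lower) + math.log(upper)) / 2
    sigma = (math.log(upper) - math.log(lower)) / (2 * Z90)
    return rng.lognormvariate(mu, sigma)


# Ranges for rows AB, AC, and AD are copied from Table 6.1 ($000 and hours).
# The annual probabilities "p" are HYPOTHETICAL; the table does not show them.
EVENTS = {
    "AB": {"p": 0.10, "ci": (100, 10_000), "hours": (0.25, 8), "rate": (1, 10)},
    "AC": {"p": 0.05, "ci": (200, 25_000), "hours": (0.25, 12), "rate": (40, 200)},
    "AD": {"p": 0.10, "ci": (100, 15_000), "hours": (0.25, 2), "rate": (2, 10)},
}
# HYPOTHETICAL split of event types, given that an event occurs.
TYPES = ["ci_only", "availability_only", "both"]
TYPE_WEIGHTS = [0.3, 0.5, 0.2]


def simulate_year(rng):
    """Return the total loss ($000) across all rows for one simulated year."""
    total = 0.0
    for row in EVENTS.values():
        if rng.random() >= row["p"]:
            continue  # no event on this row this year
        kind = rng.choices(TYPES, weights=TYPE_WEIGHTS)[0]
        if kind in ("ci_only", "both"):
            total += lognormal_from_ci(rng, *row["ci"])
        if kind in ("availability_only", "both"):
            hours = lognormal_from_ci(rng, *row["hours"])
            rate = lognormal_from_ci(rng, *row["rate"])
            total += hours * rate  # outage duration times cost per hour
    return total


def loss_exceedance(losses, thresholds):
    """Fraction of trials whose total loss exceeds each threshold."""
    n = len(losses)
    return {t: sum(loss > t for loss in losses) / n for t in thresholds}


rng = random.Random(42)
losses = [simulate_year(rng) for _ in range(10_000)]
curve = loss_exceedance(losses, [0, 100, 1_000, 10_000])
for threshold, prob in curve.items():
    print(f"P(annual loss > ${threshold:,}k) = {prob:.3f}")
```

Plotting `curve` over a finer grid of thresholds would give a loss exceedance curve. A proposed control could be modeled by lowering a row's `p` or narrowing its ranges and rerunning the trials.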

If an event is an attack on a given system, we would know something about how that system is used in the organization. For example, we would usually have a rough idea of how many users a system has—whether they would be completely unproductive without a system or whether they could work around it for a while—and whether the system impacts sales or other operations. And many organizations have had some experience with system outages that would give them a basis for estimating something about how long the outage could last. We could decompose confidentiality further by estimating the number of records compromised and then estimate the costs as a function of records lost. We could also separate the costs of internal response, lost revenue as a function of duration of the outage, and even human safety where appropriate. You should choose the decomposition that you find more convenient and realistic to assess. There are many more ways to decompose impacts, and some decompositions of probabilities will be addressed in later chapters.

Now that you can see how decomposition works in general, let's discuss a few other strategies we could have used. If you want to decompose your model using a wider variety of probability distributions, see details on a list of distributions in Appendix A. And, of course, you can download an entire spreadsheet from www.howtomeasureanything.com/cybersecurity, which contains all of these random probability distributions written in native (i.e., without VBA macros or add‐ins) MS Excel.

A Few Decomposition Strategies to Consider

We labeled each row in the simulation shown in Table 6.1 as an event. However, in practice you will need to define an event more specifically and, for that, there are multiple choices. Think of the things you would normally have plotted on a risk matrix. If you had 20 things plotted on a risk matrix, were they 20 applications? Were they 20 categories of threats? Were they business units of the organization or types of users?

It appears that most users of the risk matrix method start with an application‐oriented decomposition. That is, when they plotted something on a risk matrix, they were thinking of an application. This is a perfectly legitimate place to start. Again, we have no particular position on the method of decomposition until we have evidence saying that some are better and others are worse. But out of convenience and familiarity, our simple model in Chapter 3 starts with the application‐based approach to decomposing the problem. If you prefer to think of the list of risks as being individual threat sources, vulnerabilities, or something else, then you should have no problem extrapolating the approach we describe here to your preferred model.

Once you have defined what your rows in the table represent, then your next question is how detailed you want the decomposition in each row to be. In each decomposition, you should try to leverage things you know—we can call them observables. Table 6.2 has a few more examples.

If we modeled even some of these details, we may still have a wide range, but we can at least say something about the relative likelihood of various outcomes. The cybersecurity professional who thought that a range for a loss was zero to a half‐billion dollars was simply not considering what can be inferred from what is known rather than dwelling on all that isn't known. A little bit of decomposition would indicate that not all the values in that huge range are equally likely. You will probably be able to go to the board with at least a bit more information about a potential loss than a flat uniform distribution of $0 to $500 million or more.

TABLE 6.2 A Few More Examples of Potential Decompositions

| Estimated Range | Knowledge Leveraged (Either You Know Them or Can Find Out, Even If It Is Also Just Another 90% CI) |

|---|---|

| Financial theft | You generally know whether a system even handles financial transactions. So some of the time the impact of financial theft will be zero or, if not zero, you can estimate the limit of the financial exposure in the system. |

| System outages | You can estimate how many users a system has, how critical it is to their work, and whether outages affect sales or other operations with a financial impact. You may even have some historical data about the duration of outages once they occur. |

| Investigation and remediation costs | IT often has some experience with estimating how many people work on fixing a problem, how long it takes them to fix it, and how much their time is worth. You may even have knowledge about how these costs may differ depending on the type of event. |

| Intellectual property (IP) | You can find out whether a given system has sensitive corporate secrets or IP. If it has IP, you can ask management what the consequences would be if the IP were compromised. |

| Notification and credit monitoring | Again, you at least know whether a system has this kind of exposure. If the event involves a data breach of personal information, paying for notification and credit monitoring services can be directly priced on a per‐record basis. |

| Legal liabilities and fines | You know whether a system has regulatory requirements. There isn't much in the way of legal liabilities and fines that doesn't have some publicly available precedent on which to base an estimate. |

| Other interference with operations | Does the system control some factory process that could be shut down? Does the system control health and safety in some way that can be compromised? |

| Reputation | You probably have some idea of whether a system even has the potential for a major reputation cost (e.g., whether it has customer data or whether it has sensitive internal communications). Once you establish that, reputation impact can be decomposed further (addressed again later in this chapter). |

Remember, the reason you do this is to evaluate alternatives. You need to be able to discriminate among different risk mitigation strategies. Even if your range was that wide and everything in the range were equally likely, it is certainly not the case that every system in the list has a range that wide, and knowing which do would be helpful. You know that some systems have more users than others, some systems handle personal health information (PHI) or payment card industry (PCI) data, and some do not, some systems are accessed by vendors, and so on. All this is useful information in prioritizing action even though you will never remove all uncertainty.

This list just gives you a few more ideas of elements into which you could decompose your model. So far we've focused on decomposing impact more than likelihood because impact seems a bit more concrete for most people. But we can also decompose likelihood. Chapters 8 and 9 will focus on how that can be done. We will also discuss how to tackle one of the more difficult cost estimations—reputation loss—later in this chapter.

More Decomposition Guidelines: Clear, Observable, Useful

When someone is estimating the impact of a particular cybersecurity breach on a particular system, perhaps they are thinking, “Hmm, there would at least be an outage for a few minutes if not an hour or more. There are 300 users, most of whom would be affected. They process orders and help with customer service. So the impact would be more than just paying wages for people unable to work. The real loss would be loss of sales. I think I recall that sales processed per hour are around $50,000 to $200,000, but that can change seasonally. Some percentage of those who couldn't get service might just call back later, but some we would lose for good. Then there would be some emergency remediation costs. So I'm estimating a 90% CI of a loss per incident of $1,000 to $2,000,000.”

We all probably realize that we may not have perfect performance when recalling data and doing a lot of arithmetic in our heads (and imagine how much harder it gets when that math involves probability distributions of different shapes). So we shouldn't be that surprised that the researchers we mentioned back in Chapter 4 (Armstrong and MacGregor) found that we are better off decomposing the problem and doing the math in plain sight. If you find yourself making these calculations in your head, stop, decompose, and (just like in school) show your math.

We expect a lot of variation in decomposition strategies based on desired granularity and differences in the information analysts will have about their organizations. Yet there are principles of decomposition that can apply to anyone. Our task here is to determine how to further decompose the problem so that, regardless of your industry or the uniqueness of your firm, your decomposition actually improves your estimations of risk.

This is an important task because some decomposition strategies are better than others. Even though there is research showing that highly uncertain quantities can be better estimated by decomposition, there is also research that identifies conditions under which decomposition does not help. We need to learn how to tell the difference. If the decomposition does not help, then we are better off leaving the estimate at a more aggregated level. As one research paper put it, decompositions done “at the expense of conceptual simplicity may lead to inferences of lower quality than those of direct, unaided judgments.”2

Decision Analysis: An Overview of How to Think about a Problem

A good background for thinking about decomposition strategies is the work of Ron Howard, who is generally credited with coining the term “decision analysis” in 1966.3 Howard and others inspired by his work were applying the somewhat abstract areas of decision theory and probability theory to practical decision problems dealing with uncertainties. They also realized that many of the challenges in real decisions were not purely mathematical. Indeed, they saw that decision makers often failed to even adequately define what the problem was. As Ron Howard put it, we need to “transform confusion into clarity of thought and action.”4

Howard prescribes three prerequisites for doing the math in decision analysis. He stipulates that the decision and the factors we identify to inform the decision must be clear, observable, and useful.

- Clear: Everybody knows what you mean. You know what you mean.

- Observable: What do you see when you see more of it? This doesn't mean you will necessarily have already observed it but it is at least possible to observe and you will know it when you see it.

- Useful: It has to matter to some decision. What would you do differently if you knew this? Many things we choose to measure in security seem to have no bearing on the decision we actually need to make.

All of these conditions are often taken for granted, but if we start systematically considering each of these points on every decomposition, we may choose some different strategies. Suppose, for example, you wanted to decompose your risks in such a way that you had to evaluate a threat actor's “skill level.” This is one of the “threat factors” in the OWASP standard, and we have seen homegrown variations on this approach. We will assume you have already accepted the arguments in previous chapters and decided to abandon the ordinal scale proposed by OWASP and others and that you are looking for a quantitative decomposition about a threat actor's skill level. So now apply Howard's tests to this factor.

The Clear, Observable, and Useful Test Applied to “Threat Actor Skill Level”

- Clear: Can you define what you mean by “skill level”? Is this really an unambiguous unit of measure or even a clearly defined discrete state? Does saying, “We define ‘average’ threat as being better than an amateur but worse than a well‐funded nation state actor” really help?

- Observable: How would you even detect this? What basis do you have to say that skill levels of some threats are higher or lower than others?

- Useful: Even if you had unambiguous definitions for this, and even if you could observe it in some way, how would the information have bearing on some action in your firm?

We aren't saying threat skill level is necessarily a bad part of a strategy for decomposing risk. Perhaps you have defined skill level unambiguously by specifying the types of methods employed. Perhaps you can observe the frequency of these types of attacks, and perhaps you have access to threat intelligence that tells you about the existence of new attacks you haven't seen yet. Perhaps knowing this information causes you to change your estimates of the likelihood of a particular system being breached, which might inform what controls should be implemented or even the overall cybersecurity budget. If this is the case, then you have met the conditions of clear, observable, and useful. But when this is not the case—which seems to be very often—evaluations of skill level are pure speculation and add no value to the decision‐making process.

Avoiding Over‐Decomposition

The threat skill level example just mentioned may or may not be a good decomposition depending on your situation. If it meets Howard's criteria and it actually reduces your uncertainty, then we call it an informative decomposition. If not, then the decomposition is uninformative, and you are better off sticking with a simpler model.

Imagine someone standing in front of you holding a crate. The crate is about 2 feet wide and a foot high and deep. They ask you to provide a 90% CI on the weight of the crate simply by looking at it. You can tell they're not a professional weightlifter, so you can see this crate can't weigh, say, 350 pounds. You also see that they lean a bit backward to balance their weight as they hold it. And you see that they're shifting uncomfortably. In the end, you say your 90% CI is 20 to 100 pounds. This strikes you as a wide range, so you attempt to decompose this problem by estimating the number of items in the crate and the weight per item. Or perhaps there are different categories of items in the crate, so you estimate the number of categories of items, the number in each category, and the weight per item in that category. Would your estimate be better? Actually, it could easily be worse. What you have done is decomposed the problem into multiple purely speculative estimates that you then use to try to do some math. This would be an example of an uninformative decomposition.

The difference between this and an informative decomposition is whether or not you are describing the problem in terms of quantities you are more familiar with than the original problem. An informative decomposition uses specific knowledge that the cybersecurity expert has about their environment. For example, the cybersecurity expert can get detailed knowledge about the types of systems in their organization and the types of records stored on them. They would have or could acquire details about internal business processes so they could estimate the impacts of denial of service attacks. They understand what types of controls they currently have in place. Decompositions of cybersecurity risks that leverage this specific knowledge are more likely to be helpful.

However, suppose a cybersecurity expert attempts to build a model where they find themselves estimating the number and skill level of state‐sponsored attackers or even the hacker group Anonymous (about which, as the name implies, it would be very hard to estimate any details). Would this actually constitute a reduction in uncertainty relative to where they started?

Decompositions should be less abstract to the expert than the aggregated amount. If you find yourself decomposing a dollar impact into factors such as threat skill level, then you should have less uncertainty about the new factors than you did about the original, direct estimate of monetary loss.

If decomposition causes you to widen a range, that might be informative if it makes you question the assumptions of your previous range. For example, suppose we need to estimate the impact of a system availability risk where an application used in some key process—let's say order taking—would be unavailable for some period of time. And suppose that we initially estimated this impact to be $150,000 to $7 million. Perhaps we consider that to be too uncertain for our needs, so we decide to decompose this further into the duration of an outage and the cost per hour of an outage. Suppose further that we estimated the duration of the outage to be 15 minutes to 4 hours and the cost per hour of the outage to be $200,000 to $5 million. Let's also state that these are lognormal distributions for each (as discussed in Chapter 3, this often applies where the value can't be less than zero but could be very large). Have we reduced uncertainty? Surprisingly, no—not if what we mean by “uncertainty reduction” is a narrower range. The 90% CI for the product of these two lognormal distributions is about $100,000 to $8 million—wider than the initial 90% CI of $150,000 to $7,000,000. But even though the range isn't strictly narrower, you might think it was useful because you realize it is probably more realistic than the initial range.

Now, just a note in case you thought that to get the range of the product you multiply the lower bounds together and then multiply the upper bounds together: That's not how the math works when you are generating two independent random variables. Doing it that way would produce a range of $50,000 to $20 million (0.25 hours times $200,000 per hour for the lower bound and 4 hours times $5 million per hour for the upper bound). This answer could only be correct if the two variables are perfectly correlated—which they obviously would not be.
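The difference between the two calculations can be checked directly. The sketch below assumes, as the text does, that the duration and cost per hour are independent lognormal variables whose 90% CIs are the stated bounds. It computes the 90% CI of their product by simulation and in closed form (the sum of two independent normal logs is normal), and compares both with the naive product of bounds. The function and variable names are illustrative only.

```python
import math
import random

Z90 = 1.645  # z-score bounding a two-sided 90% interval


def lognormal_params(lower, upper):
    """Return (mu, sigma) of the log for a lognormal with this 90% CI."""
    mu = (math.log(lower) + math.log(upper)) / 2
    sigma = (math.log(upper) - math.log(lower)) / (2 * Z90)
    return mu, sigma


def percentile(sorted_values, q):
    """Simple empirical percentile of an already sorted list."""
    index = min(len(sorted_values) - 1, int(q * len(sorted_values)))
    return sorted_values[index]


duration = lognormal_params(0.25, 4)             # hours
cost = lognormal_params(200_000, 5_000_000)      # dollars per hour

# Monte Carlo: multiply independent draws, then read off the percentiles.
rng = random.Random(1)
products = sorted(
    rng.lognormvariate(*duration) * rng.lognormvariate(*cost)
    for _ in range(100_000)
)
sim_low, sim_high = percentile(products, 0.05), percentile(products, 0.95)

# Closed form: the log of the product is normal with summed means and variances.
mu = duration[0] + cost[0]
sigma = math.hypot(duration[1], cost[1])
exact_low = math.exp(mu - Z90 * sigma)
exact_high = math.exp(mu + Z90 * sigma)

# Naive (incorrect for independent variables): multiply bounds together.
naive_low, naive_high = 0.25 * 200_000, 4 * 5_000_000

print(f"simulated 90% CI: ${sim_low:,.0f} to ${sim_high:,.0f}")
print(f"closed-form 90% CI: ${exact_low:,.0f} to ${exact_high:,.0f}")
print(f"product of bounds: ${naive_low:,.0f} to ${naive_high:,.0f}")
```

Under these assumptions the closed-form interval comes out at roughly $120,000 to $8.4 million, in line with the rounded figures quoted above and far narrower than the $50,000 to $20 million product of bounds.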

So decomposition might be useful just as a reality check against your initial range. This can also come up when you start running simulations on lots of events that are added up into a portfolio‐level risk, as the spreadsheet shown in Table 6.1 does. When analysts are estimating a large number of individual events, it may not be apparent to them what the consequences for their individual estimates are at a portfolio level. In one case we observed that subject matter experts were estimating individual event probabilities at somewhere between 2% and 35% for about a hundred individual events. When this was done they realized that the simulation indicated they were having a dozen or so significant events per year. A manager pointed out that the risks didn't seem realistic because none of those events had been observed even once in the last five years. The estimates would only make sense if the subject matter experts had reason to believe there would be a huge uptick in these event frequencies (it was over a couple of years ago, and we can confirm that this is not what happened). But, instead, the estimators decided to rethink what the probabilities should be so that they didn't contrast so sharply with observed reality.
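A reality check of this kind does not even need a full simulation. The sketch below uses hypothetical probabilities (100 values drawn between 2% and 35% and skewed toward the low end; these are not the experts' actual estimates) and assumes the events are independent. It computes how many events per year the estimates imply and how likely it would be to see none of them over five years.

```python
import math
import random

rng = random.Random(7)

# HYPOTHETICAL stand-ins for the experts' annual probabilities:
# 100 events, each between 2% and 35%, with most values near the low end.
probabilities = [rng.triangular(0.02, 0.35, 0.02) for _ in range(100)]

# Expected number of events per year is the sum of the probabilities.
expected_events_per_year = sum(probabilities)

# Chance that no event at all occurs in one year, assuming independence,
# and the chance of five such quiet years in a row.
p_quiet_year = math.prod(1 - p for p in probabilities)
p_quiet_five_years = p_quiet_year ** 5

print(f"expected events per year: {expected_events_per_year:.1f}")
print(f"P(no events in one year): {p_quiet_year:.2e}")
print(f"P(no events in five years): {p_quiet_five_years:.2e}")
```

If estimates like these were right, five years with no events at all would be almost impossible, which is the contrast with observed reality that the manager pointed out.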

A Summary of Some Decomposition Rules

The lessons in these examples can be summarized in two fundamental decomposition rules:

- Decomposition Rule #1: Decompositions should leverage what you are better at estimating or data you can obtain (i.e., don't decompose into quantities that are even more speculative than the first).

- Decomposition Rule #2: Check your decomposition against a directly estimated range with a simulation, as we just did in the outage example. You might decide to toss the decomposition if it produces results you think are absurd, or you might decide your original range is the one that needs updating.

These two simple rules have some specific mathematical consequences. In order to ensure that your decomposition strategy is informative—that is, results in less uncertainty than you had before—consider the following:

- If you are expecting to reduce uncertainty by multiplying together two decomposed variables, then the decomposed variables need to have not just less uncertainty than the initial range but often a lot less. As a rule of thumb, the ratio of the upper to lower bound for each decomposed variable should be a lot less than a third of the corresponding ratio for the original range. For the case in the previous section, the ratio of bounds of the original range was about 47 ($7 million/$150,000), while the other two ranges had ratios of bounds of 16 and 25, respectively. Neither is well below a third of 47, which is why the product came out wider than the original range.

- If most of the uncertainty is in one variable, then the ratio of the upper and lower bounds of the decomposed variable must be less than that of the original variable. For example, suppose you initially estimated that the cost of an outage for one system was $1 million to $5 million. If the major source of uncertainty about this cost is the duration of an outage, the upper/lower bound ratio of the duration must be less than the upper/lower bound ratio of the original estimate (5 to 1). If the range of the duration doesn't have a lower ratio of upper/lower bounds, then you haven't added information with the decomposition. If you have reason to believe your original range, then just use that. Otherwise, perhaps your original range was just too narrow, and you should go with the decomposition.

- In some cases the variables you multiply together are related in a way that eliminates the value of decomposition unless you also make a model of the relationship. For example, suppose you need to multiply A and B to get C. Suppose that when A is large, B is small, and when B is large, A is small. If we estimate separate, independent ranges for A and B, the range for the product C can be greatly overstated. This might be the case for the duration and cost per hour of an outage. That is, the more critical a system, the faster you would work to get it back online. If you decompose these, you should also model the inverse relationship. Otherwise, just provide a single overall range for the cost of the impact instead of decomposing it.

- If you have enough empirical data to estimate a distribution, then you probably won't get much benefit from further decomposition.
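The effect of a relationship between the two multiplied variables can be illustrated with the outage example. The sketch below is one possible way to model it, not a method prescribed here: it assumes the duration and cost per hour are jointly lognormal and that the relationship is captured by a correlation `rho` between their logs. The value of -0.7 is purely hypothetical. It then reports the ratio of upper to lower bound of the 90% CI of the product.

```python
import math

Z90 = 1.645  # z-score bounding a two-sided 90% interval


def log_sigma(lower, upper):
    """Standard deviation of the log for a lognormal with this 90% CI."""
    return (math.log(upper) - math.log(lower)) / (2 * Z90)


def product_bounds_ratio(sigma_a, sigma_b, rho):
    """Upper/lower ratio of the 90% CI of A*B for jointly lognormal A and B
    whose logs have correlation rho."""
    variance = sigma_a**2 + sigma_b**2 + 2 * rho * sigma_a * sigma_b
    return math.exp(2 * Z90 * math.sqrt(variance))


sigma_duration = log_sigma(0.25, 4)            # bounds ratio of 16
sigma_cost = log_sigma(200_000, 5_000_000)     # bounds ratio of 25
original_ratio = 7_000_000 / 150_000           # about 47

# rho = -0.7 is a HYPOTHETICAL inverse relationship; 0.0 is independence;
# 1.0 is perfect correlation, the only case where bounds simply multiply.
ratios = {
    rho: product_bounds_ratio(sigma_duration, sigma_cost, rho)
    for rho in (-0.7, 0.0, 1.0)
}

print(f"original range ratio: {original_ratio:.0f}")
for rho, ratio in ratios.items():
    print(f"rho = {rho:+.1f}: product bounds ratio = {ratio:.0f}")
```

With independence the product's bounds ratio is wider than the original range's, while a strong inverse relationship makes it much narrower. Perfect correlation reproduces the 16 times 25 ratio that comes from multiplying the bounds together.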

A Hard Decomposition: Reputation Damage

In the survey we mentioned in Chapter 5, some cybersecurity professionals (14%) agreed with the statement, “There is no way to calculate a range of the intangible effects of major risks like damage to reputation.” Although a majority disagree with the statement and there is a lot of discussion about it, we have seen few attempts to model this in the cybersecurity industry. Both authors have seen cybersecurity articles and attended conferences where this is routinely given as an example of a very hard measurement problem. Therefore, we decided to drill down on this particular loss in more detail as an example of how even this seemingly intangible issue can be addressed through effective decomposition. Reputation, after all, seems to be the loss category cybersecurity professionals resort to when they want to create the most FUD. It comes across as an unbearable loss. But let's ask the question we asked in Chapter 2 regarding the object of measurement, or in this chapter regarding Howard's observability criterion: What do we see when we see a loss of reputation?

The first reaction many in private industry would have is that the observed quantity would be a long‐term loss of sales. Then they may also say that stock prices would go down. Of course, the two are related. If investors (especially the institutional investors who consider the math on the effect of sales on market valuation) believed that sales would be reduced, then we would see stock prices drop for that reason alone. So, if we could observe changes in sales or stock prices just after major cybersecurity events, that would be a way to detect the effect of loss of reputation or at least the effects that would have any bearing on our decisions.

It does seem reasonable to presume a relationship between a major data breach and a loss of reputation resulting in changes in sales, stock prices, or both. If we go back to events before the first edition of this book, we find articles published in the trade press and broader media that implied such a direct relationship between the breach and reputation. One such article about the 2013 Target data breach was titled “Target Says Data Breach Hurt Sales, Image; Final Toll Isn't Clear.”5 Forbes published an article in September 2014 by the Wall Street analysis firm Trefis, which noted that Target's stock fell 14% in the two‐month period after the breach, implying the two are connected.6 In that article, Trefis cited the Ponemon Institute, a major cybersecurity research service using mostly survey‐based data, which anticipated a 6% “churn” of customers after a major data breach. Looking at this information alone, it seems safe to say that a major data breach means a significant loss of sales and market valuation.

The analysis behind these earlier headlines simply assumed that any negative change was due to the breach. Of course, even if there was no relationship between stock market prices and major breaches, the stock price would still go down half the time. The question journalists need to ask is whether the change is more than we would expect given historical volatility. We also need to consider general trends in the stock market. A general downturn in the market would explain a drop in price if it happened to be timed near the date of the breach.
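A rough version of that question can be put into numbers. The sketch below asks how unusual a 14% drop over two months would be for a stock with a given annual volatility. The 30% volatility is a hypothetical placeholder, not a measured figure for any firm. The calculation also assumes normally distributed returns with no drift and ignores general market movement, which a fuller analysis would subtract first.

```python
import math
from statistics import NormalDist

annual_volatility = 0.30   # HYPOTHETICAL annualized standard deviation of returns
window_years = 2 / 12      # the two-month period after the breach
observed_change = -0.14    # the 14% drop cited in the article

# Volatility scales with the square root of time under these assumptions.
window_sd = annual_volatility * math.sqrt(window_years)
z_score = observed_change / window_sd

# Probability of a drop at least this large with no breach effect at all.
p_drop_by_chance = NormalDist().cdf(z_score)

print(f"two-month standard deviation: {window_sd:.1%}")
print(f"z-score of a 14% drop: {z_score:.2f}")
print(f"P(drop this large by chance): {p_drop_by_chance:.1%}")
```

Under these assumptions a drop of that size is only a little more than one standard deviation below zero, so it would not by itself be evidence of a breach effect.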

Multiple studies before 2008 showed very little impact on stock prices, but breaches in those days were not as big as later events.7,8,9 In the first edition of this book, we looked at major breaches between 2007 and 2016, including T.J. Maxx, Anthem, Target, Home Depot, and JCPenney, and we still found no relationship compared to previous volatility and other adjustments for the stock market. Even Target's 14% price drop was within the volatility of a difficult year for the firm.

We also looked at revenue details in the quarterly reports for all of those retailers, and again, we saw no discernible effect. T.J. Maxx's forecasted $1.7 billion loss of revenue never materialized in the quarterly sales report.10 Also, recall that a survey of shoppers indicated Target would lose 6% of its customers. If Target lost customers at that time, it wasn't apparent in the quarterly reported revenue. Apparently, people don't always do what they say they would do in surveys. This is consistent with the finding that what people say on a survey about how they value privacy and security does not appear to predict what they actually do, as one Australian study shows.11 So we shouldn't rely on that method to estimate losses, either.

We only looked into a few major retailers, but other researchers at the time backed up this observation with data from a much larger number of organizations. In March 2015, another analysis by Trefis also pointed out that overall changes in retail foot traffic at the time and competitive positions explain the changes or lack of changes in Target and Home Depot.12,13 Marshall Kuypers, who at the time was working on his management science and engineering PhD at Stanford, focused his study on this issue. He explained that, up to that point in time, all academic research “consistently found little evidence that the two are related” and that “signals dissipate quickly and the statistically significant correlation disappears after roughly three days.”

Now that we have seen even larger breaches than what we saw by the time the first edition was published, do we see any change to these findings? Yes. Another look at more recent events and more recent academic research does show that there is a direct market response to major events—but only for some companies.

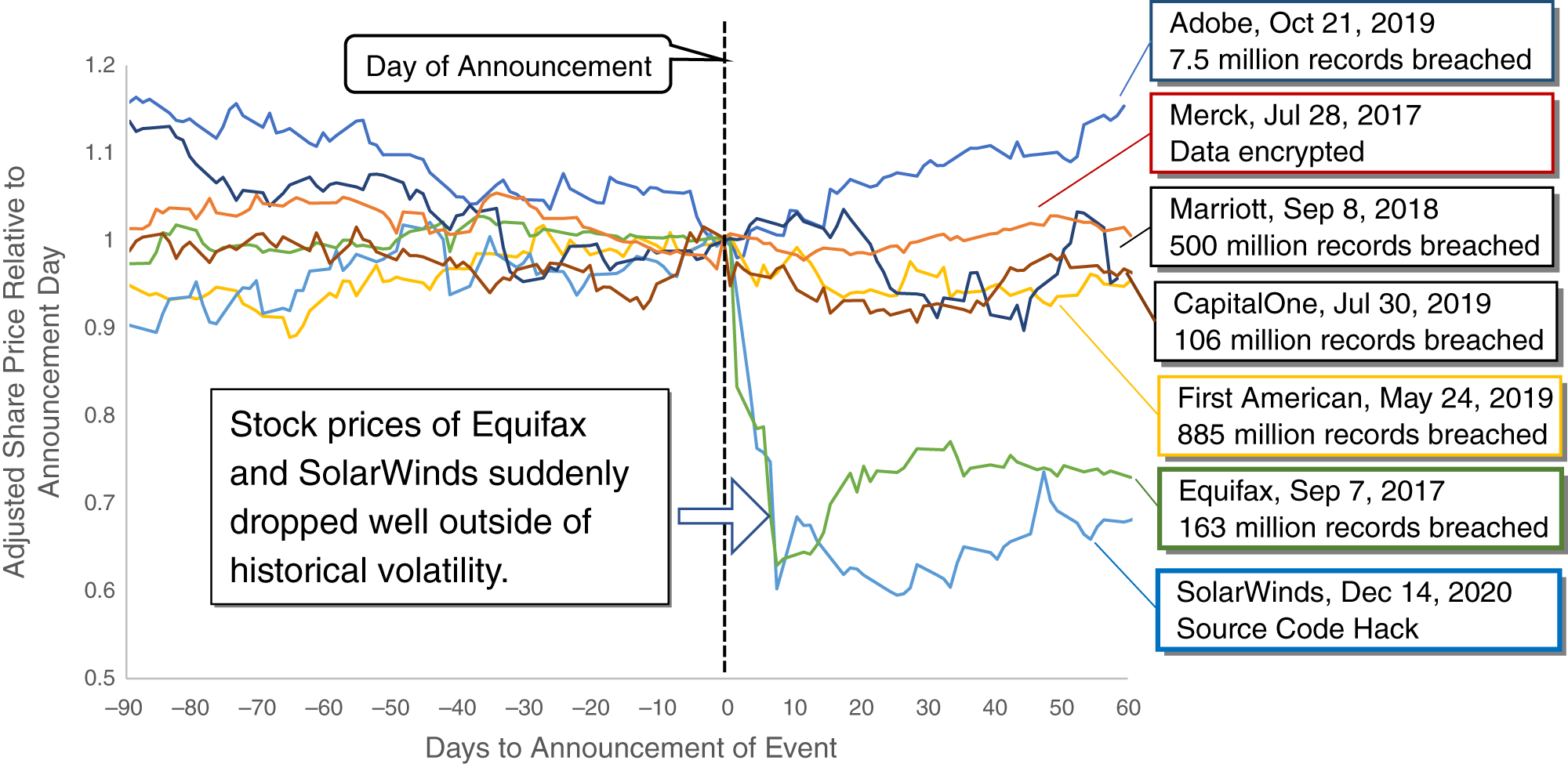

To see whether there were any new and obvious relationships between attacks (including data breaches and malware) and stock prices, we collected just a few examples of reported breaches and other attacks since 2016. We included the SolarWinds and Merck attacks mentioned in Chapter 1. Just these few anecdotes show that for some firms a major data breach really does result in a big drop in stock prices. And that drop appears to be a long‐term loss of shareholder value. In Figure 6.1 we show how stock prices changed before and after cyberattacks at Adobe, Marriott, First American Financial Corporation, Merck, Equifax, and SolarWinds.

Figure 6.1 shows each stock price as a ratio of the price just before the announcement of the attack, plotted against time relative to the announcement. On the horizontal axis, the “0” refers to the last price before the attack was publicly disclosed, and at that point in time the price is shown as a “1.” These normalized prices are adjusted further to remove trends in the broader stock market based on the S&P 500. This ensures that the changes we see don't just reflect a big change in the stock market that happened at about the same time as the cyberattack.
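The normalization just described can be sketched in a few lines of Python. We assume here that the market adjustment is done by dividing each normalized stock price by the S&P 500 normalized the same way. That is one plausible method and not necessarily the exact one behind the figure. The function name and all prices are hypothetical.

```python
def normalized_adjusted_prices(stock_prices, index_prices, event_idx):
    """Scale a stock so its price at event_idx equals 1, then divide out
    the market index scaled the same way (an assumed adjustment method)."""
    if len(stock_prices) != len(index_prices):
        raise ValueError("stock and index series must cover the same days")
    base_stock = stock_prices[event_idx]
    base_index = index_prices[event_idx]
    return [
        (stock / base_stock) / (index / base_index)
        for stock, index in zip(stock_prices, index_prices)
    ]


# Hypothetical closes; position 1 is the last close before public disclosure.
stock = [98.0, 100.0, 88.0, 86.0, 87.0]
index = [3950.0, 4000.0, 3960.0, 3980.0, 4020.0]

for day, value in enumerate(normalized_adjusted_prices(stock, index, 1), start=-1):
    print(f"day {day:+d}: {value:.3f}")
```

A value that stays near 1 after day 0 means the stock simply moved with the market. A value well below 1 is a drop the market as a whole does not explain.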

As you can see from the chart, the stock prices of some firms don't seem to be affected much or at all by the cyberattack. Adobe, with the smallest data breach shown in the chart at 7.5 million records, actually seems to have a stock price increase after the event. (This data is from the most recent Adobe attack. There was a much larger attack on Adobe in 2013.) Likewise, Marriott seemed to be unfazed. The Merck attack, for which Chubb was ordered to cover the claimed loss of $1.4 billion, seems to have a remarkably steady stock price in this time period. Remember, this attack involved the malicious encryption of data, including important intellectual property and operational systems, on thousands of computers. And yet it would be hard to discern any sell‐off by Merck investors from this data.

Capital One and First American Financial did have a temporary downturn at the point of public disclosure. In the case of Capital One, the change was about the size of the biggest drops you would expect to see two or three times a year in their historical data, a significant but not extremely rare change. Both stocks mostly recovered in 60 days. There may be some detectable long‐term effect if we looked at a large sample of companies with such a method. Some research shows that a buy‐and‐hold strategy of stocks involved in a major data breach had returns lower than market returns.14

Although the study was published in 2020, it only looked at breaches between 2003 and 2014. That study also did not differentiate what data was breached or the industry of the company breached. Even with our small sample, it appears this is an important consideration. Only two of the firms shown, SolarWinds and Equifax, clearly showed a major drop in stock price that occurred on the day of or the day after the announcement of the attack and was much larger than any recent volatility. This is a change far larger than any normal fluctuation even over several years. Even if major market trends such as a recession could cause such a drop, we've adjusted for that in the data. These large sell‐offs were a direct result of the cyberattacks.

Why do we see such a different market response to cyberattacks? SolarWinds and Equifax both provide critical services that rely on confidence in their products and data. These firms may be held to higher standards by the market than manufacturers or fast food chains.

Instead of relying on just a few anecdotes, we need to look at larger studies of many organizations and cyberattacks. One of the most comprehensive studies we've seen on this issue was published in 2021.15 This study is a useful source for computing data breach frequency because it counts the breaches by year and by industry type. Also, it shows that there is a detectable drop in market value of a firm under certain conditions. Here, we will summarize from all this research the key factors that seem to drive the stock price consequences of a data breach.

FIGURE 6.1 Changes in Stock Prices After Announcement of Selected Major Attacks

- The type of firm and the type of products attacked: If the Merck attack happened to a cloud provider, the shareholder value losses would have been much more significant. If the SolarWinds hack did not involve their software but their customer database, it is doubtful they would have seen anything like the stock price loss they experienced.

- The type of data lost: Personal financial data appears to affect stock prices. Other personal information, not so much.

- Frequency of attacks: If there is a second attack in the same year, it will have a bigger effect on stock prices.

- Responsiveness: If there is a delay in detection or a delay in reporting or inaction at the board level, there will be a bigger impact.

In summary, reputation damage might affect stock price depending on the expectations and trust of the market. If the conditions listed above don't apply, you are better off using a different method than stock price drops to assess reputation damage. That's our next topic.

Another Way to Model Reputation Damage: Penance Projects

We aren't saying major data breaches are without costs. There are real costs associated with them, but we need to think about how to model them differently than with vague references to reputation. If you think your risks are more like Adobe's, Merck's, or Marriott's and less like SolarWinds’ and Equifax's, then you need a different approach than just a drop in stock price to model impact on reputation. For example, instead of stock price drops or loss of revenue, management at Home Depot reported other losses in the form of expenses dealing with the event, including “legal help, credit card fraud, and card re‐issuance costs going forward.”16

Note that legal liabilities like what Home Depot faced are usually considered separately from reputation damage and there is now quite a lot of data on that point. Regulatory fines and Advisen data have been collected now for years. Even the European Union's General Data Protection Regulation (GDPR)—which was enacted just as the first edition of this book was released—now has thousands of fines in publicly available databases.

Costs other than stock price drops and legal liabilities may be more realistically modeled as “penance projects.” Penance projects are expenses incurred to limit the long‐term impact of loss of reputation. In other words, companies appear to engage in efforts to control damage to reputation instead of bearing what could otherwise be much greater damage. The effect these efforts have on reducing the real loss to reputation seems to be enough that the impact is hard to detect in sales or stock prices. These efforts include the following:

- Major new investments in cybersecurity systems and policies to correct cybersecurity weaknesses.

- Replacing a lot of upper management responsible for cybersecurity. (It may be scapegoating, but it may be necessary for the purpose of addressing reputation.)

- A major public relations push to convince customers and shareholders the problem is being addressed. (This helps get the message out that the efforts of the preceding bullet points will solve the problem.)

- Marketing and advertising campaigns (separate from getting the word out about how the problem has been confidently addressed) to offset potential losses in business.

For most organizations that aren't in the types of services Equifax or SolarWinds provide, these damage‐control efforts to limit reputation effects appear to be the real costs here, not so much reputation damage itself. Each of these is a conveniently concrete measure for which we have multiple historical examples. Of course, if you really do believe that there are other costs to reputation damage, you should model them. But what does reputation damage really mean to a business other than legal liabilities if you can't detect impacts on either sales or stock prices? For example, employee turnover might be an arguable loss. Just be sure you have an empirical basis for your claim. Otherwise, it might be simpler to stick with the penance project cost strategy.
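As a rough illustration of the penance project cost strategy, the four efforts listed above can each be given a 90% confidence interval and added up in a small Monte Carlo simulation. The sketch below assumes each cost is lognormally distributed and independent of the others. Every dollar range is a made‐up placeholder, not an estimate drawn from any real breach, and the function and variable names are ours.

```python
import math
import random

# A 90% interval on a normal distribution spans about 3.29 standard deviations.
SD_PER_90CI = 3.29


def lognormal_from_90ci(lower, upper, rng):
    """Draw one value from a lognormal whose 90% interval is (lower, upper)."""
    mu = (math.log(lower) + math.log(upper)) / 2
    sigma = (math.log(upper) - math.log(lower)) / SD_PER_90CI
    return rng.lognormvariate(mu, sigma)


def simulate_penance_costs(cost_ranges, trials=10_000, seed=1):
    """Sum independent draws of each cost per trial; return percentiles of the total."""
    rng = random.Random(seed)
    totals = sorted(
        sum(lognormal_from_90ci(lo, hi, rng) for lo, hi in cost_ranges.values())
        for _ in range(trials)
    )
    return {
        "p05": totals[int(0.05 * trials)],
        "median": totals[trials // 2],
        "p95": totals[int(0.95 * trials)],
    }


# Hypothetical 90% confidence intervals in dollars (placeholders only).
PENANCE_RANGES = {
    "new_security_investments": (500_000, 5_000_000),
    "management_replacement": (200_000, 2_000_000),
    "public_relations_push": (100_000, 1_000_000),
    "marketing_campaigns": (250_000, 3_000_000),
}

result = simulate_penance_costs(PENANCE_RANGES)
for name, value in result.items():
    print(f"{name}: ${value:,.0f}")
```

The output is a range for the total penance cost that could stand in for a vague "reputation" line item. Treating the costs as independent is a simplification. If you believe they rise and fall together, the total range should be wider.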

So if we spend a little more time and effort in analysis, it should be possible to tease out reasonable estimates even for something that seems as intangible as reputation loss.

Conclusion

A little decomposition can be very helpful up to a point. To that end, we showed a simple additional decomposition that you can use to build on the example in Chapter 3. We also mentioned a downloadable spreadsheet example and the descriptions of distributions in Appendix A to give you a few tools to help with this decomposition.

We talked about how some decompositions might be uninformative. We need to decompose in a way that leverages our actual knowledge—however limited our knowledge is, there are a few things we do know—and not speculation upon speculation. Test your decompositions with a simulation and compare them to your original estimate before the decomposition. This will show if you learned anything or show if you should actually make your range wider. We tackled a particularly difficult impact to quantify—loss of reputation—and showed how even that has concrete, observable consequences that can be estimated.

So far, we haven't spent any time on decomposing the likelihood of an event other than to identify likelihoods for two types of events (availability vs. confidentiality and integrity). This is often a bigger source of uncertainty for the analyst and anxiety for management than impact. Fortunately, that, too, can be decomposed. We will review how to do that later in Part II.

We also need to discuss where these initial estimates of ranges and probabilities can come from. As we discussed in earlier chapters, the same expert who was previously assigning arbitrary scores to a risk matrix can also be taught to assign subjective probabilities in a way that itself has a measurable performance improvement. Then those initial uncertainties can be updated with some very useful mathematical methods even when it seems like data is sparse. These topics will be dealt with in the next two chapters, “Calibrated Estimates” and “Reducing Uncertainty with Bayesian Methods.”

Notes

- 1. Ronald A. Howard and Ali E. Abbas, Foundations of Decision Analysis (New York: Prentice Hall, 2015), 62.

- 2. Michael Burns and Judea Pearl. “Causal and Diagnostic Inferences: A Comparison of Validity,” Organizational Behavior and Human Performance 28, no. 3 (1981): 379–394.

- 3. Ronald A. Howard, “Decision Analysis: Applied Decision Theory,” Proceedings of the Fourth International Conference on Operational Research (New York: Wiley‐Interscience, 1966).

- 4. Ronald A. Howard and Ali E. Abbas, Foundations of Decision Analysis (New York: Prentice Hall, 2015), xix.

- 5. “Target Says Data Breach Hurt Sales, Image; Final Toll Isn't Clear,” Dallas Morning News, March 14, 2014.

- 6. Trefis Team, “Home Depot: Will the Impact of the Data Breach Be Significant?” Forbes, March 30, 2015, www.forbes.com/sites/greatspeculations/2015/03/30/home-depot-will-the-impact-of-the-data-breach-be-significant/#1e882f7e69ab.

- 7. Karthik Kannan, Jackie Rees, and Sanjay Sridhar, “Market Reactions to Information Security Breach Announcements: An Empirical Analysis,” International Journal of Electronic Commerce 12, no. 1 (2007): 69–91.

- 8. Alessandro Acquisti, Allan Friedman, and Rahul Telang, “Is There a Cost to Privacy Breaches? An Event Study,” ICIS 2006 Proceedings (2006): 94.

- 9. Huseyin Cavusoglu, Birendra Mishra, and Srinivasan Raghunathan, “The Effect of Internet Security Breach Announcements on Market Value: Capital Market Reactions for Breached Firms and Internet Security Developers,” International Journal of Electronic Commerce 9, no. 1 (2004): 70–104.

- 10. Ryan Singel, “Data Breach Will Cost TJX 1.7B, Security Firm Estimates,” Wired, March 30, 2007, www.wired.com/2007/03/data_breach_wil/.

- 11. Wallis Consulting Group, Community Attitudes to Privacy 2007, prepared for the Office of the Privacy Commissioner, Australia, August 2007, www.privacy.gov.au/materials/types/download/8820/6616.

- 12. Trefis Team, “Home Depot: Will the Impact of the Data Breach Be Significant?” March 27, 2015, www.trefis.com/stock/hd/articles/286689/home_depot_will_the_impact_of_the_data_breach_be_significant/2015_03_27.

- 13. Trefis Team, “Data Breach Repercussions and Falling Traffic to Subdue Target's Results,” August 18, 2014, www.trefis.com/stock/tgt/articles/251553/aug-20data-breach-repercussions-and-falling-traffic-to-subdue-targets-results/2014-08-18.

- 14. Chang, Gao, Lee, “The Effect of Data Theft on a Firm's Short‐Term and Long‐Term Market Value,” Mathematics 2020, 8, 808.

- 15. Kamiya, et al. “Risk Management, Firm Reputation, and the Impact of Successful Cyberattacks on Target Firms,” Journal of Financial Economics, Volume 139, Issue 3, March 2021, pp. 719–749.

- 16. Trefis Team, “Home Depot: Will the Impact of the Data Breach Be Significant?” March 27, 2015, www.trefis.com/stock/hd/articles/286689/home-depot-will-the-impact-of-the-data-breach-be-significant/2015-03-27.