Chapter 7

Evaluation: It’s Not Over Yet!

IN THIS CHAPTER

Recognizing the purpose of evaluations

Getting an overview of Kirkpatrick’s Four Levels

Exploring Phillips’ ROI

Understanding the basics of creating a practical evaluation plan

Practicing evaluation using a practical virtual trainer skills assessment

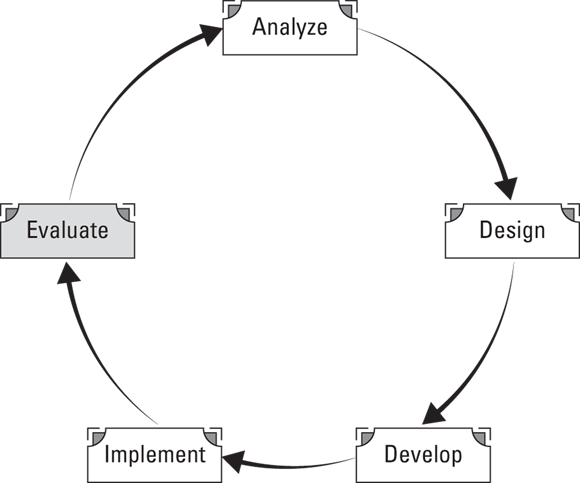

The fifth and final stage of the ADDIE model described in Chapter 3 is to evaluate performance. When you reach this stage, you have made it through the entire Training Cycle and can see the beauty of the complete cycle.

You’ll find yourself returning to the earlier stages of the Training Cycle during the evaluation stage. For example, you return to the assessment stage to confirm that you’re evaluating what you designed the training for. You may use the objectives you wrote in the second stage to create specific evaluation criteria, so write training objectives that are specific, measurable, and easily converted to items on an evaluation instrument or performance rating. In fact, you should have begun planning your evaluation at the first stage.

In this chapter, I expand on the reasons for conducting evaluations, describe Kirkpatrick’s Four Levels of evaluation, and provide guidance on designing a plan to evaluate your training skills, whether you train online or in a classroom. The chapter focuses on two important processes for evaluating training: Kirkpatrick’s Four Levels and Phillips’ ROI.

Grasping the Purpose of Evaluations

The evaluation stage of the Training Cycle, highlighted in Figure 7-1, is important to you as a trainer. It’s here that you can prove your value as a business partner to your organization. You will be able to answer questions such as these: How has training changed employee performance? How has training increased sales or reduced expenses? How has training reduced rework and defects? How has training affected turnover and employee satisfaction? And ultimately, how is training affecting the bottom line?

© John Wiley & Sons

FIGURE 7-1: Stage V of the Training Cycle: Evaluate.

One purpose of training is to upskill employees for more responsible jobs. Training can improve behavior, opinions, knowledge, or skill level. The purpose of evaluation is to determine whether the training met its objectives and whether these improvements have taken place. One way to appreciate the importance of evaluations is to recognize the feedback they provide. An organization can obtain feedback about the value of training from employees’ self-reports, from observation of trainees, or from business data.

Various kinds of evaluations provide feedback to different people in the following ways:

- Employees can evaluate their success in mastering new knowledge, attitudes, and skills. They can also see their work-related strengths and weaknesses. Evaluation results can be a source of positive reinforcement and an incentive or motivation.

- Trainers can use feedback to develop future interventions and programs or to modify current training efforts. It also helps trainers ensure that their work supports the organization’s goals.

- Supervisors can look for observable change in employees’ effectiveness or performance as a result of participating in the training efforts.

- The organization can use feedback to determine return on investment from the training.

Reviewing Kirkpatrick’s Four Levels of Evaluation

The concept of different levels of evaluation helps you understand the measurement and evaluation process.

As you examine each of the Four Levels in the following sections, note the elegant but simple sequence from Level 1 through Level 4. At each level, the data become more objective and more meaningful to management and the bottom line. In addition, progressing through each level requires more work and more sophisticated data-gathering techniques.

Although some organizations have shown an increased interest in training evaluation, many organizations still evaluate training at only the first level. That level is the easiest to implement but doesn’t really give organizations what they need to measure the value of training.

Level 1: Reaction

Level 1, or participant reaction data, measures the learners’ satisfaction with the training. The reaction to a training session may decide whether it will be repeated, especially if it was conducted by someone outside the organization. If the training was presented internally, the Level 1 evaluation provides guidance about what to change. The same is true for training from an outside provider, but sometimes the provider never gets the chance to make the improvement, because the client organization simply won’t send its employees to another session. Most training efforts are evaluated at this level.

Level 1 evaluation usually consists of a questionnaire, sometimes called a smile sheet, that participants use to rate their level of satisfaction with the training program and the trainer, among other things. In a virtual classroom, Level 1 data is often collected through electronic surveys.

If you’re conducting a multiple-day training session, it’s beneficial to evaluate at the end of each day. If you’re conducting a one-day session, you may decide to evaluate it halfway through. This midway evaluation benefits you as the trainer because it gives you feedback you can use to adjust the design to better meet the participants’ needs. It also benefits participants because they get to think about what they learned and how they will apply it to their jobs.

You can do the same online using a polling feature. If you hold a discussion about what to change, you can capture participants’ suggestions in the chat as they share their thoughts.

Level 1 makes no attempt to measure behavioral change or performance improvement. Nevertheless, Level 1 data does provide valuable information:

- It measures the trainer’s performance.

- It’s an easy and economical process.

- If the tool is constructed well, the data can identify what needs to be improved.

- The satisfaction level provides guidance to management about whether to continue to invest in the training.

- If you conduct the evaluation immediately following the training session, the return rate is generally close to 100 percent, providing a complete data set.

Level 2: Learning

Level 2 measures the extent to which learning has occurred. The measurement of knowledge, skills, or attitude change (KSAs; see Chapter 2) indicates what participants have absorbed and whether they know how to implement what they learned. Probably all training designs have at least one objective to increase participant knowledge. Many training sessions also include objectives that improve specific skills, and some training sessions, such as diversity or team building, attempt to change attitudes.

The training design’s objectives provide an initial basis for what to evaluate in Level 2. This is the point when trainers can find out not only how satisfied the participants are but also what they can do differently as a result of the training. Tests, skill practices, simulations, group evaluations, role plays, and other assessment tools focus on what participants learned during the program. Although testing is a natural part of learning, the word test often conjures up stress and fears left over from bad experiences in school. Therefore, when possible, substitute other words for tests or exams — even if testing is what you’re doing. Measuring learning provides excellent data about what participants mastered as a result of the training experience. This data can be used as

- A self-assessment for participants to compare what they gained as a result of the training

- An assessment of an employee’s knowledge and skills related to the job requirements

- An indication of an employee’s attitude about the content, if an attitude survey is conducted

- An assessment of whether participants possess knowledge to safely perform their duties — which is especially critical in manufacturing or construction settings

Level 3: Behavior

Level 3 evaluation measures whether the skills and knowledge are being implemented — that is, whether participants are applying what they learned to the job.

Because this measurement focuses on changes in behavior on the job, it’s more difficult to measure than Levels 1 and 2, for several reasons. First, participants can’t implement the new behavior until they have an opportunity. In addition, it’s difficult to predict when the new behavior will be implemented. Even if an opportunity arises, the learner may not implement the behavior right away. Therefore, deciding when to measure becomes an issue.

To complicate things even more, the participant may have learned the behavior and applied it on the job, but the participant’s supervisor doesn’t allow the behavior to continue. As a trainer, you hope that’s not happening, but unfortunately it occurs more often than you’d like. This is when the training department must ask itself whether the problem requires a training solution.

Measuring Levels 1 and 2 should occur immediately following the training, but this isn’t true for Level 3. To conduct a Level 3 evaluation correctly, you must find time to observe the participants on the job, create questionnaires, speak to supervisors, and correlate data. Even though measuring at Level 3 may be difficult, here are some clear benefits of measuring behaviors:

- The measure may encourage a behavioral change on the job.

- When possible, Level 3 can be quantified and tied to other outcomes on the job or in the organization.

- A clearly defined lack of transfer of skills can point to a required training design change or a change in the environment in which the skill is used.

- A before-and-after measurement provides data that can be used to understand other events.

- Sometimes Level 3 evaluations help to determine the reasons that change hasn’t occurred, reasons that may not be related to training.

Level 4: Results

Level 4 measures the business impact. Sometimes called return on expectation, it determines whether the benefits from the training were worth the cost of the training. At this level, the evaluation isn’t accomplished through methods like those suggested in the previous three levels.

Results can include reduced turnover, improved quality, increased quantity or output, reduced costs, increased profits, increased sales, improved customer service, less waste or fewer errors, less absenteeism, or fewer grievances. You also need to determine whether the results are worth the cost to design and conduct the training. Identifying and capturing the cost data is relatively easy. You account for the cost of the trainer, materials, and equipment; travel for participants and the trainer; training space; and the cost of having participants in a training session instead of producing the organization’s services and products.
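Because the cost side of Level 4 is mostly addition, a short script can keep the categories straight. This Python sketch uses one field per cost named in the paragraph above; the amounts, the class and function names, and the hourly rate used to value participants’ time are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass
class TrainingCosts:
    """One field per cost category listed above (amounts in dollars)."""
    trainer: float
    materials: float
    equipment: float
    travel: float            # participants and trainer
    training_space: float
    participant_time: float  # pay for hours spent in training instead of working

    def total(self) -> float:
        # Sum every field, so a category added to the class is counted automatically.
        return sum(getattr(self, f.name) for f in fields(self))


def participant_time_cost(participants: int, hours_in_training: float,
                          hourly_salary_and_benefits: float) -> float:
    """Value participants' time away from the job at their loaded hourly rate."""
    return participants * hours_in_training * hourly_salary_and_benefits


if __name__ == "__main__":
    # All figures are hypothetical.
    costs = TrainingCosts(
        trainer=5_000,
        materials=3_000,
        equipment=1_000,
        travel=4_000,
        training_space=2_000,
        participant_time=participant_time_cost(25, 16, 40.0),  # $16,000
    )
    print(f"Total training cost: ${costs.total():,.0f}")
```

Keeping participants’ time as its own line item makes it harder to forget, and it is often one of the largest costs.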

Measurements focus on the actual results on the business as participants successfully apply the program material. Typical measures may include output, quality, time, costs, and customer satisfaction.

Guidelines for measuring the Four Levels

You don’t necessarily have to start with Level 1 and move through Levels 2, 3, and 4 when evaluating and measuring the training. As I remind you elsewhere, you start your design with the end in mind, and this is certainly true with evaluation. Return to the purpose of the training. What does the organization want to accomplish? What does it expect as a result of the training? Reduced turnover rates? Larger sales? Fewer accidents? This is Level 4. Before you design your training program, find out from management what their expectation is. Jim Kirkpatrick, Don’s son, calls this Return on Expectation, or ROE.

You will still use all four levels, and the chronological order will still be the same. But thinking about Level 4 first — standing Don’s Four Levels model on its head — gives evaluation a different perspective, as you can read in the “When evaluating training, the end is the beginning” sidebar. In this section, I tell you about methods and guidelines to use for measuring each of the Four Levels as you develop your evaluation plan.

Measuring Level 1

Level 1, or reaction, can be easily measured during the training event or immediately following it, preferably before participants leave the classroom. You usually use a questionnaire, composed of both questions with a rating scale and open-ended questions. Some trainers allow participants to take the evaluation with them or go online to complete it after the session. The drawback is a lower return rate (for in-person or online training).

How do you begin?

Determine what you want to learn about the training.

You will most likely want to know something about the content, including its relevance to the participants’ jobs and the degree of difficulty. You will also want to gather data about the trainer including effectiveness, communication skills, the ability to answer questions, and approachability.

Design a format that is easy for participants to complete and lets you quantify their responses.

Many formats exist, and the design will depend on your answer to the first question: What do you want to learn? You may choose to have statements rated on a one- to seven-point scale ranging from strongly disagree to strongly agree (or poor to excellent). You may also want to ask open-ended questions, or you may choose to combine both types of questions. Even if you develop a format with questions rated on a scale, adding space at the end for comments is a good idea.

Plan to obtain a 100-percent response rate with fully completed forms.

Obtaining a high level of response is mostly in the timing. First, plan time into the training design to complete the questionnaire. Then, 20 minutes prior to the end of the training session, pass out the evaluations and ask participants to complete them. It should take only ten minutes, allowing you time to facilitate your closing activity so that the learners’ last interactions are memorable and positive. Online participants should know where and how to locate the evaluation.

Your training department will most likely have determined an acceptable standard against which you will measure results. For example, 5.5 on a 7.0 scale may be considered acceptable by your training department.
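If you tabulate Level 1 ratings yourself, a short script can average each item and flag any that fall below your department’s standard. This Python sketch assumes a one- to seven-point scale and uses the 5.5 example standard; the item wording and the ratings are hypothetical.

```python
from statistics import mean

# Hypothetical smile-sheet items, each rated 1 (strongly disagree) to 7 (strongly agree).
ITEMS = ["Content relevant to my job", "Trainer answered questions", "Pace was appropriate"]
ACCEPTABLE = 5.5  # example standard from the text; use your department's own


def item_averages(responses: list[list[int]]) -> dict[str, float]:
    """Average each item's ratings across all participants (one row per participant)."""
    return {item: mean(row[i] for row in responses) for i, item in enumerate(ITEMS)}


def below_standard(averages: dict[str, float], standard: float = ACCEPTABLE) -> list[str]:
    """Items whose average falls short of the standard, in questionnaire order."""
    return [item for item, avg in averages.items() if avg < standard]


if __name__ == "__main__":
    responses = [
        [7, 6, 5],
        [6, 7, 4],
        [6, 6, 5],
        [7, 5, 6],
    ]
    averages = item_averages(responses)
    for item, avg in averages.items():
        print(f"{item}: {avg:.2f}")
    print("Needs attention:", below_standard(averages))
```

Reporting averages item by item, rather than one overall score, points you to the specific part of the session to improve.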

If you follow these guidelines, you will be well on your way to finalizing an effective Level 1 evaluation. If you are conducting a virtual session, a virtual trainer strengths assessment at the end of this chapter may provide additional ideas to create your Level 1 evaluation. You and your colleagues may want to use the evaluation to provide feedback to each other on your training skills.

Measuring Level 2

You can measure Level 2, or learning, using self-assessments, facilitator assessments, tests, simulations, case studies, and other exercises. You should conduct this type of evaluation at the end of the training before participants leave so that you can measure the degree to which they learned the content. Use pre- and post-test results to compare improvement.

Measuring before and after the training session gives you the best data because you can compare participants’ knowledge levels before and after they complete the training. You measure the knowledge, skills, and attitudes (KSAs; see Chapter 2) that the training session was designed to improve. Use tests to measure knowledge, and use attitude surveys to measure changing attitudes. To measure skill acquisition, use performance tests, which have the participant actually model the skill. As with Level 1, attempt to obtain a 100-percent response rate.
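To see the pre- and post-test comparison in numbers, this Python sketch computes each participant’s gain and the group’s average gain. The names and scores are hypothetical, and the sketch simply leaves out anyone who missed one of the two tests.

```python
from statistics import mean


def score_gains(pre: dict[str, float], post: dict[str, float]) -> dict[str, float]:
    """Post-test minus pre-test score for everyone who took both tests."""
    return {name: post[name] - pre[name] for name in pre if name in post}


def average_gain(pre: dict[str, float], post: dict[str, float]) -> float:
    """Mean gain across participants who took both tests."""
    gains = score_gains(pre, post)
    if not gains:
        raise ValueError("no participant took both tests")
    return mean(gains.values())


if __name__ == "__main__":
    # Hypothetical percent-correct scores; Eve missed the post-test.
    pre = {"Ana": 55, "Ben": 60, "Chi": 70, "Dev": 45, "Eve": 65}
    post = {"Ana": 80, "Ben": 75, "Chi": 90, "Dev": 70}
    for name, gain in score_gains(pre, post).items():
        print(f"{name}: +{gain}")
    print(f"Average gain: {average_gain(pre, post):.2f} points")
```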

Some trainers use a control group for comparison, and Dr. Kirkpatrick recommends that you do so if practical. Although this may be the most scientific way to gather data, it may also be a waste of time. For example, if participants have another way to learn between pre- and post-tests, don’t send them to training. On the other hand, if training is the only way that participants can gain the knowledge, skills, and attitudes, why bother with a control group? You’ll need to be your own judge about whether a control group is beneficial.
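If you do use a control group, one simple way to read the results is to compare the trained group’s average gain with the control group’s average gain; the difference is a rough estimate of the improvement that training, rather than other learning, accounts for. This Python sketch uses hypothetical scores and is not a substitute for a statistician’s review.

```python
from statistics import mean


def average_gain(pre: list[float], post: list[float]) -> float:
    """Mean of post-test minus pre-test scores, paired by position."""
    if len(pre) != len(post) or not pre:
        raise ValueError("pre and post scores must be non-empty and paired")
    return mean(after - before for before, after in zip(pre, post))


def gain_beyond_control(trained_pre, trained_post, control_pre, control_post) -> float:
    """How much more the trained group improved than the control group did."""
    return average_gain(trained_pre, trained_post) - average_gain(control_pre, control_post)


if __name__ == "__main__":
    # Hypothetical percent-correct scores: (pre-test, post-test) for each group.
    trained = ([55, 60, 70, 45], [80, 75, 90, 70])
    control = ([50, 65, 60, 55], [55, 65, 65, 60])
    print(f"Gain beyond control group: {gain_beyond_control(*trained, *control):.2f} points")
```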

You can choose from among many types of testing formats, and each brings with it advantages and disadvantages. True/False tests are easy to develop and cover many questions in a short amount of time. The drawback is that if the test is too easy, having superficial knowledge may lead to an inflated score. Be sure to use a subject matter expert (SME, pronounced “smee”) to assist with the design.

Other available testing options include oral tests, essay tests, multiple-choice tests, and measured simulations such as in-basket exercises, business games, case studies, or role plays. Assessments may also include self-assessments, team assessments, or instructor assessments. You can also create an online evaluation. Although an online assessment can be efficient, it brings a couple of problems: the difficulty of guaranteeing that participants are who they say they are when signing in, and the challenge of protecting the questions in the exam banks. Most organizations that use online assessments have addressed both of these issues. Whatever testing option you use, be sure that the results are quantifiable.

Measuring Level 3

Level 3, or behavior, determines the successful transfer of learning to the workplace. Unlike with Levels 1 and 2, you need to allow time for the changed behavior to occur. How much time? The experts differ, and with good reason. The amount of time required for the change to manifest will itself depend on the type of training, how soon participants have an opportunity to practice the skill, how long participants take to develop a new behavioral pattern, and other aspects of the job. All this can take anywhere from one to six months. You’ll probably need to work with the SME and supervisors to determine the length of the delay required to allow participants an opportunity to transfer the learning (behavior) to the job.

By the way, as with Level 2, I recommend a pre- and post-testing method, and you’ll need to decide whether to incorporate a control group for comparison. (See the preceding “Measuring Level 2” section for the pros and cons of having a control group.)

Each item in an evaluation instrument for Level 3 should be related to an objective taught as part of the training program. Based on the training objectives, you create a list of skills that describe the desired on-the-job performance. Use a SME to assist you with the design of the evaluation. The SME will understand the nuances of being able to complete a task. For example, a skill may be “uses the four-step coaching model with employees.” A SME will know that even though the four steps are essential, what truly makes the model successful is the supervisor’s “willingness to be available to respond to questions at any time.” Knowing this, your checklist of skills expands to include availability.

After you identify the skills to measure, you select an evaluation method. Evaluation tools may include interviews, focus groups, on-site observations, follow-up questionnaires, customer surveys, and colleague or supervisory input.

Don’t take Level 3 evaluations lightly; they require a major resource investment. Know what you will measure; know how you will measure; and most important, know how you will use the data.

What do you do if the results show that performance hasn’t changed or skills haven’t been mastered? You need to go back to the training design. Certainly, the first step is to determine whether the skill is required. If yes, examine the training material to ensure that you’ve used appropriate learning techniques and placed enough emphasis on the skill. Perhaps a job aid is required to improve performance. Sometimes you may discover something that didn’t show up in the needs assessment. For example, you may discover that participants aren’t using the skill because it’s no longer important or isn’t frequently used on the job. In that case, you may want to remove it from the training session. This is a perfect example of how the fifth stage of the Training Cycle, evaluation, feeds back into the first stage.

Getting results that demonstrate that performance hasn’t improved is not what a trainer wants to hear. However, that knowledge helps you make an intelligent decision about whether to maintain the training program, overhaul it, or scrap it entirely. Without a Level 3 evaluation, you probably can’t make a wise decision.

Measuring Level 4

Level 4, or results, may be the most difficult to measure. Dr. Kirkpatrick frequently stated that the question he was asked most often was, “How do you evaluate Level 4?” Even though Level 4 is the most challenging to evaluate, training professionals need to be able to demonstrate that training is valuable and can positively affect the bottom line.

At this level, you can never be sure whether external factors affected what happened. There is always a possibility that something other than the on-the-job application contaminated the results. Can you really isolate the effects that training has on business results? This is one time that using a control group can be helpful to the evaluation results. Yet even with a control group, other factors may impact the business, such as the loss of a good customer, the introduction of a new competitor, a new hiring practice, or the economy.

So what do you do when management asks you to provide tangible evidence that training is having positive results? A before-and-after measurement is relatively easy because records for the kinds of things you measure (turnover, sales, expenses, errors, grievances) are generally available. The trick is to determine which figures are meaningful.

A second difficulty arises in predicting how much time to allow for the change to take effect. It may take six or more months. Gather the data that you believe provides evidence of the impact of the training. This measurement usually extends beyond the training department and uses tools that measure business performance, such as sales, expenses, or rework. Remember, a key issue is to try to isolate training’s impact on results. You may not be able to prove beyond a doubt that training has had a positive business impact, but you will be able to produce enough evidence for management to make the final decision.
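Trend-line analysis is one common way to try to isolate training’s impact: project the pre-training trend forward and treat the gap between actual and projected results as the improvement attributable to training. It assumes the earlier trend would have continued and that nothing else changed, so read the result as evidence, not proof. This Python sketch fits a straight least-squares line to hypothetical monthly sales figures; the function names are illustrative.

```python
def fit_line(ys: list[float]) -> tuple[float, float]:
    """Least-squares slope and intercept for ys observed at x = 0, 1, 2, ..."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two pre-training data points")
    xs = range(n)
    mean_x = (n - 1) / 2
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def improvement_beyond_trend(before: list[float], after: list[float]) -> list[float]:
    """Actual minus projected value for each period after training.
    For measures where lower is better (errors, grievances), look for negative gaps."""
    slope, intercept = fit_line(before)
    start = len(before)
    projected = [intercept + slope * (start + i) for i in range(len(after))]
    return [actual - proj for actual, proj in zip(after, projected)]


if __name__ == "__main__":
    # Hypothetical monthly sales (units): four months before training, two after.
    before = [100, 102, 104, 106]
    after = [115, 118]
    print(improvement_beyond_trend(before, after))
```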

Figuring out which evaluation level to use

You may choose to evaluate training at one or all four levels. How do you decide? Base your decision on answers to the following questions:

- What is the purpose of the training?

- How will you use the results of the evaluation?

- What changes or improvements may be made as a result of the evaluations?

- Who are the stakeholders and what do they want you to measure?

- What are the stakeholders’ expectations?

- What business goals will the training impact?

- What resources will be required for the evaluation?

Just because there are four evaluation levels doesn’t mean that you should use all four every time. After answering the preceding questions, decide how far you will go through the four levels. Will you stop after Level 1 or use all four? This depends on what’s important to measure for the training program. Evaluation experts agree that Level 3 and especially Level 4 should be used sparingly because of the time and cost involved. Always start with Level 1. In addition, a rule of thumb seems to be to use all four levels in situations in which the results are a top organizational priority or for training that is expensive.

Taking a deeper look at evaluation methods

I’ve presented a number of evaluation methods so far in this chapter. In this section, you look at some of the specific tools and their advantages and disadvantages to help you choose the one that will work best. All these evaluation methods work, and all give you the information you need, but some work better than others for each of the four levels. The final decision about which method to use is yours.

Objective test formats

This method measures how well trainees learn program content. A facilitator administers paper-and-pencil or computer tests in class to measure participants’ progress. The test should measure the learning specified in the objective. Tests should be valid and reliable. Valid means that an item measures what it’s supposed to measure; reliable means that the test gives consistent results from one application to another. Here are some objective test formats:

- Multiple-choice questions take time and consideration to design. However, they maximize test-item discrimination while minimizing the chance of guessing correctly. They provide an easy format for participants and an easy method for scoring.

- True/False tests are more difficult to write than you may imagine. They are easy to score.

- Matching tests are easy to write and to score. They require a minimum amount of writing but still offer a challenge.

- Fill-in-the-blank or short-answer questions require knowledge without any memory aids. A disadvantage is that scoring may not be as objective as you may think. If the questions don’t have one specific answer, the scorer may need to be more flexible than originally planned. Guessing by learners is reduced because no choices are available.

- Essays are the most difficult to score, although they do measure achievement at a higher level than any of the other paper-and-pencil tests. Scoring is the most subjective.
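One common way to check the reliability described above is test-retest: give the same test to the same people twice, with no training in between, and correlate the two sets of scores. The chapter doesn’t prescribe a statistic, so treat this Python sketch, which computes a Pearson correlation on hypothetical scores, as an illustration only. Values close to 1.0 suggest consistent results; reliability says nothing about validity.

```python
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two paired lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need paired scores for at least two people")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must vary to compute a correlation")
    return cov / sqrt(var_x * var_y)


if __name__ == "__main__":
    # Hypothetical scores for the same five people on two sittings of one test.
    first_sitting = [70, 80, 90, 60, 75]
    second_sitting = [72, 78, 91, 62, 74]
    print(f"Test-retest reliability: {pearson(first_sitting, second_sitting):.2f}")
```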

Attitude surveys

These question-and-answer surveys determine what changes in attitude have occurred as a result of training. Practitioners use these surveys to gather information about employees’ perceptions, work habits, motivation, values, beliefs, and working relations. Attitude surveys are more difficult to construct because they measure less tangible items. There is also the potential for participants to respond with what they perceive to be the “right” answer.

Simulation and on-site observation

Instructors’ or managers’ observations of performance on the job or in a job simulation indicate whether a learner is demonstrating the desired skills as a result of the training. You facilitate this process by developing a checklist of the desired behaviors, which is sometimes the only way to determine whether skills have transferred to the workplace. Some people panic or behave differently if they think they are being observed. Observations of actual performance or simulated performance can be time consuming. This method also requires a skilled observer to decrease subjectivity.

Criteria checklists

Also called performance checklists or performance evaluation instruments, these are surveys using a list of performance objectives required to evaluate observable performance. The checklists may be used in conjunction with observations.
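A criteria checklist is easy to score once observations are recorded. This Python sketch reuses the coaching behaviors from the Level 3 example earlier in the chapter; the scoring rule (percentage of behaviors observed) and the function names are assumptions, not a standard.

```python
# Behaviors drawn from the coaching example in "Measuring Level 3".
CHECKLIST = (
    "Uses the four-step coaching model with employees",
    "Is available to respond to questions at any time",
)


def checklist_score(observed: dict[str, bool], checklist=CHECKLIST) -> float:
    """Percentage of checklist behaviors the observer marked as demonstrated.
    Anything the observer didn't mark counts as not demonstrated."""
    shown = sum(1 for item in checklist if observed.get(item, False))
    return 100 * shown / len(checklist)


def missing_behaviors(observed: dict[str, bool], checklist=CHECKLIST) -> list[str]:
    """Behaviors to follow up on, in checklist order."""
    return [item for item in checklist if not observed.get(item, False)]


if __name__ == "__main__":
    observation = {
        "Uses the four-step coaching model with employees": True,
        "Is available to respond to questions at any time": False,
    }
    print(f"{checklist_score(observation):.0f}% of behaviors observed")
    print("Follow up on:", missing_behaviors(observation))
```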

Productivity or performance reports

Hard production data such as sales reports and manufacturing totals can help managers and instructors determine actual performance improvement on the job. An advantage of using productivity reports is that you don’t have to develop a new evaluation tool. Also, the data is quantifiable. Disadvantages include a lack of contact with participants and the possibility that records are incomplete.

Post-training surveys

Progress and proficiency assessments by both managers and participants indicate perceived performance improvement on the job. Surveys may not be as objective as necessary.

Needs/objectives/content comparison

Training managers, participants, and supervisors compare needs analysis results with course objectives and content to determine whether the program was relevant to participants’ needs. Relevancy ratings at the end of the program also contribute to the comparison.

Class evaluation forms

On these end-of-program evaluation forms, sometimes called response sheets or smile sheets, participants indicate what they liked and disliked about the training delivery, content, logistics, location, and other aspects of the training experience. The form lets participants know that you want their input. You can gather both quantitative and qualitative data.

Interviews

You can use interviews to determine the extent to which skills and knowledge are being used on the job. Interviews may also uncover constraints to implementation. In a way that no other method can, interviews convey interest, concern, and empathy in addition to collecting data. They are useful when observing behaviors directly isn’t possible. The interviewer becomes the evaluation tool, which can pose both an advantage and a disadvantage. Interviews are more costly than other methods, but they give instant feedback, and the interviewer can probe for more information.

Instructor evaluation

Professional trainers administer assessment sheets and evaluation forms to measure the instructor’s competence, effectiveness, and instructional skills. See an example at the end of this chapter.

Using ROI for Training

Trainers face persistent pressure to be more accountable and to prove their worth: their return on the dollars invested in training. Dr. Jack Phillips is credited with developing the Return on Investment (ROI) methodology for evaluating training. (See the upcoming sidebar for an interview with Jack Phillips about ROI.)

ROI measurement compares the monetary benefits of the training program with the cost of the program. Currently, fewer than 20 percent of organizations conduct evaluations at this level. Many of those organizations that do evaluate training using ROI limit its use to training programs that

- Are expensive

- Are high visibility or important to top management

- Involve a large population

- Are linked to strategic goals and operational objectives

Although many organizations claim to want to know more about training’s ROI, few seem willing to make the investment required to gather and analyze the data.

Exploring the ROI process

The ROI process expresses training’s value-added contribution in a corporate-friendly format, and Kirkpatrick’s Levels 1 through 4 are essential for gathering the initial data. The ROI process consists of these five steps:

Collect post-program data.

You use a variety of methods, similar to those identified in the last section, to collect Level 1 through Level 4 data.

Isolate the effects of training.

Many factors may influence performance data, so you need to take steps to pinpoint the amount of improvement that you can attribute directly to the training program. You can use a comprehensive set of tools that may include a control group, trend-line analysis, forecasting models, and impact estimates from various groups.

Convert data to a monetary value.

Assess the Level 4 data and assign a monetary value. Techniques may include using historical costs, using salaries and benefits as value for time, converting output to profit contributions, or using external databases.

Tabulate program costs.

Identifying program costs includes at least the cost to design and develop the program, materials, facilitator salary, facilities, travel, administrative and overhead, and the salary or wages for the participants who attend the program.

Calculate the ROI.

You calculate ROI by dividing the net program benefits (program benefits minus program costs) by the program costs and then multiplying that result by 100. In this step, you also identify intangible benefits, such as increased job satisfaction, improved customer service, and reduced complaints.
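The conversion and calculation steps above come down to a few lines of arithmetic. This Python sketch converts two kinds of improvement to money (time valued at salaries and benefits, errors valued at their historical cost), keeps only the share attributed to training, and applies ROI (%) = (program benefits - program costs) / program costs × 100. Every figure and function name is hypothetical, and intangible benefits are left out because they aren’t converted to money.

```python
def value_of_time_saved(hours_saved_per_week: float, participants: int,
                        weeks: int, hourly_salary_and_benefits: float) -> float:
    """Convert time saved to money using salaries and benefits as its value."""
    return hours_saved_per_week * participants * weeks * hourly_salary_and_benefits


def value_of_errors_avoided(errors_avoided: int, historical_cost_per_error: float) -> float:
    """Convert fewer errors to money using what each error has historically cost."""
    return errors_avoided * historical_cost_per_error


def roi_percent(program_benefits: float, program_costs: float) -> float:
    """ROI (%) = (program benefits - program costs) / program costs * 100."""
    if program_costs <= 0:
        raise ValueError("program costs must be positive")
    net_benefits = program_benefits - program_costs
    # Multiply before dividing to limit floating-point rounding.
    return net_benefits * 100 / program_costs


if __name__ == "__main__":
    # All figures are hypothetical.
    gross = value_of_time_saved(2, 25, 48, 40.0) + value_of_errors_avoided(120, 150.0)
    share_from_training = 0.5  # from the "isolate the effects" step
    benefits = gross * share_from_training  # $57,000
    costs = 38_000  # from the "tabulate program costs" step
    print(f"ROI: {roi_percent(benefits, costs):.0f}%")  # ROI: 50%
```

An ROI of 50 percent means that, after the program paid for itself, each dollar invested returned an additional 50 cents in net benefits.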

Although calculating ROI feels like a great deal of work, it will be worth it if you need to provide evidence to management regarding the value of training.

Knowing the benefits of ROI

Probably the most important benefit of using ROI with training is being able to answer management’s question of whether training adds value to the organization. ROI calculations can help convince management that training is an investment, not an expense. The ROI analysis also provides information about specific training efforts: which ones contributed the most to the bottom line, which ones need to be improved, and which ones are an expense to the organization. When the training practitioner acts on this data, the process has the added benefit of improving the effectiveness of all training programs.

ROI is an essential part of the overall evaluation process.

Evaluation: The Last Training Cycle Stage but the First Step to Improvement

You may now be ready to put your evaluation plan together. If so, don’t be shy about asking for outside assistance. Statisticians, researchers, and other professionals can help to expedite a process that will meet your stakeholders’ needs.

As a trainer, you’ll find that Level 1 evaluations matter, so don’t ignore the feedback. Another practice to consider is asking a training colleague to conduct a peer review. Do you have a colleague whose opinion you value? Ask that person to observe one of your programs; even a portion is helpful. Another trainer will notice things that participants may overlook and can give you feedback on your techniques.

I am delighted to share with you a comprehensive virtual trainer strengths checklist in Table 7-1, courtesy of Cynthia Clay. Copy it and ask a colleague for input on your next virtual training session.

Evaluation is the final stage in the Training Cycle but is certainly only the beginning of improving training. It will be up to you to take your training efforts to the next level, relying on evaluation to help you decide what to improve. In Dr. Kirkpatrick’s words, “Evaluation is a science and an art. It is a blend of concepts, theory, principles, and techniques. It is up to you to do the application.”

TABLE 7-1 Virtual Trainer Strengths Assessment

Rate yourself on each competency using the following scale: 5 = Expert; 4 = Very Skilled; 3 = Somewhat Skilled; 2 = Basic Familiarity; 1 = Little Familiarity.

| Core Competency | Specific Skills | Rating |
|---|---|---|
| Communication | | |
| Technology | | |
| Virtual Facilitation Skills | | |
| Interaction and Collaboration | | |
| Adaptability | | |
| Webcam Usage | | |

© 2022 Cynthia Clay. Used with permission.
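If you fill in Table 7-1 yourself and ask a colleague to rate you as well, a short script can line up the two sets of ratings. This Python sketch is hypothetical and not part of Clay’s assessment: it assumes one 1-to-5 rating per core competency from each of you, the sample ratings are invented, and the two-point gap used to flag a disagreement is an arbitrary cutoff you can change.

```python
# Hypothetical helper for comparing two completed copies of Table 7-1.
SCALE = {5: "Expert", 4: "Very Skilled", 3: "Somewhat Skilled",
         2: "Basic Familiarity", 1: "Little Familiarity"}

COMPETENCIES = [
    "Communication",
    "Technology",
    "Virtual Facilitation Skills",
    "Interaction and Collaboration",
    "Adaptability",
    "Webcam Usage",
]


def check(ratings: dict[str, int]) -> None:
    """Make sure every competency has a rating on the 1-to-5 scale."""
    for competency in COMPETENCIES:
        if ratings.get(competency) not in SCALE:
            raise ValueError(f"{competency}: missing or outside the 1-5 scale")


def rating_gaps(self_ratings, peer_ratings, threshold=2):
    """List competencies where your rating and your colleague's differ by
    at least `threshold` points (an assumed cutoff)."""
    check(self_ratings)
    check(peer_ratings)
    return [(c, self_ratings[c], peer_ratings[c])
            for c in COMPETENCIES
            if abs(self_ratings[c] - peer_ratings[c]) >= threshold]


def lowest_rated(self_ratings, peer_ratings, count=2):
    """Return the `count` competencies with the lowest combined rating;
    ties keep the table's order because sorted() is stable."""
    check(self_ratings)
    check(peer_ratings)
    return sorted(COMPETENCIES,
                  key=lambda c: self_ratings[c] + peer_ratings[c])[:count]


if __name__ == "__main__":
    mine = {"Communication": 4, "Technology": 5, "Virtual Facilitation Skills": 4,
            "Interaction and Collaboration": 3, "Adaptability": 4, "Webcam Usage": 2}
    colleague = {"Communication": 4, "Technology": 3, "Virtual Facilitation Skills": 4,
                 "Interaction and Collaboration": 3, "Adaptability": 4, "Webcam Usage": 2}
    for name, me, them in rating_gaps(mine, colleague):
        print(f"{name}: you said {SCALE[me]}, your colleague said {SCALE[them]}")
    print("Focus next on:", ", ".join(lowest_rated(mine, colleague)))
```

Large gaps point to skills worth discussing with your colleague; the lowest combined ratings suggest where to focus before your next virtual session.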

The very location of evaluation at the end of the Training Cycle doesn’t mean that you don’t think about it until the end. Quite the contrary. As I mention in

Dr. Don Kirkpatrick is credited with creating the Four Levels of training evaluation (reaction, learning, behavior, and results) more than half a century ago. Jim Kirkpatrick has taken up his father’s charge to impart the importance of evaluation, giving it a new slant with Return on Expectations. Dr. Jack Phillips, an evaluation expert, approaches evaluation from a return on investment (ROI) perspective. Even with these new twists, Kirkpatrick’s original Four Levels are as applicable today as they were in the 1950s.

Before you decide to conduct any type of evaluation, decide how you will use the data. If you aren’t going to use it, don’t evaluate.

If you want to measure reaction at the end of day one and you don’t have an evaluation form, try one of these two ways: First, use two flip chart pages. At the top of one, write “Positives,” and at the top of the other, write “Changes.” Then ask participants to provide suggestions about what went well that day, the positives, and what needs to change. Capture ideas as they are suggested. A second evaluation format that I use regularly is to pass out an index card to each participant. Then ask everyone to anonymously rate the day on a 1-to-7 scale, with 1 being low and 7 being high, and to add one comment about why they rated it at the level they did.
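Tallying the index cards by hand takes only a minute, but if you collect them at the end of every day of a multiday program, a consistent summary makes the days easier to compare. This Python sketch assumes you’ve typed each card’s 1-to-7 rating into a list; the sample ratings are hypothetical, and treating 5 or higher as “favorable” is an assumed cutoff, not a standard. The written comments stay on the cards for you to read.

```python
from collections import Counter
from statistics import mean


def summarize_ratings(ratings: list[int]) -> dict:
    """Summarize anonymous 1-to-7 index-card ratings (1 = low, 7 = high)."""
    if not ratings:
        raise ValueError("no ratings to summarize")
    out_of_range = [r for r in ratings if not 1 <= r <= 7]
    if out_of_range:
        raise ValueError(f"ratings outside the 1-7 scale: {out_of_range}")
    counts = Counter(ratings)
    return {
        "responses": len(ratings),
        "average": round(mean(ratings), 2),
        # How many cards gave each score, listed from 7 down to 1
        "distribution": {score: counts.get(score, 0) for score in range(7, 0, -1)},
        # Share of cards rated 5 or higher (assumed "favorable" cutoff)
        "favorable_share": round(sum(1 for r in ratings if r >= 5) / len(ratings), 2),
    }


if __name__ == "__main__":
    day_one = [6, 7, 5, 6, 4, 7, 6, 3, 6, 5]  # hypothetical cards
    print(summarize_ratings(day_one))
```

For the sample cards, the summary reports 10 responses, an average of 5.5, and 80 percent of cards at 5 or higher. Read the low-rated cards’ comments first; they usually explain the score.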

All trainers should be skilled in Level 3 evaluation methods. The most important reason to conduct training is to improve performance and transfer knowledge, skills, and attitude changes to the workplace. You can’t expect the desired results unless a positive change in behavior occurs. Therefore, use a Level 3 evaluation to measure the extent to which a change in behavior occurs.
