Chapter 5

Consistent and High Level Platform View

5.1 Introduction

The first prerequisite necessary for managing service availability (SA) is reliable information about all the resources available in the system that can be used to maintain the availability of the services offered by the system. The goal of the platform services is to provide the availability management with this information about the platform, that is, about the execution environments (EEs)—encompassing the SA Forum middleware itself—that can be used to run applications that deliver the services in question. In the process of providing this information the platform services also manage the resources at each level and even expose some of the control functionality to their users.

The SA Forum system architecture splits this task into three parts:

The Hardware Platform Interface (or HPI) [35] deals with monitoring and controlling the hardware resources themselves. The approach is that hardware components are given; these resources cannot be modified from within the system. Appropriately HPI has a discovery mechanism to find out exactly what hardware components are present, in what state they are and what management capabilities they have. It exposes these components and their capabilities in an implementation independent way so HPI users can monitor and control them as necessary for their purposes. HPI also provides timely updates on changes that happen to the system or to its components regardless of whether these changes are the result of internal events such as failures, programmatic management operations from users such as resetting a resource; or external (physical) interventions such as the insertion or removal of a blade in a server blade system. The HPI makes accessible the manageable capabilities of hardware resources such as fan speeds, sensor thresholds, but it also offers interfaces to control firmware upgrades and to initiate diagnostics.

The Platform Management service (PLM) [36] uses the information provided through the HPI. The main task of the PLM is to map the hardware discovered by the HPI into the PLM configuration which is part of the system information model. The configuration indicates the expected entities and their particular locations within the system. PLM then compares this information with the discovered hardware entities. If there is a match, it allows the entity to become part of the PLM domain and to boot the EE together with the SA Forum middleware as appropriate. The PLM information model also reflects the virtualization facilities such as hypervisors with their hosted virtual machines (VMs) potentially each providing an operating system (OS) instance as an EE for applications.

The PLM monitors and performs some basic life-cycle management of these entities primarily for the purpose of fault isolation. It also exposes to its users the up-to-date status information on the entities within its domain and some management capabilities on them.

Once the inventory of the hardware elements (HEs) and the EEs running on them has been established, the Cluster Membership service (CLM) [37] forms a cluster. Each PLM EE may host a CLM node and the cluster may include only the nodes configured for the membership.

Based on the information received from PLM about the PLM entities, the CLM is in charge to decide whether a configured node residing in a given EE is indeed healthy and the node is reachable so that distributed applications can use the node as part of the cluster. The CLM guarantees the formation and maintenance of a single cluster and up-to-date and reliable membership information. All Application Interface Specification (AIS) services with cluster-wide services rely on this information for their decisions on their resource handling to best support SA.

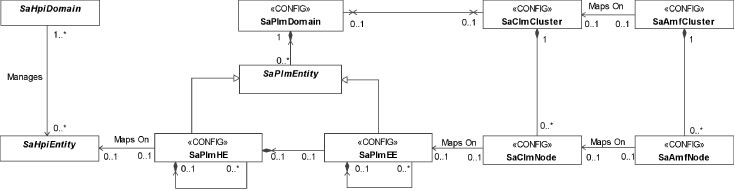

The platform services form a stack, one layer using the services of the other in a well-defined way for a well-defined purpose. However their interfaces are not exclusive to AIS services. Any application requiring up-to-date platform information may link the appropriate interface and obtain the information from any of these services directly. Figure 5.1 shows the mapping between the entities of the different layers of the Platform services.

Figure 5.1 Mapping of entities of the different layers of the platform services [62].

In the rest of this chapter we take a closer look at each of these services and the way they work together to enable SA.

5.2 Hardware Platform Interface

5.2.1 Background

Relying on a feature rich hardware platform is essential for availability management. This feature richness includes the capability to detect hardware, monitor, and control its state. Since the availability management is a software solution it is desirable that these functions are offered through a programming interface.

The development of the HPI specification was the result of the growing trend of building hardware platforms modularly as a loosely coupled set of compute and I/O blades. These platforms required a centralized management and a common interface that could be used by the availability management.

Several vendors started the development of their own proprietary solutions. At the same time the PCI Industrial Computer Manufacturers Group (PICMG) initiated the development of the CompactPCI [65] and later the AdvancedTCA [66] then MircoTCA [67] specifications, all of which for management relied on the Intelligent Platform Management Interface (IPMI) [68].

The IPMI effort was led by Intel who also initiated the work on a complementary hardware platform management application programming interface (API) named the Universal Chassis Management Interface (UCMI). It addressed the gaps identified by the High Availability Forum, an industry group discussing the issues of open architecture based high-availability computer systems.

From its formation the SA Forum drew on the heritage of the High Availability Forum, and the UCMI initiative became the core part of the first release of SA Forum HPI specification.

While exposing all the richness of the underlying technology HPI defines a platform independent management API that the high-availability middleware and applications can use to monitor and control the platform hardware in a uniform way.

HPI is designed to be flexible and to provide management capability for any type of hardware and not only blade systems typically used for high-availability systems. Its key feature of discovering the hardware topology and the management capabilities first allows for the creation of management applications without advance knowledge of the targeted hardware.

Since its first release, the SA Forum has published several updates to the HPI specification. It was complemented with specifications standardizing the access of an HPI implementation via Simple Network Management Protocol (SNMP) [69] and also with the mapping between HPI and xTCA compliant platforms [70].

5.2.2 Overview of the Hardware Platform Interface

The HPI specification defines a set of functions that allows applications and the middleware to access the management capabilities of the underlying hardware platform in a platform independent and uniform way. The specification defines a model that guides the access to these management capabilities. The basic concepts of the model are: the HPI entity, the management instrument, the resource, the session, and the domain.

HPI entities are the hardware components in the system that compose the hardware platform ranging from fans to compute blades and processors. HPI entities reflect the physical organization of the platform and accordingly HPI entities are identified based on their location information tagged with their type.

HPI entities expose different management capabilities allowing one to determine and/or control their status such as reading their temperature or performing a power cycle. These different management capabilities are modeled as management instruments.

There are different types of management instruments: as simple as a sensor or a timer, or as complex as a firmware upgrade management instrument (FUMI) or diagnostics initiator management instrument (DIMI). The HPI specification classifies them and for each class defines an appropriate set of functions that manages the particular aspect of the hardware components they represent.

For example, the sensor management instruments provide readings for different measurements regardless what is being read. The sensor type returned as part of the reading determines the interpretation of the values read. Besides reading sensor values HPI allows one to define and adjust thresholds on them so that when violated HPI generates events—essentially alarms. In turn management applications can react to them potentially using other management instruments associated with the same HPI entity to resolve the situation. For example, if the temperature is too high the user may use an available control management instrument to turn on a fan.

It is important to see that the management instrument physically does not have to be part of the associated HPI entity. For example, if the HPI entity we would like to control is this fan in the chassis and the triggering event to turn it on or raise its speed is a rise in temperature above a given threshold, the temperature sensor generating this triggering event is not likely to be part of the fan itself. It might be located at the farthest place that this fan needs to cool.

The control and the sensor used together to control this fan would compose an HPI resource, that is, a set of management instruments which are used together to manage some HPI entity representing some platform hardware. HPI resources reflect the logical organization of the system for some management purposes.

The management application gains access to the different HPI resources by opening a session on an HPI domain. The domain groups together a set of resources and the services necessary to discover these resources as well as additional domains. Furthermore, it provides event and alarm management and event logging services.

Accordingly, an HPI user is expected to discover the management capabilities available on the HPI implementation it is connected to. A possible starting point for this is the ‘default domain,’ which is a concept supported by all HPI implementations. From this and using the domain services the HPI user can discover the different resources available in the domain and any additional domains it can further explore. Different HPI implementations may interpret the ‘default domain’ concept differently. For example, one may associate it with one particular domain within the platform, while another implementation may associate it with a different domain for each subsequent user request.

For each of the resources in the domain the HPI user can find out the following information: The entity the resource is associated with including its inventory data; the management capabilities the resource itself exposes for the entity (e.g., whether the entity is a field replaceable unit (FRU) that supports hot-swap); and any additional management instruments included for the resource (e.g., sensors, controls, FUMI, DIMI, etc.) in the domain.

Although it is typical, resources are not in an exclusive relationship with their associated entities. They may represent only a certain management aspect of that entity, while another resource may expose additional aspects. For example, if the earlier-mentioned fan is hot-swappable the hot-swap capability may be exposed through a different resource which reflects all the features impacted through the hot-swap.

Once the HPI user has discovered the current platform configuration it may subscribe to the events generated in the domain it has a session with. Among others these include notifications about changes in the domain configuration, that is, the HPI implementation indicates when resources are added to or removed from the domain. There is no need for periodic rediscovery of the platform.

The HPI specification does not cover any details on how the HPI implementation itself collects the information exposed through the API or how the hardware entities and the related model concepts are configured, for example, what features of an entity are exposed through a particular resource. All this is left implementation and therefore vendor specific.

The HPI specification also does not mandate any synchronization among different instances of HPI implementations. For example, if implementations of different vendors are combined into a system, they will coexist without any knowledge of each other—at least with respect to their standard capabilities. Any coordination between them is beyond the scope of the current HPI specification.

In the following section we elaborate further each of the mentioned HPI model concepts starting with the HPI domain.

5.2.3 The HPI Model

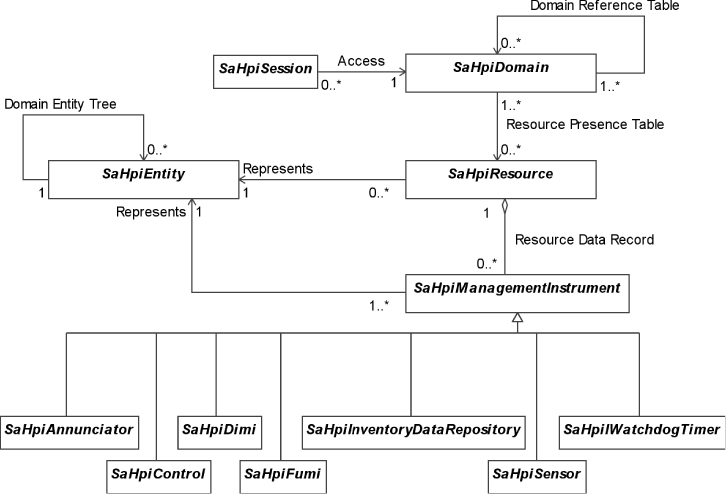

Figure 5.2 presents an overall view of the HPI model concepts that we elaborate on in this section.

Figure 5.2 HPI model elements and their relations.

5.2.3.1 HPI Domain and Session

As we have already seen, an HPI domain exposes a set of services common to the domain, which are collectively referred to as the domain controller as they cover administration aspects; and a collection of HPI resources accessible through the domain.

Although the wording ‘collection of resources’ could suggest that the HPI domain is a set of physical entities, it is more appropriate to think of the domain as an access control mechanism, or even filtering mechanism. The same resources may be accessible through several domains while others may be dedicated to a particular one.

An HPI user initiates the access to a domain by opening a session on the domain. The user will see only the resources of this domain and it can access only these resources within the given session.

The domain controller includes two tables: the resource presence table (RPT) and the domain reference table (DRT). The former allows the HPI user to discover the domain itself while the latter can be used to discover other domains in the system.

Domains may be related to each other depending on what can be discovered from these tables:

Peer domains are domains that allow access to a single set of resources and discover a single set of domains. That is,

- the RPT of each domain in the peer relationship lists exactly the same set of resources; and

- the DRT of each peer domain references all the other peer domains and the same set of nonpeer domains.

For example, assuming domains X, Y, Z, and W in an HPI implementation; domains X, Y, and Z are peers if they all list in their respective RPT the same set of resources {a, b, c, d} exclusively. In their DRT X has {Y, Z, W}, Y has {X, Z, W}, and Z has {X, Y, W}. W is a nonpeer domain that can be discovered from any of these peer domains.

Essentially peer domains provide redundancy of the domain controller functionality for a given set of resources.

There are subtleties, however, that HPI users need to be aware of:

Initially, the HPI implementation will populate the RPT of each of the peer domains X, Y, and Z with exactly the same information with respect to the set of resources {a, b, c, d}. The HPI users of each of the domains may manipulate this information, and they may manipulate it differently. For example, users may tag the resources differently in the different domains. It is like ‘personalizing the browser’ through which they see the domain. The HPI implementation does not synchronize such changes across peer domains; only users of the same domain will see the same information for a given resource, that is, all users of the domain use the same ‘browser.’

Subsequently, as changes happen in the system—some resources may go away, others may appear, the configuration, the state of the controlled hardware may change—the HPI implementation will update the RPTs of all the domains and peer domains will continue to see the same set of resources. However there may be some differences in the timing and the order as if the browsers of the peers domains show the same scenery, but from different angles.

Domains may also be in a related nonpeer domain relation as we have shown W in our example. These are those domains that can be discovered by an HPI user who initially had only a single entry point to the set of related domains. Related nonpeer domains also have disjoint sets of resources, which guarantees that the same resource will not be discovered twice, through two different domains except if these are peer domains, in which case the entire domain does not need to be discovered the second time. Related nonpeer domains compose a loopless tree structure in which there is only a single path leading from one related domain to another.

One may interpret related nonpeer domains as different management aspects of the system. For example, one domain may collect all the resources providing temperature control capabilities for the system, while another would allow hot-swap management only. These related nonpeer domains may be complemented by a set of peer domains that expose the same set of sensors so that any of the managers using the nonpeer domains can observe how the system state changes as a result of the interactions.

Finally domains that do not fall into either of these categories are called unrelated domains. Unrelated domains have no restrictions on what resources may be accessed through them. Typically an HPI user needs to have advance knowledge of an unrelated domain as by definition it cannot be discovered through the discovery process and therefore their use is discouraged by the specification.

Note also that this means that domains accessing overlapping but not equal sets of resources cannot be related through the DRT. They need to be unrelated. So if we want our temperature and hot-swap control managers to access each their own single domain we can only use unrelated domains as they would need to share the access to the overlapping set of sensor resources while also accessing the appropriate control resources.

HPI users may open multiple simultaneous sessions to the same or different domains. Also a domain may be accessed through multiple sessions simultaneously. However HPI does not control concurrency. User may detect concurrent changes by checking the respective update counters maintained by an HPI implementation as well as by listening to the events it generates.

Besides the RPT and the DRT, the domain controller maintains a single event channel specific to the domain. Events published on the channel usually indicate some kind of change within the domain or within the HPI resources accessible through the domain. This event channel is a very simple interpretation of the publish/subscribe paradigm.

The publishers of the domain event channel are: the domain controller itself, the resources accessible through the domains, and HPI users with open sessions to the domain.

To receive the events published on the channel, an HPI user needs to subscribe with its session id. Once done so it will receive all events published in the domain. That is, no filtering capability is provided by the HPI event manager; hence all subscribers of the domain will receive all events published on the domain's event channel.

Logically the subscription results in the creation of an event queue dedicated to the particular subscriber. The HPI implementation places a copy of an event to each of these queues. The copy remains in the queue until the consumer HPI user retrieves it. To read the events a subscribing HPI user needs to call the get API function, which returns the first available event in the queue. This call can be invoked in blocking mode so that events are delivered as they occur.

If it is a resource that generates an event, the same event will be published in all domains through which the resource is accessible. If more than one such events is generated simultaneously in the system, the order of their publication may be different in each of the domains that the generating resources are visible from.

One of the subscribers to the domain event channel is the domain event log. As one may expect, it collects the events occurred in the domain, however, there is no requirement on logging all the events. Each HPI implementation may implement its own filtering criteria. The HPI provides no API to set or modify these criteria.

Finally the domain controller maintains a third table, the domain alarm table (DAT). Alarms indicate detected error conditions in the system.

When an error is detected within the domain or in a resource accessible through the domain an entry is added to the DAT. The entry remains in the table as long as the error condition persists unless it is a user generated alarm, in which case the HPI user can remove the entry. System generated alarms can only be cleared by the HPI implementation. Their entry is removed from the DAT when the HPI implementation can no longer detect the related error condition.

The HPI specification does not mandate any method for announcing the presence of an alarm in the domain. An HPI implementation may choose its own mechanism, for example, it may light an LED or use an annunciator management instrument, which then generates an event that users subscribing to the event channel may receive in real-time.

5.2.3.2 HPI Entity

The actual hardware components managed through a domain and its resources are reflected in the HPI model by HPI entities. These are the hardware platform components capable of providing some services like housing other entities or cooling them; and carrying out some jobs such as running an OS instance or storing information.

HPI entities do not have an identity outside of the system; they have only types such as being a power supply, a cooling device, a central processing unit (CPU) blade, or a chassis. When an entity is inserted into the system it becomes uniquely identifiable for the HPI implementation based on the location it has been inserted. Accordingly, HPI entities are identified by a construct called the entity path.

The entity path is the sequence of {entity type, (relative) entity location} pairs starting from the entity and toward the system root. An example of an entity path is the following: {{power supply, 2}{subrack, 3}{rack, 1}{root, 0}}. The location numbering starts with zero, so this entity path identifies the third power supply in the fourth subrack of the second rack within the system.

The structure of the entity path implies that the HPI entities are organized into a tree—the domain entity tree (DET). This tree structure serves like a map for the HPI implementation. It knows the way it is built and therefore all the locations on the map that may house entities of different kind. When the entity is inserted, the type and the location information create its identity specific for the system into which it was inserted.

This tree organization allows for some flexibility, that is, the HPI implementation does not need to know the entire tree structure from the beginning; the tree can be extended at real-time with sub-trees for entities that nest smaller entities. The numbering of peer nodes within the tree, that is, the locations on the map is implementation specific. The specification does not mandate such details as whether it is left-to-right or right-to-left, and so on.

The tree organization also implies that when a node goes away, all its children go away too: For example, when a carrier blade is removed from the system all its mezzanine cards go away with it.

The entity path is unique within the system and it reflects the physical location of an entity regardless how it is obtained through which domain. The HPI implementations must guarantee this. In other words, all resources managing an entity should be able to use the same entity path, even if they access it via different domains.

For maintaining SA, it is essential to know the status of the different HPI entities at any moment in time and to be able to manage them so they best meet the availability requirements. For this HPI entities may expose some management capabilities. The exposed management capabilities compose the HPI resources that we are going to discuss next.

5.2.3.3 HPI Resource

An HPI entity typically has a number of management capabilities: its power supply may be turned on or off, its temperature may be read. These capabilities are exposed as HPI resources.

The HPI resource concept is very specific as it only represents management capabilities. HPI resources are management resources only as opposed to the HPI entities themselves that may expose resources in the generic sense such as computing or storage capabilities. HPI however has no direct concern of these.

An entity may expose its management capabilities by one or more resources. Typically each HPI entity is associated with at least one HPI resource.

On the other hand, each resource is associated with a single HPI entity for which it exposes a basic set of management capabilities. These resource capabilities include:

- resource configuration management—allowing to save and restore the configuration parameters of the resource manager itself;

- load management—allowing to control the software loaded into the entity associated with the resource;

- power management—controls whether the entity associated with the resource is powered on, off, or power-cycled;

- reset management—which allows one to perform different types of resets (e.g., warm, cold, etc.) on the entity associated with the resource;

- resource event log management—provides the same functionality as at the domain level, but for the particular resource only;

- hot-swap management—indicates whether the associated entity is hot-swappable; and

- additional management capabilities accessible through management instruments that are available in association with the resource. These additional management capabilities may be associated with the HPI entity the resource is representing or they may be associated with other entities in the system.

The currently available resources, their associated entities, and their resource capabilities are listed in the already mentioned RPT of each domain through which these management capabilities are accessible. The HPI implementation updates this table dynamically as changes occur in the system. As indicated in Section 5.2.3.1, the same resource may be exposed through different domains.

Also, an entity may expose different management capabilities in different domains, which is reflected in the appropriate setting of the resource capabilities information in the RPT of each domain. For example, even if a hot-swappable entity can be managed through several domains, typically managing its hot-swap is dedicated to and therefore exposed only in a single domain. In the other domains through which it is visible the setting of the resources associated with the entity would show no hot-swap capability. Such exposure of resources would be typical for related nonpeer domains, each of which would collect platform resources for a particular management aspect.

Note also that the RPT lists the resources as a flat structure, while in reality the entities whose management capabilities they represent are typically nested and comprise the hierarchical structure of the DET.

As ‘container’ entities are plugged in or removed from the system resources associated with them and their children show up in the RPTs or disappear from them in seemingly unrelated groups. Only the analysis of the entity path of the entities associated with these groups of resources would reveal the relationship, the nesting of entities.

The specification recommends that HPI implementations add first to the RPT the resources associated with the ‘container’ entity and remove them last.

Additional management capabilities of a particular resource are listed as resource data records (RDRs). Each of these records describes a management instrument associated with the resource. In turn each management instrument is also associated with an HPI entity, which may or may not be the same HPI entity with which the resource has the association.

5.2.3.4 Management Instruments

The HPI specification defines different management instruments for the management of HPI entities. A management instrument is a generalization of some management capabilities typically available in hardware platforms. HPI defines seven different management instruments and their appropriate API that represent classes of management capabilities. These management instruments are:

- the control management instrument—allowing for setting the state of the associated entity;

- the sensor management instrument—providing a mechanism to query some conditions;

- the inventory data repository management instrument—returns information identifying the associated entity such as serial number, manufacturer, and product name;

- the watchdog management instrument—provides timer functionalities;

- the annunciator management instrument—replicates the functionality of the DAT, that is, announces error conditions existing in the associated entity. As opposed to the DAT the content of which is standardized, annunciators can be tailored to the HPI implementation and even by the HPI user;

- the diagnostics initiator management instrument—exposes diagnostics capabilities for the associated entity; and

- the firmware upgrade management instrument—allows the management of upgrades of the firmware of the associated entity.

In the RPT it is indicated which types of management instruments a resource contains and for each of them a set of RDRs describes the actual management instruments.

RDRs have some common fields that include the RDR type (implying the management instrument type) and the entity associated with the management instrument, and some fields specific for each management instrument.

5.2.4 HPI Capability Discovery

When an HPI user would like to manage the hardware platform it first opens a session toward a domain. The user may be aware of a specific domain id or—most often—it opens the session on the ‘default domain.’ The HPI implementation then decides on which domain the session should be opened. This may depend, for example, on the security parameter the user provides in its call or other, HPI implementation specific details.

Once the user has an open session with a domain it is ready to discover the domain. It can find out on which domain the session was opened, and what the domain current status is. This includes:

- the domain id, if it participates in a peer relation, and some informal text associated with the domain;

- the DRT with a list of other domains;

- the domain resource table with the list of resources accessible through this domain;

- the DET with all the entities manageable via the resources of the domain;

- the DAT with the list of currently outstanding alarms.

To find out the information in each of the tables and in the DET, the HPI user needs to iterate through the tables and traverse the DET.

Iterating through the DRT allows the user to discover all the resources it can use to manage their associated entities through the given domain. This as we have seen in Section 5.2.3.3 covers the basic management needs such as power and reset management.

Each of the resources may indicate additional management capabilities such as watchdog timers and sensors as presented in Section 5.2.3.4. To discover these, the user needs to iterate through the RDRs associated with each resource. Once the user has discovered the management instruments from the RDRs, it can access them directly.

To find out about the current conditions and to perform management actions the user can access each of the management instruments using the methods appropriate for the type of the management instrument. If only a single management instrument of the given type can be associated with a resource the user only need to indicate its session id and the resource id. Otherwise the user needs to indicate the management instrument's number or may need to iterate through the management instruments of the given type to find out how many of them are available.

Besides the management capabilities, the user may also be interested to find out about the actual HPI entities composing the platform that are manageable through the domain. Indirectly some of this information is already included in the tables we described so far as the resources and management instruments all reference the HPI entities they are associated with. Of course, the user would need to correlate the different entity paths to reconstruct the organization of the managed entities. In the early versions of the HPI specification this was the only way to find out about the entities. Now the HPI API includes functions that allow the discovery of the DET directly.

Finally the user may subscribe to receive events generated within the domain. These will announce the changes as they occur within the domain.

Using the information of the DRT, the HPI user may continue the exploration by opening sessions and discovering other domains in the same way until they have discovered all related domains with all the management capabilities available.

5.2.5 Error Handling and Administrative Operations

One may have realized by now that the entire HPI is about the administration and management of the components within the hardware platform. Much of its functionality is or can be used by higher level administrative operations to carry out an intended operation. For example, higher level restarts or resets may map into an appropriate mode of the reset of the HPI resource associated with some hardware entity. As a result HPI does not expose any additional standard administrative API.

The interesting and additional aspect that an HPI implementation needs to deal with is that an administrator may physically manipulate the platform, open or close latches, remove or plug-in boards. In fact, such a manual operation is the required administrative repair action for many hardware error conditions or may be part of some upgrade operations when some old HEs are removed and replaced with new ones.

The HPI specification does not address this issue specifically in the context of administrative operations, but more from the perspective of error handling. It distinguishes the handling of either situation depending on whether the entity in question is a FRU and can be expected to report hot-swap events or it is not an FRU.

For non-FRU entities manual extraction and insertion are viewed as sudden failure and recovery of the entity. Some systems may also be capable of distinguishing whether the entity is physically not present or just inaccessible. Accordingly, the events that an HPI implementation may report for such entities are ‘resource failed,’ ‘resource inaccessible,’ ‘resource removed,’ ‘resource restored,’ ‘resource added,’ and ‘resource updated.’

For entities that can be replaced in a live system, that is, they are FRU; this fact is indicated as part of the resource capabilities. For such entities, or more precisely the resources representing them, the HPI implementation reports the hot-swap states. These are: ‘not-present,’ ‘inactive,’ ‘insertion-pending,’ ‘active,’ ‘extraction pending.’

The main difference between a manual administrative replacement operation and a failure recovery is that after recovering from a failure an FRU may not report the sequence of state transitions expected during a properly performed hot-swap reported at a replacement. It may transition directly from the not-present state to any other state.

In the opposite direction both a failure and also a surprise extraction may transition the resource from any state to the not-present state.

In either case when a resource is restored or added, HPI users cannot assume that any of the earlier discovered information is still applicable to the resource even if the resource id is the same as of the resource that was part of the system before.

The failure/removal and the repair/insertion of entities of the system may result in the reconfiguration of resources and reported as such by the HPI implementation.

The specification does not mandate whether the failure/removal of a resource results in only reporting its state as ‘not present’ or also in the removal of the associated record from the RPT completely.

On the other hand, if the resource associated with a newly inserted/repaired entity has not been discovered yet then once the HPI implementation detects the resource HPI reports it as a newly added resource and inserts a record into the RPT of the relevant domain. The specification does not cover how an HPI implementation determines the domain or domains through which the new resource needs to be exposed.

An HPI implementation reports any updates to the RPT, for example, as a consequence of some firmware upgrade. Each time it also increments the update count associated with the domain, so any user can detect the fact of a change.

5.2.6 Open Issues and Conclusions

From the presented features of the HPI one may understand that the software wanting to manage the hardware platform can be written independently of which HPI implementation it needs to interact with. The HPI user code is portable between HPI implementation.

With the tendency toward specialization and modularity, more and more system integrators face not only the need to port a management application from one homogeneous platform to another, but increasingly see the requirement of integrating such an application with heterogeneous hardware systems in which the different hardware modules come from different vendors, each of which provides their own HPI implementation.

The problem is that in this situation the HPI user code would need to be linked with the HPI libraries of the different vendors, which obviously does not work and another solution is required.

To address this need the SA Forum Technical Workgroup launched the work on the definition of an extension to the existing HPI specification allowing the modular use of HPI implementations.

Meanwhile the developers of the OpenHPI [71] implementation of the SA Forum HPI specification resolved the issue by using the plug-in Application Binary Interface (ABI) in their HPI implementation.

OpenHPI is an industry collaboration toward an open source implementation of HPI.

The OpenHPI plug-in solution remains within the framework of the current HPI specification. It works as a dynamic library. The OpenHPI plug-in handler maps the ABI functions to the HPI API. In this mapping a plug-in may not implement the entire ABI, only a subset supported by the hardware or the interface. OpenHPI requires only the implementation of the ‘open’ and the ‘get_event’ functions for proper operation.

This OpenHPI solution questions whether further standardization efforts are necessary or the HPI specification should simply recommend the ABI-based plug-in solution.

As pointed out earlier, the HPI specification does not specify how domains are configured, that is, there are no rules to determine how many domains a system would have and the visibility of resources from these different domains, and so on. All this is implementation specific.

We have also seen that the domain definition is the only defined standard filtering mechanism available in HPI compliant systems.

Considering that an HPI implementation cannot be aware of the applications' needs in advance there are only two possibilities: Either the implementation exposes some kind of nonstandard configuration interface for the system integrator—and this is the route OpenHPI took—or it needs to expose all the information available about the system. In either case an HPI user who opens a session on a domain will get all the information available for that domain; it has no option of filtering it.

In the first case the problem is that each HPI implementation may come up with its own configuration solution that makes the integration difficult. In the later case the amount of information generated in the domain can be overwhelming, for example, if the piece of software needs to manage a piece of hardware in the system without the need of understanding of the entire context of that piece of hardware.

Addressing this gap may be a direction to continue the standardization efforts within the SA Forum.

We can summarize that the HPI provides a platform independent representation and management of hardware components. This encompasses portable fault, alarm, and hot-swap management policies and actions, all essential for availability management.

The HPI solution can utilize different form factors ranging from rack mounted servers to xTCA systems. To help the adoption in these different systems, the SA Forum complemented the basic specification with mapping specifications to xTCA [66] and to SNMP [53].

5.3 Platform Management Service

5.3.1 The Conception of PLM

HPI users need to discover at least one HPI domain but maybe many to find the management capabilities of the piece of hardware they are interested in and to be able to obtain its status and receive subsequent updates. These updates are not filtered by the HPI and the users need to sort out themselves the relevant items from the stream of events delivered by HPI about all entities manageable through the domain or even domains.

Moreover, users need to be aware of the dependencies they may have toward HPI entities and also between different entities and their management resources to figure out whether the HPI entities indeed can be used and relied on for providing their own services.

All this may be a daunting task depending on the relation of the information the user is interested and the size of the domain. It may also consume significant computing resources if the procedure of obtaining the same information needs to be replicated in a number of places within the cluster.

On the other hand, the CLM (which was at the bottom of the AIS ‘stack’ until 2008) forms the cluster and provides its users and other AIS services with the membership information. While the information obtainable via HPI is essential for the cluster formation, it is not enough.

Between CLM and HPI was lying a no man's land encompassing the OS and the quickly emerging virtualization layers about which no information could be obtained and no control exercised that would fit the AIS stack and satisfy the high availability requirements.

This disconnect was further widened by the difference in the approaches used by HPI and AIS.

The basic philosophy of HPI is that the hardware is a given: One needs to discover the entities present in the platform and available for service provisioning. The HPI specification does not cover any configuration aspects, not even the configuration of domains and their content, which are used to control the visibility of the entities for HPI users.

On the other hand, since AIS deals with software entities it is based on configuration, namely the system information model. In high-availability clusters it is tightly managed what software entities are allowed to come up, where and when. The information model covers these aspects for the AIS stack and each service processes its relevant part to obtain the required configuration information. They also use the model as an interface to expose any status information for the system administration.

To bridge the gap between these two worlds, the option of extending the CLM came up; however, it was quickly turned down by the amount of jobs it would need to perform. Such a solution would go against the modular design of the SA Forum architecture.

Instead the PLM was defined to connect HPI's dynamic discovery of hardware with the configuration driven software world of AIS. The definition of a new service was also an opportunity to address the rapidly emerging virtualization layers, and to provide some representation and control over them and the OS that was not covered by any of the existing at the time specifications.

The PLM appeared within the AIS quite late and at the time of writing only the first version was available.

5.3.2 Overview of the SA Forum Platform Management

The main tasks of the PLM can be described as follows:

- it connects the information collected from the HPI about the available hardware entities with the system information model used by the AIS services and their users;

- it complements the information about the hardware with the information about the virtualization layers and the OS;

- it exposes an API through which user processes including the availability management can track the status of selected PLM entities; and

- it provides an administrative interface for the system administration to verify and perform lower level operations in a simple platform independent way.

To achieve these tasks PLM defines an information model with two basic categories of entities and their types. HEs are used to represent HPI entities in the system information model, while EEs may depict elements of the virtualization facilities (e.g., a VM, a hypervisor), OS instances or combinations of them.

An important feature of the PLM information model is that it does not necessarily present the reality in all its details. It paints the platform picture in ‘broad strokes,’ reflecting the significant aspects and abstracting from the rest.

The selection of what is significant and what can be abstracted is based on the configuration information provided to PLM as part of the system information model. PLM takes this configuration and compares it with the information collected from the HPI implementation.

The configuration describes in terms of HEs the types of HPI entities and their expected locations in the hardware platform. PLM matches this up with the detected HPI entities. If there is a match, the HE defining the criteria is mapped to the HPI entity with the matching characteristics. For example, the configuration may indicate that in slot 5 there should be an I/O blade. If HPI detected and reported a CPU blade for slot 5, there is no match and PLM would not allow the CPU blade to become part of the PLM domain. It even issues an alarm that there is an unmapped HE. If the detected blade in slot 5 is indeed an I/O blade the configuration element specifying the I/O blade in slot 5 is mapped to the HPI entity of the blade.

On a HE, which has been mapped and which is configured to host an EE PLM monitors if the booting EE is indeed matches the one indicated in the configuration. If yes, PLM lets the element to continue the boot process and bring up the EE. If the EE does not match, PLM may reboot the HE with the appropriate EE or if that is not possible it will prevent the EE to come up using lower level control, for example, keeping the hosting HE in a reset state or even powering it down.

The PLM specification also defines the state model maintained by a PLM implementation for its entities. The PLM state definitions resemble, but are not identical to, the state definitions of the X.731 ITU-T recommendation (International Telecommunication Union) [72]. A mapping between the PLM and X.731 states can be established easily however.

A major role of PLM is that it evaluates the state interaction between dependent entities. The basic dependency is derived from the tree organization of the information model. In addition the configuration may specify other dependencies between PLM entities. The PLM correlates this dependency information with the state information of each of the entities and determines the readiness status for each entity reflecting whether the entity is available for service provisioning.

PLM calls back client processes when there is a change in the readiness status of entities of their interest. Within the AIS stack the CLM is the primary client of PLM and uses this information to evaluate the cluster membership.

To register their interest with PLM, client processes need to be aware of the PLM information model, its entities. A PLM user first creates an entity group from PLM entities it wants to track and then it asks PLM to provide a callback when the readiness status of any entity within the group changes or when it is about to change.

The PLM readiness status track API supports the multi-step tracking to ensure if necessary that

- an operation proceeds only if it causes no service outage; and also that

- changes to the system are introduced whenever possible in a graceful way, that is, the assignments of service provisioning entity of the system are switched out before, for example, the powering of this entity.

With these options PLM takes into consideration the responses received from its users to allow and disallow different administrative operations, and to stage their execution appropriately regardless of whether they were initiated through physical manipulation (e.g., opening the latch) or by issuing a command through the administrative API.

In the following sections we take a closer look first at the PLM information and state models and then we present the ways PLM users and administrators may use it in their different interactions with the service.

5.3.3 The PLM Information Model

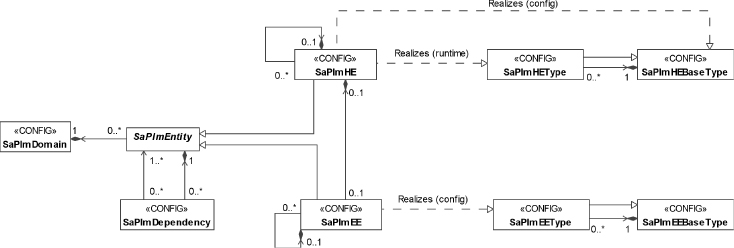

The information model of the PLM shown in Figure 5.3 is part of the system information model [62] maintained by the SA Forum Information Model Management service (IMM) [38] and follows its conventions.

Figure 5.3 The PLM information model [62].

5.3.3.1 PLM Domain

The PLM information model is rooted in the PLM domain object. The PLM domain represents the scope of entities a given PLM implementation manages. The hardware expected to be present in this PLM domain is configured as sub-trees of HEs rooted in this domain object. We discuss HE in details in Section 5.3.3.2.

The system software running on these HEs is represented as EEs. They may compose the last few tiers of the HE sub-trees or may compose separate sub-trees of EEs rooted directly in the PLM domain object. EEs are presented in Section 5.3.3.3.

The tree organization of the represented entities describes their dependency in terms of containment or hosting, for example, a compute blade hosting an OS instance is the parent of the EE representing the OS. Additional dependencies can be defined through the dependency class described in Section 5.3.3.4.

The model also permits that the PLM domain contains only EEs or only HEs.

5.3.3.2 Hardware Elements, Their Types, and Status

The HE represents some hardware within the PLM domain which in turn is mapped into one or a group of HPI entities (Section 5.2.3.2).

The HE is a configuration object describing for PLM the hardware expected to be present in the system. PLM will try to map it into an HPI entity among those discovered through the HPI. The HE is a purely logical entity, which does not manifest in any way in the realms of PLM. The actual manifestation is part of the HPI realm, where the HPI entity maps it into a piece of hardware.

The purpose of this logical HE entity is to provide PLM with enough information to perform the mapping, thus verify the hardware configuration of the system. Once the mapping is established the HE object is used

- to reflect the runtime status of the mapped physical hardware; and

- as a reference to this hardware in the interactions with PLM clients and administrators.

Let's say we have a CPU blade plugged into the fourth slot of our Advanced Telecommunication Computing Architecture (ATCA) chassis; this is the physical hardware. The HPI entity representing it at the HPI level has the entity path {{CPU blade, 0}{slot, 4}{ATCA chassis, 1}{root, 0}}, which is used to identify our blade within the HPI realm. At the PLM level PLM may have mapped it successfully into the CPU3 HE object within the information model and by that PLM verified the correctness of the hardware configuration. As a result PLM sets the entity path attribute of the CPU3 HE object to the entity path {{CPU blade, 0}{slot, 4}{ATCA chassis, 1}{root, 0}}.

From the entity path we can see that at the HPI level there are more HPI entities in the system: at least the slot and the ATCA chassis. The PLM configuration does not necessarily reflect these. The CPU3 HE could be placed in the model directly under the PLM domain object.

The CPU3 HE object may represent the entire blade as a single entity or may have child HE objects representing the different hardware components such as memory, processor, or the firmware on the blade.

The choice is based on whether we would like to expose the states and the control of these HPI entities via PLM.

Each HE has two important attributes: the HE base type and the HE type, which represents a particular version within the HE base type. A HE base type specifies an HPI entity type and it is a collection of different implementation versions of this entity type, each of which is described as a separate HE type. More precisely, each HE type qualifies the HPI entity type of the HE base type with information found in the HPI inventory data record (IDR) management instrument, for example, indicating the vendor, the product line, the model, and so on.

Considering the example CPU3 above it may indicate that it needs to be a CPU blade by referencing a HE base type specifying this feature. PLM will accept and map any CPU blade in slot 4 as long as it matches the IDR characteristics of one of the versions belonging to the ‘CPU blade’ HE base type in the PLM model. Their characteristics may be very specific or just a few depending on the range of implementations that need to satisfy the relation.

Among the attributes of the HE the attribute showing the HE base type is configured, while the attribute holding the HE type of the HE is set by PLM as the result of the mapping. It tells the HE type matching the IDR information of the piece of hardware discovered by HPI at the location that has been mapped to this HE object. For more details on the mapping procedure itself see Section 5.3.5.1.

To reflect the status of the mapped hardware, PLM defines the presence, the operational, and the administrative states as runtime attributes for HEs. The PLM implementation sets the appropriate values for each of these states based on the information it receives from HPI about the mapped HPI entity and also information gathered at the PLM level.

HE Presence State

The presence state of a HE indicates whether the piece of hardware it represents is present in the system and whether it is active. Its values follow the HPI hot-swap state values; however this does not mean that the hardware actually needs to be hot-swappable. In fact it may not even support all the values defined for the state. The PLM implementation maps any information received from HPI to the specific values of the presence state based on their general interpretation. These values and interpretations are the following:

- Not-present: PLM is not aware of any piece of hardware that would match the configuration of the HE entity. For example, there is no blade inserted into the slot where a CPU blade is expected to appear, or the blade inserted is an I/O blade.

- Inactive: PLM has mapped successfully the HE to a piece of hardware in the system, but it provides no functionality. Effectively the hardware is isolated from the rest of the system. For example, HPI detected a CPU blade, which at the PLM level matches the HE configured for the slot; and HPI reports the inactive hot-swap state for the blade.

- Activating: The activation of the piece of hardware represented by the HE has been initiated, but it has not reached its full functionality yet. For example, HPI reports the insertion pending hot-swap state for the CPU blade after the latches have been closed.

- Active: The hardware represented by the HE has reached its full functionality. It can be used for service provisioning as intended. For example, HPI reports the active hot-swap state for the CPU blade.

- Deactivating: The deactivation or removal of the hardware represented by the HE has been initiated. For example, HPI reports the extraction pending hot-swap state for the CPU blade when someone opens its latches.

HE Operational State

The HE operational state reflects whether PLM is aware of any kind of error condition regarding the piece hardware the HE represents. PLM sets the operational state to disabled when it learns about an error condition in the piece of hardware represented by the HE. Otherwise the operational state is enabled.

PLM may find out about error conditions from several sources: From HPI by monitoring the relevant HPI resources and by analyzing the events reported by HPI; from PLM users who may submit error reports on PLM entities; and by implementation specific means.

Whichever way PLM detects an error, it sets the operational state to disabled and, to protect the rest of the system and prevent fault propagation, it isolates the related piece of hardware from the system. HEs are considered intrinsic fault zones.

To isolate, the PLM implementation performs the operations appropriate for the HE to put it to the inactive presence state. How it does so depends on the HPI resources available for the HPI entity representing the hardware at the HPI level. For example, for an FRU with manageable hot-swap states this would mean setting the inactive hot-swap state. For other HPI resource this could be equivalent to a power-off.

Sometimes PLM cannot initiate or verify the isolation as the hardware is inaccessible. PLM indicates this condition by setting the management-lost and the isolation-pending flags.

The HE operational state becomes enabled when the represented hardware is physically removed from the system, or if PLM receives a report that the error condition has been cleared.

HE Administrative State

The administrative state reflects to what extent the HE may offer its functionality within the system. It is controlled solely by administrative operations that PLM receives via the administrative API. Here we only provide the values that the administrative state may take. We will look at its control in Section 5.3.4:

- Unlocked: The HE is not directly blocked from providing services.

- Locked: The HE may be in the active presence state, but is being blocked from providing service. As a result all of its child entities are also blocked. For example, if the HE hosts an EE when the HE is locked PLM makes sure that the EE does not come up or if it is running PLM shuts it down. However if the HE is active one may run diagnostics on it as it is not related to service provisioning.

- Locked-inactive: The administrator forces the isolation of the HE and all its dependants from the system. It is put into the inactive presence state.

- Shutting-down: The HE may continue to serve existing users, but it may not accept new service requests. When the last existing user completes the administrative state of the HE changes to locked.

5.3.3.3 Execution Environments, Their Types, and Status

An EE may represent an instance of an OS, a VM hosting an OS, or a hypervisor hosting, and managing some VMs.

The common theme among these entities is that they are often referred as system software as each of them is a kind of container, which is capable of running some other software entities while managing system resources. They also expose some control interface through which the hosted software entities can be controlled individually or as a collective.

Since within the container itself, the different software shares the different resources fault propagation may occur even between otherwise independent pieces. Consequently the representation of the different containers, the EE is considered as a barrier to fault propagation. It is an inherent fault zone and therefore it is used for fault isolation.

In the PLM information model a HE representing some HPI entity may host at most one EE and vice versa an EE is hosted on exactly one HE.1 This single EE may be an OS instance or a hypervisor—also referred as virtual machine monitor (VMM).

This single EE may host additional VM and OS instances all sharing the computing facilities of the HPI entity and consequently all impacted by a failure of this HPI entity. Obviously any software running in these EEs will also be impacted, therefore for availability management it is essential to be aware of this lower level organization of the system when, for example, one decides about the distribution of redundant entities among EEs.

Being a fault zone and reflecting the organization of fault zones are the features that the EE logical entity introduces to the system information model. It also exposes a simple and uniform management interface for these different software entities that PLM users can rely on without the need to be aware of the specificities of each of these system software entities.

The PLM implementation is responsible for mapping the administrative control operations and the state information between the particular software instance represented by the EE entity and the PLM administrator. Doing so PLM may interact with the software instance using a Portable Operating System Interface for Unix (POSIX) [73] like interface in case of an OS instance, or use some API such as libvirt [74] for managing the virtualization facilities.

In either case, PLM does control the life-cycle of the EE software entities. So we can say that there is an actual entity in the system, which corresponds to the configuration object in the information model and which is managed by PLM. PLM also controls the type of the EE instance starting up, which means that if the booting software does not correspond to the expected EE, PLM is at least able to prevent it from the completion of booting, but typically it is able even to initiate the boot of the correct software.

To determine whether an EE is the correct one, the PLM information model defines EE base types and EE types. The base type specifies at least the vendor of the EE, but it may also indicate the product and the release. The EE type identifies a particular version within a base type. It also provides some instantiation and termination parameters for the type applicable to all EE instances of the type. For example, the EE base type may say that it is a particular vendor's Enterprise Linux line. It may have several EE types depending on the various versions used in the system, and for each it would indicate the appropriate instantiation and termination timers.

Looking at the EE object class used to configure each EE instance in the system; it references the EE type as a configuration attribute based on which PLM can determine if the booting instance is the expected one.

As we have seen in Section 5.3.3.2, this approach differs from the approach used with HEs which only configures the HE base type. This difference reflects the fact that PLM controls the version of the EE booting as opposed to just detecting the version of the HE plugged into the system.

Each EE object also provides the state information for the EE instance it represents. The PLM specification defines the same set of states for the EE as for the HE. That is, it defines the presence, the operational, and the administrative states. The interpretation of these states is slightly different though than it is in the case of HEs, reflecting the EE's software nature.

EE Presence State

The presence state of an EE reflects its life-cycle. The EE presence state may take the following values:

- Uninstantiated: The system software represented by the EE object is not running, PLM has not started or detected its instantiation.

- Instantiating: The instantiation of the system software has started either by PLM initiating it or automatically after the hosting hardware reached the active presence state.

- Instantiated: The system software represented by the EE object has completed its startup procedure and reached the state where it is capable of providing the desired operating environment.

- Instantiation-failed: The system software did not reach the fully operational state within the applicable time limit. It failed to instantiate.

- Terminating: The EE presence state is set to terminating as soon as the procedure to stop an already instantiated EE has been initiated. The termination typically starts first with the graceful termination of the software entities hosted by the EE followed by the termination of the EE itself.

- Termination-failed: PLM sets the presence state of an EE to termination-failed if after the applicable timeout it still could not verify the successful stopping of the EE instance.

As opposed to the HE presence state which follows the HPI hot-swap state definition the EE presence state definition is aligned with the presence state of Availability Management Framework (AMF) [48] entities. The main difference compared to AMF is that there is no restarting state for EEs. In AMF the restarting state reflects that any assigned service function logically remains with the entity during its restart. A lack of this state at the PLM level reflects that there is no such expectation for PLM entities mainly because AMF and the other AIS services manage themselves the life-cycle of their entities and perform service recoveries as appropriate. A PLM level service recovery could contradict to these higher level actions.

EE Operational State

The EE operational state is very similar to the HE operational state. It reflects whether the PLM implementation is aware of any type of error condition of the represented EE instance.

PLM sets the operational state to disabled

- if the EE presence state becomes instantiation-failed or termination-failed;

- if it detects an error condition in the EE in some implementation specific way; or

- if it receives an error report for the EE.

Whenever the EE enters the disabled state, the PLM implementation is responsible of isolating it from the rest of the system to protect the system and prevent any fault propagation. This means the abrupt termination of the EE instance, which PLM carries out via the entity hosting the faulty EE. It depends on the management capabilities of this host entity how PLM achieves this task.

For example, if the EE resides directly on a HE, PLM may power off or reset the hosting HE. If the EE is a VM hosted by a hypervisor, the hypervisor typically offers the control to terminate the particular VM and the PLM implementation would use that.

As in the case of HEs, when PLM cannot initiate or verify the isolation due to the relevant entity being inaccessible it indicates the condition by setting the management-lost and the isolation-pending flags.

PLM re-enables the EE operational state if it receives a report that the EE has been repaired by the administrator or the error condition was cleared.

EE Administrative State

As for HEs, the EE administrative state reflects whether the EE may offer its functionality for its users. The EE administrative state is controlled via administrative operations, which we will discuss later in Section 5.3.5.2.

The EE administrative state has the following values:

- Unlocked: The EE is not prevented from providing service.

- Locked: The EE may be instantiated, but it is prohibited from providing service. For example, for an EE representing an instance of the Linux OS the locked state may mean that it runs at a lower init level.

- Locked-instantiation: The EE must not be instantiated. For example, PLM may use the hypervisor's control capability to block the instantiation of a VM, or for an OS hosted directly on an HE assert the reset state of this hosting HE.

- Shutting-down: The EE may serve existing user, but it may not accept new service requests. Once all existing users have been served the EE enters the locked state.

5.3.3.4 Entity State Interaction

As PLM entities change their states they impact each other, allowing or preventing the other to provide services. Whether an entity can provide any service is reflected in its readiness status. The path along which the entities impact each other is determined by the dependencies among the entities.

In this section we present how this essential for availability management information is defined and maintained by PLM. Subsequently in Section 5.3.4, we present how PLM users can obtain this single most important information.

Readiness Status

The readiness status reflects to what extent an HE or an EE is available for service. It is composed of the readiness state and the readiness flags.

The readiness state summarizes the presence, the operational, and the administrative states of a PLM entity itself and the readiness state of the entities it depends on. It has three values:

- In-service: When the readiness state is in-service it indicates that

- the PLM entity is

- healthy—its operational state is enabled;

- capable—its presence state is active or deactivating in case of an HE, and instantiated or terminating for an EE; and

- permitted of providing services—its administrative state is unlocked. And

- all the entities the PLM entity depends on are also in the in-service readiness state.

- the PLM entity is

- Out-of-service: A PLM entity is out-of-service when it is

- not capable—it is disabled or it is in a presence state other than

- active or deactivating in case of an HE;

- instantiated or terminating in case of EEs; or

- administratively prevented from supporting service provisioning—it is locked, locked-inactive, or locked-instantiation; or

- missing a dependency such as its container entity being out-of-service, for example, due to being locked.

- not capable—it is disabled or it is in a presence state other than

- Stopping: A PLM entity enters the stopping readiness state when the administrator moves it or an entity it depends on to the shutting-down administrative state.

The readiness flags further refine the readiness state. There are six flags qualifying the information provided by the readiness state:

- Management-lost flag: It indicates that the PLM implementation has no management access to the PLM entity; any state information for the entity needs to be taken with a grain of salt as PLM cannot verify the information and the state values which represent the last known values may be old data.

The following two flags can only be used in conjunction with the management-lost flag:

- Admin-operation-pending: The PLM could not perform or verify the execution of an administrative operation due to the loss of management access.

- Isolate-pending: Because of the loss of management access, the PLM could not isolate or verify the isolation of the PLM entity.

- Dependency: By setting this flag PLM indicates that the PLM entity is not in-service due to a state change of an entity that the PLM entity depends on, rather than due to a state change in the PLM entity itself.

- Imminent-failure: PLM uses this flag when it receives or detects some indications that a currently enabled PLM entity may become disabled any moment (e.g., a sensor may have signaled the stepping over of some threshold). However at the moment there is no state change yet. Effectively it is a warning recommending users to abandon the use of the entity for which it is set to prevent sudden service impacts.

- Dependency-imminent-failure: Same as imminent-failure but rather than by its own fault the PLM entity is expected to become unavailable due to a dependency.

PLM Entity Dependencies

PLM entities are organized in sub-trees under the PLM domain object. This tree organization is interpreted as a dependency resulting from containment and it also implies a top-down propagation of the state change implications.

Let us assume a chassis having slots which house blades that are carriers of mezzanine cards, each of which may host an OS instance. If a mezzanine card fails it can no longer run the OS it hosts; if the carrier blade breaks none of the mezzanine cards can run their EEs or provide their other functionalities, and so on. The presence and operational states of these entities interact in this manner according to the specification, which reflects reality.

Depending on the hardware architecture, the representation of hardware dependencies may not be this straightforward. It is also influenced by the choice of which HPI entities one wants to reflect in the PLM model.

For example, the CPU, the memory, the hard drive, the I/O card all may be necessary to run an OS instance, but they may be different pieces of hardware, which means that they show up as different HPI entities and accordingly they may be represented in PLM as different HEs. In the tree representation these HEs could be children of an HE representing the board. The question is where in the tree should be the EE object reflecting the OS requiring all these elements.

To properly reflect this relation besides the tree organization the PLM information model also includes the configuration object class that indicates dependencies among PLM entities. Using objects of this class one could define that although it is the HE mapped to the CPU HPI entity that hosts the OS, this EE also depends on the HEs representing the other devices (i.e., memory, hard drive, and I/O card).

Hardware platforms built for high-availability systems typically encompass some—a lot of—redundancy. Among others, this is the reason why they are often built as a chassis capable of housing many identical blades. These blades then are connected through redundant communication paths including redundant switches and powered through redundant power supplies.

For the correct operation of the system, however, at any moment in time not all of these redundant devices are needed. It is enough that at least one of the duplicated devices is available. The dependency class allows the reflection of this constraint.

The PLM dependency object defines the way a PLM entity (HE or EE) depends on a set of other PLM entities. It is used for each set of redundant entities and it specifies how many entities in the set need to be available for service to satisfy the dependency.

‘Available for service’ here means that the readiness state is not out-of-service. In other words, at least the indicated number of entities of the set needs to be either in the in-service or in the stopping readiness state.

For example, for the chassis with dual power supply we could specify that the chassis has a dependency on two power supplies and at least one of them must not be out-of-service.

The dependency—whether it is implied through containment or defined through dependency objects—impacts the readiness state of the dependent entity.

In case of containment all ancestor entities need to be in the in-service state for the PLM entity to be able to stay in the in-service state. If any of them moves to the stopping or the out-of-service readiness state, the dependent entity moves with them. In other words, if an entity moves to the stopping or out-of-service readiness state, all its in-service descendant entities move with it immediately.

In case of a dependency relation configured using the dependency object class, if an entity requires m out of the set of n entities, then for the entity to be in-service at least m of these n entities need to be in-service. If at least one of these m entities moves to the stopping readiness state the dependent entity also moves to the stopping readiness state.

In conjunction with the readiness state the dependency readiness flag indicates that the entity is stopping or out-of-service due to a dependency (regardless of whether it is specified through the tree hierarchy or the dependency objects) and not as a result of a change of its own state values.

The dependency-imminent-failure flag is used to indicate that an imminent failure may occur due to a dependency; however, this does not change the readiness state of the entity for which it is reported. It is a warning. An HPI sensor stepping over a sensor threshold may set the imminent-failure flag for the related HE. PLM propagates this to all its dependent entities by setting their dependency-imminent-failure flags. For the propagation PLM takes into account both the containment and the dependency objects.

It may be interesting to look at how some virtualization features may be represented using dependencies. As we mentioned earlier, if an EE is hosted on a HE it can be configured as the child of this HE. If it is a hypervisor it could be the parent of a set of dependent child-EEs. We can represent each VM and its OS as such a child-EE of the hypervisor EE. These will be the leaf objects in the PLM information model.

Such a representation, however, fixes the relationship of these child-EEs with the HEs and the hosting hypervisor due to the naming convention used in the information model discussed in Section 4.3.1.3. This conflicts with one of the desirable feature of virtualized environments, namely that VMs may migrate.

Instead, one may configure such migrating VMs using dependencies. In this case the migration enabled VMs would be configured as direct descendants of the PLM domain, each of which depends on a set of hypervisors which are configured as leaves of the HE sub-trees. As long as at least one of the hypervisors is in-service and ready to host the VM, the VM can be migrated to it and can be in-service as well. Note however that at the moment PLM is not required by the specification to manage this migration nor it is specified how the dependency object reflects which hypervisor hosts the VM at any given instance of time.

Dependencies just like the PLM domain are not typed objects in the PLM information model.

5.3.4 Tracking of PLM Entities

For PLM users the most important aspect of platform management is that through its track interface it can provide them with up-to-date information about the readiness status of the different PLM entities within the system. This includes readiness state changes, readiness flag changes, but also allows the users to