CHAPTER 6

Networking in the AWS Cloud

In this chapter, you will

• Learn about basic AWS networking concepts

• Learn about virtual networking with Amazon Virtual Private Cloud (VPC)

• Learn how AWS Direct Connect lets you bypass the public Internet to access your resources in the AWS cloud

• Learn how Elastic Load Balancing (ELB) helps you balance your workloads among multiple EC2 instances in an AWS region

• Understand how to use Amazon Route 53 to manage your network domains and connect to AWS

• Learn how Amazon CloudFront helps deliver web content faster to your users

Networking is a crucial component of AWS, because it underlies everything you do in the AWS cloud. You’re likely to be quite familiar with several of the networking features that I’ll discuss in this chapter. Others are specific to AWS, such as virtual private clouds and Amazon Route 53, the AWS Domain Name System (DNS) service.

The certification exam expects you to know the basics of networking, such as IP addresses, subnets, and so on. I introduce these basics first and then explain the key AWS networking features.

Basic AWS Networking Concepts

Before we jump into AWS networking concepts such as Amazon VPC, you need to understand the definitions of several common networking terms and concepts.

Virtual Private Cloud

A VPC is an isolated virtual network dedicated to your account. It’s a logically isolated section of the AWS cloud into which you launch your AWS resources, such as EC2 instances, so that you can run AWS services securely. You have complete control over the VPC’s configuration.

When you create the VPC, you must specify a range of IPv4 addresses for it in the form of a Classless Inter-Domain Routing (CIDR) block, such as 10.0.0.0/16. This first block is the VPC’s primary CIDR block; if required, you can later associate secondary CIDR blocks with the VPC.

Subnets

A subnet is a range of IP addresses in your Amazon VPC. You launch resources such as EC2 instances into specific subnets in your VPC: place them in a public subnet if they must be connected to the Internet, and in a private subnet otherwise.

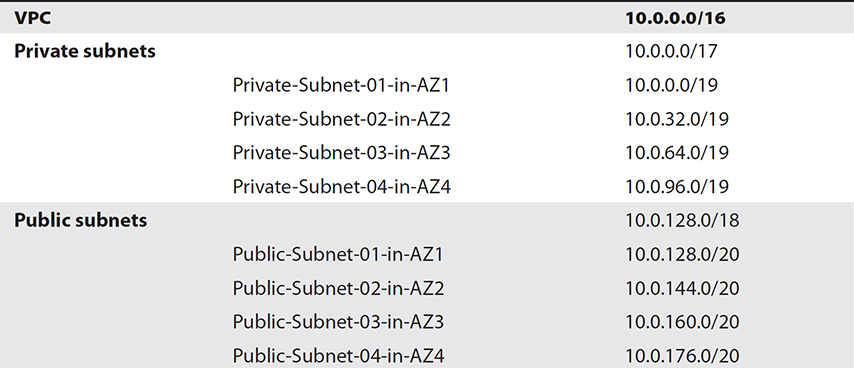

A VPC is divided into public subnets and private subnets, and these subnets are spread across availability zones (AZs), as shown in Table 6-1. Remember that when you create a subnet, it’s mapped to a single AZ: a subnet resides entirely within one AZ and can’t span AZs.

Table 6-1 VPC Divisions

The subnets of a VPC must be in the same address space as the VPC. Thus, if you create a VPC with the CIDR block 10.0.0.0/16, you can create a pair of subnets (one public, the other private) such as 10.0.1.0/24 and 10.0.2.0/24, but not a pair such as 10.1.0.0/24 and 10.2.0.0/24, which fall outside the VPC’s range.

Route Tables

A route table is associated with each subnet and specifies the permissible routes for outbound traffic from that subnet. A route table includes a set of rules, or routes, that you configure, which are used to determine where network traffic is directed. Every subnet in a VPC must be associated with a route table. Your VPC has a main route table, and by default, every subnet you create is associated with your VPC’s main route table.

Security Groups

A security group acts as a virtual firewall that controls inbound and outbound traffic for your EC2 instances. If you don’t specify a security group when you launch an instance, AWS uses the VPC’s default security group. Here are the default rules for a default security group:

• Allow all inbound traffic from all other instances associated with the default security group

• Allow all outbound IPv4 traffic from the instance

A custom security group lets you tailor the rules to the role an instance plays, such as a web server or a database server. Here are the default rules for a newly created custom security group:

• Allow no inbound (ingress) traffic

• Allow all outbound (egress) traffic

You add rules to a security group to control traffic flow to or from the instances you associate with that group. You can assign multiple security groups to an instance; in that case, AWS aggregates the rules from all of the instance’s security groups and evaluates them together before deciding whether to allow traffic to reach the instance.
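To see what aggregating rules means in practice, here’s a minimal Python sketch; it isn’t an AWS API. It models each inbound rule as a protocol, a port range, and a source CIDR (the Rule class, the inbound_allowed function, and the two example groups are hypothetical names used only for illustration). It assumes allow-only rules, which is how security group rules work: traffic is allowed if any rule in any attached group matches, and it’s denied otherwise.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One inbound allow rule (illustrative model, not the AWS data format)."""
    protocol: str
    from_port: int
    to_port: int
    source: str  # CIDR block the traffic may come from


def inbound_allowed(groups, protocol, port, source_ip):
    """Return True if ANY rule in ANY attached security group matches.

    Security group rules can only allow traffic, so the effective policy
    for an instance is the union of the rules of all its groups.
    """
    addr = ipaddress.ip_address(source_ip)
    for rules in groups:
        for rule in rules:
            if (rule.protocol == protocol
                    and rule.from_port <= port <= rule.to_port
                    and addr in ipaddress.ip_network(rule.source)):
                return True
    return False  # nothing matched: the traffic is implicitly denied


# Hypothetical groups: HTTPS from anywhere, SSH only from an admin network.
web_sg = [Rule("tcp", 443, 443, "0.0.0.0/0")]
admin_sg = [Rule("tcp", 22, 22, "203.0.113.0/24")]
```

With both groups attached, inbound_allowed([web_sg, admin_sg], "tcp", 22, "203.0.113.7") is True through admin_sg, while SSH from any other address is denied, even though web_sg accepts HTTPS from everywhere.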

Network Access Control Lists

Network access control lists (NACLs) control both inbound and outbound traffic for your subnets. Each subnet that you create in your VPC must be associated with an NACL, and by default, all subnets are associated with your VPC’s default NACL.

Security groups and network ACLs offer complementary layers of security for the AWS resources in each subnet of a VPC. Unlike a security group, which is stateful, an NACL is stateless: it doesn’t track connections, so allowing traffic in one direction doesn’t automatically allow the corresponding return traffic. For example, if you allow inbound requests on port 80, you must also add an outbound rule that allows the responses, which are sent to the client’s ephemeral port (typically a port in the range 1024–65535). Network ACLs are tied to the subnet, so their rules apply to all instances within the subnet.
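In practice, the outbound rule that lets responses to port 80 requests leave the subnet has to match the client’s ephemeral port rather than port 80, because a response leaves the server from port 80 but is addressed to whatever port the client picked. The following Python sketch shows this. It’s a deliberately simplified model: the helper names are hypothetical, and it ignores the numbered allow and deny rules that real network ACLs also have, treating each direction as a plain list of allowed destination-port ranges.

```python
# Simplified model of a stateless network ACL: each direction is a list of
# allowed (low, high) destination-port ranges. Real NACLs also have numbered
# allow/deny rules, which this sketch ignores.

def direction_allows(port_ranges, dest_port):
    """True if any (low, high) range in this direction covers dest_port."""
    return any(low <= dest_port <= high for low, high in port_ranges)


def http_exchange_succeeds(inbound, outbound, client_port, server_port=80):
    """Check a request and its response separately, with no connection tracking."""
    request_ok = direction_allows(inbound, server_port)    # client -> server:80
    response_ok = direction_allows(outbound, client_port)  # server:80 -> client:ephemeral
    return request_ok and response_ok


CLIENT_PORT = 49152  # an example ephemeral port chosen by the client's OS
```

With outbound rules of [(80, 80)] the exchange fails, because the response is addressed to port 49152; with [(1024, 65535)] it succeeds. A security group needs no such outbound rule, because it’s stateful and automatically allows the response.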

Domain Name System

DNS uses a distributed directory that resolves human-readable hostnames, such as example.com, into machine-readable IP addresses, such as 192.161.1.132.

Amazon Virtual Private Cloud

An Amazon VPC is a virtual network dedicated to your AWS account. A VPC is isolated from other networks in the AWS cloud. Amazon VPC enables you to launch your AWS resources, such as EC2 instances, into your own private virtual network, and it thus acts as the network layer for EC2. As its name says, the virtual network is private to you, and it’s quite similar to the traditional networks that you work with in your data centers, which means that it includes entities such as routing, ACLs, and firewalls.

You can specify the IP address types (IPv4 and/or IPv6) and address ranges for the VPC that you create, and you can add subnets to the VPC. You can also create security groups in the VPC and configure its route tables.

IP Address Types and IP Ranges

You can use either or both IPv4 and IPv6 addresses in a VPC: IPv4 is the default and is mandatory, and IPv6 is optional. By default, your VPC and subnets must have IPv4 CIDR blocks, and you can optionally associate an IPv6 CIDR block with the VPC. It’s important to understand that IPv6 addresses in a VPC are globally unique and can therefore be reachable from the Internet, subject to your routing and security configuration. You must specify a range of IP addresses (a CIDR block) for your VPC when you create it. If you create multiple VPCs that you intend to connect, for example through VPC peering, give each one a separate, nonoverlapping address range.

The size of the CIDR block for your VPC depends on the address range that you choose, and it can range from a /16 (65,536 IP addresses) to a /28 (16 IP addresses) netmask. You can associate one or more secondary CIDR blocks, with a maximum of four secondary IPv4 blocks, with the VPC after you create it, enabling you to increase the size of the VPC.
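Each step in the prefix length halves the block, so a /n block holds 2^(32-n) addresses. Here’s a quick Python check of those numbers using the standard ipaddress module; vpc_block_size is a hypothetical helper that also enforces the /16 to /28 limit described above.

```python
import ipaddress


def vpc_block_size(cidr):
    """Number of addresses in an IPv4 VPC block, enforcing the /16 to /28 limit."""
    net = ipaddress.ip_network(cidr)
    if not 16 <= net.prefixlen <= 28:
        raise ValueError(f"{cidr}: VPC IPv4 blocks must be between /16 and /28")
    return net.num_addresses


for prefix in (16, 20, 24, 28):
    print(f"/{prefix}: {vpc_block_size(f'10.0.0.0/{prefix}')} addresses")
```

The loop prints 65536, 4096, 256, and 16 addresses for /16, /20, /24, and /28, and a /29 block raises an error.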

Here are some key factors about CIDR blocks and a VPC:

• You can’t change the size of a VPC’s CIDR block after creating it—that is, you can’t increase or decrease the size of an existing CIDR block.

• A subnet’s CIDR block is a subset of the VPC’s CIDR block if you create multiple subnets.

• CIDR blocks of different subnets can’t overlap.

• If you have a single subnet in your VPC, the CIDR block of the subnet and the VPC can be identical.
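These rules, together with the requirement that every subnet sit inside the VPC’s address space, are easy to check before you create anything. The following Python sketch uses the standard ipaddress module; check_subnets is a hypothetical helper, not part of any AWS tool, and it handles IPv4 blocks only.

```python
import ipaddress
from itertools import combinations


def check_subnets(vpc_cidr, subnet_cidrs):
    """Return a list of problems; an empty list means the subnet plan is valid."""
    vpc = ipaddress.ip_network(vpc_cidr)
    subnets = [ipaddress.ip_network(c) for c in subnet_cidrs]
    problems = []
    # Each subnet must be a subset of the VPC block (it may equal the VPC block).
    for subnet in subnets:
        if not subnet.subnet_of(vpc):
            problems.append(f"{subnet} is outside the VPC block {vpc}")
    # No two subnets may overlap.
    for a, b in combinations(subnets, 2):
        if a.overlaps(b):
            problems.append(f"{a} overlaps {b}")
    return problems
```

For a 10.0.0.0/16 VPC, check_subnets returns an empty list for ["10.0.1.0/24", "10.0.2.0/24"], reports both entries of ["10.1.0.0/24", "10.2.0.0/24"] as outside the VPC, and accepts a single subnet that is identical to the VPC block.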

Each subnet in a VPC must have its own distinct CIDR block. Let’s say, for example, that you create a VPC with the CIDR block 10.0.0.0/24 and want to create two subnets within it. The /24 netmask allows 256 IP addresses, so if you give both subnets the same number of addresses, each can have a maximum of 128 IP addresses. One subnet uses the CIDR block 10.0.0.0/25, with the IP address range 10.0.0.0–10.0.0.127. The other subnet uses the remaining portion of the VPC’s CIDR block, which is 10.0.0.128/25, with the IP address range 10.0.0.128–10.0.0.255.
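The same split can be computed with Python’s ipaddress module. split_evenly is a hypothetical helper; it assumes you want equal-sized subnets, so the number of parts must be a power of two.

```python
import ipaddress


def split_evenly(cidr, parts):
    """Split a CIDR block into `parts` equal subnets (parts must be a power of two)."""
    if parts < 1 or parts & (parts - 1):
        raise ValueError("parts must be a power of two")
    net = ipaddress.ip_network(cidr)
    # Each doubling of the subnet count borrows one more bit from the host part.
    return list(net.subnets(prefixlen_diff=parts.bit_length() - 1))


for subnet in split_evenly("10.0.0.0/24", 2):
    # Print each subnet with its first and last address.
    print(subnet, subnet[0], subnet[-1])
```

This prints 10.0.0.0/25 (10.0.0.0 to 10.0.0.127) and 10.0.0.128/25 (10.0.0.128 to 10.0.0.255). Likewise, split_evenly("20.0.0.0/24", 2) returns 20.0.0.0/25 and 20.0.0.128/25, the public/private pair used in the 20.0.0.0/24 example later in this section.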

AWS recommends that you specify a CIDR block of /16 or smaller and dedicate that network to your VPCs to allow for future needs. AWS further recommends that you specify the CIDR block (/16 or smaller) from the following set of private IPv4 address ranges:

• 10.0.0.0–10.255.255.255 (10/8 prefix)

• 172.16.0.0–172.31.255.255 (172.16/12 prefix)

• 192.168.0.0–192.168.255.255 (192.168/16 prefix)
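To check whether a planned CIDR block comes from one of these recommended ranges, you can test it against the three blocks with Python’s ipaddress module. in_recommended_range is a hypothetical helper. The module’s is_private attribute covers more ranges than the three listed here, so the sketch lists them explicitly.

```python
import ipaddress

# The three private IPv4 ranges listed above.
PRIVATE_RANGES = [ipaddress.ip_network(c)
                  for c in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]


def in_recommended_range(cidr):
    """True if the whole CIDR block falls inside one of the three private ranges."""
    net = ipaddress.ip_network(cidr)
    return any(net.subnet_of(private) for private in PRIVATE_RANGES)
```

For example, in_recommended_range("172.31.0.0/16") is True, while in_recommended_range("20.0.0.0/24") is False: AWS lets you create a VPC with a publicly routable block like that, but it isn’t from the recommended private ranges.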

Once you create a VPC with a CIDR block such as 20.0.0.0/24, you can create both public and private subnets in the VPC. In this case, you can create, for example, two subnets: a public subnet with the CIDR block 20.0.0.0/25 (128 IP addresses, in the range 20.0.0.0–20.0.0.127) and a private subnet with the CIDR block 20.0.0.128/25 (128 IP addresses, in the range 20.0.0.128–20.0.0.255).

AWS reserves certain IP addresses in the network for its own use, as shown in the following list. If, for example, you create a subnet with the CIDR block 10.0.0.0/24, AWS reserves the first four IP addresses, as well as the last address.

• 10.0.0.0 The network address.

• 10.0.0.1 Reserved for the Amazon VPC router.

• 10.0.0.2 Reserved for the IP address of the DNS server, which is always the base of the VPC network range, plus two. However, AWS also reserves the base of each subnet’s range plus two. If you have multiple CIDR blocks, the DNS server’s IP address is located in the primary CIDR.

• 10.0.0.3 Reserved for future use by AWS.

• 10.0.0.255 Reserved as the network broadcast address, because AWS doesn’t support broadcast in a VPC.

Because these addresses are reserved, you can’t assign any of them to an instance running in either a private or a public subnet inside your VPC. So, in the earlier example of a VPC with the CIDR block 20.0.0.0/24 (256 IP addresses), you can assign any of the following private IP addresses to an EC2 instance, but not 20.0.0.255, since it’s the network broadcast address that AWS reserves:

• 20.0.0.132

• 20.0.0.55

• 20.0.0.122
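The reservation rule is mechanical: the first four addresses and the last address of every subnet. Here’s a Python sketch (reserved_addresses and assignable are hypothetical helpers) that lists them and checks whether an address can be assigned to an instance. Like the example above, it treats 20.0.0.0/24 as a single subnet.

```python
import ipaddress


def reserved_addresses(subnet_cidr):
    """The five addresses AWS reserves in a subnet: the first four and the last."""
    net = ipaddress.ip_network(subnet_cidr)
    return [net[0], net[1], net[2], net[3], net[-1]]


def assignable(subnet_cidr, ip):
    """True if ip lies in the subnet and isn't one of the reserved addresses."""
    net = ipaddress.ip_network(subnet_cidr)
    addr = ipaddress.ip_address(ip)
    return addr in net and addr not in reserved_addresses(subnet_cidr)
```

For 10.0.0.0/24, reserved_addresses returns 10.0.0.0, 10.0.0.1, 10.0.0.2, 10.0.0.3, and 10.0.0.255. In the 20.0.0.0/24 example, 20.0.0.132, 20.0.0.55, and 20.0.0.122 are assignable and 20.0.0.255 isn’t, which leaves 256 - 5 = 251 usable addresses.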

Accessing the Internet from a VPC

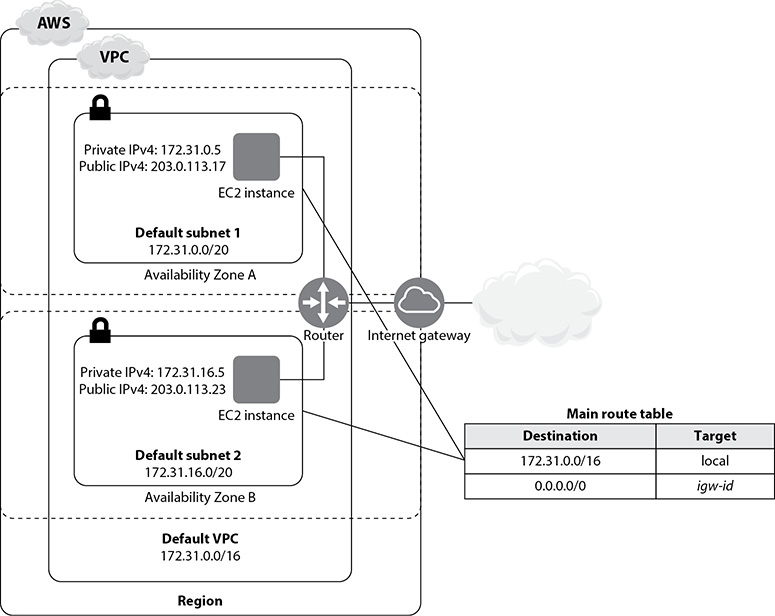

Each default subnet (a subnet in your default VPC) is a public subnet, so the EC2 instances you launch into the default VPC have both private and public IPv4 addresses. You communicate with the Internet from your VPC through an Internet gateway, which connects your EC2 instances to the Internet via the EC2 network edge. You need the Internet gateway to connect to services that are outside your VPC. Some AWS services, such as Amazon S3, offer VPC endpoints that eliminate the need for an Internet gateway, but not every service has such an endpoint. A default VPC comes with an Internet gateway; for a nondefault VPC, you must create and attach the gateway yourself to communicate with the Internet.

Figure 6-1 shows how EC2 instances in a VPC communicate through an Internet gateway.

Figure 6-1 Accessing the Internet from inside a VPC

Amazon Virtual Private Cloud Components

To work with a VPC, you must understand various networking components that play a key role, such as subnets and route tables. I’ve been using several of these terms out of necessity, but I’ll provide clear definitions and explanations for all the VPC network-related terms and concepts here.

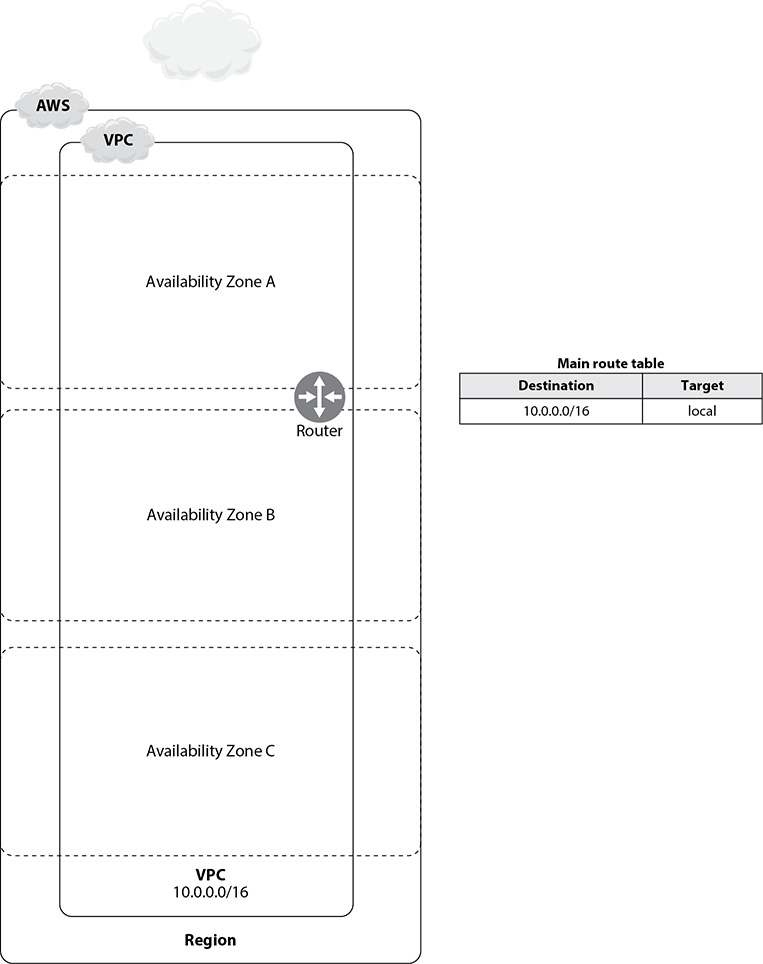

Figure 6-2 shows a VPC with a CIDR block of IP addresses and a main route table.

Figure 6-2 A simple VPC

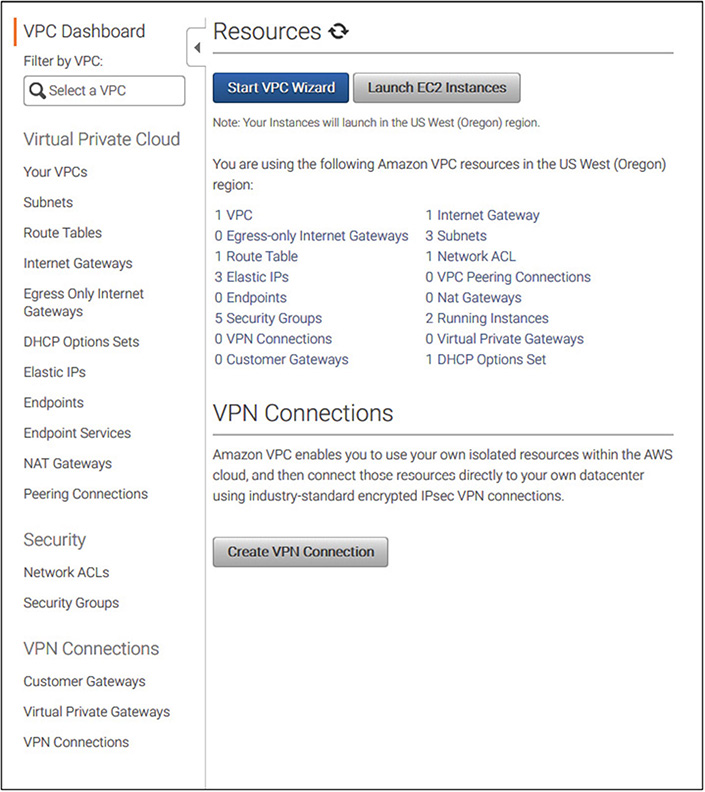

A VPC comes with several components that work together to enable networking. Figure 6-3 shows the VPC dashboard, listing all the VPC resources you can use in your VPC.

Figure 6-3 The VPC dashboard lists all the VPC components.

In the following sections, I explain each of the key VPC resources.

Subnets and a VPC

When you create a VPC, you can launch AWS resources such as instances into that VPC. As part of the VPC creation process, you must specify a range of IPv4 addresses for the VPC in the form of a CIDR block, such as 10.0.0.0/16, which becomes the primary CIDR block for the VPC. You then subdivide that range into one or more smaller ranges of IP addresses, called subnets.

Subnet Types There are two main types of subnets: private and public. A private subnet is used for resources such as databases that don’t need to connect to the Internet. A public subnet is used for resources such as web servers that must connect to the Internet.

Default and Nondefault Subnets and Access to the Internet Your default VPC comes with a default subnet in each AZ, and each default subnet is a public subnet. The EC2 instances you launch into these subnets have private IPv4 addresses as well as public IPv4 addresses. These instances can communicate among themselves and are able to access the Internet through an Internet gateway.

When you launch an EC2 instance into a nondefault subnet, by default the instance has only a private IPv4 address. Thus, these instances can communicate only among themselves, with no Internet access. You can, however, assign a public IPv4 address at instance launch time or modify the subnet’s public IPv4 addressing attribute.

You can enable Internet access for the EC2 instances that you launch into a custom nondefault subnet in one of the following ways:

• Attach an Internet gateway to the VPC (if it isn’t a default VPC) and associate an elastic IP (EIP) address with the instance.

• For IPv4 traffic, to enable EC2 instances in a private subnet to initiate outbound connections to the Internet while blocking unsolicited inbound connections from the Internet, you can use a network address translation (NAT) device, such as a NAT gateway or a NAT instance. The NAT device uses an EIP and communicates with the Internet via an Internet gateway. It routes traffic from the EC2 instances in the private subnet to the Internet gateway and routes the responses back to the instances.

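When you create a subnet, it’s implicitly associated with the VPC’s main route table and default network ACL, and instances you launch into it use the VPC’s default security group unless you specify another one. You must therefore modify the route table, security groups, and network ACLs to change how Internet traffic is routed to and from the subnet you created.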

AWS recommends that you create a public subnet and a private subnet in every AZ. The public subnet (used for the web layer) includes a route to the Internet, and the instances in it have either a public IP address or an EIP. The private subnet (used for the database and application layers) has no route to the Internet, and the instances in it have only private IP addresses for internal communications inside the VPC.

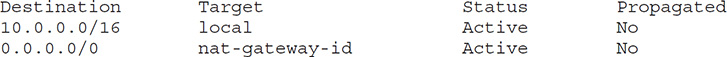

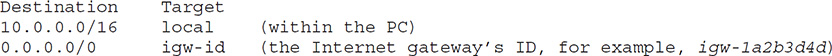

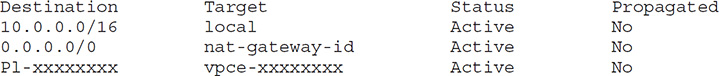

If you want to set up the private subnet to send and receive Internet traffic, you must configure it to do so. If you want the private subnet to send network packets to the Internet, you must create a route in the subnet’s route table, specifying that the IP packets must be routed through either a NAT gateway or a NAT instance. For the private subnet to receive traffic from the Internet, the network packets must go through a load balancer of some type, such as the Classic Load Balancer or the Application Load Balancer (both described later in the section “Elastic Load Balancing”). A private subnet with a NAT gateway attached has a route table with the NAT gateway specified in a route, as shown here:

Accessing Your Corporate Network from Your VPC

You can connect your corporate data center with your Amazon VPC through an AWS-managed VPN connection. This in effect makes the AWS cloud an extension of your corporate data center. A VPN connection uses two gateways: a virtual private gateway on the AWS side and a customer gateway in your data center. The virtual private gateway is attached to your VPC and the customer gateway is a physical device or software appliance located in your data center.

Accessing AWS Services Through AWS PrivateLink AWS PrivateLink helps you privately connect your Amazon VPC to supported AWS services and to services hosted by other AWS accounts (VPC endpoint services). The traffic between your VPC and these services remains within the Amazon network. You don’t need an Internet gateway, a NAT device, an AWS Direct Connect connection, or a VPN connection to communicate with the services. When you create an interface VPC endpoint for a service in your VPC, AWS creates an elastic network interface with a private IP address in your subnet, which serves as the entry point for traffic sent to the service.

Launching an EC2 Instance Inside Your VPC Follow these steps, in this order, to launch EC2 instances in a VPC:

1. Create an empty VPC, either with the AWS CLI command create-vpc or from the Amazon VPC console. (See Exercise 6-2 to create a VPC.)

2. Next, you can create subnets. To add a new subnet to a VPC, you need to specify an IPv4 CIDR block for the subnet from the IP range of a VPC. You can create a subnet with the create-subnet command or from the Amazon VPC console, as shown in Exercise 6-3.

3. After you create a subnet, you must configure routing. By default, a subnet you create is a private subnet. To make the subnet a public subnet, you must attach an Internet gateway to your VPC. So, you need to do the following:

• Create and attach an Internet gateway to the VPC.

• Create a custom route table and add a route to the Internet gateway.

4. Also, you can do the following to enhance the instance’s security in your VPC.

• Create a security group.

• Create network ACLs.

5. After you’ve created your subnet, configured routing, and secured the VPC, you can launch an EC2 instance into your subnet (from the EC2 console or via the command line with the run-instances command).
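If you prefer to script steps 1 through 3, here’s a sketch using the boto3 EC2 client for Python. It assumes you pass in a client created with boto3.client("ec2") and that valid AWS credentials are configured. The function name build_public_subnet and its default CIDR blocks are hypothetical, error handling and cleanup are omitted, and the sketch stops before step 4 (security groups) and step 5 (launching an instance).

```python
def build_public_subnet(ec2, vpc_cidr="10.0.0.0/16", subnet_cidr="10.0.1.0/24"):
    """Create a VPC with one public subnet; `ec2` is a boto3 EC2 client.

    Returns the IDs of the resources it creates. No error handling or cleanup.
    """
    # Step 1: the (empty) VPC.
    vpc_id = ec2.create_vpc(CidrBlock=vpc_cidr)["Vpc"]["VpcId"]

    # Step 2: a subnet carved from the VPC's range.
    subnet_id = ec2.create_subnet(VpcId=vpc_id, CidrBlock=subnet_cidr)["Subnet"]["SubnetId"]

    # Step 3a: create an Internet gateway and attach it to the VPC.
    igw_id = ec2.create_internet_gateway()["InternetGateway"]["InternetGatewayId"]
    ec2.attach_internet_gateway(InternetGatewayId=igw_id, VpcId=vpc_id)

    # Step 3b: a custom route table whose default route points at the gateway,
    # associated with the subnet so that the subnet becomes public.
    rtb_id = ec2.create_route_table(VpcId=vpc_id)["RouteTable"]["RouteTableId"]
    ec2.create_route(RouteTableId=rtb_id, DestinationCidrBlock="0.0.0.0/0",
                     GatewayId=igw_id)
    ec2.associate_route_table(RouteTableId=rtb_id, SubnetId=subnet_id)

    return {"vpc": vpc_id, "subnet": subnet_id, "igw": igw_id, "route_table": rtb_id}
```

Calling build_public_subnet(boto3.client("ec2")) creates real resources in your account, so delete them when you’re done experimenting. Passing the client in, rather than creating it inside the function, also makes the sketch easy to test with a mock client.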

For the EC2 instance to communicate with services outside your VPC, it needs a public IPv4 address (or an EIP), which you can assign when you launch the instance. Alternatively, an instance in a private subnet can reach the Internet through a NAT gateway, which translates the instance’s private address to the NAT gateway’s own EIP.

The Default VPC

Your account comes with a default VPC with a default subnet in each AZ. If you don’t create your own VPC, your EC2 instances will launch in the default VPC. The VPC you create is called a nondefault VPC. Subnets that you create in this VPC are called nondefault subnets.

The default VPC has the following components:

• A default subnet in each AZ

• A size /16 CIDR block (172.31.0.0/16), which provides up to 65,536 private IP addresses

• A /20 CIDR block for each default subnet, which provides up to 4096 addresses per subnet

• An Internet gateway

• A main route table, which routes all IPv4 traffic destined for the Internet to the Internet gateway

• A default security group and a default network ACL (the default network ACL allows all inbound and outbound traffic)

• A default DHCP option set
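The /20 subnet size follows from simple arithmetic: a /16 holds sixteen /20 blocks of 4096 addresses each. This Python sketch lists the first few. Which block AWS assigns to which AZ in your account isn’t something the sketch models, so treat the index as illustrative only.

```python
import ipaddress

default_vpc = ipaddress.ip_network("172.31.0.0/16")
slots = list(default_vpc.subnets(new_prefix=20))  # every possible /20 subnet

print(len(slots), slots[0].num_addresses)  # 16 4096
for index, subnet in enumerate(slots[:3]):
    print(index, subnet)
```

The loop prints 172.31.0.0/20, 172.31.16.0/20, and 172.31.32.0/20, each offset by 4096 addresses from the previous one.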

Elastic Network Interfaces

An elastic network interface (ENI) is a virtual network interface that includes attributes such as these:

• A primary and one or more secondary private IPv4 addresses

• An EIP for each private IPv4 address

• A public IPv4 address that can be assigned to the eth0 network interface

• One or more IPv6 addresses

• One or more security groups

• A MAC address

• A description

Every instance in a VPC has a default network interface, which is the primary network interface (eth0). This interface has a private IPv4 address. Though you can’t detach an instance’s primary network interface, you can configure and attach multiple network interfaces to instances in your VPC. Among other things, multiple network interfaces offer an inexpensive high availability solution for instances in your VPC.

Let’s say, for example, that you have an application inside your VPC with hard-coded IP addresses. You can enable the application to fail over to new instances without having to reconfigure the application by assigning a secondary private IP address to the primary ENI that can be moved to a failover instance. You can also achieve the failover capability by creating a secondary ENI that can be moved to a failover instance. You can do both of these when launching an instance (cold attach), when you are allowed to specify up to two network interfaces. You can also add network interfaces to a running instance (hot attach) or when the instance is stopped (warm attach). If you specify more than one network interface for an instance, you can’t auto-assign a public IPv4 address to that instance.

The maximum number of network interfaces depends on the instance type. For example, the c4.8xlarge instance type can have a maximum of eight network interfaces and up to thirty IPv4 addresses per interface.

An elastic network interface is a resource in your AWS account that exists independently of any instance. You can thus create an ENI on its own and attach it to an instance in the same AZ. When you stop an instance, the ENIs attached to it remain attached. If you terminate the instance, an ENI that you created separately and attached to it continues to exist in your account, because you created it, whereas network interfaces that AWS creates when you launch the instance are, by default, deleted when the instance terminates.

When you create a network interface, it inherits the public IPv4 addressing attribute from the subnet. After you create a network interface (the same as an ENI in our context), you can attach it to an instance, detach it from that instance, and attach it to another instance. The network interface carries over the attributes that you’ve defined, and network traffic is sent to the new instance to which you attached it. If you want, you can modify the network interface’s attributes, for example by changing its security groups or managing its IP addresses.

You can monitor IP traffic going to and from a network interface by enabling a VPC flow log on the network interface. Once you create the flow log, you can view its data in CloudWatch Logs.

Attaching Multiple Network Interfaces to an Instance

You can attach multiple network interfaces to an instance in the following use cases.

Create a Low Budget High Availability Solution You can keep a hot-standby instance running and preconfigure it for the same role as the primary instance. When the primary instance fails, you attach its secondary network interface (remember that you can’t detach eth0) to the hot-standby instance. The interface keeps its private IP addresses, EIPs, and MAC address when you attach it to the standby instance, so network traffic starts flowing to the standby instance.

Create a Management Network Multiple network interfaces help support a management network. You set up the primary network interface (eth0) to handle public traffic and a secondary network interface (eth1) to take care of management traffic.

You configure different access controls for the primary network (public traffic) and the secondary network (management traffic) interfaces. Typically, the access to the public network is less restrictive, and the security group that you associate with this interface permits access to the server from the Internet.

The security group associated with the secondary network interface doesn’t face the Internet. It’s a private-facing interface for management purposes. This security group allows SSH access only from a small set of IP addresses, which can be on the Internet, within your own VPC, or reachable through a virtual private gateway.

Create Dual-Home Instances Multiple network interfaces help you manage workloads (and roles) on separate subnets. Suppose you have two web servers that connect to a middle tier where an application server runs. In this scenario, you can also configure the application server as dual-homed to a backend network (subnet) such as the database server’s network. Each of the dual-homed instances can receive requests on the front end and send their requests to the servers running in the backend network.

Creating, Attaching, and Detaching a Network Interface

You can create a network interface from the command line with the ec2 create-network-interface AWS CLI command or create it from the Amazon EC2 console. You can create a network interface in a subnet, but you can’t move it to a different subnet.

You can attach a network interface to an instance while the instance is running or while it’s stopped, and you can also attach network interfaces (both primary and secondary) when you launch an instance. The network interface and the instance must be in the same AZ.

You can’t detach the primary interface (eth0). However, you can detach a secondary (ethN) network interface while the instance is running or is stopped.

Elastic IP Addresses

An EIP is a static public IPv4 address, reachable from the Internet, that you can associate with an instance or network interface in a VPC. An EIP is associated with your account, while a public IP address is assigned to a specific instance. If you reboot an instance, neither its public IP address nor an associated EIP is removed. If you stop an instance, however, AWS releases its public IP address, while the EIP remains associated with the instance. If an instance fails, you can quickly remap its EIP to another EC2 instance in your VPC.

Here’s what you need to remember about an EIP:

• You can associate an EIP address to an EC2 instance or a network interface.

• You can move an EIP from one EC2 instance to another.

• If you associate the EIP with a network interface, you can move all the attributes for the interface from one EC2 instance to another.

• When you associate an EIP with an instance that has a public IPv4 address, the public DNS name of the instance changes and becomes the same as that for the EIP.

• An EIP is a regional entity.

• An EIP is accessed through a VPC’s Internet gateway.

• You must first allocate an EIP for use in your VPC, before you can associate it with an instance or a network interface. (Exercise 6-1 shows how to perform both tasks.)

• If you associate an EIP to an instance or its primary network interface, the instance’s public IP address, if you’ve assigned one, goes back to Amazon’s pool of public IPv4 addresses.

• You can assign an EIP only to one instance at a time.

• You associate an EIP with an EC2 instance by configuring the network interface that you’ve attached to that instance.

• When you move an EIP from one instance to another, the new instance can be in the same VPC or in a different VPC in the same region.

• You can disassociate an EIP from an instance or network interface and re-associate it to a different instance or interface. An EIP you’ve disassociated from a resource remains allocated to you until you release it.

• You can have a maximum of five EIP addresses per region, which is a soft limit that you can increase by submitting a request to AWS. Use a NAT device to conserve your limited EIPs. AWS won’t charge you for one EIP associated with a running instance but will charge you for any additional EIPs you’ve associated with that instance.

Route Tables

A VPC that you create will have one or more subnets. Each of those subnets must be associated with a route table, which contains a set of rules (routes) that determine where network traffic is directed in a subnet.

Main and Custom Route Tables

Each VPC comes with a default route table called the main route table. If you don’t associate a subnet explicitly with any custom route table of your own, the subnet is implicitly associated with the main route table.

The main route table for a VPC with the CIDR range of 10.3.0.0/16 looks like the following, with a single route:

Destination    Target
10.3.0.0/16    local

This route table specifies that all IP packets with a destination address in the 10.3.0.0/16 network (addresses between 10.3.0.0 and 10.3.255.255) are delivered within this VPC. Suppose you create a private subnet with the CIDR range 10.3.55.0/24 in this VPC. Unless you associate it with a custom route table, this subnet is implicitly associated with the main route table, so it uses the same local route for 10.3.0.0/16.

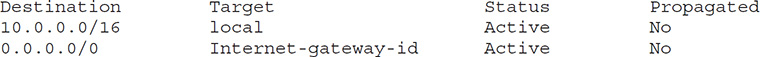

By default, the main route table doesn’t contain a route to an Internet gateway. You can modify the main route table and add other routes, as shown here with two routes, the second one added by you:

As with the first example, the first route delivers all network traffic bound for the 10.155.0.0/16 network within this VPC. The second route sends all other network traffic, which isn’t bound for this network, to the Internet gateway.

You can make a private subnet a public one by adding a route to an Internet gateway. You must first create an Internet gateway and then add a route with a destination of 0.0.0.0/0 for IPv4 traffic or ::/0 for IPv6 traffic, along with a target of the Internet gateway ID (igw-xxxxxxxxx).
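When more than one route matches a packet, as with the local route and a 0.0.0.0/0 route, the route table uses the most specific (longest-prefix) match. Here’s a minimal Python sketch of that lookup; pick_route and the two example tables are hypothetical, and igw-xxxxxxxxx is the same placeholder gateway ID used above.

```python
import ipaddress


def pick_route(routes, destination):
    """Return the target of the most specific route that matches destination.

    routes: list of (destination CIDR, target) pairs, as in a VPC route table.
    Returns None when no route matches, in which case the packet is dropped.
    """
    addr = ipaddress.ip_address(destination)
    matches = [(ipaddress.ip_network(cidr), target)
               for cidr, target in routes
               if addr in ipaddress.ip_network(cidr)]
    if not matches:
        return None
    # Longest prefix wins: /16 beats /0 for addresses inside the VPC.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


main_table = [("10.3.0.0/16", "local")]
public_table = main_table + [("0.0.0.0/0", "igw-xxxxxxxxx")]
```

A packet for 10.3.55.20 stays local in both tables. A packet for 203.0.113.10 matches nothing in main_table, but once the 0.0.0.0/0 route is added, it goes to the Internet gateway.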

You can explicitly associate a subnet with the main route table, although it’s not common; you might do this temporarily, for example, when replacing the main route table. Custom route tables that you create help you explicitly control how a subnet routes outbound traffic.

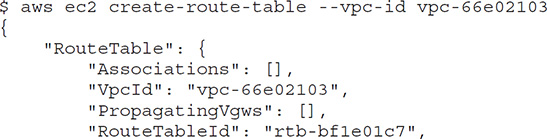

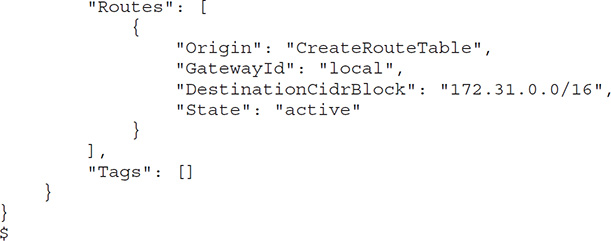

Creating a Route Table

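You can create a route table with the aws ec2 create-route-table command, in which you must specify the VPC with the --vpc-id option. Here’s an example, where vpc-xxxxxxxx stands for your VPC’s ID:

$ aws ec2 create-route-table --vpc-id vpc-xxxxxxxx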

Connecting to the Internet or Other External Networks from a VPC

You can create a VPC that has no connectivity to the Internet or to your other AWS services or networks, but that wouldn’t be of much use to you. Instead, you can set up external connectivity from a VPC through various means:

• Internet gateway This VPC component enables communications between the EC2 instances in a VPC and the Internet. It is a redundant and highly available component that provides a target in the route tables for traffic to or from the Internet, and it performs NAT for instances with public IPv4 addresses.

• NAT gateway This gateway enables EC2 instances in a private subnet to connect to the Internet and to other AWS services. The big difference between the NAT and Internet gateways is that the NAT gateway prevents the Internet from initiating connections to your EC2 instances.

• VPN gateway This gateway enables VPN connections through the Internet to your on-premises data centers.

• VPC endpoints VPC endpoints enable a VPC to connect to services such as Amazon Simple Storage Service (S3) that don’t live inside your VPC (remember that EC2 instances live inside the VPC).

• VPC peering connections These enable connectivity between two VPCs, whether the other VPC is in your own AWS account or in another AWS account.

Enabling Internet Access for an EC2 Instance Inside a Subnet

For an instance inside a VPC subnet to access the Internet, you must first attach an Internet gateway to the VPC. Then you must ensure that the subnet’s route table points to the Internet gateway. The scope of the route can be all destinations not explicitly known to the route table, such as 0.0.0.0/0 for IPv4 addresses or ::/0 for IPv6 IP addresses. You can also narrow the route to a specific range of IP addresses, such as the EIP of EC2 instances outside the VPC.

Each instance in the subnet must have a globally unique IP address, such as a public IPv4 address, an EIP that’s associated with a private IPv4 address on the instance, or an IPv6 address. The Internet gateway provides the NAT on behalf of the instance. The reply address field of the traffic going to the Internet is thus set to the instance’s public IPv4 address or EIP instead of its private IP address. Similarly, the destination address of traffic sent to the public IPv4 address or EIP of the instance is translated by NAT to the instance’s private IPv4 address to deliver the inbound Internet traffic to the VPC.
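The translation the Internet gateway performs is a one-to-one mapping between an instance’s private IPv4 address and its public IPv4 address or EIP. Here’s a toy Python model of it. The table reuses the 10.0.0.8 and 198.51.100.4 addresses from the single public subnet scenario at the end of this section; the function names and the (source, destination) packet tuples are hypothetical simplifications, not how the gateway is actually implemented.

```python
# Hypothetical one-to-one mapping kept on behalf of each instance:
# private IPv4 address -> public IPv4 address (or EIP).
NAT_TABLE = {"10.0.0.8": "198.51.100.4"}
REVERSE_TABLE = {public: private for private, public in NAT_TABLE.items()}


def to_internet(packet):
    """Rewrite the source (reply) address of an outbound (source, destination) packet.

    Returns None if the instance has no public address, since such an
    instance can't reach the Internet through the Internet gateway.
    """
    source, destination = packet
    public = NAT_TABLE.get(source)
    return None if public is None else (public, destination)


def from_internet(packet):
    """Rewrite the destination of an inbound packet to the instance's private address."""
    source, destination = packet
    private = REVERSE_TABLE.get(destination)
    return None if private is None else (source, private)
```

An outbound packet from 10.0.0.8 leaves with 198.51.100.4 as its source, and a reply addressed to 198.51.100.4 is delivered to 10.0.0.8; an instance without a public address (10.0.0.9 here) gets no translation.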

Creating a VPC

Although AWS does offer you a default VPC, it’s a good practice to create your own VPC. You can create a VPC through the VPC Wizard in the Amazon VPC console. When you use the VPC Wizard to create your VPC (follow the steps in Exercise 6-3), the wizard does the following for you:

• It creates a VPC with a /16 IPv4 CIDR block.

• It associates a /56 IPv6 CIDR block with the VPC.

• It attaches an Internet gateway to the VPC.

• It creates a subnet with a /24 IPv4 CIDR block and a /64 IPv6 CIDR block in your VPC.

• It creates a route table and associates it with the subnet it creates, to facilitate network traffic between that subnet and the Internet gateway.

From the AWS CLI, you can execute the create-vpc command to create a VPC:

$ aws ec2 create-vpc --cidr-block 10.0.0.0/16

To create a VPC manually with a single public subnet that supports Internet access through an Internet gateway, follow these steps:

1. Create the subnet. (Exercise 6-3 shows how to do this.)

2. Create an Internet gateway and attach it to the VPC. (Private subnets instead reach the Internet through a NAT gateway placed in a public subnet; Exercise 6-7 shows how to create a NAT gateway.)

3. Create a custom route table and associate that with the subnet, since, by default, the main route table doesn’t contain a route to an Internet gateway. (Exercise 6-8 shows how to create a route for a NAT gateway.)

4. Update the security group rules.

Common VPC Scenarios

I described the generic process for creating a VPC, but you can create a VPC under various scenarios, with different configurations for your subnets, route table, and other VPC components. Let’s run through two basic VPC configuration scenarios.

A Simple Single Public Subnet Scenario

This simple VPC has a single public subnet and an Internet gateway that enables Internet communication. AWS recommends this type of configuration for simple public-facing web applications, such as a small web site or a blog.

In this simple scenario, the VPC’s configuration can have the following components:

• A VPC with a /16 IPv4 CIDR block (remember, /16 is the largest CIDR block you can assign to a VPC; it supports 65,536 private IPv4 addresses).

• A single subnet, which can be as large as /16 but is usually something like a /24 IPv4 CIDR block (for example, 10.0.0.0/24), providing 256 private IPv4 addresses.

• An Internet gateway to connect this VPC to the Internet as well as to other AWS services. An Internet gateway isn’t attached to a VPC by default; you must attach the gateway to your VPC and ensure that the subnet’s route table points to it.

• Instances running in this subnet are assigned private IPv4 addresses from the subnet’s range, 10.0.0.0–10.0.0.255. For example, you can assign an instance the private IP address 10.0.0.8, which enables it to communicate with other instances in the VPC. You can also assign it an EIP such as 198.51.100.4, a public IPv4 address that enables the instance to be reached from the Internet.

• The custom route table associated with the subnet, which enables instances in this subnet to communicate with other instances running in your VPC, and to communicate directly over the Internet.
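The address arithmetic behind these bullets can be checked with Python’s standard ipaddress module. This is only a sketch using the example CIDR blocks above; the one AWS-specific detail it adds is that AWS reserves five addresses in every subnet (the first four and the last), which is why a /24 subnet gives you 251 assignable addresses rather than 256.

```python
import ipaddress

vpc = ipaddress.ip_network("10.0.0.0/16")
subnet = ipaddress.ip_network("10.0.0.0/24")

print(vpc.num_addresses)       # 65536
print(subnet.num_addresses)    # 256
print(subnet.subnet_of(vpc))   # True: the subnet fits inside the VPC's CIDR block
print(subnet[0], subnet[-1])   # 10.0.0.0 10.0.0.255

# AWS reserves the first four addresses and the last address in every subnet,
# so these can't be assigned to instances.
reserved = {subnet[i] for i in range(4)} | {subnet[-1]}
usable = subnet.num_addresses - len(reserved)
print(usable)                                      # 251
print(ipaddress.ip_address("10.0.0.8") in subnet)  # True

# A /16 VPC has room for 256 non-overlapping /24 subnets.
print(len(list(vpc.subnets(new_prefix=24))))       # 256
```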

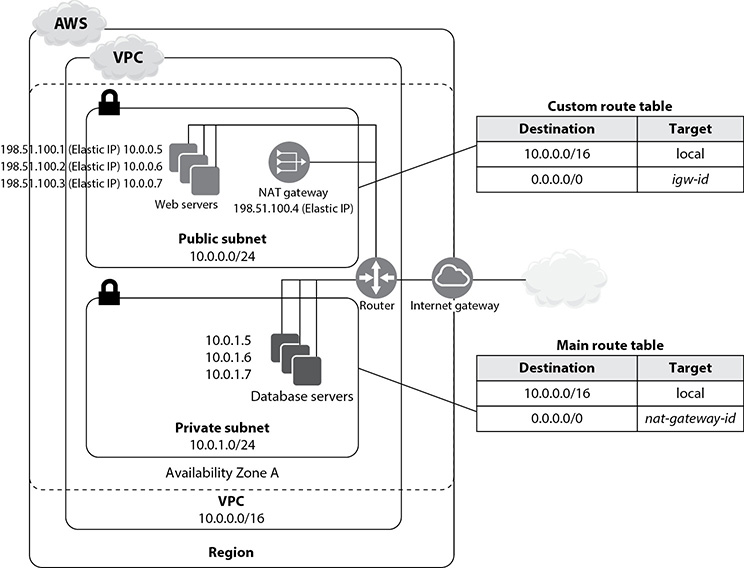

A VPC with a Public and a Private Subnet

Another common scenario is a VPC with both public and private subnets. AWS recommends this configuration when you want to run public-facing web applications while also running backend servers (such as database servers) without any public access.

In this scenario, instances that run in the public subnet can directly send outbound traffic to the Internet, and instances in the private subnet can access the Internet only via a NAT gateway. The NAT gateway is located in the public subnet.

Although the database servers can access the Internet (for things such as software and security updates) via the NAT gateway, the Internet won’t be able to initiate inbound connections to the database servers.

A typical configuration of two subnets, one private and the other public, includes the following entities:

• A VPC with a size /16 IPv4 CIDR block such as 10.0.0.0/16

• A public subnet, such as 10.0.0.0/24, associated with a route table that has a route to an Internet gateway

• A private subnet, such as 10.0.1.0/24

• An Internet gateway to connect the VPC to the Internet as well as to other AWS services

• Instances in this VPC may have different connectivity capabilities:

• Instances with private IPv4 addresses in the subnet ranges, such as 10.0.0.4 and 10.0.1.5, can communicate with each other within the VPC.

• Instances in the public subnet can have an EIP address, such as 198.51.100.1, that enables them to be reached from the Internet. You can also assign these instances a public IP address when you launch them, instead of assigning them an EIP.

• Instances in the private subnet that run in the backend won’t have any public IP addresses since they don’t need to accept traffic from the Internet. However, these instances can send requests to the Internet via the NAT gateway (located in the public subnet).

• A NAT gateway in the public subnet with an EIP, which allows instances in the private subnet to send requests to the Internet.

The public subnet has a custom route table associated with it. This route table contains two entries: the first enables instances in the subnet to communicate with other instances in the VPC, and the second enables the instances to communicate directly with the Internet (over IPv4). The route table looks like this:

• Destination 10.0.0.0/16 and Target local

• Destination 0.0.0.0/0 and Target igw-xxxxxxxx (the Internet gateway)

The private subnet has the main route table associated with it. This route table also contains two entries: the first enables instances in the subnet to communicate with the other instances in the VPC, and the second enables the instances to communicate with the Internet via the NAT gateway:

• Destination 10.0.0.0/16 and Target local

• Destination 0.0.0.0/0 and Target nat-xxxxxxxx (the NAT gateway)

If a subnet doesn’t have a route to the Internet gateway but instead routes its traffic to a virtual private gateway for a site-to-site (S2S) VPN connection, the subnet is a VPN-only subnet. Only IPv4 traffic is currently supported over an S2S VPN connection.
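Whether a subnet is public, private, or VPN-only comes down to where its route table sends traffic. The Python sketch below models a route table as a dictionary of destination CIDR to target ID; the igw-, vgw-, and nat- prefixes mirror AWS resource ID prefixes, but the specific IDs and the classify_subnet helper are hypothetical, and the rules are simplified to the cases described in this section.

```python
def classify_subnet(routes: dict) -> str:
    """Classify a subnet from its route table (destination CIDR -> target ID).

    Simplified rules, following the text:
      * any route to an Internet gateway (igw-...)            -> public
      * default route to a virtual private gateway (vgw-...)  -> VPN-only
      * anything else (local only, or via a NAT gateway)      -> private
    """
    if any(target.startswith("igw-") for target in routes.values()):
        return "public"
    if routes.get("0.0.0.0/0", "").startswith("vgw-"):
        return "vpn-only"
    return "private"


# Hypothetical route tables; the gateway IDs are placeholders.
public_rt = {"10.0.0.0/16": "local", "0.0.0.0/0": "igw-0abc1234"}
private_rt = {"10.0.0.0/16": "local", "0.0.0.0/0": "nat-0def5678"}
vpn_rt = {"10.0.0.0/16": "local", "0.0.0.0/0": "vgw-0123abcd"}

print(classify_subnet(public_rt))   # public
print(classify_subnet(private_rt))  # private
print(classify_subnet(vpn_rt))      # vpn-only
```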

Securing Your VPC

Let’s say, for example, that one of the instances in a subnet is running a database and another instance in another subnet is running a web application that needs to talk to the database. To ensure that the database and the web application can talk to each other, you must ensure that you configure the VPC’s security groups so that they allow the application host to talk to the database on the correct protocol. You must also configure a NACL that allows communication between the two subnets.

Amazon VPC offers three main features to help secure your VPC: security groups, NACLs, and VPC flow logs. Flow logs monitor the network traffic going to and coming from your VPC rather than acting as a full-fledged security feature; security groups and network ACLs are the features that actually filter traffic to enhance your VPC’s security.

Security Groups

A security group serves as a virtual firewall to control the traffic for one or more EC2 instances. You can specify one or more security groups when you launch an instance; if you don’t, the instance is associated with the VPC’s default security group.

Security groups provide strong security for the instances, but NACLs (discussed next) offer an additional layer of security at the subnet level. The EC2 instances in your VPC don’t have to belong to the same security group; they can each belong to a separate security group.

Security Group Rules You add rules to each of your security groups to control traffic into and out of the instances. One set of rules controls inbound traffic, and another set controls outbound traffic:

• Inbound rule Consists of the traffic source and the destination port (or port range). The source may be another security group, a single IP address, or an IPv4 or IPv6 CIDR block.

• Outbound rule Contains the traffic destination and the destination port (or port range). The destination may be another security group, a single IP address, or an IPv4 or IPv6 CIDR block.

A security group rule also contains the following entities:

• Protocol Any protocol with a standard protocol number

• An optional description of the rule

In general, firewall rules can either allow traffic or deny it. Security groups, however, support only allow rules; you can’t create deny rules in a security group. The rules you add depend on the role the EC2 instance plays. A database server running MySQL, for example, needs a rule that allows inbound MySQL traffic.
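Because security groups contain only allow rules, the order of the rules doesn’t matter: traffic is allowed if any rule matches and is implicitly denied otherwise. The Python sketch below illustrates that evaluation under simplifying assumptions; the Rule record and the web server rules are hypothetical, and it doesn’t model rules that name another security group as the source.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    protocol: str   # "tcp", "udp", "icmp", or "-1" meaning all protocols
    from_port: int
    to_port: int
    cidr: str       # traffic source for an inbound rule


# Hypothetical inbound rules for a web server that accepts HTTP/HTTPS.
WEB_INBOUND = [
    Rule("tcp", 80, 80, "0.0.0.0/0"),
    Rule("tcp", 80, 80, "::/0"),
    Rule("tcp", 443, 443, "0.0.0.0/0"),
    Rule("tcp", 443, 443, "::/0"),
]


def inbound_allowed(rules, protocol: str, port: int, source_ip: str) -> bool:
    """Allow the traffic if ANY rule matches; there are no deny rules to check."""
    addr = ipaddress.ip_address(source_ip)
    for rule in rules:
        if (rule.protocol in (protocol, "-1")
                and rule.from_port <= port <= rule.to_port
                and addr in ipaddress.ip_network(rule.cidr)):
            return True
    return False  # nothing allowed it, so it's implicitly denied


print(inbound_allowed(WEB_INBOUND, "tcp", 443, "203.0.113.7"))  # True
print(inbound_allowed(WEB_INBOUND, "tcp", 80, "2001:db8::1"))   # True
print(inbound_allowed(WEB_INBOUND, "tcp", 22, "203.0.113.7"))   # False
```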

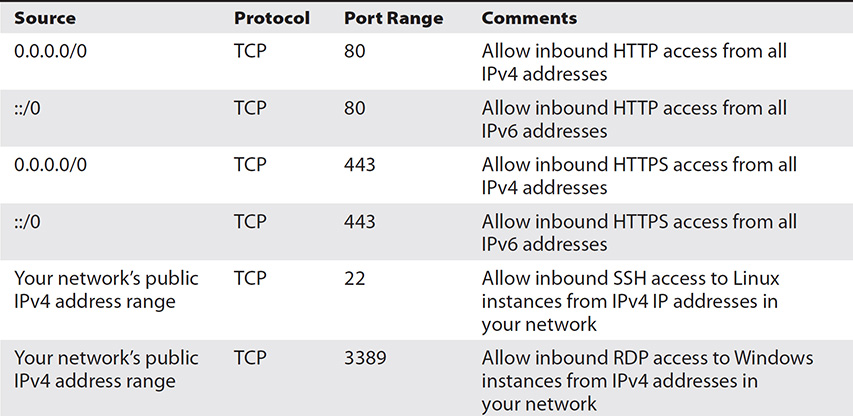

The security group rules for a web server look different from those for a database server. A web server usually needs to receive HTTP/HTTPS traffic from all IPv4/IPv6 addresses, so its inbound rules would look something like those shown in Table 6-2.

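Table 6-2 A Security Group’s Inbound Rules

| Source | Protocol | Port Range | Comments |
|---|---|---|---|
| 0.0.0.0/0 | TCP | 80 | Allow inbound HTTP access from all IPv4 addresses |
| ::/0 | TCP | 80 | Allow inbound HTTP access from all IPv6 addresses |
| 0.0.0.0/0 | TCP | 443 | Allow inbound HTTPS access from all IPv4 addresses |
| ::/0 | TCP | 443 | Allow inbound HTTPS access from all IPv6 addresses |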

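The same web servers also need to send Microsoft SQL Server and MySQL traffic to a database server, so the outbound rules for the security group could look like this:

| Destination | Protocol | Port Range | Comments |
|---|---|---|---|
| ID of the database servers’ security group | TCP | 1433 | Allow outbound Microsoft SQL Server access |
| ID of the database servers’ security group | TCP | 3306 | Allow outbound MySQL access |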

Default Security Group Behavior The default security group has an initial set of rules that enables all instances associated with it to communicate with each other. Instances associated with other security groups, however, can’t communicate with these instances unless you add rules that explicitly allow them to.

Here are a few summary points about a default security group:

• The default security group has an outbound rule that allows all outbound traffic. You can remove this rule, or replace it with outbound rules that allow only specific outbound traffic.

• Apart from the rule that allows traffic from instances in the same group, there are no inbound rules by default. All other inbound traffic is therefore denied until you add appropriate inbound rules to the security group.

• All traffic between instances in a default security group is allowed.

• You can’t delete a default security group, but you may change its rules, just as you can with any other security group.

Security Group Statefulness A security group is stateful. In other words, all responses to requests that an instance sends out are allowed in, irrespective of the inbound security group rules. Similarly, responses to all permitted inbound requests are also allowed to flow out, irrespective of the security group’s outbound rules.
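One way to see what statefulness means is to model a group that has no inbound rules at all but still lets responses back in. The class below is a toy sketch, not AWS’s implementation, and it rests on simplifying assumptions: a flow is keyed only by the local port and the remote address and port, and outbound traffic is assumed to be allowed. The class and method names are hypothetical.

```python
class StatefulGroup:
    """Toy model of security group statefulness; not AWS's implementation."""

    def __init__(self, inbound_ports):
        self.inbound_ports = set(inbound_ports)  # ports opened by inbound rules
        self.tracked = set()                     # flows the instance started

    def send(self, local_port, remote):
        """Record an outbound request (assumes the outbound rules allow it)."""
        self.tracked.add((local_port, remote))

    def receive(self, local_port, remote):
        """Responses to tracked flows are allowed, whatever the inbound rules say."""
        if (local_port, remote) in self.tracked:
            return True
        return local_port in self.inbound_ports


sg = StatefulGroup(inbound_ports=[])   # no inbound rules at all
sg.send(50123, ("203.0.113.10", 443))  # the instance calls out to an HTTPS server
print(sg.receive(50123, ("203.0.113.10", 443)))  # True: the response is let back in
print(sg.receive(50123, ("198.51.100.9", 443)))  # False: unsolicited traffic is dropped
```

A stateless filter such as a NACL, by contrast, checks the response against its rules like any other packet.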

Creating Security Groups Every VPC comes with a default security group, but you need to create your own custom security groups to control the traffic into and out of the various instances that you run in your VPC. You can create a security group from the AWS CLI by executing the create-security-group command:

$ aws ec2 create-security-group --group-name MySecurityGroup --description "My security group" --vpc-id vpc-1a2b3c4d

Run the describe-security-groups command to describe one or more security groups, as shown in this example:

$ aws ec2 describe-security-groups --group-ids sg-123008a4

This command displays information about the security group with the ID sg-123008a4.

Network Access Control Lists

NACLs help you control inbound and outbound traffic for a subnet. While a security group controls traffic going to and from an instance, a NACL offers an additional layer of protection at the subnet level: the rules that you configure for a NACL apply to the entire subnet. Every VPC comes with a default NACL that allows all inbound and outbound traffic. Each subnet must be associated with a NACL, and if you don’t explicitly associate a subnet with one, the subnet is associated with the default NACL. You can add or remove rules in the default NACL, or you can create a custom NACL and associate the subnet with it instead. A custom NACL denies all inbound and outbound traffic until you add rules to it. You can associate a NACL with multiple subnets, but the opposite isn’t true: a subnet can be associated with only one NACL at a time.

You can divide your VPC into logical areas by configuring a NACL that restricts which subnets in your VPC can talk to each other—for example, if you don’t want development team members working in a development subnet to impact production instances that you’re running in a separate production subnet.

Security groups and NACLs work in tandem. If an instance running in Subnet A can’t ping an instance running in Subnet B, there are two likely reasons (ping uses ICMP):

• The NACL for Subnet B doesn’t allow the inbound ICMP request or the outbound ICMP reply. Because NACLs are stateless, both directions need a rule.

• The security group attached to the instance in Subnet B doesn’t allow inbound ICMP traffic.

NACL Rules As with security groups, NACLs have separate inbound and outbound rules. Unlike security group rules, however, NACL rules can either allow or deny traffic:

• Inbound rule Consists of the traffic source and the destination port (or port range). The source is an IPv4 or IPv6 CIDR block, which can cover a single IP address; NACL rules can’t reference a security group.

• Outbound rule Contains the traffic destination and the destination port (or port range). The destination is likewise an IPv4 or IPv6 CIDR block.

Unlike security groups, NACLs are stateless: responses to allowed inbound traffic are subject to the NACL’s outbound rules, and responses to allowed outbound traffic are subject to its inbound rules.

A NACL rule has the following components:

• Rule number Each rule has a number, and the rules are evaluated starting with the lowest numbered rule. As soon as a numbered rule matches the traffic (network packet), the rule is applied.

• Protocol Any protocol with a standard protocol number can be used.

• A choice of ALLOW or DENY for the specified traffic Suppose you configure an ACL for Subnet A that specifies two rules: Rule #100 explicitly denies TCP traffic on port 21 from 0.0.0.0/0 and Rule #110 explicitly allows TCP traffic on the same port from 0.0.0.0/0. Since rules are evaluated starting from the lowest numbered rule, Rule #100 is evaluated first. Rule #110 is ignored, since there’s already a match with a lower numbered rule (#100). The explicit deny in Rule #100 overrides the explicit allow in Rule #110, and traffic is disallowed.

If you need to block specific IP addresses from accessing your VPC, you can modify the NACLs associated with the VPC’s public subnets. Create an inbound rule that denies traffic from those addresses, so that their requests are stopped at the subnet level, and give this DENY rule a lower number than any rule that would otherwise allow the traffic. So, if you suspect that a web server is under attack, such as a Denial of Service (DoS) attack, and you identify a specific IP address or set of IP addresses as the source, you can place a DENY rule in the NACL to block all traffic coming from those addresses.
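The lowest-number-first evaluation, and the use of a DENY rule to block a suspect address, can be modeled in a few lines of Python. This is a sketch under simplifying assumptions: the NaclRule record, rule numbers, and addresses are hypothetical, only inbound rules are modeled, and the final return stands in for the catch-all rule (shown as * in the console) that denies anything no numbered rule matched.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class NaclRule:
    number: int
    protocol: str   # "tcp", "udp", "icmp", or "-1" meaning all protocols
    ports: range    # destination ports the rule covers
    cidr: str       # traffic source (a CIDR block, never a security group)
    action: str     # "ALLOW" or "DENY"


def evaluate(rules, protocol: str, port: int, source_ip: str) -> str:
    """Return the action of the lowest-numbered rule that matches the packet."""
    addr = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.number):
        if (rule.protocol in (protocol, "-1")
                and port in rule.ports
                and addr in ipaddress.ip_network(rule.cidr)):
            return rule.action  # first match wins; higher-numbered rules are skipped
    return "DENY"  # the catch-all * rule


ALL_PORTS = range(0, 65536)
rules = [
    NaclRule(100, "tcp", range(21, 22), "0.0.0.0/0", "DENY"),
    NaclRule(110, "tcp", range(21, 22), "0.0.0.0/0", "ALLOW"),
    NaclRule(120, "tcp", range(80, 81), "0.0.0.0/0", "ALLOW"),
]
print(evaluate(rules, "tcp", 21, "198.51.100.9"))  # DENY: rule 100 beats rule 110
print(evaluate(rules, "tcp", 80, "198.51.100.9"))  # ALLOW: rule 120

# Block a suspected attacker with a DENY numbered below the ALLOW rules.
rules.append(NaclRule(90, "-1", ALL_PORTS, "198.51.100.9/32", "DENY"))
print(evaluate(rules, "tcp", 80, "198.51.100.9"))  # DENY: rule 90
print(evaluate(rules, "tcp", 80, "203.0.113.7"))   # ALLOW: rule 120
```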

VPC Flow Logs

VPC Flow Logs enable you to capture information about the IP traffic going to and from the network interfaces in your VPC. You can publish the flow log data to Amazon S3 or to CloudWatch Logs, where you can view it.

VPC Flow Logs help you secure your instances by monitoring your network traffic. They also help you troubleshoot certain issues, such as network traffic failing to reach an instance because of overly restrictive security group rules.

You can create a flow log for a VPC, a subnet, or a network interface. AWS monitors all network interfaces in a VPC or subnet when you create a flow log at the subnet or VPC level. Each network interface traced by flow logs has a separate log stream, which consists of log records that store the log events pertaining to the network traffic for a specific network interface.

When you create a flow log, you specify the following key entities:

• Resource VPC, subnet, or network interface

• Traffic type Accepted, rejected, or all traffic

• Destination Where the records are published: Amazon S3 or CloudWatch Logs
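As an example of the troubleshooting use mentioned earlier, the following Python sketch parses records in the default flow log format (version 2, whose 14 space-separated fields are listed in FIELDS) and picks out rejected SSH attempts. The sample records, account ID, and interface ID are made up, and a flow log with a custom format would need a different field list.

```python
# Field order of the default (version 2) VPC flow log record format.
FIELDS = [
    "version", "account-id", "interface-id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log-status",
]


def parse_record(line: str) -> dict:
    """Split one space-separated flow log record into a field -> value dict."""
    values = line.split()
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(values)}")
    return dict(zip(FIELDS, values))


def rejected_ssh(lines):
    """Yield (source, destination) for rejected TCP (protocol 6) traffic to port 22."""
    for line in lines:
        rec = parse_record(line)
        if rec["action"] == "REJECT" and rec["protocol"] == "6" and rec["dstport"] == "22":
            yield rec["srcaddr"], rec["dstaddr"]


# Made-up records; the account ID and network interface ID are placeholders.
sample = [
    "2 123456789012 eni-0a1b2c3d 203.0.113.12 10.0.0.8 49152 22 6 10 840 1600000000 1600000060 REJECT OK",
    "2 123456789012 eni-0a1b2c3d 198.51.100.7 10.0.0.8 49153 443 6 20 4249 1600000000 1600000060 ACCEPT OK",
]
print(parse_record(sample[1])["action"])  # ACCEPT
print(list(rejected_ssh(sample)))         # [('203.0.113.12', '10.0.0.8')]
```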

Although you can create flow logs for network interfaces created by AWS services such as Amazon RDS, Elastic Load Balancing (ELB), and Amazon ElastiCache, you cannot do so from the consoles or APIs that belong to these services. You must create the flow logs for these network interfaces from the Amazon EC2 console or the Amazon EC2 API. In addition, you cannot create the log streams from the CloudWatch Logs console or API.

The capture window for a flow log record is the period during which the flow logs service aggregates data before it publishes the flow log records to S3 or CloudWatch Logs. The capture window is about 10 minutes, but it can take up to 15 minutes. This means that you can’t rely on flow logs to capture log events for your network interfaces in real time.

You cannot enable flow logs for VPCs that are peers of your VPC unless the other VPCs are also in your AWS account. Flow logs don’t capture all IP traffic. For example, they don’t log DHCP traffic and traffic generated by EC2 instances when they contact the Amazon DNS service.

Amazon VPC Optional Components

Amazon VPC offers several optional components that you can use for various purposes:

• NAT gateways NAT gateways enable instances in private subnets to connect to the Internet and other services but prevent the Internet from initiating connections with the instances in the subnet.

• DHCP options sets The Dynamic Host Configuration Protocol is a standard for passing configuration parameters to hosts on a TCP/IP network. The parameters include the domain name, domain name server, and the netbios-node-type.

• VPN connections These are private connections that you establish between your Amazon VPC and remote networks, such as an on-premises network.

• VPC peering VPC peering is a networking connection between two VPCs that helps you transmit traffic between the VPCs with private IPv4 or IPv6 addresses. The two VPCs communicate as if they were in the same network.

We’ll review these optional VPC components in the following sections.

NAT Instances and NAT Gateways

You use a NAT device when you want to let instances in a private subnet connect to the Internet or to other AWS services, but block the Internet from initiating connections to those instances. You also use the NAT device to forward traffic from instances that live in a private subnet. NAT forwards the traffic from the instances to the Internet and returns the response back to the instances. When the NAT device forwards the traffic from the instances to the Internet, it replaces the instance’s IP address with the NAT device’s address. Similarly, when the NAT device sends response traffic to these instances, it translates the address back to the instance’s private IP address.

How a NAT Gateway Works To create a NAT gateway, you must specify a public subnet and an EIP; the NAT gateway lives in that public subnet. You can associate only one EIP with a NAT gateway. After you create the NAT gateway, you must update the route tables of your private subnets (there could be several) to direct all Internet-bound traffic to the NAT gateway, allowing the instances in those subnets to talk to the Internet.

For availability, AWS implements redundancy for each NAT gateway within the AZ in which you create it. If that AZ goes down, however, resources in other AZs that use this NAT gateway lose their Internet access. To keep your operations running despite an AZ failure, create a NAT gateway in each AZ and configure the private subnets in each AZ to use the NAT gateway in their own AZ.
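Following that advice means giving each private subnet a default route to the NAT gateway in its own AZ. The Python sketch below does that bookkeeping for a hypothetical layout (the subnet and NAT gateway IDs are placeholders) and reports any subnet whose AZ has no NAT gateway, since that subnet would have to depend on another AZ’s gateway.

```python
# Hypothetical layout: NAT gateways by AZ, and the AZ of each private subnet.
nat_by_az = {"us-east-1a": "nat-aaa111", "us-east-1b": "nat-bbb222"}
private_subnets = {
    "subnet-1": "us-east-1a",
    "subnet-2": "us-east-1b",
    "subnet-3": "us-east-1c",
}


def plan_default_routes(subnets: dict, nats: dict):
    """Route each private subnet's 0.0.0.0/0 traffic to the NAT gateway in its own AZ."""
    routes, missing = {}, []
    for subnet, az in subnets.items():
        if az in nats:
            routes[subnet] = {"0.0.0.0/0": nats[az]}
        else:
            missing.append(subnet)  # no NAT gateway in this AZ yet
    return routes, missing


routes, missing = plan_default_routes(private_subnets, nat_by_az)
print(routes["subnet-2"])  # {'0.0.0.0/0': 'nat-bbb222'}
print(missing)             # ['subnet-3']
```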

The NACL associated with the subnet in which the NAT gateway is located applies to the NAT gateway’s traffic, so you can use network ACLs to control traffic to and from the NAT gateway.

Let’s say you’re running a set of EC2 instances in a private subnet such as 10.0.1.0/24. You’ve also set up a NAT instance in your public subnet. The EC2 instances running in the private subnet need to download their updates from the Internet via HTTPS. You must add an incoming rule to the security group for the NAT instance that allows incoming requests from Source 10.0.1.0/24 on port 443.

VPC Endpoints, VPC Peering, VPN, AWS Direct Connect, and NAT Gateways You won’t be able to use a NAT gateway to send traffic over VPC endpoints, VPN connections, Direct Connect, or VPC peering connections. Instead, the instances in the private subnet must use the subnet’s route table to route traffic directly to these resources. Similarly, resources on the other side of a VPC peering connection, a VPN connection, or Direct Connect can’t route traffic to a NAT gateway.

Here’s an example of the routes in a private subnet’s route table:

• Internet-bound traffic (0.0.0.0/0) is routed to a NAT gateway.

• Traffic for 10.25.0.0/16, which is more specific than 0.0.0.0/0, is routed to a VPC peering connection.

• The instance’s Amazon S3-bound traffic matches the prefix list pl-xxxxxxxx (the IP address ranges for Amazon S3) and is routed to a VPC endpoint.
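When several routes match a destination, the route table uses the most specific one, which is why the 10.25.0.0/16 route wins over 0.0.0.0/0 here. Below is a Python sketch of that longest-prefix match. The target names are hypothetical, and because a prefix list such as pl-xxxxxxxx isn’t a single CIDR block, the sketch uses the documentation range 192.0.2.0/24 as a stand-in; real S3 address ranges differ.

```python
import ipaddress

# Hypothetical private-subnet route table (destination -> target).
# 192.0.2.0/24 is only a stand-in for the S3 prefix list.
ROUTES = {
    "10.0.0.0/16": "local",
    "0.0.0.0/0": "nat-gateway",
    "10.25.0.0/16": "peering-connection",
    "192.0.2.0/24": "s3-vpc-endpoint",
}


def route_for(destination: str) -> str:
    """Return the target of the most specific route that contains the destination."""
    addr = ipaddress.ip_address(destination)
    matches = [ipaddress.ip_network(cidr) for cidr in ROUTES
               if addr in ipaddress.ip_network(cidr)]
    best = max(matches, key=lambda net: net.prefixlen)  # longest prefix wins
    return ROUTES[str(best)]


print(route_for("10.0.3.7"))     # local
print(route_for("10.25.1.1"))    # peering-connection
print(route_for("192.0.2.50"))   # s3-vpc-endpoint
print(route_for("203.0.113.9"))  # nat-gateway
```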

Creating a NAT Gateway You can use the Amazon VPC console to create a NAT gateway. To create the gateway, you must specify a public subnet and an EIP.

After you create the NAT gateway, you must update the route table of the private subnet (or subnets) so it can direct Internet traffic to the NAT gateway. Figure 6-4 shows the architecture of a VPC with a NAT gateway.

Figure 6-4 Architecture of a VPC with a NAT gateway

DHCP Option Sets

DHCP is a standard for passing configuration information to hosts running on a TCP/IP network. You can configure what are known as DHCP options sets for a VPC. When you launch an EC2 instance into a nondefault (custom) VPC, the instance by default has only a private IPv4 address, not a public IPv4 address. You can change this behavior by requesting a public IP address for the instance when you launch it, or by modifying the subnet’s public IPv4 addressing attribute.

AWS assigns every instance in a nondefault VPC that you create an unresolvable hostname, such as ip-10-0-0-204. AWS also automatically creates a set of DHCP options for a VPC when you create the VPC. The default DHCP options set contains two DHCP options:

• domain-name-servers The AmazonProvidedDNS points to an Amazon DNS server that provides DNS for EC2 instances that want to connect to the Internet over the VPC’s Internet gateway.

• domain-name Domain name for your region.

AWS provides an EC2 instance that you launch into a nondefault VPC with a private DNS hostname. It might also provide a public DNS hostname, if your instance has a public IPv4 address and you’ve also configured the required DNS attributes for your VPC. The two VPC attributes that you must set are

• enableDnsHostnames If you set this attribute to true, the instances in your VPC get public DNS hostnames, but only if you’ve also set the enableDnsSupport attribute to true.

• enableDnsSupport If you set this attribute to true, DNS resolution is supported for your VPC.

When you set both attributes to true, this is what happens:

• Instances in this VPC that have a public IPv4 address receive public DNS hostnames.

• The Amazon-provided DNS server resolves Amazon-provided private DNS hostnames.

If you haven’t set both attributes to true, your instance gets a custom private DNS hostname, if you’ve specified a custom domain name in your DHCP options set.

The default public DNS hostnames for the instances have the following form:

• ec2-public-ipv4-address.compute-1.amazonaws.com (for the us-east-1 region)

• ec2-public-ipv4-address.region.compute.amazonaws.com (for all other regions)

The private hostnames take the following forms:

• ip-private-ipv4-address.ec2.internal (for the us-east-1 region)

• ip-private-ipv4-address.region.compute.internal (for all other regions)
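The hostname rules above are mechanical enough to express as two small Python functions. This sketch encodes only what’s described here: the dots in the IPv4 address become hyphens, us-east-1 uses its own suffixes, and a public hostname requires a public IPv4 address plus both enableDnsSupport and enableDnsHostnames set to true. The function names are hypothetical.

```python
from typing import Optional


def private_dns_name(private_ip: str, region: str) -> str:
    host = "ip-" + private_ip.replace(".", "-")
    if region == "us-east-1":
        return f"{host}.ec2.internal"
    return f"{host}.{region}.compute.internal"


def public_dns_name(public_ip: Optional[str], region: str,
                    enable_dns_support: bool, enable_dns_hostnames: bool) -> Optional[str]:
    """Return None unless the instance has a public IPv4 and both VPC attributes are true."""
    if public_ip is None or not (enable_dns_support and enable_dns_hostnames):
        return None
    host = "ec2-" + public_ip.replace(".", "-")
    if region == "us-east-1":
        return f"{host}.compute-1.amazonaws.com"
    return f"{host}.{region}.compute.amazonaws.com"


print(private_dns_name("10.0.0.204", "us-east-1"))  # ip-10-0-0-204.ec2.internal
print(private_dns_name("10.0.0.204", "eu-west-1"))  # ip-10-0-0-204.eu-west-1.compute.internal
print(public_dns_name("198.51.100.4", "us-east-1", True, True))
# ec2-198-51-100-4.compute-1.amazonaws.com
print(public_dns_name("198.51.100.4", "eu-west-1", True, False))  # None
```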

You can configure the supported options for a DHCP options set for use with your VPC, to do things like assigning your own domain name to your instances. Here are the five DHCP options you can set:

• domain-name-servers You can provide the IP addresses of up to four domain name servers if you don’t want to use the default Amazon DNS server, AmazonProvidedDNS. Suppose, for example, your corporate policies dictate that the DNS names for your internal applications must be resolved internally and not publicly over the Internet. You can create a DHCP options set whose domain name servers include both AmazonProvidedDNS and the IP address of your internal DNS server.

• domain-name If you’re using AmazonProvidedDNS, specify ec2.internal in the us-east-1 region and region.compute.internal in all other regions. If you’re not using AmazonProvidedDNS, you must specify a domain name such as mycompany.com.

• ntp-servers You can specify up to four Network Time Protocol (NTP) servers by providing their IP addresses.

• netbios-name-servers You can specify up to four NetBIOS name servers by supplying their IP addresses.

• netbios-node-type You can specify a NetBIOS node type (1, 2, 4, or 8). AWS recommends node type 2 (point-to-point, or P-node), because broadcast and multicast aren’t supported at this time.
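Before creating a DHCP options set, you might sanity-check it against the limits listed above. The function below is a hypothetical helper, not part of any AWS SDK; options are modeled as lists of strings, and it checks only the four-server limits, that server entries are IP addresses (or AmazonProvidedDNS), and the recommended node type.

```python
import ipaddress

MAX_SERVERS = 4  # per-option limit described above
SERVER_OPTIONS = ("domain-name-servers", "ntp-servers", "netbios-name-servers")


def check_dhcp_options(options: dict) -> list:
    """Return problems found in a proposed DHCP options set (an empty list if none).

    Only the rules described in this section are checked.
    """
    problems = []
    for key in SERVER_OPTIONS:
        values = options.get(key, [])
        if len(values) > MAX_SERVERS:
            problems.append(f"{key}: at most {MAX_SERVERS} servers allowed")
        for value in values:
            if key == "domain-name-servers" and value == "AmazonProvidedDNS":
                continue
            try:
                ipaddress.ip_address(value)
            except ValueError:
                problems.append(f"{key}: {value!r} is not an IP address")
    if options.get("netbios-node-type", ["2"]) != ["2"]:
        problems.append("netbios-node-type: AWS recommends 2 (P-node)")
    return problems


# Hypothetical options set: Amazon DNS plus an internal DNS server, too many NTP servers.
proposed = {
    "domain-name-servers": ["AmazonProvidedDNS", "172.16.0.53"],
    "domain-name": ["mycompany.com"],
    "ntp-servers": ["10.0.0.5", "10.0.0.6", "10.0.0.7", "10.0.0.8", "10.0.0.9"],
}
print(check_dhcp_options(proposed))  # ['ntp-servers: at most 4 servers allowed']
```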

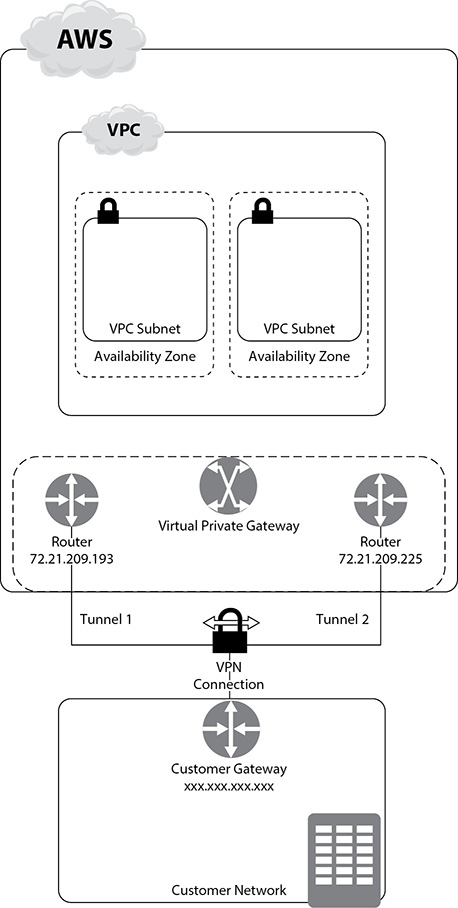

VPN Connections

A VPN connection is a connection between your AWS VPC and your own network or another remote network. You can use AWS Managed VPN (hardware VPN) to set up the connection to your remote networks. Alternatively, you can configure an EC2 instance in your VPC to run a third-party software VPN appliance, available from AWS Partner Network vendors and the open source community. A software VPN offers you more control, but you also become responsible for managing it and ensuring that the software works as designed. AWS doesn’t provide support for or maintain third-party VPN appliances.

When using an AWS Managed VPN, you can enable connectivity between your VPC and your own remote networks by attaching a virtual private gateway to the VPC and creating an AWS-managed VPN connection.

A VPN connection contains several components, such as a virtual private gateway and a customer gateway. You must ensure that a customer gateway is in place, attach a virtual private gateway to your VPC, and create the VPN connection.

VPN Gateway A VPN gateway (also called a Virtual Private Gateway, or VGW) works on the Amazon side of the VPN connection. You create the VPN gateway and attach it to the VPC from which you want to create the VPN connection to your remote network. You can use a VPN gateway to provide either a VPN connection through the Internet to your on-premises location or an AWS Direct Connect connection to your location. Remember the following:

• If you’re setting up the VPN gateway to provide a VPN connection, two tunnels are configured for availability, and the gateway supports both static routing and Border Gateway Protocol (BGP).

• When you set up a VPN gateway for an AWS Direct Connect connection, it supports only BGP.

By enabling route propagation, you allow a virtual private gateway to automatically propagate routes to your route tables, so you don’t need to enter the VPN routes manually. So, if you don’t see any network traffic on the AWS side of your VPN connection to your VPC, ensure that route propagation is turned on in your VPC’s main route table after you attach the virtual private gateway to your VPC.

Customer Gateway The customer gateway is a physical device (or virtual software application) that you configure on your remote network. You create the customer gateway resource in AWS to provide information about the device, such as the IP address of its external interface and the type of routing (static/dynamic) used. After creating the resource in AWS, you must configure the device (or software application) in the remote network to enable the VPN connection. You must set up routing so that any traffic from your VPC to your remote external network is routed to the Virtual Private Gateway.

Route Tables You must add a route to your remote network in your route table, with the virtual private gateway as the target. This ensures that the VPC’s traffic going to your remote network passes through the Virtual Private Gateway and over a VPN tunnel.

Configuring VPN Tunnels and High Availability When traffic is generated from the remote network side of the VPN connection, the customer gateway initiates the VPN tunnels. Each VPN connection has a pair of IPsec tunnels, each terminating at a separate publicly reachable IP address on the Virtual Private Gateway. Configure both tunnels so that if one becomes unavailable, traffic can use the other. Figure 6-5 shows the two tunnels of a VPN connection.

Figure 6-5 Configuring both tunnels for redundancy of a VPN connection

To protect against the unavailability of one customer gateway, it’s a good idea to configure a second customer gateway to set up a redundant, secondary VPN connection to your VPC and Virtual Private Gateway. The two public IP addresses that you need to provision for the tunnels can be on a single customer gateway or on two customer gateways in the same or a different location. More than one customer gateway can connect to a VPN gateway.

A software VPN supports two tunnels. To make a hardware VPN redundant, download the router configuration from the AWS Management Console and configure two customer gateways, each with endpoints for the hardware VPN’s virtual private gateway.

If you deploy Direct Connect to replace your VPN connection to your VPC, failover to the new Direct Connect connection is automatic, using BGP. Once established, the Direct Connect path is always the preferred path.

AWS VPN CloudHub If you have several VPN connections, you can enable the multiple remote sites to communicate with one another and not just with the VPC, by setting up the AWS VPN CloudHub. VPN CloudHub uses a VPN gateway and a hub-and-spoke architecture to connect multiple customer gateways. VPN CloudHub uses BGP, with each customer location assigned a different autonomous system number (ASN).

To configure AWS VPN CloudHub, you first create multiple customer gateways. Then you create a VPN connection from each customer gateway to the same virtual private gateway.
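Because each customer location in VPN CloudHub needs its own ASN, a planning script might check for duplicates before you create the customer gateways. The helper and site names below are hypothetical; the ASNs come from the 16-bit private range (64512 to 65534).

```python
from collections import Counter


def duplicate_asns(customer_gateways: dict) -> list:
    """Return the customer gateways whose BGP ASN is shared with another site."""
    counts = Counter(customer_gateways.values())
    return sorted(name for name, asn in customer_gateways.items() if counts[asn] > 1)


# Hypothetical sites using 16-bit private ASNs.
sites = {"cgw-nyc": 65001, "cgw-london": 65002, "cgw-tokyo": 65001}
print(duplicate_asns(sites))  # ['cgw-nyc', 'cgw-tokyo']

sites["cgw-tokyo"] = 65003    # give Tokyo its own ASN
print(duplicate_asns(sites))  # []
```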

Using VPN as a Backup for AWS Direct Connect You can use a VPN connection as a backup to AWS Direct Connect. Let’s assume, for example, that you’ve configured both a VPN connection and AWS Direct Connect. Should the Direct Connect connection fail for any reason, your VPN can act as a backup for transmitting your data. This is provided by the AWS route preference algorithm, when it has multiple routes to choose from. The route preference is in the following order:

• A VPC’s local routes

• Longest prefix first from the route tables

• Static routes

• AWS Direct Connect BGP

• VPN static routes that you’ve configured in your VPN connection

• VPN BGP

You can use a Direct Connect connection as your main means of connectivity to your VPC and configure a VPN connection as a lower-cost backup.
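The ordering above can be expressed as a sort key: prefix length first, then the route’s origin. The Python sketch below is a simplification, not AWS’s routing code; the origin labels, target names, and CIDR blocks are hypothetical. It shows Direct Connect winning over a VPN route for the same prefix, the VPN taking over when the Direct Connect route disappears, and a more specific prefix overriding the origin preference.

```python
import ipaddress
from dataclasses import dataclass

# Tie-break order for routes with equal prefix lengths, following the list above.
PREFERENCE = ["local", "static", "dx-bgp", "vpn-static", "vpn-bgp"]


@dataclass(frozen=True)
class Route:
    cidr: str
    origin: str   # one of PREFERENCE
    target: str   # hypothetical label for where the traffic goes


def select_route(routes, destination: str):
    """Longest matching prefix wins; ties are broken by origin preference."""
    addr = ipaddress.ip_address(destination)
    candidates = [r for r in routes if addr in ipaddress.ip_network(r.cidr)]
    if not candidates:
        return None
    return min(candidates,
               key=lambda r: (-ipaddress.ip_network(r.cidr).prefixlen,
                              PREFERENCE.index(r.origin)))


routes = [
    Route("10.0.0.0/16", "local", "local"),
    Route("172.16.0.0/16", "dx-bgp", "direct-connect"),
    Route("172.16.0.0/16", "vpn-bgp", "vpn"),
]
print(select_route(routes, "172.16.5.5").target)  # direct-connect

# If Direct Connect fails and its BGP route is withdrawn, the VPN route takes over.
backup_only = [r for r in routes if r.origin != "dx-bgp"]
print(select_route(backup_only, "172.16.5.5").target)  # vpn

# A more specific prefix beats the origin preference.
routes.append(Route("172.16.5.0/24", "vpn-bgp", "vpn"))
print(select_route(routes, "172.16.5.5").target)  # vpn
```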

VPC Peering

A VPC peering connection enables two VPCs to communicate as though they are part of the same network, so that a VPC can access resources in another VPC. You can create a peering connection between VPCs in your own AWS account, with a VPC in a different AWS customer’s account, or with a VPC in a different AWS region. Once the connection is established, you add a route for the other VPC to each VPC’s route tables.

A VPC peering connection makes it easy to transfer data between multiple AWS accounts that you own; for example, you can use peering to build a file-sharing network among the accounts’ VPCs.

You can set up VPC peering connections that provide access (routes) to parts of a CIDR block, an entire CIDR block, or a specific instance within the peer VPC. The CIDR blocks of the VPCs that you want to set up a peering connection for can’t match or overlap with each other.
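Checking the no-overlap requirement is a simple CIDR comparison. Here’s a Python sketch using the standard ipaddress module with hypothetical CIDR blocks; it compares every block associated with each VPC, including secondary blocks.

```python
import ipaddress


def cidrs_overlap(vpc_a: list, vpc_b: list) -> bool:
    """True if any CIDR block of one VPC overlaps any CIDR block of the other."""
    nets_a = [ipaddress.ip_network(c) for c in vpc_a]
    nets_b = [ipaddress.ip_network(c) for c in vpc_b]
    return any(a.overlaps(b) for a in nets_a for b in nets_b)


print(cidrs_overlap(["10.0.0.0/16"], ["20.0.0.0/16"]))    # False: can be peered
print(cidrs_overlap(["10.0.0.0/16"], ["10.0.128.0/17"]))  # True: can't be peered
# Secondary CIDR blocks count too.
print(cidrs_overlap(["10.0.0.0/16", "10.1.0.0/16"], ["10.1.0.0/24"]))  # True
```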

Your VPC peering configurations can include the following:

• Two VPCs peered to two subnets in one VPC

• Two VPCs peered to a specific CIDR block in one VPC

• One VPC peered to specific subnets in two VPCs

• Instances in one VPC peered to instances in two VPCs

The VPCs in a peering connection must not have overlapping IP address ranges, and they use security groups to control access. Peering was once limited to VPCs in the same region, and connecting VPCs in different regions required VPN connections among them; AWS now also supports inter-region VPC peering.

Setting Up VPC Peering Here are the steps to create a VPC peering connection:

1. The owner of the VPC that requests the peering connection (requester VPC) sends a request to the owner of the target VPC (accepter VPC) to create the connection.

2. The owner of the accepting VPC accepts the connection request.

3. The owners of both VPCs in the peering connection add a route to at least one of their VPC route tables that points to the IP address range of the other VPC (the peer VPC in this context).

4. The owners of the two VPCs may also need to update the security group rules to ensure that traffic from the peer VPC is allowed.
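The first three steps map onto three EC2 API operations: `create_vpc_peering_connection`, `accept_vpc_peering_connection`, and `create_route`. The sketch below assumes two boto3-style EC2 clients (one per VPC owner) are passed in; the dictionary keys `vpc_id`, `cidr`, `route_table_id`, and `owner_id` are hypothetical names chosen for this example, and security group changes (step 4) are left out.

```python
def peer_vpcs(requester_ec2, accepter_ec2, requester, accepter):
    """Create, accept, and route a VPC peering connection; return its ID.

    requester_ec2 and accepter_ec2 are EC2 clients (for example, from
    boto3.client("ec2")) with credentials for each VPC owner. requester and
    accepter are dicts with the hypothetical keys vpc_id, cidr, and
    route_table_id; accepter also needs owner_id (its AWS account ID).
    """
    # Step 1: the requester VPC's owner sends the peering request.
    response = requester_ec2.create_vpc_peering_connection(
        VpcId=requester["vpc_id"],
        PeerVpcId=accepter["vpc_id"],
        PeerOwnerId=accepter["owner_id"],
    )
    pcx_id = response["VpcPeeringConnection"]["VpcPeeringConnectionId"]

    # Step 2: the accepter VPC's owner accepts it. A real script would first
    # wait for the request to reach the pending-acceptance state.
    accepter_ec2.accept_vpc_peering_connection(VpcPeeringConnectionId=pcx_id)

    # Step 3: each owner routes the peer VPC's CIDR block to the connection.
    for ec2, own, peer in ((requester_ec2, requester, accepter),
                           (accepter_ec2, accepter, requester)):
        ec2.create_route(
            RouteTableId=own["route_table_id"],
            DestinationCidrBlock=peer["cidr"],
            VpcPeeringConnectionId=pcx_id,
        )

    # Step 4 (security group updates) is not covered by this sketch.
    return pcx_id
```

Passing the clients in, rather than creating them inside the function, keeps the two owners' credentials separate and makes the logic easy to exercise with stand-in objects.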

To let instances reach each other by public DNS hostname, enable DNS resolution support for the VPC peering connection. Then, when instances on either side of the peering connection address each other using a public DNS hostname, that hostname resolves to the instance's private IP address rather than its public IP address.

After you configure VPC peering, you must ensure that you update all the route tables in each VPC with the appropriate peering configuration. Let’s say, for example, that you have set up a VPC peering connection between VPC A (10.0.0.0/16) and VPC B (20.0.0.0/16). The peering connection ID is pcx-1a2b1a2b. Following this, you need to add the following route entries to the route tables of the two VPCs so that traffic can flow between them:

• For VPC A: Destination 20.0.0.0/16 and Target pcx-1a2b1a2b

• For VPC B: Destination 10.0.0.0/16 and Target pcx-1a2b1a2b

Multiple VPC Peering Connections You can create more than one peering connection for one of your VPCs. However, AWS doesn't support transitive peering relationships, even between VPCs that belong to the same AWS account, so you must create a separate peering connection between each pair of VPCs that need to communicate.

For example, if you have set up a peering connection between VPC A and VPC B and another between VPC A and VPC C, there's no automatic peering relationship between VPC B and VPC C. If you want VPC B and VPC C to communicate, you must explicitly set up a peering connection between them as well.
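The non-transitive rule can be modeled in a few lines: two VPCs can talk only if a connection links them directly, and connecting every pair of n VPCs takes n(n-1)/2 connections. The VPC names below are hypothetical.

```python
from itertools import combinations


def can_communicate(vpc_x, vpc_y, peerings):
    """True only if one peering connection links the two VPCs directly.

    peerings is a set of frozensets, one per peering connection. Because
    peering isn't transitive, a path through a third VPC doesn't count.
    """
    return frozenset((vpc_x, vpc_y)) in peerings


def full_mesh(vpcs):
    """Connections needed so every pair can talk: n * (n - 1) / 2 of them."""
    return {frozenset(pair) for pair in combinations(vpcs, 2)}


peerings = {frozenset(("A", "B")), frozenset(("A", "C"))}
print(can_communicate("B", "C", peerings))  # False: no B-C connection
print(len(full_mesh(["A", "B", "C"])))      # 3
```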

VPC Endpoints

A VPC endpoint is a virtual device that enables communication between EC2 instances in your VPC and AWS services without imposing bandwidth constraints on your network traffic. A VPC endpoint helps you privately connect your VPC to supported AWS services and to VPC endpoint services powered by AWS PrivateLink. For example, to connect to a service outside your VPC, such as Amazon S3, you can use a VPC endpoint, which adds a route for Amazon S3 to the VPC's route table with the endpoint as its target.

VPC endpoints enable connections without the use of an Internet gateway, a NAT device, or a VPN/AWS Direct Connect connection. Instances in the VPC don't need public IP addresses to communicate with the AWS services behind the endpoint.

The following example shows a route table with a VPC endpoint.

There are two VPC endpoint types: interface endpoints and gateway endpoints.

Interface Endpoints

An interface endpoint is an elastic network interface with a private IP address. It acts as the entry point for traffic sent to supported AWS services such as AWS CloudFormation, Amazon CloudWatch, AWS Config, and Amazon Simple Notification Service (SNS); to endpoint services hosted by other AWS customers and partners in their own VPCs; and to supported AWS Marketplace partner services.

A service provider is the owner of the service, and you, as the service consumer, are the principal that creates the interface endpoint and specifies the name of the AWS service or endpoint service to which you want to connect. An interface endpoint becomes available for use only after the service provider accepts the connection request; the provider configures the service to accept requests either automatically or manually.

An endpoint returns responses to traffic that originates from resources in your VPC. Services can't use the endpoint to initiate requests to resources inside your VPC.
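The consumer/provider handshake described above maps onto two EC2 API operations: the consumer calls `create_vpc_endpoint`, and, when the service requires manual acceptance, the provider calls `accept_vpc_endpoint_connections`. This sketch assumes boto3-style EC2 clients for the two accounts are passed in; the function name and its parameters are hypothetical.

```python
def connect_to_endpoint_service(consumer_ec2, provider_ec2, service_name,
                                service_id, vpc_id, subnet_ids,
                                acceptance_required):
    """Create an interface endpoint and, if required, accept it as the provider.

    consumer_ec2 and provider_ec2 are EC2 clients for each account (for
    example, from boto3.client("ec2")). service_name is the endpoint
    service's name and service_id its ID on the provider side.
    """
    # Consumer: create the interface endpoint (an ENI) in the chosen subnets.
    response = consumer_ec2.create_vpc_endpoint(
        VpcEndpointType="Interface",
        VpcId=vpc_id,
        ServiceName=service_name,
        SubnetIds=subnet_ids,
    )
    endpoint_id = response["VpcEndpoint"]["VpcEndpointId"]

    # Provider: with manual acceptance, the endpoint can't carry traffic
    # until the provider accepts the connection request.
    if acceptance_required:
        provider_ec2.accept_vpc_endpoint_connections(
            ServiceId=service_id, VpcEndpointIds=[endpoint_id]
        )
    return endpoint_id
```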

Gateway Endpoints

A gateway endpoint serves as a target for a specified route in your route table, for traffic going to a supported AWS service. As of this writing, the supported services are Amazon S3 and Amazon DynamoDB.

You specify one or more route tables to control the routing of traffic between your VPC and the service. Subnets that use those route tables can access the endpoint, so to route a private subnet's traffic through an endpoint, you must associate the endpoint with that subnet's route table. Traffic from instances in those subnets to the service is then sent through the endpoint.
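A gateway endpoint is created with the same `create_vpc_endpoint` operation, but with `VpcEndpointType="Gateway"` and a list of route table IDs instead of subnets. The sketch below assumes a boto3-style EC2 client is passed in and uses the `com.amazonaws.<region>.<service>` naming pattern of AWS service names; the function name and the restriction to the two services named above are choices made for this example.

```python
GATEWAY_SERVICES = {"s3", "dynamodb"}  # the services named in the text


def create_gateway_endpoint(ec2, region, vpc_id, route_table_ids, service="s3"):
    """Create a gateway endpoint and attach it to the given route tables.

    AWS then adds a route to each table whose destination is the service's
    prefix list and whose target is the endpoint (vpce-...).
    """
    if service not in GATEWAY_SERVICES:
        raise ValueError(f"no gateway endpoint for {service!r}")
    response = ec2.create_vpc_endpoint(
        VpcEndpointType="Gateway",
        VpcId=vpc_id,
        # AWS service names follow com.amazonaws.<region>.<service>.
        ServiceName=f"com.amazonaws.{region}.{service}",
        RouteTableIds=route_table_ids,
    )
    return response["VpcEndpoint"]["VpcEndpointId"]
```

Listing only the private subnets' route tables is what keeps the endpoint scoped to those subnets.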

Troubleshooting AWS Network Connectivity Problems

You might occasionally run into an issue related to AWS networks. Troubleshooting network connectivity issues in the cloud is similar to how you do so on premises. There are several network tools that you can use to troubleshoot connectivity issues. You may also need to check your security group rules to ensure that they allow the connectivity you’re looking for.

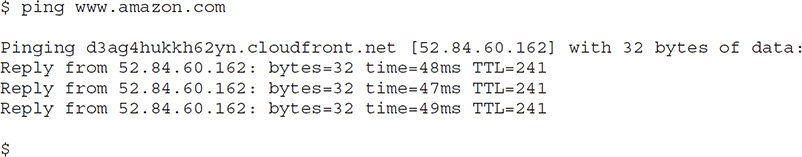

The ping, nslookup, and traceroute Utilities

A quick tool for testing AWS network connectivity is the ping utility, which sends an ICMP Echo Request packet from one server to another. Here's an example:

If ping doesn’t respond with an IP address, the server name may be incorrect. A ping test may fail when an AWS target is offline or inaccessible. You can view the AWS Service Health Dashboard (https://status.aws.amazon.com/), which reports up-to-the-minute information on AWS service availability, to see if the service you’re trying to connect to, such as EC2 in a specific region, is unavailable.

If you are unable to ping your instance, ensure that the security group’s inbound rules allow ICMP traffic for the Echo Request message for all sources or from the server where you’re issuing the ping command. If you are unable to issue the ping command from an instance, check the security group’s outbound rules and make sure they allow ICMP traffic for the Echo Request message to all destinations or to the destination you are trying to ping.
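The inbound rule described above can be added with the EC2 `authorize_security_group_ingress` operation. For ICMP rules, the API reuses the port fields: `FromPort` holds the ICMP type (8 for Echo Request) and `ToPort` the ICMP code, with -1 meaning all codes. This sketch assumes a boto3-style EC2 client is passed in; the function name and the source range are hypothetical.

```python
ICMP_ECHO_REQUEST = 8  # ICMP type number for Echo Request


def allow_ping_from(ec2, group_id, source_cidr):
    """Add an inbound rule that lets source_cidr ping instances in the group.

    For ICMP rules, FromPort is the ICMP type and ToPort the ICMP code
    (-1 means all codes).
    """
    permission = {
        "IpProtocol": "icmp",
        "FromPort": ICMP_ECHO_REQUEST,
        "ToPort": -1,
        "IpRanges": [{"CidrIp": source_cidr, "Description": "allow ping"}],
    }
    ec2.authorize_security_group_ingress(GroupId=group_id, IpPermissions=[permission])
    return permission
```

Because security groups are stateful, the Echo Reply to an allowed request is let back out automatically; network ACLs are stateless, so they need rules in both directions.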

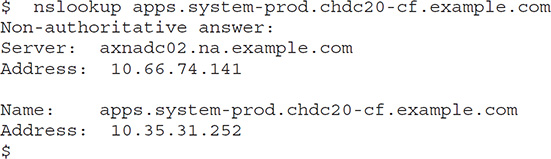

You can use the nslookup command on a Linux system to resolve a target hostname to its IP address (or, for a reverse lookup, an IP address to its hostname). If the command returns an error, or if the address that's returned doesn't belong to the right node or server, there may be either a DNS failure or a configuration error. Here's an example:
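The same forward lookup can be scripted with Python's standard `socket.getaddrinfo`, which uses the system resolver (so results can differ from nslookup, which queries DNS servers directly). This minimal sketch returns the IPv4 addresses a name resolves to, or an empty list when the lookup fails; the function name is hypothetical.

```python
import socket


def resolve(hostname):
    """Return the unique IPv4 addresses a name resolves to, in resolver order.

    An empty list signals a lookup failure (for example, a DNS error or a
    mistyped name), which is the case worth investigating.
    """
    try:
        results = socket.getaddrinfo(hostname, None, socket.AF_INET)
    except socket.gaierror:
        return []
    addresses = []
    for _family, _socktype, _proto, _canonname, sockaddr in results:
        if sockaddr[0] not in addresses:  # one entry per socket type; dedupe
            addresses.append(sockaddr[0])
    return addresses
```

Compare the returned addresses with the instance's actual public or private IP; a mismatch points to a stale or misconfigured DNS record.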

The Linux traceroute command (or the Windows tracert command) traces the path between a data center and your AWS-based instances and shows the delay along the way. The utility shows details about each router hop and helps you find potential network bottlenecks across the public Internet. Pay attention to hops with persistently high packet loss, or with packet loss that accumulates to a sizable amount over multiple hops.
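One way to apply the "persistent loss" advice to per-hop loss figures (such as those reported by a tool like mtr) is to look for the first hop from which loss never drops back below a threshold. Loss that shows up at a single router but disappears at later hops is often that router rate-limiting its own ICMP replies rather than dropping your traffic. This is a heuristic sketch; the 5 percent threshold and the sample figures are arbitrary assumptions.

```python
def loss_onset(loss_by_hop, threshold=5.0):
    """Return the 1-based hop where persistent packet loss begins, or None.

    loss_by_hop lists the loss percentage for each hop in path order. Loss
    counts as persistent only if every later hop also shows at least
    `threshold` percent loss.
    """
    for i in range(len(loss_by_hop)):
        if all(loss >= threshold for loss in loss_by_hop[i:]):
            return i + 1
    return None


print(loss_onset([0, 0, 40, 0, 0]))    # None: loss at hop 3 doesn't persist
print(loss_onset([0, 0, 12, 15, 14]))  # 3
```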

Errors Connecting to an Instance

You may receive error messages such as Network error: Connection timed out, or Error connecting to [instance], reason: -> Connection timed out: connect. When you see these types of errors when connecting to an instance with an IPv4 address, follow these steps:

• Check the security group's inbound rules to ensure it has a rule that allows inbound traffic from your public IP address on the correct port. For SSH connections to a Linux instance, ensure that there is a rule that allows traffic from your computer to port 22. For RDP connections to a Windows instance, the port is 3389.

• Check the route table for the subnet to ensure that there’s a route that sends all traffic destined outside your VPC to the VPC’s Internet gateway.

• Ensure that there’s an Internet gateway attached to your VPC. If there is not, create the gateway and attach it to the VPC.

• Ensure that the route table has a route with 0.0.0.0/0 as the destination and the Internet gateway for your VPC as the target.

• Check the NACL for the subnet and ensure that it allows inbound and outbound traffic to and from your local IP address on the proper port. The default NACL allows all inbound and outbound traffic.

• If your computer is on a corporate network, make sure that the internal firewall allows inbound and outbound traffic from your computer on port 22 (for Linux instances) or port 3389 (for Windows instances). If you have a firewall on your computer, make sure it allows the same traffic as the corporate firewall.

• Check your instance’s CPU load to make sure it is not overloaded. You can do this with CloudWatch metrics and by checking the instance status in the EC2 console. If your workloads are variable, try using Auto Scaling and ELB to manage the workload. If your workload is growing steadily over time, move to a larger EC2 instance type.
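Two of the checks in the list above (an Internet gateway default route, and a security group rule that admits your IP on the right port) can be automated. The sketch below works on data shaped like the `Routes` entries returned by EC2's `describe_route_tables` and a security group's `IpPermissions`; fetching that data is left out, and the function name and sample values are hypothetical. It covers only IPv4 ranges and TCP or all-traffic rules.

```python
import ipaddress


def find_problems(routes, ip_permissions, client_ip, port=22):
    """Return a list of problems found by two connectivity checks."""
    problems = []
    # Check: a 0.0.0.0/0 route whose target is an Internet gateway.
    has_igw_default = any(
        r.get("DestinationCidrBlock") == "0.0.0.0/0"
        and r.get("GatewayId", "").startswith("igw-")
        for r in routes
    )
    if not has_igw_default:
        problems.append("no 0.0.0.0/0 route to an Internet gateway")

    # Check: an inbound rule that admits client_ip on the given TCP port.
    client = ipaddress.ip_address(client_ip)
    allowed = False
    for perm in ip_permissions:
        protocol = perm.get("IpProtocol")
        if protocol == "-1":  # rule allows all traffic
            port_ok = True
        elif protocol == "tcp":
            port_ok = perm.get("FromPort", 0) <= port <= perm.get("ToPort", 65535)
        else:
            continue
        ranges = perm.get("IpRanges", [])
        if port_ok and any(client in ipaddress.ip_network(r["CidrIp"]) for r in ranges):
            allowed = True
            break
    if not allowed:
        problems.append(f"no inbound rule allows {client_ip} on TCP port {port}")
    return problems


routes = [
    {"DestinationCidrBlock": "10.0.0.0/16", "GatewayId": "local"},
    {"DestinationCidrBlock": "0.0.0.0/0", "GatewayId": "igw-0abc"},
]
ssh_rule = [{"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
             "IpRanges": [{"CidrIp": "203.0.113.0/24"}]}]
print(find_problems(routes, ssh_rule, "203.0.113.7"))  # []
```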

AWS Direct Connect

In some use cases, you won't want to use the Internet to access your AWS resources. That's where AWS Direct Connect comes in; it enables you to bypass the public Internet to access your resources in the AWS cloud. Direct Connect links the internal networks in your data centers to an AWS Direct Connect location. This helps you create direct virtual interfaces to public AWS services such as Amazon S3, bypassing Internet service providers. So, in a scenario where you are performing a daily backup of, say, 10GB of data from your on-premises data center to Amazon S3, you can drastically reduce the time it takes to move this data by moving it via Direct Connect between your data center and your AWS VPC. (As you'll learn in Chapter 7, doing a multipart upload to S3 can also speed up the upload process.)
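A back-of-the-envelope calculation shows why bandwidth matters for the 10GB backup. The sketch assumes decimal units (1 GB = 8 × 10^9 bits) and ignores protocol overhead; the 50 Mbps and 1,000 Mbps figures are hypothetical effective throughputs, not quoted speeds for any particular connection.

```python
def transfer_seconds(gigabytes, megabits_per_second):
    """Idealized transfer time: data size in bits divided by throughput."""
    bits = gigabytes * 8 * 10**9
    return bits / (megabits_per_second * 10**6)


print(transfer_seconds(10, 50))    # 1600.0 seconds (about 27 minutes)
print(transfer_seconds(10, 1000))  # 80.0 seconds on a 1 Gbps link
```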

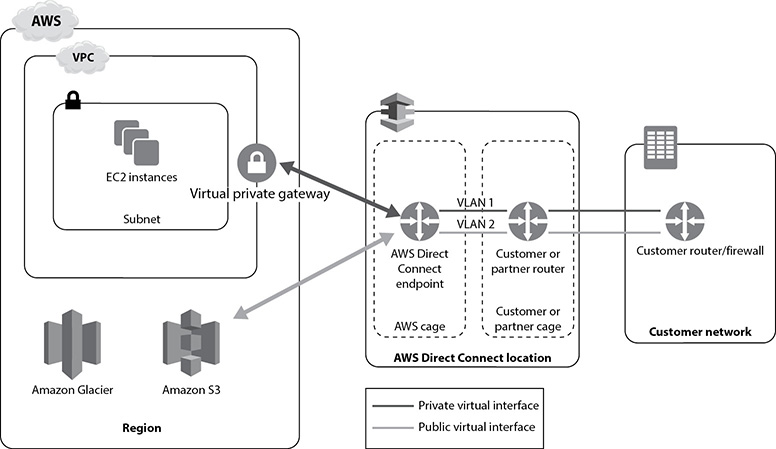

A Direct Connect connection provides access to AWS in the region in which the direct connection is associated. You can use this connection to access public AWS services in any public region. Figure 6-6 shows the AWS Direct Connect architecture and how it works with your internal networks.

Figure 6-6 How AWS Direct Connect interfaces with your internal networks

Direct Connect offers several benefits for transferring data between your own network and your VPC:

• Lowers your network bandwidth costs Because AWS charges reduced rates for data transfers via your dedicated connection, compared to Internet data transfer rates, you end up lowering your bandwidth costs. Costs can be further reduced by reducing your bandwidth commitment to your Internet service provider.

• Provides consistent network performance Because you can choose how data is routed through Direct Connect, you gain more consistent network performance, without the variable and inconsistent network latency that’s common in public Internet–based network connections.

• Facilitates large data transfers A VPN connection requires specialized VPN hardware and has problems handling large data transfers (over 4 Gbps). With Direct Connect, you can select a 10 Gbps Direct Connect connection and set up multiple connections to enhance your data transfer capacity.

• Provides a private connection to AWS Direct Connect helps you set up a private, secure, and fast network connection between your own network and your VPC.

Setting Up Direct Connect

You don't necessarily have to set up your Direct Connect connection yourself at an AWS Direct Connect location. You can also set up the connectivity through a network carrier or a member of the AWS Partner Network (APN). Instead of setting up your own dedicated Direct Connect connection, you can opt for a hosted connection that's provided by an APN member.

Direct Connect Components

The two key components of Direct Connect are connections and virtual interfaces. Because Direct Connect supports only the BGP routing protocol, you need a router that supports BGP. To use Direct Connect, your network must be colocated with an existing AWS Direct Connect location, or you must work with an independent service provider to connect to Direct Connect.

Connections

To create a dedicated connection between your network and a Direct Connect location, you must work with a partner in the APN. This enables you to set up a network circuit between your data center (or colocation environment) and an AWS Direct Connect location. When you request a connection, AWS authorizes the connection via a Letter of Authorization and Connecting Facility Assignment (LOA-CFA). Your network provider or the colocation provider requires this to order a cross-connect (a cross-network connection) for you. AWS recommends that you request two dedicated connections to AWS to provide failover of the Direct Connect connection.

Virtual Interfaces

After you create a connection, you must create a virtual interface (VIF) to use a Direct Connect connection. For each Direct Connect connection that you configure, you can configure multiple virtual interfaces. There are two types of virtual interfaces:

• A private virtual interface A private VIF is a logical interface that enables you to connect to an EC2 instance within your VPC. Each VPC needs a separate private VIF to connect to Direct Connect (or you can use a Direct Connect gateway). The private virtual interface handles traffic originating from your onsite network, destined for resources in your VPC that may have neither a public IP address nor an Elastic IP address (EIP).

• A public virtual interface This connects to AWS services that don’t live in your VPC, such as Amazon S3 or Glacier. The public interface is meant for handling traffic to other AWS services or to the Internet.

If your traffic is coming both from your onsite network and from other AWS services and the Internet, you need both a private and public virtual interface. After creating a virtual interface, you download the router configuration for the Direct Connect connection. To implement Direct Connect, you also need a public Autonomous System Number for the public VIF and a private Autonomous System Number for the private VIF.
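Creating a private VIF corresponds to the Direct Connect API operation `create_private_virtual_interface`, which takes the connection ID and a description of the new interface (its name, VLAN, your side's BGP ASN, and the virtual private gateway of the VPC to attach). This sketch assumes a boto3-style Direct Connect client is passed in; the function name and example values are hypothetical, and optional settings such as BGP authentication keys and peer IP addresses are omitted.

```python
def create_private_vif(dx, connection_id, name, vlan, customer_asn, virtual_gateway_id):
    """Create a private virtual interface on an existing Direct Connect connection.

    dx is a Direct Connect client (for example, boto3.client("directconnect")).
    """
    response = dx.create_private_virtual_interface(
        connectionId=connection_id,
        newPrivateVirtualInterface={
            "virtualInterfaceName": name,
            "vlan": vlan,                              # 802.1Q VLAN tag for this VIF
            "asn": customer_asn,                       # your side of the BGP session
            "virtualGatewayId": virtual_gateway_id,    # ties the VIF to one VPC
        },
    )
    return response["virtualInterfaceId"]
```

Attaching to a Direct Connect gateway instead of a single virtual private gateway is what lets one private VIF reach more than one VPC.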

For high availability, you must create multiple Direct Connect connections, and the best practice is to create them at different Direct Connect locations. When you set up a redundant Direct Connect connection, traffic fails over to the second link automatically if the first one fails. The best practice is to enable Bidirectional Forwarding Detection (BFD) for fast failure detection and failover.
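The benefit of BFD comes down to simple arithmetic: per RFC 5880, a BFD session declares failure after the detect multiplier's worth of consecutive intervals pass with no packet. The timer values below are assumptions for illustration (a 300 ms interval with a multiplier of 3, compared with a 90-second BGP hold time, the value RFC 4271 suggests); check what your router and AWS actually negotiate.

```python
# Assumed timer values for illustration only.
BFD_INTERVAL_MS = 300       # BFD liveness detection interval
BFD_MULTIPLIER = 3          # missed intervals before declaring the link down
BGP_HOLD_TIME_MS = 90_000   # suggested BGP hold time (RFC 4271)


def detection_time_ms(interval_ms, multiplier):
    """Detection time is the detect multiplier times the interval."""
    return interval_ms * multiplier


bfd_ms = detection_time_ms(BFD_INTERVAL_MS, BFD_MULTIPLIER)
print(f"BFD: {bfd_ms} ms, BGP hold timer alone: {BGP_HOLD_TIME_MS} ms")
```

Under these assumptions, failover can begin in under a second instead of after a minute and a half.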

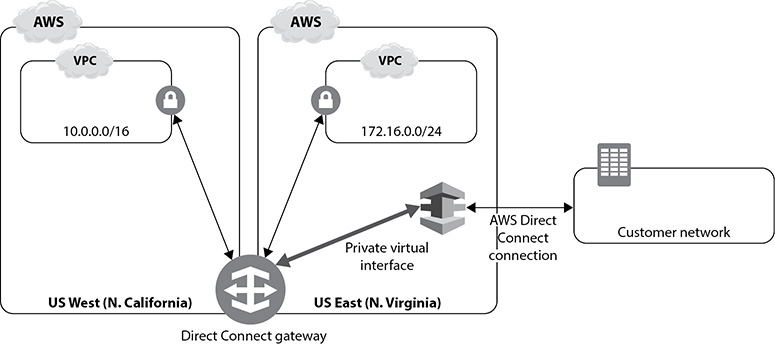

Direct Connect Gateways