Before There Was an Internet

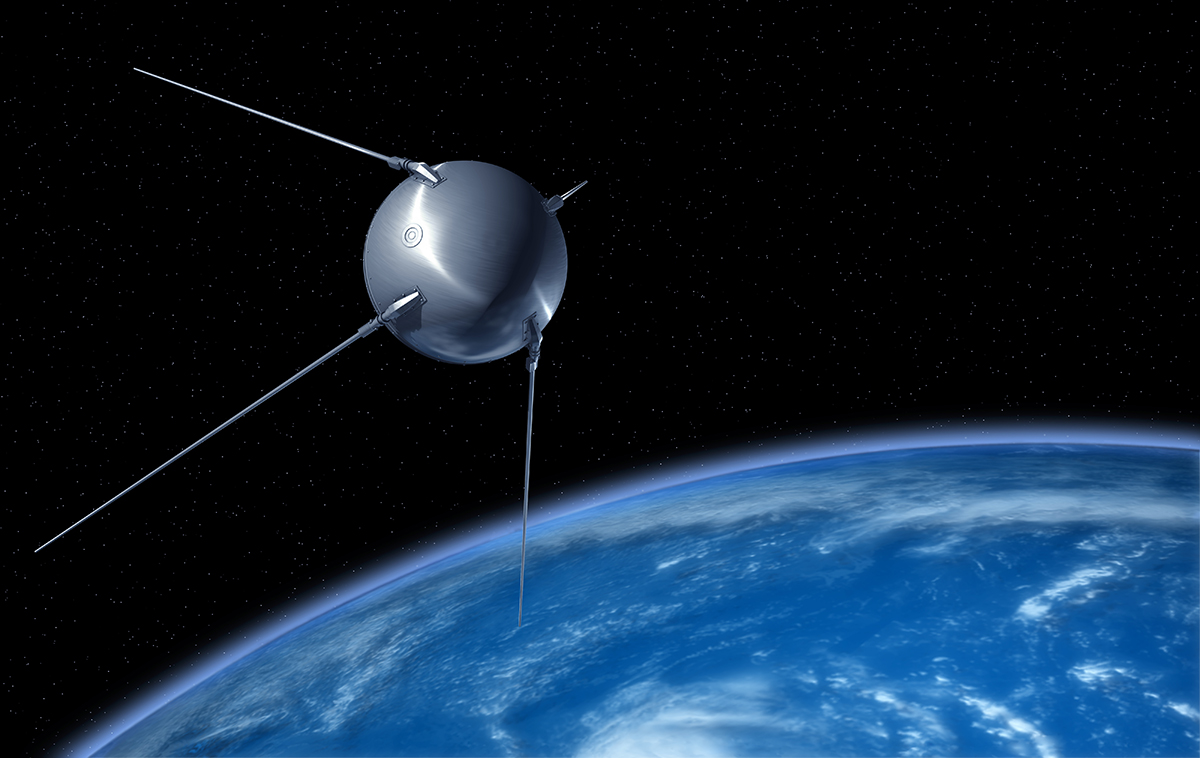

To truly understand the internet, we have to start at its beginning, which was in 1957 with a seemingly unrelated event. The USSR launched the first man-made, Earth-orbiting satellite Sputnik (shown in Figure 1-3), which was 23 inches in diameter and weighed about 190 pounds. Though small by today’s standards, this object truly energized the technology boom that was to follow.

FIGURE 1-3 The USSR’s Sputnik satellite.

© Graphic Compressor/Shutterstock

Space, satellites, and science were suddenly on the public’s mind. Schools expanded their curricula to include more courses in chemistry, physics, and mathematics. The U.S. government began grant programs for scientific research and development. Agencies such as NASA (National Aeronautics and Space Administration) and DARPA (Defense Advanced Research Projects Agency, originally named ARPA) were formed to look into advanced technologies, which included rockets, new weapons, and computers.

ARPANET

The new awareness of the potential of technology caused scientists and the military to begin examining their technology and infrastructure, especially voice communications. The fear was that if the Soviets could launch a rocket that put a satellite in orbit, they could probably also launch a missile that could take down the telephone communication system in the United States. What was needed, they projected, was a system that could withstand an attack and remain operational.

One solution was put forward in 1962 by an MIT scientist, J.C.R. Licklider, who proposed a “galactic network” of interconnected computers that could communicate with one another. Rather than replace the telephone system, this network would be used should the Soviets destroy the phone system. While this proposal generated interest, the technology to make the network work had yet to be fully developed.

Paul Baran, a researcher at the RAND Corporation, and Donald Davies, a scientist at the National Physical Laboratory (NPL) in England, independently developed a means to transmit “packets” across a switched network of computers. Baran called his “distributed adaptive message block switching,” but it was the name Davies used that caught on: “packet switching.” Packet switching was the key to creating a computer network that could not be taken down by the destruction of a single site.
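The core idea of packet switching can be illustrated with a minimal sketch. It is not Baran's or Davies's actual design: the function names `to_packets` and `reassemble`, the packet size, and the use of a shuffle to stand in for packets taking different routes are all assumptions made for illustration. The sketch shows only that a message split into numbered packets can be rebuilt correctly even when the packets arrive out of order.

```python
import random


def to_packets(message: str, size: int) -> list[tuple[int, str]]:
    """Split a message into (sequence_number, chunk) packets."""
    return [
        (seq, message[start:start + size])
        for seq, start in enumerate(range(0, len(message), size))
    ]


def reassemble(packets: list[tuple[int, str]]) -> str:
    """Rebuild the message by ordering packets on their sequence number."""
    return "".join(chunk for _, chunk in sorted(packets))


# Hypothetical message, cut into 5-character packets.
packets = to_packets("LOGIN TO THE REMOTE HOST", size=5)

# Stand-in for independent routing: packets may arrive in any order.
random.Random(1969).shuffle(packets)

restored = reassemble(packets)
print(restored)
```

Because every packet carries its own sequence number, no single path through the network has to survive for the whole message to get through.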

In 1966, building on Licklider’s ideas, a team at ARPA led by Bob Taylor and Larry Roberts began designing what became known as the ARPANET project. ARPAnet was completed and ready for testing in October 1969. Three months after Neil Armstrong walked on the moon, Charley Kline, a student at the University of California at Los Angeles (UCLA), set out to send the message “LOGIN” to a computer at the Stanford Research Institute (SRI). However, the network failed after only the “L” and the “O” were entered and received at SRI. An hour later, Kline was able to enter the entire word, which SRI received. While this test only confirmed the transmission of data between two computers connected point-to-point, it also proved the concept of the Interface Message Processor (IMP), the early precursor to a router. By the end of 1969, the University of Utah and the University of California at Santa Barbara (UCSB) had been added to ARPAnet, and packet switching was in use among four nodes.

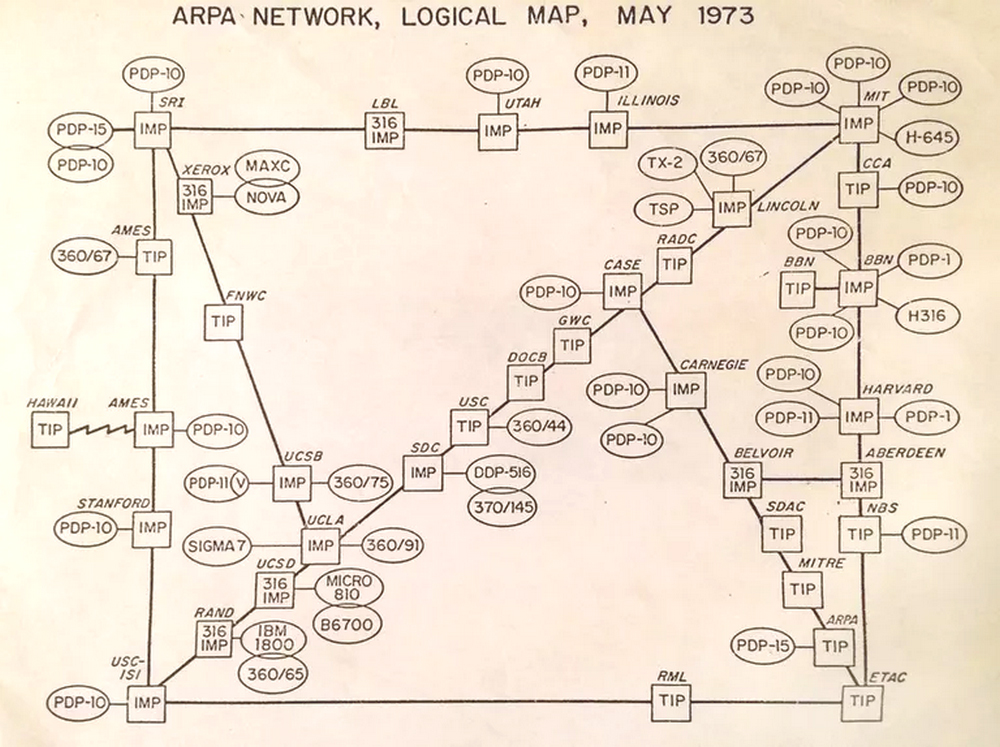

The ARPAnet continued to add nodes, as shown in Table 1-2. Growth started slowly, but it gained momentum as the network and its new technologies proved to be beneficial. The University of Hawaii was added, and the network went international in 1973, adding nodes in England and Norway. The ARPAnet had grown into an internetwork, which is a network of networks that are interconnected for communication. The term internetwork was eventually shortened to internet. Figure 1-4 shows an early sketch of the architecture of the ARPAnet.

Table 1-2 The Growth of the ARPAnet

| Date | Total Nodes |
|---|---|
| October 1969 | 2 |
| December 1969 | 4 |
| June 1970 | 9 |
| December 1970 | 13 |
| September 1971 | 18 |
| August 1972 | 29 |
| September 1973 | 40 |
| June 1974 | 46 |
| July 1975 | 57 |
| June 1981 | 213 |

FIGURE 1-4 A diagram of the ARPAnet.

Courtesy of the Defense Advanced Research Projects Agency, U.S. Department of Defense.

As the network grew during the 1970s, it began having problems with the number of IMPs that shared a single network framework. In the early 1970s, two computer scientists, Vinton (Vint) Cerf and Bob Kahn, developed the Transmission Control Protocol (TCP), which originally included the functions later separated out as the Internet Protocol (IP). These developments solved most of the communication problems by allowing the distributed nodes of the internetwork to be organized into smaller groupings that better facilitated communication.

In the early 1980s, the Network Control Protocol (NCP) that had been the mainstay of the ARPAnet was replaced by the TCP/IP suite of protocols. There is no doubt that TCP/IP made a worldwide network possible. What had been limited primarily to education and government during the 1980s began to be attractive to medicine, commerce, and industry.

The Legacy of ARPANET

Much of what we now associate with the internet was developed under ARPAnet. Here is a list of some of the important technologies and tools originally developed for or because of the ARPAnet:

- Electronic mail (email) (1971): Ray Tomlinson developed a program that sent electronic messages over a distributed network. Limited to the characters available on Teletype devices, he chose the at sign (@) to separate the local part of an address from the global part.
- Telnet (Teletype network) (1972): Developed originally for NCP, Telnet provided a bidirectional communications link between two stations that allowed a local device to work at the command prompt of a remote device.
- FTP (File Transfer Protocol) (1973): Another protocol originally developed for NCP, by Abhay Bhushan, FTP transfers files and bulk data from one node to another.
- IS-IS (Intermediate System to Intermediate System) Protocol (1980s): Radia Perlman developed a method to route IP messages. She also developed the Spanning Tree Protocol (STP) to prevent switching loops on Ethernet networks.
- Domain Name System (DNS) (1983): Paul Mockapetris and John Klensin developed DNS, which allowed the network to expand beyond education and government.
- Open Systems Interconnection (OSI) Reference Model (1984): What is now commonly referred to as the OSI model was issued jointly by the International Organization for Standardization (ISO) and the International Telecommunication Union (ITU) as a specification for a universal protocol suite. The OSI protocol suite was not successful, but the reference model has remained a standard for internetwork communication.
- NSFnet (1986): The National Science Foundation (NSF) left the ARPAnet to form its own competing network (NSFnet). As this network grew from connecting five supercomputers to connecting virtually every major university in the United States, many developments that are still in use today allowed the NSFnet to outperform the older and slower ARPAnet. NSFnet was one of the primary components of the internet as we know it.

By 1983, the Pentagon felt that the general lack of security and the growing number of nodes with network access (and, some believe, the movie WarGames) presented too much of a risk, so the military split off to form the MILNET, with very strict access rules. ARPAnet continued to support its academic and nonmilitary government members.

Other complementary or competing networks also began to appear:

- Computer Science Network (CSNET): A network developed to provide networking capabilities to computer science programs at academic and research institutions that were unable to connect to the ARPAnet because of funding or governance limitations.
- Energy Sciences Network (ESnet): A network serving the U.S. Department of Energy (DOE) scientists and collaborators worldwide.
- NASA Science Internet (NSI): The NSN and SPAN networks were merged to create an integrated communication platform for the earth, space, and life sciences. At its peak, NSI connected more than 20,000 scientists around the world.
- NASA Science Network (NSN): Space scientists were connected to data stored around the world.
- Space Physics Analysis Network (SPAN): SPAN provided communication for investigators and engineers and access to science databases.

There is little doubt that the ARPAnet, together with all of the scientists, programmers, and visionaries who contributed to its successes and its instructive failures, effectively built the foundation for the internet of today. The NSFnet, along with the smaller networks that were eventually absorbed into it, replaced the ARPAnet in the late 1980s and was itself shut down in 1995. The control and management of the national and international internetwork became privatized, which opened the network of networks to commercial use.

The Maturing Network

One new development often leads to one or more further developments. This has certainly been the case over the history of the internet. However, one series of developments has had perhaps the most impact on the internet since the IMP: the TCP/IP protocol suite, hypertext links, and the World Wide Web. These developments changed and improved how we use the internet, increased its popularity, and facilitated its expansion.

TCP/IP Model

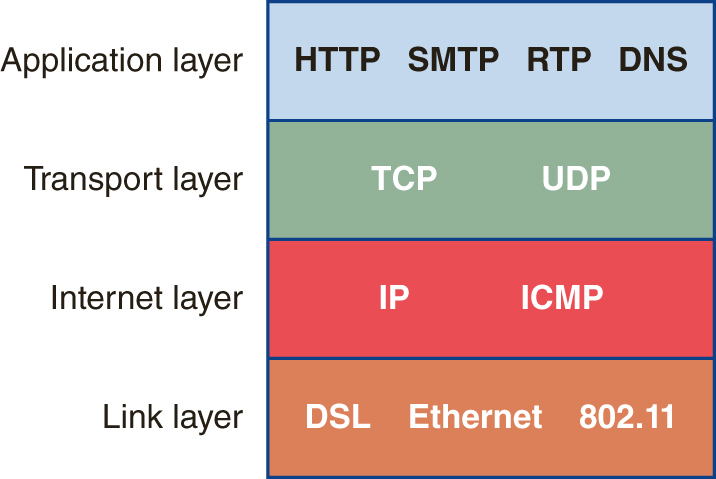

The Transmission Control Protocol/Internet Protocol (TCP/IP) suite is the standard set of protocols for connecting and interconnecting computers and networks into the internet. Essentially, the TCP/IP protocols are the working parts that make the internet function. Understanding what TCP/IP is and how it works requires a look at the TCP/IP Reference Model and the protocols on each of its layers. As shown in Figure 1-5, the TCP/IP model has four layers (top to bottom):

- Application Layer: This layer of the TCP/IP model is where a host computer or a server interacts with a network to create or interpret the initial or final component of a communication. The name Application refers to protocols and services, such as email, FTP, and Telnet clients, which interact directly with other protocols. The functions that operate at the Application Layer include the identification of senders and receivers, access to remote hosts, and the request and retrieval of content from other hosts and servers.
- Transport Layer: Much like a shipping and receiving department, the Transport Layer of the TCP/IP model packages outgoing messages and unpackages incoming ones, determines how they are to be transported, and monitors their transit. At this layer, functions such as flow control, error control, and segmenting or desegmenting are performed.
- Internet Layer: This layer of the TCP/IP model, which is also known as the Network Layer, performs the essential tasks involved in moving messages across networks from the sending host or server to the receiving host or server. In performing these tasks, the Internet Layer applies addressing, forwarding, and routing to ensure messages arrive at their destinations, regardless of the path they may actually take.
- Link Layer: Also referred to as the Network Interface or Network Access Layer, the Link Layer provides the appropriate packaging of a message before it is placed on the network’s physical medium. Incoming messages are interpreted and formatted before being passed on to the Internet Layer. On a local network, this layer also provides physical-level addressing and control.

FIGURE 1-5 Examples of the TCP/IP protocols that operate at each layer of the TCP/IP model.
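One way to picture how the four layers cooperate is encapsulation: on the way down, each lower layer adds its own header in front of what it receives from the layer above, and on the way up each layer removes its header again. The following is a minimal sketch of that idea only. The functions `send` and `receive` and the placeholder header strings are assumptions for illustration; real headers are binary structures defined by each protocol.

```python
def send(payload: str) -> list[str]:
    """Wrap application data with one placeholder header per lower layer.

    The result lists the outermost (Link) header first and the payload last.
    """
    frame = [payload]
    for layer in ("Transport", "Internet", "Link"):
        frame.insert(0, f"{layer} header")
    return frame


def receive(frame: list[str]) -> str:
    """Strip the headers in the opposite order and return the payload."""
    for layer in ("Link", "Internet", "Transport"):
        header = frame[0]
        if header != f"{layer} header":
            raise ValueError(f"expected {layer} header, got {header!r}")
        frame = frame[1:]
    return frame[0]


# Hypothetical application data handed down from the Application Layer.
frame = send("GET /index.html")
print(frame)
print(receive(frame))
```

The receiving side never needs to know what the layers above it will do with the payload, which is what lets each layer's protocols evolve independently.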

TCP/IP Protocols

Reference models, like the OSI Reference Model and the TCP/IP Reference Model, define the policies, actions, and formats as standards for the programs and services that carry out their specifications. For the most part, the standards and specifications are implemented through protocols. A protocol is basically a set of requirements, guidelines, or rules that prescribe how an event, action, or process is to be performed. In computer networking, a protocol standardizes a specific process to ensure that every program wishing to invoke a specific action will do it in exactly the same way.
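To make the idea of a protocol concrete, consider a toy example in which both sides agree on a fixed message format. The 4-byte header below (message type, flags, payload length) is entirely hypothetical and does not correspond to any real TCP/IP protocol; it is a sketch of how an agreed format lets any sender and any receiver interoperate.

```python
import struct

# Hypothetical header: type (1 byte), flags (1 byte), payload length (2 bytes),
# packed in network (big-endian) byte order.
HEADER = struct.Struct("!BBH")


def encode(msg_type: int, flags: int, payload: bytes) -> bytes:
    """Build a message exactly as the toy protocol prescribes."""
    return HEADER.pack(msg_type, flags, len(payload)) + payload


def decode(data: bytes) -> tuple[int, int, bytes]:
    """Parse a message; reject it if the payload is shorter than declared."""
    msg_type, flags, length = HEADER.unpack_from(data)
    payload = data[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated message")
    return msg_type, flags, payload


wire = encode(1, 0, b"hello")
print(wire)
print(decode(wire))
```

Any program that follows the same rules can read messages produced by any other, regardless of who wrote it or what hardware it runs on.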

The TCP/IP protocol suite includes one or more protocols for virtually every standardized action or function required to format, transmit, and interpret any message sent over local or wide area networks, including the internet. The following lists the primary TCP/IP protocols used on the internet:

- TCP: TCP operates at the Transport Layer of the TCP/IP model and is responsible for the creation and management of the transmission session and for the segmentation or desegmentation of messages, which are passed either to the Internet Layer to be forwarded or to the Application Layer for delivery to a host and its user. TCP is a connection-oriented protocol that provides reliable delivery of message segments by acknowledging received segments and retransmitting lost ones.
- IP: This protocol defines the structure, assignment, and interpretation of network addressing, which is key to delivering messages to the intended destination. Two versions of IP are currently in use: IP version 4 (IPv4) and IP version 6 (IPv6); these are discussed later in this chapter.
- Hypertext Transfer Protocol (HTTP): This protocol transfers webpage content from one computer on a network to another computer on the same network or a remote network. It does not define the content of the webpage. HTTP and its secure version, HTTPS, implement a client/server relationship (see the “Client/Server Computing” section later in this chapter) between a requesting host and a providing server.
- User Datagram Protocol (UDP): Unlike TCP, UDP doesn’t guarantee the delivery of its transmitted messages and is used primarily for message streams that are loss-tolerant, meaning that the loss of a few message segments is acceptable. A common comparison casts TCP as a controlled and verified transmission and UDP as the strong and steady stream of a fire hose.
- Email protocols: TCP/IP includes two commonly used protocols for electronic mail: the Post Office Protocol (POP3) and the Simple Mail Transfer Protocol (SMTP). POP3 receives incoming email, and SMTP sends and distributes email.
- File Transfer Protocol (FTP): Although most of its functions are being embedded into web browsers, FTP facilitates the transfer of files, especially large files, between computers.
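The difference between TCP's reliable delivery and UDP's best-effort delivery can be sketched with a small simulation. This is a greatly simplified model, not how either protocol is implemented: the lossy channel, the loss rate, the retry limit, and the function names are all assumptions for illustration, and real TCP also handles ordering, flow control, and lost acknowledgments.

```python
import random


def survives(rng: random.Random, loss_rate: float) -> bool:
    """Return True if a segment makes it across the simulated network."""
    return rng.random() >= loss_rate


def send_unreliable(segments, rng, loss_rate):
    """UDP-like: send each segment once; lost segments are simply gone."""
    return [s for s in segments if survives(rng, loss_rate)]


def send_reliable(segments, rng, loss_rate, max_tries=50):
    """TCP-like: resend each segment until it gets through or we give up."""
    delivered = []
    for s in segments:
        for _ in range(max_tries):
            if survives(rng, loss_rate):
                delivered.append(s)
                break
        else:
            raise TimeoutError(f"gave up on segment {s!r}")
    return delivered


segments = list(range(20))
rng = random.Random(7)  # fixed seed so the run is repeatable

best_effort = send_unreliable(segments, rng, loss_rate=0.3)
reliable = send_reliable(segments, rng, loss_rate=0.3)
print(len(best_effort), "of", len(segments), "segments arrived best-effort")
print(len(reliable), "of", len(segments), "segments arrived reliably")
```

The reliable sender pays for its guarantee with extra transmissions and waiting, which is why loss-tolerant streams often prefer the UDP-style approach.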

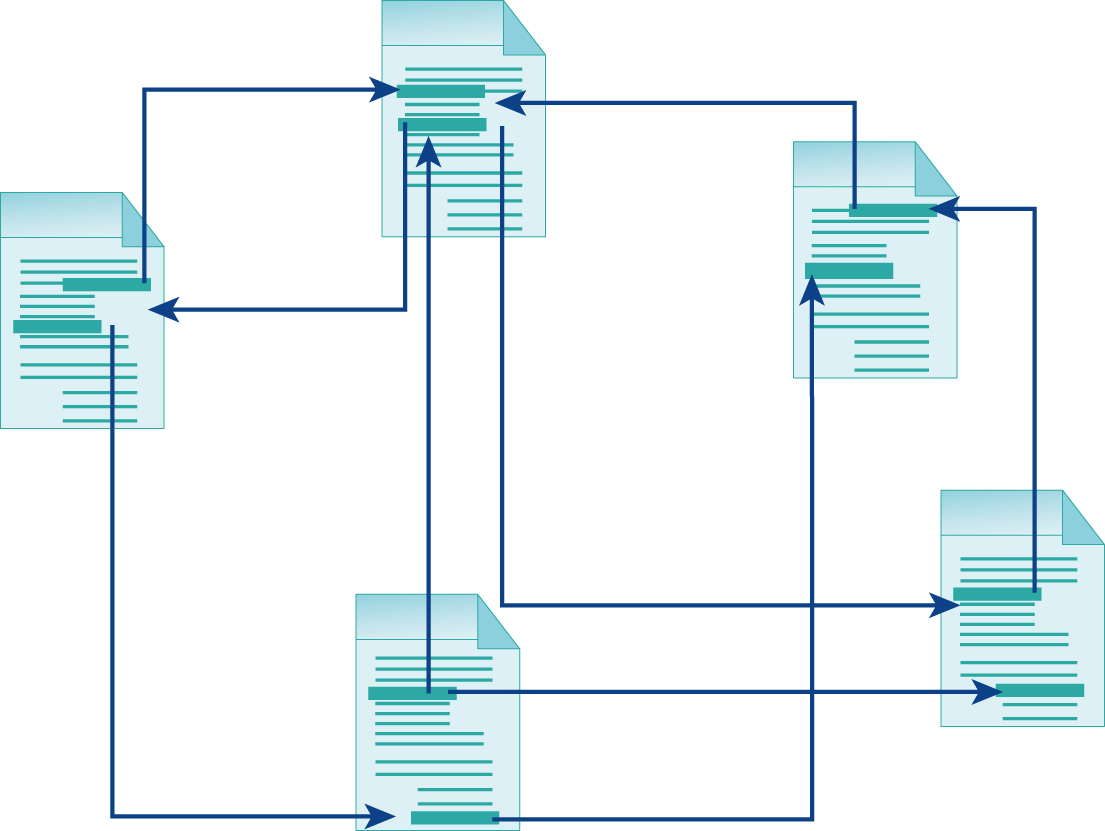

Hypertext

Hypertext provides internet users with the ability to move from one document to another on a network by clicking a link. It’s probably hard now to imagine the internet without hypertext links. Hypertext has become an assumed and required element of the internet fabric. As illustrated in Figure 1-6, a link embedded in one document allows the reader to jump to related content in another document. However, unlike the majority of internet technologies, hypertext was developed over a period of about 60 years. The developers who contributed to what we know as hypertext today are covered in the following sections.

FIGURE 1-6 Hypertext links documents together, allowing users to jump from one document to another.
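In modern web pages, a hypertext link is usually written as an HTML anchor tag whose `href` attribute names the target document. The sketch below uses Python's standard `html.parser` module to find such links and follow the first one. The two documents, their file names, and the helper `follow_first_link` are hypothetical and exist only to illustrate the jump from one document to another.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href target of every <a> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# Two hypothetical documents; doc_a.html contains a link to doc_b.html.
documents = {
    "doc_a.html": '<p>See the <a href="doc_b.html">related notes</a>.</p>',
    "doc_b.html": "<p>Related notes live here.</p>",
}


def follow_first_link(name: str) -> str:
    """Return the content of the document that the first link points to."""
    collector = LinkCollector()
    collector.feed(documents[name])
    return documents[collector.links[0]]


print(follow_first_link("doc_a.html"))
```

A browser does essentially the same thing when a reader clicks a link, except that it fetches the target over the network instead of from a local dictionary.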

Vannevar Bush

The capability to move from one source to another was first conceptualized by Vannevar Bush, an American engineer who conceived of a system called “Memex.” As Bush explained in his 1945 article “As We May Think,” his “memory enhancement” system used a microfilm library

. . . in which an individual stores all his books, records, and communications, and which is mechanized so that it may be consulted with exceeding speed and flexibility. It is an enlarged intimate supplement to his memory.

The Memex was to simulate the way that a human brain links data through associations that make information available. The Memex was envisioned as having the shape of a desk, which it would replace.

Doug Engelbart

Little development of linked media followed Bush’s article until the 1960s. In 1968, Doug Engelbart demonstrated his “oN-Line System” or NLS in what was later called the “Mother of All Demos.” Though NLS was not developed to showcase hypermedia, it did demonstrate its use. Even though Engelbart was credited with being the “inventor” of hypertext, there was still a ways to go. Engelbart is one of the more influential inventors of computer technology devices. His “inventions” include bitmapped displays, the mouse, and the graphical user interface (GUI), among many others.

Ted Nelson

Ted Nelson coined the term “hypertext” in 1965. It was a part of his design for “Xanadu,” a system intended to support a repository of anything and everything ever written, interconnected with other such repositories through hypertext links. This system was never completely implemented.

Brown University

Brown University has been very active in the development of hypertext systems and their related applications. Under the direction of Andries van Dam, two important hypertext developments were produced in the late 1960s. The first was the Hypertext Editing System (HES) in 1967, the first hypertext system that actually worked. The second was the File Retrieval and Editing System (FRESS) in 1968, the follow-up project to HES.

Both of these systems proved that hypertext could link one document to another. However, continued development was needed because the user interfaces were entirely text-based and configuring the links was a separate process.

Through its evolutionary iterations, hypertext has continued to be improved. However, what really energized its development in the years to come was the World Wide Web and browsers. These developments owe their existence to hypertext capabilities, but hypertext in turn owes much of its development, which may not be complete yet, to the web and its explosive growth.