Cryptography and the RSA Algorithm

Cryptography is the practice of applying encryption techniques to ensure secure communication in the presence of third parties (whom we will consider adversaries). Generally, cryptography is about constructing and analyzing protocols that block adversaries, protect data confidentiality and data integrity, and provide authentication for the sender and the message. Modern cryptography is at the intersection of mathematics, computer science, and electrical engineering. Common applications of cryptography include the encryption of information on ATM cards, the encryption of computer passwords, and the process of encryption and the employment of keys for transmission and the conduct of electronic commerce.

Prior to the modern age, cryptography was generally synonymous only with encryption, which is the conversion of information from a readable state to untranslatable content that is unreadable by the unauthorized. The originator of an encrypted message shares the decoding technique for the transmission with the intended recipients. Since World War I and the advent of the computer, the methods used to carry out cryptology have become increasingly complex, and the application has become widespread and necessary.

Modern cryptography is heavily based on mathematical theory and computer science practice; cryptographic algorithms are designed around assumptions of computational hardness, making them difficult to break. It is theoretically possible to break such a system, but in practice, it is infeasible. These schemes are therefore termed computationally secure. Improvements in integer factorization algorithms and faster computing technology require these solutions to be continually adapted.

The history of cryptography is a long and storied one that includes contributions by luminaries such as Pierre de Fermat, Leonhard Euler, Johann Carl Friedrich Gauss, Alan Turing, and Claude Shannon.

Cryptography has two main goals: to secure communication and to protect files.

The employment of cryptography is a very important aspect of providing for the security of information, both as it is transported as well as when it is stored in encrypted form. Cryptography is centered around the process of creating algorithms to encrypt messages and stored data in such a way that they are hard to decipher (crack), based on estimates of the time it would take for an adversary to decrypt (crack) that material. However, as the computational power of computers has increased, allowing for both brute-force trial-and-error techniques as well as the application of sophisticated encryption-breaking algorithms, a series of adaptations to popular cryptography algorithms have been required for them to remain secure. Among these types of encryption are symmetric encryption, asymmetric encryption, and hashing.

Symmetric-key encryption (also called private-key encryption) is the process of scrambling information with a single key shared by both the sender and the receiver. The encrypted information is extremely difficult for someone to read without decrypting the data using the original key or a key made by the same algorithm with which it was originally encrypted. If the shared encryption key is disclosed, all communications between the sender and receiver are compromised.

Symmetric-key encryption assumes that all parties already share a secret key. For this type of cryptography to work correctly, the encryption algorithm must be publicly known, and the use of a proprietary cipher is discouraged.

Use cases can either be single use or multiuse. Single-use keys are used only once to encrypt a single message. A new key is generated for every e-mail and there is no need for nonce (it is set to zero). Stream ciphers are an example of a single-use key. A multiuse key is used to encrypt many messages and requires either a unique or random nonce.

Block ciphers are the cryptography workhorse. These are special ciphers that are based on a deterministic algorithm and operate on fixed-length groups of bits/blocks with an unvarying transformation that is specified in the symmetric key. These ciphers are important elementary components in the design of many cryptographic protocols and are widely used to implement the encryption of bulk data.

Some symmetric block cipher algorithms are Data Encryption Standard (DES), 3-DES, Advanced Encryption Standard (AES), Electronic Codebook (ECB), and Cipher Block Chaining (CBC). The simplest of the encryption modes is the Electronic Codebook (ECB) mode. The message is divided into blocks, and each block is encrypted separately. In CBC mode, each block of plaintext is computed with the previous ciphertext block before being encrypted. This way, each ciphertext block depends on all plaintext blocks processed up to that point. To make each message unique, an initialization vector must be used in the first block.

Data Encryption Standard and Advanced Encryption Standard

DES is an old version of encryption. There is an inherent weakness in DES that allows the encryption to be broken using certain methods of attack. DES uses a much smaller 56-bit encryption that has a maximum of 256 combinations. This is easy for a computer to break with a brute-force attack.

AES can use a 128-, 192-, or 256-bit encryption key with 2^128, 2^129, or 2^256 combinations. This, coupled with the fact that the system has no other weaknesses, means that AES is considered relatively unbreakable (Figure 8.1).

In Figure 8.1, the symmetric key is a string of randomized data that is used in the process of encrypting the data. This process is normally used to secure computer storage drives, with a good example being the encryption used with MAC OS X’s FireVault drives. However, this symmetric approach is not the most secure method, since the key used for encryption and decryption needs to be protected. Otherwise, the encrypted data will be compromised.

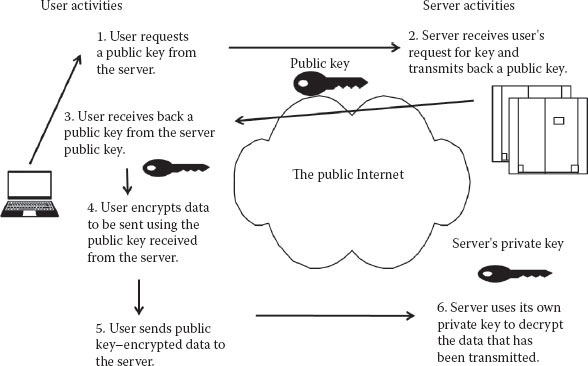

Asymmetric encryption is another more complicated form of encryption. The concept of public-key schemes (implemented in the first practical asymmetric scheme) was for key distribution only. This process was published in 1977 by Diffie and Hellman. This approach scrambles data during transmission by utilizing a public key during the encryption of the data, while a separate private key is utilized during the decryption process. The principle distinction between asymmetric and symmetric encryption is that the former uses one key while the latter uses a public key to encrypt and a different private key to decrypt (a two-key process). A very popular form of asymmetric encryption is the Rivest–Shamir–Adleman (RSA) algorithm, named for its three inventors, which is illustrated in Figure 8.2.

Figure 8.1 The symmetric-key encryption process.

RSA encryption is designed to be very difficult to crack. In fact, Martin Gardner stated that he would give $100 dollars to the first person to break the cipher text based on a 129-digit product of primes that was based on RSA cryptography. However, as computing power grew, so did peoples’ knowledge of how to break RSA encryption. One of the most famous ways to break RSA encryption is by utilizing a man-in-the-middle attack. What this does is to send your public key to an untrusted computer when you intend it to be sent to a trusted computer. The untrusted computer can then use that public key to decrypt the data that was supposed to be decrypted by the other intended, trusted machine within the network.

Figure 8.2 The RSA asymmetric encryption process.

The RSA algorithm relies on the difficulty of factoring large composite numbers. The largest factored RSA number thus far is RSA-200, which is 663 bits in length. The RSA algorithm generally uses integers of 1024 bits.

RSA employs a radically different public-key system in which two keys are used: a public and a private key.

Anyone who knows the public key can encrypt messages or verify signatures, but they cannot decrypt messages or create signatures.

It works by the clever use of number theory where problems that are easy to compute in one direction, such as encrypting material, are difficult to compute in a reverse direction, such as unencrypting that information to reverse the encryption process. Note that public-key schemes are neither more secure than private-key schemes (security depends on the key size for both), nor do they replace private-key schemes (they are too slow to do so). Rather, they complement them.

This algorithm was developed to address two key issues:

1. Distributing the two keys: How to have secure communications in general without having to trust a separate key distribution coordinator with your key

2. Using digital signatures: How to verify a message comes intact from the claimed sender

With RSA, each party—the sender and the receiver—has a pair of keys. If you know only the public key, you can encrypt but not decrypt the message. Those who encrypt messages or verify signatures cannot decrypt messages or create signatures.

The second key in the pair, a private key, is known only to the recipient and is used to decrypt messages and sign (create) signatures.

For the process to work, you choose two large prime numbers, say p and q.

An RSA number n is a number such that n = p × q where p and q are distinct, large prime integers (by large, we typically mean at least 512–2048 bits), and n is the product of these two large prime numbers.

Then compute n = p × q and φ = (p − 1)(q − 1).

Then choose another prime number e, which is relatively prime to φ.

Then compute d from φ and e such that e*d mod φ = 1.

The public key depends upon n and e. There must be no numbers that divide neatly into e and into (p − 1)(q − 1), except for 1. That makes e = a prime number.

The private key depends on p, q, and φ (e), and it is used to compute the private key d.

Obviously a simple process!

Hashing is another form of cryptography that is used to encrypt passwords. Hashing takes your password and transforms it into a string of data. Two main properties are associated with hashed data. The first is that the same data will always produce the same hash, and the second is that it is impossible to reverse the hashed data back to the original data without a hash key. As one can see, encryption and cryptography are very important when dealing with information security (IS) because they help ensure that the right data is being sent to the right person in a safe way.

Encryption and code has been in existence for hundreds of years. The hiding of information in plain sight for the desired audience is the most purposeful usage of encryption. How can information be broadcast to many individuals, but only the correct individuals understand what to do with the information? These concepts cover the overall use case for encryption and cryptography. Radio technology was the first example of the need for some sort of encryption for sensitive information transmitted. In World War I, there was no way to digitally encrypt communications, so all information that was broadcast for military logistics could be intercepted by the enemy and used against the transmitting party. To combat this challenge, encryption had to be implemented.

The famous Alan Turing was the inventor of the computing machine and most importantly for cryptography, many of its algorithms. This invention sparked an interest in automated or digital signal encryption, which much of the world relies on today. Alan Turing wrote a paper in 1936, “On Computable Numbers with an Application,” that is considered the first description of a computing machine with loadable programs and loadable data. The first complete computer he built following that paper was the ACE computer, which he designed and built in 1948 at Manchester University in England. He previously built a special-purpose computing machine at Bletchley Park to decode the German Enigma code encryption machines. In 1950, he wrote the paper “Computing Machinery and Intelligence,” which defined the Turing Test for whether an artificial process could be determined to be intelligent.

The development of public keys relies on a few very basic equations. The premise is that a “public key” can be provided in plain sight, but the private key of an individual is held private. The most common algorithm to use this concept is the previously mentioned RSA algorithm, which employs public and private keys. Securing the running of code relies on trust between two entities. One example of this is the digital certificates that are evaluated before lower-level code, such as drivers, are installed on a machine. Such “certificates” have been encoded with a public/private-key structure.

Modern Approaches for Breaking Encryption

The breaking of modern encryption began in the 1990s once regular usage occurred by higher profile companies and government entities. One method to break RSA-129 encryption was the “factorization” of messages sent using this standard. It took 8 months using this method with over 600 volunteers from more than 20 countries to decode a simple string—“The Magic Words Are Squeamish Ossifrage.” This ability, from a computational perspective, has been drastically improved as Moore’s law moves forward. The overall point is that once an encryption algorithm is released to the public, individuals immediately begin the process of deconstructing these algorithms. Encryption is a temporary possibility; it can ultimately always be broken given enough interest, time, and resources in the hands of capable individuals. Another modern encryption type is the previously mentioned AES. This was invented in 1997 and is still regularly used today. Most important in this method is to understand the “block size,” which is what speeds or slows the process. The lower the “variable key size,” the faster a computer will be able to decrypt—or, from a security perspective, break—the encryption. The larger the block size, the more difficult it is for the encryption to be broken.

The most recent point of interest and advancement in the data-processing world is the use of quantum computers. These machines use concepts drawn from physics to perform computing operations. These operations can also be used to perform the encryption and decryption of data. Quantum computing provides room beyond the binary structure we are used to (in which 1 means on and 0 means off). Using quantum computing, there are any number of substates available to these values, meaning it is capable of doing far more parallel operations.

More Cryptography, Private-Key, Public-Key Encryption, RSA Algorithm Details

Offset codebook (OCB) mode was designed to provide both authentication and privacy. It is essentially a scheme for integrating a method authentication code (MAC) into the operation of a block cipher. In this way, OCB mode avoids the need to use two systems: a MAC for authentication and encryption for privacy. This results in lower computational cost compared with using separate encryption and authentication functions.

There are three versions of OCB: OCB1, OCB2, and OCB3. OCB1 was published in 2001. OCB2 improves on OCB1 by allowing associated data to be included with the message—that is, data that is not encrypted but should be authenticated—and a new method for generating a sequence of offsets. OCB2 was first published in 2003, originally named authenticated encryption mode or advanced encryption mode (AEM). OCB3, published in 2011, changed again the way offsets are computed and introduced minor performance improvements.

OCB mode is listed as an optional method in the IEEE 802.11 wireless security standard as an alternative to counter with CBC-MAC (CCM). OCB2 is standardized in ISO/IEC 19772:2009 and OCB3 in RFC 7253.

Initialization vectors: This vector is a fixed-size input to a cryptographic primitive that is typically required to be random or pseudorandom. The randomization is critical for encryption schemes to achieve semantic security (a property wherein repeated usage of the scheme under the same key does not allow an attacker to infer relationships between segments of the encrypted messages).

Data integrity: A MAC is a short piece of information used to authenticate a message and to provide integrity and authenticity assurances on that message. Integrity assurances detect accidental and intentional message changes, while authenticity assurances affirm the message’s origins. A MAC algorithm accepts a secret key and an arbitrary-length message to be authenticated and then outputs a MAC (which is also sometimes known as a tag). This code protects the message’s integrity and authenticity by allowing verifiers (who also possess the secret key) to detect any changes to the message content.

Keyed-hash MAC (HMAC) is a specific construction for calculating a MAC involving a cryptographic hash function in combination with a secret cryptographic key. Like a standard MAC, it can also be used to verify data integrity and message authentication simultaneously. The cryptographic strength of the HMAC depends on the cryptographic strength of the underlying hash function, the size of its hash output, and the size and quality of the key.

The Merkle–Damgard (MD) construction or hash function is a method of building collision-resistant cryptographic hash functions from collision-resistant one-way compression functions. This function applies an MD-compliant padding function to create an output whose size is a multiple of a fixed number (usually 512 or 1024); this is done because functions cannot handle inputs of arbitrary size. The hash function then breaks the result into blocks of fixed size and processes them one at a time with the compression function, each time combining a block of the input with the output of the previous round.

Public-key cryptography, also known as asymmetric cryptography, is a class of cryptographic protocols based on algorithms that require two separate keys, one of which is secret (or private) and one of which is public. Although different, the two parts of this key pair are mathematically linked. The public key is used to encrypt plaintext or to verify a digital signature, whereas the private key is used for the opposite operation, in these examples to decrypt cipher text or to create a digital signature. The term asymmetric stems from the use of different keys to perform these opposite functions, each the inverse of the other—as contrasted with conventional (symmetric) cryptography, which relies on the same key to perform both.

Public-key algorithms are based on mathematical problems that currently admit no efficient solution and are inherent in certain integer factorization, discrete logarithm, and elliptic curve relationships. It is computationally easy for a user to generate a public- and private-key pair and to use it for encryption and decryption. The strength lies in the “impossibility” (computational impracticality) of a properly generated private key being determined from its corresponding public key. Thus, the public key may be published without compromising security. Security depends only on keeping the private key private. Public-key algorithms, unlike symmetric-key algorithms, do not require a secure channel for the initial exchange of one or more secret keys between the parties.

Because of the computational complexity of asymmetrical encryption, it is typically used only to transfer a symmetrical encryption key by which the message (and usually the entire conversation) is encrypted. The symmetrical encryption/decryption is based on simpler algorithms and is much faster.

Message authentication involves hashing the message to produce a digest and encrypting the digest with the private key to produce a digital signature. Thereafter, anyone can verify this signature by (1) computing the hash of the message, (2) decrypting the signature with the signer’s public key, and (3) comparing the computed digest with the decrypted digest. Equality between the digests confirms that the message is unmodified since it was signed and that the signer, and no one else, intentionally performed the signature operation—assuming the signer’s private key has remained secret to the signer.

Public-key algorithms are fundamental security ingredients in cryptosystems, applications, and protocols. They underpin various Internet standards, such as Transport Layer Security (TLS), Secure/Multipurpose Internet Mail Extensions (S/MIME), Pretty Good Privacy (PGP), and GNU Privacy Guard (GPG). Some public-key algorithms provide key distribution and secrecy, some provide digital signatures, and some provide both (e.g., RSA).

Public-key cryptography finds application in, among others, the IT security discipline of IS. IS is concerned with all aspects of protecting electronic information assets against security threats. Public-key cryptography is used as a method of ensuring the confidentiality, authenticity, and non-reputability of electronic communications and data storage.

A digital signature is a mathematical scheme for demonstrating the authenticity of a digital message or document. A valid digital signature gives a recipient reason to believe that the message was created by a known sender, that the sender cannot deny having sent the message (authentication and non-repudiation), and that the message was not altered in transit (integrity). Digital signatures are commonly used for software distribution and financial transactions and in other cases where it is important to detect forgery or tampering.

A public-key infrastructure (PKI) is a set of hardware, software, people, policies, and procedures needed to create, manage, distribute, use, store, and revoke digital certificates and manage public-key encryption. The purpose of a PKI is to facilitate the secure electronic transfer of information for a range of network activities such as e-commerce, Internet banking, and confidential e-mail. It is required for activities in which simple passwords are an inadequate authentication method and more rigorous proof is required to confirm the identity of the parties involved in the communication and to validate the information being transferred.

A PKI is an arrangement that binds public keys with respective user identities by means of a certificate authority (CA). The user identity must be unique within each CA domain. The third-party validation authority (VA) can provide this information on behalf of the CA. The binding is established through the registration and issuance process. Depending on the assurance level of the binding, this may be carried out by software at a CA or under human supervision. The PKI role that assures this binding is called the registration authority (RA). The RA is responsible for accepting requests for digital certificates and for authenticating the person or organization making the request.

QUESTIONS

1. This is the cornerstone of modern electronic security technology used to protect valuable information. What is it?

2. What is the difference between cryptography and encryption?

3. This is the workhorse of cryptography. What is it?

4. Computer encryption systems belong in one of two categories: symmetric-key encryption and public-key encryption. Explain each and which one is more secure.

5. When would you use hashing?