Chapter 5

Gear and Technical Tasks

The variety of equipment, tools, and technical tasks that a digital media designer should know and understand is wide and deep, and covering all of it exhaustively is beyond the scope of this printed book. New features, companies, and products constantly emerge and disappear from the market, and surely some elements of this chapter are already outdated. It would take more room than we have here to delve thoroughly into all of the different aspects of choosing, mounting, rigging, maintaining, operating, and fully understanding the technical details of the various gear encountered by a designer. This chapter’s goal is to highlight key equipment and technical tasks that you should know as a digital media designer.

Systems

In the simplest system setup, you need only to focus on the video content and the manner in which it is displayed. Complicated systems can include networks of multiple computers, cameras, switchers, scalers, projectors, and so forth. The control system you use is likely to change from show to show. Designers often find a media server environment that speaks to them and works with their internal logic and regular design needs. However, we recommend that all designers, especially those new to digital media design, become familiar with as many different video, media, and show control systems as possible. The boundaries between video systems, lighting systems, sensor systems, sound systems, and other forms of show control are becoming more blurred, and networking them together is becoming increasingly easy. Getting work in this field sometimes depends on whether you can program or design with a given system, or whether you are able to spec, design, install, and program a system along with creating the content.

Video Signals

Video signals transmit video (and audio) via analog or digital means, transforming the original information into electric signals. Video signals have developed over the years with the invention of specific video standards and intended uses. Signals are used to transmit video from live events and prerecorded video, such as traditional broadcast programming. Video signals have typically been in the standard formats of NTSC, PAL, or SECAM. In our field, we deal primarily in digital signals and, occasionally, analog signals as well.

Analog signals represent information by transmitting a continuous waveform that is similar to the information itself. By varying the amplitude, information is encoded into a waveform. For example, for sound or video signal information in the form of a 1,000 Hertz (Hz) sine wave, the analog signal varies in voltage from positive to negative and back again 1,000 times per second, following a sine-wave pattern. In this way, the signal ends up resembling the actual amplitudes it is recording or playing back. Unlike digital signals, analog signals degrade a little with each generation of duplication. They are also less efficient and cannot carry as much information in the same amount of time as a digital signal.

Digital signals do not resemble the information they transmit. Instead they transmit information via binary. Binary is made up of zeros and ones representing two distinct amplitudes in rapid transitions of voltage. Digital signals use discrete (discontinuous) and instantaneous values represented by a square wave. Digital signals usually do not degrade by being copied, unless there is a problem in the method used for storage, lossy compression is used, or there is significant interference in how they are transmitted.
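To make the contrast between the two signal types concrete, the following minimal sketch samples the 1,000 Hz sine wave described above and turns each sample into a string of ones and zeros. The sample rate, the 8-bit depth, and the function names are assumptions chosen for illustration; they are not taken from any video standard.

```python
import math

FREQUENCY_HZ = 1_000      # the 1,000 Hz tone used in the analog example
SAMPLE_RATE_HZ = 8_000    # assumed sample rate, chosen only for illustration
BIT_DEPTH = 8             # assumed bit depth: 256 discrete levels


def analog_value(t: float) -> float:
    """Continuous 'analog' amplitude in the range -1.0..1.0 at time t (seconds)."""
    return math.sin(2 * math.pi * FREQUENCY_HZ * t)


def quantize(amplitude: float, bits: int = BIT_DEPTH) -> int:
    """Map an amplitude in -1.0..1.0 to the nearest of 2**bits integer levels."""
    top_level = 2 ** bits - 1
    return round((amplitude + 1.0) / 2.0 * top_level)


def digitize_one_cycle() -> list[str]:
    """Sample one full cycle and return each sample as a binary string."""
    samples_per_cycle = SAMPLE_RATE_HZ // FREQUENCY_HZ
    codes = []
    for n in range(samples_per_cycle):
        level = quantize(analog_value(n / SAMPLE_RATE_HZ))
        codes.append(format(level, f"0{BIT_DEPTH}b"))
    return codes


if __name__ == "__main__":
    for code in digitize_one_cycle():
        print(code)
```

Each printed line is one discrete value. Copying those ones and zeros reproduces them exactly, which is why a digital copy does not degrade the way an analog generation does.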

Video signals are typically divided into different color channels to allow for more information for both recording and transmission. Depending on the video format, different methods of color channel separation are used. In the early days of television, broadcast video was only in black and white and relied on a black and white scale based on luma (Y) information. When color was added, video signals continued developing the luma model by adding a color or chroma channel (C) that could be mixed with the existing black and white luminance information and maintain backwards compatibility with older black and white sets. The chroma channel also includes the saturation and hue.
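The separation of luma from chroma can be sketched in a few lines. This example assumes the ITU-R BT.601 weighting commonly used for standard-definition video; the text above does not name a specific standard, and the function names are hypothetical. Treating hue as an angle and saturation as a distance in the chroma plane follows the way a vectorscope displays them.

```python
import math


def rgb_to_ycbcr(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Convert normalized R'G'B' (0.0..1.0) to Y, Cb, Cr (BT.601 weights assumed)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b   # luma: the black and white picture
    cb = (b - y) / 1.772                    # blue-difference chroma, -0.5..0.5
    cr = (r - y) / 1.402                    # red-difference chroma, -0.5..0.5
    return y, cb, cr


def hue_and_saturation(cb: float, cr: float) -> tuple[float, float]:
    """Hue as an angle in degrees and saturation as a magnitude in the Cb/Cr plane."""
    hue = math.degrees(math.atan2(cr, cb)) % 360.0
    saturation = math.hypot(cb, cr)
    return hue, saturation


if __name__ == "__main__":
    for name, rgb in {"gray": (0.5, 0.5, 0.5), "red": (1.0, 0.0, 0.0)}.items():
        y, cb, cr = rgb_to_ycbcr(*rgb)
        print(name, round(y, 3), round(cb, 3), round(cr, 3))
```

A gray pixel produces chroma values of zero, so an older black and white set that reads only the Y channel still shows a correct picture.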

Commonly used in broadcast and live theatre is the family of Serial Digital Interface (SDI) standards developed by the Society of Motion Picture and Television Engineers (SMPTE). SDI has established methods of transmitting uncompressed and unencrypted video between studio-grade cameras and video equipment. Because it does not support encryption, SDI is not intended for the consumer market.

Figure 5.1 Analog and digital video signal wave shapes/forms

Source: Alex Oliszewski

Video Cables

Video cables carry a video signal from point A to point B and come in many different types. There are three primary types of video cables: analog, digital, and fiber optic. Analog cables are still common but are typically associated with older equipment and standards. Each type of cable is associated with a maximum resolution it can transmit, as well as how it manages color space, video encryption, and audio signals. Some types of cables allow for longer runs before any degradation in video quality. Different cable types are designed to transmit:

- Video only

- Video and audio

- Video, audio, control, and power

Because video signals have high frequencies, substandard conductors can degrade them. We recommend that you do not skimp on video cables. Cheaply made cables introduce more noise, or allow more interference to degrade the video signal, than higher-quality cables. For the most part, poorly made cables do not provide good-quality connections. It is best to use high-quality cables with double shielding to ensure the best transmission of the original video signal.

Analog signals are prone to radio frequency and electromagnetic interference, causing lines, snow, or other visible artifacts. Digital cables do not usually suffer these distortions from outside signals. Digital signals are normally all or nothing. That is, if the signal works, the image is crystal clear, and any distortions or video artifacts you see are most likely caused by the content or some setting on the equipment rather than by signal interference from the environment you are in. The downside is that if the signal is fighting through interference and fails, the whole image goes away. There are exceptions, depending on how a system transmits its signals, and so noticeable artifacts can occur. However, these artifacts tend to be deal breakers: even when a signal is making it through, the results are rarely usable. Where you might have been able to live with a little bit of fuzz on an analog cable, with a digital cable you have to solve the interference issues for a quality image to show up.

Tip 5.1 Cable Paths and Runs

It may seem like a waste of time at the beginning of a system install to test all the cables. But it is important to know that all the cables work. It is better to find out that you have a bad cable before you run them all and connect them to the devices. In the end, it is always worth it to take the time, before running cables, to make sure they work.

Not all cables are created equal. Like most things, you don’t typically need the highest-end, most expensive cable on the market and rarely are the best cables the most expensive. But avoid the cheapest cable. Typically, it is a good idea to look for a middle of the road cable in regard to price and focus on those with strong reviews. Things to look for are the thickness of the lining and if it is a shielded cable.

If you have a cable with too little insulation you may be at risk of having the video signal pick up interference from other theatre equipment, especially lighting fixtures. Have you ever seen a phantom-like fuzz or other distortion artifact suddenly appear in the video that wasn’t there before? It is most likely because the shielding in the video cable is not strong enough to keep all of the other departments’ newly installed electrical, lighting, and radio technology from bleeding through to the copper in the cables.

On the other hand, you don’t want to pay for more insulation in a cable than the job requires.

When using Cat 5/6 converters to carry video signals over greater distances, make sure to use shielded Cat 5/6 cables. Some projectors may not display even a garbled signal if you don’t have a shielded cable.

When running cables, avoid crossing a video cable with a power cable or any cable that carries power. Especially avoid power lines that connect to fluorescent lighting fixtures. These may introduce noise in the video signal as their electromagnetic interference disrupts the signals in the video cable. Always maintain separation between power, video, and network cables.

Label each end of each cable before you run them. You don’t want to run seven VGA cables and then realize you forgot to label them. Having labels allows you to identify each cable later on for when you need to swap one out.

If coupling cables together, use gaffer tape or tie line to secure and take the weight off cables at each connection point to a device.

While video cables are not the sexiest element of digital media design, choosing the wrong cable can undermine a project. If you have a bad cable in the system it can mean that the video signal doesn’t transmit, drops a color, or adds an element of video degradation. You can spend precious hours trying to hunt down a bad cable. Save time up front by purchasing a quality cable and testing it.

The Anatomy of a Video Cable with a Single Strand of Wire

There are five main components, made of various types of materials, of a typical single wire video cable. The outside of the cable is known as the outer jacket and protects everything inside of the cable. The shielding helps prevent interference from contacting the conductor and creating noise in the signal. It also works in reverse, helping to limit noise from the conductor from spreading beyond the cable. Depending on the quality of the cable, there can be multiple layers of shielding, such as rubber or foil sheaths. The dielectric protects the video signal and insulates the conductor. The conductor is the wire or wires that carry the video signal. The connector is the interface that allows you to plug a cable into the components.

Figure 5.2 Anatomy of a single strand of wire-based video cable

Source: Alex Oliszewski

Coax

There are a number of different cable connection types that all rely on the underlying analog coaxial (coax) cables. Coax cables are used throughout the world of audiovisual technology, most commonly associated with cable TV and home-based Internet connections. Coax is on a significant decline as hybrid digital cable types, such as HDMI, are taking over the market. In professional video production environments coax cables, such as those used with BNC connectors, still reign supreme. In the consumer market, today’s newest TVs still tend to include coaxial input types, such as RCA and Digital-RF antenna connections.

Different coax cables have different ohm ratings. The choice is normally between 75 ohm and 50 ohm types. 75 ohm is the most common and what you will likely find installed in your home. 50 ohm is used for extremely long runs of cable or for particularly high-resolution video signals. As a rule of thumb, when working at distances above 100′ it is recommended to use 50 ohm coax.

BNC

Standing for Bayonet Neill-Concelman, BNC is commonly referred to as a cable type, but is actually more properly understood as a connection type for coaxial cables. There are adapters available that allow conversion between RCA, BNC, and other coax-based connectors. In video applications, BNC connectors are most commonly made to match either 50 ohm or 75 ohm coax cables.

BNCs are a workhorse of the professional video world because they are shielded and tough. The 50 ohm types can be used over long runs of 200′. Video transfer interfaces, such as SDI and HD-SDI video formats, rely on them. When working with high-grade video equipment that uses coax cables with BNC connectors, such as SDI video interfaces, confirm with the equipment manufacturers if 75 or 50 ohm cables are needed.

Figure 5.4 Male and female BNC connectors on a coax type cable

Source: Alex Oliszewski

RCA

RCA cables are used for transferring analog composite video and stereo audio signals. The most common cables feature yellow (video), and white and red (stereo audio) connectors. The color coding of RCA cables is for the ease of installation and represents the only physical difference between the cables. A white cable can send a video signal just as well as a red or yellow one. RCA gained popularity first as an audio cable format for record players, which earned them the nickname “phono,” short for phonograph connections. This video cable typically carries standard 480i NTSC or 576i PAL resolution signals. In the theatre when working near lighting equipment and cables, unless you are using a well-shielded RCA cable, expect issues when pushing 50′–100′ runs.

RCA ports on displays and computers are being phased out in newer equipment. We still run across them, though, and find that it is good to have a few around when you want to use an older live video camera, VHS (Video Home System) player, DVD player, or video mixer.

There are also some video components that can serialize the transmission of color data over multiple RCA connections. This type of connection is often referred to as a component video connection. Component video cables split the video signal into three or more separate channels and allow for a larger overall color space, thus providing better overall video quality at larger resolutions than single RCA cables.

S-Video or Y/C

Standing for separate video and using a four-pin connector, S-video cables are also referred to as Y/C cables. They are a legacy format and represent one of the historical first steps in upgrading image quality from RCA-type video cables. While they allow a higher quality of video than typical RCA composite video signals, the noticeable increase in image quality is modest. They can be used to send 480i and 576i resolution video signals.

S-video cables are uncommon now. If you find yourself working with older video equipment you may still run across a need for this cable type. In our experience working in the theatre, they should not be considered for lengths over 30′.

Figure 5.6

Male and female S-video connectors

Source: Alex Oliszewski

VGA

Figure 5.7

Chart of VGA names and corresponding resolutions

Source: Alex Oliszewski

Though other standards are rising, the trusty VGA connection and its associated cable are still ubiquitous in conference rooms and lecture halls. However, it is definitely on its way out the door. This standard uses an analog-based signal and so you may find that it sometimes fails at providing pixel-perfect transmissions of content and causes other losses in quality to the image data. These can lead to color shifts and problems when trying to map or blend projectors to pixel-perfect precision. VGA is able to support a wide range of resolutions and frame rates, while allowing for relatively long runs and cable lengths. If you try to use a VGA connection of over 100′ you may find the need to reduce the resolution of the signal in order to maintain image quality, unless you use some sort of signal booster. If budget allows, avoid using VGA.

DVI

DVI (Digital Visual Interface) was the first major standard in consumer-grade digital video transmission. Some versions also support analog signals, for the older monitor types that were still in use when DVI was first introduced. DVI comes in several varieties, which are used for different purposes and needs. DVI Single Link typically supports a maximum resolution of 1920x1200 and Dual Link 2560x1600, but this ultimately depends on the equipment you are using it with. There are three types of DVI connectors: DVI-A (analog), DVI-D (digital), and DVI-I (integrated digital and analog).

HDMI and DisplayPort have pretty much replaced DVI at this point. DVI has a limited cable run of less than 50′ without a signal booster in line. We have found that the pins on these cables are susceptible to damage, as are the connectors. One beauty of DVI is that the connectors often include screw heads to connect to ports. As much as they hurt your fingers screwing them in, once they are connected they usually stay that way.

Figure 5.9

Chart of DVI names and corresponding resolutions

Source: Alex Oliszewski

HDMI

HDMI (High-Definition Multimedia Interface) represents a significant evolution in the utility of video cables. Unlike its ancestors, HDMI cables accommodate audio, 5-volt power transfer, and an ever-growing number of ancillary signal types commonly associated with video.

Like DVI, HDMI also comes in multiple versions. While the cables themselves have so far been backwards-compatible, you should ensure that the cables you have match the version of equipment you are using.

50′ is considered the longest length that HDMI is reliable. Use signal boosters or HDMI to Cat 6 converters for longer distances. Long cables are especially vulnerable to damage, even with gentle handling, and we recommend you have at least two backup cables ready to go at any given time when working with long runs.

Similar to USB, there is more than one type of connector used by HDMI cables. Small devices can utilize Mini or Micro HDMI connections. These are often found on cameras, such as the currently popular GoPro. The types pictured in Figure 5.10 represent the most common. We anticipate that some version of HDMI will continue as a video standard into the near future.

Figure 5.11

Male and female HDMI connectors and ports

Source: Alex Oliszewski

DisplayPort

DisplayPort is a companion cable to HDMI that is intended specifically for connecting computers and monitors. With an adapter, you are able to convert a cable between HDMI and DisplayPort. Not all the functionality of an HDMI cable is replicated when converting between port types, as audio signals are typically handled differently by the equipment that relies on them. Like HDMI, it also has a smaller version, called Mini-DisplayPort.

SDI

SDI cables rely on BNC-type connectors and coaxial cable types. Because of their frequent use with SDI video interfaces, BNC-type coaxial cables are sometimes also referred to as SDI cables.

As implied by its name, various groupings of SDI cables are used depending on the specific standard and features demanded by the equipment in use. Some devices with limited space have a special connector that breaks out into multiple BNC cables to carry the SDI signal.

There are eight types of SDI: SD, ED, and HD-SDI as well as Dual Link, 3G, 6G, 12G, and 24G-SDI. The three primary types of SDI that we have encountered working in theatre settings are SD-SDI, HD-SDI, and Dual Link-SDI. SD-SDI supports 480i, HD-SDI supports 720p and 1080i, and Dual Link HD-SDI supports 1080p60.

HD-SDI is prized not only for its quality but also for the range of additional data streams that can be incorporated with it. These include, but are not limited to, multichannel audio, the use of different color spaces, and timecode data.

The maximum range for an SDI-type signal cable depends on the specifications provided by an individual technology variant or manufacturer, but cable lengths of up to 250′ are normally considered reliable. Though expensive, we recommend the use of a fiber optic video interface to extend SDI over distances longer than 200′.

Figure 5.12

Male and female DisplayPort connectors

Source: Alex Oliszewski

Fiber Optic

Fiber optic cables are prized at the highest ends of the profession, both in live production and broadcast environments. When Cat 6–type video converters are proving unreliable in the theatre, or the environment has known interference issues, use fiber optic cables and interfaces. One of the standout features of fiber optic systems is that they are not susceptible to the interference suffered by metal cables. Even low-end fiber optic converters often claim ranges measured in miles and should transmit a strong signal even in a demanding theatrical environment. Strands of fiber optic cables are commonly sold in lengths exceeding 1,000′.

Unless your equipment includes native fiber optic connections, the signal needs to be converted to the types of connectors on the media servers and digital displays. There are SDI, HDMI, DVI, and other types of video interface conversion systems available. Depending on the type of video signal interface being converted, you may need to use a specific type of fiber optic cable that corresponds to the kind of conversion interface being used. Like SDI, fiber optic video cables are sometimes used in groups, allowing for different streams of data to be spread across different cables. The most common fiber optic cable you are likely to come across, which is not normally relevant to digital media design, is the TOSLINK-style fiber optic audio cable popular in high-end sound systems.

Figure 5.14

Male and female fiber optic/TOSLINK

Source: Alex Oliszewski

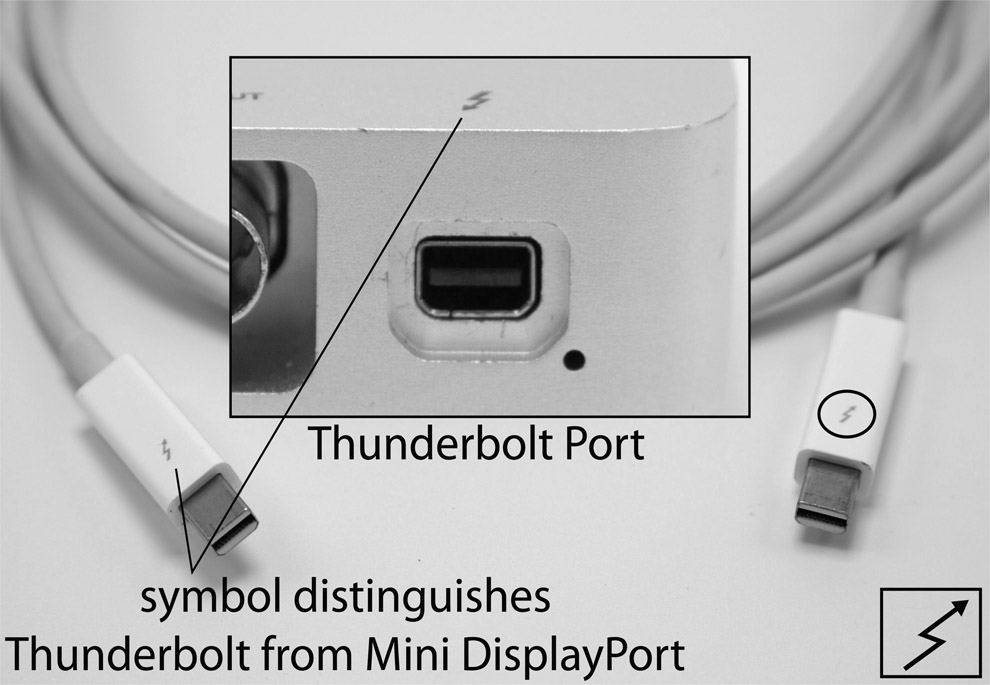

Thunderbolt

Thunderbolt (versions 1–3) is a video interface cable type developed by Intel in collaboration with Apple and closely associated with Apple’s line of computers. Like other types of multi-data video cables, Thunderbolt can transmit video signals along with other types of data. This cable is normally used as a high-speed data cable that allows port replication (allowing for the continued use of peripherals that rely on a different plug type) on docking stations or as a video display output.

Thunderbolt is prized because it provides access to the PCI Express bus on the computer’s motherboard, which allows for high-speed data transmission rates. If you use an Apple laptop with external hard drives, video capture cards, or other peripherals, this is typically the fastest connection type available. Thunderbolt 2 and 3 are fast enough to interface with devices that send and receive high-quality video signals, such as 4K and SDI, and provide access to video signals with low compression rates.

Figure 5.15

Male and female Thunderbolt

Source: Alex Oliszewski

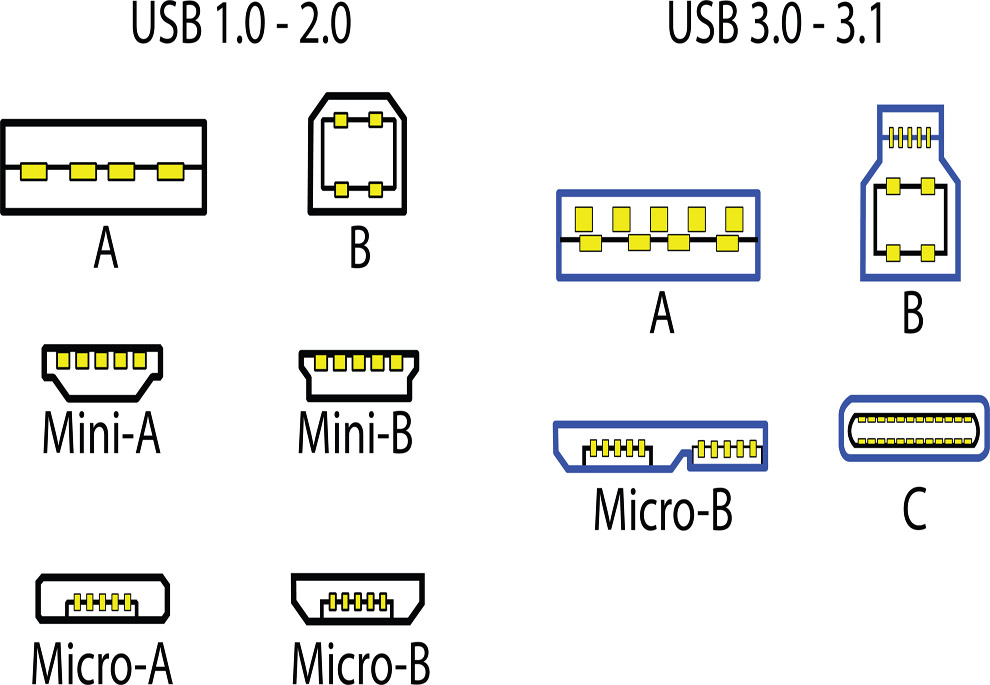

USB

USB is a common computer data cable that can also transmit power. Many web-based cameras connect using USB. Standard varieties are 1.0, 2.0, 3.0, and USB Type-C. The larger the number, the faster the cable. USB Type-C is currently the newest and fastest, providing up to 100 watts of power, which allows for more compact and powerful devices to be used with a single cable. USB 2.0 is just fast enough to provide decent-quality, if heavily compressed, video streams; however, we recommend no longer investing in any equipment that is not at least USB 3.0 or Type-C.
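A rough calculation shows why USB 2.0 video has to be heavily compressed. The sketch below assumes 1080p video at 30 frames per second with 24 bits per pixel, and it uses the nominal signaling rates of USB 2.0 (480 megabits per second) and USB 3.0 (5 gigabits per second). Real-world throughput is lower than these nominal figures, and the function names are hypothetical.

```python
USB2_MBPS = 480        # nominal USB 2.0 signaling rate, megabits per second
USB3_MBPS = 5_000      # nominal USB 3.0 signaling rate, megabits per second


def uncompressed_mbps(width: int, height: int, fps: float,
                      bits_per_pixel: int = 24) -> float:
    """Bandwidth of an uncompressed video stream in megabits per second."""
    return width * height * bits_per_pixel * fps / 1_000_000


def compression_needed(stream_mbps: float, link_mbps: float) -> float:
    """Minimum compression ratio to fit the link; 1.0 means none is needed."""
    return max(1.0, stream_mbps / link_mbps)


if __name__ == "__main__":
    stream = uncompressed_mbps(1920, 1080, 30)
    print(f"1080p30 uncompressed: {stream:.0f} Mbit/s")
    print(f"USB 2.0 needs at least {compression_needed(stream, USB2_MBPS):.1f}:1")
    print(f"USB 3.0 needs at least {compression_needed(stream, USB3_MBPS):.1f}:1")
```

Under these assumptions the uncompressed stream is about three times larger than USB 2.0 can carry even in theory, while USB 3.0 has room to spare.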

USB cables are not meant for long runs, and while 50′ and 75′ extenders exist, we have had mixed results in using them with anything but the simplest of USB-enabled devices, such as mice or keyboards. If you need to extend a USB-based camera, such as a Kinect, choose an extender that has an active (powered) signal booster.

FireWire

FireWire is now mostly a legacy connection type that came in two primary varieties, FireWire 400 and FireWire 800. The numbers refer to their bandwidths in megabits per second, with 800 being the faster of the two. Now superseded by USB 3.0, which at a nominal 5 gigabits per second is roughly six times faster than FireWire 800, this standard has been abandoned in new equipment. FireWire cables are typically not reliable on runs of greater than 15′.

In digital media design, they were (are, in some cases) primarily used to transfer live video from digital camcorders and IR cameras. They were also commonly used for fast file transfers between external HDDs.

Figure 5.16

Chart of USB video cables

Source: Alex Oliszewski adapted from Darx, Damian Yerrick and Bruno Duyé

Figure 5.17

FireWire 400 and 800 cables

Source: Alex Oliszewski

Cat 5 and Cat 6 Ethernet Cable

For More Info 5.1 Description of Cat 5/6 Cables

See “Cat 5/6 Ethernet Cables” in Chapter 5.

Cat 5/6 is easily used for video signal runs of up to 320′. It is an affordable cable for long runs, and there are a number of video adapters that allow you to convert to HDMI, DVI, VGA, and other video signal types over Cat 5/6 cables. Because of the high levels of interference common in theatre environments, invest in Ethernet cables that are well shielded regardless of their category.
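The run-length guidance given for each cable type in this chapter can be collected into a small lookup for planning purposes. The figures below are the rules of thumb quoted in the sections above, using the more conservative number where a range is given. They are working assumptions, not manufacturer specifications, and the names in the code are hypothetical.

```python
# Rule-of-thumb maximum unboosted runs in feet, taken from this chapter.
MAX_RUN_FEET = {
    "RCA": 50,        # issues expected when pushing 50-100 feet near lighting gear
    "S-video": 30,
    "VGA": 100,
    "DVI": 50,
    "HDMI": 50,
    "SDI": 200,       # fiber is recommended beyond 200 feet
    "FireWire": 15,
    "Cat 5/6": 320,   # with video converters on each end
}


def needs_extension(cable_type: str, run_feet: float) -> bool:
    """Return True if the planned run exceeds the rule-of-thumb maximum."""
    if cable_type not in MAX_RUN_FEET:
        raise KeyError(f"no guidance recorded for {cable_type!r}")
    return run_feet > MAX_RUN_FEET[cable_type]


if __name__ == "__main__":
    for cable, run in [("HDMI", 75), ("VGA", 100), ("Cat 5/6", 300)]:
        verdict = "boost or convert" if needs_extension(cable, run) else "ok"
        print(f"{cable} at {run} ft: {verdict}")
```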

When driving multiple projectors and their networked media servers over the hundreds of feet demanded in theatre installations, Cat 5/6 cables and signal converters are a good solution. Cat 5/6 can be purchased by the spool, allowing you to make custom lengths. This requires you know or learn how to attach and test the phone jack–like plugs on either end of a custom-built cable.
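When terminating custom cables, both ends of a straight-through cable must use the same wire order. The sketch below assumes the T568B order, one of the two standard wiring schemes (the other is T568A); the text above does not say which to use. The checking function is a hypothetical stand-in for reading the conductors at each plug by eye or with a cable tester.

```python
# T568B wire order, pin 1 through pin 8 (assumed scheme for this example).
T568B = [
    "white/orange", "orange",
    "white/green", "blue",
    "white/blue", "green",
    "white/brown", "brown",
]


def mismatched_pins(end_a: list[str], end_b: list[str]) -> list[int]:
    """Return the pin numbers (1-8) where the two ends of a cable disagree."""
    if len(end_a) != 8 or len(end_b) != 8:
        raise ValueError("each plug must list exactly eight conductors")
    return [pin for pin, (a, b) in enumerate(zip(end_a, end_b), start=1) if a != b]


if __name__ == "__main__":
    miswired = list(T568B)
    miswired[0], miswired[1] = miswired[1], miswired[0]   # swap pins 1 and 2
    print(mismatched_pins(T568B, T568B))     # straight-through cable
    print(mismatched_pins(T568B, miswired))  # a crimping mistake
```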

Video Signal Distribution Hardware

EDID Managers, Video Amplifiers, Replicators, Extenders, Repeaters, Splitters, and Distribution

Within the industry, you may hear a single piece of equipment referred to as a video amplifier, replicator, extender, repeater, DA (distribution amplifier), VDA (video distribution amplifier), EDID manager, or distributor. More or less these terms are used interchangeably, but there are differences between them depending on the manufacturer. With this class of hardware, you achieve three main goals: EDID management, amplifying a video signal, and distributing the signal to multiple displays.

Extended Display Identification Data (EDID) is a data structure, which conforms to the VESA (Video Electronics Standards Association) protocol, and allows computers to recognize the resolution of attached displays. EDID management is often necessary when connecting multiple display resolutions and/or when connecting displays over long runs. These devices allow you to discretely set and lock the resolution and order of each connected display from a media server. Not all hardware includes EDID management so take particular note if the system needs this feature.
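To give a sense of what the EDID data structure contains, here is a minimal sketch that validates a 128-byte EDID base block and reads the manufacturer code and preferred resolution from it. The byte offsets follow the published layout of the base block as we understand it. The function names are hypothetical, and the sample block is synthetic, built only so the example can run without a real display attached.

```python
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])


def parse_edid(block: bytes) -> dict:
    """Validate a 128-byte EDID base block and pull out a few fields."""
    if len(block) < 128:
        raise ValueError("an EDID base block is 128 bytes long")
    base = block[:128]
    if base[:8] != EDID_HEADER:
        raise ValueError("EDID header not found")
    if sum(base) % 256 != 0:
        raise ValueError("EDID checksum mismatch")

    # Bytes 8-9: manufacturer ID, three 5-bit letters where 1 means 'A'.
    word = (base[8] << 8) | base[9]
    manufacturer = "".join(
        chr(((word >> shift) & 0x1F) + ord("A") - 1) for shift in (10, 5, 0)
    )

    # Bytes 54-71: first detailed timing descriptor (the preferred mode).
    # A pixel clock of zero means the slot does not hold a timing.
    pixel_clock = base[54] | (base[55] << 8)
    preferred = None
    if pixel_clock != 0:
        width = base[56] | ((base[58] & 0xF0) << 4)
        height = base[59] | ((base[61] & 0xF0) << 4)
        preferred = (width, height)

    return {"manufacturer": manufacturer, "preferred_resolution": preferred}


def make_sample_edid(width: int, height: int, manufacturer: str = "ABC") -> bytes:
    """Build a synthetic, mostly empty EDID block for demonstration only."""
    block = bytearray(128)
    block[:8] = EDID_HEADER
    word = 0
    for letter in manufacturer:
        word = (word << 5) | (ord(letter) - ord("A") + 1)
    block[8], block[9] = word >> 8, word & 0xFF
    block[54], block[55] = 0x02, 0x3A          # placeholder nonzero pixel clock
    block[56] = width & 0xFF
    block[58] = (width >> 8) << 4
    block[59] = height & 0xFF
    block[61] = (height >> 8) << 4
    block[127] = (-sum(block[:127])) % 256     # checksum makes the sum 0 mod 256
    return bytes(block)


if __name__ == "__main__":
    print(parse_edid(make_sample_edid(1920, 1080)))
```

An EDID manager effectively stores a block like this and presents it to the media server in place of whatever the display at the far end of a long run reports.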

Amplifying a video signal is needed on long cable runs. For instance, on a 200′ run of VGA cable, you may need to run two 100′ cables. Add a video amplifier in the middle to ensure the signal remains usable.
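The arithmetic in the VGA example generalizes: split the run into segments no longer than the cable can reliably carry, then place an amplifier at each join. A minimal sketch, with a hypothetical function name and the maximum segment length supplied by you from the cable's specifications or the rules of thumb in this chapter:

```python
import math


def amplifiers_needed(run_feet: float, max_segment_feet: float) -> int:
    """Number of inline amplifiers for a run split into equal-or-shorter segments."""
    if run_feet <= 0 or max_segment_feet <= 0:
        raise ValueError("lengths must be positive")
    segments = math.ceil(run_feet / max_segment_feet)
    return segments - 1   # one amplifier at each join between segments


if __name__ == "__main__":
    # The example from the text: 200 feet of VGA in 100-foot segments.
    print(amplifiers_needed(200, 100))
```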

In addition to amplifying a video signal, you may also wish to distribute it to multiple destinations. There are situations where you have more displays than the media server supports. In this case, the distribution hardware device accepts a single input signal, boosts the amplitude, and then sends it to multiple outputs without any degradation. The capacity of the hardware is determined by an internal processor. These units are either passive or active. Check the specifications before purchasing or renting to ensure that the hardware meets your specific needs. If you are using displays with multiple resolutions, EDID management features are essential.

Replicating outputs is the same as mirroring, allowing you to place the same image on multiple displays. Depending on the device you choose you have to make detailed choices about the arrangement and size of display outputs.

Currently, the two most used video display replicators/extenders we have seen used in theatre applications are Datapath’s Video Controllers and Matrox’s Triple Head products.

Datapath

The Datapath is a professional-grade device that supports custom configuration through the included software. The software is PC-only, so you need to program and set up the hardware on a PC. Once configured, the Datapath works on Mac or PC video outputs. The software has a bit of a learning curve, but once you figure it out, it is pretty straightforward. There are four- and eight-output options. You can purchase as either a stand-alone unit or a rack mount. Since these are professional units, you can expect rock-solid performance.

Matrox Triple Head

The Matrox Triple Head is a more affordable and consumer-focused version of the Datapath. It has custom software to configure the displays and is available for both Mac and PC. The Triple Head has either two or three output versions. In our experience the Triple Heads are less robust than Datapaths, but they also cost far less.

Figure 5.19

Front and back view of a Datapath × 4 display controller

Source: Daniel Fine

Video Scalers

A video scaler allows for the conversion of a video signal’s resolution and/or interlacing method, such as upscaling WXGA 1280x800p to 1080i. For a scaler to alter the resolution of an image, it must interpret information to create new pixels. It does this by sampling the incoming video stream’s pixels and then effectively spreading or compressing that same amount of information across the needed raster. Scalers use different methods to ensure the final result looks as good as possible. Read reviews on scalers before purchase, as we have seen quite varied results in quality between models.

Removing image information to reduce resolutions is called downscaling. This is a simpler process than upscaling, as removing data is easier than inferring new data.
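The simplest possible scaling method, nearest-neighbor sampling, illustrates both directions. This is a sketch of the general idea only: hardware scalers typically use more sophisticated interpolation, and nothing here reflects how any particular product works. The image is represented as a plain list of rows, and the function name is hypothetical.

```python
def scale_nearest(image: list[list[int]], new_width: int, new_height: int) -> list[list[int]]:
    """Resample an image (a list of rows of pixel values) to a new raster size.

    Each output pixel copies the source pixel whose position maps to it,
    so upscaling repeats pixels and downscaling drops them.
    """
    if new_width <= 0 or new_height <= 0:
        raise ValueError("target size must be positive")
    old_height = len(image)
    old_width = len(image[0])
    return [
        [
            image[y * old_height // new_height][x * old_width // new_width]
            for x in range(new_width)
        ]
        for y in range(new_height)
    ]


if __name__ == "__main__":
    small = [[1, 2],
             [3, 4]]
    big = scale_nearest(small, 4, 4)      # upscaling: pixels are repeated
    for row in big:
        print(row)
    print(scale_nearest(big, 2, 2))       # downscaling: pixels are dropped
```

Upscaling here invents no new detail; it only spreads the same four values across a larger raster, which is why better scalers interpolate between neighboring pixels instead.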

Video scalers are particularly useful when designing a system with interlaced and deinterlaced video equipment. They are an affordable way of maintaining compatibility between legacy systems and newer equipment.

In the theatre, scalers are used to send a single video signal, such as HDMI or VGA, to multiple displays with different signal formats and/or resolutions. Using a video scaler to convert or duplicate a video signal coming from the media server can reduce latency, which is desirable. Some scalers allow for EDID management as well, so you can more easily convert a signal type and lock it down.

Video Mixers

A video mixer is a device that receives multiple inputs and allows for their simultaneous display and compositing. They are essential for live broadcast of sporting and awards events. While media servers are often capable of duplicating the functionality of video mixers, this can introduce unwanted latency. A computer’s CPU or GPU will likely never match the low latency of hardware-based video mixers.

Figure 5.21

Back panel and top view of a Roland V-4EX video mixer

Source: Alex Oliszewski

Most video mixers have built-in keying capabilities, with higher-end models also supporting alpha channels. Just as they do for live television, video mixers in the theatre allow designers to easily combine live and prerecorded video content at the lowest possible latencies.
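Keying and alpha compositing come down to a per-pixel blend. The sketch below shows a basic luma key, where dark areas of the live image become transparent, followed by the standard "over" blend. The threshold value, the BT.601 luma weights, and the function names are assumptions for illustration; a hardware mixer does the equivalent in dedicated circuitry rather than in software.

```python
Pixel = tuple[int, int, int]   # one (red, green, blue) pixel, 0..255 per channel


def luma_key_alpha(pixel: Pixel, threshold: float = 16.0) -> float:
    """Return 0.0 (transparent) for dark pixels and 1.0 (opaque) otherwise."""
    r, g, b = pixel
    luma = 0.299 * r + 0.587 * g + 0.114 * b   # BT.601 weights assumed
    return 0.0 if luma < threshold else 1.0


def composite_over(foreground: Pixel, background: Pixel, alpha: float) -> Pixel:
    """Blend foreground over background; alpha is the foreground opacity, 0.0..1.0."""
    return tuple(
        round(f * alpha + b * (1.0 - alpha))
        for f, b in zip(foreground, background)
    )


def mix_rows(live: list[Pixel], prerecorded: list[Pixel],
             threshold: float = 16.0) -> list[Pixel]:
    """Key a row of live pixels over a row of prerecorded pixels."""
    return [
        composite_over(fg, bg, luma_key_alpha(fg, threshold))
        for fg, bg in zip(live, prerecorded)
    ]


if __name__ == "__main__":
    live_row = [(0, 0, 0), (255, 255, 255)]          # black, then white
    content_row = [(10, 20, 30), (10, 20, 30)]
    print(mix_rows(live_row, content_row))
```

A true alpha channel replaces the hard 0.0 or 1.0 decision with a separate opacity value per pixel, which is what allows soft edges and partial transparency.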

Video Cable Adapters and Signal Converters

Video cable adapters change the physical connections of cables and devices from one type to another. Video signal converters actually modify the type of video signal from one to another. Adapters and converters are available for almost every type of video signal and often come in some type of cable form. Yet, not all adapters are created equal. If you find an adapter that is much cheaper than all the rest don’t assume it will actually work. A converter is always needed when converting between analog and digital devices.

Wireless Video

When cables are not an option but video must be delivered from point A to point B, wireless transmission is an option. Contemporary theatres can prove to be complicated wireless environments to work in. Audio designers in particular tend to use wireless microphones and front-of-house teams often communicate using high-powered walkie-talkie systems. Audience members introduce hundreds of Bluetooth, Wi-Fi, and cell radio interferences to performance venues in a way that is almost impossible to simulate before opening night, and there is significant potential for poor performance with the lower end of wireless video transmitters currently available on the market.

There are a large number of wireless transmitter types, so you should be able to find one that meets any common video transmission standard from RCA all the way up to HD-SDI. These types of systems can get quite pricey if you need to work at high resolutions. Some include battery-powered belt pack–type transmitters that are meant to be powered directly off of a professional video camera’s power pack or otherwise enable it to be worn by a mobile video camera operator who requires freedom of movement. Other versions of this technology rely on transmitters and base stations that require a dedicated wall plug for power.

Still other types require a wire running from the camera to the transmitter, and the transmitter must be stationed within clear sight lines to the receiver, as well as somewhere near a power supply.

RF Modulator

Occasionally you may use a display that has only coax inputs, meant to receive RF video signals. In order to convert VGA or another video signal type into an RF signal that such a display can accept, a special RF modulator is needed.

Cat 5/6 Extenders

Cat 5 and Cat 6 video extenders transmit a video signal over a longer distance than the native run length of a particular cable type, such as HDMI or VGA. On the input side, these devices increase the native voltages of the incoming video signal so that it can travel the longer distances that Ethernet cable makes possible. On the other end of the cable, the extender reduces the voltages back to the original specifications. The converters need to be powered and attached to each end of the cable.

Figure 5.23

Top row: coax to RCA modulator. Bottom two rows: multiple views of a VGA to RCA/S-video modulator.

Source: Daniel Fine

Not all extenders are created equal. Read reviews and don’t be swayed by the cheap ones, because they may not work as promised over long runs in a theatre setting. Regardless of build quality, slight changes in a signal’s final voltage can occur, and these devices might cause negligible degradation of image quality. They should include some form of gain control that allows you to tune their signal output. We regularly use and recommend these kinds of extenders to those needing to balance robustness and affordability of long cable runs. Check the specifications for EDID pass-through features.

Analog-to-Digital Converters (ADC) and Digital-to-Analog Converters (DAC)

ADC and DAC devices are used to translate analog signals and digital signals back and forth. They are heavily used by sound designers when dealing with microphones and digital audio systems. Digital media designers who are working with analog cameras use them to convert the analog video signal for capture in a media server. Conversely, you may need to convert an HDMI or other digital signal for input into a system that accepts only analog signals, such as component video (YPbPr), S-Video, or composite video over RCA.

Media Servers

A media server is simply anything that plays back media. In the simplest form, a media server can be a CD, DVD, or VHS player. Modern media servers make the job of playing back media files (video, images, sound) for a show reliable and repeatable in terms of being able to cue the video content with the live performance while quickly changing the way that content is played back.

Theatrical media servers typically allow for control of the content, such as speed, looping, and changes in intensity, saturation, and contrast, as well as the ability to decide where a particular video clip starts and stops playing. Most media servers are able to send and receive different network messages in order to communicate with other systems and equipment. Many media servers have built-in mapping and blending tools. Features are not standard across all media servers. You should know what the server you are using can and cannot do before heading into any professional setting.

Today’s media servers are very sophisticated. There are two main categories of theatrical media server: software-based and turnkey solutions. Software-based solutions allow you to operate on any computer that meets the minimum requirements of the software. This allows for more flexibility and custom configurability, while also representing the most affordable option. Turnkey solutions include software and hardware (computer). For this reason, they are typically regarded as more robust. They are also more expensive, often by many thousands of dollars.

Aside 5.1 Building Your Own Media Server

If you want to build your own computer to act as a media server or you need to spec the hardware on an existing computer, you need to understand what the components of a computer are and why they matter for media servers.

Graphics Cards

What Is It/What Does It Do?

The graphics card or GPU (graphics processing unit) in a computer comes in many different flavors. Today you’re likely to see computers with integrated graphics cards, discrete graphics cards, and sometimes even a combination of those two. Integrated graphics cards are usually found on laptops; in this case the graphics processing unit is tied into the motherboard of the computer. A motherboard is the central circuit board that components are attached to in your computer.

Integrated cards are typically much more power-efficient and eliminate the need for a separate component in your computer. The primary drawback of integrated cards is that they are typically less powerful than discrete cards, in terms of both processing power and memory.

Discrete cards are stand-alone components that are attached to the motherboard in your computer. These come in lots of different shapes and sizes, affording the user many different configurations. While these cards offer the best performance, that processing power typically comes at the cost of power efficiency and space.

Configurations offering a combination of both types are common in higher-end laptops focused on the gaming market. Computers with both an integrated and discrete GPU are a common solution for graphics power and extended battery life. Many modern operating systems allow for smart switching between integrated and discrete cards—the idea here is to create a computing experience that has power when you need it, and power efficiency for most other computing tasks.

Like its name suggests, the GPU is used for rendering images on your computer—everything from rendering web pages to rendering the fantastical worlds of modern games, and even making for speedier processes in video and image editing applications. Unlike CPUs (central processing units) that tend to handle processes in serial (one operation after another), GPUs handle processes in parallel (many operations all at the same time). Due to their parallel computing nature, GPUs tend to be much faster at many computation processes—though they’re not well suited for all problems.

GPUs have both their own processors and their own memory—both factors matter in GPU design, though when building a machine, you might have to choose between faster performance or more memory. It’s important to note that the GPU’s memory, VRAM (video random-access memory), is different than your computer’s primary memory, RAM (random-access memory).

How Do I Pick the Right One?

If you’re building a media server, chances are you’ll want a discrete GPU. While integrated GPUs may seem like a good idea on paper, for many high-end playback situations you often want as much computation power as you can manage, and not to be stranded with an integrated graphics solution. You’re also likely to want more VRAM than an integrated card will have.

Discrete cards come in a huge number of varieties, so choosing the right card can be a daunting process. There are a number of things to keep in mind:

More GPUs don’t always mean better performance. While many modern games support multiple GPU configurations, programming environments and turnkey media server software packages might not. It’s never safe to assume that more cards mean better performance. Instead it’s important to do your research and make sure that your application or development pipeline will benefit from multiple cards.

Not all applications and development environments are optimized to benefit from using a GPU. A great number of applications and pipelines benefit tremendously from using a powerful GPU, but not all of them. Some processes are inherently bound to the CPU and see no significant gains from a faster GPU. While many live rendering pipelines benefit from additional graphics processing power, typical movie decoding may not (this is largely dependent on the type of codec you’re using for your video). Before choosing a card, it’s important to first think about what your server needs to be able to do and the type of computation work it will primarily be used for.

Card vendors shouldn’t matter, but sometimes they do. While searching for a bargain GPU is a tempting endeavor, it’s also important to realize that not all applications are optimized for all graphics cards. That is to say that in some cases applications and development environments may be optimized for better performance on a single vendor’s cards. Both NVIDIA and AMD make powerful video cards, but it’s important to make sure that the applications you plan on using will benefit from the card you purchase.

Some general ideas worth considering:

- The newest technology is always the most expensive. While it might be tempting to get the newest card on the market, it’s likely to be out of date in just a few short months.

- VRAM is hugely important for modern rendering environments. When working with large textures, high-density displays, or large numbers of displays you’ll want to make sure your GPU has plenty of memory to accommodate those needs.

- Professional cards aren’t always the best solution, but sometimes they are. It might be easy to shrug off the idea of a $5,000 video card, but it might be the right solution. Then again, the consumer-level card that’s just a little slower might be more than enough. High-end cards tend to support important features like frame-lock (keeping multiple video cards in sync) and have better multi-display support.

- Choosing the right video card is hard; read lots of reviews, and ask your colleagues about how to determine the right piece of hardware.
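To get a rough feel for how quickly VRAM fills up with large textures and many layers, the sketch below estimates the memory taken by uncompressed frames. It is a back-of-the-envelope calculation, not a description of how any particular media server allocates memory: it assumes 8-bit RGBA (4 bytes per pixel) and two buffers per layer, and the function names are invented for illustration.

```python
def texture_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size in bytes of one uncompressed frame (4 bytes/pixel assumes 8-bit RGBA)."""
    return width * height * bytes_per_pixel


def vram_estimate_mib(width: int, height: int, layers: int,
                      buffers_per_layer: int = 2) -> float:
    """Rough VRAM use in MiB for a number of simultaneous layers.

    buffers_per_layer=2 is an assumption (one frame shown, one being loaded).
    """
    total = texture_bytes(width, height, 4) * layers * buffers_per_layer
    return total / (1024 ** 2)


# Eight 1080p layers versus eight 4K (3840x2160) layers
print(round(vram_estimate_mib(1920, 1080, layers=8), 1))  # 126.6
print(round(vram_estimate_mib(3840, 2160, layers=8), 1))  # 506.2
```

Real applications also hold effects buffers, output surfaces, and compressed textures in VRAM, so treat numbers like these as a floor rather than a target.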

HDD/SSD

What Is It/What Does It Do?

Hard disk drives (HDD) come in many varieties and are an essential part of your server build. Drive speed is especially important for video playback as it can be a bottleneck in your pipeline. Your hard drive not only holds files that allow various applications on your computer to run but also is where you store your video files. These days disk drives come in three primary varieties: platter drives, solid-state drives, and hybrid drives.

Platter drives are the oldest technology of the three varieties, have the largest capacity, and tend to be the most cost-efficient. Platter drives are just that: they’re composed of several platters or disks that are stacked together in a single housing. These platters spin at several thousand revolutions per minute and the information is read off and written to them with a small head that moves across the surface of the platter. Platter drives are rated based on, among other properties, their rotation speed. You’ll often find platter drive speeds described as 5400 rpm or 7200 rpm.

Solid-state drives (SSD) are a type of memory technology that involves no moving parts. Similar in principle to a USB memory stick, an SSD uses electrical current to change the state of “flash” memory. This type of memory holds its state even without power, ensuring that you don’t lose any information even when your computer is turned off. While SSD prices continue to come down, this is typically a more expensive form of memory than platter drives. SSDs may be more expensive, but unlike platter drives, SSDs have no moving parts and consequently are able to access files at a much faster rate.

Hybrid drives are a combination of platter and SSD technologies. These types of drives typically have a portion of the drive that is flash-based, while another section of the drive is platter-based. This is largely hidden from the user, and in some applications, the management of the memory is also obfuscated from the user. The principle here is that the operating system should work in tandem with the drive to store frequently accessed contents in the flash memory portion, while infrequently accessed information is stored on the platters. While this is certainly a powerful technology for many uses, it’s not one that’s especially advantageous for building a media server.

How Do I Pick the Right One?

By and large you probably want to use SSDs to house your media files. This is especially true if you’re dealing with large assets, 4k video and above, or playing back multiple files simultaneously. Fast read speeds are essential to good playback performance and currently SSDs are a strong choice. That said, you might well decide to put multiple drives in your server.

For example, you might want a platter drive to house your operating system and working files while you’re animating, and a smaller SSD for finished assets that you use for playback. Alternatively, you might decide that you want to work with just SSDs on the server, and rely on USB 3.0 or above, Thunderbolt 2 or above, or eSATA externals for working files.

Regardless, it’s worth remembering that more space is better than less when dealing with hard drives. It’s easy to accumulate files quickly, and when you’re deciding on the amount of space for your assets, it’s good to err on the side of larger rather than smaller. If you can afford it, 1 terabyte of space is a good place to start for your assets—if at all possible, you shouldn’t give yourself less than 500 GB. That may seem like a lot now, but drive space can disappear quickly if you’re working on complex projects.
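The two drive questions above, how much space and how much read speed, can be roughed out from the bitrate of your video files. The sketch below is only an estimate under stated assumptions: the 200 Mbit/s bitrate is a hypothetical figure chosen for the example (check the actual bitrate of your codec), decimal units are used (1 GB = 1000 MB), and the function names are invented for illustration.

```python
def file_size_gb(bitrate_mbps: float, duration_s: float) -> float:
    """Approximate file size in GB for a clip at a constant bitrate.

    megabits/s * seconds = megabits; 8000 megabits = 1 GB (decimal).
    """
    return bitrate_mbps * duration_s / 8000


def required_read_mb_per_s(bitrate_mbps: float, streams: int) -> float:
    """Sustained read speed in MB/s needed to play several clips at once."""
    return bitrate_mbps * streams / 8


# Hypothetical example: 10-minute clips encoded at 200 Mbit/s
print(file_size_gb(200, 600))          # 15.0 GB per clip
print(required_read_mb_per_s(200, 4))  # 100.0 MB/s for four at once
```

Compare the second number against the sustained read speed of the drive you are considering, and leave generous headroom, since drives rarely deliver their advertised peak speed during real playback.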

Processor Speed

What Is It/What Does It Do?

The central processing unit (CPU) does the lion’s share of computing tasks on most computers. The major defining characteristics of a CPU are its clock speed and number of cores. A speedy CPU will help ensure your applications feel fast and responsive. This is also the primary bottleneck for many computing challenges.

Clock speed is a measure of how many cycles per second a microprocessor is capable of when executing instructions. For example, 1 GHz represents one billion cycles per second. This measure is helpful in getting a sense of the performance speed you’re likely to see from a processor. Many other factors matter, but generally speaking, a faster CPU will usually equate to faster performance provided that you have sufficient system resources.

The number of cores on a CPU refers to the internal architecture of the microprocessor. We can reductively think about multi-core CPUs as having multiple processors. It’s important to remember that CPUs largely perform operations in sequence—A then B then C then D and so on. Sometimes when your computer becomes unresponsive it’s precisely because it is busy working through the computation associated with a previous operation. Multiple cores are a step in helping to address this problem as they allow your computer to multitask. It’s not uncommon to see terms like multi-core or hyper-threading used to describe this process. In brief, we can think of this as a means of allowing your computer to do multiple operations at the same time by giving different processes a discrete environment to operate in. Your virus scanner can run in the background without interrupting your work editing images or video. When you’re rendering a complex scene in a 3D authoring program your computer is able to work on multiple parts of the computation associated with creating the final image. This, of course, has limits—some operations need to be performed in sequence, while others can be performed out of sequence.

How Do I Pick the Right One?

Faster isn’t always better, and neither is more, which is to say that some applications benefit from multiple-core CPUs while others might ignore multiple cores and will benefit only from a faster clock. How do you choose then? Generally speaking, most modern applications don’t see huge benefits in performance past six cores. Applications will, on the other hand, almost always benefit from a faster clock.

You will, however, probably have to choose between more cores and a faster clock speed—so how do you choose? In terms of video playback most decoding happens on the CPU (with the exception of some specialized codecs). Some applications and development environments support multi-threaded video playback; if this is your case and you’re dealing with playing lots of simultaneous videos then you might see better performance from choosing a processor with more cores rather than a faster clock. If, on the other hand, you’re working in real-time 3D environments with complex changes in your scene and changes to the geometry you’re likely to see more direct benefits from a faster clock.
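One common way to reason about why extra cores give diminishing returns is Amdahl's law, which is not named in this chapter but fits the point that some operations must run in sequence. The sketch below is a simplified model only: the share of work that can run in parallel (80 percent here) is an assumed number for illustration, and real applications rarely split this cleanly.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Theoretical speedup from Amdahl's law.

    parallel_fraction: share of the work (0.0 to 1.0) that can run in parallel.
    cores: number of cores working on the parallel share.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumed workload: 80% of the work can be spread across cores
for cores in (1, 2, 4, 6, 8, 16):
    print(cores, round(amdahl_speedup(0.8, cores), 2))
```

Under that assumption, doubling from 8 to 16 cores raises the theoretical speedup only from about 3.3x to 4x, which is why a faster clock can be the better purchase for work that is mostly sequential.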

RAM

What Is It/What Does It Do?

Random-access memory (RAM) is used by your computer for all manner of operations. We might, as a metaphor, think about RAM as being similar to counter space or desk space. When you’re working on a project it’s often helpful to spread your work on a big table or counter—it lets you see everything at once, and find anything you need very quickly. RAM is used by your computer to put lots of information into a place that’s easily and quickly accessible. One caveat about RAM is that it requires power to maintain persistent memory—which is to say that when you turn your computer off, whatever is in RAM is lost. Every application on your computer uses RAM, and when you use all of your available RAM the operating system on your computer will try various techniques to keep working, but essentially it will just slow down. RAM is an essential part of your server build and in some cases adding more RAM can have a more powerful impact than a faster processor or faster GPU.

How Do I Pick the Right One?

These days RAM is typically described in terms of gigabytes (GB). If you have a 32-bit operating system you won’t be able to use more than 4 GB of RAM. Luckily for you, that’s probably not the case. Just about every modern operating system is now 64-bit. Some applications, however, are not. An application that’s only 32-bit will be able to use only 4 GB of your machine’s RAM. If you’re building applications, or using off-the-shelf software it’s worth noting if the application is 32- or 64-bit.
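The 4 GB ceiling comes straight from the arithmetic of addressing: a 32-bit address can name 2^32 distinct bytes. A minimal sketch of that calculation follows. The function name is invented for illustration, and the result is a theoretical limit; operating systems and hardware usually make somewhat less than this available in practice.

```python
def max_addressable_gib(address_bits: int) -> float:
    """Theoretical addressable memory in GiB for a given address width."""
    return 2 ** address_bits / 2 ** 30


print(max_addressable_gib(32))  # 4.0
print(max_addressable_gib(64))  # far more than any current machine holds
```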

Okay, so how much RAM do you want? As much as you can afford/fit in your machine. It’s a good idea to install at least 8 GB of RAM for most modern computing. Generally speaking, you will probably be happier with 16 or 32 GB of RAM. You’ll probably see RAM described as something like: 16 GB (2x8) or 16 GB (4x4). Here, 16 describes the total amount of RAM. The numbers in parentheses describe how that memory is spread across the hardware. 2x8 means that you have two 8 GB sticks of RAM. 4x4 means that you have four 4 GB sticks of RAM. This is an important detail to consider. Generally, you want fewer, denser sticks of memory. Why? A motherboard has a limited number of slots for RAM. By using fewer slots initially you end up leaving yourself room to expand later on.

PCI Slot/Expansion

What Is It/What Does It Do?

A Peripheral Component Interconnect slot (PCI) is a standard expansion slot on a motherboard. Many additional computer components can be attached with PCI or PCIe (Peripheral Component Interconnect—Express). Modern motherboards typically have both PCI and PCIe slots. PCIe slots are used for components that need fast, high-bandwidth access to your motherboard. Adding an additional network adapter to your computer—that’s probably PCI; adding a capture card for multiple 4k inputs—that’s probably PCIe.

How Do I Pick the Right One?

If you’re building a server yourself, it’s worth thinking carefully about what other components you want/need in your server. Do you need a sound card, or an additional network card? What about a capture card for live video, or an uncommon interface element, like fiber? You’ll want to make sure you purchase a motherboard that has enough space to accommodate your other components and has some free space to attach additional internal components to expand your machine’s capabilities.

If you’re buying a computer from a manufacturer or a vendor that configures machines then you’ll likely want to think about any additional needs your server might have now and in the future, and plan to have enough space to attach these other components.

Turnkey media servers are PC-based due to the customization of hardware. Software-based servers may be either multi-platform, Mac-only, or PC-only, depending on the developer. Some multi-platform servers like Isadora have specific features per platform. For instance, on the Mac version, Isadora has a number of features built upon Quartz Composer. These features do not exist on the PC version of Isadora, since Quartz is Mac-only. The underlying methods of graphics handling are different on PC and Mac computers. Expect to see variations in performance across operating system platforms.

There are a wide range of media servers that each focus on a different segment of the performing arts and live events industries. Media servers have different forms of cueing logic. The four main types of cueing logic used in media servers are as follows:

- Timeline-based servers have a playhead that moves across multiple layers of video similar to the graphical user interface of a nonlinear video editing software. Typically, timeline-based servers work in a similar logic to editing software in terms of layering, compositing, and sequential playback.

- Layer/cue stack-based servers allow you to program content for playback through a spreadsheet-like system where you can move between cues without a playhead moving in time.

- Node-based servers have a variety of different methods to play back cues. Isadora is currently the only type of node-based server we are aware of that is specifically created for playback of cues as understood in the theatrical context. All other node-based servers we are familiar with, such as TouchDesigner and MAX, require you to program a custom method and interface for playing theatrical-type cues.

- VJ-based servers typically do not have cue systems designed for theatrical situations; however, we have seen them used to great effect by designers where idiosyncratic playback of content is desired. These types of servers typically offer the ability to quickly composite, add effects, and adapt content playback, while integrating with other software applications through features such as Syphon/Wyphon.

Most media servers allow you to hand over the operation for the run of a show to a relatively unskilled board operator. If programmed correctly, the operator is able to run the show with no additional programming and minimal control. They can use a single go button to execute a cue and should have a method for jumping between cues when one is missed or needs to be skipped for some reason during an individual performance.

For More Info 5.2

See “Types of Media Servers” in Chapter 5.

It is common for the sound department to play audio without the need to route through the media server. There are times when the audio and video need to be perfectly synced, such as when a prerecorded video character is delivering lines, so often these soundtracks are played along with the video in the media server to avoid latency. In this case, the media server needs an audio line out to the sound department. This allows the audio designer to control levels and ensure quality and continuity in their sound design. Be aware of the capabilities of the media server when it comes to playing audio and work directly with the sound designer to ensure system integration before technical rehearsals. Latency can still be an issue, so test that the two systems are in sync.
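When you test sync between the two systems, it can help to translate a measured delay into video frames, since a delay of a frame or two is far less noticeable than one of several frames. The sketch below is a simple unit conversion; the function name and the example numbers are illustrative, not measurements from any particular system.

```python
def latency_in_frames(latency_ms: float, fps: float) -> float:
    """Convert a delay in milliseconds into a number of video frames."""
    return latency_ms * fps / 1000


# Example: a 100 ms offset between audio and video at 30 frames per second
print(latency_in_frames(100, 30))  # 3.0
```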

As with any technology, media servers are becoming more powerful and affordable every day. The only thing for certain is that tomorrow everything will be different. The career of a digital media designer is one of constant learning and adaptation to new technologies and buttons.

Types of Media Servers

Timeline-Based Media Servers

Timeline-based media servers use the same types of visual metaphors for setting and manipulating content as nonlinear editing (NLE) packages, like Final Cut Pro, Avid, Premiere, and so forth. On these systems you align video, define fades, set in and out points, place still images, and control various types of triggerable equipment all via a timeline, as you would in an NLE. Timeline-based media servers offer a familiar interface metaphor for video editors. Their learning curve is normally quite shallow for new designers and programmers already familiar with video editing. Watchout is the theatrical standard of this kind of media server.

Figure 5.26

Screen capture of Watchout media server interface

Source: Alex Oliszewski

Timeline-based servers typically support the layering of video and basic compositing effects, such as keying, color correction, cropping, and image blending. If the computer is properly configured and equipped, timeline-based servers also allow the mixing of live video signals and digital files. The ability to keep content in separate layers that are edited in place to the live action can offer significant time savings and for a skilled programmer enables quick responses to a director’s notes.
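The timeline idea can be sketched in a few lines: clips sit on layers with start and end times, and at any moment the server composites whichever clips lie under the playhead. This is a toy model for illustration only. The `Clip` class and `active_clips` function are hypothetical names, and it does not reflect how Watchout or any other product is implemented.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    name: str
    layer: int    # higher layers are composited on top
    start: float  # seconds from the start of the timeline
    end: float


def active_clips(clips, playhead: float):
    """Return the clips under the playhead, bottom layer first."""
    hits = [c for c in clips if c.start <= playhead < c.end]
    return sorted(hits, key=lambda c: c.layer)


timeline = [
    Clip("sky_loop", layer=1, start=0.0, end=10.0),
    Clip("title_card", layer=2, start=4.0, end=6.0),
    Clip("rain_overlay", layer=3, start=8.0, end=12.0),
]

print([c.name for c in active_clips(timeline, 5.0)])  # ['sky_loop', 'title_card']
```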

Layer/Cue Stack-Based Media Servers

Layer/cue stack-based server interfaces act more or less like spreadsheet cells where you can type in information. The server stores information and counts time while moving between settings and cells of what ultimately is a spreadsheet. Layer/cue stacks track the order of cues as well as applied effects and timings associated with them for playback.

The layer/cue stack has been the mainstay of digitized theatrical cueing devices and electronics since their first integration into theatre productions. Theatrical lighting boards and audio cueing systems have relied on the cue stack method for timing and cueing because it is a robust and logical way of dealing with automating dimmers, volumes, and other functions.

Because of the connection to existing methods of cueing audio and lighting, cue stack-based media servers integrate excellently into the theatrical cueing process. They typically are more robust in their support for audio playback and can often allow the consolidation of both audio and video content into a single server, such as QLab. This can reduce the cost of both the equipment and the number of operators needed to run the show.

Layer/cue stacks allow for basic real-time control of content, while also providing fast programming and well-designed playback interfaces.
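The cue stack logic, an ordered list of cues, a single go button, and a way to jump when a cue is missed or skipped, can be sketched as follows. This is an illustrative toy, not the way QLab or any lighting console is built; the `Cue` and `CueStack` names, the cue numbers, and the file names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    number: str          # cue label as called by the stage manager
    media: str           # file to play
    fade_s: float = 0.0  # fade-in time in seconds


class CueStack:
    def __init__(self, cues):
        self.cues = list(cues)
        self.next_index = 0  # the cue that the next "go" will fire

    def go(self):
        """Fire the next cue and advance; return None at the end of the stack."""
        if self.next_index >= len(self.cues):
            return None
        cue = self.cues[self.next_index]
        self.next_index += 1
        return cue

    def jump_to(self, number: str):
        """Stand by on a specific cue, e.g. after one was missed or skipped."""
        for i, cue in enumerate(self.cues):
            if cue.number == number:
                self.next_index = i
                return
        raise KeyError(f"no cue numbered {number}")


stack = CueStack([
    Cue("1", "preshow_loop.mov", fade_s=3.0),
    Cue("2", "act1_storm.mov", fade_s=1.5),
    Cue("3", "act1_sunrise.mov", fade_s=5.0),
])
print(stack.go().media)  # preshow_loop.mov
stack.jump_to("3")       # skip cue 2
print(stack.go().media)  # act1_sunrise.mov
```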

Figure 5.27

Screen capture of QLab media server interface from One Man, Two Guvnors. Digital media design by Jeromy Hopgood.

Source: Jeromy Hopgood

Node-Based Media Servers

Node-based (sometimes called patch-based or flow-based) media servers use small building blocks, each with their own functionality, to build the logic and flow of data. These building blocks, or nodes, have inputs and outputs that link together to make a patch (sometimes called a graph). Node-based media servers can rightly be considered a type of programming language or environment in that they often do not come with a predetermined method of use, but instead provide libraries of possible functions that assemble into highly customizable configurations.
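To make the idea of nodes and patches concrete, here is a minimal sketch in which each node wraps a small function and pulls its inputs from the nodes wired into it. It is a toy model under stated assumptions, not the architecture of Isadora, TouchDesigner, or Max: the `Node` class and `constant` helper are invented names, and the "sensor" is a fixed number standing in for a live reading.

```python
class Node:
    """A building block: a function plus the upstream nodes wired to its inputs."""

    def __init__(self, func, *inputs):
        self.func = func
        self.inputs = inputs

    def evaluate(self):
        # Pull a value from every upstream node, then apply this node's function.
        return self.func(*(node.evaluate() for node in self.inputs))


def constant(value):
    """A node with no inputs that always outputs the same value."""
    return Node(lambda: value)


# A small patch: sensor reading -> multiply by gain -> clamp to 0..1 -> opacity
sensor = constant(0.8)  # stand-in for a live sensor value
gain = constant(1.5)
scaled = Node(lambda a, b: a * b, sensor, gain)
opacity = Node(lambda v: max(0.0, min(1.0, v)), scaled)

print(opacity.evaluate())  # 1.0
```

Rewiring the patch means changing which nodes are passed as inputs, which is what dragging a connection between two blocks does in a graphical node editor.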

Since node-based environments often work closely with software libraries and other resources it is often quite possible to accomplish custom tasks by importing or developing additional functionality and libraries that integrate into the server. This means that if you are trying to use a new sensor or some uncommon piece of gear, node-based media servers are often the only type flexible enough to allow for integration without having to get support directly from the developer of the media server software. Other types of media servers have made great strides recently in integrating sensor-based interactions that are the hallmark of node-based media servers. However, they are still catching up when it comes to the flexibility and upgradability of node-based servers.

Aspects of node-based programming are included in some advanced media servers, such as Pandoras Box, and various content creation software packages, like After Effects. This provides an artist-friendly method of manipulating low-level computer behaviors normally addressed with text-based code.

Figure 5.28

Screen capture of Isadora server interface

Source: Alex Oliszewski

As patches become more complicated, they begin to take up a lot of screen real estate. We recommend using large external monitors when programming these kinds of media servers on a laptop. Patches can quickly become clunky and ungainly, making it difficult to figure out what is going on within the mess of wires and lines connecting everything. Organization and notation are crucial when needing to quickly look at a patch and understand what is happening.

Once you are facile in using this kind of media server the speed and ease with which you can program and affect content in a rehearsal are amazing, but getting to this point is not as easy as mastering other types of servers. If you use a node-based server other than Isadora, you must program a custom method and interface for cueing. This is not a trivial task when dealing with a large number of assets and the intensely limited amount of time available to programmers in rehearsals and tech.

VJ-Based Media Servers

VJ-based media servers are designed as more of a real-time instrument allowing artists to mix and composite premade content and live video cameras. Since they are designed for manipulation by an artist in real time during a performance, they do not typically have a cueing method for theatrical playback of prerecorded looks. This class of media server has advanced in recent years to include powerful video mapping capabilities. They easily route to and from interfaces, cameras, and other software packages for warping/mapping, like MadMapper. They are great tools to quickly manipulate video and allow for dynamic performances.

Common Media Server Features

Tip 5.2 Order of Turning On/Off Equipment

It is important to turn on and off the equipment in the proper order for everything to work correctly.

Figure 5.29

Screen capture of Resolume media server interface

Source: Alex Oliszewski

Order of turning on gear:

- Projectors/displays

- Media server

In order for the media server to properly recognize the displays they need to be powered on. If you don’t power on the displays first and then power on the media server, the computer may re-sequence the order of the displays. This could mean that you end up sending content to the wrong display. EDID management devices can help solve this, but it is still a good practice.

Order of turning off gear:

- Media server

- Projectors/displays

The media server should be powered down, with all of the displays still on. This way, the server remembers the proper sequence and routing of each display. Once the media server is off, power down all displays.

Built-In Mapping and Masking Features

Some media servers have the ability to warp/map content to specific surfaces and to use premade and custom masks. Generally, the most robust mapping and masking capabilities are found on turnkey media servers, but for most theatrical productions mapping features offered by Watchout, Isadora, and QLab are more than sufficient.

For More Info 5.3 Warping/Blending, Projection Mapping, and Masks

See “Working with Projectors” in Chapter 5.

Max # of Inputs

This refers to the maximum number of individual video signal inputs a system is capable of directly ingesting.

Max # of Outputs

This refers to the maximum number of individual video channels the system is capable of directly outputting for display or further manipulation and distribution.

When dealing with software-only servers the chosen hardware is just as important as, if not more important than, the software’s capability. The fact that the software allows you to have x number of inputs/outputs does not mean the computer is capable of actually outputting that number at performance-grade quality. Computer hardware in turnkey servers is configured and optimized to work with the software and typically is limited in its feature set to ensure performance quality. On self-built systems, it is up to you to make sure that the system is configured properly for optimal playback.

Max # of Layers

Layers generally refer to the number of separate video or image files that can be played/placed concurrently within the same raster/display space. In this way designers are able to mix separate elements in real time and maintain flexibility in the timing and composition of how content is composited on the stage.

Max # of Simultaneous HD Videos

While some servers may have many layers, there is a cap on the number of HD movies that can play at any given time without dropping frames.

Notable Supported Protocols

There are a number of networking protocols that a media server may be capable of interfacing with in order to communicate with other equipment.

For More Info 5.4 Networking

See “Networking” in Chapter 5.

Tip 5.3 Updating/Upgrading Media Server

When is it a good time to upgrade software or hardware?

Upgrading usually comes with the need to update plug-ins and can take anywhere from twenty minutes to multiple days to complete. Give yourself extra time whenever upgrading the media server. Make sure to check that the new version of the software and hardware is compatible with all the related equipment and associated software you are using. Upgrading can have other dependencies, such as the need for the latest operating system or new video codecs. If you don’t have these readily available you can find yourself stuck in the middle of the process and lose important working time.

You don’t want to upgrade the software-only media server only to find it won’t run effectively on the computer after the install. Do your homework by reading all the technical specifications first.

Do not update the media server after beginning to program a show unless you are willing to start again from scratch. Things can go wrong and you don’t want to lose time troubleshooting problems while you are in the midst of a project. Plan updates between shows.

This also is true for operating systems. Before upgrading the OS, make sure that the current media server software and hardware you are using work with the new OS.

Projectors

There are currently four main types of projectors (DLP, LCD, LCoS, and laser) that are commonly used in theatrical productions. Their specifications overlap in some respects and differ in others across types, makes, and models. This section covers the basics of projectors, the gear associated with them, and the fundamental technical tasks involved in working with them in the theatre.

Projector Types

DLP

DLP (digital light processing) describes the image creation device used by these projectors, which relies on an array of small moving mirrors to either block projected light or reflect it so that it passes through the lens. These projectors have the advantage of achieving a better quality of video black, which produces better dark tones than LCD technologies. Typically, light passes through a color wheel, a sequence of spinning colored glass filters that produce the different colors (RGB) needed to reproduce an image, and is then reflected by a single DLP chip. Some higher-end projectors have three DLP chips, one each for red, green, and blue.

When video is projected by a DLP projector, it may contain a shifting rainbow effect created by the red, blue, and green light reflected from the projector’s spinning disk of dichroic filters. If you use live video in a production and the camera catches sight of a DLP projection, you may see the rainbow effect in that live video. You may also see it when using a video camera to document the production. Changing the shutter speed and frame rate of the video camera capturing the projection may alleviate this problem. We have heard anecdotal stories that some people can even see this effect with the naked eye. Though this is rare, those who see it have found it quite annoying.

Figure 5.30

Shifting rainbow effect of a DLP projector as seen by a video camera

Source: Alex Oliszewski

LCD

LCD (liquid crystal display) describes the image creation device used in these projectors. LCD projectors represent the reason that video projection has become affordable and so widely adopted, by not only the theatre and performance community but also enthusiasts interested in building personal home cinemas. They provide sharp images, come in small sizes, and are often more affordable than other types of projectors.

This type of projection technology is similar to that of most LCD televisions. An image is created by transmitting light through three liquid crystal panels, one each for red, green, and blue. A light beam is split into the three colors and then recombined to create a full-color image.

The video black and dark tones created by LCD projectors tend to be brighter than those created by DLP projectors, offering less range in contrast than other newer projection technologies. These types of projectors are more susceptible to dust and other foreign bodies than DLP projectors and in turn tend to require more maintenance and cleaning during a performance run that lasts more than a few weeks.

LCoS

LCoS (liquid crystal on silicon) describes the image creation device used and is similar to that of LCD projectors. Instead of using a transmissive method, LCoS uses a reflective method of forming an image with an LCD panel. LCoS projectors tend to achieve better contrast ratios as well as darker video black and dark tones. However, along with LCD, these types of projectors are becoming rarer in favor of DLP and newer laser projectors.

Laser

Laser was originally an acronym, LASER, for “light amplification by stimulated emission of radiation,” but the term is no longer treated as an acronym and is simply written as laser. Unlike the other projector types listed here, laser describes not the image creation device but the light source. DLP, LCD, and LCoS imagers are still used in laser projectors. LCD-based laser projectors provide the sharpest and brightest images of any technology currently available, though they are relatively new and quite expensive to use at most theatrical scales.

LCoS-based laser projectors, unlike other projectors, have no specific set focal distance. This allows the projected image to be sharply focused over a wide range of distances. This is particularly desirable when a designer wants to project an image on a dimensional geometric surface, as is common in video mapping applications, or when images need to be projected on multiple surfaces that are placed at varying distances from upstage to downstage. Other advantages include:

- The ability to nearly instantly turn on/off since there are no lamps.

- Less fragility.

- Rated for a longer life than lamps.

- The ability to mount on the side, point straight up, or point straight down.

- Less maintenance.

- Enclosed lighting elements that do not require cleaning over the course of a long run.

Technical Specifications of Projectors

Lumens

Established by the American National Standards Institute (ANSI), the ANSI lumen is the standard calculation used to measure how bright a light source is. A projected image is brightest at the center and drops off toward the edges. Projectors have different settings, such as eco, standard, gaming, and cinema, that affect the lumen output, as well as the color temperature and the lamp life.

Resolution and Aspect Ratio

Projectors have different native resolutions and aspect ratios. When possible, always use the native settings. Other resolutions and aspect ratios are simulated by adding black bars to either the top/bottom or sides of the image.
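The black bars described above can be worked out with simple arithmetic. The following is a minimal sketch, assuming the content is scaled uniformly to the largest size that fits inside the native raster and then centered. The function name and the example resolutions are illustrative and do not come from any particular projector or media server.

```python
from fractions import Fraction


def fit_with_bars(src_w, src_h, native_w, native_h):
    """Fit a source image inside a native raster without distortion.

    Returns (scaled_w, scaled_h, side_bar, top_bottom_bar) in pixels,
    where each bar value is the size of ONE bar.
    """
    # Use the largest uniform scale that still fits both dimensions.
    scale = min(Fraction(native_w, src_w), Fraction(native_h, src_h))
    scaled_w = int(src_w * scale)
    scaled_h = int(src_h * scale)
    # Whatever raster is left over is split evenly into two black bars.
    side_bar = (native_w - scaled_w) // 2
    top_bottom_bar = (native_h - scaled_h) // 2
    return scaled_w, scaled_h, side_bar, top_bottom_bar


# 16:9 content on a 16:10 raster: bars at the top and bottom.
print(fit_with_bars(1920, 1080, 1920, 1200))  # (1920, 1080, 0, 60)
# 4:3 content on a 16:9 raster: bars at the sides.
print(fit_with_bars(1024, 768, 1920, 1080))   # (1440, 1080, 240, 0)
```

In both cases the pixels lost to the bars are still lit as video black, which is one reason to use native settings whenever possible.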

Contrast Ratio

The projector’s contrast ratio describes the difference between its brightest white and its darkest black. A contrast ratio of 5,000:1 means that the white of an image is 5,000 times brighter than the black. The higher the contrast ratio, the more detail is visible and the blacker the blacks are. Typically, the lowest contrast ratio you should work with is 3,000:1.
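To see what these ratios mean in practice, here is a small sketch that divides a white level by the contrast ratio to get the matching black level. The white level of 100 is an arbitrary illustrative value, not a measurement, and the function name is hypothetical.

```python
def black_level(white_level, contrast_ratio):
    """Return the black level implied by a white level and a contrast ratio.

    A ratio of 5,000:1 is passed as contrast_ratio=5000.
    """
    return white_level / contrast_ratio


# Compare the two ratios mentioned in the text at the same white level.
for ratio in (3000, 5000):
    level = black_level(100.0, ratio)
    print(f"{ratio}:1 -> black is {level:.4f} when white is 100")
```

At the same white level, the 5,000:1 projector produces a darker black than the 3,000:1 projector, which is why higher ratios give blacker blacks.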

Inputs/Outputs

Projectors have a number of different input types, with VGA and HDMI being the most common. Some projectors are pass-through-capable, meaning there is a video signal out. This allows you to either directly monitor the projector’s output or duplicate it by passing the video signal on to another projector.

Fan/Air Flow/Filters

All lamp-based projectors have a fan to cool the lamp(s). The fans circulate air by taking it in at one place on the projector and blowing the hot air out at another. The fans can be loud, which may be a problem depending on where the projectors are installed. Try to avoid any fan noise over 60 dB. There are disposable air filters over the air intake/output to prevent particulate matter from entering the projector. The filters need to be replaced regularly. Refer to the projector’s manual so you are familiar with maintenance needs and schedules.

Network Capable

Some projectors are capable of connecting to a network, usually via Cat 5/6 or serial inputs. Many new models have browser-based interfaces to control them remotely over the network.

Sometimes, when projectors of the same make and model are positioned near each other, a single remote controls both of them, which becomes a hassle when you are trying to change the settings of only one. You can avoid this by controlling them via the browser interface. An added bonus of controlling the projectors via a network is that you can access all of the projector’s settings, including power, right from the tech table. You should specify network cable runs in the system diagram and connect all projectors to the same local network that the media server or show control computer is connected to. Having a dedicated laptop or other computer to interface with the projectors is recommended.

Installation

Most projectors have a maximum tilt angle specified. Do not assume you can point a projector straight up or down or install it on its side. It is a very rare (and expensive) lamp-based projector that allows any of these installation methods, though they are a common feature of most laser projectors.

When installing projectors do not block the air intake/output. Leave at least one to two feet from the projector to other equipment or walls to allow for airflow. Without proper spacing, the heat that projectors discharge quickly builds up and may cause a projector’s internal sensors to trigger a shutdown. In a worst-case scenario, overheating can permanently damage a projector or cause visible distortions in its output. Refer to the projector’s manual for airflow warnings before building any baffles or boxes around them to control light leaks or fan noise.

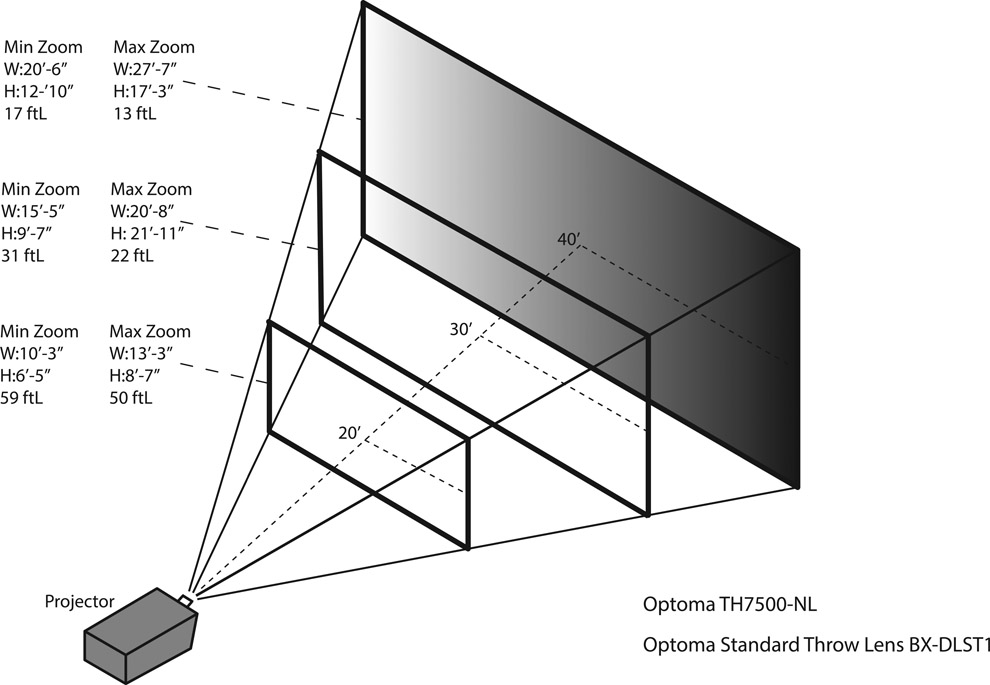

Projector Lenses

Professional projectors have interchangeable lenses, while cheaper, more portable projectors usually have a built-in lens. Projector lenses are similar to camera lenses, but differ in the fact that they are meant to direct the light from the projector out onto a surface to produce an image, rather than directing the light from a subject into the camera’s sensors to capture an image. F-stop and focal length are the two main technical specifications that you should be aware of when choosing and working with projector lenses.

The f-stop, as in camera lenses, is a number that specifies the aperture, which controls how much light the lens allows to pass through it, aiding in the rendering of image contrast and brightness. The lower the f-stop number is, the more light the lens allows to pass through, which in turn affects the depth of field in the same way it does for a camera. However, this is less of an issue with projectors in typical installations where the focus is set and locked to a single plane. As a rule of thumb, get the lowest f-stop lenses you can to ensure the brightest images. Most advanced projectors and lenses actually change the f-stop on the fly with a dynamic aperture to ensure the best contrast levels.
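As a rough illustration of why a lower f-stop gives a brighter image, the sketch below compares two f-stops. It assumes the standard optics relationship that the light a lens passes is proportional to one over the f-stop squared, and it ignores other losses inside the lens. The f-stop values are illustrative only.

```python
def relative_light(f_stop_a, f_stop_b):
    """Return how many times more light f_stop_a passes than f_stop_b.

    Assumes light passed is proportional to 1 / f_stop**2.
    """
    return (f_stop_b / f_stop_a) ** 2


# Compare an f/2.0 lens setting against f/2.6 and f/2.8.
for slower in (2.6, 2.8):
    gain = relative_light(2.0, slower)
    print(f"f/2.0 passes about {gain:.2f}x the light of f/{slower}")
```

A small change in the f-stop number therefore makes a larger difference in brightness than the number alone suggests.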

The focal length, usually specified in millimeter ranges (e.g., 50–75 mm), is used to determine the ratio between the final image size on the desired surface and the throw distance, which is the distance from the tip of the projector’s lens to the screen. Typically, manufacturers specify the f-stop over the entire focal length range of a lens, so for a 50–75 mm focal length you might have an f/2.0–2.6 f-stop range.

Marketing standards for projector lenses are hardly standard and can get confusing with the different terms and methods used by various manufacturers. Some might detail throw ratio and focal length, while another lists zoom ratios and maximum and minimum distances. These describe essentially the same thing, but require slightly different methods of decoding. It can be tricky, so make sure you understand how the specific manufacturer is using the terms to determine the lensing that is right for your application.

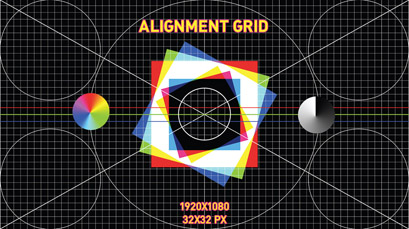

Different lenses have different throw ratios, which are written as two numbers separated by a colon, such as 2:1. The first number is the distance from the lens to the projection surface. The second number represents one foot of screen width. In this example, for every foot of screen width, the lens needs to be two feet away from the screen.
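The throw ratio arithmetic can be sketched in a few lines. This is a minimal example, assuming the throw ratio is expressed as a single number (2.0 for 2:1) and that distance and width use the same unit. The function names and the zoom lens ranges are hypothetical and are not taken from any manufacturer’s specifications.

```python
def throw_distance(throw_ratio, screen_width):
    """Distance from lens to screen needed to fill the given screen width."""
    return throw_ratio * screen_width


def required_throw_ratio(distance, screen_width):
    """Throw ratio needed to fill the screen width from the given distance."""
    return distance / screen_width


def lens_covers(min_ratio, max_ratio, distance, screen_width):
    """Check whether a zoom lens's throw ratio range fits this installation."""
    needed = required_throw_ratio(distance, screen_width)
    return min_ratio <= needed <= max_ratio


# A 2:1 lens filling a 10-foot-wide screen must sit 20 feet away.
print(throw_distance(2.0, 10))          # 20.0
# Filling a 12-foot-wide screen from 30 feet needs a 2.5:1 ratio.
print(required_throw_ratio(30, 12))     # 2.5
# Hypothetical zoom lenses: 1.5-2.0:1 is too short, 2.0-4.0:1 works.
print(lens_covers(1.5, 2.0, 30, 12))    # False
print(lens_covers(2.0, 4.0, 30, 12))    # True
```

Running the numbers both ways is useful: start from the lens to find where the projector can hang, or start from the available hanging position to find which lens to rent.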