CHAPTER 1

Windows Azure Platform Overview

In the past couple of years, cloud computing has emerged as a disruptive force in the information technology (IT) industry, with an impact of the same magnitude as the Internet and offshoring. Gartner Research has identified cloud computing as one of the “top 10 disruptive technologies 2008–2012.” According to Gartner, a disruptive technology is one that causes major change in the accepted way of doing things. For developers, architects, and operations teams, cloud computing has caused a major shift in how software services are architected, developed, deployed, and maintained.

Cloud computing democratizes IT, much as the Internet democratized the consumer industry and made outsourcing possible in the IT industry. The Internet opened up a vast ocean of accessible resources to consumers, ranging from free, advertising-based search to online banking. Cloud computing is bringing similar trends to businesses both small and big. Businesses can now gain agility simply by deploying their software in someone else's datacenter for a consumption fee. Upfront hardware costs drop out of the equation because the cloud service provider owns and maintains the hardware. Cloud service providers may sound like the hosting companies you already host your web sites on, but the big difference is that the cloud is a utility model built on highly scalable datacenter platforms. The cloud-computing wave is powerful enough for a company like Microsoft to start disrupting its own business model to invest in the opportunity.

In this chapter, I will cover some of the basics of cloud services and then jump into an overview of Microsoft's Windows Azure platform. In the previous edition of this book, I introduced the development models of some of the cloud service providers in the market. I took that approach because the technology was new and I wanted readers to understand the differences in the offerings. In this edition, I still compare the cloud service providers, but not in the same detail as in the previous edition, because the public literature about these platforms has matured enough to make that detail unnecessary here.

Introducing Cloud Services

As an introduction to our discussion, consider a typical scenario in today's medium to large enterprises. Assume a business unit has an immediate need to deploy a highly interactive niche web application (a micro-site) for a new product that will be released in five months. The application will provide the consumers a detailed view of the product and also the ability to customize and order the product right from the web site. The business unit has the budget but not the time and resources to implement it, and this deployment needs to happen in the next three months for it to be ready for the launch.

The IT hosting team understands the requirement, but deploying an application on IT resources requires coordination among the hardware, software, operations, and support teams. Ordering hardware and preparing the operating system build alone may take two months. After that, IT has to go through its standard testing process and operations procedures to make sure all the operations and support needs are identified. So, the earliest application delivery date would be in six months.

The business owner escalates the urgency of the issue, but cannot get past the process boundaries of the enterprise. Ultimately, the business owner outsources the project to a vendor and delivers the application in three months. Even though the application is delivered, it doesn't have the desired enterprise support and operations quality. It doesn't have to go this way: the company IT department should be the preferred, one-stop shop for all of the business's needs. Even though outsourcing gives you a great return on investment, in the long run you lose significantly on innovation. Your own IT department has the ability to innovate for you, and its members should be empowered to do so instead of being forced to overcome artificial process boundaries.

I see such scenarios on a daily basis, and I don't see a clear solution to the problem unless the entire process and structure in which these organizations operate is revamped, or unless technology like cloud computing is embraced wholeheartedly.

How will cloud computing help? To understand, let's go back to the original business requirement: the business owner has an immediate need to deploy an application, and the time frame is within three months. Basically, what the business is looking for is IT agility, and if the application takes only one month to develop, then is it really worth wasting six months on coordination and acquisition of the hardware?

Cloud computing gives you an instant-on infrastructure for deploying your applications. The provisioning of the hardware, operating system, and the software is all automated and managed by the cloud service providers.

Industry Terminology

For standardizing the overall terminology around cloud computing, the industry has defined three main cloud service categories: Infrastructure as a Service (IaaS), Platform as a Service (PaaS) and Software as a Service (SaaS).

IaaS is a utility service that provides hardware and virtualized operating systems running in massively scalable data centers of the cloud service provider. You can then rent this infrastructure to deploy your own software and manage the lifecycle of not only your software applications but also the underlying operating systems. In IaaS, you are still responsible for upgrading, patching, and maintaining the operating systems and the software applications that run on the rented hardware. Therefore, the target audiences for IaaS are system administrators and operations engineers. In short, IaaS abstracts the hardware and virtualization infrastructure from you.

PaaS is a utility service that provides the hardware, operating systems, and runtime environment for running your applications in massively scalable data centers of the cloud service provider. The PaaS provider maintains the operating systems and hardware for you, but you have to manage your applications and data. Therefore, the target audience for PaaS is typically developers. Even though the final deployment and maintenance will be managed by the operations teams, the platform empowers developers to make certain deployment decisions through configuration. In short, PaaS abstracts the infrastructure and the operating system from you.

SaaS is a utility service that provides you with an end-to-end software application as a service. You only have to manage the business data that resides in and flows through the software service. The hardware, operating systems, and software are managed for you by the SaaS provider. Therefore, the target audience for SaaS is typically business owners, who can go to the SaaS web site, sign up for the service, and start using it.

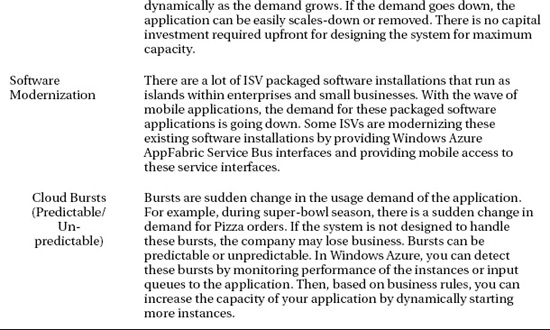

In its natural progression, a SaaS is built on a PaaS, and a PaaS is built on an IaaS. Therefore, PaaS providers already have IaaS capabilities built into their platforms; whether to offer those capabilities as a separate service is mostly a strategic decision. Figure 1-1 illustrates the typical management boundaries between IaaS, PaaS, and SaaS.

Figure 1-1. IaaS, PaaS, and SaaS Management Boundaries
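To make these management boundaries concrete, the following Python sketch encodes them as data. It is a simplified, hypothetical model: the layer names (`hardware`, `virtualization`, `operating_system`, `runtime`, `application`, `data`) paraphrase the IaaS, PaaS, and SaaS descriptions above and are not an official taxonomy, and user identities are left out because their management varies by scenario.

```python
from enum import Enum


class Owner(Enum):
    PROVIDER = "provider"
    CUSTOMER = "customer"


# Stack layers from bottom to top (hypothetical names for illustration).
LAYERS = ["hardware", "virtualization", "operating_system", "runtime", "application", "data"]

# The lowest layer that you, the customer, still manage under each service model.
FIRST_CUSTOMER_LAYER = {
    "IaaS": "operating_system",  # you upgrade, patch, and maintain the OS
    "PaaS": "application",       # the provider also runs the OS and the runtime
    "SaaS": "data",              # you manage only your business data
}


def who_manages(model: str, layer: str) -> Owner:
    """Return who is responsible for `layer` under `model` (IaaS, PaaS, or SaaS)."""
    if model not in FIRST_CUSTOMER_LAYER:
        raise ValueError(f"unknown service model: {model!r}")
    boundary = LAYERS.index(FIRST_CUSTOMER_LAYER[model])
    # Everything at or above the boundary is yours; everything below is the provider's.
    return Owner.CUSTOMER if LAYERS.index(layer) >= boundary else Owner.PROVIDER


if __name__ == "__main__":
    for model in FIRST_CUSTOMER_LAYER:
        yours = [layer for layer in LAYERS if who_manages(model, layer) is Owner.CUSTOMER]
        print(f"{model}: you manage {', '.join(yours)}")
```

Running it prints one line per model, showing the customer-managed layers shrinking from IaaS to SaaS.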

User identity management differs across scenarios. Some enterprises expose their on-premises identity provider as a federated service, while other businesses keep SaaS and on-premises identities separate. Other terms that have been floating around in the past couple of years include Data as a Service (DaaS), IT as a Service, Security as a Service, and more. In the interest of simplicity, I categorize all of these services as IaaS, PaaS, or SaaS.

Types of Clouds

Along with the types of cloud services, the industry also frequently talks about the types of clouds that exist in the marketplace. A cloud is the underlying data center architecture that powers cloud services. So what is the difference between a hosting provider and a cloud service provider? Great question.

In my experience, I would call a data center architecture a cloud only if it provides you with the following services:

- Pay-as-you-go service – A cloud must provide you with a utility service model where you are charged only for the resources you use or for the number of users accessing the service. The price should go up or down dynamically based on your usage.

- A self-service provisioning portal – A cloud must provide you with a self-service portal for acquiring and releasing resources manually and programmatically.

- Server hardware abstraction – A cloud must relieve you from acquiring and/or maintaining any hardware resources required for an application to run.

- Network hardware abstraction – A cloud must relieve you from acquiring and/or maintaining any networking hardware resources required by your application.

- Dynamic scalability – A cloud must provide you with a manual and/or programmatic option for dynamically scaling your application up and down to match the demand.

- High Availability Service Level Agreement (SLA) – A cloud must clearly define an SLA with guaranteed availability of the platform.

The location of this cloud determines its type: private or public. In the interest of keeping the topic simple, I will define only these two types of clouds.

A public cloud is a data center that exists in the public domain and is accessible over the Internet. The public cloud is managed by the cloud service provider. Some public cloud platforms integrate with your company's intranet services through federation and virtual private networks or similar connectivity, but the core application and data still run in the cloud service provider's data center.

A private cloud is cloud infrastructure running in your own datacenter. Because the definition of a cloud is wide open to interpretation, every company has carved out its own definition of a private cloud. I use the capabilities defined previously as the bare minimum requirements for defining a public or a private cloud. If a private cloud does not offer any one of these services, then it's merely an optimized datacenter. That is not necessarily a bad choice; an optimized datacenter may be a better choice than a cloud in a particular scenario. The primary difference between private and public clouds is the capital cost of provisioning private cloud infrastructure, which a public cloud does not require from you.
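The six capabilities listed earlier work as a simple checklist. The sketch below applies the bare-minimum rule from the previous paragraph: a datacenter that lacks any capability is an optimized datacenter, and one that has all six is a private or public cloud depending on where it runs. The class and field names are hypothetical and chosen for illustration only.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass(frozen=True)
class DatacenterCapabilities:
    """One flag per item in the cloud checklist (field names are hypothetical)."""
    pay_as_you_go: bool
    self_service_portal: bool
    server_hardware_abstraction: bool
    network_hardware_abstraction: bool
    dynamic_scalability: bool
    availability_sla: bool


def missing_capabilities(caps: DatacenterCapabilities) -> list[str]:
    """Return the checklist items that the datacenter does not provide."""
    return [f.name for f in fields(caps) if not getattr(caps, f.name)]


def classify(caps: DatacenterCapabilities, on_premises: bool) -> str:
    """All six capabilities make a cloud; its location makes it private or public."""
    if missing_capabilities(caps):
        return "optimized datacenter"
    return "private cloud" if on_premises else "public cloud"


if __name__ == "__main__":
    # A virtualized in-house datacenter without metered, pay-as-you-go billing.
    in_house = DatacenterCapabilities(
        pay_as_you_go=False, self_service_portal=True,
        server_hardware_abstraction=True, network_hardware_abstraction=True,
        dynamic_scalability=True, availability_sla=True)
    print(classify(in_house, on_premises=True))  # optimized datacenter
    print(missing_capabilities(in_house))        # ['pay_as_you_go']
```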


Note Throughout this book, depending on the context of the conversation, I use the terms cloud services and cloud applications interchangeably to generally represent cloud services. A cloud service may be thought of as a collection of cloud applications in some instances, but in the context of this book, both mean the same thing.

Defining Our Terms

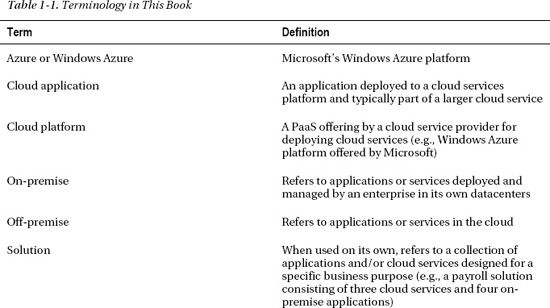

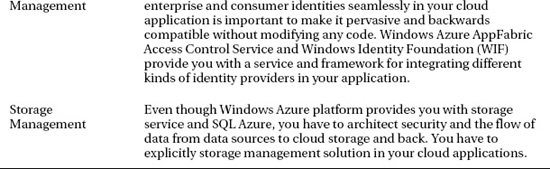

Before diving deep into cloud services, I would like to introduce you to the terminology used in this book. For consistency, this section defines some important terms. Table 1-1 lists the terms and their definitions as they relate to this book.

Cloud Service Providers

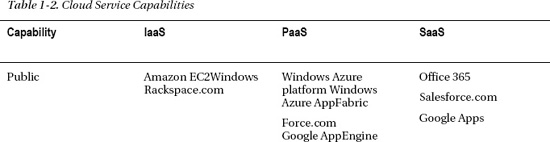

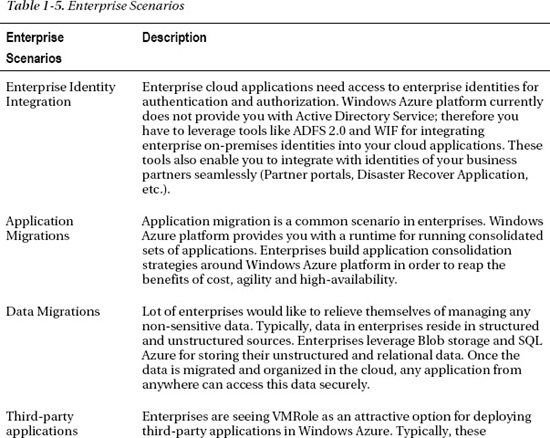

In the past couple of years, several large software and Internet platform companies have started offering cloud services. It was a natural transition for companies like Amazon, Google, and Microsoft that already had a large Internet presence. VMware has been building these capabilities through acquisitions like SpringSource and Zimbra. The offerings from these cloud service providers are fragmented, and it can sometimes be difficult to get a grasp of all the service offerings from even a single vendor. In Table 1-2, I have listed a few providers with mature cloud service offerings. You can apply the same capabilities table to any current or future cloud service provider.

From Table 1-2, you will be able to qualify the cloud service providers that fit your specific needs. Typically, you will not find any single cloud service provider that satisfies all your needs, which is true even with on-premises software.

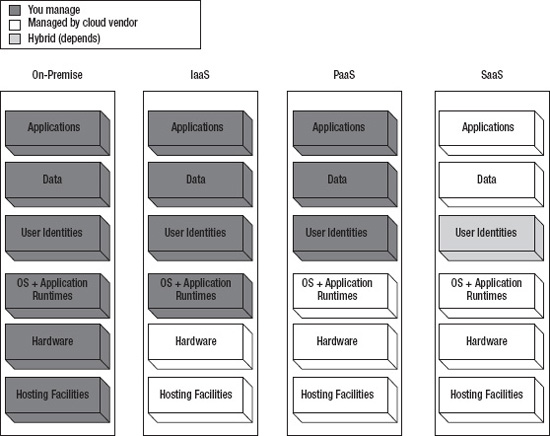

Shifting to the Cloud Paradigm

As seen in the previous section, the choices provided by these offerings can put you in a decision dilemma, and most probably you will end up testing at least two cloud services before deciding on one. The move from a traditional on-premise model to an off-premise cloud model is a fundamental paradigm shift for businesses. Usually businesses are in their comfort zone when managing IT internally. With the cloud services model, even though the cost savings become evident, the challenge for businesses is to get out of their comfort zones and make the paradigm shift of moving to cloud services to stay competitive. The shift does not happen overnight; it takes several months of rigorous analysis, planning, and implementation. Depending on the costs, benefits, risks, and security requirements, a business can stay on-premise, embrace cloud services fully, or settle on a hybrid model yielding cost benefits while keeping core competencies on-site. Figure 1-2 illustrates the ownership of key enterprise assets in on-premise, cloud, and hybrid scenarios.

The recommended migration process is to move step by step, one application at a time. When the offshore software development model became popular in 2000, businesses faced a similar challenge in getting aboard the outsourcing wagon. Now, many businesses have significant offshore investments and clearly see the payoffs. It took time and learning for businesses to make the paradigm shift in offshore software development projects. For cloud services to succeed, businesses will be required to make a paradigm shift again.

Figure 1-2. On-premise, cloud, and hybrid scenarios

In Figure 1-2, the on-premise and cloud scenarios are fairly easy to understand, because all the assets are either on-premise or in the cloud. The user profiles asset is usually required on both sides because of single sign-on requirements between on-premise and cloud services. In hybrid models, the business and the service provider must negotiate and decide which assets and services are better suited to on-premise locations, the cloud, or both. In the Hybrid 1 scenario in Figure 1-2, the user profiles and hosting facilities are present on both sides; the business applications are in the cloud, whereas the utility applications, operating systems, data storage, and hardware are on-premise. In the Hybrid 2 scenario, the user profiles, operating systems, data storage, and hardware are present on both sides, whereas the business applications, utility applications, and hosting facilities are in the cloud. Most companies typically choose the hybrid model that best suits them.
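The hybrid placements can also be written down as data, which makes questions like "which assets must exist on both sides?" easy to answer during migration planning. This hypothetical encoding mirrors only the Hybrid 1 and Hybrid 2 descriptions above; the asset names come from the text, while the data structure and the helper functions are illustrative.

```python
from __future__ import annotations

ON_PREMISE, CLOUD = "on-premise", "cloud"
ON_PREM_ONLY = frozenset({ON_PREMISE})
CLOUD_ONLY = frozenset({CLOUD})
BOTH = frozenset({ON_PREMISE, CLOUD})

# Asset placement for the two hybrid scenarios described in the text.
HYBRID_SCENARIOS = {
    "Hybrid 1": {
        "user profiles": BOTH,
        "hosting facilities": BOTH,
        "business applications": CLOUD_ONLY,
        "utility applications": ON_PREM_ONLY,
        "operating systems": ON_PREM_ONLY,
        "data storage": ON_PREM_ONLY,
        "hardware": ON_PREM_ONLY,
    },
    "Hybrid 2": {
        "user profiles": BOTH,
        "operating systems": BOTH,
        "data storage": BOTH,
        "hardware": BOTH,
        "business applications": CLOUD_ONLY,
        "utility applications": CLOUD_ONLY,
        "hosting facilities": CLOUD_ONLY,
    },
}


def assets_at(scenario: str, location: str) -> list[str]:
    """List, alphabetically, the assets a scenario places at `location`."""
    placement = HYBRID_SCENARIOS[scenario]
    return sorted(asset for asset, where in placement.items() if location in where)


def shared_assets(scenario: str) -> list[str]:
    """Assets present on both sides, such as user profiles for single sign-on."""
    return sorted(a for a, where in HYBRID_SCENARIOS[scenario].items() if where == BOTH)


if __name__ == "__main__":
    for name in HYBRID_SCENARIOS:
        print(name, "shared:", shared_assets(name))
```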

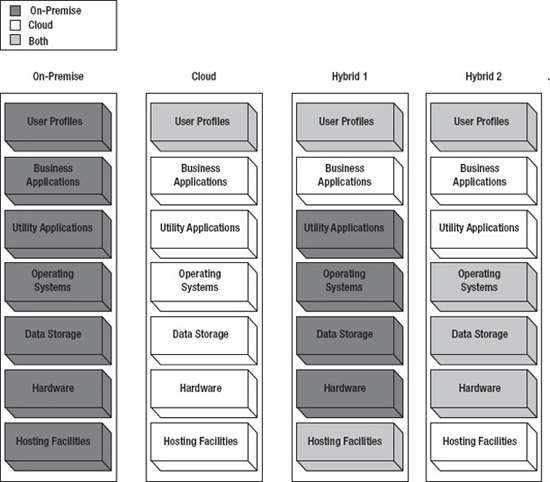

Understanding the Cloud Services Ecosystem

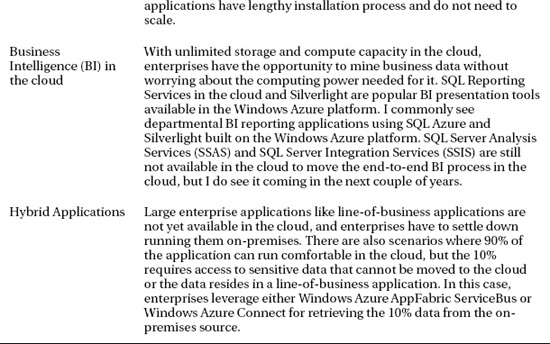

The cloud services ecosystem consists of five major roles, as shown in Figure 1-3.

Figure 1-3. The cloud services ecosystem

Service Providers

The service providers are the companies that provide cloud services to businesses and consumers. These companies run giant data centers hosting massively virtualized and redundant software and hardware systems. Service providers like Amazon, with its EC2 service, and Microsoft, with its Windows Azure platform, fall into this category. These companies have expertise not only in data center management but also in scalable software management. The service providers may offer services directly to businesses, consumers, or ISVs.

Software Vendors

Software designed to run on-premise is very different from software designed for the cloud. Even though both may provide the same business functionality to end users, they may differ architecturally. Cloud services must account for multi-tenancy, scalability, reliability, and performance at a much broader scale than on-premise architecture. Cloud services run in data centers offered by cloud service providers. In some cases, there is a significant overlap between the service providers and the software vendors. For example, the Microsoft Windows Azure platform, Microsoft Office 365, and Google Apps are cloud software running in their vendors' own datacenters. These software vendors have found it economically feasible to package hardware and software together in the datacenters to optimize software delivery via the cloud.

Independent Software Vendors

Independent software vendors (ISVs) are going to play a key role in the success of cloud services because of their expertise in vertical business applications. ISVs typically build vertical applications on an already existing platform. ISVs identify the business demand for a particular solution in vertical markets and thrive by offering the solution on existing platforms. The cloud offers a great platform for ISVs to build vertical solutions. For example, an ISV could build a medical billing solution in the cloud and offer the service to multiple doctors and hospitals. The infrastructure required for building multitenant, scalable software is already provided by the service providers, so ISVs can focus solely on building the business solution, which enables them to penetrate new markets with lightning speed.

Enablers

Enablers (also called implementers or system integrators) are vendors offering services to build end-to-end solutions by integrating software from multiple vendors. Many enterprises purchase software licenses from vendors but never deploy the software because of a lack of strategic initiative or product expertise. Enablers fill the gap by offering consulting services for the purchased software. Organizations like Microsoft Consulting Services and IBM Global Services offer customer-specific services regardless of the underlying platform. Enablers play a key role by integrating on-premise and cloud services or building end-to-end cloud services customized for a business. Cloud platforms offer enablers an opportunity to expand their service offerings beyond on-premise solutions.

Businesses

Finally, businesses drive the demand for software products and services. If businesses see value or cost savings in a particular solution, they do not hesitate to implement it. To stay competitive in today's market, businesses have to keep their IT and applications portfolios up to date and take advantage of economies of scale wherever possible. Cloud service offerings are architected to achieve economies of scale by supporting multiple businesses on a scalable and automated platform. For cloud service offerings to be successful, service providers, software vendors, ISVs, and enablers must work together to create cloud applications and services that provide not only cost savings but also a competitive edge to businesses and, in turn, to the consumers of those businesses.

Microsoft's Cloud Strategy

For building a successful cloud services business, a company needs to first invest in building globally distributed datacenters that are highly automated, efficient, and well connected. Building such datacenters requires significant investment and support from software and operating system business partners to help monetize them. Therefore, typically, you will only see very large companies like Microsoft and Amazon offer such services at a global scale.

Microsoft is the largest software manufacturer in the world, and its Global Foundation Services (GFS) group has done a phenomenal job of building a global network of datacenters that software partners within the company can leverage for delivering software services. This network of Microsoft datacenters is termed Microsoft's cloud. I have toured one of these datacenters, and I think they are among the most advanced in the world. What follows is a list of the 10 largest datacenters in the world. Four of the top 10 belong to Microsoft (I have highlighted them in bold).1


1. 350 East Cermak / Lakeside Technology Center (Digital Realty)

2. Metro Technology Center, Atlanta (Quality Technology)

3. The NAP of the Americas, Miami (Terremark)

4. NGD Europe, Newport, Wales (Next Generation Data)

5. **Container Data Center, Chicago (Microsoft)**

6. **Microsoft Dublin (Microsoft)**

7. Phoenix ONE, Phoenix (i/o Datacenters)

8. CH1, Elk Grove Village, Ill. (DuPont Fabros)

9. **Microsoft datacenters in Quincy, Washington (9A), and San Antonio (9B) (Microsoft)**

10. The SuperNAP, Las Vegas (Switch Communications)

As the adoption of cloud services increases in the IT industry, this list will change over time.


Note I highly recommend you visit www.globalfoundationservices.com for more information on Microsoft's datacenters and GFS.

Microsoft's cloud strategy consists of the following four main initiatives:

- Build a network of highly available datacenters around the world as a software platform of the future.

- Leverage these datacenters for delivering its PaaS offerings.

- Leverage these datacenters for delivering its SaaS offerings.

- Leverage the partner network for delivering IaaS offerings.

PaaS and SaaS offerings will be the primary mode of delivering most of Microsoft's software assets in the future. In the past two years, Microsoft has successfully positioned the Windows Azure platform as a leading public cloud platform, going the extra mile to innovate in PaaS beyond traditional IaaS. Today, several Fortune 500 companies and small and medium businesses are actively using the Windows Azure platform. Ultimately, Microsoft will need to provide an IaaS offering just to ease the on-boarding process for enterprises. The current on-boarding process is not as attractive for enterprise customers because of the investment required to migrate legacy applications to the Windows Azure platform.

Windows Azure Platform Overview

In 2008, during the Professional Developers Conference (PDC), Microsoft announced its official entry into the PaaS arena with the Windows Azure platform. Even though Microsoft's SaaS offering, the Business Productivity Online Suite (BPOS), now Office 365, has been around for a few years, the Windows Azure platform is Microsoft's attempt to create a complete PaaS offering. See Figure 1-4.

Figure 1-4. Platform as a service

The Windows Azure platform is a key component of Microsoft's cloud strategy. It is a paradigm shift in which unlimited resources are available at your fingertips for developing and deploying any .NET application in a matter of minutes. It disrupts your current process-oriented, sequential way of thinking. Microsoft has designed Windows Azure as an operating system for the data center. You don't have to wait for server and networking hardware to be provisioned, and then for the operating system to be installed, before deploying your application. The Windows Azure platform drastically reduces the time from idea to production by completely eliminating the hardware and operating system provisioning steps.

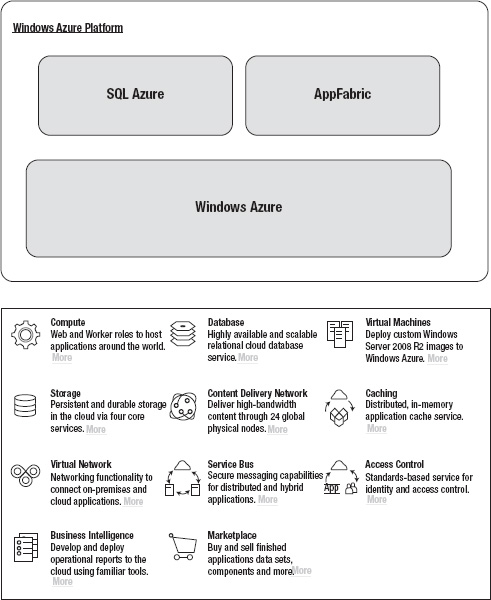

The Windows Azure platform is a collection of building blocks for cloud services. Microsoft has been in the cloud business for quite some time with consumer cloud services like MSN, Xbox Live, and Hotmail. Microsoft has also rebranded its business productivity and collaboration suite as Office 365, which includes services like SharePoint Online, Exchange Online, and Conferencing Services (Microsoft Office Lync). The Windows Azure platform consists of three core components: Windows Azure, SQL Azure, and Windows Azure AppFabric, as shown in Figure 1-5.

Figure 1-5. Microsoft Windows Azure Platform (Source: www.microsoft.com/windowsazure/features/)

The core components are further split into several sub-components, but the overall idea is to provide an à la carte menu so you can use any individual component in your solution. Pricing is typically based on the usage of each sub-component. The feature set is updated every six months, so more features will be released between the writing and the publishing of this book.


Note Figure 1-5 addresses only the core components of the Windows Azure platform. As the platform matures, Microsoft is gradually promoting some sub-categories into offerings of their own, like the Virtual Network category, which includes Windows Azure Connect and Traffic Manager. The Marketplace feature currently consists of the Windows Azure Marketplace DataMarket, where you can publish and monetize your data over standard interfaces. Covering DataMarket is beyond the scope of this book.

Windows Azure is the operating system for the datacenter that provides compute, storage, and management services. SQL Azure is a relational database engine in the Windows Azure Platform. Windows Azure AppFabric is the middleware component that consists of services like Service Bus, Access Control, and Caching Services. Developers can either build services that span across all these components or pick and choose the components as needed by the service architecture. The overall concept of Windows Azure platform is to offer developers the flexibility to plug in to the cloud environment as per the architectural requirements of the service.

In this book, I cover all three main components and their sub-components.

Software development today typically consists of one or more of the following types of applications:

- Rich client and Internet applications – Examples are Windows Client, Windows Presentation Foundation, HTML5, and Silverlight.

- Web services and web applications – Examples are ASP.NET, ASP.NET Web Services, and Windows Communication Foundation.

- Server applications – Examples are Windows Services, WCF, middleware, message queuing, and database development.

- Mobile applications – Examples are .NET Compact Framework and mobile device applications.

The Windows Azure platform provides you with development tools and the deployment platform for developing and deploying all of these types of applications.


Note It took me more time to write this chapter than it did to develop and deploy a high-scale compute application on Windows Azure platform. Traditionally, the application would have taken 6–12 months to develop and deploy in production.

Understanding Windows Azure Compute Architecture

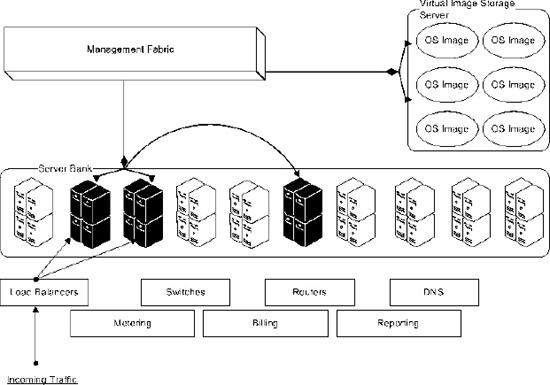

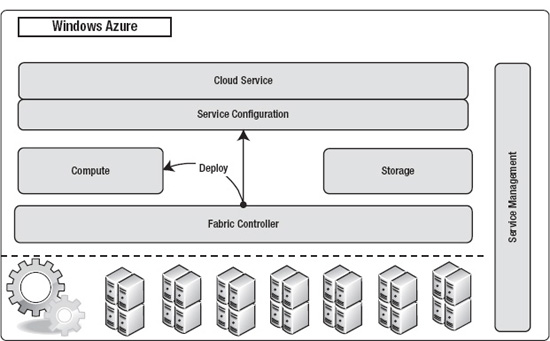

Fundamentally, the Windows Azure platform compute architecture is based on a software fabric controller that runs in the data center and defines a clear abstraction between server hardware and operating systems. The fabric controller automates the deployment of virtualized operating system images on server hardware. In its simplest form, a typical cloud data center consists of a bank of server hardware and massive storage for fully functional operating system images. The fabric controller manages the life cycle of the deployment by allocating and decommissioning hardware and operating system images as needed. When you deploy your service to the cloud, the fabric controller provisions the hardware servers, deploys operating system images on those servers, provisions the appropriate networking software such as routers and load balancers, and deploys your service to those servers. Once the service is deployed, it is ready to be consumed. The number of service instances is configured by the service owner and typically depends on the demand and high-availability requirements of the service. Over the life cycle of each instance, the fabric controller is responsible for automating its maintenance, security, operations, and high availability. Figure 1-6 illustrates the Windows Azure platform compute architecture.
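The fabric controller's job can be pictured as a control loop that keeps the observed state of a deployment equal to the state the service owner declared. The following Python sketch is a toy model of that idea under stated assumptions: `ToyFabricController`, `Deployment`, and `Instance` are hypothetical names, provisioning is reduced to a log entry, and nothing here reflects the actual fabric controller's implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import count


@dataclass
class Instance:
    instance_id: int
    healthy: bool = True


@dataclass
class Deployment:
    """Hypothetical model of one service deployment."""
    service_name: str
    desired_instances: int  # configured by the service owner
    instances: list[Instance] = field(default_factory=list)


class ToyFabricController:
    """Illustrative desired-state loop; not the real Windows Azure fabric controller."""

    def __init__(self) -> None:
        self._ids = count(1)
        self.log: list[str] = []

    def _provision(self, deployment: Deployment) -> Instance:
        # Stands in for: allocate a server, deploy the OS image,
        # configure load balancing, and deploy the service package.
        instance = Instance(next(self._ids))
        self.log.append(f"provisioned {deployment.service_name}#{instance.instance_id}")
        return instance

    def reconcile(self, deployment: Deployment) -> None:
        """Drive the observed instances toward the desired instance count."""
        for inst in [i for i in deployment.instances if not i.healthy]:
            deployment.instances.remove(inst)  # retire failed instances
            self.log.append(f"decommissioned unhealthy #{inst.instance_id}")
        while len(deployment.instances) < deployment.desired_instances:
            deployment.instances.append(self._provision(deployment))
        while len(deployment.instances) > deployment.desired_instances:
            extra = deployment.instances.pop()
            self.log.append(f"decommissioned surplus #{extra.instance_id}")


if __name__ == "__main__":
    fc = ToyFabricController()
    web = Deployment("web", desired_instances=3)
    fc.reconcile(web)                 # provisions instances 1, 2, 3
    web.instances[0].healthy = False  # simulate a hardware failure on instance 1
    fc.reconcile(web)                 # replaces it with instance 4
    print([i.instance_id for i in web.instances])  # [2, 3, 4]
```

The design choice worth noting is that the loop compares declared and observed state rather than replaying commands, so the same `reconcile` call handles first deployment, failure recovery, and scaling.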

Figure 1-6. Windows Azure platform compute architecture

The architecture also consists of some fixed assets like switches, routers, and DNS servers that manage the workload distribution across multiple service instances. This architecture is componentized and deployed into several geographically dispersed datacenters for providing geo-located services. The metering, billing and reporting components complement the infrastructure with the ability to measure and report the usage of the service per customer.
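Metering and billing per customer boil down to aggregating usage records and multiplying them by unit prices, which is what makes the pay-as-you-go model work. The sketch below assumes hypothetical meter names and made-up rates; they are not Windows Azure meters or prices.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    customer: str
    meter: str       # hypothetical meter name, e.g. "compute_hours"
    quantity: float


# Hypothetical unit prices for illustration only; these are NOT Windows Azure prices.
RATES = {"compute_hours": 0.10, "storage_gb_month": 0.15, "egress_gb": 0.12}


def usage_by_customer(records: list[UsageRecord]) -> dict[str, dict[str, float]]:
    """Metering: sum each customer's usage per meter."""
    totals = defaultdict(lambda: defaultdict(float))
    for record in records:
        totals[record.customer][record.meter] += record.quantity
    return {customer: dict(meters) for customer, meters in totals.items()}


def invoice_amount(meters: dict[str, float], rates: dict[str, float] = RATES) -> float:
    """Billing: charge only for what was used, rounded to cents."""
    return round(sum(qty * rates[meter] for meter, qty in meters.items()), 2)


if __name__ == "__main__":
    records = [
        UsageRecord("contoso", "compute_hours", 720),  # one instance for 30 days
        UsageRecord("contoso", "storage_gb_month", 10),
        UsageRecord("fabrikam", "compute_hours", 48),
    ]
    for customer, meters in usage_by_customer(records).items():
        print(customer, meters, invoice_amount(meters))
```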


Note The Windows Azure platform is hosted in six datacenters around the world: North Central US (1), South Central US (1), Europe (2), and Asia (2). From the administration portal, you can choose the datacenter location for your application. Microsoft gives regular tours of these datacenters to enterprises, so poke your management to get a tour of one of these world-class datacenters. You can find more information about these datacenters at www.globalfoundationservices.com.

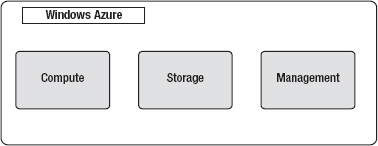

Windows Azure

Windows Azure is the core operating system of the platform that provides all the necessary features for hosting your services in the cloud. It provides a runtime environment that includes the IIS web server, background services, storage, queues, management services, and load-balancers. Windows Azure also provides developers with a local development fabric for building and testing services before they are deployed to Windows Azure in the cloud. Windows Azure also integrates seamlessly with the Visual Studio 2010 development environment by providing you with service publishing and IntelliTrace features. Figure 1-7 illustrates the three core services of Windows Azure.

Figure 1-7: Windows Azure core services

Following is a brief description of the three core services of Windows Azure:

Compute – The compute service offers scalable hosting of services on 64-bit Windows Server 2008 R2. The platform is virtualized and designed to scale dynamically based on demand. The platform runs Internet Information Services (IIS) version 7 enabled for ASP.NET web applications. Starting with version 1.3 of the Windows Azure SDK, you have access to full IIS and administrative features. You can also script start-up tasks that require administrative privileges, such as writing to the registry, registering a COM DLL, or installing third-party components like a Java Virtual Machine. Developers can write managed and unmanaged services for hosting in Windows Azure Compute without worrying about the underlying operating system infrastructure.

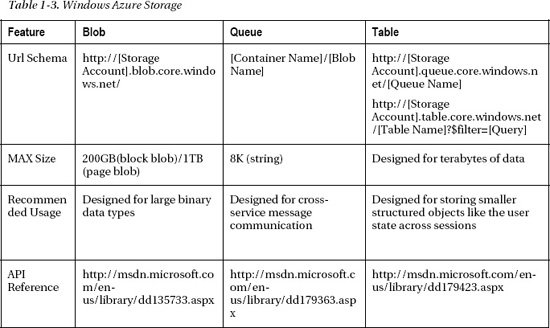

Storage – Windows Azure supports three types of storage: tables, blobs, and queues. All three support direct access through REST APIs. Windows Azure tables are not relational database tables like SQL Server tables; instead, they provide structured data storage with an independent data model popularly known as the entity model. Tables are designed for storing terabytes of small, highly available data objects. For example, user profiles in a high-volume e-commerce site would be a good candidate for tables. Windows Azure blobs are designed to store large sets of binary data like videos, images, and music in the cloud. Windows Azure queues are asynchronous communication channels for connecting services and applications, not only in Windows Azure but also on-premises. Queues are also the recommended method of communication between multiple Windows Azure role instances. The queue infrastructure is designed to support an unlimited number of messages, but each message cannot exceed 8KB. A single storage account gives you access to tables, blobs, and queues. The total capacity of one storage account is 100TB, and you can have multiple storage accounts. Windows Azure Drive provides NTFS drive volumes for Windows Azure applications in the cloud: you can create a drive, upload it to blob storage, and then attach it as an external drive to your Windows Azure role instances. Drives give you durable storage that is natively accessible within your role, but you lose the broadly distributed nature of blob storage, in which data is scaled out across multiple storage servers. From the blob storage perspective, a drive is still a single blob.
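Because each queue message is capped at 8KB, a common pattern is to store a large payload in blob storage and enqueue only a reference to it. The following Python sketch illustrates that pattern with in-memory stand-ins: `InMemoryQueue`, `InMemoryBlobStore`, `send`, and `receive` are hypothetical names, not Windows Azure SDK APIs, and a one-byte tag distinguishes inline payloads from blob pointers so a small payload is never mistaken for a reference.

```python
from __future__ import annotations

import uuid

MAX_MESSAGE_BYTES = 8 * 1024  # per-message limit stated in the text
INLINE, POINTER = b"I", b"B"  # hypothetical one-byte message tags


class InMemoryBlobStore:
    """Hypothetical stand-in for blob storage."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, name: str, data: bytes) -> None:
        self._blobs[name] = data

    def get(self, name: str) -> bytes:
        return self._blobs[name]


class InMemoryQueue:
    """Hypothetical stand-in for a storage queue that enforces the size limit."""

    def __init__(self) -> None:
        self._messages: list[bytes] = []

    def put_message(self, body: bytes) -> None:
        if len(body) > MAX_MESSAGE_BYTES:
            raise ValueError(f"message is {len(body)} bytes; limit is {MAX_MESSAGE_BYTES}")
        self._messages.append(body)

    def get_message(self) -> bytes | None:
        return self._messages.pop(0) if self._messages else None


def send(queue: InMemoryQueue, blobs: InMemoryBlobStore, payload: bytes) -> None:
    """Enqueue small payloads inline; park large ones in a blob and enqueue its name."""
    if len(INLINE) + len(payload) <= MAX_MESSAGE_BYTES:
        queue.put_message(INLINE + payload)
    else:
        name = f"payloads/{uuid.uuid4()}"
        blobs.put(name, payload)
        queue.put_message(POINTER + name.encode("ascii"))


def receive(queue: InMemoryQueue, blobs: InMemoryBlobStore) -> bytes | None:
    """Dequeue one message and resolve blob pointers back into the full payload."""
    body = queue.get_message()
    if body is None:
        return None
    tag, rest = body[:1], body[1:]
    return blobs.get(rest.decode("ascii")) if tag == POINTER else rest
```

A real worker would also delete the blob once the message has been processed; that cleanup is omitted here to keep the sketch short.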

Management – The management service provides automated infrastructure and service management capabilities for Windows Azure cloud services. These capabilities include automatically commissioning virtual machines and deploying services in them, as well as configuring switches, access routers, and load balancers to maintain the user-defined state of the service. The management service includes a fabric controller that is responsible for maintaining the health of the service. The fabric controller supports dynamic upgrades of services without incurring any downtime or degradation. The Windows Azure management service also supports custom logging, tracing, and service usage monitoring. You can interact with the management service through a secure REST API. Most of the functionality available on the Windows Azure platform portal is also available through the Service Management API, so you can execute these tasks programmatically. The API is widely used for automating provisioning and dynamic scaling. For example, the publish feature in Visual Studio and the Windows Azure PowerShell cmdlets use the Service Management API behind the scenes to deploy cloud services to Windows Azure.

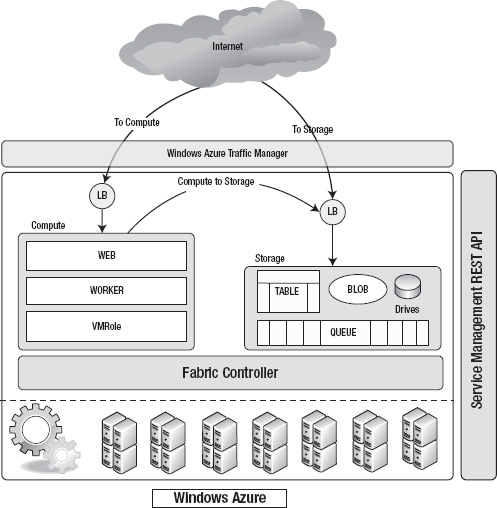

Figure 1-8 illustrates the Windows Azure architecture from the perspective of its available services.

Figure 1-8. Windows Azure

When a request from the Internet comes in for your application, it passes through the load balancer and then to the specific Web/Worker role instance running your application. If a request for a Storage service comes in, it passes through the load balancer to the appropriate Storage service component.

Windows Azure Traffic Manager is a cross-region (cross-datacenter) high-availability service that lets you configure rules-based traffic diversion to your applications running in multiple Windows Azure datacenters. Traffic Manager should be a key component of your disaster recovery and business continuity strategy. Although Traffic Manager diverts traffic, it does not replicate data across datacenters; you have to replicate the data yourself to keep it consistent across datacenters. At the time of writing, Traffic Manager was not yet available as a production release. The Community Technology Preview (CTP) version offered three traffic diversion rules (Fault-tolerance, Performance-based, and Round-Robin), and you could try it out for free.
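To make the three diversion rules concrete, here is a small Python sketch of the decision each one makes. It is not Traffic Manager's implementation or API. The Endpoint type, the endpoint names, the health flags, and the latency figures are all hypothetical.

```python
import itertools
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Endpoint:
    name: str          # hypothetical deployment name
    healthy: bool      # result of a hypothetical health probe
    latency_ms: float  # made-up latency measured from the caller's region


def fault_tolerance(endpoints: List[Endpoint]) -> Optional[Endpoint]:
    """Send traffic to the first healthy endpoint, in priority order."""
    return next((ep for ep in endpoints if ep.healthy), None)


def performance(endpoints: List[Endpoint]) -> Optional[Endpoint]:
    """Send traffic to the healthy endpoint with the lowest latency."""
    healthy = [ep for ep in endpoints if ep.healthy]
    return min(healthy, key=lambda ep: ep.latency_ms) if healthy else None


class RoundRobin:
    """Rotate traffic across the healthy endpoints."""

    def __init__(self, endpoints: List[Endpoint]):
        self._endpoints = endpoints
        self._positions = itertools.cycle(range(len(endpoints)))

    def pick(self) -> Optional[Endpoint]:
        # Try each endpoint at most once per call, skipping unhealthy ones.
        for _ in range(len(self._endpoints)):
            ep = self._endpoints[next(self._positions)]
            if ep.healthy:
                return ep
        return None


endpoints = [
    Endpoint("north-central-us", healthy=False, latency_ms=20.0),
    Endpoint("west-europe", healthy=True, latency_ms=80.0),
    Endpoint("east-asia", healthy=True, latency_ms=45.0),
]
print(fault_tolerance(endpoints).name)     # west-europe
print(performance(endpoints).name)         # east-asia
rr = RoundRobin(endpoints)
print([rr.pick().name for _ in range(3)])  # ['west-europe', 'east-asia', 'west-europe']
```

All three rules skip unhealthy endpoints. They differ only in how they choose among the healthy ones. None of them moves data, which is why replication remains your responsibility.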

Compute

The Windows Azure Compute service is based on a role-based design. To implement a service in Windows Azure, you implement one or more of the roles supported by the service. The current version of Windows Azure supports three roles: Web role, Worker role, and VM role. A role defines the specific behavior of virtual machine instances running in the cloud. A Web role is used for deploying web sites, a Worker role is used for deploying background services or middle-tier applications, and a VM role is typically used for running applications that do not fit a Web or Worker role. Applications with intrusive installation processes are well suited for the VM role. The architecture of your system should dictate which types of roles your application needs.

The abstraction between the roles and the hardware is managed by the Fabric Controller, which automates the role instances end to end, from hardware provisioning to maintaining service availability. The Fabric Controller reads the configuration information you provide for your services and adjusts the deployment profile accordingly, as shown in Figure 1-9.

Figure 1-9. Fabric Controller deploys application

In the service configuration, you have to specify how many instances of a particular role you want to start with. The provisioning portal and the service management API can give you the status of the deployment. Once the cloud service is deployed, it is managed entirely by Windows Azure. You only manage your application and data.
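In the SDK, that starting count lives in the ServiceConfiguration.cscfg file as an Instances element under each Role. The following Python sketch reads those counts. The service and role names are hypothetical, and a real file usually also carries configuration settings and certificates, which are omitted here.

```python
import xml.etree.ElementTree as ET

# Default namespace of ServiceConfiguration.cscfg files in SDK 1.x.
CSCFG_NS = {"sc": "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"}

# Hypothetical configuration for a service with one Web role and one Worker role.
SAMPLE_CSCFG = """<ServiceConfiguration serviceName="MyCloudService"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="WebRole1">
    <Instances count="2" />
  </Role>
  <Role name="WorkerRole1">
    <Instances count="3" />
  </Role>
</ServiceConfiguration>"""


def instance_counts(cscfg_xml: str) -> dict:
    """Return {role name: requested instance count} from a .cscfg document."""
    root = ET.fromstring(cscfg_xml)
    counts = {}
    for role in root.findall("sc:Role", CSCFG_NS):
        instances = role.find("sc:Instances", CSCFG_NS)
        counts[role.get("name")] = int(instances.get("count"))
    return counts


print(instance_counts(SAMPLE_CSCFG))  # {'WebRole1': 2, 'WorkerRole1': 3}
```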

Web Role

A Web role lets you deploy a web site or web service that runs in an IIS 7 environment. Most commonly, it will be an ASP.NET web application or externally facing Windows Communication Foundation (WCF) service endpoints. Even though a Web role can host multiple sites, it is assigned only one external entry point. However, you can configure different ports on that entry point for HTTP, HTTPS, and custom TCP connections.


Note The UDP protocol is not supported at this time in Windows Azure services.

The Web role also supports the FastCGI extension module for IIS 7.0, which allows developers to build web applications in interpreted languages like PHP and native languages like C++. Windows Azure supports Full Trust execution, which enables you to run FastCGI web applications in a Web role. To run FastCGI applications, you have to set the enableNativeCodeExecution attribute of the Web role to true in the ServiceDefinition.csdef file. In support of FastCGI in the Web role, Windows Azure also introduces a new configuration file called Web.roleconfig. This file should exist in the root of the web project and should contain a reference to the FastCGI hosting application, such as php.exe.

In the interest of keeping this book conceptual, I will not be covering FastCGI applications. For more information on enabling FastCGI applications in Windows Azure, please visit the Windows Azure SDK site at http://msdn.microsoft.com/en-us/library/dd573345.aspx.


Caution Even though Windows Azure supports native code execution, the code still runs in the user context, not the administrator context, so some Win32 APIs that require system administrator privileges are not accessible by default. You can make them accessible by using startup tasks and elevated privileges. I cover startup tasks in detail in the next chapter.

Can I Run Existing Java Server Applications in Windows Azure?

Many enterprises run Java applications, and migrating them to Windows Azure is a big opportunity for Microsoft to win and keep customers on its platform. Behind the scenes, Windows Azure runs Windows Server operating systems, which can run Java virtual machines. Therefore, you can write custom scripts that install a Java virtual machine on the compute instances and then run your Java application on those instances. I cover writing custom startup tasks in more detail in the next chapter. The short answer is yes, you can run Java server applications on Windows Azure. However, Java is not yet a first-class citizen on the platform because of tooling and endpoint limitations.

Worker Role

The Worker role gives you the ability to run a continuous background process in the cloud. It is analogous to a Windows service on the Windows platform. Technically, the only major difference between a Web role and a Worker role is the presence of IIS on the Web role. A Worker role can expose internal and external endpoints and can also call external interfaces. It can also communicate with the queue, blob, and table storage services. A Worker role instance runs in a separate virtual machine from a Web role instance, even if both are part of the same cloud service application. Some Windows Azure services require communication between a Web role and a Worker role. Even though Web and Worker roles can expose endpoints for communicating with each other, the recommended mode of reliable communication is Windows Azure queues, which both roles can use to exchange runtime messages. I cover Windows Azure queues later in the book.
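The queue pattern between the two roles can be sketched with a small in-memory Python class. Like a Windows Azure queue, it hides a dequeued message for a visibility timeout and expects the consumer to delete the message after processing it. As a result, a message whose worker crashes becomes visible again. InMemoryQueue and its method names are hypothetical and are not the StorageClient API. The size check uses the 8KB limit cited earlier in this chapter.

```python
import time
import uuid
from typing import Callable, Optional, Tuple

MAX_MESSAGE_BYTES = 8 * 1024  # per-message limit cited in this chapter


class InMemoryQueue:
    """Hypothetical stand-in for a Windows Azure queue; not the StorageClient API."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._clock = clock
        self._messages = {}  # message id -> [body, time at which it becomes visible]

    def put(self, body: str) -> str:
        if len(body.encode("utf-8")) > MAX_MESSAGE_BYTES:
            raise ValueError("message exceeds 8KB; store the payload in a blob and enqueue its name")
        msg_id = str(uuid.uuid4())
        self._messages[msg_id] = [body, self._clock()]
        return msg_id

    def get(self, visibility_timeout: float = 30.0) -> Optional[Tuple[str, str]]:
        """Return the oldest visible message and hide it for visibility_timeout seconds."""
        now = self._clock()
        for msg_id, entry in self._messages.items():
            if entry[1] <= now:
                entry[1] = now + visibility_timeout
                return msg_id, entry[0]
        return None

    def delete(self, msg_id: str) -> None:
        """Remove a message once it has been processed successfully."""
        self._messages.pop(msg_id, None)


# Web role side: enqueue a work item (hypothetical message format).
queue = InMemoryQueue()
queue.put("resize-image:photos/beach.jpg")

# Worker role side: dequeue, process, then delete.
message = queue.get()
if message is not None:
    msg_id, body = message
    print("processing", body)
    queue.delete(msg_id)  # if the worker crashed before this, the message would reappear later
```

Deleting only after processing gives at-least-once delivery, so the worker's processing logic should tolerate seeing the same message twice.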

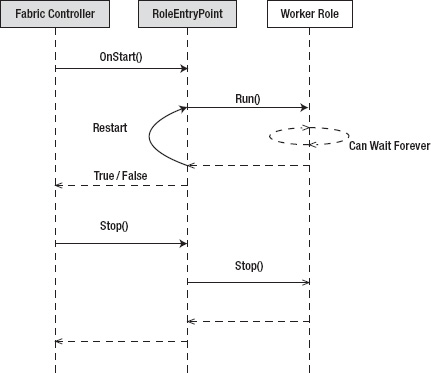

A Worker role class must inherit from the Microsoft.WindowsAzure.ServiceRuntime.RoleEntryPoint class. RoleEntryPoint is an abstract class that defines methods for initializing, starting, and stopping the Worker role service. A Worker role stops either when it is redeployed to another server or when you execute the Stop action from the Windows Azure developer portal. Figure 1-10 illustrates the sequence diagram for the life cycle of a Worker role.

Figure 1-10. Sequence diagram for a Worker role service

In Figure 1-10, there are three objects: Fabric Controller, RoleEntryPoint, and the Worker role implementation in your code. Fabric Controller is a conceptual object; it represents the calls that the Windows Azure Fabric Controller makes to a Worker role application. The Fabric Controller calls the Initialize() method on the RoleEntryPoint object. RoleEntryPoint is an abstract class, so it does not have its own instance; your Worker role inherits from it to receive calls. The OnStart() method is virtual, so you do not have to override it in your Worker role class. Typically, you would write initialization code, such as starting the diagnostics service or subscribing to role events, in this method. If the role starts successfully, OnStart() returns True to the Fabric Controller; otherwise, it returns False. The Worker role runs its application logic in the Run() method, which should contain a continuous loop for continuous operation. If the Run() method returns, the role instance is recycled and starts again from OnStart(). The Fabric Controller calls the OnStop() method to shut down the role when the role is redeployed to another server or when you execute a Stop action from the Windows Azure developer portal.
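The .NET RoleEntryPoint API is not reproduced here. Instead, the following Python sketch models only the call order described above: OnStart(), then Run(), a recycle whenever Run() returns, and OnStop() on shutdown. RoleEntryPoint, simulate_fabric_controller, and CountingWorker are hypothetical stand-ins, and the Initialize() step is omitted.

```python
from abc import ABC, abstractmethod
from typing import List


class RoleEntryPoint(ABC):
    """Illustrative Python analogue of the .NET RoleEntryPoint class (not an Azure API)."""

    def on_start(self) -> bool:
        # Optional override (virtual in .NET); True reports a successful start.
        return True

    @abstractmethod
    def run(self) -> None:
        """Application logic; a real role loops here and never returns."""

    def on_stop(self) -> None:
        # Optional override; called before the instance is shut down.
        pass


def simulate_fabric_controller(role: RoleEntryPoint, recycles: int) -> List[str]:
    """Hypothetical driver reproducing the call order described in the text.

    Every "Run returned" except the last is followed by a recycle (a new OnStart).
    After `recycles` restarts, the driver issues a Stop so the simulation ends.
    A real recycle restarts the role process; here the same object is reused.
    """
    log = []
    for _ in range(recycles + 1):
        if not role.on_start():
            log.append("OnStart returned False")
            return log
        log.append("OnStart")
        role.run()
        log.append("Run returned")
    role.on_stop()
    log.append("OnStop")
    return log


class CountingWorker(RoleEntryPoint):
    """Hypothetical worker whose Run() exits after a few iterations."""

    def __init__(self) -> None:
        self.iterations = 0

    def run(self) -> None:
        for _ in range(3):  # stands in for: while True: process the next queue message
            self.iterations += 1


print(simulate_fabric_controller(CountingWorker(), recycles=1))
```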

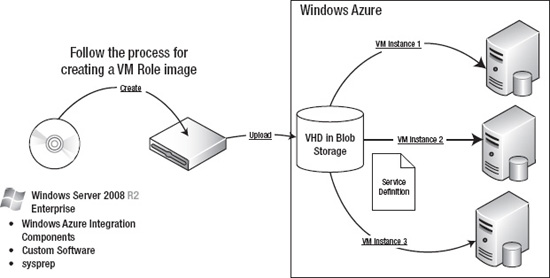

VM Role

The VM role is specifically designed by Microsoft to reduce the barriers to entry into the Windows Azure platform. The VM role lets you customize a Windows Server 2008 R2 Enterprise virtual machine based on a virtual hard disk (VHD) and then deploy it as a base image in Windows Azure. Typical scenarios for using the VM role are as follows:

- If you want to install software in your farm that does not support silent installation

- If the installation of a specific software component requires manual intervention

- If you want to install a third-party application that needs significant modifications for deploying to the Web or Worker roles

- Any application that does not fit the Web role or Worker role model

- Quick functional testing for specific software components that may later need to run on-premises

The VM role gives you more control over the software you install on the virtual machine before you upload and run it in Windows Azure. Therefore, to create a VM role, you first have to create a VHD on your local machine, install the appropriate software on it, and then upload the operating system image to Windows Azure. Once the image is uploaded, you can create a service definition for the service and then deploy multiple instances of the operating system image according to your service definition. In a Web role and a Worker role, the underlying operating system image is provided by Windows Azure, but in the case of the VM role, the service runs in the operating system image you created. Figure 1-11 illustrates the high-level process for creating a VM role image in Windows Azure. I cover the entire process of creating and running VM roles in detail later in the book.

Figure 1-11. Windows Azure VM Role


Tip Don't use VM role unless absolutely needed, because by acquiring more control over the underlying operating system image, you also inherit the risks and the burden associated with maintaining it. Windows Azure does not understand the health of your applications running on a VM role, and therefore it becomes your responsibility to track application health. For any Windows Azure application architecture, the Web role and the Worker role models must be preferred over VM role.

Windows Azure Connect

Windows Azure Connect (aka Project Sydney) provides secure network connectivity between your on-premises machines and Windows Azure role instances. Windows Azure Connect is a new feature launched with Windows Azure SDK 1.3. With Windows Azure Connect, you can host your applications in Windows Azure and connect back to data or applications that run on-premises. The motivation behind this feature was to reduce the barriers to entry into Windows Azure. Some enterprise data cannot be moved to the cloud for various reasons, such as regulatory compliance, legal holds, company policies, or simply IT politics. With Windows Azure Connect, the decision is reduced to a tradeoff between latency and the benefits of Windows Azure.

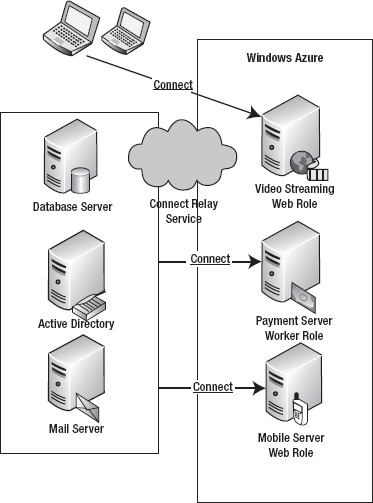

In the current version, Windows Azure Connect lets you create point-to-point connectivity between your Windows Azure role instances and on-premises machines. It also lets you join your Windows Azure instances to your domain. Figure 1-12 illustrates the high-level architecture of the Windows Azure Connect capabilities.

Figure 1-12. Windows Azure Connect

In Figure 1-12, the database server, the Active Directory server, and the mail server are grouped together for connecting with the Payment Server and Mobile Server Windows Azure role instances, whereas the development machines are grouped together for connecting with the Video Streaming role instance. The Windows Azure administration portal allows you to set up group-level as well as machine-level connectivity. The connectivity between the on-premises machines and the Windows Azure instances is secured end to end via IPsec. Windows Azure Connect also uses a cloud-based relay service for firewall and NAT traversal of the network traffic between your on-premises machines and Windows Azure role instances.


Note In the current version (1.3), you cannot use Windows Azure Connect to connect Windows Azure role instances to each other, because role instances are assumed to be able to communicate with each other already. For such communication, you can use Windows Azure queues, or input or internal endpoints.


Tip Don't use Windows Azure Connect unless absolutely needed, because as you move data away from the application, you will see performance degradation due to latency. If you have an interactive web application, it is better to move the data closer to the application, into Windows Azure storage or SQL Azure. In some cases, you can separate out the sensitive data and keep it on-premises, move the rest of the data into the cloud, and use Windows Azure Connect for calling on-premises applications.

Windows Azure Storage

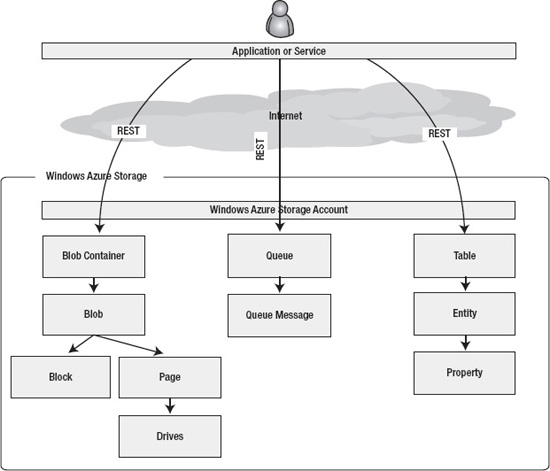

The Storage service gives compute nodes access to a scalable storage system. The storage service has built-in high availability within a datacenter boundary: behind the scenes, it maintains three copies of your data at any point in time. You can access the storage service from anywhere through a REST API, and this open architecture lets you design your applications to store data using REST APIs. Figure 1-13 illustrates the Windows Azure storage service architecture.

Figure 1-13. Storage service architecture

Windows Azure storage supports four types of services: blobs, drives, queues, and tables. Blobs, queues, and tables have independent REST APIs. Drives are a special type of storage that differs from the other storage services: a drive is a type of page blob that is uploaded to blob storage so that it can be mounted on the compute nodes. Drives don't have a dedicated REST API, because once uploaded they behave like any other page blob in blob storage. Drives do have a managed API in the SDK that you can use in your compute nodes.

Windows Azure Storage types are scoped at the account level. This means that when you open a storage account, you get access to all the Windows Azure storage services: blobs, queues, and tables.

A blob account is a collection of containers. You can create any number of containers in an account. A container holds any number of blobs, and a blob can be further composed of a series of blocks or pages. I cover blocks and pages in the Blob storage chapter.
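Each level of that hierarchy appears in the REST address of a blob: https://<account>.blob.core.windows.net/<container>/<blob>. Queues and tables use the queue.core.windows.net and table.core.windows.net hosts in the same way. The following Python helper builds blob addresses. The account and blob names are hypothetical, and the container check follows the documented naming rules (3 to 63 lowercase letters, digits, and single hyphens). Request signing is not shown.

```python
import re
from urllib.parse import quote

# 3-63 characters; lowercase letters and digits, with single hyphens between them.
CONTAINER_NAME = re.compile(r"^(?=.{3,63}$)[a-z0-9]+(-[a-z0-9]+)*$")


def blob_url(account: str, container: str, blob: str, https: bool = True) -> str:
    """Build the REST address of a blob: account -> container -> blob."""
    if not CONTAINER_NAME.match(container):
        raise ValueError(f"invalid container name: {container!r}")
    scheme = "https" if https else "http"
    # Keep "/" unescaped so blob names can simulate virtual directories.
    return f"{scheme}://{account}.blob.core.windows.net/{container}/{quote(blob, safe='/')}"


print(blob_url("myaccount", "music", "albums/track 01.mp3"))
# https://myaccount.blob.core.windows.net/music/albums/track%2001.mp3
```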

A queue account is a collection of queues. An account can have any number of queues. A queue is composed of queue messages sent by the message sending applications.

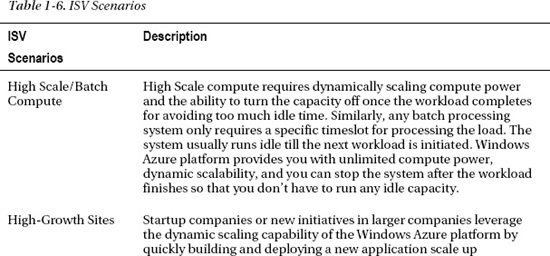

Table 1-3 lists the commonalities and differences among the three storage types in Windows Azure.

Even though the Storage service makes it easy for Windows Azure compute services to store data within the cloud, you can also access it directly from on-premises applications using the REST API. For example, you could write a music storage application that uploads all the MP3 files from your client machine to blob storage, completely bypassing the Windows Azure Compute service. Compute and Storage services can be used independently of each other in Windows Azure. Several customers use the storage service purely for backing up their on-premises data to the cloud. You can create a scheduled, automated utility that uploads backup files to Windows Azure blob storage. This gives you a clear separation between your production data and your disaster recovery data.


Note The Windows Azure SDK also includes .NET managed classes for calling the storage service REST API. If you are programming in .NET, I recommend using the classes in the Microsoft.WindowsAzure.StorageClient assembly.

Because Windows Azure is a platform, it does not provide any direct user interface for uploading files to the storage service; you have to build your own client application to use it. Several third-party tools, such as Cloud Storage Studio from Cerebrata (www.cerebrata.com/Products/CloudStorageStudio/Default.aspx), let you upload and download files.


Note The Windows Azure Storage service is independent of the SQL Azure database service offered by the Windows Azure platform. Windows Azure storage services are specific to Windows Azure and, unlike the compute and SQL Azure services, have no counterpart among Microsoft's on-premises products.

Management

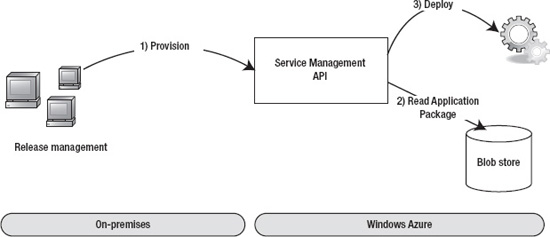

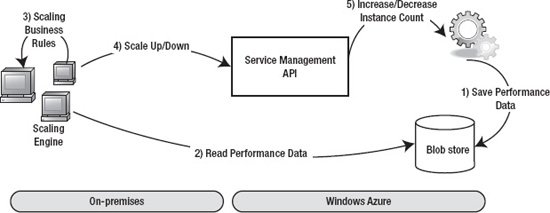

Unlike on-premises applications, the deployment of cloud services in PaaS involves only software provisioning from the developer's perspective. In a scalable environment where multiple services are provisioned across thousands of instances, you need more programmatic control over the provisioning process. Manually uploading service packages and then starting and stopping services from the portal interface works well for smaller services, but it is time-consuming and error-prone for large-scale services. The Windows Azure Service Management API allows you to perform most of the provisioning functions programmatically through a REST-based interface to your Windows Azure cloud account. The Service Management API is the hidden jewel of the platform. It makes Windows Azure a truly dynamically scalable platform that lets you scale your application up and down on demand. Using the Service Management API, you can automate provisioning, de-provisioning, scaling, and administration of your cloud services. Some of the common scenarios for leveraging the Service Management API are as follows:

- Automating the provisioning and de-provisioning of your cloud services through a well-defined release management process. Figure 1-14 illustrates the typical provisioning process of loading the application package from blob storage and deploying it through the Service Management API.

Figure 1-14. Provisioning using the Service Management API

- Dynamically scaling your applications up and down based on demand and application performance. Figure 1-15 illustrates a typical scaling architecture based on performance metrics of the role instances of your application.

Figure 1-15. Scaling using the Service Management API

- The Service Management API also enables you to build an enterprise application store in Windows Azure. By combining it with other Windows Azure platform services like Access Control and Windows Identity Foundation, you can federate on-premises identities with applications running in Windows Azure.
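The decision part of the scaling scenario can be sketched in Python as a threshold rule plus an edit to the instance count in the service configuration. The thresholds, the minimum and maximum counts, and the role name are assumptions. The metrics collection and the Service Management API call that would submit the updated configuration (the Change Deployment Configuration operation) are not shown.

```python
import xml.etree.ElementTree as ET

NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"


def desired_instances(current: int, avg_cpu_percent: float, *, scale_out_above: float = 75.0,
                      scale_in_below: float = 25.0, minimum: int = 2, maximum: int = 10) -> int:
    """Threshold rule: add an instance when busy, remove one when idle, stay within bounds."""
    if avg_cpu_percent > scale_out_above:
        target = current + 1
    elif avg_cpu_percent < scale_in_below:
        target = current - 1
    else:
        target = current
    return max(minimum, min(maximum, target))


def set_instance_count(cscfg_xml: str, role_name: str, count: int) -> str:
    """Return a copy of the .cscfg text with the role's Instances count updated."""
    root = ET.fromstring(cscfg_xml)
    for role in root.findall(f"{{{NS}}}Role"):
        if role.get("name") == role_name:
            role.find(f"{{{NS}}}Instances").set("count", str(count))
            return ET.tostring(root, encoding="unicode")
    raise KeyError(f"role not found: {role_name}")


# Hypothetical configuration and metric reading.
config = f'<ServiceConfiguration xmlns="{NS}"><Role name="WebRole1"><Instances count="2" /></Role></ServiceConfiguration>'
new_count = desired_instances(current=2, avg_cpu_percent=88.0)
updated = set_instance_count(config, "WebRole1", new_count)
print(new_count)  # 3
```

Changing one instance at a time and enforcing a floor of two instances keeps the sketch conservative. A production rule would also average metrics over a window so that it does not react to short spikes.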


Note There are some third-party tools that offer dynamic scaling as a service. AzureWatch from Paraleap (www.paraleap.com/AzureWatch) is one such tool and even Microsoft Consulting Services has an Auto-scale toolkit that is offered in the context of a consulting engagement. You can also build your own using the Service Management API.

SQL Azure

SQL Azure is a relational database service in the Windows Azure platform. It provides core relational database management system (RDBMS) capabilities as a service, and it is built on the SQL Server product code base. In the current version, developers access SQL Azure using Tabular Data Stream (TDS), the standard protocol that SQL clients use to access on-premises SQL Server instances today. The SQL client can be any TDS-compliant client, like ADO.NET, LINQ, ODBC, JDBC, or the ADO.NET Entity Framework.


Note At the time of writing, the maximum database size allowed per database was 50GB. There are well known partitioning patterns (e.g., sharding) for distributing data across multiple instances of 50GB databases. Built-in support for federation is on the roadmap for SQL Azure.
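The simplest of those partitioning patterns hashes a partition key, such as a customer ID, to pick one database. The following Python sketch shows hash-modulo sharding. The database names are hypothetical, and the connection handling that would follow is left out.

```python
import hashlib
from typing import List


def shard_for(key: str, shards: List[str]) -> str:
    """Map a partition key (for example, a customer ID) to one database name."""
    # Use a stable hash: Python's built-in hash() of a str is randomized per process.
    digest = hashlib.sha1(key.encode("utf-8")).digest()
    return shards[int.from_bytes(digest[:8], "big") % len(shards)]


shards = ["customers_00", "customers_01", "customers_02"]  # hypothetical 50GB databases
print(shard_for("customer-1001", shards))
```

Because the shard is chosen with a modulo, adding a database later remaps most keys. A lookup table or consistent hashing avoids this at the cost of extra bookkeeping.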

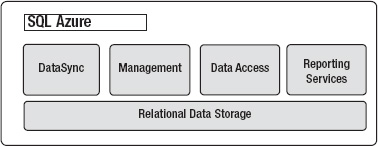

Figure 1-16 illustrates the core components of SQL Azure.

Figure 1-16. SQL Azure core components

The core services offered by SQL Azure are as follows:

Relational Data Storage – The relational data storage engine is the backbone of SQL Azure and is based on the core SQL Server code base. This component exposes traditional SQL Server capabilities like tables, indexes, views, stored procedures, and triggers. From a developer's perspective, SQL Azure is a relational subset of SQL Server. Therefore, if you have developed for SQL Server, you are already a SQL Azure developer. Most of the differences lie in the physical management of the data and in some middleware features, like Service Broker, that are not offered in SQL Azure.

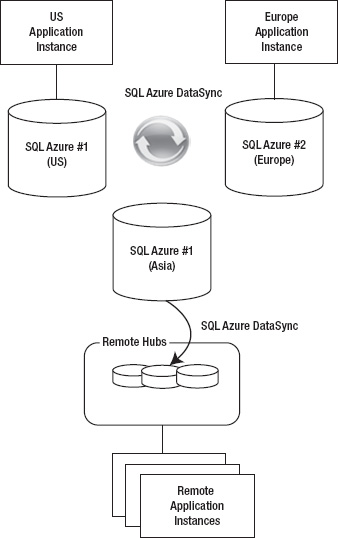

Data Sync – The Data Sync capabilities provide synchronization and aggregation of data between SQL Azure and enterprise systems, workstations, partners, and consumer devices, using the Microsoft Sync Framework. The Data Sync component comes in two flavors: a Data Sync service between SQL Azure instances and a Data Sync service between on-premises databases and SQL Azure. Both services are based on the Microsoft Sync Framework. By combining the two flavors, you can trickle data all the way down to field offices in remote locations.


Note The Microsoft Sync Framework is included in the SQL Server 2008 product (http://msdn.microsoft.com/en-us/sync/default.aspx).

Management – The management component provides automatic provisioning, metering, billing, load-balancing, failover, and security capabilities to SQL Azure. Each database is replicated to one primary and two secondary servers. In case of a failover, the switch from the primary to a secondary server is automatic and happens without interruption. You can manage your SQL Azure databases from the Windows Azure portal as well as with existing tools like SQL Server Management Studio, OSQL, and BCP.

Data Access – The Data Access component defines the methods for accessing SQL Azure programmatically. Currently, SQL Azure supports the Tabular Data Stream (TDS) protocol, which is used by ADO.NET, Entity Framework, ODBC, JDBC, and LINQ clients. Developers can access SQL Azure either directly from on-premises applications or through cloud services deployed in Windows Azure. You can also locate a Windows Azure compute cluster and a SQL Azure instance in the same datacenter for faster data access.

Reporting Services – The Windows Azure platform is new, and you should expect it to gain features every year. Microsoft is committed to reducing the parity gap between on-premises and Windows Azure platform services. SQL Azure is no exception; during PDC 2010, Microsoft announced the availability of SQL Reporting Services in the Windows Azure platform. This brings reporting capabilities to your applications and is an essential step toward business intelligence in the cloud.

Some of the common scenarios for leveraging SQL Azure are as follows:

Database consolidation – If you have home-brewed databases sitting under desktops or on isolated islands, including SQL Azure as one of the options in your database consolidation strategy will greatly help you reach your long-term goal of reducing the operations footprint. You can migrate to SQL Azure from Access, MySQL, SQL Server, DB2, Oracle, Sybase, and pretty much any other relational database by using the proper tools, like SQL Server Migration Assistant (SSMA) and the SQL Azure Migration Wizard from CodePlex. If there is no direct migration path to SQL Azure for a particular database, the most common path is from the third-party database to SQL Server and then from SQL Server to SQL Azure. SQL Azure lets you provision databases quickly and reduce the time to market for your solution. You don't have to wait for clustered hardware to be provisioned before you can run your databases.

Back-end for Windows Azure Compute – SQL Azure is the recommended relational database for applications running in Windows Azure compute. If you co-locate your Windows Azure compute instances and SQL Azure in the same datacenter, you get a significant performance advantage, and you do not incur any data-transfer costs.

Geo-replication of Data – SQL Azure and the Data Sync services for SQL Azure let you perform two-way synchronization across multiple geographies and also trickle data down to remote hubs. This kind of data movement lets you move data closer to the application for a better user experience. Figure 1-17 illustrates a common geo-replication pattern in SQL Azure.

Figure 1-17. Geo-replication in SQL Azure

Quick Application Migrations – SQL Azure allows you to quickly migrate the relational database component of your on-premises application to Windows Azure. This is a big differentiator for Microsoft in comparison to its competitors. With minimal effort, you can quickly move your existing relational data to Windows Azure and then focus on migrating the application. Once the data is moved to SQL Azure, you can simply change your application's connection string to point to the SQL Azure database.
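For ADO.NET clients, that change usually means pointing the server at the SQL Azure host over TCP port 1433, using a login of the form login@server, and turning on encryption. The following Python helper assembles such a string in that format. The server, database, login, and password values are hypothetical, and the helper does not escape values that contain semicolons.

```python
def sql_azure_connection_string(server: str, database: str, login: str, password: str) -> str:
    """Assemble an ADO.NET-style connection string for a SQL Azure database."""
    parts = {
        "Server": f"tcp:{server}.database.windows.net,1433",  # SQL Azure host, TCP port 1433
        "Database": database,
        "User ID": f"{login}@{server}",  # SQL authentication login qualified with the server name
        "Password": password,
        "Trusted_Connection": "False",   # Windows authentication is not used
        "Encrypt": "True",               # encrypt traffic to the cloud database
    }
    return ";".join(f"{key}={value}" for key, value in parts.items()) + ";"


print(sql_azure_connection_string("myserver", "inventory", "appuser", "example-password"))
```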


Tip SQL Azure runs a labs program where you can try out upcoming features for free and provide feedback to the Microsoft product teams. You can find more information about the labs program at www.sqlazurelabs.com.

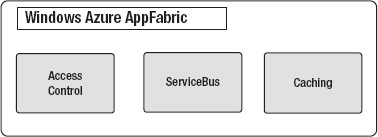

Windows Azure AppFabric

Windows Azure AppFabric is the cloud-based middleware platform hosted in Windows Azure. It is the glue for connecting critical pieces of a solution in the cloud and on-premises. You have probably heard industry speakers and cloud enthusiasts say that “everything is moving to the cloud.” I firmly believe that not everything is moving to the cloud. The disruptive effect of cloud computing is similar to the disruption created by cell phones. Cell phones gave users quick provisioning and anywhere access to communications, but some critical functions, like building security, still run over landlines and have no plans to switch to a cell phone network. Windows Azure AppFabric provides you with components for integrating Windows Azure applications with on-premises applications. The core components of Windows Azure AppFabric are as follows:

- An Internet service bus, called Service Bus, for connecting applications running in Windows Azure (and other domains) and on-premises

- A claims-mapping service, called Access Control Service, for supporting claims-based authorization in your applications running in Windows Azure and on-premises

- A distributed caching service within the Windows Azure platform, called Windows Azure AppFabric Cache


Note Windows Azure AppFabric and Windows Server AppFabric are two different products. In the long run, Microsoft's goal is to bring services available in Windows Server AppFabric to Windows Azure AppFabric. Some of the features like ACS and Service Bus will always remain exclusive to Windows Azure AppFabric.

I think of Windows Azure AppFabric as the integration middleware of the Windows Azure platform, because it provides connectivity, caching, identity claims federation, and messaging capabilities among distributed applications. You can leverage these capabilities not only for cloud services but also for on-premises applications. Microsoft's strategy is to provide Windows Azure AppFabric as middleware and building blocks for building and deploying distributed applications on Windows Azure. Figure 1-18 illustrates the three core services of Windows Azure AppFabric.

Figure 1-18. Windows Azure AppFabric core services

Access control – The Access Control Service provides rules-driven, claims-based access control for distributed applications. It is designed to abstract identity providers away from your application: it maps input claims from different types of identity providers to a standard set of output claims known to your application. This architecture lets you add and remove identity providers dynamically without changing a single line of code in your application. The most common uses of the Access Control Service are:

- Providing identity federation between multiple partner identity providers and partner portals

- Providing enterprise identity federation between ADFS 2.0 (Active Directory Federation Services) and enterprise applications running in Windows Azure
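To make the claims-mapping idea concrete, here is a minimal sketch of rules-driven claims transformation. It is not the Access Control Service API. The `Claim` and `Rule` types, the `map_claims` function, the issuer names, and the `"AccessControl"` output issuer are all hypothetical. The sketch only shows how rules turn provider-specific input claims into the fixed set of output claims an application checks.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Claim:
    issuer: str       # hypothetical: identity provider (or ACS) that issued the claim
    claim_type: str
    value: str


@dataclass(frozen=True)
class Rule:
    issuer: str                  # identity provider this rule applies to
    input_type: str
    input_value: Optional[str]   # None: match any input value
    output_type: str
    output_value: Optional[str]  # None: pass the input value through unchanged


def map_claims(claims: List[Claim], rules: List[Rule]) -> List[Claim]:
    """Transform input claims into the output claims the application knows."""
    output = []
    for claim in claims:
        for rule in rules:
            if (rule.issuer, rule.input_type) != (claim.issuer, claim.claim_type):
                continue
            if rule.input_value is not None and rule.input_value != claim.value:
                continue
            value = claim.value if rule.output_value is None else rule.output_value
            output.append(Claim("AccessControl", rule.output_type, value))
    return output


# Hypothetical rule set: adding a new identity provider means adding rules,
# while the application keeps checking only "role" and "name" claims.
SAMPLE_RULES = [
    Rule("Contoso-ADFS", "group", "Managers", "role", "Approver"),
    Rule("Google", "email", None, "name", None),
    Rule("WindowsLiveID", "nameidentifier", None, "name", None),
]
```

Claims from an issuer that has no matching rule simply produce no output claims. In this sketch, that is how an unknown provider is kept out without touching application code.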

Service bus – The Service Bus is a generic Internet service bus based on the Windows Communication Foundation (WCF) programming model. It is analogous to the Enterprise Service Bus (ESB) commonly seen in large enterprises. Unlike an ESB, however, the Azure AppFabric Service Bus is designed for Internet scale, with cross-enterprise and cross-cloud messaging scenarios in mind. The Service Bus provides key messaging patterns such as publish/subscribe, point-to-point, and durable buffers for message exchanges across distributed applications in the cloud as well as on-premises. You can expose an on-premises line-of-business application interface as a Service Bus endpoint and then consume that endpoint from a Windows Azure application or any other application. The most common uses of the Service Bus are:

- Accessing line-of-business data from Windows Azure applications

- Providing connectivity to data residing in on-premises applications at the web service level (Windows Azure Connect provides connectivity at the network level)
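The difference between the point-to-point and publish/subscribe patterns mentioned above can be sketched with a small in-memory model. This is an illustration of the patterns only, not the Service Bus API, which is WCF-based. The `Queue` and `Topic` classes are hypothetical, and the in-memory buffer merely stands in for the durable storage the real service provides.

```python
from collections import deque


class Queue:
    """Point-to-point: each message is received by exactly one consumer.

    Messages wait in the buffer until a receiver asks for them (the real
    service persists them; this in-memory deque does not).
    """

    def __init__(self):
        self._messages = deque()

    def send(self, message):
        self._messages.append(message)

    def receive(self):
        # Return the oldest message, or None when the buffer is empty.
        return self._messages.popleft() if self._messages else None


class Topic:
    """Publish/subscribe: every subscription receives its own copy."""

    def __init__(self):
        self._subscriptions = {}

    def subscribe(self, name):
        self._subscriptions[name] = Queue()
        return self._subscriptions[name]

    def publish(self, message):
        for subscription in self._subscriptions.values():
            subscription.send(message)
```

A queue hands each order to one worker. A topic lets, for example, a hypothetical "audit" subscriber and a "billing" subscriber each see every order.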

Caching – The caching service was announced during PDC 2010. It is a latecomer to the Azure AppFabric family, but one of its most valuable members. The caching service provides distributed caching for applications running in Windows Azure. Caching is an essential component of any Internet-scale application. Before the caching service, developers either built custom caching components or modified third-party components like memcached (http://memcached.org/) to run on Windows Azure. The Azure AppFabric caching service makes caching a first-class citizen of the Windows Azure platform. It provides in-memory caching as well as an external caching service co-located in the datacenter. The most common uses of the caching service in Windows Azure are:

- In-memory caching of SQL Azure database data

- Caching on-premises data to be consumed by Windows Azure applications

- Caching multi-tenant user interface data for providing high-performance interactive applications

- Caching user state in multi-player gaming applications

- Caching location-based usage information

- Caching session state of users
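Most of the uses listed above follow the cache-aside pattern. The application first looks in the cache, and on a miss it loads from the backing store (for example, a SQL Azure query) and populates the cache with an expiration time. The sketch below is a hypothetical illustration: a plain dictionary stands in for the AppFabric caching service, and `loader` stands in for the database call.

```python
import time


class CacheAside:
    """Cache-aside with a time-to-live; the dict stands in for the cache service."""

    def __init__(self, loader, ttl_seconds=60.0, clock=time.monotonic):
        self._loader = loader      # hypothetical: e.g. a function that queries SQL Azure
        self._ttl = ttl_seconds
        self._clock = clock        # injectable so expiry can be tested
        self._entries = {}         # key -> (value, expires_at)

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]                       # hit: skip the database
        value = self._loader(key)                 # miss or expired: reload
        self._entries[key] = (value, now + self._ttl)
        return value

    def invalidate(self, key):
        # Call after updating the source so readers do not see stale data.
        self._entries.pop(key, None)
```

The time-to-live bounds how stale a cached value can get. Explicit invalidation covers the case where the application itself changes the underlying data.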

Tip Like SQL Azure, Windows Azure AppFabric also runs a labs program where you can try upcoming features for free. You can log in and start experimenting with these features at https://portal.appfabriclabs.com.

Now that we have covered the Windows Azure technology fundamentals, let's see how the pricing for all these features is structured. In the cloud, every feature you use is metered by usage, like your electricity bill. Therefore, architects and developers need to choose features carefully, and they are naturally discouraged from over-architecting an application.

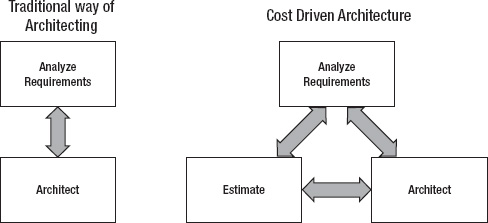

Windows Azure Platform Pricing

Each Windows Azure platform component is priced differently, and within each component there are further pricing choices. Typically, architects are used to designing applications around a capital investment that supports maximum capacity. But cloud platforms give you the flexibility to design applications for minimum capacity and to scale up dynamically based on demand. Such flexibility adds a new variable, operating cost, to your design. Every cloud resource you plan to use in your design has a cost associated with it. Cloud computing gives rise to a new architecture paradigm I call cost-driven architecture (CDA). In a CDA, an architect iteratively evaluates the operating cost of the architecture and modifies the architecture to fit the cost boundaries of the application, as shown in Figure 1-19.

Figure 1-19. Cost-driven architecture
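The iterative CDA loop can be sketched in a few lines: evaluate the monthly operating cost of a design, and while it exceeds the budget, modify the design and re-evaluate. Several details here are assumptions rather than values from this chapter. The hourly rates are illustrative placeholders (check the pricing page for real numbers), and so is the 730-hour month. The trimming policy (scale in first, then step down the VM size, keeping at least two instances for availability) is just one plausible choice.

```python
# Illustrative hourly rates per instance size (assumption: replace with
# current rates from the pricing page before relying on the numbers).
HOURLY_RATE = {"Large": 0.48, "Medium": 0.24, "Small": 0.12}
SIZES = ["Large", "Medium", "Small"]  # most to least capable
HOURS_PER_MONTH = 730                 # assumption: average month length


def monthly_cost(size, instances):
    """Operating cost of keeping `instances` VMs of `size` deployed for a month."""
    return HOURLY_RATE[size] * instances * HOURS_PER_MONTH


def cost_driven_design(size, instances, budget, min_instances=2):
    """Evaluate the design's cost; while it exceeds the budget, trim the
    design (scale in first, then step down the VM size) and re-evaluate.
    Returns (size, instances, monthly_cost), or None if nothing fits."""
    while True:
        cost = monthly_cost(size, instances)
        if cost <= budget:
            return size, instances, cost
        position = SIZES.index(size)
        if instances > min_instances:
            instances -= 1                  # fewer instances of the same size
        elif position + 1 < len(SIZES):
            size = SIZES[position + 1]      # next smaller VM size
        else:
            return None                     # the budget cannot be met
```

With these placeholder rates, `cost_driven_design("Large", 4, budget=500)` trims a four-instance Large design down to two Medium instances (about $350 per month). A real CDA iteration would also weigh performance and availability, not cost alone.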

CDAs are tied directly to the pricing of cloud services. The pricing for Windows Azure platform components is publicly available at:

www.microsoft.com/windowsazure/pricing/default.aspx

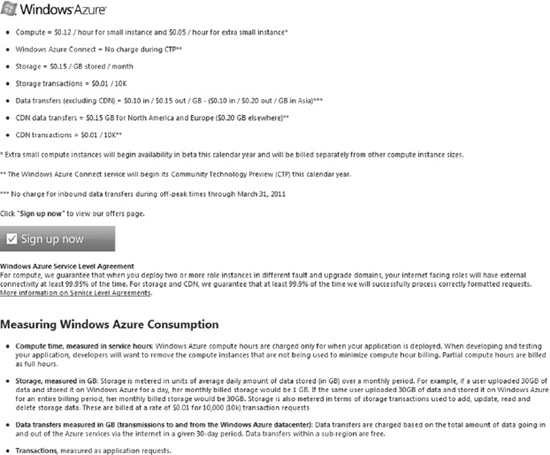

Figure 1-20 illustrates the pricing for Windows Azure Compute and Storage services.

Figure 1-20. Windows Azure compute and storage pricing

A couple of things to observe in Figure 1-20 are as follows:

The compute service charges per hour of consumption, and the price is based on the size of the virtual machine instance. You are charged not only for a running application but also for an application that is deployed but not running. To avoid charges, you have to delete the deployment, not just stop it.

The storage service charges per GB of data stored, per storage transaction, and for data transfer (bandwidth) in and out of the datacenter. So, if your compute instances and storage are located in the same datacenter, there is no bandwidth charge for data transfer between the two. If the compute instances access the storage service in another datacenter, however, you will incur bandwidth charges.

Note Microsoft no longer charges for inbound data transfer (ingress). Network bandwidth is charged only for egress (data out), which makes it easier to migrate your data into Windows Azure.
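The charging rules described above can be combined into a rough monthly estimate. The sketch below is hypothetical. All rates are placeholders you would fill in from the pricing page, and the per-10,000 unit for storage transactions is an assumption. Compute is billed for every hour the deployment exists, whether or not it is serving traffic. Only egress that leaves the datacenter is charged, because ingress is free.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    # Placeholder prices (assumption): take real values from the pricing page.
    compute_per_hour: float       # per instance-hour for the chosen VM size
    storage_per_gb_month: float
    per_10k_transactions: float   # assumption: transactions billed per 10,000
    egress_per_gb: float          # ingress is free, so there is no ingress rate


def estimate_monthly_bill(rates, instances, hours_deployed, storage_gb,
                          transactions, egress_gb):
    """Rough monthly estimate; egress_gb should count only data leaving
    the datacenter (same-datacenter transfer is not charged)."""
    compute = rates.compute_per_hour * instances * hours_deployed  # billed while deployed, even if idle
    storage = rates.storage_per_gb_month * storage_gb
    txn = rates.per_10k_transactions * transactions / 10_000
    bandwidth = rates.egress_per_gb * egress_gb
    return compute + storage + txn + bandwidth
```

Note that the compute term usually dominates. That is why deleting idle deployments matters more than trimming a few gigabytes of storage.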

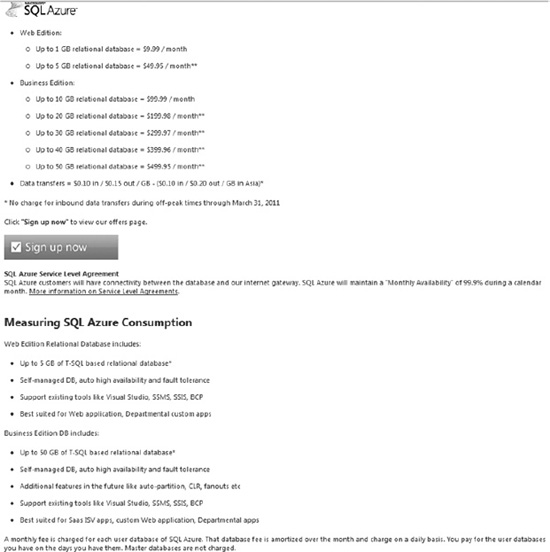

Figure 1-21 illustrates the pricing for SQL Azure.

Figure 1-21. SQL Azure pricing

A few things to observe in Figure 1-21 are:

- There are two database editions: Web and Business (databases larger than 5GB require the Business Edition).

- The maximum size per database is 50GB, but you can create multiple 50GB databases and partition data between those instances.

- “The database fee is amortized over the month and charged on a daily basis.” If you create and delete databases dynamically and frequently, make sure you factor in this daily cost.

- Similar to the storage service, SQL Azure charges for data transfer between datacenters. There is no charge for data transfer within the same datacenter.
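Two of the points above lend themselves to a short sketch: routing rows across several databases when data outgrows one 50GB instance, and estimating the prorated fee for a database that exists only part of the month. Both functions are hypothetical illustrations. Hash-modulo routing is one simple partitioning scheme among several, and the proration formula assumes the monthly fee is divided evenly across the days of the month.

```python
import hashlib


def shard_for(partition_key: str, shard_count: int) -> int:
    """Pick one of shard_count databases for a key using a stable hash.

    Python's built-in hash() is randomized per process, so SHA-1 is used
    here to keep the mapping identical across role instances and restarts.
    """
    digest = hashlib.sha1(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def prorated_database_fee(monthly_fee: float, days_active: int, days_in_month: int) -> float:
    """Daily amortization (assumption: fee split evenly across the month)."""
    return monthly_fee * days_active / days_in_month
```

One caveat of modulo routing is that changing `shard_count` remaps most keys. If you expect to add databases over time, a lookup table or consistent hashing is easier to grow.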

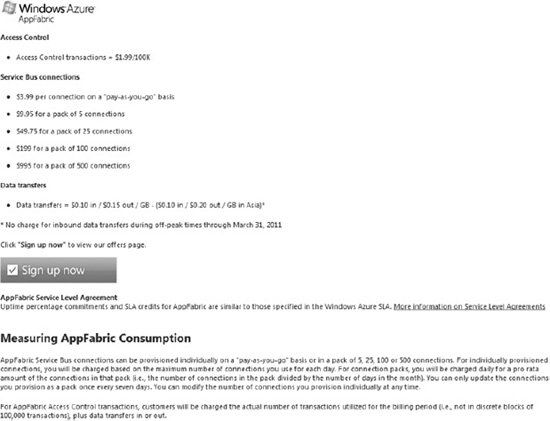

Figure 1-22 illustrates the pricing for Windows Azure AppFabric.

Figure 1-22. Windows Azure AppFabric pricing

Tip For quick cost estimation, there is a handy pricing calculator on the Windows Azure web site at www.microsoft.com/windowsazure/pricing-calculator/. I encourage you to try it out by mapping one of your on-premises applications to Windows Azure.

All of the Windows Azure platform components can be managed from the Windows Azure platform management portal. Let's review that now.

Management Portal – Let's Provision

Note By the time this book is released, the portal experience will be much different, but the concepts will remain the same.

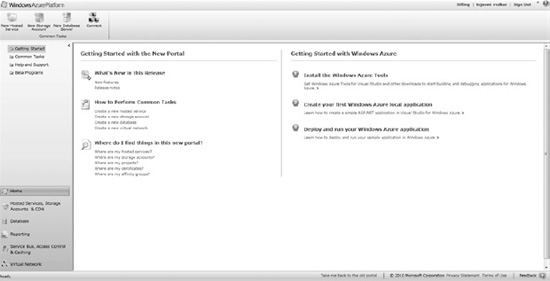

Microsoft designed the Windows Azure platform management portal in Silverlight, providing better interactivity and a central place for managing all the Windows Azure platform components. You can access the portal at https://windows.azure.com. Before accessing the portal, you need to create a subscription using your Live ID. Microsoft offers several introductory specials, which you can find at www.microsoft.com/windowsazure/offers/. Sometimes there are one-month free trials. If you have an MSDN subscription, you also receive some free hours per month. After you create a subscription, you can log in to the management portal using the same Live ID you used to create the subscription.

Once you create a subscription, you get access to all the Windows Azure platform components. After you log in to the management portal, you are taken to the main portal page. Figure 1-23 shows a screenshot of the main portal page.

Figure 1-23. Windows Azure platform management portal

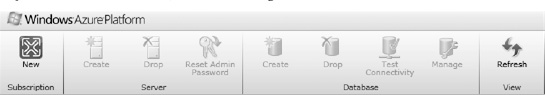

The management portal user interface is highly interactive and gives you the feel of using desktop software. The left navigation pane lists all the services of the Windows Azure platform, and the top navigation bar lists commands in the context of your navigation. For example, on the main page you can create new services: Hosted Service, Storage Service, Database Server, and Windows Azure Connect service. When you navigate to the Database tab, the commands change to the context of the SQL Azure database server, as shown in Figure 1-24.

Figure 1-24. Management portal commands in Database context

To run the examples in this book, you will need a provisioned account. Even though you can run some of the applications in the Windows Azure development fabric, you will need a Windows Azure platform account to experience the real cloud environment.

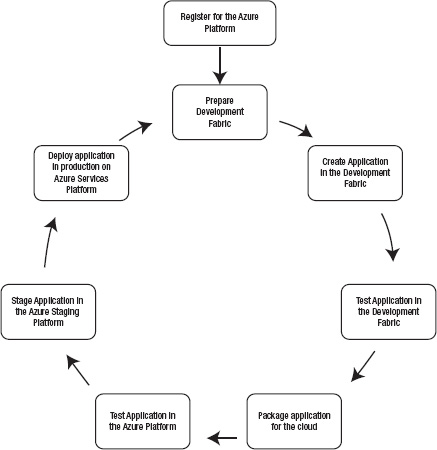

Figure 1-25 illustrates a typical developer workflow on the Windows Azure Platform.

Figure 1-25. Windows Azure platform developer workflow

The typical developer workflow for the Windows Azure platform is as follows:

- Create a Windows Azure account (i.e., an account for Windows Azure, AppFabric, or SQL Azure).

- Download and prepare the development fabric to create a local cloud platform.

- Create an application in the development fabric.

- Test the application in the development fabric.

- Package the application for cloud deployment.

- Test the application on Windows Azure in the cloud.

- Stage the application in the Windows Azure staging environment in the cloud.

- Deploy the application in the production farm.

The Windows Azure platform is a living platform; new features are added every few weeks. One such feature that I have not covered in detail is the Windows Azure Marketplace DataMarket. I will give you a brief overview of it so that you understand the service offering conceptually.

Windows Azure Marketplace DataMarket

Windows Azure Marketplace DataMarket is a data service broker that runs on Windows Azure. It standardizes the data consumption and data publishing interfaces with a standard web protocol called the Open Data Protocol (OData). Let's say you want to build a mobile application that gives end users insights into real estate sales and rental rates in relation to local crime statistics. What would be your process for building such an application? The application is driven entirely by public domain data from three different data sources: Real Estate Sales, Rental Data, and Crime Statistics. You soon realize that the programmatic interfaces for these data sources are different. Ultimately, you end up building your own service that transforms and aggregates the data from these three sources and presents it to the user. Ideally, all three data sources would have a standardized interface. Then you would not have to transform the data and could focus only on aggregating it and presenting it in the user's context.
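Once the three sources share a common shape, the remaining work in the real estate example reduces to a join. The sketch below assumes hypothetical field names (`zip`, `median_price`, `median_rent`, `incidents_per_1000`) and plain lists of dictionaries. It only shows how little code is left once no per-source transformation is needed.

```python
def aggregate_by_zip(sales, rentals, crime):
    """Join three feeds that already share a common shape.

    Each feed is a list of dicts with a 'zip' key (field names are
    hypothetical). Only ZIP codes present in all three feeds are returned.
    """
    price = {row["zip"]: row["median_price"] for row in sales}
    rent = {row["zip"]: row["median_rent"] for row in rentals}
    incidents = {row["zip"]: row["incidents_per_1000"] for row in crime}
    common = price.keys() & rent.keys() & incidents.keys()
    return [
        {
            "zip": z,
            "median_price": price[z],
            "median_rent": rent[z],
            "incidents_per_1000": incidents[z],
        }
        for z in sorted(common)
    ]
```

Without a shared format, each of the three dictionary comprehensions would instead be a source-specific adapter, and that is exactly the work a standardized feed removes.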

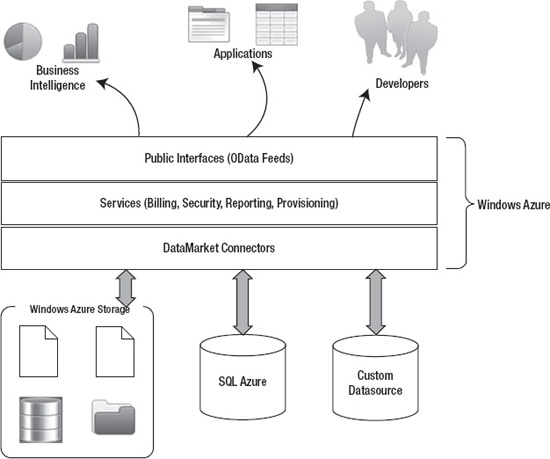

The DataMarket standardizes this conversation by brokering such data feeds in OData format. OData is a web protocol that standardizes data publishing and consumption so that tightly integrated data can be exposed as standardized cross-platform feeds. You can find more information on OData at www.odata.org. The web site also provides client (consumption) and server (publishing) libraries. For an ISV or a developer, DataMarket provides a single point for consuming public domain as well as premium commercial data in a standard format. You maintain a single billing relationship with Microsoft and do not have to worry about writing multiple checks to data providers. For a data provider, DataMarket provides a global brokerage service for monetizing your data by delivering it anywhere in the world. DataMarket also provides an authorization model for delivering your data only to your customers. You don't have to worry about maintaining separate customer relationships and separate infrastructure for publishing your data globally. You can find more information on the DataMarket at its web site, https://datamarket.azure.com/. Figure 1-26 illustrates the high-level architecture of the DataMarket platform.
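Because every DataMarket feed speaks OData, a consumer needs only one way to build queries and read results. The sketch below builds a query URL with standard OData system query options (`$filter`, `$select`, `$top`, `$format`) and parses a JSON response in the OData V2 shape, where entities sit under a `"d"` wrapper. The service root, the entity set name, and the assumption that the feed honors `$format=json` are all hypothetical. Authentication and the actual HTTP request are left out.

```python
import json
from urllib.parse import quote

# Characters left unescaped in option values (quotes in filters, commas in $select).
SAFE_CHARS = "',"


def build_odata_url(service_root, entity_set, filter_expr=None, select=None, top=None):
    """Build an OData query URL from system query options."""
    options = {}
    if filter_expr:
        options["$filter"] = filter_expr
    if select:
        options["$select"] = ",".join(select)
    if top is not None:
        options["$top"] = str(top)
    options["$format"] = "json"  # assumption: the feed honors $format=json
    query = "&".join(f"{name}={quote(value, safe=SAFE_CHARS)}" for name, value in options.items())
    return f"{service_root.rstrip('/')}/{entity_set}?{query}"


def parse_odata_json(payload):
    """Extract entities from an OData V2 JSON body ({"d": {"results": [...]}} or {"d": [...]})."""
    body = json.loads(payload)["d"]
    rows = body["results"] if isinstance(body, dict) else body
    # Drop the per-entity __metadata block and keep only property values.
    return [{k: v for k, v in row.items() if k != "__metadata"} for row in rows]
```

For example, `build_odata_url("https://api.example.com/data", "Listings", filter_expr="State eq 'WA'", top=5)` produces a URL any OData feed can interpret. The same two functions would serve all three data sources in the real estate example.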

Figure 1-26. Windows Azure Marketplace DataMarket

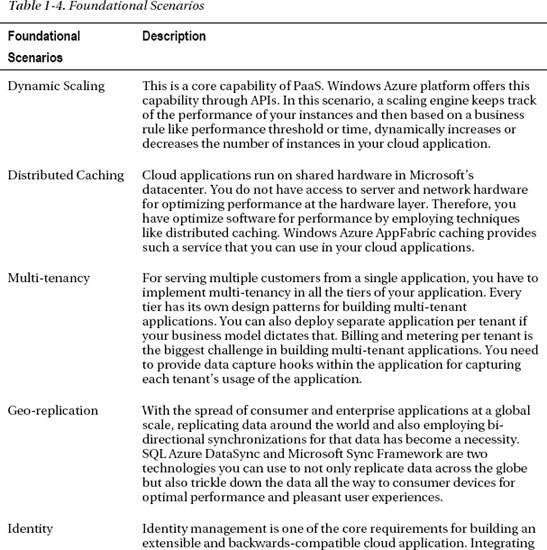

As shown in Figure 1-26, you can expose a custom data source, a SQL Azure data source, or a data source in Windows Azure Storage to the DataMarket. The DataMarket itself does not store your data; it provides a marketplace for your data. The DataMarket connectors provide the API services for publishing your data to the DataMarket. The DataMarket in turn exposes the data to consumers as OData feeds. The services layer manages billing, security, reporting, and provisioning.