Chapter 4. Optimize database objects and SQL infrastructure

In the previous chapter, we considered how to optimize database performance by managing concurrency. Database optimization also requires you not only to understand how SQL Server runs queries efficiently and uses its resources effectively, but also how to recognize problems that prevent it from doing so. Given this, you must know how to use the tools that help you find those problems and the steps that you can take to tune the SQL Server infrastructure for better performance.

Often the first step you take to improve your SQL Server query performance is to add indexes to tables, as we described in Chapter 1, “Design and implement database objects.” In Skill 4.1, we review the tasks you should perform periodically to ensure that the indexes you have are in fact helpful, and also to identify and resolve index issues. We then delve deeper into query performance optimization by exploring how to capture and analyze query plans in Skill 4.2. Then we shift our attention to the SQL Server infrastructure in Skill 4.3 to consider its impact on performance and understand how to use built-in tools to manage and troubleshoot the database engine’s use of resources. We close the chapter with Skill 4.4 in which we review the tools at your disposal for ongoing monitoring of your database infrastructure so that you are able to recognize when performance begins to deviate from normal and can take proactive action to keep SQL Server running at its best.

Skills in this chapter:

![]() Optimize statistics and indexes

Optimize statistics and indexes

![]() Analyze and troubleshoot query plans

Analyze and troubleshoot query plans

![]() Manage performance for database instances

Manage performance for database instances

![]() Monitor and trace SQL Server baseline performance metrics

Monitor and trace SQL Server baseline performance metrics

Skill 4.1: Optimize statistics and indexes

One way to significantly improve the performance of queries is to add one or more indexes to a table. When you create an index, SQL Server creates statistics, a database object in which statistical information about the distribution of values in a column or index is stored. An index improves query performance only if it is up-to-date and selected by SQL Server to resolve queries. Therefore, you should periodically review and optimize indexes as part of your regular routine.

This section covers how to:

![]() Determine the accuracy of statistics and associated impact to query plans and performance

Determine the accuracy of statistics and associated impact to query plans and performance

![]() Design statistics maintenance tasks

Design statistics maintenance tasks

![]() Use dynamic management objects to review current index usage and identify missing indexes

Use dynamic management objects to review current index usage and identify missing indexes

![]() Consolidate overlapping indexes

Consolidate overlapping indexes

Determine the accuracy of statistics and the associated impact to query plans and performance

SQL Server uses statistics to determine a column’s cardinality, which is the number of rows containing a specific value in a column. For example, when a value is unique in a column, such as a primary key, the cardinality is 1. When a column is highly unique like this, it is commonly characterized as having high selectivity or high cardinality. Conversely, when the number of unique values in a column are fewer or non-existent, the column has low selectivity and low cardinality. This information influences the query optimizer’s selection of an appropriate index as well as an efficient query plan. If the wrong index or query plan is selected, or if an index is missing, a query might not execute as optimally as possible. Therefore, the accuracy of the statistics is critical to query performance.

There are several ways that you can manage statistics. First, you can review the current statistics to determine whether the distribution of the data and the cardinality of data accessed by an index is likely to be helpful. In addition, you can control whether SQL Server updates statistics automatically. Last, you can check which objects have statistics managed by SQL Server and when they were last updated.

Review data distribution and cardinality

To see statistics for a table, use the DBCC SHOW_STATISTICS command to return the following information:

![]() Metadata about the statistics including date and time of the last update of the statistics, number of rows in the table, number of rows sampled, number of steps in the histogram, index density, average key length, whether the index contains string summary statistics, filtered predicate for statistics if applicable, and number of rows before applying the filter.

Metadata about the statistics including date and time of the last update of the statistics, number of rows in the table, number of rows sampled, number of steps in the histogram, index density, average key length, whether the index contains string summary statistics, filtered predicate for statistics if applicable, and number of rows before applying the filter.

![]() Index densities for the combination of columns in the index.

Index densities for the combination of columns in the index.

![]() A histogram of up to 200 sample values in the first key column in the index.

A histogram of up to 200 sample values in the first key column in the index.

Let’s create a simple index for the Purchasing.Suppliers table in the WideWorldImporters database and view its statistics, as shown in Listing 4-1, to explore the output of the DBCC SHOW_STATISTICS command.

LISTING 4-1 Create an index and show its statistics

USE WideWorldImporters;

GO

CREATE NONCLUSTERED INDEX IX_Purchasing_Suppliers_ExamBook762Ch4

ON Purchasing.Suppliers

(

SupplierCategoryID,

SupplierID

)

INCLUDE (SupplierName);

GO

DBCC SHOW_STATISTICS ('Purchasing.Suppliers',

IX_Purchasing_Suppliers_ExamBook762Ch4 );

/* Output

Name Updated Rows Rows Sampled Steps Density

Average key length String Index Filter Expression Unfiltered Rows

------------------------------ ---------------------- ---- -------------

IX_Purchasing_Suppliers_ExamBook762Ch4 Nov 7 2016 6:40AM 13 13 5 1

8 NO NULL 13

(1 row(s) affected)

All density Average Length Columns

----------------- ------------ ------------------------------------------

0.125 4 SupplierCategoryID

0.07692308 8 SupplierCategoryID, SupplierID

(2 row(s) affected)

RANGE_HI_KEY RANGE_ROWS EQ_ROWS DISTINCT_RANGE_ROWS AVG_RANGE_ROWS

--------------------- -------------------- ---------------- ---------------

2 0 6 0 1

4 1 1 1 1

6 1 1 1 1

8 1 1 1 1

9 0 1 0 1

(5 row(s) affected)

DBCC execution completed. If DBCC printed error messages, contact your system

administrator.

*/

The first result set is the statistics header that shows the name of the object for which statistics exist. In this case, the object is the IX_Purchasing_Suppliers_ExamBook762Ch4 index. You can see when the statistics were last updated, the number of rows in the index, the number of rows sampled for the statistics calculations, and the number of rows in the table without a filter. You also see a value for density in the statistics header. However, this density calculation is no longer used by the query optimizer in SQL Server 2016. Last, the average key length is 8 bytes, there are no string summary statistics, and no filter applied.

The second result set shows the densities of each combination of columns in the index. Density is calculated by dividing one by the count of distinct values in the column (or columns when you have a compound key). In Listing 4-1, the first row with SupplierCategoryID has higher density than the second row with SupplierCategoryID, SupplierID which means it has lower selectivity and is less helpful as an index. Conversely, the lower density of the second row indicates higher selectivity.

The last result set is the statistics histogram, which contains up to 200 sample values for the first column of the index. Each sample value is called a step and is listed in the RANGE_HI_KEY column. In Listing 4-1, there are only 5 sample values: 2, 4, 6, 8, and 9. For each step, SQL Server stores the following four values:

![]() RANGE_ROWS The number of rows inside the range between the current step and the previous step, but does not include the step values themselves.

RANGE_ROWS The number of rows inside the range between the current step and the previous step, but does not include the step values themselves.

![]() EQ_ROWS The number of rows having the same value as the sample value.

EQ_ROWS The number of rows having the same value as the sample value.

![]() DISTINCT_RANGE_ROWS The number of distinct values between the current step and the previous step, but does not include the step values themselves.

DISTINCT_RANGE_ROWS The number of distinct values between the current step and the previous step, but does not include the step values themselves.

![]() AVG_RANGE_ROWS The average number of rows for each distinct value with the step range.

AVG_RANGE_ROWS The average number of rows for each distinct value with the step range.

Note DBCC SHOW_STATISTICS

For more details about the DBCC SHOW_STATISTICS output, see https://msdn.microsoft.com/en-us/library/ms174384.aspx.

When creating an estimated query plan (described in more detail in Skill 4.2), SQL Server looks at the histogram to estimate the number of rows that match a WHERE clause in a query as long as the condition in the clause is a single constant expression, such as WHERE SupplierCategoryID = 3. When the expression uses two columns, as in WHERE SupplierCategoryID = 3 AND SupplierID = 10, SQL Server uses the index densities to estimate rows.

Let’s look at an example of how inaccurate statistics can affect a query plan. First, create a test database with automatic statistics updates disabled, load the table, add an index, and then review the statistics, as shown in Listing 4-2.

LISTING 4-2 Create test environment with automatic statistics disabled

CREATE DATABASE ExamBook762Ch4_Statistics;

GO

ALTER DATABASE ExamBook762Ch4_Statistics

SET AUTO_CREATE_STATISTICS OFF;

ALTER DATABASE ExamBook762Ch4_Statistics

SET AUTO_UPDATE_STATISTICS OFF;

ALTER DATABASE ExamBook762Ch4_Statistics

SET AUTO_UPDATE_STATISTICS_ASYNC OFF;

GO

USE ExamBook762Ch4_Statistics;

GO

CREATE SCHEMA Examples;

GO

CREATE TABLE Examples.OrderLines (

OrderLineID int NOT NULL,

OrderID int NOT NULL,

StockItemID int NOT NULL,

Description nvarchar(100) NOT NULL,

PackageTypeID int NOT NULL,

Quantity int NOT NULL,

UnitPrice decimal(18, 2) NULL,

TaxRate decimal(18, 3) NOT NULL,

PickedQuantity int NOT NULL,

PickingCompletedWhen datetime2(7) NULL,

LastEditedBy int NOT NULL,

LastEditedWhen datetime2(7) NOT NULL);

GO

INSERT INTO Examples.OrderLines

SELECT *

FROM WideWorldImporters.Sales.OrderLines;

GO

CREATE INDEX ix_OrderLines_StockItemID

ON Examples.OrderLines (StockItemID);

GO

DBCC SHOW_STATISTICS ('Examples.OrderLines',

ix_OrderLines_StockItemID );

GO

/* Partial Output

RANGE_HI_KEY RANGE_ROWS EQ_ROWS DISTINCT_RANGE_ROWS AVG_RANGE_ROWS

------------------ --------------- ----------- ------------------------ ---

1 0 1048 0 1

2 0 1078 0 1

4 1022 1066 1 1022

*/

When the index is added to the table, its statistics are also created. However, a significant number of inserts or updates to the table can render these statistics obsolete. Execute the statements in Listing 4-3 to update rows and check the statistics afterwards to confirm there has been no change.

LISTING 4-3 Update table rows and check statistics

UPDATE Examples.OrderLines

SET StockItemID = 1

WHERE OrderLineID < 45000;

DBCC SHOW_STATISTICS ('Examples.OrderLines',

ix_OrderLines_StockItemID );

GO

/* Partial Output

RANGE_HI_KEY RANGE_ROWS EQ_ROWS DISTINCT_RANGE_ROWS AVG_RANGE_ROWS

-------------- ------------- -------- --------------- ----------------

1 0 1048 0 1

2 0 1078 0 1

4 1022 1066 1 1022

*/

Next, click the Include Actual Execution Plan button in the toolbar, and then execute the following query:

SELECT StockItemID

FROM Examples.OrderLines

WHERE StockItemID = 1;

When you hover the cursor over the Index Seek (NonClustered) in the query plan, notice the difference between Actual Number Of Rows and Estimated Number Of Rows in the tooltip, shown in Figure 4-1. Because the statistics are out-of-date, the estimated row count is 1048, which is the value currently in the histogram for rows having StockItemID = 1. Because this value is relatively low, the query optimizer generated a plan using an index seek, which could be less optimal than performing a scan when data volumes are high.

Review automatic statistics updates

Statistics are generated when you add an index to a table that contains data or when you run the UPDATE STATISTICS command. In most cases, as illustrated by the previous example, you should allow SQL Server to create and update statistics automatically by setting one of the following database options, each of which is enabled by default:

![]() AUTO_UPDATE_STATISTICS SQL Server updates statistics automatically as needed. It determines an update is necessary by using a counter on modifications to column values. This counter is incremented when a row is inserted or deleted or when an indexed column is updated. The counter is reset to 0 when the statistics are generated. When it does this, it acquires compile locks and query plans might require recompilation. You can disable this option by using the sp_autostats system stored procedure.

AUTO_UPDATE_STATISTICS SQL Server updates statistics automatically as needed. It determines an update is necessary by using a counter on modifications to column values. This counter is incremented when a row is inserted or deleted or when an indexed column is updated. The counter is reset to 0 when the statistics are generated. When it does this, it acquires compile locks and query plans might require recompilation. You can disable this option by using the sp_autostats system stored procedure.

![]() AUTO_UPDATE_STATISTICS_ASYNC When it is enabled, SQL Server updates statistics asynchronously. That is, SQL Server uses a background thread so as not to block query execution. In this case, the query optimizer might choose a less than optimal query execution plan until the statistics are updated. Use the ALTER DATABASE T-SQL command to disable this option.

AUTO_UPDATE_STATISTICS_ASYNC When it is enabled, SQL Server updates statistics asynchronously. That is, SQL Server uses a background thread so as not to block query execution. In this case, the query optimizer might choose a less than optimal query execution plan until the statistics are updated. Use the ALTER DATABASE T-SQL command to disable this option.

![]() AUTO_CREATE_STATISTICS During query execution, SQL Server creates statistics on individual columns in query predicates to help the query optimizer improve query plans. Use the ALTER DATABASE T-SQL command to disable this option.

AUTO_CREATE_STATISTICS During query execution, SQL Server creates statistics on individual columns in query predicates to help the query optimizer improve query plans. Use the ALTER DATABASE T-SQL command to disable this option.

Even when statistics are set to update automatically, SQL Server does not update statistics unless one of the following thresholds is met:

![]() One or more rows is added to an empty table.

One or more rows is added to an empty table.

![]() More than 500 rows are added to a table having fewer than 500 rows.

More than 500 rows are added to a table having fewer than 500 rows.

![]() More than 500 rows are added to a table having more than 500 rows and the number of rows added is more than a dynamic percentage of total rows. With a small table under 25,000 rows, this percentage is around 20 percent. As the number of rows in the table increases, the percentage rate that triggers a statistics update is lower. For example, SQL Server updates statistics for a table with 1 billion rows when more than 1 million changes occur, or 0.1 percent. Prior to SQL Server 2016, this threshold was fixed at 20 percent of the original total number of rows in the table which means that 200 million rows were required to trigger an update of statistics.

More than 500 rows are added to a table having more than 500 rows and the number of rows added is more than a dynamic percentage of total rows. With a small table under 25,000 rows, this percentage is around 20 percent. As the number of rows in the table increases, the percentage rate that triggers a statistics update is lower. For example, SQL Server updates statistics for a table with 1 billion rows when more than 1 million changes occur, or 0.1 percent. Prior to SQL Server 2016, this threshold was fixed at 20 percent of the original total number of rows in the table which means that 200 million rows were required to trigger an update of statistics.

You can check to see if SQL Server automatically created statistics in a database by checking the value of the auto_created column in the sys.stats catalog view, as shown in Listing 4-4.

LISTING 4-4 Check auto-created statistics in a database

Use WideWorldImporters;

GO

SELECT

OBJECT_NAME(object_id) AS ObjectName,

name,

auto_created

FROM sys.stats

WHERE auto_created = 1 AND

object_id IN

(SELECT object_id FROM sys.objects WHERE type = 'U');

/* Partial Output

ObjectName name auto_created

------------------------------------------- --------------------

Colors_Archive _WA_Sys_00000001_04E4BC85 1

OrderLines _WA_Sys_00000006_05A3D694 1

OrderLines _WA_Sys_0000000C_05A3D694 1

plan_persist_runtime_stats _WA_Sys_00000006_0CBAE877 1

StockGroups_Archive _WA_Sys_00000001_10566F31 1

StateProvinces _WA_Sys_00000002_114A936A 1

StateProvinces _WA_Sys_00000009_114A936A 1

CustomerTransactions _WA_Sys_0000000B_15DA3E5D 1

*/

To check the last time the statistics were updated for each statistics object in a table, you can use the STATS_DATE system function as shown in Listing 4-5. As an alternative, you can use the sys.dm_db_stats_properties DMV to get row counts and modifications occurring since the last statistics update in addition to the last update date.

LISTING 4-5 Check last update of statistics for an object

SELECT

name AS ObjectName,

STATS_DATE(object_id, stats_id) AS UpdateDate

FROM sys.stats

WHERE object_id = OBJECT_ID('Sales.Customers');

/* Output

ObjectName UpdateDate

------------------------------- ---------------------------------------

PK_Sales_Customers 2016-06-02 10:07:35.170

UQ_Sales_Customers_CustomerName 2016-06-02 10:07:35.240

FK_Sales_Customers_CustomerCategoryID 2016-06-02 10:08:13.080

FK_Sales_Customers_BuyingGroupID 2016-06-02 10:07:38.010

FK_Sales_Customers_PrimaryContactPersonID 2016-06-02 10:07:43.027

FK_Sales_Customers_AlternateContactPersonID 2016-06-02 10:07:48.040

FK_Sales_Customers_DeliveryMethodID 2016-06-02 10:07:53.043

FK_Sales_Customers_DeliveryCityID 2016-06-02 10:07:58.060

FK_Sales_Customers_PostalCityID 2016-06-02 10:08:03.060

IX_Sales_Customers_Perf_20160301_06 2016-06-02 10:08:08.067

_WA_Sys_00000003_2FCF1A8A 2016-06-02 10:10:11.130

_WA_Sys_0000000B_2FCF1A8A 2016-06-02 10:10:11.623

_WA_Sys_0000001E_2FCF1A8A 2016-06-02 10:54:31.173

*/

Design statistics maintenance tasks

SQL Server creates and updates statistics automatically for all indexes and for columns used in a WHERE or JOIN ON clause. At one extreme, the automatic statistics update process might run when the database is busy and adversely affects performance or, at the other extreme, it might not run frequently enough for a table that is subject to high-volume data changes. For these situations, you can disable the automatic statistics update options for the database and then implement a maintenance plan to update statistics on demand or on a schedule.

Note Enabling SQL Server Agent extended stored procedures

Before you can create a maintenance plan, you must enable SQL Server Agent extended stored procedures. You can do this by starting the SQL Server Agent service in SQL Server Management Studio or by executing the following code:

EXEC sp_configure 'show advanced options', 1;

GO

RECONFIGURE;

GO

EXEC sp_configure 'Agent XPs', 1;

GO

RECONFIGURE;

GO

To create a maintenance plan, open SQL Server Management Studio. and then, in Object Explorer, expand the Management node, right click the Maintenance Plans folder, and then select either New Maintenance Plan or Maintenance Plan Wizard. If you select New Maintenance Plan, type a name for the maintenance plan. Then drag the Update Statistics Task from the Toolbox to the plan designer surface, as shown in Figure 4-2.

Double click the task to open the Update Statistics Task dialog box, shown in Figure 4-3.

In the Databases drop-down list, you can select one of the following options:

![]() All Databases All SQL Server databases, except tempdb.

All Databases All SQL Server databases, except tempdb.

![]() System Databases All SQL Server system databases, except tempdb.

System Databases All SQL Server system databases, except tempdb.

![]() All User Databases (Excluding Master, Model, Msdb, Tempdb) All user databases and none of the SQL Server system databases.

All User Databases (Excluding Master, Model, Msdb, Tempdb) All user databases and none of the SQL Server system databases.

![]() These Databases A list of user and SQL Server system databases (except tempdb) from which you must select at least one database. If you select this option, you must also specify whether to update statistics for tables, views, or both tables and views. If you select Tables or Views, you must also select one or more tables or views from the respective list.

These Databases A list of user and SQL Server system databases (except tempdb) from which you must select at least one database. If you select this option, you must also specify whether to update statistics for tables, views, or both tables and views. If you select Tables or Views, you must also select one or more tables or views from the respective list.

Then you specify one of the following options for the update:

![]() All Existing Statistics Statistics for both columns and indexes.

All Existing Statistics Statistics for both columns and indexes.

![]() Column Statistics Only Statistics for columns only.

Column Statistics Only Statistics for columns only.

![]() Index Statistics Only Statistics for indexes only.

Index Statistics Only Statistics for indexes only.

Last, you select one of the following options for scan type:

![]() Full Scan Update statistics by reading all rows in a table or view.

Full Scan Update statistics by reading all rows in a table or view.

![]() Sample By Update statistics based on a specified percentage or specified number of rows. This is a better option when updating statistics for a large table or view.

Sample By Update statistics based on a specified percentage or specified number of rows. This is a better option when updating statistics for a large table or view.

You can click the View T-SQL button at the bottom of the Update Statistics Task dialog box to generate the T-SQL script for the new maintenance task. Then you can use this script as a template to create additional maintenance plans for updating statistics. Listing 4-6 shows a portion of the script generated for the WideWorldImporters database:

LISTING 4-6 Script to update statistics for a specific table

USE WideWorldImporters;

GO

UPDATE STATISTICS [Application].[Cities]

WITH FULLSCAN

GO

Note UPDATE STATISTICS options

You can add any of the following options when using the UPDATE STATISTICS statement.

![]() No FULLSCAN or SAMPLE option If you omit the FULLSCAN or SAMPLE option, SQL Server calculates statistics by computing an appropriate sample size and performing a sample scan.

No FULLSCAN or SAMPLE option If you omit the FULLSCAN or SAMPLE option, SQL Server calculates statistics by computing an appropriate sample size and performing a sample scan.

![]() FULLSCAN SQL Server performs a full scan of the table data or the index to generate more accurate statistics, although this option takes more time and more IO.

FULLSCAN SQL Server performs a full scan of the table data or the index to generate more accurate statistics, although this option takes more time and more IO.

![]() SAMPLE With this option, you specify the number or percentage of rows that SQL Server samples when generating the statistics.

SAMPLE With this option, you specify the number or percentage of rows that SQL Server samples when generating the statistics.

![]() RESAMPLE SQL Server generates the statistics using the same sampling ratio that was defined during the previous statistics generation.

RESAMPLE SQL Server generates the statistics using the same sampling ratio that was defined during the previous statistics generation.

The maintenance task should be scheduled at a time that interferes least with regular database operations. On the Maintenance Plan designer, click the Subplan Schedule button to open the New Job Schedule dialog box. Here you can specify whether the maintenance plan is a recurring schedule, whether it runs only when SQL Server starts or when the CPU is idle, or one time only. If you choose the Recurring option, you specify a frequency, and a start and optional end date. If you instead choose the One Time option, you specify the date and time to execute the maintenance plan. When you save the schedule, a SQL Server Agent job is created and the job executes as a SQL Server Integration Services (SSIS) package.

You can also execute a maintenance plan without waiting for the scheduled SQL Server Agent job. To do this, expand the Management node, expand the Maintenance Plan node, right click the maintenance plan, and then select Execute. When you use this method, the SSIS package is launched and you can observe the execution status in the Execute Maintenance Plan dialog box.

Use dynamic management objects to review current index usage and identify missing indexes

SQL Server uses indexes to speed up data access. In Chapter 1, we covered many of the considerations affecting the design of tables. Over time, you might find that some indexes are not as helpful as expected due to changes in the distribution of the data or in the query patterns. Furthermore, the existence of an index that SQL Server never uses adds overhead to write operations. Therefore, it’s important to periodically review indexes not only to determine whether existing indexes are still useful, but also whether any are ignored or missing.

In this section, we review several dynamic management objects (DMOs) that are useful for this review process. We focus on how to perform specific review tasks that are important for index management without delving into all the possible information that you can derive from using these DMOs.

Need More Review? Resources for managing indexes with DMOs

You can find more detail about each DMO by accessing the respective topic in Books Online. For deeper coverage of index management by using DMOs, download the free ebook “Performance Tuning with Dynamic Management Views” by Tim Ford and Louis Davidson available at https://www.simple-talk.com/books/sql-books/performance-tuning-with-sql-server-dynamic-management-views---ebook-download.

Review current index usage

The following DMOs provide information about whether and how indexes are used:

![]() sys.dm_db_index_usage_stats Use this DMV to review the use of indexes to resolve queries.

sys.dm_db_index_usage_stats Use this DMV to review the use of indexes to resolve queries.

![]() sys.dm_db_index_physical_stats Use this dynamic management function (DMF) to check the overall status of indexes in a database.

sys.dm_db_index_physical_stats Use this dynamic management function (DMF) to check the overall status of indexes in a database.

sys.dm_db_index_usage_stats

To get a quick overview of which indexes are being used, you can use the sys.dm_db_index_usage_stats DMV as shown in Listing 4-7. To appear in this DMV’s output, an index must be read or written to at least once by a user or system operation. In this example, the count of user seeks, scans, and lookups are aggregated as user_reads and sorted in descending order to make it clear which indexes are used more frequently than others. Counts in this DMV are reset when the server restarts or when an index is dropped and recreated.

LISTING 4-7 Review current index usage

SELECT

OBJECT_NAME(ixu.object_id, DB_ID('WideWorldImporters')) AS [object_name] ,

ix.[name] AS index_name ,

ixu.user_seeks + ixu.user_scans + ixu.user_lookups AS user_reads,

ixu.user_updates AS user_writes

FROM sys.dm_db_index_usage_stats ixu

INNER JOIN WideWorldImporters.sys.indexes ix ON

ixu.[object_id] = ix.[object_id] AND

ixu.index_id = ix.index_id

WHERE ixu.database_id = DB_ID('WideWorldImporters')

ORDER BY user_reads DESC;

/*Partial Output

object_name index_name user_reads user_writes

------------------------------------------- --------------- ---------------

StockGroups PK_Warehouse_StockGroups 19 0

Suppliers PK_Purchasing_Suppliers 13 0

ColdRoomTemperatures_Archive ix_ColdRoomTemperatures_Archive 0 1959

StockItems FK_Warehouse_StockItems_SupplierID 0 0

*/

Just as important as knowing which indexes are being accessed is knowing which indexes are never used. To find these indexes, you must start by retrieving all tables and indexes in a database and then filter out those appearing in sys.dm_db_index_usage_stats as shown in Listing 4-8.

LISTING 4-8 Find unused indexes

USE WideWorldImporters;

GO

SELECT

OBJECT_NAME(ix.object_id) AS ObjectName ,

ix.name

FROM sys.indexes AS ix

INNER JOIN sys.objects AS o ON

ix.object_id = o.object_id

WHERE ix.index_id NOT IN (

SELECT ixu.index_id

FROM sys.dm_db_index_usage_stats AS ixu

WHERE

ixu.object_id = ix.object_id AND

ixu.index_id = ix.index_id AND

database_id = DB_ID()

) AND

o.[type] = 'U'

ORDER BY OBJECT_NAME(ix.object_id) ASC ;

/* Partial Output

ObjectName name

------------------------------------------------------------------------------

BuyingGroups UQ_Sales_BuyingGroups_BuyingGroupName

BuyingGroups_Archive ix_BuyingGroups_Archive

Cities_Archive ix_Cities_Archive

ColdRoomTemperatures NULL

ColdRoomTemperatures PK_Warehouse_ColdRoomTemperatures

ColdRoomTemperatures IX_Warehouse_ColdRoomTemperatures_ColdRoomSensorNumber

*/

Whereas the previous example helps you find indexes for which there is no read or write activity, Listing 4-9 helps you find indexes that SQL Server maintains but never uses to retrieve data for a query. These indexes are consuming resources without helping query performance. You should consider dropping these indexes if further investigation reveals that there is no need to continue to maintain them.

LISTING 4-9 Find indexes that are updated but never used

USE WideWorldImporters;

GO

SELECT

o.name AS ObjectName ,

ix.name AS IndexName ,

ixu.user_seeks + ixu.user_scans + ixu.user_lookups AS user_reads ,

ixu.user_updates AS user_writes ,

SUM(p.rows) AS total_rows

FROM sys.dm_db_index_usage_stats ixu

INNER JOIN sys.indexes ix ON

ixu.object_id = ix.object_id AND

ixu.index_id = ix.index_id

INNER JOIN sys.partitions p ON

ixu.object_id = p.object_id AND

ixu.index_id = p.index_id

INNER JOIN sys.objects o ON

ixu.object_id = o.object_id

WHERE

ixu.database_id = DB_ID() AND

OBJECTPROPERTY(ixu.object_id, 'IsUserTable') = 1 AND

ixu.index_id > 0

GROUP BY

o.name ,

ix.name ,

ixu.user_seeks + ixu.user_scans + ixu.user_lookups ,

ixu.user_updates

HAVING ixu.user_seeks + ixu.user_scans + ixu.user_lookups = 0

ORDER BY

ixu.user_updates DESC,

o.name ,

ix.name ;

/* Output

ObjectName IndexName user_reads user_writes total_rows

-------------------------- ---------------- ---------------- -----------------

ColdRoomTemperatures_Archive ix_ColdRoomTemperatures_Archive 0 2016 3654736

StockItems FK_Warehouse_StockItems_SupplierID 0 0 227

*/

sys.dm_db_index_physical_stats

In addition to reviewing usage of indexes, you should also review index health by using the sys.dm_db_index_physical_stats DMF. As inserts, updates, and deletes occur, an index becomes increasingly fragmented and IO increases as data is no longer efficiently stored on disk. Listing 4-10 shows how to review fragmentation. In general, you should focus on indexes for which fragmentation is greater than 15percent and the page count is greater than 500. When fragmentation is between 15 percent and 30 percent, you should reorganize the index, and when its greater, you should rebuild it.

LISTING 4-10 Review index fragmentation

DECLARE @db_id SMALLINT, @object_id INT;

SET @db_id = DB_ID(N'WideWorldImporters');

SET @object_id = OBJECT_ID(N'WideWorldImporters.Sales.Orders');

SELECT

ixs.index_id AS idx_id,

ix.name AS ObjectName,

index_type_desc,

page_count,

avg_page_space_used_in_percent AS AvgPageSpacePct,

fragment_count AS frag_ct,

avg_fragmentation_in_percent AS AvgFragPct

FROM sys.dm_db_index_physical_stats

(@db_id, @object_id, NULL, NULL , 'Detailed') ixs

INNER JOIN sys.indexes ix ON

ixs.index_id = ix.index_id AND

ixs.object_id = ix.object_id

ORDER BY avg_fragmentation_in_percent DESC;

/* Output

idx_id ObjectName index_type_desc pg_ct AvgPageSpacePct frag_ct AvgFragPct

-------- ---------------------------------- ---------- ---------------------------

4 FK_Sales_Orders_PickedByPersonID NONCLUSTERED INDEX 237 53.6864838151717 237

99.57805907173

2 FK_Sales_Orders_CustomerID NONCLUSTERED INDEX 189 67.3274277242402 189

97.8835978835979

5 FK_Sales_Orders_ContactPersonID NONCLUSTERED INDEX 189 67.3274277242402 189

97.8835978835979

3 FK_Sales_Orders_SalespersonPersonID NONCLUSTERED INDEX 136 93.5749444032617 136

97.0588235294118

1 PK_Sales_Orders CLUSTERED INDEX 688 99.0945268099827 88

0.290697674418605

1 PK_Sales_Orders CLUSTERED INDEX 2 55.2260934025204 2 0

1 PK_Sales_Orders CLUSTERED INDEX 1 0.296515937731653 1 0

2 FK_Sales_Orders_CustomerID NONCLUSTERED INDEX 1 39.6713615023474 1 0

3 FK_Sales_Orders_SalespersonPersonID NONCLUSTERED INDEX 1 28.5396590066716 1 0

4 FK_Sales_Orders_PickedByPersonID NONCLUSTERED INDEX 1 58.5001235483074 1 0

5 FK_Sales_Orders_ContactPersonID NONCLUSTERED INDEX 1 39.6713615023474 1 0

*/

Identify missing indexes

When the query optimizer compiles a T-SQL statement, it also tracks up to 500 indexes that could have been used if they had existed. The following DMVs help you review these missing indexes:

![]() sys.dm_db_missing_index_details Use this DMV to identify the columns used for equality and inequality predicates.

sys.dm_db_missing_index_details Use this DMV to identify the columns used for equality and inequality predicates.

![]() sys.dm_db_missing_index_groups Use this DMV as an intermediary between sys.dm_db_index_details and sys.dm_db_missing_group_stats.

sys.dm_db_missing_index_groups Use this DMV as an intermediary between sys.dm_db_index_details and sys.dm_db_missing_group_stats.

![]() sys.dm_db_missing_index_group_stats Use this DMV to retrieve metrics on a group of missing indexes.

sys.dm_db_missing_index_group_stats Use this DMV to retrieve metrics on a group of missing indexes.

Note SQL Server restart clears information from DMOs

The information in these DMOs is cleared when SQL Server restarts and reflects only information accumulated since the last restart.

You use the three DMVs as a group, as shown in Listing 4-11. The output, which will vary on your computer, will list each database, schema, and table that is missing an index in descending order of the overall improvement expected by adding an index. The improvement is derived by multiplying the sum of the seeks and scans that the index helps by the average cost of the user queries that could be reduced by the index and by the average percent decrease in cost resulting from implementing the index. The equality_columns column lists one or more columns in the table that are good candidates for the new index. The inequality_columns column lists columns that are useful for queries that include the <> operator that you might consider adding to the index. Last, the included_columns column lists the columns that are suggested for addition to the index in the INCLUDE clause.

LISTING 4-11 Review missing indexes

SELECT

(user_seeks + user_scans) * avg_total_user_cost * (avg_user_impact * 0.01) AS

IndexImprovement,

id.statement,

id.equality_columns,

id.inequality_columns,

id.included_columns

FROM sys.dm_db_missing_index_group_stats AS igs

INNER JOIN sys.dm_db_missing_index_groups AS ig

ON igs.group_handle = ig.index_group_handle

INNER JOIN sys.dm_db_missing_index_details AS id

ON ig.index_handle = id.index_handle

ORDER BY IndexImprovement DESC;

/* Output

IndexImprovmeent statement equality_columns inequality_columns included_columns

-------------------------- ------------------------------ -------------------------

79.89008274829 [AdventureWorksDW].[dbo].[FactInternetSales] [ProductKey] NULL

[CustomerKey]

*/

Consolidate overlapping indexes

Consider a situation in which two indexes include similar indexes such as those shown in Listing 4-12. In this example, the two indexes are the same except one index contains an additional column. When SQL Server processes a query that needs the columns in the smaller index, it uses the smaller index. Conversely, when processing a query that uses all columns in the larger index, then SQL Server uses that index.

LISTING 4-12 Create overlapping indexes

USE [WideWorldImporters];

GO

CREATE NONCLUSTERED INDEX [IX_Sales_Invoices_ExamBook762Ch4_A]

ON [Sales].[Invoices]

(

[CustomerID],

[InvoiceDate]

)

INCLUDE ([TotalDryItems]);

GO

CREATE NONCLUSTERED INDEX [IX_Sales_Invoices_ExamBook762Ch4_B]

ON [Sales].[Invoices]

(

[CustomerID],

[InvoiceDate],

[CustomerPurchaseOrderNumber]

)

INCLUDE ([TotalDryItems]);

GO

With regard to query performance, all is well. However, SQL Server has an additional index to manage and requires more disk space for the database when similar indexes exist. This situation can occur when you create new indexes based on the DMVs for missing indexes or follow the recommendations from the Database Engine Tuning Advisor because they do not always account for existing indexes. Therefore, when using the DMVs or Database Engine Tuning Advisor to identify missing indexes, you might consider simply adding a new column to an existing index by adding it as another key or as an included column.

Meanwhile, when you encounter overlapping indexes, you should drop one of them so that database maintenance tasks run faster and less storage is required. Use the script in Listing 4-13 to find overlapping indexes.

![]() Exam Tip

Exam Tip

The exam has several different styles of questions to test your understanding of missing indexes and overlapping indexes. In particular, you should know how to identify overlapping indexes and what actions are necessary to consolidate and optimize them. It’s also important to understand which operators can be removed with a clustered or covering index.

LISTING 4-13 Find overlapping indexes

USE [WideWorldImporters];

WITH IndexColumns AS (

SELECT

'[' + s.Name + '].[' + T.Name + ']' AS TableName,

ix.name AS IndexName,

c.name AS ColumnName,

ix.index_id,

ixc.index_column_id,

COUNT(*) OVER(PARTITION BY t.OBJECT_ID, ix.index_id) AS ColumnCount

FROM sys.schemas AS s

INNER JOIN sys.tables AS t ON

t.schema_id = s.schema_id

INNER JOIN sys.indexes AS ix ON

ix.OBJECT_ID = t.OBJECT_ID

INNER JOIN sys.index_columns AS ixc ON

ixc.OBJECT_ID = ix.OBJECT_ID AND

ixc.index_id = ix.index_id

INNER JOIN sys.columns AS c ON

c.OBJECT_ID = ixc.OBJECT_ID AND

c.column_id = ixc.column_id

WHERE

ixc.is_included_column = 0 AND

LEFT(ix.name, 2) NOT IN ('PK', 'UQ', 'FK')

)

SELECT DISTINCT

ix1.TableName,

ix1.IndexName AS Index1,

ix2.IndexName AS Index2

FROM IndexColumns AS ix1

INNER JOIN IndexColumns AS ix2 ON

ix1.TableName = ix2.TableName AND

ix1.IndexName <> ix2.IndexName AND

ix1.index_column_id = ix2.index_column_id AND

ix1.ColumnName = ix2.ColumnName AND

ix1.index_column_id < 3 AND

ix1.index_id < ix2.index_id AND

ix1.ColumnCount <= ix2.ColumnCount

ORDER BY ix1.TableName, ix2.IndexName;

/* Output

TableName Index1 Index2

-------------------------------------------------------------------

[Sales].[Invoices] IX_Sales_Invoices_ExamBook762Ch4_A

IX_Sales_Invoices_ExamBook762Ch4_B

[Sales].[OrderLines]IX_Sales_OrderLines_AllocatedStockItems

IX_Sales_OrderLines_Perf_20160301_02

*/

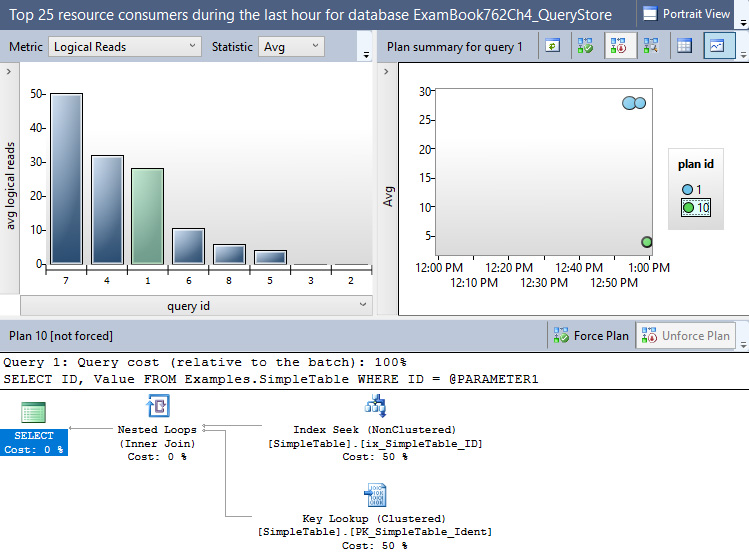

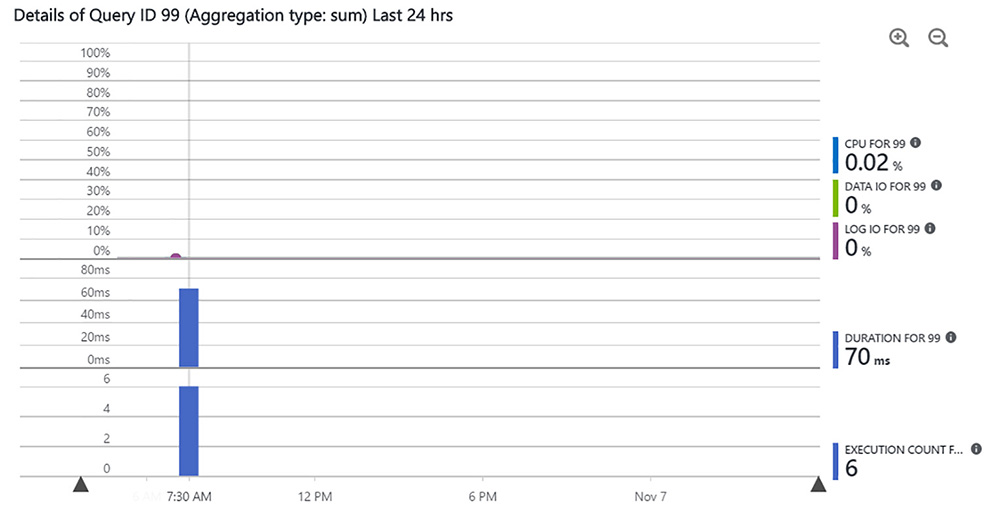

Skill 4.2: Analyze and troubleshoot query plans

One of the most important skills that you can have as a database administrator is the ability to analyze and troubleshoot query plans. In this section, we explain how to capture query plans, how certain query plan operators can indicate a potential performance problem, and how to interpret estimated versus actual query plans. We also introduce Query Store as a SQL Server 2016 feature that you can use to review SQL Server’s selection of query plans for a query over time. For Azure SQL Database implementations, we show you how Azure SQL Database Performance Insight provides visibility into query performance in the cloud.

This section covers how to:

![]() Capture query plans using extended events and traces

Capture query plans using extended events and traces

![]() Identify poorly performing query plan operators

Identify poorly performing query plan operators

![]() Create efficient query plans using Query Store

Create efficient query plans using Query Store

![]() Compare estimated and actual query plans and related metadata

Compare estimated and actual query plans and related metadata

![]() Configure Azure SQL Database Performance Insight

Configure Azure SQL Database Performance Insight

Capture query plans using extended events and traces

Before a query executes, several processes occur to manage how SQL Server performs the instructions in the T-SQL statement. The first process is query parsing, a step in which the database engine checks to make sure the submitted query uses valid T-SQL syntax. If query parsing is successful and if the T-SQL statement is a DML statement, the next process to run is the algebrizer, which verifies the existence of the referenced objects, such as tables and columns. If this process succeeds, the next process invoked is the query optimizer. The query optimizer checks to see if a query plan already exists for the query. If not, it generates one or more query plans based on the statistics available for the data and then selects the query plan that is good enough to perform the task at hand while minimizing the use CPU and IO when possible.

Although you can use the graphical query plan to analyze a single query, such as you might during index design as described in Chapter 1, you need an alternate approach when you need to troubleshoot many queries running on a server. In that case, you can automate the process of capturing execution plans by using extended events or SQL Trace.

Important Permissions required to view a query plan

You must have the appropriate permissions within a database to view a query plan. If your login is assigned to the sysadmin, dbcreator, or db_owner role, you have the necessary permissions. Otherwise, you need to be granted the SHOWPLAN permission by running the following statement:

GRANT SHOWPLAN TO [username];

Extended Events

Using Extended Events is a lightweight approach to capturing query plans. There are two Extended Events that you can use to review query plans:

![]() query_pre_execution_showplan This Extended Event captures the estimated query plan for a query. An estimated query plan is prepared without executing the query.

query_pre_execution_showplan This Extended Event captures the estimated query plan for a query. An estimated query plan is prepared without executing the query.

![]() query_post_execution_showplan This Extended Event captures the actual query plan for a query. An actual query plan is the estimated query plan that includes runtime information. For this reason, it is not available until after the query executes.

query_post_execution_showplan This Extended Event captures the actual query plan for a query. An actual query plan is the estimated query plan that includes runtime information. For this reason, it is not available until after the query executes.

Listing 4-14 shows how to create and start an Extended Event session for actual query plans. In this example, the session definition filters the query activity on the server for a specific database and query type, ADHOC. In your own environment, you can remove filters entirely or apply more filters as needed. We describe how to work with Extended Events in greater detail in Skill 4.4.

Note Create target folder for query plan before running sample script

The target folder for the query plan, C:ExamBook762Ch4, must exist before running the script shown in Listing 4-14.

Also, be aware that running an Extended Event session to capture actual query plans is an expensive operation and should be used sparingly on a production server and only with highly selective filtering in place.

LISTING 4-14 Create and start an Extended Event session to capture an actual query plan

IF EXISTS(SELECT *

FROM sys.server_event_sessions

WHERE name='ActualQueryPlans')

DROP EVENT SESSION ActualQueryPlans

ON SERVER;

GO

CREATE EVENT SESSION ActualQueryPlans

ON SERVER

ADD EVENT sqlserver.query_post_execution_showplan(

ACTION (sqlserver.database_name,

sqlserver.client_hostname,

sqlserver.client_app_name,

sqlserver.plan_handle,

sqlserver.sql_text,

sqlserver.tsql_stack,

package0.callstack,

sqlserver.query_hash,

sqlserver.session_id,

sqlserver.request_id)

WHERE

sqlserver.database_name='WideWorldImporters' AND

object_type = 'ADHOC'

)

ADD TARGET package0.event_file(SET filename=N'C:ExamBook762Ch4ActualQueryPlans.xel',

max_file_size=(5),max_rollover_files=(4)),

ADD TARGET package0.ring_buffer

WITH (MAX_DISPATCH_LATENCY=5SECONDS, TRACK_CAUSALITY=ON);

GO

ALTER EVENT SESSION ActualQueryPlans

ON SERVER

STATE=START;

GO

You can review the query plans captured by this Extended Event using the graphical interface in SQL Server Management Studio. In Object Explorer, expand the Management node, expand the Sessions node, right click ActualQueryPlans, and select Watch Live Data. Now that you are watching the sessions, execute a query, like this:

USE WideWorldImporters;

GO

SELECT *

FROM Warehouse.StockGroups;

In the session window, click the row in which the query event appears, and then click the Query Plan tab in the lower portion of screen, as shown in Figure 4-4.

When you no longer need to capture query plans, be sure to disable or drop the Extended Event session, using the applicable statement in Listing 4-15.

LISTING 4-15 Disable or drop extended event sessions

--Disable extended event session

ALTER EVENT SESSION ActualQueryPlans

ON SERVER

STATE=STOP;

GO

--Drop extended event session

IF EXISTS(SELECT *

FROM sys.server_event_sessions

WHERE name='ActualQueryPlans')

DROP EVENT SESSION ActualQueryPlans

ON SERVER;

GO

SQL Trace

Although SQL Trace is designated as a deprecated feature and will be removed from a future release of SQL Server, it remains an available option in SQL Server 2016. You can define server-side traces by using system stored procedures and then run these traces on demand or on a scheduled basis. As an alternative, you can use SQL Server Profiler as a client-side option. The overhead of running server-side traces is much less than the overhead of using SQL Server Profiler, but the overhead is still significant. Therefore, take care when using SQL Trace in a production environment regardless of the approach you take and disable tracing as soon as possible.

Server-side tracing

To define a trace, use the following system stored procedures:

![]() sp_trace_create This procedure creates a new trace and defines a file into which SQL Server stores trace data. It returns a trace ID that you reference in the other procedures to manage the trace.

sp_trace_create This procedure creates a new trace and defines a file into which SQL Server stores trace data. It returns a trace ID that you reference in the other procedures to manage the trace.

![]() sp_trace_setevent This procedure must be called once for each data column of the events to capture in the trace. That means you must call this procedure many times for any single trace. When you call this procedure, you pass in the following arguments, the trace identifier captured as output when you create the trace, the event identifier, the column identifier, and the status of ON (1) or OFF (0).

sp_trace_setevent This procedure must be called once for each data column of the events to capture in the trace. That means you must call this procedure many times for any single trace. When you call this procedure, you pass in the following arguments, the trace identifier captured as output when you create the trace, the event identifier, the column identifier, and the status of ON (1) or OFF (0).

![]() sp_trace_setfilter This procedure must be called once for each filter on an event data column.

sp_trace_setfilter This procedure must be called once for each filter on an event data column.

![]() sp_trace_setstatus This procedure starts, stops, or removes a trace. It must be stopped and removed before you can open the related trace file.

sp_trace_setstatus This procedure starts, stops, or removes a trace. It must be stopped and removed before you can open the related trace file.

Listing 4-16 illustrates how to use these four system stored procedures to create a trace for a query plan. The trace data is stored in the ExamBook762Ch4 folder that must exist prior to executing the sp_trace_create system stored procedure. Next, the sp_trace_setevent system stored procedure is called multiple times to capture the query plan, the login name associated with the query, the start and end time of the query, and the text of the query, respectively.

LISTING 4-16 Create a trace, add events and filter to a trace, and start a trace

USE master;

GO

DECLARE @TraceID int;

EXEC sp_trace_create

@TraceID output,

0,

N'C:ExamBook762Ch4ActualQueryPlanTrc';

EXEC sp_trace_setevent @TraceID,

146, -- Showplan XML Statistics Profile

27, -- BinaryData column

1; -- Column is ON for this event

EXEC sp_trace_setevent @TraceID,

146,

1, -- TextData column

1;

EXEC sp_trace_setevent @TraceID,

146,

14, -- StartTime column

1;

EXEC sp_trace_setevent @TraceID,

146,

15, -- EndTime column

1;

-- Set filter for database

EXEC sp_trace_setfilter @TraceID,

@ColumnID = 35, --Database Name

@LogicalOperator = 0, -- Logical AND

@ComparisonOperator = 6, -- Comparison LIKE

@Value = N'WideWorldImporters' ;

-- Set filter for application name

EXEC sp_trace_setfilter @TraceID,

@ColumnID = 10, --ApplicationName

@LogicalOperator = 0, -- Logical AND

@ComparisonOperator = 6, -- Comparison LIKE

@Value = N'Microsoft SQL Server Management Studio - Query' ;

-- Start Trace (status 1 = start)

EXEC sp_trace_setstatus @TraceID, 1;

GO

Note Resources for sp_trace_setevent and sp_trace_setfilter

Lists of event and column identifiers for defining the event columns are available at “sp_trace_setevent (Transact-SQL)”, https://msdn.microsoft.com/en-us/library/ms186265.aspx. Lists of the logical and comparison operators for the trace filter are available at “sp_trace_setfilter (Transact-SQL)” at https://msdn.microsoft.com/en-us/library/ms174404.aspx.

Now execute a query to generate an event for the trace like this:

USE WideWorldImporters;

GO

SELECT *

FROM Warehouse.StockGroups;

To view the trace information, find the trace identifier by using the sys.fn_trace_getinfo system function and then use sp_trace_setstatus twice, as shown in Listing 4-17, replacing <traceid> with the trace identifier that you find by executing the first statement. The first time you set the status of the trace to 0 to stop it and the second time you set the status to 2 to close and delete the trace information from SQL Server.

LISTING 4-17 Stop and delete a trace

---- Find the trace ID

USE master;

GO

SELECT *

FROM sys.fn_trace_getinfo(0)

WHERE value = 'C:ExamBook762Ch4ActualQueryPlanTrc.trc';

-- Set the trace status to stop

EXEC sp_trace_setstatus

@traceid = <traceid>,

@status= 0;

GO

-- Close and Delete the trace

EXEC sp_trace_setstatus

@traceid = <traceid>,

@status = 2;

GO

The trace file remains on the file system and is available for you to view in SQL Server Profiler. Be sure to open SQL Server Profiler by using the Run As Administrator option and then open the trace file. Click the row containing the query’s Showplan event to view the graphical query plan, as shown in Figure 4-5.

Setting up traces manually by using the system stored procedures can be tedious due to the number of numeric parameters required. Rather than refer to Books Online to find the necessary values, you can take advantage of SQL Server catalog views to find the values you need, as shown in Listing 4-18.

LISTING 4-18 Get event and column identifiers for use in a trace definition

--Get event identifiers

SELECT

e.trace_event_id AS EventID,

e.name AS EventName,

c.name AS CategoryName

FROM sys.trace_events e

JOIN sys.trace_categories c

ON e.category_id = c.category_id

ORDER BY e.trace_event_id;

/* Partial output

EventID EventName CategoryName

------------ ------------------------------- ---------------------

10 RPC:Completed Stored Procedures

11 RPC:Starting Stored Procedures

12 SQL:BatchCompleted TSQL

13 SQL:BatchStarting TSQL

*/

--Get column identifiers for events

SELECT

trace_column_id,

name AS ColumnName

FROM sys.trace_columns

ORDER BY trace_column_id;

/* Partial output

trace_column_id ColumnName

----------------------- -----------------------------

1 TextData

2 BinaryData

3 DatabaseID

4 TransactionID

5 LineNumber

*/

LISTING 4-19 Create an indexed view to improve aggregate query performance

CREATE VIEW Sales.vSalesByYear

WITH SCHEMABINDING

AS

SELECT

YEAR(InvoiceDate) AS InvoiceYear,

COUNT_BIG(*) AS InvoiceCount

FROM Sales.Invoices

GROUP BY YEAR(InvoiceDate);

GO

CREATE UNIQUE CLUSTERED INDEX idx_vSalesByYear

ON Sales.vSalesByYear

(InvoiceYear);

GO

Client-side tracing

You can use SQL Server Profiler instead of manually creating the many stored procedures to define a trace when you need to capture a query plan. On the File menu, click New Trace, and then connect to the server on which you want to run the trace. In the Trace Properties dialog box, click the Events Selection tab and then select the Show All Events checkbox. Expand the Performance node, and select one or more of the following checkboxes:

![]() Showplan XML This event is raised when SQL Server selects an estimated query plan.

Showplan XML This event is raised when SQL Server selects an estimated query plan.

![]() Showplan XML For Query Compile This event is raised when SQL Server compiles a query and produces an estimated query plan which it adds to the query plan cache. Generally, this event is raised only once for a query unless the query requires recompilation.

Showplan XML For Query Compile This event is raised when SQL Server compiles a query and produces an estimated query plan which it adds to the query plan cache. Generally, this event is raised only once for a query unless the query requires recompilation.

![]() Showplan XML Statistics Profile This event is raised after SQL Server executes a query and has generated an actual query plan.

Showplan XML Statistics Profile This event is raised after SQL Server executes a query and has generated an actual query plan.

To minimize the performance impact of running SQL Server Profiler, you should apply as many filters as possible to capture only the query plans of interest. As an example, you might want to focus on queries in a specific database. To do this, select the Show All Columns checkbox, and then click Column Filters. In the Edit Filter dialog box, click DatabaseName, expand Like, type WideWorldImporters as shown in Figure 4-6, and then click OK. Next, click Run to start the trace.

After enabling a trace, you can run a query against the WideWorldImporters database, such as the one shown in Listing 4-15. After executing the query, click the Stop Selected Trace in the SQL Server Profiler toolbar. You can click on any of the events that begin with Showplan to view the graphical estimated or actual query plan, depending on the specific event you select. To save the query plan to a separate file for later review, right click the event, and then select Extract Event Data to save the file with a SQLPlan file extension. You can then open this file in SQL Server Management Studio.

Identify poorly performing query plan operators

Not only can a query plan tell you the specific steps performed by SQL Server during query execution, it can also help you discover which step in the sequence is performing poorly. Each step in the query plan is a separate operation performed by the database engine and is represented as an icon known as an operator. As you analyze a graphical query plan, you should check for the following conditions that can affect query performance:

![]() Query plan optimization You can find this property by right clicking the first operator in the plan (for example, SELECT) and selecting Properties. In the Properties window, look for the Reason For Early Termination Of Statement Optimization property. If it is Good Enough Plan Found, then proceed with further analysis of the query plan. If the value is Timeout, you should spend time tuning your query because the property indicates that the current query plan is not optimal.

Query plan optimization You can find this property by right clicking the first operator in the plan (for example, SELECT) and selecting Properties. In the Properties window, look for the Reason For Early Termination Of Statement Optimization property. If it is Good Enough Plan Found, then proceed with further analysis of the query plan. If the value is Timeout, you should spend time tuning your query because the property indicates that the current query plan is not optimal.

![]() Operators In particular, operators requiring a lot of memory (such as a Sort) or blocking operators can contribute to performance problems in a query. We describe potential issues with query plan operators in more detail later in this section.

Operators In particular, operators requiring a lot of memory (such as a Sort) or blocking operators can contribute to performance problems in a query. We describe potential issues with query plan operators in more detail later in this section.

![]() Arrow width The width of arrows between operators is an indicator relative to the number of rows affected by the operation. If you see one operation outputs a wide arrow, while the arrow preceding a SELECT operator is narrow, the query must process many rows before returning a small number of rows in the result set. In this case, you might investigate whether you can add a filter to the query to reduce the number of rows for the earlier operation and thereby improve overall query performance.

Arrow width The width of arrows between operators is an indicator relative to the number of rows affected by the operation. If you see one operation outputs a wide arrow, while the arrow preceding a SELECT operator is narrow, the query must process many rows before returning a small number of rows in the result set. In this case, you might investigate whether you can add a filter to the query to reduce the number of rows for the earlier operation and thereby improve overall query performance.

![]() Operator cost Each operator’s contribution to the overall cost of the query is represented as a percentage value. As you analyze the query plan, look for the operators with the highest costs.

Operator cost Each operator’s contribution to the overall cost of the query is represented as a percentage value. As you analyze the query plan, look for the operators with the highest costs.

![]() Warnings When the optimizer detects a problem, it includes a warning in an operator’s properties and displays a warning icon on the operator in the graphical query plan. This is a significant clue that the query performance is likely to suffer. If you see a warning, you should take steps to tune your query or optimize your environment to eliminate the warning.

Warnings When the optimizer detects a problem, it includes a warning in an operator’s properties and displays a warning icon on the operator in the graphical query plan. This is a significant clue that the query performance is likely to suffer. If you see a warning, you should take steps to tune your query or optimize your environment to eliminate the warning.

Let’s consider some examples of query plan operators that can adversely affect performance. Before executing the ad hoc queries in this section, click the Include Actual Execution Plan button once to enable the graphical query plan for each query.

Note Understanding query plans for queries using the WideWorldImporters database

The size of the WideWorldImporters database is too small to illustrate performance problems with the use of certain operators. Nonetheless, we use queries against this database to familiarize you with the appearance of specific poorly performing operators in a query plan, explain the conditions in which SQL Server might use them, and suggest steps you can take to improve query performance.

![]() Exam Tip

Exam Tip

Understanding query plan operators is important both in the real world and on the exam. Be prepared for questions that present two possible query plans and ask you to choose the more optimal of the two query plans.

Table Scan operator

As we explained in Chapter 1, SQL Server must read a heap row by row to find the rows for a query. This operation can perform slowly when run against a large table. Try this query:

SELECT *

FROM Warehouse.VehicleTemperatures;

In the Execution Plan window, as shown in Figure 4-7, you can see that SQL Server used a Table Scan operator that represents 100% of the query cost. Note also the size of the arrow between the SELECT and Table Scan operators to indicate a relatively large result set. In this case, the table is memory-optimized, so the performance cost of reading 659,998 rows for this query is minimal. On the other hand, if this table were not memory-optimized and you were investigating poor query performance, the presence of the Table Scan operator should lead you to consider adding a clustered index to the table or look for ways to filter the query to return fewer rows.

Clustered Index Scan operator

Another potential problem for query performance is the use of the Clustered Index Scan operator. It is similar in concept to the Table Scan operator in that SQL Server must scan all the data. This might occur when the query must return so many rows that using the index to find specific rows is no longer advantageous, index selectivity is too low, or statistics are obsolete. To see this operator in action, execute the following query:

SELECT *

FROM Warehouse.StockGroups;

As you can see in Figure 4-8, SQL Server uses the Clustered Index Scan operator to process the query. You can also see which index is used, PK_Warehouse_StockGroups. This clustered index is relatively small (as you can see by the size of the arrows between operators), so performance is not an issue here. However, the presence of a Clustered Index Scan operator can indicate the source of poor query performance and merits further investigation to determine whether you can modify the query by adding a WHERE clause to return only the needed rows, as long as a proper index exists for the column used in the filter. Importantly, the WHERE clause must have a predicate that includes a column on one side of the operator and an expression on the other side with both the column and expression having the same data type. If you use the LIKE operator in the WHERE clause, you cannot use a wildcard as the first character in the search string.

That said, an index scan is not necessarily a bad operation. It is more efficient than a table scan by comparison. Nonetheless, if you need to find a way to improve query performance, your next step is to add a filter. To see what happens when you do this, execute the following query:

SELECT *

FROM Warehouse.StockGroups

WHERE StockGroupID = 1;

Now SQL Server uses a Clustered Index Seek operator, as shown in Figure 4-9. This change to the query plan is good because a seek operator can be one of the faster data retrieval methods used by SQL Server. In particular, a Clustered Index Seek is a preferred operator because all data in the table is also included in the index and SQL Server can return results directly from the index without performing additional steps.

Notice also that SQL Server converted the predicate to @1 instead of using the actual value of 1 that was requested in the query. That way, SQL Server can reuse the query plan when the same query executes with a different value in the WHERE clause. This reuse is known as simple parameterization.

Index Seek (NonClustered) and Key Lookup (Clustered) operators

Like the Clustered Index Seek operator, the Index Seek (NonClustered) operator is a much better operator to see in a query plan than a Table Scan or a Clustered Index Scan operator because it can selectively find rows in the index rather than read all the rows, although this behavior is dependent on the query. On the other hand, if the non-clustered index is not a covering index, the query plan also includes a Key Lookup (Clustered) operator, which adds a slight overhead to query performance. To see a query plan with these characteristics, as shown in Figure 4-10, execute this query:

SELECT

StockGroupID,

StockGroupName,

ValidFrom,

ValidTo

FROM Warehouse.StockGroups

WHERE StockGroupName = 'Novelty Items';

In this case, the index used by the Index Seek (NonClustered) operator contains only the StockGroupName column, but the query references other columns in the SELECT clause, so it must use the Key Lookup (Clustered) operator to get the additional columns for each row. Incidentally, if the query references columns in JOIN conditions or the WHERE clause, SQL Server includes the Key Lookup (Clustered) operator in the query plan even if those columns are not also in the SELECT clause. Therefore, whenever you see the Key Lookup (Clustered) operator in combination with an Index Seek (NonClustered) operator, consider creating a covering index by adding the necessary columns to the index key or as included columns.

Note RID Lookup operator versus Key Lookup (Clustered) operator

When a query accesses a table without a clustered index, SQL Server uses the RID Lookup operator instead of the Key Lookup (Clustered) operator. The net effect of each operator type has a similar effect on query performance which can be mitigated by the addition of a covering index or a clustered index.

Sort operator

The Sort operator can also increase the cost of a query. Consider the query plan shown in Figure 4-11 that results from executing the following query which includes an ORDER BY clause containing a column that is not used in an index:

SELECT *

FROM Warehouse.StockItems

ORDER BY StockItemName;

Contrast the query plan in Figure 4-11 with the one in Figure 4-12 that is created by executing this query:

SELECT *

FROM Warehouse.StockItems

ORDER BY StockItemID;

Because a clustered index is already sorted, SQL Server no longer requires a Sort operator when the ORDER BY clause includes a key column from the clustered index. With this in mind, look for Sort operators with a significant query cost and consider adding the sort columns to a clustered index on the table. Another potential solution is to reduce the number of rows to sort by adding a WHERE clause to the query.

Another consideration when working with a Sort operator is the volume of data that SQL Server must sort. The query optimizer uses the estimated number of rows and the average row size to calculate the minimum amount of memory required to perform the operation and the amount of memory needed to perform the operation entirely in memory. If the actual number of rows to be sorted is larger than the estimate due to obsolete statistics, the operation spills to tempdb. You can identify this type of memory problem in the actual query plan when you see a warning symbol on the Sort, as shown in Figure 4-13, or Hash Match operators.

When you hover the cursor over the Sort operator, the tooltip includes a warning that the operator used tempdb to spill data, as shown in Figure 4-14.

The query performance will suffer when the sort must use tempdb instead of memory. Use the tooltip for the SELECT operator to check the Memory Grant property which shows how much memory that SQL Server is allocating to the query. In SQL Server 2016, you can now add a query hint to request a minimum memory grant size as a percentage of the default limit to override the minimum memory per query property that is set on the server like this:

OPTION(min_grant_percent = 100)

Hash Match (Aggregate) operator

Aggregations in a query can have a negative effect on performance and should be reviewed carefully. Figure 4-15 shows the query plan created for the following aggregate query:

SELECT

YEAR(InvoiceDate) AS InvoiceYear,

COUNT(InvoiceID) AS InvoiceCount

FROM Sales.Invoices

GROUP BY YEAR(InvoiceDate);

In this case, the Hash Match (Aggregate) operator to group the rows from the Index Scan (NonClustered) operator contributes a significant percentage to the query cost. To perform this aggregation, SQL Server creates a temporary hash table in memory to count the rows by invoice year. Notice the larger width of the arrow sending data into the Hash Match (Aggregate) operator as compared to the width of the arrow sending the results to the next operator as an indicator that a larger row set has been reduced to a smaller row set by the operation.

Options to consider for minimizing the impact on performance when performing aggregations is to minimize the number of rows to aggregate where possible or to use an indexed view to pre-aggregate rows. Execute the statements in Listing 4-19 to set up an indexed view to improve the previous query’s performance.

DCREATE VIEW Sales.vSalesByYear

WITH SCHEMABINDING

AS

SELECT

YEAR(InvoiceDate) AS InvoiceYear,

COUNT_BIG(*) AS InvoiceCount

FROM Sales.Invoices

GROUP BY YEAR(InvoiceDate);

GO

CREATE UNIQUE CLUSTERED INDEX idx_vSalesByYear

ON Sales.vSalesByYear

(InvoiceYear);

GO

Now you can execute the earlier query again, even though it does not reference the indexed view directly, to see how the query optimizer takes advantage of the indexed view in the query plan shown in Figure 4-16.

SELECT

YEAR(InvoiceDate) AS InvoiceYear,

COUNT(InvoiceID) AS InvoiceCount

FROM Sales.Invoices

GROUP BY YEAR(InvoiceDate);

As a result of the addition of the indexed view, SQL Server no longer requires the Hash Match (Aggregator) operator and instead uses a Clustered Index Scan (ViewClustered) operator to retrieve data. Because the data is pre-aggregated, the index scan is much faster in this case than it would be against an index containing all rows in the table.

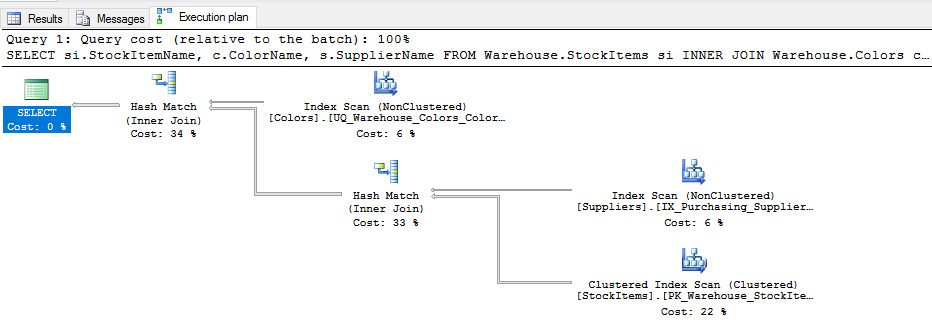

Hash Match (Inner Join) operator

Thus far, the queries we have examined have been relatively simple and read data from only one table. Now let’s consider a query that combines data from multiple tables to produce the query plan shown in Figure 4-17:

SELECT

si.StockItemName,

c.ColorName,

s.SupplierName

FROM Warehouse.StockItems si

INNER JOIN Warehouse.Colors c ON

c.ColorID = si.ColoriD

INNER JOIN Purchasing.Suppliers s ON

s.SupplierID = si.SupplierID;

In this example, we see the addition of the Hash Match (Inner Join) operator in two places in the query plan. We also see that these two operations have the two highest costs in the plan and therefore should be the first operations we assess for possible optimization. SQL Server uses this operator when it puts data into temporary hash tables so that it can match rows in two different data sets and produce a single result set. Specifically, SQL Server converts, or hashes, rows from the smaller data set into a value that is more efficient for comparisons and then stores these values in a hash table in tempdb. Then it compares each row in the larger data set with the hash table to find matching rows to join. As long as the smaller data set is in fact small, this comparison operation is fast, but performance can suffer when both data sets are large. Furthermore, if a query requires many of these operations, tempdb might experience memory pressure. Last, it’s important to note that the Hash Match (Inner Join) operator is a blocking operator as it requires SQL Server to gather data from each data set before it can perform the join.

In Figure 4-17, the Hash Match (Inner Join) operator combines the results of the Index Scan (NonClustered) and the Clustered Index Scan operators that gets SupplierID and SupplierName by scanning a non-clustered index on the Suppliers table and StockItemName, SupplierID, and ColorID by scanning the clustered index on the StockItems table. This result set becomes input for the second usage of the operation and is combined with ColorName set from an index scan on the Colors table.

Your options for improving the query performance based on this query plan include adding or revising indexes, filtering the data by using a WHERE clause, or fixing a WHERE clause that prevents the query optimizer from using an existing index. Let’s try adding indexes to the Suppliers and StockItems tables, as shown in Listing 4-20. The index on the Suppliers table includes the SupplierID column used for the JOIN operation and the SupplierName column to return in the final query results. Similarly, the index on the StockItems table includes the ColorID and SupplierID columns used for JOIN operations and the StockItemName column to return in the final query results.

LISTING 4-20 Add indexes to eliminate Hash Match (Inner Join) operators

CREATE NONCLUSTERED INDEX IX_Purchasing_Suppliers_ExamBook762Ch4_SupplierID

ON Purchasing.Suppliers

(

SupplierID ASC,

SupplierName

);

GO

CREATE NONCLUSTERED INDEX IX_Warehouse_StockItems_ExamBook762Ch4_ColorID

ON Warehouse.StockItems

(

ColorID ASC,

SupplierID ASC,

StockItemName ASC

);

After adding the indexes, execute the following query to see the new query plan, as shown in Figure 4-18:

SELECT

si.StockItemName,

c.ColorName,

s.SupplierName

FROM Warehouse.StockItems si

INNER JOIN Warehouse.Colors c ON

c.ColorID = si.ColoriD

INNER JOIN Purchasing.Suppliers s ON

s.SupplierID = si.SupplierID;

After adding the indexes, execute the following query to see the new query plan, as shown in Figure 4-18:

SELECT

si.StockItemName,

c.ColorName,

s.SupplierName

FROM Warehouse.StockItems si

INNER JOIN Warehouse.Colors c ON

c.ColorID = si.ColoriD

INNER JOIN Purchasing.Suppliers s ON

s.SupplierID = si.SupplierID;