Don't blame the Compiler.

In the previous chapters we have encountered here and there hints for testing individual functions. Without meaningful tests to ensure the quality of our package, all of our work would be for naught, for on what else are we to base our confidence in the reliability of our functions? Therefore, we are now going to give our full attention to this important topic, and to this end we ask two questions that every software developer should ask:

How can we be certain that our software functions behave according to their specifications, which in our case means first of all that they are mathematically correct?

How can we achieve stability and reliability in the functioning of our software?

Although these two questions are closely related, they are actually concerned with two different problem areas. A function can be mathematically incorrect, for example if the underlying algorithm has been incorrectly implemented, yet it can reliably and stably reproduce this error and consistently give the same false output for a given input. On the other hand, functions that apparently return correct results can be plagued by other sorts of errors, for example an overflow of the length of a vector or the use of incorrectly initialized variables, leading to undefined behavior that remains undetected due to favorable (or should we rather say unfavorable?) test conditions.

We thus must be concerned with both of these aspects and institute development and test methods that can provide us sufficient trust in both the correctness and reliability of our programs. There are numerous publications that discuss the significance and consequences of these wide-ranging requirements for the entire software development process and delve deeply into the issues of software quality. Considered attention to this topic has found expression not least in the international trend to institute the ISO 9000 standard in software production. In this regard one no longer speaks merely of "teshtting" or "quality assurance," but instead one hears talk of "quality management" or "total quality management," which in part are simply the result of effective marketing, but which nonetheless cast the issue in the proper light, namely, to consider the process of software creation in its multifaceted entirety and thereby improve it. The frequently employed expression "software engineering" cannot blind us to the fact that this process, as it relates to predictability and precision, as a rule can scarcely compete with the classical discipline of engineering.

The comparison may be characterized aptly by the following joke: A mechanical engineer, an electrical engineer, and a software engineer have decided to take an automobile trip together. They seat themselves in the car, but it refuses to start. The mechanical engineer says at once, "The problem is with the motor. The injection nozzle is clogged." "Nonsense," retorts the electrical engineer. "The electronics are to blame. The ignition system has certainly failed." Whereupon the software engineer makes the following suggestion: "Let's all get out of the car and climb back in. Perhaps then it will start."

Without pursuing the further conversations and adventures of the three intrepid engineers, let us proceed to consider some of the options that were implemented in the creation and testing of the FLINT/C package. Above all, the following references were consulted, which do not exhaust the reader with abstract considerations and guidelines but get down to concrete assistance in solving concrete problems, without in the process losing sight of the big picture.[41] Each of these books contains numerous references to further important literature on this topic:

[Dene] is a standard work that deals with the entire process of software development. The book contains many methodological pointers based on the practical experience of the author as well as many clear and useful examples. The theme of testing is attacked again and again in connection with the various phases of programming and system integration, where the conceptual and methodological fundamentals are discussed together with the practical point of view, all in conjunction with a thoroughly worked out example project.

[Harb] contains a complete description of the programming language C and the C standard library, and it gives many valuable pointers and comments on the prescriptions of the ISO standard. This is an indispensable reference work to be consulted at every turn.

[Hatt] goes into great detail on the creation of security-critical software systems in C. Typical experience and sources of error are demonstrated by means of concrete examples and statistics—and C certainly offers many opportunities for error. There is also comprehensive methodological advice, which if heeded would lead to increased trust in software products.

[Lind] is an excellent and humorous book, which reveals a deep understanding of the C programming language. Moreover, the author knows how to transmit this understanding to the reader. Many of the topics considered could be supplied the subtitle, "Did you know that ... ?" and only a very few readers could honestly—hand on heart—reply in the affirmative.

[Magu] deals with the design of subsystems and is therefore of particular interest to us. Here are discussed the interpretation of interfaces and the principles of dealing with functions with input parameters. The differences between risky and defensive programming are elucidated as well. The effective use of assertions (see page 153) as testing aids and for the avoidance of undefined program states is a further strong point of this book.

[Murp] contains a host of testing tools that can be put to use in testing programs with little effort and that yield immediate useful results. Among its other features the book offers libraries on an accompanying diskette for the implementation of assertions, testing the processing of dynamic memory objects, and reporting the degree of coverage of tests, which were also used for testing the FLINT/C functions.

[Spul] offers a broad view of methods and tools for testing programs in the C and C++ languages and gives numerous pointers for their effective use. The book contains an extensive overview of programming errors typical in C and C++ and discusses techniques for recognizing and eliminating them.

The methodological approaches to testing can be divided into two categories: static testing and dynamic testing. In the first category are to be found code inspection, whereby the source code is carefully examined and inspected line by line for such problems as deviations from specifications (in our case these are the selected algorithms), errors in reasoning, inaccuracies with respect to the arrangement of code lines or the style guide, doubtful constructions, and the presence of unnecessary code sequences.

Code inspection is supported by the use of analytic tools, such as the well-known Unix lint tools, that largely automate this laborious task. Originally, one of the main applications of lint was to compensate for earlier existing deficits in C in consistency checking of parameters that were passed to functions in separately compiled modules. Meanwhile, there have appeared more convenient products than the classical lint, products that are capable of discovering an enormous bandwidth of potential problems in program code, represented only in small part by syntax errors that definitively prevent a compiler from effecting a translation of the code. A few examples of the problem domains that can be uncovered by static analysis are as follows:

syntax errors,

missing or inconsistent function prototypes,

inconsistencies in the passing of parameters to functions,

references to or joining of incompatible types,

use of uninitialized variables,

nonportable constructs,

unusual or implausible use of particular language constructs,

unreachable code sequences,

An imperative condition for stringent type-checking by automated tools is the use of function prototypes. With the help of prototypes an ISO-conforming C compiler is capable of checking, across all modules, the types of arguments passed to functions and detecting inconsistencies. Many compilers can also be set to analyze the source code, as they recognize many problems when the appropriate warning levels are turned on. The C/C++ compiler gcc of the GNU project of the Free Software Foundation, for example, possesses above-average analysis functions, which can be activated with the options -Wall -ansi and -pedantic.[42]

For static testing in setting up the FLINT/C functions, in addition to tests being performed on a number of different compilers (see page 8), there were employed primarily the products PC-lint from Gimpel Software (version 7.5; see [Gimp]) and Splint from the Secure Programming Group at the University of Virginia (version 3.1.1; see [Evan]).[43]

PC-lint has proved itself to be a very useful tool for testing both C and C++ programs. It knows about approximately two thousand separate problems and uses mechanisms that in a limited way derive from the code the values loaded into automatic variables at run time and include this in the diagnosis. In this way many problems, such as exceeding the limits of vectors, that are usually, if at all, detected only at run time (which is to say during testing, it is to be hoped, and not afterwards) can be uncovered already during static analysis.

In addition to these tools, the freely obtainable Splint has been adapted to run under Linux. Splint distinguishes four modes (weak, standard, check, strict), each connected with certain presets, and which carry out tests of varying degrees of rigorousness. In addition to the typical lint functions, Splint offers possibilities to test programs for the presence of particular specifications, which are inserted as specially formatted comments in the source code. In this way boundary conditions for the implementation of functions and their invocation can be formulated and their conformity to specifications checked, and there are additional possible semantic controls.

For programs not equipped with supplementary specifications the mode set with the option -weak is recommended as standard. However, according to the manual a special reward will be presented to the first person who can produce a "real program" that produces no errors with Splint in -strict mode. As a precondition for using these two tools in a reasonable manner it proved to be useful in testing the FLINT/C functions to test precisely which options are used and to create corresponding profile files so as to configure the tools for individual use.

After extensive revisions of the FLINT/C code, at the end of the test phase neither of the two products produced any warnings that on close examination could be considered serious. With this goes the hope that we have come a long way in fulfilling the conditions set above for the quality of the FLINT/C functions.

The goal of run-time tests should be to prove that a building block of a piece of software fulfills its specifications. To give the tests sufficient expressive power so as to justify the expense of time and money that goes into their development and execution, we must make of them the same demands that we do of scientific experiments: They must be completely documented, and their results must be reproducible and able to be checked by outsiders. It is useful to distinguish between testing individual modules and integrated system tests, though here the boundaries are fluid (see [Dene], Section 16.1).

To achieve this goal in testing modules the test cases must be so constructed that functions can be exhaustively tested to the extent possible, or in other words, that as large a coverage as possible be achieved of the functions being tested. To establish test coverage various metrics can be employed. For example, for C0 coverage what is measured is the portion of instructions of a function or module that are actually run through, or, concretely, which instructions are not run through. There are more powerful measurements than C0 coverage, which take note of the portion of branches that are taken (C1 coverage), or even the portion of paths of a function that are run through. The last of these is a considerably more complex measure then the first two.

In each case the goal is to achieve maximal coverage with test cases that completely check the behavior of the interface of the software. This covers two aspects that are only loosely connected one with the other: A test driver that runs through all the branches of a function can still leave errors undetected. On the other hand, one can construct cases in which all the properties of a function are tested, even though some branches of the function are not considered. The quality of a test can thus be measured in at least two dimensions.

If to achieve a high degree of test coverage it does not suffice to establish the test cases based simply on knowledge of the specification, which leads to so-called black box tests, it is necessary to take into consideration the details of the implementation in the construction of test cases, a modus operandi that leads to so-called white box tests. An example where we have created test cases for a special branch of a function based only on the specification is the division algorithm on page 53: To test step 5 the special test data on page 65 were specified, which have the effect that the associated code is executed. On the other hand, the necessity of special test data for division by smaller divisors becomes clear only when one considers that this process is passed off to a special part of the function div_l(). What is involved here is an implementation detail that cannot be derived from the algorithm.

In practice, one usually ends up with a mixture of black box and white box methods, which in [Dene] are aptly called gray box tests. However, it can never be expected that one hundred percent coverage is to be achieved, as the following considerations demonstrate: Let us assume that we are generating prime numbers with the Miller – Rabin test with a large number of iterations (50, say) and a corresponding low probability of error

The testing of the arithmetic functions in the FLINT/C package, which is carried out primarily from a mathematical viewpoint, is quite a challenge. How can we establish whether addition, multiplication, division, or even exponentiation of large numbers produces the correct results? Pocket calculators can generally compute only on an order of magnitude equivalent to that of the standard arithmetic functions of the C compiler, and so both of these are of limited value in testing.

To be sure, one has the option of employing as a test vehicle another arithmetic software package by creating the necessary interface and transformations of the number formats and letting the functions compete against each other. However, there are two strikes against such an approach: First, this is not sporting, and second, one must ask oneself why one should have faith in someone else's implementation, about which one knows considerably less than about one's own product. We shall therefore seek other possibilities for testing and to this end employ mathematical structures and laws that embody sufficient redundancy to be able to recognize computational errors in the software. Discovered errors can then be attacked with the aid of additional test output and modern symbolic debuggers.

We shall therefore follow selectively a black box approach, and in the rest of this chapter we shall hope to work out a serviceable test plan for the run-time tests that follows essentially the actual course of testing that was used on the FLINT/C functions. In this process we had the goal of achieving high C1 coverage, although no measurements in this regard were employed.

The list of properties of the FLINT/C functions to be tested is not especially long, but it is not without substance. In particular, we must convince ourselves of the following:

All calculational results are generated correctly over the entire range of definition of all functions.

In particular, all input values for which special code sequences are supplied within a function are correctly processed.

Overflow and underflow are correctly handled. That is, all arithmetic operations are carried out modulo Nmax + 1.

Leading zeros are accepted without influencing the result.

Function calls in accumulator mode with identical memory objects as arguments, such as,

add_l(n_l, n_l, n_l), return correct results.All divisions by zero are recognized and generate the appropriate error message.

There are many individual test functions necessary for the processing of this list, functions that call the FLINT/C operations to be tested and check their results. The test functions are collected in test modules and themselves individually tested before they are set loose on the FLINT/C functions. For testing the test functions the same criteria and the same means for static analysis are employed as for the FLINT/C functions, and furthermore, the test functions should be run through at least on a spot-check basis with the help of a symbolic debugger in single-step mode in order to check whether they test the right thing. In order to determine whether the test functions truly respond properly to errors, it is helpful deliberately to build errors into the arithmetic functions that lead to false results (and then after the test phase to remove these errors without a trace!).

Since we cannot test every value in the range of definition for CLINT objects, we need, in addition to fixed preset test values, randomly generated input values that are uniformly distributed across the range of definition [0, Nmax]. To this end we use our function rand_l(r_l, bitlen), where we select the number of binary digits to be set in the variable bitlen with the help of the function usrand64_l() modulo (MAX2 + 1) randomly from the interval [0, MAX2]. The first pass at testing mustbe the functions for generating pseudorandom numbers, which were discussed in Chapter 12, where among other things we employ the chi-squared test described there for testing the statistical quality of the functions usrand64_l() and usrandBBS_l(). Additionally, we must convince ourselves that the functions rand_l() and randBBS_l() properly generate the CLINT number format and return numbers of precisely the predetermined length. This test is also required for all other functions that output CLINT objects. For recognizing erroneous formats of CLINT arguments we have the function vcheck_l(), which is therefore to be placed at the beginning of the sequence of tests.

A further condition for most of the tests is the possibility of determining the equality or inequality and size comparison of integers represented by CLINT objects. We must also test the functions ld_l(), equ_l(), mequ_l(), and cmp_l(). This can be accomplished with the use of both predefined and random numbers, where all cases—equality as well as inequality with the corresponding size relations—are to be tested.

The input of predefined values proceeds optimally, depending on the purpose, by means of the function str2clint_l() or as an unsigned type with the conversion function u2clint_l() or ul2clint_l(). The function xclint2str_l(), complementary to str2clint_l(), is used for the generation of test output. These functions are therefore the next to appear on our list of functions to be tested. For the testing of string functions we exploit their complementarity and check whether executing one function after the other produces the original character string or, for the other order, the output value in CLINT format. We shall return to this principle repeatedly below.

All that now remains to test are the dynamic registers and their control mechanisms from Chapter 9, which in general we would like to include in the test functions. The use of registers as dynamically allocated memory supports us in our efforts to test the FLINT/C functions, where we additionally implement a debug library for the malloc() functions for allocation of memory. A typical function in such a package, of which there are to be found both public-domain and commercial products (cf. [Spul], Chapter 11), is checking for maintenance of the bounds of dynamically allocated memory. With access to the CLINT registers we can keep close tabs on our FLINT/C functions: Every penetration of the border into foreign memory territory will be reported.

A typical mechanism that enables this redirects calls to malloc() to a special test function that receives the memory requests, in turn calls malloc(), and thereby allocates a somewhat greater amount of memory than is actually requested. The block of memory is registered in an internal data structure, and a frame of a few bytes is constructed "right" and "left" of the memory originally requested, which is filled with a redundant pattern such as alternating binary zeros and ones. Then a pointer is returned to the free memory within the frame. A call to free() now in turn goes first to the debug shell of this function. Before the allocated block is released a check is made as to whether the frame has been left unharmed or whether the pattern has been destroyed by overwriting, in which case an appropriate message is generated and the memory is stricken from the registration list. Only then is the function free() actually called. At the end of the application one can check using the internal registration list whether, or which, areas of memory were not released. The orchestrating of the code for rerouting the calls to malloc() and free() to their debug shells is accomplished with macros that are usually defined in #include files.

For the test of the FLINT/C functions the ResTrack package from [Murp] is employed. This enables the detection, in certain cases, of subtle instances of exceeding the vector bounds of CLINT variables, which otherwise might have remained undetected during testing.

We have now completed the basic preparations and consider next the functions for basic calculation (cf. Chapter 4)

add_l(), sub_l(), mul_l(), sqr_l(), div_l(), mod_l(), inc_l(), dec_l(), shl_l(), shr_l(), shift_l(),

including the kernel functions

add(), sub(), mult(), umul(), sqr(),

the mixed arithmetic functions with a USHORT argument

uadd_l(), usub_l(), umul_l(), udiv_l(), umod_l(), mod2_l(),

and finally the functions for modular arithmetic (cf. Chapters 5 and 6)

madd_l(), msub_l(), mmul_l(), msqr_l(),

and the exponentiation function

*mexp*_l().

The calculational rules that we shall employ in testing these functions arise from the group laws of the integers, which have been introduced already in Chapter 5 for the residue class rings

Table 13-1. Group law for the integers to help in testing

|

| |

|---|---|---|

| a + 0 = a | a • 1 = a |

| a + b = b + a | a • b = b • a |

| (a + b) + c = a + (b + c) | (a • b) • c = a • (b • c) |

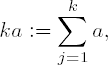

Addition and multiplication can be tested one against the other by making use of the definition

at least for small values of k. Further relations amenable to testing are the distributive law and the first binomial formula:

Distributive law : a • (b + c)= a • b + a • c,

Binomial formula : (a + b)2 = a2 + 2ab + b2.

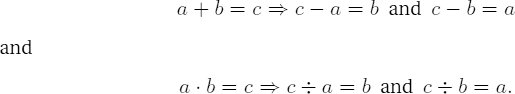

The cancellation laws for addition and multiplication provide the following test possibilities for addition and subtraction, as well as for multiplication and division:

Division with remainder can be tested against multiplication and addition by using the division function to compute, for a dividend a and divisor b, first the quotient q and remainder r. Then multiplication and addition are brought into play to test whether

| a = b • q + r. |

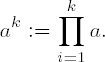

For testing modular exponentiation against multiplication for small k we fall back on the definition:

From here we can move on to the exponentiation laws (cf. Chapter 1)

| ars = (ar)s, |

| ar+s = ar • as, |

which are likewise a basis for testing exponentiation in relation to multiplication and addition.

In addition to these and other tests based on the rules of arithmetic calculation we make use of special test routines that check the remaining points of our above list, in particular the behavior of the functions on the boundaries of the intervals of definition of CLINT objects or in other special situations, which for certain functions are particularly critical. Some of these tests are contained in the FLINT/C test suite, which is included in the downloadable source code. The test suite contains the modules listed in Table 13-2.

Table 13-2. FLINT/C test functions

Module Name | Content of Test |

|---|---|

| linear congruences, pseudorandom number generator |

| Blum-Blum-Shub pseudorandom number generator |

| register management |

| basic functions |

| addition, including |

| subtraction, including |

| multiplication |

| Karatsuba multiplication |

| squaring |

| division with remainder |

| modular addition |

| modular subtraction |

| modular multiplication |

| modular squaring |

| modular exponentiation |

| bit access functions |

| shift operations |

| Boolean operations |

| integer square root |

| greatest common divisor and least common multiple |

We shall return to the tests of our number-theoretic functions at the end of part 2, where they are presented as exercises for the especially interested reader (see Chapter 18).

[41] The titles named here represent the author's personal, subjective selection. There are many other books and publications that could as well have been listed here but that have been omitted for lack of space and time.

[42] The compiler is included in the various Linux distributions and can also be obtained from http://www.leo.org.

[43] Splint is the successor to the tool LCLint, which was developed in cooperation with the Massachusetts Institute of Technology and Digital Equipment Corporation (DEC). Splint can be found at the address http://splint.cs.virginia.edu/.