If you have not memorized all the possible moves in Tic-Tac-Toe, you probably play by thinking something like, “If I move here, he could move there, there, or there....” This is the heart of look-ahead. Another way of thinking about it would be to ask the simple question, “If I move here, and then each of us takes our best moves in turn, will I win in the end?” We will revisit the implications of each part of this reasonably simple question throughout the chapter.

The method seems simple on the surface, but even simple games such as Tic-Tac-Toe reveal some hidden complexities. Every part of our seemingly simple question is an iceberg hiding many complexities, including evaluation functions, pruning, heuristics, discrete moves, and knowledge representation. By the end of this chapter, it will be clear that look-ahead lives and dies on how well the implementation manages computational complexity. We mentioned combinatorics in passing in Chapter 5, “Random and Probabilistic Systems.” We will lightly brush up against it here as well, mostly hidden as determining the product of a bunch of numbers multiplied together. Computational complexity will be a running theme throughout the discussion of other complexities.

After examining look-ahead and its complexities, we will summarize the method’s advantages and disadvantages. This will make it easy to discuss the kinds of games for which look-ahead is well suited. This chapter then ends with the Fox and Hounds project, which illustrates in depth many of the challenges to using look-ahead.

Evaluation functions are how we turn “... best moves...” in our reasonably simple question given in the first paragraph into code. The simplest evaluation function for Tic-Tac-Toe would look at any game and return one of four values:

Victory: Three in a row for our side.

Loss: Three in a row for their side.

Tie: All moves have been taken without a victor.

Unknown: The game is still in play.

While this method always gives a correct answer, we should consider its drawbacks. An AI using this evaluation of the board will always get back unknown until the fifth move. It will not always know with certainty in some games without looking ahead to the ninth move. Looking ahead to the ninth move means looking at all possible games.

How many moves have to be evaluated to get to the end of every possible game? Tic-Tac-Toe has nine different beginning moves, eight different second moves, and so on until it has one final move. To get the total number of outcomes, we multiply those numbers together to get 362,880, also know as 9 factorial, which is written as 9!. A 1MHz computer of 25 years ago could do this after a very long pause; modern hardware running a thousand times faster makes it seem nearly effortless. However, any function that has factorial cost must be kept to small numbers. Factorial functions start out slow but grow more rapidly than linear functions, faster than polynomial functions, even faster than exponential functions. Think of a factorial function as a grenade; after a short while, it explodes. When this happens in a game, the player’s computer becomes an expensive space heater, appearing to do nothing but blow warm air for very long periods of time.

A more useful evaluation function would attempt to foretell the outcome of an indeterminate game—one not yet played to completion. Our reasonably simple question asked “... will I win in the end?” It would be nice if we could predict the end without playing to the end. We ignore the fact that we can state from the outset that if both sides play to win, Tic-Tac-Toe always ends in a tie. But if one side makes a mistake, we would like our evaluation function to be able to indicate victory while using the smallest amount of looking ahead. We want our evaluation function to generate good advice from indeterminate games. Tic-Tac-Toe does not provide meaningful insight into this kind of evaluation function.

Fox and Hounds, the game we will use for our project, does provide insights into more complex evaluation functions. We will return in depth to evaluation functions when we take up the project.

Our reasonably simple question started out, “If I move here.” Think of Tic-Tac-Toe as a tree. From the root of the tree, there are nine branches, one for each possible first move. Each of those branches has eight sub-branches, corresponding to the possible second moves that could follow a first move. This is the top of the search space that our AI will look through in search of victory.

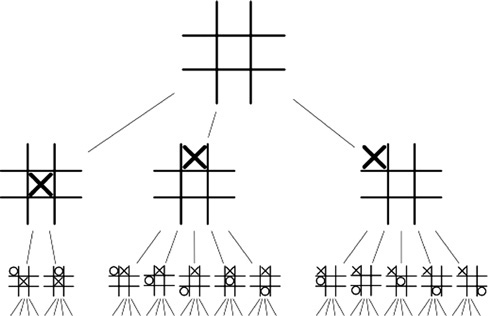

If we count the number of possible game boards in the first three levels, there is one empty game board, nine game boards with one move, and 72 boards with two moves on them. As it turns out, while there are nine squares available for a first move, there are only three different kinds of first moves: the center, any corner, and the middle of an outer row or column. You can make your favorite corner into any other corner simply by viewing the game from another angle—you do not need to move any marks! From those three first moves, there are 12 kinds of second move. This is shown in Figure 6.1, from Wikimedia Commons [Wikimedia04].

From the figure, we see that there are 12 kinds of boards after two moves, while our naïve method of look-ahead calls for 72 boards. We can get rid of ![]() of the expected workload if our code is smart enough to realize that most opening moves are the same as some other opening moves, and only the unique ones need to be evaluated. This is called pruning, and the numbers show how effective it can be at fighting computational complexity. The idea with pruning is that the numbers that we multiply together to measure complexity are all smaller numbers than before. Our computational “grenade” either takes longer to explode or becomes a less problematic firecracker.

of the expected workload if our code is smart enough to realize that most opening moves are the same as some other opening moves, and only the unique ones need to be evaluated. This is called pruning, and the numbers show how effective it can be at fighting computational complexity. The idea with pruning is that the numbers that we multiply together to measure complexity are all smaller numbers than before. Our computational “grenade” either takes longer to explode or becomes a less problematic firecracker.

In the Tic-Tac-Toe example, there was no loss of information after pruning. There are other kinds of pruning that may cause loss of information, however. One of the most common kinds of pruning is to limit the depth of the look-ahead. Tic-Tac-Toe has barely any middle ground where setting a depth limit makes sense. It takes five moves of look-ahead at the opening move to see the first possible victory. After four or more moves have been made, there are five or fewer moves left in the game. In other games, the AI programmer sets the look-ahead “high enough to stay out of trouble, low enough to run quickly.” If that limit is too low, the player will observe something like, “Now he sees it coming, but it’s too late for him to do anything about it.” In terms of gameplay, the AI programmer can be tempted to use a variable depth limit to change the skill level of the AI in what seems to be a realistic manner. Be warned that small changes in look-ahead depth can cause major changes in the effectiveness of the AI. In the case of Fox and Hounds, we will see that five moves of look-ahead are all the fox ever needs; more depth does not help. With four or fewer moves, the fox may wander too far from the vicinity of effective moves to ever see them. Tuning the AI via look-ahead depth is effective only in games where incrementally more look-ahead produces incrementally better AI.

Heuristics give guidance in ambiguous situations. Think of heuristics as general rules, often based on experience. Heuristics are very helpful in game AI, and evaluation functions need all the help that they can get. At some point, the AI will hit the look-ahead depth limit, and the evaluation function will have to pass judgment on an indeterminate game. Heuristics provide guidance to the evaluation function in otherwise ambiguous circumstances. Pruning methods often need help as well. What moves can safely be ignored? What moves are the most promising? Heuristics provide guidance to pruning as well as to evaluation. Note that risky, high-payoff moves illustrate differences between the needs of evaluation and the needs of pruning. Risky moves evaluate poorly because of the risk. If we prune them, we will not exploit the ones with a high payoff that follows. In short, heuristics are very important to game AI. Tic-Tac-Toe is too small for good heuristics, but Fox and Hounds is not. A brief description of Fox and Hounds is in order.

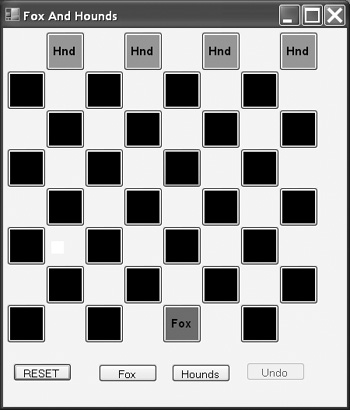

Fox and Hounds is played on the dark squares of a standard checkerboard. The fox moves like a king in checkers. The hounds move like regular checkers; they cannot move backward. There is no jumping and no capturing. Once a hound reaches the far end of the board, it can no longer move. The goal of the hounds is to pin the fox so that it cannot move. The goal of the fox is to get past the hounds. The fox moves first. The game starts with four hounds at one end of the board and the fox at the other, as shown in Figure 6.2.

While perfect play always results in a win for the hounds, the game is a pleasant step up in richness compared to Tic-Tac-Toe.

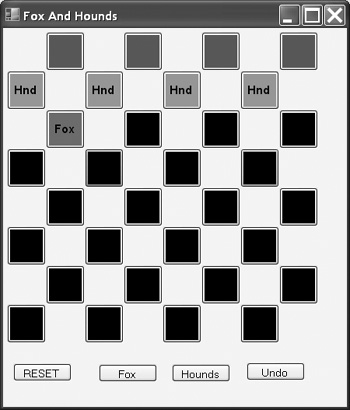

There are many heuristics. The hounds start as an unbroken line. If they can keep the fox behind their unbroken line, they cannot lose. If the fox does not interfere, there is always a safe move for the hounds that keeps the line unbroken. Early on, when the line is straight or nearly straight, the hound that has only one possible move is the hound with the safe move. That hound is found against an edge when all the hounds are on the same row. When the hounds are in the middle of moving from one row to the next, the hound that has one of its two moves blocked by the hound that moved last is the hound with the safe move. In Figure 6.2, the right-most hound has the safe move. In Figure 6.3, the fox is blocking a safe move from the left-most hound.

One heuristic for the fox is that any time it is behind an unbroken line, any move that forces the hounds to break the line is better than any move that does not. This is shown in Figure 6.3. It is clear that the fox must break the line to win, and experience shows that there is nothing to be gained by waiting to break the line later.

When the hounds are forced to break their line, they use the simple heuristic of picking the move that results in the longest path to freedom for the fox. This gives the hounds the time they need to reform their line and close the hole before the fox escapes. It is clear that having more time to correct a worrisome situation is better than having less time.

Once the line is broken, good hounds’ moves are ones that force the fox behind a newly reformed line. They must do this in order to win. As we will see later, the sooner they reform the line the better.

A reasonable heuristic for the fox is to head directly for the nearest hole in the line when the line is broken. We will see later that this heuristic is imperfect. It is clear that the fox must eventually head for freedom in order to win, but in certain circumstances the fox should wait and not head directly for the nearest gap.

Collectively, we can call these the line heuristics. A related heuristic is that when the hounds have more than one move that keeps their line unbroken, the move that hems the fox in the most is the best. A less obvious heuristic is that if the fox ever makes it to any square that no hound can make it to, the fox has gotten past the hounds and wins. A final pair of heuristics is that we can safely limit the look-ahead depth to five fox moves or six hounds’ moves. The project will use all of these heuristics. We will examine the impact they have on complexity.

Heuristics greatly help the evaluation function. Figure 6.3 shows the fox forcing the hounds to break their line. That move is not sufficient to win, but it is better than any other possible move the fox can make that does not break the line. The heuristics can also help prune the search space. The hounds have at most eight moves to pick from. If any of those moves keeps the fox behind an intact wall, then there is no need for the hounds to do any look-ahead. They still might need to decide between two such moves, but no look-ahead is called for.

In the very first paragraph of this chapter we posed the simple question, “If I move here, and then each of us takes our best moves in turn, will I win in the end?” Now we look at the complexity of “... takes our best moves in turn.” The hounds cannot take more than 28 total moves because each of the four hounds can only move seven times each before it hits the back wall. That yields 28 moves by the hounds. Since the fox moves first, such a game would require a matching 28 more moves from the fox. A fox in the middle of the board with nothing around it has four possible moves. It can have fewer when near hounds or an edge, but it can never have more. Each of the four hounds, when nothing is in front of it and it is not next to an edge of the board, has two possible moves. Setting up the math, we get four possible fox moves times eight possible hounds moves for 32 combinations. We do this 28 times, yielding 3228. This is the same as 2140. Very roughly speaking, this is 1042 combinations to evaluate. If somehow our 1GHz computer could do a billion of these per second, it would take 1033 seconds, which compares poorly to the age of the Earth, which is around 1017 seconds, and to the estimated time until the heat decay death of the universe at 1019 seconds. The polite word for this kind of problem is “intractable,” although it might not be the first thing AI programmers say when they run the numbers. It should be very clear that heuristics are required to keep the computational complexity of the game manageable. A brute-force look-ahead approach will simply take too long.

It is worth noting that Fox and Hounds has only 32 possible combinations for a single move and the following countermove. A move and countermove pair is called a “ply.” Chess starts out with 32 pieces, and most of them have two or more possible moves to consider each turn. If the pieces were limited to just two possible moves each (a number that is far too small), a single move for one side would involve one of 16 pieces, times two possible moves, for 32 combinations. Not all of the 16 pieces can always move, but half of the pieces have far more than the two moves we limited them to, with eight being a more typical number. If 32 combinations is a reasonable estimate, then there are 32 possibilities for the white times the 32 possibilities for black as a countermove for a product of 1,024 combinations in each ply. This number might seem too large, but Chess starts out with exactly 20 possible opening moves followed by 20 opening response for 400 combinations in the first ply. This is called the branching factor, and in games such as Chess (or worse yet, Go), the branching factor is so high that simple look-ahead is quickly overwhelmed. Heuristics and other measures have helped a great deal in the case of Chess, but they have achieved far more modest success with Go.

There are noteworthy comparisons to be made between the complexity of Fox and Hounds and Tic-Tac-Toe. Tic-Tac-Toe is small enough that its factorial growth never goes on long enough to overwhelm the situation. For small numbers, factorial growth is well behaved. Fox and Hounds is more complex, but unlike Tic-Tac-Toe, the complexity of the individual turns is fixed. If you increased the number of turns for both games, by playing on a larger board, for example, eventually Tic-Tac-Toe would show higher complexity as ever higher numbers are multiplied together. Both show unacceptable growth rates; in Tic-Tac-Toe, it is managed by keeping the game small, where in Fox and Hounds we will manage it with heuristics.

Our project will prove just how much heuristics help. For example, when the hounds have the fox behind an unbroken line and they have moves that keep the line unbroken, the hounds have no need for look-ahead. In the aforementioned calculations, each time this happens, the eight hounds’ moves to explore in depth become just one. In terms of a tree, only one of the eight branches will need to be followed. Our heuristic allows us to prune away ⅞ of the work at each level in the tree that the heuristic can be applied. After the first move, when the fox is looking to break the wall but is too far away to make contact with the hounds, the complexity computation of three moves of naïve look-ahead without this heuristic gives a sequence resembling the following:

...(4* 8) * (4 * 8) * (4 * 8)...

The naïve method requires 32,768 combinations to be searched. With the heuristic, the hounds know right away that their best move is the one that keeps the wall intact. The three-move sequence becomes this:

...(4* 1) * (4* 1) * (4* 1)...

With the heuristic applied, there are 64 combinations to be searched.

Careful examination of the board shows that in this part of the game, the hounds have only seven moves available instead of eight. One hound in the line is either against an edge or has another hound in front of it blocking one of its two moves. Using seven instead of eight yields 21,952 combinations to be searched. This is lower than 32,768, but pales when compared to the 64 combinations that the heuristic yields. Good heuristics like this one can be applied at multiple levels in the tree. There are other heuristics that can be applied to the game.

We can do something similar when the line is broken. The heuristic is that the fox takes the shortest path to freedom. This reduces the number of moves evaluated by the fox from four to just one. This allows us to eliminate ¾ of the work at every level of the tree while the hounds are looking ahead to reform their line. We started with our naïve look-ahead sequence:

...(4* 8) * (4 * 8) * (4 * 8)...

With the heuristic for the fox it becomes:

...(1 * 8) * (1 * 8) * (1 * 8) ...

Instead of needing 32,768 combinations to search three turns, we now only need to search 512.

We will allow the fox to look ahead at most five turns and the hounds to look ahead at most six. These numbers come from experience tuning the AI; the fox can always find a way to break the line in five fox moves or fewer if one exists. Six hounds’ moves are enough for them to reform their line if it is possible. These make the complexity computations completely different. When the fox is looking ahead, it is trying to break the line. This implies that the line is there, so the hounds know their best move. So we get four fox moves times one hound’s move per turn, for five turns.

(4 * 1) * (4 * 1) * (4 * 1) * (4 * 1) * (4 * 1) = 1024

This is 1,024 moves, and it can be evaluated in a few seconds even with the debugging output scrolling by. The hounds’ computation is similar: eight moves for six turns.

(1 * 8) * (1 * 8) * (1 * 8) * (1 * 8) * (1 * 8) * (1 * 8) = 262,144

A quarter million moves might seem troubling, but without the heuristic, the number would be 4,096 times larger—at just over a billion. Heuristics make otherwise impossible look-ahead tasks possible.

The danger with heuristics is when they fail. “Usually right” is not the same as “always right.” One of the heuristics that we will use in Fox and Hounds was not always correct for all the given evaluation functions tested. That heuristic is that the best move for the fox when the line is broken is to take the shortest path toward freedom. We use that heuristic to prevent the fox from looking ahead when the hounds are looking ahead; this improves performance considerably. The hounds look ahead when the line is broken, as they look to pin the fox behind a reformed line. The hounds, in their look-ahead, predict the fox’s move using the heuristic. The hounds are predicting their opponent’s move, but the prediction might not come true. As long as any move the fox might take puts the fox in a worse position, the hounds are fine. As we shall see, this was not always the case; the hounds pruned potential fox moves that the hounds needed to know about.

As it turned out, fixing the problem had an impact on the style of play shown by the hounds. The hounds like it when the fox has fewer moves available to it. So when reforming their line, their look-ahead could see a sequence of moves that reformed the line quickly and a different sequence of moves that reformed the line a few moves later. The first option left the fox more room than the second option. Early versions of the evaluation function preferred the second option because it hemmed in the fox better, which is closer to the winning condition of hemming in the fox completely. As long as the fox took the shortest path, the hounds would “toy” with the fox and slam the door at the last possible moment. It worked fine when the fox behaved as predicted, but could be made to fail if the fox took a different path than expected. The fix meant that the hounds prefer moves that reform their line as soon as possible, with ties broken by how hemmed in the move leaves the fox. The fix makes for more effective but less flashy play by the hounds.

There are two heuristics involved here. One says, “Reforming the line is good”; the other says, “Hemming in the fox is good.” A few chapters earlier, we solved the issue of ambiguous transitions in FSM by prioritizing them. Here, we have a similar issue with competing heuristics that also require proper prioritization. Both heuristics apply; neither is broken, but we have to be intentional about which takes precedence.

Both Tic-Tac-Toe and Fox and Hounds benefit from having discrete moves. While this is true of many computer games, it is not true for an even larger number. American football is played in discrete turns, but soccer and hockey feature nearly continuous play. When we have to deal with continuous games, we transform the complex possibilities into as few discrete buckets as we can get away with because we know that the number of buckets is going to get multiplied many times. Terrain is often treated this way. Continuous terrain gets implemented as a set of discrete tiles. All the different possible movements to different points on a given tile can be reduced to a single move, “Move to this tile.” The tiles provide our buckets. Sometimes the tiles are even grouped into regions to keep the number of buckets manageable. A small number of buckets is in conflict with the richness of the possibilities; too few, and the AI appears dumb. But too many, and it will run too slowly. Alas for the beginning AI programmer, experience is the best guide. Other AI techniques can be employed, including faking it, to give the look-ahead system a fighting chance.

When the AI looks ahead, it has to think using views of the world that do not exist outside of the AI’s thought process. The AI programmer has to make sure that those views are rich enough to allow the AI to answer any questions it is allowed to ask of the current state of the world plus any questions it wishes to pose to its predictions of the future. The AI programmer needs to carefully consider how the AI will deal with knowledge. Besides being rich enough to be useful, the view has to be small enough that it can be passed around, copied, modified, and restored as needed. If the AI is not going to cheat, the view also needs to be properly restricted to keep the AI from having access to information that should be unavailable to it.

One method for dealing with these views of the world is to have just one copy. The AI can edit the world view as needed while it is deciding what to do, but it needs to restore the view to its original state when it is done. If the view takes up a large amount of storage, and no piece of the AI code changes very much of it, this method makes sense. This method dies if the restoration code has any imperfections at all. One part of the AI will consider doing something, and later AI code will treat the earlier consideration as having happened. The computational cost of setting the view back also needs to be considered against the very low cost of discarding a copy.

Another method is to give each part of the AI its own private copy of the view to play with as it sees fit. The act of copying by itself has only modest cost. It is no surprise that computers can copy from memory to memory with good speed. Doing so begs the question, “How many copies exist at one time?” Look-ahead methods are often recursive. How deep is the AI allowed to look? Heuristics that control depth not only save us from computation, they also can save us from excessive memory usage.

Look-ahead provides a minimum level of competence for the AI even when other methods are used for longer-term planning. With a few moves of look-ahead, the AI will not willingly step into instant disaster. It may not be all that smart, but it’s not that dumb either. Controlling the search depth also provides the AI programmer with a good tool for controlling difficulty.

Look-ahead methods are conceptually easy for the AI programmer to understand. Letting the AI search relieves the AI programmer of the burden of crafting an AI that makes good moves for the future by looking only at the current situation. Look-ahead provides a goal-oriented approach; the programmer programs the ability to recognize a goal state or to recognize states that will lead to the goal state, and then the AI searches ahead for them. Dealing with the goals may be easier for the programmer than dealing with alternative methods for getting to them.

Look-ahead dies when the number of combinations to evaluate grows too high. Complexity can sometime be controlled by pruning, but imperfect pruning methods risk pruning moves that looked poor when pruned but would have led to superior results if followed. Even exact pruning can remove richness of play. Look-ahead gives strange and bizarre results if the player does not play in the manner that the AI is using to model player behavior. The AI “over thinks” the situation and comes up with what would be elegant moves if it were playing against someone else (our implementation can be made to show this trait).

Game AI look-ahead can be applied easily to games that have discrete moves and no hidden information. Look-ahead works particularly well for end-game situations for games that would not otherwise use it. A limited look-ahead AI can advise other AI methods, particularly when the other methods are occasionally prone to blunders. Look-ahead by itself is difficult to apply to games with a high branching factor, such as Go or Chess, without assistance from other methods.

The complete code for this project is also on the CD. In addition, there are multiple versions of some of the files reflecting the evolution of the AI code. If you use the code on the CD instead of typing it in, be sure to read the commentary that goes with the code so that you will understand the context in which the AI will operate. Like many of the games we have implemented so far, we will start with a fully playable game and then add AI to it. As we go, we will add code to make the game easier for the AI to play and easier for the programmer to understand. We will start with some design discussions before we get to the game board that contains the squares.

A checkerboard is more than 64 graphical squares. People have no trouble seeing that the squares of the same color are connected diagonally, but we will need to program that knowledge for the benefit of the AI and the user interface code. Our game only uses squares of one color, so if we do it right, we ignore squares of the other color. As we look at connectivity, we see that the neighbors of any square are also the set of moves that a fox can take from that square if there are no hounds in the way. Continuing that thought leads us to the idea that the half of the neighbors that are “down” as we see the board are potential moves for hounds. The AI will be using this knowledge all of the time, so it is best if we precompute it. While we are at it, moves up are handy to know as well (more on that later). All of this makes us ask, “How do we tell one square apart from another?”

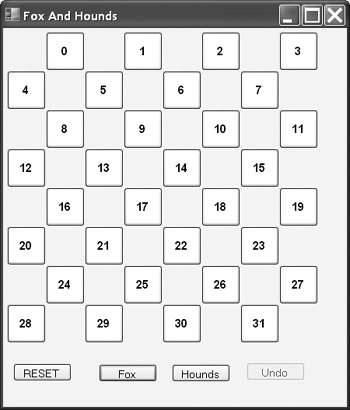

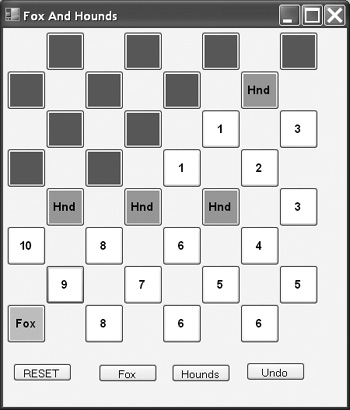

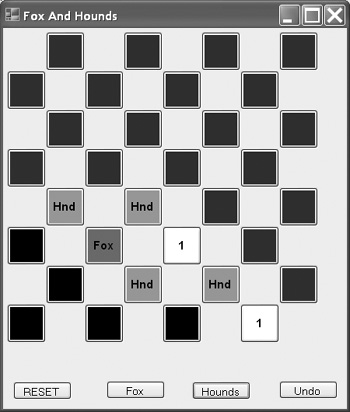

As shown in Figure 6.4, we will tell squares apart by numbering them (ignore the buttons at the bottom for now). For us, the upper-left square is square 0. Our board has four squares in each row, since we are ignoring the off-color squares. The lower-right square will then be square 31. If you have a cheap folding checkerboard, it may have similar numbers that differ by one. Numbering from 0 to 31 makes our code easier, even if we humans have a pesky habit of starting out at 1.

The kind of design we are doing now is the first concrete step toward dealing with knowledge representation. We know that the AI will need to make numerous copies of the boards it considers, so we need to be aware of the performance impact of those copies. There are three standard factors to consider: memory space, computational speed, and programmer time.

The space consideration is worth at least a quick examination. Earlier, we saw complexity numbers such as 1,024 combinations for the fox and 262,144 for the hounds. While we might examine that many boards, we will never store them all at the same time. The number of boards we have in memory at the same time is related to how deep we are looking ahead. The most we look ahead is six moves when the hounds are looking ahead. Since there is a fox move between each hound’s move, we get six more boards for a total of 12. In our case, 12 is small enough that we can ignore it, but the back-of-the-envelope check is always a good idea.

The speed consideration can be dealt with by a time-honored method: ignoring it unless it proves to be a problem. Unlike the space consideration, where the numbers are trivial, our AI will take the time needed to create over a quartermillion boards. The time required to make determinations about those boards is the concern, not the time spent copying them; modern hardware copies memory extremely rapidly. Ignoring potential speed issues is easy, and the use of the profiling tools needed to find problem areas is beyond the scope of this book.

Programmer time must be mentioned as a third vital resource. Time and care spent along the way that prevent the program from wasting resources are an investment. Time spent trying on early optimizations is wasted. Wasted time has direct costs in terms of money and opportunity costs in terms of features that cannot be added and deadlines that cannot be met. “Premature optimization is the root of all evil.” [Knuth74]

The minimum amount of state data we need is five integers. These correspond to four hound subscripts plus one more for the fox. The locations of the checkers are the only way we can tell one game apart from another. We will actually keep far more state data. This data will make the game more pleasant for the player and will make things easier on the AI. We will display some of this data graphically. While the player merely enjoys knowing what the AI is thinking, the AI programmer has a burning need to know exactly what the AI is thinking. Our game will show some of the game state data. What other data would be useful in the game state?

One very important bit of data would be the output of the evaluation function, which we will call the rank, for this board. We will compute it once and store it, trading space to gain speed. It would also be useful to know what the turn number is for the board. As the AI generates multiple boards to represent possible future states of the game, if it sees two different ways to win, it can take the one that comes sooner.

The AI and the display system also benefit from storing some per-square data. We will store what the square holds, which is the fox, a hound, or nothing. We will store what color we are going to paint the square. This color helps more than the display; it will help the AI by providing a fast way to exploit heuristics. We use three colors for our squares: white, black, and green. We color the squares that the hounds cannot get to with green. As the hounds move, they leave behind them a growing area of green, since the hounds do not move backward. If the fox makes it to a green square, the fox wins. We internally mark the squares that the hounds occupy as black, which we show graphically using gray so that we can read the black “Hnd” labels on the buttons. The rest of the squares we color black or white, depending on how the square relates to the hounds and to the green squares. Any square that is not already green or black and has a path that avoids the hounds and leads to a green we color white. Any square that has no unblocked path to a green square is colored black. Figure 6.3 is not in color, but there are green squares behind the hounds and black squares in front of them. If the fox is hemmed in, the squares it can get to are black, which we indicate by using a dark red for the fox as suggested in Figure 6.3. If the fox has a path to freedom, those squares are white, and we use a light red for the fox, as seen in Figure 6.5. Finally, we will store a number that holds the number of steps each white square is away from a green square, also seen in Figure 6.5 As you might expect, coloring and numbering are also used by the AI to implement heuristics. The coloring and the numbering will let us see at a glance if the AI is working as we expect.

Our evaluation function will be part of the class we use to store the state data instead of being part of the AI. Our evaluation function describes the fox’s situation. We use the concept of “big numbers for big decisions” [Dill08] in our evaluation function. Any game board in Fox and Hounds can be categorized as either good for the hounds or good for the fox. If the fox is hemmed in, it is good for the hounds; if the fox has a path to freedom, it is good for the fox. There are variations within each category, but there is no confusing middle ground. By making the most important categories numerically far apart, we have sufficient latitude for the variations. Our numbers will reflect this separation of the two categories. A value of 0 means that the fox has won. A value of 127 means that the fox is trapped and has lost. We need in-between numbers as well. Getting those numbers is an evolutionary process.

The first step in the evolution of the evaluation function is quite simple. If the fox can move but not reach freedom, the evaluation function gives a value of 64, which is coded using a constant named UNREACHABLE. If the fox has a path to freedom, the evaluation function returns the length of that path. With only 32 squares on the board, the path can never be long enough to conflict with the value 64 used to mark UNREACHABLE. The highest rank that still allows the fox to reach freedom appears to be 10, as shown in Figure 6.5, where the fox is 10 steps from reaching a square that no hound can get to. The dark solid squares shown with no numbers are green squares, which are winning squares for the fox if it can get to them.

The simple evaluation function is not good enough for the hounds. Some moves that restore or keep the line intact are better than others. These come late in the game, when the hounds are closing in on the fox. One wrong move will let the fox out.

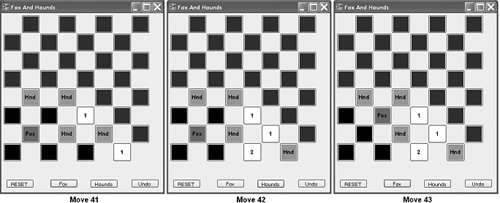

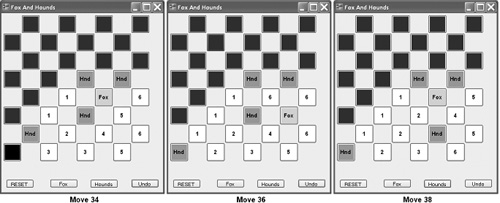

Figure 6.6 shows a sequence of moves illustrating this problem. The sequence starts after the fox takes the 41st move, backing away from the encroaching hounds as it is forced into the lower-left corner. Disaster for the hounds comes on move 42, when they have to pick from two possible moves that keep the fox behind their line. Since the hounds have the fox behind an intact line, they do not use look-ahead to pick between the two moves that keep the fox behind the line. The better move closely presses the fox. But since the simple evaluation function treats all UNREACHABLE moves as equal, the hounds pick the move shown on the middle board. Moving the end hound, which is not part of the line, does not improve their position at all. The fox steps forward on move 43, leaving the hounds no choice but to let it out on their next move.

The evaluation function needs a way to judge which UNREACHABLE move is better. One way to describe the better move for the hounds is that the better move keeps pressing the fox. In Figure 6.6, the hounds threw away a chance to close in on the fox in favor of wasting time with a “free” move. We need a way to turn words like “close in” and “pressing” into something our AI can compute. One of the finer arts of AI programming is the ability to turn these concepts into code.

The next step in the evolution is to come up with a number to indicate “better” UNREACHABLE moves, with “better” meaning “fewer black squares.” Recall that the black squares have no path to freedom and that the hounds try to keep the fox restricted to black squares. The idea is that the smaller the number of squares left to the fox, the closer the fox is to being pinned by the hounds. There is a subtle difference between “fewer black squares” and “fewer squares available to the fox,” “however, as we shall soon see.

It is easy to simply count the number of black squares. This gives an evaluation function that generates board rank as follows: If the fox can move but not reach freedom, the value for rank is 127 (the value when the fox is pinned) reduced by the number of black squares. Note that the number of black squares in this situation is at least six; the four squares the hounds sit upon are always black, the fox is on a black square, and since the fox is not pinned, there is one or more black square, to which it can move. The opening board shown in Figure 6.2 has 32 black squares, for a board rank of 95. This is as low as the rank can go and still have the fox behind an unbroken wall. The value of 95 is well above the value of 64 used to mark UNREACHABLE, so all such boards would have a rank that is greater than or equal to UNREACHABLE, making the code changes easy to implement.

This new evaluation makes judgments between different UNREACHABLE boards. Any ties are broken using the idea that good things should happen sooner and bad things should happen later. A rank of 102 is always better than a rank of 104. A rank of 102 on turn 36 is better than a rank of 102 on turn 38.

This step in the evolution fixes the problem illustrated in Figure 6.6. This evaluation function proved to be the most interesting, even though it has flaws. The interesting part is that the hounds appeared to “toy” with the fox after the fox had broken the line. The hounds would see one move that reformed their line early and another move that would reform their line later. The early move would have more black squares, thus a lower board rank, so the hounds would pursue the later move. “Instead of slamming the door in your face now, I’ll lead you on and just do it to you later.”

Note

The AI.V5.vb file on the CD has the code for this method. The routines of interest are the better move routines in the “Internal Stuff” region. Use it with the naïve method for counting black squares (mentioned later in this chapter) to get the behaviors shown in Figures 6.7 through 6.9.

The hounds can do this if the fox moves the way the hounds expect the fox to move. In games where the fox AI is pitted against the hounds AI, this is always true. Recall that when the hounds are looking ahead, it is to fix their broken line. This is the time that the fox is not looking ahead, but instead taking the shortest path to freedom. The fox is not required to play the way the hounds want it to. A few moves of human intervention helps the fox by ignoring a path to freedom that the hounds will close before the fox escapes. Instead, the fox blocks the hounds from making critical moves on which their plan depends.

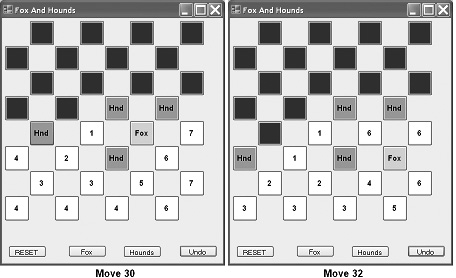

This is illustrated in the next few figures. The boards shown are after the hounds make a move, hence the even move numbers. The action starts in Figure 6.7 with move 30. The fox has just forced the hounds to break their line. Move 30 might seem strange, but recall that when the hounds first break their line, they do not look ahead. Instead, they pick the move that puts the fox farthest from the hole. The alternative of moving the left-most hound would have created a shorter path to freedom for the fox.

While move 30 might appear to be strange, move 32 at first glance appears completely insane. Before they made move 32, the hounds looked ahead, expecting the fox to always take the shortest path to freedom. They saw that they could put their line back together at move 34, yielding a board with an evaluation score of 116. They also saw that they could put their line together on move 36 with a score of 117. What they liked best was putting their line together on move 42 with a score of 119. With this evaluation function, the hounds appear to have mastered the concept of delayed gratification.

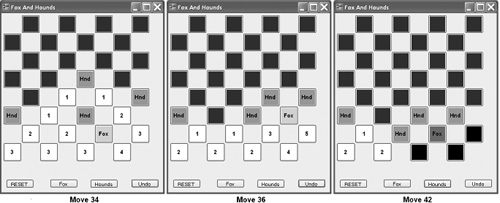

If the fox moves as the hounds predict on move 33, heading downward for freedom, then the hounds will toy with it. They will open a second hole to tempt the fox, as shown with move 34 in Figure 6.8. If the fox takes this new bait, the hounds close the new hole on move 36, setting up the inevitable enclosure shown a few moves later in move 42. If the fox ignores the new hole opened on move 34 and heads along its original path downward toward freedom, the hounds will be able to block both holes before it escapes (not shown).

The critical move for the fox was move 33, visible in board 34. Is there a better alternative for the fox after the hounds make move 32, as shown in Figure 6.7?

There is indeed. The fox can pin three hounds if it stays close to them, as shown in move 34 (see Figure 6.9). The hounds will not open a hole with the fox right there to exploit it. The fox AI will not move this way, but the game allows the user to make moves. Instead of moving down toward freedom, the player moves the fox back into the pocket to hold the three hounds fixed. The left-most hound is too far to the left to help, proving that move 32 was an error. The hounds can stall, as shown in move 36, but as long as the fox keeps the pressure on the pocket, that only leads to move 38. With no free moves left, the hounds have to break open the pocket holding the fox. Our fox does not see this because our fox does not look ahead when it has a path to freedom.

The hounds’ delayed gratification strategy is flashy but flawed. When writing game AI, avoiding AS—that is, artificial stupidity—is a higher priority than increased brilliance of play. There is also a lesson here about making plans that survive contact with the enemy. The hounds’ AI needs to evolve.

The hounds look ahead to reform their line. Rather than change the evaluation function, the hounds’ method for comparing different boards needs to change. The best move for the hounds, when the line is broken, is the earliest move that reforms the line. This equates to “slamming the door now to make the win inevitable.” The hounds stop “over thinking” the situation and quit toying with the fox. If the line is intact, they take the board with the highest rank, which means the board with the fewest black squares. Things look promising for the hounds, but they still could lose. The subtle difference between the simple-to-compute “fewer black squares” and the more complex “fewer moves for the fox” bites them, so we will fix it.

This last evolutionary step is to change how the black squares were counted. If the fox cannot get to an open black square, that square should not count in the computation. Otherwise, the hounds could be distracted into making a move that reduces the number of black squares but does not reduce the moves available for the fox. Late in the game, most moves for the hounds reduce the total number of black squares by one. All such moves are equally good, and if the line is intact, the hounds do not look ahead. Look back at the sequence of boards shown in Figure 6.6. After the fox has taken move 41, there are two moves for the hounds that do not break their line. The first is to move the hound near the center of the board down and to the left, directly toward the fox. The second is the one shown as move 42 in Figure 6.6: The right-most hound moves down and to the right, placing it on the last row. Using the naïve method of counting black squares, each of these hounds’ moves reduces the number of black squares by one, giving them identical evaluation scores. The hounds do not have any moves that reduce the number of black squares more than one; in fact all of their other moves break the line. The two moves we are considering do not give identical results! The first move we considered will bring victory to the hounds on their next move when the fox retreats to one of three squares, each of which allows the hounds to trap it. The second move, shown as move 42 in Figure 6.6, leads the fox to make move 43 as shown, which dooms the hounds.

A more sophisticated way of counting black squares prevents this from happening. We count a black square in the board evaluation score only if it has a hound on it or if the fox can get to it. In Figure 6.6, there is a black square after move 41 in the bottom row that becomes a white square with the number 2 after move 42. In the naïve counting method, this square counted as a black square for the evaluation score. It is the square that lead the hounds to make the ill-fated move shown. Under the better method for counting black squares, this black square does not count in the evaluation score because the fox cannot get to it. The coloring algorithm colors it black after move 41, but it no longer counts in the score. The two moves that do not break the line no longer have identical scores; the hounds will now prefer the winning move of pushing the center hound directly at the fox.

We will implement both ways of counting the number of black squares. The code for this will be described in the next section when we deal with game state. The ColorMe routine will call either NaiveBlackCount or BetterBlackCount to get the number of black squares. You will be able to switch between the two by commenting out the one you do not want to be used.

As we have seen, the AI lives and dies on the quality of the evaluation function. The good news is that simple methods go a long way, and they suggest the areas to improve when they fail. The bad news is that careful testing is required to make sure that the evaluations always behave. The code in the project will have signs of this evolution; presenting the final code in a fully optimized form would reduce the impact of the lesson. We will start with the game board user interface and proceed through to the AI.

We need to start with a new project. That will give us the form that we will use for the board. Use Figure 6.2 or Figure 6.3 as a guide.

Launch Visual Basic.

Create a new Windows Forms Application and name it Fox And Hounds.

Double-click My Project in the Solution Explorer, click the Compile tab, and set Option Strict to On. This forces us to make all potentially risky type conversions explicit. It is not mandatory, but it is a good habit for programmers to be intentional about conversions between numbers with differing precision or objects of differing types.

Rename Form1.vb to Board.vb.

Change the Text property to Fox And Hounds.

Change the size to 450 by 530.

Change the BackColor property to Old Lace, which is located in the Web tab of colors.

Click the File menu and choose Save All. (Do this regularly as you proceed.)

Drag a button from the Toolbox to the lower-left corner of the form. Change its Name property to ResetButton and its Text property to RESET.

Drag a button from the Toolbox and place it to the right of the ResetButton. Change the Name property of this new button to FoxButton and its Text property to Fox.

Drag a button from the Toolbox and place it to the right of FoxButton. Change the Name property of this new button to HoundsButton and its Text property to Hounds.

Drag a final button from the Toolbox and place it to the right of HoundsButton. Change the Name property to UndoButton and its text property to Undo.

This completes the entirety of the graphical elements of the user interface. For squares, we will create a class that inherits from the built-in Button class, but we will not do anything graphical with them. We do need to implement our checkerboard.

Right-click Fox And Hounds in the Solution Explorer window (or use the Project menu) and choose Add—Module. Name it Moves.vb. Add the Public keyword to the module definition so that the rest of the program can access what we put here:

Public Module Moves

We will keep the three kinds of possible moves for each square in this module. The number of moves from any given square will differ from square to square, but we know in advance exactly how many squares we have to deal with. Arrays are intuitive when we know how many things there are in advance, and collections are easy to use with a variable number of things, so we will use both. Add the following code to the module:

'Three kinds of moves

Public MovesUp(31) As Collection

Public MovesDown(31) As Collection

Public Neighbors(31) As Collection

Reading this carefully, we see that MovesUp is an array with 32 elements, each of which is a collection. In VB, arrays start with subscript 0 and go as high as the number given. A range from 0 to 31 has 32 elements. So square 0 will have a collection of moves going up, as will every square on the board. Examine Figure 6.4, and you will notice square 0 has no upward moves. We handle this by making sure that the collection has no moves in it. There will still be a collection to look at; it will simply have no moves. Squares that are near any edge of the board will have different numbers of moves than squares in the middle of the board.

When we add moves to the collections, we will add the subscript for every square that connects to a given square. We will mark each added subscript with a key corresponding to its value converted to a string so that we can interrogate the collection using the key to see if it contains the number of a square we are interested in. (VB collections can hold any type of data, but their keys are always strings.) The formula for computing neighbors changes between even- and odd-numbered rows. We will take that into account when we compute the neighbors. Using Figure 6.4, we can see that the square above and to the left of square 22 is square 17, which is a difference of 5. But from square 17, the same move takes us to square 13, a difference of 4. In both cases, the move up and to the right is one more than the move up and left.

An important point to notice is that we can influence the effectiveness of the AI by how we arrange the moves in the collections. We will do two things to help. We will add upward moves first to the neighbors so that the fox tries to go up the board before trying to go down the board. We will also change how we order the upward or downward moves so that even and odd rows do left or right moves in different order. This encourages the fox to zigzag upward instead of going up and left.

The code to initialize all three arrays may appear daunting, but it beats the alternative of 200 lines of mind-numbingly regular initializations that differ from each other only slightly.

Public Sub InitMoves()

Dim row As Integer

Dim col As Integer

'The array subscript is computed off row and col.

Dim ss As Integer

'The final subscript is what we store in the collections.

Dim finalss As Integer

Dim offset As Integer

'Do moves up first so the fox prefers them.

For row = 0 To 7

'Offset will = 0 or 1.

offset = row Mod 2

For col = 0 To 3

ss = row * 4 + col

'Everybody gets a collection even if it might stay empty.

MovesUp(ss) = New Collection

MovesDown(ss) = New Collection

Neighbors(ss) = New Collection

'Treat even and odd rows differently.

'The changing order in which up-left and up-right

'moves are added is by design to make the fox zigzag

'upward instead of going up diagonally.

If offset = 0 Then

'Do moves up first (helps fox AI).

'Don't fall off the top of the board.

If row > 0 Then

'The last col in even rows lacks the

'neighbors to the right.

If col <> 3 Then

'up and right.

finalss = ss - 3

MovesUp(ss).Add(finalss, finalss.ToString)

Neighbors(ss).Add(finalss, finalss.ToString)

End If

'up and left.

finalss = ss - 4

MovesUp(ss).Add(finalss, finalss.ToString)

Neighbors(ss).Add(finalss, finalss.ToString)

End If

'Now do moves down.

'Even rows always have an odd row below

'down and left.

finalss = ss + 4

MovesDown(ss).Add(finalss, finalss.ToString)

Neighbors(ss).Add(finalss, finalss.ToString)

'The last col in even rows lacks the

'two neighbors to the right.

If col <> 3 Then

'down and right.

finalss = ss + 5

MovesDown(ss).Add(finalss, finalss.ToString)

Neighbors(ss).Add(finalss, finalss.ToString)

End If

Else

'This is an odd numbered row.

'Same concepts, different numbers.

'Always an even row above, do the moves up.

'The first col lacks the

'neighbors to the left.

If col<> 0 Then

'up and left.

finalss = ss - 5

MovesUp(ss).Add(finalss, finalss.ToString)

Neighbors(ss).Add(finalss, finalss.ToString)

End If

'The move up and right we always get

finalss = ss - 4

MovesUp(ss).Add(finalss, finalss.ToString)

Neighbors(ss).Add(finalss, finalss.ToString)

'Moves down.

If row < 7 Then

'down and right.

finalss = ss + 4

MovesDown(ss).Add(finalss, finalss.ToString)

Neighbors(ss).Add(finalss, finalss.ToString)

If col <> 0 Then

'down and left.

finalss = ss + 3

MovesDown(ss).Add(finalss, finalss.ToString)

Neighbors(ss).Add(finalss, finalss.ToString)

End If

End If

End If

Next col

Next row

End Sub

Moves and neighbors are only part of the checkerboard. We still have the graphical parts, and we still need to decide what we will pass around to the AI. We will do the minimum of both needed to get us to the point where we can begin testing as we go.

Our graphical squares will be square buttons that have been adapted to our needs. We need our squares to know their subscript. We do this because we will split the graphical elements apart from the state data that drives them. The state data is going to be copied and passed around and analyzed. We only need one graphical board. People play Chess with one board in front of them and many boards in their head; our code follows this pattern as well. Add a class to the project and name it FaHButton.vb. Add the following code to the class:

Inherits Button

'Just a button, but we would love to know our subscript in the array

'so that we can tell others when we take events.

Private MySubscript As Integer

'When we drag/drop, we will have a hound number or -1 for fox.

Protected HoundNumber As Integer

That last bit is for later, when we will implement player moves using drag and drop. The rest of the code makes our class act just like a regular button except in the ways that we extend it. One way we do that is that our class will keep around a subscript value. We want to make that value available to outside code, but we never want it to change. Add the following code to do that:

Public ReadOnly Property Subscript() As Integer

Get

Return MySubscript

End Get

End Property

This is the simplest possible property method; it returns our private value and provides no way for outside code to change that value. We need a way to set it in the first place, and we will do that when the class is created. Add the following code to the class:

'We need a subscript value when we are created.

Public Sub New(ByVal ss As Integer)

MySubscript = ss

'We use drag and drop for player moves.

AllowDrop = True

'Bold looks nicer.

Me.Font = New System.Drawing.Font("Arial", 9, FontStyle.Bold)

End Sub

Again, we are laying groundwork for implementing player moves in the future using drag and drop. Since our class is in all respects a button, we let the code that creates instances of our class manipulate them as though it were a button.

We will implement the state data using a class. The code for that class will color the squares and compute the rank of the board. We will also have it mark the buttons on the board when we ask it to. We would normally pull that kind of functionality out of the class, but we will take the expedient route here. Before we can implement the class, we need some helper code.

Add a module to the project and name it Constants.vb. Add the Public keyword to the module declaration.

Public Module Constants

We will keep more than just constants in this file, but we will start with the constants. Add the following code:

'UNREACHABLE has to be bigger than any possible count.

Public Const UNREACHABLE As Integer = 64

'And TRAPPED needs to be bigger than that.

Public Const TRAPPED As Integer = 127

We use UNREACHABLE as our dividing line between when the fox can and cannot reach freedom. We use TRAPPED to denote the fox has lost. We also will add the per-square data definitions to this module.

'The raw data needed to process a square.

Public Class SquareData

Public Holds As Checker

Public Kind As SquareColor

Public Steps As Integer

End Class

'What, if anything, sits on this?

Public Enum Checker As Integer

None

Fox

Hound

End Enum

'Just the color (used when thinking as well as for display).

Public Enum SquareColor As Integer

Black

White

Green

End Enum

We are finally ready to start on the class for the state data. Add a class to the project and name it GameState.vb. Add the following data to that class:

'The fox and hounds are the minimum.

Dim Fox As Integer

Dim Hounds(3) As Integer

'But it is very useful to analyze the board.

Dim Turn As Integer

Dim Rank As Integer

Dim Squares(31) As SquareData

Now we want to create a brand-new game state. Later on we will add a method that creates a new game state as a copy of a given state. Since this file will contain a good deal of code, we use regions to be able to group like parts together and to be able to collapse them out of sight. And the following code to the class:

#Region "Class New"

Public Sub New()

Dim i As Integer

'New game locations; fox on bottom row.

Fox = 30

For i = 0 To 3

'Hounds go in the top row.

Hounds(i) = i

Next i

'No one has moved.

Turn = 0

'Allocate the squares.

For i = 0 To 31

Squares(i) = New SquareData

Next

'Make this new board displayable.

Call ColorMe()

End Sub

#End Region

The system will complain that ColorMe does not exist, so we will do that next. ColorMe is only called within the GameState class, so we will put it in a separate region. Regions cannot overlap, so put the following code between the #End Region and the End Class statements.

#Region "Internal Stuff"

Protected Sub ColorMe()

'The only given is where the fox and hounds are.

'Compute everything else.

'The square in game state.

Dim StateSquare As SquareData

'i is for going through squares.

Dim i As Integer

'ss is for subscripts of OTHER squares.

Dim ss As Integer

'The base state is an all black board.

'Do all squares (no need for the subscript).

For Each StateSquare In Squares

StateSquare.Holds = Checker.None

StateSquare.Kind = SquareColor.Black

StateSquare.Steps = UNREACHABLE

Next

'Add the fox.

Squares(Fox).Holds = Checker.Fox

'Add the hounds.

For i = 0 To 3

Squares(Hounds(i)).Holds = Checker.Hound

Next

'Start coloring the top row of green.

For i = 0 To 3

StateSquare = Squares(i)

If StateSquare.Holds <> Checker.Hound Then

StateSquare.Kind = SquareColor.Green

StateSquare.Steps = 0

End If

Next i

'I am green if all of my parents are green

'and no hound sits on me.

For i = 4 To 31

StateSquare = Squares(i)

If StateSquare.Holds <> Checker.Hound Then

Dim AmGreen As Boolean = True

For Each ss In Moves.MovesUp(i)

AmGreen = AmGreen And (Squares(ss).Kind = SquareColor.Green)

Next

If AmGreen Then

StateSquare.Kind = SquareColor.Green

StateSquare.Steps = 0

End If

End If

Next

'Renumber the squares if the hounds left an opening.

'Keep renumbering until the numbers stabilize (at most

'something like 11 times).

Dim NeedsMorePasses As Boolean = True

While NeedsMorePasses

'We are done unless we do something.

NeedsMorePasses = False

'Start at 4, the top row is never white.

For i = 4 To 31

StateSquare = Squares(i)

'Don't number hound squares.

If StateSquare.Holds <> Checker.Hound Then

'Use the neighbors to see if I have a lower number.

For Each ss In Moves.Neighbors(i)

'Can my neighbor lower my steps count?

If Squares(ss).Steps + 1 < StateSquare.Steps Then

StateSquare.Steps = Squares(ss).Steps + 1

'That makes me a white square.

StateSquare.Kind = SquareColor.White

'We changed stuff, have to keep looping.

NeedsMorePasses = True

End If

Next ss

End If

Next i

End While

'Is the fox trapped?

StateSquare = Squares(Fox)

Dim CanMove As Boolean = False

For Each ss In Moves.Neighbors(Fox)

If Squares(ss).Holds <> Checker.Hound Then

CanMove = True

End If

Next

'Set the game rank (and maybe change fox from UNREACHABLE to TRAPPED).

If Not CanMove Then

StateSquare.Steps = TRAPPED

Rank = TRAPPED

Else

'It can move, is it on black or white?

If StateSquare.Steps < UNREACHABLE Then

'Use the steps value if on white.

Rank = StateSquare.Steps

Else

'The first version of the code was happy with UNREACHABLE.

'See Figure 6.6 to see this fail.

'Rank = UNREACHABLE

'Rank is higher the fewer black squares remain,

'but always lower than TRAPPED (four hounds are black)

'and always higher than UNREACHABLE.

'The naive black count has issues: see Figure 6.6 and the

'last part of the discussion of the evaluation function.

'Rank = TRAPPED - NaiveBlackCount()

Rank = TRAPPED - BetterBlackCount()

End If

End If

End Sub

#End Region

We started by making all the squares black. After adding the checkers, we started coloring in any green squares. The top row is easy to color green; if no hound sits on a top square, it is green. The rest of the board is checked from top to bottom. Note that the code uses MovesUp. The hounds cannot move up, and the fox is never restricted to moving up. But the coloring algorithm needed to know what neighbors are above any given square. After making green squares, we can number any white squares. We keep checking the squares against their neighbors until the numbers settle. We then see if the fox is trapped and if the fox cannot reach freedom. The code and the comments show the three ways of computing board rank that were mentioned in the discussion of the evaluation function. All this work makes the board easy to display, informative for the player, and far easier for the AI to consider. The benefits for the AI programmer cannot be overemphasized.

We need to implement the two ways we discussed to count the number of black squares when computing board rank. The simplest is to just count them, so we will do that one first. Add the following code to the Internal Stuff region:

Private Function NaiveBlackCount() As Integer

'Just count them.

Dim NBC As Integer = 0

For i = 0 To 31

If Squares(i).Kind = SquareColor.Black Then NBC = (NBC + 1)

Next

Return NBC

End Function

This function can distract the AI into making sub-optimal moves as was shown in Figure 6.6. What we really want is to count the number of black squares available to the fox. We saw that in the end game, the hounds can be fatally distracted by reducing black squares that the fox cannot get to. Add the following code to the region for a better way to count black squares:

Private Function BetterBlackCount() As Integer

'Only the ones that the fox can reach.

Dim BN As New Collection

Dim stopAt As Integer = 1

Dim startAt As Integer = 1

Dim ss As Integer

Dim pbs As Integer

Dim i As Integer

'Add the fox's square to the collection.

BN.Add(Fox, Fox.ToString)

While startAt <= stopAt

'Check the members of the collection we've not checked before.

For i = startAt To stopAt

ss = CInt(BN(i))

'My neighbors are potentially black squares.

For Each pbs In Moves.Neighbors(ss)

If Squares(pbs).Holds = Checker.None Then

'Don't add if already there.

If Not BN.Contains(pbs.ToString) Then

'Add them to be counted, we'll check

'their neighbors next loop.

BN.Add(pbs, pbs.ToString)

End If

End If

Next pbs

Next i

'Start at the one after the end of the group we just did,

'which is the first new one if we added any.

startAt = stopAt + 1

stopAt = BN.Count

'If we didn't add any, stopAt didn't change, so

'startAt is now greater, terminating the loop.

End While

'Add in the hounds.

Return BN.Count + 4

End Function

We need to add the ability to mark the buttons with the values of the game state. There will be a number of methods that the outside world can use to access the game state, so we will create a separate region for them. Add the following code to the class, outside any of the other regions:

#Region "Public Methods"

'Make a graphical board reflect this game state.

Public Sub MarkButtons(ByVal Board() As Button)

Dim i As Integer

'The square on the board.

Dim BoardSquare As Button

'The same square in game state.

Dim StateSquare As SquareData

For i = 0 To 31

BoardSquare = Board(i)

StateSquare = Squares(i)

'Set the back color of that square.

Select Case StateSquare.Kind

Case SquareColor.Black

BoardSquare.BackColor = Color.Black

Case SquareColor.Green

BoardSquare.BackColor = Color.Green

Case SquareColor.White

BoardSquare.BackColor = Color.White

End Select

'Set the text of that square and maybe

'improve the back color.

Select Case StateSquare.Holds

Case Checker.Fox

BoardSquare.Text = "Fox"

'Fox needs better colors.

Select Case StateSquare.Kind

Case SquareColor.White

BoardSquare.BackColor = Color.LightPink

Case SquareColor.Black

BoardSquare.BackColor = Color.Red

Case SquareColor.Green

BoardSquare.BackColor = Color.LightGreen

End Select

Case Checker.Hound

BoardSquare.Text = "Hnd"

'Can't read text on black.

BoardSquare.BackColor = Color.DarkGray

Case Checker.None

Select Case StateSquare.Kind

Case SquareColor.Black, SquareColor.Green

'Green and black squares are solid.

BoardSquare.Text = ""

Case SquareColor.White

'White have the step number.

BoardSquare.Text = Squares(i).Steps.ToString

End Select

End Select

Next

End Sub

#End Region

With some help from the board, we will be able test what we have. We will return to add more functionality to the GameState class, but we can leave that for later.

The board itself will need to store some data. We need our buttons and the game state used to paint them. As you may have guessed from the figures, we will support an undo function, so we will need to stash the prior boards away. View the code for Board.vb and add the following to the class:

'The graphics:

Dim BoardSquares(31) As FaHButton

'Our current game:

Dim ThisGame As GameState

'The boards before this one so we can undo:

Dim PriorBoards As New Collection

We will do one-time initializations in the form Load event handler. Once those are done, we will ask the reset button click handler to start a new game for us. Add the following code to the class:

Private Sub Board_Load(ByVal sender As System.Object, _

ByVal e As System.EventArgs) Handles MyBase.Load

Dim i As Integer

Dim sq As FaHButton

Const sqsize As Integer = 50

'Do the once per run stuff.

Call InitMoves()

For i = 0 To 31

sq = New FaHButton(i)

sq.Height = sqsize

sq.Width = sqsize

sq.Parent = Me

'Four in every row.

sq.Top = 5 + sqsize * (i 4)

'Left: 5 for border, i mod 4 term steps through the 4 columns,

'and the mod 2 term for the offset in alternating rows.

sq.Left = 5 + (sqsize * 2) * (i Mod 4) + sqsize * (((i 4) + 1) Mod 2)

BoardSquares(i) = sq

Next

'Just use the reset function from here to get a new game.

Call ResetButton_Click(Nothing, Nothing)

End Sub

That provides us with the one-time initializations. We need to add the reset code that we called in order to get a new game. Add the following to the class:

Private Sub ResetButton_Click(ByVal sender As System.Object, _

ByVal e As System.EventArgs) Handles ResetButton.Click

'Start with a brand new one.

ThisGame = New GameState

'Clear the undo collection.

PriorBoards.Clear()

'Means we can't undo.

UndoButton.Enabled = False

'Have the current game imprint itself on the buttons.

Call ThisGame.MarkButtons(BoardSquares)

End Sub

We are now ready to fire up the game and see if we get the board we expect. Run the code in the debugger, and you should get the board illustrated in Figure 6.2. While the reset button works, we cannot tell because we cannot make any changes to the game. This was a great deal of work for a static picture, so we should let the player have some fun.

We will use drag and drop to let the player make moves. On the graphical side, there are three parts to a drag-and-drop operation: the mouse button down event, the drag over, and the drop. Since our game board is more than just moveable squares, we also have to change the game state after we make our move.

In addition, we have to prevent moves that break the rules of the game. We will make additions to the code in three areas: the board, the button class, and the GameState class.

The board has to hold the current game state for the game it is showing. Drag and drop is going to happen to the buttons. The buttons will need to ask the board for the current game state to validate potential moves. The buttons will need to tell the board about the new game state after a valid move has been performed. The buttons will need help from the GameState class to generate that new game state. As before, the board will ask the GameState class to paint the new state onto the array of buttons the board is holding.

The board code is the easiest to deal with. Add the following code to the Board class:

Public Property CurrentGame() As GameState

'There can be two parts to a property.

Get

'Get is very simple for us:

Return ThisGame

End Get

'Set requires some work on our part.

Set(ByVal value As GameState)

'Did we have a prior game to save?

If ThisGame IsNot Nothing Then

'If one side couldn't move, the undo

'will need multiple clicks to make a visible change.

PriorBoards.Add(ThisGame)

UndoButton.Enabled = True

End If

'Store the new current game.

ThisGame = value

'Ask game state to paint itself.

Call ThisGame.MarkButtons(BoardSquares)

End Set

End Property

The outside world calls Get to get the GameState object that represents the current game shown on the board. The outside world calls Set to give the board a new GameState object to display. Before it displays the new GameState, the board pushes the current game, if any, onto the undo stack. It then paints the UI with the new game state. The AI code will also exploit these new capabilities. For many games, it is a great idea to merge the player input pipeline and the AI input pipeline as early as possible. Doing so prevents you from having to keep two different pieces of code that do the same thing synchronized.

Now that we have enabled the undo button, we should give it something useful to do. Add the following code to the Board class:

Private Sub UndoButton_Click(ByVal sender As System.Object, _

ByVal e As System.EventArgs) Handles UndoButton.Click

'Do I have a prior board to show?

If PriorBoards.Count > 0 Then

'Overwrite the current board with the last board

'out of the collection.

ThisGame = CType(PriorBoards(PriorBoards.Count), GameState)

'Remove it from the collection.

PriorBoards.Remove(PriorBoards.Count)

'That might have been the last board.

UndoButton.Enabled = (PriorBoards.Count > 0)

'Paint the board.

Call ThisGame.MarkButtons(BoardSquares)

End If

End Sub

The undo function is very handy when debugging the AI. Human players likewise appreciate the ability to recover from accidentally letting go of the mouse button too soon. That is all of the additions to the board that the buttons need, but they still need help from the GameState class.

The buttons need to be able to ask the game state where the fox is and where the hounds are. Only those squares can be the source of a valid move. It does not make sense to move an empty square, and we have to allow the fox to move differently than the hounds. Getting the location of the fox is easy. Change to the GameState.vb tab in the editor. Add the following code to the Public Methods region of the GameState class:

'Where is the fox?

Public Function FoxAt() As Integer

Return (Fox)

End Function

For the hounds, we will return a copy of the game state’s array holding the locations of all of the hounds. Add the following code to the Public Methods region:

'Where are the hounds?

Public Function HoundsAt() As Integer()

'Create a new array.

Dim Locations(3) As Integer

Dim i As Integer

'Fill that array from our private copy.

For i = 0 To 3

Locations(i) = Hounds(i)

Next

'Return that array to protect our private copy.

Return Locations

End Function

To be a valid move, the target square has to be open. If it has a checker on it, it is blocked. We could use the FoxAt() and HoundsAt () functions, but the code is easier to read if we can just ask the game state if a checker is on a given square. Add the following code to the Public Methods region:

'Is some square occupied?

Public Function HasChecker(ByVal ss As Integer) As Boolean

'Is the fox there?

If ss = Fox Then Return True

Dim i As Integer

'Is one of the hounds there?

For i = 0 To 3

If ss = Hounds(i) Then Return True

Next

Return False

End Function

While we are in the Public Methods, we will add some code that the AI will need. Other code might want to know these things, but the AI has to be able to get to them. Add the following code to the Public Methods region:

'Fox wants 0, Hounds want TRAPPED (127).

Public Function GameRank() As Integer

Return Rank

End Function

'How many turns have been taken?

Public Function MoveCount() As Integer

Return Turn

End Function

Right now, the buttons can ask the game state if there is a fox or a hound on the source square. The buttons can ask the game state if there is a checker on the target square. The buttons can use the arrays in Moves.vb to check for valid moves for the fox or the hounds. What remains is for the buttons to be able to get the new game state from the current game state by asking the current game state to make a move. Then the buttons will be able to set the board’s game state with the new one.

We will have the GameState class provide move capability rather than have outside code change the game state. This defensive measure protects the game from AI code bugs as well as user-interface code bugs. We can live with the idea that the AI is not smart enough, but we cannot accept having an untrustworthy game state. The game must work, even if the AI goes off the rails. Our highly defensive way of making a move is to ask the game state to return a clone of itself changed by one move. This lets us do anything we desire with the new game state, including throwing it away, which the AI will do often. This also makes our undo method work as expected.

We will implement the cloning part by providing a different way of creating a new instance of the class. Add the following code to the Class New region. (You may want to collapse other regions to reduce the clutter in the editor):

'Clone the passed-in gamestate.

Public Sub New(ByVal GS As GameState)

Dim i As Integer

'Copy the positions.

Fox = GS.Fox

For i = 0 To 3

Hounds(i) = GS.Hounds(i)

Next

'Allocate the squares.

For i = 0 To 31

Squares(i) = New SquareData

Next

'Copy the turn number.

Turn = GS.Turn

'We do NOT color. The caller will want to move stuff

'and after the move, it will call color.

End Sub

We will not call this New method directly from the outside. Instead, we will provide an interface based on the idea of making a move. The fox is slightly simpler since there is only one. Add the following code to the Public Methods region:

'So what do we get if the fox moves to some square?

Public Function ProposeFoxTo(ByVal targetSqaure As Integer) As GameState

'Clone me.

Dim afterMove As GameState = New GameState(Me)

'Take a turn ...

afterMove.Turn = afterMove.Turn + 1

' ... by moving the fox.